
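Want to learn big data, but put off by the dozen-plus components involved? That alone scares off 80% of would-be learners. Let me show you how easy it is to stand up a small data warehouse of Hadoop + Hive + Presto with Docker Compose.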

I. Install Docker and docker-compose

Refer to the previous article for the installation and deployment steps:

II. Define the containers

1. Create one folder and two files

    mkdir hadoop-hive
    cd hadoop-hive
    touch docker-compose.yml
    touch hadoop.env

Both docker-compose.yml and hadoop.env now sit in the hadoop-hive folder:

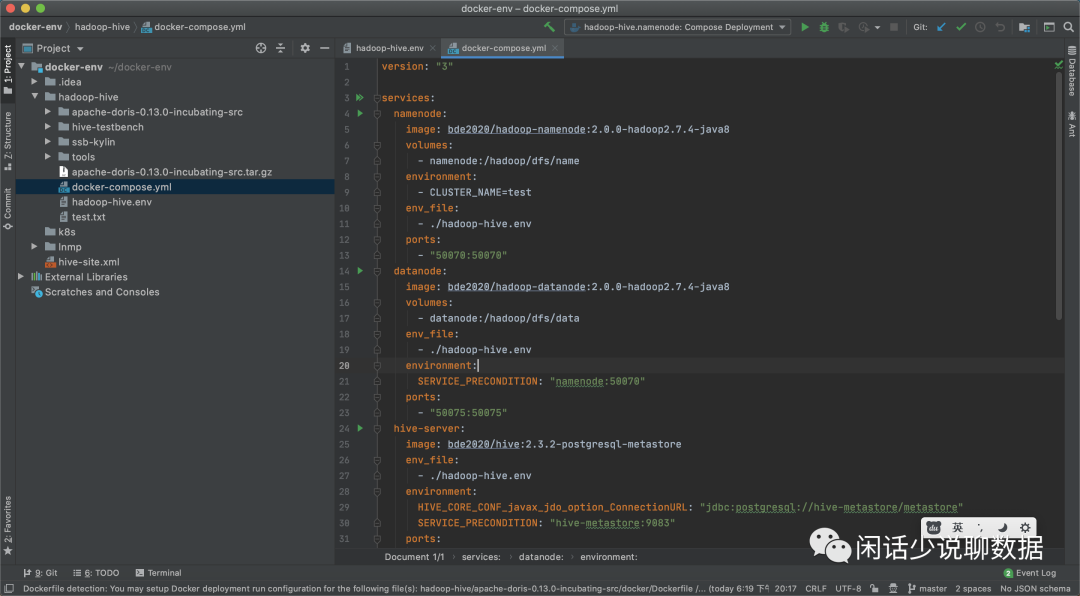

2. Edit docker-compose.yml

vi docker-compose.yml

    version: "3"
    services:
      namenode:
        image: bde2020/hadoop-namenode:2.0.0-hadoop2.7.4-java8
        volumes:
          - namenode:/hadoop/dfs/name
        environment:
          - CLUSTER_NAME=test
        env_file:
          - ./hadoop.env
        ports:
          - "50070:50070"
      datanode:
        image: bde2020/hadoop-datanode:2.0.0-hadoop2.7.4-java8
        volumes:
          - datanode:/hadoop/dfs/data
        env_file:
          - ./hadoop.env
        environment:
          SERVICE_PRECONDITION: "namenode:50070"
        ports:
          - "50075:50075"
      hive-server:
        image: bde2020/hive:2.3.2-postgresql-metastore
        env_file:
          - ./hadoop.env
        environment:
          HIVE_CORE_CONF_javax_jdo_option_ConnectionURL: "jdbc:postgresql://hive-metastore/metastore"
          SERVICE_PRECONDITION: "hive-metastore:9083"
        ports:
          - "10000:10000"
      hive-metastore:
        image: bde2020/hive:2.3.2-postgresql-metastore
        env_file:
          - ./hadoop.env
        command: /opt/hive/bin/hive --service metastore
        environment:
          SERVICE_PRECONDITION: "namenode:50070 datanode:50075 hive-metastore-postgresql:5432"
        ports:
          - "9083:9083"
      hive-metastore-postgresql:
        image: bde2020/hive-metastore-postgresql:2.3.0
      presto-coordinator:
        image: shawnzhu/prestodb:0.181
        volumes:
          - ./tools:/opt/tools
        ports:
          - "8080:8080"
    volumes:
      namenode:
      datanode:
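docker-compose refuses to start when a file listed under env_file does not exist, so every env_file name in the YAML has to match a file actually present in this folder. As a minimal sketch, assuming env_file entries are written as "- ./<name>.env" like in this article, the hypothetical helper check_env_files greps those paths out of the compose file and reports any that are missing:

```shell
#!/usr/bin/env bash
# Sketch: verify that every ./<name>.env path mentioned in a compose file exists.
# Assumption: env_file entries look like "- ./hadoop.env" (no spaces in names).
check_env_files() {
  local compose="${1:-docker-compose.yml}"
  local dir missing=0 f
  dir="$(dirname "$compose")"
  # Extract each ./something.env path once.
  for f in $(grep -oE '\./[A-Za-z0-9._-]+\.env' "$compose" | sort -u); do
    if [ ! -f "$dir/$f" ]; then
      echo "missing env file: $f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Run check_env_files docker-compose.yml once hadoop.env has been created, before docker-compose up -d.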

3. Edit hadoop.env, the environment file that holds the Hadoop and Hive settings

vi hadoop.env

    HIVE_SITE_CONF_javax_jdo_option_ConnectionURL=jdbc:postgresql://hive-metastore-postgresql/metastore
    HIVE_SITE_CONF_javax_jdo_option_ConnectionDriverName=org.postgresql.Driver
    HIVE_SITE_CONF_javax_jdo_option_ConnectionUserName=hive
    HIVE_SITE_CONF_javax_jdo_option_ConnectionPassword=hive
    HIVE_SITE_CONF_datanucleus_autoCreateSchema=false
    HIVE_SITE_CONF_hive_metastore_uris=thrift://hive-metastore:9083
    HDFS_CONF_dfs_namenode_datanode_registration_ip___hostname___check=false
    CORE_CONF_fs_defaultFS=hdfs://namenode:8020
    CORE_CONF_hadoop_http_staticuser_user=root
    CORE_CONF_hadoop_proxyuser_hue_hosts=*
    CORE_CONF_hadoop_proxyuser_hue_groups=*
    HDFS_CONF_dfs_webhdfs_enabled=true
    HDFS_CONF_dfs_permissions_enabled=false
    YARN_CONF_yarn_log___aggregation___enable=true
    YARN_CONF_yarn_resourcemanager_recovery_enabled=true
    YARN_CONF_yarn_resourcemanager_store_class=org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore
    YARN_CONF_yarn_resourcemanager_fs_state___store_uri=/rmstate
    YARN_CONF_yarn_nodemanager_remote___app___log___dir=/app-logs
    YARN_CONF_yarn_log_server_url=http://historyserver:8188/applicationhistory/logs/
    YARN_CONF_yarn_timeline___service_enabled=true
    YARN_CONF_yarn_timeline___service_generic___application___history_enabled=true
    YARN_CONF_yarn_resourcemanager_system___metrics___publisher_enabled=true
    YARN_CONF_yarn_resourcemanager_hostname=resourcemanager
    YARN_CONF_yarn_timeline___service_hostname=historyserver
    YARN_CONF_yarn_resourcemanager_address=resourcemanager:8032
    YARN_CONF_yarn_resourcemanager_scheduler_address=resourcemanager:8030
    YARN_CONF_yarn_resourcemanager_resource___tracker_address=resourcemanager:8031
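Each line of hadoop.env ends up as a property in a Hadoop or Hive config file. The bde2020 images are understood to pick the file from the prefix (CORE_CONF for core-site.xml, HDFS_CONF for hdfs-site.xml, YARN_CONF for yarn-site.xml, HIVE_SITE_CONF for hive-site.xml) and to rewrite the rest of the name with "___" as "-", "__" as "_" and "_" as "."; treat this as an assumption and check the image's entrypoint script. The hypothetical helpers env_to_prop and env_to_file below reimplement that rule so you can see what a line turns into:

```shell
#!/usr/bin/env bash
# Sketch of the assumed bde2020 naming rule: "___" -> "-", "__" -> "_", "_" -> ".".
env_to_prop() {
  local name="${1%%=*}"      # drop "=value" if present
  name="${name#*_CONF_}"     # drop the prefix up to the first "_CONF_"
  # Protect "__" as "@" so the single-underscore rule does not touch it.
  printf '%s\n' "$name" |
    sed -e 's/___/-/g' -e 's/__/@/g' -e 's/_/./g' -e 's/@/_/g'
}

# Assumed mapping from prefix to target config file.
env_to_file() {
  case "$1" in
    CORE_CONF_*)      echo core-site.xml ;;
    HDFS_CONF_*)      echo hdfs-site.xml ;;
    YARN_CONF_*)      echo yarn-site.xml ;;
    HIVE_SITE_CONF_*) echo hive-site.xml ;;
    *)                echo unknown ;;
  esac
}
```

For example, env_to_prop YARN_CONF_yarn_log___aggregation___enable=true prints yarn.log-aggregation-enable. With only two underscores, resource__tracker would come out as resource_tracker instead of the real property segment resource-tracker.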

III. Start the containers

docker-compose up -d

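The first run has to download the images, so it takes a while. When startup succeeds, a success status is printed for each container.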

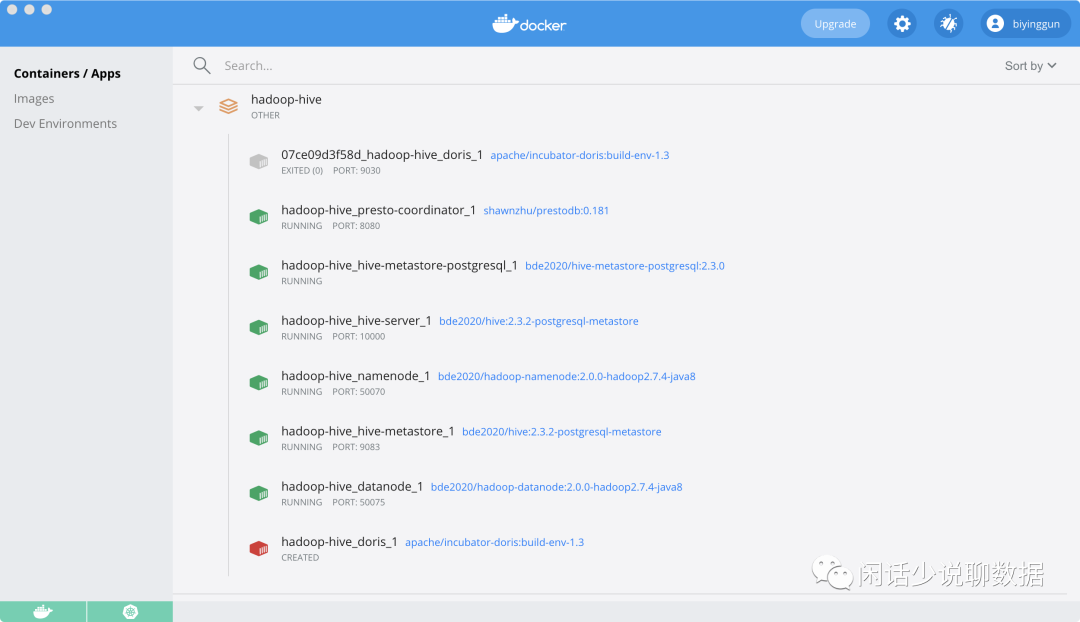

On macOS, the running containers also show up in the Docker Desktop UI:

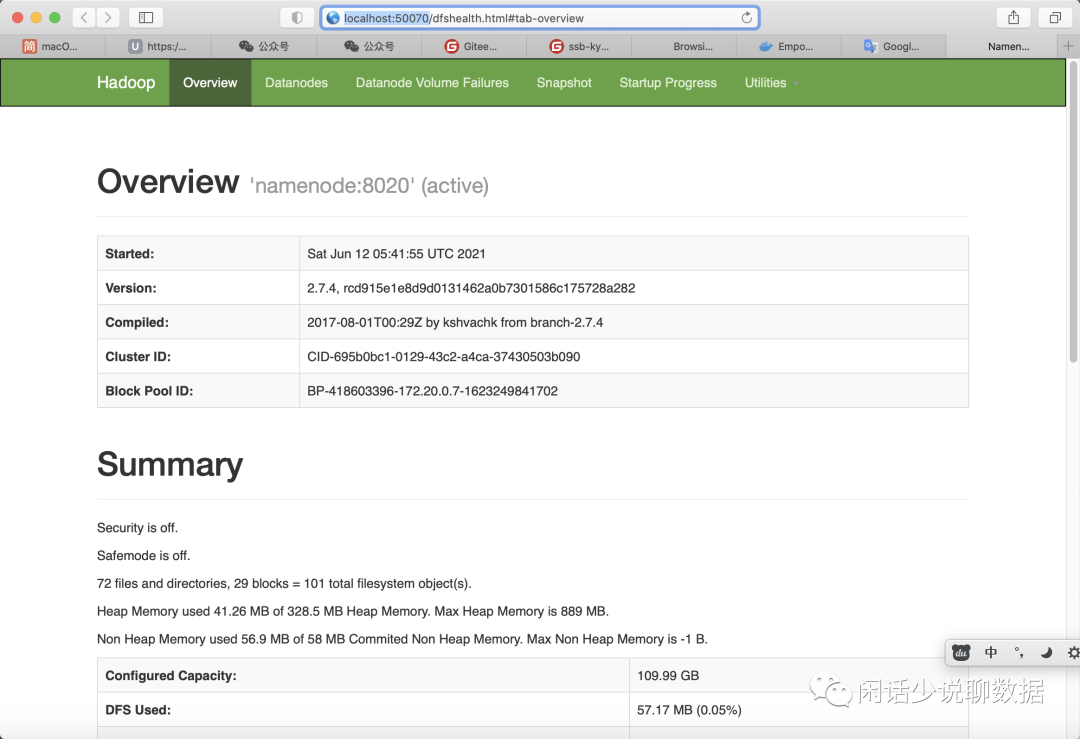
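Because the first start pulls images and each bde2020 container waits for the services listed in its SERVICE_PRECONDITION, the web UIs may not answer right after docker-compose up -d returns. A small generic retry helper (a hypothetical plain-bash sketch) lets a script wait for them instead of refreshing the browser:

```shell
#!/usr/bin/env bash
# Sketch: run a command until it succeeds or the attempts run out.
# Usage: retry <attempts> <sleep-seconds> <command> [args...]
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    # No sleep after the final failed attempt.
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, retry 30 5 curl -sf -o /dev/null http://localhost:50070 polls the NameNode UI every 5 seconds, up to 30 times.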

1. Check that the mapped NameNode port is reachable

http://localhost:50070

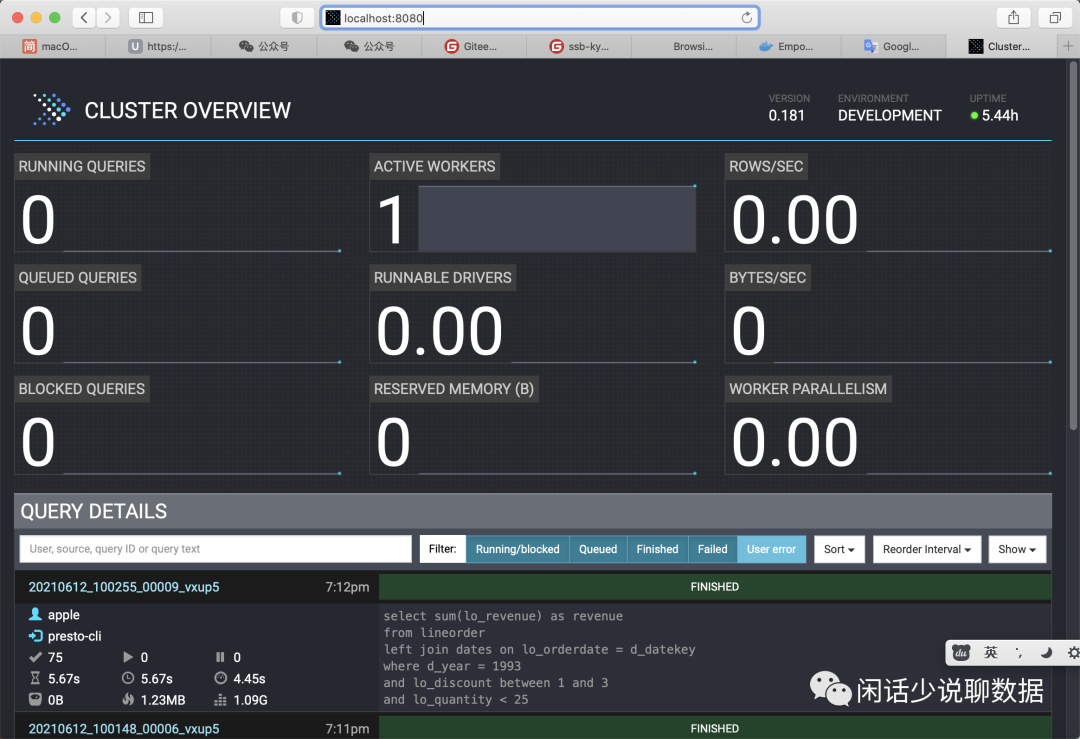

2. Check Presto on port 8080

http://localhost:8080
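Rather than opening each page by hand, all ports published in docker-compose.yml (50070, 50075, 10000, 9083, 8080) can be probed in one go. This sketch relies on bash's /dev/tcp pseudo-device and coreutils timeout; port_open and check_ports are hypothetical names, and a successful TCP connect only shows that something is listening, not that the service is healthy:

```shell
#!/usr/bin/env bash
# Sketch: TCP reachability check using bash's /dev/tcp (bash only, not sh).
port_open() {
  timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

check_ports() {
  local host="${1:-localhost}" port rc=0
  # namenode UI, datanode UI, HiveServer2, metastore, Presto
  for port in 50070 50075 10000 9083 8080; do
    if port_open "$host" "$port"; then
      echo "$host:$port open"
    else
      echo "$host:$port CLOSED"
      rc=1
    fi
  done
  return "$rc"
}
```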

3. Log in to the hive-server container and check that Hive works

    apple@192 hadoop-hive % docker-compose exec hive-server bash
    root@3ce7116e4d84:/opt# hive
    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/opt/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/opt/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
    Logging initialized using configuration in file:/opt/hive/conf/hive-log4j2.properties Async: true
    Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
    hive> show databases;
    OK
    default
    ssb
    Time taken: 1.665 seconds, Fetched: 2 row(s)

Here ssb is an extra database from the author's own environment; a fresh setup lists only default.
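For scripting, the same check can run without an interactive shell. The sketch assumes it is run from the hadoop-hive folder with the stack up; it uses docker-compose exec -T (no TTY, so output can be piped) and hive -e to run a single statement, then filters out the SLF4J and logging banner lines seen above. hive_query and strip_hive_noise are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Drop the SLF4J / logging banner lines; "|| true" keeps an all-noise input
# from turning into a failing exit status.
strip_hive_noise() {
  grep -vE '^(SLF4J:|Logging initialized|Hive-on-MR is deprecated)' || true
}

# Run one HiveQL statement in the hive-server container (stderr merged, then filtered).
hive_query() {
  docker-compose exec -T hive-server hive -e "$1" 2>&1 | strip_hive_noise
}
```

Usage: hive_query 'show databases;'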

4. Query Presto from the host with presto-cli.jar (download the Presto CLI jar separately and make it executable with chmod +x presto-cli.jar)

./presto-cli.jar --server localhost:8080 --catalog hive --schema default
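The same CLI call can be wrapped so that a single statement runs and the CLI exits, using its --execute option. The function name presto_query and the PRESTO_CLI override variable are hypothetical; the server, catalog and schema values are the ones from the command above:

```shell
#!/usr/bin/env bash
# Sketch: run one statement against the hive catalog through the Presto CLI.
# PRESTO_CLI (hypothetical) defaults to ./presto-cli.jar, which must be executable.
presto_query() {
  local cli="${PRESTO_CLI:-./presto-cli.jar}"
  "$cli" --server localhost:8080 --catalog hive --schema default --execute "$1"
}
```

Usage: presto_query 'show tables'. Setting PRESTO_CLI=echo prints the arguments instead of running the query, which is handy for a dry run.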

If all of the above works, the development (experimental) environment for the small data warehouse is ready.

Next comes generating data to practice all kinds of SQL on; I'll write that up in the next article.

(Next article: Still practicing on student and grade tables? Xian-ge shows you how to generate production-grade data.)

IV. Key code explained

1. Notes on the docker-compose file

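    version: "3"  # Compose file format version; the v2 and v3 syntaxes differ
    services:
      namenode:  # service name
        image: bde2020/hadoop-namenode:2.0.0-hadoop2.7.4-java8  # image name and tag
        volumes:  # storage mounted into the container
          - namenode:/hadoop/dfs/name  # source:container path; "namenode" is a named volume, not a host directory
        environment:
          - CLUSTER_NAME=test
        env_file:  # environment file with the Hadoop and Hive settings
          - ./hadoop.env
        ports:  # published ports, host port:container port
          - "50070:50070"
      datanode:
        image: bde2020/hadoop-datanode:2.0.0-hadoop2.7.4-java8
        volumes:
          - datanode:/hadoop/dfs/data
        env_file:
          - ./hadoop.env
        environment:
          SERVICE_PRECONDITION: "namenode:50070"  # the bde2020 entrypoint waits for namenode:50070 before starting
        ports:
          - "50075:50075"
      .......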
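Whether the left side of a short volumes entry is a named volume or a host path is decided by its shape: Compose treats a source starting with /, ./, ../ or ~ as a bind mount from the host, and any other name as a named volume declared under the top-level volumes: key (namenode and datanode here, while ./tools in presto-coordinator is a bind mount). The hypothetical helper below is a simplified sketch of that rule; it ignores mode suffixes such as :ro and Windows paths:

```shell
#!/usr/bin/env bash
# Sketch: classify a short-syntax volume entry "SOURCE:TARGET[:MODE]".
volume_kind() {
  local source="${1%%:*}"   # text before the first colon
  case "$source" in
    /*|./*|../*|"~"*|.|..) echo bind ;;
    *)                     echo named ;;
  esac
}
```

For example, volume_kind namenode:/hadoop/dfs/name prints named, and volume_kind ./tools:/opt/tools prints bind.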

2. Mind the YAML syntax: the format is strict, so it is best edited in an IDE such as IntelliJ IDEA

1) Do not use tab characters (\t) for indentation; use spaces

2) Put a space after the colon, as in key: value
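Both rules can be checked mechanically before running docker-compose up. The sketch below is a deliberately tiny grep-based pre-check, not a YAML parser; yaml_lint is a hypothetical name, and rule 2 only looks at keys at the start of a line. Tools such as yamllint, or docker-compose config, are far more thorough:

```shell
#!/usr/bin/env bash
# Sketch: flag tab characters and "key:value" with no space after the colon.
yaml_lint() {
  local file="$1" rc=0 tab
  tab="$(printf '\t')"
  # Rule 1: no tab characters anywhere in the file.
  if grep -n "$tab" "$file"; then
    echo "tab character(s) found" >&2
    rc=1
  fi
  # Rule 2: a leading key immediately followed by a non-space after ':'.
  if grep -nE '^[[:space:]]*[A-Za-z0-9_.-]+:[^[:space:]]' "$file"; then
    echo "missing space after ':'" >&2
    rc=1
  fi
  return "$rc"
}
```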

3. Commonly used docker-compose commands, collected from around the web

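1) docker-compose up deploys a Compose application. By default it reads a file named docker-compose.yml or docker-compose.yaml; use -f to specify a different file. It is usually run with -d so that the application starts in the background.

2) docker-compose stop stops all containers of the application without removing them. A stopped application can easily be started again with docker-compose restart.

3) docker-compose rm removes the stopped containers of the application. Networks, volumes and images are left in place (add -v to also remove anonymous volumes attached to the containers).

4) docker-compose restart restarts a stopped Compose application. Changes made to the application after it was stopped are not reflected by a restart; redeploy it (for example with docker-compose up -d) for the changes to take effect.

5) docker-compose ps lists the containers of the application, showing their current state, the command each one runs, and their network ports.

6) docker-compose down stops and removes the running application. It removes the containers and networks, but not the volumes or images.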
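Two consequences of the list above matter for this setup: edits to docker-compose.yml or hadoop.env are not picked up by restart, and down keeps the named volumes namenode and datanode, so the HDFS data survives unless -v is passed (down -v also removes the named volumes declared in the file). A sketch of small wrappers; the function names and the DC variable are hypothetical, and setting DC=echo turns them into a dry run:

```shell
#!/usr/bin/env bash
# DC (hypothetical) defaults to docker-compose; set DC=echo to only print.
DC="${DC:-docker-compose}"

# Apply edits to docker-compose.yml or hadoop.env: "up -d" recreates the
# containers whose configuration changed, which "restart" would not do.
apply_changes() {
  $DC up -d
}

# Remove containers and networks; named volumes (HDFS data) are kept.
teardown_keep_data() {
  $DC down
}

# Also remove the named volumes declared in the compose file: HDFS data is lost.
teardown_wipe_data() {
  $DC down -v
}
```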


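This article was reposted from 闲话少说聊数据. If it infringes your rights, please email contact@modb.pro with supporting evidence; once verified, Motianlun (modb.pro) will remove the content immediately.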