1. Overview

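The main goal of this article is not to deploy Kubernetes itself but to become fluent with Ansible's modules. Modules are used in many places, which makes the playbook long but easier to read. The Ansible modules covered are: user, group, hostname, shell, raw, command, script, file, copy, fetch, synchronize, systemd, sysctl, apt, package, modprobe, lineinfile, blockinfile, wait_for, debug, fail, assert, unarchive and get_url. The task control constructs covered are: block (rescue, always), loop, with_items, when and include_tasks.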

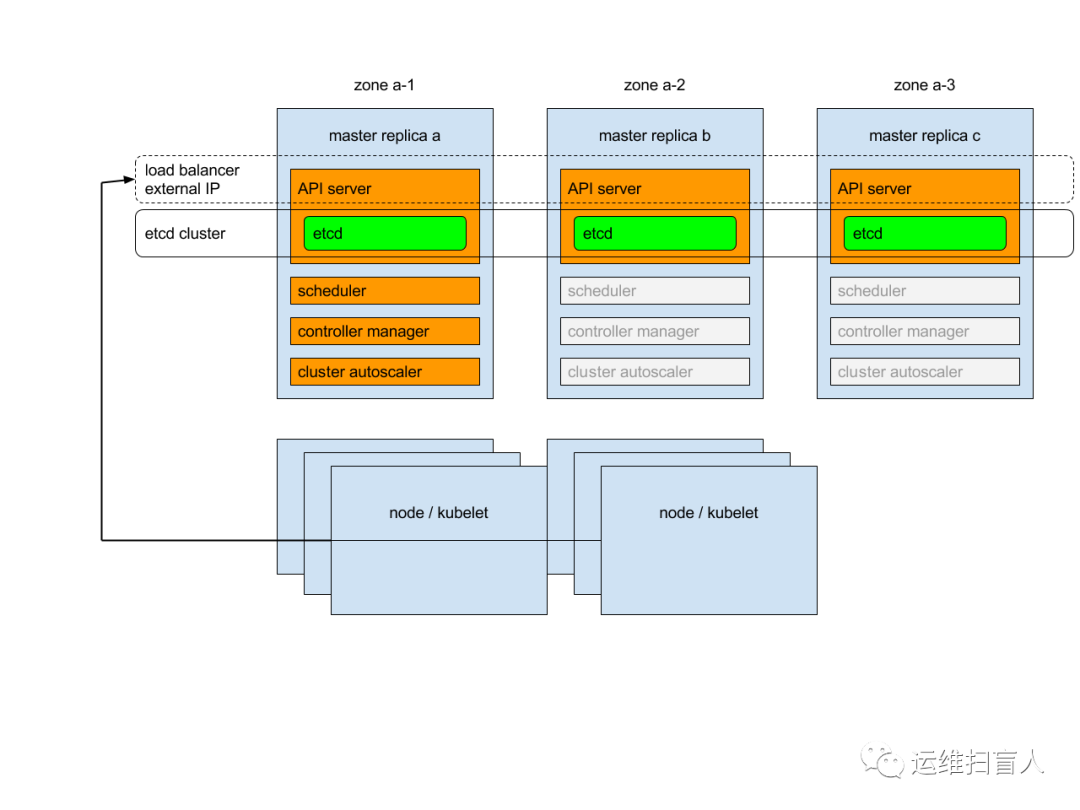

2. Environment

Figure 2.1 Kubernetes cluster architecture

| No. | IP_ADDRESS | ROLE | COMPONENT |
| --- | --- | --- | --- |
| 1 | 192.168.81.197 | MASTER-01 | etcd, haproxy, keepalived, docker, kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy |
| 2 | 192.168.81.198 | MASTER-02 | etcd, haproxy, keepalived, docker, kube-apiserver, kubelet, kube-proxy |
| 3 | 192.168.81.199 | WORKER-01 | etcd, docker, kubelet, kube-proxy |
| 4 | 192.168.81.200 | WORKER-02 | etcd, docker, kubelet, kube-proxy |

Table 2.1 Kubernetes role plan

    root@k8s-master-01:~/binary-kubernetes-double-master# cat host-k8s
    [etcd]
    192.168.81.197 NODE_NAME=etcd1 ROLE=master HOST=k8s-master-01 HAPROXY_BACKEND=app1 KEEPALIVED_ROLE=MASTER KEEPALIVED_WEIGHT=100
    192.168.81.198 NODE_NAME=etcd2 ROLE=master HOST=k8s-master-02 HAPROXY_BACKEND=app2 KEEPALIVED_ROLE=BACKUP KEEPALIVED_WEIGHT=90
    192.168.81.199 NODE_NAME=etcd3 ROLE=worker HOST=k8s-worker-01
    192.168.81.200 NODE_NAME=etcd4 ROLE=worker HOST=k8s-worker-02

    [etcd:vars]
    CONTROLLER_SCHEDULER=192.168.81.197
    MASTER_VIP=192.168.81.201
    KUBEAPI_SERVER_PORT_REAL=6443

3. binary-kubernetes-single-master

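The single-master and dual-master deployments differ in the following ways:

Whether haproxy and keepalived need to be included;

How the roles are defined.

Master high availability is implemented here only for kube-apiserver; kube-controller-manager and kube-scheduler already achieve high availability on their own through leader election.

This setup is for testing and learning only; in a production environment the number of masters should be odd.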
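The odd-number recommendation can be illustrated with majority-quorum arithmetic. The sketch below assumes the usual majority rule (as used by etcd's Raft consensus); the article does not say which component the recommendation is based on, so treat that link as an assumption. The function names are illustrative only.

```shell
#!/bin/bash
# Majority quorum for an n-member cluster: floor(n/2) + 1.
quorum() {
  local members=$1
  echo $(( members / 2 + 1 ))
}

# Number of members that may fail while a majority is still reachable.
fault_tolerance() {
  local members=$1
  echo $(( members - (members / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(quorum "$n")" "$(fault_tolerance "$n")"
done
```

Under that rule a fourth member raises the quorum without raising the number of failures the cluster can survive, which is why even sizes are usually avoided.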

3.1 manifest

    cat /root/binary-kubernetes-double-master/main.yaml
    ---
    - hosts: etcd
      remote_user: root
      gather_facts: true
      vars:
        SERVICE_CLUSTER_IP_RANGE: "10.0.0.0/24"
        KUBE_APISERVER_PORT: "7443"
        ETCD_PEER_NODES: "{% for h in groups['etcd'] %}{{ hostvars[h]['NODE_NAME'] }}=https://{{ h }}:2380,{% endfor %}"
        ETCD_PEER_CLUSTER: "{{ ETCD_PEER_NODES.rstrip(',') }}"
        ETCD_CLIENT_NODES: "{% for h in groups['etcd'] %}https://{{ h }}:2379,{% endfor %}"
        ETCD_CLIENT_CLUSTER: "{{ ETCD_CLIENT_NODES.rstrip(',') }}"
        HOSTS: "{% for h in groups['etcd'] %}'\"{{ h }}\"',{% endfor %}"
        CER_HOSTS: "{{ HOSTS.rstrip(',') }}"
      tasks:
        - name: Bootstrap a host without python2 installed
          raw: apt install -y python2.7
        - name: Prerequisites:/etc/hosts
          blockinfile:
            path: /etc/hosts
            block: |
              192.168.81.197 master k8s-master-01
              192.168.81.198 master k8s-master-02
              192.168.81.199 node1 k8s-worker-01
              192.168.81.200 node2 k8s-worker-02
        - name: Prerequisites:resolv.conf
          lineinfile:
            path: /etc/resolv.conf
            regexp: '^nameserver 114.114.114.114'
            insertbefore: '^nameserver '
            line: 'nameserver 114.114.114.114'
          tags: insert
        - name: Prerequisites:br_netfilter
          modprobe:
            name: br_netfilter
            state: present
        - name: Prerequisites:sysctl
          sysctl:
            name: "{{ item }}"
            sysctl_file: /etc/sysctl.d/k8s.conf
            value: 1
            sysctl_set: yes
            state: present
            reload: yes
          with_items:
            - net.ipv4.ip_forward
            - net.bridge.bridge-nf-call-ip6tables
            - net.bridge.bridge-nf-call-iptables
        - name: Resolving prerequisites
          script: requirement.sh
        - name: Set hostname
          hostname:
            name: "{{ HOST }}"
        - name: Install the package "conntrack"
          apt:
            name: "{{ item }}"
            state: present
          with_items:
            - conntrack
            - rsync
          tags: apt
        - name: Create some directory
          file:
            path: "{{ item }}"
            state: directory
            mode: 0755
          #with_items: [ /opt/etcd/bin, /opt/etcd/cfg, /opt/etcd/ssl, /root/TLS/etcd, /root/TLS/k8s, /opt/docker, /opt/kubernetes/bin, /opt/kubernetes/cfg, /opt/kubernetes/ssl, /opt/kubernetes/logs ]
          loop:
            - /opt/etcd/bin
            - /opt/etcd/cfg
            - /opt/etcd/ssl
            - /root/TLS/etcd
            - /root/TLS/k8s
            - /opt/docker
            - /opt/kubernetes/bin
            - /opt/kubernetes/cfg
            - /opt/kubernetes/ssl
            - /opt/kubernetes/logs
            - /opt/haproxy/bin
            - /opt/haproxy/cfg
            - /opt/haproxy/logs
            - /opt/keepalived/bin
            - /opt/keepalived/cfg
            - /opt/keepalived/logs
        - name: Download all required bin files
          copy:
            src: '{{ item.src }}'
            dest: '{{ item.dest }}'
            mode: 0755
          with_items:
            - { src: /root/binary-kubernetes-double-master/cfssl/, dest: /usr/local/bin }
            - { src: /root/binary-kubernetes-double-master/etcd/, dest: /opt/etcd }
            - { src: /root/binary-kubernetes-double-master/docker-20.10.7/, dest: /opt/docker }
            - { src: /root/binary-kubernetes-double-master/kubernetes-v1.20.8/, dest: /opt/kubernetes }

        #haproxy && keepalived
        - block:
            - include_tasks: /root/binary-kubernetes-double-master/haproxy.yaml
            - include_tasks: /root/binary-kubernetes-double-master/keepalived.yaml
          when: ROLE == "master"

        #etcd
        - name: etcd:Generate the etcd certificate
          script: /root/binary-kubernetes-double-master/certificate-etcd.sh {{ CER_HOSTS }}
          when: NODE_NAME == "etcd1"
        - name: etcd:Register names of etcd certificate as ansible variable
          shell: (cd /root/TLS/etcd; find -maxdepth 1 -type f) | cut -d'/' -f2
          register: files_to_copy
          when: NODE_NAME == "etcd1"
        - name: etcd:Fetch etcd certificates to the ansible localhost
          fetch:
            src: /root/TLS/etcd/{{ item }}
            dest: /root/binary-kubernetes-double-master/certificate-etcd/
            flat: yes
            validate_checksum: no
          with_items:
            - "{{ files_to_copy.stdout_lines }}"
          when: NODE_NAME == "etcd1"
        - name: etcd:Distribute certificates to all etcd cluster nodes
          copy:
            src: /root/binary-kubernetes-double-master/certificate-etcd/
            dest: /opt/etcd/ssl
        - name: etcd:Deploy etcd cluster
          script: /root/binary-kubernetes-double-master/etcd.sh
        - name: etcd:Generate etcd configuration file
          template: src=/root/binary-kubernetes-double-master/etcd.conf.j2 dest=/opt/etcd/cfg/etcd.conf
        - name: etcd:Manage etcd service by systemd
          systemd:
            name: etcd
            daemon_reload: yes
            enabled: yes
            state: started
        - name: etcd:Check etcd cluster status
          shell: ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="{{ETCD_CLIENT_CLUSTER}}" endpoint health
          register: result
        - debug:
            var: result.stderr_lines
            verbosity: 0

        #docker
        - name: docker:Deploy container engine--docker
          script: /root/binary-kubernetes-double-master/docker.sh
        - name: docker:Check container engine docker status
          shell: docker version | egrep -A 1 'Client|Engine'
          register: docker_check_result
        - debug:
            var: docker_check_result.stdout_lines
            verbosity: 0

        #kube-apiserver
        - name: kube-apiserver:Generate the kube-apiserver certificate
          script: /root/binary-kubernetes-double-master/certificate-kube-apiserver.sh {{ CER_HOSTS }} {{ MASTER_VIP }}
          when: ROLE == "master" and HOST == "k8s-master-01"
        - include_tasks: /root/binary-kubernetes-double-master/synchronize.yaml
        - block:
            - name: kube-apiserver:Generate kube-apiserver configuration file
              template: src=/root/binary-kubernetes-double-master/kube-apiserver.conf.j2 dest=/opt/kubernetes/cfg/kube-apiserver.conf
            - name: kube-apiserver:Deploy the kube-apiserver
              script: /root/binary-kubernetes-double-master/kube-apiserver.sh
            - name: kube-apiserver:Check kube-apiserver status
              shell: /opt/kubernetes/bin/kube-apiserver --version
              register: kubeapiserver_check_result
            - debug:
                var: kubeapiserver_check_result.stdout_lines
                verbosity: 0
          rescue:
            - fail: msg="kube-apiserver deploy failed"
          always:
            - debug: msg="kube-apiserver deploy successfully"
          when: ROLE == "master"

        #kube-controller-manager
        - name: kube-controller-manager:Generate the kube-controller-manager certificate
          script: /root/binary-kubernetes-double-master/certificate-controller-manager.sh
          when: ROLE == "master" and HOST == "k8s-master-01"
        - include_tasks: /root/binary-kubernetes-double-master/synchronize.yaml
        - block:
            - name: kube-controller-manager:Deploy the kube-controller-manager
              script: /root/binary-kubernetes-double-master/kube-controller-manager.sh https://{{inventory_hostname}}:6443 {{CONTROLLER_SCHEDULER}}
            - name: kube-controller-manager:Check kube-controller-manager status
              shell: /opt/kubernetes/bin/kube-controller-manager --version
              register: kubecontrollermanager_check_result
            - debug:
                var: kubecontrollermanager_check_result.stdout_lines
                verbosity: 0
          rescue:
            - fail: msg="kube-controller-manager deploy failed"
          always:
            - debug: msg="kube-controller-manager deploy successfully"
          when: ROLE == "master" and HOST == "k8s-master-01"

        #kube-scheduler
        - name: kube-scheduler:Generate the kube-scheduler certificate
          script: /root/binary-kubernetes-double-master/certificate-scheduler.sh
          when: ROLE == "master" and HOST == "k8s-master-01"
        - include_tasks: /root/binary-kubernetes-double-master/synchronize.yaml
        - block:
            - name: kube-scheduler:Deploy the kube-scheduler
              script: /root/binary-kubernetes-double-master/kube-scheduler.sh https://{{MASTER_VIP}}:{{KUBE_APISERVER_PORT}} {{CONTROLLER_SCHEDULER}}
            - name: kube-scheduler:Check kube-scheduler status
              shell: /opt/kubernetes/bin/kube-scheduler --version
              register: kubescheduler_check_result
            - debug:
                var: kubescheduler_check_result.stdout_lines
                verbosity: 0
          rescue:
            - fail: msg="kube-scheduler deploy failed"
          always:
            - debug: msg="kube-scheduler deploy successfully"
          when: ROLE == "master" and HOST == "k8s-master-01"

        #kube-admin
        - name: kubelet:Extract the kubernetes archive on worker nodes
          command: tar zxvf kubernetes-server-linux-amd64.tar.gz
          args:
            chdir: /opt/kubernetes/
            creates: /opt/kubernetes/kubernetes
        - name: kube-admin:Generate the kube-admin certificate
          script: /root/binary-kubernetes-double-master/certificate-admin.sh
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kube-admin:Register names of kube-admin certificate as ansible variable
          shell: (cd /root/TLS/k8s; find -maxdepth 1 -name '*admin*' -type f ) | cut -d'/' -f2
          register: files_to_copy
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kube-admin:Fetch kube-admin certificates to the ansible localhost
          fetch:
            src: /root/TLS/k8s/{{ item }}
            dest: /root/binary-kubernetes-double-master/certificate-k8s/
            flat: yes
            validate_checksum: no
          with_items:
            - "{{ files_to_copy.stdout_lines }}"
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kube-admin:Distribute kube-admin certificates to master and node nodes
          copy:
            src: /root/binary-kubernetes-double-master/certificate-k8s/
            dest: /opt/kubernetes/ssl
        - name: kube-admin:Generate kube-admin kubeconfig
          script: /root/binary-kubernetes-double-master/kube-admin.sh https://{{MASTER_VIP}}:{{KUBE_APISERVER_PORT}}
        - block:
            - wait_for: host=127.0.0.1 port=10251
            - wait_for: host=127.0.0.1 port=10252
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kube-admin:Check kube-admin status
          shell: /usr/bin/kubectl get cs
          register: kube_admin_check_result
        - debug:
            var: kube_admin_check_result.stdout_lines
            verbosity: 0
          when: ROLE == "master" and HOST == "k8s-master-01"

        #kubelet
        - name: kubelet:Authorize the kubelet-bootstrap user to request certificates
          shell: kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kubelet:Fetch kube-apiserver token.csv from master node
          fetch:
            src: /opt/kubernetes/cfg/token.csv
            dest: /root/binary-kubernetes-double-master/token/
            flat: yes
            validate_checksum: no
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kubelet:Distribute token value to worker nodes
          copy:
            src: /root/binary-kubernetes-double-master/token/token.csv
            dest: /tmp
        - name: kubelet:Declare and register a variable for the value of token
          shell: cat /tmp/token.csv | awk -F',' '{print $1}'
          register: TOKEN
        - name: kubelet:Deploy kubelet on master and worker node
          script: /root/binary-kubernetes-double-master/kubelet.sh https://{{MASTER_VIP}}:{{KUBE_APISERVER_PORT}} {{TOKEN.stdout}}
        - name: kubelet:Delete token.csv file in tmp directory
          file:
            path: /tmp/token.csv
            state: absent
        - systemd:
            name: kubelet
            state: restarted
        - name: Wait_for kubelet bootstrap
          script: /root/binary-kubernetes-double-master/check-node.sh
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kubelet:Approve certificate request
          shell: kubectl certificate approve $(kubectl get csr | grep "Pending" | awk '{print $1}')
          when: ROLE == "master" and HOST == "k8s-master-01"
        - pause: seconds=10
        - name: kubelet:Check kubelet status
          shell: kubectl get csr,nodes
          register: kubelet_check_result
          when: ROLE == "master" and HOST == "k8s-master-01"
        - debug:
            var: kubelet_check_result.stdout_lines
            verbosity: 0
          when: ROLE == "master" and HOST == "k8s-master-01"

        #kube-proxy
        - name: kube-proxy:Generate the kube-proxy certificate
          script: /root/binary-kubernetes-double-master/certificate-kube-proxy.sh
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kube-proxy:Register names of kube-proxy certificate as ansible variable
          shell: (cd /root/TLS/k8s; find -maxdepth 1 -name '*kube-proxy*' -type f ) | cut -d'/' -f2
          register: files_to_copy
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kube-proxy:Fetch kube-proxy certificates to the ansible localhost
          fetch:
            src: /root/TLS/k8s/{{ item }}
            dest: /root/binary-kubernetes-double-master/certificate-k8s/
            flat: yes
            validate_checksum: no
          with_items:
            - "{{ files_to_copy.stdout_lines }}"
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: kube-proxy:Distribute kube-proxy certificates to master and worker nodes
          copy:
            src: /root/binary-kubernetes-double-master/certificate-k8s/
            dest: /opt/kubernetes/ssl
        - name: kube-proxy:Deploy kube-proxy
          script: /root/binary-kubernetes-double-master/kube-proxy.sh https://{{MASTER_VIP}}:{{KUBE_APISERVER_PORT}}
        - name: kube-proxy:Check kube-proxy status
          shell: systemctl status kube-proxy.service
          register: kube_proxy_check_result
          tags: proxy
        - debug:
            var: kube_proxy_check_result.stdout_lines
            verbosity: 0
          tags: proxy

        #Calico
        - name: Calico:Download calico YAML file
          get_url:
            url: '{{ item.url }}'
            dest: '{{ item.dest }}'
            mode: 0755
          with_items:
            - { url: 'https://docs.projectcalico.org/manifests/calico.yaml', dest: /opt/kubernetes/cfg/calico.yaml }
            - { url: 'https://github.com/projectcalico/calicoctl/releases/download/v3.19.1/calicoctl', dest: /usr/bin/calicoctl }
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: Calico:Deploy calico
          shell: "{{ item }}"
          loop:
            - kubectl apply -f /opt/kubernetes/cfg/calico.yaml
            - kubectl apply -f /opt/kubernetes/cfg/apiserver-to-kubelet-rbac.yaml
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: Calico:make configuration for calicoctl
          script: /root/binary-kubernetes-double-master/calicoctl.sh {{ ETCD_CLIENT_CLUSTER }}
          tags: calicoctl
          when: ROLE == "master" and HOST == "k8s-master-01"
        - name: Calico:wait for calico port started
          wait_for: port=9099 state=started
        - name: Calico:Check calico status
          shell: calicoctl node status; calicoctl get nodes
          register: calico_check_result
          when: ROLE == "master" and HOST == "k8s-master-01"
        - debug:
            var: calico_check_result.stdout_lines
            verbosity: 0
          when: ROLE == "master" and HOST == "k8s-master-01"

        #coredns
        - name: Deploy coredns for kubernetes service
          script: /root/binary-kubernetes-double-master/coredns.sh
          when: ROLE == "master" and HOST == "k8s-master-01"
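The `ETCD_PEER_NODES` / `ETCD_PEER_CLUSTER` pair in the playbook vars builds a comma-separated member list with a Jinja2 loop and then strips the trailing comma. The same string construction can be sketched in plain bash to see what value the templates end up with. The `members` list below is a hypothetical stand-in for the `[etcd]` inventory group, and `build_peer_cluster` is an illustrative name, not part of the playbook.

```shell
#!/bin/bash
# Hypothetical stand-in for the [etcd] inventory group: "ip name" pairs.
members="192.168.81.197 etcd1
192.168.81.198 etcd2
192.168.81.199 etcd3
192.168.81.200 etcd4"

# Mirrors the Jinja2 loop: append "name=https://ip:2380," for each host.
build_peer_cluster() {
  local out="" ip name
  while read -r ip name; do
    out+="${name}=https://${ip}:2380,"
  done <<< "$1"
  # Counterpart of .rstrip(','): drop the one trailing comma.
  echo "${out%,}"
}

build_peer_cluster "$members"
```

The result has the `name=https://ip:2380,...` shape that etcd expects for its initial cluster list; how `etcd.conf.j2` consumes the variable is not shown in the article.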

3.1.1 haproxy.yaml

    root@k8s-master-01:~/binary-kubernetes-double-master# cat haproxy.yaml
    - name: Install some Packages for compiling lua
      package:
        name: "{{ item }}"
        state: latest
      with_items: [ gcc, build-essential, libssl-dev, zlib1g-dev, libpcre3, libpcre3-dev, libsystemd-dev, libreadline-dev ]
    - name: Download lua-5.3 package
      unarchive:
        src: http://www.lua.org/ftp/lua-5.3.5.tar.gz
        dest: /usr/local/src/
        remote_src: yes
    - name: Install lua-5.3
      shell: cd /usr/local/src/lua-5.3.5/src/; make linux
    - shell: ./lua -v
      args:
        chdir: /usr/local/src/lua-5.3.5/src/
      register: lua_version
    - assert:
        that: "'5.3.5' in lua_version.stdout"
    - debug: msg="Lua 5.3.5 has been installed successfully!"
      when: "'5.3.5' in lua_version.stdout"
    - name: Create some directory for haproxy
      file:
        path: "{{ item }}"
        state: directory
        mode: 0755
      loop:
        - /opt/haproxy/bin
        - /opt/haproxy/cfg
        - /opt/haproxy/logs
    - name: Download haproxy package
      unarchive:
        #src: https://www.haproxy.org/download/2.4/src/haproxy-2.4.2.tar.gz
        src: /root/binary-kubernetes-double-master/haproxy-2.4.2.tar.gz
        dest: /opt/haproxy
        #remote_src: yes
    - name: Install haproxy by compiling!
      shell: make -j `lscpu |awk 'NR==4{print $2}'` ARCH=x86_64 TARGET=linux-glibc USE_PCRE=1 USE_OPENSSL=1 USE_ZLIB=1 USE_SYSTEMD=1 USE_CPU_AFFINITY=1 USE_LUA=1 LUA_INC=/usr/local/src/lua-5.3.5/src/ LUA_LIB=/usr/local/src/lua-5.3.5/src/ PREFIX=/apps/haproxy && make install PREFIX=/opt/haproxy
      args:
        chdir: /opt/haproxy/haproxy-2.4.2/
    - shell: /opt/haproxy/sbin/haproxy -v | grep -i version
      register: haproxy_version
    - debug: var=haproxy_version.stdout
    - group: name=haproxy
    - user: name=haproxy group=haproxy
    - template: src=/root/binary-kubernetes-double-master/haproxy.cfg.j2 dest=/opt/haproxy/cfg/haproxy.cfg
    - name: Start haproxy service
      shell: /opt/haproxy/sbin/haproxy -W -f /opt/haproxy/cfg/haproxy.cfg -q -p /opt/haproxy/haproxy.pid; ss -tnlp | grep haproxy
      register: haproxy_process
    - debug: var=haproxy_process.stdout
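The `make -j` argument in haproxy.yaml is read from the fourth line of `lscpu` output, so it depends on that tool's output layout. A less position-dependent way to get a job count, assuming GNU coreutils is installed on the target, is `nproc`. The sketch only prints the command; the variable name and the shortened flag list are illustrative.

```shell
#!/bin/bash
# nproc (GNU coreutils) prints the number of processing units available.
build_jobs=$(nproc)

# Print the command instead of running it; the real build passes more flags.
echo "make -j ${build_jobs} TARGET=linux-glibc"
```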

3.1.2 keepalived.yaml

    root@k8s-master-01:~/binary-kubernetes-double-master# cat keepalived.yaml
    - name: Create some directory for keepalived
      file:
        path: "{{ item }}"
        state: directory
        mode: 0755
      loop:
        - /opt/keepalived/bin
        - /opt/keepalived/cfg
        - /opt/keepalived/logs
    - name: Download keepalived package
      unarchive:
        src: https://www.keepalived.org/software/keepalived-2.2.2.tar.gz
        dest: /opt/keepalived
        remote_src: yes
    - name: Install keepalived by compiling!
      shell: ./configure --prefix=/opt/keepalived && make && make install
      args:
        chdir: /opt/keepalived/keepalived-2.2.2
    - group: name=keepalived
    - user: name=keepalived group=keepalived
    - template: src=/root/binary-kubernetes-double-master/keepalived.conf.j2 dest=/opt/keepalived/cfg/keepalived.conf
    - name: Start keepalived service
      shell: /opt/keepalived/sbin/keepalived -f /opt/keepalived/cfg/keepalived.conf --log-detail --pid /opt/keepalived/keepalived.pid; ps -ef | grep keepalived
      register: keepalived_process
    - debug: var=keepalived_process.stdout
    - name: Wait for kube-apiserver vip up
      wait_for: host="{{ MASTER_VIP }}" port=22
      register: ping_result
    - debug: msg="kube-apiserver VIP is up successfully!"
      # "ping_result|changed" is no longer valid syntax in current Ansible, and wait_for does not report "changed"
      when: ping_result is succeeded

3.2 Resources

3.2.1 Requirement

    root@k8s-master-01:~/binary-kubernetes-double-master# cat requirement.sh
    #!/bin/bash
    #date: 2021-06-25
    #
    # Disable the firewall
    ufw disable
    # Disable SELinux
    #setenforce 0 ;sed -i 's/enforcing/disabled/' /etc/selinux/config
    # Disable swap
    swapoff -a ; sed -ri 's/.*swap.*/#&/' /etc/fstab
    # Time synchronization
    #apt install -y chrony # install the time sync tool
    #systemctl enable chronyd && systemctl start chronyd
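The `sed` call in requirement.sh comments out every `fstab` line that mentions swap, and it prepends another `#` each time the script runs again. A variant that skips lines already commented out can be sketched as follows. It assumes GNU sed and works on a throwaway file rather than the real `/etc/fstab`; the sample entries and the function name are made up for illustration.

```shell
#!/bin/bash
# Work on a scratch copy so the real /etc/fstab is never touched.
fstab=$(mktemp)
trap 'rm -f "$fstab"' EXIT
cat > "$fstab" << 'EOF'
UUID=1111 / ext4 defaults 0 1
/swap.img none swap sw 0 0
EOF

# Comment out swap entries, skipping lines that are already comments.
disable_swap_entries() {
  sed -ri '/^#/!s/.*swap.*/#&/' "$1"
}

disable_swap_entries "$fstab"
disable_swap_entries "$fstab"   # a second run leaves the file unchanged
cat "$fstab"
```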

3.2.2 ETCD

    root@k8s-master-01:~/binary-kubernetes-double-master# cat etcd.sh
    #!/bin/bash
    #date:2021-06-26
    #
    # download etcd binary file
    #wget https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz -P /opt/etcd
    cd /opt/etcd
    tar zxvf etcd-v3.4.9-linux-amd64.tar.gz
    mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/
    #copy certificate file
    #cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/
    #generate systemd file
    cat > /lib/systemd/system/etcd.service << EOF
    [Unit]
    Description=Etcd Server
    After=network.target
    After=network-online.target
    Wants=network-online.target
    [Service]
    Type=notify
    EnvironmentFile=/opt/etcd/cfg/etcd.conf
    ExecStart=/opt/etcd/bin/etcd \
    --cert-file=/opt/etcd/ssl/server.pem \
    --key-file=/opt/etcd/ssl/server-key.pem \
    --peer-cert-file=/opt/etcd/ssl/server.pem \
    --peer-key-file=/opt/etcd/ssl/server-key.pem \
    --trusted-ca-file=/opt/etcd/ssl/ca.pem \
    --peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
    --logger=zap
    Restart=on-failure
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target
    EOF

3.2.3 Docker

    root@k8s-master-01:~/binary-kubernetes-double-master# cat docker.sh
    #!/bin/bash
    #date: 2021-06-29
    #
    #wget https://download.docker.com/linux/static/stable/x86_64/docker-20.10.7.tgz
    cd /opt/docker
    tar zxvf docker-*
    mv docker/* /usr/bin
    #=======
    cat > /lib/systemd/system/docker.service << EOF
    [Unit]
    Description=Docker Application Container Engine
    Documentation=https://docs.docker.com
    After=network-online.target firewalld.service
    Wants=network-online.target
    [Service]
    Type=notify
    ExecStart=/usr/bin/dockerd
    # \$ keeps the shell from expanding MAINPID while the heredoc is written
    ExecReload=/bin/kill -s HUP \$MAINPID
    LimitNOFILE=infinity
    LimitNPROC=infinity
    LimitCORE=infinity
    TimeoutStartSec=0
    Delegate=yes
    KillMode=process
    Restart=on-failure
    StartLimitBurst=3
    StartLimitInterval=60s
    [Install]
    WantedBy=multi-user.target
    EOF
    #=======
    mkdir -p /etc/docker
    cat > /etc/docker/daemon.json << EOF
    {
      "registry-mirrors": ["https://jo6348gu.mirror.aliyuncs.com"],
      "bip": "172.30.0.1/16"
    }
    EOF
    #========
    systemctl daemon-reload
    systemctl start docker
    systemctl enable docker
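The unit files in these scripts are written through here-documents with an unquoted `EOF` delimiter, so the shell expands any `$NAME` in the body before systemd ever reads the file. That matters for references such as `$MAINPID` in docker.service, which systemd is meant to resolve itself. The following sketch compares the three ways of writing such a line; it only builds strings and writes no unit file.

```shell
#!/bin/bash
unset MAINPID   # not defined in the installer's shell, as in a real run

# Unquoted delimiter: the shell substitutes $MAINPID (empty) while writing.
expanded=$(cat << EOF
ExecReload=/bin/kill -s HUP $MAINPID
EOF
)

# Escaped dollar sign: the literal text $MAINPID reaches the file.
escaped=$(cat << EOF
ExecReload=/bin/kill -s HUP \$MAINPID
EOF
)

# Quoted delimiter: nothing in the body is expanded at all.
quoted=$(cat << 'EOF'
ExecReload=/bin/kill -s HUP $MAINPID
EOF
)

printf '%s\n%s\n%s\n' "$expanded" "$escaped" "$quoted"
```

The other scripts in this section use the escaped form (for example `\$KUBE_APISERVER_OPTS`), which keeps shell expansion available for values such as `$1` and `$2` in the same here-document.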

3.2.4 Kube-apiserver

    root@k8s-master-01:~/binary-kubernetes-double-master# cat kube-apiserver.sh
    #!/bin/bash
    #date: 2021-06-30
    #=========================
    cd /opt/kubernetes
    tar zxvf kubernetes-server-linux-amd64.tar.gz
    cd kubernetes/server/bin
    cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin
    cp kubectl /usr/bin/
    #=========================
    TOKEN=`head -c 16 /dev/urandom | od -An -t x | tr -d ' '`
    cat > /opt/kubernetes/cfg/token.csv << EOF
    $TOKEN,kubelet-bootstrap,10001,"system:node-bootstrapper"
    EOF
    #=========================
    cat > /lib/systemd/system/kube-apiserver.service << EOF
    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
    ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF
    #=========================
    systemctl daemon-reload
    systemctl start kube-apiserver
    systemctl enable kube-apiserver
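The bootstrap token produced by kube-apiserver.sh is later read back by the playbook as the first comma-separated field of `token.csv`. The round trip can be sketched as below, assuming GNU `od` on Linux. The temporary file is a hypothetical stand-in for `/opt/kubernetes/cfg/token.csv`, so nothing on the host is modified.

```shell
#!/bin/bash
# Same pipeline as kube-apiserver.sh: 16 random bytes rendered as hex.
token=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

# Hypothetical scratch file standing in for /opt/kubernetes/cfg/token.csv.
token_file=$(mktemp)
trap 'rm -f "$token_file"' EXIT
echo "${token},kubelet-bootstrap,10001,\"system:node-bootstrapper\"" > "$token_file"

# The playbook extracts the token as the first CSV field with awk.
read_back=$(awk -F',' '{print $1}' "$token_file")
echo "token length: ${#token}"
```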

3.2.5 Kube-controller-manager

    root@k8s-master-01:~/binary-kubernetes-double-master# cat kube-controller-manager.sh
    #!/bin/bash
    #date:2021-06-30
    #
    cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
    KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --leader-elect=true \\
    --kubeconfig=/opt/kubernetes/cfg/kube-controller-manager.kubeconfig \\
    --bind-address=$2 \\
    --allocate-node-cidrs=true \\
    --cluster-cidr=10.244.0.0/16 \\
    --service-cluster-ip-range=10.0.0.0/24 \\
    --cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
    --cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --root-ca-file=/opt/kubernetes/ssl/ca.pem \\
    --service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
    --cluster-signing-duration=87600h0m0s"
    EOF
    #======================
    cat > /lib/systemd/system/kube-controller-manager.service << EOF
    [Unit]
    Description=Kubernetes Controller Manager
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
    ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF
    #======================
    cd /opt/kubernetes/ssl
    KUBE_CONFIG="/opt/kubernetes/cfg/kube-controller-manager.kubeconfig"
    KUBE_APISERVER="$1"
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials kube-controller-manager \
      --client-certificate=./kube-controller-manager.pem \
      --client-key=./kube-controller-manager-key.pem \
      --embed-certs=true \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-controller-manager \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
    #======================
    systemctl daemon-reload
    systemctl start kube-controller-manager
    systemctl enable kube-controller-manager

3.2.6 Kube-scheduler

    root@k8s-master-01:~/binary-kubernetes-double-master# cat kube-scheduler.sh
    #!/bin/bash
    #date:2021-06-30
    #
    cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
    KUBE_SCHEDULER_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --leader-elect \\
    --kubeconfig=/opt/kubernetes/cfg/kube-scheduler.kubeconfig \\
    --bind-address=$2"
    #--master=127.0.0.1:8080
    EOF
    #========================
    cd /opt/kubernetes/ssl
    KUBE_CONFIG="/opt/kubernetes/cfg/kube-scheduler.kubeconfig"
    KUBE_APISERVER="$1"
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials kube-scheduler \
      --client-certificate=./kube-scheduler.pem \
      --client-key=./kube-scheduler-key.pem \
      --embed-certs=true \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-scheduler \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
    #==========================
    cat > /lib/systemd/system/kube-scheduler.service << EOF
    [Unit]
    Description=Kubernetes Scheduler
    Documentation=https://github.com/kubernetes/kubernetes
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
    ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
    Restart=on-failure
    [Install]
    WantedBy=multi-user.target
    EOF
    #=========================
    systemctl daemon-reload
    systemctl start kube-scheduler
    systemctl enable kube-scheduler

3.2.7 Kube-admin

    root@k8s-master-01:~/binary-kubernetes-double-master# cat kube-admin.sh
    #!/bin/bash
    #date:2021-06-30
    #
    mkdir /root/.kube
    cd /opt/kubernetes/kubernetes/server/bin
    cp kubectl /usr/bin/
    KUBE_CONFIG="/root/.kube/config"
    KUBE_APISERVER="$1"
    cd /opt/kubernetes/ssl
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials cluster-admin \
      --client-certificate=./admin.pem \
      --client-key=./admin-key.pem \
      --embed-certs=true \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=cluster-admin \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

3.2.8 Kubelet

    root@k8s-master-01:~/binary-kubernetes-double-master# cat kubelet.sh
    #!/bin/bash
    #date:2021-06-30
    #
    cd /opt/kubernetes/kubernetes/server/bin
    cp kubelet kube-proxy /opt/kubernetes/bin
    #======================
    cat > /opt/kubernetes/cfg/kubelet.conf << EOF
    KUBELET_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --hostname-override=`hostname` \\
    --network-plugin=cni \\
    --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
    --bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
    --config=/opt/kubernetes/cfg/kubelet-config.yml \\
    --cert-dir=/opt/kubernetes/ssl \\
    --pod-infra-container-image=lizhenliang/pause-amd64:3.0"
    EOF
    #======================
    cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
    kind: KubeletConfiguration
    apiVersion: kubelet.config.k8s.io/v1beta1
    address: 0.0.0.0
    port: 10250
    readOnlyPort: 10255
    cgroupDriver: cgroupfs
    clusterDNS:
    - 10.0.0.2
    clusterDomain: cluster.local
    failSwapOn: false
    authentication:
      anonymous:
        enabled: false
      webhook:
        cacheTTL: 2m0s
        enabled: true
      x509:
        clientCAFile: /opt/kubernetes/ssl/ca.pem
    authorization:
      mode: Webhook
      webhook:
        cacheAuthorizedTTL: 5m0s
        cacheUnauthorizedTTL: 30s
    evictionHard:
      imagefs.available: 15%
      memory.available: 100Mi
      nodefs.available: 10%
      nodefs.inodesFree: 5%
    maxOpenFiles: 1000000
    maxPods: 110
    EOF
    #=========================
    KUBE_CONFIG="/opt/kubernetes/cfg/bootstrap.kubeconfig"
    KUBE_APISERVER="$1"
    TOKEN="$2" # must match the value in token.csv
    # Generate the kubelet bootstrap kubeconfig file
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials "kubelet-bootstrap" \
      --token=${TOKEN} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user="kubelet-bootstrap" \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
    #==============================
    cat > /lib/systemd/system/kubelet.service << EOF
    [Unit]
    Description=Kubernetes Kubelet
    After=docker.service
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
    ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
    Restart=on-failure
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target
    EOF
    #==============================
    systemctl daemon-reload
    systemctl start kubelet
    systemctl enable kubelet
    #=============================
    cat > /opt/kubernetes/cfg/apiserver-to-kubelet-rbac.yaml << EOF
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:kube-apiserver-to-kubelet
    rules:
      - apiGroups:
          - ""
        resources:
          - nodes/proxy
          - nodes/stats
          - nodes/log
          - nodes/spec
          - nodes/metrics
          - pods/log
        verbs:
          - "*"
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:kube-apiserver
      namespace: ""
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:kube-apiserver-to-kubelet
    subjects:
      - apiGroup: rbac.authorization.k8s.io
        kind: User
        name: kubernetes
    EOF

3.2.9 Kube-proxy

    root@k8s-master-01:~/binary-kubernetes-double-master# cat kube-proxy.sh
    #!/bin/bash
    #date:2021-07-01
    #
    cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
    KUBE_PROXY_OPTS="--logtostderr=false \\
    --v=2 \\
    --log-dir=/opt/kubernetes/logs \\
    --config=/opt/kubernetes/cfg/kube-proxy-config.yml"
    EOF
    #==============
    cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
    kind: KubeProxyConfiguration
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    metricsBindAddress: 0.0.0.0:10249
    clientConnection:
      kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
    hostnameOverride: `hostname`
    clusterCIDR: 10.0.0.0/24
    EOF
    #===============
    cd /opt/kubernetes/ssl/
    KUBE_CONFIG="/opt/kubernetes/cfg/kube-proxy.kubeconfig"
    KUBE_APISERVER="$1"
    kubectl config set-cluster kubernetes \
      --certificate-authority=/opt/kubernetes/ssl/ca.pem \
      --embed-certs=true \
      --server=${KUBE_APISERVER} \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-credentials kube-proxy \
      --client-certificate=/opt/kubernetes/ssl/kube-proxy.pem \
      --client-key=/opt/kubernetes/ssl/kube-proxy-key.pem \
      --embed-certs=true \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config set-context default \
      --cluster=kubernetes \
      --user=kube-proxy \
      --kubeconfig=${KUBE_CONFIG}
    kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
    #===============
    cat > /lib/systemd/system/kube-proxy.service << EOF
    [Unit]
    Description=Kubernetes Proxy
    After=network.target
    [Service]
    EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
    ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
    Restart=on-failure
    LimitNOFILE=65536
    [Install]
    WantedBy=multi-user.target
    EOF
    #===============
    systemctl daemon-reload
    systemctl start kube-proxy
    systemctl enable kube-proxy

3.2.10 Calicoctl

    root@k8s-master-01:~/binary-kubernetes-double-master# cat calicoctl.sh
    #!/bin/bash
    #date:2021-07-03
    #
    mkdir -pv /etc/calico/
    cat > /etc/calico/calicoctl.cfg << EOF
    apiVersion: projectcalico.org/v3
    kind: CalicoAPIConfig
    metadata:
    spec:
      datastoreType: "kubernetes"
      kubeconfig: "/root/.kube/config"
    EOF
    cat > /opt/kubernetes/cfg/ippool-ipip-always.yaml << EOF
    apiVersion: projectcalico.org/v3
    kind: IPPool
    metadata:
      name: my-ippool
    spec:
      cidr: 172.15.0.0/16
      ipipMode: Always
      natOutgoing: true
      nodeSelector: all()
      disabled: false
    EOF
    #calicoctl apply -f /opt/kubernetes/cfg/ippool-ipip-always.yaml

3.2.11 CoreDNS

    root@k8s-master-01:~/binary-kubernetes-double-master# cat coredns.sh
    #!/bin/bash
    #date:2021-07-03
    #
    cat > /opt/kubernetes/cfg/coredns.yaml << EOF
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: Reconcile
      name: system:coredns
    rules:
    - apiGroups:
      - ""
      resources:
      - endpoints
      - services
      - pods
      - namespaces
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - get
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
        addonmanager.kubernetes.io/mode: EnsureExists
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      Corefile: |
        .:53 {
            errors
            health {
                lameduck 5s
            }
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa {
                pods insecure
                fallthrough in-addr.arpa ip6.arpa
                ttl 30
            }
            prometheus :9153
            forward . /etc/resolv.conf {
                max_concurrent 1000
            }
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      # replicas: not specified here:
      # 1. In order to make Addon Manager do not reconcile this replicas parameter.
      # 2. Default is 1.
      # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
        spec:
          securityContext:
            seccompProfile:
              type: RuntimeDefault
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                    - key: k8s-app
                      operator: In
                      values: ["kube-dns"]
                  topologyKey: kubernetes.io/hostname
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          nodeSelector:
            kubernetes.io/os: linux
          containers:
          - name: coredns
            image: coredns/coredns:1.8.0
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /ready
                port: 8181
                scheme: HTTP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
          dnsPolicy: Default
          volumes:
          - name: config-volume
            configMap:
              name: coredns
              items:
              - key: Corefile
                path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.0.0.2
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP
    EOF
    #=============
    kubectl apply -f /opt/kubernetes/cfg/coredns.yaml

3.2.12 check-node

    root@k8s-master-01:~/binary-kubernetes-double-master# cat check-node.sh
    #!/bin/bash
    #date:2021-07-09
    echo 0 > /root/binary-kubernetes-double-master/node_number
    NODE_NUMBER=`cat /root/binary-kubernetes-double-master/node_number`
    until [[ $NODE_NUMBER == 4 ]]
    do
        echo $(kubectl get csr | grep "Pending" | awk '{print $1}' | wc -l) > /root/binary-kubernetes-double-master/node_number
        NODE_NUMBER=`cat /root/binary-kubernetes-double-master/node_number`
    done
    rm /root/binary-kubernetes-double-master/node_number -f
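The loop in check-node.sh polls without pausing and passes the count through a temporary file. The following is a minimal sketch of the same wait with a sleep interval and a timeout. The function names and the `CSR_CMD` override are hypothetical, and the sketch waits for "at least N" pending requests where the original waits for exactly 4.

```shell
#!/usr/bin/env bash
# Sketch only: count_pending, wait_for_pending and CSR_CMD are illustrative names.

# Count the lines on stdin that contain "Pending" (prints 0 when none match).
count_pending() {
    grep -c "Pending" || true
}

# wait_for_pending EXPECTED TIMEOUT_SECONDS [INTERVAL_SECONDS]
# Returns 0 once at least EXPECTED requests are pending, 1 on timeout.
wait_for_pending() {
    wfp_expected=$1
    wfp_timeout=$2
    wfp_interval=${3:-2}
    wfp_waited=0
    while :; do
        # CSR_CMD lets a test replace the real "kubectl get csr" call.
        wfp_n=$(${CSR_CMD:-kubectl get csr} 2>/dev/null | count_pending)
        if [ "$wfp_n" -ge "$wfp_expected" ]; then return 0; fi
        if [ "$wfp_waited" -ge "$wfp_timeout" ]; then return 1; fi
        sleep "$wfp_interval"
        wfp_waited=$((wfp_waited + wfp_interval))
    done
}
```

On the master this could be called as `wait_for_pending 4 300 5 || exit 1`, which gives up after roughly five minutes instead of spinning forever.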

3.2.13 certificate

etcd

    root@k8s-master-01:~/binary-kubernetes-double-master# cat certificate-etcd.sh
    #!/bin/bash
    #date: 2021-06-25
    #
    cd /usr/local/bin/
    ln -s cfssl-certinfo_linux-amd64 cfssl-certinfo
    ln -s cfssljson_linux-amd64 cfssljson
    ln -s cfssl_linux-amd64 cfssl
    cd ~/TLS/etcd
    #Create CA
    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "www": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF
    cat > ca-csr.json << EOF
    {
      "CN": "etcd CA",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing"
        }
      ]
    }
    EOF
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    #Create certificate
    cat > server-csr.json << EOF
    {
      "CN": "etcd",
      "hosts": [
        $1
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing"
        }
      ]
    }
    EOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
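Because the script pastes `$1` straight into the `hosts` array, the argument has to arrive as a comma-separated list of JSON strings. The playbook builds that list in `CER_HOSTS`, wrapping each quoted IP in single quotes so the shell passes the double quotes through. The sketch below shows the shape of the expected argument. `join_quoted` is a hypothetical helper, not something the original scripts contain.

```shell
#!/usr/bin/env bash
# Sketch only: join_quoted is an illustrative helper.

# join_quoted IP... -> "ip1","ip2",...
join_quoted() {
    jq_out=""
    for jq_ip in "$@"; do
        jq_out="${jq_out}\"${jq_ip}\","
    done
    # Drop the trailing comma, mirroring HOSTS.rstrip(',') in the playbook.
    printf '%s\n' "${jq_out%,}"
}

# The four etcd hosts from the inventory.
join_quoted 192.168.81.197 192.168.81.198 192.168.81.199 192.168.81.200
```

The printed line is what `certificate-etcd.sh` needs to see as its first argument for `server-csr.json` to be valid JSON.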

kube-apiserver

    root@k8s-master-01:~/binary-kubernetes-double-master# cat certificate-kube-apiserver.sh
    #!/bin/bash
    #date: 2021-06-30
    #===============
    cd ~/TLS/k8s
    cat > ca-config.json << EOF
    {
      "signing": {
        "default": {
          "expiry": "87600h"
        },
        "profiles": {
          "kubernetes": {
            "expiry": "87600h",
            "usages": [
              "signing",
              "key encipherment",
              "server auth",
              "client auth"
            ]
          }
        }
      }
    }
    EOF
    cat > ca-csr.json << EOF
    {
      "CN": "kubernetes",
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "Beijing",
          "ST": "Beijing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF
    cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
    #=================
    cat > server-csr.json << EOF
    {
      "CN": "kubernetes",
      "hosts": [
        "10.0.0.1",
        "127.0.0.1",
        $1,
        "$2",
        "kubernetes",
        "kubernetes.default",
        "kubernetes.default.svc",
        "kubernetes.default.svc.cluster",
        "kubernetes.default.svc.cluster.local"
      ],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
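The script expects two arguments: the quoted host list and one extra address in `$2`. If it is called with only the host list, `"$2"` renders as an empty string entry in `hosts`. A minimal sketch of a guard that leaves the extra entry out when it is missing follows. `build_hosts` is a hypothetical helper, and reading `$2` as the VIP (`MASTER_VIP` in the inventory) is an assumption.

```shell
#!/usr/bin/env bash
# Sketch only: build_hosts is illustrative; "$2" being the VIP is an assumption.

# build_hosts QUOTED_HOST_LIST [VIP]
# Prints the host entries, appending "VIP" only when a second argument is given.
build_hosts() {
    if [ -n "${2:-}" ]; then
        printf '%s,"%s"\n' "$1" "$2"
    else
        printf '%s\n' "$1"
    fi
}

build_hosts '"192.168.81.197","192.168.81.198"' 192.168.81.201
build_hosts '"192.168.81.197"'
```

The output of `build_hosts` could then replace the `$1,"$2"` pair inside the heredoc.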

kube-controller-manager

    root@k8s-master-01:~/binary-kubernetes-double-master# cat certificate-controller-manager.sh
    #!/bin/bash
    #date: 2021-06-30
    #===============
    cd ~/TLS/k8s
    cat > kube-controller-manager-csr.json << EOF
    {
      "CN": "system:kube-controller-manager",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    EOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

kube-scheduler

    root@k8s-master-01:~/binary-kubernetes-double-master# cat certificate-scheduler.sh
    #!/bin/bash
    #date:2021-06-30
    #
    #================
    cd ~/TLS/k8s
    cat > kube-scheduler-csr.json << EOF
    {
      "CN": "system:kube-scheduler",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    EOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

kube-admin

    root@k8s-master-01:~/binary-kubernetes-double-master# cat certificate-admin.sh
    #!/bin/bash
    #date:2021-06-30
    #
    #===============
    cd ~/TLS/k8s
    cat > admin-csr.json <<EOF
    {
      "CN": "admin",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "system:masters",
          "OU": "System"
        }
      ]
    }
    EOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

kube-proxy

    root@k8s-master-01:~/binary-kubernetes-double-master# cat certificate-kube-proxy.sh
    #!/bin/bash
    #date:2021-07-01
    #
    cd ~/TLS/k8s
    cat > kube-proxy-csr.json << EOF
    {
      "CN": "system:kube-proxy",
      "hosts": [],
      "key": {
        "algo": "rsa",
        "size": 2048
      },
      "names": [
        {
          "C": "CN",
          "L": "BeiJing",
          "ST": "BeiJing",
          "O": "k8s",
          "OU": "System"
        }
      ]
    }
    EOF
    cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

3.3 template

3.3.1 etcd.conf.j2

    root@k8s-master-01:~/binary-kubernetes-double-master# cat etcd.conf.j2
    #[Member]
    ETCD_NAME="{{ NODE_NAME }}"
    ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
    ETCD_LISTEN_PEER_URLS="https://{{inventory_hostname}}:2380"
    ETCD_LISTEN_CLIENT_URLS="https://{{inventory_hostname}}:2379"
    #[Clustering]
    ETCD_INITIAL_ADVERTISE_PEER_URLS="https://{{inventory_hostname}}:2380"
    ETCD_ADVERTISE_CLIENT_URLS="https://{{inventory_hostname}}:2379"
    ETCD_INITIAL_CLUSTER="{{ ETCD_PEER_CLUSTER }}"
    ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
    ETCD_INITIAL_CLUSTER_STATE="new"

3.3.2 haproxy.cfg.j2

    root@k8s-master-01:~/binary-kubernetes-double-master# cat haproxy.cfg.j2
    global
        log 127.0.0.1 local2
        chroot /opt/haproxy
        pidfile /opt/haproxy/haproxy.pid
        maxconn 4000
        user haproxy
        group haproxy
        daemon
        # turn on stats unix socket
        stats socket /opt/haproxy/stats

    defaults
        mode tcp    # TCP mode, so HTTPS traffic passes through
        log global
        # option httplog
        option dontlognull
        option http-server-close
        # option forwardfor except 127.0.0.0/8
        option redispatch
        retries 3
        timeout http-request 10s
        timeout queue 1m
        timeout connect 10s
        timeout client 1m
        timeout server 1m
        timeout http-keep-alive 10s
        timeout check 10s
        maxconn 3000
    #use_backend static if url_static
    #default_backend app

    listen stats    # statistics web page
        mode http
        bind *:9443
        stats uri /admin/stats
        monitor-uri /monitoruri

    frontend showDoc
        bind *:7443
        use_backend kube-apiserver    # must match the backend name below

    backend kube-apiserver
        balance roundrobin
    {%- for h in groups['etcd'] -%}
    {% if hostvars[h]['ROLE'] == 'master' %}
        server {{ hostvars[h]['HAPROXY_BACKEND'] }} {{ h }}:6443 check
    {%- endif -%}
    {%- endfor -%}
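The Jinja2 loop at the end of the template is meant to emit one `server` line per host whose `ROLE` is `master`. The sketch below mimics that filtering in plain shell so the intended result can be seen without running Ansible. `render_backends` and its three-column input format are hypothetical. The exact whitespace of the real rendered file depends on the template's `{%- ... -%}` markers, so it is worth checking the generated file with `haproxy -c -f <file>`.

```shell
#!/usr/bin/env bash
# Sketch only: render_backends and its input format are illustrative.

# stdin: one "<ip> <ROLE> <HAPROXY_BACKEND>" triple per line
render_backends() {
    while read -r rb_ip rb_role rb_backend; do
        if [ "$rb_role" = master ]; then
            printf '    server %s %s:6443 check\n' "$rb_backend" "$rb_ip"
        fi
    done
}

# Values taken from the host-k8s inventory; "-" stands for "not set".
printf '%s\n' \
    '192.168.81.197 master app1' \
    '192.168.81.198 master app2' \
    '192.168.81.199 worker -' \
    '192.168.81.200 worker -' | render_backends
```

For this inventory only the two master entries, `app1` and `app2`, produce backend lines.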

3.3.3 keepalived.conf.j2

    root@k8s-master-01:~/binary-kubernetes-double-master# cat keepalived.conf.j2
    global_defs {
        notification_email {
            root@localhost
        }
        script_user root
        router_id {{ KEEPALIVED_ROLE }}
        smtp_server 127.0.0.1
        vrrp_mcast_group4 224.1.101.33
    }
    vrrp_script chk_haproxy {
        script "/bin/bash -c 'if [[ $(netstat -nlp | grep 7443) ]]; then exit 0; else exit 1; fi'"  # haproxy health check
        interval 2    # run the check every 2 seconds
        weight -11    # priority adjustment when the check fails
        fall 2
        rise 3
    }
    vrrp_instance VI_1 {
        interface enp4s3
        state {{ KEEPALIVED_ROLE }}    # BACKUP on the backup node
        virtual_router_id 71    # same id means same virtual router group
        priority {{ KEEPALIVED_WEIGHT }}    # initial priority
        advert_int 1
        # unicast_peer {}
        virtual_ipaddress {
            {{ MASTER_VIP }}/24    # vip
        }
        authentication {
            auth_type PASS
            auth_pass sG6enKo2
        }
        track_script {
            chk_haproxy
        }
    }
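The failover hinges on the numbers: the inventory gives the MASTER a priority of 100 and the BACKUP 90, and `weight -11` lowers a node's priority by 11 while `chk_haproxy` is failing. The sketch below works through that arithmetic. `effective_priority` is a hypothetical helper that models only this one rule, not keepalived's full election logic.

```shell
#!/usr/bin/env bash
# Sketch only: effective_priority models just the negative-weight rule.

# effective_priority BASE WEIGHT HEALTHY(1|0)
# With a negative WEIGHT the priority drops by |WEIGHT| while the check fails.
effective_priority() {
    if [ "$3" -eq 1 ]; then
        echo "$1"
    else
        echo $(( $1 + $2 ))
    fi
}

master=$(effective_priority 100 -11 0)   # haproxy down on the MASTER node
backup=$(effective_priority 90 -11 1)    # haproxy healthy on the BACKUP node
if [ "$backup" -gt "$master" ]; then
    echo "VIP moves to BACKUP ($backup > $master)"
else
    echo "VIP stays on MASTER ($master >= $backup)"
fi
```

With these values the MASTER falls to 89, below the BACKUP's 90, which is why the weight has to exceed the 10-point gap between the two priorities.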

3.3.4 kube-apiserver.conf.j2

    root@k8s-master-01:~/binary-kubernetes-double-master# cat kube-apiserver.conf.j2
    KUBE_APISERVER_OPTS="--logtostderr=false \
    --v=2 \
    --log-dir=/opt/kubernetes/logs \
    --etcd-servers={{ETCD_CLIENT_CLUSTER}} \
    --bind-address={{inventory_hostname}} \
    --secure-port={{KUBEAPI_SERVER_PORT_REAL}} \
    --advertise-address={{inventory_hostname}} \
    --allow-privileged=true \
    --service-cluster-ip-range={{SERVICE_CLUSTER_IP_RANGE}} \
    --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \
    --authorization-mode=RBAC,Node \
    --enable-bootstrap-token-auth=true \
    --token-auth-file=/opt/kubernetes/cfg/token.csv \
    --service-node-port-range=30000-32767 \
    --kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \
    --kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \
    --tls-cert-file=/opt/kubernetes/ssl/server.pem \
    --tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \
    --client-ca-file=/opt/kubernetes/ssl/ca.pem \
    --service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \
    --service-account-issuer=api \
    --service-account-signing-key-file=/opt/kubernetes/ssl/server-key.pem \
    --etcd-cafile=/opt/etcd/ssl/ca.pem \
    --etcd-certfile=/opt/etcd/ssl/server.pem \
    --etcd-keyfile=/opt/etcd/ssl/server-key.pem \
    --requestheader-client-ca-file=/opt/kubernetes/ssl/ca.pem \
    --proxy-client-cert-file=/opt/kubernetes/ssl/server.pem \
    --proxy-client-key-file=/opt/kubernetes/ssl/server-key.pem \
    --requestheader-allowed-names=kubernetes \
    --requestheader-extra-headers-prefix=X-Remote-Extra- \
    --requestheader-group-headers=X-Remote-Group \
    --requestheader-username-headers=X-Remote-User \
    --enable-aggregator-routing=true \
    --audit-log-maxage=30 \
    --audit-log-maxbackup=3 \
    --audit-log-maxsize=100 \
    --audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
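`--token-auth-file` points at `/opt/kubernetes/cfg/token.csv`, whose first comma-separated field the playbook later extracts with `awk -F','`. The static token file uses the form `token,user,uid,"group"`. The sketch below shows one way such a line could be produced. The helper names, the uid `10001`, and the group value are assumptions; only the `kubelet-bootstrap` user name comes from the playbook.

```shell
#!/usr/bin/env bash
# Sketch only: helper names, uid and group below are illustrative.

# Print 32 hex characters derived from 16 random bytes.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# token_csv_line TOKEN USER USER_ID GROUP -> token,user,uid,"group"
token_csv_line() {
    printf '%s,%s,%s,"%s"\n' "$1" "$2" "$3" "$4"
}

token=$(new_bootstrap_token)
token_csv_line "$token" kubelet-bootstrap 10001 system:node-bootstrapper
```

The first field of the printed line is the value `kubelet.sh` receives as its token argument.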

4. binary-kubernetes-double-master

4.1 manifest

    root@k8s-master-01:~/binary-kubernetes-double-master# cat /root/binary-kubernetes-single-master/main.yaml
    ---
    - hosts: etcd
      remote_user: root
      gather_facts: true
      vars:
        SERVICE_CLUSTER_IP_RANGE: "10.0.0.0/24"
        KUBE_APISERVER_PORT: "6443"
        ETCD_PEER_NODES: "{% for h in groups['etcd'] %}{{ hostvars[h]['NODE_NAME'] }}=https://{{ h }}:2380,{% endfor %}"
        ETCD_PEER_CLUSTER: "{{ ETCD_PEER_NODES.rstrip(',') }}"
        ETCD_CLIENT_NODES: "{% for h in groups['etcd'] %}https://{{ h }}:2379,{% endfor %}"
        ETCD_CLIENT_CLUSTER: "{{ ETCD_CLIENT_NODES.rstrip(',') }}"
        HOSTS: "{% for h in groups['etcd'] %}'\"{{ h }}\"',{% endfor %}"
        CER_HOSTS: "{{ HOSTS.rstrip(',') }}"
        MASTER_IP: "{% for h in groups['etcd'] %} {%- if hostvars[h]['ROLE'] == 'master' -%} {{ h }} {%- endif -%} {% endfor %}"
      tasks:
        - name: Bootstrap a host without python2 installed
          raw: apt install -y python2.7

        - name: Prerequisites:/etc/hosts
          blockinfile:
            path: /etc/hosts
            block: |
              192.168.81.197 master k8s-master-01
              192.168.81.198 node1 k8s-worker-01
              192.168.81.199 node2 k8s-worker-02

        - name: Prerequisites:resolv.conf
          lineinfile:
            path: /etc/resolv.conf
            regexp: '^nameserver 114.114.114.114'
            insertbefore: '^nameserver '
            line: 'nameserver 114.114.114.114'
          tags: insert

        - name: Prerequisites:sysctl
          sysctl:
            name: "{{ item }}"
            sysctl_file: /etc/sysctl.d/k8s.conf
            value: 1
            sysctl_set: yes
            state: present
            reload: yes
          with_items:
            - net.ipv4.ip_forward
            - net.bridge.bridge-nf-call-ip6tables
            - net.bridge.bridge-nf-call-iptables

        - name: Prerequisites:br_netfilter
          modprobe:
            name: br_netfilter
            state: present

        - name: Resolving prerequisites
          script: requirement.sh

        - name: Set hostname
          hostname:
            name: "{{ HOST }}"

        - name: Install the package "conntrack"
          apt:
            name: "{{ item }}"
            state: present
          with_items:
            - conntrack
            - rsync
          tags: apt

        - name: Create some directory
          file:
            path: "{{ item }}"
            state: directory
            mode: 0755
          #with_items: [ /opt/etcd/bin, /opt/etcd/cfg, /opt/etcd/ssl, /root/TLS/etcd, /root/TLS/k8s, /opt/docker, /opt/kubernetes/bin, /opt/kubernetes/cfg, /opt/kubernetes/ssl, /opt/kubernetes/logs ]
          loop:
            - /opt/etcd/bin
            - /opt/etcd/cfg
            - /opt/etcd/ssl
            - /root/TLS/etcd
            - /root/TLS/k8s
            - /opt/docker
            - /opt/kubernetes/bin
            - /opt/kubernetes/cfg
            - /opt/kubernetes/ssl
            - /opt/kubernetes/logs

        - name: Download all required bin files
          copy:
            src: '{{ item.src }}'
            dest: '{{ item.dest }}'
            mode: 0755
          with_items:
            - { src: /root/binary-kubernetes-single-master/cfssl/, dest: /usr/local/bin }
            - { src: /root/binary-kubernetes-single-master/etcd/, dest: /opt/etcd }
            - { src: /root/binary-kubernetes-single-master/docker-20.10.7/, dest: /opt/docker }
            - { src: /root/binary-kubernetes-single-master/kubernetes-v1.20.8/, dest: /opt/kubernetes }

        #etcd
        - name: etcd:Generate the etcd certificate
          script: /root/binary-kubernetes-single-master/certificate-etcd.sh {{ CER_HOSTS }}
          when: NODE_NAME == "etcd1"

        - name: etcd:Register names of etcd certificate as ansible variable
          shell: (cd /root/TLS/etcd; find -maxdepth 1 -type f) | cut -d'/' -f2
          register: files_to_copy
          when: NODE_NAME == "etcd1"

        - name: etcd:Fetch etcd certificates to the ansible localhost
          fetch:
            src: /root/TLS/etcd/{{ item }}
            dest: /root/binary-kubernetes-single-master/certificate-etcd/
            flat: yes
            validate_checksum: no
          with_items:
            - "{{ files_to_copy.stdout_lines }}"
          when: NODE_NAME == "etcd1"

        - name: etcd:Distribute certificates to all etcd cluster nodes
          copy:
            src: /root/binary-kubernetes-single-master/certificate-etcd/
            dest: /opt/etcd/ssl

        - name: etcd:Deploy etcd cluster
          script: /root/binary-kubernetes-single-master/etcd.sh

        - name: etcd:Generate etcd configuration file
          template: src=/root/binary-kubernetes-single-master/etcd.conf.j2 dest=/opt/etcd/cfg/etcd.conf

        - name: etcd:Manage etcd service by systemd
          systemd:
            name: etcd
            daemon_reload: yes
            enabled: yes
            state: started

        - name: etcd:Check etcd cluster status
          shell: ETCDCTL_API=3 /opt/etcd/bin/etcdctl --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/server.pem --key=/opt/etcd/ssl/server-key.pem --endpoints="{{ETCD_CLIENT_CLUSTER}}" endpoint health
          register: result

        - debug:
            var: result.stderr_lines
            verbosity: 0

        #docker
        - name: docker:Deploy container engine--docker
          script: /root/binary-kubernetes-single-master/docker.sh

        - name: docker:Check container engine docker status
          shell: docker version | egrep -A 1 'Client|Engine'
          register: docker_check_result

        - debug:
            var: docker_check_result.stdout_lines
            verbosity: 0

        #kube-apiserver
        - name: kube-apiserver:Generate the kube-apiserver certificate
          script: /root/binary-kubernetes-single-master/certificate-kube-apiserver.sh {{ CER_HOSTS }}
          when: ROLE == "master"

        - include_tasks: /root/binary-kubernetes-single-master/synchronize.yaml

        - block:
            - name: kube-apiserver:Generate kube-apiserver configuration file
              template: src=/root/binary-kubernetes-single-master/kube-apiserver.conf.j2 dest=/opt/kubernetes/cfg/kube-apiserver.conf

            - name: kube-apiserver:Deploy the kube-apiserver
              script: /root/binary-kubernetes-single-master/kube-apiserver.sh

            - name: kube-apiserver:Check kube-apiserver status
              shell: /opt/kubernetes/bin/kube-apiserver --version
              register: kubeapiserver_check_result

            - debug:
                var: kubeapiserver_check_result.stdout_lines
                verbosity: 0
          rescue:
            - fail: msg="kube-apiserver deploy failed"
          always:
            - debug: msg="kube-apiserver deploy successfully"
          when: ROLE == "master"

        #kube-controller-manager
        - name: kube-controller-manager:Generate the kube-controller-manager certificate
          script: /root/binary-kubernetes-single-master/certificate-controller-manager.sh
          when: ROLE == "master"

        - include_tasks: /root/binary-kubernetes-single-master/synchronize.yaml

        - block:
            - name: kube-controller-manager:Deploy the kube-controller-manager
              script: /root/binary-kubernetes-single-master/kube-controller-manager.sh https://{{inventory_hostname}}:6443

            - name: kube-controller-manager:Check kube-controller-manager status
              shell: /opt/kubernetes/bin/kube-controller-manager --version
              register: kubecontrollermanager_check_result

            - debug:
                var: kubecontrollermanager_check_result.stdout_lines
                verbosity: 0
          rescue:
            - fail: msg="kube-controller-manager deploy failed"
          always:
            - debug: msg="kube-controller-manager deploy successfully"
          when: ROLE == "master"

        #kube-scheduler
        - name: kube-scheduler:Generate the kube-scheduler certificate
          script: /root/binary-kubernetes-single-master/certificate-scheduler.sh
          when: ROLE == "master"

        - include_tasks: /root/binary-kubernetes-single-master/synchronize.yaml

        - block:
            - name: kube-scheduler:Deploy the kube-scheduler
              script: /root/binary-kubernetes-single-master/kube-scheduler.sh https://{{MASTER_IP}}:{{KUBE_APISERVER_PORT}}

            - name: kube-scheduler:Check kube-scheduler status
              shell: /opt/kubernetes/bin/kube-scheduler --version
              register: kubescheduler_check_result

            - debug:
                var: kubescheduler_check_result.stdout_lines
                verbosity: 0
          rescue:
            - fail: msg="kube-scheduler deploy failed"
          always:
            - debug: msg="kube-scheduler deploy successfully"
          when: ROLE == "master"

        #kube-admin
        - name: kubelet:Decompression kubernetes compression package on worker nodes
          command: tar zxvf kubernetes-server-linux-amd64.tar.gz
          args:
            chdir: /opt/kubernetes/
            creates: /opt/kubernetes/kubernetes

        - name: kube-admin:Generate the kube-admin certificate
          script: /root/binary-kubernetes-single-master/certificate-admin.sh
          when: ROLE == "master"

        - name: kube-admin:Register names of kube-admin certificate as ansible variable
          shell: (cd /root/TLS/k8s; find -maxdepth 1 -name '*admin*' -type f ) | cut -d'/' -f2
          register: files_to_copy
          when: ROLE == "master"

        - name: kube-admin:Fetch kube-admin certificates to the ansible localhost
          fetch:
            src: /root/TLS/k8s/{{ item }}
            dest: /root/binary-kubernetes-single-master/certificate-k8s/
            flat: yes
            validate_checksum: no
          with_items:
            - "{{ files_to_copy.stdout_lines }}"
          when: ROLE == "master"

        - name: kube-admin:Distribute kube-admin certificates to master and node nodes
          copy:
            src: /root/binary-kubernetes-single-master/certificate-k8s/
            dest: /opt/kubernetes/ssl

        - name: kube-admin:Generate kube-admin kubeconfig
          script: /root/binary-kubernetes-single-master/kube-admin.sh https://{{MASTER_IP}}:{{KUBE_APISERVER_PORT}}

        - name: kube-admin:Check kube-admin status
          shell: /usr/bin/kubectl get cs
          register: kube_admin_check_result

        - debug:
            var: kube_admin_check_result.stdout_lines
            verbosity: 0
          when: ROLE == "master"

        #kubelet
        - name: kubelet:Authorizes kubelet-bootstrap user request the certificate
          shell: kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap
          when: ROLE == "master"

        - name: kubelet:Fetch kube-apiserver token.csv from master node
          fetch:
            src: /opt/kubernetes/cfg/token.csv
            dest: /root/binary-kubernetes-single-master/token/
            flat: yes
            validate_checksum: no
          when: ROLE == "master"

        - name: kubelet:Distribute token value to worker nodes
          copy:
            src: /root/binary-kubernetes-single-master/token/token.csv
            dest: /tmp

        - name: kubelet:Declare and register an variable for the value of token
          shell: cat /tmp/token.csv | awk -F',' '{print $1}'
          register: TOKEN

        - name: kubelet:Deploy kubelet on master and worker node
          script: /root/binary-kubernetes-single-master/kubelet.sh https://{{MASTER_IP}}:{{KUBE_APISERVER_PORT}} {{TOKEN.stdout}}

        - name: kubelet:Delete token.csv file in tmp directory
          file:
            path: /tmp/token.csv
            state: absent

        - name: kubelet:Approve certificate request
          shell: kubectl certificate approve $(kubectl get csr | grep "Pending" | awk '{print $1}')
          when: ROLE == "master"

        - pause: seconds=10

        - name: kubelet:Check kubelet status
          shell: kubectl get csr,nodes
          register: kubelet_check_result
          when: ROLE == "master"

        - debug:
            var: kubelet_check_result.stdout_lines
            verbosity: 0
          when: ROLE == "master"

        #kube-proxy
        - name: kube-proxy:Generate the kube-proxy certificate
          script: /root/binary-kubernetes-single-master/certificate-kube-proxy.sh
          when: ROLE == "master"

        - name: kube-proxy:Register names of kube-proxy certificate as ansible variable
          shell: (cd /root/TLS/k8s; find -maxdepth 1 -name '*kube-proxy*' -type f ) | cut -d'/' -f2
          register: files_to_copy
          when: ROLE == "master"

        - name: kube-proxy:Fetch kube-proxy certificates to the ansible localhost
          fetch:
            src: /root/TLS/k8s/{{ item }}
            dest: /root/binary-kubernetes-single-master/certificate-k8s/
            flat: yes
            validate_checksum: no
          with_items:
            - "{{ files_to_copy.stdout_lines }}"
          when: ROLE == "master"

        - name: kube-proxy:Distribute kube-proxy certificates to master and worker nodes
          copy:
            src: /root/binary-kubernetes-single-master/certificate-k8s/
            dest: /opt/kubernetes/ssl

        - name: kube-proxy:Deploy kube-proxy
          script: /root/binary-kubernetes-single-master/kube-proxy.sh https://{{MASTER_IP}}:{{KUBE_APISERVER_PORT}}

        - name: kube-proxy:Check kube-proxy status
          shell: systemctl status kube-proxy.service
          register: kube_proxy_check_result
          tags: proxy

        - debug:
            var: kube_proxy_check_result.stdout_lines
            verbosity: 0
          tags: proxy

        #Calico
        - name: Calico:Download calico YAML file
          get_url:
            url: '{{ item.url }}'
            dest: '{{ item.dest }}'
            mode: 0755
          with_items:
            - { url: 'https://docs.projectcalico.org/manifests/calico.yaml', dest: /opt/kubernetes/cfg/calico.yaml }
            - { url: 'https://github.com/projectcalico/calicoctl/releases/download/v3.19.1/calicoctl', dest: /usr/bin/calicoctl }
          when: ROLE == "master"

        - name: Calico:Deploy calico
          shell: "{{ item }}"
          loop:
            - kubectl apply -f /opt/kubernetes/cfg/calico.yaml
            - kubectl apply -f /opt/kubernetes/cfg/apiserver-to-kubelet-rbac.yaml
          when: ROLE == "master"

        - name: Calico:make configuration for calicoctl
          script: /root/binary-kubernetes-single-master/calicoctl.sh {{ ETCD_CLIENT_CLUSTER }}
          tags: calicoctl
          when: ROLE == "master"

        - name: Calico:wait for calico port started
          wait_for: port=9099 state=started

        - name: Calico:Check calico status
          shell: calicoctl node status; calicoctl get nodes
          register: calico_check_result
          when: ROLE == "master"

        - debug:
            var: calico_check_result.stdout_lines
            verbosity: 0
          when: ROLE == "master"

        #coredns
        - name: Deploy coredns for kubernetes service
          script: /root/binary-kubernetes-single-master/coredns.sh
          when: ROLE == "master"

5. Kubernetes and Ansible: Supplementary Notes

5.1 How to modify the Calico IPPool

    root@k8s-master-01:~/binary-kubernetes-double-master# kubectl get ippool -o yaml
    root@k8s-master-01:~/binary-kubernetes-double-master# cat /opt/kubernetes/cfg/ippool-ipip-always.yaml
    apiVersion: projectcalico.org/v3
    kind: IPPool
    metadata:
      name: my-ippool
    spec:
      cidr: 172.15.0.0/16
      ipipMode: Always
      natOutgoing: true
      nodeSelector: all()
      disabled: false

    calicoctl apply -f /opt/kubernetes/cfg/ippool-ipip-always.yaml

    root@k8s-master-01:~/binary-kubernetes-double-master# calicoctl get ippool
    NAME                  CIDR            SELECTOR
    default-ipv4-ippool   172.16.0.0/16   all()
    my-ippool             172.15.0.0/16   all()
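When a second pool is added, its CIDR should not overlap the existing pool or the service range (`10.0.0.0/24` in the playbook). The following is a minimal sketch of an overlap check using integer arithmetic. All function names are hypothetical, and the check covers IPv4 only.

```shell
#!/usr/bin/env bash
# Sketch only: IPv4 CIDR overlap test with illustrative helper names.

# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
    iti_old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$iti_old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# First address of the block, as an integer.
cidr_first() {
    cf_base=$(ip_to_int "${1%/*}")
    cf_size=$(( 1 << (32 - ${1#*/}) ))
    echo $(( cf_base - cf_base % cf_size ))
}

# Last address of the block, as an integer.
cidr_last() {
    cl_first=$(cidr_first "$1")
    echo $(( cl_first + (1 << (32 - ${1#*/})) - 1 ))
}

# Succeeds when the two blocks share at least one address.
cidr_overlap() {
    [ "$(cidr_first "$1")" -le "$(cidr_last "$2")" ] &&
    [ "$(cidr_first "$2")" -le "$(cidr_last "$1")" ]
}

for pair in "172.16.0.0/16 172.15.0.0/16" "172.15.0.0/16 10.0.0.0/24"; do
    set -- $pair
    if cidr_overlap "$1" "$2"; then
        echo "$1 overlaps $2"
    else
        echo "$1 and $2 are disjoint"
    fi
done
```

Both pairs in the loop are reported as disjoint, so the two pools and the service range can coexist.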

5.2 Ansible Module

    {%- for h in groups['etcd'] -%}
    {% if hostvars[h]['ROLE'] == 'master' %}
    server {{ hostvars[h]['HAPROXY_BACKEND'] }} {{ h }}:6443 check
    {%- endif -%}
    {%- endfor -%}

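register: when the result of a task run on a remote host needs to be reused, register stores it in a variable. The variable belongs to the host that produced it, so other hosts cannot reference it directly and have to go through hostvars.

block: when a group of tasks shares the same execution condition, they can be placed in a block and combined with 'when', 'rescue', and 'always'.

wait_for: when tasks depend on one another, wait_for can check that the previous task has taken effect, either by waiting for a port to start listening or by checking whether a given file contains a given string.

include: similar to a function call; it loads tasks into the playbook at run time (the playbook above uses include_tasks).

synchronize: synchronizes files not only between the local host and a remote host, but also between remote hosts.

unarchive: can copy an archive from the local host to the remote host and unpack it there, or unpack an archive that is already on the remote host. In the second case the source must be declared as remote with remote_src=yes.

debug: prints the result of a task run on a remote host to the console on localhost, usually together with register. The output can be narrowed as needed, for example to result.stdout.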
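A quick way to try some of these modules outside a playbook is Ansible's ad-hoc mode (`ansible <pattern> -i <inventory> -m <module> -a <args>`). The sketch below wraps that call in a dry-run helper that prints the command by default. The `adhoc` function and the `DRY_RUN` and `INVENTORY` variables are hypothetical. `register` and `block` are playbook keywords and cannot be used ad hoc.

```shell
#!/usr/bin/env bash
# Sketch only: adhoc, DRY_RUN and INVENTORY are illustrative names.
DRY_RUN=${DRY_RUN:-1}
INVENTORY=${INVENTORY:-host-k8s}

# adhoc MODULE "ARGS": print the ad-hoc command, or run it when DRY_RUN is not 1.
adhoc() {
    if [ "$DRY_RUN" = 1 ]; then
        printf 'ansible etcd -i %s -m %s -a "%s"\n' "$INVENTORY" "$1" "$2"
    else
        ansible etcd -i "$INVENTORY" -m "$1" -a "$2"
    fi
}

# Wait until the Calico health port is listening on every host in the group.
adhoc wait_for "port=9099 state=started"
# Unpack an archive that already sits on the remote host.
adhoc unarchive "src=/opt/kubernetes/kubernetes-server-linux-amd64.tar.gz dest=/opt/kubernetes/ remote_src=yes"
# Print a variable for each host.
adhoc debug "var=inventory_hostname"
```

Setting `DRY_RUN=0` on the control node would execute the three commands against the `etcd` group instead of printing them.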