MySQL5.7 MGR介绍及安装

官方介绍:

https://dev.mysql.com/doc/refman/5.7/en/group-replication.html

http://dev.mysql.com/doc/refman/5.7/en/group-replication-getting-started.html

https://dev.mysql.com/doc/refman/5.7/en/group-replication-summary.html

1. MGR介绍

MySQL Group Replication(简称MGR)是MySQL官方于2016年12月,MySQL5.7版本出现的新特性,提供高可用、高扩展、高可靠(强一致性)的MySQL集群服务。同类型的技术产品有MariaDB Galera Cluster和Percona XtraDB Cluster。MGR由多个实例节点共同组成一个数据库集群,系统提交事务必须经过半数以上节点同意方可提交,在集群中每个节点上都维护一个数据库状态机,保证节点间事务的一致性.

MySQL组复制分单主模式和多主模式,mysql 的复制技术仅解决了数据同步的问题,如果 master 宕机,意味着数据库管理员需要介入,应用系统仍可能需要修改数据库连接地址或者重启才能实现。(这里也可以使用数据库中间件产品来避免应用系统数据库连接的问题,例如 mycat 和 atlas 等产品)。组复制在数据库层面上做到了,只要集群中大多数主机可用,则服务可用,也就是说3台服务器的集群,允许其中1台宕机。

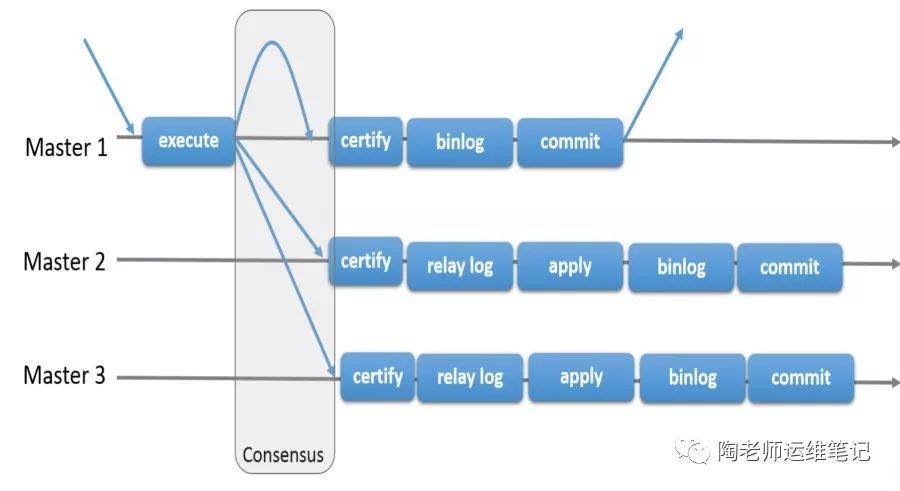

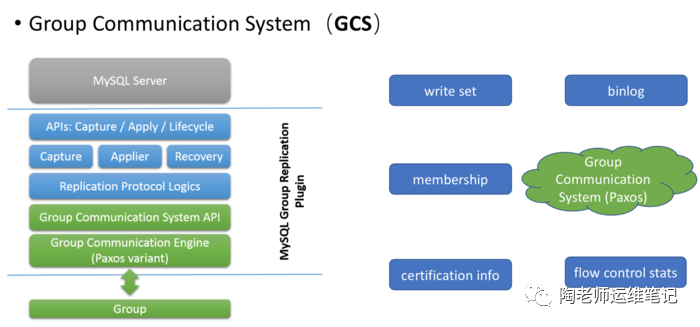

1.1 MGR架构

1.2 组复制的两种模式

MGR提供了single-primary和multi-primary两种模式。

single-primary mode(单写模式): 组内只有一个节点负责写入,读可以从任意一个节点读取,组内数据保持最终一致;

multi-primary mode(多写模式): 所有的 server 成员都可以同时接受更新;即写会下发到组内所有节点,组内所有节点同时可读,也是能够保证组内数据最终一致性。注意:一个MGR的所有节点必须配置使用同一种模式,不可混用!

1.3 MRG组复制特点

MGR优点:

高一致性,基于原生复制及paxos协议的组复制技术.

高容错性,有自动检测机制,当出现宕机后,会自动剔除问题节点,其他节点可以正常使用,当不同节点产生资源争用冲突时,会按照先到先得处理,并且内置了自动化脑裂防护机制.

高扩展性,可随时在线新增和移除节点,会自动同步所有节点上状态,直到新节点和其他节点保持一致,自动维护新的组信息.

高灵活性,以插件形式安装,有两种模式:单主模式和多主模式

限制:

存储引擎仅支持InnoDB表,并且每张表一定要有一个主键,用于做write set的冲突检测;

每个表必须提供主键;

只支持ipv4,网络需求较高;

必须打开GTID特性,二进制日志格式必须设置为ROW,用于选主与write set;

COMMIT可能会导致失败,类似于快照事务隔离级别的失败场景;

目前一个MGR集群组最多支持9个节点;

不支持外键于save point特性,无法做全局间的约束检测与部分部分回滚;

二进制日志binlog不支持Replication event checksums;

多主模式(也就是多写模式) 不支持SERIALIZABLE事务隔离级别;

多主模式不能完全支持级联外键约束;

多主模式不支持在不同节点上对同一个数据库对象并发执行DDL(在不同节点上对同一行并发进行RW事务,后发起的事务会失败);

1.4 MRG组复制使用场景

组复制的一些典型应用场景:

可伸缩的复制 - 需要非常流畅的复制架构环境,可以动态的增加、减少服务器节点数量且尽可能少地 带来副作用。例如,数据库服务部署在云上。

高可用的分片(sharding) - Sharding是实现写伸缩(scale-out)非常流行的方法。使用MySQL组复制来 实现高可用的sharding,每一个分片对应一个复制组。

可切换的master-slave复制 - 在某些特定环境下,使用单主模型会出现单主瓶颈和单点故障的问题。而写入整个组,可能会有可以有更好的可扩展性。

自动化集群系统 - 此外,你可以部署MySQL组复制,只为使用它的自动切换能力(前文和本章已经描 述过了)。

2. MRG原理及其他复制对比

来源参考: https://www.cnblogs.com/luoahong/articles/8043035.html

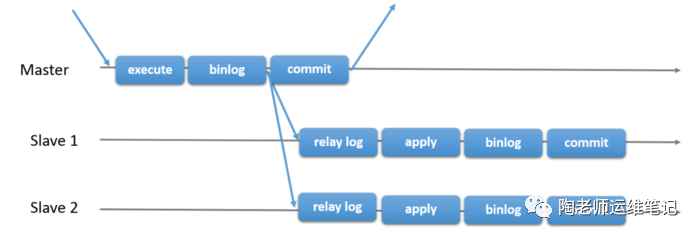

2.1 MySQL异步复制

master事务的提交不需要经过slave的确认,slave是否接收到master的binlog,master并不care。slave接收到master binlog后先写relay log,最后异步地去执行relay log中的sql应用到自身。由于master的提交不需要确保slave relay log是否被正确接受,当slave接受master binlog失败或者relay log应用失败,master无法感知。

$\color{red}{假设master发生宕机并且binlog还没来得及被slave接收,而切换程序将slave提升为新的master,就会出现数据不一致的情况!}$ 另外,在高并发的情况下,传统的主从复制,从节点可能会与主产生较大的延迟(当然mysql后续版本陆续做了优化,推出了并行复制,以此降低异步复制的延迟)

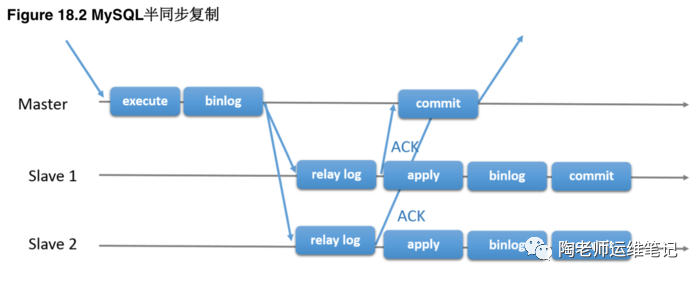

2.2 MySQL半同步复制

基于传统异步存在的缺陷,mysql在5.5版本推出半同步复制。可以说半同步复制是传统异步复制的改进,在master事务的commit之前,必须确保一个slave收到relay log并且响应给master以后,才能进行事务的commit。 但是slave对于relay log的应用仍然是异步进行的,原理如下图所示:

半同步复制,它在异步复制基础上添加了一个同步操作。master需要等待slave的 ack回复,然后才能提交事务。

2.3 MRG组复制

https://dev.mysql.com/doc/refman/5.7/en/group-replication.html

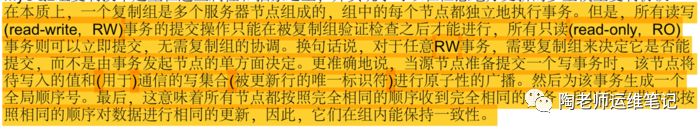

基于传统异步复制和半同步复制的缺陷——数据的一致性问题无法保证,MySQL官方在5.7.17版本正式推出组复制(MySQL Group Replication,简称MGR)。由若干个节点共同组成一个复制组,一个事务的提交,必须经过组内大多数节点(N 2 + 1)决议并通过,才能得以提交。引入组复制,主要是为了解决传统异步复制和半同步复制可能产生数据不一致的问题。组复制依靠分布式一致性协议(Paxos协议的变体),实现了分布式下数据的最终一致性,提供了真正的数据高可用方案。

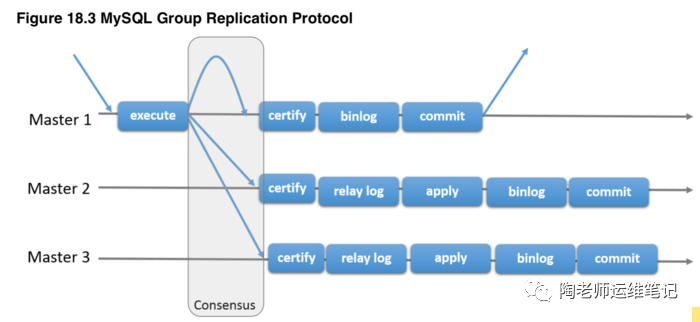

基于组的复制(Group-basedReplication)是一种被使用在容错系统中的技术。Replication-group(复制组)是由能够相互通信的多个服务器(数据库实例)组成的。在通信层,Groupreplication实现了一系列的机制:比如原子消息(atomicmessage delivery)和全序化消息(totalorderingof messages)。简而言之,一个Replication-group就是一组节点,每个节点都可以独立执行事务,而读写事务则会在于group内的其他节点进行协调之后再commit。因此,当一个事务准备提交时,会自动在group内进行原子性的广播,告知其他节点变更了什么内容/执行了什么事务。

说明:

MySQL组复制是一种 share-nothing 复制方案,其中每个 server 成员都有自己的完整数据副本。

任何 RW 事务,提交操作并不是由始发 server 单向决定的,而是由组来决定是否提交。

3. 环境准备

规划如下:

| 角色 | IP | port | server-id |

|---|---|---|---|

| DB-1 | 192.110.103.41 | 3106 | 103413106 |

| DB-2 | 192.110.103.42 | 3106 | 103423106 |

| DB-3 | 192.110.103.43 | 3106 | 103423106 |

说明:机器上port 3306已在使用被占了,只好改用3106。

4.安装MySQL软件

4.1 安装软件可以yum安装或二进制安装。本文用二进制版本安装。1)yum安装

yum localinstall https://dev.mysql.com/get/mysql57-community-release-el7-8.noarch.rpm

yum install -y mysql-community-server

#启动MySQL服务器和MySQL的自动启动

systemctl start mysqld.service

systemctl enable mysqld.service

2)二进制安装 下载软件: https://dev.mysql.com/downloads/mysql/5.7.html#downloads

#下载2进制版MySQL5.7

$wget xxx/mysql-5.7.23-linux-glibc2.12-x86_64.tar.gz .

tar -xvzf mysql-5.7.23-linux-glibc2.12-x86_64.tar.gz

mv mysql-5.7.23-linux-glibc2.12-x86_64 /usr/local/mysql-5.7.23

ln -s /usr/local/mysql-5.7.23 /usr/local/mysql

4.2 初始化

DB_BASE_DIR='/data1/mysql_3106'

mkdir -p ${DB_BASE_DIR}/{data,etc,logs,tmp}

$tree mysql_3106/

mysql_3106/

├── data

├── etc

├── logs

└── tmp

useradd mysql

chown -R mysql.mysql ${DB_BASE_DIR}

#初始化init

cd /usr/local/mysql-5.7.23/

/usr/local/mysql-5.7.23/bin/mysqld --initialize-insecure --user=mysql --basedir=/usr/local/mysql-5.7.23 --datadir=${DB_BASE_DIR}/data

5. MGR安装与配置

http://dev.mysql.com/doc/refman/5.7/en/group-replication-configuring-instances.html

https://dev.mysql.com/doc/refman/5.7/en/group-replication-deploying-in-single-primary-mode.html

5.1 my.cnf配置

在三台机器上设置环境变量,记得CURRENT_IP要相应的调整。

#在三台机器上环境变量.

PROG_BASE_DIR='/usr/local/mysql-5.7.23'

DB_BASE_DIR='/data1/mysql_3106'

CURRENT_IP='192.110.103.41' #要逐台变化

NODE1_IP='192.110.103.41'

NODE2_IP='192.110.103.42'

NODE3_IP='192.110.103.43'

PORT='3106'

SERVICE_ID=`echo ${CURRENT_IP} | awk -F"." '{printf "%s%s", $3,$4 }'`

CLUSTER_NAME='mysqlmgr-test'

生成配置文件

#mkdir -p ${DB_BASE_DIR}/{data,etc,logs,tmp}

#生成配置文件

cat > ${DB_BASE_DIR}/etc/my.cnf << EOF

[client]

port = ${PORT}

socket = ${DB_BASE_DIR}/tmp/mysql.sock

default-character-set=utf8

[mysql]

no_auto_rehash

max_allowed_packet = 16M

prompt = '\u@\h [\d]> ' # 'user@host [schema]> '

default_character_set = utf8

[mysqldump]

max_allowed_packet = 16M

[mysqld_safe]

open_files_limit = 8192

user = mysql

pid_file = ${DB_BASE_DIR}/tmp/mysql.pid

[mysqld]

# Base

port = ${PORT}

socket = ${DB_BASE_DIR}/tmp/mysql.sock

server-id=$SERVICE_ID

user = mysql

basedir = ${PROG_BASE_DIR}

plugin-dir=${PROG_BASE_DIR}/lib/plugin

datadir = ${DB_BASE_DIR}/data

tmpdir = ${DB_BASE_DIR}/tmp

max_allowed_packet = 64M

character_set_server = utf8

collation_server = utf8_general_ci

max_connections=5000

max_user_connections=1000

thread_cache_size = 128

# Query Cache

#query_cache_type = 0 # <= MySQL 8.0.3

# Session variables

sort_buffer_size = 2M

tmp_table_size = 32M

read_buffer_size = 128k

read_rnd_buffer_size = 256k

join_buffer_size = 128k

# Other buffers and caches

table_definition_cache = 1400

table_open_cache = 2000

table_open_cache_instances = 16

# MySQL error log

log-error = ${DB_BASE_DIR}/logs/mysqld.err

innodb_print_all_deadlocks = 1

# Slow Query Log

slow_query_log = 1

slow_query_log_file = ${DB_BASE_DIR}/logs/mysql_slow.log

log_queries_not_using_indexes = 0

long_query_time = 1

min_examined_row_limit = 0

# General Query Log

general_log = 0

general_log_file = ${DB_BASE_DIR}/logs/mysql_general.log

# Performance Schema

# performance_schema = OFF

# Binary logging and Replication

log_bin = mysql-bin

binlog_format = ROW

binlog_checksum = NONE # or CRC32

master_verify_checksum = OFF # ON if binlog_checksum = CRC32

slave_sql_verify_checksum = OFF # ON if binlog_checksum = CRC32

binlog_cache_size = 1M

binlog_stmt_cache_size = 3M

max_binlog_size = 512M

sync_binlog = 1

expire_logs_days = 7

log_slave_updates = 1

relay_log = mysql-relay-bin

relay_log_purge = 1

# MyISAM variables

key_buffer_size = 8M

myisam_recover_options = 'BACKUP,FORCE'

# MEMORY variables

max_heap_table_size = 64M # Should be greater or equal to tmp_table_size

# InnoDB variables

default_storage_engine = InnoDB

innodb_file_per_table = 1

innodb_data_home_dir=${DB_BASE_DIR}/data

innodb_data_file_path=ibdata1:10M:autoextend

innodb_log_group_home_dir=${DB_BASE_DIR}/data

innodb_buffer_pool_size = 4G

innodb_buffer_pool_instances = 8

innodb_stats_on_metadata=0

innodb_lock_wait_timeout=30

innodb_flush_log_at_trx_commit = 2

innodb_log_buffer_size = 8M

innodb_log_file_size = 1024M

# innodb_write_io_threads = 8 # If you have a strong I/O system or SSD

# innodb_read_io_threads = 8 # If you have a strong I/O system or SSD

# innodb_io_capacity = 1000 # If you have a strong I/O system or SSD

# Group Replication parameter

gtid_mode = ON

enforce_gtid_consistency = ON

master_info_repository = TABLE

relay_log_info_repository = TABLE

slave_parallel_workers = 10

slave_preserve_commit_order = ON

slave_parallel_type = LOGICAL_CLOCK

transaction_write_set_extraction = XXHASH64

loose-group_replication_group_name = "aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

loose-group_replication_start_on_boot = OFF

loose-group_replication_local_address = "${CURRENT_IP}:${PORT}1"

loose-group_replication_group_seeds = "${NODE1_IP}:${PORT}1,${NODE2_IP}:${PORT}1,${NODE3_IP}:${PORT}1"

loose-group_replication_bootstrap_group = OFF

loose-group_replication_single_primary_mode = FALSE # = multi-primary

loose-group_replication_enforce_update_everywhere_checks=ON # = multi-primary

report_host=${CURRENT_IP}

report_port=${PORT}

EOF

#useradd mysql

chown -R mysql.mysql ${DB_BASE_DIR}

结果如下:

cat /data1/mysql_3106/etc/my.cnf

[client]

port = 3106

socket = /data1/mysql_3106/tmp/mysql.sock

default-character-set=utf8

[mysql]

no_auto_rehash

max_allowed_packet = 16M

prompt = '\u@\h [\d]> ' # 'user@host [schema]> '

default_character_set = utf8

[mysqldump]

max_allowed_packet = 16M

[mysqld_safe]

open_files_limit = 8192

user = mysql

pid_file = /data1/mysql_3106/tmp/mysql.pid

[mysqld]

# Base

port = 3106

socket = /data1/mysql_3106/tmp/mysql.sock

server-id=10341

user = mysql

basedir = /usr/local/mysql-5.7.23

plugin-dir=/usr/local/mysql-5.7.23/lib/plugin

datadir = /data1/mysql_3106/data

tmpdir = /data1/mysql_3106/tmp

max_allowed_packet = 64M

character_set_server = utf8

collation_server = utf8_general_ci

max_connections=5000

max_user_connections=1000

thread_cache_size = 128

# Query Cache

#query_cache_type = 0 # <= MySQL 8.0.3

# Session variables

sort_buffer_size = 2M

tmp_table_size = 32M

read_buffer_size = 128k

read_rnd_buffer_size = 256k

join_buffer_size = 128k

# Other buffers and caches

table_definition_cache = 1400

table_open_cache = 2000

table_open_cache_instances = 16

# MySQL error log

log-error = /data1/mysql_3106/logs/mysqld.err

innodb_print_all_deadlocks = 1

# Slow Query Log

slow_query_log = 1

slow_query_log_file = /data1/mysql_3106/logs/mysql_slow.log

log_queries_not_using_indexes = 0

long_query_time = 1

min_examined_row_limit = 0

# General Query Log

general_log = 0

general_log_file = /data1/mysql_3106/logs/mysql_general.log

# Performance Schema

# performance_schema = OFF

# Binary logging and Replication

log_bin = mysql-bin

binlog_format = ROW

binlog_checksum = NONE # or CRC32

master_verify_checksum = OFF # ON if binlog_checksum = CRC32

slave_sql_verify_checksum = OFF # ON if binlog_checksum = CRC32

binlog_cache_size = 1M

binlog_stmt_cache_size = 3M

max_binlog_size = 512M

sync_binlog = 1

expire_logs_days = 7

log_slave_updates = 1

relay_log = mysql-relay-bin

relay_log_purge = 1

# MyISAM variables

key_buffer_size = 8M

myisam_recover_options = 'BACKUP,FORCE'

# MEMORY variables

max_heap_table_size = 64M # Should be greater or equal to tmp_table_size

# InnoDB variables

default_storage_engine = InnoDB

innodb_file_per_table = 1

innodb_data_home_dir=/data1/mysql_3106/data

innodb_data_file_path=ibdata1:10M:autoextend

innodb_log_group_home_dir=/data1/mysql_3106/data

innodb_buffer_pool_size = 4G

innodb_buffer_pool_instances = 8

innodb_stats_on_metadata=0

innodb_lock_wait_timeout=30

innodb_flush_log_at_trx_commit = 2

innodb_log_buffer_size = 8M

innodb_log_file_size = 1024M

# innodb_write_io_threads = 8 # If you have a strong I/O system or SSD

# innodb_read_io_threads = 8 # If you have a strong I/O system or SSD

# innodb_io_capacity = 1000 # If you have a strong I/O system or SSD

# Group Replication parameter

gtid_mode = ON

enforce_gtid_consistency = ON

master_info_repository = TABLE

relay_log_info_repository = TABLE

slave_parallel_workers = 10

slave_preserve_commit_order = ON

slave_parallel_type = LOGICAL_CLOCK

#以便在server收集写集合的同时将其记录到二进制日志。写集合基于每行的主键,并且是行更改后的唯一标识此标识将用于检测冲突。

transaction_write_set_extraction = XXHASH64

#组的名字可以随便起,但不能用主机的GTID! 所有节点的这个组名必须保持一致!

loose-group_replication_group_name = "aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

##为了避免每次启动自动引导具有相同名称的第二个组,所以设置为OFF。

loose-group_replication_start_on_boot = OFF

loose-group_replication_local_address = "192.110.103.41:31061"

loose-group_replication_group_seeds = "192.110.103.41:31061,192.110.103.42:31061,192.110.103.43:31061"

#为了避免每次启动自动引导具有相同名称的第二个组,所以设置为OFF。

loose-group_replication_bootstrap_group = OFF

##关闭单主模式的参数(本例测试时多主模式,所以关闭该项,开启多主模式的参数

loose-group_replication_single_primary_mode = FALSE # = multi-primary

loose-group_replication_enforce_update_everywhere_checks=ON # = multi-primary

report_host=192.110.103.41

report_port=3106

# 允许加入组复制的客户机来源的ip白名单

#loose-group_replication_ip_whitelist="192.110.0.0/16,127.0.0.1/8"

主要MGR配置说明:

cat my.cnf |grep group_replication -i

transaction_write_set_extraction=XXHASH64

loose-group_replication_group_name = "aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

loose-group_replication_start_on_boot = OFF

loose-group_replication_local_address = "192.110.103.43:31061"

loose-group_replication_group_seeds = "192.110.103.41:31061,192.110.103.42:31061,192.110.103.43:31061"

loose-group_replication_bootstrap_group = OFF

loose-group_replication_single_primary_mode = FALSE # = multi-primary

loose-group_replication_enforce_update_everywhere_checks=ON # = multi-primary

第 1 行表示节点必须收集每个事务的写集(write set),并使用 XXHASH64 哈希算法将其编码为hash 值。

第 2 行用来告诉插件,有一个名为"aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"的组需要加入,或者 需要创建。

第 3 行告诉插件,在启动MySQL实例时不要自动启动组复制功能。

第 4 行告诉插件,该实例使用 192.110.103.43:31061端口作为组中成员之间的通信地址。选项 groupreplicationlocal_address 配置的本地地址必须能和组中其他所有成员通信。

第 5 行 告诉插件,如果节点要加入该组,需要联系这些主机。这些是种子成员(seed members),当 节点要连接该组时需要使用这些成员。在某节点要加入组时,需要联系其中的一个(种子),然后请求 组重新配置成员列表,以允许在组中接受该节点。注意,不需要在该选项中列出组中的所有成员,只 需要列出节点希望加入组时应该联系的服务器列表。

第6,7行 :和单主/多主模式有关。配置设置为多主模式。

单主模式配置:loose-groupreplicationsingleprimarymode=true loose-groupreplicationenforceupdateeverywhere_checks=false

5.2 设置复制账号 (所有节点执行)

启动/登录MySQL

# 启动数据库

$su - mysql -c "/usr/local/mysql-5.7.23/bin/mysqld_safe --defaults-file=/data1/mysql_3106/etc/my.cnf &"

$tail -f /data1/mysql_3106/logs/mysqld.err

# 登录数据库

/usr/local/mysql-5.7.23/bin/mysql -S ${DB_BASE_DIR}/tmp/mysql.sock

设置账号

# 修改root密码以及创建必要用户

ALTER USER 'root'@'localhost' IDENTIFIED BY 'root' PASSWORD EXPIRE NEVER;

ALTER USER 'root'@'localhost' IDENTIFIED WITH mysql_native_password BY 'root';

create user root@'127.0.0.1' identified WITH mysql_native_password BY 'root';

grant all privileges on *.* to root@'127.0.0.1' with grant option;

flush privileges;

#注:mysql_native_password为兼容老版本模式,否则老版本mysql客户端登录不了

#reset master;

root@localhost : (none) > show master logs;

root@localhost : (none) > reset master;

Query OK, 0 rows affected (0.02 sec)

root@localhost : (none) > show master logs;

+------------------+-----------+

| Log_name | File_size |

+------------------+-----------+

| mysql-bin.000001 | 150 |

+------------------+-----------+

1 row in set (0.00 sec)

#设置复制账号

SET SQL_LOG_BIN=0;

CREATE USER repl@'10.%.%.%' IDENTIFIED WITH 'mysql_native_password' BY 'repl';

GRANT REPLICATION SLAVE ON *.* TO repl@'10.%.%.%';

FLUSH PRIVILEGES;

SET SQL_LOG_BIN=1;

CHANGE MASTER TO MASTER_USER='repl', MASTER_PASSWORD='repl' FOR CHANNEL 'group_replication_recovery';

5.3 安装MGR插件 (所有节点执行)

安装MGR插件

# 安装MGR插件

root@localhost : (none) > INSTALL PLUGIN group_replication SONAME 'group_replication.so';

Query OK, 0 rows affected (0.00 sec)

root@localhost : (none) > SHOW PLUGINS;

+----------------------------+----------+--------------------+----------------------+---------+

| Name | Status | Type | Library | License |

+----------------------------+----------+--------------------+----------------------+---------+

| binlog | ACTIVE | STORAGE ENGINE | NULL | GPL |

| mysql_native_password | ACTIVE | AUTHENTICATION | NULL | GPL |

| BLACKHOLE | ACTIVE | STORAGE ENGINE | NULL | GPL |

...

| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |

+----------------------------+----------+--------------------+----------------------+---------+

5.4. 启动MGR多主模式

启动MGR,在第一节点(41)上执行。

#只在第一个节点执行这个步骤

mysql> SET GLOBAL group_replication_bootstrap_group=ON;

mysql> START GROUP_REPLICATION;

mysql> SET GLOBAL group_replication_bootstrap_group=OFF;

# 查看MGR组信息

mysql> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

1 row in set (0.00 sec)

其它节点加入MGR:在另两台机器上执行如下命令。

# 其他节点加入MGR,在其它节点上执行

root@localhost : (none) > SELECT * FROM performance_schema.replication_group_members;

+---------------------------+-----------+-------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+-----------+-------------+-------------+--------------+

| group_replication_applier | | | NULL | OFFLINE |

+---------------------------+-----------+-------------+-------------+--------------+

1 row in set (0.00 sec)

root@localhost : (none) >show global variables like '%seed%';

+-------------------------------+-------------------------------------------------------------+

| Variable_name | Value |

+-------------------------------+-------------------------------------------------------------+

| group_replication_group_seeds | 192.110.103.41:31061,192.110.103.42:31061,192.110.103.43:31061 |

+-------------------------------+-------------------------------------------------------------+

1 row in set (0.00 sec)

root@localhost : (none) > START GROUP_REPLICATION;

Query OK, 0 rows affected (3.23 sec)

root@localhost : (none) > SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

| group_replication_applier | 53e462be-f3d7-11e9-9125-a0369fa6cce4 | 192.110.103.42 | 3106 | ONLINE |

| group_replication_applier | ee4a9cec-f3d5-11e9-9ded-a0369fa6cd30 | 192.110.103.43 | 3106 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

3 rows in set (0.00 sec)

>SHOW STATUS LIKE 'group_replication_primary_member';

+----------------------------------+-------+

| Variable_name | Value |

+----------------------------------+-------+

| group_replication_primary_member | |

+----------------------------------+-------+

root@localhost : test > show global variables like 'group_replication_single%';

+---------------------------------------+-------+

| Variable_name | Value |

+---------------------------------------+-------+

| group_replication_single_primary_mode | OFF |

+---------------------------------------+-------+

可以看到,3个节点状态为online。并且当前为多主模式,各节点都可以写入。

插入测试数据在任一DB节点上插入测试数据,如节点1执行。

root@localhost : (none) > CREATE DATABASE test ;

root@localhost : (none) > use test;

Database changed

root@localhost : test > create table if not exists t1 (id int(10) PRIMARY KEY AUTO_INCREMENT,name varchar(50) NOT NULL);

insert into t1 values(1,'zhang3');

其它节点查看在其它节点上可以看到数据。

root@localhost : (none) > select * from test.t1;

+----+--------+

| id | name |

+----+--------+

| 1 | zhang3 |

+----+--------+

1 row in set (0.00 sec)

root@localhost : (none) > insert into test.t1 values(2,'li4');

Query OK, 1 row affected (0.01 sec)

root@localhost : (none) > select * from performance_schema.replication_connection_status \G ;

*************************** 1. row ***************************

CHANNEL_NAME: group_replication_recovery

GROUP_NAME:

SOURCE_UUID:

THREAD_ID: NULL

SERVICE_STATE: OFF

COUNT_RECEIVED_HEARTBEATS: 0

LAST_HEARTBEAT_TIMESTAMP: 0000-00-00 00:00:00

RECEIVED_TRANSACTION_SET:

LAST_ERROR_NUMBER: 0

LAST_ERROR_MESSAGE:

LAST_ERROR_TIMESTAMP: 0000-00-00 00:00:00

*************************** 2. row ***************************

CHANNEL_NAME: group_replication_applier

GROUP_NAME: aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa

SOURCE_UUID: aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa

THREAD_ID: NULL

SERVICE_STATE: ON

COUNT_RECEIVED_HEARTBEATS: 0

LAST_HEARTBEAT_TIMESTAMP: 0000-00-00 00:00:00

RECEIVED_TRANSACTION_SET: aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa:1-6:1000003

LAST_ERROR_NUMBER: 0

LAST_ERROR_MESSAGE:

LAST_ERROR_TIMESTAMP: 0000-00-00 00:00:00

2 rows in set (0.00 sec)

6. 单主-多主模式切换

6.1 切到单主模式

1)切换前是多主模式:

root@localhost : performance_schema > show global variables like 'group_replication_single%';

+---------------------------------------+-------+

| Variable_name | Value |

+---------------------------------------+-------+

| group_replication_single_primary_mode | OFF |

+---------------------------------------+-------+

1 row in set (0.00 sec)

root@localhost : performance_schema > show global variables like 'group_replication%';

+----------------------------------------------------+-------------------------------------------------------------+

| Variable_name | Value |

+----------------------------------------------------+-------------------------------------------------------------+

| group_replication_allow_local_disjoint_gtids_join | OFF |

| group_replication_allow_local_lower_version_join | OFF |

| group_replication_auto_increment_increment | 7 |

| group_replication_bootstrap_group | OFF |

| group_replication_components_stop_timeout | 31536000 |

| group_replication_compression_threshold | 1000000 |

| group_replication_enforce_update_everywhere_checks | ON |

| group_replication_flow_control_applier_threshold | 25000 |

| group_replication_flow_control_certifier_threshold | 25000 |

| group_replication_flow_control_mode | QUOTA |

| group_replication_force_members | |

| group_replication_group_name | aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa |

| group_replication_group_seeds | 192.110.103.41:31061,192.110.103.42:31061,192.110.103.43:31061 |

| group_replication_gtid_assignment_block_size | 1000000 |

| group_replication_ip_whitelist | AUTOMATIC |

| group_replication_local_address | 192.110.103.41:31061 |

| group_replication_member_weight | 50 |

| group_replication_poll_spin_loops | 0 |

| group_replication_recovery_complete_at | TRANSACTIONS_APPLIED |

| group_replication_recovery_reconnect_interval | 60 |

| group_replication_recovery_retry_count | 10 |

| group_replication_recovery_ssl_ca | |

| group_replication_recovery_ssl_capath | |

| group_replication_recovery_ssl_cert | |

| group_replication_recovery_ssl_cipher | |

| group_replication_recovery_ssl_crl | |

| group_replication_recovery_ssl_crlpath | |

| group_replication_recovery_ssl_key | |

| group_replication_recovery_ssl_verify_server_cert | OFF |

| group_replication_recovery_use_ssl | OFF |

| group_replication_single_primary_mode | OFF |

| group_replication_ssl_mode | DISABLED |

| group_replication_start_on_boot | OFF |

| group_replication_transaction_size_limit | 0 |

| group_replication_unreachable_majority_timeout | 0 |

+----------------------------------------------------+-------------------------------------------------------------+

35 rows in set (0.00 sec)

2)所有节点执行把各节点groupreplicationsingleprimarymode改为on;

# 所有节点执行

root@localhost : none> stop group_replication;

Query OK, 0 rows affected (9.02 sec)

root@localhost : none> SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | OFFLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

1 row in set (0.00 sec)

root@localhost : none> set global group_replication_enforce_update_everywhere_checks=OFF;

Query OK, 0 rows affected (0.00 sec)

root@localhost : none> set global group_replication_single_primary_mode=ON;

Query OK, 0 rows affected (0.00 sec)

root@localhost : none> show global variables like 'group_replication_single%';

+---------------------------------------+-------+

| Variable_name | Value |

+---------------------------------------+-------+

| group_replication_single_primary_mode | ON |

+---------------------------------------+-------+

1 row in set (0.00 sec)

3)在第一节点执行

SET GLOBAL group_replication_bootstrap_group=ON;

START GROUP_REPLICATION;

SET GLOBAL group_replication_bootstrap_group=OFF;

root@localhost : performance_schema > SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

1 row in set (0.00 sec)

4)在其它节点执行

root@localhost : test > SELECT * FROM performance_schema.replication_group_members;

root@localhost : test > START GROUP_REPLICATION;

root@localhost : test > SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

| group_replication_applier | 53e462be-f3d7-11e9-9125-a0369fa6cce4 | 192.110.103.42 | 3106 | ONLINE |

| group_replication_applier | ee4a9cec-f3d5-11e9-9ded-a0369fa6cd30 | 192.110.103.43 | 3106 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

3 rows in set (0.00 sec)

5)查看单主masterMySQL5.7 MRG不象MySQL8,不可以用replicationgroupmembers直接显示master。要用SHOW STATUS LIKE 'groupreplicationprimary_member'如下:

#

SELECT ta.* ,tb.MEMBER_HOST,tb.MEMBER_PORT,tb.MEMBER_STATE FROM performance_schema.global_status ta,performance_schema.replication_group_members tb WHERE ta.VARIABLE_NAME='group_replication_primary_member' and ta.VARIABLE_VALUE=tb.MEMBER_ID;

+----------------------------------+--------------------------------------+---------------+-------------+--------------+

| VARIABLE_NAME | VARIABLE_VALUE | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+----------------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_primary_member | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

+----------------------------------+--------------------------------------+---------------+-------------+--------------+

root@192.110.103.41 : (none) > show variables like 'group_replication_group_seeds';

+-------------------------------+-------------------------------------------------------------+

| Variable_name | Value |

+-------------------------------+-------------------------------------------------------------+

| group_replication_group_seeds | 192.110.103.41:31061,192.110.103.42:31061,192.110.103.43:31061 |

+-------------------------------+-------------------------------------------------------------+

1 row in set (0.00 sec)

root@192.110.103.41 : (none) > SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

| group_replication_applier | 53e462be-f3d7-11e9-9125-a0369fa6cce4 | 192.110.103.42 | 3106 | ONLINE |

| group_replication_applier | ee4a9cec-f3d5-11e9-9ded-a0369fa6cd30 | 192.110.103.43 | 3106 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

3 rows in set (0.00 sec)

root@192.110.103.41 : (none) > SHOW STATUS LIKE 'group_replication_primary_member';

+----------------------------------+--------------------------------------+

| Variable_name | Value |

+----------------------------------+--------------------------------------+

| group_replication_primary_member | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 |

+----------------------------------+--------------------------------------+

1 row in set (0.01 sec)

> select @@server_id;

> select @@server_uuid;

+--------------------------------------+

| @@server_uuid |

+--------------------------------------+

| 509810ee-f3d7-11e9-a7d5-a0369fac2de4 |

+--------------------------------------+

1 row in set (0.00 sec)

6)测试写入

root@192.110.103.42 : (none) > select * from test.t1;

+----+--------+

| id | name |

+----+--------+

| 1 | zhang3 |

| 2 | li4 |

+----+--------+

2 rows in set (0.00 sec)

root@192.110.103.42 : (none) > insert into test.t1 values(3,'wang5');

ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement

#使用主可以insert

mysql -h 192.110.103.41 -P 3106 -uroot -proot

root@192.110.103.41 : (none) > select * from test.t1;

+----+--------+

| id | name |

+----+--------+

| 1 | zhang3 |

| 2 | li4 |

+----+--------+

2 rows in set (0.00 sec)

root@192.110.103.41 : (none) > insert into test.t1 values(3,'wang5');

Query OK, 1 row affected (0.00 sec)

root@192.110.103.41 : (none) > select * from test.t1;

+----+--------+

| id | name |

+----+--------+

| 1 | zhang3 |

| 2 | li4 |

| 3 | wang5 |

+----+--------+

如上实验说明:$\color{red}{ 单主模式,只有主可以写入,在从节点写入数据时会出错 --super-read-only. }$

6.2 切回多主模式

MGR切换模式需要重新启动组复制,因些需要在所有节点上先关闭组复制,设置 groupreplicationsingleprimarymode=OFF 等参数,再启动组复制。

# 停止组复制(所有节点执行):

stop group_replication;

set global group_replication_single_primary_mode=OFF;

set global group_replication_enforce_update_everywhere_checks=ON;

show global variables like 'group_replication_single%';

+---------------------------------------+-------+

| Variable_name | Value |

+---------------------------------------+-------+

| group_replication_single_primary_mode | OFF |

+---------------------------------------+-------+

1 row in set (0.00 sec)

show global variables like 'group_replication_enforce_%';

+----------------------------------------------------+-------+

| Variable_name | Value |

+----------------------------------------------------+-------+

| group_replication_enforce_update_everywhere_checks | ON |

+----------------------------------------------------+-------+

1 row in set (0.00 sec)

# 随便选择某个节点执行

SET GLOBAL group_replication_bootstrap_group=ON;

START GROUP_REPLICATION;

SET GLOBAL group_replication_bootstrap_group=OFF;

SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

# 其他节点执行

START GROUP_REPLICATION;

# 查看组信息,所有节点的 MEMBER_ROLE 都为 PRIMARY

SELECT * FROM performance_schema.replication_group_members;

root@localhost : (none) > SELECT * FROM performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_applier | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

| group_replication_applier | 53e462be-f3d7-11e9-9125-a0369fa6cce4 | 192.110.103.42 | 3106 | ONLINE |

| group_replication_applier | ee4a9cec-f3d5-11e9-9ded-a0369fa6cd30 | 192.110.103.43 | 3106 | ONLINE |

+---------------------------+--------------------------------------+---------------+-------------+--------------+

测试写入分别在node2,node3测试写数据是否可以成功。

#在node2测试写

root@192.110.103.42 : test > select * from test.t1;

+----+--------+

| id | name |

+----+--------+

| 1 | zhang3 |

| 2 | li4 |

| 3 | wang5 |

+----+--------+

3 rows in set (0.00 sec)

root@192.110.103.42 : test > insert into test.t1 values(4,'ma6');

Query OK, 1 row affected (0.01 sec)

#在node3测试写入

root@192.110.103.43 : test > select * from test.t1;

+----+--------+

| id | name |

+----+--------+

| 1 | zhang3 |

| 2 | li4 |

| 3 | wang5 |

| 4 | ma6 |

+----+--------+

4 rows in set (0.00 sec)

root@192.110.103.43 : test > insert into test.t1 values(5,'qian7');

Query OK, 1 row affected (0.01 sec)

7. 故障切换

7.1 单主模式下故障切换

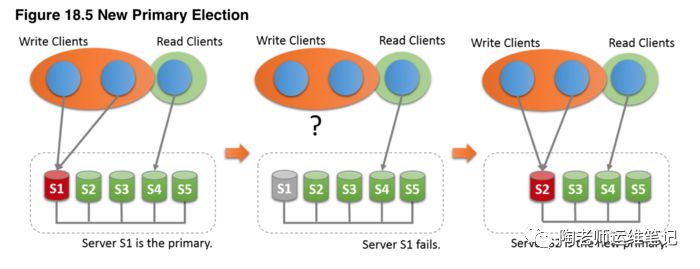

当主节点故障后,主节点自动选 举机制将会自动选出另一个主节点。

在选举主节点时,将查找新的成员视图,并根据groupreplicationmemberweigth 的值排列出潜在的主节点,如果所有成员的MySQL版本都相同, 则权重最高的节点被选举为下一个主节点,如果权重值相同,则根据字典顺序对它们的serveruuid进 行排序,然后选出列表中的第一个节点作为下一个主节点。当选举出新的主节点后,该主节点将自动设 置为 read-write,其他节点继续作为slave,且保留设置为read-only。

如果MySQL版本不支持groupreplicationmemberweigth,那么将根据 serveruuid的字典顺序来 选举新节点。在将客户端应用程序重新路由到新节点之前,等待新节点应用完 relay-log是 一个好习惯。

7.2 多主模式下故障切换

在多主模型下,没有单主的概念,没有必须进行选举的过程,因为多主模型下没有节点扮演特殊的角色。 当节点加入组时,所有节点都会设置为 read-write。

当节点加入组时,所有节点都会设置为 read-write。

7.3 查找主节点

如下演示如何查询单主模型下当前的主节点。MySQL5.7 MRG不象MySQL8,不可以用replicationgroupmembers直接显示master。要用SHOW STATUS LIKE 'groupreplicationprimary_member'如下:

SELECT ta.* ,tb.MEMBER_HOST,tb.MEMBER_PORT,tb.MEMBER_STATE FROM performance_schema.global_status ta,performance_schema.replication_group_members tb WHERE ta.VARIABLE_NAME='group_replication_primary_member' and ta.VARIABLE_VALUE=tb.MEMBER_ID;

+----------------------------------+--------------------------------------+---------------+-------------+--------------+

| VARIABLE_NAME | VARIABLE_VALUE | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE |

+----------------------------------+--------------------------------------+---------------+-------------+--------------+

| group_replication_primary_member | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 | 192.110.103.41 | 3106 | ONLINE |

+----------------------------------+--------------------------------------+---------------+-------------+--------------+

#SELECT VARIABLE_VALUE FROM performance_schema.global_status WHERE VARIABLE_NAME='group_replication_primary_member';

或

>SHOW STATUS LIKE 'group_replication_primary_member';

+----------------------------------+--------------------------------------+

| Variable_name | Value |

+----------------------------------+--------------------------------------+

| group_replication_primary_member | 509810ee-f3d7-11e9-a7d5-a0369fac2de4 |

+----------------------------------+--------------------------------------+

1 row in set (0.01 sec)

参考

https://dev.mysql.com/doc/refman/5.7/en/group-replication.html

http://dev.mysql.com/doc/refman/5.7/en/group-replication-getting-started.html

MySQL MGR 集群搭建

Mysql 5.7 基于组复制(MySQL Group Replication) - 运维小结

https://www.cnblogs.com/f-ck-need-u/p/9216828.html