Beam’s portability framework introduces well-defined, language-neutral data structures and protocols between the SDK and the runner, which ensures that SDKs and runners can work with each other uniformly. At the execution layer, it provides the Fn API for executing language-specific user-defined functions. The Fn API is highly abstract and includes several generic components, such as the control service, data service, state service, and logging service. These make it usable not only by Beam but also by third-party projects that require multi-language support. PyFlink is one such project: it is built on top of Beam’s portability framework and aims to provide Python language support for Apache Flink. So, I would like to talk about how PyFlink on Beam actually works.

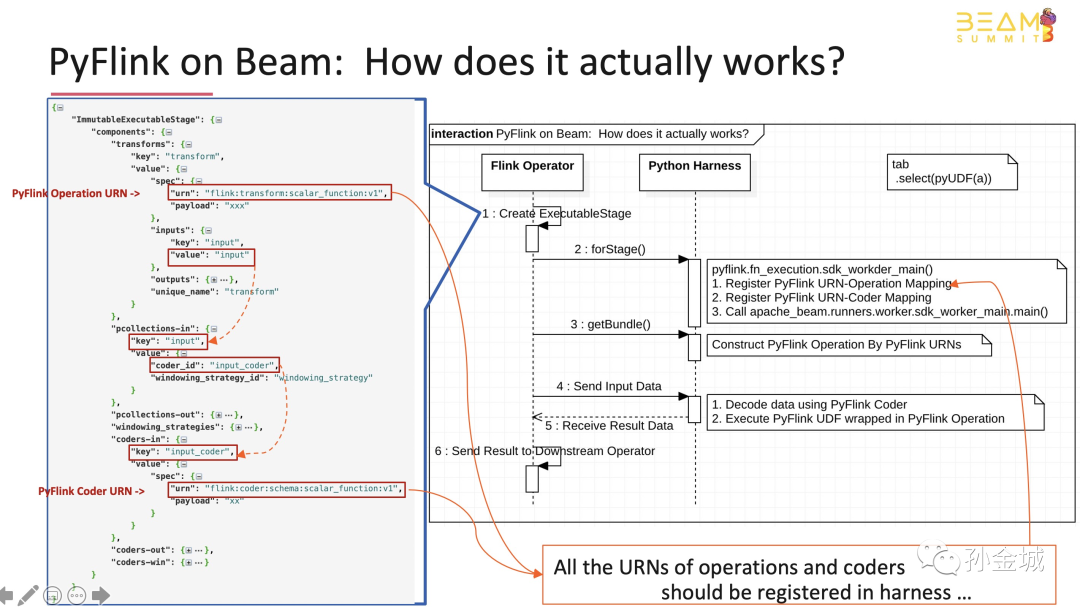

Let’s take a look at how PyFlink on Beam actually works. The picture shows the basic workflow. A specific Flink operator is used to execute the Python user-defined functions defined in PyFlink. In the initialization phase, the Flink operator constructs an ExecutableStage, which contains all the necessary information about the Python user-defined functions to be executed.

Next, the Flink operator starts up the Python SDK harness. This is achieved by calling the Java library provided by Beam’s portability framework. The entrypoint of the Python SDK harness is provided by PyFlink, and it wraps the entrypoint of Beam’s Python SDK harness. The main purpose of providing a custom entrypoint in PyFlink is to register the custom operations and coders used by PyFlink.
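The registration idea can be sketched in plain Python. This is only an illustration of a URN-keyed registry, not PyFlink's or Beam's actual code: the URN string, the `register_urn` decorator, the toy function table, and the payload format are all assumptions made up for this example.

```python
from typing import Callable, Dict, List

# Hypothetical URN, for illustration only; the real URNs are not given here.
SCALAR_FUNCTION_URN = "example:flink:scalar_function:v1"

# Registry mapping a URN to a factory that builds an operation from a payload.
_OPERATION_FACTORIES: Dict[str, Callable[[bytes], Callable[[List], List]]] = {}

# Toy stand-ins for user-defined functions. A real payload would carry a
# serialized function; here it is just a UTF-8 encoded name in this table.
_TOY_FUNCTIONS = {"upper": str.upper, "length": len}


def register_urn(urn: str):
    """Decorator that registers an operation factory under the given URN."""
    def decorator(factory):
        _OPERATION_FACTORIES[urn] = factory
        return factory
    return decorator


@register_urn(SCALAR_FUNCTION_URN)
def create_scalar_function_operation(payload: bytes):
    func = _TOY_FUNCTIONS[payload.decode("utf-8")]

    def process(rows: List) -> List:
        # Apply the user-defined function to every input row.
        return [func(row) for row in rows]

    return process


def create_operation(urn: str, payload: bytes):
    """Look up the factory registered for a URN and build the operation."""
    if urn not in _OPERATION_FACTORIES:
        raise ValueError(f"No operation registered for URN {urn!r}")
    return _OPERATION_FACTORIES[urn](payload)


def main():
    # A custom entrypoint would make sure the registrations above have run
    # (here they run at import time) and then hand over to the wrapped
    # harness entrypoint. This sketch just builds and runs one operation.
    operation = create_operation(SCALAR_FUNCTION_URN, b"upper")
    print(operation(["flink", "beam"]))


if __name__ == "__main__":
    main()
```

The point of the design is that the harness itself stays generic: it only needs to resolve a URN to a factory, so a project can add its own operations without modifying the harness.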

Lastly, the Flink operator sends input data to the Python SDK harness. The Python SDK harness executes Flink’s Python user-defined functions and sends back the execution results.
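The round trip described above can be pictured with a small, self-contained sketch. It assumes a toy fixed-width integer coder and plain function calls in place of the real data service, so `Int64Coder`, `harness_process_bundle`, and `operator_round_trip` are hypothetical names rather than PyFlink or Beam APIs.

```python
import struct
from typing import Callable, List


class Int64Coder:
    """Toy coder: encodes an integer as 8 big-endian bytes."""

    def encode(self, value: int) -> bytes:
        return struct.pack(">q", value)

    def decode(self, data: bytes) -> int:
        return struct.unpack(">q", data)[0]


def harness_process_bundle(encoded_inputs: List[bytes],
                           func: Callable[[int], int],
                           input_coder: Int64Coder,
                           output_coder: Int64Coder) -> List[bytes]:
    """Harness side: decode each input, run the function, encode the result."""
    encoded_results = []
    for data in encoded_inputs:
        value = input_coder.decode(data)
        encoded_results.append(output_coder.encode(func(value)))
    return encoded_results


def operator_round_trip(values: List[int],
                        func: Callable[[int], int]) -> List[int]:
    """Operator side: encode inputs, send them over, decode what comes back."""
    coder = Int64Coder()
    encoded_inputs = [coder.encode(v) for v in values]
    # In the real system these bytes cross a process boundary; here the
    # "transport" is just a function call.
    encoded_results = harness_process_bundle(encoded_inputs, func, coder, coder)
    return [coder.decode(r) for r in encoded_results]


if __name__ == "__main__":
    print(operator_round_trip([1, 2, 3], lambda x: x + 1))
```

Both sides only need to agree on the coders, which is why the coders have to be known to the operator and the harness alike.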

Let’s look at an example of an ExecutableStage instance.

We can see that it contains the payload of the Python function. It also contains a special URN, which indicates that this is a Flink scalar function.

We can also see that it contains the URNs and payloads of the input and output coders.
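To make those contents concrete, here is a simplified model of the information described above. It is not Beam's actual ExecutableStage definition: the class names, field names, URN strings, and payload bytes are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Set

# Hypothetical URNs, for illustration only; the actual values used by
# PyFlink are not given in this text.
SCALAR_FUNCTION_URN = "example:flink:scalar_function:v1"
ROW_CODER_URN = "example:flink:coder:row:v1"


@dataclass(frozen=True)
class CoderSpec:
    urn: str        # identifies which coder implementation to use
    payload: bytes  # coder-specific configuration, e.g. a serialized schema


@dataclass(frozen=True)
class StageSketch:
    function_urn: str        # identifies the kind of function to execute
    function_payload: bytes  # the serialized Python function
    input_coder: CoderSpec
    output_coder: CoderSpec


def is_scalar_function(stage: StageSketch) -> bool:
    """The URN tells the harness what kind of function the payload holds."""
    return stage.function_urn == SCALAR_FUNCTION_URN


def required_urns(stage: StageSketch) -> Set[str]:
    """All URNs the harness must have registered to run this stage."""
    return {stage.function_urn, stage.input_coder.urn, stage.output_coder.urn}


# Placeholder payloads; real payloads would be serialized functions and schemas.
stage = StageSketch(
    function_urn=SCALAR_FUNCTION_URN,
    function_payload=b"<serialized function>",
    input_coder=CoderSpec(ROW_CODER_URN, b"<input schema>"),
    output_coder=CoderSpec(ROW_CODER_URN, b"<output schema>"),
)

if __name__ == "__main__":
    print(is_scalar_function(stage), sorted(required_urns(stage)))
```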

Of course, there are many more details, but the two most important parts of PyFlink on Beam are building the ExecutableStage and adding custom operations and coders by registering URNs…

Jincheng Sun is an ASF Member and a PMC member of Apache Flink and Apache IoTDB. He is also a committer for Flink, Beam, and IoTDB, and an engineering lead at Alibaba Group. During his 9 years at Alibaba, he has participated in and led the development of some of the company's critical systems, and he started the development of PyFlink.