1

Open Policy Agent

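Open Policy Agent (OPA) is an open-source, general-purpose policy engine and a CNCF incubating project. It unifies policy enforcement across the whole stack.

OPA provides a high-level declarative language, Rego, that simplifies writing policy rules and takes policy decisions out of application code. OPA policies can be used with microservices, Kubernetes, CI/CD pipelines, API gateways, and similar systems. In Kubernetes, OPA enforces security policies through admission controllers.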

2

Kubernetes admission controllers

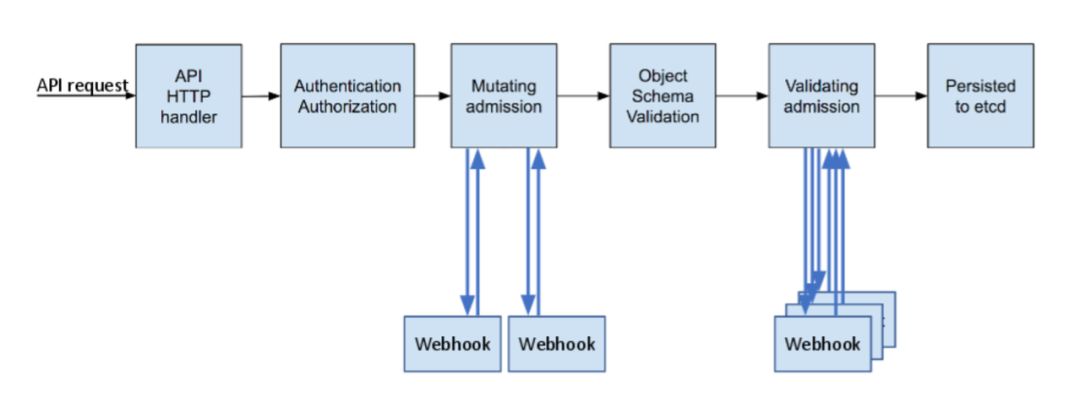

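Admission controllers are a powerful Kubernetes-native feature for defining which objects are allowed to run on a cluster. An admission controller intercepts and processes an API request after it has been authenticated and authorized, but before the object is persisted. Kubernetes has about 30 admission controllers. Two of them play a special role because they are so flexible: ValidatingAdmissionWebhook (validates the request object) and MutatingAdmissionWebhook (modifies the request object). OPA integrates well with both.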

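As the figure above shows, the Kubernetes API talks to custom code through a standard webhook interface, which simplifies integration with any third-party code.

Admission controllers are enabled through the enable-admission-plugins flag of kube-apiserver. The official recommendation is to enable the following admission controllers: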
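To make the webhook interface more concrete, here is a minimal Python sketch of the two response shapes a webhook can return: a validating response that allows or denies, and a mutating response that carries a base64-encoded JSON Patch. The function names are hypothetical, and the `admission.k8s.io/v1beta1` version is assumed only because it is the one used later in this article.

```python
import base64
import json


def validating_response(uid, allowed, reason=""):
    """Build a validating AdmissionReview response for one request."""
    response = {"uid": uid, "allowed": allowed}
    if not allowed:
        # A denial explains itself through the status field.
        response["status"] = {"reason": reason}
    return {
        "apiVersion": "admission.k8s.io/v1beta1",
        "kind": "AdmissionReview",
        "response": response,
    }


def mutating_response(uid, json_patch):
    """Build a mutating AdmissionReview response carrying a JSON Patch."""
    # The patch is a list of JSON Patch operations, serialized and base64-encoded.
    encoded = base64.b64encode(json.dumps(json_patch).encode()).decode()
    return {
        "apiVersion": "admission.k8s.io/v1beta1",
        "kind": "AdmissionReview",
        "response": {
            "uid": uid,
            "allowed": True,
            "patchType": "JSONPatch",
            "patch": encoded,
        },
    }
```

A real webhook would serve these dictionaries as JSON over HTTPS; the sketch only shows the payloads.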

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,Priority,ResourceQuota,PodSecurityPolicy

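The core idea of OPA is policy as code. It has many use cases in Kubernetes, for example:

Security policies

Forbid containers from running as root;

Forbid deploying Pods in privileged mode;

Allow images to be pulled only from approved registries;

...

Resource control policies

Require Pods to declare resource requests and limits, labels, and so on;

Node and Pod affinity and anti-affinity selection;

Prevent conflicting Ingress objects, or resource objects with similar names, from being created;

...

Access policies

Allow a user to access all resources in a given namespace;

...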
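As an illustration of what the security policies listed above check, the following Python sketch inspects a Pod manifest for three of them. It is not Rego and not how OPA evaluates policies. The function name and the registry prefix `registry.example.com/` are hypothetical, and the root check is deliberately simplified: it only looks at an explicit container-level `runAsUser: 0`.

```python
def pod_violations(pod, allowed_registries):
    """Return a list of human-readable policy violations for a Pod dict."""
    violations = []
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        security = container.get("securityContext") or {}
        # Policy: no privileged containers.
        if security.get("privileged", False):
            violations.append(f"container {name} runs in privileged mode")
        # Policy: no root user (simplified: explicit runAsUser 0 only).
        if security.get("runAsUser") == 0:
            violations.append(f"container {name} runs as root")
        # Policy: images must come from an approved registry prefix.
        image = container.get("image", "")
        if not any(image.startswith(prefix) for prefix in allowed_registries):
            violations.append(f"container {name} uses unapproved image {image}")
    return violations


bad_pod = {
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "docker.io/nginx",
                "securityContext": {"privileged": True, "runAsUser": 0},
            }
        ]
    }
}
for violation in pod_violations(bad_pod, ["registry.example.com/"]):
    print(violation)
```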

3

OPA Gatekeeper

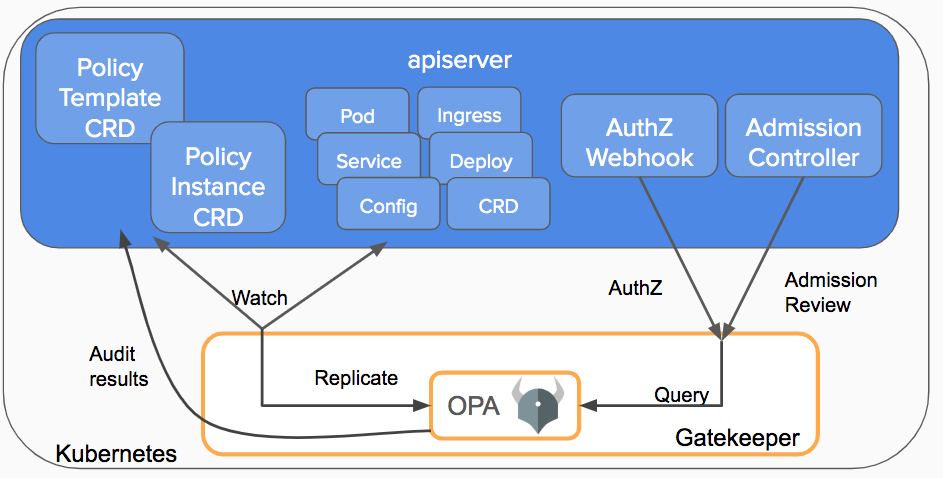

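OPA Gatekeeper is a new project that provides first-class integration between OPA and Kubernetes. Gatekeeper v3 integrates the admission controller with the OPA Constraint Framework to enforce CRD-based policies and to let declaratively configured policies be shared reliably. The project is a collaboration between Google, Microsoft, Red Hat, and Styra, and is currently in beta.

OPA Gatekeeper adds the following on top of plain OPA:

An extensible, parameterized policy library

Native Kubernetes CRDs for instantiating the policy library (also called "constraints")

Native Kubernetes CRDs for extending the policy library (also called "constraint templates")

Audit functionality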

4

OPA (kube-mgmt) installation and test

Create a namespace for OPA

[root@kube-master01 opa]# kubectl create namespace opa

Communication between Kubernetes and OPA must be secured with TLS. Use openssl to create a certificate authority, then a certificate and key for OPA.

[root@kube-master01 opa]# openssl genrsa -out ca.key 2048

[root@kube-master01 opa]# openssl req -x509 -new -nodes -key ca.key -days 100000 -out ca.crt -subj "/CN=admission_ca"

[root@kube-master01 opa]# cat >server.conf <<EOF

> [req]

> req_extensions = v3_req

> distinguished_name = req_distinguished_name

> [req_distinguished_name]

> [ v3_req ]

> basicConstraints = CA:FALSE

> keyUsage = nonRepudiation, digitalSignature, keyEncipherment

> extendedKeyUsage = clientAuth, serverAuth

> EOF

[root@kube-master01 opa]# openssl genrsa -out server.key 2048

[root@kube-master01 opa]# openssl req -new -key server.key -out server.csr -subj "/CN=opa.opa.svc" -config server.conf

[root@kube-master01 opa]# openssl x509 -req -in server.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out server.crt -days 100000 -extensions v3_req -extfile server.conf

[root@kube-master01 opa]# kubectl create secret tls opa-server --cert=server.crt --key=server.key -n opa

Deploy OPA as an admission controller

[root@kube-master01 opa]# cat admission-controller.yaml

# Grant OPA/kube-mgmt read-only access to resources. This lets kube-mgmt
# replicate resources into OPA so they can be used in policies.
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: opa-viewer
roleRef:
  kind: ClusterRole
  name: view
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: Group
  name: system:serviceaccounts:opa
  apiGroup: rbac.authorization.k8s.io
---
# Define role for OPA/kube-mgmt to update configmaps with policy status.
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: opa
  name: configmap-modifier
rules:
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["update", "patch"]
---
# Grant OPA/kube-mgmt role defined above.
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: opa
  name: opa-configmap-modifier
roleRef:
  kind: Role
  name: configmap-modifier
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: Group
  name: system:serviceaccounts:opa
  apiGroup: rbac.authorization.k8s.io
---
kind: Service
apiVersion: v1
metadata:
  name: opa
  namespace: opa
spec:
  selector:
    app: opa
  ports:
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: opa
  namespace: opa
  name: opa
spec:
  replicas: 1
  selector:
    matchLabels:
      app: opa
  template:
    metadata:
      labels:
        app: opa
      name: opa
    spec:
      containers:
        # WARNING: OPA is NOT running with an authorization policy configured. This
        # means that clients can read and write policies in OPA. If you are
        # deploying OPA in an insecure environment, be sure to configure
        # authentication and authorization on the daemon. See the Security page for
        # details: https://www.openpolicyagent.org/docs/security.html.
        - name: opa
          image: openpolicyagent/opa:0.16.0
          args:
            - "run"
            - "--server"
            - "--tls-cert-file=/certs/tls.crt"
            - "--tls-private-key-file=/certs/tls.key"
            - "--addr=0.0.0.0:443"
            - "--addr=http://127.0.0.1:8181"
            - "--log-format=json-pretty"
            - "--set=decision_logs.console=true"
          volumeMounts:
            - readOnly: true
              mountPath: /certs
              name: opa-server
          readinessProbe:
            httpGet:
              path: /health
              scheme: HTTPS
              port: 443
            initialDelaySeconds: 3
            periodSeconds: 5
          livenessProbe:
            httpGet:
              path: /health
              scheme: HTTPS
              port: 443
            initialDelaySeconds: 3
            periodSeconds: 5
        - name: kube-mgmt
          image: openpolicyagent/kube-mgmt:0.8
          args:
            - "--replicate-cluster=v1/namespaces"
            - "--replicate=networking.k8s.io/v1beta1/ingresses"
      volumes:
        - name: opa-server
          secret:
            secretName: opa-server
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: opa-default-system-main
  namespace: opa
data:
  main: |
    package system

    import data.kubernetes.admission

    main = {
      "apiVersion": "admission.k8s.io/v1beta1",
      "kind": "AdmissionReview",
      "response": response,
    }

    default response = {"allowed": true}

    response = {
        "allowed": false,
        "status": {
            "reason": reason,
        },
    } {
        reason = concat(", ", admission.deny)
        reason != ""
    }

[root@kube-master01 opa]# kubectl apply -f admission-controller.yaml

[root@kube-master01 opa]# kubectl get pod -n opa

NAME READY STATUS RESTARTS AGE

opa-7695f48874-hxfbv 2/2 Running 0 1d4h

[root@kube-master01 opa]#
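The `main` rule in the opa-default-system-main ConfigMap can be hard to read for newcomers to Rego. The Python sketch below mirrors its logic under one assumption: `deny_messages` stands in for the set produced by `data.kubernetes.admission.deny`. The function name is hypothetical, and the messages are sorted here only to make the joined reason deterministic.

```python
def admission_review(deny_messages):
    """Allow by default; deny with a joined reason if any deny message exists."""
    # Mirrors: default response = {"allowed": true}
    response = {"allowed": True}
    # Mirrors: reason = concat(", ", admission.deny); reason != ""
    reason = ", ".join(sorted(deny_messages))
    if reason != "":
        response = {"allowed": False, "status": {"reason": reason}}
    return {
        "apiVersion": "admission.k8s.io/v1beta1",
        "kind": "AdmissionReview",
        "response": response,
    }


print(admission_review(set())["response"])
print(admission_review({"invalid ingress host"})["response"])
```

The point of the design is that every policy only adds messages to `deny`; a request is rejected as soon as at least one policy produces a message.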

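When OPA starts, the kube-mgmt container loads the Kubernetes Namespace and Ingress objects into OPA.

Create the YAML file that registers OPA as an admission controller. This webhook ignores any namespace labeled openpolicyagent.org/webhook=ignore.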

[root@kube-master01 opa]# cat > webhook-configuration.yaml <<EOF

> kind: ValidatingWebhookConfiguration
> apiVersion: admissionregistration.k8s.io/v1beta1
> metadata:
>   name: opa-validating-webhook
> webhooks:
>   - name: validating-webhook.openpolicyagent.org
>     namespaceSelector:
>       matchExpressions:
>       - key: openpolicyagent.org/webhook
>         operator: NotIn
>         values:
>         - ignore
>     rules:
>       - operations: ["CREATE", "UPDATE"]
>         apiGroups: ["*"]
>         apiVersions: ["*"]
>         resources: ["*"]
>     clientConfig:
>       caBundle: $(cat ca.crt | base64 | tr -d '\n')
>       service:
>         namespace: opa
>         name: opa
> EOF

Label the kube-system and opa namespaces so that OPA does not control the resources in them

[root@kube-master01 opa]# kubectl label ns kube-system openpolicyagent.org/webhook=ignore

[root@kube-master01 opa]# kubectl label ns opa openpolicyagent.org/webhook=ignore

[root@kube-master01 opa]# kubectl apply -f webhook-configuration.yaml
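The `namespaceSelector` in the webhook configuration decides which namespaces are sent to OPA at all. As a rough Python sketch of how `matchExpressions` behave (the function name is hypothetical, and this is an illustration rather than the API server's implementation): a `NotIn` expression also matches namespaces that do not carry the label, which is why unlabeled namespaces are still checked by OPA.

```python
def selector_matches(labels, expressions):
    """Return True if the namespace labels satisfy every expression."""
    for expr in expressions:
        key = expr["key"]
        operator = expr["operator"]
        values = expr.get("values", [])
        if operator == "In":
            if key not in labels or labels[key] not in values:
                return False
        elif operator == "NotIn":
            # A missing label counts as "not in" the listed values.
            if key in labels and labels[key] in values:
                return False
        elif operator == "Exists":
            if key not in labels:
                return False
        elif operator == "DoesNotExist":
            if key in labels:
                return False
        else:
            raise ValueError(f"unknown operator: {operator}")
    return True


expressions = [
    {"key": "openpolicyagent.org/webhook", "operator": "NotIn", "values": ["ignore"]}
]
print(selector_matches({}, expressions))
print(selector_matches({"openpolicyagent.org/webhook": "ignore"}, expressions))
```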

The OPA logs show the webhook requests that the Kubernetes API is sending

[root@kube-master01 opa]# kubectl logs -l app=opa -c opa -f -n opa

Test an OPA policy

Create a policy that restricts Ingress hosts

[root@kube-master01 opa]# cat ingress-whitelist.rego

package kubernetes.admission

import data.kubernetes.namespaces

operations = {"CREATE", "UPDATE"}

deny[msg] {
    input.request.kind.kind == "Ingress"
    operations[input.request.operation]
    host := input.request.object.spec.rules[_].host
    not fqdn_matches_any(host, valid_ingress_hosts)
    msg := sprintf("invalid ingress host %q", [host])
}

valid_ingress_hosts = {host |
    whitelist := namespaces[input.request.namespace].metadata.annotations["ingress-whitelist"]
    hosts := split(whitelist, ",")
    host := hosts[_]
}

fqdn_matches_any(str, patterns) {
    fqdn_matches(str, patterns[_])
}

fqdn_matches(str, pattern) {
    pattern_parts := split(pattern, ".")
    pattern_parts[0] == "*"
    str_parts := split(str, ".")
    n_pattern_parts := count(pattern_parts)
    n_str_parts := count(str_parts)
    suffix := trim(pattern, "*.")
    endswith(str, suffix)
}

fqdn_matches(str, pattern) {
    not contains(pattern, "*")
    str == pattern
}

[root@kube-master01 opa]# kubectl create configmap ingress-whitelist --from-file=ingress-whitelist.rego -n opa

Check the status of the ingress-whitelist ConfigMap. A status of ok means the policy was loaded successfully.

[root@kube-master01 opa]# kubectl describe cm ingress-whitelist -n opa

Name: ingress-whitelist

Namespace: opa

Labels: <none>

Annotations: openpolicyagent.org/policy-status: {"status":"ok"}

Create two new namespaces to test the Ingress policy

[root@kube-master01 opa]# cat production-namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  annotations:
    ingress-whitelist: "*.acmecorp.com"
  name: production

[root@kube-master01 opa]# cat qa-namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  annotations:
    ingress-whitelist: "*.qa.acmecorp.com,*.internal.acmecorp.com"
  name: qa

[root@kube-master01 opa]# kubectl create -f qa-namespace.yaml

[root@kube-master01 opa]# kubectl create -f production-namespace.yaml

Define two Ingress objects

[root@kube-master01 opa]# cat ingress-ok.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-ok
spec:
  rules:
  - host: signin.acmecorp.com
    http:
      paths:
      - backend:
          serviceName: nginx
          servicePort: 80

[root@kube-master01 opa]# cat ingress-bad.yaml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: ingress-bad
spec:
  rules:
  - host: demo.acmecorp.com
    http:
      paths:
      - backend:
          serviceName: nginx
          servicePort: 80

[root@kube-master01 opa]# kubectl create -f ingress-ok.yaml -n production

ingress.networking.k8s.io/ingress-ok created

The host in ingress-bad.yaml does not match the whitelist defined on the qa namespace, so creation is rejected

[root@kube-master01 opa]# kubectl create -f ingress-bad.yaml -n qa

Error from server (invalid ingress host "demo.acmecorp.com"): error when creating "ingress-bad.yaml": admission webhook "validating-webhook.openpolicyagent.org" denied the request: invalid ingress host "demo.acmecorp.com"
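To see why ingress-ok is accepted and ingress-bad is rejected, the matching logic of ingress-whitelist.rego can be ported to Python. This is a sketch for reasoning about the rule, not a replacement for OPA: the function names follow the Rego rules, `namespace_annotations` is a plain dict standing in for the replicated namespace data, and `str.strip` is assumed to behave like Rego's `trim` for these inputs.

```python
def fqdn_matches(host, pattern):
    """Port of the two fqdn_matches rules: wildcard suffix or exact match."""
    if pattern.split(".")[0] == "*":
        # trim(pattern, "*.") removes the leading "*." from the pattern.
        suffix = pattern.strip("*.")
        return host.endswith(suffix)
    if "*" not in pattern:
        return host == pattern
    return False


def valid_ingress_hosts(namespace_annotations):
    """Split the ingress-whitelist annotation into a set of patterns."""
    whitelist = namespace_annotations.get("ingress-whitelist")
    if whitelist is None:
        return set()
    return set(whitelist.split(","))


def deny(hosts, namespace_annotations):
    """Return one message per Ingress host that matches no whitelist pattern."""
    patterns = valid_ingress_hosts(namespace_annotations)
    return {
        f'invalid ingress host "{host}"'
        for host in hosts
        if not any(fqdn_matches(host, pattern) for pattern in patterns)
    }


production = {"ingress-whitelist": "*.acmecorp.com"}
qa = {"ingress-whitelist": "*.qa.acmecorp.com,*.internal.acmecorp.com"}
print(deny(["signin.acmecorp.com"], production))
print(deny(["demo.acmecorp.com"], qa))
```

One thing this port makes visible: because the leading dot is trimmed along with the asterisk, the suffix check in this sketch would also accept a host such as evilacmecorp.com for the pattern *.acmecorp.com. It is worth verifying that behavior against OPA itself before relying on the policy in production.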

[root@kube-master01 opa]#

5

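Gatekeeper installation and test

The project offers several installation methods, including Helm. This article installs from the YAML manifest.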

[root@kube-master01 ~]# kubectl apply -f https://raw.githubusercontent.com/open-policy-agent/gatekeeper/master/deploy/gatekeeper.yaml

[root@kube-master01 ~]# git clone https://github.com/open-policy-agent/gatekeeper.git

[root@kube-master01 ~]# cd gatekeeper/templates/gatekeeper/demo/basic

Resources can be replicated into OPA through the sync config

[root@kube-master01 ~]# cat sync.yaml

apiVersion: config.gatekeeper.sh/v1alpha1
kind: Config
metadata:
  name: config
  namespace: "gatekeeper-system"
spec:
  sync:
    syncOnly:
      - group: ""
        version: "v1"
        kind: "Namespace"
      - group: ""
        version: "v1"
        kind: "Pod"

[root@kube-master01 basic]# kubectl apply -f sync.yaml

Create the constraint template

[root@kube-master01 basic]# kubectl create ns no-label

[root@kube-master01 basic]# cat templates/k8srequiredlabels_template.yaml

apiVersion: templates.gatekeeper.sh/v1beta1
kind: ConstraintTemplate
metadata:
  name: k8srequiredlabels
spec:
  crd:
    spec:
      names:
        kind: K8sRequiredLabels
        listKind: K8sRequiredLabelsList
        plural: k8srequiredlabels
        singular: k8srequiredlabels
      validation:
        # Schema for the `parameters` field
        openAPIV3Schema:
          properties:
            labels:
              type: array
              items: string
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8srequiredlabels

        violation[{"msg": msg, "details": {"missing_labels": missing}}] {
          provided := {label | input.review.object.metadata.labels[label]}
          required := {label | label := input.parameters.labels[_]}
          missing := required - provided
          count(missing) > 0
          msg := sprintf("you must provide labels: %v", [missing])
        }

[root@kube-master01 basic]# kubectl apply -f templates/k8srequiredlabels_template.yaml

Create the constraint

[root@kube-master01 basic]# cat constraints/all_ns_must_have_gatekeeper.yaml

apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: ns-must-have-gk
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    labels: ["gatekeeper"]

[root@kube-master01 basic]# kubectl apply -f constraints/all_ns_must_have_gatekeeper.yaml

[root@kube-master01 basic]# cat good/good_ns.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: good-ns
  labels:
    "gatekeeper": "true"

[root@kube-master01 basic]# cat bad/bad_ns.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: bad-ns

The bad-ns namespace defines no labels, so creation is rejected

[root@kube-master01 basic]# kubectl create -f bad/bad_ns.yaml

Error from server ([denied by ns-must-have-gk] you must provide labels: {"gatekeeper"}): error when creating "bad/bad_ns.yaml": admission webhook "validation.gatekeeper.sh" denied the request: [denied by ns-must-have-gk] you must provide labels: {"gatekeeper"}

[root@kube-master01 basic]# kubectl create -f good/good_ns.yaml

namespace/good-ns created
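The `violation` rule in the k8srequiredlabels template is a set difference between required and provided labels. A small Python sketch of the same idea follows, with a hypothetical function name and plain dicts standing in for `input.review.object` and `input.parameters.labels`. The message text is formatted slightly differently from the `%v` output of Rego's `sprintf`.

```python
def violations(obj, required_labels):
    """Return a violation entry if any required label is missing."""
    provided = set((obj.get("metadata", {}).get("labels") or {}).keys())
    missing = set(required_labels) - provided
    if not missing:
        return []
    names = sorted(missing)
    return [
        {
            "msg": "you must provide labels: " + ", ".join(names),
            "details": {"missing_labels": names},
        }
    ]


good_ns = {"metadata": {"name": "good-ns", "labels": {"gatekeeper": "true"}}}
bad_ns = {"metadata": {"name": "bad-ns"}}
print(violations(good_ns, ["gatekeeper"]))
print(violations(bad_ns, ["gatekeeper"]))
```

Because the required labels come from the constraint's `parameters`, the same template can back many constraints with different label lists.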

6

end

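The examples in this article all come from the project community. For reasons of space, more advanced policy patterns will be covered in a later update.

2019, thank you for being here! Whatever the past year brought, gains or losses, I still look forward to 2020. Life should always be full of hope, shouldn't it?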
