In this section we look at Docker networking, focusing on networking on a single Docker host.

When Docker is installed, it automatically creates three networks on the server: none, host, and bridge. Let's look at each of them in turn:

    $ sudo docker network ls
    NETWORK ID     NAME      DRIVER    SCOPE
    528c3d49c302   bridge    bridge    local
    464b3d11003c   host      host      local
    faa8eb8310b4   none      null      local
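
The listing above is a plain-text table. As a small illustration that is not part of the original walkthrough, here is a Python sketch that turns that output into a name-to-driver mapping. The sample text is copied from the session above, and the function name `parse_network_ls` is hypothetical. The mapping shows that the none network uses the `null` driver, while the other two use drivers with the same name as the network. If you script against Docker, `docker network ls --format '{{.Name}} {{.Driver}}'` is probably more robust than parsing the default table.

```python
def parse_network_ls(text: str) -> dict:
    """Map each network NAME to its DRIVER, given `docker network ls` table output."""
    networks = {}
    for line in text.strip().splitlines()[1:]:  # skip the header row
        # Columns: NETWORK ID, NAME, DRIVER, SCOPE (assumes no value contains spaces)
        _net_id, name, driver, _scope = line.split()
        networks[name] = driver
    return networks


# Output copied from the session above.
SAMPLE = """\
NETWORK ID     NAME      DRIVER    SCOPE
528c3d49c302   bridge    bridge    local
464b3d11003c   host      host      local
faa8eb8310b4   none      null      local
"""

if __name__ == "__main__":
    for name, driver in parse_network_ls(SAMPLE).items():
        print(f"{name:<8} driver={driver}")
```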

I. The none network

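As the name suggests, the none network has nothing in it. A container on this network has no network interface other than lo (loopback), so it provides no network services and cannot reach other containers. What is such a closed network good for? It is generally used for applications that have strict security requirements and do not need network access.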

    $ sudo docker run -it --network=none busybox
    / # ifconfig
    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)
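
You can also check which interfaces a container sees without relying on ifconfig. The following Python sketch uses `socket.if_nameindex()` from the standard library (Unix only), which lists the interfaces in the current network namespace. busybox does not ship Python, so this assumes you run the script in an image that does, for example `python:3` started with `--network=none`. In that case only `lo` should be reported, as in the ifconfig output above. The helper names are illustrative.

```python
import socket


def non_loopback(names):
    """Return the interface names other than the loopback device 'lo'."""
    return [name for name in names if name != "lo"]


def visible_interfaces():
    """Names of the interfaces visible in this process's network namespace (Unix only)."""
    return [name for _index, name in socket.if_nameindex()]


if __name__ == "__main__":
    extra = non_loopback(visible_interfaces())
    if extra:
        print("interfaces besides lo:", ", ".join(extra))
    else:
        print("only lo is visible: no external network (none-style namespace)")
```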

II. The host network

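A container on the host network shares the Docker host's network stack, so its network configuration is identical to the host's. As the output below shows, such a container sees every network interface on the server. This mode suits applications that need high network performance, because traffic does not pass through a bridge and NAT. The trade-off is reduced flexibility. Port conflicts are one example: the container binds ports directly on the host, so it cannot use a port that the host or another host-mode container already occupies.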

    $ sudo docker run -it --network=host busybox
    / # ifconfig
    docker0   Link encap:Ethernet  HWaddr 02:42:6B:5A:FC:54
              UP BROADCAST MULTICAST  MTU:1500  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:17 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:0 (0.0 B)  TX bytes:1526 (1.4 KiB)

    ens33     Link encap:Ethernet  HWaddr 00:0C:29:D5:73:1C
              inet addr:172.16.194.135  Bcast:172.16.194.255  Mask:255.255.255.0
              inet6 addr: fe80::20c:29ff:fed5:731c/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:32097 errors:0 dropped:0 overruns:0 frame:0
              TX packets:20666 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:24596169 (23.4 MiB)  TX bytes:9708382 (9.2 MiB)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:270 errors:0 dropped:0 overruns:0 frame:0
              TX packets:270 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:24089 (23.5 KiB)  TX bytes:24089 (23.5 KiB)
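
The port-conflict drawback of host mode follows directly from sharing one network stack. Two processes in the same network namespace cannot both bind the same address and port. The Python sketch below reproduces this on whatever machine runs it, using loopback as a stand-in. It is only an analogy for two host-mode containers (say, two Nginx instances that both want port 80), not a Docker-specific test, and the function name is hypothetical.

```python
import errno
import socket


def bind_twice_same_port(host="127.0.0.1"):
    """Bind two TCP sockets to one port in the same network stack.

    Returns the errno raised by the second bind (EADDRINUSE is expected),
    or None if, unexpectedly, both binds succeed.
    """
    first = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    second = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        first.bind((host, 0))          # let the kernel choose a free port
        first.listen()
        port = first.getsockname()[1]
        try:
            second.bind((host, port))  # same stack, same port: conflict
        except OSError as exc:
            return exc.errno
        return None
    finally:
        first.close()
        second.close()


if __name__ == "__main__":
    code = bind_twice_same_port()
    print("second bind failed with", errno.errorcode.get(code, code))
```

On the default bridge network, each container has its own network namespace, so the same two binds would succeed in two separate containers. With `--network=host`, they collide just like the two sockets here.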

III. The bridge network

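When Docker is installed, it creates a bridge named docker0 (the brctl tool lists all bridges on a Linux system). Containers started without --network are attached to the bridge network by default.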

    $ brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.02426b5afc54       no
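
`brctl` comes from the bridge-utils package, which some newer distributions no longer install by default. The kernel exposes the same information in sysfs, where every port attached to a bridge appears as an entry under `/sys/class/net/<bridge>/brif/`. iproute2's `ip link show master docker0` shows it as well. Below is a minimal Python sketch that reads that directory. The function name is hypothetical, and the `sys_net` parameter exists only so the function can be pointed at a test directory. If you run it before and after starting a container, a veth interface should appear on docker0, matching the change in the `brctl show` output in the next step.

```python
from pathlib import Path


def bridge_ports(bridge="docker0", sys_net="/sys/class/net"):
    """Return sorted names of interfaces attached to `bridge`, or None if it is not a bridge."""
    brif = Path(sys_net) / bridge / "brif"  # Linux lists a bridge's ports here
    if not brif.is_dir():
        return None
    return sorted(entry.name for entry in brif.iterdir())


if __name__ == "__main__":
    ports = bridge_ports()
    if ports is None:
        print("docker0 not found (is Docker running on this host?)")
    else:
        print("docker0 ports:", ports or "(none)")
```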

Now let's start an Nginx container and see how the bridge changes:

    $ sudo docker run -d nginx
    27644bd64114482f58adc47a52d3f768732c0396ca0eda8f13e68b10385ea359
    $ brctl show
    bridge name     bridge id               STP enabled     interfaces
    docker0         8000.0242cef1fc32       no              vethe21ab12

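A new network interface, vethe21ab12, is now attached to docker0. vethe21ab12 is a virtual network interface belonging to the Nginx container. Next, let's look at the network configuration inside the Nginx container: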

    $ sudo docker exec -it 27644bd64114 bash
    root@27644bd64114:/# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    45: eth0@if46: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
        link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
        inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
           valid_lft forever preferred_lft forever

The container has an interface named eth0@if46. Why isn't it vethe21ab12? In fact, eth0@if46 and vethe21ab12 are the two ends of a veth pair. So what is a veth pair?

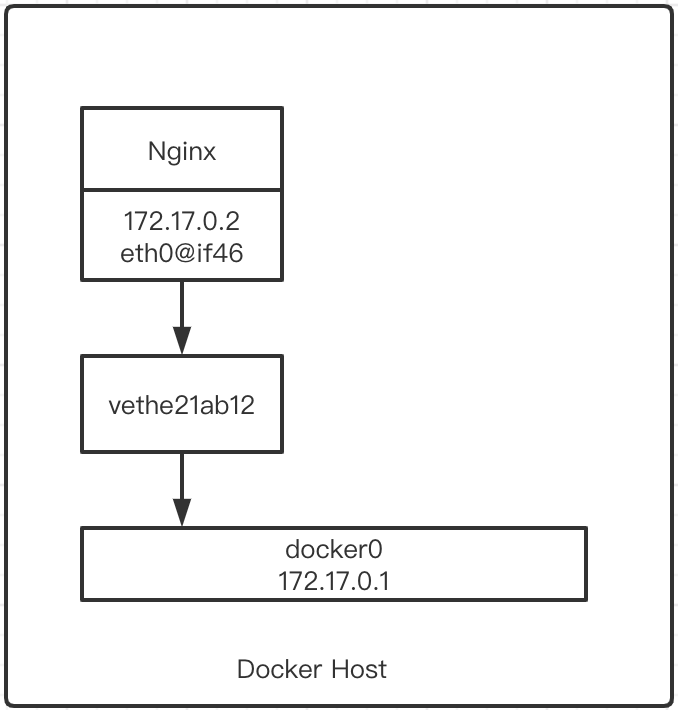

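A veth pair is a virtual network device that always comes in pairs. Each end is attached to a network protocol stack, and the two ends are connected to each other. In this example, you can picture them as two network cards joined by a virtual cable. One end (eth0@if46) is inside the container, and the other end (vethe21ab12) is attached to the docker0 bridge. The net effect is that eth0@if46 is also connected to docker0.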
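
iproute2 uses the `@if46` suffix to show the peer of a link that lives in another network namespace. Here, 46 is the interface index of the other end of the veth pair, and the container's own eth0 has index 45. On the host, the matching end should therefore appear as index 46 with an `@if45` suffix. Inside the container, `cat /sys/class/net/eth0/iflink` prints the same peer index. The Python sketch below parses these header lines and checks whether two of them point at each other. The host-side line it uses is hypothetical, reconstructed from those indices, and was not captured in the session above. The function names are illustrative, and the regex only handles the `name@ifN` form.

```python
import re

# Header line of `ip a` / `ip link`: "<index>: <name>[@if<peer index>]: <flags> ..."
_HEADER = re.compile(r"^(\d+):\s+([^:@\s]+)(?:@if(\d+))?:")


def parse_link_header(line):
    """Return (ifindex, name, peer_ifindex or None) for an `ip a` header line."""
    match = _HEADER.match(line.strip())
    if match is None:
        raise ValueError(f"not an ip-link header line: {line!r}")
    index, name, peer = match.groups()
    return int(index), name, (int(peer) if peer else None)


def is_veth_pair(a, b):
    """True if each parsed link names the other as its peer."""
    return a[2] == b[0] and b[2] == a[0]


if __name__ == "__main__":
    container_eth0 = parse_link_header(
        "45: eth0@if46: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP")
    # Hypothetical host-side line, reconstructed from the indices above.
    host_veth = parse_link_header(
        "46: vethe21ab12@if45: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 master docker0")
    print(container_eth0, host_veth, is_veth_pair(container_eth0, host_veth))
```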

We can also see that eth0@if46 in this container has the IP address 172.17.0.2. Why this subnet? Let's look at the bridge network's configuration:

    $ sudo docker network inspect bridge
    [
        {
            "Name": "bridge",
            "Id": "6f0087fd32cd0e3b4a7ef1133e2f5596a2e74429cf5240e42012af85d1146b9f",
            "Created": "2021-10-17T06:43:11.849460184Z",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.17.0.0/16",
                        "Gateway": "172.17.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": false,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "27644bd64114482f58adc47a52d3f768732c0396ca0eda8f13e68b10385ea359": {
                    "Name": "lucid_moore",
                    "EndpointID": "b6c9de5a52e2f0b858a6dbf6c8e51b535aec3500fca7d1f1dcca98613e57dabd",
                    "MacAddress": "02:42:ac:11:00:02",
                    "IPv4Address": "172.17.0.2/16",
                    "IPv6Address": ""
                }
            },
            "Options": {
                "com.docker.network.bridge.default_bridge": "true",
                "com.docker.network.bridge.enable_icc": "true",
                "com.docker.network.bridge.enable_ip_masquerade": "true",
                "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
                "com.docker.network.bridge.name": "docker0",
                "com.docker.network.driver.mtu": "1500"
            },
            "Labels": {}
        }
    ]
    $ ifconfig docker0
    docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 172.17.0.1  netmask 255.255.0.0  broadcast 172.17.255.255
            inet6 fe80::42:ceff:fef1:fc32  prefixlen 64  scopeid 0x20<link>
            ether 02:42:ce:f1:fc:32  txqueuelen 0  (Ethernet)
            RX packets 2827  bytes 115724 (115.7 KB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 3853  bytes 10144700 (10.1 MB)
            TX errors 0  dropped 0  overruns 0  carrier 0  collisions 0

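So the bridge network's subnet is 172.17.0.0/16 and its gateway is 172.17.0.1, an address configured on docker0 itself. The container's network topology is therefore: eth0 (172.17.0.2) inside the container connects through the veth pair to vethe21ab12 on the host. vethe21ab12 is attached to the docker0 bridge (172.17.0.1), which acts as the container's gateway.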
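
Python's standard `ipaddress` module can check the relationship between the subnet, the gateway, and the container address. The sketch below works on a trimmed excerpt of the `docker network inspect bridge` output above, keeping only the fields it reads. The function name `summarize_bridge` is hypothetical.

```python
import ipaddress
import json

# Trimmed excerpt of the `docker network inspect bridge` output shown above.
INSPECT_JSON = """
[{"Name": "bridge",
  "IPAM": {"Config": [{"Subnet": "172.17.0.0/16", "Gateway": "172.17.0.1"}]},
  "Containers": {
    "27644bd64114482f58adc47a52d3f768732c0396ca0eda8f13e68b10385ea359":
      {"Name": "lucid_moore", "IPv4Address": "172.17.0.2/16"}}}]
"""


def summarize_bridge(inspect_text):
    """Extract subnet and gateway from inspect output and check each container address."""
    network = json.loads(inspect_text)[0]
    config = network["IPAM"]["Config"][0]
    subnet = ipaddress.ip_network(config["Subnet"])
    gateway = ipaddress.ip_address(config["Gateway"])
    containers = {}
    for info in network["Containers"].values():
        iface = ipaddress.ip_interface(info["IPv4Address"])  # e.g. "172.17.0.2/16"
        containers[info["Name"]] = (iface.ip, iface.ip in subnet)
    return {
        "subnet": subnet,
        "netmask": subnet.netmask,
        "broadcast": subnet.broadcast_address,
        "gateway": gateway,
        "gateway_is_first_host": gateway == next(subnet.hosts()),
        "usable_hosts": subnet.num_addresses - 2,  # minus network and broadcast addresses
        "containers": containers,
    }


if __name__ == "__main__":
    for key, value in summarize_bridge(INSPECT_JSON).items():
        print(f"{key}: {value}")
```

The netmask and broadcast address it derives (255.255.0.0 and 172.17.255.255) match the `ifconfig docker0` output.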

IV. Postscript

While working through the experiments in this article, you may run into the following problems:

1. The ip command is missing inside the Nginx container.

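The ip command is provided by the iproute2 package, which you have to install in the container yourself: apt-get install iproute2 (if apt-get cannot find the package, run apt-get update first).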

2. The container has no network access when you try to install iproute2.

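First, rebuild the docker0 bridge. Note that iptables -t nat -F flushes every rule in the NAT table, not only Docker's. Docker recreates its own rules when it restarts.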

    $ sudo pkill docker
    $ sudo iptables -t nat -F
    $ sudo ifconfig docker0 down
    $ sudo brctl delbr docker0
    $ sudo systemctl restart docker

Then start a container, open a shell in it, and update the package index:

    root@27644bd64114:/# apt-get update
    Get:1 http://security.debian.org/debian-security buster/updates InRelease [65.4 kB]
    Get:2 http://deb.debian.org/debian buster InRelease [122 kB]
    Get:3 http://security.debian.org/debian-security buster/updates/main amd64 Packages [307 kB]
    Get:4 http://deb.debian.org/debian buster-updates InRelease [51.9 kB]
    Get:5 http://deb.debian.org/debian buster/main amd64 Packages [7906 kB]
    Get:6 http://deb.debian.org/debian buster-updates/main amd64 Packages [15.2 kB]
    Fetched 8467 kB in 14s (603 kB/s)
    Reading package lists... Done

Finally, install iproute2 with apt-get install iproute2.