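Part 1: Basic Kubernetes (K8s) Concepts

PS: Most of the descriptions below come from the official Kubernetes website; some are my own summary. Corrections are welcome. To reinforce my understanding, I typed the official descriptions out by hand.

1 What is Kubernetes (K8s)?

Official description:

An open-source system for automating the deployment, scaling, and management of containerized applications (an open-source platform for automated container operations).

It groups the containers that make up an application into logical units for easy management and service discovery.

It serves as a cluster management and distributed-system solution for Docker containers.

Kubernetes is an open-source container orchestration engine for automating the deployment, scaling, and management of containerized applications.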

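2 What features does Kubernetes offer, and why use it?

When Docker containers are deployed and distributed across a cluster, managing them efficiently, deploying and installing them quickly, and handling service registration and discovery are the main difficulties of today's container-centric architectures.

Kubernetes emerged to provide solutions for container management. It has the following features:

Schedules and runs application containers on clusters of physical or virtual machines

Automated, fast, and precise application deployment, with rollback on failure

Service discovery and load balancing: you do not need to modify your application to use an unfamiliar service discovery mechanism. Kubernetes gives containers their own IP addresses and a DNS name, and can load-balance across them

Automatic bin packing of containers and automatic placement on nodes

On-demand scaling of deployed services, up and down

Storage orchestration: automatically mounts the storage system of your choice, including local storage, storage from public cloud providers, and other network storage systems

Distributed Secret and configuration management: deploy and update Secrets and application configuration without rebuilding container images and without exposing secrets in your stack configuration

Resource monitoring and allocation

Authentication and authorization

Self-healing (automatic deployment, restart, replication, scaling, and so on)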

2 kubernets核心组件简单说明

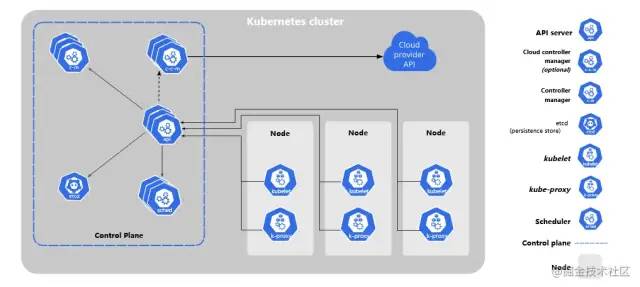

Kubernetes 集群所需的各种组件(图来自官网):

2.1 一些核心概念说明

Cluster(集群)

Cluster 是计算、存储、网络等资源的集合。它是整个k8s容器集群的基础环境。

Master集群中央控制中心和Node节点

一个完整的k8s的集群是由一组其他Node(节点)的机器组成,其中这一组的节点有至少需要有一个工作节点Node,和一个中央管理Master界面。

Master:在集群中至少需要一个Master负载集群管控和控制,它可以是物理机也可以是虚拟机。

Node:作为工作节点托管作为应用负载的组件的 Pod。

Master集群(上图中控制面板)的一些组件说明

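2.2 Main components of the Master node

In the figure above, the main components under the Master node are:

kube-apiserver

etcd

kube-scheduler

kube-controller-manager

2.2.1 kube-apiserver

kube-apiserver is the name of the process.

It receives the commands issued by the CLI, the UI, and other clients, and passes them to the appropriate components for processing.

In essence it wraps CRUD operations on Kubernetes resource objects behind HTTP REST interfaces.

It provides the REST API for cluster management.

It is the central hub for data exchange between all modules.

2.2.2 etcd

etcd is a consistent and highly available key-value store that serves as the backing store for all Kubernetes cluster data.

It stores the state of the cluster, including network configuration and the state of resource objects and node objects.

2.2.3 kube-scheduler

Responsible for binding Pods to nodes, that is, deciding which worker Node each Pod is placed on.

Factors considered in scheduling decisions include: individual and collective Pod resource requirements, hardware, software, and policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.

2.2.4 kube-controller-manager

controller-manager: controller management

The management and control center inside the cluster. It manages Nodes, Pods, Pod replicas, service endpoints (Endpoints), namespaces, and so on.

It includes the following controllers (from the official documentation):

Node Controller: responsible for noticing and responding when nodes fail, and for recovery handling.

Job controller: watches for Job objects that represent one-off tasks, then creates Pods to run those tasks to completion.

Endpoints Controller: populates Endpoints objects (that is, joins Services and Pods).

Service Account & Token Controllers: create default accounts and API access tokens for new namespaces.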

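2.3 Main components of a worker Node

Node components run on every node, maintaining running Pods and providing the Kubernetes runtime environment. A node exists mainly as a worker that carries out tasks.

All Nodes in the cluster work in coordination; the Master assigns Pods and balances load according to its scheduling policy.

The main process components running on a Node are:

kubelet

kube-proxy

Container Runtime

2.3.1 kubelet

Key points:

Every Node runs a kubelet process.

The kubelet carries out the tasks the Master assigns to its node and manages the Pods on that node.

The kubelet periodically reports the node's resource usage to the kube-apiserver on the Master.

It monitors containers and node resources through cAdvisor.

It acts as the management agent for the Pods on its Node.

It makes sure that containers are running in a Pod.

2.3.2 kube-proxy

Key points:

kube-proxy maintains network rules on nodes. These network rules allow network communication to your Pods from network sessions inside or outside of your cluster. (from the official documentation)

kube-proxy is a network proxy that runs on each node in your cluster, implementing part of the Kubernetes Service concept. (from the official documentation)

It can create routing rules to load-balance Services.

2.3.3 Container Runtime

In this setup it relies on the Docker engine and is responsible for creating and managing the containers on the node.

Kubernetes supports several container runtimes: Docker, containerd, CRI-O, and any implementation of the Kubernetes CRI (Container Runtime Interface). (quoted from the official documentation)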

2.4 关于pod资源对象

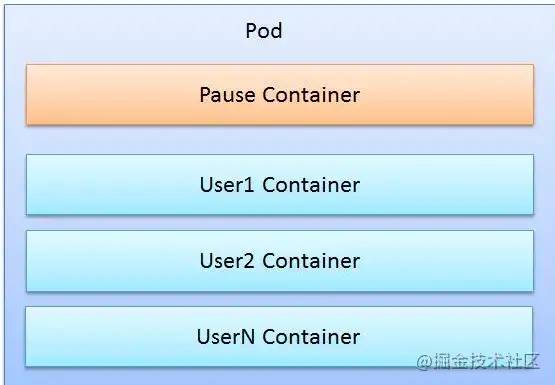

pod的组成(PS:图来自网络):

知识点理解:

运行与Node工作节点上,是对应的服务任务对象,它可以被创建和启动暂停和销毁

是K8S最小的调度工作单元

一个pod可以包含一个容器或多个容器组

一个pod可以看成是一个容器化服务逻辑单元

每个Pod都有一个根Pause容器,pod中的容器共享Pause容器的资源:网络栈(共享Pause容器IP地址)和数据挂载券Volume,

因为有同一个根Pause容器,Pod多容器之间可以仅需losct就可以相互通信

通常你不需要直接创建 Pod,甚至单实例 Pod

一般我们的创建pod是基于Deployment 或 Job 等资源对象中进行创建

通常一个pod一个实例服务,多实例服务,则需要使用Pod副本来提供横向扩展,多Pod实例的副本通常是使用相关控制器来创建。

通常很少在 Kubernetes 中直接创建一个个的 Pod,甚至是单实例(Singleton)的 Pod。这是因为 Pod 被设计成了相对临时性的、用后即抛的一次性实体。当 Pod 由你或者间接地由 控制器 创建时,它被调度在集群中的节点上运行。Pod 会保持在该节点上运行,直到 Pod 结束执行、Pod 对象被删除、Pod 因资源不足而被 驱逐 或者节点失效为止。--引用自官网

Pod 不是进程,而是容器运行的环境。在被删除之前,Pod 会一直存在。--引用自官网

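2.5 The Service resource object (basics)

In Kubernetes every Pod is assigned its own IP address, but that address disappears when the Pod does. When a group of Pods provides the same functionality, clients therefore rely on a Service. A Service can be seen as the stable access point exposed for a set of Pods that provide the same service.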
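To make the idea concrete, here is a minimal sketch of a Service that selects Pods by label. The helper `render_service_manifest` is hypothetical, and the service name `nginx-svc`, the label `app: nginx`, and port 80 are illustrative assumptions rather than values prescribed by this article. The function only prints a manifest; applying it requires a working cluster.

```shell
#!/usr/bin/env bash
# Print a minimal ClusterIP Service manifest to stdout.
# $1 = Service name, $2 = value of the Pods' "app" label, $3 = port
# (all three are example inputs chosen by the caller).
render_service_manifest() {
  local name="$1" app="$2" port="$3"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  selector:
    app: ${app}
  ports:
    - port: ${port}
      targetPort: ${port}
EOF
}

# Usage (needs kubectl and a running cluster):
#   render_service_manifest nginx-svc nginx 80 | kubectl apply -f -
```

Clients inside the cluster would then talk to the Service rather than to individual Pod IPs, which change whenever a Pod is recreated.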

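2.6 The namespace resource object (basics)

Namespaces separate different projects, teams, or user groups. You can think of different namespaces as different projects, or as different environments of the same project.

When a Kubernetes cluster starts, it creates a namespace named default. If you deploy a service without specifying a namespace, the service is placed in this namespace.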

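Part 2: Installing and Using K8s on Linux

Once you have a rough grasp of the basic concepts, set up the environment and run through it once. Find problems in practice and then trace them back to the theory. Do not get bogged down in theory from the very start!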

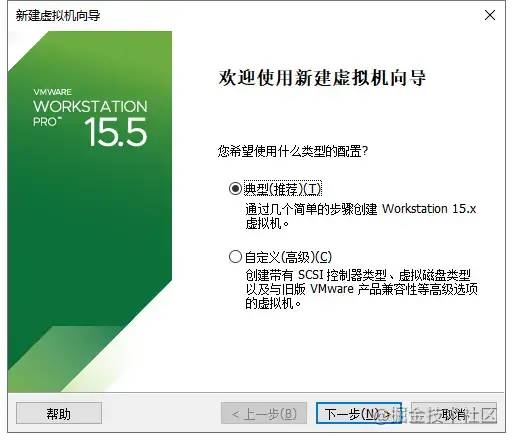

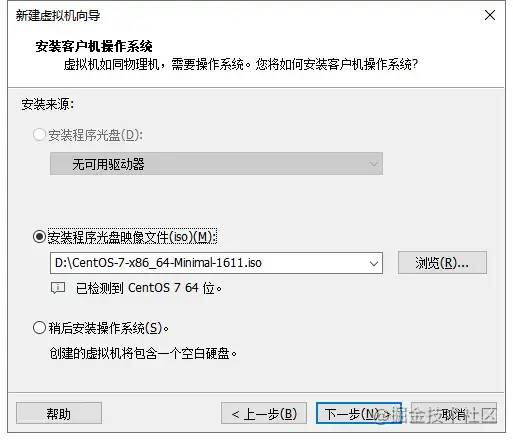

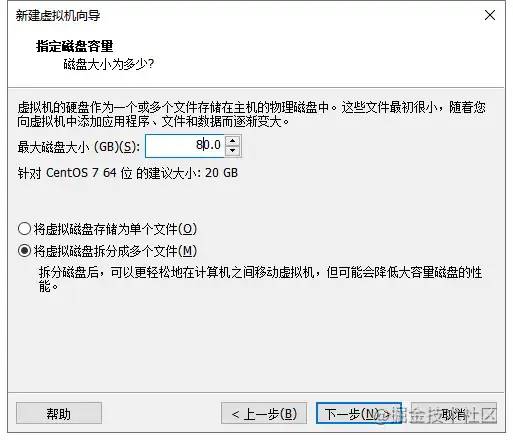

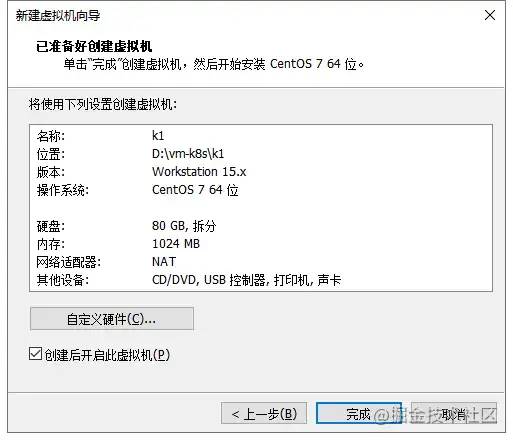

1:虚拟主机创建及克隆

1.1:新建干净的虚拟主机环境

保存虚拟主机位置:

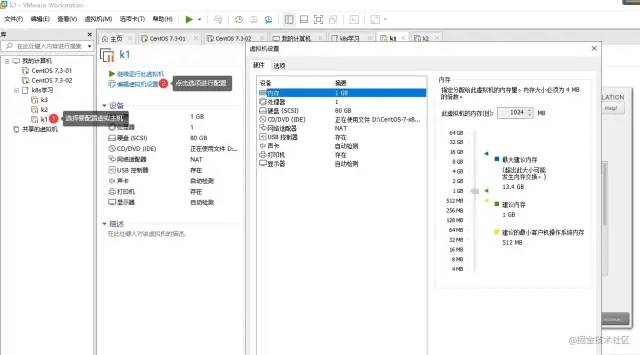

2:虚拟机一些准备工作

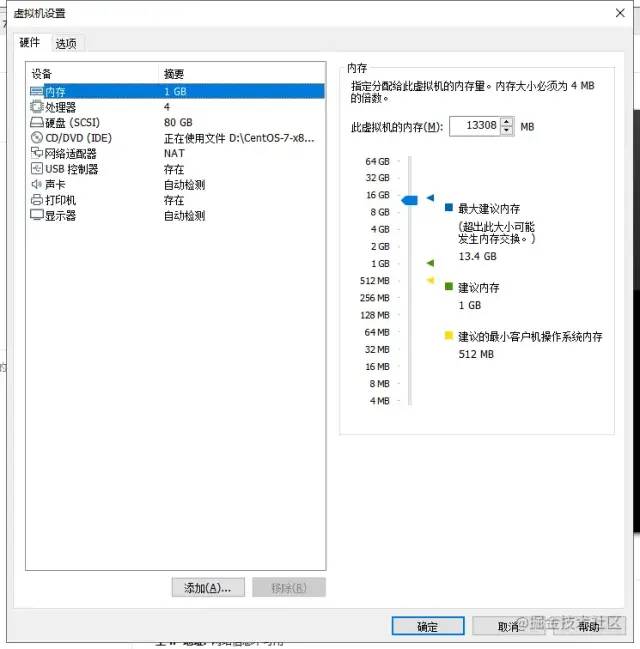

2.1 虚拟主机内存配置

PS:挂起状态下无法进行设置

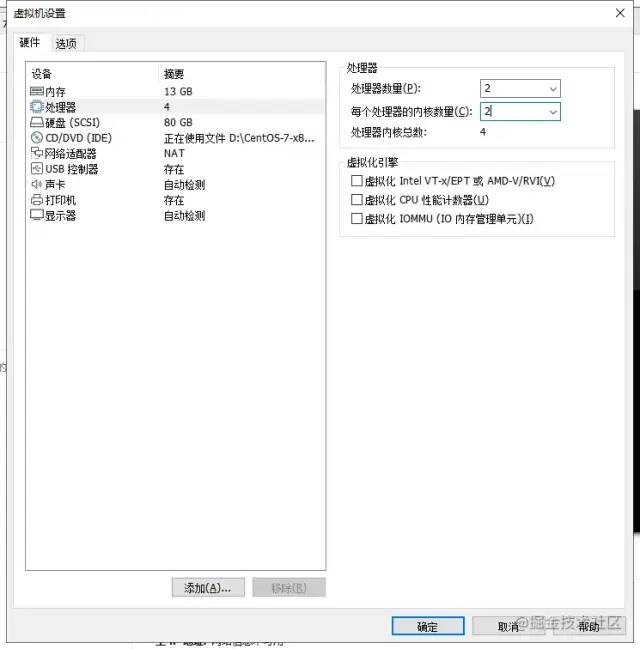

2.2 配置处理器:

2.3 配置内存:

最终主机配置信息:

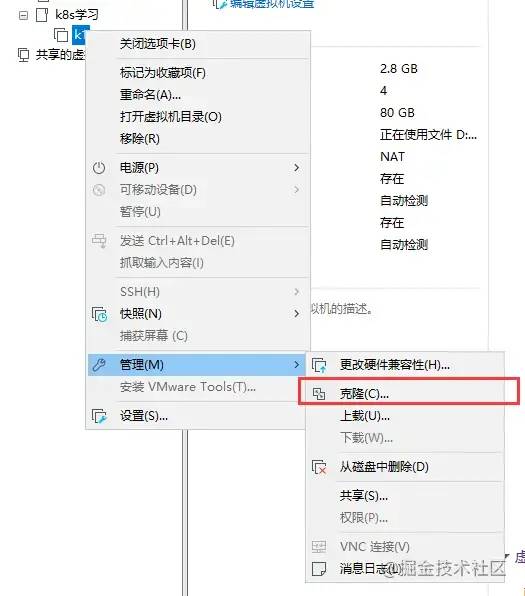

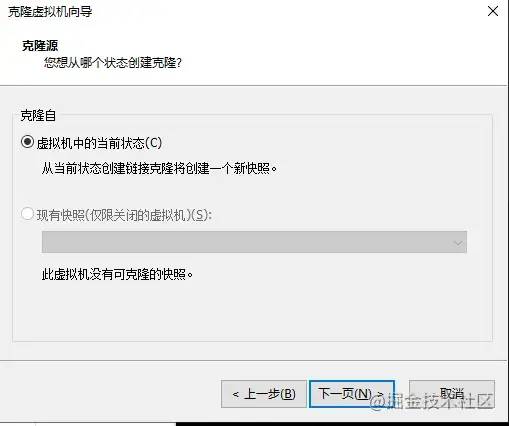

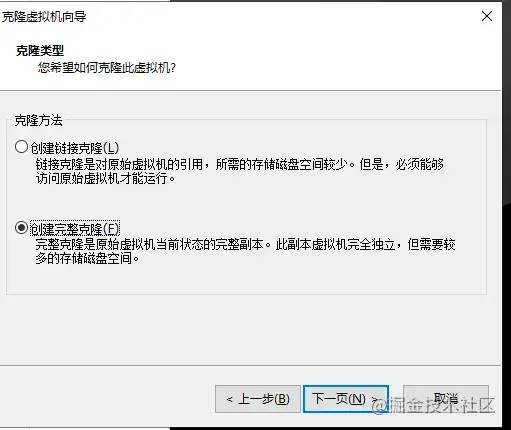

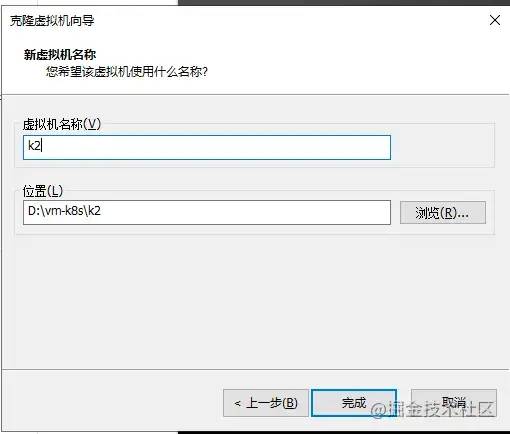

配置一台后使用克隆的方式配置其他主机!

3:启动主机后的一些配置

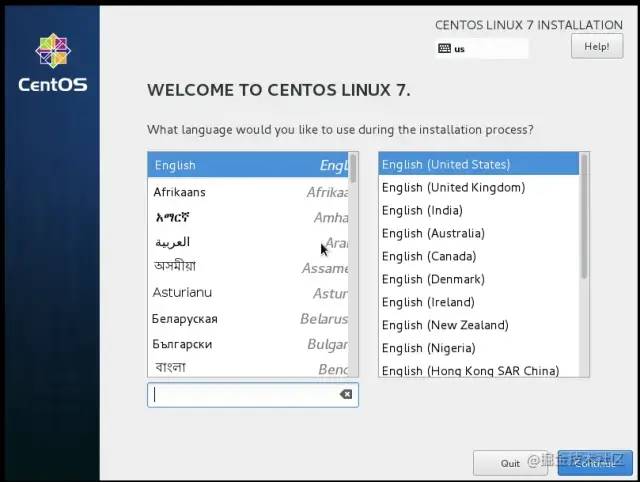

3.1 语言选择

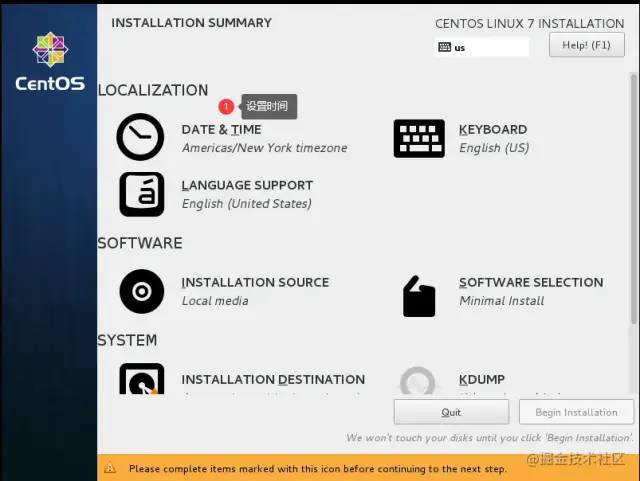

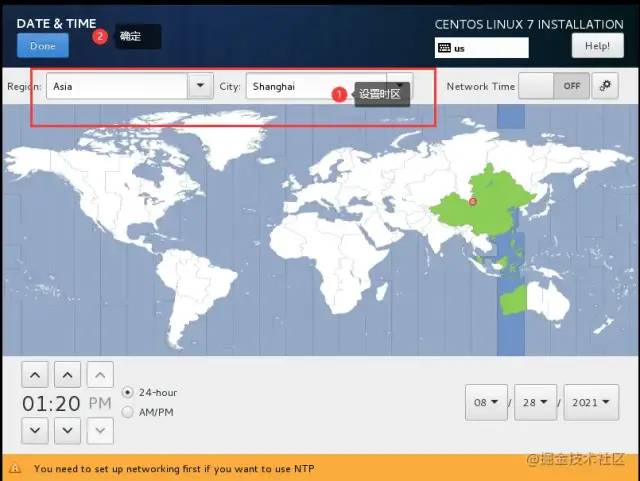

3.2 设置时区:

补充:

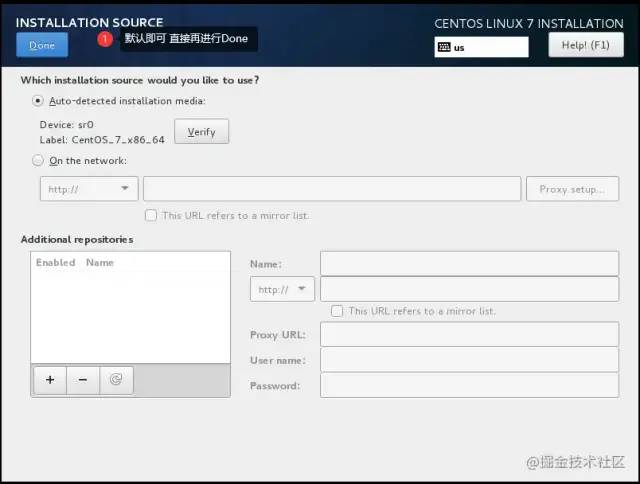

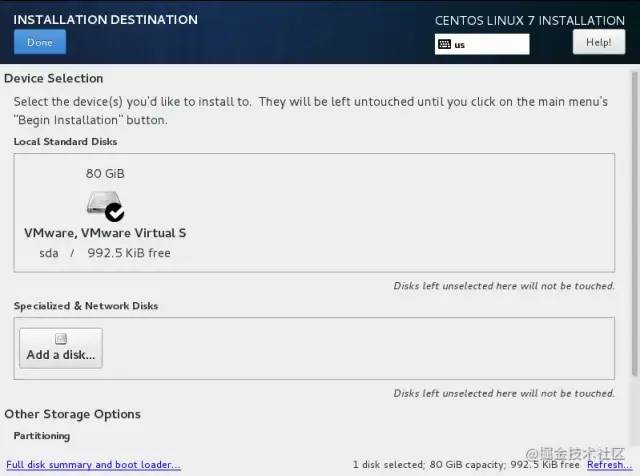

3.3 硬盘设置:

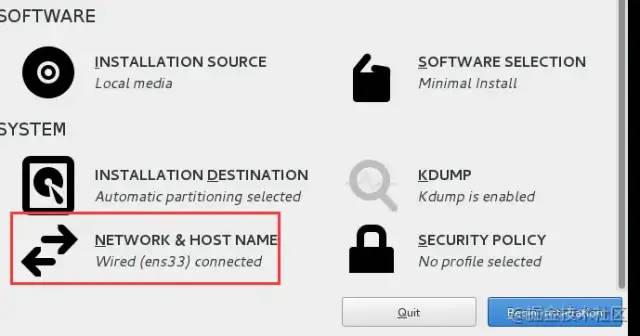

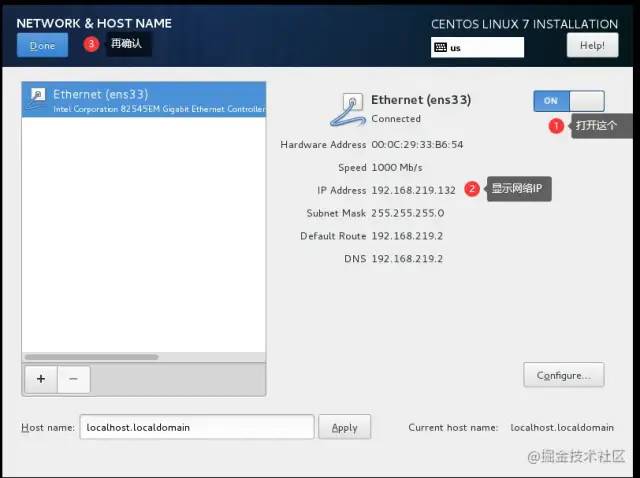

3.4 【开启网络-关键】:

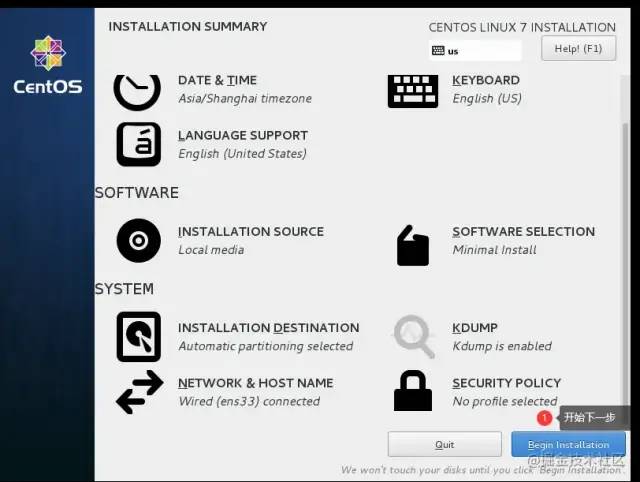

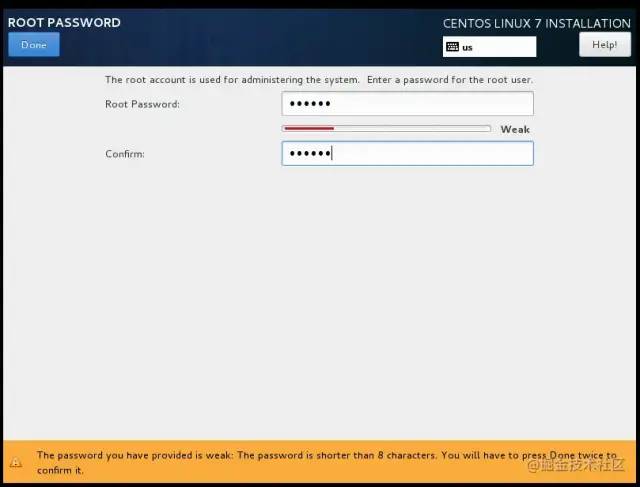

3.5 点击下一步---设置密码:

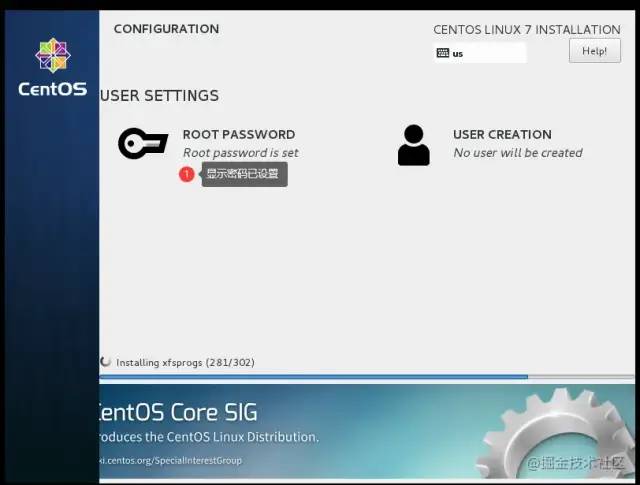

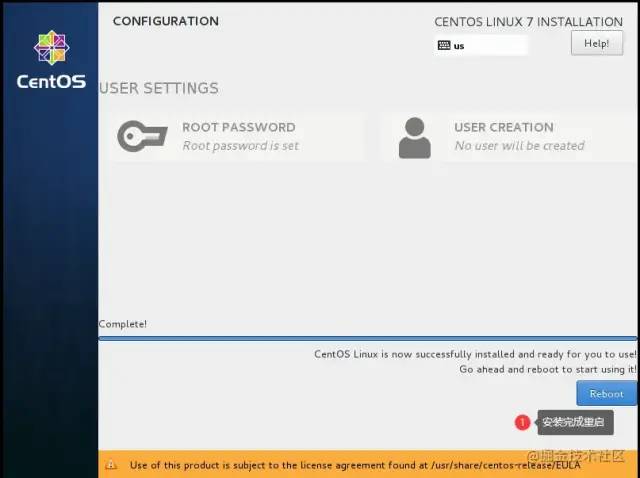

等待安装完成:

登入验证:

3.6 克隆其他虚拟机

然后关闭,进行克隆其他虚拟机出来:

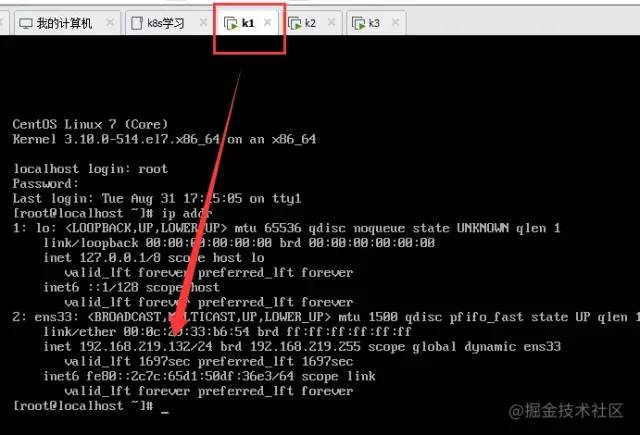

3.7 启动所有的虚拟主机

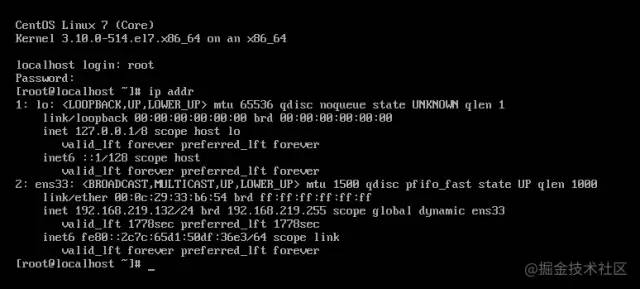

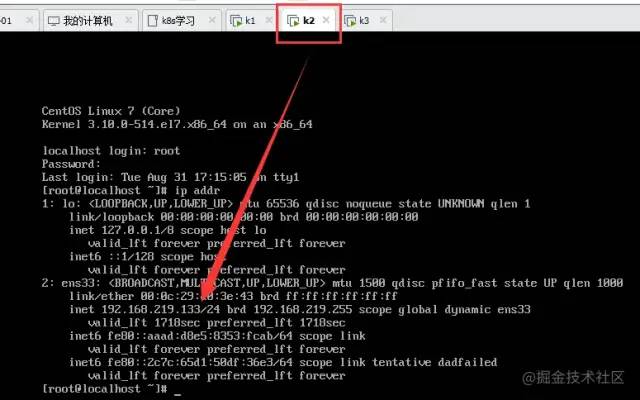

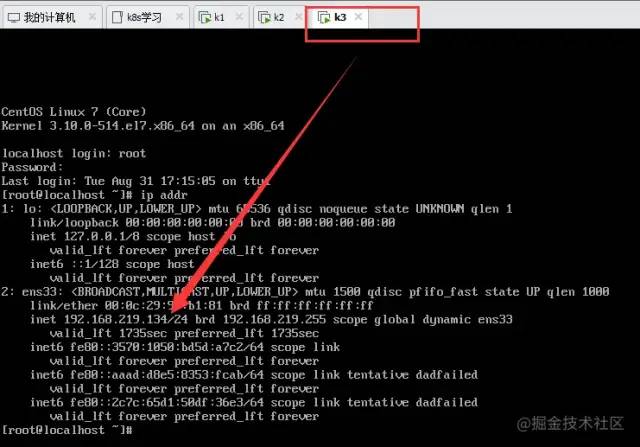

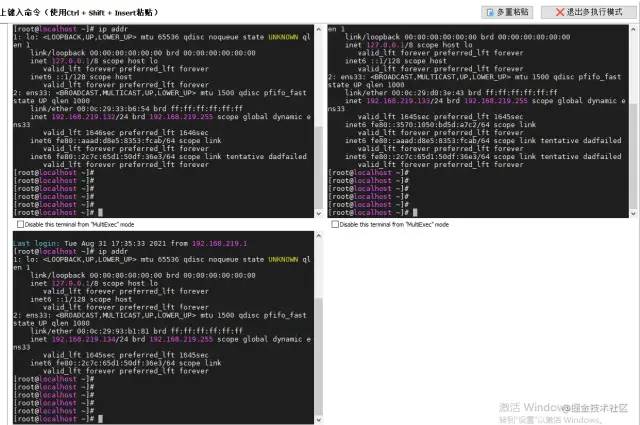

查看三台主机的IP:

4:安装前的准备节点规划

4.1 节点规划:

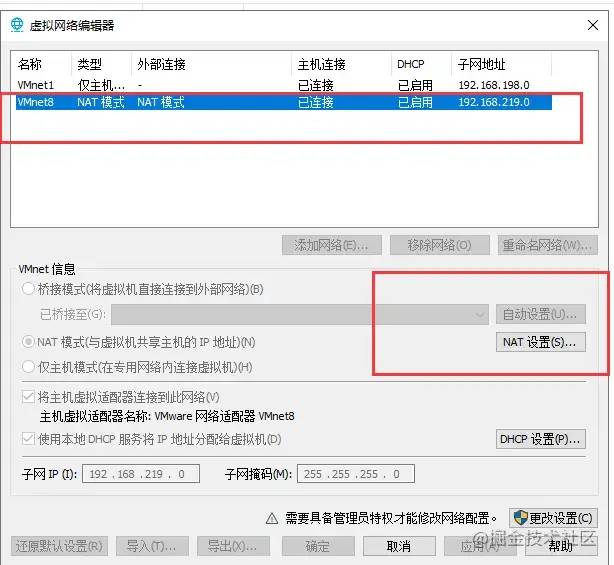

总结三个主机的IP:

192.168.219.138(master)

192.168.219.139(node2)

192.168.219.140(node3)

复制代码

4.2 节点相关基础设置:

查看主机IP另一种方式:

[root@localhost ~]# hostname -i

复制代码

4.2.1 节点规划:

| 主机名称 | 节点ip | 节点角色 | |

|---|---|---|---|

| k81-master01 | 192.168.219.138 | master | |

| k81-node02 | 192.168.219.139 | node | |

| k81-node03 | 192.168.219.140 | node |

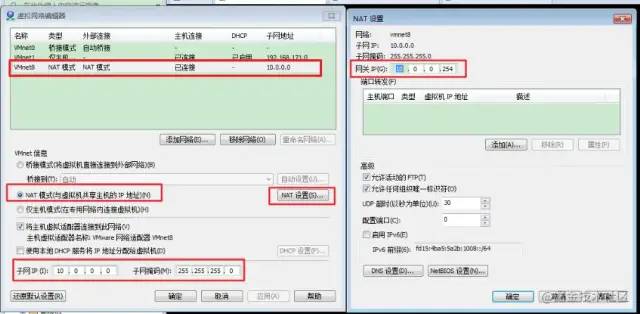

如果需要自定义虚拟机的网段的可以在这里进行修改:

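4.2.2 Change the hostname (on each of the three machines)

1. Change the hostname temporarily

hostname yin   # temporary change; lost after the server reboots

2. Change it permanently

Method 1: use the hostnamectl command

[root@localhost ~]# hostnamectl set-hostname k89--xxxxx

Method 2: edit the config file /etc/hostname, then save and exit

`[root@localhost ~]# vi /etc/hostname`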

4.2.3 Hostname resolution via hosts (run on all three machines)

Run the command below to append the entries to /etc/hosts:

cat >> /etc/hosts <<EOF

192.168.219.138 k8s-master01

192.168.219.139 k8s-node02

192.168.219.140 k8s-node03

EOF

Check the result:

[root@k81-node03 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.219.138 k8s-master01

192.168.219.139 k8s-node02

192.168.219.140 k8s-node03

# ping to check that the machines can reach each other

[root@k81-node03 ~]# ping k8s-master01
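Running the `cat >> /etc/hosts` step more than once appends the same entries again. As a sketch of an alternative, the hypothetical helper below (`add_host_entry` is not part of any tool) appends a mapping only when the hostname is not already present on a non-comment line. It takes the file path as an argument so it can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Append "IP HOSTNAME" to a hosts file unless HOSTNAME is already mapped.
# $1 = hosts file (e.g. /etc/hosts), $2 = IP address, $3 = hostname
add_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # Look for the hostname as a whole field (field 2 onward) on any
  # line that is not a comment; awk exits 0 only if it was found.
  if awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Usage (as root, on each machine):
#   add_host_entry /etc/hosts 192.168.219.138 k8s-master01
#   add_host_entry /etc/hosts 192.168.219.139 k8s-node02
#   add_host_entry /etc/hosts 192.168.219.140 k8s-node03
```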

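4.2.3 Disable SELinux (run on all three machines)

SELinux is enabled by default, and while it is enabled some services can fail to install.

# Check the SELinux status

[root@k81-node03 ~]# sestatus

# Disable temporarily

[root@k81-master01 ~]# setenforce 0

# Disable permanently (save and exit, then reboot the system)

Edit the config file /etc/selinux/config and set SELINUX to disabled:

[root@k81-master01 ~]# sed -i 's#SELINUX=enforcing#SELINUX=disabled#' /etc/selinux/config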

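4.2.4 Disable the firewall (run on all three machines)

[root@k81-node03 ~]# systemctl disable firewalld.service

[root@k81-node03 ~]# systemctl stop firewalld.service

Requirements may differ between versions, and some setups also need NetworkManager disabled. Decide based on your needs; I did not disable it here:

systemctl disable NetworkManager

systemctl stop NetworkManager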

4.2.5 Configure the EPEL repo, optional (run on all three machines)

rpm -ivh http://mirrors.aliyun.com/epel/epel-release-latest-7.noarch.rpm

4.2.6 Update packages (run on all three machines)

yum -y update

4.2.7 Turn off swap and keep it from being mounted at boot (run on all three machines)

[root@k81-node03 ~]# iptables -P FORWARD ACCEPT

[root@k81-node03 ~]# swapoff -a

[root@k81-node03 ~]# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
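The `sed` edit of the fstab file comments out the swap line, but running it a second time would prefix the line with another `#`. The sketch below wraps the same idea in a hypothetical helper, `comment_out_swap`, that skips lines that are already commented and keeps a `.bak` backup. The file path is a parameter so the function can be tried on a copy of the file first.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file.
# $1 = path to the file (e.g. /etc/fstab).
# Lines that already start with '#' are left untouched, so the
# function is safe to run repeatedly. A backup is written to "$1.bak".
comment_out_swap() {
  sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Usage (as root, after `swapoff -a`):
#   comment_out_swap /etc/fstab
```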

4.2.8 Enable kernel forwarding (run on all three machines)

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

vm.max_map_count=262144

EOF

4.2.9 Confirm that kernel forwarding is in effect (run on all three machines)

[root@k81-node03 ~]# modprobe br_netfilter

[root@k81-node03 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.max_map_count = 262144

[root@k81-node03 ~]#

4.2.10 Make sure the network works (run on all three machines)

ping www.baidu.com

4.2.11 Configure the base yum repo (run on all three machines)

[root@k81-node03 ~]# curl -o /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:100 2523 100 2523 0 0 8470 0 --:--:-- --:--:-- --:--:-- 8494

[root@k81-node03 ~]#

Install commonly used tools (on every virtual machine):

yum install -y net-tools vim lrzsz tree screen lsof tcpdump nc mtr nmap nano wget

4.2.12 Configure the Docker repo (run on all three machines)

[root@k81-node03 ~]# curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:100 2081 100 2081 0 0 6194 0 --:--:-- --:--:-- --:--:-- 6211

[root@k81-node03 ~]#


4.2.13 cat生成kubernets源(三台都执行)

cat <<EOF > etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kuberne

tes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

复制代码

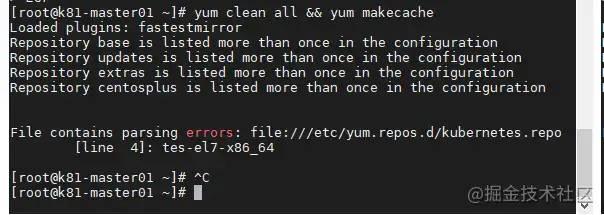

4.2.14 生成yum缓存(三台都执行)

异常问题,主要是配置文件出错!(空格问题!) 重新写如一下配置文件:

异常问题,主要是配置文件出错!(空格问题!) 重新写如一下配置文件:

cat <<EOF > etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

复制代码

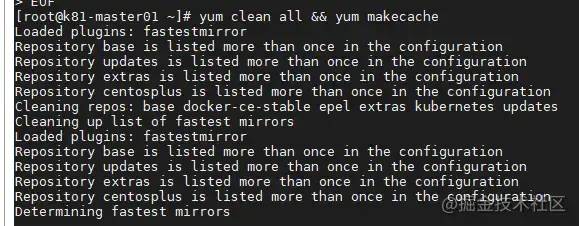

然后再执行生成缓存:

yum clean all && yum makecache

复制代码

5: Install Docker and verify the installation (run on all three machines)

PS: most of these installation notes come from Chaoge's notes. Reference: www.bilibili.com/video/BV1yP…

5.1 Install

yum install docker-ce -y

5.2: Configure a Docker registry mirror (run on all three machines)

# Create the directory

[root@k81-node03 ~]# mkdir -p /etc/docker

# Configure the mirror address

[root@k81-node03 ~]# nano /etc/docker/daemon.json

{

  "registry-mirrors": ["http://f1361db2.m.daocloud.io"]

}

# Start Docker

[root@k81-node03 ~]# systemctl enable docker && systemctl start docker

# Check Docker

[root@k81-node03 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6 Steps to install the k8s cluster

6.1 Install the packages for the k8s master and worker nodes (install on all three machines)

[root@k81-node03 ~]# yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2 --disableexcludes=kubernetes

6.2 Check the kubeadm version (run on all three machines)

[root@k81-master01 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"16", GitVersion:"v1.16.2", GitCommit:"c97fe5036ef3df2967d086711e6c0c405941e14b", GitTreeState:"clean", BuildDate:"2019-10-15T19:15:39Z", GoVersion:"go1.12.10", Compiler:"gc", Platform:"linux/amd64"}

6.3 Enable kubelet at boot (run on all three machines)

Purpose: container management

Pulling images, creating containers, and starting and stopping containers

Once enabled at boot, it automatically manages the k8s Pods (which contain the containers)

# Enable start on boot:

[root@k81-master01 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

# Check the status:

[root@k81-node03 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: inactive (dead)

Docs: https://kubernetes.io/docs/

[root@k81-node03 ~]#

6.4 Master01节点初始化文件配置(仅Master01节点执行)

生成配置文件:

[root@k81-master01 ~]# 创建目录:

[root@k81-master01 ~]# mkdir ~/k8s-install && cd ~/k8s-install

[root@k81-master01 ~]# 生成配置文件:

[root@k81-master01 k8s-install]# kubeadm config print init-defaults > kubeadm.yaml

[root@k81-master01 k8s-install]#

复制代码

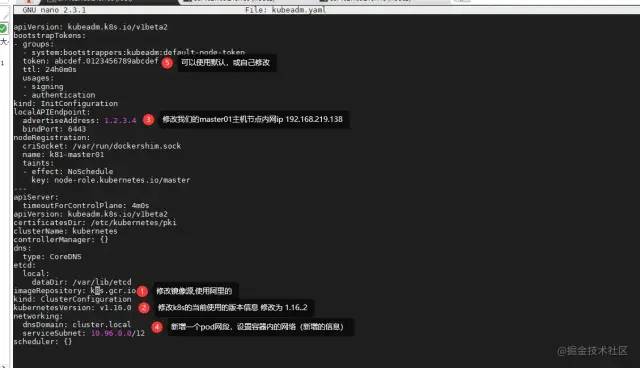

修改配置文件kubeadm.yaml:

修改后的文件:

修改后的文件:

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.219.138 # change to the internal IP of the k8s-master01 node

  bindPort: 6443

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: k81-master01

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers # change to the Aliyun image registry

kind: ClusterConfiguration

kubernetesVersion: v1.16.2 # change to the k8s version being installed

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16 # add the Pod subnet (the network used inside the containers)

  serviceSubnet: 10.96.0.0/12

scheduler: {}

6.4 检查所包含的镜像列表(仅Master01节点执行)

[root@k81-master01 k8s-install]# kubeadm config images list --config kubeadm.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.2

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.2

registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.2

registry.aliyuncs.com/google_containers/kube-proxy:v1.16.2

registry.aliyuncs.com/google_containers/pause:3.1

registry.aliyuncs.com/google_containers/etcd:3.3.15-0

registry.aliyuncs.com/google_containers/coredns:1.6.2

[root@k81-master01 k8s-install]#

复制代码

6.5 下载k8s集群所属的镜像并查看结果(仅Master01节点执行)

下载镜像

[root@k81-master01 k8s-install]# kubeadm config images pull --config kubeadm.yaml

复制代码

查看下载镜像结果:

[root@k81-master01 k8s-install]# docker images

复制代码

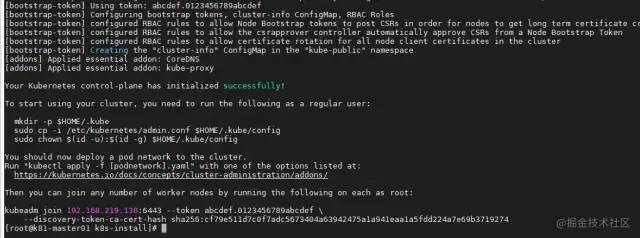

6.6 开始启动初始化k8s集群(仅Master01节点执行)

[root@k81-master01 k8s-install]# kubeadm init --config kubeadm.yaml

复制代码

Make sure the following success message appears:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

# Run the commands below to create the config file

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# You need to set up a Pod network before your Pods can work properly

# The k8s cluster network relies on an additional plugin

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

# Use the command below to join worker Nodes to the cluster:

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.219.138:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:cf79e511d7c0f7adc5673404a63942475a1a941eaa1a5fdd224a7e69b3719274

[root@k81-master01 k8s-install]#

As the message says, to start using the cluster you need one more step (setting up the cluster's kubeconfig file):

Run the setup commands now; the remaining steps come later:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Result:

[root@k81-master01 k8s-install]# mkdir -p $HOME/.kube

[root@k81-master01 k8s-install]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k81-master01 k8s-install]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k81-master01 k8s-install]#

List the current Pods and nodes:

[root@k81-master01 k8s-install]# kubectl get pods

No resources found in default namespace.

[root@k81-master01 k8s-install]# ^C

[root@k81-master01 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k81-master01 NotReady master 9m2s v1.16.2

[root@k81-master01 k8s-install]#

PS: at this point the master is the only node, and its status is still NotReady!

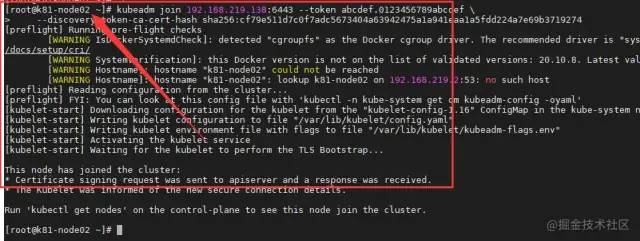

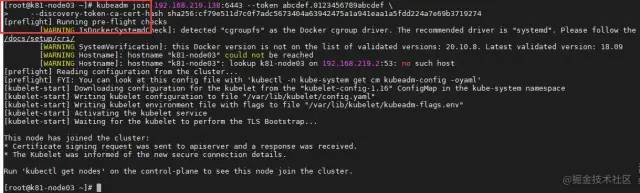

6.7 Join node02 and node03 to the cluster (run on node02 and node03)

Nodes join the cluster through token-based authentication.

The join command is the one printed by kubeadm init above:

kubeadm join 192.168.219.138:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:cf79e511d7c0f7adc5673404a63942475a1a941eaa1a5fdd224a7e69b3719274
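The bootstrap token in kubeadm.yaml is configured with `ttl: 24h0m0s`, so the join command printed by `kubeadm init` stops working about a day later. A minimal sketch for obtaining a fresh one uses `kubeadm token create --print-join-command` on the master. The wrapper name `print_join_command` and the `KUBEADM` override variable are assumptions added here so the call can be dry-run without a cluster.

```shell
#!/usr/bin/env bash
# Binary to call; override for a dry run, e.g. KUBEADM=echo.
KUBEADM="${KUBEADM:-kubeadm}"

# Create a new bootstrap token on the control-plane node and print the
# complete `kubeadm join ...` command to run on each worker.
print_join_command() {
  "$KUBEADM" token create --print-join-command
}

# Usage (as root on master01):
#   print_join_command
```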

图示:

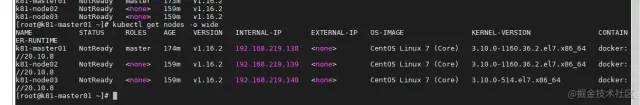

在master01节点再查看我们的节点加入的情况:

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@k81-master01 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k81-master01 NotReady master 19m v1.16.2

k81-node02 NotReady <none> 5m25s v1.16.2

k81-node03 NotReady <none> 5m10s v1.16.2

[root@k81-master01 k8s-install]#

复制代码

观察目前我们的所有的节点的状态是处于:NotReady!网络问题!

PS:处于NotReady的原因是当前k8s的集群,因为我们的k8s的集群网络依赖第三方的的网络的插件。

所以此时我们的需要安装这个插件!!
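When re-checking the cluster after each step, it can help to filter the `kubectl get nodes` table instead of scanning it by eye. The sketch below is a hypothetical helper (`not_ready_nodes` is not a kubectl feature) that reads the table on stdin and prints the nodes whose STATUS column is anything other than exactly `Ready`.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin and print the NAME of every
# node whose STATUS column is not exactly "Ready". The header row is
# skipped. Combined states such as "Ready,SchedulingDisabled" are also
# reported, since they do not match "Ready" exactly.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage (on master01):
#   kubectl get nodes | not_ready_nodes
```

An empty result means every node reports Ready.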

6.8 Install the flannel network plugin on master01

PS: if the download fails during installation, try a few more times!

Download the plugin manifest:

[root@k81-master01 k8s-install]# wget https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

--2021-09-01 13:44:13-- https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 0.0.0.0, ::

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|0.0.0.0|:443... failed: Connection refused.

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|::|:443... failed: Connection refused.

Fix: add a resolution entry for raw.githubusercontent.com to the hosts file:

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.219.138 k8s-master01

192.168.219.139 k8s-node01

192.168.219.140 k8s-node02

192.168.219.138 k8s-master01

192.168.219.139 k8s-node02

192.168.219.140 k8s-node03

151.101.76.133 raw.githubusercontent.com

Save the file, then retry:

[root@k81-master01 k8s-install]# wget https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

--2021-09-01 13:47:53-- https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.76.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.76.133|:443... connected.

Unable to establish SSL connection.

This produces a new error: the host now resolves and connects, but the SSL connection cannot be established (see the last line of the output above).

Work around it by creating the file manually, or by downloading it elsewhere and uploading it:

[root@k81-master01 k8s-install]# touch kube-flannel.yml

File contents:

---

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: psp.flannel.unprivileged

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default

seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default

apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default

apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default

spec:

privileged: false

volumes:

- configMap

- secret

- emptyDir

- hostPath

allowedHostPaths:

- pathPrefix: "/etc/cni/net.d"

- pathPrefix: "/etc/kube-flannel"

- pathPrefix: "/run/flannel"

readOnlyRootFilesystem: false

# Users and groups

runAsUser:

rule: RunAsAny

supplementalGroups:

rule: RunAsAny

fsGroup:

rule: RunAsAny

# Privilege Escalation

allowPrivilegeEscalation: false

defaultAllowPrivilegeEscalation: false

# Capabilities

allowedCapabilities: ['NET_ADMIN']

defaultAddCapabilities: []

requiredDropCapabilities: []

# Host namespaces

hostPID: false

hostIPC: false

hostNetwork: true

hostPorts:

- min: 0

max: 65535

# SELinux

seLinux:

# SELinux is unsed in CaaSP

rule: 'RunAsAny'

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

rules:

- apiGroups: ['extensions']

resources: ['podsecuritypolicies']

verbs: ['use']

resourceNames: ['psp.flannel.unprivileged']

- apiGroups:

- ""

resources:

- pods

verbs:

- get

- apiGroups:

- ""

resources:

- nodes

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes/status

verbs:

- patch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1beta1

metadata:

name: flannel

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: flannel

subjects:

- kind: ServiceAccount

name: flannel

namespace: kube-system

---

apiVersion: v1

kind: ServiceAccount

metadata:

name: flannel

namespace: kube-system

---

kind: ConfigMap

apiVersion: v1

metadata:

name: kube-flannel-cfg

namespace: kube-system

labels:

tier: node

app: flannel

data:

cni-conf.json: |

{

"cniVersion": "0.2.0",

"name": "cbr0",

"plugins": [

{

"type": "flannel",

"delegate": {

"hairpinMode": true,

"isDefaultGateway": true

}

},

{

"type": "portmap",

"capabilities": {

"portMappings": true

}

}

]

}

net-conf.json: |

{

"Network": "10.244.0.0/16",

"Backend": {

"Type": "vxlan"

}

}

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-amd64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- amd64

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-amd64

command:

- cp

args:

- -f

- etc/kube-flannel/cni-conf.json

- etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: etc/cni/net.d

- name: flannel-cfg

mountPath: etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-amd64

command:

- opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: run/flannel

- name: flannel-cfg

mountPath: etc/kube-flannel/

volumes:

- name: run

hostPath:

path: run/flannel

- name: cni

hostPath:

path: etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm64

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- arm64

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-arm64

command:

- cp

args:

- -f

- etc/kube-flannel/cni-conf.json

- etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: etc/cni/net.d

- name: flannel-cfg

mountPath: etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-arm64

command:

- opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: run/flannel

- name: flannel-cfg

mountPath: etc/kube-flannel/

volumes:

- name: run

hostPath:

path: run/flannel

- name: cni

hostPath:

path: etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-arm

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- arm

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-arm

command:

- cp

args:

- -f

- etc/kube-flannel/cni-conf.json

- etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: etc/cni/net.d

- name: flannel-cfg

mountPath: etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-arm

command:

- opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: run/flannel

- name: flannel-cfg

mountPath: etc/kube-flannel/

volumes:

- name: run

hostPath:

path: run/flannel

- name: cni

hostPath:

path: etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-ppc64le

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- ppc64le

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-ppc64le

command:

- cp

args:

- -f

- etc/kube-flannel/cni-conf.json

- etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: etc/cni/net.d

- name: flannel-cfg

mountPath: etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-ppc64le

command:

- opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: run/flannel

- name: flannel-cfg

mountPath: etc/kube-flannel/

volumes:

- name: run

hostPath:

path: run/flannel

- name: cni

hostPath:

path: etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-s390x

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: beta.kubernetes.io/os

operator: In

values:

- linux

- key: beta.kubernetes.io/arch

operator: In

values:

- s390x

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.11.0-s390x

command:

- cp

args:

- -f

- etc/kube-flannel/cni-conf.json

- etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: etc/cni/net.d

- name: flannel-cfg

mountPath: etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.11.0-s390x

command:

- opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: run/flannel

- name: flannel-cfg

mountPath: etc/kube-flannel/

volumes:

- name: run

hostPath:

path: run/flannel

- name: cni

hostPath:

path: etc/cni/net.d

- name: flannel-cfg

configMap:

name: kube-flannel-cfg

复制代码

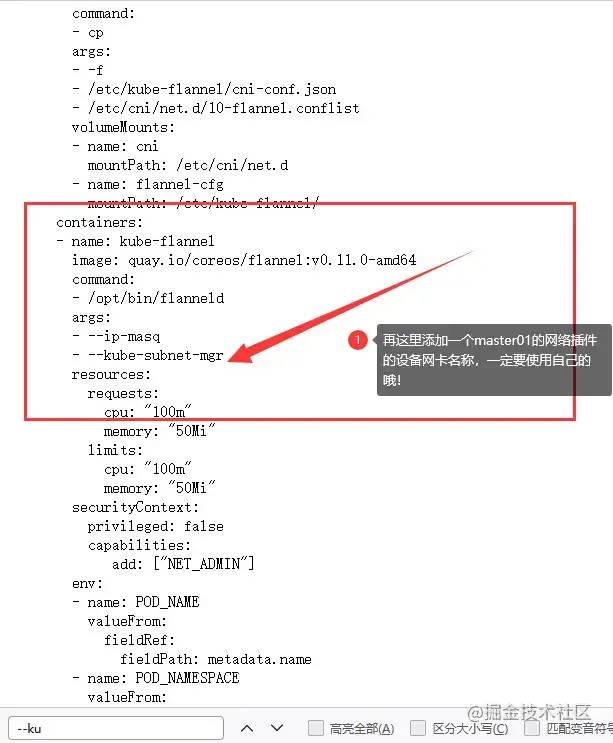

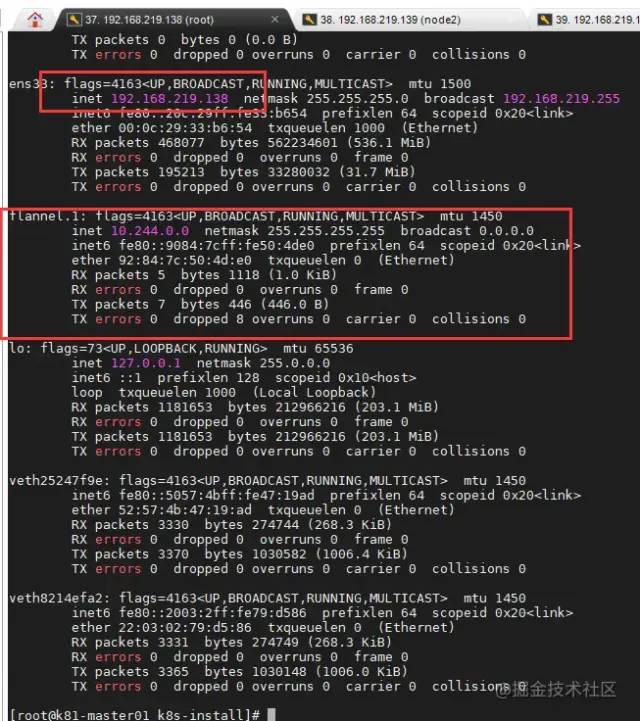

查看我们的master01的网卡信息(我们的使用ifconfig查看网卡名称)

PS:如果ifconfig无发生使用,则安装一下:yum install net-tools

查看网卡名为:ens33

[root@k81-master01 k8s-install]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:f9:76:b7:09 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.219.138 netmask 255.255.255.0 broadcast 192.168.219.255

inet6 fe80::20c:29ff:fe33:b654 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:33:b6:54 txqueuelen 1000 (Ethernet)

RX packets 324237 bytes 440648487 (420.2 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 91362 bytes 8962995 (8.5 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 261039 bytes 47961692 (45.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 261039 bytes 47961692 (45.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@k81-master01 k8s-install]#

复制代码

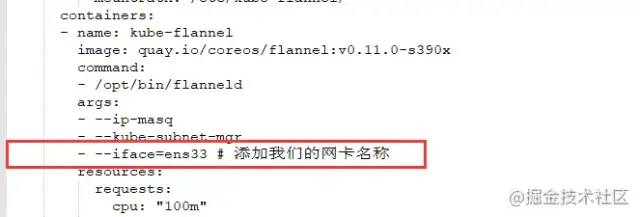

然后修改我们的kube-flannel.yml

- --iface=ens33 # 添加我们的网卡名称

复制代码
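The interface name differs between machines (it is ens33 here). As an alternative to reading the ifconfig output by eye, the sketch below extracts the interface of the default route from `ip route show default` output. The helper name `default_iface` is hypothetical, and it assumes the machine's main NIC is the one carrying the default route, which holds for this single-NIC VM setup but not necessarily elsewhere.

```shell
#!/usr/bin/env bash
# Read `ip route show default` output on stdin and print the interface
# name that follows the "dev" keyword on the first default route.
default_iface() {
  awk '$1 == "default" {
         for (i = 1; i < NF; i++)
           if ($i == "dev") { print $(i + 1); exit }
       }'
}

# Usage:
#   ip route show default | default_iface
```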

6.9 在master01下载和安装flannel网络插件镜像:

查看节点一些网络信息:

下载插件镜像:

[root@k81-master01 k8s-install]# docker pull quay.io/coreos/flannel:v0.11.0-amd64

复制代码

安装插件:

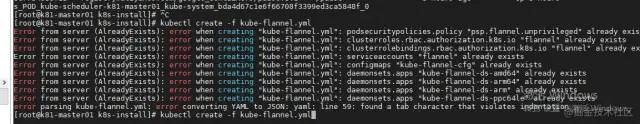

[root@k81-master01 k8s-install]# kubectl create -f kube-flannel.yml

复制代码

错误提示:

是已经完成安装的原因!

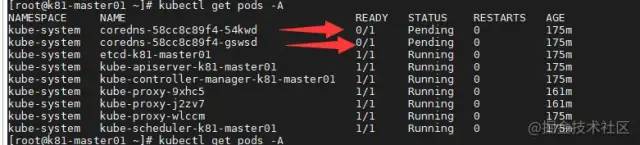

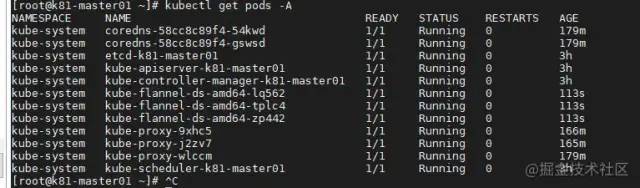

查看节点的安装运行情况:

[root@k81-master01 ~]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-58cc8c89f4-54kwd 1/1 Running 0 179m

kube-system coredns-58cc8c89f4-gswsd 1/1 Running 0 179m

kube-system etcd-k81-master01 1/1 Running 0 3h

kube-system kube-apiserver-k81-master01 1/1 Running 0 3h

kube-system kube-controller-manager-k81-master01 1/1 Running 0 3h

kube-system kube-flannel-ds-amd64-lq562 1/1 Running 0 113s

kube-system kube-flannel-ds-amd64-tplc4 1/1 Running 0 113s

kube-system kube-flannel-ds-amd64-zp442 1/1 Running 0 113s

kube-system kube-proxy-9xhc5 1/1 Running 0 166m

kube-system kube-proxy-j2zv7 1/1 Running 0 165m

kube-system kube-proxy-wlccm 1/1 Running 0 179m

kube-system kube-scheduler-k81-master01 1/1 Running 0 3h

[root@k81-master01 ~]#

复制代码

ps:需要确保当前所有阶段运行的状态都是runing,k8s的集群运行才正常!

下面这种状态,还无法正常的运行哟!

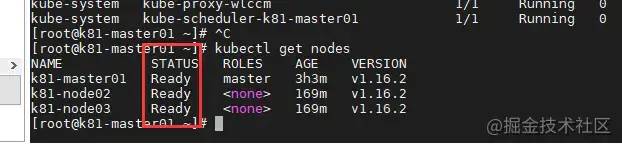

再次查看所有的节点状态(确保都处于ready状态!):

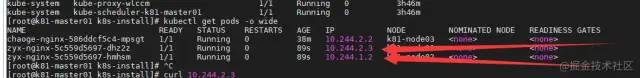

7 实验,使用k8s安装nginx应用

Optional: install command completion:

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

Goal: deploy an nginx service (Kubernetes chooses the node it runs on).

7.1 Steps:

1: (run on the master01 node) use kubectl run NAME --image=IMAGE to start a service, then check its status

[root@k81-master01 k8s-install]# kubectl run chaoge-nginx --image=nginx:alpine

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

deployment.apps/chaoge-nginx created

[root@k81-master01 k8s-install]# kubectl get pods

NAME READY STATUS RESTARTS AGE

chaoge-nginx-586ddcf5c4-mpsgt 0/1 ContainerCreating 0 23s

[root@k81-master01 k8s-install]# ^C

[root@k81-master01 k8s-install]# kubectl get pods

NAME READY STATUS RESTARTS AGE

chaoge-nginx-586ddcf5c4-mpsgt 1/1 Running 0 39s

[root@k81-master01 k8s-install]#

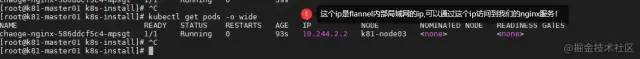

# 查看服务分配到 k81-node03 节点上:

[root@k81-master01 k8s-install]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

chaoge-nginx-586ddcf5c4-mpsgt 1/1 Running 0 93s 10.244.2.2 k81-node03 <none> <none>

[root@k81-master01 k8s-install]#

2: Access nginx through its address on the flannel cluster network:

Verify access over the flannel overlay network:

Command:

curl 10.244.2.2

Result:

[root@k81-master01 k8s-install]# curl 10.244.2.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k81-master01 k8s-install]#


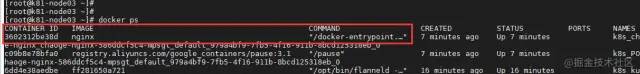

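Inspect the containers running on the node3 node:

7.2 Making the pod service reachable from outside:

7.2.1 Inspecting the network information

Network information on master01: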

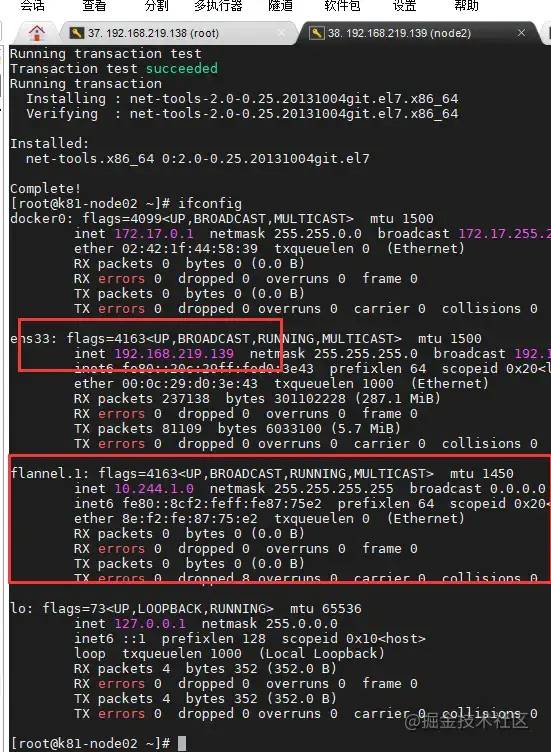

Network information on node2:

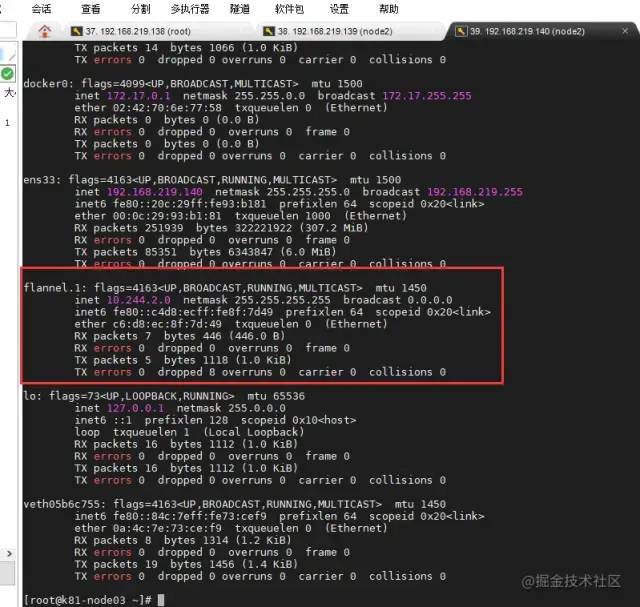

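Network information on node3:

7.2.2 k8s high-availability experiment: creating a Deployment resource

Expected results of the experiment: 1: automatic failover and self-healing when a node fails 2: scaling the replicas out and in 3: load balancing

Steps:

1: Write a YAML resource manifest

nano zyx-nginx-des.yaml

Mind the formatting (YAML indentation is significant):

apiVersion: apps/v1
kind: Deployment
metadata:
  name: zyx-nginx
  labels:
    app: nginx
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:alpine
        ports:
        - containerPort: 80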

2: Apply the YAML manifest

[root@k81-master01 k8s-install]# kubectl apply -f zyx-nginx-des.yaml

3: After applying it, check how the pods are doing:

[root@k81-master01 k8s-install]# kubectl get pods

NAME READY STATUS RESTARTS AGE

chaoge-nginx-586ddcf5c4-mpsgt 1/1 Running 0 36m

zyx-nginx-5c559d5697-dhz2z 1/1 Running 0 8s

zyx-nginx-5c559d5697-hmhsm 0/1 ContainerCreating 0 8s

[root@k81-master01 k8s-install]# kubectl get pods

NAME READY STATUS RESTARTS AGE

chaoge-nginx-586ddcf5c4-mpsgt 1/1 Running 0 37m

zyx-nginx-5c559d5697-dhz2z 1/1 Running 0 48s

zyx-nginx-5c559d5697-hmhsm 1/1 Running 0 48s

[root@k81-master01 k8s-install]#

[root@k81-master01 k8s-install]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

chaoge-nginx-586ddcf5c4-mpsgt 1/1 Running 0 38m 10.244.2.2 k81-node03 <none> <none>

zyx-nginx-5c559d5697-dhz2z 1/1 Running 0 89s 10.244.2.3 k81-node03 <none> <none>

zyx-nginx-5c559d5697-hmhsm 1/1 Running 0 89s 10.244.1.2 k81-node02 <none> <none>

[root@k81-master01 k8s-install]#
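Scaling out and in, one of the goals listed for this experiment, can be tried on the zyx-nginx Deployment created above. This is a minimal sketch rather than the author's procedure: the function name `scale_and_wait`, the retry count and the 2-second polling interval are assumptions, while `kubectl scale` and the `.status.readyReplicas` field are standard.

```shell
# Scale a Deployment and poll until the requested number of replicas is ready.
# $1 = deployment name, $2 = desired replicas, $3 = max polls (default 30)
scale_and_wait() {
  name=$1
  want=$2
  tries=${3:-30}
  kubectl scale deployment "$name" --replicas="$want" >/dev/null || return 1
  while [ "$tries" -gt 0 ]; do
    # readyReplicas is empty when no replica is ready, so default it to 0.
    ready=$(kubectl get deployment "$name" -o jsonpath='{.status.readyReplicas}')
    if [ "${ready:-0}" = "$want" ]; then
      echo "$name: $ready/$want ready"
      return 0
    fi
    tries=$((tries - 1))
    sleep 2
  done
  echo "$name: timed out waiting for $want replicas" >&2
  return 1
}

# Usage:
#   scale_and_wait zyx-nginx 3    # scale out
#   scale_and_wait zyx-nginx 2    # scale back in
```

Self-healing can be observed in the same way: delete one of the pods with `kubectl delete pod <pod-name>` and the Deployment starts a replacement to get back to the desired replica count.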

4: Access the services from inside the cluster network (this succeeds; in-cluster access works fine):

Access details:

[root@k81-master01 k8s-install]# curl 10.244.2.3

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k81-master01 k8s-install]# curl 10.244.1.2

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k81-master01 k8s-install]#

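7.2.3 Creating a Service resource to act as a load balancer

So far the pods we started can only be reached from inside the cluster network; they are not reachable from outside. How do we expose them externally and load-balance across them at the same time?

1: List the Deployment resources that currently exist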

[root@k81-master01 k8s-install]# kubectl get deployments

NAME READY UP-TO-DATE AVAILABLE AGE

chaoge-nginx 1/1 1 1 48m

# Two replicas are running, scheduled onto different nodes

zyx-nginx 2/2 2 2 11m

[root@k81-master01 k8s-install]#

2: Expose the zyx-nginx application through a LoadBalancer Service

[root@k81-master01 k8s-install]# kubectl expose deployment zyx-nginx --port=80 --type=LoadBalancer


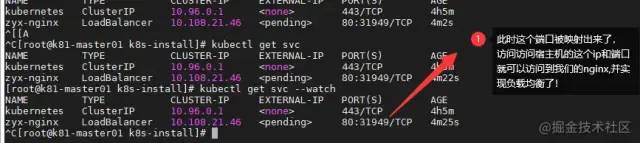

3: Check the Service (svc) resource

[root@k81-master01 k8s-install]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4h5m

zyx-nginx LoadBalancer 10.108.21.46 <pending> 80:31949/TCP 4m22s

[root@k81-master01 k8s-install]# kubectl get svc --watch

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4h5m

zyx-nginx LoadBalancer 10.108.21.46 <pending> 80:31949/TCP 4m25s


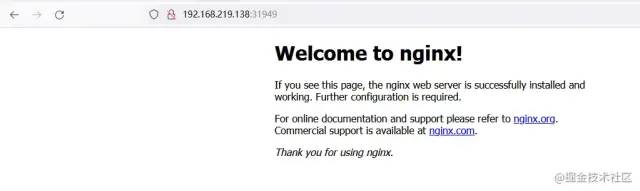

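4: Determine the mapped port: in the PORT(S) column above, 80:31949/TCP means that port 80 of the Service is mapped to NodePort 31949 on every node. EXTERNAL-IP stays <pending> because this self-hosted cluster has no cloud provider that could provision an external load balancer.

5: Verify access from outside the cluster by opening http://<node-IP>:31949/ from a machine that can reach the nodes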
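Steps 4 and 5 can be scripted. The sketch below assumes the Service has a single port; the helper name `nodeport_url` is hypothetical, and the node IP passed to it has to be one of your own nodes (192.168.219.140 is the address that `kubectl describe pod` reports for k81-node03 later in this article).

```shell
# Print the external URL of a NodePort/LoadBalancer Service.
# $1 = service name, $2 = IP address of any cluster node
nodeport_url() {
  port=$(kubectl get svc "$1" -o jsonpath='{.spec.ports[0].nodePort}') || return 1
  if [ -z "$port" ]; then
    echo "service $1 has no nodePort" >&2
    return 1
  fi
  echo "http://$2:$port/"
}

# Usage:
#   curl "$(nodeport_url zyx-nginx 192.168.219.140)"
```

Because kube-proxy opens the NodePort on every node, any node's IP should work here, not only the nodes that actually run an nginx pod.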

8 Installing the k8s graphical dashboard

8.1: Download the dashboard installation YAML file

# Copyright 2017 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1

kind: Namespace

metadata:

name: kubernetes-dashboard

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

---

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

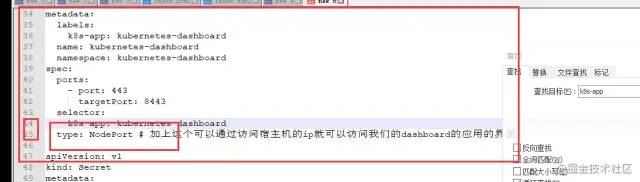

type: NodePort # 加上这个可以通过访问宿主机的ip就可以访问我们的dashboard的应用的界面

apiVersion: v1

kind: Secret

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard-certs

namespace: kubernetes-dashboard

type: Opaque

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""

---

apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque

---

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard

---

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]

---

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-rc5
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule

---

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper

---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.3
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

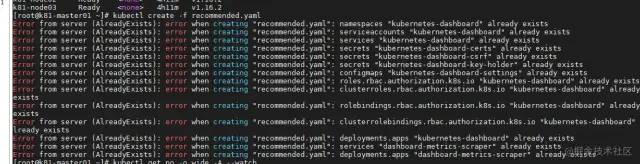

8.2: Apply the YAML file to create the application:

[root@k81-master01 ~]# kubectl create -f recommended.yaml

Problem encountered:

The application has already been started! Check the pods in all namespaces:

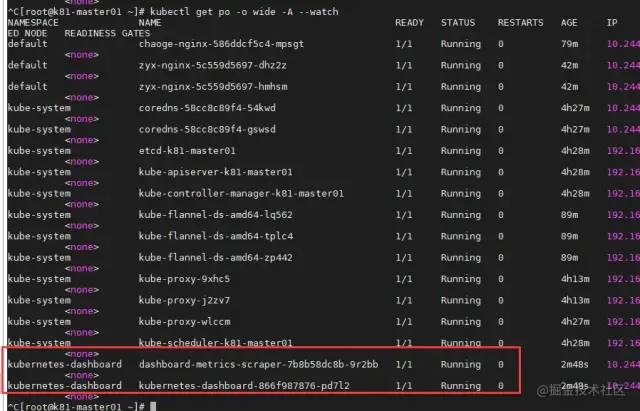

[root@k81-master01 ~]# kubectl get po -o wide -A --watch

Final check: startup is complete!

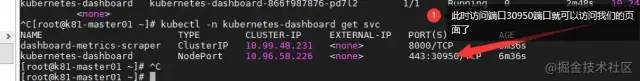

8.3: Once both pods are running, check the svc resources:

Note: list the resources in the kubernetes-dashboard namespace:

[root@k81-master01 ~]# kubectl -n kubernetes-dashboard get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.99.48.231 <none> 8000/TCP 6m36s

kubernetes-dashboard NodePort 10.96.58.226 <none> 443:30950/TCP 6m36s

[root@k81-master01 ~]#


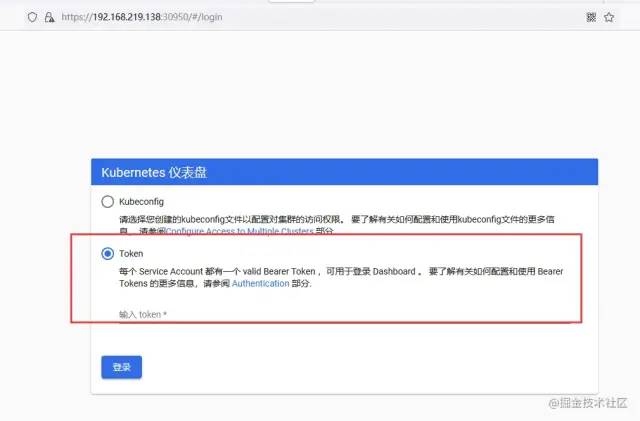

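4: Verify access (Firefox):

Visit: http://192.168.219.138:30950/

The browser reports that HTTPS is required, because NodePort 30950 forwards to the Service's HTTPS port 443.

So the address to use is https://192.168.219.138:30950/

Log in with a token: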

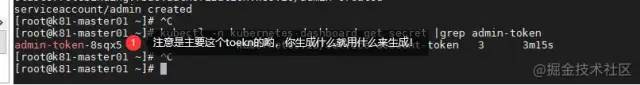

8.4: Create an account and an authentication token for accessing the dashboard:

Create the admin.yaml file:

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kubernetes-dashboard

Apply the YAML to create the resources:

[root@k81-master01 ~]# kubectl create -f admin.yaml

clusterrolebinding.rbac.authorization.k8s.io/admin created

serviceaccount/admin created

[root@k81-master01 ~]#


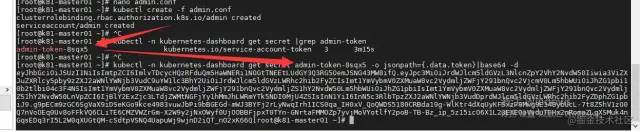

Look up the token Secret that will be used to log in:

[root@k81-master01 ~]# kubectl -n kubernetes-dashboard get secret |grep admin-token

admin-token-8sqx5 kubernetes.io/service-account-token 3 3m15s

[root@k81-master01 ~]#

Decode the signed token:

[root@k81-master01 ~]# kubectl -n kubernetes-dashboard get secret admin-token-8sqx5 -o jsonpath={.data.token}|base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6ImlvTDcycHQzRFduQm5HaWNERi1NOGtTNEEtLUdGY3Q3RG5OemJSNG43MW8ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi04c3F4NSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjBlY2ExZjc3LTdjZWMtNGFjYy1hMmJhLWRmYTk5NDI0MjU4ZSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbiJ9.g9pECm9zGC6SgVaX9iDSeKGo9kce4983vuwJbPi9bBGEGd-mWJ3BYFj2rLyNwqIrh1ICS0qa_IH0xV_QoQWDS5180CRBda19g-WlKtr4dXqUyKFSxzP9Mwg9E348ybUcL-7t8ZShV1zO0Q7nVoOEq0Uv8oFFkVQ6CLiTE6CMZVWZrGm-X2W9y2jNxOWyf0UjOOBBFjpxT0TYn-GNrtaFMMOZp7yvjMoVYotlfY2poB-TB-Bz_ip_5z15icO6X1L23EXEOaVvduTEHx2nRomaZLgXSMuk4nGqsEDq3rI5L2W0qXUGtQM-cSdtpVSNQ4UapuWj9wjnD2iQT_nO2xK66Q

Then copy the token into the login page to sign in to the dashboard:

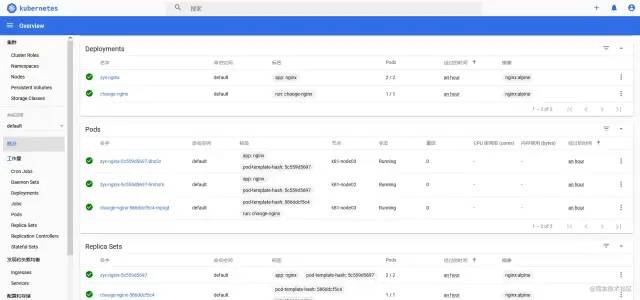
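The token printed above is a JWT, so its middle section can be decoded to confirm which service account it belongs to before pasting it anywhere. The helper below is a minimal sketch: the name `jwt_payload` is hypothetical, and it assumes a `base64` command that accepts `-d` (GNU coreutils). It only decodes the payload and does not verify the signature.

```shell
# Print the decoded payload (the second dot-separated part) of a JWT passed as $1.
jwt_payload() {
  # JWTs use the URL-safe base64 alphabet; map it back to the standard one.
  part=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # JWTs also drop the trailing '=' padding; restore it so base64 -d accepts the input.
  case $(( ${#part} % 4 )) in
    2) part="${part}==" ;;
    3) part="${part}=" ;;
  esac
  printf '%s' "$part" | base64 -d
}

# Usage (secret name as found with the grep above):
#   token=$(kubectl -n kubernetes-dashboard get secret admin-token-8sqx5 -o jsonpath={.data.token} | base64 -d)
#   jwt_payload "$token"
#
# On Kubernetes 1.24 and later, token Secrets are no longer created automatically
# for service accounts; there a short-lived token can be requested with:
#   kubectl -n kubernetes-dashboard create token admin
```

Since this account is bound to cluster-admin, treat the token like a root password and avoid publishing it.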

9 A simple example of deploying a Deployment on k8s

This example comes from the official documentation:

9.1 Creating the YAML file for an nginx Deployment

As the examples above show, deploying an application really comes down to writing a YAML file that declares which resource objects to use and which instances to create.

So here, too, we start by writing the YAML file:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2 # tells deployment to run 2 pods matching the template
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80

The YAML file above describes a Deployment that runs the nginx:1.14.2 Docker image.

9.2 Creating a Deployment from the YAML file

Fetch the YAML file over the network and create the Deployment:

kubectl apply -f https://k8s.io/examples/application/deployment.yaml

Alternatively, download the file first and apply it locally:

kubectl apply -f deployment.yaml

9.3 Viewing the Deployment details:

[root@k81-master01 k8s-install]# kubectl describe deployment nginx-deployment

Name: nginx-deployment

Namespace: default

CreationTimestamp: Thu, 02 Sep 2021 15:37:04 +0800

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 3

kubectl.kubernetes.io/last-applied-configuration:

{"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{},"name":"nginx-deployment","namespace":"default"},"spec":{"replica...

Selector: app=nginx

Replicas: 2 desired | 2 updated | 2 total | 2 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge

Pod Template:

Labels: app=nginx

Containers:

nginx:

Image: nginx:1.14.2

Port: 80/TCP

Host Port: 0/TCP

Environment: <none>

Mounts: <none>

Volumes: <none>

Conditions:

Type Status Reason

---- ------ ------

Available True MinimumReplicasAvailable

Progressing True NewReplicaSetAvailable

OldReplicaSets: <none>

NewReplicaSet: nginx-deployment-574b87c764 (2/2 replicas created)

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal ScalingReplicaSet 45s deployment-controller Scaled down replica set nginx-deployment-574b87c764 to 2

[root@k81-master01 k8s-install]#
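The line "RollingUpdateStrategy: 25% max unavailable, 25% max surge" in the output above is easier to read as absolute numbers. Kubernetes rounds the maxSurge percentage up and the maxUnavailable percentage down; the sketch below just reproduces that arithmetic, and the function name `rolling_bounds` is hypothetical.

```shell
# Convert percentage-based rolling-update limits into absolute pod counts.
# $1 = replicas, $2 = percentage used for both limits (default 25)
rolling_bounds() {
  replicas=$1
  pct=${2:-25}
  max_surge=$(( (replicas * pct + 99) / 100 ))   # percentage of replicas, rounded up
  max_unavailable=$(( replicas * pct / 100 ))    # percentage of replicas, rounded down
  echo "replicas=$replicas maxSurge=$max_surge maxUnavailable=$max_unavailable"
}

rolling_bounds 2    # prints: replicas=2 maxSurge=1 maxUnavailable=0
rolling_bounds 4    # prints: replicas=4 maxSurge=1 maxUnavailable=1
```

For the 2-replica Deployment shown here, this means an update may start one extra pod but must keep both existing pods available until the new one is ready.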

9.4 Listing the Pods created by the Deployment (the zyx-nginx Pods show up as well, because they carry the same app=nginx label):

[root@k81-master01 k8s-install]# kubectl get pods -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-deployment-574b87c764-dwlg8 1/1 Running 0 23h

nginx-deployment-574b87c764-zzkcz 1/1 Running 0 23h

zyx-nginx-5c559d5697-dhz2z 1/1 Running 0 47h

zyx-nginx-5c559d5697-hmhsm 1/1 Running 0 47h

[root@k81-master01 k8s-install]#

9.5 Showing the details of a single Pod:

[root@k81-master01 k8s-install]# kubectl describe pod nginx-deployment-574b87c764-dwlg8

Name: nginx-deployment-574b87c764-dwlg8

Namespace: default

Priority: 0

Node: k81-node03/192.168.219.140

Start Time: Thu, 02 Sep 2021 17:15:35 +0800

Labels: app=nginx

pod-template-hash=574b87c764

Annotations: <none>

Status: Running

IP: 10.244.2.7

IPs:

IP: 10.244.2.7

Controlled By: ReplicaSet/nginx-deployment-574b87c764

Containers:

nginx:

Container ID: docker://ec0f195caae860f29fb0d5b80f45d6546b98ccdab796fe63605ae06cadcd6505

Image: nginx:1.14.2

Image ID: docker-pullable://nginx@sha256:f7988fb6c02e0ce69257d9bd9cf37ae20a60f1df7563c3a2a6abe24160306b8d

Port: 80/TCP

Host Port: 0/TCP

State: Running

Started: Thu, 02 Sep 2021 17:15:36 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from default-token-m4qkm (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

default-token-m4qkm:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-m4qkm

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events: <none>

[root@k81-master01 k8s-install]#

9.6 Updating the Deployment by updating the YAML file

The deployment-update.yaml file:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.16.1 # Update the version of nginx from 1.14.2 to 1.16.1
        ports:
        - containerPort: 80

Apply the new YAML:

kubectl apply -f https://k8s.io/examples/application/deployment-update.yaml

Watch the Deployment create Pods with new names while deleting the old Pods:

kubectl get pods -l app=nginx
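Instead of repeatedly listing pods, the update can be applied and then followed until it finishes, with a rollback if it does not. This is a minimal sketch under stated assumptions: the function name `apply_or_rollback` and the 120-second timeout are hypothetical choices, while `kubectl rollout status` and `kubectl rollout undo` are standard subcommands.

```shell
# Apply a manifest, wait for the Deployment rollout, and undo it on failure.
# $1 = manifest file or URL, $2 = deployment name
apply_or_rollback() {
  manifest=$1
  name=$2
  kubectl apply -f "$manifest" || return 1
  if kubectl rollout status "deployment/$name" --timeout=120s; then
    echo "rollout of $name succeeded"
  else
    echo "rollout of $name failed; rolling back" >&2
    kubectl rollout undo "deployment/$name"
    return 1
  fi
}

# Usage:
#   apply_or_rollback https://k8s.io/examples/application/deployment-update.yaml nginx-deployment
```

`kubectl rollout undo` returns the Deployment to its previous revision, which is what the revision annotation in the `kubectl describe deployment` output keeps track of.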

9.7 Scaling the application by increasing the replica count

The deployment-scale.yaml file:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 4 # Update the replicas from 2 to 4
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80

Apply the new YAML file:

[root@k81-master01 k8s-install]# kubectl apply -f https://k8s.io/examples/application/deployment-scale.yaml

Verify that the Deployment has 4 Pods (not counting the zyx-nginx ones):

[root@k81-master01 k8s-install]# kubectl get pods -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-deployment-574b87c764-477tb 1/1 Running 0 39s

nginx-deployment-574b87c764-dwlg8 1/1 Running 0 23h

nginx-deployment-574b87c764-jx9k8 1/1 Running 0 39s

nginx-deployment-574b87c764-zzkcz 1/1 Running 0 23h

zyx-nginx-5c559d5697-dhz2z 1/1 Running 0 47h

zyx-nginx-5c559d5697-hmhsm 1/1 Running 0 47h

[root@k81-master01 k8s-install]#
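Because both Deployments label their pods app=nginx, the listing above mixes the two and the pods have to be counted by name. A small sketch of that count follows, assuming the usual `<deployment>-<hash>-<suffix>` pod naming; the helper name `count_pods` is hypothetical.

```shell
# Read `kubectl get pods` output on stdin and count the pods whose name
# starts with "<deployment name>-".
count_pods() {
  awk -v p="$1-" 'NR > 1 && index($1, p) == 1 { n++ } END { print n + 0 }'
}

# Usage:
#   kubectl get pods -l app=nginx | count_pods nginx-deployment
```

Giving each Deployment its own label value (for example app=zyx-nginx) would avoid the overlap altogether, so that the `-l` selector alone is enough.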

9.8 Deleting the Deployment by name:

[root@k81-master01 k8s-install]# kubectl delete deployment nginx-deployment

deployment.apps "nginx-deployment" deleted

[root@k81-master01 k8s-install]# kubectl get pods -l app=nginx

NAME READY STATUS RESTARTS AGE

nginx-deployment-574b87c764-477tb 0/1 Terminating 0 2m22s

nginx-deployment-574b87c764-zzkcz 0/1 Terminating 0 23h

zyx-nginx-5c559d5697-dhz2z 1/1 Running 0 47h

zyx-nginx-5c559d5697-hmhsm 1/1 Running 0 47h

[root@k81-master01 k8s-install]#

[root@k81-master01 k8s-install]# kubectl get pods -l app=nginx

NAME READY STATUS RESTARTS AGE

zyx-nginx-5c559d5697-dhz2z 1/1 Running 0 2d

zyx-nginx-5c559d5697-hmhsm 1/1 Running 0 2d

[root@k81-master01 k8s-install]#

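References:

Alibaba Cloud:

https://github.com/AliyunContainerService/k8s-for-docker-desktop

Chaoge (超哥):

https://www.bilibili.com/video/BV1yP4y1x7Vj

Official documentation:

https://kubernetes.io/zh/docs/tasks/run-application/run-stateless-application-deployment/

These are only my personal hands-on study notes, written around my own needs. If you spot any mistakes, corrections are very welcome. Thank you all!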

END

Jianshu: https://www.jianshu.com/u/d6960089b087

Juejin: https://juejin.cn/user/2963939079225608

WeChat official account: search WeChat for 【小儿来一壶枸杞酒泡茶】

Written by 小钟同学 [Original] [Let's learn and share together] | QQ: 308711822