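Summary: Without relying on any cloud-platform features, install the NGINX Ingress controller and MetalLB on a K8S cluster, expose Service ports for access from outside the cluster, and use MetalLB to provide a load-balanced entry point.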

The software and versions used:

K8S v1.22.1

MetalLB v0.10.2

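NGINX Ingress controller v1.12.1 (nginxinc). This version turned out to be incompatible with K8S v1.22.1, so the installation below uses the ingress-nginx controller v1.0.0 instead.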

A few points need clarifying first.

1. Bare metal vs. cloud platforms

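To expose the ports of a Deployment to clients outside the K8S cluster, you need a K8S Service resource. A Service can expose ports externally with one of two types: NodePort or LoadBalancer (their differences are covered below). On a cloud platform, configuring a LoadBalancer is very easy, because many clouds (AWS, GCP) ship their own LoadBalancer implementation. "Bare-metal" servers get no such help. The Service type you use to expose ports therefore depends on the environment.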

"Bare metal" is in quotes because this example actually runs on Alibaba Cloud ECS instances, not on true bare-metal servers.

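The Service type is not the only thing that differs: different cloud platforms may also implement the components they depend on differently. When installing and configuring on a cloud platform, always follow that platform's documentation. Applying instructions meant for a different platform can break the installation. For example, the ingress-nginx installation guide has separate instructions for each cloud provider and for bare metal.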

2. nginx-ingress vs. ingress-nginx

There are two NGINX Ingress controller projects:

- nginx-ingress, developed by the NGINX team: https://github.com/nginxinc/kubernetes-ingress
- ingress-nginx, developed by the Kubernetes project: https://github.com/kubernetes/ingress-nginx

Don't confuse them. For the differences, see "Wait, Which NGINX Ingress Controller for Kubernetes Am I Using?".

I stress this because, in my tests, nginx-ingress 1.12.1 could not be installed on K8S 1.22.1; the installation fails with errors.

First, applying common/ingress-class.yaml already fails because the IngressClass kind cannot be found:

```
[root@k8s01 deployments]# kubectl apply -f common/ingress-class.yaml
error: unable to recognize "common/ingress-class.yaml": no matches for kind "IngressClass" in version "networking.k8s.io/v1beta1"
[root@k8s01 deployments]#
[root@k8s01 deployments]# cat common/ingress-class.yaml
apiVersion: networking.k8s.io/v1beta1
kind: IngressClass
metadata:
  name: nginx
#  annotations:
#    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: nginx.org/ingress-controller
```

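Kubernetes 1.22 removed the networking.k8s.io/v1beta1 version of IngressClass and serves only networking.k8s.io/v1. After changing v1beta1 to v1, the apply does succeed: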

```
[root@k8s01 deployments]# cat common/ingress-class.yaml
apiVersion: networking.k8s.io/v1 # modify v1beta1 to v1
kind: IngressClass
metadata:
  name: nginx
#  annotations:
#    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: nginx.org/ingress-controller
[root@k8s01 deployments]# kubectl apply -f common/ingress-class.yaml
ingressclass.networking.k8s.io/nginx created
```

However, once nginx-ingress itself is deployed, the container fails at runtime with the same error:

```
[root@k8s01 deployments]# kubectl apply -f deployment/nginx-ingress.yaml
deployment.apps/nginx-ingress created
[root@k8s01 deployments]# kubectl get pods --namespace=nginx-ingress
NAME READY STATUS RESTARTS AGE
nginx-ingress-5cd5c7549d-6254f 0/1 CrashLoopBackOff 1 (10s ago) 61s
# Logs of the previous (crashed) container: kubectl logs -n <namespace> <podName> -c <containerName> --previous
[root@k8s01 deployments]# kubectl logs -n nginx-ingress nginx-ingress-5cd5c7549d-w4tgq
I0915 23:02:23.864556 1 main.go:271] Starting NGINX Ingress controller Version=1.12.1 GitCommit=6f72db6030daa9afd567fd7faf9d5fffac9c7c8f Date=2021-09-08T13:39:53Z PlusFlag=false
W0915 23:02:23.885394 1 main.go:310] The '-use-ingress-class-only' flag will be deprecated and has no effect on versions of kubernetes >= 1.18.0. Processing ONLY resources that have the 'ingressClassName' field in Ingress equal to the class.
F0915 23:02:23.886499 1 main.go:314] Error when getting IngressClass nginx: the server could not find the requested resource
[root@k8s01 deployments]# kubectl logs -n nginx-ingress nginx-ingress-5cd5c7549d-w4tgq --previous
I0915 23:02:23.864556 1 main.go:271] Starting NGINX Ingress controller Version=1.12.1 GitCommit=6f72db6030daa9afd567fd7faf9d5fffac9c7c8f Date=2021-09-08T13:39:53Z PlusFlag=false
W0915 23:02:23.885394 1 main.go:310] The '-use-ingress-class-only' flag will be deprecated and has no effect on versions of kubernetes >= 1.18.0. Processing ONLY resources that have the 'ingressClassName' field in Ingress equal to the class.
F0915 23:02:23.886499 1 main.go:314] Error when getting IngressClass nginx: the server could not find the requested resource
```
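In a CrashLoopBackOff, the decisive line is usually the one with severity `F`. The log lines above follow the klog header layout: severity letter, `MMDD` date, time, thread id, `file:line]`, then the message.

The Python sketch below assumes exactly that layout and extracts the fatal messages. The function names are illustrative. In practice you would feed it the text of `kubectl logs ... --previous`.

```python
import re
from typing import List, NamedTuple

# klog header: <severity><MMDD> <hh:mm:ss.micro> <thread id> <file:line>] <message>
KLOG_RE = re.compile(
    r"^(?P<sev>[IWEF])(?P<mmdd>\d{4}) (?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+"
    r"(?P<tid>\d+) (?P<src>[^\]]+)\] (?P<msg>.*)$"
)


class KlogLine(NamedTuple):
    severity: str  # I=info, W=warning, E=error, F=fatal
    source: str    # file:line that emitted the message
    message: str


def parse_klog(text: str) -> List[KlogLine]:
    """Parse klog-formatted lines; lines without a klog header are skipped."""
    lines = []
    for raw in text.splitlines():
        m = KLOG_RE.match(raw.strip())
        if m:
            lines.append(KlogLine(m.group("sev"), m.group("src"), m.group("msg")))
    return lines


def fatal_messages(text: str) -> List[str]:
    """Return the messages logged at severity F, typically why the process exited."""
    return [line.message for line in parse_klog(text) if line.severity == "F"]
```

For the log above, `fatal_messages` returns a single entry: `Error when getting IngressClass nginx: the server could not find the requested resource`.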

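Others on Stack Overflow have hit the same problem. The likely cause is that Kubernetes recently changed the Ingress-related APIs and the NGINX team had not yet updated nginx-ingress accordingly, so the two are incompatible. This example therefore uses the Kubernetes project's ingress-nginx.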
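Errors like the first one can be caught before `kubectl apply`: check each manifest's `apiVersion`/`kind` pair against the APIs that Kubernetes 1.22 removed.

The Python sketch below is a hypothetical helper, not an existing tool. Its table lists only the Ingress-related removals relevant here, not the full 1.22 removal list. It inspects manifests only. It cannot detect a controller binary that calls a removed API at runtime, which appears to be what happened with nginx-ingress 1.12.1.

```python
from typing import List, Optional

# Partial table (hypothetical helper data): Ingress-related API versions that
# Kubernetes 1.22 no longer serves, mapped to the version to use instead.
REMOVED_IN_1_22 = {
    ("extensions/v1beta1", "Ingress"): "networking.k8s.io/v1",
    ("networking.k8s.io/v1beta1", "Ingress"): "networking.k8s.io/v1",
    ("networking.k8s.io/v1beta1", "IngressClass"): "networking.k8s.io/v1",
}


def replacement_for(api_version: str, kind: str) -> Optional[str]:
    """Return the apiVersion to use on 1.22+, or None if the pair is not in the table."""
    return REMOVED_IN_1_22.get((api_version, kind))


def check_manifest(text: str) -> List[str]:
    """Check the top-level apiVersion/kind of a single-document YAML manifest.

    Only unindented 'apiVersion:' and 'kind:' lines are read; this is a text scan,
    not a YAML parser. Moving an Ingress to v1 also changes its spec (backend
    fields), so editing apiVersion alone is only enough for IngressClass.
    """
    fields = {}
    for line in text.splitlines():
        for key in ("apiVersion", "kind"):
            if line.startswith(key + ":"):
                fields[key] = line.split(":", 1)[1].strip()
    api_version, kind = fields.get("apiVersion", ""), fields.get("kind", "")
    new = replacement_for(api_version, kind)
    if new is None:
        return []
    return [f"{kind} uses {api_version}, which Kubernetes 1.22 no longer serves; use {new}"]
```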

3. NodePort vs. LoadBalancer

Many articles compare the two. "Understanding Kubernetes LoadBalancer vs NodePort vs Ingress" is fairly detailed, and I borrow its comparison table:

| | NodePort | LoadBalancer | Ingress |
|---|---|---|---|
| Supported by core Kubernetes | Yes | Yes | Yes |
| Works on every platform Kubernetes will deploy | Yes | Only supports a few public clouds. MetalLB project allows use on-premises. | Yes |
| Direct access to service | Yes | Yes | No |
| Proxies each service through third party (NGINX, HAProxy, etc) | No | No | Yes |
| Multiple ports per service | No | Yes | Yes |
| Multiple services per IP | Yes | No | Yes |
| Allows use of standard service ports (80, 443, etc) | No | Yes | Yes |
| Have to track individual node IPs | Yes | No | Yes, when using NodePort; No, when using LoadBalancer |

1 Prerequisites

Each ECS instance has two network IPs (both may be private IPs); MetalLB requires this. As "Bare-metal considerations" puts it: This pool of IPs must be dedicated to MetalLB's use, you can't reuse the Kubernetes node IPs or IPs handed out by a DHCP server.

A working K8S cluster with no other load balancer (for example, a LoadBalancer provided by a cloud platform).

A working K8S command-line environment, since the kubectl command is needed.

A configured DHCP service that can assign IPs and specify the gateway.

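Before installing, I recommend reading the Bare-metal considerations page: https://kubernetes.github.io/ingress-nginx/deploy/baremetal/ . Its weakness is that it only describes the end state, not how to get there. That gap is why I wrote this article: comparable articles in Chinese are few and lack detail.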

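First, here is what the finished setup should achieve. We expose a Deployment's port once with NodePort and once with LoadBalancer; both achieve the same effect. We then install MetalLB as a load balancer that provides a single external entry point.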

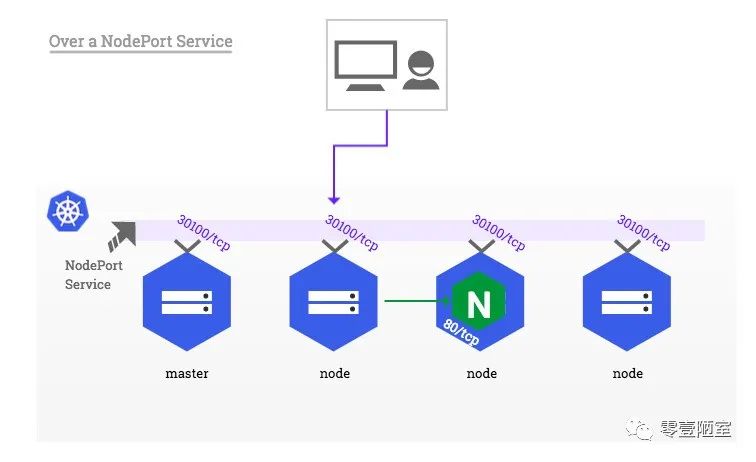

NodePort

As shown, the NodePort Service opens the same port (30100) on every node to clients outside the cluster. A client can reach the application through that port on any node.

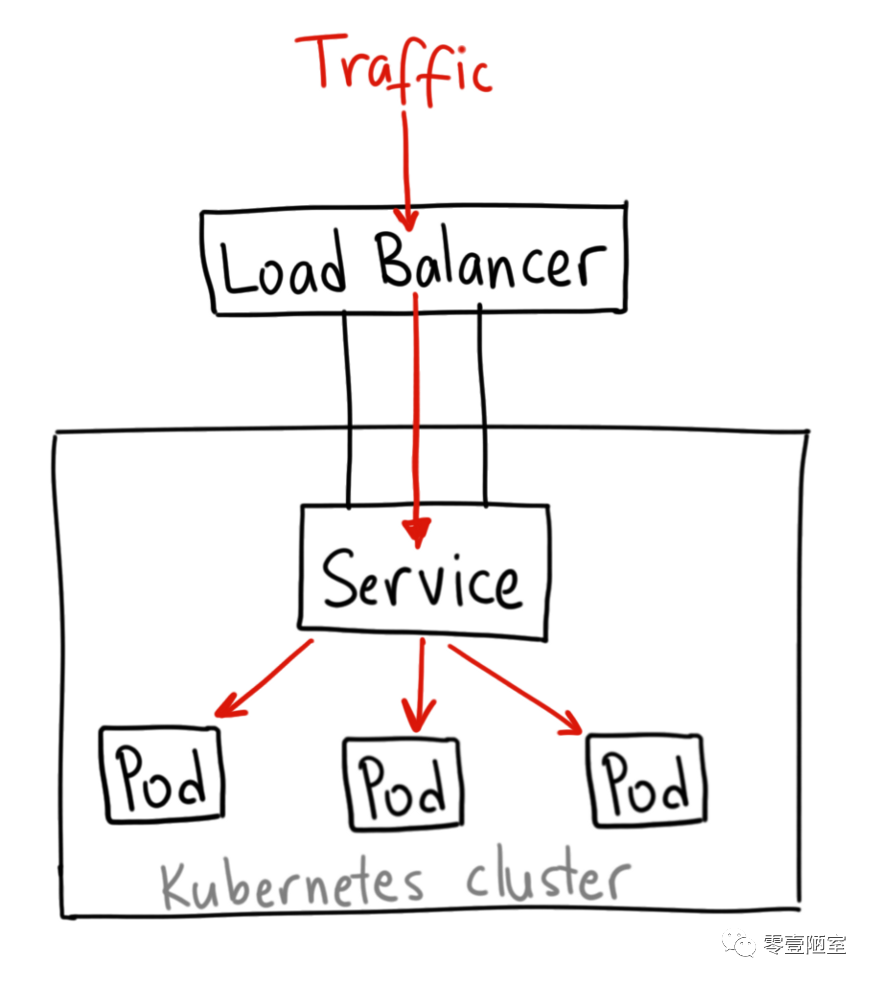

LoadBalancer

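As shown, a LoadBalancer Service likewise exposes the same port on every node, because it allocates a NodePort as well. A client can reach the application through that port on any node.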

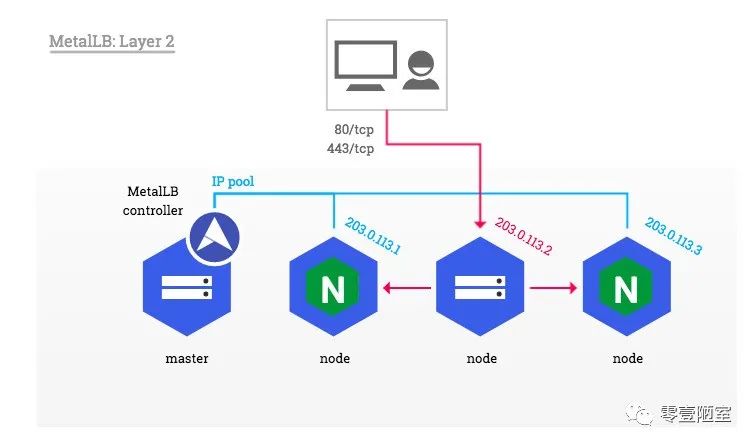

MetalLB

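MetalLB exposes a single external IP and port (203.0.113.2 in the figure) and forwards the requests it receives to the nodes. MetalLB assigns this IP from its pool and writes it into the external IP of the Ingress-Nginx Service. If the node that holds the IP shuts down for some reason, MetalLB fails over automatically to another node, for example 203.0.113.1 or 203.0.113.3 in the figure.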

2 Installation and configuration steps

```
[root@k8s01 ~]# kubectl get node -A -o wide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
k8s01 Ready control-plane,master 2d v1.22.1 172.22.139.245 <none> CentOS Linux 7 (Core) 3.10.0-1127.19.1.el7.x86_64 docker://20.10.8
k8s02 Ready worker 2d v1.22.1 172.22.139.243 <none> CentOS Linux 7 (Core) 3.10.0-1127.19.1.el7.x86_64 docker://20.10.8
k8s03 Ready worker 2d v1.22.1 172.22.139.242 <none> CentOS Linux 7 (Core) 3.10.0-1127.19.1.el7.x86_64 docker://20.10.8
k8s04 Ready worker 2d v1.22.1 172.22.139.244 <none> CentOS Linux 7 (Core) 3.10.0-1127.19.1.el7.x86_64 docker://20.10.8
```

The four-node K8S cluster is ready, so the installation can start.

2.1 Install MetalLB

```
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests/namespace.yaml
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests/metallb.yaml

[root@k8s01 ~]# kubectl get po -A
NAMESPACE NAME READY STATUS RESTARTS AGE
kube-system coredns-7f6cbbb7b8-gd4v5 1/1 Running 0 64m
kube-system coredns-7f6cbbb7b8-pzc6f 1/1 Running 0 64m
kube-system etcd-k8s01 1/1 Running 2 64m
kube-system kube-apiserver-k8s01 1/1 Running 2 64m
kube-system kube-controller-manager-k8s01 1/1 Running 2 64m
kube-system kube-flannel-ds-dgc8j 1/1 Running 0 59m
kube-system kube-flannel-ds-f96s6 1/1 Running 0 58m
kube-system kube-flannel-ds-mnb5w 1/1 Running 0 57m
kube-system kube-flannel-ds-rdvn8 1/1 Running 0 57m
kube-system kube-proxy-54mm9 1/1 Running 0 57m
kube-system kube-proxy-7k4f7 1/1 Running 0 57m
kube-system kube-proxy-bhjnr 1/1 Running 0 58m
kube-system kube-proxy-bqk6g 1/1 Running 0 64m
kube-system kube-scheduler-k8s01 1/1 Running 2 64m
metallb-system controller-6b78bff7d9-b2rc4 1/1 Running 0 47s
metallb-system speaker-7s4td 1/1 Running 0 47s
metallb-system speaker-8z8ps 1/1 Running 0 47s
metallb-system speaker-9kt2f 1/1 Running 0 47s
metallb-system speaker-t9zz4 1/1 Running 0 47s
```
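Instead of reading the listing by eye, you can script the check. Below is a Python sketch of a hypothetical helper (not part of MetalLB). It parses the text output of `kubectl get po -A` and lists MetalLB pods that are not yet Running and fully ready.

For anything beyond a quick check, `kubectl get pods -n metallb-system -o json` is a sturdier input than column parsing.

```python
from typing import List, Tuple


def not_ready(get_pods_output: str, namespace: str) -> List[Tuple[str, str, str]]:
    """Return (name, ready, status) for pods in `namespace` that are not Running and fully ready.

    Expects the plain-text layout of `kubectl get po -A`:
    NAMESPACE NAME READY STATUS RESTARTS AGE
    Completed Job pods would also be reported; that is fine for metallb-system,
    which runs only a Deployment (controller) and a DaemonSet (speaker).
    """
    problems = []
    for line in get_pods_output.splitlines():
        cols = line.split()
        # The header row and other namespaces are skipped by this check.
        if len(cols) < 4 or cols[0] != namespace:
            continue
        name, ready, status = cols[1], cols[2], cols[3]
        running, total = ready.split("/")
        if status != "Running" or running != total:
            problems.append((name, ready, status))
    return problems
```

An empty list for `metallb-system` means the controller and all four speakers are up, as in the output above.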

2.2 Install Ingress-Nginx

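The ingress-nginx images cannot be pulled from within mainland China, so I used the same workaround as before:

- pull them on my laptop through a proxy;
- copy them to the server;
- load them there.

If this approach is new to you, see my other WeChat article, "Installing a Kubernetes cluster with kubeadm on Alibaba Cloud ECS in the mainland China region: a working example".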

```
# On the laptop (through a proxy):
docker pull k8s.gcr.io/ingress-nginx/controller:v1.0.0@sha256:0851b34f69f69352bf168e6ccf30e1e20714a264ab1ecd1933e4d8c0fc3215c6

# On the server, after copying the image archives over:
docker load -i controller.v1.0.0.tar
docker load -i kube-webhook-certgen.v1.0.tar
docker tag ef43679c2cae k8s.gcr.io/ingress-nginx/controller:v1.0.0
docker tag 17e55ec30f20 k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.0
docker images
```

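Download https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.0.0/deploy/static/provider/baremetal/deploy.yaml and edit it. Delete the `@sha256:...` digest from each image reference so the references match the tags shown by `docker images`, then save the result as k8s-ingress-nginx.yaml.

The digest has to go because images brought in with `docker load` typically carry no registry digest. A digest-pinned reference would then not match the local image, and the kubelet would try to pull it from k8s.gcr.io again. The edited file: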

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx

---
# Source: ingress-nginx/templates/controller-serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
# Source: ingress-nginx/templates/controller-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
---
# Source: ingress-nginx/templates/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-service-webhook.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller-admission
  namespace: ingress-nginx
spec:
  type: ClusterIP
  ports:
    - name: https-webhook
      port: 443
      targetPort: webhook
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      appProtocol: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: k8s.gcr.io/ingress-nginx/controller:v1.0.0
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
---
# Source: ingress-nginx/templates/controller-ingressclass.yaml
# We don't support namespaced ingressClass yet
# So a ClusterRole and a ClusterRoleBinding is required
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: nginx
  namespace: ingress-nginx
spec:
  controller: k8s.io/ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml
# before changing this value, check the required kubernetes version
# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
  name: ingress-nginx-admission
webhooks:
  - name: validate.nginx.ingress.kubernetes.io
    matchPolicy: Equivalent
    rules:
      - apiGroups:
          - networking.k8s.io
        apiVersions:
          - v1
        operations:
          - CREATE
          - UPDATE
        resources:
          - ingresses
    failurePolicy: Fail
    sideEffects: None
    admissionReviewVersions:
      - v1
    clientConfig:
      service:
        namespace: ingress-nginx
        name: ingress-nginx-controller-admission
        path: /networking/v1/ingresses
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - admissionregistration.k8s.io
    resources:
      - validatingwebhookconfigurations
    verbs:
      - get
      - update
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: ingress-nginx-admission
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
rules:
  - apiGroups:
      - ''
    resources:
      - secrets
    verbs:
      - get
      - create
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: ingress-nginx-admission
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx-admission
subjects:
  - kind: ServiceAccount
    name: ingress-nginx-admission
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-create
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: pre-install,pre-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-create
      labels:
        helm.sh/chart: ingress-nginx-4.0.1
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.0.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: create
          image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.0
          imagePullPolicy: IfNotPresent
          args:
            - create
            - --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc
            - --namespace=$(POD_NAMESPACE)
            - --secret-name=ingress-nginx-admission
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
---
# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: ingress-nginx-admission-patch
  namespace: ingress-nginx
  annotations:
    helm.sh/hook: post-install,post-upgrade
    helm.sh/hook-delete-policy: before-hook-creation,hook-succeeded
  labels:
    helm.sh/chart: ingress-nginx-4.0.1
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.0.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: admission-webhook
spec:
  template:
    metadata:
      name: ingress-nginx-admission-patch
      labels:
        helm.sh/chart: ingress-nginx-4.0.1
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/version: 1.0.0
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/component: admission-webhook
    spec:
      containers:
        - name: patch
          image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.0
          imagePullPolicy: IfNotPresent
          args:
            - patch
            - --webhook-name=ingress-nginx-admission
            - --namespace=$(POD_NAMESPACE)
            - --patch-mutating=false
            - --secret-name=ingress-nginx-admission
            - --patch-failure-policy=Fail
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      restartPolicy: OnFailure
      serviceAccountName: ingress-nginx-admission
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 2000
```
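The digest removal is mechanical, so you can script it instead of editing by hand. Below is a minimal Python sketch. It assumes every digest has the form `@sha256:` followed by 64 lowercase hex characters, as in the upstream deploy.yaml. The script name is illustrative.

The same substitution as a one-liner: `sed -E 's/@sha256:[0-9a-f]{64}//' deploy.yaml > k8s-ingress-nginx.yaml`.

```python
import re
import sys

# An image reference pinned by digest looks like  name:tag@sha256:<64 hex chars>
DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}")


def strip_digests(manifest: str) -> str:
    """Remove '@sha256:...' suffixes so images resolve by tag (e.g. controller:v1.0.0)."""
    return DIGEST_RE.sub("", manifest)


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python3 strip_digests.py deploy.yaml k8s-ingress-nginx.yaml
    with open(sys.argv[1], encoding="utf-8") as src:
        text = src.read()
    with open(sys.argv[2], "w", encoding="utf-8") as dst:
        dst.write(strip_digests(text))
```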

Finally, apply the file:

```
kubectl apply -f k8s-ingress-nginx.yaml

# After it succeeds, check the result
[root@k8s01 ~]# kubectl exec -it ingress-nginx-controller-5ccb4b77c6-fdmbt -n ingress-nginx -- nginx-ingress-controller --version
-------------------------------------------------------------------------------
NGINX Ingress controller
  Release:       v1.0.0
  Build:         041eb167c7bfccb1d1653f194924b0c5fd885e10
  Repository:    https://github.com/kubernetes/ingress-nginx
  nginx version: nginx/1.20.1

-------------------------------------------------------------------------------
[root@k8s01 ~]# kubectl get po,svc -A
NAMESPACE NAME READY STATUS RESTARTS AGE
ingress-nginx pod/ingress-nginx-admission-create--1-t582c 0/1 Completed 0 10m
ingress-nginx pod/ingress-nginx-admission-patch--1-5czbj 0/1 Completed 1 10m
ingress-nginx pod/ingress-nginx-controller-5ccb4b77c6-fdmbt 1/1 Running 0 10m
kube-system pod/coredns-7f6cbbb7b8-gd4v5 1/1 Running 0 19h
kube-system pod/coredns-7f6cbbb7b8-pzc6f 1/1 Running 0 19h
kube-system pod/etcd-k8s01 1/1 Running 2 19h
kube-system pod/kube-apiserver-k8s01 1/1 Running 2 19h
kube-system pod/kube-controller-manager-k8s01 1/1 Running 2 19h
kube-system pod/kube-flannel-ds-dgc8j 1/1 Running 0 19h
kube-system pod/kube-flannel-ds-f96s6 1/1 Running 0 19h
kube-system pod/kube-flannel-ds-mnb5w 1/1 Running 0 19h
kube-system pod/kube-flannel-ds-rdvn8 1/1 Running 0 19h
kube-system pod/kube-proxy-54mm9 1/1 Running 0 19h
kube-system pod/kube-proxy-7k4f7 1/1 Running 0 19h
kube-system pod/kube-proxy-bhjnr 1/1 Running 0 19h
kube-system pod/kube-proxy-bqk6g 1/1 Running 0 19h
kube-system pod/kube-scheduler-k8s01 1/1 Running 2 19h
metallb-system pod/controller-6b78bff7d9-b2rc4 1/1 Running 0 18h
metallb-system pod/speaker-7s4td 1/1 Running 0 18h
metallb-system pod/speaker-8z8ps 1/1 Running 0 18h
metallb-system pod/speaker-9kt2f 1/1 Running 0 18h
metallb-system pod/speaker-t9zz4 1/1 Running 0 18h

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
default service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 19h
ingress-nginx service/ingress-nginx-controller NodePort 10.101.251.10 <none> 80:32013/TCP,443:30887/TCP 10m
ingress-nginx service/ingress-nginx-controller-admission ClusterIP 10.104.30.0 <none> 443/TCP 10m
kube-system service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 19h
[root@k8s01 ~]#
```

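This is the result of a successful run. Note that the EXTERNAL-IP of service/ingress-nginx-controller is `<none>`. This is expected: the Service is of type NodePort, and a NodePort Service gets no external IP unless one is configured explicitly.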
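The PORT(S) column encodes each mapping as `port:nodePort/protocol`. For ingress-nginx-controller, that means:

- HTTP (80) is reachable on every node at 32013.
- HTTPS (443) is reachable on every node at 30887.

ClusterIP Services show only `port/protocol`. Here is a small Python sketch that makes the format explicit, assuming the plain-text column as printed by `kubectl get svc`:

```python
from typing import List, Optional, Tuple


def parse_ports(ports_column: str) -> List[Tuple[int, Optional[int], str]]:
    """Parse the PORT(S) column of `kubectl get svc`.

    '80:32013/TCP' -> (80, 32013, 'TCP'); '443/TCP' (no NodePort) -> (443, None, 'TCP').
    """
    result = []
    for entry in ports_column.split(","):
        ports, proto = entry.split("/")
        if ":" in ports:
            port, node_port = ports.split(":")
            result.append((int(port), int(node_port), proto))
        else:
            result.append((int(ports), None, proto))
    return result
```

With the values above, `http://<any node IP>:32013` should reach the controller's HTTP port.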

At this point, the Ingress-nginx installation is complete.

2.3 Deploy an application and expose its port with NodePort

```yaml
apiVersion: v1
kind: Service
metadata:
  name: helloworld
  namespace: ingress-nginx
  labels:
    app: helloworld
spec:
  ports:
  - name: http
    port: 8080
    targetPort: 8080
  selector:
    app: helloworld
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: helloworld
  namespace: ingress-nginx
  labels:
    app: helloworld
spec:
  replicas: 3
  selector:
    matchLabels:
      app: helloworld
  template:
    metadata:
      labels:
        app: helloworld
    spec:
      containers:
      - name: helloworld
        image: hello-world-rest-json:v1.0
        ports:
        - containerPort: 8080
```

After applying this YAML file, the Service looks like this:

```
[root@k8s01 ~]# kubectl -n ingress-nginx describe service/helloworld
Name:                     helloworld
Namespace:                ingress-nginx
Labels:                   app=helloworld
Annotations:              <none>
Selector:                 app=helloworld
Type:                     NodePort
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.98.169.177
IPs:                      10.98.169.177
Port:                     http  8080/TCP
TargetPort:               8080/TCP
NodePort:                 http  30639/TCP
Endpoints:                10.244.2.9:8080,10.244.3.18:8080,10.244.3.19:8080
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
```

Test it on every node:

```
[root@k8s01 ~]# curl -I http://k8s01:30639
HTTP/1.1 200
Content-Type: application/json;charset=UTF-8
Content-Length: 24
Date: Thu, 16 Sep 2021 20:12:08 GMT

[root@k8s01 ~]# curl -I http://k8s02:30639
HTTP/1.1 200
Content-Type: application/json;charset=UTF-8
Content-Length: 24
Date: Thu, 16 Sep 2021 20:12:13 GMT

[root@k8s01 ~]# curl -I http://k8s03:30639
HTTP/1.1 200
Content-Type: application/json;charset=UTF-8
Content-Length: 24
Date: Thu, 16 Sep 2021 20:12:17 GMT

[root@k8s01 ~]# curl -I http://k8s04:30639
HTTP/1.1 200
Content-Type: application/json;charset=UTF-8
Content-Length: 24
Date: Thu, 16 Sep 2021 20:12:22 GMT
```
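The same four checks can be scripted so that every node is tested in one run. The sketch below uses only Python's standard library (`urllib.request`) and sends HEAD requests, as `curl -I` does. The script and function names are illustrative.

It deliberately bypasses any proxy set in the environment, on the assumption that node hostnames are reachable directly.

```python
import sys
import urllib.error
import urllib.request
from typing import Dict, List, Optional

# Opener with an empty ProxyHandler: ignore http_proxy/https_proxy settings.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def node_urls(nodes: List[str], node_port: int) -> List[str]:
    """One URL per node: a NodePort is opened on every node, so all should answer."""
    return [f"http://{node}:{node_port}" for node in nodes]


def head_status(url: str, timeout: float = 3.0) -> Optional[int]:
    """Send a HEAD request (like `curl -I`); return the HTTP status or None if unreachable."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with _OPENER.open(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered with a non-2xx status
    except OSError:
        return None  # refused, DNS failure or timeout (URLError is an OSError)


def check_nodes(nodes: List[str], node_port: int) -> Dict[str, Optional[int]]:
    return {url: head_status(url) for url in node_urls(nodes, node_port)}


if __name__ == "__main__" and len(sys.argv) > 2:
    # Example: python3 check_nodeport.py 30639 k8s01 k8s02 k8s03 k8s04
    port = int(sys.argv[1])
    for url, status in check_nodes(sys.argv[2:], port).items():
        print(url, status)
```

A result of 200 for every node corresponds to the four `curl -I` checks above.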

2.4 Expose the port with LoadBalancer

```yaml
apiVersion: v1
kind: Service
metadata:
  name: helloworld-lb
  namespace: ingress-nginx
  labels:
    app: helloworld
spec:
  ports:
  - name: http
    port: 8081
    targetPort: 8080
  selector:
    app: helloworld
  type: LoadBalancer
```

After applying this YAML file:

```
[root@k8s01 ~]# kubectl -n ingress-nginx describe service/helloworld-lb
Name:                     helloworld-lb
Namespace:                ingress-nginx
Labels:                   app=helloworld
Annotations:              <none>
Selector:                 app=helloworld
Type:                     LoadBalancer
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.96.63.182
IPs:                      10.96.63.182
Port:                     http  8081/TCP
TargetPort:               8080/TCP
NodePort:                 http  30347/TCP
Endpoints:                10.244.2.9:8080,10.244.3.18:8080,10.244.3.19:8080
Session Affinity:         None
External Traffic Policy:  Cluster
Events:                   <none>
```

Testing two of the nodes:

```
[root@k8s01 ~]# curl -I http://k8s01:30347
HTTP/1.1 200
Content-Type: application/json;charset=UTF-8
Content-Length: 24
Date: Thu, 16 Sep 2021 20:27:16 GMT

[root@k8s01 ~]# curl -I http://k8s04:30347
HTTP/1.1 200
Content-Type: application/json;charset=UTF-8
Content-Length: 24
Date: Thu, 16 Sep 2021 20:27:39 GMT
```

2.5 Create a MetalLB load balancer that exposes a single external IP

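One issue remains after the steps above. We need a load balancer that exposes a single IP and port to the outside, so that external clients are not tied to one fixed node IP, which would be a single point of failure.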

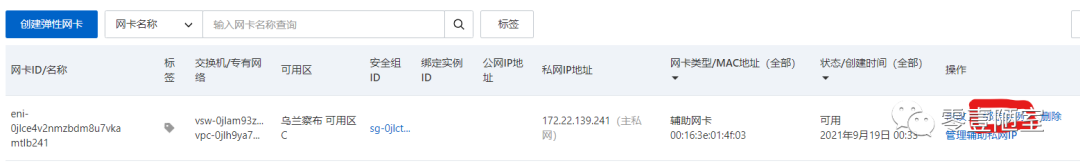

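We now use MetalLB to set up this load balancer. First, MetalLB needs an IP pool whose IPs are in the same subnet as the node IPs but differ from the IPs the nodes already use. I therefore requested a new IP through an Alibaba Cloud Elastic Network Interface (ENI) and bound it to an instance.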
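The constraint in this paragraph (same subnet as the nodes, but not an address a node already uses) can be checked with Python's standard `ipaddress` module. The sketch assumes the node interface 172.22.139.242/20 and the four node IPs from the `kubectl get node` output above.

It only knows about the addresses you pass in. The cloud console remains the authority on which IPs are actually free.

```python
import ipaddress
from typing import Iterable


def usable_pool_ip(candidate: str, node_iface: str, used: Iterable[str]) -> bool:
    """Check a candidate pool address against a node interface such as '172.22.139.242/20'.

    The candidate must lie inside the node subnet, must not be the network or
    broadcast address, and must not already be used by a node.
    """
    net = ipaddress.ip_interface(node_iface).network
    ip = ipaddress.ip_address(candidate)
    if ip not in net or ip in (net.network_address, net.broadcast_address):
        return False
    return ip not in {ipaddress.ip_address(u) for u in used}


# INTERNAL-IP values of k8s01..k8s04 from `kubectl get node -o wide`.
NODE_IPS = ["172.22.139.245", "172.22.139.243", "172.22.139.242", "172.22.139.244"]
```

For example, `usable_pool_ip("172.22.139.241", "172.22.139.242/20", NODE_IPS)` is true, while any node IP, or an address outside 172.22.128.0/20, is rejected.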

2.5.1 Create an Alibaba Cloud ENI and bind it to an ECS instance

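Ideally, I would request an ENI IP for every ECS instance and bind each one to its instance. All of these ENI IPs would then form the pool in MetalLB's mlb.yaml. To save cost and configuration work, however, this example requests only one ENI IP. It is bound to the ECS instance of the node that hosts the LoadBalancer from the previous step.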

(1) Open the "Elastic Network Interfaces" page and click "Create ENI".

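(2) Fill in the dialog that opens. Every field except "Primary Private IP" can simply be selected; keep those fields the same as for the existing ECS instances.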

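For the primary private IP, choose an address in the same subnet as the other ECS instances that is not yet assigned. The `ip address show` command shows the IP and mask of each ECS instance.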

(3) Find out which node's ECS instance currently hosts the LoadBalancer

```
[root@k8s01 ~]# kubectl -n ingress-nginx describe service/helloworld-lb
Name:                     helloworld-lb
Namespace:                ingress-nginx
Labels:                   app=helloworld
Annotations:              <none>
Selector:                 app=helloworld
Type:                     LoadBalancer
IP Family Policy:         SingleStack
IP Families:              IPv4
IP:                       10.96.38.34
IPs:                      10.96.38.34
LoadBalancer Ingress:     172.22.139.241
Port:                     http  8081/TCP
TargetPort:               8080/TCP
NodePort:                 http  32684/TCP
Endpoints:                10.244.2.9:8080,10.244.3.18:8080,10.244.3.19:8080
Session Affinity:         None
External Traffic Policy:  Cluster
Events:
  Type    Reason        Age                       From             Message
  ----    ------        ----                      ----             -------
  Normal  nodeAssigned  2m30s (x3062 over 4h17m)  metallb-speaker  announcing from node "k8s03"
```

As the output shows, it is assigned to the ECS instance k8s03.

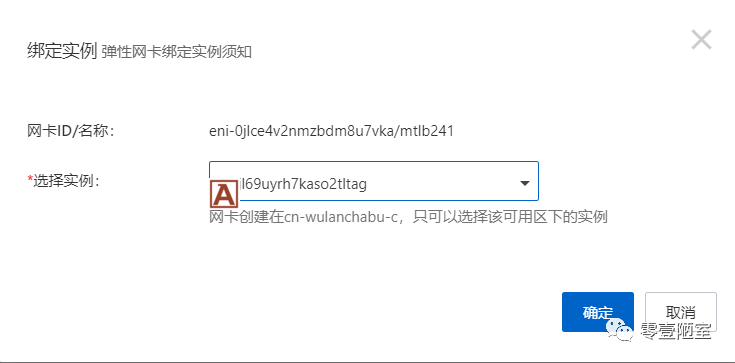

(4) Bind the ENI to the ECS instance

On the newly created ENI, click "Bind to Instance".

In the dialog that opens, select k8s03 and click OK.

(5) Restart the network service on the ECS instance

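According to Alibaba Cloud's ENI documentation, the steps above do not take effect inside the ECS instance automatically. The network service on the instance has to be restarted.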

Log in to the ECS instance and first check the current state of the network interfaces:

```
[root@k8s03 ~]# ip address show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:16:3e:01:54:e6 brd ff:ff:ff:ff:ff:ff
    inet 172.22.139.242/20 brd 172.22.143.255 scope global dynamic eth0
       valid_lft 315027811sec preferred_lft 315027811sec
    inet6 fe80::216:3eff:fe01:54e6/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:1e:da:da:a2 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether ee:cf:11:bc:a7:3f brd ff:ff:ff:ff:ff:ff
    inet 10.244.2.0/32 brd 10.244.2.0 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::eccf:11ff:febc:a73f/64 scope link
       valid_lft forever preferred_lft forever
5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether 8e:bd:5e:30:41:f8 brd ff:ff:ff:ff:ff:ff
    inet 10.244.2.1/24 brd 10.244.2.255 scope global cni0
       valid_lft forever preferred_lft forever
    inet6 fe80::8cbd:5eff:fe30:41f8/64 scope link
       valid_lft forever preferred_lft forever
13: vethd9cb73ff@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default
    link/ether 72:7c:f0:62:57:81 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::707c:f0ff:fe62:5781/64 scope link
       valid_lft forever preferred_lft forever
14: veth24f8bcc2@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default
    link/ether 86:da:f3:4a:b9:ea brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::84da:f3ff:fe4a:b9ea/64 scope link
       valid_lft forever preferred_lft forever
15: eth1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 00:16:3e:01:4f:03 brd ff:ff:ff:ff:ff:ff
[root@k8s03 ~]#
```

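According to the official documentation, eth0 is normally the NIC already in use, while eth1 is the newly attached NIC that has not taken effect yet. In the output above, eth1 is DOWN and has no inet address.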

Check the configuration of eth1:

```
[root@k8s03 multi-nic-util-0.6]# cat etc/sysconfig/network-scripts/ifcfg-eth1
DEVICE=eth1
BOOTPROTO=dhcp
ONBOOT=yes
TYPE=Ethernet
USERCTL=yes
PEERDNS=no
IPV6INIT=no
PERSISTENT_DHCLIENT=yes
HWADDR=00:16:3e:01:4f:03
DEFROUTE=no
```

Restart the network service and check the state of eth1 again:

```
[root@k8s03 multi-nic-util-0.6]# systemctl restart network
[root@k8s03 multi-nic-util-0.6]#
[root@k8s03 multi-nic-util-0.6]# ip address show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:16:3e:01:54:e6 brd ff:ff:ff:ff:ff:ff
    inet 172.22.139.242/20 brd 172.22.143.255 scope global dynamic eth0
       valid_lft 315359942sec preferred_lft 315359942sec
    inet6 fe80::216:3eff:fe01:54e6/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:1e:da:da:a2 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default
    link/ether ee:cf:11:bc:a7:3f brd ff:ff:ff:ff:ff:ff
    inet 10.244.2.0/32 brd 10.244.2.0 scope global flannel.1
       valid_lft forever preferred_lft forever
    inet6 fe80::eccf:11ff:febc:a73f/64 scope link
       valid_lft forever preferred_lft forever
5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP group default qlen 1000
    link/ether 8e:bd:5e:30:41:f8 brd ff:ff:ff:ff:ff:ff
    inet 10.244.2.1/24 brd 10.244.2.255 scope global cni0
       valid_lft forever preferred_lft forever
    inet6 fe80::8cbd:5eff:fe30:41f8/64 scope link
       valid_lft forever preferred_lft forever
13: vethd9cb73ff@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default
    link/ether 72:7c:f0:62:57:81 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::707c:f0ff:fe62:5781/64 scope link
       valid_lft forever preferred_lft forever
14: veth24f8bcc2@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default
    link/ether 86:da:f3:4a:b9:ea brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::84da:f3ff:fe4a:b9ea/64 scope link
       valid_lft forever preferred_lft forever
15: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:16:3e:01:4f:03 brd ff:ff:ff:ff:ff:ff
    inet 172.22.139.241/20 brd 172.22.143.255 scope global dynamic eth1
       valid_lft 315359943sec preferred_lft 315359943sec
    inet6 fe80::216:3eff:fe01:4f03/64 scope link
       valid_lft forever preferred_lft forever
```

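As the output shows, the elastic network interface eth1 is now bound successfully, with the address 172.22.139.241/20.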
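The eth1 address and prefix in this output (172.22.139.241/20) are what the next step copies into mlb.yaml. If you would rather extract them with a script than read them off the screen, here is a minimal Python sketch. It assumes the iproute2 `ip address show` text layout seen above; `iface_ipv4` and the trimmed `SAMPLE` are hypothetical names used only for illustration.

```python
import re
from typing import List

def iface_ipv4(ip_addr_output: str, iface: str) -> List[str]:
    """Return the IPv4 'address/prefix' entries listed under `iface`."""
    addrs = []
    current = None
    for line in ip_addr_output.splitlines():
        # Header lines look like "15: eth1: <...>" or "14: veth24f8bcc2@if3: <...>"
        header = re.match(r"^\d+:\s+([^:@\s]+)(?:@\S+)?:", line)
        if header:
            current = header.group(1)
            continue
        # "inet" (not "inet6") lines carry the IPv4 address/prefix
        m = re.match(r"^\s+inet\s+(\S+)", line)
        if m and current == iface:
            addrs.append(m.group(1))
    return addrs

# Trimmed excerpt of the output above (hypothetical sample for the demo)
SAMPLE = """\
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:16:3e:01:54:e6 brd ff:ff:ff:ff:ff:ff
    inet 172.22.139.242/20 brd 172.22.143.255 scope global dynamic eth0
    inet6 fe80::216:3eff:fe01:54e6/64 scope link
14: veth24f8bcc2@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP group default
    inet6 fe80::84da:f3ff:fe4a:b9ea/64 scope link
15: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:16:3e:01:4f:03 brd ff:ff:ff:ff:ff:ff
    inet 172.22.139.241/20 brd 172.22.143.255 scope global dynamic eth1
"""

print(iface_ipv4(SAMPLE, "eth1"))  # ['172.22.139.241/20']
```

In practice you would feed it the text captured from `ip address show` on the node; for a one-off check, reading the line by eye as above is just as good.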

2.5.2 Apply the ConfigMap

Write the IP address and mask of the elastic NIC from the previous step into mlb.yaml. The contents of mlb.yaml are as follows:

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.22.139.241/20
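A note on the /20 in this pool: in CIDR notation, 172.22.139.241/20 denotes the whole 172.22.128.0/20 block (the same block eth1 reports, with broadcast 172.22.143.255), not just the NIC's own address. Assuming MetalLB reads the entry as a standard CIDR block, the pool therefore also contains other addresses on that subnet, such as eth0's 172.22.139.242. The Python `ipaddress` sketch below only shows the arithmetic; `pool_span` and `in_pool` are hypothetical helpers, not MetalLB code.

```python
import ipaddress

def pool_span(entry: str):
    """Return (network, first address, last address, size) covered by a CIDR entry."""
    # strict=False accepts host bits being set, as in "172.22.139.241/20"
    net = ipaddress.ip_network(entry, strict=False)
    return str(net), str(net.network_address), str(net.broadcast_address), net.num_addresses

def in_pool(ip: str, entry: str) -> bool:
    """True if `ip` falls inside the block described by `entry`."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(entry, strict=False)

print(pool_span("172.22.139.241/20"))  # ('172.22.128.0/20', '172.22.128.0', '172.22.143.255', 4096)
print(pool_span("172.22.139.241/32"))  # ('172.22.139.241/32', '172.22.139.241', '172.22.139.241', 1)
print(in_pool("172.22.139.242", "172.22.139.241/20"))  # True
```

If the intent is to hand out only the elastic NIC's address, 172.22.139.241/32 describes exactly that one address.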

Apply mlb.yaml (kubectl apply -f mlb.yaml), then list the pods, services, and ConfigMaps in all namespaces:

[root@k8s01 ~]# kubectl get po,svc,configmap -A
NAMESPACE NAME READY STATUS RESTARTS AGE
ingress-nginx pod/helloworld-69fbd9b4b5-2v5l4 1/1 Running 0 2d8h
ingress-nginx pod/helloworld-69fbd9b4b5-qb4jh 1/1 Running 0 2d8h
ingress-nginx pod/helloworld-69fbd9b4b5-xhfvl 1/1 Running 0 2d8h
ingress-nginx pod/ingress-nginx-admission-create--1-t582c 0/1 Completed 0 2d22h
ingress-nginx pod/ingress-nginx-admission-patch--1-5czbj 0/1 Completed 1 2d22h
ingress-nginx pod/ingress-nginx-controller-5ccb4b77c6-fdmbt 1/1 Running 0 2d22h
kube-system pod/coredns-7f6cbbb7b8-gd4v5 1/1 Running 0 3d17h
kube-system pod/coredns-7f6cbbb7b8-pzc6f 1/1 Running 0 3d17h
kube-system pod/etcd-k8s01 1/1 Running 2 3d17h
kube-system pod/kube-apiserver-k8s01 1/1 Running 2 3d17h
kube-system pod/kube-controller-manager-k8s01 1/1 Running 2 3d17h
kube-system pod/kube-flannel-ds-dgc8j 1/1 Running 0 3d17h
kube-system pod/kube-flannel-ds-f96s6 1/1 Running 0 3d17h
kube-system pod/kube-flannel-ds-mnb5w 1/1 Running 0 3d17h
kube-system pod/kube-flannel-ds-rdvn8 1/1 Running 0 3d17h
kube-system pod/kube-proxy-54mm9 1/1 Running 0 3d17h
kube-system pod/kube-proxy-7k4f7 1/1 Running 0 3d17h
kube-system pod/kube-proxy-bhjnr 1/1 Running 0 3d17h
kube-system pod/kube-proxy-bqk6g 1/1 Running 0 3d17h
kube-system pod/kube-scheduler-k8s01 1/1 Running 2 3d17h
metallb-system pod/controller-6b78bff7d9-mqv9k 1/1 Running 0 145m
metallb-system pod/speaker-79bq5 1/1 Running 0 145m
metallb-system pod/speaker-99fdz 1/1 Running 0 145m
metallb-system pod/speaker-h42vn 1/1 Running 0 145m
metallb-system pod/speaker-rmjxr 1/1 Running 0 145m

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
default service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 3d17h
ingress-nginx service/helloworld NodePort 10.98.169.177 <none> 8080:30639/TCP 2d8h
ingress-nginx service/helloworld-lb LoadBalancer 10.96.38.34 172.22.139.241 8081:32684/TCP 144m
ingress-nginx service/ingress-nginx-controller NodePort 10.101.251.10 <none> 80:32013/TCP,443:30887/TCP 2d22h
ingress-nginx service/ingress-nginx-controller-admission ClusterIP 10.104.30.0 <none> 443/TCP 2d22h
kube-system service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP,9153/TCP 3d17h

NAMESPACE NAME DATA AGE
default configmap/kube-root-ca.crt 1 3d17h
ingress-nginx configmap/ingress-controller-leader 0 2d22h
ingress-nginx configmap/ingress-nginx-controller 0 2d22h
ingress-nginx configmap/kube-root-ca.crt 1 2d22h
kube-node-lease configmap/kube-root-ca.crt 1 3d17h
kube-public configmap/cluster-info 1 3d17h
kube-public configmap/kube-root-ca.crt 1 3d17h
kube-system configmap/coredns 1 3d17h
kube-system configmap/extension-apiserver-authentication 6 3d17h
kube-system configmap/kube-flannel-cfg 2 3d17h
kube-system configmap/kube-proxy 2 3d17h
kube-system configmap/kube-root-ca.crt 1 3d17h
kube-system configmap/kubeadm-config 1 3d17h
kube-system configmap/kubelet-config-1.22 1 3d17h
metallb-system configmap/config 1 143m
metallb-system configmap/kube-root-ca.crt 1 149m
nginx-ingress configmap/kube-root-ca.crt 1 3d16h
nginx-ingress configmap/nginx-config 0 3d16h
[root@k8s01 ~]#
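To check a LoadBalancer service's external address from a script instead of reading this table, one option is the Service status field `status.loadBalancer.ingress`, which is where the EXTERNAL-IP column of a LoadBalancer service gets its address; an empty value shows up as `<pending>`. The minimal Python sketch below assumes the JSON from `kubectl get svc -A -o json` has been captured into a string. The sample is trimmed by hand to the fields used, and `example-pending` is a hypothetical service, not part of this cluster.

```python
import json

def lb_external_ips(svc_list_json: str) -> dict:
    """Map 'namespace/name' of each LoadBalancer Service to its assigned ingress IPs."""
    result = {}
    for svc in json.loads(svc_list_json).get("items", []):
        if svc.get("spec", {}).get("type") != "LoadBalancer":
            continue  # NodePort / ClusterIP services have no load-balancer address
        key = f'{svc["metadata"]["namespace"]}/{svc["metadata"]["name"]}'
        ingress = svc.get("status", {}).get("loadBalancer", {}).get("ingress") or []
        # An empty list is what kubectl prints as EXTERNAL-IP <pending>
        result[key] = [entry["ip"] for entry in ingress if "ip" in entry]
    return result

sample = json.dumps({
    "kind": "List",
    "items": [
        {"metadata": {"namespace": "ingress-nginx", "name": "helloworld"},
         "spec": {"type": "NodePort"}, "status": {"loadBalancer": {}}},
        {"metadata": {"namespace": "ingress-nginx", "name": "helloworld-lb"},
         "spec": {"type": "LoadBalancer"},
         "status": {"loadBalancer": {"ingress": [{"ip": "172.22.139.241"}]}}},
        # hypothetical service that has not been given an address yet
        {"metadata": {"namespace": "default", "name": "example-pending"},
         "spec": {"type": "LoadBalancer"}, "status": {"loadBalancer": {}}},
    ],
})

print(lb_external_ips(sample))
# {'ingress-nginx/helloworld-lb': ['172.22.139.241'], 'default/example-pending': []}
```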

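Note the EXTERNAL-IP of helloworld-lb: instead of staying at <pending>, it now shows 172.22.139.241, which means MetalLB has allocated an address to the LoadBalancer service. Still, let's test it: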

[root@k8s01 ~]# curl http://172.22.139.241:8081
{"message":"HelloWorld"}[root@k8s01 ~]#
[root@k8s01 ~]#
[root@k8s01 ~]# curl http://172.22.139.241:32684
^C

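The request to 172.22.139.241:8081 succeeds, so the service is reachable. The request to 172.22.139.241:32684 hangs, which is normal here: 172.22.139.241 is an address from MetalLB's IP pool, assigned to the service as its external IP, and clients reach it on the service port 8081 rather than on the NodePort 32684.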
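The ^C above is curl waiting on a connection that never completes. When repeating these two checks from a script, a timeout makes the failing case return promptly instead. A minimal Python sketch using only the standard library; `probe` is a hypothetical helper, and the URLs are the two tested above.

```python
import urllib.error
import urllib.request

# ProxyHandler({}) ignores http_proxy/https_proxy so the request goes straight to the target IP
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))

def probe(url, timeout=3.0):
    """GET url; return ("ok", body), ("http_error", status) or ("unreachable", reason)."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return "ok", resp.read().decode()
    except urllib.error.HTTPError as exc:
        # The server answered, just not with a 2xx status
        return "http_error", str(exc.code)
    except OSError as exc:
        # URLError (refused, no route) and socket timeouts are all OSError subclasses
        return "unreachable", str(exc)

if __name__ == "__main__":
    for url in ("http://172.22.139.241:8081", "http://172.22.139.241:32684"):
        print(url, probe(url))
```

The timeout bounds both the connection attempt and each read, so the hanging port is reported as "unreachable" after a few seconds instead of needing ^C.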

For how MetalLB works, see "METALLB IN LAYER 2 MODE" and "USAGE". I think both are worth studying in detail, so they will be the subject of my next WeChat official account article.

3 FAQ

3.1 How to remove MetalLB

To remove MetalLB, delete its manifests in the reverse order of installation:

kubectl delete -f https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests/metallb.yaml
kubectl delete -f https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests/namespace.yaml

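To reinstall the load balancer, first delete the LoadBalancer service, then reinstall MetalLB (including the mlb.yaml ConfigMap, which is removed together with the metallb-system namespace), and finally apply the LoadBalancer service again: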

[root@k8s01 ~]# kubectl delete -f helloworld-lb.yaml
service "helloworld-lb" deleted
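The reinstall order described above can be sketched as an ordered list of kubectl commands. This is a minimal Python sketch, not the author's script: it assumes kubectl is on PATH, that helloworld-lb.yaml and mlb.yaml are in the working directory, and that the original install consisted only of the manifests and ConfigMap shown in this article (add any other install steps you performed). `reinstall_plan` and `run` are hypothetical names, and `run` only prints the commands unless `dry_run=False`.

```python
import subprocess

METALLB = "https://raw.githubusercontent.com/metallb/metallb/v0.10.2/manifests"

def reinstall_plan():
    """kubectl argv lists: delete the LB service, reinstall MetalLB, re-apply config and service."""
    return [
        ["kubectl", "delete", "-f", "helloworld-lb.yaml"],
        ["kubectl", "delete", "-f", f"{METALLB}/metallb.yaml"],
        ["kubectl", "delete", "-f", f"{METALLB}/namespace.yaml"],
        ["kubectl", "apply", "-f", f"{METALLB}/namespace.yaml"],
        ["kubectl", "apply", "-f", f"{METALLB}/metallb.yaml"],
        # the config ConfigMap lived in metallb-system, so it must be applied again
        ["kubectl", "apply", "-f", "mlb.yaml"],
        ["kubectl", "apply", "-f", "helloworld-lb.yaml"],
    ]

def run(plan, dry_run=True):
    """Print each command; execute it (stopping at the first failure) only when dry_run is False."""
    for argv in plan:
        print("+", " ".join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)

if __name__ == "__main__":
    run(reinstall_plan())  # dry run: prints the commands only
```

kubectl delete waits for the deleted resources to be gone by default, so re-applying namespace.yaml right afterwards does not race the namespace deletion.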

References

"Kubernetes Metal LB for On-Prem / BareMetal Cluster in 10 minutes"

"Setup an NGINX Ingress Controller on Kubernetes"

"Install and configure MetalLB as a load balancer for Kubernetes"