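########################Environment Overview#######################

This guide builds a highly available K8S cluster on CentOS 7.6, consisting of 3 master nodes and 3 worker nodes. Because the internal network has no direct internet access, a proxy has to be configured.

If your hosts can reach the internet directly, skip the proxy-related steps.

The hardware configuration and planning information are as follows: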

| Role | IP | Hostname | CPU | Memory (GB) | OS |
|---|---|---|---|---|---|
| master | 192.168.12.191 | master1 | 4 | 8 | CentOS 7.6 |
| master | 192.168.12.192 | master2 | 4 | 8 | CentOS 7.6 |
| master | 192.168.12.193 | master3 | 4 | 8 | CentOS 7.6 |
| worker | 192.168.12.194 | node1 | 4 | 8 | CentOS 7.6 |
| worker | 192.168.12.195 | node2 | 4 | 8 | CentOS 7.6 |
| worker | 192.168.12.196 | node3 | 4 | 8 | CentOS 7.6 |

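#####################Basic System Configuration#####################

Install the basic packages:

yum -y install vim git lrzsz wget net-tools bash-completion

------------------------------------------------------------------------------

Set up hostname resolution and the internet proxy. Ansible is used for this step.

Contents of the inventory file (hosts):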

cat > hosts << EOF

[master]

192.168.12.191 hostname=master1

192.168.12.192 hostname=master2

192.168.12.193 hostname=master3

[node]

192.168.12.194 hostname=node1

192.168.12.195 hostname=node2

192.168.12.196 hostname=node3

[all:vars]

ansible_ssh_user=monitor

ansible_become=true

EOF

------------------------------------------------------------------------------

The Ansible playbook below sets the hostnames, the /etc/hosts entries, and the internal proxy. Doing this with Ansible makes the configuration easy to repeat later. To get the cluster up quickly, the remaining steps still use plain shell commands; this part will be extended later:

cat > set_etc_hosts.yml << EOF
---
- hosts: all
  gather_facts: true
  tasks:
    - name: modify hostname
      raw: "echo {{ hostname | quote }} > /etc/hostname"

    - name: apply hostname to the running system
      shell: hostname {{ hostname | quote }}

    - name: register hosts
      set_fact:
        hosts: "{{ (groups.master + groups.node) | unique | list }}"

    - debug:
        msg: "{{ hosts }}"

    - name: set /etc/hosts all ip and hostname
      lineinfile:
        dest: /etc/hosts
        # regexp: '^{{ hostvars[item].hosts }}'  # use this to delete existing records; the matching state is absent
        state: present
        line: "{{ item }} {{ hostvars[item].hostname }}"
      with_items:
        - "{{ hosts }}"

    - name: set http proxy in /etc/profile
      lineinfile:
        dest: /etc/profile
        regexp: '^export http_proxy='
        state: present
        line: "export http_proxy=192.168.13.x:xxxxx"

    - name: set https proxy in /etc/profile
      lineinfile:
        dest: /etc/profile
        regexp: '^export https_proxy='
        state: present
        line: "export https_proxy=192.168.13.x:xxxxx"

    - name: set http proxy in /etc/bashrc
      lineinfile:
        dest: /etc/bashrc
        regexp: '^export http_proxy='
        state: present
        line: "export http_proxy=192.168.13.x:xxxxx"

    - name: set https proxy in /etc/bashrc
      lineinfile:
        dest: /etc/bashrc
        regexp: '^export https_proxy='
        state: present
        line: "export https_proxy=192.168.13.x:xxxxx"
EOF

------------------------------------------------------------------------------

Run the playbook against the inventory file created above:

ansible-playbook -i hosts set_etc_hosts.yml

------------------------------------------------------------------------------

Stop the firewall and disable SELinux:

systemctl stop firewalld
systemctl disable firewalld
setenforce 0
sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
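The sed substitution only changes a line that currently reads SELINUX=enforcing. As a minimal sketch, the same edit can be tried on a scratch copy before touching the real file. The function name `disable_selinux_in_file` and the sample file content are assumptions made for illustration; they are not part of the original setup.

```shell
# Hypothetical helper: apply the SELINUX=enforcing -> disabled substitution
# to the file given as $1 and print the resulting SELINUX= line.
disable_selinux_in_file() {
    _file="$1"
    # Anchored to the start of the line so commented-out examples are left alone.
    sed 's/^SELINUX=enforcing/SELINUX=disabled/' "$_file" > "${_file}.new" &&
        mv "${_file}.new" "$_file"
    grep '^SELINUX=' "$_file"
}

# Dry run against a scratch file instead of /etc/selinux/config.
tmp_cfg="$(mktemp)"
printf '# SELINUX= can take one of these three values\nSELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$tmp_cfg"
selinux_line="$(disable_selinux_in_file "$tmp_cfg")"
echo "$selinux_line"
rm -f "$tmp_cfg"
```

Note that `setenforce 0` only switches the running system to permissive mode; the change in the config file takes effect at the next boot.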

------------------------------------------------------------------------------

Configure bridge networking:

CNI plugins forward packets at layer 2 and depend on the iptables FORWARD rules, so the following kernel settings are required:

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

Apply the settings:

modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf
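Before moving on, it can help to confirm that the drop-in file really contains all three keys. The following is a sketch only: `check_k8s_sysctl` is a hypothetical helper, and the scratch file stands in for /etc/sysctl.d/k8s.conf.

```shell
# Hypothetical helper: succeed only if every required key is set to 1
# in the sysctl drop-in passed as $1.
check_k8s_sysctl() {
    _conf="$1"
    for _key in net.bridge.bridge-nf-call-ip6tables \
                net.bridge.bridge-nf-call-iptables \
                net.ipv4.ip_forward; do
        # Allow optional spaces around "=".
        if ! grep -Eq "^[[:space:]]*${_key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$_conf"; then
            echo "missing or not 1: ${_key}"
            return 1
        fi
    done
    echo "ok"
}

# Demonstration on a scratch file with the same content as above.
sample_conf="$(mktemp)"
cat > "$sample_conf" << 'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
sysctl_check="$(check_k8s_sysctl "$sample_conf")"
echo "$sysctl_check"
rm -f "$sample_conf"
```

On a real node the call would be `check_k8s_sysctl /etc/sysctl.d/k8s.conf`.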

------------------------------------------------------------------------------

Although newer K8S releases support swap, it is rarely needed, so turn it off and remove the swap entry from /etc/fstab:

swapoff -a
yes | cp /etc/fstab /etc/fstab_bak
cat /etc/fstab_bak | grep -v swap > /etc/fstab
rm -rf /etc/fstab_bak

echo vm.swappiness = 0 >> /etc/sysctl.d/k8s.conf
sysctl -p /etc/sysctl.d/k8s.conf
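`grep -v swap` drops every line that mentions swap, including comments. A more conservative variant, sketched here as an alternative rather than the method used above, comments the active swap entries out so they can be restored later. The function name `comment_out_swap` is hypothetical and the sample fstab content is made up for the demonstration.

```shell
# Hypothetical helper: read fstab content on stdin and prefix every active
# swap entry with "#", leaving all other lines untouched.
comment_out_swap() {
    sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/'
}

fstab_sample='/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0'

fstab_result="$(printf '%s\n' "$fstab_sample" | comment_out_swap)"
printf '%s\n' "$fstab_result"
```

On a real host this could be applied as `comment_out_swap < /etc/fstab > /etc/fstab.new`, followed by a review of the new file before it replaces the original.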

------------------------------------------------------------------------------

Set up IPVS:

Install the packages:

yum -y install ipvsadm ipset

Create the module-loading script:

cat > /etc/sysconfig/modules/ipvs.modules << EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

Run it:

bash /etc/sysconfig/modules/ipvs.modules
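To confirm the modules were actually loaded, the output of `lsmod` can be compared with the list in the script. This is a sketch under assumptions: `missing_ipvs_modules` is a hypothetical helper and the sample `lsmod` output is invented for the demonstration.

```shell
# Hypothetical helper: read `lsmod` output on stdin and print the names of
# the modules from the script above that are not loaded.
missing_ipvs_modules() {
    # Skip the header line and keep only the module name column.
    _loaded="$(awk 'NR > 1 { print $1 }')"
    for _mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        # -x matches the whole line, so ip_vs does not match ip_vs_rr.
        printf '%s\n' "$_loaded" | grep -qx "$_mod" || echo "$_mod"
    done
}

lsmod_sample='Module                  Size  Used by
ip_vs_sh               12688  0
ip_vs_rr               12600  0
ip_vs                 145497  4 ip_vs_rr,ip_vs_sh
nf_conntrack_ipv4      15053  2'

ipvs_missing="$(printf '%s\n' "$lsmod_sample" | missing_ipvs_modules)"
echo "missing: ${ipvs_missing:-none}"
```

On a node the check would be `lsmod | missing_ipvs_modules`; empty output means all five modules are present.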

#####################Load Balancer Configuration######################

Install haproxy and keepalived on the master nodes:

yum -y install haproxy keepalived

------------------------------------------------------------------------------

Write the haproxy configuration file:

cat > /etc/haproxy/haproxy.cfg << EOF

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode tcp

log global

option tcplog

option dontlognull

option redispatch

retries 3

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout check 10s

maxconn 3000

frontend k8s_https *:8443

mode tcp

maxconn 2000

default_backend https_sri

backend https_sri

balance roundrobin

server master1-api 192.168.12.191:6443 check inter 10000 fall 2 rise 2 weight 1

server master2-api 192.168.12.192:6443 check inter 10000 fall 2 rise 2 weight 1

server master3-api 192.168.12.193:6443 check inter 10000 fall 2 rise 2 weight 1

EOF

------------------------------------------------------------------------------

Create the keepalived configuration file on master1:

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
}

vrrp_instance VI_1 {
    state MASTER
    interface ens160
    virtual_router_id 80
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        192.168.12.181/24
    }
    track_script {
        check_haproxy
    }
}
EOF

------------------------------------------------------------------------------

Create the keepalived configuration file on master2:

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens160
    virtual_router_id 80
    priority 50
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        192.168.12.181/24
    }
    track_script {
        check_haproxy
    }
}
EOF

------------------------------------------------------------------------------

Create the keepalived configuration file on master3:

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    router_id LVS_DEVEL
}

vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
    interval 3
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens160
    virtual_router_id 80
    priority 20
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        192.168.12.181/24
    }
    track_script {
        check_haproxy
    }
}
EOF
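The three keepalived files differ only in the `state` and `priority` lines. As a sketch, a small shell function can render the `vrrp_instance` block from those two values. `render_vrrp_instance` is a hypothetical name; the interface, router ID, password and VIP are copied from the configuration above; keepalived accepts MASTER or BACKUP as the state keyword. Listing `check_haproxy` under `track_script` is an assumption about the intended wiring between the instance and the check script.

```shell
# Hypothetical helper: print a vrrp_instance block for the given
# state ($1: MASTER or BACKUP) and priority ($2).
render_vrrp_instance() {
    _state="$1"
    _priority="$2"
    case "$_state" in
        MASTER|BACKUP) ;;
        *) echo "state must be MASTER or BACKUP" >&2; return 1 ;;
    esac
    cat << EOF
vrrp_instance VI_1 {
    state ${_state}
    interface ens160
    virtual_router_id 80
    priority ${_priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 111111
    }
    virtual_ipaddress {
        192.168.12.181/24
    }
    track_script {
        check_haproxy
    }
}
EOF
}

# Example: the block for master2.
vrrp_master2="$(render_vrrp_instance BACKUP 50)"
printf '%s\n' "$vrrp_master2"
```

Generating the block from two parameters keeps the shared values in one place, which makes it harder for the three files to drift apart.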

------------------------------------------------------------------------------

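Create the haproxy check script on all master nodes. The heredoc delimiter is quoted so that the command substitutions are written to the file instead of being executed while the file is created:

cat > /etc/keepalived/check_haproxy.sh << 'EOF'
#!/bin/bash
# Start haproxy if it is not running. If it still is not running after
# 3 seconds, stop keepalived so the VIP moves to another master.
if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then
    systemctl start haproxy
    sleep 3
    if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then
        systemctl stop keepalived
    fi
fi
EOF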

------------------------------------------------------------------------------

Make the script executable:

chmod +x /etc/keepalived/check_haproxy.sh

------------------------------------------------------------------------------

Start haproxy and keepalived on all master nodes and enable them at boot:

systemctl start haproxy keepalived
systemctl enable haproxy keepalived
systemctl status haproxy keepalived

######################K8S Cluster Configuration######################

Install docker on all nodes.

Install the required packages:

yum -y install yum-utils device-mapper-persistent-data lvm2

Add the repo:

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install docker CE:

yum -y install docker-ce

Start docker and enable it at boot:

systemctl start docker
systemctl enable docker
systemctl status docker

------------------------------------------------------------------------------

Configure the docker registry mirror and cgroup driver

Set the docker cgroup driver to systemd:

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": ["https://7y88q662.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

Restart docker and verify the change:

systemctl restart docker
docker info | grep Cgroup

------------------------------------------------------------------------------

Install kubelet, kubeadm and kubectl

Add the K8S yum repository:

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

On the master nodes, install:

yum -y install kubelet-1.20.5-0 kubeadm-1.20.5-0 kubectl-1.20.5-0

On the worker nodes, install:

yum -y install kubelet-1.20.5-0 kubeadm-1.20.5-0

On all nodes, start kubelet and enable it at boot:

systemctl start kubelet
systemctl enable kubelet

------------------------------------------------------------------------------

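Download the K8S images

For reasons best left unsaid, the default Google image registry cannot be reached directly, so the images are pulled from Aliyun instead.

On the master nodes, list the required images:

kubeadm config images list --kubernetes-version 1.20.5

Generate the pull commands and run them:

kubeadm config images list --kubernetes-version 1.20.5 | sed -e 's/^/docker pull /g' -e 's#k8s.gcr.io#registry.aliyuncs.com/google_containers#g' | sh -x

If the registry name cannot be resolved, check the DNS configuration.

Depending on the environment, docker itself may need a proxy, which can be configured as follows: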

mkdir -p /etc/systemd/system/docker.service.d/

cat > /etc/systemd/system/docker.service.d/http-proxy.conf <<EOF

[Service]

Environment="HTTP_PROXY=http://192.168.13.x:xxxxx/"

Environment="HTTPS_PROXY=http://192.168.13.x:xxxxx/"

Environment="NO_PROXY=localhost,127.0.0.1"

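EOF

Reload systemd and restart docker so the proxy settings take effect:

systemctl daemon-reload
systemctl restart docker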

------------------------------------------------------------------------------

Retag the images with their original k8s.gcr.io names:

docker images | grep registry.aliyuncs.com/google_containers | awk '{print "docker tag ",$1":"$2,$1":"$2}' | sed -e 's#registry.aliyuncs.com/google_containers#k8s.gcr.io#2' | sh -x

Remove the Aliyun-tagged images, which are no longer needed:

docker images | grep registry.aliyuncs.com/google_containers | awk '{print "docker rmi ", $1":"$2}' | sh -x
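The pull, retag and cleanup steps all apply the same prefix swap between k8s.gcr.io and the Aliyun mirror. As a sketch, the three commands per image can be generated in one pass and reviewed before anything is executed. `mirror_plan` is a hypothetical helper, and the two image names in the demonstration are sample input rather than the full list for this release.

```shell
# Hypothetical helper: read image references (one per line, as printed by
# `kubeadm config images list`) and print the pull, tag and rmi commands
# that route each image through the mirror.
mirror_plan() {
    _mirror="registry.aliyuncs.com/google_containers"
    while IFS= read -r _image; do
        [ -n "$_image" ] || continue
        # Swap only the registry prefix; image name and tag stay unchanged.
        _mirrored="${_mirror}/${_image#k8s.gcr.io/}"
        echo "docker pull ${_mirrored}"
        echo "docker tag ${_mirrored} ${_image}"
        echo "docker rmi ${_mirrored}"
    done
}

plan="$(printf 'k8s.gcr.io/kube-apiserver:v1.20.5\nk8s.gcr.io/pause:3.2\n' | mirror_plan)"
printf '%s\n' "$plan"
```

Once the printed commands look right, they could be executed with `kubeadm config images list --kubernetes-version 1.20.5 | mirror_plan | sh -x`.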

------------------------------------------------------------------------------

初始化集群:

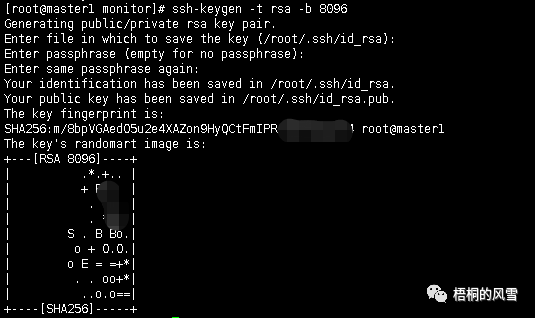

在master1上生成秘钥,以便免密登录master2和master3:

ssh-keygen -t rsa -b 8096

在master1上执行,复制秘钥到master2,master3上:

for host in master2 master3; do ssh-copy-id -i ~/.ssh/id_rsa.pub $host; done

如果未开启root密码登录可以暂时关闭,复制后再关闭:

sed -i 's/^#PermitRootLogin yes/PermitRootLogin yes/g' /etc/ssh/sshd_config

systemcl restart sshd

sed -i 's/^PermitRootLogin yes/#PermitRootLogin prohibit-password/g' /etc/ssh/sshd_config

systemctl restart sshd

------------------------------------------------------------------------------

Create the cluster configuration file on master1:

cat > /etc/kubernetes/kubeadm-config.yaml << EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.5
controlPlaneEndpoint: "192.168.12.181:6443"
apiServer:
  certSANs:
    - 192.168.12.191
    - 192.168.12.192
    - 192.168.12.193
    - 192.168.12.181
networking:
  podSubnet: 10.200.0.0/16
EOF

------------------------------------------------------------------------------

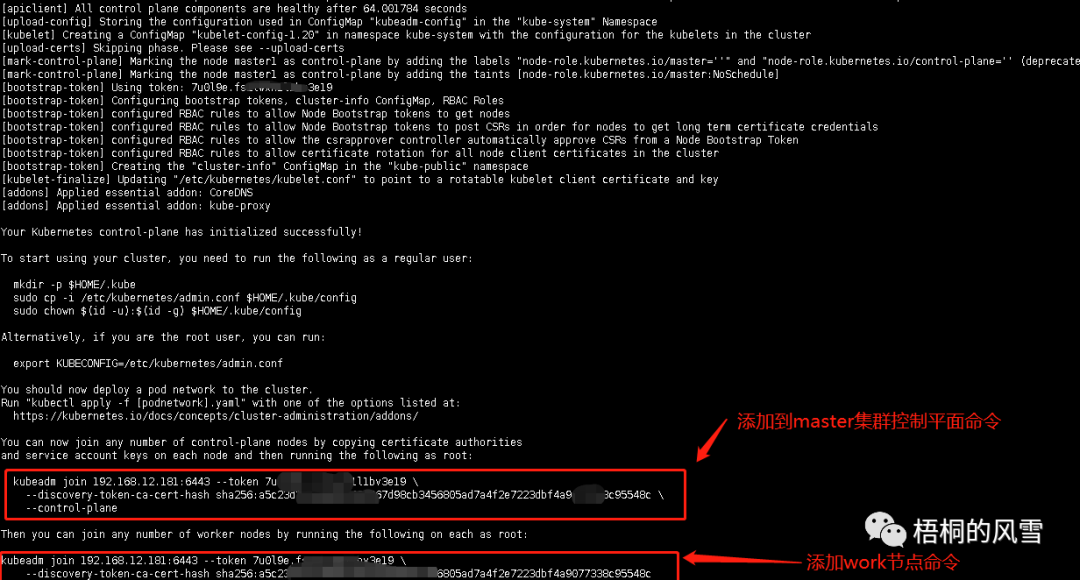

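Initialize the cluster on master1:

kubeadm init --config /etc/kubernetes/kubeadm-config.yaml

The commands in the red boxes in the screenshot above will be needed later.

If initialization fails, reset, fix the underlying problem, and then initialize again:

kubeadm reset

In my case the following error was reported, and the kubelet service complained that the node was not synced:

[kubelet-check] The HTTP call equal to 'curl -sSL http://localhost:10248/healthz' failed with error: Get "http://localhost:10248/healthz": dial tcp [::1]:10248: connect: connection refused.

The fix was to remove the proxy settings from the kubelet drop-in file below (the lines originally highlighted in red):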

/usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

------------------------------------------------------------------------------

Copy the certificates from master1 to master2 and master3:

for host in master2 master3; do

ssh $host "mkdir -p /etc/kubernetes/pki/etcd; mkdir -p ~/.kube/"

scp /etc/kubernetes/pki/ca.crt $host:/etc/kubernetes/pki/ca.crt

scp /etc/kubernetes/pki/ca.key $host:/etc/kubernetes/pki/ca.key

scp /etc/kubernetes/pki/sa.key $host:/etc/kubernetes/pki/sa.key

scp /etc/kubernetes/pki/sa.pub $host:/etc/kubernetes/pki/sa.pub

scp /etc/kubernetes/pki/front-proxy-ca.crt $host:/etc/kubernetes/pki/front-proxy-ca.crt

scp /etc/kubernetes/pki/front-proxy-ca.key $host:/etc/kubernetes/pki/front-proxy-ca.key

scp /etc/kubernetes/pki/etcd/ca.crt $host:/etc/kubernetes/pki/etcd/ca.crt

scp /etc/kubernetes/pki/etcd/ca.key $host:/etc/kubernetes/pki/etcd/ca.key

scp /etc/kubernetes/admin.conf $host:/etc/kubernetes/admin.conf

scp /etc/kubernetes/admin.conf $host:~/.kube/config

done

------------------------------------------------------------------------------

Add the remaining master nodes and the worker nodes:

Join master2 and master3 to the cluster by running the command from red box 1 in the screenshot above on each of them:

kubeadm join 192.168.12.181:6443 --token 7u0l9e.fs2lwxh1l1bv3e19 \
    --discovery-token-ca-cert-hash sha256:a5c23d3cbe4681b43e267d98cb3456805ad7a4f2e7223dbf4a9077338c95548c \
    --control-plane

Join the worker nodes by running the command from red box 2 in the screenshot above on node1, node2 and node3:

kubeadm join 192.168.12.181:6443 --token 7u0l9e.fs2lwxh1l1bv3e19 \
    --discovery-token-ca-cert-hash sha256:a5c23d3cbe4681b43e267d98cb3456805ad7a4f2e7223dbf4a9077338c95548c
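The token and hash shown above belong to this particular cluster, and bootstrap tokens expire after 24 hours by default; running `kubeadm token create --print-join-command` on a master prints a fresh worker join command. The two join commands differ only in the trailing `--control-plane` flag, which the following sketch makes explicit. `build_join_command` is a hypothetical helper, and the token and hash in the example are placeholders, not real credentials.

```shell
# Hypothetical helper: assemble a kubeadm join command.
#   $1 endpoint (VIP:port), $2 token, $3 CA cert hash,
#   $4 optional role: "control-plane" for an additional master.
build_join_command() {
    _cmd="kubeadm join $1 --token $2 --discovery-token-ca-cert-hash $3"
    if [ "${4:-}" = "control-plane" ]; then
        _cmd="${_cmd} --control-plane"
    fi
    echo "$_cmd"
}

# Placeholder values for illustration only.
worker_join="$(build_join_command 192.168.12.181:6443 abcdef.0123456789abcdef sha256:0000)"
master_join="$(build_join_command 192.168.12.181:6443 abcdef.0123456789abcdef sha256:0000 control-plane)"
echo "$worker_join"
echo "$master_join"
```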

------------------------------------------------------------------------------

On all master nodes, disable the --port=0 argument in /etc/kubernetes/manifests/kube-scheduler.yaml and /etc/kubernetes/manifests/kube-controller-manager.yaml by commenting it out:

sed -i 's/- --port=0/#- --port=0/g' /etc/kubernetes/manifests/kube-scheduler.yaml
sed -i 's/- --port=0/#- --port=0/g' /etc/kubernetes/manifests/kube-controller-manager.yaml

Configure kubectl on the master nodes:

mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

------------------------------------------------------------------------------

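Install the calico network component from any master node

Download the calico manifest:

https://docs.projectcalico.org/manifests/calico.yaml

Edit the file and add the following entries to the env list of the calico-node container. The CIDR must match the podSubnet in the kubeadm configuration:

- name: CALICO_IPV4POOL_CIDR
  value: "10.200.0.0/16"
- name: IP_AUTODETECTION_METHOD
  value: "interface=eth.*|en.*"

Deploy the component:

kubectl apply -f calico.yaml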

------------------------------------------------------------------------------

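Enable IPVS mode for kube-proxy

On any node where kubectl is configured, change mode to ipvs in the kube-proxy ConfigMap:

kubectl edit configmap kube-proxy -n kube-system

On a master node, restart the kube-proxy pods on all nodes by deleting them:

kubectl get pod -n kube-system | grep kube-proxy | awk '{system("kubectl delete pod "$1" -n kube-system")}'

Verify, replacing kube-proxy-qtnsr with the name of one of your own kube-proxy pods:

kubectl logs kube-proxy-qtnsr -n kube-system | grep ipvs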
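Besides the pod logs, the ConfigMap itself can be checked to confirm that the edit was saved. This is a sketch under assumptions: `kube_proxy_mode` is a hypothetical helper, and the sample text imitates a few lines of the kube-proxy configuration.

```shell
# Hypothetical helper: read the kube-proxy configuration on stdin and print
# the value of its "mode" field (empty means the default mode).
kube_proxy_mode() {
    sed -n 's/^[[:space:]]*mode:[[:space:]]*"\{0,1\}\([a-z]*\)"\{0,1\}[[:space:]]*$/\1/p' | head -n 1
}

proxy_conf_sample='    kind: KubeProxyConfiguration
    metricsBindAddress: ""
    mode: "ipvs"
    nodePortAddresses: null'

proxy_mode="$(printf '%s\n' "$proxy_conf_sample" | kube_proxy_mode)"
echo "kube-proxy mode: ${proxy_mode:-default}"
```

Against the live cluster the input would come from `kubectl get configmap kube-proxy -n kube-system -o yaml | kube_proxy_mode`.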

########################功能验证#######################

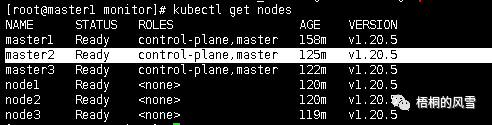

查看集群node信息:

kubectl get nodes

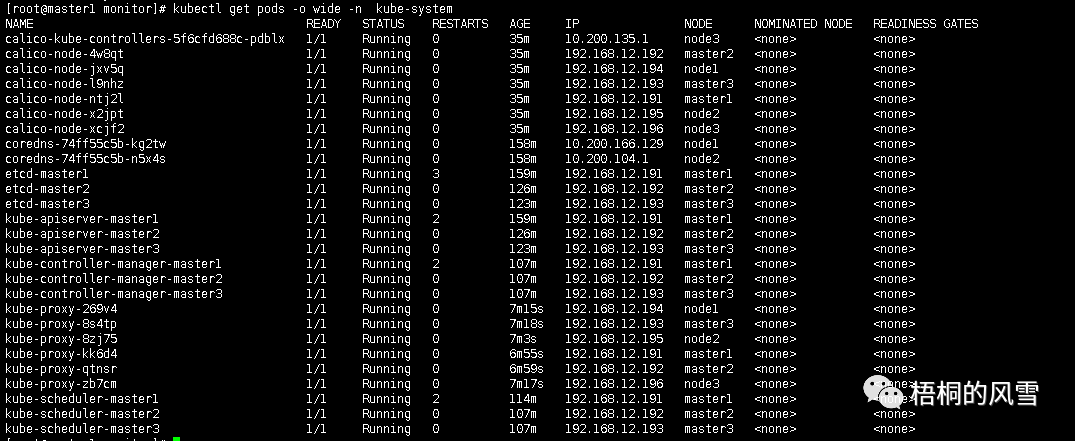

kubectl get pods -o wide -n kube-system
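With six nodes it is easy to overlook one that has not become Ready. As a sketch, the node list can be filtered down to the nodes that still need attention. `not_ready_nodes` is a hypothetical helper, and the sample lines imitate `kubectl get nodes --no-headers` output with invented ages.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

nodes_sample='master1   Ready      control-plane,master   10m   v1.20.5
master2   Ready      control-plane,master   8m    v1.20.5
node1     NotReady   <none>                 2m    v1.20.5'

pending="$(printf '%s\n' "$nodes_sample" | not_ready_nodes)"
echo "not ready: ${pending:-none}"
```

On the cluster this would be `kubectl get nodes --no-headers | not_ready_nodes`; empty output means every node reports Ready.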

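At this point the highly available K8S cluster is up and running. Starting with the next post, I will cover how to use the related components.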