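The previous seven articles gave an initial introduction to Kubernetes resource objects. This article walks through setting up a Kubernetes cluster environment.

1. Kubernetes cluster overview and planning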

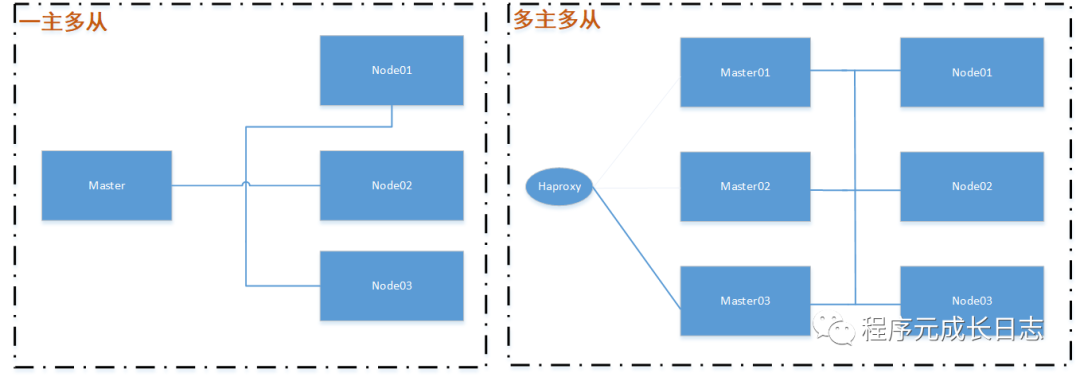

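Kubernetes clusters fall into two categories: one master with multiple nodes, and multiple masters with multiple nodes.

One master, multiple nodes: one master node and several worker nodes. It is simple to set up, but the master is a single point of failure, so it is suited to test environments.

Multiple masters, multiple nodes: several master nodes and several worker nodes. It takes more work to set up but is more robust, so it is suited to production environments.

Kubernetes can be installed in several ways:

kubeadm: a tool for quickly bootstrapping a Kubernetes cluster; installation is simple and convenient.

minikube: a tool for quickly setting up a single-node Kubernetes; installation is simple and it is suited to beginners.

Binary packages: download the binary package of each component from the official site and install them one by one. The process is complex, but it is the most effective way to understand the Kubernetes components.

Building from source: download the source, compile it into binaries, and install them one by one. This suits scenarios where the Kubernetes source needs to be modified.

This article uses kubeadm to build a Kubernetes cluster with one master and two nodes.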

2.1 Server planning

| Node name | IP | Role | OS version |
| --- | --- | --- | --- |
| k8s-master | 192.168.226.100 | Master | CentOS Linux release 7.9.2009 |
| k8s-node01 | 192.168.226.101 | Node | CentOS Linux release 7.9.2009 |
| k8s-node02 | 192.168.226.102 | Node | CentOS Linux release 7.9.2009 |
| harbor | 192.168.226.103 | Harbor | CentOS Linux release 7.9.2009 |

2.2 Initial system setup

(1) Check the operating system version. CentOS 7 or later is recommended.

    [root@master01 ~]# cat /etc/redhat-release
    CentOS Linux release 7.9.2009 (Core)

(2) Configure hostname resolution (enterprises usually use an internal DNS server instead)

    [root@node01 ~]# vim /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.226.100 master01
    192.168.226.101 node01
    192.168.226.102 node02
    192.168.226.103 harbor
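Because the same entries have to be added on every machine, editing the file by hand is easy to get wrong. The following is a minimal sketch of an idempotent helper; the function name `add_host_entry` is hypothetical and not part of the original setup.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless NAME already appears as a hostname
# on a non-comment line of FILE.
add_host_entry() {
    local file="$1" ip="$2" name="$3"
    # Fields 2..NF of a hosts line are hostnames; match NAME exactly.
    if awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (as root, on every node):
#   add_host_entry /etc/hosts 192.168.226.100 master01
#   add_host_entry /etc/hosts 192.168.226.101 node01
```

Running it twice with the same arguments leaves a single entry, so it is safe to include in a provisioning script.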

(3) Configure time synchronization

    # Start chronyd
    [root@master01 ~]# systemctl start chronyd
    # Enable chronyd at boot
    [root@master01 ~]# systemctl enable chronyd

(4) Disable the iptables and firewalld services (be careful doing this in production)

    # Stop the firewall
    [root@node01 ~]# systemctl stop firewalld
    # Prevent the firewall from starting at boot
    [root@node01 ~]# systemctl disable firewalld
    Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.
    Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.
    # If the iptables service is not installed, this step can be skipped
    [root@node01 ~]# systemctl stop iptables
    Failed to stop iptables.service: Unit iptables.service not loaded.
    [root@node01 ~]# systemctl disable iptables
    Failed to execute operation: No such file or directory

(5) Disable SELinux

    [root@master01 ~]# vim /etc/sysconfig/selinux
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #     enforcing - SELinux security policy is enforced.
    #     permissive - SELinux prints warnings instead of enforcing.
    #     disabled - No SELinux policy is loaded.
    #SELINUX=enforcing
    SELINUX=disabled
    # SELINUXTYPE= can take one of three values:
    #     targeted - Targeted processes are protected,
    #     minimum - Modification of targeted policy. Only selected processes are protected.
    #     mls - Multi Level Security protection.
    SELINUXTYPE=targeted

Save the file and exit. The change takes effect only after a reboot.

Before the reboot:

    [root@master01 ~]# getenforce
    Enforcing

After the reboot:

    [root@master01 ~]# getenforce
    Disabled

(6) Disable the swap partition

The swap partition is the virtual memory partition: once physical memory is used up, disk space is used as if it were memory.

An enabled swap device has a very negative effect on system performance, so Kubernetes requires swap to be disabled on every node. kubeadm likewise expects swap to be turned off.

Method 1: comment out the swap entry in /etc/fstab.

    [root@master01 ~]# vim /etc/fstab
    #
    # /etc/fstab
    # Created by anaconda on Thu Jul 29 17:29:34 2021
    #
    # Accessible filesystems, by reference, are maintained under '/dev/disk'
    # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
    #
    /dev/mapper/centos-root / xfs defaults 0 0
    UUID=582672d0-6257-40cd-ad81-3e6a030a111b /boot xfs defaults 0 0
    #/dev/mapper/centos-swap swap swap defaults 0 0

After saving the file, reboot for the change to take effect.

Method 2: run swapoff -a directly. This is temporary; after a reboot, swap is enabled again according to /etc/fstab.

    [root@node01 ~]# swapoff -a

Verify that swap is off:

    [root@master01 ~]# free -m
                  total        used        free      shared  buff/cache   available
    Mem:           1828         404        1035          13         388        1266
    Swap:             0           0           0
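The fstab edit can also be scripted so that it is identical on every node. This is a minimal sketch, assuming swap entries are the lines whose third field (the filesystem type) is `swap`; the function name `comment_out_swap` is hypothetical.

```shell
#!/usr/bin/env bash
# comment_out_swap FSTAB  (hypothetical helper)
# Prefixes every active (uncommented) swap entry in FSTAB with '#'.
# Lines that are already commented, and all non-swap entries, are left alone.
comment_out_swap() {
    local fstab="$1" tmp
    tmp="$(mktemp)" || return 1
    # In fstab, the third whitespace-separated field is the filesystem type.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
    local rc=$?
    rm -f "$tmp"
    return "$rc"
}

# Example (as root): persist the change, then turn swap off immediately.
#   comment_out_swap /etc/fstab && swapoff -a
```

Combining both methods this way avoids the reboot: `swapoff -a` takes effect now, and the fstab change keeps swap off afterwards.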

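(7) Adjust Linux kernel parameters

    [root@node01 ~]# vim /etc/sysctl.d/kubernetes.conf
    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1
    net.ipv4.ip_forward=1
    net.ipv4.tcp_tw_recycle=0
    net.ipv4.neigh.default.gc_thresh1=1024
    net.ipv4.neigh.default.gc_thresh2=2048
    net.ipv4.neigh.default.gc_thresh3=4096
    vm.swappiness=0
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_instances=8192
    fs.inotify.max_user_watches=1048576
    fs.file-max=52706963
    fs.nr_open=52706963
    net.ipv6.conf.all.disable_ipv6=1
    net.netfilter.nf_conntrack_max=2310720
    # Load the bridge netfilter module first; the net.bridge.* keys do not exist until it is loaded
    [root@master01 sysctl.d]# modprobe br_netfilter
    # Check that the bridge netfilter module is loaded
    [root@master01 sysctl.d]# lsmod | grep br_netfilter
    br_netfilter 22256 0
    bridge 151336 1 br_netfilter
    # Apply the settings. A bare "sysctl -p" reads only /etc/sysctl.conf, so name the file explicitly
    [root@master01 sysctl.d]# sysctl -p /etc/sysctl.d/kubernetes.conf

Note that net.ipv4.tcp_tw_recycle was removed in Linux 4.12, so sysctl reports that key as missing once the kernel has been upgraded in step (8); the line can be deleted on newer kernels.

Troubleshooting:

    # Running sysctl -p reports:
    [root@localhost ~]# sysctl -p
    sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory
    sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory
    # Fix: load the module, then run sysctl again
    [root@localhost ~]# modprobe br_netfilter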
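A module loaded with `modprobe` does not survive a reboot, so the same error can come back later. The sketch below checks whether the module is loaded and persists it through systemd's `/etc/modules-load.d/` mechanism. The function names and the file name `br_netfilter.conf` are assumptions chosen for illustration.

```shell
#!/usr/bin/env bash
# module_loaded NAME [MODULES_FILE]  (hypothetical helper)
# Succeeds when NAME is listed in MODULES_FILE (default: /proc/modules).
module_loaded() {
    local name="$1" file="${2:-/proc/modules}"
    awk -v m="$name" '$1 == m { found = 1 } END { exit !found }' "$file"
}

# ensure_br_netfilter  (hypothetical helper; run as root)
# Loads br_netfilter if needed, makes the load persistent, applies the sysctls.
ensure_br_netfilter() {
    module_loaded br_netfilter || modprobe br_netfilter || return 1
    # systemd-modules-load reads *.conf files here at boot; the file name is an assumption.
    echo br_netfilter > /etc/modules-load.d/br_netfilter.conf
    sysctl -p /etc/sysctl.d/kubernetes.conf
}
```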

(8) Upgrade to the latest stable kernel

CentOS 7 ships a 3.10.x kernel with some bugs that can make Docker and Kubernetes unstable. Before installing Kubernetes, upgrade the kernel to the latest stable version; this article uses 5.4.141.

    # Check the current kernel
    [root@harbor ~]# uname -r
    3.10.0-1160.el7.x86_64

Kernel upgrade steps:

    # Step 1: enable the ELRepo repository
    [root@master01 /]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
    [root@master01 /]# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
    # Step 2: list the available kernel packages
    [root@master01 ~]# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available
    已加载插件:fastestmirror, langpacks
    Loading mirror speeds from cached hostfile
     * elrepo-kernel: mirrors.tuna.tsinghua.edu.cn
    可安装的软件包
    elrepo-release.noarch                 7.0-5.el7.elrepo        elrepo-kernel
    kernel-lt-devel.x86_64                5.4.141-1.el7.elrepo    elrepo-kernel
    kernel-lt-doc.noarch                  5.4.141-1.el7.elrepo    elrepo-kernel
    kernel-lt-headers.x86_64              5.4.141-1.el7.elrepo    elrepo-kernel
    kernel-lt-tools.x86_64                5.4.141-1.el7.elrepo    elrepo-kernel
    kernel-lt-tools-libs.x86_64           5.4.141-1.el7.elrepo    elrepo-kernel
    kernel-lt-tools-libs-devel.x86_64     5.4.141-1.el7.elrepo    elrepo-kernel
    kernel-ml.x86_64                      5.13.11-1.el7.elrepo    elrepo-kernel
    kernel-ml-devel.x86_64                5.13.11-1.el7.elrepo    elrepo-kernel
    kernel-ml-doc.noarch                  5.13.11-1.el7.elrepo    elrepo-kernel
    kernel-ml-headers.x86_64              5.13.11-1.el7.elrepo    elrepo-kernel
    kernel-ml-tools.x86_64                5.13.11-1.el7.elrepo    elrepo-kernel
    kernel-ml-tools-libs.x86_64           5.13.11-1.el7.elrepo    elrepo-kernel
    kernel-ml-tools-libs-devel.x86_64     5.13.11-1.el7.elrepo    elrepo-kernel
    perf.x86_64                           5.13.11-1.el7.elrepo    elrepo-kernel
    python-perf.x86_64                    5.13.11-1.el7.elrepo    elrepo-kernel
    # Two kernel versions are available for this upgrade: 5.4.141 and 5.13.11
    # Step 3: install the new kernel
    [root@master01 /]# yum --enablerepo=elrepo-kernel install kernel-lt
    # --enablerepo enables the named repository. elrepo is enabled by default; elrepo-kernel is used here instead.
    # Step 4: list all kernels on the system
    [root@master01 ~]# awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg
    0 : CentOS Linux (5.4.141-1.el7.elrepo.x86_64) 7 (Core)
    1 : CentOS Linux (3.10.0-1160.el7.x86_64) 7 (Core)
    2 : CentOS Linux (0-rescue-d6070eb94632405a8d7946becd78c86b) 7 (Core)
    # Step 5: boot from the new kernel by default (pick the entry from the list above)
    [root@master01 ~]# grub2-set-default 0
    # 0 is the index found in the previous step; use the index of whichever kernel should be the default
    # Step 6: generate the grub configuration file and reboot
    [root@master01 ~]# grub2-mkconfig -o /boot/grub2/grub.cfg
    Generating grub configuration file ...
    Found linux image: /boot/vmlinuz-5.4.141-1.el7.elrepo.x86_64
    Found initrd image: /boot/initramfs-5.4.141-1.el7.elrepo.x86_64.img
    Found linux image: /boot/vmlinuz-3.10.0-1160.el7.x86_64
    Found initrd image: /boot/initramfs-3.10.0-1160.el7.x86_64.img
    Found linux image: /boot/vmlinuz-0-rescue-d6070eb94632405a8d7946becd78c86b
    Found initrd image: /boot/initramfs-0-rescue-d6070eb94632405a8d7946becd78c86b.img
    done
    [root@master01 ~]# reboot
    # Step 7: check the running kernel
    [root@master01 ~]# uname -r
    5.4.141-1.el7.elrepo.x86_64

Kernel upgrade troubleshooting:

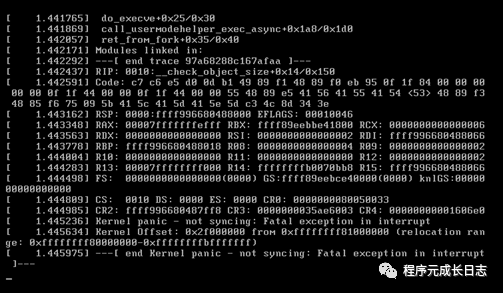

If CentOS 7 was installed in VMware, booting after the kernel upgrade can fail with the following error:

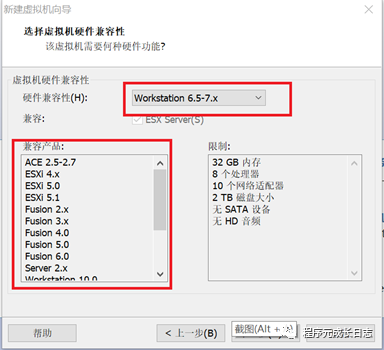

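Cause: a virtual machine hardware compatibility problem. When creating the virtual machine, choose the custom installation and select a higher hardware compatibility version.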

(9) Configure IPVS

By default, kube-proxy runs in iptables mode in a cluster deployed with kubeadm. kube-proxy is mainly responsible for routing traffic from Services (svc) to Pods, and IPVS mode can greatly improve its efficiency.

    # Install ipset and ipvsadm
    [root@master01 ~]# yum install ipset ipvsadm -y
    # Write the modules that need to be loaded into a script
    cat > /etc/sysconfig/modules/ipvs.modules <<EOF
    #!/bin/bash
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    #modprobe -- nf_conntrack_ipv4   # for kernels older than 4.19
    modprobe -- nf_conntrack          # for kernel 4.19 and later
    modprobe -- ip_tables
    modprobe -- ip_set
    modprobe -- xt_set
    modprobe -- ipt_set
    modprobe -- ipt_rpfilter
    modprobe -- ipt_REJECT
    modprobe -- ipip
    EOF
    # Make the script executable
    [root@master01 modules]# chmod 755 /etc/sysconfig/modules/ipvs.modules
    # Run the script
    [root@master01 modules]# bash /etc/sysconfig/modules/ipvs.modules
    # In kernel 4.19, nf_conntrack_ipv4 was renamed to nf_conntrack. On 4.19 and later, loading the old
    # name may fail with "modprobe: FATAL: Module nf_conntrack_ipv4 not ..."; adjust the script as noted above.
    # Check that the modules are loaded (kernels older than 4.19)
    [root@node01 ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4
    # Check that the modules are loaded (kernel 4.19 and later)
    [root@master01 ~]# lsmod | grep -e ip_vs -e nf_conntrack -e ip
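Instead of commenting one of the two conntrack lines in or out by hand, the choice can be derived from the running kernel. The following is a rough sketch based on the 4.19 cutoff stated above; `conntrack_module` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# conntrack_module KERNEL_RELEASE  (hypothetical helper)
# Prints the conntrack module to load for a `uname -r` style string:
# nf_conntrack for kernel 4.19 and later, nf_conntrack_ipv4 for older kernels.
conntrack_module() {
    local release="$1" major minor
    major="${release%%.*}"     # "5.4.141-1.el7..." -> "5"
    minor="${release#*.}"      # "5.4.141-1.el7..." -> "4.141-1.el7..."
    minor="${minor%%.*}"       # -> "4"
    if [ "$major" -gt 4 ] || { [ "$major" -eq 4 ] && [ "$minor" -ge 19 ]; }; then
        echo nf_conntrack
    else
        echo nf_conntrack_ipv4
    fi
}

# Example (as root):
#   modprobe -- "$(conntrack_module "$(uname -r)")"
```

With this, the same module script works both before and after the kernel upgrade from step (8).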

2.3 Install Docker

(1) Remove old versions

    [root@master01 yum.repos.d]# yum remove docker \
        docker-client \
        docker-client-latest \
        docker-common \
        docker-latest \
        docker-latest-logrotate \
        docker-logrotate \
        docker-engine

(2) Switch the package source to the Alibaba Cloud mirror

    [root@master01 yum.repos.d]# yum-config-manager \
        --add-repo \
        http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    已加载插件:fastestmirror, langpacks
    adding repo from: http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    grabbing file http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo to /etc/yum.repos.d/docker-ce.repo
    repo saved to /etc/yum.repos.d/docker-ce.repo

(3) List the Docker versions available from the current mirror

    [root@master01 yum.repos.d]# yum list docker-ce --showduplicates

(4) Install a specific version of docker-ce

    [root@master01 yum.repos.d]# yum install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7 -y

(5) Configure the Alibaba Cloud registry mirror and the cgroup driver

By default Docker uses cgroupfs as its cgroup driver, while Kubernetes recommends systemd in place of cgroupfs.

    mkdir -p /etc/docker
    tee /etc/docker/daemon.json <<-'EOF'
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "registry-mirrors": ["https://4cnob6ep.mirror.aliyuncs.com"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      }
    }
    EOF
    systemctl daemon-reload
    systemctl restart docker

(6) Enable Docker at boot

    [root@master01 docker]# systemctl enable docker

(7) Check the Docker version and detailed information

    [root@master01 docker]# docker version
    [root@master01 docker]# docker info

3. Building the Kubernetes cluster

3.1 Install the Kubernetes components

(1) The Kubernetes package source is hosted overseas and downloads are slow, so switch to the Alibaba Cloud mirror

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
           http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

(2) Install kubeadm, kubelet, and kubectl

    [root@master01 ~]# yum install --setopt=obsoletes=0 -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0

(3) Configure the kubelet cgroup driver

    [root@master01 yum.repos.d]# vim /etc/sysconfig/kubelet
    #KUBELET_EXTRA_ARGS=
    KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"
    KUBE_PROXY_MODE=ipvs
    KUBELET_EXTRA_ARGS="--fail-swap-on=false"

(4) Restart kubelet and enable it at boot

    [root@master01 yum.repos.d]# systemctl daemon-reload
    [root@master01 yum.repos.d]# systemctl restart kubelet
    [root@master01 yum.repos.d]# systemctl enable kubelet
    Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

3.2 Enable kubectl command completion

    [root@master01 ~]# yum install -y bash-completion
    [root@master01 ~]# echo 'source /usr/share/bash-completion/bash_completion' >> /etc/profile
    [root@master01 ~]# source /etc/profile
    [root@master01 ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc
    [root@master01 ~]# source ~/.bashrc

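3.3 Modify the default kubeadm configuration

Both the kubeadm control-plane initialization command (init) and the node join command (join) accept a configuration file that overrides the default parameter values. kubeadm stores the configuration in the cluster as a ConfigMap, which simplifies later queries and upgrades. The kubeadm config subcommand provides this functionality:

kubeadm config upload from-file: upload a configuration file to the cluster to generate the ConfigMap.

kubeadm config upload from-flags: generate the ConfigMap from command-line flags.

kubeadm config view: show the configuration values currently in the cluster.

kubeadm config print init-defaults: print the default configuration for kubeadm init.

kubeadm config print join-defaults: print the default configuration for kubeadm join.

kubeadm config migrate: convert a configuration between old and new versions.

kubeadm config images list: list the required images.

kubeadm config images pull: pull the images to the local machine.

kubeadm config print init-defaults prints the default configuration used for cluster initialization. Create the default kubeadm-config.yaml file with the following command:

    [root@master01 kubernetes]# kubeadm config print init-defaults > kubeadm-config.yaml
    W0820 20:34:03.392106 3417 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]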

(1) Change the defaults in kubeadm-config.yaml:

    localAPIEndpoint:
      advertiseAddress: 192.168.226.100   # change to the master node's IP
    imageRepository: k8s.gcr.io           # registry the images are pulled from
    kubernetesVersion: v1.18.0            # Kubernetes version

(2) Add the following settings:

    networking:
      podSubnet: 10.244.0.0/16
    # Declares the Pod network range. This setting is required. The flannel network plugin
    # installed later provides the overlay network, and this range is its default Pod network.
    # If the two do not match, the Pods have to be fixed one by one afterwards.

    # Append the following at the end of the file to switch the default proxy mode to ipvs
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    featureGates:
      SupportIPVSProxyMode: true
    mode: ipvs

(3) The modified kubeadm configuration looks like this (note: this configuration is for a one-master, multi-node cluster):

    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.226.100
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      name: master01
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: k8s.gcr.io
    kind: ClusterConfiguration
    kubernetesVersion: v1.18.0
    networking:
      dnsDomain: cluster.local
      podSubnet: 10.244.0.0/16
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    featureGates:
      SupportIPVSProxyMode: true
    mode: ipvs

(4) Sections of kubeadm-config.yaml:

InitConfiguration: initialization settings, such as the bootstrap token and the apiserver address.

ClusterConfiguration: settings for master components such as apiserver, etcd, network, scheduler, and controller-manager.

KubeletConfiguration: settings for the kubelet component.

KubeProxyConfiguration: settings for the kube-proxy component.

    # Print a sample KubeletConfiguration
    kubeadm config print init-defaults --component-configs KubeletConfiguration
    # Print a sample KubeletConfiguration and write it to a file
    kubeadm config print init-defaults --component-configs KubeletConfiguration > kubeadm-kubeletconfig.yaml
    # Print a sample KubeProxyConfiguration
    kubeadm config print init-defaults --component-configs KubeProxyConfiguration
    # Print a sample KubeProxyConfiguration and write it to a file
    kubeadm config print init-defaults --component-configs KubeProxyConfiguration > kubeadm-kubeproxyconfig.yaml

3.4 Initialize the master node

(1) Prepare the images

The Google registry is not reachable from mainland China (unless a proxy is used), so the images the cluster needs have to be downloaded from a domestic registry and then retagged as k8s.gcr.io. The steps are as follows.

Step 1: list the required images:

    [root@master01 ~]# kubeadm config images list
    W0820 21:31:08.731408 7659 version.go:102] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
    W0820 21:31:08.731739 7659 version.go:103] falling back to the local client version: v1.18.0
    W0820 21:31:08.732395 7659 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
    k8s.gcr.io/kube-apiserver:v1.18.0
    k8s.gcr.io/kube-controller-manager:v1.18.0
    k8s.gcr.io/kube-scheduler:v1.18.0
    k8s.gcr.io/kube-proxy:v1.18.0
    k8s.gcr.io/pause:3.2
    k8s.gcr.io/etcd:3.4.3-0
    k8s.gcr.io/coredns:1.6.7

Step 2: write a script that downloads the images:

    # Write the image list file
    [root@master01 ~]# vim k8s-images.txt
    kube-apiserver:v1.18.0
    kube-controller-manager:v1.18.0
    kube-scheduler:v1.18.0
    kube-proxy:v1.18.0
    pause:3.2
    etcd:3.4.3-0
    coredns:1.6.7
    # Write the image download script
    [root@master01 kubernetes]# vim k8s-images.sh
    for image in `cat k8s-images.txt`
    do
      echo downloading ---- $image
      docker pull gotok8s/$image
      docker tag gotok8s/$image k8s.gcr.io/$image
      docker rmi gotok8s/$image
    done
    # Grant execute permission
    [root@master01 ~]# chmod 777 k8s-images.sh
    # Run the script to download the images from the domestic registry
    [root@master01 kubernetes]# ./k8s-images.sh
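The loop above keeps going even if a pull fails, which can leave a node with an incomplete image set. One possible variant, sketched below, first prints the docker commands so they can be reviewed, and is then piped to `bash -e` so that the run stops at the first failure. The function name `print_mirror_commands` is hypothetical; `gotok8s` and `k8s.gcr.io` are the registries used in the script above.

```shell
#!/usr/bin/env bash
# print_mirror_commands MIRROR TARGET  (hypothetical helper)
# Reads image names (name:tag) from stdin and prints the docker commands
# that pull each image from MIRROR, retag it under TARGET, and drop the
# mirror tag. Blank lines and lines starting with '#' are skipped.
print_mirror_commands() {
    local mirror="$1" target="$2" image
    # The "|| [ -n ... ]" part handles a last line without a trailing newline.
    while IFS= read -r image || [ -n "$image" ]; do
        case "$image" in
            ''|'#'*) continue ;;
        esac
        printf 'docker pull %s/%s\n' "$mirror" "$image"
        printf 'docker tag %s/%s %s/%s\n' "$mirror" "$image" "$target" "$image"
        printf 'docker rmi %s/%s\n' "$mirror" "$image"
    done
}

# Review the commands first:
#   print_mirror_commands gotok8s k8s.gcr.io < k8s-images.txt
# Then execute them, stopping at the first error:
#   print_mirror_commands gotok8s k8s.gcr.io < k8s-images.txt | bash -e
```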

(2) Initialize the master node

Initialize from the specified YAML file with automatic certificate distribution (supported since 1.13), and write all output to kubeadm-init.log:

    [root@master01 kubernetes]# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log
    # Post-initialization steps, as prompted in the console log
    [root@master01 kubernetes]# mkdir -p $HOME/.kube
    [root@master01 kubernetes]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    [root@master01 kubernetes]# sudo chown $(id -u):$(id -g) $HOME/.kube/config
    # Check the cluster node status
    [root@master01 kubernetes]# kubectl get nodes
    NAME       STATUS     ROLES    AGE   VERSION
    master01   NotReady   master   20m   v1.18.0

(3) Walkthrough of the cluster initialization log

    [root@master01 kubernetes]# kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log   # cluster initialization command
    W0820 21:46:37.633578 9253 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
    [init] Using Kubernetes version: v1.18.0   # Kubernetes version
    [preflight] Running pre-flight checks   # check the current environment
    [preflight] Pulling images required for setting up a Kubernetes cluster   # download the images
    [preflight] This might take a minute or two, depending on the speed of your internet connection
    [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'   # pull the images in advance
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"   # where the kubelet environment variables are saved
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"   # where the kubelet configuration file is saved
    [kubelet-start] Starting the kubelet
    [certs] Using certificateDir folder "/etc/kubernetes/pki"   # where the Kubernetes certificates are stored
    [certs] Generating "ca" certificate and key
    [certs] Generating "apiserver" certificate and key
    [certs] apiserver serving cert is signed for DNS names [master01 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.226.100]   # DNS names and the default domain covered by the certificate
    [certs] Generating "apiserver-kubelet-client" certificate and key   # generate keys for the Kubernetes components
    [certs] Generating "front-proxy-ca" certificate and key
    [certs] Generating "front-proxy-client" certificate and key
    [certs] Generating "etcd/ca" certificate and key
    [certs] Generating "etcd/server" certificate and key
    [certs] etcd/server serving cert is signed for DNS names [master01 localhost] and IPs [192.168.226.100 127.0.0.1 ::1]
    [certs] Generating "etcd/peer" certificate and key
    [certs] etcd/peer serving cert is signed for DNS names [master01 localhost] and IPs [192.168.226.100 127.0.0.1 ::1]
    [certs] Generating "etcd/healthcheck-client" certificate and key
    [certs] Generating "apiserver-etcd-client" certificate and key
    [certs] Generating "sa" key and public key
    [kubeconfig] Using kubeconfig folder "/etc/kubernetes"   # where the component kubeconfig files are stored
    [kubeconfig] Writing "admin.conf" kubeconfig file
    [kubeconfig] Writing "kubelet.conf" kubeconfig file
    [kubeconfig] Writing "controller-manager.conf" kubeconfig file
    [kubeconfig] Writing "scheduler.conf" kubeconfig file
    [control-plane] Using manifest folder "/etc/kubernetes/manifests"
    [control-plane] Creating static Pod manifest for "kube-apiserver"
    W0820 21:46:45.991676 9253 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
    [control-plane] Creating static Pod manifest for "kube-controller-manager"
    W0820 21:46:45.993829 9253 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
    [control-plane] Creating static Pod manifest for "kube-scheduler"
    [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
    [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
    [apiclient] All control plane components are healthy after 21.006303 seconds
    [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key:
    19a94c1fef133502412cec496124e49424e6fb40900ea3b8fb25bb2a30947217
    [mark-control-plane] Marking the node master01 as control-plane by adding the label "node-role.kubernetes.io/master=''"
    [mark-control-plane] Marking the node master01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
    [bootstrap-token] Using token: abcdef.0123456789abcdef
    [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy
    Your Kubernetes control-plane has initialized successfully!   # initialization succeeded
    To start using your cluster, you need to run the following as a regular user:
      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config
    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/
    Then you can join any number of worker nodes by running the following on each as root:
    kubeadm join 192.168.226.100:6443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:b58abf99813a2c8a98ff35e6ef7af4a0684869a60791efef561dc600d2d488e4
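The join command at the end of the log is easy to lose, and the bootstrap token in this configuration expires after 24 hours (ttl: 24h0m0s). As a hedged sketch, the value passed to `--discovery-token-ca-cert-hash` can be recomputed from the cluster CA certificate with openssl, following the pipeline given in the Kubernetes documentation; it assumes an RSA CA key, which is what kubeadm generates by default. The function name `ca_cert_hash` is hypothetical.

```shell
#!/usr/bin/env bash
# ca_cert_hash [CERT]  (hypothetical helper)
# Prints the SHA-256 hash of the certificate's public key (DER-encoded),
# which is the hex value expected after "sha256:" in kubeadm join.
ca_cert_hash() {
    local cert="${1:-/etc/kubernetes/pki/ca.crt}"
    openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Example (on the master):
#   echo "sha256:$(ca_cert_hash)"
# Alternatively, kubeadm can create a new token and print a complete join command:
#   kubeadm token create --print-join-command
```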

3.5 Join the worker nodes to the cluster

(1) Copy the image download script from the master node to node01 and node02

    [root@master01 kubernetes]# scp k8s-images.txt k8s-images.sh root@192.168.226.101:/data/kubernetes/
    The authenticity of host '192.168.226.101 (192.168.226.101)' can't be established.
    ECDSA key fingerprint is SHA256:bGZOi1f3UFkN+Urjo7zAmsMyeGbgU7f+ROmAjweU2ac.
    ECDSA key fingerprint is MD5:8f:73:26:e2:6d:f4:00:87:1d:eb:42:4e:03:9d:39:a0.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '192.168.226.101' (ECDSA) to the list of known hosts.
    root@192.168.226.101's password:
    k8s-images.txt                 100%  134     6.9KB/s   00:00
    k8s-images.sh                  100%  170    70.2KB/s   00:00
    [root@master01 kubernetes]# scp k8s-images.txt k8s-images.sh root@192.168.226.102:/data/kubernetes/
    The authenticity of host '192.168.226.102 (192.168.226.102)' can't be established.
    ECDSA key fingerprint is SHA256:IQbFmazVPUenOUh5+o6183jj7FJzKDXBfTPgG6imdWU.
    ECDSA key fingerprint is MD5:3c:44:a8:0c:fd:66:88:ba:6c:aa:fe:28:46:f5:25:d1.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '192.168.226.102' (ECDSA) to the list of known hosts.
    root@192.168.226.102's password:
    k8s-images.txt                 100%  134    54.5KB/s   00:00
    k8s-images.sh                  100%  170    26.3KB/s   00:00

(2) Run the following on node01 and node02 to download the images:

    [root@node01 kubernetes]# ./k8s-images.sh

(3) Following the master initialization log, run the kubeadm join command on node01 and node02 to add both nodes to the cluster:

    [root@node02 kubernetes]# kubeadm join 192.168.226.100:6443 --token abcdef.0123456789abcdef \
    > --discovery-token-ca-cert-hash sha256:b58abf99813a2c8a98ff35e6ef7af4a0684869a60791efef561dc600d2d488e4
    W0820 22:09:55.345093 9653 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
    [preflight] Running pre-flight checks
    [preflight] Reading configuration from the cluster...
    [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    [kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Starting the kubelet
    [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
    This node has joined the cluster:
    * Certificate signing request was sent to apiserver and a response was received.
    * The Kubelet was informed of the new secure connection details.
    Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

(4) Check the cluster status on the master node

    [root@master01 kubernetes]# kubectl get nodes
    NAME       STATUS     ROLES    AGE   VERSION
    master01   NotReady   master   24m   v1.18.0
    node01     NotReady   <none>   92s   v1.18.0
    node02     NotReady   <none>   65s   v1.18.0

Note: every node in the cluster is NotReady because no network plugin, such as flannel, has been installed yet. The flannel project is on GitHub at https://github.com/flannel-io/flannel.
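When scripting the installation, it helps to detect which nodes are still not ready rather than reading the table by eye. The filter below is a small sketch that expects the output of `kubectl get nodes --no-headers` on stdin; the name `not_ready_nodes` is hypothetical. It treats anything other than a plain `Ready` status as not ready, including combined states such as `Ready,SchedulingDisabled`.

```shell
#!/usr/bin/env bash
# not_ready_nodes  (hypothetical helper)
# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example:
#   kubectl get nodes --no-headers | not_ready_nodes
# kubectl can also block until all nodes report Ready:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```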

(5) Check other cluster information

    # Check component health
    [root@master01 kubernetes]# kubectl get cs
    NAME                 STATUS    MESSAGE             ERROR
    controller-manager   Healthy   ok
    scheduler            Healthy   ok
    etcd-0               Healthy   {"health":"true"}
    # List the Pods in the kube-system namespace; all of them are Kubernetes system Pods
    [root@master01 kubernetes]# kubectl get pods -n kube-system
    NAME                               READY   STATUS    RESTARTS   AGE
    coredns-66bff467f8-5nfc2           0/1     Pending   0          26m
    coredns-66bff467f8-r966w           0/1     Pending   0          26m
    etcd-master01                      1/1     Running   0          27m
    kube-apiserver-master01            1/1     Running   0          27m
    kube-controller-manager-master01   1/1     Running   0          27m
    kube-proxy-g98hd                   1/1     Running   0          26m
    kube-proxy-hbt4j                   1/1     Running   0          4m42s
    kube-proxy-mtmrm                   1/1     Running   0          4m15s
    kube-scheduler-master01            1/1     Running   0          27m
    # List all namespaces
    [root@master01 kubernetes]# kubectl get ns
    NAME              STATUS   AGE
    default           Active   28m
    kube-node-lease   Active   28m
    kube-public       Active   28m
    kube-system       Active   28m
    # List all ConfigMaps in the cluster
    [root@master01 kubernetes]# kubectl get configmaps -A
    NAMESPACE     NAME                                 DATA   AGE
    kube-public   cluster-info                         2      44m
    kube-system   coredns                              1      44m
    kube-system   extension-apiserver-authentication   6      44m
    kube-system   kube-proxy                           2      44m
    kube-system   kubeadm-config                       2      44m
    kube-system   kubelet-config-1.18                  1      44m

3.6 Install the network plugin

(1) Download the manifest

    [root@master01 kubernetes]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    --2021-08-21 08:48:14--  https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    正在解析主机 raw.githubusercontent.com (raw.githubusercontent.com)... 0.0.0.0, ::
    正在连接 raw.githubusercontent.com (raw.githubusercontent.com)|0.0.0.0|:443... 失败:拒绝连接。
    正在连接 raw.githubusercontent.com (raw.githubusercontent.com)|::|:443... 失败:无法指定被请求的地址。
    # Downloading kube-flannel.yml fails with "connection refused" because the site is blocked by the firewall.
    # Workaround: add the entry "199.232.68.133 raw.githubusercontent.com" to /etc/hosts
    [root@master01 kubernetes]# vim /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.226.100 master01
    192.168.226.101 node01
    192.168.226.102 node02
    192.168.226.103 harbor
    199.232.68.133 raw.githubusercontent.com
    # Download kube-flannel.yml again
    [root@master01 kubernetes]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    --2021-08-21 08:50:39--  https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
    正在解析主机 raw.githubusercontent.com (raw.githubusercontent.com)... 199.232.68.133
    正在连接 raw.githubusercontent.com (raw.githubusercontent.com)|199.232.68.133|:443... 已连接。
    已发出 HTTP 请求,正在等待回应... 200 OK
    长度:4813 (4.7K) [text/plain]
    正在保存至: “kube-flannel.yml”
    100%[==============================================>] 4,813       --.-K/s 用时 0s
    2021-08-21 08:50:40 (20.8 MB/s) - 已保存 “kube-flannel.yml” [4813/4813])

(2) Edit the YAML

    ...
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.14.0
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:   # resource limits; in production, raise these values to suit the machine's actual capacity
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
    ...
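Section 3.3 stressed that podSubnet must match flannel's Pod network, so it may be worth checking both files before applying the manifest. The sketch below assumes the manifest carries the range in a `"Network": "..."` entry inside its net-conf.json block and that kubeadm-config.yaml has a `podSubnet:` line; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# flannel_network FILE  (hypothetical helper)
# Prints the first "Network" CIDR found in a kube-flannel.yml manifest.
flannel_network() {
    sed -n 's/.*"Network"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# pod_subnet FILE  (hypothetical helper)
# Prints the first podSubnet value found in a kubeadm configuration file.
pod_subnet() {
    sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1" | head -n 1
}

# Example:
#   if [ "$(flannel_network kube-flannel.yml)" = "$(pod_subnet kubeadm-config.yaml)" ]; then
#       echo "Pod network ranges match"
#   else
#       echo "Mismatch: fix one of the two files before applying" >&2
#   fi
```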

(3) Install the network plugin

    # Run the install command
    [root@master01 kubernetes]# kubectl apply -f kube-flannel.yml
    podsecuritypolicy.policy/psp.flannel.unprivileged created
    clusterrole.rbac.authorization.k8s.io/flannel created
    clusterrolebinding.rbac.authorization.k8s.io/flannel created
    serviceaccount/flannel created
    configmap/kube-flannel-cfg created
    daemonset.apps/kube-flannel-ds created

Note: if the flannel image fails to pull, there are two workarounds:

(a) Change every quay.io image address in kube-flannel.yml to a registry that is reachable from inside China.

(b) Download the image manually (https://github.com/flannel-io/flannel/releases) and import it locally.

3.7 Check the cluster status

    [root@master01 ~]# kubectl get nodes
    NAME       STATUS   ROLES    AGE   VERSION
    master01   Ready    master   19h   v1.18.0
    node01     Ready    <none>   18h   v1.18.0
    node02     Ready    <none>   18h   v1.18.0
    # All nodes in the cluster are Ready
    [root@master01 ~]# kubectl get pods -n kube-system
    NAME                               READY   STATUS    RESTARTS   AGE
    coredns-66bff467f8-5nfc2           1/1     Running   3          19h
    coredns-66bff467f8-r966w           1/1     Running   3          19h
    etcd-master01                      1/1     Running   4          19h
    kube-apiserver-master01            1/1     Running   5          19h
    kube-controller-manager-master01   1/1     Running   4          19h
    kube-flannel-ds-d6pl5              1/1     Running   3          7h37m
    kube-flannel-ds-lvdqq              1/1     Running   3          7h37m
    kube-flannel-ds-nbq6r              1/1     Running   3          7h37m
    kube-proxy-g98hd                   1/1     Running   4          19h
    kube-proxy-hbt4j                   1/1     Running   4          18h
    kube-proxy-mtmrm                   1/1     Running   4          18h
    kube-scheduler-master01            1/1     Running   4          19h
    # The Kubernetes system components are running normally
    [root@master01 ~]# kubectl get cs
    NAME                 STATUS    MESSAGE             ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true"}
    # The Kubernetes components are healthy
    # Check the ipvs rules
    [root@node01 ~]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.96.0.1:443 rr
      -> 192.168.226.100:6443         Masq    1      1          0
    TCP  10.96.0.10:53 rr
      -> 10.244.0.10:53               Masq    1      0          0
      -> 10.244.0.11:53               Masq    1      0          0
    TCP  10.96.0.10:9153 rr
      -> 10.244.0.10:9153             Masq    1      0          0
      -> 10.244.0.11:9153             Masq    1      0          0
    UDP  10.96.0.10:53 rr
      -> 10.244.0.10:53               Masq    1      0          0
      -> 10.244.0.11:53               Masq    1      0          0

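4. Installing Harbor

Harbor is an open-source, enterprise-grade registry project from VMware. It builds on the open-source registry from Docker and adds features that enterprise users need, such as a management UI, role-based access control, AD/LDAP integration, and audit logging. Harbor consists of a set of containers: nginx, harbor-jobservice, harbor-ui, harbor-db, harbor-adminserver, registry, and harbor-log. These containers communicate through Docker's built-in DNS service discovery, so each container can be reached by its container name. For end users, only the port of the reverse proxy (Nginx) needs to be exposed. Harbor is deployed with docker compose; the docker-compose template under the make directory of the Harbor source tree is used for the deployment.

4.1 Install docker-compose (Harbor is deployed with docker compose)

    # Download docker-compose
    [root@harbor ~]# curl -L "https://github.com/docker/compose/releases/download/1.24.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose
    # Grant execute permission
    [root@harbor ~]# chmod +x /usr/local/bin/docker-compose
    # Create a shortcut (symbolic link)
    [root@harbor ~]# ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose
    # Check the docker-compose version; if it prints normally, the installation succeeded
    [root@harbor bin]# docker-compose --version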

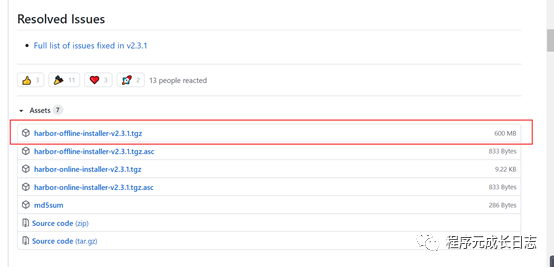

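4.2 Download the Harbor installer

Download the Harbor installer from GitHub at https://github.com/goharbor/harbor/releases. This article uses the latest version, v2.3.1.

    [root@harbor /]# mkdir /data/harbor -p
    [root@harbor /]# cd /data/harbor/
    [root@harbor harbor]# wget https://github.com/goharbor/harbor/releases/download/v2.3.1/harbor-offline-installer-v2.3.1.tgz

4.3 Unpack the Harbor offline installer and edit the configuration file

    [root@harbor harbor]# tar zxvf harbor-offline-installer-v2.3.1.tgz
    [root@harbor harbor]# cd harbor/
    [root@harbor harbor]# cp harbor.yml.tmpl harbor.yml
    # Edit the Harbor configuration file
    [root@harbor harbor]# vim harbor.yml
    # Parameters to change:
    # hostname sets the access address; an IP or a domain name is allowed, but not 127.0.0.1 or localhost
    hostname: 192.168.226.103
    # Certificate and private key paths (in the https section): uncomment and fill in the actual paths
    certificate: /home/harbor/certs/harbor.crt
    private_key: /home/harbor/certs/harbor.key
    # Web login password
    harbor_admin_password: Harbor12345

The certificate and private_key paths must point to where the files actually are. Section 4.4 writes them to /data/harbor_data/certs/, so either use that directory here or copy the files to the paths configured above.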

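4.4 Create the certificate

    [root@harbor harbor_data]# mkdir /data/harbor_data/certs -p
    [root@harbor harbor_data]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout /data/harbor_data/certs/harbor.key -x509 -out /data/harbor_data/certs/harbor.crt -subj /C=CN/ST=BJ/L=BJ/O=DEVOPS/CN=harbor.wangzy.com -days 3650

Parameters:

req: the command for creating certificate signing requests and certificates

-newkey: generate a new private key

rsa:4096: key type and length in bits

-nodes: do not encrypt the private key

-sha256: sign with the SHA-256 hash algorithm (SHA-2 family)

-keyout: file to write the newly created private key to

-x509: issue a self-signed X.509 certificate instead of a signing request. X.509 is the most widely used certificate format.

-out: output file to write to

-subj: subject (identity) information

-days: validity period (3650 means ten years)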
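After generating the certificate it can be useful to confirm what was written and how long it stays valid. The sketch below relies on the `-checkend` option of `openssl x509`, which exits successfully when the certificate does not expire within the given number of seconds; the function name `cert_valid_for_days` is hypothetical.

```shell
#!/usr/bin/env bash
# cert_valid_for_days CERT DAYS  (hypothetical helper)
# Succeeds when CERT is still valid DAYS days from now.
cert_valid_for_days() {
    local cert="$1" days="$2"
    # -checkend takes seconds; 86400 seconds = 1 day.
    openssl x509 -in "$cert" -noout -checkend "$(( days * 86400 ))" >/dev/null
}

# Example:
#   openssl x509 -in /data/harbor_data/certs/harbor.crt -noout -subject -enddate
#   cert_valid_for_days /data/harbor_data/certs/harbor.crt 30 || echo "certificate expires within 30 days"
```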

4.5 Install

    [root@harbor harbor]# ./prepare
    [root@harbor harbor]# ./install.sh

4.6 Add the private Harbor as a trusted registry

    # Add the trusted registry address
    [root@master01 ~]# vim /etc/docker/daemon.json
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "registry-mirrors": ["https://4cnob6ep.mirror.aliyuncs.com"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      },
      "insecure-registries": ["https://192.168.226.103"]
    }
    # Restart the Docker service
    [root@master01 ~]# systemctl daemon-reload
    [root@master01 ~]# systemctl restart docker

4.7 Operations

    # Change to the Harbor installation directory
    [root@harbor ~]# cd /data/harbor/harbor/
    # Start the containers; this fails if the containers do not exist, because they are not created automatically
    [root@harbor harbor]# docker-compose start
    # Stop the containers
    [root@harbor harbor]# docker-compose stop
    # Start in the background; containers that do not exist are created from the images
    [root@harbor harbor]# docker-compose up -d
    # Stop and remove the containers
    [root@harbor harbor]# docker-compose down -v
    # List all containers in the project
    [root@harbor harbor]# docker-compose ps
    # Show the running processes
    [root@harbor harbor]# docker-compose top

4.8 Verify that Harbor works

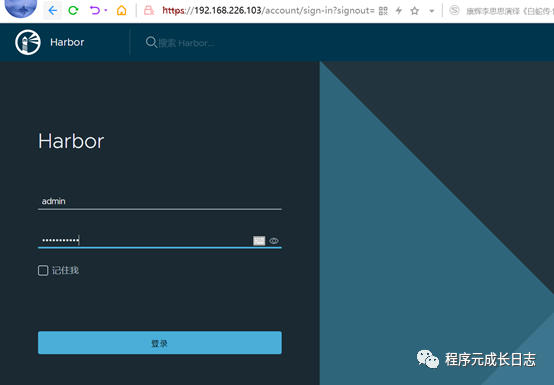

Once Harbor is running, it can be reached at https://192.168.226.103. The default account is admin and the password is Harbor12345.

(1) Log in as the admin user

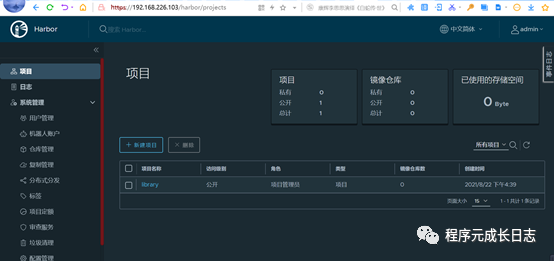

(2) Log in to the master, pull the helloworld image from the remote registry, and push it to the new Harbor registry.

    # Search for the image
    [root@master01 ~]# docker search helloworld
    NAME                      DESCRIPTION                                     STARS   OFFICIAL   AUTOMATED
    supermanito/helloworld    学习资料                                        216
    karthequian/helloworld    A simple helloworld nginx container to get y…   17                 [OK]
    strm/helloworld-http      A hello world container for testing http bal…   6                  [OK]
    deis/helloworld                                                           6                  [OK]
    buoyantio/helloworld                                                      4
    wouterm/helloworld        A simple Docker image with an Nginx server …    1                  [OK]
    # Pull the helloworld image
    [root@master01 ~]# docker pull wouterm/helloworld
    Using default tag: latest
    latest: Pulling from wouterm/helloworld
    658bc4dc7069: Pull complete
    a3ed95caeb02: Pull complete
    af3cc4b92fa1: Pull complete
    d0034177ece9: Pull complete
    983d35417974: Pull complete
    aef548056d1a: Pull complete
    Digest: sha256:a949eca2185607e53dd8657a0ae8776f9d52df25675cb3ae3a07754df5f012e6
    Status: Downloaded newer image for wouterm/helloworld:latest
    # List local images
    [root@master01 ~]# docker images
    REPOSITORY           TAG      IMAGE ID       CREATED       SIZE
    wouterm/helloworld   latest   0706462ea954   4 years ago   17.8MB
    # Log in to the private Harbor
    [root@master01 ~]# docker login 192.168.226.103
    Username: admin
    Password:
    WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
    Configure a credential helper to remove this warning. See
    https://docs.docker.com/engine/reference/commandline/login/#credentials-store
    Login Succeeded
    # Tag the image
    [root@master01 ~]# docker tag wouterm/helloworld:latest 192.168.226.103/library/myhelloworld:v1
    # Push the image
    [root@master01 ~]# docker push 192.168.226.103/library/myhelloworld:v1
    The push refers to repository [192.168.226.103/library/myhelloworld]
    6781046df40c: Pushed
    5f70bf18a086: Pushed
    7958de11d0de: Pushed
    3d3d4b273cf9: Pushed
    1aaf09e09313: Pushed
    a58990fe2574: Pushed
    v1: digest: sha256:7278e5235e3780dc317f4a93dfd3961bf5760119d77553da3f7c9ee9d32a040a size: 1980

Log in to the Harbor web UI to find the image that was just pushed, myhelloworld:v1.

PS: If you need the configuration files used during this installation, leave me a message through the official account.