点击上方“IT那活儿”公众号,关注后了解更多内容,不管IT什么活儿,干就完了!!!

1. ASH dump分析

1)INSTANCE_NUMBER SAMPLE_ID SAMPLE_TIME SESSION_COUNT

2 127883388 29-AUG-22 10.25.08.492 AM 17

2 127883398 29-AUG-22 10.25.18.692 AM 12

2 127883408 29-AUG-22 10.25.28.892 AM 17

2 127883418 29-AUG-22 10.25.39.092 AM 13

2 127883428 29-AUG-22 10.25.49.292 AM 12

2 127883438 29-AUG-22 10.25.59.493 AM 138 <<<<<<<

2 127883448 29-AUG-22 10.26.09.692 AM 134

2 127883458 29-AUG-22 10.26.19.892 AM 242

2 127883468 29-AUG-22 10.26.30.112 AM 364

2 127883478 29-AUG-22 10.26.40.423 AM 498

2 127883488 29-AUG-22 10.26.50.722 AM 521

2 127883498 29-AUG-22 10.27.00.953 AM 531

2 127883508 29-AUG-22 10.27.11.173 AM 539

2 127883518 29-AUG-22 10.27.21.445 AM 547

2 127883528 29-AUG-22 10.27.31.739 AM 562

2 127883538 29-AUG-22 10.27.42.029 AM 572

2 127883548 29-AUG-22 10.27.52.343 AM 576

2 127883558 29-AUG-22 10.28.02.660 AM 464

2 127883568 29-AUG-22 10.28.12.963 AM 522

2 127883578 29-AUG-22 10.28.23.335 AM 464

2 127883588 29-AUG-22 10.28.33.620 AM 511

2 127883598 29-AUG-22 10.28.43.913 AM 467

2 127883608 29-AUG-22 10.28.54.196 AM 301

2 127883618 29-AUG-22 10.29.04.414 AM 327

2 127883628 29-AUG-22 10.29.14.624 AM 436

2 127883638 29-AUG-22 10.29.24.825 AM 433

2 127883648 29-AUG-22 10.29.35.025 AM 164

2 127883658 29-AUG-22 10.29.45.145 AM 13

2 127883668 29-AUG-22 10.29.55.245 AM 16

分析结论:问题发生的准确时间为10:25:59, 问题结束时间 10:29:45。

2)SAMPLE_TIME INSTANCE_NUMBER EVENT SESSION SESSION_COUNT

29-AUG-22 10.25.49.292 AM 2 Backup: MML write backup piece WAITING 5

29-AUG-22 10.25.59.493 AM 2 gc current grant 2-way WAITING 47

29-AUG-22 10.25.59.493 AM 2 enq: TX - index contention WAITING 36

29-AUG-22 10.26.09.692 AM 2 gc current grant 2-way WAITING 50

29-AUG-22 10.26.09.692 AM 2 buffer busy waits WAITING 37

29-AUG-22 10.26.19.892 AM 2 enq: US - contention WAITING 111 <<<<<<<

29-AUG-22 10.26.19.892 AM 2 gc current grant 2-way WAITING 45

29-AUG-22 10.26.30.112 AM 2 enq: US - contention WAITING 201

29-AUG-22 10.26.30.112 AM 2 gc buffer busy acquire WAITING 82

29-AUG-22 10.26.40.423 AM 2 enq: US - contention WAITING 348 <<<<<<<

29-AUG-22 10.26.40.423 AM 2 gc current grant 2-way WAITING 58

29-AUG-22 10.26.50.722 AM 2 enq: US - contention WAITING 377

29-AUG-22 10.26.50.722 AM 2 buffer busy waits WAITING 46

29-AUG-22 10.27.00.953 AM 2 row cache lock WAITING 211

29-AUG-22 10.27.00.953 AM 2 enq: US - contention WAITING 194

29-AUG-22 10.27.11.173 AM 2 row cache lock WAITING 424 <<<<<<<

29-AUG-22 10.27.11.173 AM 2 gc current grant 2-way WAITING 69

29-AUG-22 10.27.21.445 AM 2 enq: US - contention WAITING 415

29-AUG-22 10.27.21.445 AM 2 gc current grant 2-way WAITING 69

29-AUG-22 10.27.31.739 AM 2 enq: US - contention WAITING 434

29-AUG-22 10.27.31.739 AM 2 gc buffer busy acquire WAITING 62

29-AUG-22 10.27.42.029 AM 2 row cache lock WAITING 172

29-AUG-22 10.27.42.029 AM 2 latch: ges resource hash list WAITING 162

29-AUG-22 10.27.52.343 AM 2 enq: US - contention WAITING 410 <<<<<<<

29-AUG-22 10.27.52.343 AM 2 buffer busy waits WAITING 106

29-AUG-22 10.28.02.660 AM 2 enq: US - contention WAITING 337

29-AUG-22 10.28.02.660 AM 2 gc current grant 2-way WAITING 54

29-AUG-22 10.28.12.963 AM 2 enq: US - contention WAITING 325

29-AUG-22 10.28.12.963 AM 2 gc buffer busy acquire WAITING 105

29-AUG-22 10.28.23.335 AM 2 enq: US - contention WAITING 312

29-AUG-22 10.28.23.335 AM 2 gc current grant 2-way WAITING 58

29-AUG-22 10.28.33.620 AM 2 enq: US - contention WAITING 200

29-AUG-22 10.28.33.620 AM 2 row cache lock WAITING 100

29-AUG-22 10.28.43.913 AM 2 enq: US - contention WAITING 182

29-AUG-22 10.28.43.913 AM 2 row cache lock WAITING 104

29-AUG-22 10.28.54.196 AM 2 enq: US - contention WAITING 195

29-AUG-22 10.28.54.196 AM 2 gc current grant 2-way WAITING 24

29-AUG-22 10.29.04.414 AM 2 enq: US - contention WAITING 181

29-AUG-22 10.29.04.414 AM 2 gc current grant 2-way WAITING 55

29-AUG-22 10.29.14.624 AM 2 enq: US - contention WAITING 167

29-AUG-22 10.29.14.624 AM 2 buffer busy waits WAITING 110

29-AUG-22 10.29.24.825 AM 2 row cache lock WAITING 131

29-AUG-22 10.29.24.825 AM 2 enq: TX - index contention WAITING 51

29-AUG-22 10.29.35.025 AM 2 enq: US - contention WAITING 76

29-AUG-22 10.29.35.025 AM 2 db file sequential read WAITING 21

分析结论:问题发生时段主要等待事件enq: US – contention 和 row cache lock。其中enq: US – contention 与undo segment 有关。

3)SQL_ID EVENT COUNT(*)

6c9y7jqmk59v4 enq: US - contention 2

0tp7wdj6f90d4 enq: US - contention 4

5hz6hqsa0un78 enq: US - contention 7

9vx06344t67qv enq: US - contention 7

50jzds6fz61t2 enq: US - contention 10

6ycn3m0fk2rja enq: US - contention 10

1z3m3utvfg20g enq: US - contention 12

46ut8bh5bnsdw enq: US - contention 14

ddg8hyzb56m3t enq: US - contention 19

cm7vga0at4xt3 enq: US - contention 20

3f3mp8axvq054 enq: US - contention 21

gx80k0pkhfu8r enq: US - contention 22

25hcu7xcp1ncp enq: US - contention 25

bxr6zz5ksn7zn enq: US - contention 26

267nj6k322m27 enq: US - contention 28

0cq4pzfbg1wu7 enq: US - contention 31

2b0hhbp427pjm enq: US - contention 36

40s7a0r0mxq53 enq: US - contention 53

8xfcm2j2s9tnw enq: US - contention 61

9da9yp8uk2xb7 enq: US - contention 65

ckg2yyx6xn1pr enq: US - contention 71

b52y29yh7md4q enq: US - contention 81

9k8ff3ybnvwd1 enq: US - contention 95

7unbs10ja0wuk enq: US - contention 96

fgsjfkxc6wp83 enq: US - contention 124

97qbzhu7rj4jz enq: US - contention 146

3ky71j71cqh2c enq: US - contention 147

8g1s5t0qy1aar enq: US - contention 226

c21nv2ffrzqj6 enq: US - contention 350

2cu0rw9rv2uvt enq: US - contention 959

46ud1t80mgqd7 enq: US - contention 1795

SQL_ID EVENT P1 P2 P3 COUNT(*)

------------- -------------------------

0tp7wdj6f90d4 row cache lock 3 0 3 1

2cu0rw9rv2uvt row cache lock 3 0 5 1

3f3mp8axvq054 row cache lock 3 5 3 1

46ud1t80mgqd7 row cache lock 3 5 3 1

46ud1t80mgqd7 row cache lock 2 0 5 1

8g1s5t0qy1aar row cache lock 3 5 3 1

9vx06344t67qv row cache lock 3 0 3 1

c21nv2ffrzqj6 row cache lock 2 0 5 1

1z3m3utvfg20g row cache lock 3 0 3 3

25hcu7xcp1ncp row cache lock 3 0 3 3

46ud1t80mgqd7 row cache lock 3 0 5 3

6ycn3m0fk2rja row cache lock 3 0 3 3

50jzds6fz61t2 row cache lock 3 0 3 4

cm7vga0at4xt3 row cache lock 3 0 3 4

gx80k0pkhfu8r row cache lock 3 0 3 4

3f3mp8axvq054 row cache lock 3 0 3 5

46ut8bh5bnsdw row cache lock 3 0 3 5

0cq4pzfbg1wu7 row cache lock 3 0 3 6

bxr6zz5ksn7zn row cache lock 3 0 3 6

ddg8hyzb56m3t row cache lock 3 0 3 6

267nj6k322m27 row cache lock 3 0 3 9

40s7a0r0mxq53 row cache lock 3 0 3 11

2b0hhbp427pjm row cache lock 3 0 3 12

9da9yp8uk2xb7 row cache lock 3 0 3 15

b52y29yh7md4q row cache lock 3 0 3 17

8xfcm2j2s9tnw row cache lock 3 0 3 20

7unbs10ja0wuk row cache lock 3 0 3 21

ckg2yyx6xn1pr row cache lock 3 0 3 22

9k8ff3ybnvwd1 row cache lock 3 0 3 26

fgsjfkxc6wp83 row cache lock 3 0 3 33

97qbzhu7rj4jz row cache lock 3 0 3 35

3ky71j71cqh2c row cache lock 3 0 3 39

8g1s5t0qy1aar row cache lock 3 0 3 67

c21nv2ffrzqj6 row cache lock 3 0 3 91

2cu0rw9rv2uvt row cache lock 3 0 3 244

46ud1t80mgqd7 row cache lock 3 0 3 449

SQL> SELECT DISTINCT cache#, cache_name

2 FROM v$rowcache_parent

3 WHERE cache# =3;

CACHE# CACHE_NAME

---------- ----------------

3 dc_rollback_segments

分析结论:等待事件为 enq: US – contention 和 row cache lock 的主要SQL 相同, 其中row cache lock 争用在 dc_rollback_segments, 即undo 回滚段上。

综上:

问题发生时段,主要等待事件为enq: US – contention 和 row cache lock,两者都是在等待undo segment,且这两个等待事件对应的TOP SQL相同。

2. TOP SQL历史执行频率

TOP SQL 多是insert 语句,其中涉及以下sql,sql执行情况如下:

1)46ud1t80mgqd7

BTIME SQL_ID PLAN_HASH_VALUE EXECS AVG_ELAS AVG_CPUS AVG_LIOS AVG_PIOS

---------- ------------- ---------------

0822 09:00 46ud1t80mgqd7 110,282 .001 .001 29.9 .2

0822 10:00 46ud1t80mgqd7 129,715 .002 .001 30.5 .2 <<<<<< 同期

0822 11:00 46ud1t80mgqd7 118,641 .001 .001 32.8 .2

0822 12:00 46ud1t80mgqd7 67,699 .002 .001 31.1 .2

0822 13:00 46ud1t80mgqd7 53,676 .002 .001 30.3 .2

0822 14:00 46ud1t80mgqd7 90,873 .002 .001 30.3 .2

0822 15:00 46ud1t80mgqd7 131,476 .002 .001 30.4 .2

0822 16:00 46ud1t80mgqd7 135,878 .002 .001 31.5 .2

0822 17:00 46ud1t80mgqd7 120,929 .002 .001 31.2 .2

......

0829 09:00 46ud1t80mgqd7 129,364 .002 .001 30.9 .2

0829 10:00 46ud1t80mgqd7 194,743 .140 .001 30.7 .2 <<<<<< 问题时段

0829 11:00 46ud1t80mgqd7 174,934 .008 .001 31.0 .1

0829 12:00 46ud1t80mgqd7 124,156 .001 .001 30.7 .1

0829 13:00 46ud1t80mgqd7 99,360 .002 .001 30.6 .2

0829 14:00 46ud1t80mgqd7 120,341 .001 .001 30.5 .2

0829 15:00 46ud1t80mgqd7 186,968 .002 .001 33.8 .2

0829 16:00 46ud1t80mgqd7 155,762 .002 .001 32.7 .2

0829 17:00 46ud1t80mgqd7 162,019 .002 .001 31.5 .2

......

0830 08:00 46ud1t80mgqd7 66,975 .002 .001 30.5 .2

0830 09:00 46ud1t80mgqd7 132,190 .002 .001 31.7 .2

0830 10:00 46ud1t80mgqd7 166,823 .002 .001 30.5 .2 <<<<<< 同期

2)2cu0rw9rv2uvt

BTIME SQL_ID PLAN_HASH_VALUE EXECS AVG_ELAS AVG_CPUS AVG_LIOS AVG_PIOS

---------- ------------- ---------------

0822 09:00 2cu0rw9rv2uvt 51,971 .017 .006 184.1 7.2

0822 10:00 2cu0rw9rv2uvt 61,034 .018 .006 189.0 7.2 <<<<<<< 同期

0822 11:00 2cu0rw9rv2uvt 52,144 .017 .006 185.4 7.2

0822 12:00 2cu0rw9rv2uvt 23,504 .015 .006 186.8 7.1

0822 13:00 2cu0rw9rv2uvt 19,615 .015 .006 187.5 7.2

0822 14:00 2cu0rw9rv2uvt 37,674 .018 .006 193.6 7.5

0822 15:00 2cu0rw9rv2uvt 54,048 .021 .007 234.5 8.8

0822 16:00 2cu0rw9rv2uvt 50,803 .019 .007 210.5 8.0

0822 17:00 2cu0rw9rv2uvt 39,721 .019 .007 213.1 8.1

......

0829 09:00 2cu0rw9rv2uvt 64,347 .021 .007 242.3 9.4

0829 10:00 2cu0rw9rv2uvt 86,165 .205 .011 344.6 13.1 <<<<< 问题时段

0829 11:00 2cu0rw9rv2uvt 87,026 .041 .012 399.4 15.0

0829 12:00 2cu0rw9rv2uvt 49,126 .031 .012 384.3 14.6

0829 13:00 2cu0rw9rv2uvt 33,047 .023 .009 303.9 11.5

0829 14:00 2cu0rw9rv2uvt 52,935 .021 .008 250.6 9.6

0829 15:00 2cu0rw9rv2uvt 67,570 .021 .008 244.3 9.5

0829 16:00 2cu0rw9rv2uvt 66,522 .022 .008 245.0 9.6

0829 17:00 2cu0rw9rv2uvt 55,127 .022 .008 257.0 9.8

......

0830 09:00 2cu0rw9rv2uvt 62,608 .023 .008 262.9 10.2

0830 10:00 2cu0rw9rv2uvt 70,089 .023 .008 264.3 10.2 <<<<<<同期

3)c21nv2ffrzqj6

(该可能是新SQL,或者是以前的执行频率不高,没有被采集到)

BTIME SQL_ID PLAN_HASH_VALUE EXECS AVG_ELAS AVG_CPUS AVG_LIOS AVG_PIOS

---------- ------------- ---------------

0829 10:00 c21nv2ffrzqj6 0 16,288 .297 .002 30.8 1.9

4)6z56qk785zzx6

BTIME SQL_ID PLAN_HASH_VALUE EXECS AVG_ELAS AVG_CPUS AVG_LIOS AVG_PIOS

---------- ------------- ---------------

0822 09:00 6z56qk785zzx6 51,761 .023 .009 282.9 10.2

0822 10:00 6z56qk785zzx6 60,613 .026 .009 291.6 10.9 <<<<<<同期

0822 11:00 6z56qk785zzx6 51,878 .021 .009 287.5 8.8

0822 12:00 6z56qk785zzx6 23,460 .022 .009 287.4 11.0

0822 13:00 6z56qk785zzx6 19,588 .022 .009 289.6 11.4

0822 14:00 6z56qk785zzx6 37,498 .029 .010 309.7 12.4

0822 15:00 6z56qk785zzx6 53,665 .049 .015 506.3 11.0

0822 16:00 6z56qk785zzx6 50,252 .036 .012 415.2 10.3

0822 17:00 6z56qk785zzx6 39,604 .029 .011 328.8 11.3

......

0829 09:00 6z56qk785zzx6 63,928 .022 .009 308.4 9.5

0829 10:00 6z56qk785zzx6 85,561 .067 .011 379.9 8.9 <<<<<< 问题时段

0829 11:00 6z56qk785zzx6 86,509 .032 .012 411.1 9.0

0829 12:00 6z56qk785zzx6 48,313 .022 .011 395.5 8.5

0829 13:00 6z56qk785zzx6 32,448 .019 .010 343.1 8.2

0829 14:00 6z56qk785zzx6 52,486 .023 .010 328.3 8.9

0829 15:00 6z56qk785zzx6 66,912 .023 .010 317.2 9.1

0829 16:00 6z56qk785zzx6 65,810 .022 .010 315.8 8.3

0829 17:00 6z56qk785zzx6 54,785 .029 .012 350.9 10.2

......

0830 09:00 6z56qk785zzx6 62,333 .023 .010 321.5 10.0

0830 10:00 6z56qk785zzx6 69,686 .022 .010 321.7 8.9 <<<<<<同期

5)8g1s5t0qy1aar

BTIME SQL_ID PLAN_HASH_VALUE EXECS AVG_ELAS AVG_CPUS AVG_LIOS AVG_PIOS

---------- ------------- ---------------

0822 15:00 8g1s5t0qy1aar 93964273 9,432 .001 .000 6.4 .0

0822 16:00 8g1s5t0qy1aar 11,441 .001 .000 6.5 .0

0823 14:00 8g1s5t0qy1aar 6,672 .001 .000 6.4 .0

0823 16:00 8g1s5t0qy1aar 10,253 .001 .000 6.3 .0

0823 17:00 8g1s5t0qy1aar 11,436 .001 .000 6.5 .0

0824 16:00 8g1s5t0qy1aar 20,612 .001 .000 6.4 .0

0825 14:00 8g1s5t0qy1aar 5,759 .000 .000 6.3 .0

0826 15:00 8g1s5t0qy1aar 20,414 .000 .000 6.4 .0

0826 17:00 8g1s5t0qy1aar 20,118 .001 .000 6.6 .0

0826 21:00 8g1s5t0qy1aar 1,189 .000 .000 7.0 .0

0829 10:00 8g1s5t0qy1aar 58,793 .054 .000 5.4 .0 <<<<<< 问题时

分析结论:

与同期8/22, 8/30 对比,问题时段相关TOP SQL 的执行频率明显高于同期。

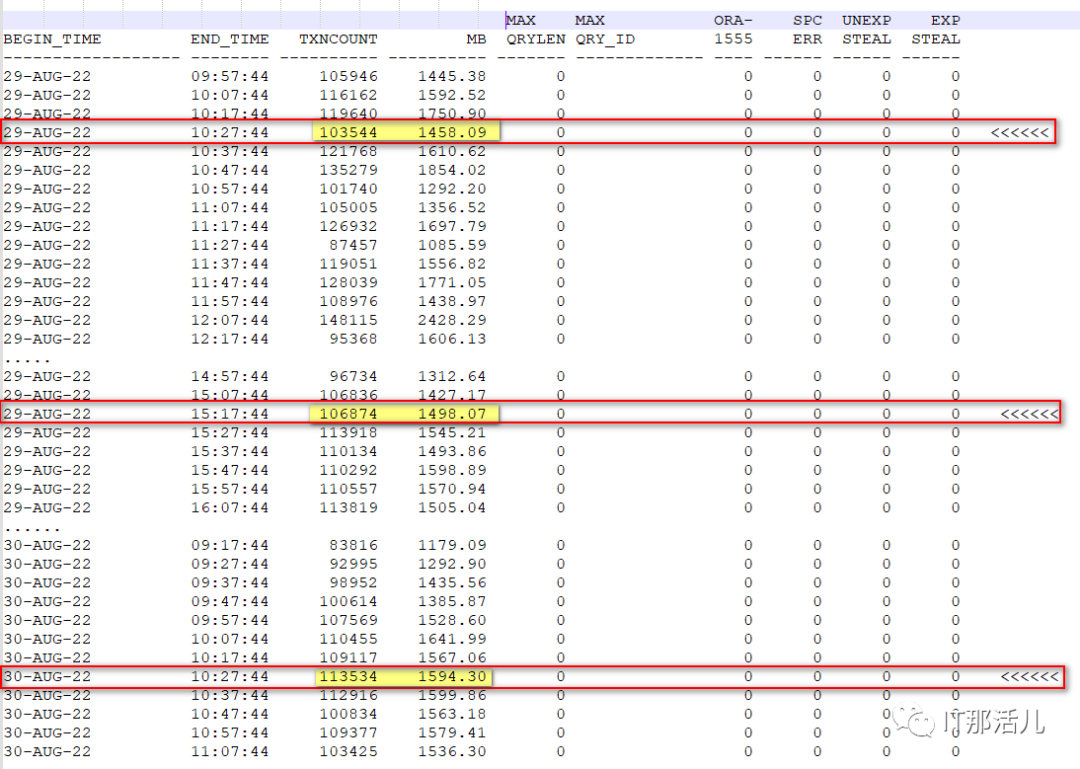

3. UNDO使用情况

1)UNDO历史使用情况

分析结论:

与当天下午业务高峰及同期的对比,问题时段事务数及使用的UNDO 大小无明显差异,且使用的 UNDO 不大。

2)UNDO当前使用情况

select r.owner,r.tablespace_name,r.status,count(r.segment_name),round(sum(s.bytes)/1024/1024,2) as "sum_mb"

from dba_rollback_segs r,dba_segments s

where r.segment_name=s.segment_name

group by r.owner,r.tablespace_name,r.status

order by 1,2,3,4

/

OWNER TABLESPACE_NAME STATUS COUNT(R.SEGMENT_NAME) sum_mb

-------- --------------------

PUBLIC UNDOTBS1 OFFLINE 293 10860.13

PUBLIC UNDOTBS1 ONLINE 89 15029.18

PUBLIC UNDOTBS2 OFFLINE 738 12042.68

PUBLIC UNDOTBS2 ONLINE 409 22695.06

SYS SYSTEM ONLINE 1 .43

select c.name container,

u.tablespace_name,

u.status,

round(sum(u.bytes 1024 / 1024),2) total_mb

from cdb_undo_extents u, v$containers c

where u.con_id = c.con_id

group by c.con_id, c.name, u.tablespace_name, u.status

order by 1, 2, 3;

CONTAINER TABLESPACE_NAME STATUS TOTAL_MB

------------------------------

tdb UNDOTBS1 ACTIVE 58.13

tdb UNDOTBS1 EXPIRED 8692.88

tdb UNDOTBS1 UNEXPIRED 16994.31

tdb UNDOTBS2 ACTIVE 27

tdb UNDOTBS2 EXPIRED 12453.19

tdb UNDOTBS2 UNEXPIRED 22327.56

SQL> select max(maxconcurrency) from v$undostat;

MAX(MAXCONCURRENCY)

-------------------

408

TABLESPACE_NAME SUM_SPACE(M) SUM_BLOCKS USED_SPACE(M) USED_RATE(%) FREE_SPACE(M)

-------------------- --------------------

SYSAUX 28672M 3670016 12255.81M 42.74% 16416.19M

SYSTEM 38912M 4980736 2263.62M 5.82% 36648.38M

TBS_DATA 14376960M 1840250880 13970216.75M 97.17% 406743.25M

TBS_INDEX 13486080M 1726218240 13230519.19M 98.11% 255560.81M

TBS_OGG 10240M 1310720 2.94M .03% 10237.06M

UNDOTBS1 217087.98M 27787262 25498.35M 11.75% 191589.63M <<<<<<<

UNDOTBS2 247800M 31718400 34798.75M 14.04% 213001.25M <<<<<<

USERS 1024M 131072 1M .1% 1023M

分析结论:

当前UNDO 表空间使用率不高,剩余可用空间充裕。同时ONLINE 状态的UNDO segment 409个。

3)UNDO当前参数

Parameter Instance Value Desc

----------------------------

_rollback_segment_count 0 number of undo segments

_undo_autotune FALSE enable auto tuning of undo_retention

分析结论:

当前数据库参数_rollback_segment_count 为默认值0,该参数用于控制保持online undo segments 个数。

以下是 MOS 相关内部文档对参数设置的部分说明,供参考:

1)提供更好的并发性能:系统高并发下,保证 undo 段数较多(_rollback_segment_count),提供更好的并发性能。

2)规避一些因为并发事务问题导致的 ORA-1554。

副作用:

在 DB 启动时,由于有更多的 undo 段要 online,导致数据库启动时间较长。

SGA 使用量会由于大量 online undo segment 而增加。

未使用的 undo 段仍然会一直保持 online 状态,导致 undo$表上会有这些额外(不在使用)的 undo 段记录,undo$表的扫描效率(内部几个地方使用)变慢等情况。

4. ASH分析

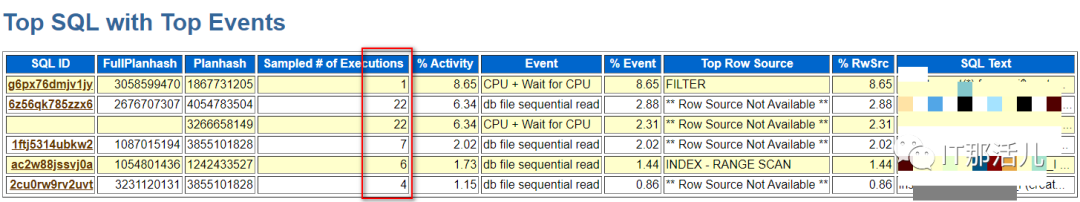

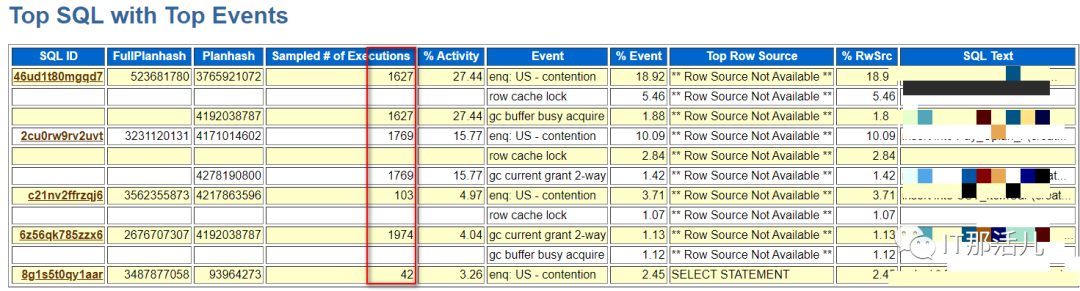

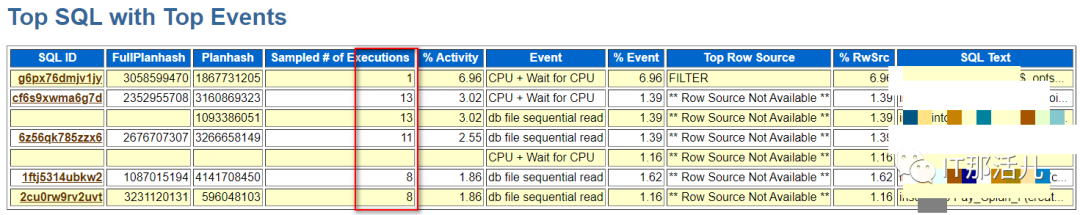

分别采集问题时段(8/29)、同期 8/22、同期 8/30 10:25 ~ 10:30 的 ASH 进行对比分析:

同期8/22:

问题时段:

同期 8/30:

分析结论:

与同期8/22,8/30 相比,问题时段的SQL 执行频率显著高于同期,同期SQL执行频次最高22次,问题时段执行频次达到了1769次,需要调查这是否是业务的正常行为。

通过ASH DUMP ,我们能够获取主要的来源应用服务器:

SQL_ID MACHINE PROGRAM COUNT(*)

------------- ------------------------------

6z56qk785zzx6 t-app-139-4 JDBC Thin Client 29

6z56qk785zzx6 t-app-139-14 JDBC Thin Client 27

8g1s5t0qy1aar sh-bpc-inepay-prd-ap-139-49 JDBC Thin Client 27

8g1s5t0qy1aar t-bpcintfpay-app-139-16 JDBC Thin Client 31

8g1s5t0qy1aar t-bpcintfpay-app-139-15 JDBC Thin Client 33

8g1s5t0qy1aar t-bpcintfpay-app-139-24 JDBC Thin Client 37

8g1s5t0qy1aar t-bpcintfpay-app-139-19 JDBC Thin Client 40

c21nv2ffrzqj6 t-app-139-13 JDBC Thin Client 6

c21nv2ffrzqj6 t-app-139-3 JDBC Thin Client 11

c21nv2ffrzqj6 t-app-139-6 JDBC Thin Client 13

c21nv2ffrzqj6 t-app-139-9 JDBC Thin Client 13

c21nv2ffrzqj6 t-app-139-14 JDBC Thin Client 17

c21nv2ffrzqj6 t-account-app-138-11 JDBC Thin Client 18

c21nv2ffrzqj6 t-app-139-8 JDBC Thin Client 18

c21nv2ffrzqj6 t-account-app-138-9 JDBC Thin Client 20

c21nv2ffrzqj6 t-app-139-37 JDBC Thin Client 20

c21nv2ffrzqj6 t-app-139-38 JDBC Thin Client 20

c21nv2ffrzqj6 nfs-account-app-138-10 JDBC Thin Client 23

c21nv2ffrzqj6 t-app-139-11 JDBC Thin Client 27

c21nv2ffrzqj6 t-app-139-4 JDBC Thin Client 27

c21nv2ffrzqj6 t-app-139-5 JDBC Thin Client 30

c21nv2ffrzqj6 t-app-139-39 JDBC Thin Client 32

c21nv2ffrzqj6 t-account-app-138-8 JDBC Thin Client 33

c21nv2ffrzqj6 t-app-139-12 JDBC Thin Client 43

c21nv2ffrzqj6 t-app-139-7 JDBC Thin Client 44

c21nv2ffrzqj6 t-app-139-10 JDBC Thin Client 51

46ud1t80mgqd7 t-account-app-138-14 JDBC Thin Client 21

46ud1t80mgqd7 t-bpcintfpay-app-139-18 JDBC Thin Client 23

46ud1t80mgqd7 t-account-app-138-9 JDBC Thin Client 28

46ud1t80mgqd7 t-app-139-10 JDBC Thin Client 31

46ud1t80mgqd7 t-account-app-138-12 JDBC Thin Client 33

46ud1t80mgqd7 t-app-139-14 JDBC Thin Client 35

46ud1t80mgqd7 t-account-app-138-16 JDBC Thin Client 37

46ud1t80mgqd7 t-app-139-3 JDBC Thin Client 37

46ud1t80mgqd7 sh-bpc-inepay-prd-ap-139-49 JDBC Thin Client 45

46ud1t80mgqd7 t-app-139-6 JDBC Thin Client 47

46ud1t80mgqd7 t-app-139-5 JDBC Thin Client 51

46ud1t80mgqd7 t-app-139-37 JDBC Thin Client 55

46ud1t80mgqd7 t-bpcintfpay-app-139-15 JDBC Thin Client 56

46ud1t80mgqd7 t-app-139-2 JDBC Thin Client 57

46ud1t80mgqd7 t-bpcintfpay-app-139-17 JDBC Thin Client 57

46ud1t80mgqd7 t-bpcintfpay-app-139-22 JDBC Thin Client 59

46ud1t80mgqd7 t-app-139-4 JDBC Thin Client 60

46ud1t80mgqd7 t-app-139-39 JDBC Thin Client 62

46ud1t80mgqd7 t-bpcintfpay-app-139-24 JDBC Thin Client 62

46ud1t80mgqd7 t-app-139-7 JDBC Thin Client 63

46ud1t80mgqd7 sh-bpc-inepay-prd-ap-139-48 JDBC Thin Client 63

46ud1t80mgqd7 t-app-139-8 JDBC Thin Client 65

46ud1t80mgqd7 t-app-139-9 JDBC Thin Client 66

46ud1t80mgqd7 sh-bpc-inepay-prd-ap-139-45 JDBC Thin Client 66

46ud1t80mgqd7 t-bpcintfpay-app-139-23 JDBC Thin Client 68

46ud1t80mgqd7 nfs-account-app-138-10 JDBC Thin Client 69

46ud1t80mgqd7 t-app-139-38 JDBC Thin Client 72

46ud1t80mgqd7 t-account-app-138-8 JDBC Thin Client 78

46ud1t80mgqd7 sh-bpc-inepay-prd-ap-139-46 JDBC Thin Client 80

46ud1t80mgqd7 t-account-app-138-11 JDBC Thin Client 81

46ud1t80mgqd7 t-app-139-11 JDBC Thin Client 96

46ud1t80mgqd7 sh-bpc-inepay-prd-ap-139-47 JDBC Thin Client 99

46ud1t80mgqd7 t-account-app-138-15 JDBC Thin Client 100

46ud1t80mgqd7 t-bpcintfpay-app-139-20 JDBC Thin Client 103

46ud1t80mgqd7 t-bpcintfpay-app-139-16 JDBC Thin Client 104

46ud1t80mgqd7 t-app-139-21 JDBC Thin Client 108

46ud1t80mgqd7 t-app-139-13 JDBC Thin Client 111

46ud1t80mgqd7 t-account-app-138-13 JDBC Thin Client 115

46ud1t80mgqd7 t-app-139-12 JDBC Thin Client 116

2cu0rw9rv2uvt t-app-139-11 JDBC Thin Client 9

2cu0rw9rv2uvt t-app-139-5 JDBC Thin Client 29

2cu0rw9rv2uvt t-app-139-38 JDBC Thin Client 35

2cu0rw9rv2uvt t-app-139-39 JDBC Thin Client 36

2cu0rw9rv2uvt t-app-139-4 JDBC Thin Client 54

2cu0rw9rv2uvt t-app-139-9 JDBC Thin Client 59

2cu0rw9rv2uvt t-app-139-14 JDBC Thin Client 60

2cu0rw9rv2uvt t-app-139-2 JDBC Thin Client 63

2cu0rw9rv2uvt t-account-app-138-11 JDBC Thin Client 64

2cu0rw9rv2uvt t-app-139-6 JDBC Thin Client 66

2cu0rw9rv2uvt t-app-139-10 JDBC Thin Client 71

2cu0rw9rv2uvt nfs-account-app-138-10 JDBC Thin Client 76

2cu0rw9rv2uvt t-account-app-138-8 JDBC Thin Client 82

2cu0rw9rv2uvt t-account-app-138-9 JDBC Thin Client 83

2cu0rw9rv2uvt t-app-139-13 JDBC Thin Client 84

2cu0rw9rv2uvt t-app-139-8 JDBC Thin Client 100

2cu0rw9rv2uvt t-app-139-37 JDBC Thin Client 114

2cu0rw9rv2uvt t-app-139-3 JDBC Thin Client 117

2cu0rw9rv2uvt t-app-139-7 JDBC Thin Client 123

2cu0rw9rv2uvt t-app-139-12 JDBC Thin Client 131

分析结论:

建议协调业务调查,SQL执行频率显著增加是否是业务的正常行为。

故障分析总结:

1) 问题发生时段为主要等待事件为enq: US – contention 和 row cache lock,两者都是在等待undo segment,且这两个等待事件对应的TOP SQL相同

2) 与同期8/22, 8/30 对比,问题时段相关TOP SQL 的执行频率明显高于同期

3) 与当天下午业务高峰及同期的8/30 对比,问题时段事务数及使用的UNDO 大小无明显差异,且使用的 UNDO 不大。

4) 当前UNDO 表空间使用率14%,剩余可用空间充裕。同时ONLINE 状态的UNDO segment 409个。

5) 当前数据库参数_rollback_segment_count 为默认值0,该参数用于控制保持online状态的undo segments 个数。

6) 问题时段负载、redo 生成量等指标均明显高于同期8/22, 略高于同期8/30

7) 与同期8/22,8/30 相比,问题时段的SQL 执行频率显著高于同期,同期SQL执行频次最高22次,问题时段执行频次达到了 1769次,需要调查这是否是业务的正常行为。

综上:

命令参考:

SQL > alter system set “_rollback_segment_count”=2000 scope=both sid=’*’;

回退步骤:

SQL> alter system reset "_rollback_segment_count";

然后重启数据库。

测试环境验证无问题后,再应用到生产环境,避开业务高峰实施。

2. 协调业务调查问题时段,SQL 执行频率显著高于同期,是否是正常的业务行为

本文作者:白炀斌(上海新炬中北团队)

本文来源:“IT那活儿”公众号