openGauss HA Cluster Architecture Design on Kubernetes

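Compared with a single-node database, an HA architecture provides stronger protection against downtime at the data layer. Use a high-availability architecture when you need a higher SLA level and a lower RTO.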

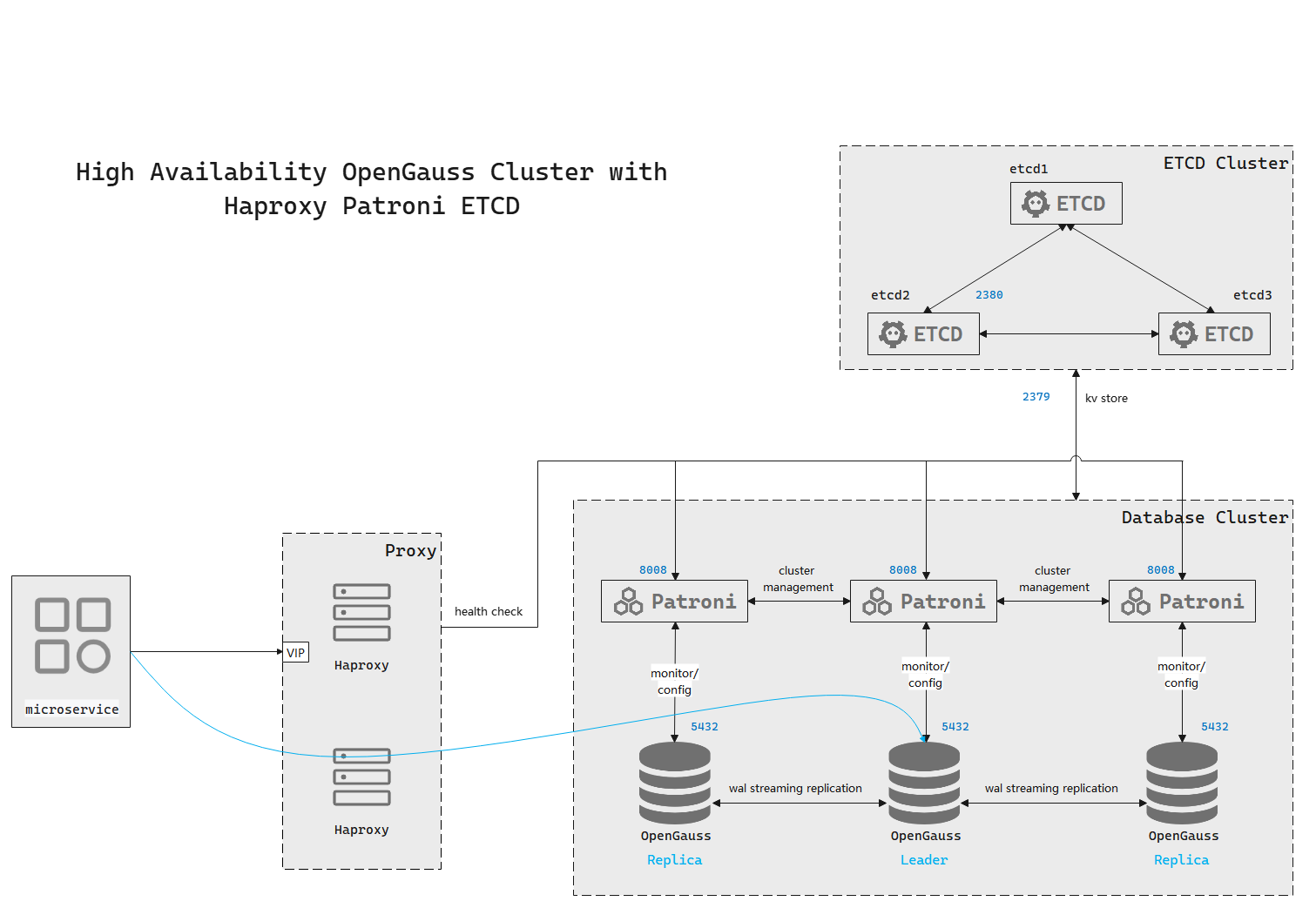

This article describes an architecture based on Patroni + HAProxy + etcd that provides high availability (HA) for openGauss deployments on Kubernetes.

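How high availability works

Streaming replication

Streaming replication is a replication method in which a standby connects to the primary and continuously receives a stream of WAL records. Compared with log-shipping replication, streaming replication keeps the standby more closely in sync with the primary. openGauss provides built-in streaming replication.

By default, streaming replication is asynchronous: the primary does not wait for confirmation from a standby before acknowledging a transaction commit to the client. If the primary fails after acknowledging a transaction but before a standby has received it, asynchronous replication can lose data. To improve data safety, use synchronous streaming replication.

Synchronous streaming replication

Streaming replication can be made synchronous by designating one or more standbys as synchronous standbys. In synchronous mode, the primary does not acknowledge a transaction commit until a standby has confirmed it. Synchronous streaming replication provides stronger data consistency, at the cost of higher transaction latency.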

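openGauss uses the synchronous_commit parameter to set the synchronization mode of the current transaction:

- on: the standby's synchronized log is flushed to disk.
- off: asynchronous commit.
- local: local commit.
- remote_write: the standby's synchronized log must be written to disk.
- remote_receive: the standby must have received the synchronized log data.
- true: the standby's synchronized log is flushed to disk (same as on).
- false: asynchronous commit (same as off).
- yes: the standby's synchronized log is flushed to disk (same as on).
- no: asynchronous commit (same as off).
- 1: the standby's synchronized log is flushed to disk (same as on).
- 0: asynchronous commit (same as off).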
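Because several of the accepted values are aliases of on and off, it can help to normalize a setting before reasoning about it. The following is a minimal Python sketch based only on the list above; the function names `normalize_synchronous_commit` and `waits_for_standby` are hypothetical helpers for illustration and are not part of openGauss.

```python
# Meanings of the canonical modes, taken from the list above.
CANONICAL_MODES = {
    "on": "standby log flushed to disk",
    "off": "asynchronous commit",
    "local": "local commit",
    "remote_write": "standby log written to disk",
    "remote_receive": "standby has received the log data",
}

# Alias spellings that the list above describes with the same meaning as on/off.
ALIASES = {
    "true": "on", "yes": "on", "1": "on",
    "false": "off", "no": "off", "0": "off",
}


def normalize_synchronous_commit(value):
    """Map any accepted spelling to its canonical mode name."""
    key = str(value).strip().lower()
    key = ALIASES.get(key, key)
    if key not in CANONICAL_MODES:
        raise ValueError(f"unknown synchronous_commit value: {value!r}")
    return key


def waits_for_standby(value):
    """True when the commit depends on the standby (hypothetical helper)."""
    return normalize_synchronous_commit(value) in {"on", "remote_write", "remote_receive"}


if __name__ == "__main__":
    for raw in ["on", "YES", 0, "remote_write", "local"]:
        mode = normalize_synchronous_commit(raw)
        print(raw, "->", mode, "|", CANONICAL_MODES[mode])
```

Modes for which `waits_for_standby` returns True are the ones where commit latency depends on the standby, which is the trade-off described for synchronous streaming replication.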

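openGauss HA architecture

Our openGauss HA setup consists of the following:

- One primary and two standbys, with data replicated from the primary to the standbys by asynchronous streaming replication.
- Patroni monitors the state of the local openGauss instance and writes that instance's information and state to etcd, which stores the cluster state.
- Patroni together with etcd implements failover for the database cluster. When the primary fails, Patroni promotes one standby to be the new primary. When a failed node recovers, it rejoins the cluster automatically.
- HAProxy provides a single access point for the database and hides the internals of the database cluster from business modules, so the database stays available to clients and a switch between nodes is transparent to applications. HAProxy uses the REST API provided by Patroni to detect the primary/standby state of each openGauss instance, which ensures that requests are routed only to the primary.
- On Kubernetes, setting replicas creates multiple HAProxy replicas, which makes HAProxy itself highly available.
- etcd is used to share information between Patroni nodes. Patroni's leader election is controlled by whether a node can acquire the leader key in etcd: the Patroni node that holds the leader key is the primary, and the others are standbys.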
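The leader-key mechanism in the last item can be illustrated with a small simulation. This is a simplified sketch, not Patroni's or etcd's actual code: `LeaderKeyStore` is a hypothetical in-memory stand-in for the etcd key, and the expiry time (`ttl`) is an assumed parameter used to show why a standby can take over only after the old primary stops renewing the key.

```python
class LeaderKeyStore:
    """In-memory stand-in for the etcd leader key (hypothetical, not the etcd API)."""

    def __init__(self, ttl):
        self.ttl = ttl            # seconds the key stays valid without renewal
        self.holder = None        # name of the member holding the key
        self.expires_at = 0.0

    def acquire_or_renew(self, member, now):
        """Return True if `member` holds the leader key after this call."""
        expired = self.holder is None or now >= self.expires_at
        if expired or self.holder == member:
            self.holder = member
            self.expires_at = now + self.ttl
            return True
        return False


def run_cycle(store, healthy_members, now):
    """One round: every healthy member tries to take or renew the key."""
    return {
        member: "primary" if store.acquire_or_renew(member, now) else "standby"
        for member in healthy_members
    }


if __name__ == "__main__":
    store = LeaderKeyStore(ttl=30)
    print(run_cycle(store, ["db1", "db2", "db3"], now=0))   # db1 takes the key
    print(run_cycle(store, ["db2", "db3"], now=10))         # db1 is down, key not yet expired
    print(run_cycle(store, ["db2", "db3"], now=35))         # key expired, db2 takes over
    print(run_cycle(store, ["db1", "db2", "db3"], now=40))  # db1 is back, rejoins as standby
```

In the sketch only the holder of an unexpired key can renew it, so at most one member is primary at any time, and a recovered node comes back as a standby.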

The architecture diagram is as follows:

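Building openGauss HA on Kubernetes

Building openGauss HA on Kubernetes requires deploying the openGauss Services, the openGauss Deployments, and HAProxy.

A Kubernetes cluster normally comes with its own etcd cluster, so etcd is not deployed separately here.

openGauss Service

In Kubernetes, a Service is a way to expose a network application running on one Pod or a set of Pods as a network service. We define one Service for each openGauss instance so that the instance can be reached through that Service.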

apiVersion: v1
kind: Service
metadata:
  namespace: platform
  name: dbsrv1
spec:
  ports:
    - port: 5432
      protocol: TCP
      targetPort: 5432
      name: gsql
  selector:
    app: db1
  clusterIP: None
---
apiVersion: v1
kind: Service
metadata:
  namespace: platform
  name: dbsrv2
spec:
  ports:
    - port: 5432
      protocol: TCP
      targetPort: 5432
      name: gsql
  selector:
    app: db2
  clusterIP: None
---
apiVersion: v1
kind: Service
metadata:
  namespace: platform
  name: dbsrv3
spec:
  ports:
    - port: 5432
      protocol: TCP
      targetPort: 5432
      name: gsql
  selector:
    app: db3
  clusterIP: None

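openGauss Deployment

The following is the deployment YAML for one openGauss instance; the other instances are similar.

The Deployment uses pod anti-affinity to ensure that two openGauss instances are never scheduled onto the same host. It also mounts the data directory of the openGauss instance onto a directory on the host.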

apiVersion: apps/v1
kind: Deployment
metadata:
  name: db1-deployment
  namespace: platform
spec:
  selector:
    matchLabels:
      app: db1
  replicas: 1
  template:
    metadata:
      labels:
        app: db1
        version: 3.0.0
        dev: gauss
    spec:
      affinity: # anti-affinity ensures that two openGauss instances are never scheduled onto the same host
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: app
                    operator: In
                    values:
                      - db2
                      - db3
              topologyKey: "kubernetes.io/hostname"
      restartPolicy: Always
      containers:
        - name: opengauss
          image: patroni-opengauss:3.0.3
          imagePullPolicy: Always
          securityContext:
            runAsUser: 0
          volumeMounts:
            - mountPath: /var/lib/opengauss/data/
              name: openguass-volume
          ports:
            - containerPort: 5432
              name: opengauss
            - containerPort: 8008
              name: patroni
          env:
            - name: HOST_NAME
              value: db1
            - name: HOST_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: PEER_IPS
              value: dbsrv2.platform,dbsrv3.platform
            - name: PEER_HOST_NAMES
              value: db2,db3
            - name: PORT
              value: "5432"
            - name: GS_PASSWORD
              value: "yourpassword"
            - name: SERVER_MODE
              value: primary
            - name: db_config
              value: ""
      volumes: # mount the data directory of the openGauss instance onto a host directory
        - name: openguass-volume
          hostPath:
            path: /data/opengauss/
            type: DirectoryOrCreate

HAProxy Deployment

apiVersion: v1
kind: Service
metadata:
  namespace: platform
  name: haproxy-service
spec:
  type: NodePort
  ports:
    - port: 6000
      protocol: TCP
      targetPort: 6000
      name: gsql
    - port: 7000
      protocol: TCP
      targetPort: 7000
      name: haproxy-dashboard
  selector:
    app: haproxy
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: haproxy-deployment
  namespace: platform
spec:
  selector:
    matchLabels:
      app: haproxy
  replicas: 2
  template:
    metadata:
      labels:
        app: haproxy
        version: 2.7.3-ping
    spec:
      containers:
        - name: haproxy
          image: wirepo.td-tech.com/cnp/haproxy:2.7.3-ping
          ports:
            - containerPort: 7000
              name: haproxy
            - containerPort: 6000
              name: gauss
          volumeMounts:
            - name: config
              mountPath: "/usr/local/etc/haproxy"
              readOnly: true
      nodeSelector:
        db-proxy: "true"
      volumes:
        - name: config
          configMap:
            name: haproxy-config

HAProxy configuration file

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: platform
  name: haproxy-config
data:
  # file-like key
  haproxy.cfg: |
    global
      maxconn 100
      log 127.0.0.1 local0
    defaults
      log global
      mode tcp
      retries 2
      timeout client 30m
      timeout connect 4s
      timeout server 30m
      timeout check 5s
    listen stats
      mode http
      bind *:7000
      stats enable
      stats uri /
    listen opengauss
      bind *:6000
      option httpchk
      http-check expect status 200
      default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions
      server dbsrv1 dbsrv1.platform.svc.cluster.local:5432 maxconn 100 check port 8008
      server dbsrv2 dbsrv2.platform.svc.cluster.local:5432 maxconn 100 check port 8008
      server dbsrv3 dbsrv3.platform.svc.cluster.local:5432 maxconn 100 check port 8008
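The health-check settings in the listen opengauss section (`http-check expect status 200`, `fall 3`, `rise 2`) can be read as a small state machine per backend: a server is marked down after three consecutive failed checks and marked up again after two consecutive successful ones. The Python sketch below illustrates that reading only. `ServerHealth` is a hypothetical class, not HAProxy code, and it assumes that the Patroni endpoint on port 8008 answers 200 only on the current primary.

```python
class ServerHealth:
    """Simplified model of `fall`/`rise` counting for one backend (illustrative only)."""

    def __init__(self, fall=3, rise=2, up=False):
        self.fall = fall      # consecutive failures needed to mark the server DOWN
        self.rise = rise      # consecutive successes needed to mark the server UP
        self.up = up
        self._streak = 0      # consecutive checks that contradict the current state

    def record(self, status_code):
        """Feed one check result; return True if the server is UP afterwards."""
        passed = status_code == 200   # mirrors `http-check expect status 200`
        if passed == self.up:
            self._streak = 0          # result agrees with current state: reset the count
        else:
            self._streak += 1
            threshold = self.rise if passed else self.fall
            if self._streak >= threshold:
                self.up = passed
                self._streak = 0
        return self.up


if __name__ == "__main__":
    primary = ServerHealth()
    # Two passing checks bring the server up, three failing checks take it down.
    for code in [200, 200, 503, 503, 503]:
        print(code, "->", "UP" if primary.record(code) else "DOWN")
```

With `inter 3s`, this model suggests that a failed primary is removed from rotation roughly three check intervals after it stops answering 200, and a newly promoted primary starts receiving traffic after two passing checks.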

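Main configuration

Streaming replication with one primary and two standbys

On Kubernetes this configuration is done inside the pod, usually by a script in the image's entrypoint.sh. Only the main commands used by that script are recorded here.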

# Add the peer addresses to pg_hba.conf; host names are used here
host all all dbsrv1.platform.svc.cluster.local trust
host all all dbsrv2.platform.svc.cluster.local trust
host all all dbsrv3.platform.svc.cluster.local trust

# Set the following parameters in postgresql.conf

# Listen address of the local instance
gs_guc set -D $PGDATA -c "listen_addresses = '192.168.2.162'"

# Streaming replication connection info; configure one entry per standby
gs_guc set -D $PGDATA -c "replconninfo1 = 'localhost=192.168.2.162 localport=5433 localheartbeatport=5434 localservice=5436 remotehost=192.168.1.186 remoteport=5433 remoteheartbeatport=5434 remoteservice=5436'"

# Primary/standby synchronization mode
gs_guc set -D $PGDATA -c "synchronous_commit=on"

# List of potential synchronous standby names, separated by commas. The value * matches any standby name that provides synchronous replication.
gs_guc set -D $PGDATA -c "synchronous_standby_names = '*'"

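HAProxy DNS resolution configuration

In the HAProxy configuration we proxy the database through the openGauss Services. However, HAProxy normally resolves a domain name only once, at startup, and keeps the IP in memory. This is a problem on Kubernetes: when an openGauss pod fails, a new pod is started and its IP changes, so HAProxy can no longer proxy to it correctly. Dynamic DNS resolution therefore needs to be configured with a resolvers section such as the one below. For the runtime resolution to apply, each server line must also reference this section with the resolvers kube-dns parameter.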

resolvers kube-dns
  nameserver kube-dns kube-dns.kube-system.svc.cluster.local:53
  parse-resolv-conf
  resolve_retries 3
  timeout resolve 1s
  timeout retry 1s
  hold other 30s
  hold refused 30s
  hold nx 30s
  hold timeout 30s
  hold valid 10s
  hold obsolete 30s

Basic maintenance

Checking openGauss instance status

Use kubectl to check the status and scheduling of the openGauss pods:

[root@master2 ~]# kubectl get po -n platform -l dev=gauss -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

db1-deployment-67d97bbdf7-vz29h 1/1 Running 0 2d 192.168.1.29 10.148.151.130 <none> <none>

db2-deployment-6bfb5467b8-h4pxw 1/1 Running 1 (23h ago) 26h 192.168.0.154 10.148.151.140 <none> <none>

db3-deployment-d479ff947-9bfng 1/1 Running 0 25h 192.168.2.140 10.148.151.139 <none> <none>

The cluster state can also be queried through the HTTP interface provided by Patroni:

# curl -i dbsrv1.platform.svc.cluster.local:8008/cluster

HTTP/1.0 200 OK

Server: BaseHTTP/0.6 Python/3.7.16

Date: Wed, 19 Jul 2023 07:25:14 GMT

Content-Type: application/json

{

"members":[

{

"name":"db1",

"role":"leader",

"state":"running",

"api_url":"http://192.168.1.29:8008/patroni",

"host":"192.168.1.29",

"port":5432,

"timeline":1,

"pending_restart":true

},

{

"name":"db2",

"role":"replica",

"state":"running",

"api_url":"http://192.168.0.154:8008/patroni",

"host":"192.168.0.154",

"port":5432,

"timeline":"1",

"pending_restart":true,

"lag":0

},

{

"name":"db3",

"role":"replica",

"state":"running",

"api_url":"http://192.168.2.140:8008/patroni",

"host":"192.168.2.140",

"port":5432,

"timeline":"1",

"pending_restart":true,

"lag":0

}

]

}
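For scripted monitoring, the JSON returned by the /cluster endpoint can be summarized with a few lines of Python. The sketch below relies only on the fields visible in the output above (`name`, `role`, `state`, `timeline`, `lag`); `summarize_cluster` is a hypothetical helper, and `SAMPLE` is a trimmed copy of the response shown above rather than live data.

```python
import json

# Trimmed copy of the /cluster response shown above (not live data).
SAMPLE = """
{"members": [
  {"name": "db1", "role": "leader",  "state": "running", "timeline": 1},
  {"name": "db2", "role": "replica", "state": "running", "timeline": "1", "lag": 0},
  {"name": "db3", "role": "replica", "state": "running", "timeline": "1", "lag": 0}
]}
"""


def summarize_cluster(payload):
    """Return the leader, replica lag, members not running, and timelines seen."""
    members = json.loads(payload)["members"]
    leaders = [m["name"] for m in members if m["role"] == "leader"]
    return {
        # Exactly one leader is expected; anything else is reported as None.
        "leader": leaders[0] if len(leaders) == 1 else None,
        "replica_lag": {m["name"]: m.get("lag") for m in members if m["role"] == "replica"},
        "not_running": [m["name"] for m in members if m["state"] != "running"],
        # The output above mixes 1 and "1" for timeline, so normalize to int.
        "timelines": sorted({int(m["timeline"]) for m in members if "timeline" in m}),
    }


if __name__ == "__main__":
    print(summarize_cluster(SAMPLE))
```

A healthy cluster in this sketch has a non-empty `leader`, an empty `not_running` list, and a single value in `timelines`.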

Attach to a pod and check the cluster state with patronictl:

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml list

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Leader | running | 1 | | * |

| db2 | 192.168.0.154 | Replica | running | 1 | 0 | * |

| db3 | 192.168.2.140 | Replica | running | 1 | 0 | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

Checking HAProxy proxy status

The dashboard provided by HAProxy shows the proxy status. The figure below shows that traffic is routed to dbsrv1.

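HA testing

Database instance failure test

| Test type | Test method | Test command | Test result |
|---|---|---|---|
| - | Stop the primary instance | - | A standby is automatically promoted to primary; HAProxy proxying works normally; the database service is normal; the failed node rejoins the cluster after it recovers |
| - | Stop a standby instance | - | The primary is unaffected; HAProxy proxying works normally; the database service is normal; the failed node rejoins the cluster after it recovers |

Operating system crash test

| Test type | Test method | Test command | Test result |
|---|---|---|---|
| - | Reboot the Kubernetes host that runs the primary | reboot | Failover is triggered to one of the standby nodes, and the other standby node synchronizes from the new primary |
| - | Reboot a Kubernetes host that runs a standby | reboot | HAProxy proxying works normally; the database service is normal; the failed node rejoins the cluster after it recovers |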

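Switchover and failover

- The failover endpoint allows a manual failover to be performed when there are no healthy nodes, but it does not allow a switchover to be scheduled.
- The switchover endpoint is the opposite: it works only when the cluster is healthy (there is a leader), and it allows the switch to be scheduled for a specified time.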
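The difference between the two endpoints shows up in the request bodies they take. The Python sketch below only builds the requests and does not send them. The field names (`leader`, `candidate`, `scheduled_at`) follow the upstream Patroni REST API documentation and are assumed, not verified, to apply to the Patroni build used with openGauss here; `build_switchover` and `build_failover` are hypothetical helpers.

```python
import json


def build_switchover(leader, candidate=None, scheduled_at=None):
    """Build a switchover request; a current leader is required (healthy cluster)."""
    if not leader:
        raise ValueError("switchover requires the current leader")
    body = {"leader": leader}
    if candidate:
        body["candidate"] = candidate
    if scheduled_at:
        body["scheduled_at"] = scheduled_at   # timestamp string for a planned switch
    return "/switchover", json.dumps(body)


def build_failover(candidate):
    """Build a failover request; it names the target and cannot be scheduled."""
    if not candidate:
        raise ValueError("failover requires a candidate")
    return "/failover", json.dumps({"candidate": candidate})


if __name__ == "__main__":
    print(build_switchover("db1", candidate="db2"))
    print(build_failover("db3"))
```

The resulting path and body would be sent as an HTTP POST to a member's Patroni port (8008 in this setup). The patronictl commands used in the following sections are the interactive equivalent.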

Switchover procedure

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml list

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Leader | running | 1 | | * |

| db2 | 192.168.0.154 | Replica | running | 1 | 0 | * |

| db3 | 192.168.2.140 | Replica | running | 1 | 0 | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml switchover

Master [db1]:

Candidate ['db2', 'db3'] []:

When should the switchover take place (e.g. 2023-07-19T17:32 ) [now]:

Current cluster topology

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Leader | running | 1 | | * |

| db2 | 192.168.0.154 | Replica | running | 1 | 0 | * |

| db3 | 192.168.2.140 | Replica | running | 1 | 0 | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

Are you sure you want to switchover cluster opengauss, demoting current master db1? [y/N]: y

2023-07-19 16:32:40.69923 Successfully switched over to "db2"

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Replica | stopped | | unknown | * |

| db2 | 192.168.0.154 | Leader | running | 1 | | * |

| db3 | 192.168.2.140 | Replica | running | 1 | 0 | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml list

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Replica | running | 1 | 0 | * |

| db2 | 192.168.0.154 | Leader | running | 1 | | * |

| db3 | 192.168.2.140 | Replica | running | 1 | 0 | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

Failover procedure

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml list

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Replica | running | 1 | 0 | * |

| db2 | 192.168.0.154 | Leader | running | 1 | | * |

| db3 | 192.168.2.140 | Replica | running | 1 | 0 | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml failover

Candidate ['db1', 'db3'] []: db3

Current cluster topology

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Replica | running | 1 | 0 | * |

| db2 | 192.168.0.154 | Leader | running | 1 | | * |

| db3 | 192.168.2.140 | Replica | running | 1 | 0 | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

Are you sure you want to failover cluster opengauss, demoting current master db2? [y/N]: y

2023-07-19 16:34:19.26151 Successfully failed over to "db3"

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Replica | running | 1 | 0 | * |

| db2 | 192.168.0.154 | Replica | stopped | | unknown | * |

| db3 | 192.168.2.140 | Leader | running | 1 | | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml list

+--------+---------------+---------+----------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) ---+----+-----------+-----------------+

| db1 | 192.168.1.29 | Replica | running | 1 | 0 | * |

| db2 | 192.168.0.154 | Replica | starting | | unknown | * |

| db3 | 192.168.2.140 | Leader | running | 1 | | * |

+--------+---------------+---------+----------+----+-----------+-----------------+

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml list

+--------+---------------+---------+----------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) ---+----+-----------+-----------------+

| db1 | 192.168.1.29 | Replica | running | 1 | 0 | * |

| db2 | 192.168.0.154 | Replica | starting | | unknown | * |

| db3 | 192.168.2.140 | Leader | running | 1 | | * |

+--------+---------------+---------+----------+----+-----------+-----------------+

root@db1-deployment-67d97bbdf7-vz29h:/# patronictl -c /home/omm/patroni.yaml list

+--------+---------------+---------+---------+----+-----------+-----------------+

| Member | Host | Role | State | TL | Lag in MB | Pending restart |

+ Cluster: opengauss (5626236013549685205) --+----+-----------+-----------------+

| db1 | 192.168.1.29 | Replica | running | 1 | 0 | * |

| db2 | 192.168.0.154 | Replica | running | 1 | 0 | * |

| db3 | 192.168.2.140 | Leader | running | 1 | | * |

+--------+---------------+---------+---------+----+-----------+-----------------+

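Summary

Kubernetes has high-availability characteristics that help keep applications continuously available. Using Patroni to manage the openGauss cluster provides automatic failover and data replication, which ensures high availability of the database.

In addition, building a database cluster on Kubernetes has the following advantages:

- Automated deployment and management: Kubernetes is a container orchestration platform that provides automated mechanisms for deploying and managing applications. With Kubernetes, the components of this architecture, including Patroni, HAProxy, etcd, and openGauss, can be deployed and managed easily.
- Elastic scaling: Kubernetes can automatically scale applications and database instances up or down on demand. When load increases, new Pod instances can be added automatically to increase capacity. When load decreases, Pod instances can be removed automatically to reduce resource consumption.
- Self-healing and health checks: Kubernetes can heal itself. When a component fails, Kubernetes can automatically restart the affected Pod instances to keep the application available. Kubernetes also provides health-check mechanisms that monitor the state of applications and databases and act when an anomaly is detected.
- Flexibility and portability: Kubernetes is an open-source platform that supports multi-cloud and hybrid-cloud environments, so a Kubernetes-based architecture can be migrated and extended without major changes. Kubernetes also offers a rich ecosystem of plugins and tools for customizing and extending the architecture.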