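Preface: This is my first time working with GBase 8c. The official website says it is Oracle-compatible, so I want to run a validation test of migrating from Oracle to GBase 8c. Before testing, I deployed a primary/standby cluster. Corrections from readers who know GBase 8c well are very welcome.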

1. Installation Requirements

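All servers in the primary/standby environment must have the same architecture:

- 64-bit and 32-bit servers cannot be mixed in one cluster

- ARM and x86 servers cannot be mixed in one cluster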

1.1 Hardware Requirements

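Every server in the GBase 8c primary/standby environment must meet at least the following hardware requirements. In production, size the hardware according to business needs.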

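| Item | Requirement | Notes |
|---|---|---|
| Number of servers | 2 | |
| Memory | >= 32 GB | For performance testing and commercial deployment, at least 128 GB per server is recommended |
| CPU | >= 1 × 8 cores, 2.0 GHz | For performance testing and commercial deployment, at least 1 × 16 cores, 2.0 GHz per instance is recommended. Both hyper-threading and non-hyper-threading modes are supported; use the same mode on all nodes. In virtual machines, the CPU instruction set must include rdtscp |
| Disk | > 1 GB for the GBase 8c application; > 300 MB for metadata; > 70% of storage space for data | RAID1 recommended for the system disk; RAID5 recommended for data disks (plan 4 RAID5 data-disk groups for the openGauss installation); setting Disk Cache Policy to Disabled is recommended |
| Network | >= 300 Mbit/s Ethernet | Dual-NIC redundant bonding recommended |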

1.2 Operating System Requirements

1.2.1 Software Environment Requirements

| Software | Requirement | Notes |
|---|---|---|
| Operating system | x86: RHEL/CentOS 7.6 or later | RHEL/CentOS 7.6 to 7.9 |
| inode count | More than 1.5 billion free inodes | |
| Tools | bzip2 | |
| Python | Python 3.6.X | Python must be compiled with `--enable-shared` |

1.2.2 Software Dependency Requirements

| Package | Version | Notes |
|---|---|---|
| libaio-devel | Recommended: 0.3.109-13 | |
| flex | Required: 2.5.31 or later | |
| bison | Recommended: 2.7-4 | |
| ncurses-devel | Recommended: 5.9-13.20130511 | |
| glibc-devel | Recommended: 2.17-111 | |
| patch | Recommended: 2.7.1-10 | |
| lsb_release | Recommended: 4.1 | |
| bzip2 | Recommended: 1.0.6 | |
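The table above states a hard minimum only for flex (2.5.31). If you prefer to check an installed version against such a minimum rather than compare by eye, a small helper is enough. This is a sketch that assumes GNU `sort -V` (version sort, part of coreutils on RHEL/CentOS 7); the function name `version_ge` is hypothetical.

```shell
# version_ge A B: succeed if version A >= version B (hypothetical helper).
# GNU sort -V compares dotted versions numerically per component,
# so 2.5.4 sorts before 2.5.31 (a plain string sort would get this wrong).
version_ge() {
    # The smaller of the two sorts first; A >= B exactly when B comes out first.
    [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

For example, `version_ge "$(rpm -q --queryformat '%{VERSION}' flex)" 2.5.31 && echo "flex OK"` compares the installed flex package with the required version; `rpm -q` fails if flex is not installed, in which case the comparison is meaningless.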

2. Cluster Planning

2.1 Hostname Planning

| Hostname | Description |
|---|---|
| node-db2 | Primary node server name |
| node-db3 | Standby node server name |

2.2 IP Address Planning

| Node roles | IP address | Description |
|---|---|---|
| gha_server/dcs/datanode | 10.110.3.153 | Primary node IP address |
| dcs/datanode | 10.110.3.154 | Standby node IP address |

2.3 Port Planning

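| Port | Parameter | Description |
|---|---|---|
| 20001 | port (gha_server) | gha_server port |
| 2379 | port (dcs) | DCS port |
| 15432 / 15433 | port (datanode) | Database port of dn1_1 (primary) / dn1_2 (standby) |
| 8005 / 8006 | agent_port | High-availability agent port of dn1_1 / dn1_2 |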
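Before installing, it may be worth confirming on each node that none of the planned ports already has a listener. The sketch below parses `ss -ltn` output; it assumes the default column layout (State, Recv-Q, Send-Q, Local Address:Port, ...), and the helper name `ports_free` is hypothetical.

```shell
# ports_free PORT...: read `ss -ltn` output on stdin, print each given TCP port
# that already has a listener, and succeed only if all of them are free
# (hypothetical helper).
ports_free() {
    local listening p busy=0
    # Skip the header line, take column 4 (local address) and keep what follows
    # the last ':', which handles "*:22", "0.0.0.0:22" and "[::]:22" alike.
    listening=$(awk 'NR > 1 { addr = $4; sub(/.*:/, "", addr); print addr }')
    for p in "$@"; do
        if printf '%s\n' "$listening" | grep -qx "$p"; then
            echo "in use: $p"
            busy=1
        fi
    done
    return "$busy"
}
```

Usage on each node: `ss -ltn | ports_free 20001 2379 15432 15433 8005 8006`. No output and exit status 0 means every planned port is free.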

2.4 User and Group Planning

| Item | Name | Type | Recommendation |
|---|---|---|---|
| User | gbase | OS user | Use the same password and UID on all cluster nodes |
| Group | gbase | OS group | Use the same GID on all cluster nodes |

2.5 Directory Planning

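| Directory | Parameter | Purpose |
|---|---|---|
| /opt/gbase/data/dn/dn_1 | work_dir | Data directory of the primary node |
| /opt/gbase/data/dn/dn_2 | work_dir | Data directory of the standby node |
| /home/gbase/gbase_db | prefix | Runtime (installation) directory |
| /home/gbase/gbase_package | pkg_path | Directory holding the database installation package |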

3. Environment Preparation

3.1 Install Dependency Packages

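```shell
# Run as root [all nodes]
[root@node-dbxxx ~]# yum install -y bison flex libaio-devel lsb_release patch ncurses-devel glibc-devel bzip2
# On RHEL/CentOS 7 the lsb_release command is packaged as redhat-lsb-core;
# install that package if yum cannot resolve lsb_release
# Check that the packages are installed
[root@node-dbxxx ~]# rpm -qa --queryformat "%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n" | grep -E "bison|flex|libaio-devel|redhat-lsb-core|patch|ncurses-devel|glibc-devel|bzip2"
```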

3.2 Disable the Firewall

```shell
# Run as root [all nodes]
# Stop the firewalld service
[root@node-dbxxx ~]# systemctl stop firewalld.service
# Disable firewalld at boot
[root@node-dbxxx ~]# systemctl disable firewalld.service
# Check the firewalld status
[root@node-dbxxx ~]# systemctl status firewalld
# Output like the following means the firewall is stopped and disabled
● firewalld.service - firewalld - dynamic firewall daemon
   Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled; vendor preset: enabled)
   Active: inactive (dead)
     Docs: man:firewalld(1)
```

3.3 Disable SELinux

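```shell
# Run as root [all nodes]
# Turn off enforcement for the current boot (switches SELinux to permissive mode)
[root@node-dbxxx ~]# setenforce 0
# Disable SELinux permanently (takes effect after a reboot);
# matching ^SELINUX=.* also covers a config that is currently set to permissive
[root@node-dbxxx ~]# sed -i "s/^SELINUX=.*/SELINUX=disabled/" /etc/selinux/config
# Check the SELinux status
[root@node-dbxxx ~]# getenforce
# Disabled means SELinux is off; before the reboot, getenforce reports Permissive instead
Disabled
```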

3.4 Set the Time Zone and Time

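A GBase 8c primary/standby system requires the primary and standby nodes to keep their clocks synchronized so that primary and standby data stay consistent. NTP is normally used to synchronize time across nodes.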

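```shell
# Run as root [all nodes]
# Synchronize clocks with NTP
# Install the NTP service
[root@node-dbxxx ~]# yum install -y ntp
# Start ntpd at boot
[root@node-dbxxx ~]# systemctl enable ntpd
# Start ntpd now
[root@node-dbxxx ~]# systemctl start ntpd
# Set the time zone to Asia/Shanghai
[root@node-dbxxx ~]# timedatectl set-timezone Asia/Shanghai
# Check the time zone
[root@node-dbxxx ~]# timedatectl | grep -i zone
# Enable NTP synchronization
[root@node-dbxxx ~]# timedatectl set-ntp yes
# Edit the crontab
[root@node-dbxxx ~]# crontab -e
# In vi/vim, add the following line, then save and exit.
# Use the full path: cron's default PATH may not include /usr/sbin.
# Note that ntpdate may refuse to run while ntpd holds the NTP socket.
0 12 * * * /usr/sbin/ntpdate cn.pool.ntp.org
# Check the time and time zone
[root@node-dbxxx ~]# timedatectl status
```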

3.5 Update the Hardware Clock

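The hardware clock is the clock device on the motherboard, usually the one you can set in the BIOS screen. At boot, the server initializes the system clock from the hardware clock.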

```shell
# Run as root [all nodes]
# Write the current system time to the hardware clock; /etc/adjtime is updated accordingly
[root@node-dbxxx ~]# hwclock --systohc
# Show the hardware clock
[root@node-dbxxx ~]# hwclock --show
```

3.6 Configure SSH

```shell
# Run as root [all nodes]
# Comment out the existing Banner settings
[root@node-dbxxx ~]# sed -i '/Banner/s/^/#/' /etc/ssh/sshd_config
# Comment out the existing PermitRootLogin settings so that the value appended below takes effect
[root@node-dbxxx ~]# sed -i '/PermitRootLogin/s/^/#/' /etc/ssh/sshd_config
[root@node-dbxxx ~]# echo -e "\n" >> /etc/ssh/sshd_config
# Disable the login banner: the welcome message interferes with the output of
# remote commands during installation and can break the install
[root@node-dbxxx ~]# echo "Banner none" >> /etc/ssh/sshd_config
# Allow root login
[root@node-dbxxx ~]# echo "PermitRootLogin yes" >> /etc/ssh/sshd_config
[root@node-dbxxx ~]# cat /etc/ssh/sshd_config | grep -v ^# | grep -E 'PermitRootLogin|Banner'
# Restart sshd to apply the changes
[root@node-dbxxx ~]# systemctl restart sshd.service
# Check the sshd status
[root@node-dbxxx ~]# systemctl status sshd.service
```
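The sed/echo sequence above appends a new line every time it runs. Because sshd uses the first value it finds for each keyword, commenting out the old lines is what makes the appended value win, but repeated runs leave a trail of stale entries. Below is a minimal idempotent variant, a sketch assuming GNU sed (`-i`, `-E` and the `I` flag) and a config that does not end with a `Match` block (directives after a `Match` line apply only to that block, which is equally true of the original commands). The helper name `set_sshd_option` is hypothetical.

```shell
# set_sshd_option KEY VALUE [FILE]: comment out every active KEY line and append
# "KEY VALUE", so exactly one active setting remains (hypothetical helper).
set_sshd_option() {
    local key=$1 value=$2 file=${3:-/etc/ssh/sshd_config}
    # Only lines that start with the keyword (optionally indented) are commented;
    # lines that are already comments stay untouched. sshd_config keywords are
    # case-insensitive, hence the I flag (GNU sed).
    sed -i -E "s/^([[:space:]]*${key}[[:space:]=].*)$/#\1/I" "$file"
    printf '%s %s\n' "$key" "$value" >> "$file"
}
```

Usage: `set_sshd_option Banner none; set_sshd_option PermitRootLogin yes; sshd -t && systemctl restart sshd.service`. Running `sshd -t` first checks the configuration, so a typo does not take sshd down on restart.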

3.7 Add hosts Entries

```shell
# Run as root [all nodes]
[root@node-dbxxx ~]# cat >> /etc/hosts << EOF
10.110.3.153 node-db2
10.110.3.154 node-db3
EOF
```
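Appending with `cat >>` adds duplicate lines if this step is repeated, for example after a failed first attempt. A sketch of an idempotent alternative; `add_host_entry` is a hypothetical helper name.

```shell
# add_host_entry IP NAME [FILE]: append "IP NAME" to FILE (default /etc/hosts)
# unless a line already maps IP to NAME (hypothetical helper).
add_host_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    # awk exits 0 only if some line has IP in column 1 and NAME in a later column.
    if awk -v ip="$ip" -v name="$name" '
            $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
            END { exit !found }' "$file"; then
        echo "already present: $ip $name"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}
```

Usage as root on each node: `add_host_entry 10.110.3.153 node-db2; add_host_entry 10.110.3.154 node-db3`.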

3.8 Adjust Kernel Parameters

The pre-installation script does not set the following kernel parameter automatically, so it has to be adjusted manually.

| Parameter | Description | Recommended value |
|---|---|---|
| kernel.sem | Semaphore limits (SEMMSL SEMMNS SEMOPM SEMMNI) | 40960 2048000 40960 20480 |

```shell
# Run as root [all nodes]
[root@node-dbxxx ~]# tee -a /etc/sysctl.conf << EOF
kernel.sem = 40960 2048000 40960 20480
EOF
# Run sysctl -p to apply the change
[root@node-dbxxx ~]# sysctl -p
# Check the new kernel.sem value
[root@node-dbxxx ~]# sysctl kernel.sem
```
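To confirm that `sysctl -p` actually applied the values, you can compare the live `kernel.sem` with the recommendation field by field; the four fields are, in order, SEMMSL, SEMMNS, SEMOPM and SEMMNI. This is a sketch: `sem_meets` is a hypothetical helper, and treating the recommended values as minimums is an assumption rather than something the GBase documentation states.

```shell
# sem_meets "SEMMSL SEMMNS SEMOPM SEMMNI" [CURRENT]: succeed if every field of
# the current kernel.sem is at least the recommended value (hypothetical helper).
# CURRENT defaults to the live value from `sysctl -n kernel.sem` (tab-separated).
sem_meets() {
    local -a want have
    local i
    read -r -a want <<<"$1"
    read -r -a have <<<"${2:-$(sysctl -n kernel.sem)}"
    for i in 0 1 2 3; do
        if (( ${have[i]:-0} < ${want[i]} )); then
            echo "field $((i + 1)) too low: ${have[i]:-missing} < ${want[i]}"
            return 1
        fi
    done
}
```

Usage: `sem_meets "40960 2048000 40960 20480" && echo "kernel.sem OK"`.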

3.9 Create the User and Configure sudoers

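```shell
# Run as root [all nodes]
[root@node-dbxxx ~]# groupadd gbase -g 1005
[root@node-dbxxx ~]# useradd gbase -u 1005 -g gbase
# Set the gbase password (replace "passwd" with your own password)
[root@node-dbxxx ~]# echo "passwd" | passwd --stdin gbase
# Configure sudoers
# 1) Open /etc/sudoers with visudo
[root@node-dbxxx ~]# visudo
# 2) Find the following line (or a similar one):
## Allow root to run any commands anywhere
# 3) Move the cursor to that line and press o (lowercase letter o, not zero) to open a new line below it
# 4) Type the following on the new line:
gbase ALL=(ALL) NOPASSWD:ALL
# 5) Press Esc to leave insert mode, then type :wq! or ZZ (uppercase) to save and exit
```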

3.10 Create Directories

```shell
# Run as gbase [primary node]
[gbase@node-db2 ~]$ mkdir -p /home/gbase/gbase_package
# Run as root [all nodes]
[root@node-dbxxx ~]# mkdir -p /opt/gbase
[root@node-dbxxx ~]# chown gbase:gbase /opt/gbase
```

3.11 Configure SSH Mutual Trust

```shell
# Run as gbase [primary node]
# 1) Create /home/gbase/sshtrust.sh. Quoting 'EOF' stops the shell from expanding
#    $username, $(date ...), "${servers[@]}" etc. while the file is being written.
#    The script needs sshpass to be installed.
[gbase@node-db2 ~]$ tee /home/gbase/sshtrust.sh << 'EOF'
#!/bin/bash
# User name and password (the password must be the gbase user's OS password)
username="gbase"
password="gbase"
# Server IP list
servers=(
"10.110.3.153"
"10.110.3.154"
)
# Script log file
log_file="$(dirname "$0")/script.log"
echo "" > "$log_file"
# Generate a local SSH key pair
if [ ! -f "/home/$username/.ssh/id_rsa" ]; then
    ssh-keygen -q -t rsa -N "" -f "/home/$username/.ssh/id_rsa"
fi
# Copy the local public key to each target server
for server in "${servers[@]}"
do
    # Set up trust
    sshpass -p "$password" ssh-copy-id -o StrictHostKeyChecking=no "$username@$server"
    # Check whether trust was set up, and log the result
    if [ "$?" -eq "0" ]
    then
        echo "$(date +'%Y-%m-%d %H:%M:%S') - trust OK - $server" >> "$log_file"
        # Copy the contents of the local .ssh directory to the target server
        sshpass -p "$password" scp -r /home/"$username"/.ssh/* "$username@$server":/home/"$username"/.ssh
    else
        echo "$(date +'%Y-%m-%d %H:%M:%S') - trust FAILED - $server" >> "$log_file"
    fi
done
EOF
# 2) Make /home/gbase/sshtrust.sh executable
[gbase@node-db2 ~]$ chmod +x /home/gbase/sshtrust.sh
# 3) Run /home/gbase/sshtrust.sh
[gbase@node-db2 ~]$ ./sshtrust.sh
/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/gbase/.ssh/id_rsa.pub"
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Number of key(s) added: 1

Now try logging into the machine, with: "ssh -o 'StrictHostKeyChecking=no' 'gbase@10.110.3.153'"
and check to make sure that only the key(s) you wanted were added.

/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/home/gbase/.ssh/id_rsa.pub"
/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

Number of key(s) added: 1

Now try logging into the machine, with: "ssh -o 'StrictHostKeyChecking=no' 'gbase@10.110.3.154'"
and check to make sure that only the key(s) you wanted were added.
```
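The ssh-copy-id output only proves the keys were copied. To confirm that passwordless login really works in every direction, you can run a check like the following as gbase on each node. It is a sketch: `check_trust` is a hypothetical helper, and `SSH_CMD` is a hypothetical override used only to swap in a stub for testing.

```shell
# check_trust HOST...: confirm passwordless SSH as gbase to every host
# (hypothetical helper). BatchMode=yes makes ssh fail instead of prompting for a
# password, so a missing key shows up as an error rather than a hang.
check_trust() {
    local ssh_cmd=${SSH_CMD:-ssh} server failed=0
    for server in "$@"; do
        # Run the no-op command `true` on the remote side.
        if $ssh_cmd -o BatchMode=yes -o ConnectTimeout=5 "gbase@$server" true; then
            echo "OK   $server"
        else
            echo "FAIL $server"
            failed=1
        fi
    done
    return "$failed"
}
```

Usage on node-db2 and node-db3: `check_trust 10.110.3.153 10.110.3.154`. Every line should start with OK.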

3.12 Download the Installation Package

3.12.1 Download the Package

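Log in with a registered account to the GBase download page at https://www.gbase.cn/download/gbase-8c?category=INSTALL_PACKAGE, download the package that matches your operating system, [GBase8cV5_S3.0.0_单机主备版_x86_64.tar.zip] (the single-node primary/standby edition), and upload it as the gbase user to /home/gbase/gbase_package on the server.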

3.12.2 Verify the Package

```shell
# Run as gbase [primary node]
[gbase@node-db2 ~]$ cd /home/gbase/gbase_package
[gbase@node-db2 gbase_package]$ unzip GBase8cV5_S3.0.0_单机主备版_x86_64.tar.zip
[gbase@node-db2 gbase_package]$ ll
total 525488
-rw-rw-r-- 1 gbase gbase 269042643 May 6 10:26 GBase8cV5_S3.0.0B69_centos7.8_x86_64.tar.gz
-rw-r--r-- 1 gbase gbase 269043107 Jul 24 11:33 GBase8cV5_S3.0.0_单机主备版_x86_64.tar.zip
-rw-rw-r-- 1 gbase gbase 133 May 6 10:24 md5.txt
# The unzipped GBase8cV5_S3.0.0_单机主备版_x86_64.tar.zip contains md5.txt, which holds the MD5 of
# GBase8cV5_S3.0.0B69_centos7.8_x86_64.tar.gz. Compute the MD5 of the package and compare the two
# values to make sure the download is not corrupted:
[gbase@node-db2 gbase_package]$ md5sum GBase8cV5_S3.0.0B69_centos7.8_x86_64.tar.gz
506fe679e7a6d851456d27fe39730f95 GBase8cV5_S3.0.0B69_centos7.8_x86_64.tar.gz
[gbase@node-db2 gbase_package]$ cat md5.txt
506fe679e7a6d851456d27fe39730f95 GBase8cV5_S3.0.0B69_centos7.8_x86_64.tar.gz
a8badbbd317ed021518d195ee00f8c40b0bc3724 git commit id
```

3.12.3 Extract the Package

```shell
# Run as gbase [primary node]
[gbase@node-db2 gbase_package]$ tar -zxvf GBase8cV5_S3.0.0B69_centos7.8_x86_64.tar.gz
GBase8cV5_S3.0.0B69_CentOS_x86_64_om.sha256
GBase8cV5_S3.0.0B69_CentOS_x86_64_om.tar.gz
GBase8cV5_S3.0.0B69_CentOS_x86_64_pgpool.tar.gz
GBase8cV5_S3.0.0B69_CentOS_x86_64.sha256
GBase8cV5_S3.0.0B69_CentOS_x86_64.tar.bz2
# Then extract GBase8cV5_S3.0.0B69_CentOS_x86_64_om.tar.gz
[gbase@node-db2 gbase_package]$ tar -zxvf GBase8cV5_S3.0.0B69_CentOS_x86_64_om.tar.gz
```
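The manual MD5 comparison in 3.12.2 can be scripted, and the same check could be reused for the `.sha256` files that come out of the tarball. This is a sketch under assumptions: `verify_checksum` is a hypothetical helper, and the layout of the `.sha256` files (a bare hash, or "hash name") as well as the pairing of `*_x86_64.sha256` with `*_x86_64.tar.bz2` are inferred from the file names, not confirmed by GBase.

```shell
# verify_checksum ALGO PACKAGE LISTFILE: compare PACKAGE's checksum with the value
# recorded in LISTFILE (hypothetical helper). ALGO is md5 or sha256, so md5sum or
# sha256sum is used. LISTFILE may contain "HASH NAME" lines, like md5.txt,
# or just a bare hash on its first line.
verify_checksum() {
    local algo=$1 pkg=$2 list=$3 name expected actual
    name=$(basename "$pkg")
    # Prefer a line whose second field is the file name ("*name" = binary mode).
    expected=$(awk -v f="$name" '$2 == f || $2 == "*" f { print $1; exit }' "$list")
    # Otherwise fall back to a file that holds nothing but the hash.
    if [ -z "$expected" ]; then
        expected=$(awk 'NR == 1 && NF == 1 { print $1 }' "$list")
    fi
    if [ -z "$expected" ]; then
        echo "no checksum for $name in $list" >&2
        return 2
    fi
    actual=$("${algo}sum" "$pkg" | awk '{ print $1 }')
    if [ "$actual" = "$expected" ]; then
        echo "OK  $name"
    else
        echo "BAD $name (expected $expected, got $actual)" >&2
        return 1
    fi
}
```

Usage in /home/gbase/gbase_package: `verify_checksum md5 GBase8cV5_S3.0.0B69_centos7.8_x86_64.tar.gz md5.txt`, and, if the assumed layout holds, `verify_checksum sha256 GBase8cV5_S3.0.0B69_CentOS_x86_64.tar.bz2 GBase8cV5_S3.0.0B69_CentOS_x86_64.sha256`.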

3.13 Edit the yml File

Edit the cluster configuration file gbase.yml.

```shell
# Run as gbase [primary node]
# To deploy a primary/standby cluster, set cluster_type to single-inst
[gbase@node-db2 gbase_package]$ cp -p gbase.yml /home/gbase/
[gbase@node-db2 gbase_package]$ cd ~
[gbase@node-db2 ~]$ vim gbase.yml
```

The edited gbase.yml:

```yaml
gha_server:
  - gha_server1:
      host: 10.110.3.153
      port: 20001
dcs:
  - host: 10.110.3.153
    port: 2379
  - host: 10.110.3.154
    port: 2379
datanode:
  - dn1:
      - dn1_1:
          host: 10.110.3.153
          agent_host: 10.110.3.153
          role: primary
          port: 15432
          agent_port: 8005
          work_dir: /opt/gbase/data/dn/dn_1
      - dn1_2:
          host: 10.110.3.154
          agent_host: 10.110.3.154
          role: standby
          port: 15433
          agent_port: 8006
          work_dir: /opt/gbase/data/dn/dn_2
env:
  # cluster_type allowed values: multiple-nodes, single-inst, default is multiple-nodes
  cluster_type: single-inst
  pkg_path: /home/gbase/gbase_package
  prefix: /home/gbase/gbase_db
  version: V5_S3.0.0B69
  user: gbase
  port: 22
  third_ssh: false
# constant:
#   virtual_ip: 100.0.1.254/24
```

4. Installation

4.1 Install the Database

```shell
# Run as gbase [primary node]
[gbase@node-db2 ~]$ cd /home/gbase/gbase_package/script
[gbase@node-db2 script]$ ./gha_ctl install -p /home/gbase/ -c gbase
{ "ret":0, "msg":"Success" }
# Output like the above means the installation succeeded
# While the installation runs, you can follow the logs under /home/gbase/gbase_db/log/om on each node
[root@node-db2 om]# pwd
/home/gbase/gbase_db/log/om
[root@node-db2 om]# ls -l
total 24
-rw------- 1 gbase gbase 1739 Jul 25 09:35 gs_install-2023-07-25_093427.log
-rw------- 1 gbase gbase 19707 Jul 25 09:36 gs_local-2023-07-25_093429.log
```
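When the install (or a later start, stop or uninstall) is scripted, the JSON reply is what tells success from failure, as in the `"ret":80000107` error in appendix 5.1. A sketch that checks the reply instead of relying on gha_ctl's exit code, which this walkthrough does not document; `gha_check` is a hypothetical helper and it assumes the `"ret":N` format shown above.

```shell
# gha_check CMD [ARGS...]: run a gha_ctl command, echo its output, and succeed
# only if the JSON reply contains "ret":0 (hypothetical helper).
gha_check() {
    local out ret
    out=$("$@") || true
    printf '%s\n' "$out"
    # Extract the first "ret" value, e.g. 0 or 80000107.
    ret=$(printf '%s\n' "$out" | sed -n 's/.*"ret":[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1)
    [ "$ret" = 0 ]
}
```

Usage: `gha_check ./gha_ctl install -p /home/gbase/ -c gbase || echo "install failed, see /home/gbase/gbase_db/log/om"`.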

4.2 Verify the Installation

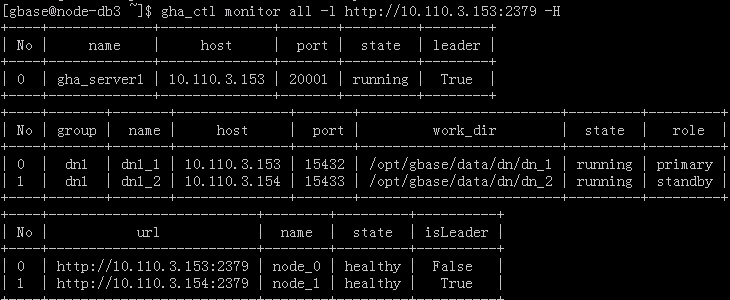

4.2.1 Check the Cluster Status

```shell
# Run as gbase [any node]
[gbase@node-dbxxx ~]$ gha_ctl monitor all -l http://10.110.3.153:2379 -H
+----+-------------+--------------+-------+---------+--------+
| No | name        | host         | port  | state   | leader |
+----+-------------+--------------+-------+---------+--------+
| 0  | gha_server1 | 10.110.3.153 | 20001 | running | True   |
+----+-------------+--------------+-------+---------+--------+
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| No | group | name  | host         | port  | work_dir                | state   | role    |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| 0  | dn1   | dn1_1 | 10.110.3.153 | 15432 | /opt/gbase/data/dn/dn_1 | running | primary |
| 1  | dn1   | dn1_2 | 10.110.3.154 | 15433 | /opt/gbase/data/dn/dn_2 | running | standby |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
+----+--------------------------+--------+---------+----------+
| No | url                      | name   | state   | isLeader |
+----+--------------------------+--------+---------+----------+
| 0  | http://10.110.3.153:2379 | node_0 | healthy | False    |
| 1  | http://10.110.3.154:2379 | node_1 | healthy | True     |
+----+--------------------------+--------+---------+----------+
```
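Reading these three tables by eye works for a one-off check; for monitoring scripts, the same verdict can be taken from the text. A sketch based purely on the table layout shown above (data rows start with `|`, header rows have `No` in the first column); `cluster_healthy` is a hypothetical helper.

```shell
# cluster_healthy: read `gha_ctl monitor all -H` output on stdin and succeed only
# if every data row shows "running" (gha_server, datanodes) or "healthy" (dcs).
# Header rows and +----+ borders are skipped (hypothetical helper).
cluster_healthy() {
    awk -F'|' '
        /^[|]/ && $2 !~ /No/ {
            rows++
            if ($0 !~ /[|] +(running|healthy) +[|]/) { bad++; print "not ready:" $0 }
        }
        END { exit (rows == 0 || bad > 0) }'
}
```

Usage: `gha_ctl monitor all -l http://10.110.3.153:2379 -H | cluster_healthy && echo "all components up"`. Empty output (for example when the DCS cannot be reached) also counts as a failure.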

4.2.2 Cluster Stop/Start Test

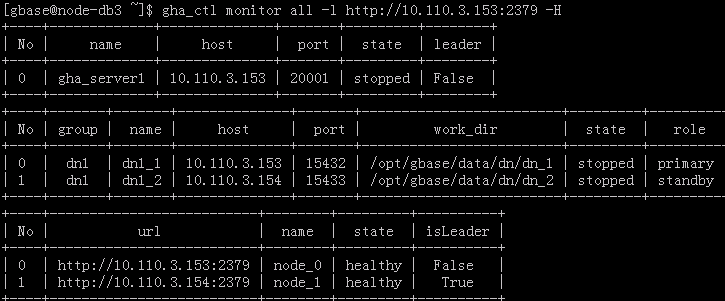

4.2.2.1 Stop the Cluster

```shell
# Run as gbase [any node]
[gbase@node-dbxxx ~]$ gha_ctl stop all -l http://10.110.3.153:2379
{ "ret":0, "msg":"Success" }
# Check the cluster status
[gbase@node-dbxxx ~]$ gha_ctl monitor all -l http://10.110.3.153:2379 -H
+----+-------------+--------------+-------+---------+--------+
| No | name        | host         | port  | state   | leader |
+----+-------------+--------------+-------+---------+--------+
| 0  | gha_server1 | 10.110.3.153 | 20001 | stopped | False  |
+----+-------------+--------------+-------+---------+--------+
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| No | group | name  | host         | port  | work_dir                | state   | role    |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| 0  | dn1   | dn1_1 | 10.110.3.153 | 15432 | /opt/gbase/data/dn/dn_1 | stopped | primary |
| 1  | dn1   | dn1_2 | 10.110.3.154 | 15433 | /opt/gbase/data/dn/dn_2 | stopped | standby |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
+----+--------------------------+--------+---------+----------+
| No | url                      | name   | state   | isLeader |
+----+--------------------------+--------+---------+----------+
| 0  | http://10.110.3.153:2379 | node_0 | healthy | False    |
| 1  | http://10.110.3.154:2379 | node_1 | healthy | True     |
+----+--------------------------+--------+---------+----------+
```

4.2.2.2 Start the Cluster

```shell
# Run as gbase [any node]
[gbase@node-db3 ~]$ gha_ctl start all -l http://10.110.3.153:2379
{ "ret":0, "msg":"Success" }
# Check the cluster status
[gbase@node-db3 ~]$ gha_ctl monitor all -l http://10.110.3.153:2379 -H
+----+-------------+--------------+-------+---------+--------+
| No | name        | host         | port  | state   | leader |
+----+-------------+--------------+-------+---------+--------+
| 0  | gha_server1 | 10.110.3.153 | 20001 | running | True   |
+----+-------------+--------------+-------+---------+--------+
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| No | group | name  | host         | port  | work_dir                | state   | role    |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| 0  | dn1   | dn1_1 | 10.110.3.153 | 15432 | /opt/gbase/data/dn/dn_1 | running | primary |
| 1  | dn1   | dn1_2 | 10.110.3.154 | 15433 | /opt/gbase/data/dn/dn_2 | running | standby |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
+----+--------------------------+--------+---------+----------+
| No | url                      | name   | state   | isLeader |
+----+--------------------------+--------+---------+----------+
| 0  | http://10.110.3.153:2379 | node_0 | healthy | False    |
| 1  | http://10.110.3.154:2379 | node_1 | healthy | True     |
+----+--------------------------+--------+---------+----------+
# During startup, the cluster state changes from stopped --> unstable --> running
```
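Since `gha_ctl start all` returns before the state has moved from stopped through unstable to running, a script that starts the cluster and then uses it should poll until the state settles. A sketch: `wait_for`, `cluster_settled` and the `DCS_URL` variable are hypothetical names, and the settled test (some component running, none stopped or unstable) is an assumption derived from the states shown above.

```shell
# wait_for ATTEMPTS INTERVAL CMD [ARGS...]: run CMD until it succeeds, at most
# ATTEMPTS times, sleeping INTERVAL seconds between tries (hypothetical helper).
wait_for() {
    local attempts=$1 interval=$2 i
    shift 2
    for ((i = 1; i <= attempts; i++)); do
        if "$@"; then
            return 0
        fi
        if ((i < attempts)); then
            sleep "$interval"
        fi
    done
    return 1
}

# Hypothetical settled check: the monitor output mentions "running"
# and shows no component as stopped or unstable.
DCS_URL=${DCS_URL:-http://10.110.3.153:2379}
cluster_settled() {
    local out
    out=$(gha_ctl monitor all -l "$DCS_URL" -H) || return 1
    grep -q 'running' <<<"$out" && ! grep -Eq '[|] +(stopped|unstable) +[|]' <<<"$out"
}
```

Usage: `gha_ctl start all -l "$DCS_URL" && wait_for 30 10 cluster_settled && echo "cluster is up"` waits up to about five minutes.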

4.2.3 Database Connection Test

```shell
# Run as gbase [any node]
[gbase@node-db2 ~]$ gsql -d postgres -p 15432
gsql ((single_node GBase8cV5 3.0.0B69 build a8badbbd) compiled at 2023-03-31 15:23:20 commit 0 last mr 1110 )
Non-SSL connection (SSL connection is recommended when requiring high-security)
Type "help" for help.

postgres=# \l
                          List of databases
   Name    | Owner | Encoding | Collate | Ctype | Access privileges
-----------+-------+----------+---------+-------+-------------------
 postgres  | gbase | UTF8     | C       | C     |
 template0 | gbase | UTF8     | C       | C     | =c/gbase         +
           |       |          |         |       | gbase=CTc/gbase
 template1 | gbase | UTF8     | C       | C     | =c/gbase         +
           |       |          |         |       | gbase=CTc/gbase
(3 rows)

postgres=# create database gbasedb;
CREATE DATABASE
postgres=# \q
[gbase@node-db2 ~]$ gsql -d postgres -p 15433
gsql ((single_node GBase8cV5 3.0.0B69 build a8badbbd) compiled at 2023-03-31 15:23:20 commit 0 last mr 1110 )
Non-SSL connection (SSL connection is recommended when requiring high-security)
Type "help" for help.

postgres=# \l
                          List of databases
   Name    | Owner | Encoding | Collate | Ctype | Access privileges
-----------+-------+----------+---------+-------+-------------------
 gbasedb   | gbase | UTF8     | C       | C     |
 postgres  | gbase | UTF8     | C       | C     |
 template0 | gbase | UTF8     | C       | C     | =c/gbase         +
           |       |          |         |       | gbase=CTc/gbase
 template1 | gbase | UTF8     | C       | C     | =c/gbase         +
           |       |          |         |       | gbase=CTc/gbase
(4 rows)

postgres=# create database gbase8c;
CREATE DATABASE
```
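Seeing `gbasedb` in the second listing suggests the database created on port 15432 was replicated. To check which instance is the primary and which is the standby from the database itself, you can ask each instance whether it is in recovery. This sketch assumes that GBase 8c keeps the PostgreSQL/openGauss function `pg_is_in_recovery()` and that gsql accepts the psql-style `-t`, `-A` and `-c` options; `db_role` and the `GSQL` override are hypothetical names.

```shell
# db_role PORT: ask the local instance on PORT whether it is in recovery
# (hypothetical helper). A standby continuously replays the primary's log,
# so pg_is_in_recovery() returns t there and f on the primary.
# GSQL can be overridden, e.g. with a full path to gsql.
db_role() {
    local port=$1 gsql=${GSQL:-gsql} answer
    # -t: tuples only, -A: unaligned output, -c: run one command and exit
    answer=$($gsql -d postgres -p "$port" -t -A -c 'select pg_is_in_recovery();') || return 2
    answer=${answer//[[:space:]]/}
    case $answer in
        t) echo standby ;;
        f) echo primary ;;
        *) echo "unexpected answer: $answer" >&2; return 2 ;;
    esac
}
```

Usage: on node-db2, `db_role 15432` should print primary; on node-db3, `db_role 15433` should print standby, matching the role column of `gha_ctl monitor`.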

5. Appendix

5.1 "msg":"Resource:gbase already in use"

Problem:

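The first attempt to install the GBase primary/standby cluster failed. Every later attempt stopped with the following message and could not continue: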

```shell
[gbase@node-db2 script]$ ./gha_ctl install -p /home/gbase/ -c gbase
{ "ret":80000107, "msg":"Resource:gbase already in use" }
```

Even deleting /home/gbase/gbase_db and /tmp/gha_ctl on both the primary and standby nodes did not make the installation possible.

Solution:

As the gbase user, go to /home/gbase/gbase_package/script on the primary node and run the following command to uninstall the DCS tool:

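```shell
[gbase@node-db2 ~]$ cd /home/gbase/gbase_package/script/
[gbase@node-db2 script]$ ./gha_ctl uninstall -c gbase -l http://10.110.3.153:2379
{ "ret":0, "msg":"Success" }
# Output like the above means the uninstall succeeded
```

In my actual testing, once the DCS tool had been uninstalled completely, the installation could be run again.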

5.2 Starting an already healthy primary/standby cluster stops it first and then starts it again

After the primary/standby cluster was installed, I ran gha_ctl start all -l http://10.110.3.153:2379 to start the cluster and found that the cluster was first stopped and then started again.

```shell
[gbase@node-db2 ~]$ gha_ctl start all -l http://10.110.3.153:2379
{ "ret":0, "msg":"Success" }
# Meanwhile, monitoring the cluster from a new session shows the state changing to stopped first
[gbase@node-db2 ~]$ gha_ctl monitor all -l http://10.110.3.153:2379 -H
+----+-------------+--------------+-------+---------+--------+
| No | name        | host         | port  | state   | leader |
+----+-------------+--------------+-------+---------+--------+
| 0  | gha_server1 | 10.110.3.153 | 20001 | stopped | False  |
+----+-------------+--------------+-------+---------+--------+
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| No | group | name  | host         | port  | work_dir                | state   | role    |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| 0  | dn1   | dn1_1 | 10.110.3.153 | 15432 | /opt/gbase/data/dn/dn_1 | stopped | primary |
| 1  | dn1   | dn1_2 | 10.110.3.154 | 15433 | /opt/gbase/data/dn/dn_2 | stopped | standby |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
+----+--------------------------+--------+---------+----------+
| No | url                      | name   | state   | isLeader |
+----+--------------------------+--------+---------+----------+
| 0  | http://10.110.3.153:2379 | node_0 | healthy | False    |
| 1  | http://10.110.3.154:2379 | node_1 | healthy | True     |
+----+--------------------------+--------+---------+----------+
# After a while, the state shows running
[gbase@node-db2 ~]$ gha_ctl monitor all -l http://10.110.3.153:2379 -H
+----+-------------+--------------+-------+---------+--------+
| No | name        | host         | port  | state   | leader |
+----+-------------+--------------+-------+---------+--------+
| 0  | gha_server1 | 10.110.3.153 | 20001 | running | True   |
+----+-------------+--------------+-------+---------+--------+
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| No | group | name  | host         | port  | work_dir                | state   | role    |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
| 0  | dn1   | dn1_1 | 10.110.3.153 | 15432 | /opt/gbase/data/dn/dn_1 | running | primary |
| 1  | dn1   | dn1_2 | 10.110.3.154 | 15433 | /opt/gbase/data/dn/dn_2 | running | standby |
+----+-------+-------+--------------+-------+-------------------------+---------+---------+
+----+--------------------------+--------+---------+----------+
| No | url                      | name   | state   | isLeader |
+----+--------------------------+--------+---------+----------+
| 0  | http://10.110.3.153:2379 | node_0 | healthy | False    |
| 1  | http://10.110.3.154:2379 | node_1 | healthy | True     |
+----+--------------------------+--------+---------+----------+
```

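I don't think this behavior is reasonable: starting a cluster that is already running normally should report that the cluster is already started instead of restarting it. I have reported this issue to GBase.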