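!!! This article is for personal testing only and is not a production deployment guide. (Continuously updated.)

Environment:

Three virtual machines were created with VirtualBox, each running CentOS 7.8.

The cluster control-plane node runs on the first node; the other two nodes are workers.

I. Operating system configuration

Run everything in this section on all three nodes, as root.

Official reference documentation: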

https://kubernetes.io/zh-cn/docs/setup/production-environment/container-runtimes/#containerd

1. Forward IPv4 and let iptables see bridged traffic

Run the following commands:

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

modprobe overlay

modprobe br_netfilter

2. Set the required sysctl parameters

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

Apply the sysctl parameters without rebooting:

sysctl --system

3. Verify that the br_netfilter and overlay modules are loaded

lsmod | grep br_netfilter

lsmod | grep overlay

Confirm that the net.bridge.bridge-nf-call-iptables, net.bridge.bridge-nf-call-ip6tables, and net.ipv4.ip_forward system variables are set to 1 in your sysctl configuration by running:

sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
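The check above reads the live kernel values. As a complementary sketch, the persisted file can be checked too, so the settings survive a reboot. The function name `check_k8s_sysctl_conf` is hypothetical; the file path in the usage comment is the one written in step 2.

```shell
# Hypothetical helper: succeed only if all three keys are set to 1
# in the given sysctl-style config file.
check_k8s_sysctl_conf() {
    local conf="$1" key missing=0
    for key in net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-ip6tables \
               net.ipv4.ip_forward; do
        # Split on "=", strip blanks, and compare key and value exactly.
        if ! awk -F= -v k="$key" '
                { name = $1; value = $2
                  gsub(/[ \t]/, "", name); gsub(/[ \t]/, "", value) }
                name == k && value == "1" { found = 1 }
                END { exit !found }' "$conf"; then
            echo "missing or not 1: $key"
            missing=1
        fi
    done
    return "$missing"
}

# Usage: check_k8s_sysctl_conf /etc/sysctl.d/k8s.conf
```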

4. Disable SELinux

Set SELinux to permissive mode (which effectively disables it):

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

5. Disable swap

Temporarily: swapoff -a

Permanently: sed -ri '/ swap / s/^(.*)$/#\1/' /etc/fstab

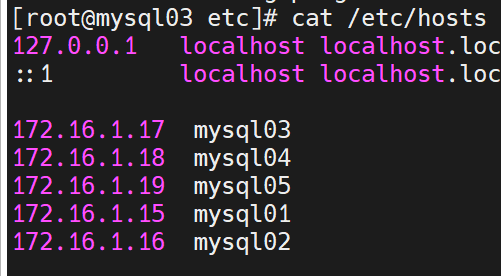
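The permanent step comments out every fstab line containing " swap ", so running it twice adds a second "#". A minimal sketch of a rerunnable variant follows; the function name `disable_swap_entries` is hypothetical, and it is worth trying on a copy of /etc/fstab first.

```shell
# Hypothetical helper: comment out active swap entries in an fstab-style file.
# Lines that are already commented are skipped, so reruns change nothing.
disable_swap_entries() {
    local fstab="$1" tmp
    tmp="$(mktemp)" || return 1
    sed -E '/^[[:space:]]*#/! { /[[:space:]]swap[[:space:]]/ s/^(.*)$/#\1/; }' \
        "$fstab" > "$tmp" && cat "$tmp" > "$fstab"
    rm -f "$tmp"
}

# Usage (on a copy first):
#   cp /etc/fstab /tmp/fstab.test && disable_swap_entries /tmp/fstab.test
```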

6. Configure local name resolution

Add each node's IP address and hostname to /etc/hosts.
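As an illustration of the hosts step, the sketch below appends an entry only when the hostname is not already listed. The function name `add_host_entry` is hypothetical. 172.16.1.17 and mysql03 are the master values used later in this guide; the worker address and name in the usage comment are placeholders.

```shell
# Hypothetical helper: append "IP hostname" to a hosts-style file unless
# an uncommented line already lists that hostname.
add_host_entry() {
    local hosts_file="$1" ip="$2" name="$3"
    if ! awk -v n="$name" '
            $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# Usage:
#   add_host_entry /etc/hosts 172.16.1.17 mysql03
#   add_host_entry /etc/hosts 172.16.1.18 worker01   # placeholder worker values
```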

II. Deploy the environment

1. Container runtime

Install from RPM packages:

wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum list | grep containerd

yum -y install containerd.io

Set SystemdCgroup

mkdir -p /etc/containerd/

containerd config default > /etc/containerd/config.toml

Open the following file, search for SystemdCgroup, and set it to true:

/etc/containerd/config.toml

Also change the sandbox_image address:

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"

Both changes can also be applied with sed:

sed -i 's#SystemdCgroup = false#SystemdCgroup = true#' /etc/containerd/config.toml

sed -i 's#sandbox_image = "registry.k8s.io/pause:3.6"#sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"#' /etc/containerd/config.toml

systemctl daemon-reload

systemctl start containerd

systemctl enable containerd
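The second sed command above only matches when the generated default is exactly registry.k8s.io/pause:3.6, and that default differs between containerd releases. A minimal sketch that rewrites whatever value is present and then verifies the result is shown below; the function name `patch_containerd_config` is hypothetical.

```shell
# Hypothetical helper: force SystemdCgroup = true and replace the
# sandbox_image value, regardless of the default the file shipped with.
patch_containerd_config() {
    local conf="$1" pause_image="$2" tmp
    tmp="$(mktemp)" || return 1
    sed -E \
        -e 's#^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*).*#\1true#' \
        -e "s#^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*#\1\"${pause_image}\"#" \
        "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rm -f "$tmp"
    # Verify both settings actually landed in the file.
    grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$conf" &&
        grep -Fq "\"${pause_image}\"" "$conf"
}

# Usage:
#   patch_containerd_config /etc/containerd/config.toml \
#       registry.aliyuncs.com/google_containers/pause:3.6
```

Restart containerd afterwards if it was already running, so the new settings take effect.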

4. Install kubelet and kubeadm

Reference:

https://kubernetes.io/zh-cn/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

The upstream package repository cannot be reached from this environment, so the Aliyun mirror is used instead.

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

yum install kubernetes-cni -y

sudo systemctl enable --now kubelet

Point crictl at the container runtime:

crictl config runtime-endpoint unix:///run/containerd/containerd.sock

crictl config image-endpoint unix:///run/containerd/containerd.sock

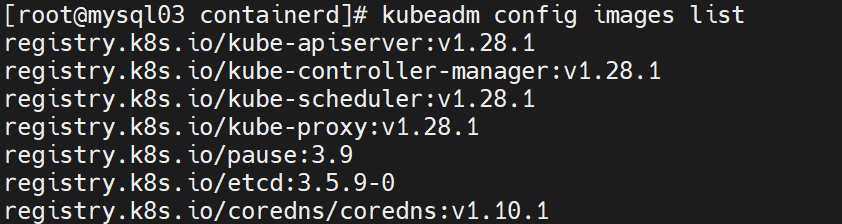
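The two `crictl config` commands persist their values in /etc/crictl.yaml. As a rough sketch of what ends up there, the hypothetical function below writes the same two keys to a path of your choice, which can be handy for preparing the file on several nodes.

```shell
# Hypothetical helper: write the two crictl endpoint keys to a config file.
write_crictl_config() {
    local path="$1" sock="$2"
    printf 'runtime-endpoint: %s\nimage-endpoint: %s\n' "$sock" "$sock" > "$path"
}

# Usage:
#   write_crictl_config /etc/crictl.yaml unix:///run/containerd/containerd.sock
```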

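5. Download the images

Generate a default configuration file, then edit the fields marked with comments in the listing that follows: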

kubeadm config print init-defaults --component-configs KubeletConfiguration > kubeadm.yaml

[root@mysql03 ~]# cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.1.17 # master node IP
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///run/containerd/containerd.sock # containerd socket
  imagePullPolicy: IfNotPresent
  name: mysql03 # master hostname
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # image registry to pull from
kind: ClusterConfiguration
kubernetesVersion: 1.22.1 # Kubernetes (image) version
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16 # pod subnet
scheduler: {}
---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging: {}
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s

Before initializing the cluster, you can pre-pull the container images Kubernetes needs on each node.

kubeadm config images pull --config kubeadm.yaml

kubeadm config images list

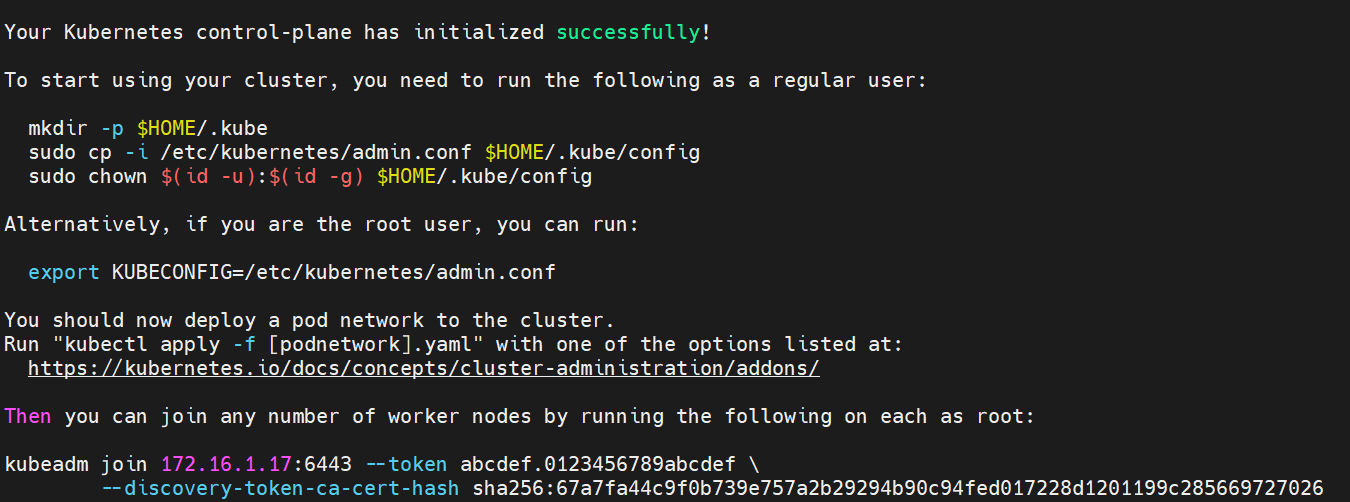
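Before pulling, it can help to confirm that the hand-edited fields in kubeadm.yaml hold the intended values. The sketch below is a deliberately naive reader, not a YAML parser: the hypothetical function `yaml_value` prints the value of the first `key: value` line it finds, ignoring indentation and trailing comments.

```shell
# Hypothetical helper: print the value of the first "key: value" line
# for the given key. Good enough for the flat scalar fields edited here.
yaml_value() {
    local file="$1" key="$2"
    awk -v k="$key" '
        { line = $0; sub(/^[ \t]+/, "", line) }
        index(line, k ":") == 1 {
            sub(/^[^:]*:[ \t]*/, "", line)   # drop "key: "
            sub(/[ \t]+#.*$/, "", line)      # drop a trailing comment
            print line
            exit
        }' "$file"
}

# Usage:
#   yaml_value kubeadm.yaml advertiseAddress
#   yaml_value kubeadm.yaml imageRepository
#   yaml_value kubeadm.yaml kubernetesVersion
```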

III. Initialize the master node

kubeadm init --config kubeadm.yaml

Then run the configuration commands printed in the init output:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf   ### best added to .bash_profile

Copy the join command printed in the init output and run it on each of the other nodes.

kubeadm join 172.16.1.17:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:67a7fa44c9f0b739e757a2b29294b90c94fed017228d1201199c285669727026

The join command can also be regenerated at any time:

[root@mysql03 ~]# kubeadm token create --print-join-command

kubeadm join 172.16.1.17:6443 --token 7xsss1.ncrd5xuoj2lf5sdt --discovery-token-ca-cert-hash sha256:67a7fa44c9f0b739e757a2b29294b90c94fed017228d1201199c285669727026

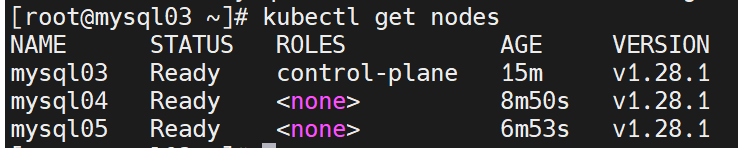

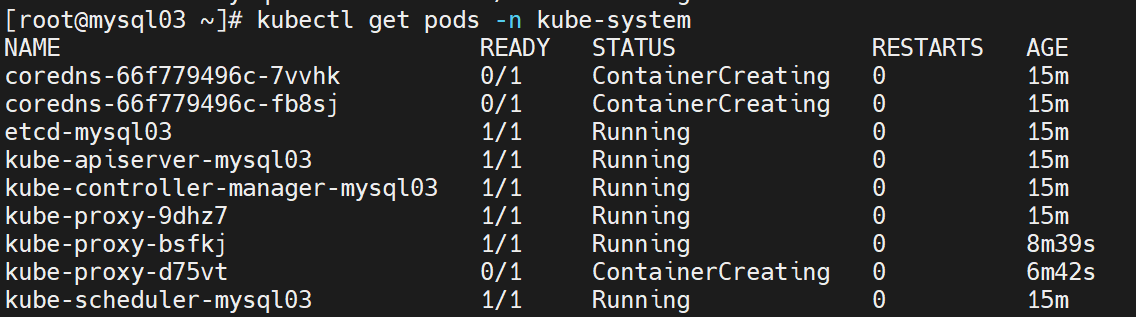

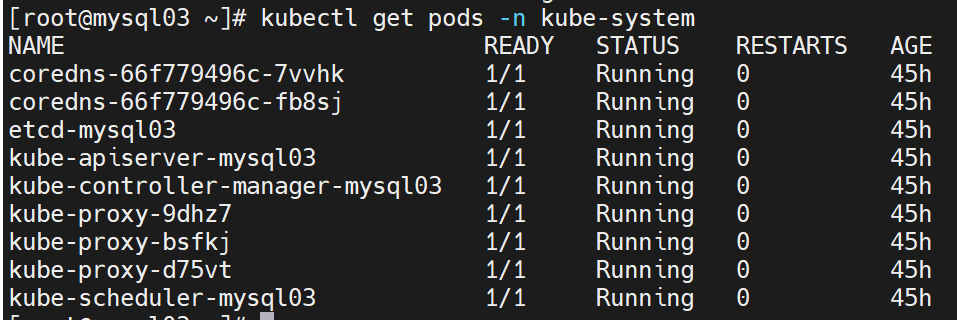
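Join commands are easy to damage when copied between terminals. The sketch below checks the shape of a worker join command before it is run: a bootstrap token is 6 characters, a dot, then 16 characters (lowercase letters and digits), and the CA hash is `sha256:` followed by 64 hex digits. The function name `is_valid_join_command` is hypothetical, and it only covers the two-flag form shown above.

```shell
# Hypothetical helper: succeed if the argument looks like a well-formed
# worker join command (endpoint, token, and sha256 CA hash).
is_valid_join_command() {
    local cmd re
    re='^kubeadm join [^ ]+:[0-9]+ --token [a-z0-9]{6}\.[a-z0-9]{16} --discovery-token-ca-cert-hash sha256:[0-9a-f]{64}$'
    # Turn line continuations, newlines, and tabs into single spaces, then trim.
    cmd="$(printf '%s\n' "$1" | tr '\\\n\t' '   ' | tr -s ' ' | sed -e 's/^ *//' -e 's/ *$//')"
    printf '%s\n' "$cmd" | grep -Eq "$re"
}

# Usage:
#   is_valid_join_command "$(kubeadm token create --print-join-command)" && echo ok
```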

6. Check node and pod status

kubectl get nodes

kubectl get pods -n kube-system
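Nodes are expected to stay NotReady until a network plugin is installed. To make that check scriptable, the sketch below filters `kubectl get nodes` output for nodes whose STATUS column is not exactly `Ready`. The function name `not_ready_nodes` is hypothetical, and a cordoned node (`Ready,SchedulingDisabled`) is also reported.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the names of nodes whose STATUS is anything other than "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage:
#   kubectl get nodes | not_ready_nodes
```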

IV. Deploy the network plugin

Run on the master node.

Reference:

https://kubernetes.io/zh-cn/docs/concepts/cluster-administration/addons/#networking-and-network-policy

Download the manifest:

wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

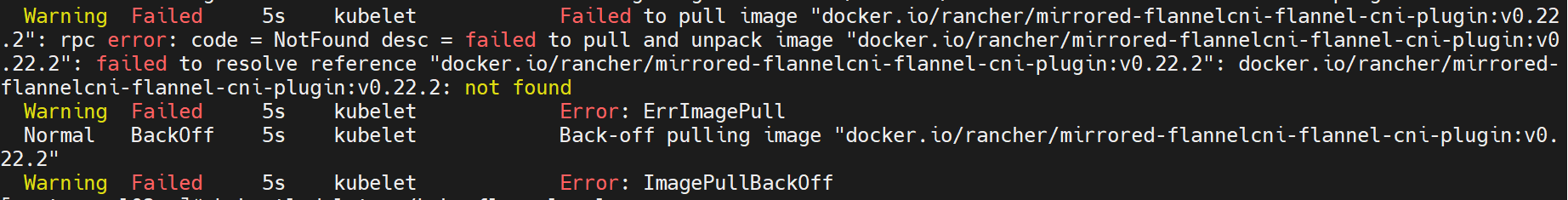

Check the image entries in it and change them if the registry is not reachable:

grep image kube-flannel.yml

image: docker.io/rancher/mirrored-flannelcni-flannel:v0.18.1

image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0

image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
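If docker.io cannot be reached, the image prefix in the manifest has to point at a mirror instead. The sketch below rewrites the prefix only on `image:` lines. The function name `rewrite_image_prefix` is hypothetical, and `registry.example.com/mirror` in the usage comment is a placeholder, not a real mirror.

```shell
# Hypothetical helper: replace an image prefix on "image:" lines only,
# leaving every other line of the manifest untouched.
rewrite_image_prefix() {
    local manifest="$1" old="$2" new="$3" tmp
    tmp="$(mktemp)" || return 1
    awk -v old="$old" -v new="$new" '
        /^[ \t]*(-[ \t]*)?image:[ \t]/ {
            i = index($0, old)
            if (i > 0) $0 = substr($0, 1, i - 1) new substr($0, i + length(old))
        }
        { print }' "$manifest" > "$tmp" && cat "$tmp" > "$manifest"
    rm -f "$tmp"
}

# Usage (placeholder mirror):
#   rewrite_image_prefix kube-flannel.yml docker.io/rancher registry.example.com/mirror
#   grep image kube-flannel.yml
```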

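Pull the flannel images (optional). Use the k8s.io namespace, which is where the kubelet looks for images in containerd:

ctr -n k8s.io images pull docker.io/rancher/mirrored-flannelcni-flannel:v0.18.1

ctr -n k8s.io images pull docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0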

Install flannel:

kubectl apply -f ./kube-flannel.yml

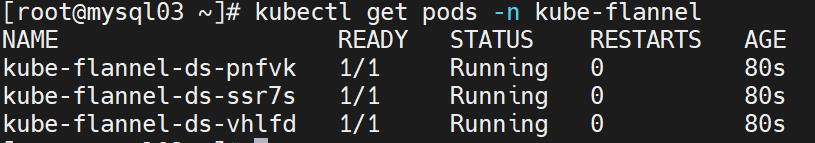

Check that the pods have started:

kubectl get pods -n kube-flannel

kubectl get pods -n kube-system

Troubleshooting summary

1. To clean up the environment and redeploy, run the following on all nodes:

kubeadm reset

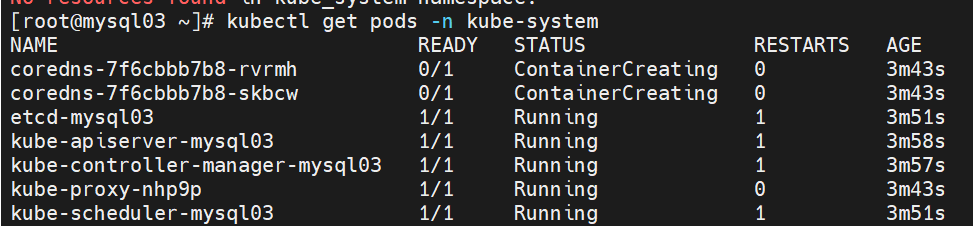

2. If cleanup reports this error:

failed to find plugin "portmap" in path [/opt/cni/bin]

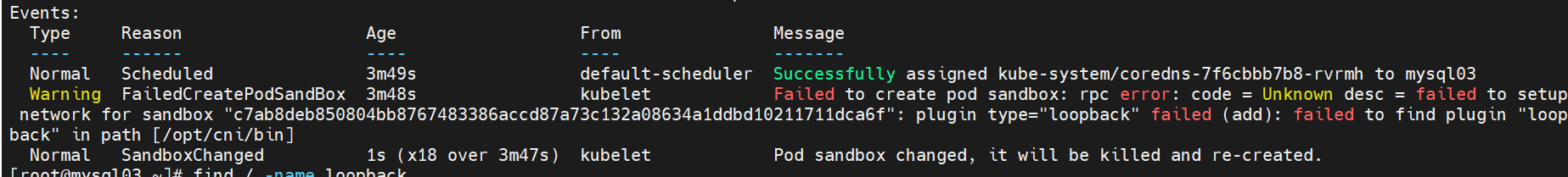

or if coredns stays in ContainerCreating after the flannel network component is deployed:

kubectl describe pod coredns-7f6cbbb7b8-rvrmh -n kube-system

Fix: install the CNI plugins package.

yum install kubernetes-cni -y
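To confirm the fix took effect, the plugin directory from the error message can be checked directly. In the sketch below, the function name `missing_cni_plugins` is hypothetical; `portmap` comes from the error above, while `bridge` and `loopback` in the usage comment are illustrative additions.

```shell
# Hypothetical helper: print each named plugin that is not an executable
# file in the given directory; fail if any is missing.
missing_cni_plugins() {
    local dir="$1" p rc=0
    shift
    for p in "$@"; do
        if [ ! -x "$dir/$p" ]; then
            echo "$p"
            rc=1
        fi
    done
    return "$rc"
}

# Usage:
#   missing_cni_plugins /opt/cni/bin portmap bridge loopback
```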

3. kubeadm reports an error when pulling images

kubeadm config images pull --config kubeadm.yaml

If it fails as follows:

[root@mysql03 ~]# kubeadm config images pull --config kubeadm.yaml --cri-socket /run/containerd/containerd.sock

W0908 03:13:53.596893 5923 strict.go:55] error unmarshaling configuration schema.GroupVersionKind{Group:"kubelet.config.k8s.io", Version:"v1beta1", Kind:"KubeletConfiguration"}: error converting YAML to JSON: yaml: unmarshal errors:

line 27: key "cgroupDriver" already set in map

failed to pull image "registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.1": output: E0908 03:13:53.686317 5946 remote_image.go:171] "PullImage from image service failed" err="rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial unix /var/run/crio/crio.sock: connect: no such file or directory\"" image="registry.aliyuncs.com/google_containers/kube-apiserver:v1.22.1"

time="2023-09-08T03:13:53Z" level=fatal msg="pulling image: rpc error: code = Unavailable desc = connection error: desc = \"transport: Error while dialing dial unix /var/run/crio/crio.sock: connect: no such file or directory\""

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

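Fix: the pull fails because crictl is dialing the CRI-O socket, so point it at the containerd socket. (The separate warning key "cgroupDriver" already set in map means cgroupDriver appears twice in the KubeletConfiguration section of kubeadm.yaml; keep only one.)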

crictl config runtime-endpoint unix:///run/containerd/containerd.sock

crictl config image-endpoint unix:///run/containerd/containerd.sock

https://github.com/containerd/containerd/blob/main/docs/getting-started.md

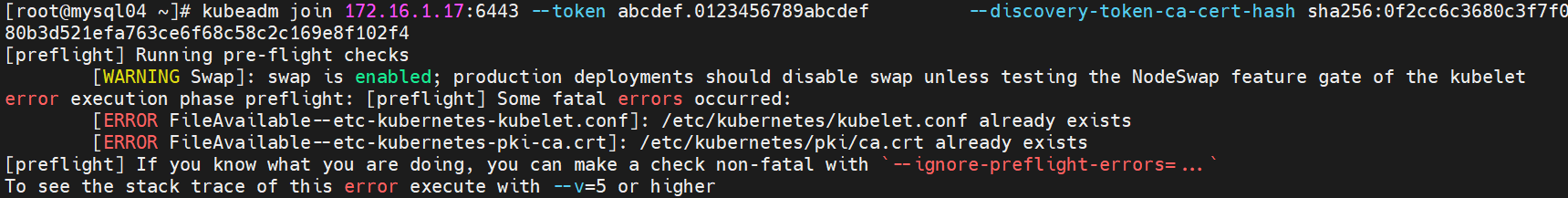

4. Other nodes fail to join the cluster

Check that swap is disabled, then reset the node:

swapoff -a

kubeadm reset

Then rerun the kubeadm join command.

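5. Image pull errors

Check the pod deployment status; most problems turn out to be image problems. After fixing the image, delete the deployment and apply the manifest again.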

kubectl describe pod -n kube-flannel

kubectl delete -f ./kube-flannel.yml