将数据的结构、一些示例行或两者都放入一个文本字符串中。 使用该信息加上你的自然语言问题来构建一个“提示”给AI。 将提示发送到OpenAI的GPT-3.5-turbo API,并请求一个SQL查询来回答您的问题。在数据集上运行返回的SQL来计算您的答案。 (可选)创建一个交互式应用程序,以便轻松地使用纯英语查询数据集。

library(rio)library(dplyr)library(sqldf)library(glue)states <- rio::import("https://raw.githubusercontent.com/smach/SampleData/main/states.csv") |>filter(!is.na(Region))states_schema <- sqldf("PRAGMA table_info(states)")states_schema_string <- paste(apply(states_schema, 1, paste, collapse = "\t"), collapse = "\n")states_sample <- dplyr::sample_n(states, 3)states_sample_string <- paste(apply(states_sample, 1, paste, collapse = "\t"), collapse = "\n")

步骤2:为LLM创建提示

create_prompt <- function(schema, rows_sample, query, table_name) {glue::glue("Act as if you're a data scientist. You have a SQLite table named {table_name} with the following schema:```{schema}```The first rows look like this:```{rows_sample}```Based on this data, write a SQL query to answer the following question: {query}. Return the SQL query ONLY. Do not include any additional explanation.")}

步骤3:将数据发送到OpenAI的API

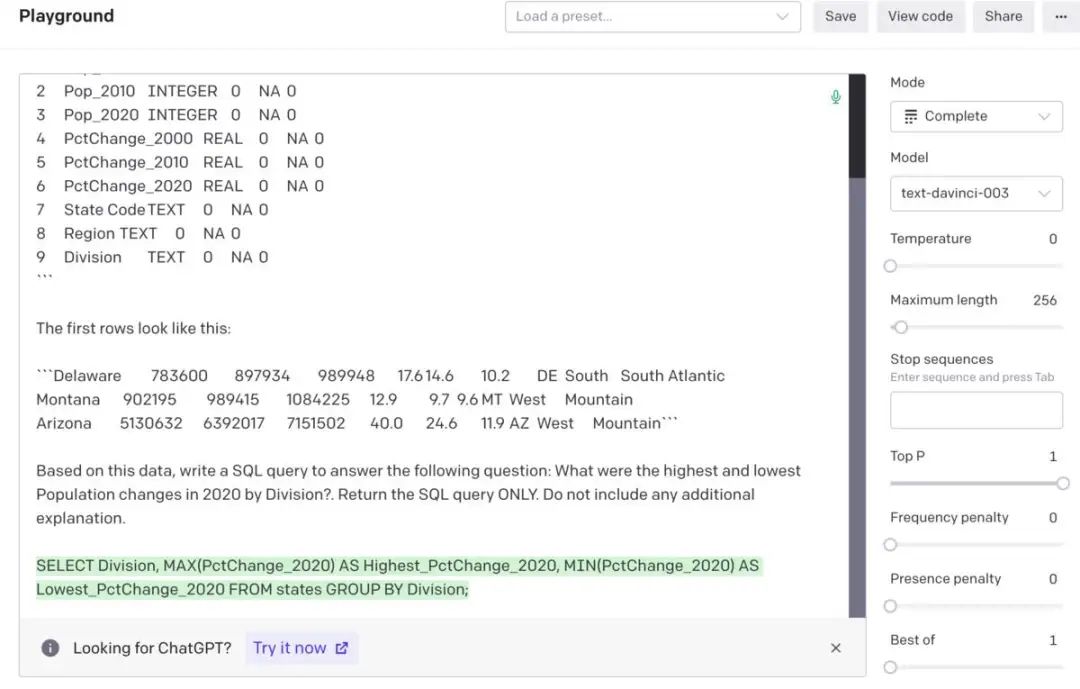

你可以先将数据复制粘贴到OpenAI的Web界面之一中,以在ChatGPT或OpenAI API playground中查看结果。ChatGPT不收费,但无法调整结果。Playground允许设置诸如温度(即回答的“随机性”或创造性程度)和要使用的模型等参数。对于SQL代码,我将温度设置为0。

接下来,我将一个自然语言问题保存到变量`question`中,使用我的函数创建一个提示,并查看将该提示粘贴到API playground中会发生什么:

> my_query <- "What were the highest and lowest Population changes in 2020 by Division?"> my_prompt <- get_query(states_schema_string, states_sample_string, my_query, "states")> cat(my_prompt)Act as if you're a data scientist. You have a SQLite table named states with the following schema:```0 State TEXT 0 NA 01 Pop_2000 INTEGER 0 NA 02 Pop_2010 INTEGER 0 NA 03 Pop_2020 INTEGER 0 NA 04 PctChange_2000 REAL 0 NA 05 PctChange_2010 REAL 0 NA 06 PctChange_2020 REAL 0 NA 07 State Code TEXT 0 NA 08 Region TEXT 0 NA 09 Division TEXT 0 NA 0```The first rows look like this:```Delaware 783600 897934 989948 17.6 14.6 10.2 DE South South AtlanticMontana 902195 989415 1084225 12.9 9.7 9.6 MT West MountainArizona 5130632 6392017 7151502 40.0 24.6 11.9 AZ West Mountain```Based on this data, write a SQL query to answer the following question: What were the highest and lowest Population changes in 2020 by Division?. Return the SQL query ONLY. Do not include any additional explanation.

提示输入OpenAI API playground和生成的SQL代码

sqldf("SELECT Division, MAX(PctChange_2020) AS Highest_PctChange_2020, MIN(PctChange_2020) AS Lowest_PctChange_2020 FROM states GROUP BY Division;")Division Highest_PctChange_2020 Lowest_PctChange_20201 East North Central 4.7 -0.12 East South Central 8.9 -0.23 Middle Atlantic 5.7 2.44 Mountain 18.4 2.35 New England 7.4 0.96 Pacific 14.6 3.37 South Atlantic 14.6 -3.28 West North Central 15.8 2.89 West South Central 15.9 2.7

通过编程方式将数据发送到OpenAI并从中返回会比将其复制粘贴到Web界面中更方便。有一些R包可以用于与OpenAI API进行交互。以下代码块使用该包向API发送提示,存储API响应,提取包含所请求SQL代码的文本部分,复制该代码,并在数据上运行SQL。

library(openai)my_results <- openai::create_chat_completion(model = "gpt-3.5-turbo", temperature = 0, messages = list(list(role = "user", content = my_prompt)))the_answer <- my_results$choices$message.contentcat(the_answer)SELECT Division, MAX(PctChange_2020) AS Highest_Population_Change, MIN(PctChange_2020) AS Lowest_Population_ChangeFROM statesGROUP BY Division;sqldf(the_answer)Division Highest_Population_Change Lowest_Population_Change1 East North Central 4.7 -0.12 East South Central 8.9 -0.23 Middle Atlantic 5.7 2.44 Mountain 18.4 2.35 New England 7.4 0.96 Pacific 14.6 3.37 South Atlantic 14.6 -3.28 West North Central 15.8 2.89 West South Central 15.9 2.7

步骤5(可选):创建一个交互式应用程序

library(shiny)library(openai)library(dplyr)library(sqldf)# Load hard-coded datasetstates <- read.csv("states.csv") |>dplyr::filter(!is.na(Region) & Region != "")states_schema <- sqldf::sqldf("PRAGMA table_info(states)")states_schema_string <- paste(apply(states_schema, 1, paste, collapse = "\t"), collapse = "\n")states_sample <- dplyr::sample_n(states, 3)states_sample_string <- paste(apply(states_sample, 1, paste, collapse = "\t"), collapse = "\n")# Function to process user inputget_prompt <- function(query, schema = states_schema_string, rows_sample = states_sample_string, table_name = "states") {my_prompt <- glue::glue("Act as if you're a data scientist. You have a SQLite table named {table_name} with the following schema:```{schema}```The first rows look like this:```{rows_sample}```Based on this data, write a SQL query to answer the following question: {query} Return the SQL query ONLY. Do not include any additional explanation.")print(my_prompt)return(my_prompt)}ui <- fluidPage(titlePanel("Query state database"),sidebarLayout(sidebarPanel(textInput("query", "Enter your query", placeholder = "e.g., What is the total 2020 population by Region?"),actionButton("submit_btn", "Submit")),mainPanel(uiOutput("the_sql"),br(),br(),verbatimTextOutput("results"))))server <- function(input, output) {# Create the prompt from the user query to send to GPTthe_prompt <- eventReactive(input$submit_btn, {req(input$query, states_schema_string, states_sample_string)my_prompt <- get_prompt(query = input$query)})# send prompt to GPT, get SQL, run SQL, print resultsobserveEvent(input$submit_btn, {req(the_prompt()) # text to send to GPT# Send results to GPT and get response# withProgress adds a Shiny progress bar. Commas now needed after each statementwithProgress(message = 'Getting results from GPT', value = 0, { # Add Shiny progress messagemy_results <- openai::create_chat_completion(model = "gpt-3.5-turbo", temperature = 0, messages = list(list(role = "user", content = the_prompt())))the_gpt_sql <- my_results$choices$message.content# print the SQLsql_html <- gsub("\n", "<br >", the_gpt_sql)sql_html <- paste0("<p>", sql_html, "</p>")# Run SQL on data to get resultsgpt_answer <- sqldf(the_gpt_sql)setProgress(value = 1, message = 'GPT results received') # Send msg to user that})# Print SQL and resultsoutput$the_sql <- renderUI(HTML(sql_html))if (is.vector(gpt_answer) ) {output$results <- renderPrint(gpt_answer)} else {output$results <- renderPrint({ print(gpt_answer) })}})}shinyApp(ui = ui, server = server)

文章转载自云原生数据库,如果涉嫌侵权,请发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。