The following article is from the Alibaba Cloud Developer (阿里云开发者) account, written by Qingdu (清都).

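Introduction

Using two AIGC applications as examples, a ChatBot and an AI Agent, this article shares at code level the development experience and best practices for the AI framework LangChain, Alibaba Cloud's Tongyi large models, and the AnalyticDB vector engine, as a reference for quickly shipping AIGC applications.

1. A brief introduction to LangChain.

2. A walkthrough of the LangChain source code, using the Tongyi Qianwen API call as an example.

3. Learning and building small demo applications on different Chains, such as a ChatBot based on Tongyi and a vector database, and an AI Agent that collects and analyzes daily financial news.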

LangChain Modules

    class LLM(BaseLLM):
        def _call(
            self,
            prompt: str,
            stop: Optional[List[str]] = None,
            run_manager: Optional[CallbackManagerForLLMRun] = None,
            **kwargs: Any,
        ) -> str:
            """Run the LLM on the given prompt and input."""

        def _generate(
            self,
            prompts: List[str],
            stop: Optional[List[str]] = None,
            run_manager: Optional[CallbackManagerForLLMRun] = None,
            **kwargs: Any,
        ) -> LLMResult:
            """Run the LLM on the given prompt and input."""

    class Embeddings(ABC):
        """Interface for embedding models."""

        @abstractmethod
        def embed_documents(self, texts: List[str]) -> List[List[float]]:
            """Embed search docs."""

        @abstractmethod
        def embed_query(self, text: str) -> List[float]:
            """Embed query text."""

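    class VectorStore(ABC):
        """Interface for vector store."""

        @abstractmethod
        def add_texts(
            self,
            texts: Iterable[str],
            metadatas: Optional[List[dict]] = None,
            **kwargs: Any,
        ) -> List[str]:
            """Embed the texts and add them to the vector store."""

        def search(self, query: str, search_type: str, **kwargs: Any) -> List[Document]:
            """Return the docs most similar to the query for the given search type."""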

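Other modules, such as indexes and retrievers, are variants of the modules above, and there are also callable utility classes such as Tools. We do not cover them in detail here; the later case studies show how to use these modules to build your own applications.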
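To make the Embeddings and VectorStore interfaces above more concrete, here is a minimal in-memory sketch using only the standard library. `ToyEmbeddings` and `ToyVectorStore` are hypothetical names, the bag-of-words "embedding" is an assumption standing in for a real model, and nothing here is LangChain's actual implementation; it only mirrors the shape of `embed_documents`, `embed_query`, `add_texts`, and `search`.

```python
import math
from collections import Counter
from typing import Iterable, List, Tuple


class ToyEmbeddings:
    """Hypothetical bag-of-words embedder standing in for a real embedding model."""

    def __init__(self, vocabulary: List[str]) -> None:
        self.vocabulary = vocabulary

    def embed_query(self, text: str) -> List[float]:
        # One dimension per vocabulary word, valued by how often it occurs.
        counts = Counter(text.lower().split())
        return [float(counts[word]) for word in self.vocabulary]

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        return [self.embed_query(text) for text in texts]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 when either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory store with the same two entry points as VectorStore."""

    def __init__(self, embeddings: ToyEmbeddings) -> None:
        self.embeddings = embeddings
        self._rows: List[Tuple[str, List[float]]] = []

    def add_texts(self, texts: Iterable[str]) -> List[str]:
        texts = list(texts)
        ids = []
        for text, vector in zip(texts, self.embeddings.embed_documents(texts)):
            ids.append(str(len(self._rows)))  # the id is the insertion position
            self._rows.append((text, vector))
        return ids

    def search(self, query: str, k: int = 1) -> List[str]:
        query_vector = self.embeddings.embed_query(query)
        ranked = sorted(self._rows, key=lambda row: cosine(query_vector, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore(ToyEmbeddings(["tattoo", "utah", "volcano", "indonesia"]))
new_ids = store.add_texts(["tattoo age rules in utah", "a volcano in indonesia"])
top_hit = store.search("volcano eruption indonesia", k=1)
```

A real deployment would swap the toy embedder for a model such as DashScope embeddings and the list for a vector database; the call pattern stays the same.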

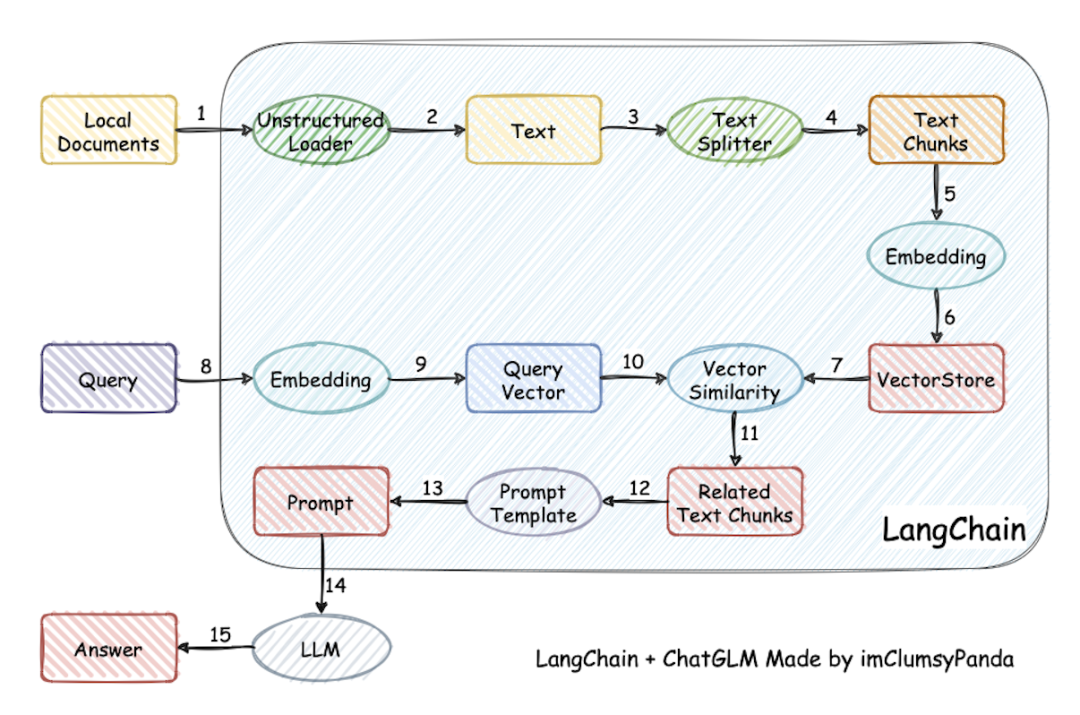

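Building a ChatBot

TextSplitter: a document is often long, and because of the token limits of the LLM and the Embedding model it cannot be passed to the LLM in full. The documents to be stored are therefore split, according to certain rules, into small cohesive chunks before storage.

LLM module: used to summarize questions and to answer them.

Embedding module: used to generate vector representations of the knowledge and of the questions.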
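The TextSplitter step can be sketched as a fixed-size splitter with overlap. This is only an illustration: `split_by_tokens` is a hypothetical helper, whitespace-separated words are assumed as a rough stand-in for model tokens, and the default sizes are placeholders rather than recommended values.

```python
from typing import List


def split_by_tokens(text: str, chunk_size: int = 300, overlap: int = 30) -> List[str]:
    """Split text into chunks of at most chunk_size tokens, sharing `overlap` tokens."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    tokens = text.split()  # assumption: whitespace words approximate tokens
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(tokens), step):
        chunks.append(" ".join(tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
chunks = split_by_tokens(sample, chunk_size=4, overlap=1)
```

The overlap keeps a little shared context between neighbouring chunks, so a sentence cut at a boundary is still partly visible in both.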

▶︎ Example

A ChatBot based on the Tongyi API and the ADB-PG vector database

    import os
    import json
    import wget
    from langchain.vectorstores.analyticdb import AnalyticDB

    CONNECTION_STRING = AnalyticDB.connection_string_from_db_params(
        driver=os.environ.get("PG_DRIVER", "psycopg2cffi"),
        host=os.environ.get("PG_HOST", "localhost"),
        port=int(os.environ.get("PG_PORT", "5432")),
        database=os.environ.get("PG_DATABASE", "postgres"),
        user=os.environ.get("PG_USER", "postgres"),
        password=os.environ.get("PG_PASSWORD", "postgres"),
    )

    # All the examples come from https://ai.google.com/research/NaturalQuestions
    # This is a sample of the training set that we download and extract for some
    # further processing.
    wget.download("https://storage.googleapis.com/dataset-natural-questions/questions.json")
    wget.download("https://storage.googleapis.com/dataset-natural-questions/answers.json")

    # Load the data
    with open("questions.json", "r") as fp:
        questions = json.load(fp)

    with open("answers.json", "r") as fp:
        answers = json.load(fp)

    from langchain.vectorstores import AnalyticDB
    from langchain.embeddings import DashScopeEmbeddings
    from langchain import VectorDBQA, OpenAI

    # Embed the answers with DashScope and store them in AnalyticDB
    embeddings = DashScopeEmbeddings(
        model="text-embedding-v1", dashscope_api_key="your-dashscope-api-key"
    )
    doc_store = AnalyticDB.from_texts(
        texts=answers,
        embedding=embeddings,
        connection_string=CONNECTION_STRING,
        pre_delete_collection=True,
    )

    from langchain.chains import RetrievalQA
    from langchain.llms import Tongyi

    os.environ["DASHSCOPE_API_KEY"] = "your-dashscope-api-key"
    llm = Tongyi()

    import random

    from langchain.prompts import PromptTemplate

    custom_prompt = """Use the following pieces of context to answer the question at the end. Please provide
    a short single-sentence summary answer only. If you don't know the answer or if it's
    not present in given context, don't try to make up an answer, but suggest me a random
    unrelated song title I could listen to.
    Context: {context}
    Question: {question}
    Helpful Answer:
    """

    custom_prompt_template = PromptTemplate(
        template=custom_prompt, input_variables=["context", "question"]
    )

    # "stuff" puts all retrieved documents into a single prompt
    custom_qa = VectorDBQA.from_chain_type(
        llm=llm,
        chain_type="stuff",
        vectorstore=doc_store,
        return_source_documents=False,
        chain_type_kwargs={"prompt": custom_prompt_template},
    )

    # Ask five randomly chosen questions
    random.seed(41)
    for question in random.choices(questions, k=5):
        print(">", question)
        print(custom_qa.run(question), end="\n\n")

    > what was uncle jesse's original last name on full house
    Uncle Jesse's original last name on Full House was Cochran.

    > when did the volcano erupt in indonesia 2018
    No information about a volcano erupting in Indonesia in 2018 is present in the given context. Suggested song title: "Volcano" by U2.

    > what does a dualist way of thinking mean
    A dualist way of thinking means believing that humans possess a non-physical mind or soul which is distinct from their physical body.

    > the first civil service commission in india was set up on the basis of recommendation of
    The first Civil Service Commission in India was not set up on the basis of a recommendation.

    > how old do you have to be to get a tattoo in utah
    In Utah, you must be at least 18 years old to get a tattoo.

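▶︎ Problems and Challenges

While providing users with a one-stop ChatBot building service, we still ran into many problems. For example, text that is split too finely loses its semantics, and text containing charts or tables yields paragraphs that cannot be understood after splitting.

1. Text splitter. Vector match quality directly affects recall, and recall in turn depends closely on the content itself and on the question. Even with a very strong Embedding model, poor text splitting will not deliver what users expect. For example, LangChain's own CharacterTextSplitter splits paragraphs on separators such as punctuation and line breaks. In documents with multi-level headings, a subheading gets split into a chunk of its own, separated from its body text, so neither the heading nor the body expresses its content cohesively.

2. Tuning chunk length. Chunks that are too long hit the token limit after recall; chunks that are too short may lose the key information being sought. We tried many splitting strategies and found that, absent deeper optimization, simply splitting text into chunks of 200-500 tokens works comparatively well.

3. Recall optimization. (1) Backtracking context: in some scenarios we recall the right content, but that content is incomplete. We can therefore assign chunk ids at article level when writing, additionally recall the chunks adjacent to the most relevant chunk at query time, and then concatenate them.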
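The context backtracking idea can be sketched as follows. The `(article id, position)` key layout, the helper names, and the window of one neighbour on each side are assumptions made for illustration; the article does not specify how its ids are structured.

```python
from typing import Dict, List, Tuple

ChunkId = Tuple[str, int]  # (article id, position of the chunk inside the article)


def index_chunks(article_id: str, chunks: List[str]) -> Dict[ChunkId, str]:
    """Assign article-level ids at write time so neighbours can be found later."""
    return {(article_id, position): chunk for position, chunk in enumerate(chunks)}


def expand_with_neighbors(hit: ChunkId, store: Dict[ChunkId, str], window: int = 1) -> str:
    """Return the hit chunk joined with up to `window` chunks before and after it."""
    article_id, position = hit
    parts = []
    for pos in range(position - window, position + window + 1):
        chunk = store.get((article_id, pos))
        if chunk is not None:  # positions outside the article are skipped
            parts.append(chunk)
    return " ".join(parts)


store = index_chunks(
    "doc-1",
    ["Install the driver.", "Set PG_HOST and PG_PORT.", "Run the import script."],
)
context = expand_with_neighbors(("doc-1", 1), store)
edge_context = expand_with_neighbors(("doc-1", 0), store)
```

Here the vector search is assumed to have already returned the id of the best-matching chunk; only the stitching step is shown.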

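    #Chapter 1-Section 1-Subsection 1#: content 1
    #Chapter 1-Section 1-Subsection 1#: content 2
    #Chapter 1-Section 1-Subsection 2#: content 1
    #Chapter 2-Section 1-Subsection 1#: content 1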
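The chunk format shown here appears to prefix each piece of content with its heading path, so that a chunk still carries its titles after splitting. One way to produce such chunks is sketched below, assuming Markdown-style `#` headings in the input; the helper name and the input convention are hypothetical and not taken from the article.

```python
from typing import List


def chunks_with_heading_path(lines: List[str]) -> List[str]:
    """Prefix every body line with the path of headings it sits under."""
    path: List[str] = []
    chunks: List[str] = []
    for line in lines:
        stripped = line.strip()
        if not stripped:
            continue
        if stripped.startswith("#"):
            # The number of leading '#' characters gives the heading level.
            level = len(stripped) - len(stripped.lstrip("#"))
            title = stripped.lstrip("#").strip()
            # Drop deeper headings, then record the heading at this level.
            path = path[: level - 1] + [title]
        else:
            chunks.append(f"#{'-'.join(path)}#:{stripped}")
    return chunks


document = [
    "# Guide",
    "## Setup",
    "### Linux",
    "Install psycopg2.",
    "### macOS",
    "Install psycopg2cffi.",
    "## Usage",
    "Run the demo.",
]
prefixed = chunks_with_heading_path(document)
```

Each resulting chunk can then be embedded together with its heading path, which addresses the problem of headings being separated from their body text.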

Building an AI Agent

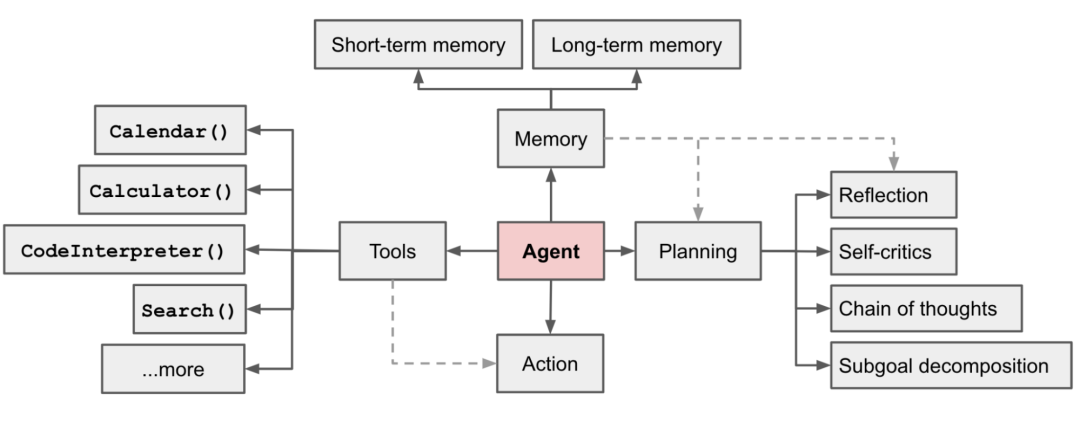

▶︎ Components of an Agent System

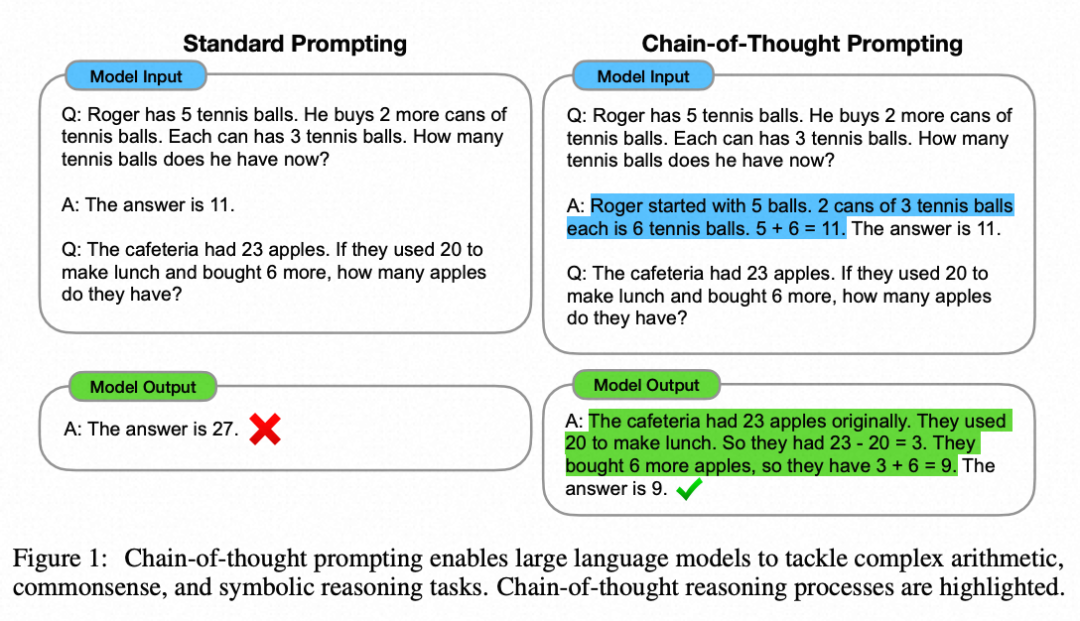

Task Decomposition

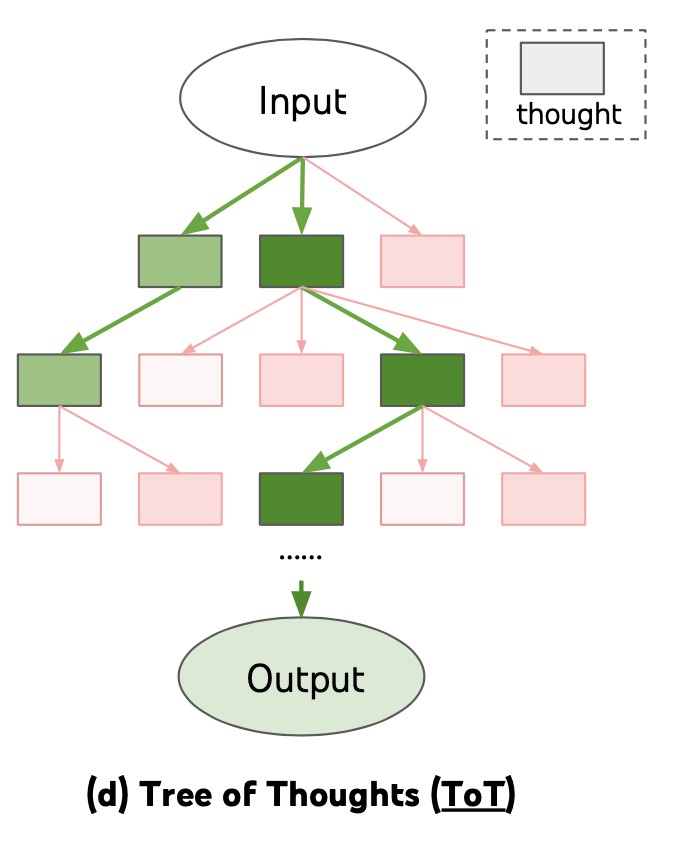

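Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates several thoughts per step, forming a tree structure. The search can be breadth-first (BFS) or depth-first (DFS), and each state is evaluated by a classifier (via a prompt) or by majority vote.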
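A toy breadth-first version of this search is sketched below. In a real Tree of Thoughts setup both the thought generator and the evaluator would be LLM calls; here `propose` and `score` are hypothetical arithmetic stand-ins, and keeping only the `beam_width` best states per level is an assumption made to keep the tree small.

```python
from typing import List, Tuple

State = Tuple[int, ...]  # the chain of intermediate values ("thoughts") so far


def propose(state: State) -> List[State]:
    """Hypothetical thought generator: extend the chain by +3 or by *2."""
    last = state[-1]
    return [state + (last + 3,), state + (last * 2,)]


def score(state: State, target: int) -> float:
    """Hypothetical evaluator: states closer to the target score higher."""
    return -abs(target - state[-1])


def tot_bfs(start: int, target: int, max_depth: int = 4, beam_width: int = 2) -> State:
    """Expand every kept state, evaluate the children, keep the best few, repeat."""
    frontier: List[State] = [(start,)]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in propose(state)]
        candidates.sort(key=lambda s: score(s, target), reverse=True)
        frontier = candidates[:beam_width]
        if frontier[0][-1] == target:
            return frontier[0]
    return frontier[0]  # best state found within the depth limit


best_path = tot_bfs(start=1, target=14)
```

With these toy rules the search keeps two partial chains per level and reaches 14 through 1, 4, 7, 14.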

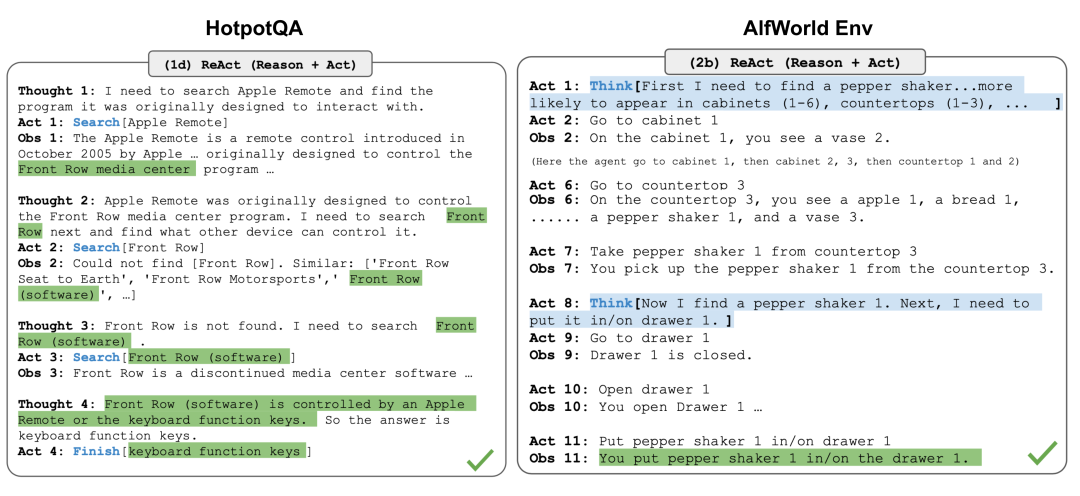

    Thought: ...
    Action: ...
    Observation: ...
    ... (Repeated many times)

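In two experiments, one on knowledge-intensive tasks and one on decision-making tasks, ReAct produced better answers than an act-only baseline (one that omits the "Thought: ..." step).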
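The Thought / Action / Observation loop can be sketched without any model at all. Below, `scripted_llm` is a hypothetical stand-in that returns canned replies, the `name[input]` action syntax is an assumption chosen for easy parsing, and the two tools are toys; the point is only the control flow of the loop.

```python
import re
from typing import Callable, Dict

ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def scripted_llm(transcript: str) -> str:
    """Hypothetical LLM stand-in: the reply depends on how many observations exist."""
    seen = transcript.count("Observation:")
    if seen == 0:
        return "Thought: I need the age first.\nAction: lookup[age]"
    last_observation = transcript.rsplit("Observation: ", 1)[1]
    if seen == 1:
        return f"Thought: Now square {last_observation}.\nAction: power[{last_observation},2]"
    return f"Thought: I have the result.\nAction: finish[{last_observation}]"


def power(arg: str) -> str:
    base, exponent = arg.split(",")
    return str(int(base) ** int(exponent))


TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup": lambda arg: "19",  # toy tool: always reports the same age
    "power": power,
}


def react(question: str, llm: Callable[[str], str],
          tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Alternate model replies and tool observations until a finish action appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += "\n" + reply
        match = ACTION_RE.search(reply)
        if match is None:
            raise ValueError("reply did not contain an Action line")
        name, arg = match.group(1), match.group(2)
        if name == "finish":
            return arg
        transcript += f"\nObservation: {tools[name](arg)}"
    raise RuntimeError("no final answer within max_steps")


answer = react("What is the age squared?", scripted_llm, TOOLS)
```

Each observation is appended to the transcript, so the next reply can build on everything that has happened so far.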

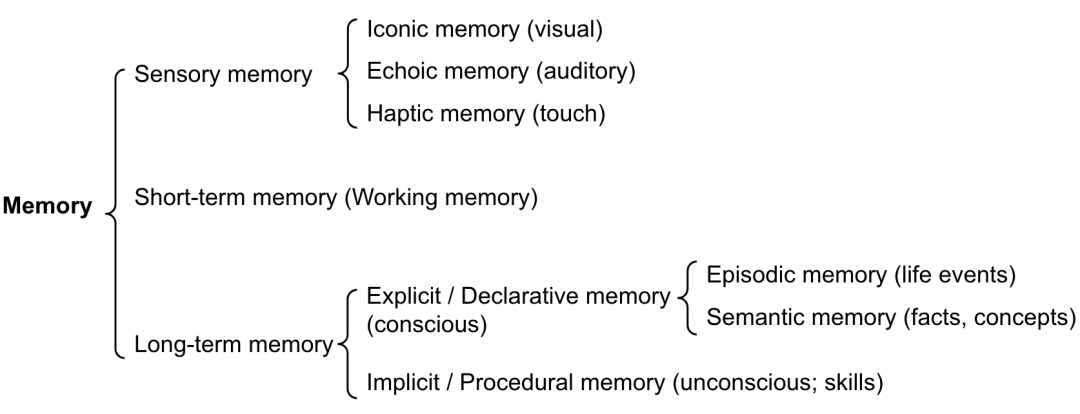

● Memory module

▶︎ Typical case: AutoGPT

    You are {{ai-name}}, {{user-provided AI bot description}}.
    Your decisions must always be made independently without seeking user assistance. Play to your strengths as an LLM and pursue simple strategies with no legal complications.

    GOALS:

    1. {{user-provided goal 1}}
    2. {{user-provided goal 2}}
    3. ...
    4. ...
    5. ...

    Constraints:
    1. ~4000 word limit for short term memory. Your short term memory is short, so immediately save important information to files.
    2. If you are unsure how you previously did something or want to recall past events, thinking about similar events will help you remember.
    3. No user assistance
    4. Exclusively use the commands listed in double quotes e.g. "command name"
    5. Use subprocesses for commands that will not terminate within a few minutes

    Commands:
    1. Google Search: "google", args: "input": "<search>"
    2. Browse Website: "browse_website", args: "url": "<url>", "question": "<what_you_want_to_find_on_website>"
    3. Start GPT Agent: "start_agent", args: "name": "<name>", "task": "<short_task_desc>", "prompt": "<prompt>"
    4. Message GPT Agent: "message_agent", args: "key": "<key>", "message": "<message>"
    5. List GPT Agents: "list_agents", args:
    6. Delete GPT Agent: "delete_agent", args: "key": "<key>"
    7. Clone Repository: "clone_repository", args: "repository_url": "<url>", "clone_path": "<directory>"
    8. Write to file: "write_to_file", args: "file": "<file>", "text": "<text>"
    9. Read file: "read_file", args: "file": "<file>"
    10. Append to file: "append_to_file", args: "file": "<file>", "text": "<text>"
    11. Delete file: "delete_file", args: "file": "<file>"
    12. Search Files: "search_files", args: "directory": "<directory>"
    13. Analyze Code: "analyze_code", args: "code": "<full_code_string>"
    14. Get Improved Code: "improve_code", args: "suggestions": "<list_of_suggestions>", "code": "<full_code_string>"
    15. Write Tests: "write_tests", args: "code": "<full_code_string>", "focus": "<list_of_focus_areas>"
    16. Execute Python File: "execute_python_file", args: "file": "<file>"
    17. Generate Image: "generate_image", args: "prompt": "<prompt>"
    18. Send Tweet: "send_tweet", args: "text": "<text>"
    19. Do Nothing: "do_nothing", args:
    20. Task Complete (Shutdown): "task_complete", args: "reason": "<reason>"

    Resources:
    1. Internet access for searches and information gathering.
    2. Long Term memory management.
    3. GPT-3.5 powered Agents for delegation of simple tasks.
    4. File output.

    Performance Evaluation:
    1. Continuously review and analyze your actions to ensure you are performing to the best of your abilities.
    2. Constructively self-criticize your big-picture behavior constantly.
    3. Reflect on past decisions and strategies to refine your approach.
    4. Every command has a cost, so be smart and efficient. Aim to complete tasks in the least number of steps.

    You should only respond in JSON format as described below
    Response Format:
    {
        "thoughts": {
            "text": "thought",
            "reasoning": "reasoning",
            "plan": "- short bulleted\n- list that conveys\n- long-term plan",
            "criticism": "constructive self-criticism",
            "speak": "thoughts summary to say to user"
        },
        "command": {
            "name": "command name",
            "args": {
                "arg name": "value"
            }
        }
    }
    Ensure the response can be parsed by Python json.loads
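The prompt above asks for a reply that `json.loads` can parse and that names one command with its arguments. A minimal sketch of the receiving side follows. The command names `do_nothing` and `task_complete` and the `reason` argument come from the prompt, while the handler bodies, the `COMMANDS` table, and the error strings are hypothetical and not AutoGPT's actual code.

```python
import json
from typing import Any, Callable, Dict, Tuple


def parse_response(raw: str) -> Tuple[str, Dict[str, Any]]:
    """Extract the command name and arguments from the model's JSON reply."""
    data = json.loads(raw)
    command = data["command"]
    return command["name"], command.get("args", {})


def do_nothing() -> str:
    return "No action performed."


def task_complete(reason: str) -> str:
    return f"Shutting down: {reason}"


# Hypothetical dispatch table covering two of the commands listed in the prompt.
COMMANDS: Dict[str, Callable[..., str]] = {
    "do_nothing": do_nothing,
    "task_complete": task_complete,
}


def execute(raw: str) -> str:
    """Parse the reply and run the named command, reporting problems as text."""
    try:
        name, args = parse_response(raw)
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return f"Error: invalid response ({exc})"
    handler = COMMANDS.get(name)
    if handler is None:
        return f"Error: unknown command '{name}'"
    return handler(**args)


reply = json.dumps({
    "thoughts": {"text": "All goals are met.", "speak": "I am done."},
    "command": {"name": "task_complete", "args": {"reason": "all goals met"}},
})
result = execute(reply)
bad_result = execute("this is not JSON")
```

Returning errors as strings lets the loop feed them back to the model as the next observation instead of crashing.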

● Agent_toolkits

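This module is currently experimental. Its aim is to emulate, replace, or even surpass the capabilities of ChatGPT Plugins by offering a set of tools that can be chained together, so that users can assemble their own workflows. Typical examples include sending email, executing Python code, executing user-provided SQL, and calling the Zapier API.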

    class BaseToolkit(BaseModel, ABC):
        """Base Toolkit representing a collection of related tools."""

        @abstractmethod
        def get_tools(self) -> List[BaseTool]:
            """Get the tools in the toolkit."""

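We can implement different Toolkits by subclassing BaseToolkit. Each Toolkit implements a set of Tools, and a Tool consists of several parts, of which name and description are required. These fields tell the LLM what the tool does and how to call it, so a registered Tool's name should ideally state its purpose clearly, and few-shot examples can be added to the description as call examples to help the LLM understand the Tool. LangChain also ships with many built-in tools that we can call directly to assemble Tools.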

    class BaseTool(BaseModel, Runnable[Union[str, Dict], Any]):
        name: str
        """The unique name of the tool that clearly communicates its purpose."""
        description: str
        """Used to tell the model how/when/why to use the tool.

        You can provide few-shot examples as a part of the description.
        """
        ...

    class Tool(BaseTool):
        """Tool that takes in function or coroutine directly."""

        description: str = ""
        func: Optional[Callable[..., str]]
        """The function to run when the tool is called."""
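To show how name and description end up in front of the model, here is a small stand-alone sketch. `SimpleTool` and `render_tool_prompt` are hypothetical stand-ins written with a plain dataclass; they do not reproduce LangChain's `Tool` class or the exact prompt text it builds.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SimpleTool:
    """Hypothetical minimal tool: a name, a description, and a callable."""
    name: str
    description: str
    func: Callable[[str], str]


def render_tool_prompt(tools: List[SimpleTool]) -> str:
    """Build the tool list that would be placed in the agent's prompt."""
    return "\n".join(f"{tool.name}: {tool.description}" for tool in tools)


tools = [
    SimpleTool(
        name="WordCounter",
        # A few-shot style example inside the description shows the expected input.
        description='Counts the words in a text. Example input: "hello world" gives 2',
        func=lambda text: str(len(text.split())),
    ),
    SimpleTool(
        name="Uppercase",
        description='Converts a text to upper case. Example input: "abc" gives ABC',
        func=lambda text: text.upper(),
    ),
]

tool_prompt = render_tool_prompt(tools)
by_name: Dict[str, SimpleTool] = {tool.name: tool for tool in tools}
word_count = by_name["WordCounter"].func("a b c")
```

The model only ever sees the rendered text, which is why a clear name and a description with a call example matter so much for tool selection.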

1. Search the web to collect the data the question needs

    from langchain.agents import initialize_agent, AgentType, Tool
    from langchain.chains import LLMMathChain
    from langchain.chat_models import ChatOpenAI
    from langchain.llms import OpenAI
    from langchain.utilities import SerpAPIWrapper

    llm = ChatOpenAI(temperature=0, model="gpt-3.5-turbo-0613")
    search = SerpAPIWrapper()
    llm_math_chain = LLMMathChain.from_llm(llm=llm, verbose=True)

    # Register the two tools the agent may choose from
    tools = [
        Tool(
            name="Search",
            func=search.run,
            description="useful for when you need to answer questions about current events. You should ask targeted questions",
        ),
        Tool(
            name="Calculator",
            func=llm_math_chain.run,
            description="useful for when you need to answer questions about math",
        ),
    ]

    agent = initialize_agent(tools, llm, agent=AgentType.OPENAI_FUNCTIONS, verbose=True)
    agent.run("Who is Leo DiCaprio's girlfriend? What is her current age raised to the 0.43 power?")

    > Entering new chain...

    Invoking: `Search` with `{'query': 'Leo DiCaprio girlfriend'}`

    Amidst his casual romance with Gigi, Leo allegedly entered a relationship with 19-year old model, Eden Polani, in February 2023.

    Invoking: `Calculator` with `{'expression': '19^0.43'}`

    > Entering new chain...
    19^0.43```text
    19**0.43
    ```
    ...numexpr.evaluate("19**0.43")...

    Answer: 3.547023357958959
    > Finished chain.
    Answer: 3.547023357958959
    Leo DiCaprio's girlfriend is reportedly Eden Polani. Her current age raised to the power of 0.43 is approximately 3.55.

    > Finished chain.

    "Leo DiCaprio's girlfriend is reportedly Eden Polani. Her current age raised to the power of 0.43 is approximately 3.55."

● Example 2: SQL Agent

    _postgres_prompt = """You are a PostgreSQL expert. Given an input question, first create a syntactically correct PostgreSQL query to run, then look at the results of the query and return the answer to the input question.
    Unless the user specifies in the question a specific number of examples to obtain, query for at most {top_k} results using the LIMIT clause as per PostgreSQL. You can order the results to return the most informative data in the database.
    Never query for all columns from a table. You must query only the columns that are needed to answer the question. Wrap each column name in double quotes (") to denote them as delimited identifiers.
    Pay attention to use only the column names you can see in the tables below. Be careful to not query for columns that do not exist. Also, pay attention to which column is in which table.
    Pay attention to use CURRENT_DATE function to get the current date, if the question involves "today".

    Use the following format:

    Question: Question here
    SQLQuery: SQL Query to run
    SQLResult: Result of the SQLQuery
    Answer: Final answer here

    """

    ## export your openai key first
    # export OPENAI_API_KEY=sk-xxxxx

    from langchain.agents import create_sql_agent
    from langchain.agents.agent_toolkits import SQLDatabaseToolkit
    from langchain.agents import AgentExecutor
    from langchain.llms.tongyi import Tongyi
    from langchain.sql_database import SQLDatabase

    import psycopg2cffi as psycopg2  # pip install psycopg-binary if on linux, just use psycopg2

    from langchain.chat_models import ChatOpenAI

    db = SQLDatabase.from_uri('postgresql+psycopg2cffi://admin:password123@localhost/admin')

    llm = ChatOpenAI(model_name="gpt-3.5-turbo")

    toolkit = SQLDatabaseToolkit(db=db, llm=llm)

    agent_executor = create_sql_agent(
        llm=llm,
        toolkit=toolkit,
        verbose=True,
    )

    agent_executor.run("using the teachers table, find the first_name and last name of teachers who earn less the mean salary?")

As the output shows, after several rounds of reasoning the model answered our question correctly.

    > Entering new AgentExecutor chain...
    Action: sql_db_list_tables
    Action Input: ""
    Observation: teachers
    Thought:I can query the "teachers" table to find the first_name and last_name columns.
    Action: sql_db_schema
    Action Input: "teachers"
    Observation:
    CREATE TABLE teachers (
        id INTEGER,
        first_name VARCHAR(25),
        last_name VARCHAR(50),
        school VARCHAR(50),
        hire_data DATE,
        salary NUMERIC
    )

    /*
    3 rows from teachers table:
    id    first_name    last_name    school    hire_data    salary
    None    Janet    Smith    F.D. Roosevelt HS    2011-10-30    36200
    None    Lee    Reynolds    F.D. Roosevelt HS    1993-05-22    65000
    None    Samuel    Cole    Myers Middle School    2005-08-01    43500
    */
    Thought:I can now construct a query to find the first_name and last_name of teachers who earn less than the mean salary.
    Action: sql_db_query
    Action Input: "SELECT first_name, last_name FROM teachers WHERE salary < (SELECT AVG(salary) FROM teachers) LIMIT 10"
    Observation: [('Janet', 'Smith'), ('Samuel', 'Cole'), ('Samantha', 'Bush'), ('Betty', 'Diaz'), ('Kathleen', 'Roush')]
    Thought:Retrying langchain.chat_models.openai.ChatOpenAI.completion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised RateLimitError: Rate limit reached for default-gpt-3.5-turbo in organization org-FDYSniIsv0FIQBi9p4P9Dinn on requests per min. Limit: 3 min. Please try again in 20s. Contact us through our help center at help.openai.com if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method..
    Retrying langchain.chat_models.openai.ChatOpenAI.completion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised RateLimitError: Rate limit reached for default-gpt-3.5-turbo in organization org-FDYSniIsv0FIQBi9p4P9Dinn on requests per min. Limit: 3 min. Please try again in 20s. Contact us through our help center at help.openai.com if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method..
    Retrying langchain.chat_models.openai.ChatOpenAI.completion_with_retry.<locals>._completion_with_retry in 4.0 seconds as it raised RateLimitError: Rate limit reached for default-gpt-3.5-turbo in organization org-FDYSniIsv0FIQBi9p4P9Dinn on requests per min. Limit: 3 min. Please try again in 20s. Contact us through our help center at help.openai.com if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method..
    Retrying langchain.chat_models.openai.ChatOpenAI.completion_with_retry.<locals>._completion_with_retry in 8.0 seconds as it raised RateLimitError: Rate limit reached for default-gpt-3.5-turbo in organization org-FDYSniIsv0FIQBi9p4P9Dinn on requests per min. Limit: 3 min. Please try again in 20s. Contact us through our help center at help.openai.com if you continue to have issues. Please add a payment method to your account to increase your rate limit. Visit https://platform.openai.com/account/billing to add a payment method..
    The first_name and last_name of teachers who earn less than the mean salary are Janet Smith, Samuel Cole, Samantha Bush, Betty Diaz, and Kathleen Roush.
    Final Answer: Janet Smith, Samuel Cole, Samantha Bush, Betty Diaz, Kathleen Roush

    > Finished chain.

    'Janet Smith, Samuel Cole, Samantha Bush, Betty Diaz, Kathleen Roush'
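The SQL that the agent generated can be checked independently of any LLM. The sketch below runs the same query against an in-memory SQLite database, which is an assumption made for portability since the article uses PostgreSQL. It loads only the three sample rows visible in the schema observation, so it returns two names rather than the five found in the full table.

```python
import sqlite3

# In-memory SQLite stands in for the PostgreSQL database used in the article.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE teachers (
        id INTEGER,
        first_name VARCHAR(25),
        last_name VARCHAR(50),
        school VARCHAR(50),
        hire_data DATE,
        salary NUMERIC
    )
    """
)

# Only the three sample rows shown in the agent's schema observation.
conn.executemany(
    "INSERT INTO teachers VALUES (?, ?, ?, ?, ?, ?)",
    [
        (None, "Janet", "Smith", "F.D. Roosevelt HS", "2011-10-30", 36200),
        (None, "Lee", "Reynolds", "F.D. Roosevelt HS", "1993-05-22", 65000),
        (None, "Samuel", "Cole", "Myers Middle School", "2005-08-01", 43500),
    ],
)

# The query is copied verbatim from the agent's sql_db_query action.
rows = conn.execute(
    "SELECT first_name, last_name FROM teachers "
    "WHERE salary < (SELECT AVG(salary) FROM teachers) LIMIT 10"
).fetchall()
conn.close()
```

The mean of the three salaries is about 48,233, so Janet Smith and Samuel Cole fall below it while Lee Reynolds does not.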

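▶︎ Problems and Challenges

At present, Agents are up to the job only in fairly small scenarios: the intent is clear, the Agent is given only a small number of tools (fewer than 10), and the tools differ clearly in purpose. In that case the LLM can pick the right Tool and produce the expected result. When hundreds of tools or more are registered in a single Agent, however, the LLM may no longer select the right Tool. One solution is a multi-level tree of Agents: a parent Agent routes and dispatches tasks to child Agents, and each child Agent holds and uses only a limited Toolkit, which raises the task completion rate in complex scenarios.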
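The parent and child structure can be sketched as follows. In practice both the routing decision and the tool choice inside each child would be made by an LLM; here keyword overlap is a hypothetical stand-in for the router, each child simply calls its first tool, and all class and tool names are illustrative.

```python
from typing import Callable, Dict, List, Set


class ChildAgent:
    """Hypothetical child agent that owns only a small set of tools."""

    def __init__(self, name: str, keywords: Set[str],
                 tools: Dict[str, Callable[[str], str]]) -> None:
        self.name = name
        self.keywords = keywords
        self.tools = tools

    def run(self, task: str) -> str:
        # A real child agent would let an LLM pick among its few tools;
        # this sketch just calls the first one.
        tool_name = next(iter(self.tools))
        return f"{self.name}/{tool_name}: {self.tools[tool_name](task)}"


class RouterAgent:
    """Hypothetical parent agent that dispatches each task to one child."""

    def __init__(self, children: List[ChildAgent]) -> None:
        self.children = children

    def route(self, task: str) -> ChildAgent:
        # Keyword overlap stands in for an LLM-based routing decision.
        words = set(task.lower().split())
        best = max(self.children, key=lambda child: len(words & child.keywords))
        if not words & best.keywords:
            raise LookupError(f"no child agent matches task: {task!r}")
        return best

    def run(self, task: str) -> str:
        return self.route(task).run(task)


sql_agent = ChildAgent("sql_agent", {"table", "sql", "rows"},
                       {"sql_db_query": lambda task: "query executed"})
mail_agent = ChildAgent("mail_agent", {"email", "mail", "send"},
                        {"send_email": lambda task: "email sent"})
router = RouterAgent([sql_agent, mail_agent])
routed = router.run("send the report by email")
```

Because the router only chooses among a handful of children, and each child only among a handful of tools, no single decision has to consider the full tool catalogue.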

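Cloud-native data warehouse AnalyticDB is a massively parallel processing (MPP) data warehouse service that provides online analysis of massive data. On top of its cloud-native data warehouse capabilities it adds a fully self-developed, enterprise-grade vector engine that supports streaming vector writes and retrieval over tens of billions of vectors. It supports multi-path recall across structured data analysis, vector search, and full-text search, and integrates with mainstream large models in China and abroad, such as Tongyi Qianwen.

Introduction to the AnalyticDB vector engine: https://www.aliyun.com/activity/database/adbpg_vector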

https://computenest.console.aliyun.com/user/cn-hangzhou/serviceInstanceCreate?ServiceId=service-ddfecdd9b626465f85b6
