实战TTS迁移单个表空间8T大小,30w+数据对象

- 环境说明

- 基本检查

- 源端配置

- 目标端配置

- 创建pdb

- sftp上传数据文件和元数据文件

- 创建必要的dblink和directory

- 目标端创建用户并赋权

- 目标端导入public对象

- 目标端导入用户元数据

- 表空间置为read write

- 对象比对

- 导入系统权限信息

- 处理无效对象

- 收集数据库和数据字典统计信息

- 回收dba 权限

- 修改用户默认表空间

- 元数据导出导入慢问题说明

环境说明

源端环境:

2节点19c rac。小版本19.13.0.0,归档模式。现在由于存储限制,已经开始存储阈值告警,没有多余空间承载这么大容量。所以经过讨论,把这套库里面的历史数据表空间,10T左右,迁移至别的数据库里面,源端删除表空间,达到释放空间的目的。也就是历史数据归档。

qhactdb2:/home/oracle(qhactdb2)$cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.6 (Maipo)

qhactdb2:/home/oracle(qhactdb2)$sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Nov 1 13:45:06 2023

Version 19.13.0.0.0

Copyright (c) 1982, 2021, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.13.0.0.0

此表空间8.6T左右,大约里面又28w张表。所以元数据信息非常大。

TBS_NAME TYPE TOTAL(GB) USAGE(GB) FREE(GB) FREE PCT % EXTENSIBLE(GB) MAX_SIZE(GB) USED PCT OF MAX % NO_AXF_NUM AXF_NUM

-------------------- -------------------- --------------- --------------- --------------- ---------- --------------- --------------- ----------------- ---------- -------

TBS_OLD_DATA PERMANENT 8,610.000 4,861.615 3,748.385 56.46 .000 8,610.000 56.46 287 0

SQL> archive log list;

Database log mode Archive Mode

Automatic archival Enabled

Archive destination +ARCHIVEDG

Oldest online log sequence 82348

Next log sequence to archive 82357

Current log sequence 82357

SQL> select count(*) from dba_tables where tablespace_name='TBS_OLD_DATA';

COUNT(*)

----------

285482

SQL> select count(*) from dba_indexes where tablespace_name='TBS_OLD_DATA';

COUNT(*)

----------

46397

目标端环境:

有套现成的19c环境,存储空间也足够,所以打算归档到这套库上面,但未开归档。这是个pdb环境,所以可以创建单独pdb,来迁移本次历史表空间。

-bash-4.2$ cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.6 (Maipo)

-bash-4.2$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Nov 1 13:48:39 2023

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.6.0.0.0

SQL> show pdbs;

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

.....

2 PDB$SEED READ ONLY NO

8 TBMDBPRD READ WRITE NO

.....

SQL> archive log list;

Database log mode No Archive Mode

Automatic archival Disabled

Archive destination /u01/app/oracle/product/19.3.0/db_1/dbs/arch

Oldest online log sequence 21446

Current log sequence 21461

lASMCMD> sdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED NORMAL N 512 512 4096 1048576 266649600 183535609 91422720 46056444 0 N HDD_DATA01/

MOUNTED NORMAL N 512 512 4096 4194304 30720 29740 10240 9750 0 Y OCR/

环境限制:

网络:由于两套库没在一个地域,所以开通网络策略后,经过scp和asm cp远程复制测试,带宽仅有1MB/s。那么计算下,在不中断的情况下,10T的表空间,需要传输一个月左右,如果数据泵只传输数据,也要传至少输半个月。所以网络太慢。刚好本地有个跳板机,经他跳转,网速可以达到20MB/s,比直接传输快的多。

硬盘:本地没有多余空间,只有最多安装oracle的100G目录,远远达不到承载落地的目的。但是有块没加入asm的2T共享磁盘,可以临时使用下。

由于是历史数据表空间,上面没有业务,表空间可以无限read only。综上考虑,采用TTS的方案。介于物理和逻辑的方法之间,折中的一种方案。

下面就详细说明下整个迁移过程:

基本检查

其实主要就是表空间自包含检查

SQL> exec dbms_tts.transport_set_check('TBS_OLD_DATA',true);

-- 查看结果,结果为空,表示为自包含

col violations for a70

select * from transport_set_violations;

PL/SQL procedure successfully completed.

SQL> SQL> SQL> SQL>

VIOLATIONS

----------------------------------------------------------------------

ORA-39907: Index UQRY_LC_ZHANGJIN.IND_T2 in tablespace TBS_OLD_DATA po

ints to table UQRY_LC_ZHANGJIN.T2 in tablespace TBS_DH_DATA.

ORA-39921: Default Partition (Table) Tablespace TBS_DH_DATA for TF_F_C

USTOMER not contained in transportable set.

ORA-39907: Index LC_LIPF.TMP_LIPF_USER in tablespace TBS_OLD_DATA poin

ts to table LC_LIPF.TMP_LIPF_USER in tablespace TMP_TS_VB_ACCT_01.

这都容易处理,把表move到合适的表空间即可。当然其中也有技巧。

源端配置

创建文件系统

qhactdb2:/home/grid(+ASM2)$kfod disks=all status=true -------------------------------------------------------------------------------- Disk Size Header Path User Group ================================================================================ ...... 16: 2097152 MB CANDIDATE /dev/asmdisk24 grid asmadmin ...... -------------------------------------------------------------------------------- ORACLE_SID ORACLE_HOME ================================================================================

有一块未用的大磁盘。

[root@qhactdb2 ~]# pvcreate /dev/sdy Physical volume "/dev/sdy" successfully created. [root@qhactdb2 ~]# vgcreate vg_app /dev/sdy Volume group "vg_app" successfully created [root@qhactdb2 ~]# lvcreate -l 100%FREE -n lv_app vg_app Logical volume "lv_app" created. [root@qhactdb2 ~]# mkfs.xfs /dev/vg_app/lv_app meta-data=/dev/vg_app/lv_app isize=512 agcount=4, agsize=134217472 blks = sectsz=512 attr=2, projid32bit=1 = crc=1 finobt=0, sparse=0 data = bsize=4096 blocks=536869888, imaxpct=5 = sunit=0 swidth=0 blks naming =version 2 bsize=4096 ascii-ci=0 ftype=1 log =internal log bsize=4096 blocks=262143, version=2 = sectsz=512 sunit=0 blks, lazy-count=1 realtime =none extsz=4096 blocks=0, rtextents=0 [root@qhactdb2 ~]# mkdir /acttest [root@qhactdb2 ~]# mount /dev/mapper/vg_app-lv_app /acttest/ [root@qhactdb2 ~]# df -h Filesystem Size Used Avail Use% Mounted on ....... /dev/mapper/vg_app-lv_app 2.0T 33M 2.0T 1% /acttest [root@qhactdb2 ~]# cd /acttest/ [root@qhactdb2 acttest]# ls -ltr total 0

表空间置为read only

SQL> alter tablespace TBS_OLD_DATA read only;

Tablespace altered.

生成可传输表空间集

qhactdb2:/dmp(qhactdb2)$nohup expdp \'/ as sysdba\' directory=dmp dumpfile=act_%U.dmp logfile=act.log TRANSPORT_TABLESPACES=TBS_OLD_DATA cluster=n parallel=6 exclude=TABLE_STATISTICS,INDEX_STATISTICS &

会有报错:ORA-39047: Jobs of type TRANSPORTABLE cannot use multiple execution streams.

TRANSPORT_TABLESPACES和parallel不能同时使用。去掉parallel。

qhactdb2:/dmp(qhactdb2)$nohup expdp \'/ as sysdba\' directory=dmp dumpfile=act.dmp logfile=act.log TRANSPORT_TABLESPACES=TBS_OLD_DATA cluster=n exclude=TABLE_STATISTICS,INDEX_STATISTICS &

具体过程如下:

qhactdb2:/dmp(qhactdb2)$cat act.log ;;; Export: Release 19.0.0.0.0 - Production on Thu Nov 2 19:29:23 2023 Version 19.13.0.0.0 Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved. ;;; Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production Starting "SYS"."SYS_EXPORT_TRANSPORTABLE_01": "/******** AS SYSDBA" directory=dmp dumpfile=act.dmp logfile=act.log TRANSPORT_TABLESPACES=TBS_OLD_DATA cluster=n exclude=TABLE_STATISTICS,INDEX_STATISTICS Processing object type TRANSPORTABLE_EXPORT/PLUGTS_BLK Processing object type TRANSPORTABLE_EXPORT/POST_INSTANCE/PLUGTS_BLK Processing object type TRANSPORTABLE_EXPORT/TABLE Processing object type TRANSPORTABLE_EXPORT/GRANT/OWNER_GRANT/OBJECT_GRANT Processing object type TRANSPORTABLE_EXPORT/INDEX/INDEX Processing object type TRANSPORTABLE_EXPORT/CONSTRAINT/CONSTRAINT Processing object type TRANSPORTABLE_EXPORT/COMMENT Processing object type TRANSPORTABLE_EXPORT/CONSTRAINT/REF_CONSTRAINT Master table "SYS"."SYS_EXPORT_TRANSPORTABLE_01" successfully loaded/unloaded ****************************************************************************** Dump file set for SYS.SYS_EXPORT_TRANSPORTABLE_01 is: /dmp/act.dmp ****************************************************************************** Datafiles required for transportable tablespace TBS_OLD_DATA: +DATADG1/QHACTDB/DATAFILE/tbs_old_data.359.1048698211 +DATADG1/QHACTDB/DATAFILE/tbs_old_data.362.1050870189 ................... +DATADG2/QHACTDB/DATAFILE/tbs_old_data.829.1120386801 +DATADG2/QHACTDB/DATAFILE/tbs_old_data.830.1120386865 +DATADG2/QHACTDB/DATAFILE/tbs_old_data.831.1120386925 Job "SYS"."SYS_EXPORT_TRANSPORTABLE_01" successfully completed at Thu Nov 2 21:17:22 2023 elapsed 0 01:47:57

30w+个对象,光导出元数据,就耗费了近2h。

落地asm数据文件

总共287个datafile

SQL> select file_name from dba_data_files where tablespace_name='TBS_OLD_DATA'

FILE_NAME

--------------------------------------------------------------------------------

+DATADG2/QHACTDB/DATAFILE/tbs_old_data.829.1120386801

+DATADG2/QHACTDB/DATAFILE/tbs_old_data.830.1120386865

+DATADG2/QHACTDB/DATAFILE/tbs_old_data.831.1120386925

+DATADG1/QHACTDB/DATAFILE/tbs_old_data.941.1123234871

.....

ASMCMD> cp +DATADG1/QHACTDB/DATAFILE/tbs_old_data.359.1048698211 /acttest/tbs_old_data_1.dbf

cp +DATADG1/QHACTDB/DATAFILE/tbs_old_data.362.1050870189 /acttest/tbs_old_data_2.dbf

cp +DATADG1/QHACTDB/DATAFILE/tbs_old_data.363.1050870415 /acttest/tbs_old_data_3.dbf

cp +DATADG1/QHACTDB/DATAFILE/tbs_old_data.364.1050870523 /acttest/tbs_old_data_4.dbf

cp +DATADG1/QHACTDB/DATAFILE/tbs_old_data.365.1050870527 /acttest/tbs_old_data_5.dbf

cp +DATADG1/QHACTDB/DATAFILE/tbs_old_data.366.1050870531 /acttest/tbs_old_data_6.dbf

cp +DATADG1/QHACTDB/DATAFILE/tbs_old_data.367.1050870533 /acttest/tbs_old_data_7.dbf

cp +DATADG1/QHACTDB/DATAFILE/tbs_old_data.368.1050870537 /acttest/tbs_old_data_8.dbf

.......

ASMCMD> copying +DATADG1/QHACTDB/DATAFILE/tbs_old_data.362.1050870189 -> /acttest/tbs_old_data_2.dbf

ASMCMD> copying +DATADG1/QHACTDB/DATAFILE/tbs_old_data.363.1050870415 -> /acttest/tbs_old_data_3.dbf

ASMCMD> copying +DATADG1/QHACTDB/DATAFILE/tbs_old_data.364.1050870523 -> /acttest/tbs_old_data_4.dbf

ASMCMD> copying +DATADG1/QHACTDB/DATAFILE/tbs_old_data.365.1050870527 -> /acttest/tbs_old_data_5.dbf

ASMCMD> copying +DATADG1/QHACTDB/DATAFILE/tbs_old_data.366.1050870531 -> /acttest/tbs_old_data_6.dbf

ASMCMD> copying +DATADG1/QHACTDB/DATAFILE/tbs_old_data.367.1050870533 -> /acttest/tbs_old_data_7.dbf

ASMCMD> copying +DATADG1/QHACTDB/DATAFILE/tbs_old_data.368.1050870537 -> /acttest/tbs_old_data_8.dbf

.......

压缩数据文件

由于网络带宽有限制,所以这10T的数据文件传起来还是有压力的,经过再三考虑,可以在源端先进行文件压缩,再进行传输,即可减少待传输的数据量。

经过前期测试,gzip压缩解压太耗时,此处采用它的并行版本pigz。

pigz(parallel implementation of gzip)是一个并行执行的压缩工具,解压缩比gzip快,同时CPU消耗是gzip的好几倍,在对短时间内CPU消耗较高不受影响的场景下,可以使用pigz。

测试压缩一个文件:

[root@qhactdb2 acttest]# pigz tbs_old_data_130.dbf

top - 21:35:59 up 315 days, 17:38, 9 users, load average: 11.92, 10.84, 10.83

Tasks: 3463 total, 6 running, 3457 sleeping, 0 stopped, 0 zombie

%Cpu(s): 7.2 us, 2.0 sy, 0.0 ni, 89.7 id, 1.0 wa, 0.0 hi, 0.1 si, 0.0 st

KiB Mem : 79055136+total, 74306400 free, 51713510+used, 19910987+buff/cache

KiB Swap: 20971516 total, 17057532 free, 3913984 used. 24702427+avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

43881 root 20 0 11.3g 48588 700 D 552.3 0.0 1:37.41 pigz

158871 root 20 0 12.7g 3.2g 56856 S 145.1 0.4 284554:55 dsware_vbs

105001 root 20 0 4620 860 448 R 99.7 0.0 254:44.53 gzip

34863 root 20 0 4620 860 448 D 99.0 0.0 189:46.59 gzip

86044 root 20 0 4620 856 448 R 99.0 0.0 222:05.52 gzip

72305 root 20 0 4620 848 448 R 98.7 0.0 54:06.82 gzip

确实比gzip速度快,但是cpu消耗是gzip的几倍大。

用法:

1、压缩单个文件

pigz -k your_file_name

# 加上-k选项保留原始文件,会在当前工作目录获得压缩后的your_file_name.gz 文件

2、解压单个文件

unpigz -d your_file_name.gz

# 同样,如果需要保留.gz文件,记得加上-k选项

3、压缩文件夹

tar -cvf - dir1 dir2 dir3 | pigz > output.tar.gz

# pigz没有压缩文件夹的选项,只能压缩单个文件。若想压缩文件夹,可以结合tar使用

4、解压文件夹

pigz -d output.tar.gz

# 这样得到的是output.tar,再通过tar解打包就好了

tar -xzvf output.tar.gz # 也可以直接用tar命令解压

常用参数

-0 ~ -9 压缩等级,数字越大压缩率越高,速度越慢,默认为6 -k --keep 压缩后不删除原始文件 -l --list 列出压缩输入的内容 -K --zip Compress to PKWare zip (.zip) single entry format -d --decompress 解压缩输入 -p --processes n 使用n核处理,默认为使用所有CPU核心

参考:https://www.jianshu.com/p/db81073b491c

此处分多个文件夹,采用pigz并行压缩:

[root@qhactdb2 3]# pigz *

因为是核心生产库,注意观察cpu的使用率,如果使用率过高,注意-p调节cpu核心数。

sftp传输

此过程耗费了主要的时间,cp,压缩,落地传输,解压,cp大概经历了48h。

目标端配置

创建pdb

SQL> create pluggable database OLDACTDB admin user pdbadmin identified by xxxx;

Pluggable database created.

SQL> show pdbs;

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

......

11 OLDACTDB MOUNTED

......

SQL> alter pluggable database OLDACTDB open;

Pluggable database altered.

收集下统计信息,要不然后面impdp元数据慢的很

set serveroutput on

set timing on

exec dbms_stats.set_param('DEGREE','64');

select dbms_stats.get_param('DEGREE') from dual;

exec dbms_stats.gather_database_stats;

exec dbms_stats.gather_dictionary_stats;

exec dbms_stats.gather_fixed_objects_stats;

exec dbms_stats.set_param('DEGREE','0');

commit;

sftp上传数据文件和元数据文件

先解压这些传输的文件

[root@zdb034 acttest]# cd 4/ [root@zdb034 4]# unpigz -d *

赋权

[root@zdb034 dsgarchive]# chown oracle:oinstall -R acttest/ [root@zdb034 dsgarchive]# chmod 775 -R acttest/

文件系统拷贝到asm中

ASMCMD> cp /dsgarchive/acttest/1/tbs_old_data_1.dbf +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/ cp /dsgarchive/acttest/1/tbs_old_data_2.dbf +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/ cp /dsgarchive/acttest/1/tbs_old_data_3.dbf +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/ cp /dsgarchive/acttest/1/tbs_old_data_4.dbf +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/ cp /dsgarchive/acttest/1/tbs_old_data_5.dbf +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/ .......

创建必要的dblink和directory

create public database link to_act connect to system identified by "xxxx" using '(DESCRIPTION =(ADDRESS_LIST =(ADDRESS =(PROTOCOL = TCP)(HOST = xx.x.x.x)(PORT = 1688)))(CONNECT_DATA =(SERVICE_NAME = xxaxxdb)))';

create directory impdp as '/home/oracle/enmo';

grant read,write on directory impdp to public;

目标端创建用户并赋权

源端执行脚本,输出结果,目标端执行创建语句。

set serveroutput ON echo on DECLARE v_sql VARCHAR2 (2000); BEGIN FOR c_username IN (SELECT name, password FROM sys.user$ WHERE name NOT IN ('ANONYMOUS', 'APEX_030200', 'APEX_PUBLIC_USER', 'APPQOSSYS', 'CTXSYS', 'DBSNMP', 'DIP', 'EXFSYS', 'FLOWS_FILES', 'MDDATA', 'MDSYS', 'MGMT_VIEW', 'OLAPSYS', 'ORACLE_OCM', 'ORDDATA', 'ORDPLUGINS', 'ORDSYS', 'OUTLN', 'OWBSYS', 'OWBSYS_AUDIT', 'SI_INFORMTN_SCHEMA', 'SPATIAL_CSW_ADMIN_USR', 'SPATIAL_WFS_ADMIN_USR', 'SYS', 'SYSMAN', 'SYSTEM', 'WMSYS', 'XDB', 'XS$NULL','DMSYS','TSMSYS') AND TYPE# = 1) LOOP v_sql := 'create user ' || c_username.name || ' identified by values '||chr(39) || c_username.password||chr(39) || ';'; DBMS_OUTPUT.put_line (v_sql); END LOOP; END; / create user UOP_VB1 identified by values 'F4C240C968599350'; create user UQRY_LC_ZHANGJIN identified by values 'B5739F5CCA86DE9F'; create user MD identified by values 'FFC9664CE4665CE6'; ...... create user LC_WANGJ identified by values '471F79A2D0CCB210'; create user YD_MAWX identified by values 'EB90D6C7A0AA2F61';

为了后面导入元数据报错权限,线都赋予dba权限,后面回收即可

grant dba to UOP_VB1 ;

......

grant dba to LC_WANGJ ;

grant dba to YD_MAWX ;

目标端导入public对象

nohup impdp system/xxxx@oldact include=db_link:\"" in \(select db_link from dba_db_links where owner=\'PUBLIC\'\)\"" include=profile include=role FULL=Y directory=impdp network_link=TO_ACT logfile=dblink.log &

具体过程如下:

-bash-4.2$ cat dblink.log ;;; Import: Release 19.0.0.0.0 - Production on Thu Nov 2 15:23:11 2023 Version 19.6.0.0.0 Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved. ;;; Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production FLASHBACK automatically enabled to preserve database integrity. Starting "SYSTEM"."SYS_IMPORT_FULL_01": system/********@oldact include=db_link:" in \(select db_link from dba_db_links where owner=\'PUBLIC\'\)" include=profile include=role FULL=Y directory=impdp network_link=TO_ACT logfile=dblink.log Estimate in progress using BLOCKS method... Total estimation using BLOCKS method: 0 KB Processing object type DATABASE_EXPORT/PROFILE ...... Processing object type DATABASE_EXPORT/ROLE Processing object type DATABASE_EXPORT/SCHEMA/DB_LINK Job "SYSTEM"."SYS_IMPORT_FULL_01" completed with 3 error(s) at Thu Nov 2 15:43:43 2023 elapsed 0 00:20:16

目标端导入用户元数据

-bash-4.2$ cat actpar.par dumpfile=act.dmp directory=impdp metrics=yes cluster=N TRANSPORT_datafiles='+HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_1.dbf','+HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_2.dbf','+HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_3.dbf','+HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_4.dbf',。。。。。。。。。。'+HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_286.dbf','+HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_287.dbf' nohup impdp system/xxxx@oldact parfile=actpar.par &

具体过程如下:

-bash-4.2$ impdp system/xxxx@oldact attach="SYSTEM"."SYS_IMPORT_TRANSPORTABLE_01"

Import: Release 19.0.0.0.0 - Production on Fri Nov 3 10:27:15 2023

Version 19.6.0.0.0

Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Job: SYS_IMPORT_TRANSPORTABLE_01

Owner: SYSTEM

Operation: IMPORT

Creator Privs: TRUE

GUID: 093721F92DBB7047E0632204BC873D40

Start Time: Friday, 03 November, 2023 10:19:25

Mode: TRANSPORTABLE

Instance: zhjqdb1

Max Parallelism: 1

Timezone: +00:00

Export timezone: +08:00

Timezone version: 32

Endianness: LITTLE

NLS character set: ZHS16GBK

NLS NCHAR character set: AL16UTF16

EXPORT Job Parameters:

Parameter Name Parameter Value:

CLIENT_COMMAND "/******** AS SYSDBA" directory=dmp dumpfile=act.dmp logfile=act.log TRANSPORT_TABLESPACES=TBS_OLD_DATA cluster=n exclude=TABLE_STATISTICS,INDEX_STATISTICS

TRACE 0

TTS_FULL_CHECK 0

IMPORT Job Parameters:

Parameter Name Parameter Value:

CLIENT_COMMAND system/********@oldact parfile=/home/oracle/enmo/actpar.par

METRICS 1

TABLESPACE_DATAFILE +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_1.dbf

TRACE 0

TABLESPACE_DATAFILE +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_2.dbf

TABLESPACE_DATAFILE +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_3.dbf

TABLESPACE_DATAFILE +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_4.dbf

....................................

TABLESPACE_DATAFILE +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_286.dbf

TABLESPACE_DATAFILE +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs_old_data_287.dbf

State: EXECUTING

Bytes Processed: 0

Current Parallelism: 1

Job Error Count: 0

Job heartbeat: 3

Dump File: /home/oracle/enmo/act.dmp

Worker 1 Status:

Instance ID: 1

Instance name: zhjqdb1

Host name: zdb034

Object start time: Friday, 03 November, 2023 10:27:35

Object status at: Friday, 03 November, 2023 10:27:35

Process Name: DW00

State: EXECUTING

Object Schema: YD_WANGSD

Object Name: WSD_B_ACCOUNTDEPOSIT_202006

Object Type: TRANSPORTABLE_EXPORT/TABLE

Completed Objects: 1,016

Worker Parallelism: 1

--过一会:

Import> status

Job: SYS_IMPORT_TRANSPORTABLE_01

Operation: IMPORT

Mode: TRANSPORTABLE

State: EXECUTING

Bytes Processed: 0

Current Parallelism: 1

Job Error Count: 0

Job heartbeat: 277

Dump File: /home/oracle/enmo/act.dmp

Worker 1 Status:

Instance ID: 1

Instance name: zhjqdb1

Host name: zdb034

Object start time: Friday, 03 November, 2023 15:17:35

Object status at: Friday, 03 November, 2023 15:17:35

Process Name: DW00

State: EXECUTING

Object Schema: OLD_AD

Object Name: CA_RES_LOG_D_0977_1_20200817

Object Type: TRANSPORTABLE_EXPORT/TABLE

Completed Objects: 18,257

Worker Parallelism: 1

--过26h以后:

Import> status

Job: SYS_IMPORT_TRANSPORTABLE_01

Operation: IMPORT

Mode: TRANSPORTABLE

State: EXECUTING

Bytes Processed: 0

Current Parallelism: 1

Job Error Count: 0

Job heartbeat: 1521

Dump File: /home/oracle/enmo/act.dmp

Worker 1 Status:

Instance ID: 1

Instance name: zhjqdb1

Host name: zdb034

Object start time: Saturday, 04 November, 2023 13:01:13

Object status at: Saturday, 04 November, 2023 13:01:13

Process Name: DW00

State: EXECUTING

Object Schema: OLD_AD

Object Name: CA_PROM_DTL_0970_6_20200623

Object Type: TRANSPORTABLE_EXPORT/TABLE

Completed Objects: 192,066

Worker Parallelism: 1

--已经导入19w个对象

W-1 Processing object type TRANSPORTABLE_EXPORT/TABLE

W-1 Completed 285508 TABLE objects in 145806 seconds

--经历了145806s,表的元数据导入完成。

虽然慢,Completed Objects 对象数目一直在变,说明在慢慢入库。刚开始,还排查了impdp hang的问题,排查了一大圈,除过等待事件“wait for unread message on broadcast channel”,其他都正常。

经测试,TTS导入元数据的时候,也不能使用并行。仍然会报错ORA-39047。如下:

[oracle@11gasm ~]$ impdp \'/ as sysdba\' dumpfile=zhuo.dmp directory=DATA_PUMP_DIR TRANSPORT_datafiles='+DATADG/orcl/datafile/o1_mf_zhuo_gxdcfr5s_.dbf' parallel=4 Import: Release 11.2.0.4.0 - Production on Thu Nov 2 22:02:10 2023 Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production With the Partitioning, Automatic Storage Management, OLAP, Data Mining and Real Application Testing options ORA-39002: invalid operation ORA-39047: Jobs of type TRANSPORTABLE cannot use multiple execution streams.

从后面知道,只有从20c开始,oracle加强了TTS导出导入元数据,可以使用并行。

使用impdp+network_link可以不落地进行元数据导入,但是参数TRANSPORT_datafiles和TRANSPORT_TABLESPACES 必须连用:

[oracle@11gasm ~]$ impdp \'/ as sysdba\' network_link=to_orcl directory=DATA_PUMP_DIR TRANSPORT_datafiles='+DATADG/orcl/datafile/o1_mf_zhuo_gxdcfr5s_.dbf' TRANSPORT_TABLESPACES=zhuo Import: Release 11.2.0.4.0 - Production on Thu Nov 2 22:07:02 2023 Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production With the Partitioning, Automatic Storage Management, OLAP, Data Mining and Real Application Testing options Starting "SYS"."SYS_IMPORT_TRANSPORTABLE_01": "/******** AS SYSDBA" network_link=to_orcl directory=DATA_PUMP_DIR TRANSPORT_datafiles=+DATADG/orcl/datafile/o1_mf_zhuo_gxdcfr5s_.dbf TRANSPORT_TABLESPACES=zhuo Processing object type TRANSPORTABLE_EXPORT/PLUGTS_BLK Processing object type TRANSPORTABLE_EXPORT/TABLE Processing object type TRANSPORTABLE_EXPORT/TABLE_STATISTICS Processing object type TRANSPORTABLE_EXPORT/POST_INSTANCE/PLUGTS_BLK Job "SYS"."SYS_IMPORT_TRANSPORTABLE_01" successfully completed at Thu Nov 2 22:07:14 2023 elapsed 0 00:00:12 [oracle@11gasm ~]$ impdp \'/ as sysdba\' network_link=to_orcl directory=DATA_PUMP_DIR TRANSPORT_datafiles='+DATADG/orcl/datafile/o1_mf_zhuo_gxdcfr5s_.dbf' Import: Release 11.2.0.4.0 - Production on Thu Nov 2 22:06:37 2023 Copyright (c) 1982, 2011, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 11g Enterprise Edition Release 11.2.0.4.0 - 64bit Production With the Partitioning, Automatic Storage Management, OLAP, Data Mining and Real Application Testing options ORA-39004: invalid state ORA-39053: parameter or attribute TRANSPORT_TABLESPACES must be defined for a TRANSPORTABLE job

19c的元数据导入,默认会导入对象权限信息。

Import> status

Job: SYS_IMPORT_TRANSPORTABLE_01

Operation: IMPORT

Mode: TRANSPORTABLE

State: EXECUTING

Bytes Processed: 0

Current Parallelism: 1

Job Error Count: 0

Job heartbeat: 1072

Dump File: /home/oracle/enmo/act.dmp

Worker 1 Status:

Instance ID: 1

Instance name: zhjqdb1

Host name: zdb034

Object start time: Sunday, 05 November, 2023 21:36:33

Object status at: Sunday, 05 November, 2023 21:36:33

Process Name: DW00

State: EXECUTING

Object Schema: OLD_AD

Object Name: ALTER

Object Type: TRANSPORTABLE_EXPORT/GRANT/OWNER_GRANT/OBJECT_GRANT

Completed Objects: 2,256,000

Worker Parallelism: 1

源端查询权限数量:

SQL> select count(*) from dba_tab_privs;

COUNT(*)

----------

11017811

权限数量也确实够多。

完整的元数据导入日志如下:

-bash-4.2$ cat actpar.log ;;; Import: Release 19.0.0.0.0 - Production on Fri Nov 3 10:19:22 2023 Version 19.6.0.0.0 Copyright (c) 1982, 2019, Oracle and/or its affiliates. All rights reserved. ;;; Connected to: Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production ;;; ************************************************************************** ;;; Parfile values: ;;; parfile: transport_datafiles=+HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204B ;;; _parfile: C87EA64/DATAFILE/tbs_old_data_1.dbf, ;;; _parfile: +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs ;;; _parfile: _old_data_2.dbf, ;;; _parfile: +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs ;;; _parfile: _old_data_3.dbf, ......................................................... ;;; _parfile: _old_data_285.dbf, ;;; _parfile: +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs ;;; _parfile: _old_data_286.dbf, ;;; _parfile: +HDD_DATA01/ZHJQDB/091038C4D2A63642E0632204BC87EA64/DATAFILE/tbs ;;; _parfile: _old_data_287.dbf ;;; parfile: directory=impdp ;;; parfile: cluster=N ;;; parfile: metrics=Y ;;; parfile: logfile=actpar.log ;;; parfile: dumpfile=act.dmp ;;; ************************************************************************** W-1 Startup took 0 seconds W-1 Master table "SYSTEM"."SYS_IMPORT_TRANSPORTABLE_01" successfully loaded/unloaded W-1 Source time zone is +08:00 and target time zone is +00:00. Starting "SYSTEM"."SYS_IMPORT_TRANSPORTABLE_01": system/********@oldact parfile=/home/oracle/enmo/actpar.par W-1 Processing object type TRANSPORTABLE_EXPORT/PLUGTS_BLK W-1 Completed 1 PLUGTS_BLK objects in 71 seconds W-1 Processing object type TRANSPORTABLE_EXPORT/TABLE W-1 Completed 285508 TABLE objects in 145806 seconds W-1 Processing object type TRANSPORTABLE_EXPORT/GRANT/OWNER_GRANT/OBJECT_GRANT W-1 Completed 2714737 OBJECT_GRANT objects in 79652 seconds W-1 Processing object type TRANSPORTABLE_EXPORT/INDEX/INDEX W-1 Completed 4006 INDEX objects in 637 seconds W-1 Processing object type TRANSPORTABLE_EXPORT/CONSTRAINT/CONSTRAINT W-1 Completed 68398 CONSTRAINT objects in 9014 seconds W-1 Processing object type TRANSPORTABLE_EXPORT/COMMENT W-1 Completed 2792 COMMENT objects in 69 seconds W-1 Processing object type TRANSPORTABLE_EXPORT/CONSTRAINT/REF_CONSTRAINT W-1 Completed 3 REF_CONSTRAINT objects in 1 seconds W-1 Processing object type TRANSPORTABLE_EXPORT/POST_INSTANCE/PLUGTS_BLK W-1 Completed 1 PLUGTS_BLK objects in 11529 seconds Job "SYSTEM"."SYS_IMPORT_TRANSPORTABLE_01" successfully completed at Mon Nov 6 07:02:09 2023 elapsed 2 20:42:45

整个过程耗时2天21个小时。太夸张了。。。

加上前面的传输文件,2天。整个过程持续了5天左右。确实够长,还好,这个表空间是历史归档数据。可以一直停机。

表空间置为read write

SQL> alter tablespace TBS_OLD_DATA read write;

Tablespace altered.

SQL> select TABLESPACE_NAME,status,CONTENTS from dba_tablespaces;

TABLESPACE_NAME STATUS CONTENTS

------------------------------ --------- ---------------------

SYSTEM ONLINE PERMANENT

SYSAUX ONLINE PERMANENT

UNDOTBS1 ONLINE UNDO

TEMP ONLINE TEMPORARY

TBS_OLD_DATA ONLINE PERMANENT

对象比对

表空间信息:

Mon Nov 06 page 1

Tablespace Usage Status

TBS_NAME TYPE TOTAL(GB) USAGE(GB) FREE(GB) FREE PCT % EXTENSIBLE(GB) MAX_SIZE(GB) USED PCT OF MAX % NO_AXF_NUM AXF_NUM

-------------------- -------------------- --------------- --------------- --------------- ---------- --------------- --------------- ----------------- ---------- -------

TBS_OLD_DATA PERMANENT 8,610.000 4,782.176 3,827.824 55.54 .000 8,610.000 55.54 287 0

SYSTEM PERMANENT 14.678 2.788 11.889 19.00 17.322 32.000 8.71 0 1

SYSAUX PERMANENT 2.188 2.074 .113 94.82 29.812 32.000 6.48 0 1

UNDOTBS1 UNDO 1.011 .889 .122 87.93 30.989 32.000 2.78 0 1

TEMP TEMPORARY .339 .000 .339 .00 31.661 32.000 .00 0 1

对象信息:

SQL> select owner,table_name from dba_tables where tablespace_name='TBS_OLD_DATA'

2 minus

3 select owner,table_name from dba_tables@to_act where tablespace_name='TBS_OLD_DATA';

Mon Nov 06 page 1

Tablespace Usage Status

OWNER TABLE_NAME

-------------------- --------------------

LC_ZHAOGE TEMP_CALFACTOR_ERROR

SQL> select owner,index_name from dba_indexes where tablespace_name='TBS_OLD_DATA'

2 minus

3 select owner,index_name from dba_indexes@to_act where tablespace_name='TBS_OLD_DATA';

Mon Nov 06 page 1

Tablespace Usage Status

OWNER INDEX_NAME

-------------------- ------------------------------

JD SYS_C001806330

JD SYS_C001832189

JD SYS_C001848608

JD SYS_IL0005623531C00003$$

LC_LIPF SYS_IL0005623543C00003$$

LC_LIPF SYS_IL0005623569C00003$$

OGG SYS_IL0005623557C00001$$

OGG SYS_IL0005623560C00001$$

OGG SYS_IL0005623563C00001$$

OGG SYS_IL0005623566C00001$$

OLD_AD SYS_C001806081

OLD_AD SYS_C001813410

OLD_AD SYS_C001814737

OLD_AD SYS_C001840432

OLD_AD SYS_C001848530

OLD_AD SYS_IL0005623534C00008$$

OLD_AD SYS_IL0005623537C00003$$

OLD_AD SYS_IL0005623540C00003$$

UTOPTEA SYS_IL0005623546C00112$$

UTOPTEA SYS_IL0005623549C00002$$

UTOPTEA SYS_IL0005623552C00007$$

UTOPTEA SYS_IL0005623552C00008$$

22 rows selected.

SQL> @table_info

ENTER YOU TABLE_NAME: TEMP_CALFACTOR_ERROR

+----------------------------------------------------------------------------+

| TABLE INFORMATION******************************************** |

+----------------------------------------------------------------------------+

Tablespace

OWNER TABLENAME Name ANALYZED INI_TRANS PAR TEMP BYTES PCT_FREE PCT_USED NUM_ROWS

---------- ------------------------- -------------------- -------------------- ---------- --- ---- ---------- ---------- ---------- ----------

LC_ZHAOGE TEMP_CALFACTOR_ERROR TBS_OLD_DATA 2023-11-05 16:36:17 1 NO N 1M 10 0

+----------------------------------------------------------------------------+

| OBJECT INFORMATION |

+----------------------------------------------------------------------------+

OBJECT_ID OWNER OBJECT_NAME OBJECT_TYP STATUS CREATED LASTDDLTIME STATUS Temp

---------- ---------- ------------------------------ ---------- ------- -------------------- -------------------- ------- ---------------

5604804 LC_ZHAOGE TEMP_CALFACTOR_ERROR TABLE VALID 2023-11-05 00:13:46 2023-11-05 03:07:49 VALID N

多余的这张表是昨天刚新建的。

多余的索引,都是lob字段对应的段和索引段,重新生成的。

所以对象都迁移过来了

导入系统权限信息

nohup impdp system/xxxx@oldact metrics=yes directory=impdp network_link=TO_ACT cluster=N logfile=act11.log full=y include=system_grant,ROLE_GRANT &

处理无效对象

exec utl_recomp.recomp_parallel(60);

收集数据库和数据字典统计信息

set serveroutput on

set timing on

exec dbms_stats.set_param('DEGREE','64');

select dbms_stats.get_param('DEGREE') from dual;

exec dbms_stats.gather_database_stats;

exec dbms_stats.gather_dictionary_stats;

exec dbms_stats.gather_fixed_objects_stats;

exec dbms_stats.set_param('DEGREE','0');

commit;

回收dba 权限

revoke dba from UOP_VB1 ;

revoke dba from UQRY_LC_ZHANGJIN ;

revoke dba from MD ;

revoke dba from PD ;

revoke dba from SD ;

revoke dba from BD ;

revoke dba from AD ;

revoke dba from ZD ;

.........

修改用户默认表空间

alter user LC_QIYZ default tablespace TBS_OLD_DATA;

alter user LC_ZHANGSM default tablespace TBS_OLD_DATA;

alter user LC_YANHL default tablespace TBS_OLD_DATA;

alter user LC_CHENWJ default tablespace TBS_OLD_DATA;

alter user LC_MAJL default tablespace TBS_OLD_DATA;

.............

alter user YD_GUOYN default tablespace TBS_OLD_DATA;

alter user LC_MINR default tablespace TBS_OLD_DATA;

alter user TOPTEACT default tablespace TBS_OLD_DATA;

alter user LC_LIJ default tablespace TBS_OLD_DATA;

alter user LC_DIZW default tablespace TBS_OLD_DATA;

alter user LC_LIX default tablespace TBS_OLD_DATA;

alter user UOP_VB1 temporary tablespace temp;

alter user UQRY_LC_ZHANGJIN temporary tablespace temp;

.........

alter user LC_RUW temporary tablespace temp;

alter user WS_LIQY temporary tablespace temp;

alter user LC_WANGJ temporary tablespace temp;

alter user YD_MAWX temporary tablespace temp;

经历了5天,整个迁移过程就算结束了。

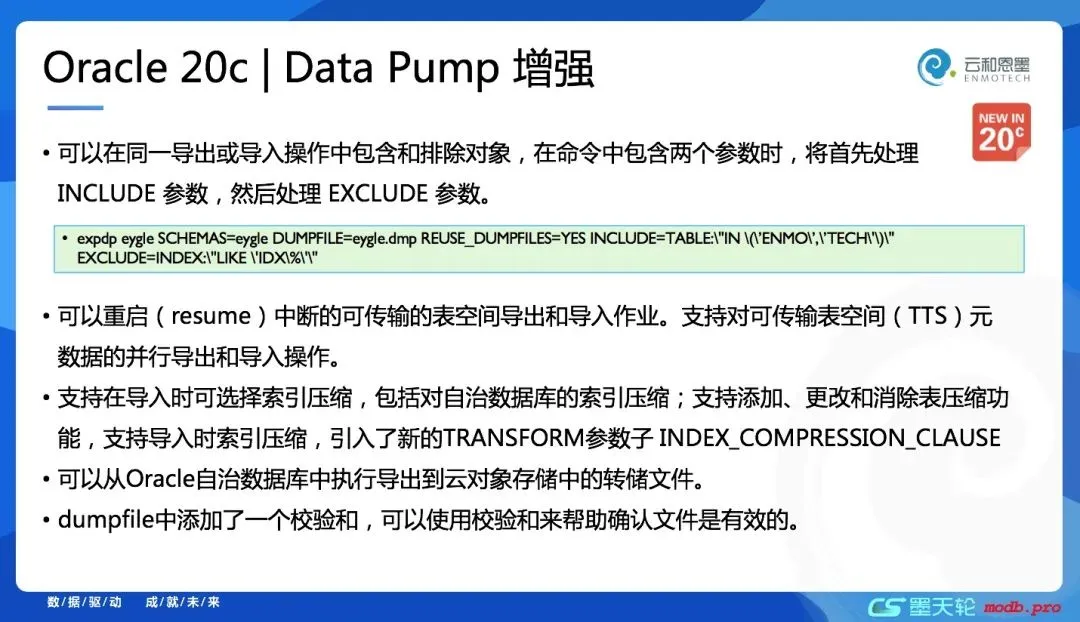

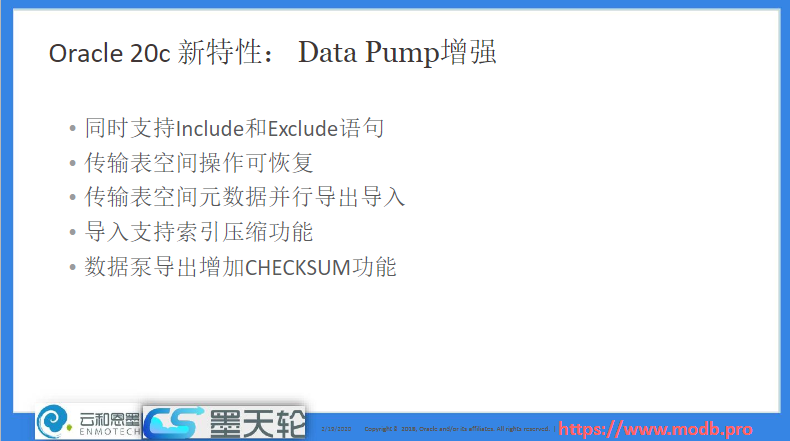

元数据导出导入慢问题说明

· 从12.2版本开始,parallel参数扩展为在expdp和impdp过程中包含元数据,例如parallel>1,所有的元数据和数据会并行卸载

· 从20C版本开始,Data Pump改进了并行传输表空间元数据操作

从Oracle Database 20c开始Oracle Data Pump通过并行性改善了可移植表空间元数据操作。

商业收益:Oracle Data Pump现在支持可移植表空间(TTS)元数据的并行导出和导入操作。这是在TTS迁移中将表空间数据文件与目标数据库关联的信息。并行改进了TTS的导出和导入性能,尤其是当数据文件中有数百万个数据库对象(包括表索引分区和子分区)时。

本次数据库版本为19c,所以TTS的元数据导出导入不能采用并行的方式。如上,参数共用,会报错:ORA-39047。

但是数据库版本大于12.2的时候,数据泵支持元数据的并行。

参考:

https://www.modb.pro/db/24496

https://www.modb.pro/doc/2174

https://www.modb.pro/db/41495

最后,对新版本TTS并行导出导入支持测试:

数据库版本:

[oracle@db1 ~]$ sqlplus / as sysdba

SQL*Plus: Release 23.0.0.0.0 - Beta on Thu Nov 2 23:59:33 2023

Version 23.1.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

Connected to:

Oracle Database 23c Enterprise Edition Release 23.0.0.0.0 - Beta

Version 23.1.0.0.0

SQL>

TRANSPORT_TABLESPACES和parallel共用,并行导出:

[oracle@db1 enmo]$ expdp system/oracle@ENMOPDB1 directory=impdp dumpfile=users_%U.dmp logfile=users.log TRANSPORT_TABLESPACES=users cluster=n parallel=4

Export: Release 23.0.0.0.0 - Beta on Thu Nov 2 23:08:57 2023

Version 23.1.0.0.0

Copyright (c) 1982, 2022, Oracle and/or its affiliates. All rights reserved.

Connected to: Oracle Database 23c Enterprise Edition Release 23.0.0.0.0 - Beta

Starting "SYSTEM"."SYS_EXPORT_TRANSPORTABLE_01": system/********@ENMOPDB1 directory=impdp dumpfile=users_%U.dmp logfile=users.log TRANSPORT_TABLESPACES=users cluster=n parallel=4

Processing object type TRANSPORTABLE_EXPORT/INDEX/STATISTICS/INDEX_STATISTICS

Processing object type TRANSPORTABLE_EXPORT/STATISTICS/TABLE_STATISTICS

Processing object type TRANSPORTABLE_EXPORT/TABLE

Processing object type TRANSPORTABLE_EXPORT/GRANT/OWNER_GRANT/OBJECT_GRANT

Processing object type TRANSPORTABLE_EXPORT/INDEX/INDEX

Processing object type TRANSPORTABLE_EXPORT/PLUGTS_BLK

Processing object type TRANSPORTABLE_EXPORT/CONSTRAINT/REF_CONSTRAINT

Processing object type TRANSPORTABLE_EXPORT/TRIGGER

Processing object type TRANSPORTABLE_EXPORT/CONSTRAINT/CONSTRAINT

Processing object type TRANSPORTABLE_EXPORT/POST_INSTANCE/PLUGTS_BLK

ORA-39439: Warning: cannot process blockchain tables in transportable jobs or over a network link

Master table "SYSTEM"."SYS_EXPORT_TRANSPORTABLE_01" successfully loaded/unloaded

******************************************************************************

Dump file set for SYSTEM.SYS_EXPORT_TRANSPORTABLE_01 is:

/home/oracle/enmo/users_01.dmp

/home/oracle/enmo/users_02.dmp

/home/oracle/enmo/users_03.dmp

******************************************************************************

Datafiles required for transportable tablespace USERS:

+DATA/ENMO/EFEE7AA6FC6F0FFCE053A71614AC1A59/DATAFILE/users.278.1123603769

Job "SYSTEM"."SYS_EXPORT_TRANSPORTABLE_01" completed with 1 error(s) at Thu Nov 2 23:09:34 2023 elapsed 0 00:00:35

--期间查看并行进程

Export> status

Job: SYS_EXPORT_TRANSPORTABLE_01

Operation: EXPORT

Mode: TRANSPORTABLE

State: EXECUTING

Bytes Processed: 0

Current Parallelism: 4

Job Error Count: 0

Job heartbeat: 1

Dump File: /home/oracle/enmo/users_%u.dmp

Dump File: /home/oracle/enmo/users_01.dmp

bytes written: 8,192

Dump File: /home/oracle/enmo/users_02.dmp

bytes written: 12,288

Dump File: /home/oracle/enmo/users_03.dmp

bytes written: 8,192

Worker 1 Status:

Instance ID: 1

Instance name: enmo1

Host name: db1

Object start time: Thursday, 02 November, 2023 23:06:39

Object status at: Thursday, 02 November, 2023 23:06:40

Process Name: DW00

State: EXECUTING

Worker 2 Status:

Instance ID: 1

Instance name: enmo1

Host name: db1

Object start time: Thursday, 02 November, 2023 23:06:43

Object status at: Thursday, 02 November, 2023 23:06:45

Process Name: DW01

State: EXECUTING

Worker 3 Status:

Instance ID: 1

Instance name: enmo1

Host name: db1

Object start time: Thursday, 02 November, 2023 23:06:40

Object status at: Thursday, 02 November, 2023 23:06:41

Process Name: DW02

State: EXECUTING

Worker 4 Status:

Instance ID: 1

Instance name: enmo1

Host name: db1

Object start time: Thursday, 02 November, 2023 23:06:42

Object status at: Thursday, 02 November, 2023 23:06:45

Process Name: DW03

State: EXECUTING

Object Schema: ENMO

Object Name: T1

Object Type: TRANSPORTABLE_EXPORT/TABLE

Completed Objects: 25

Worker Parallelism: 1

[oracle@db1 enmo]$ ls -ltr

total 2224

-rw-rw---- 1 oracle asmadmin 2224128 Nov 2 23:09 users_02.dmp

-rw-rw---- 1 oracle asmadmin 28672 Nov 2 23:09 users_01.dmp

-rw-rw---- 1 oracle asmadmin 20480 Nov 2 23:09 users_03.dmp

-rw-rw-rw- 1 oracle asmadmin 1811 Nov 2 23:09 users.log

23c确实支持TTS的并行导出导入。