I. Background

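YashanDB has recently attracted a lot of attention. We happen to be evaluating domestic (Chinese-developed) databases, so we invited YashanDB's engineers for a technical exchange. One feature of its primary/standby mode turned out to be particularly interesting.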

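Common primary/standby setups include Oracle ADG, MySQL primary/replica replication, PostgreSQL primary/replica replication, and DB2 HADR. Switchover in these setups mostly relies on manual operation, scripts, or tools such as keepalived and HAProxy. If the detection logic in a script or tool is incomplete, a switchover can go wrong and data can be lost. YashanDB's one-primary, multiple-standby architecture instead relies on the Raft protocol to switch over automatically. This feature immediately caught my eye, and I wanted to try it out.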

II. A brief introduction to the Raft protocol

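Raft is a consensus protocol widely used in distributed storage systems. It is rarely seen in relational databases such as Oracle, MySQL, PostgreSQL, and DB2, so DBAs may be unfamiliar with it. Alibaba's OceanBase mainly uses Paxos, another distributed consensus protocol; Raft can be viewed as a simpler, easier-to-understand relative of Paxos.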

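In short, a node is in one of three roles: leader, candidate, or follower. Every node starts as a follower. A follower that hears nothing from a leader within its election timeout becomes a candidate, moves to a new term, and asks the other nodes for votes; the candidate that collects votes from a majority of the cluster becomes the leader for that term. If the vote is split and no candidate reaches a majority, the candidates time out after randomized intervals and start another election in a new term, and this repeats until a leader is elected.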
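The role transitions described above can be sketched in a few lines of Python. This is a toy model of textbook Raft, not YashanDB's implementation: the `Node` class, its method names, and the 150-300 ms timeout range are illustrative assumptions.

```python
import random
from enum import Enum


class Role(Enum):
    FOLLOWER = "follower"
    CANDIDATE = "candidate"
    LEADER = "leader"


def election_timeout(rng, low_ms=150, high_ms=300):
    """Pick a randomized timeout so that nodes rarely time out together.

    The 150-300 ms range is an illustrative assumption, not a YashanDB setting.
    """
    return rng.uniform(low_ms, high_ms)


class Node:
    """Hypothetical node that models only the role and term bookkeeping."""

    def __init__(self, node_id, cluster_size):
        self.node_id = node_id
        self.cluster_size = cluster_size
        self.role = Role.FOLLOWER  # every node starts as a follower
        self.term = 0

    def on_election_timeout(self):
        """No heartbeat arrived in time: start an election in a new term."""
        self.term += 1
        self.role = Role.CANDIDATE
        return 1  # the candidate votes for itself

    def on_votes_counted(self, votes):
        """Become leader only with a strict majority of the whole cluster."""
        if self.role is Role.CANDIDATE and votes > self.cluster_size // 2:
            self.role = Role.LEADER
        return self.role


if __name__ == "__main__":
    node = Node("1-3", cluster_size=4)
    votes = node.on_election_timeout() + 2  # own vote plus two peers
    print(node.on_votes_counted(votes), node.term)
    print(round(election_timeout(random.Random()), 1), "ms until the next retry")
```

With four nodes, three votes form a majority, so the node in the usage example wins its election even though one peer never answers.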

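The leader periodically sends heartbeats to the followers over the network to tell the cluster that it is still alive. When the primary (the leader) goes offline unexpectedly, the followers stop receiving heartbeats and start a new election on their own to choose a new leader.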
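A follower's side of this heartbeat check might look like the sketch below. The class name, the explicit `now` arguments, and the 6-second timeout are assumptions made for illustration; the only observed number is the heartbeat interval, since the run.log excerpt later in this article shows the new leader retrying heartbeats roughly every three seconds.

```python
class HeartbeatMonitor:
    """Follower-side view: has the leader been silent for too long?

    Timestamps are passed in explicitly (in seconds) so the logic is easy
    to test; a real node would read a monotonic clock instead.
    """

    def __init__(self, timeout_s, now):
        self.timeout_s = timeout_s      # assumed value, not a YashanDB default
        self.last_heartbeat = now

    def on_heartbeat(self, now):
        """Record that the leader was heard from at time `now`."""
        self.last_heartbeat = now

    def leader_suspected_dead(self, now):
        """True once the silence has lasted at least the timeout."""
        return now - self.last_heartbeat >= self.timeout_s


if __name__ == "__main__":
    monitor = HeartbeatMonitor(timeout_s=6.0, now=0.0)
    monitor.on_heartbeat(3.0)                   # heartbeat arrives on schedule
    print(monitor.leader_suspected_dead(6.0))   # only 3 s of silence so far
    print(monitor.leader_suspected_dead(9.5))   # 6.5 s of silence: start an election
```

Once `leader_suspected_dead` returns true, the follower would begin the election described in the previous paragraph.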

As a result, a cluster that uses Raft elects its primary autonomously and stably, with essentially no human intervention.

III. Observing the election process

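I will not describe how to install and set up the YashanDB primary/standby architecture here; following the documentation on the official website is enough to get a complete primary/standby environment.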

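One point deserves special mention. Because a Raft election needs votes from a majority of the nodes, a cluster needs at least 3 nodes to keep electing a leader after one node fails. We therefore have to choose the one-primary, multiple-standby mode when deploying, and the primary and each standby must have different node_id values. In YashanDB the node_id is fixed at the very beginning; it can be seen in the configuration file afterwards, and it can even be edited there, but the change does not take effect. So if this feature is to be used in production, the node_id values must be planned in advance. Apart from that, deploying a primary/standby environment in automatic-switchover mode is friendly and smart: two commands are basically enough to get a complete one-primary, multiple-standby cluster that matches our requirements (with the officially recommended parameters).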

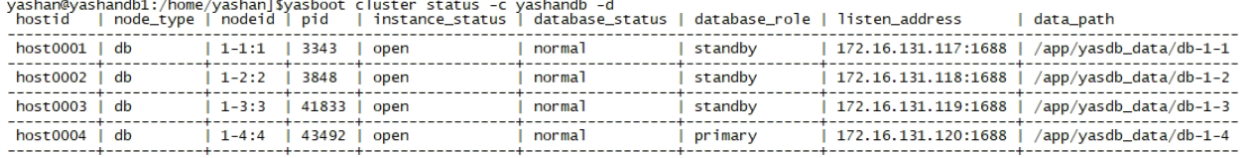
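The node-count requirement follows from simple majority arithmetic, which the sketch below works through. The function names are made up for illustration, and the formulas are the standard majority-quorum ones rather than anything taken from YashanDB's documentation.

```python
def quorum(cluster_size):
    """Smallest number of votes that is a strict majority."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size):
    """How many nodes can be down while a majority can still be formed."""
    return (cluster_size - 1) // 2


if __name__ == "__main__":
    for n in (2, 3, 4, 5):
        print(f"{n} nodes: quorum={quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
    # 2 nodes: quorum=2, tolerates 0 failure(s)
    # 3 nodes: quorum=2, tolerates 1 failure(s)
    # 4 nodes: quorum=3, tolerates 1 failure(s)
    # 5 nodes: quorum=3, tolerates 2 failure(s)
```

A two-node cluster cannot survive the loss of either node, which is why three is the practical minimum. The four-node cluster used in this article tolerates one failure, the same as a three-node cluster.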

Our cluster information is as follows:

yasboot cluster status -c yashandb -d

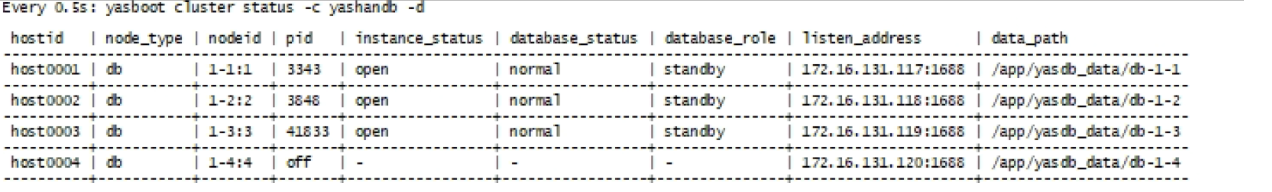

Now let's shut down host0004 and see what happens (this can be observed from the cluster status and the logs).

Right after the shutdown, the cluster is holding an election and has no primary:

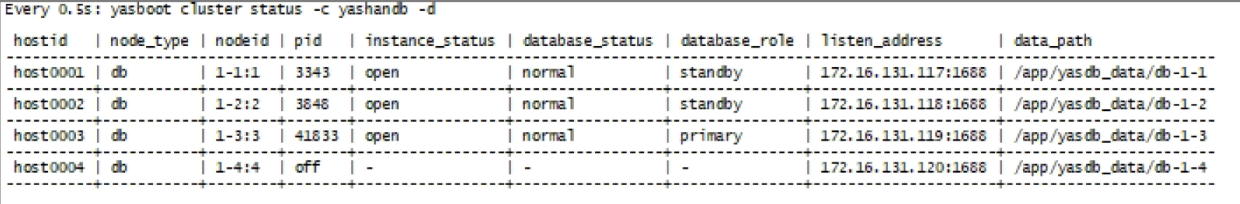

The cluster elects a new primary and a new term begins:

The new primary's log (the db run.log) shows the following:

2023-12-03 19:32:02.759 41848 [ERROR][errno=00406]: connection is closed

2023-12-03 19:32:09.052 41881 [INFO] [election-main][tender::core::follower][(1-3)][Term(7)] an election timeout is hit, need to transit to pre-candidate

2023-12-03 19:32:09.052 41881 [INFO] [election-main][tender::core::candidate][(1-3)][Term(7)] start the pre-candidate loop

2023-12-03 19:32:09.053 41881 [INFO] [ELECTION] last redo point: 7-9-15746-46092

2023-12-03 19:32:09.052 41834 [INFO] [ELECTION_WORKER][yas_election::event][Node((1-3))] an event has happened: TransitToPreCandidate

2023-12-03 19:32:09.054 41836 [WARN] [ELECTION_WORKER][tender::core::candidate][(1-3)][Term(7)] failed to send vote request to node((1-4)): failed to connect socket, errno 111, error message "Connection refused"

2023-12-03 19:32:09.056 41881 [INFO] [election-main][tender::core::candidate][(1-3)][Term(7)] minimum number of pre-votes have been received, so transit to candidate

2023-12-03 19:32:09.057 41881 [INFO] [election-main][tender::core::candidate][(1-3)][Term(7)] start the candidate loop

2023-12-03 19:32:09.057 41836 [INFO] [ELECTION_WORKER][yas_election::event][Node((1-3))] an event has happened: TransitToCandidate

2023-12-03 19:32:09.060 41881 [INFO] [ELECTION] election reset id: 8

2023-12-03 19:32:09.061 41881 [INFO] [ELECTION] last redo point: 7-9-15746-46092

2023-12-03 19:32:09.071 41835 [WARN] [ELECTION_WORKER][tender::core::candidate][(1-3)][Term(8)] failed to send vote request to node((1-4)): failed to connect socket, errno 111, error message "Connection refused"

2023-12-03 19:32:09.081 41881 [INFO] [election-main][tender::core::candidate][(1-3)][Term(8)] minimum number of votes have been received, so transit to leader

2023-12-03 19:32:09.082 41836 [INFO] [ELECTION] database promote, current term 8

2023-12-03 19:32:09.082 41881 [INFO] [election-main][tender::core::leader][(1-3)][Term(8)] start the leader loop

2023-12-03 19:32:09.084 41835 [WARN] [ELECTION_WORKER][tender::core::leader][(1-3)][Term(8)] failed to send heartbeat request to (1-4): failed to connect socket, errno 111, error message "Connection refused"

2023-12-03 19:32:09.120 41833 [INFO] [HA] stop sender threads

2023-12-03 19:32:09.121 41833 [INFO] [HA] stop redo receiver 0

2023-12-03 19:32:09.164 41848 [INFO] [HA] redo receiver thread exited

2023-12-03 19:32:09.165 41833 [INFO] [HA] start waiting replay end

2023-12-03 19:32:09.166 41833 [INFO] [HA] replay point 7-9-15746-46092, remain redo size 0MB, apply remain time 0s, apply Rate 46455688KB/s

2023-12-03 19:32:09.184 41871 [WARN] [RCY] instance 0 stop replay, point 7-10-1-46092

2023-12-03 19:32:09.186 41871 [INFO] [RCY] instance 0 standby replay end with point 7-9-15746-46092

2023-12-03 19:32:09.187 41871 [INFO] [DB] recover destroy

2023-12-03 19:32:09.186 41833 [INFO] [HA] end waiting replay end

2023-12-03 19:32:09.188 41833 [INFO] [DB] stop standby replay thread

2023-12-03 19:32:09.191 41833 [INFO] [HA] start to promote

2023-12-03 19:32:09.258 41833 [INFO] [ARCH] archive process has been paused

2023-12-03 19:32:09.277 41833 [INFO] [REDO] switch redo file, new asn 10

2023-12-03 19:32:09.280 41833 [INFO] [REDO] set current redo to slot 1, flush point 8-10-1-46092

2023-12-03 19:32:09.281 41833 [INFO] [ARCH] archive process has been resumed

2023-12-03 19:32:09.282 41833 [INFO] [REDO] instance 0, switch redo file, type 4, point 8-10-1-46092

2023-12-03 19:32:09.357 41852 [INFO] [ARCH] redo archive start with asn 7

2023-12-03 19:32:09.369 41833 [INFO] [TEMP][EXTENT] start remove tablespace TEMP from temp extent map

2023-12-03 19:32:09.454 41833 [INFO] [HA] end to promote

2023-12-03 19:32:09.455 41833 [INFO] [HA] start redo senders for primary

2023-12-03 19:32:09.456 50618 [INFO] [HA] redo sender thread 0 started, remote address 172.16.131.117:1689

2023-12-03 19:32:09.456 50619 [INFO] [HA] redo sender thread 1 started, remote address 172.16.131.118:1689

2023-12-03 19:32:09.456 50620 [INFO] [HA] redo sender thread 2 started, remote address 172.16.131.120:1689

2023-12-03 19:32:09.461 50618 [INFO] [HA] successfully connected to standby 0

2023-12-03 19:32:09.461 41836 [INFO] [ELECTION_WORKER][yas_election::event][Node((1-3))] an event has happened: TransitToLeader { term: 8, caused_by_step_up: false }

2023-12-03 19:32:09.463 50619 [INFO] [HA] successfully connected to standby 1

2023-12-03 19:32:09.464 50618 [INFO] [HA] standby redo 3, rstId 7, asn 9, first lfn 32342, next lfn 46092, time 1701540008614792, last lsn 48712, last scn 506944706566328320

2023-12-03 19:32:09.466 50618 [INFO] [HA] primary redo 3, rstId 7, asn 9, fisrt lfn 32342, next lfn 46092, time 1701540008614792, last lsn 48712, last scn 506944706566328320

2023-12-03 19:32:09.467 50618 [INFO] [HA] initialize send point:7-9-15746-46092, standby id 0, instance id 0

2023-12-03 19:32:09.468 50618 [INFO] [SCF] fetch coast Repl Manager finished, scan range [46092,18446744073709551615)

2023-12-03 19:32:09.469 50618 [INFO] [REDO] try switch redo file, current file point 8-10-1-46092

2023-12-03 19:32:09.470 50618 [INFO] [HA] update send point to 8-10-1-46092

2023-12-03 19:32:09.474 50619 [INFO] [HA] standby redo 3, rstId 7, asn 9, first lfn 32342, next lfn 46092, time 1701540008614792, last lsn 48712, last scn 506944706566328320

2023-12-03 19:32:09.475 50619 [INFO] [HA] primary redo 3, rstId 7, asn 9, fisrt lfn 32342, next lfn 46092, time 1701540008614792, last lsn 48712, last scn 506944706566328320

2023-12-03 19:32:09.476 50619 [INFO] [HA] initialize send point:7-9-15746-46092, standby id 1, instance id 0

2023-12-03 19:32:09.477 50619 [INFO] [SCF] fetch coast Repl Manager finished, scan range [46092,18446744073709551615)

2023-12-03 19:32:09.478 50619 [INFO] [REDO] try switch redo file, current file point 8-10-1-46092

2023-12-03 19:32:09.478 50619 [INFO] [HA] update send point to 8-10-1-46092

2023-12-03 19:32:09.670 41852 [INFO] [ARCH] instance: 0, itemCounts: 8, add archive file filename /app/yasdb_data/db-1-3/archive/arch_7_9.ARC, asn: 9, ctrlId: 7, used: 1

2023-12-03 19:32:09.696 41852 [INFO] [ARCH] add new archive file /app/yasdb_data/db-1-3/archive/arch_7_9.ARC

2023-12-03 19:32:12.085 41834 [WARN] [ELECTION_WORKER][tender::core::leader][(1-3)][Term(8)] failed to send heartbeat request to (1-4): failed to connect socket, errno 111, error message "Connection refused"

2023-12-03 19:32:15.085 41834 [WARN] [ELECTION_WORKER][tender::core::leader][(1-3)][Term(8)] failed to send heartbeat request to (1-4): failed to connect socket, errno 111, error message "Connection refused"

2023-12-03 19:32:18.084 41834 [WARN] [ELECTION_WORKER][tender::core::leader][(1-3)][Term(8)] failed to send heartbeat request to (1-4): failed to connect socket, errno 111, error message "Connection refused"

The log above shows that the primary of the new term, (1-3), went through the following steps:

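1. Log line 1: the connection is closed (presumably the connection to the previous term's primary).

2. Log lines 2-5: in term 7 (Term(7) in the log), the primary has failed, so this node's election timeout expires and it becomes a pre-candidate; the TransitToPreCandidate event is raised.

3. Log lines 6-9: still in term 7, the node receives the minimum number of pre-votes it needs and becomes a candidate. Node 4 cannot be reached and takes no part, so only the three surviving nodes vote; the TransitToCandidate event is raised.

4. Log lines 10-16: the election id is reset to 8 (term 8 is about to start), the node receives the minimum number of votes it needs and becomes the leader, and term 8 begins (current term 8). Node 4 still cannot be reached and cannot vote.

5. Log lines 17-39: the node stops its sender threads and its redo receiver, waits for standby replay to finish, promotes itself to primary, switches the redo file, and starts the redo sender threads for its new role. In other words, it stops acting as a standby and prepares to ship redo to the other nodes as the new primary.

6. Log lines 40-41: it connects to the first standby and the TransitToLeader event is raised; the node has formally started term 8.

7. After that it connects to the other standby and synchronizes redo with it, while the node that was shut down cannot be reached and keeps producing connection errors.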
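To check a timeline like this without reading every line, the role-change events can be pulled out of run.log with a short script. The sketch below assumes the line layout seen in the excerpt above (timestamp, thread id, level, message); the regular expressions and function names are my own and not part of any YashanDB tooling.

```python
import re
from datetime import datetime

# Layout assumed from the excerpt: "<date> <time> <thread id> [LEVEL] message"
LINE_RE = re.compile(
    r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}) (\d+) \[(\w+)\]\s*(.*)$"
)
EVENT_RE = re.compile(r"an event has happened: (\w+)")

SAMPLE = [
    "2023-12-03 19:32:02.759 41848 [ERROR][errno=00406]: connection is closed",
    "2023-12-03 19:32:09.052 41834 [INFO] [ELECTION_WORKER][yas_election::event][Node((1-3))] an event has happened: TransitToPreCandidate",
    "2023-12-03 19:32:09.057 41836 [INFO] [ELECTION_WORKER][yas_election::event][Node((1-3))] an event has happened: TransitToCandidate",
    "2023-12-03 19:32:09.461 41836 [INFO] [ELECTION_WORKER][yas_election::event][Node((1-3))] an event has happened: TransitToLeader { term: 8, caused_by_step_up: false }",
]


def election_events(lines):
    """Return (timestamp, event name) pairs for every election event line."""
    events = []
    for line in lines:
        match = LINE_RE.match(line.strip())
        if not match:
            continue  # blank or unexpected line
        event = EVENT_RE.search(match.group(4))
        if event:
            when = datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S.%f")
            events.append((when, event.group(1)))
    return events


if __name__ == "__main__":
    found = election_events(SAMPLE)
    for when, name in found:
        print(when.time(), name)
    elapsed = (found[-1][0] - found[0][0]).total_seconds()
    print(f"pre-candidate to leader: {elapsed:.3f}s")
```

On the three event lines copied from the excerpt, the gap between TransitToPreCandidate and TransitToLeader comes out at about 0.4 seconds.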

The log of a standby node (the db run.log) shows the following:

2023-12-03 19:31:40.705 3863 [ERROR][errno=00406]: connection is closed

2023-12-03 19:31:47.004 3895 [INFO] [ELECTION] last redo point: 7-9-15746-46092

2023-12-03 19:31:47.013 3895 [INFO] [ELECTION] election reset id: 8

2023-12-03 19:31:47.014 3895 [INFO] [election-main][tender::core][(1-2)][Term(8)] vote request term(8) is greater than current term(7)

2023-12-03 19:31:47.015 3895 [INFO] [ELECTION] last redo point: 7-9-15746-46092

2023-12-03 19:31:47.019 3895 [INFO] [ELECTION] election reset id: 8

2023-12-03 19:31:47.033 3895 [INFO] [election-main][tender::core][(1-2)][Term(8)] change leader from (1-4) to (1-3)

2023-12-03 19:31:47.034 3851 [INFO] [ELECTION_WORKER][yas_election::event][Node((1-2))] an event has happened: ChangeLeader(NodeId { serviceId: 0, groupId: 1, groupNodeId: 3 })

2023-12-03 19:31:47.406 130828 [INFO] [HA] standby redo receiver is successfully connected to primary: 172.16.131.119:1689

2023-12-03 19:31:47.421 3863 [INFO] [HA] redo receiver receive message, type 70, length 128

2023-12-03 19:31:47.425 3863 [INFO] [REDO] set standby instance 0 receive point to 7-9-15746-46092, slot 3, receive lsn 48712, receive scn 506944706566328320

2023-12-03 19:31:47.486 3863 [INFO] [ARCH] archive process has been paused

2023-12-03 19:31:47.512 3863 [INFO] [ARCH] archive process has been resumed

2023-12-03 19:31:47.513 3863 [INFO] [HA] redo receiver receive message, type 69, length 96

2023-12-03 19:31:47.529 3863 [INFO] [REDO] switch redo file, new asn 10

2023-12-03 19:31:47.586 3868 [INFO] [ARCH] redo archive start with asn 7

2023-12-03 19:31:47.631 3887 [INFO] [RCY] change reset point from 7-8-1-2257 to 8-10-1-46092

2023-12-03 19:31:47.991 3868 [INFO] [ARCH] instance: 0, itemCounts: 8, add archive file filename /app/yasdb_data/db-1-2/archive/arch_7_9.ARC, asn: 9, ctrlId: 7, used: 1

2023-12-03 19:31:48.019 3868 [INFO] [ARCH] add new archive file /app/yasdb_data/db-1-2/archive/arch_7_9.ARC

The standby log shows that the standby went through the following steps in the new term:

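1. The connection is closed.

2. It records its last redo point.

3. The election id is reset to 8.

4. It receives a vote request for term 8, which is greater than its current term 7, so it moves to term 8.

5. It changes its leader from (1-4), the primary of term 7, to (1-3), the primary of term 8.

6. The ChangeLeader event is raised on standby (1-2), recording (1-3) as the primary of term 8.

7. It connects to the new primary and starts receiving redo.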
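The vote handling in steps 2-4 resembles the RequestVote rule of textbook Raft, sketched below. Everything here is an assumption for illustration: the `Voter` class is hypothetical, and comparing redo points as tuples of integers is only a guess at how "at least as up to date" might be decided, since the log does not show how YashanDB actually compares them.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


def parse_point(text):
    """Turn a redo point such as '7-9-15746-46092' into a comparable tuple."""
    return tuple(int(part) for part in text.split("-"))


@dataclass
class Voter:
    """Hypothetical follower state used when answering a vote request."""

    current_term: int
    last_redo_point: Tuple[int, ...]
    voted_for: Optional[str] = None

    def handle_vote_request(self, candidate_id, term, candidate_point):
        if term < self.current_term:
            return False                      # stale request from an old term
        if term > self.current_term:
            self.current_term = term          # adopt the newer term
            self.voted_for = None             # no vote cast yet in this term
        # Assumption: a candidate qualifies if its redo point is not behind ours.
        log_ok = candidate_point >= self.last_redo_point
        if log_ok and self.voted_for in (None, candidate_id):
            self.voted_for = candidate_id     # at most one vote per term
            return True
        return False


if __name__ == "__main__":
    point = parse_point("7-9-15746-46092")
    standby = Voter(current_term=7, last_redo_point=point)
    print(standby.handle_vote_request("1-3", 8, point), standby.current_term)
    print(standby.handle_vote_request("1-1", 8, point))  # already voted in term 8
```

The one-vote-per-term rule is what prevents two nodes from both collecting a majority in the same term.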

The log of the other standby, (1-1), is essentially the same as that of (1-2).

IV. Summary

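A seemingly small primary/standby switchover is built on a consensus protocol like Raft, which makes the switchover fully automatic, simpler, and more efficient. From this point of view, real thought has gone into the design and features of domestic databases, and their future looks promising.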

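However, there is one thing we still have to think about in this scenario. The database can switch over automatically and well, but how can applications follow the switch quickly? Once the database has switched over without manual intervention, the ideal would be something like Oracle's TAF, or even TAC: the application fails over as seamlessly as possible, with no change to connection strings (in microservice architectures in particular, connection strings often live in code or in a configuration center and are painful to change). Could the database offer a native VIP feature, so that the VIP floats with the primary, or even migrate application connections automatically?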

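Of course, with competition among domestic databases as fierce as it is, they will certainly keep moving forward. I believe that one day one of them will become a mainstream database alongside Oracle, MySQL, and PostgreSQL.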