[Installation] Oracle Linux 7.9 on VMware: a detailed plan for planning, installing, and configuring Oracle 19c RAC

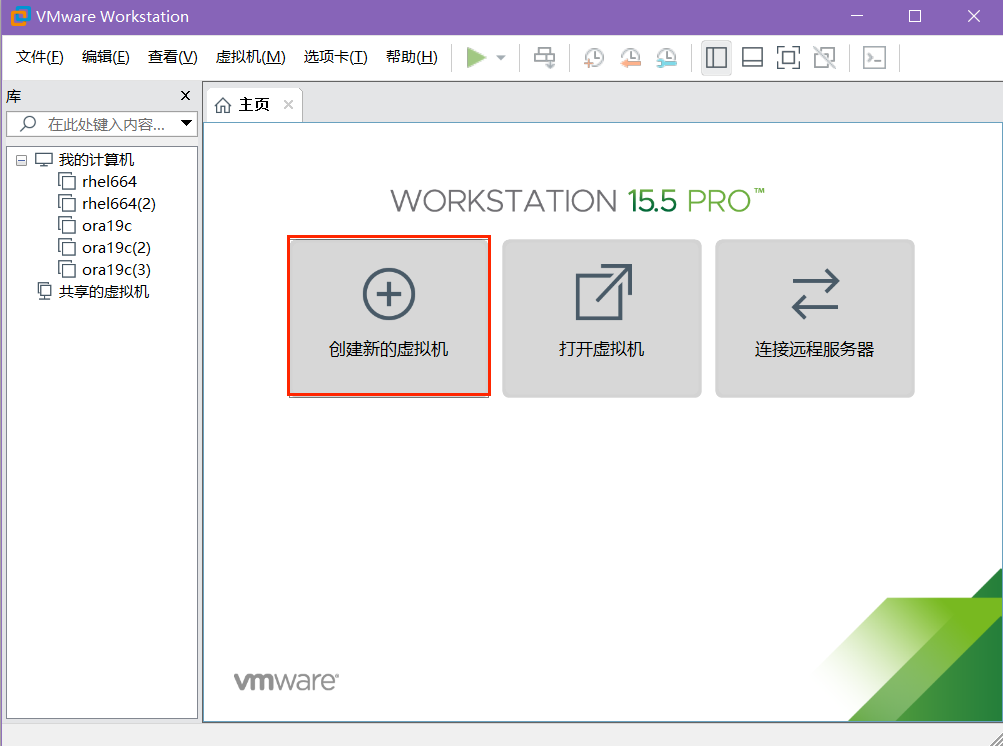

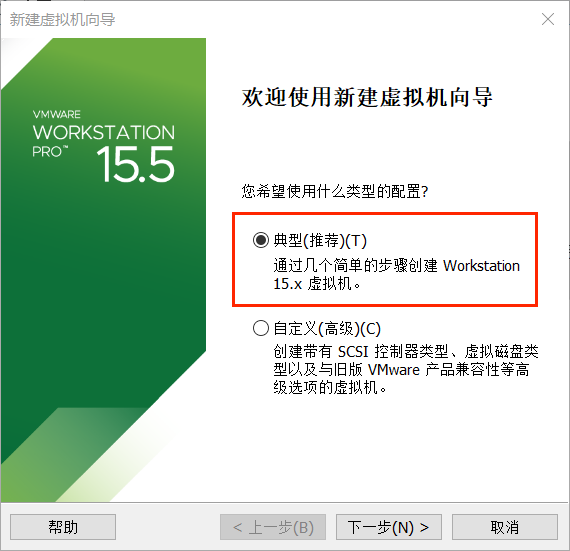

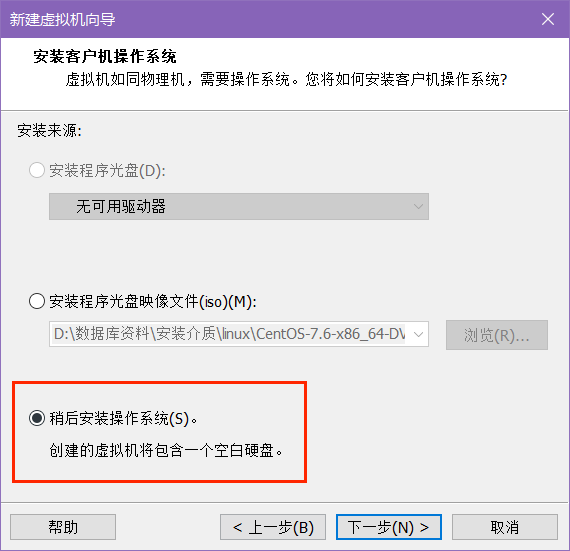

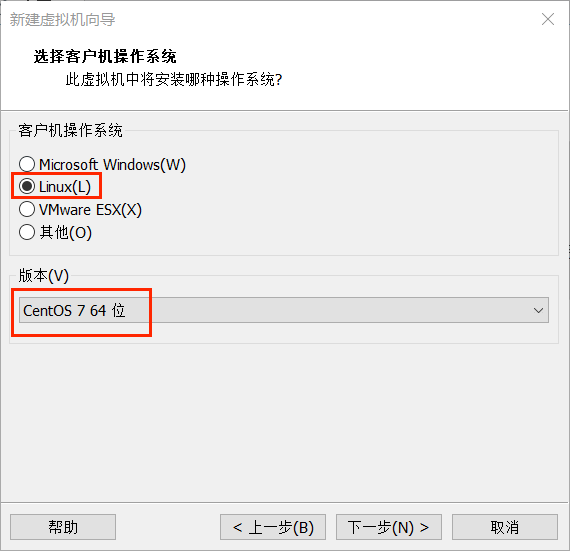

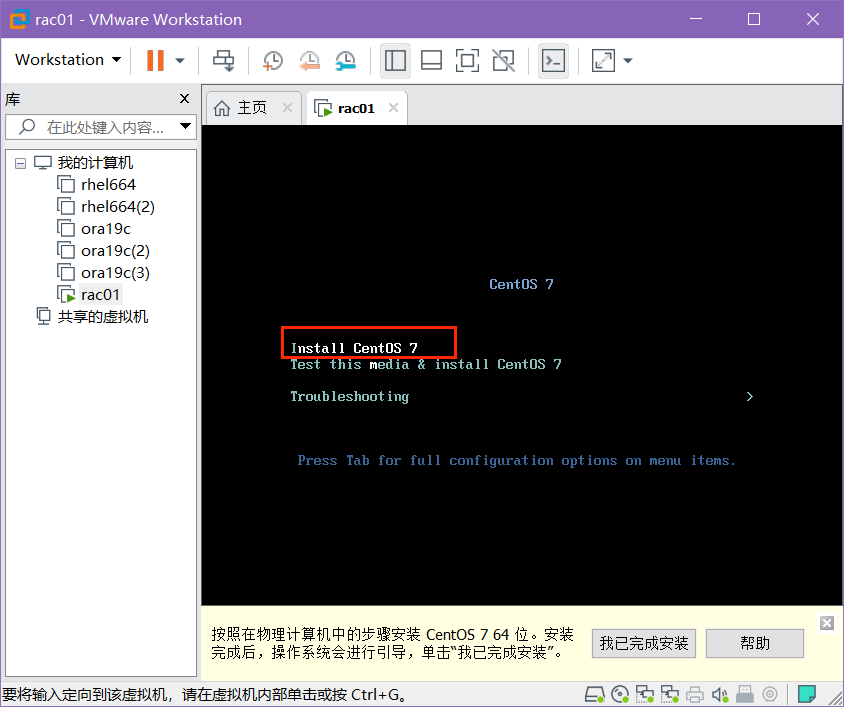

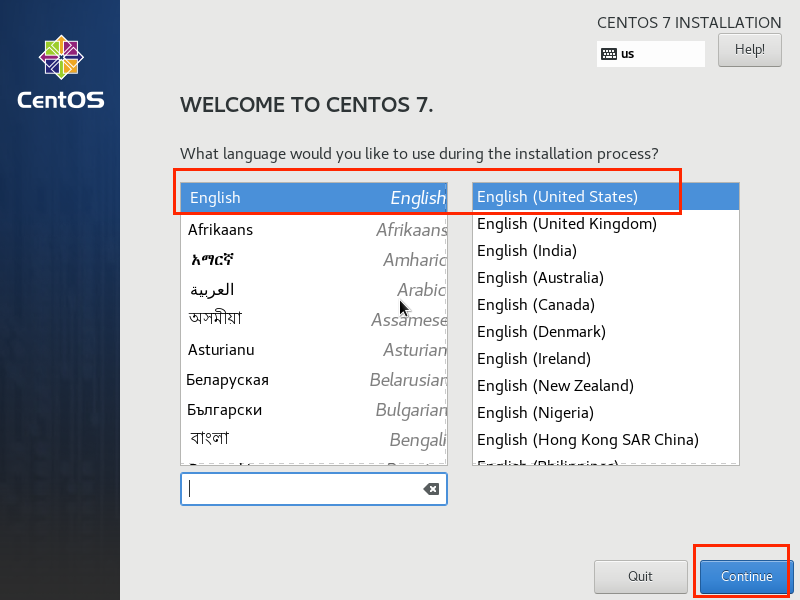

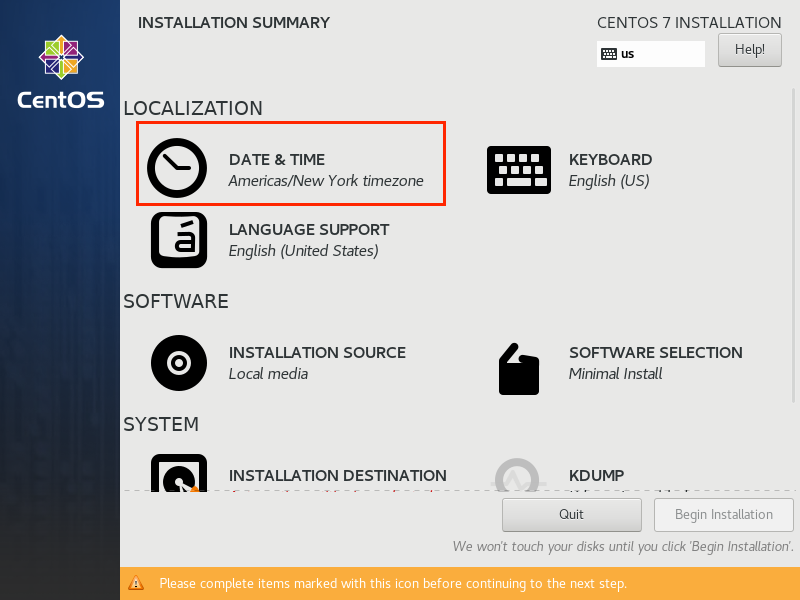

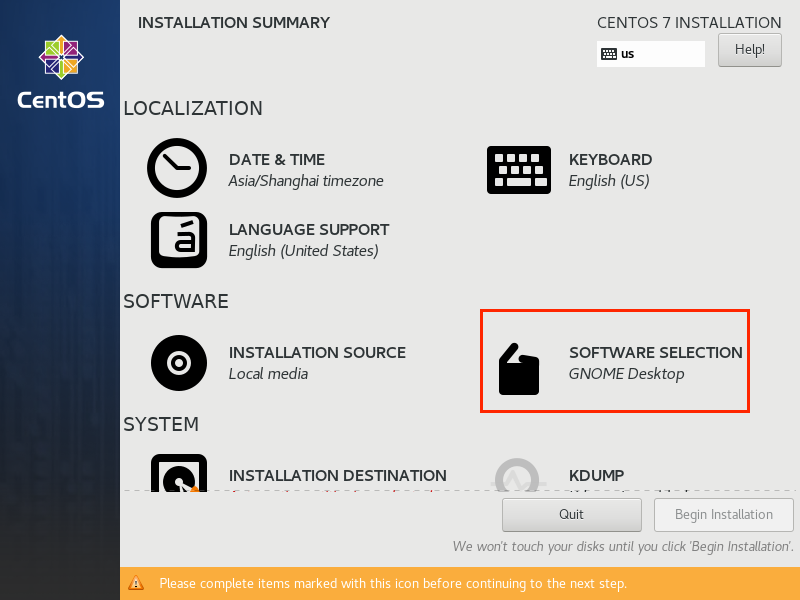

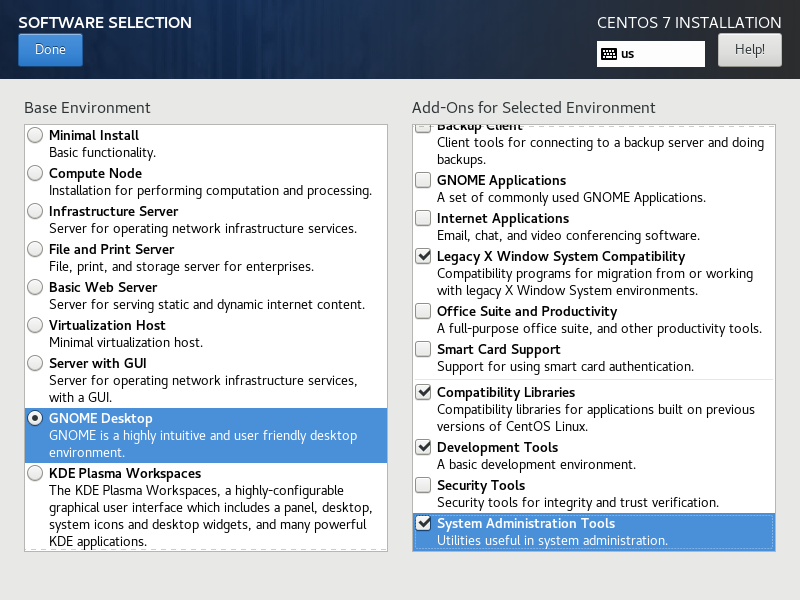

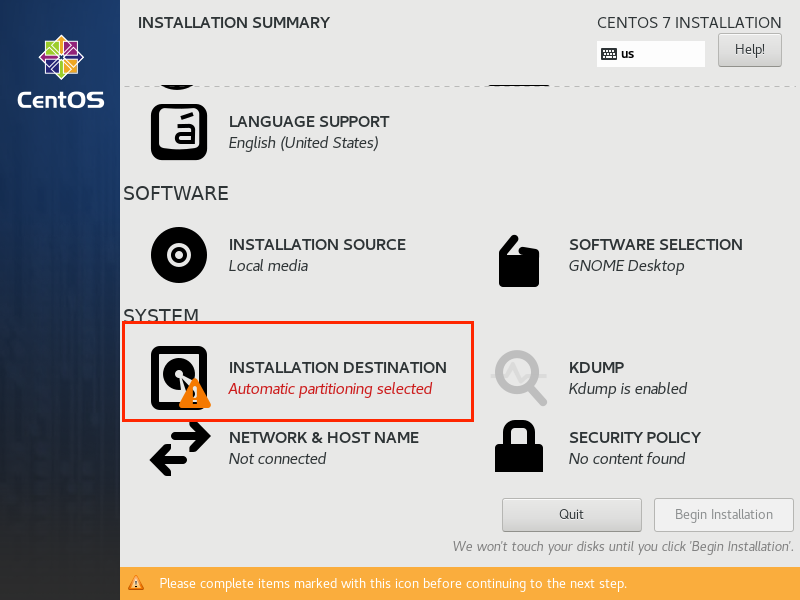

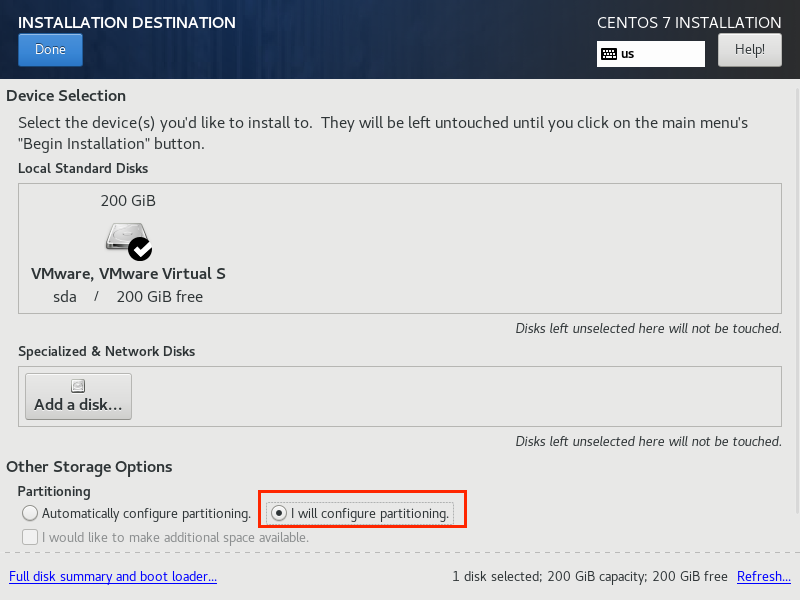

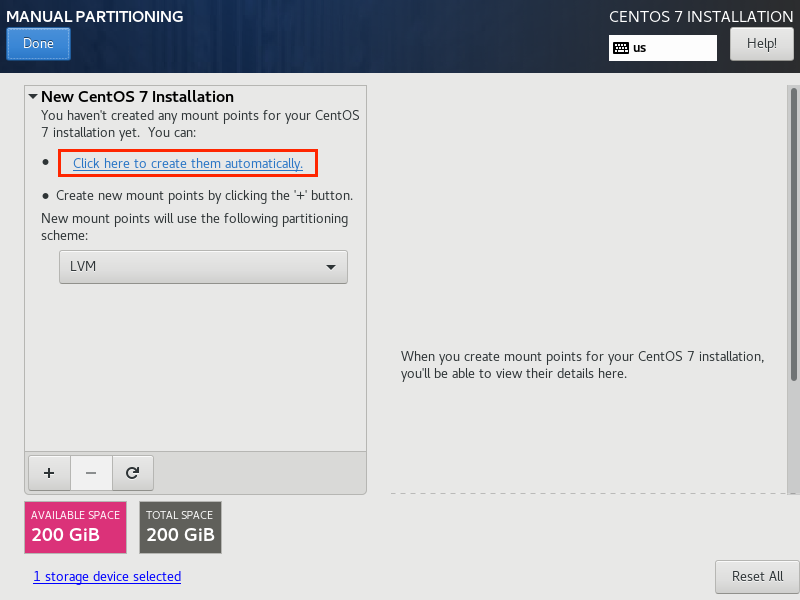

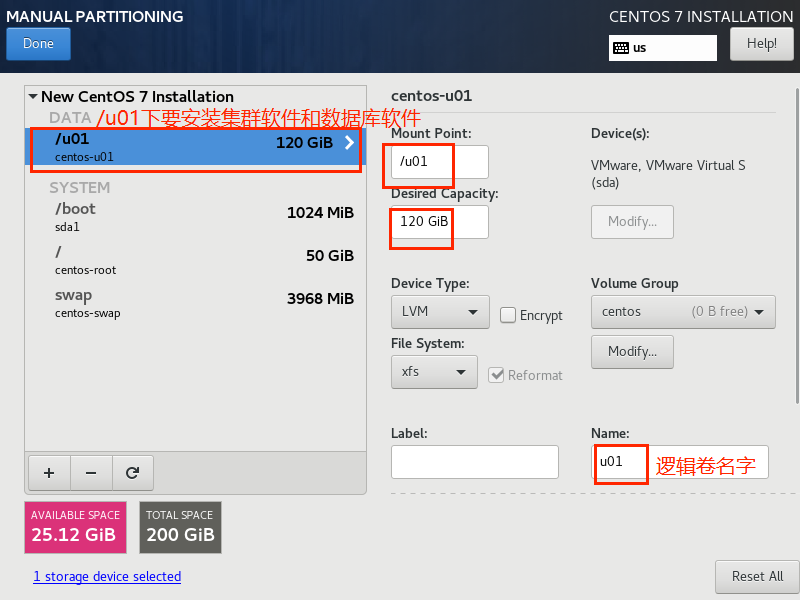

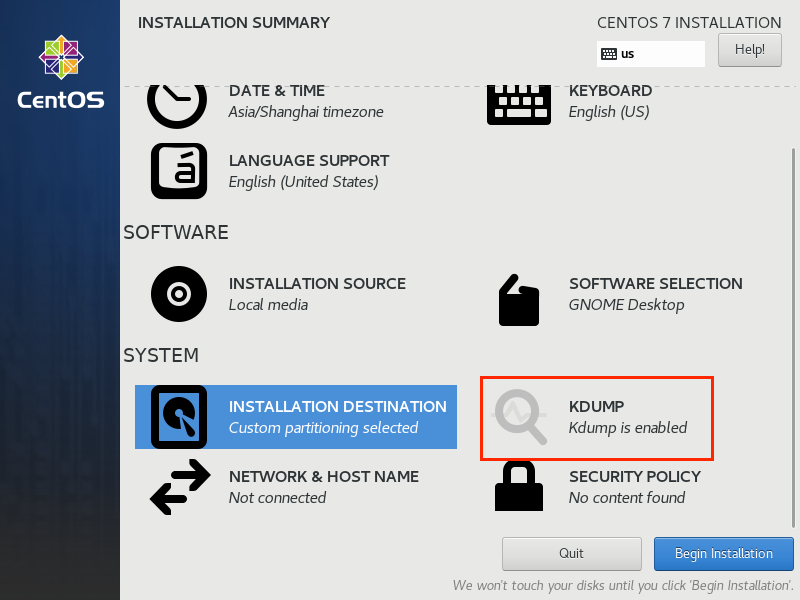

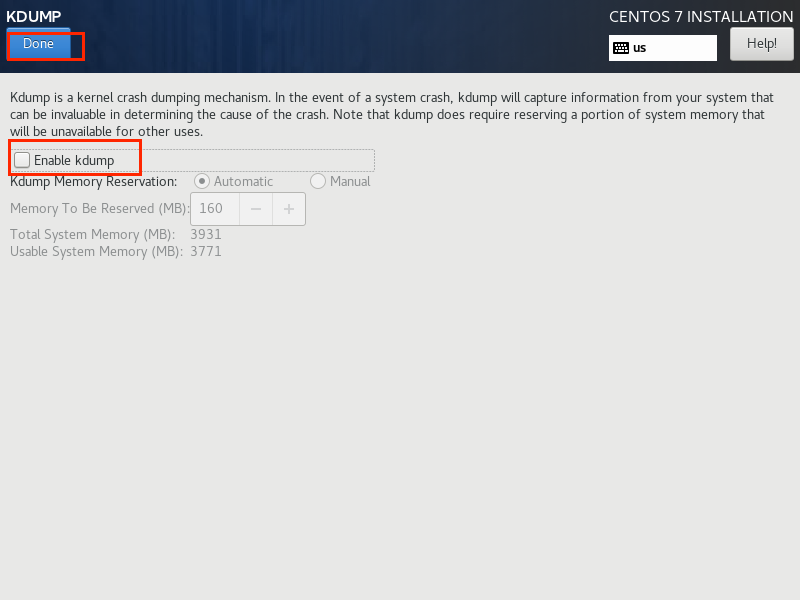

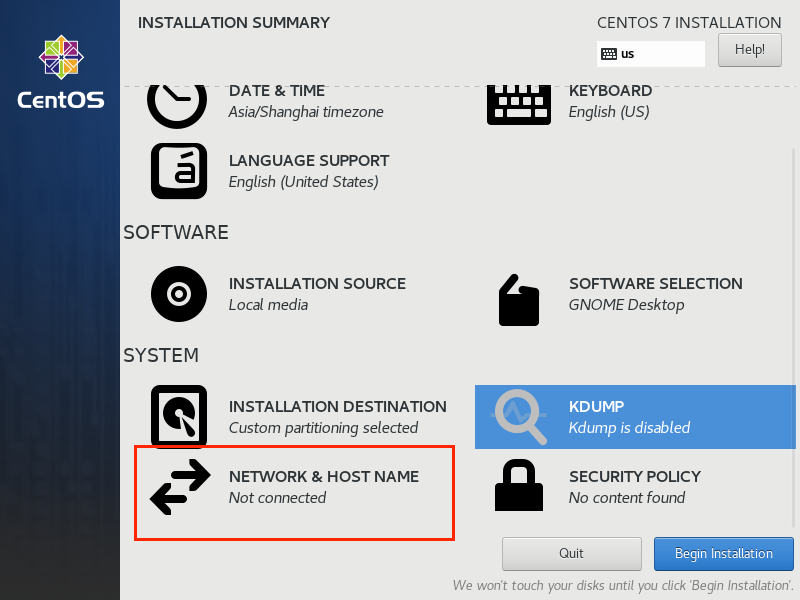

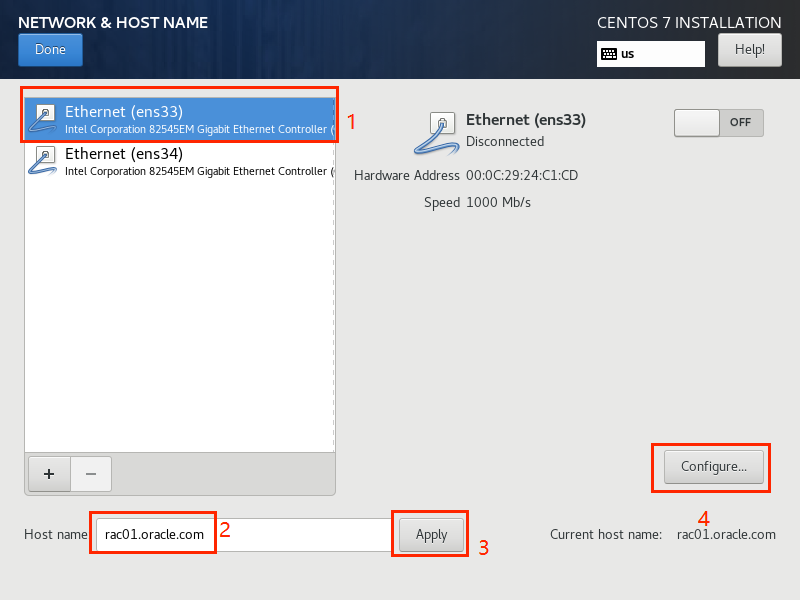

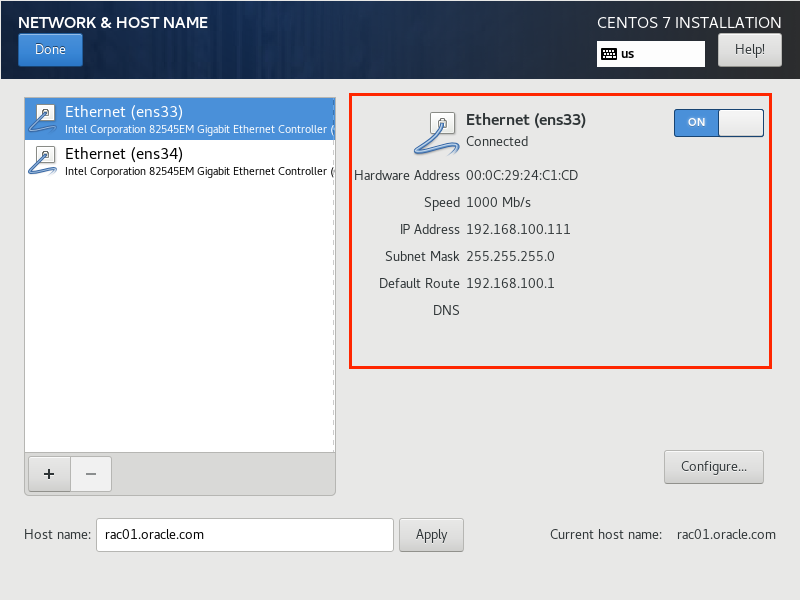

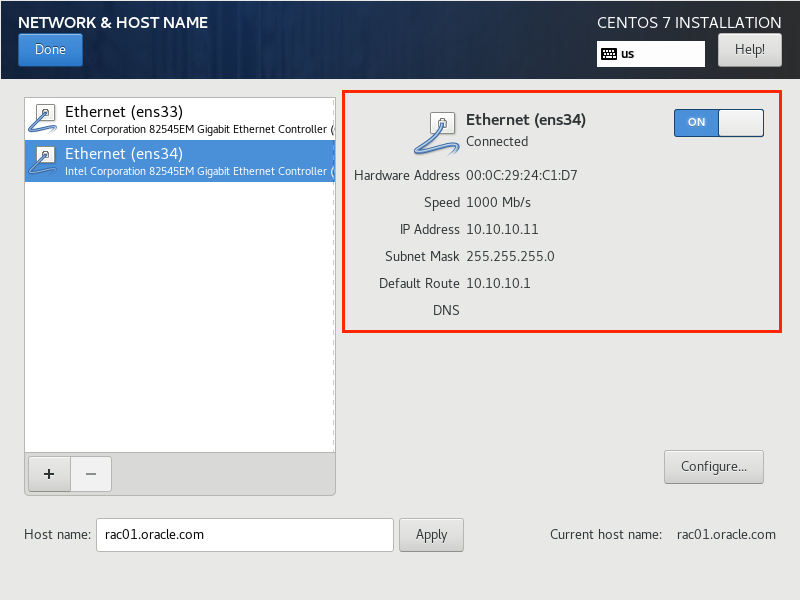

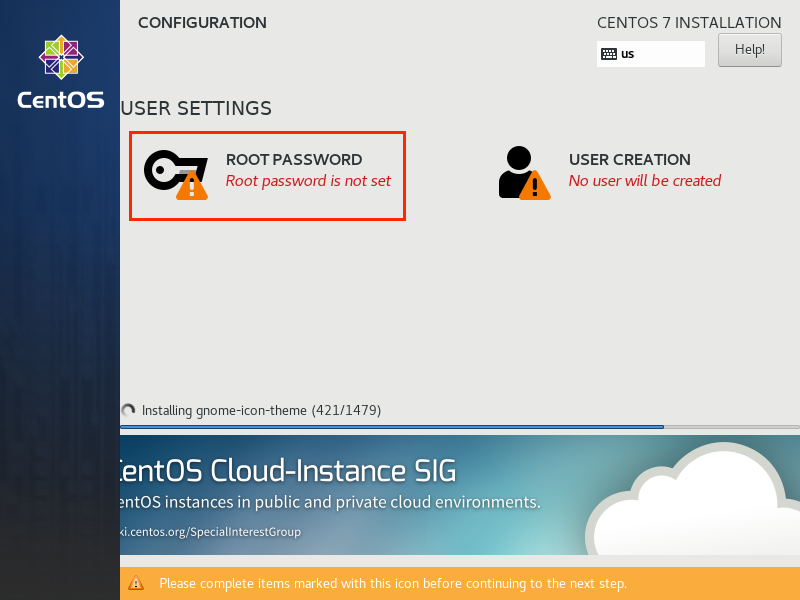

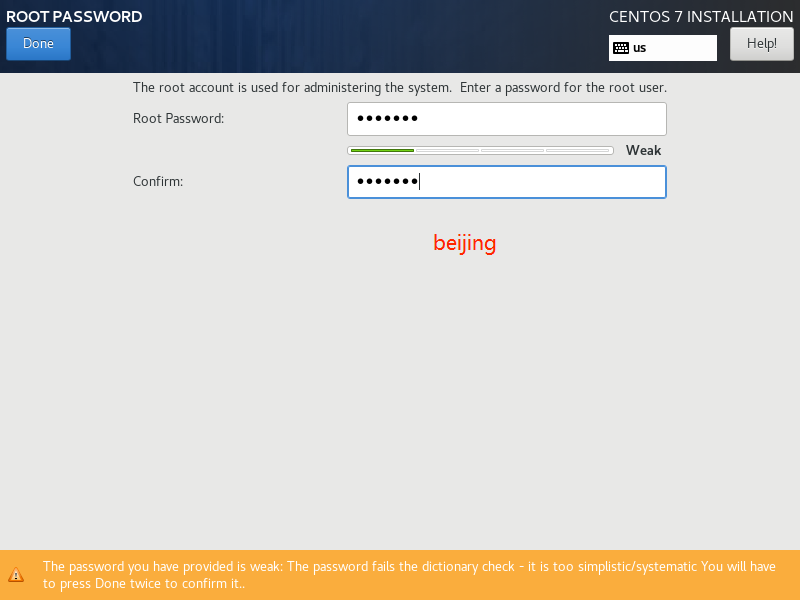

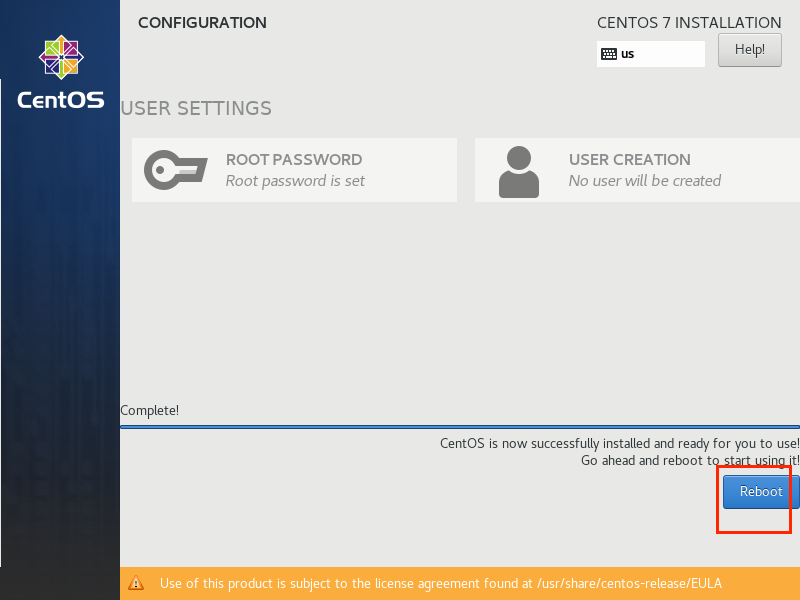

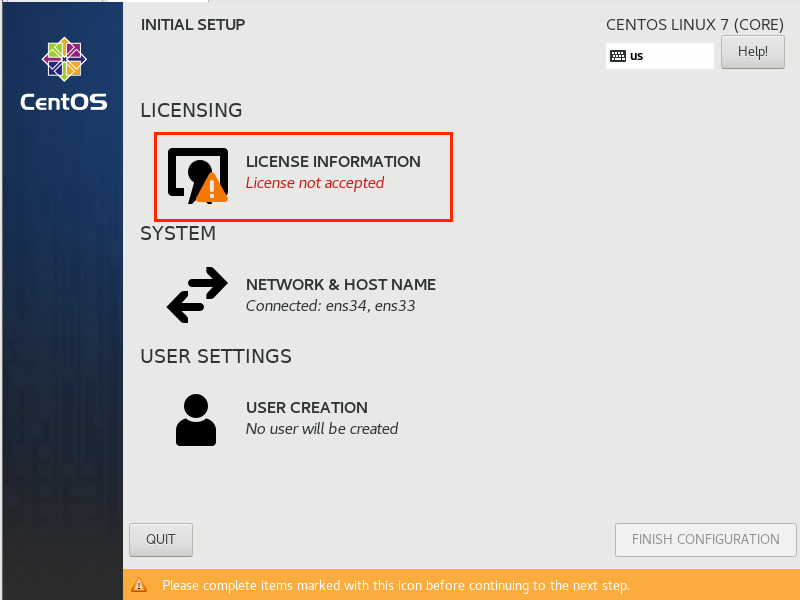

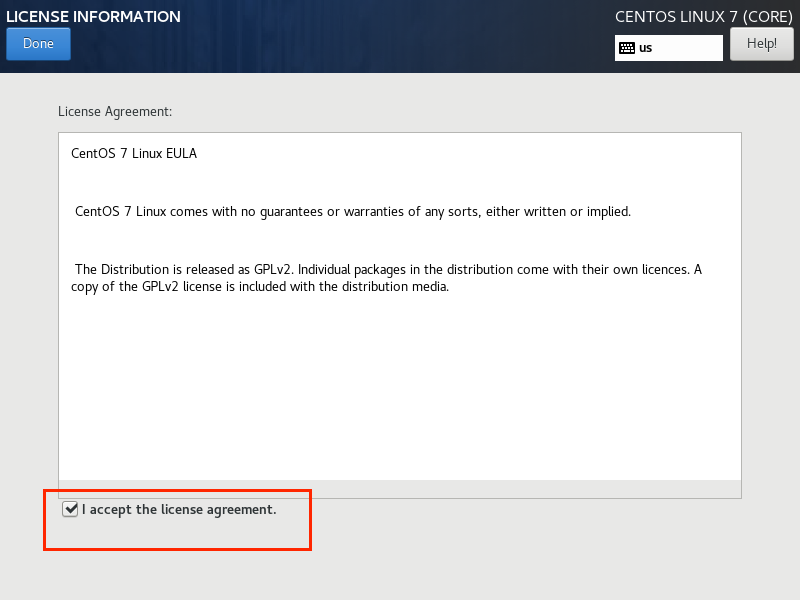

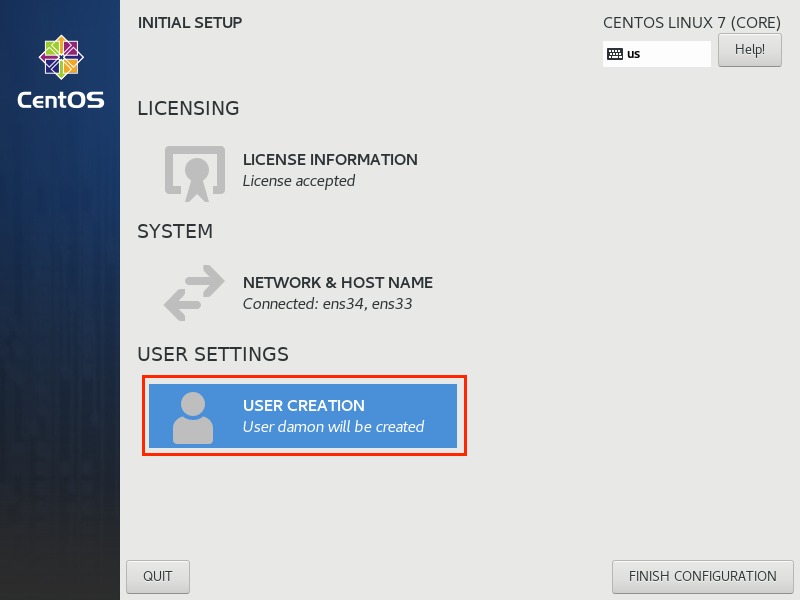

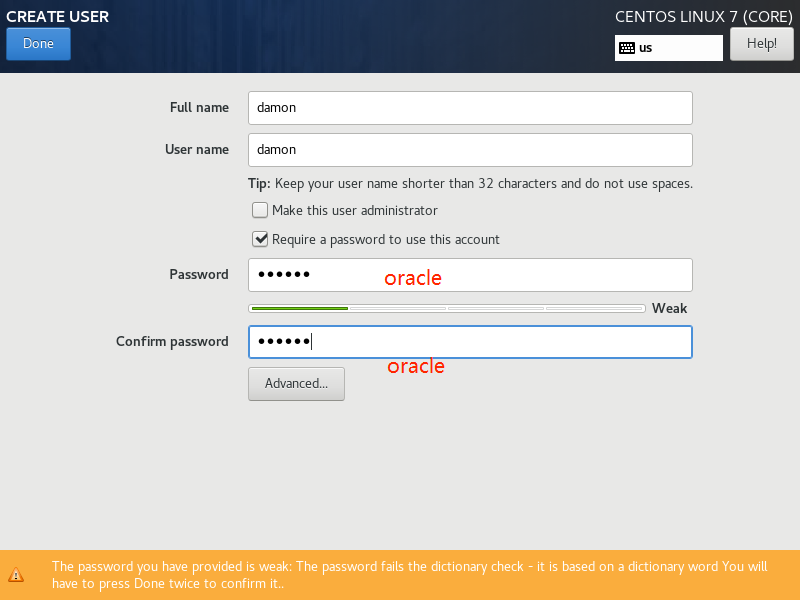

1. Operating system installation

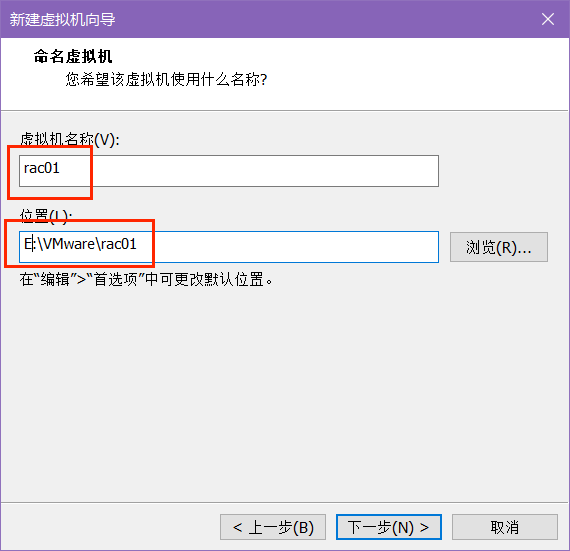

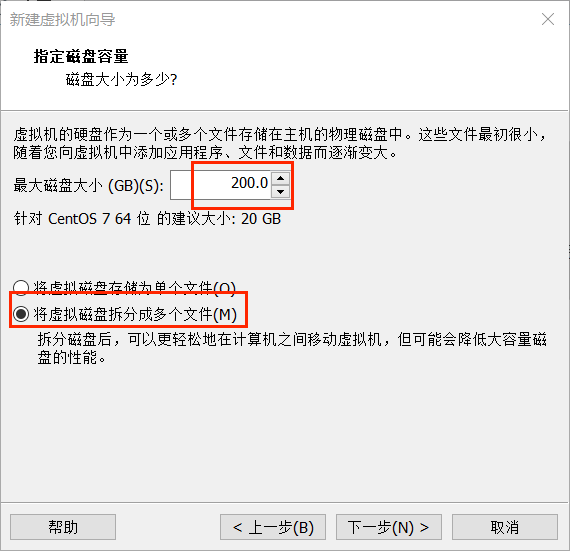

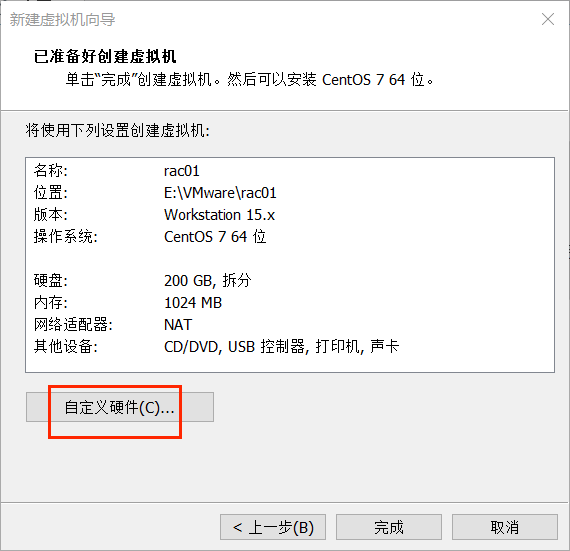

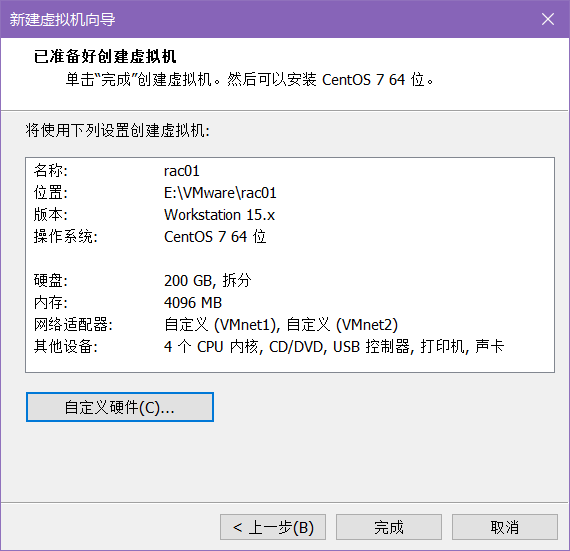

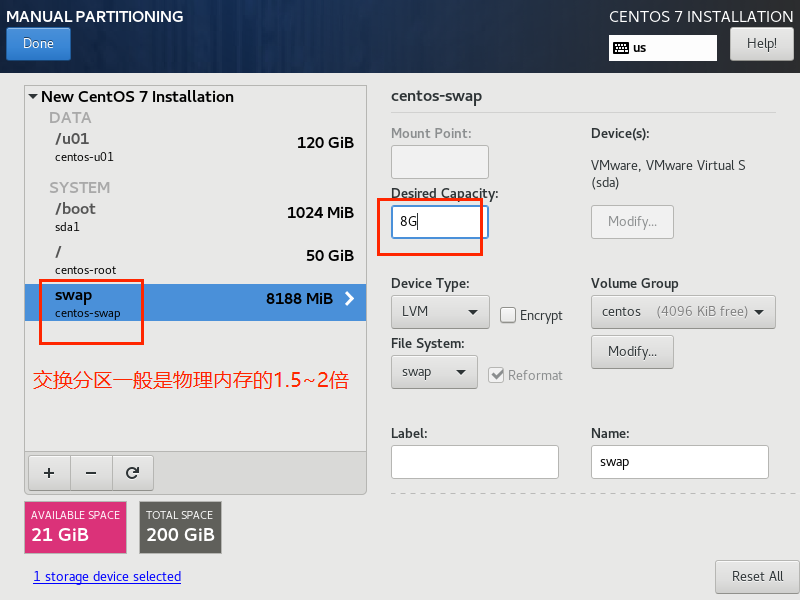

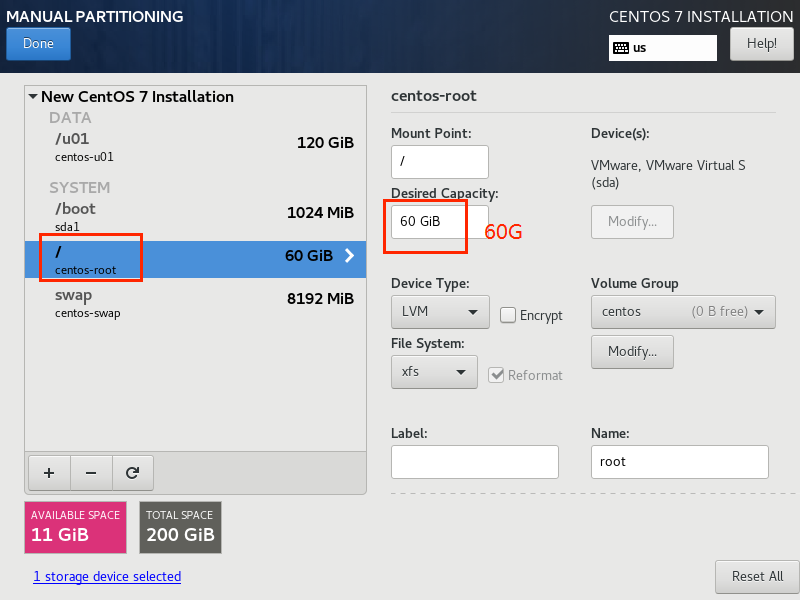

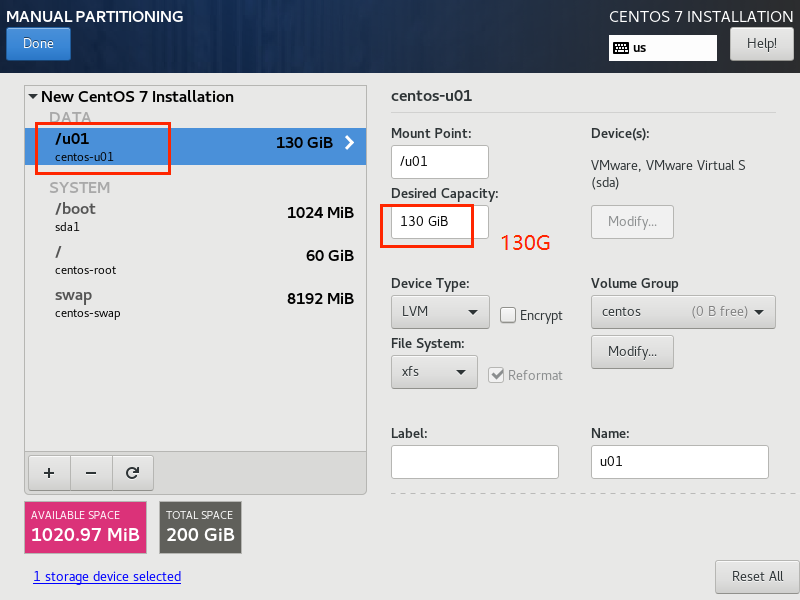

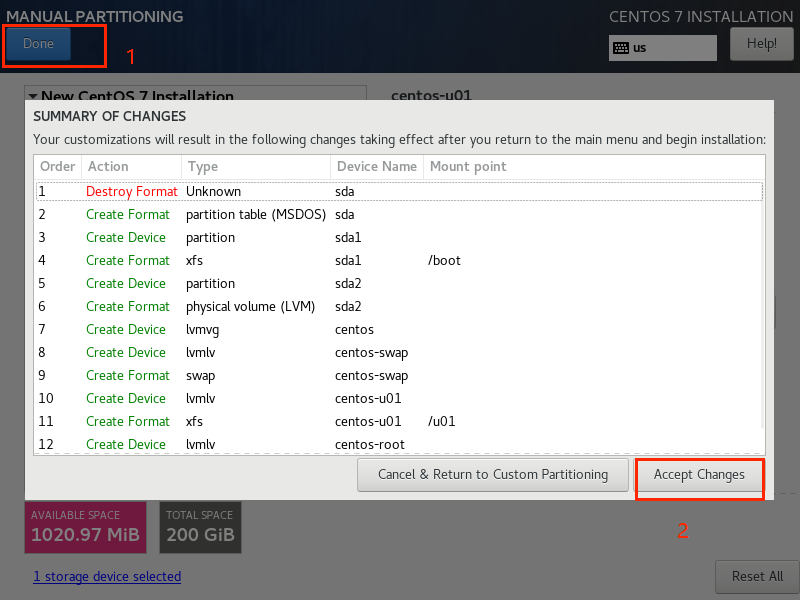

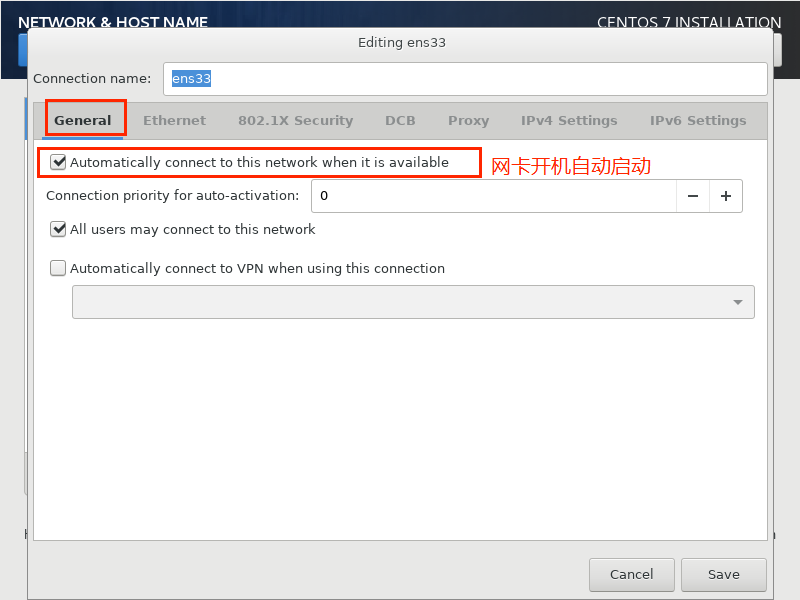

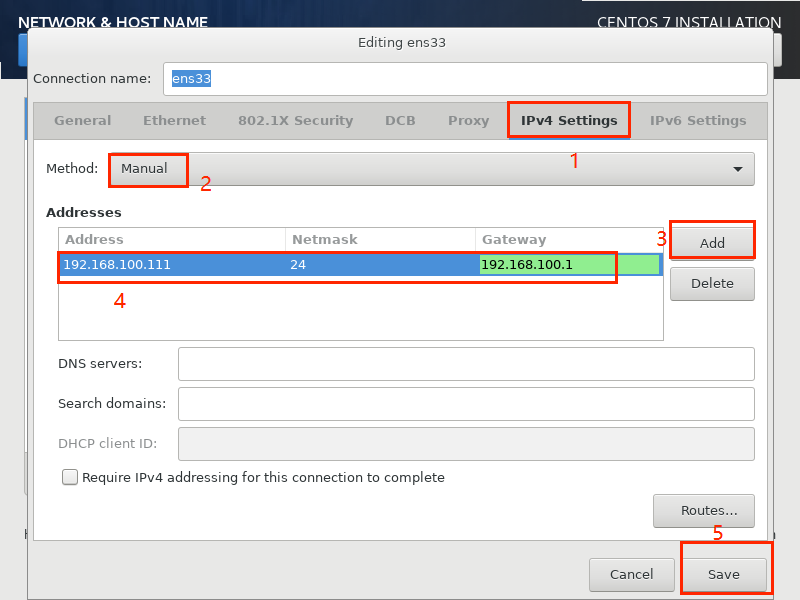

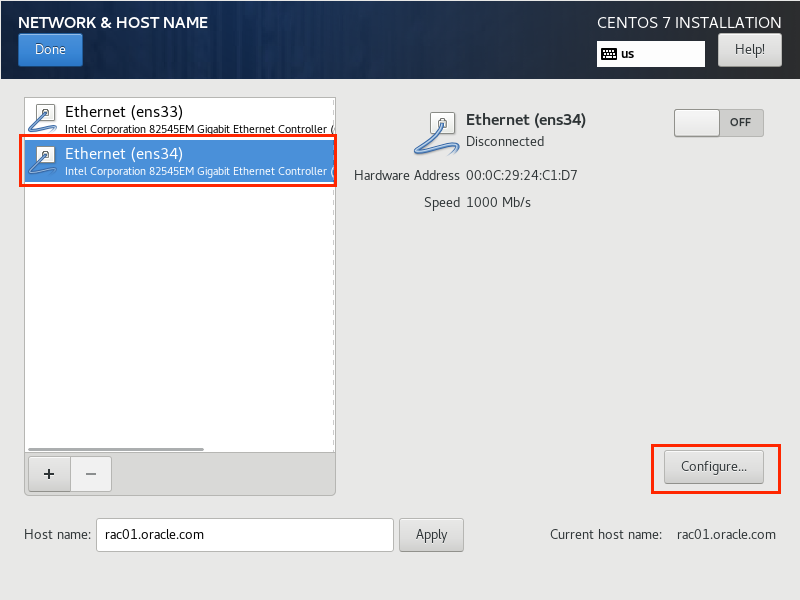

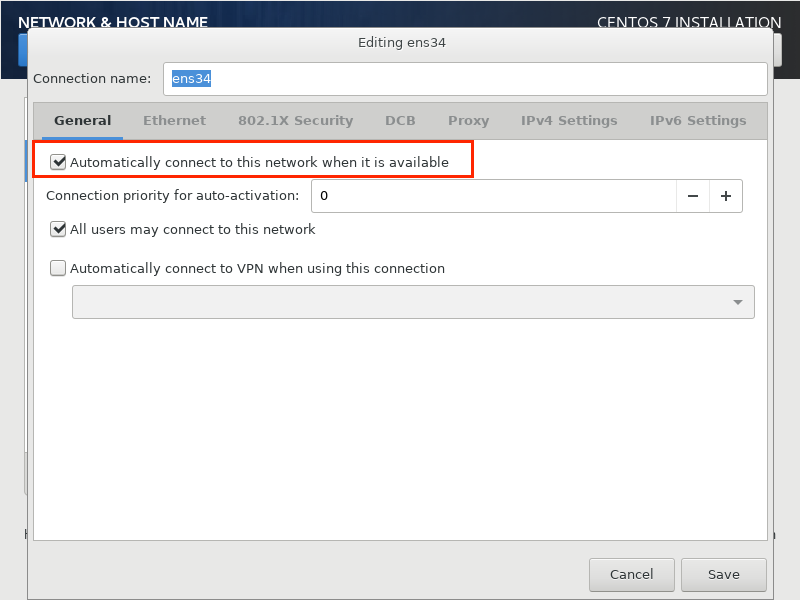

1.1. Install the first virtual machine (rac01)

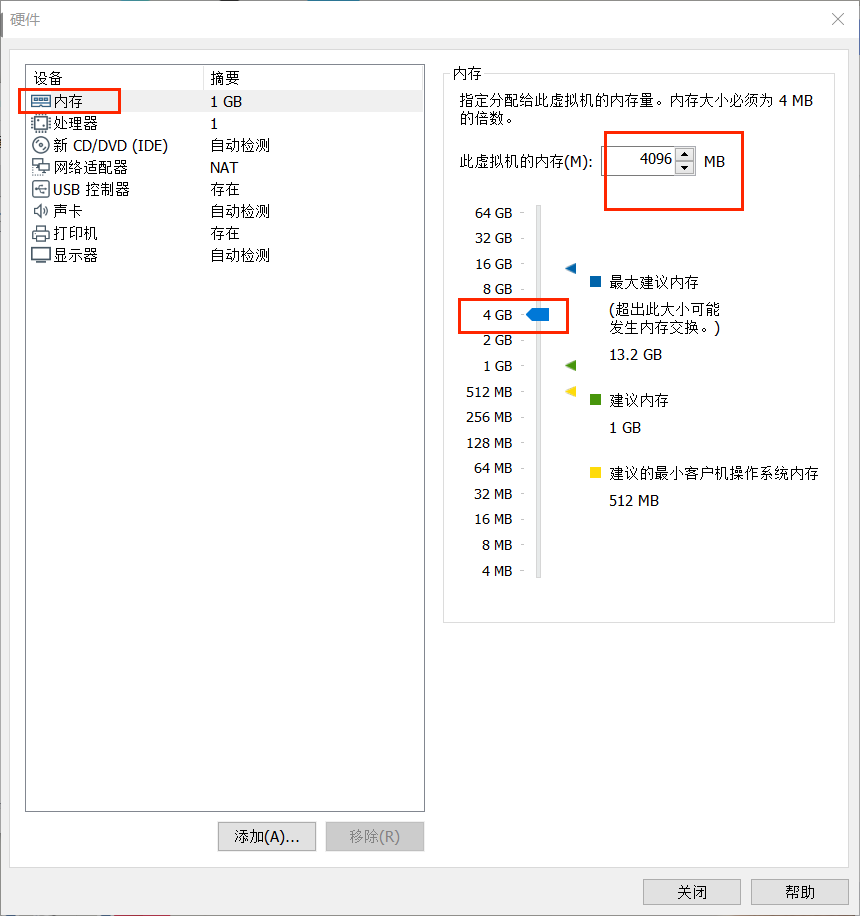

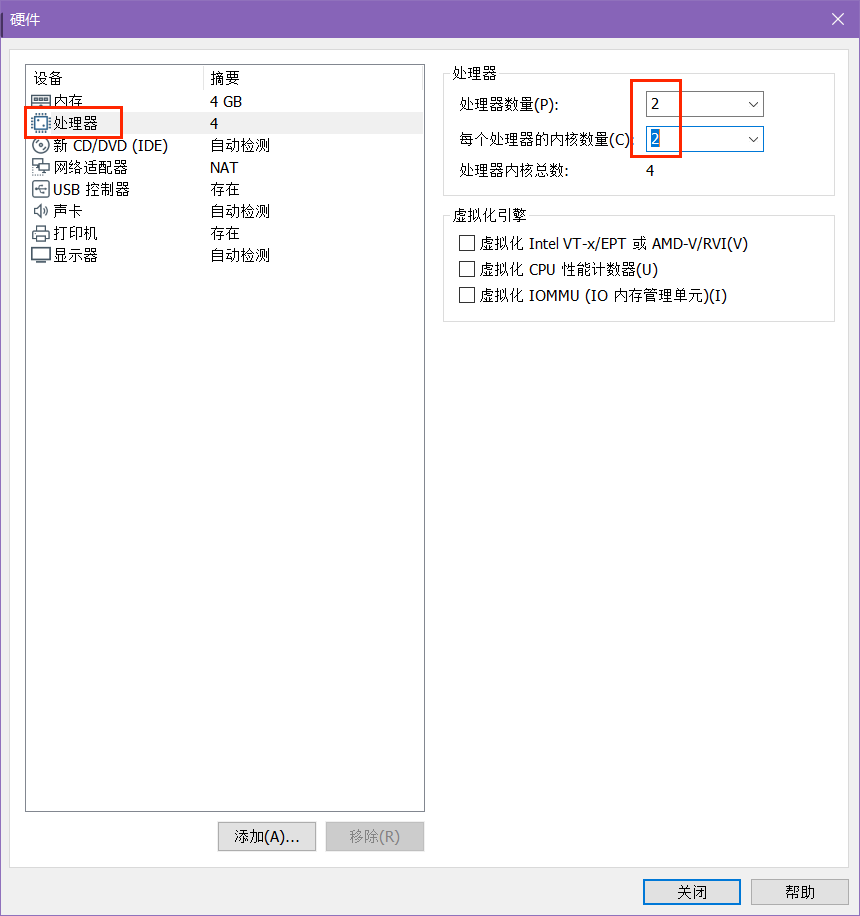

Memory

CPU

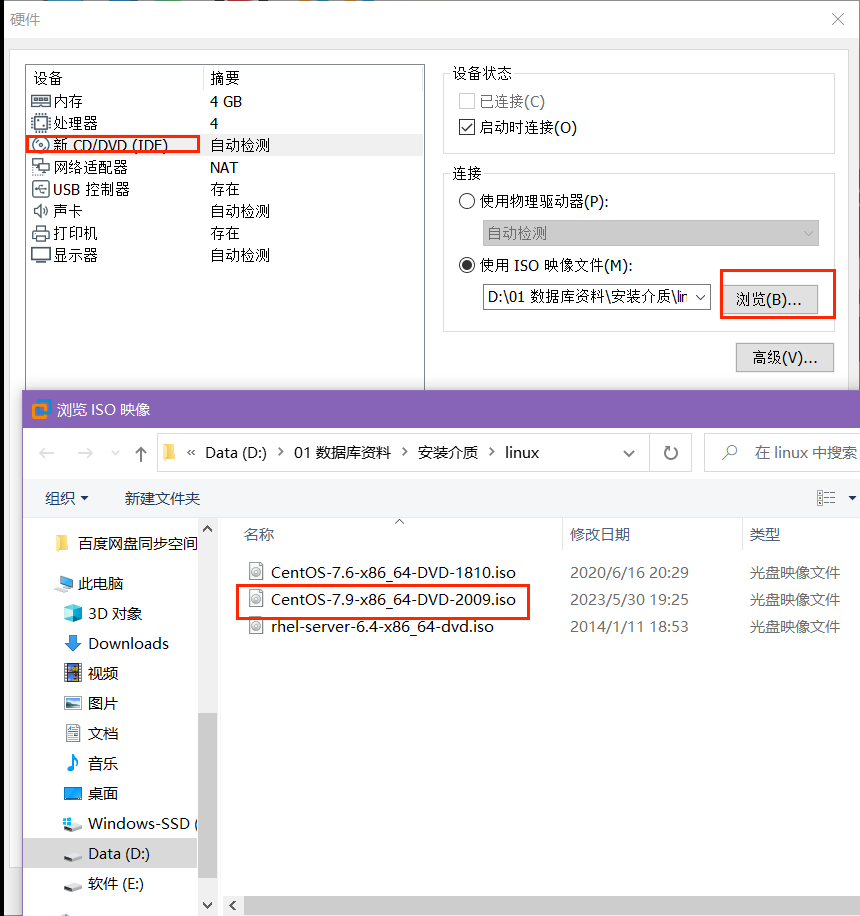

Select the operating system ISO

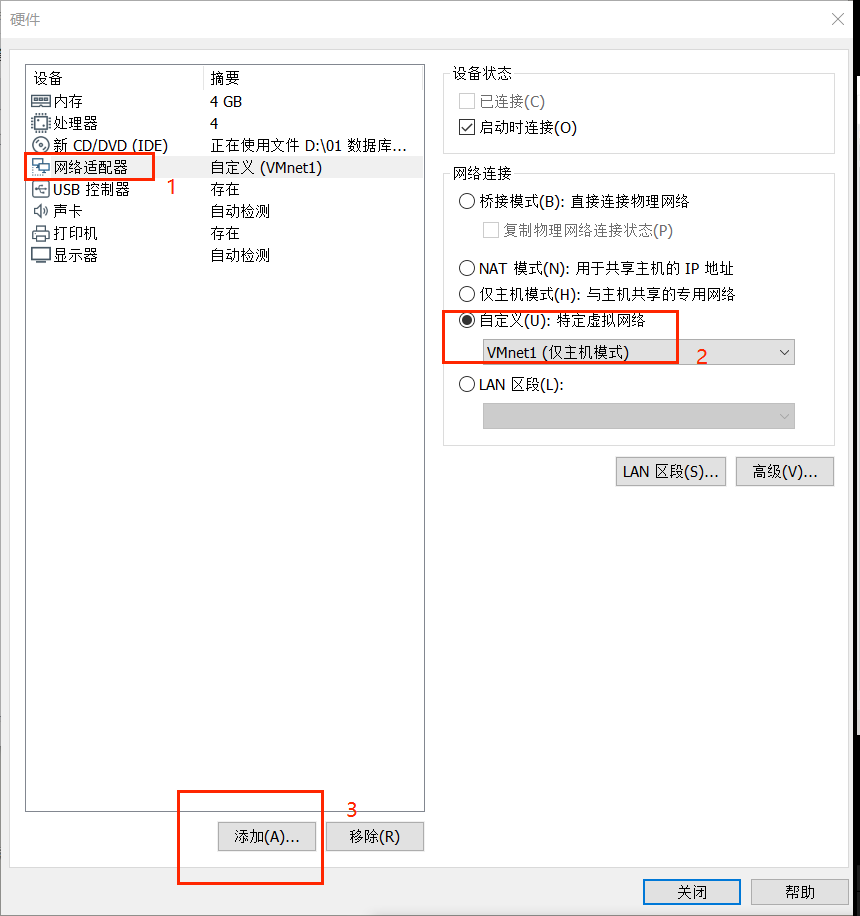

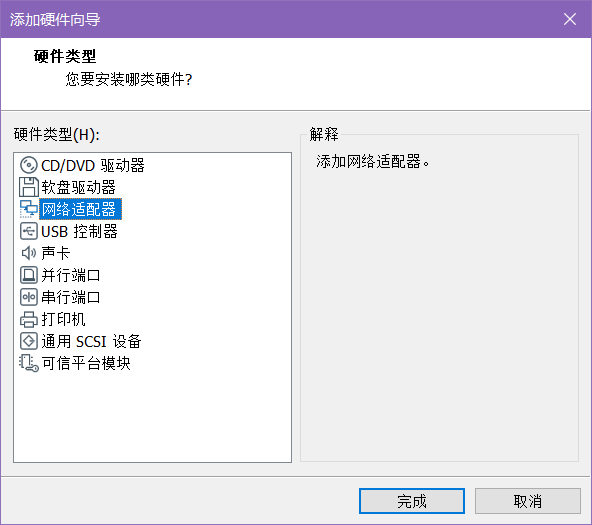

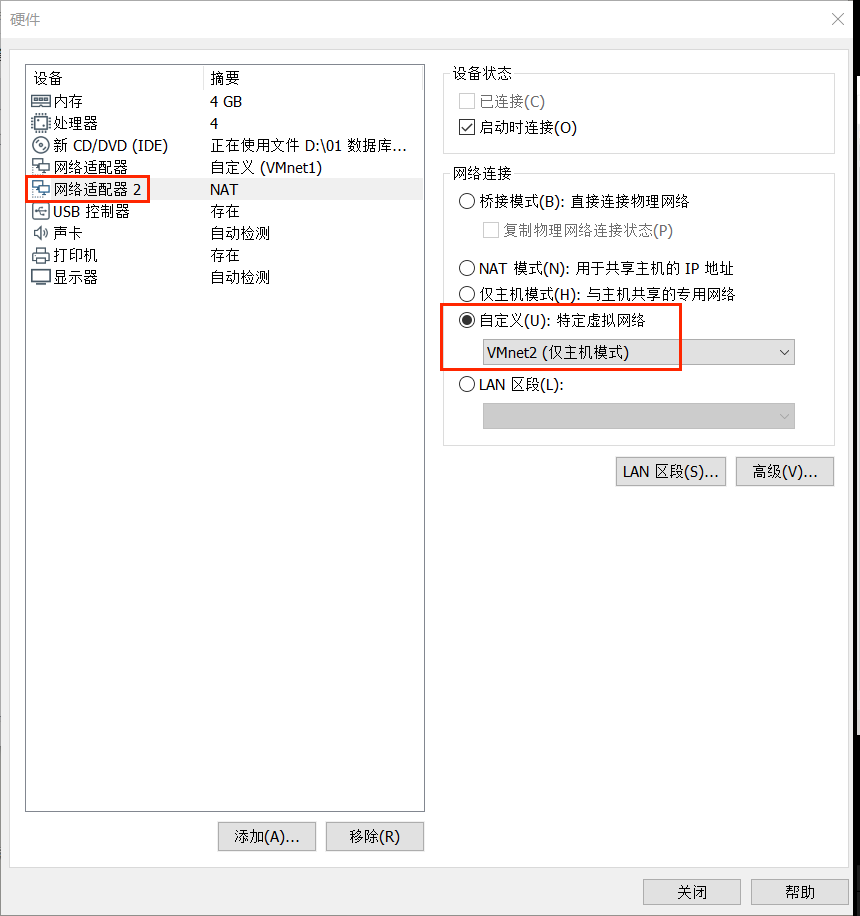

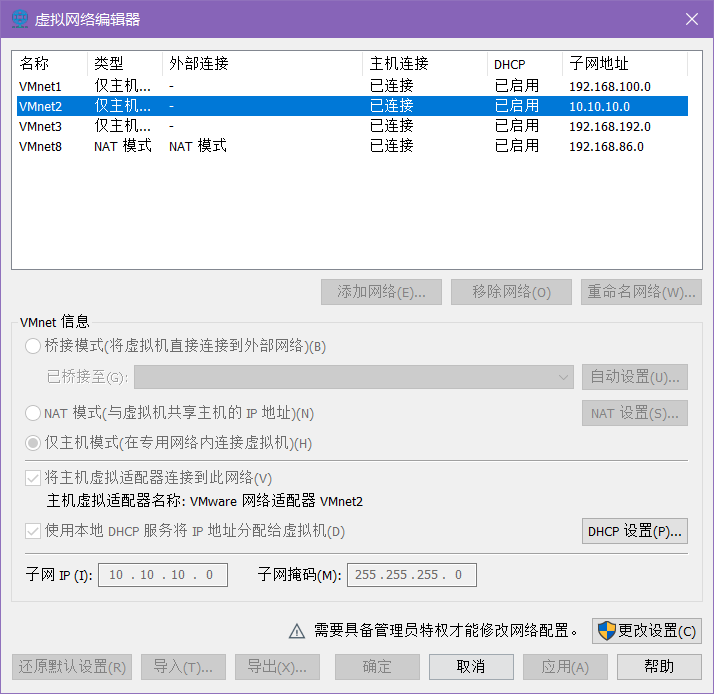

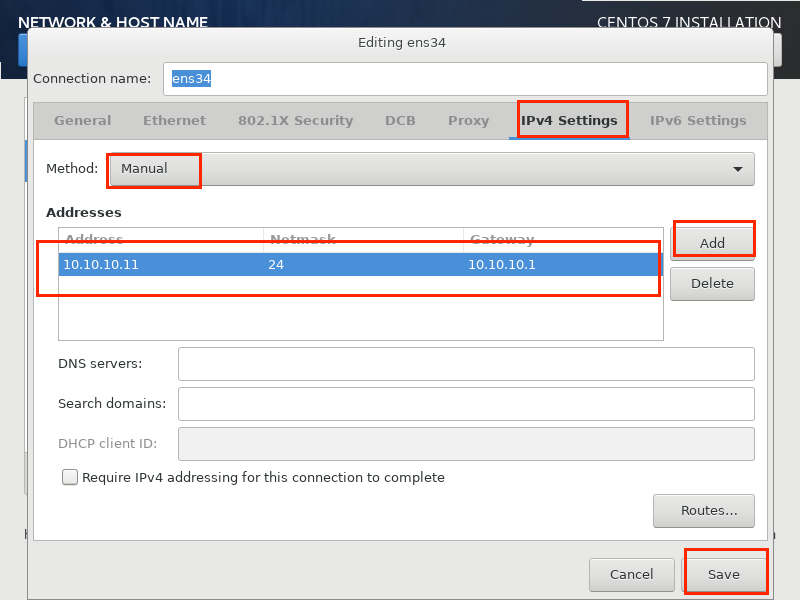

Add a network adapter

Log in as root

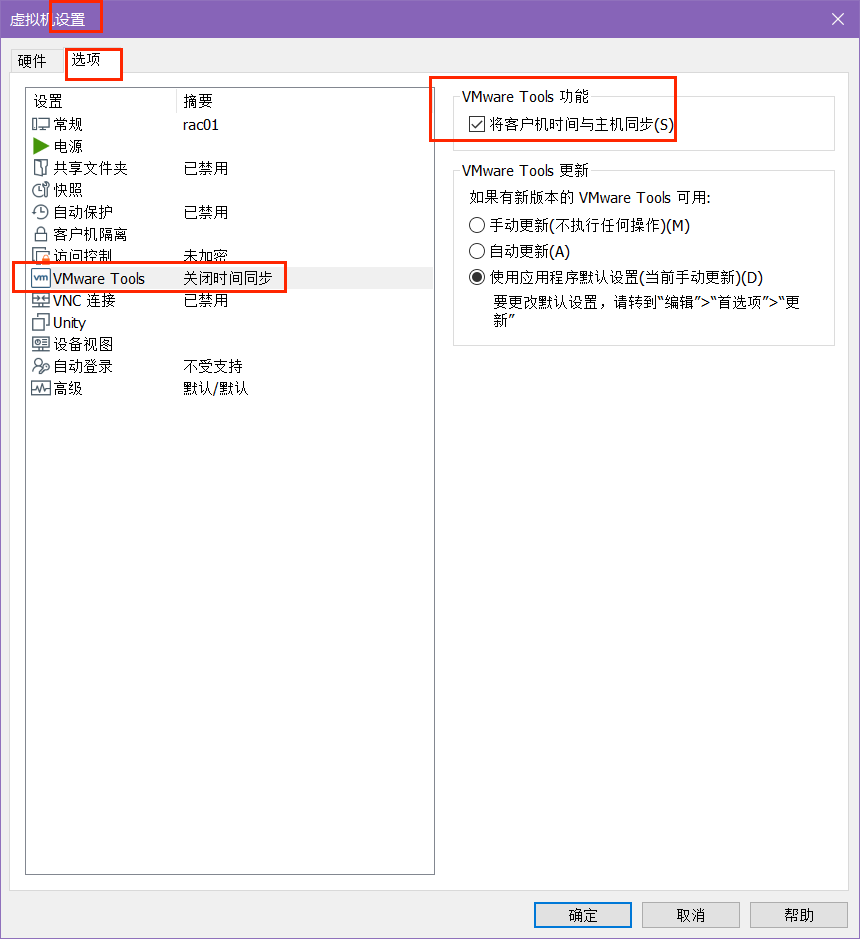

Clock synchronization

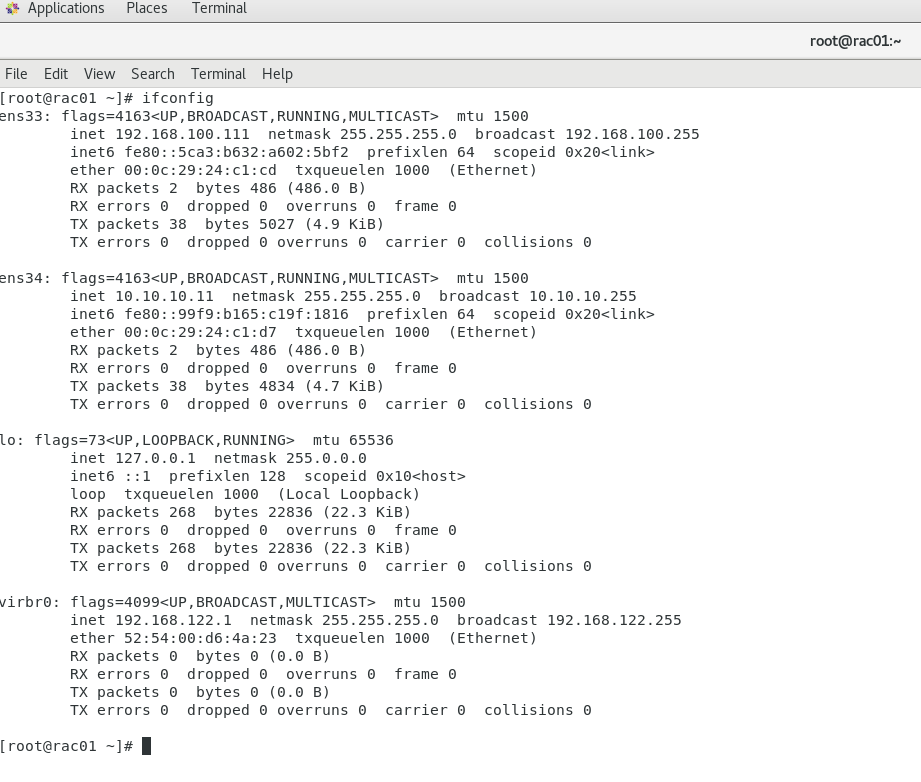

Check the IP address

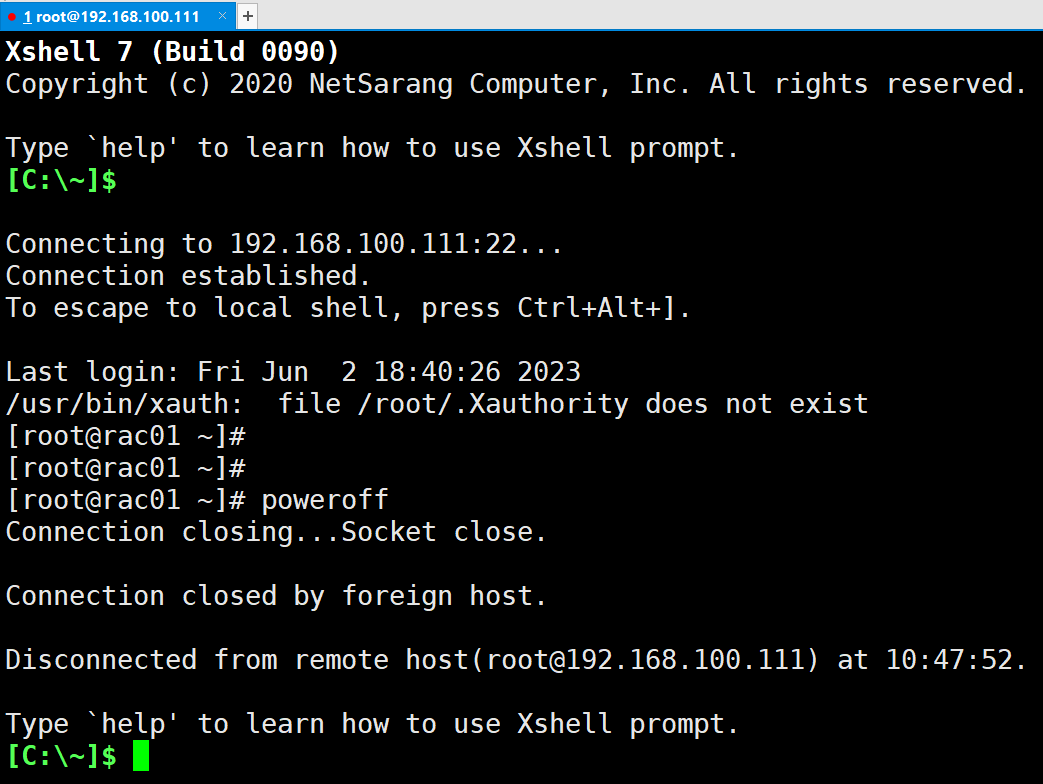

Shut down

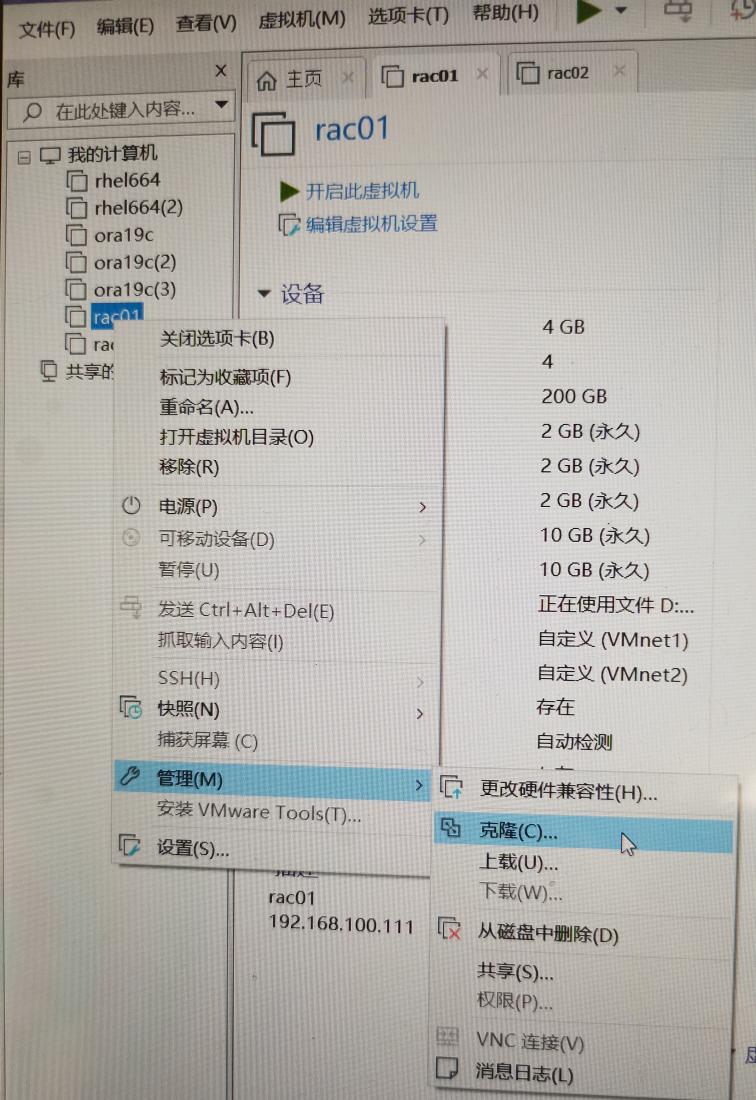

1.2. Clone a virtual machine (rac02)

1.2.1. Post-clone configuration

1.2.1.1. Method 1: log in to the VM as root and edit the files

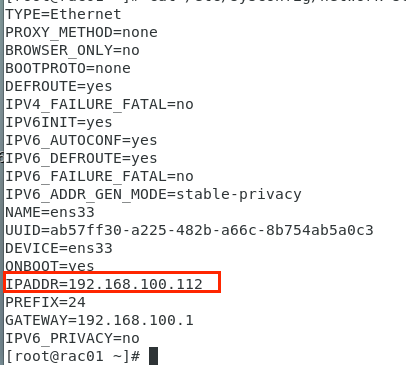

(1) Change the IP address

[root@rac01 ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens33

[root@rac02 ~]# vi /etc/sysconfig/network-scripts/ifcfg-ens34

[root@rac02 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens34

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens34

UUID=d1219c17-f7b0-44a7-86fd-187765f6e108

DEVICE=ens34

ONBOOT=yes

IPADDR=10.10.10.12

PREFIX=24

GATEWAY=10.10.10.1

IPV6_PRIVACY=no

(2) Change the hostname

[root@rac01 ~]# hostnamectl set-hostname rac02.oracle.com

1.2.1.2. Method 2: connect with Xshell

Change the IP address, the hostname, and /etc/hosts.

Run systemctl restart network to restart networking, or reboot.

Create a new connection

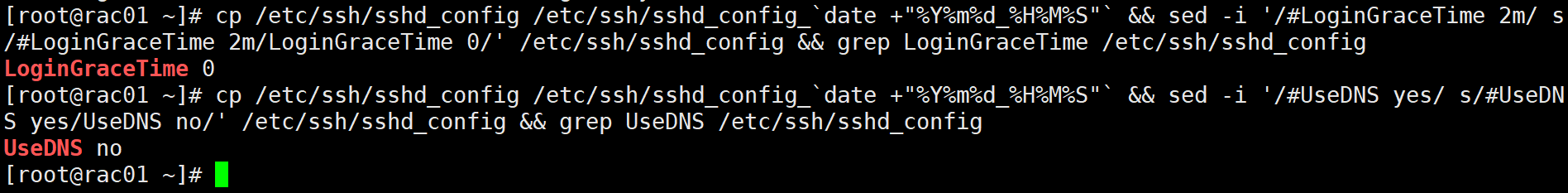

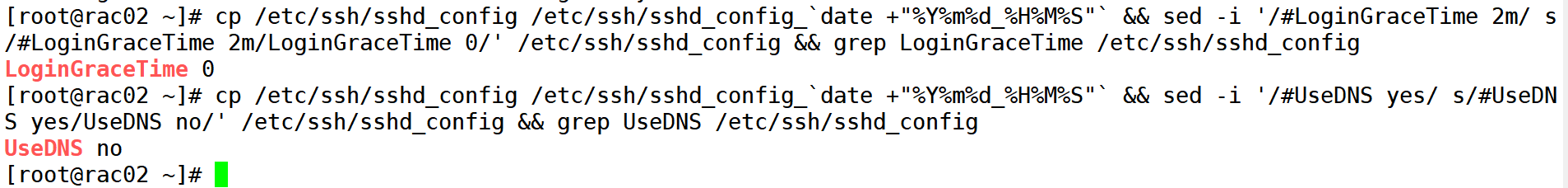

1.2.2. Speed up SSH login after installation

-- Set LoginGraceTime to 0 so that the login wait timeout is unlimited

cp /etc/ssh/sshd_config /etc/ssh/sshd_config_`date +"%Y%m%d_%H%M%S"` && sed -i '/#LoginGraceTime 2m/ s/#LoginGraceTime 2m/LoginGraceTime 0/' /etc/ssh/sshd_config && grep LoginGraceTime /etc/ssh/sshd_config

(back up, then replace)

-- Speed up SSH login by disabling DNS lookups

cp /etc/ssh/sshd_config /etc/ssh/sshd_config_`date +"%Y%m%d_%H%M%S"` && sed -i '/#UseDNS yes/ s/#UseDNS yes/UseDNS no/' /etc/ssh/sshd_config && grep UseDNS /etc/ssh/sshd_config

Restart the ssh service

systemctl restart sshd
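The sed commands above only match the exact stock comment line, so they do nothing if the file has already been edited. A minimal sketch of a more tolerant helper follows, assuming GNU sed; the function name `set_sshd_option` is hypothetical and not part of OpenSSH.

```shell
# set_sshd_option FILE KEY VALUE  (hypothetical helper, GNU sed assumed)
# Rewrites any commented or active KEY line to "KEY VALUE";
# appends the line when KEY does not appear in FILE at all.
set_sshd_option() {
    local file=$1 key=$2 value=$3
    if grep -Eq "^[#[:space:]]*${key}[[:space:]]" "$file"; then
        sed -i -E "s|^[#[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
    else
        printf '%s %s\n' "$key" "$value" >> "$file"
    fi
}

# Try it on a scratch copy before touching the live file:
#   cp /etc/ssh/sshd_config /tmp/sshd_config.test
#   set_sshd_option /tmp/sshd_config.test UseDNS no
#   set_sshd_option /tmp/sshd_config.test LoginGraceTime 0
```

Running the helper twice leaves a single `UseDNS no` line, so it is safe to repeat on both nodes.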

2. Shared storage devices

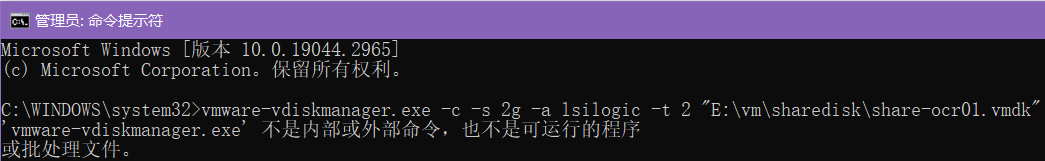

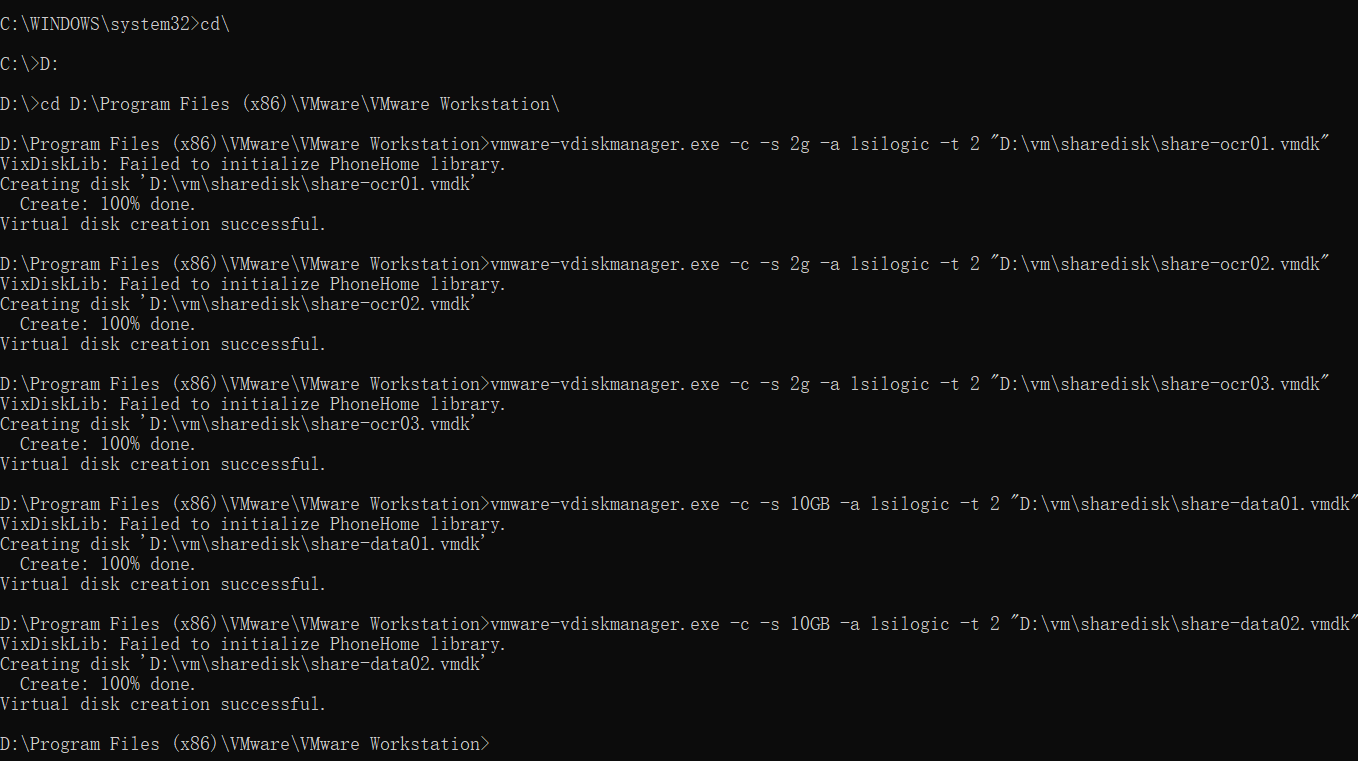

2.1. Create the shared disks from the command line

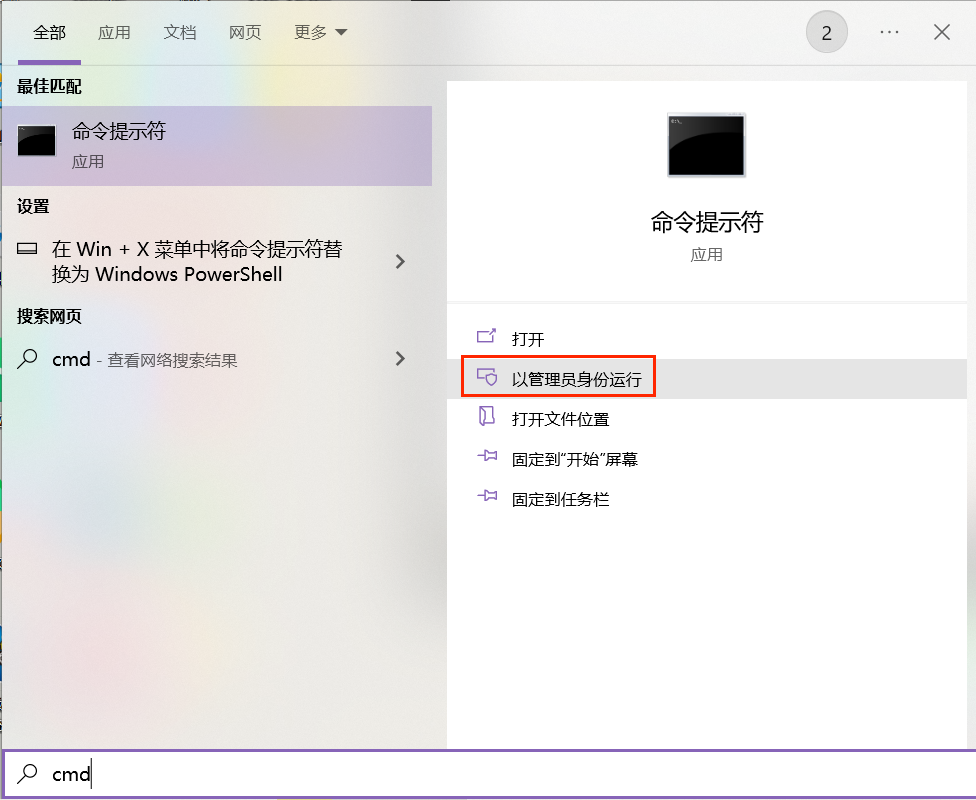

On Windows, create the directory D:\vm\sharedisk

vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "D:\vm\sharedisk\share-ocr01.vmdk"

vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "D:\vm\sharedisk\share-ocr02.vmdk"

vmware-vdiskmanager.exe -c -s 2g -a lsilogic -t 2 "D:\vm\sharedisk\share-ocr03.vmdk"

vmware-vdiskmanager.exe -c -s 10GB -a lsilogic -t 2 "D:\vm\sharedisk\share-data01.vmdk"

vmware-vdiskmanager.exe -c -s 10GB -a lsilogic -t 2 "D:\vm\sharedisk\share-data02.vmdk"

2.2. Start cmd with administrator privileges

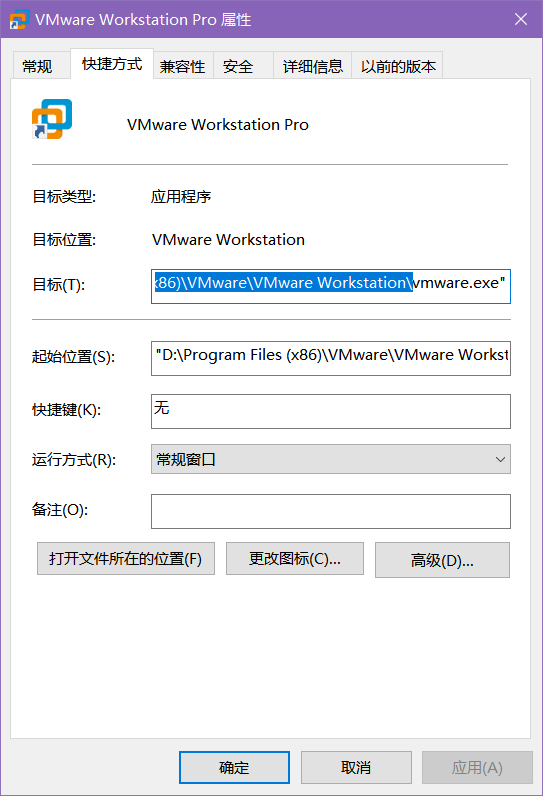

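2.3. Right-click the VMware desktop icon, choose Properties, and copy the installation path

2.4. In cmd, change to the copied VMware path and run the commands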

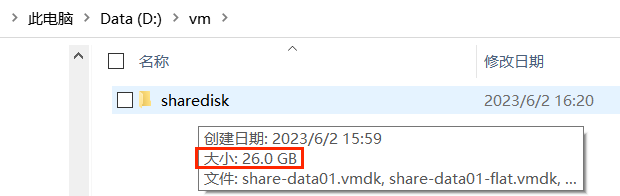

2.5. Open the D:\vm\sharedisk directory and check the file sizes

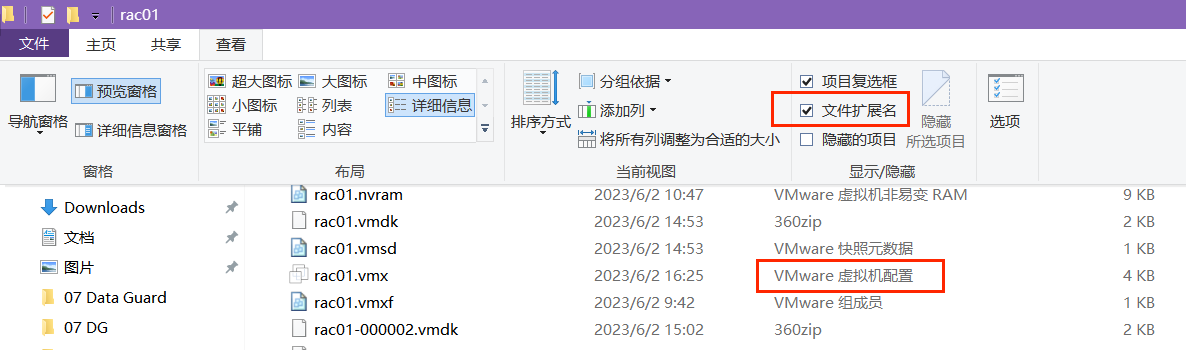

2.6. Shut down both virtual machines and edit their .vmx files

[root@rac01 ~]# poweroff

[root@rac02 ~]# poweroff

rac01 and rac02

Open rac01.vmx and rac02.vmx in Notepad.

Append the following at the end:

#shared disks configure

diskLib.dataCacheMaxSize=0

diskLib.dataCacheMaxReadAheadSize=0

diskLib.dataCacheMinReadAheadSize=0

diskLib.dataCachePageSize=4096

diskLib.maxUnsyncedWrites = "0"

disk.locking = "FALSE"

scsi1.sharedBus = "virtual"

scsi1.present = "TRUE"

scsi1.virtualDev = "lsilogic"

scsi1:0.mode = "independent-persistent"

scsi1:0.deviceType = "disk"

scsi1:0.present = "TRUE"

scsi1:0.fileName = "D:\vm\sharedisk\share-ocr01.vmdk"

scsi1:0.redo = ""

scsi1:1.mode = "independent-persistent"

scsi1:1.deviceType = "disk"

scsi1:1.present = "TRUE"

scsi1:1.fileName = "D:\vm\sharedisk\share-ocr02.vmdk"

scsi1:1.redo = ""

scsi1:2.mode = "independent-persistent"

scsi1:2.deviceType = "disk"

scsi1:2.present = "TRUE"

scsi1:2.fileName = "D:\vm\sharedisk\share-ocr03.vmdk"

scsi1:2.redo = ""

scsi1:3.mode = "independent-persistent"

scsi1:3.deviceType = "disk"

scsi1:3.present = "TRUE"

scsi1:3.fileName = "D:\vm\sharedisk\share-data01.vmdk"

scsi1:3.redo = ""

scsi1:4.mode = "independent-persistent"

scsi1:4.deviceType = "disk"

scsi1:4.present = "TRUE"

scsi1:4.fileName = "D:\vm\sharedisk\share-data02.vmdk"

scsi1:4.redo = ""
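The five per-disk stanzas differ only in the index and the file name, so they can be generated instead of typed by hand. A rough sketch, assuming a bash-compatible shell is available somewhere (for example Git Bash on the Windows host); `gen_vmx_disks` is a hypothetical helper, and its output is meant to be pasted into both .vmx files.

```shell
# gen_vmx_disks DIR DISK...  (hypothetical helper)
# Prints one scsi1:N stanza per disk name, numbering from 0.
gen_vmx_disks() {
    local dir=$1 idx=0 disk
    shift
    for disk in "$@"; do
        printf 'scsi1:%d.mode = "independent-persistent"\n' "$idx"
        printf 'scsi1:%d.deviceType = "disk"\n' "$idx"
        printf 'scsi1:%d.present = "TRUE"\n' "$idx"
        # %s arguments are printed verbatim, so Windows backslashes survive.
        printf 'scsi1:%d.fileName = "%s\\%s.vmdk"\n' "$idx" "$dir" "$disk"
        printf 'scsi1:%d.redo = ""\n' "$idx"
        idx=$((idx + 1))
    done
}

gen_vmx_disks 'D:\vm\sharedisk' share-ocr01 share-ocr02 share-ocr03 share-data01 share-data02
```

The controller-level lines (`scsi1.present`, `scsi1.sharedBus`, and the `diskLib` settings) are not generated here and still have to be added once per file.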

2.7. Power on and check the disks

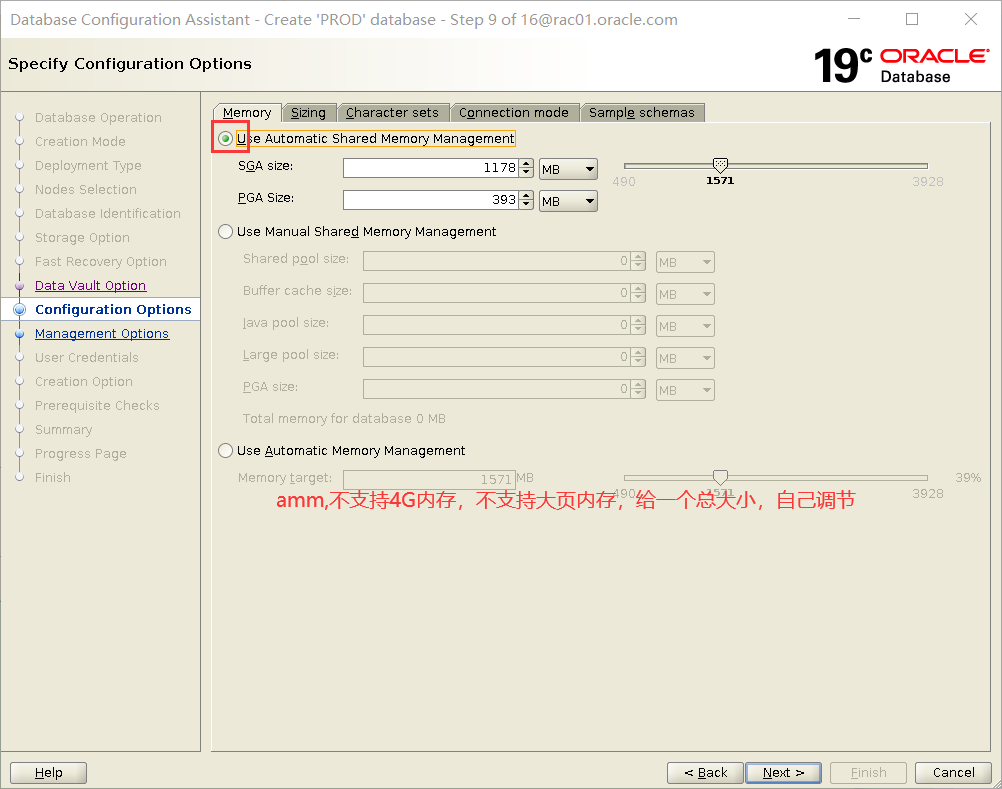

Note: ASMM supports HugePages.

Note: AMM should not exceed 4 GB, must be smaller than /dev/shm, and does not support HugePages.

[root@rac01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 200G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 198G 0 part

├─centos-root 253:0 0 60G 0 lvm /

├─centos-swap 253:1 0 8G 0 lvm [SWAP]

└─centos-u01 253:2 0 130G 0 lvm /u01

sdb 8:16 0 2G 0 disk

sdc 8:32 0 2G 0 disk

sdd 8:48 0 2G 0 disk

sde 8:64 0 10G 0 disk

sdf 8:80 0 10G 0 disk

sr0 11:0 1 4.4G 0 rom

[root@rac01 ~]# fdisk -l

Disk /dev/sdc: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 214.7 GB, 214748364800 bytes, 419430400 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x0001599b

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 2099199 1048576 83 Linux

/dev/sda2 2099200 417341439 207621120 8e Linux LVM

Disk /dev/sde: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdf: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/centos-root: 64.4 GB, 64424509440 bytes, 125829120 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/centos-swap: 8589 MB, 8589934592 bytes, 16777216 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/centos-u01: 139.6 GB, 139586437120 bytes, 272629760 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@rac02 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 200G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 198G 0 part

├─centos-root 253:0 0 60G 0 lvm /

├─centos-swap 253:1 0 8G 0 lvm [SWAP]

└─centos-u01 253:2 0 130G 0 lvm /u01

sdb 8:16 0 2G 0 disk

sdc 8:32 0 2G 0 disk

sdd 8:48 0 2G 0 disk

sde 8:64 0 10G 0 disk

sdf 8:80 0 10G 0 disk

sr0 11:0 1 4.4G 0 rom

[root@rac02 ~]# fdisk -l

Disk /dev/sdc: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sde: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 2147 MB, 2147483648 bytes, 4194304 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdf: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 214.7 GB, 214748364800 bytes, 419430400 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x0001599b

Device Boot Start End Blocks Id System

/dev/sda1 * 2048 2099199 1048576 83 Linux

/dev/sda2 2099200 417341439 207621120 8e Linux LVM

Disk /dev/mapper/centos-root: 64.4 GB, 64424509440 bytes, 125829120 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/centos-swap: 8589 MB, 8589934592 bytes, 16777216 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/centos-u01: 139.6 GB, 139586437120 bytes, 272629760 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@rac02 ~]#

3. RAC installation preparation

3.1. Hardware configuration and system status

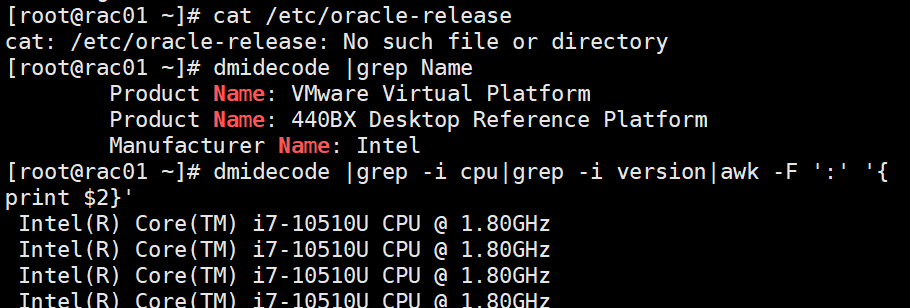

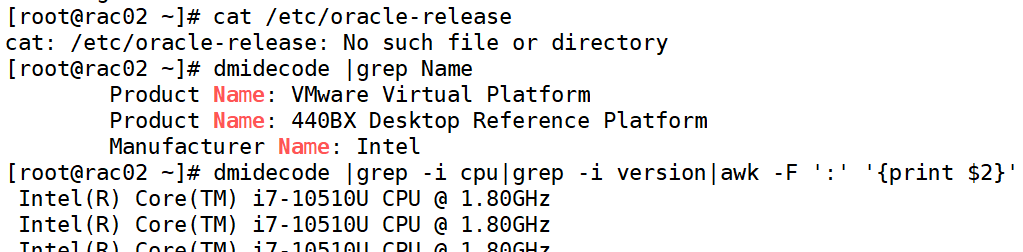

3.1.1. Check the operating system

cat /etc/oracle-release

dmidecode |grep Name

CPU:

dmidecode |grep -i cpu|grep -i version|awk -F ':' '{print $2}'

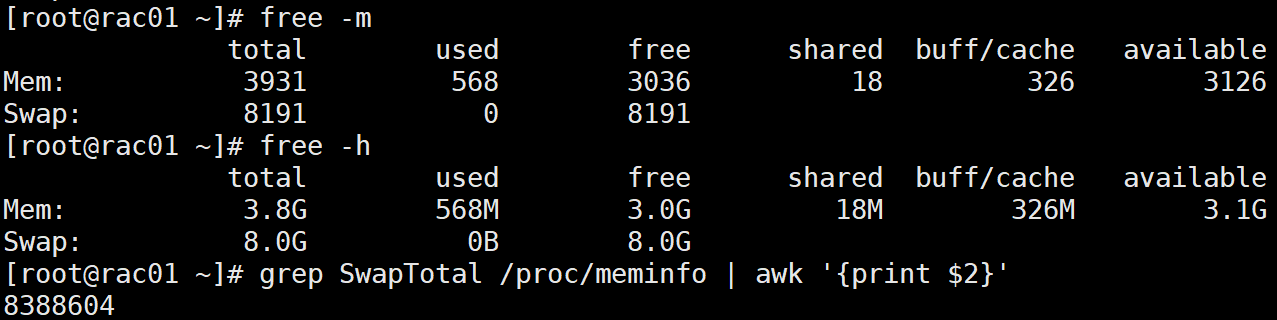

3.1.2. Check memory

[root@rac01 ~]# dmidecode|grep -A5 "Memory Device"|grep Size|grep -v No |grep -v Range

Size: 4096 MB

[root@rac02 ~]# dmidecode|grep -A5 "Memory Device"|grep Size|grep -v No |grep -v Range

Size: 4096 MB

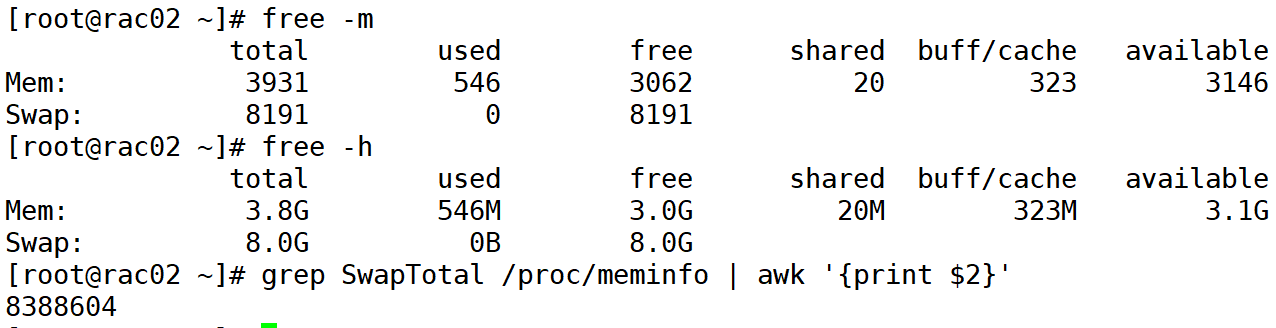

3.1.3. Check swap

free -m

free -h

3.1.4. Check the time and time zone

(1) Check the time

[root@rac01 ~]# date

Sat Jun 3 16:55:12 CST 2023

(2) Time zone

[root@rac01 ~]# timedatectl status|grep Local

Local time: Sat 2023-06-03 16:55:46 CST

[root@rac01 ~]# date -R

Sat, 03 Jun 2023 16:55:57 +0800

[root@rac01 ~]# timedatectl | grep "Asia/Shanghai"

Time zone: Asia/Shanghai (CST, +0800)

Set the time zone

[root@rac01 ~]# timedatectl set-timezone "Asia/Shanghai" && timedatectl status|grep Local

Local time: Sat 2023-06-03 17:00:14 CST

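Do the same on rac02.

3.2. Hostname and the hosts file

3.2.1. Check and set the hostname

Check: hostnamectl status

Set: hostnamectl set-hostname rac01.oracle.com

Note: a hostname may contain lowercase letters, digits, and hyphens (-), and must start with a lowercase letter.

3.2.2. Adjust the hosts file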

[root@rac01 ~]# cp /etc/hosts /etc/hosts_`date +"%Y%m%d_%H%M%S"`

[root@rac01 ~]# echo '#public ip

> 192.168.100.111 rac01

> 192.168.100.112 rac02

> #private ip

> 10.10.10.11 rac01-priv

> 10.10.10.12 rac02-priv

> #vip

> 192.168.100.113 rac01-vip

> 192.168.100.114 rac02-vip

> #scanip

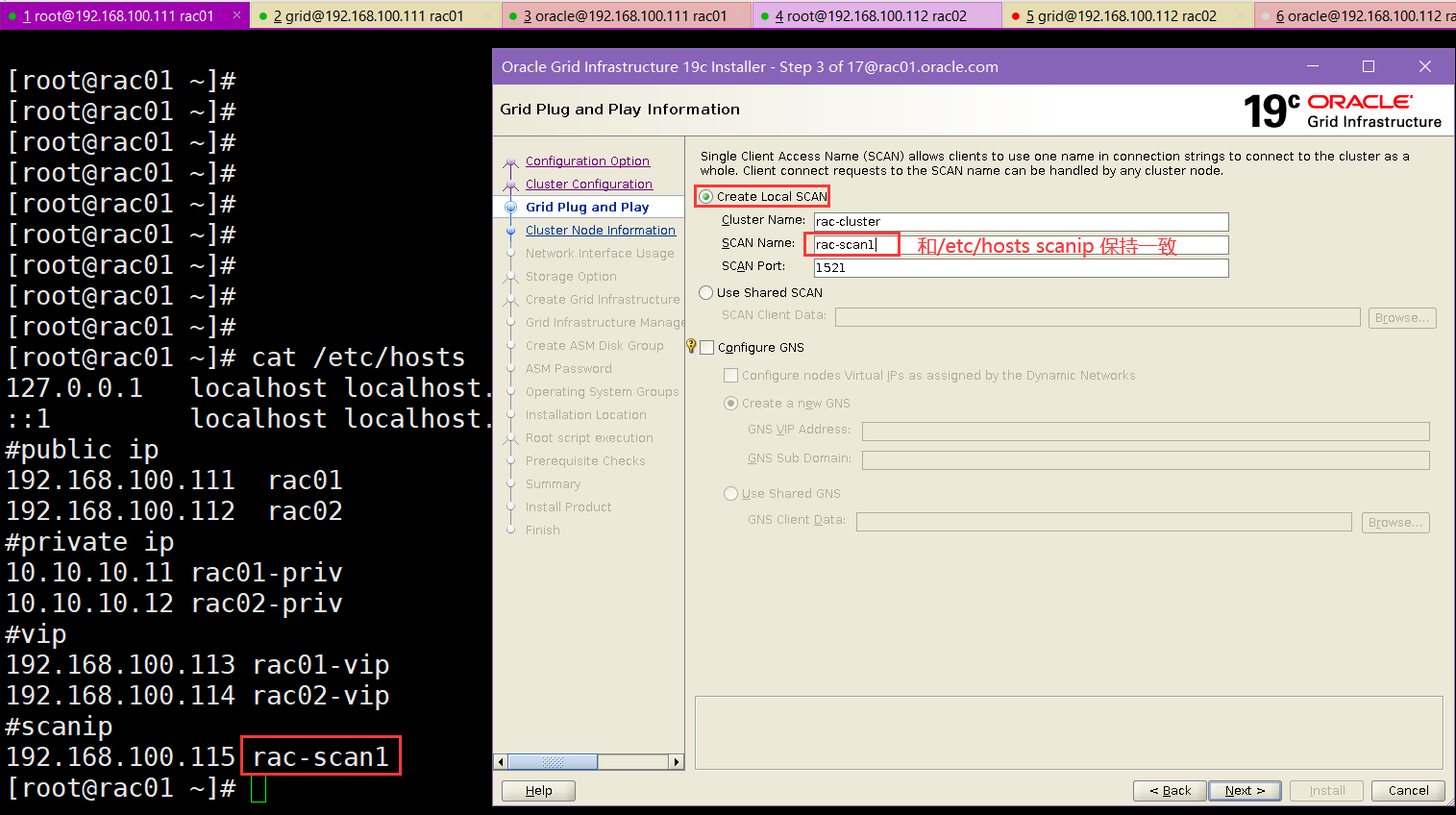

> 192.168.100.115 rac-scan1'>> /etc/hosts

[root@rac01 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#public ip

192.168.100.111 rac01

192.168.100.112 rac02

#private ip

10.10.10.11 rac01-priv

10.10.10.12 rac02-priv

#vip

192.168.100.113 rac01-vip

192.168.100.114 rac02-vip

#scanip

192.168.100.115 rac-scan1

[root@rac02 ~]# cp /etc/hosts /etc/hosts_`date +"%Y%m%d_%H%M%S"`

[root@rac02 ~]# echo '#public ip

> 192.168.100.111 rac01

> 192.168.100.112 rac02

> #private ip

> 10.10.10.11 rac01-priv

> 10.10.10.12 rac02-priv

> #vip

> 192.168.100.113 rac01-vip

> 192.168.100.114 rac02-vip

> #scanip

> 192.168.100.115 rac-scan1'>> /etc/hosts

[root@rac02 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

#public ip

192.168.100.111 rac01

192.168.100.112 rac02

#private ip

10.10.10.11 rac01-priv

10.10.10.12 rac02-priv

#vip

192.168.100.113 rac01-vip

192.168.100.114 rac02-vip

#scanip

192.168.100.115 rac-scan1

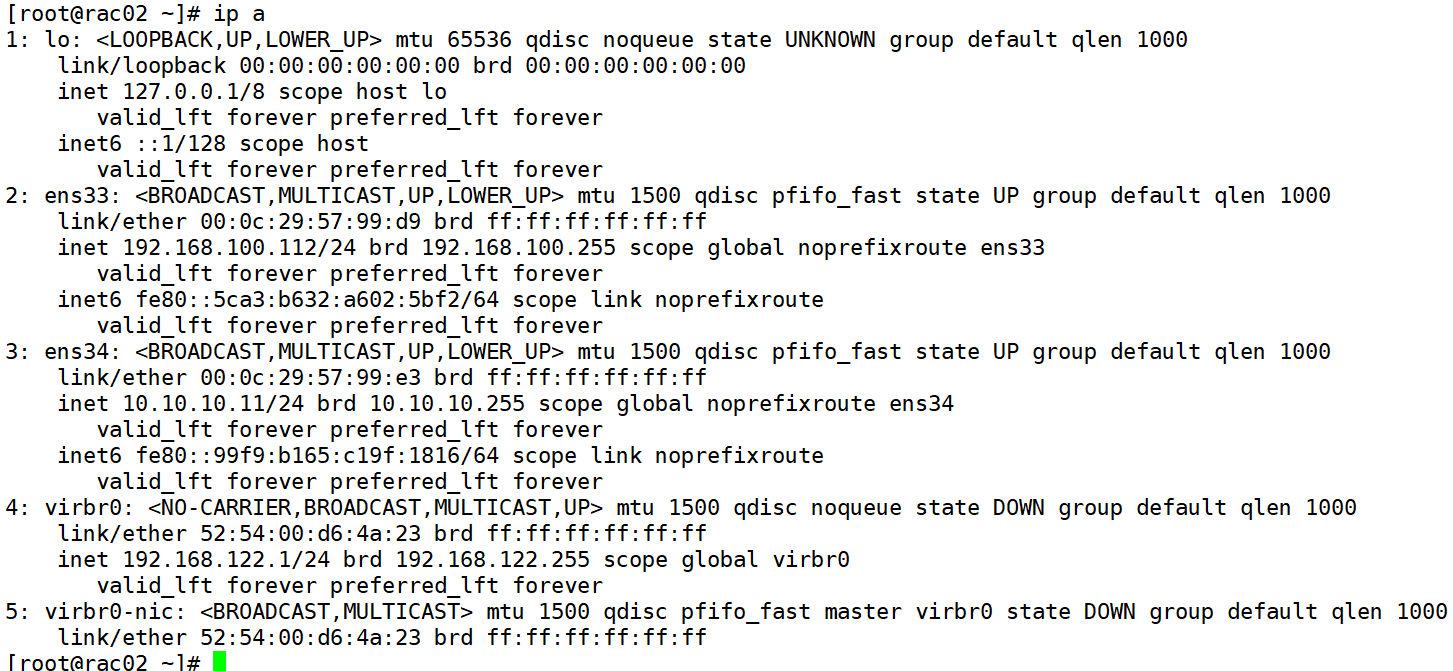
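Because the echo command appends unconditionally, running it a second time duplicates every entry. A small sketch of an idempotent alternative follows; `add_host_entry` is a hypothetical helper name, and the file is passed as an argument so it can be tried on a copy first.

```shell
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" unless a non-comment line already maps NAME.
add_host_entry() {
    local file=$1 ip=$2 name=$3
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0    # name already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example against a scratch copy (addresses taken from the plan above):
#   cp /etc/hosts /tmp/hosts.test
#   add_host_entry /tmp/hosts.test 192.168.100.111 rac01
#   add_host_entry /tmp/hosts.test 10.10.10.11 rac01-priv
```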

3.3. (Virtual) NIC configuration and the network file

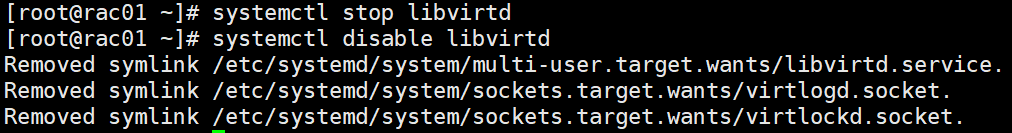

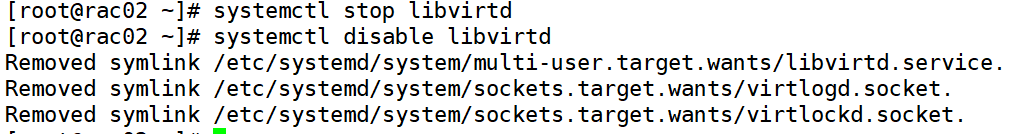

3.3.1. (Optional) Disable the virtual NIC

systemctl stop libvirtd

systemctl disable libvirtd

Note: the operating system must be rebooted afterwards.

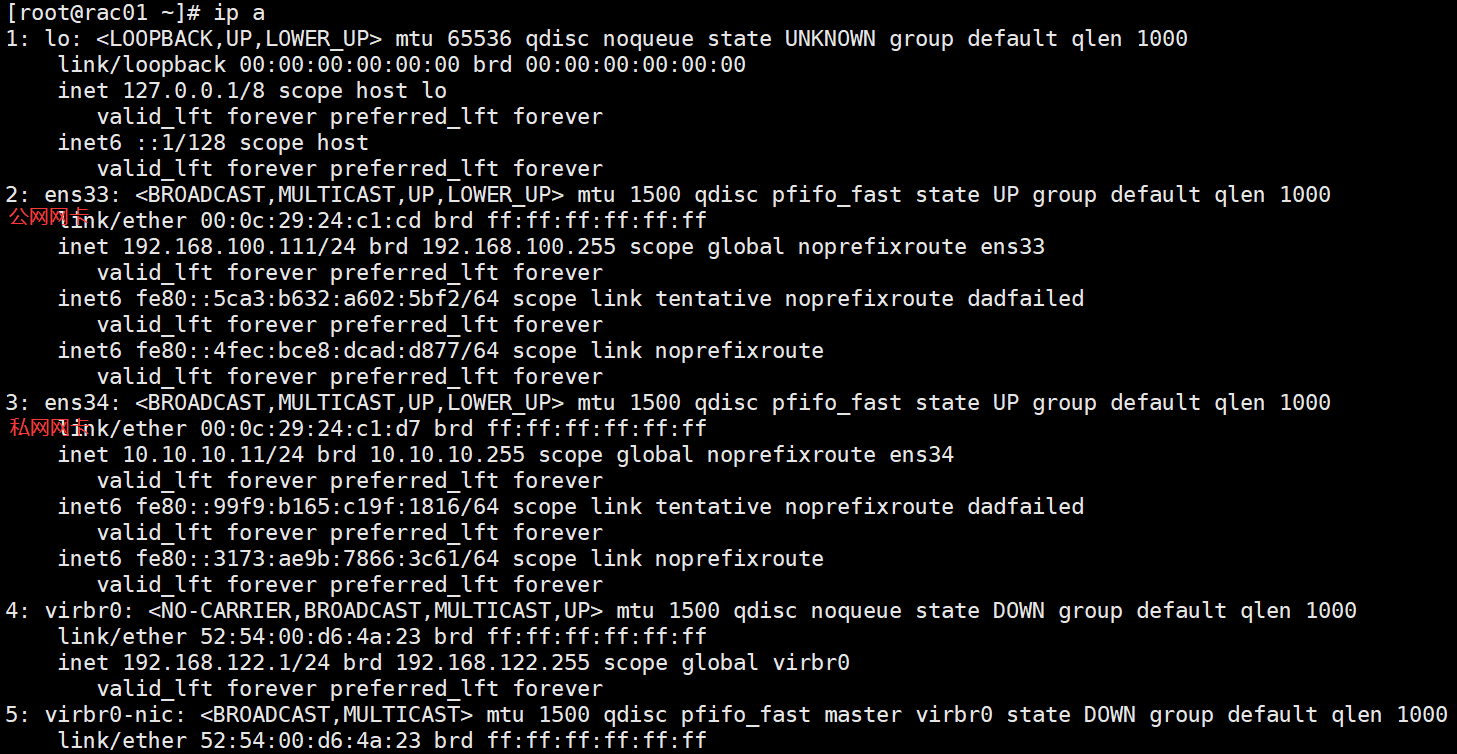

3.3.2. Check the NIC names and IP addresses on each node

[root@oracle19c-rac1 ~]# ip a

Note: confirm that the NIC names are identical on both nodes; otherwise the installation will run into problems.

If the names differ between the two nodes, rename the interfaces on one node with udev rules like the following:

cat /etc/udev/rules.d/70-persistent-net.rules

ACTION=="add", SUBSYSTEM=="net", DRIVERS=="?*", ATTR{type}=="1", ATTR{address}=="00:50:56:86:64:82", KERNEL=="ens256", NAME="ens224"

ACTION=="add", SUBSYSTEM=="net", DRIVERS=="?*", ATTR{type}=="1", ATTR{address}=="00:50:56:86:05:a1", KERNEL=="ens161", NAME="ens192"

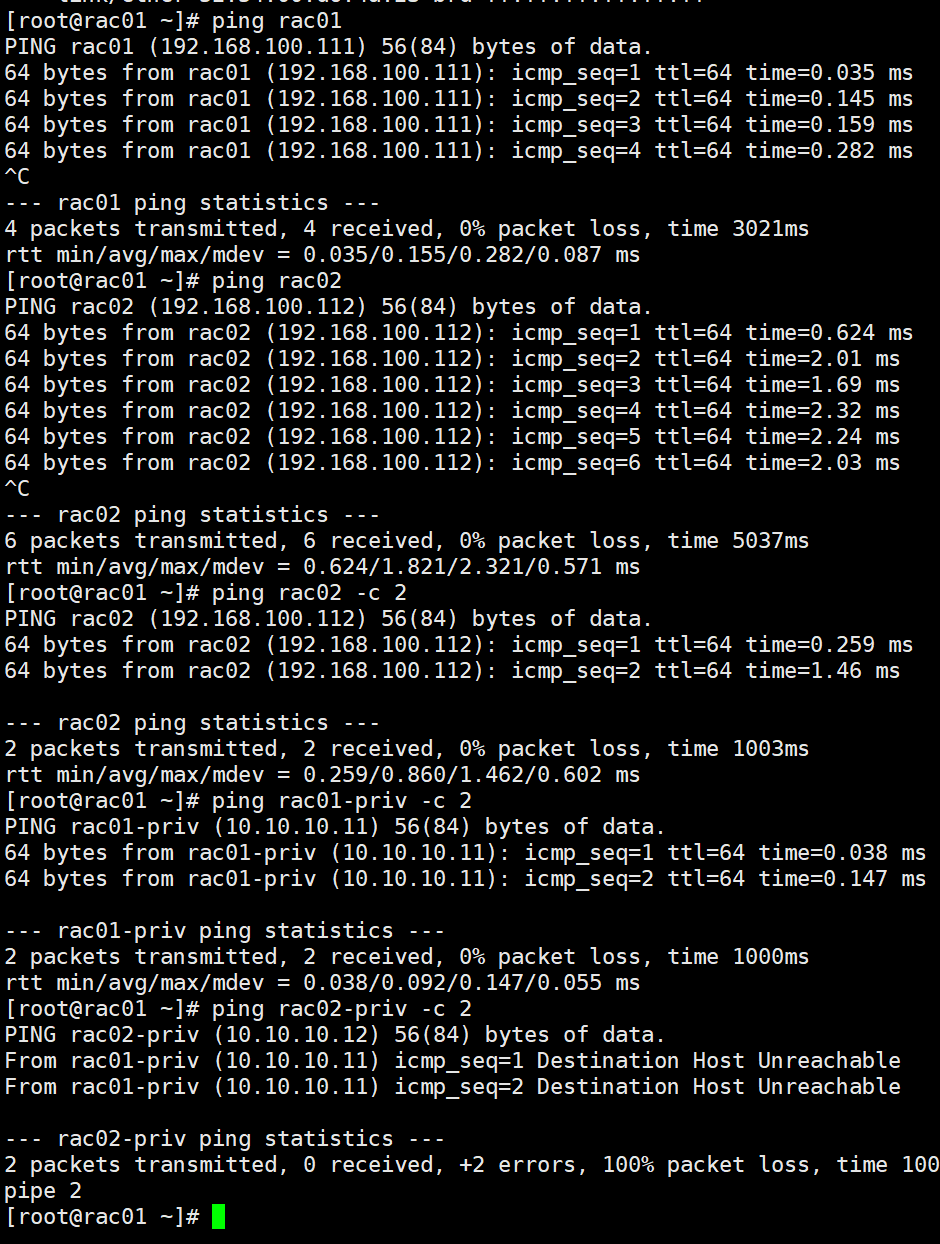

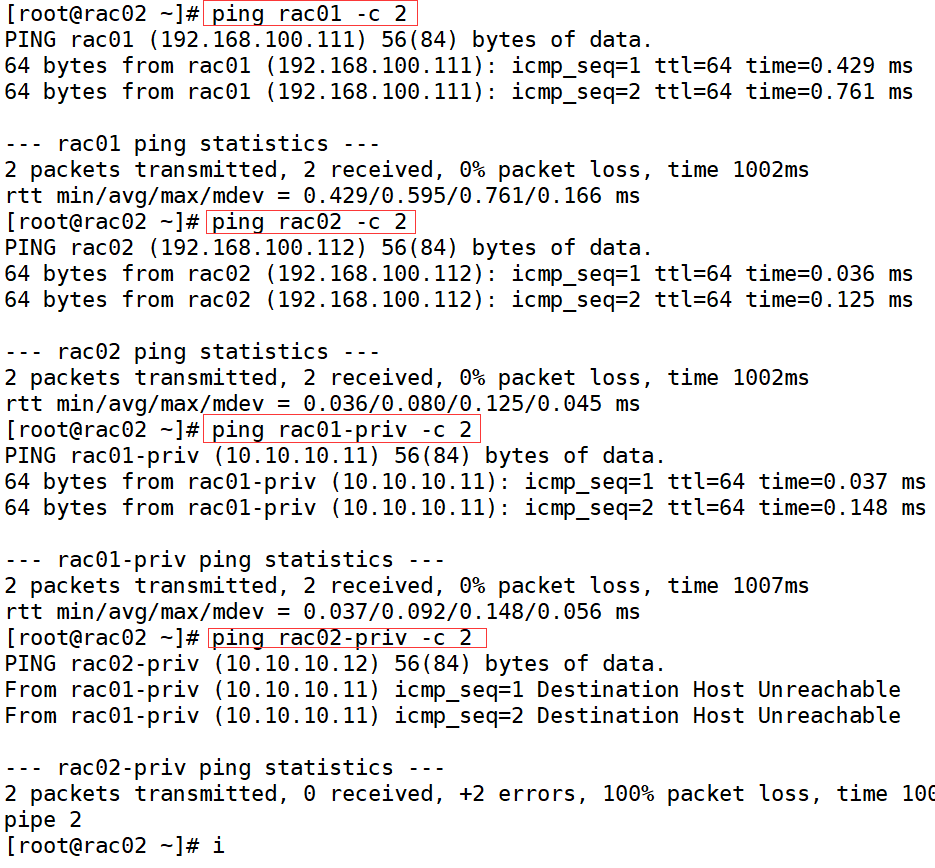

3.3.3. Test connectivity

ping rac01 -c 2

ping rac02 -c 2

ping rac01-priv -c 2

ping rac02-priv -c 2

The SCAN IP is not pinged because it is a virtual address; it cannot be reached until the cluster has been installed.

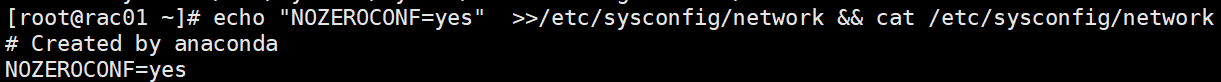
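The four ping commands can be wrapped in a loop that reports a result per host. This is only a sketch: `check_reachable` is a hypothetical helper, and `PING_CMD` is an invented override hook (handy for dry runs), not a standard variable.

```shell
# check_reachable HOST...  (hypothetical helper)
# Uses "ping -c 2" unless PING_CMD is set to another command.
check_reachable() {
    local host rc=0
    for host in "$@"; do
        if ${PING_CMD:-ping -c 2} "$host" > /dev/null 2>&1; then
            echo "$host OK"
        else
            echo "$host FAILED"
            rc=1
        fi
    done
    return "$rc"
}

# On either node:
#   check_reachable rac01 rac02 rac01-priv rac02-priv
```

The function returns non-zero if any host fails, so it can gate later steps in a script.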

3.3.4. Adjust the network file

When running an Oracle cluster, Zero Configuration Networking can also cause communication problems between nodes, so it should be disabled as well.

Without zeroconf, a network administrator must set up network services, such as Dynamic Host Configuration Protocol (DHCP) and Domain Name System (DNS), or configure each computer's network settings manually.

When the network is configured in the usual way, zeroconf can safely be disabled.

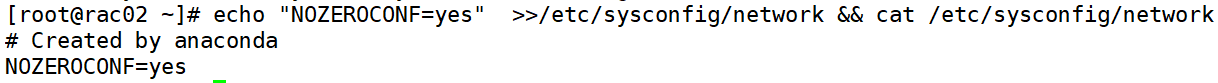

Run on both nodes:

echo "NOZEROCONF=yes" >>/etc/sysconfig/network && cat /etc/sysconfig/network
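A plain `echo >>` adds another copy of the line each time it is run. One possible guard, sketched below with a hypothetical helper named `append_once`, appends a line only when the exact line is not already in the file.

```shell
# append_once FILE LINE  (hypothetical helper)
# Appends LINE only if FILE does not already contain that exact line.
append_once() {
    local file=$1 line=$2
    grep -Fxq -- "$line" "$file" 2> /dev/null || printf '%s\n' "$line" >> "$file"
}

# As root on both nodes:
#   append_once /etc/sysconfig/network "NOZEROCONF=yes"
```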

3.4. Adjust /dev/shm

df -Th

cp /etc/fstab /etc/fstab_`date +"%Y%m%d_%H%M%S"`

echo "tmpfs /dev/shm tmpfs rw,exec,size=4G 0 0">>/etc/fstab

cat /etc/fstab

mount -o remount /dev/shm

df -h

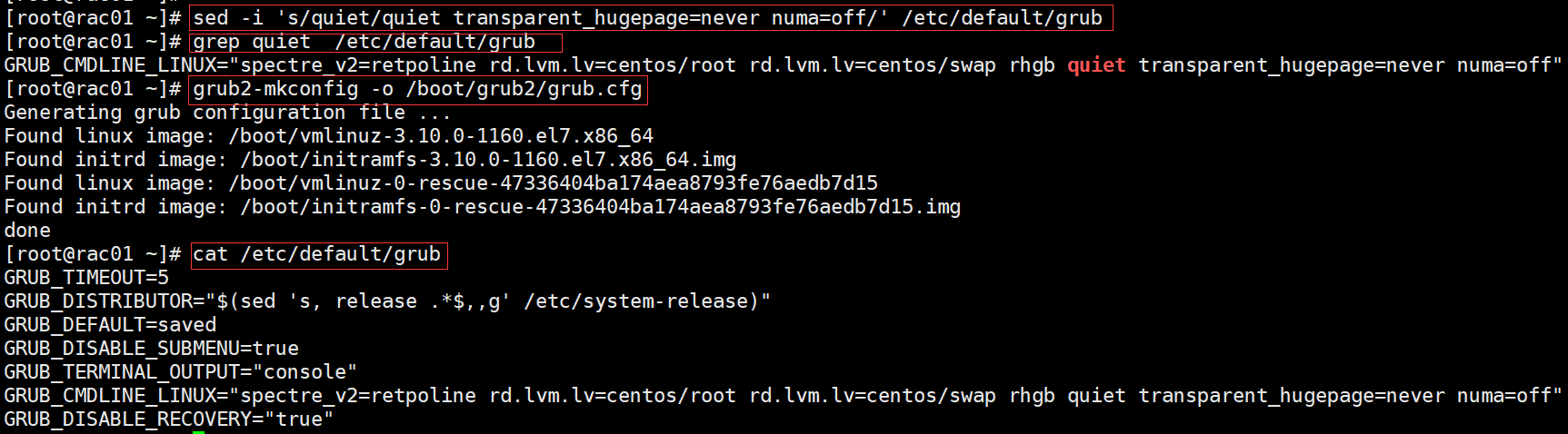

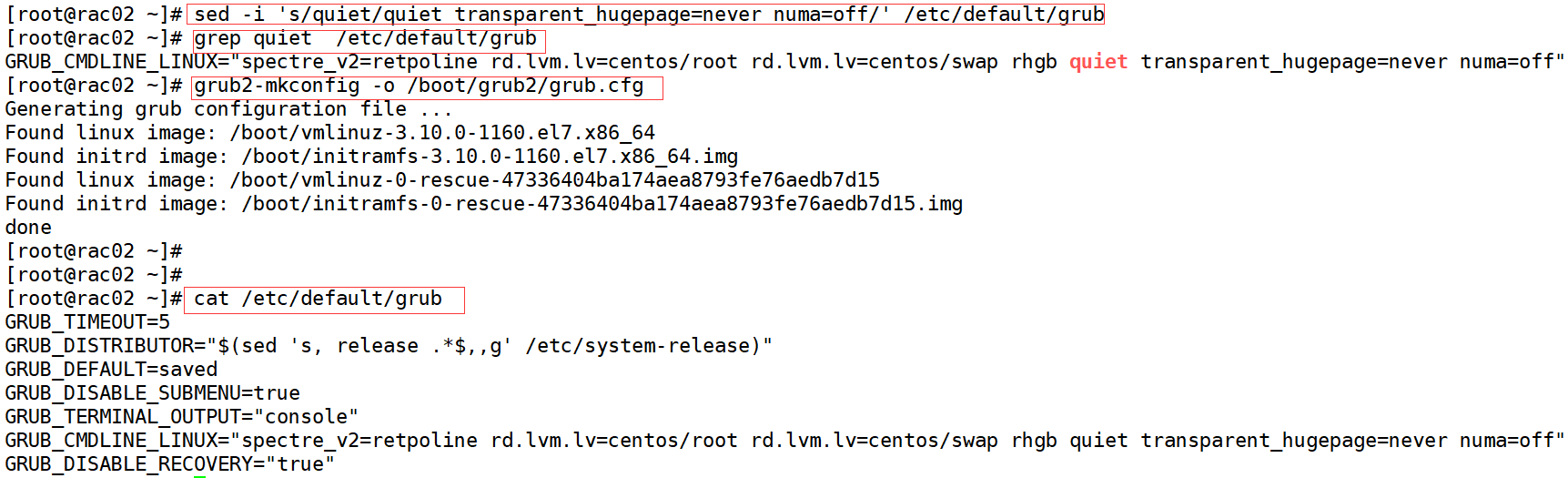

3.5. Disable THP (Transparent HugePages) and NUMA (non-uniform memory access)

Check:

cat /sys/kernel/mm/transparent_hugepage/enabled

cat /sys/kernel/mm/transparent_hugepage/defrag

sed -i 's/quiet/quiet transparent_hugepage=never numa=off/' /etc/default/grub

grep quiet /etc/default/grub

grub2-mkconfig -o /boot/grub2/grub.cfg

Reboot and check that the change has taken effect.

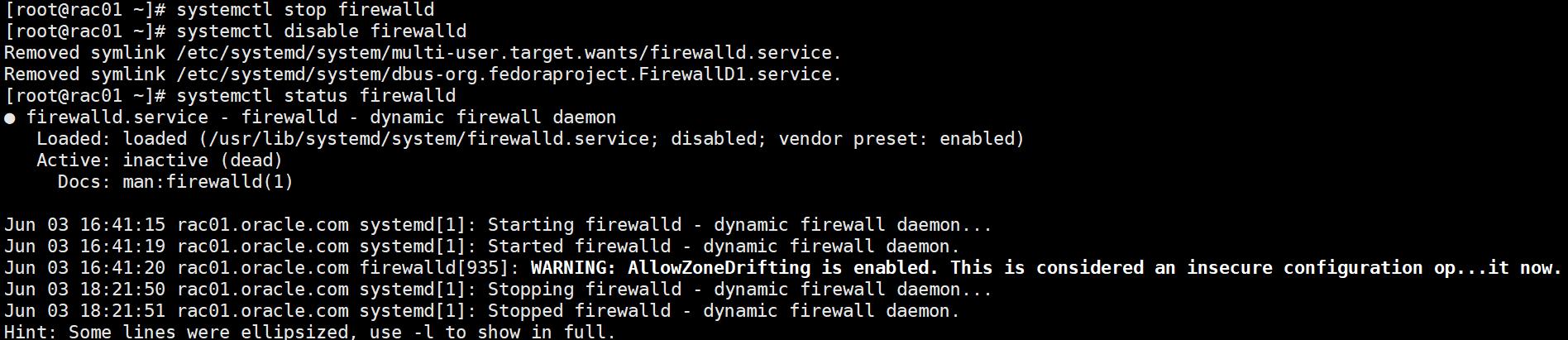

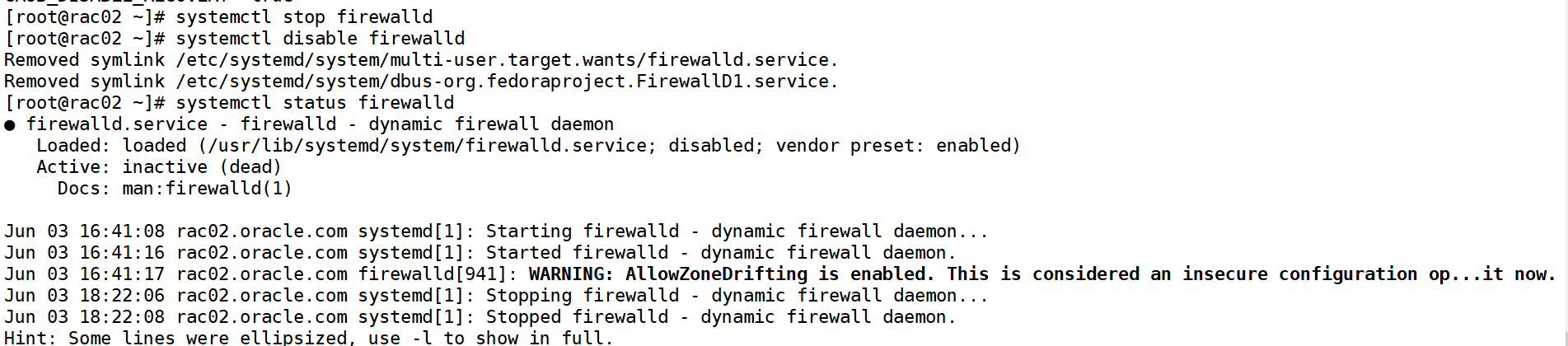
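After the reboot, the active THP value is the token shown in square brackets, and the kernel command line should contain the two added arguments. A minimal sketch of both checks follows; the helper names `thp_state` and `cmdline_has` are hypothetical, and both take the file to read as an argument.

```shell
# thp_state FILE: print the bracketed (active) value,
# e.g. "never" for the content "always madvise [never]".
thp_state() {
    sed -n 's/.*\[\([a-z]*\)\].*/\1/p' "$1"
}

# cmdline_has FILE ARG: succeed if ARG is one of the space-separated tokens.
cmdline_has() {
    tr ' ' '\n' < "$1" | grep -Fxq -- "$2"
}

# On a live system:
#   thp_state /sys/kernel/mm/transparent_hugepage/enabled
#   cmdline_has /proc/cmdline numa=off && echo "numa=off is active"
```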

3.6. Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

systemctl status firewalld

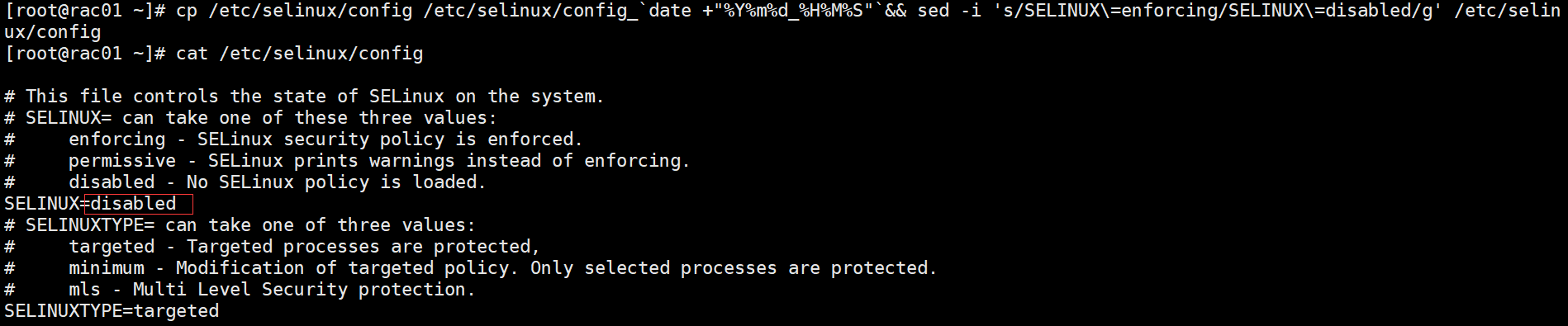

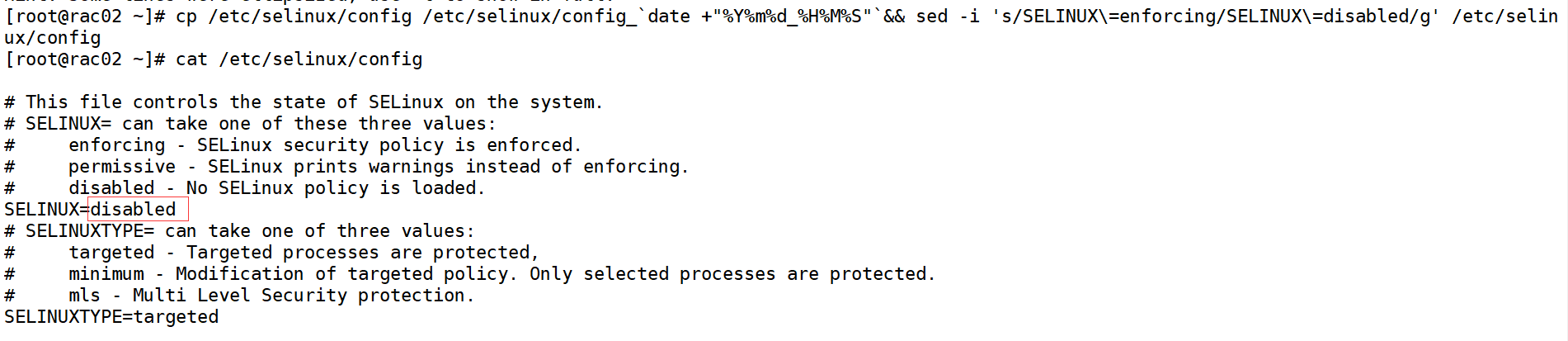

3.7. Disable SELinux

cp /etc/selinux/config /etc/selinux/config_`date +"%Y%m%d_%H%M%S"`&& sed -i 's/SELINUX\=enforcing/SELINUX\=disabled/g' /etc/selinux/config

cat /etc/selinux/config

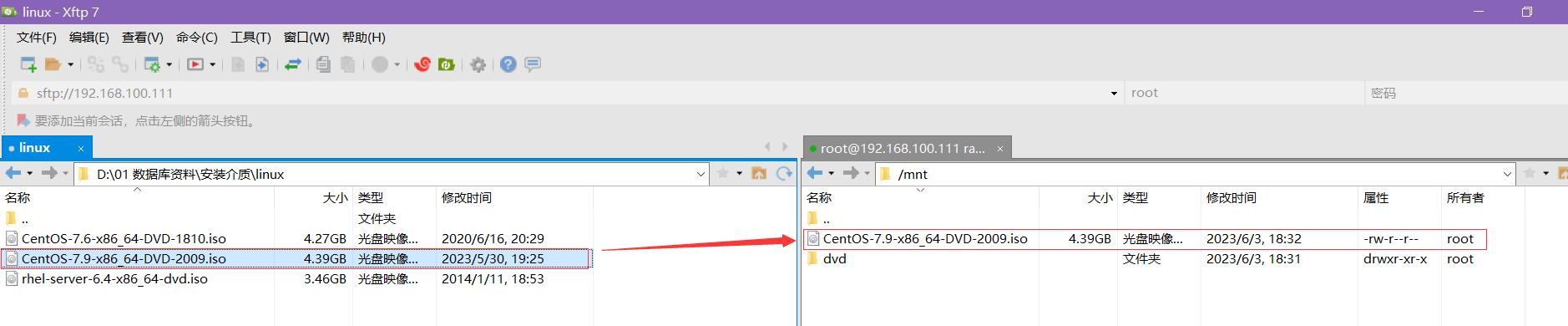

3.8. Configure the yum repository

(1) Mount the installation ISO

Create the directory:

[root@rac01 ~]# mkdir /mnt/dvd

Transfer the ISO image with Xftp

[root@rac01 ~]# cd /mnt/

[root@rac01 mnt]# ll

total 4601856

-rw-r--r--. 1 root root 4712300544 Jun 3 18:32 CentOS-7.9-x86_64-DVD-2009.iso

drwxr-xr-x. 2 root root 6 Jun 3 18:31 dvd

Rename

[root@rac01 mnt]# mv CentOS-7.9-x86_64-DVD-2009.iso centos.iso

[root@rac01 mnt]# ll

total 4601856

-rw-r--r--. 1 root root 4712300544 Jun 3 18:32 centos.iso

drwxr-xr-x. 2 root root 6 Jun 3 18:31 dvd

[root@rac01 mnt]# vi /etc/fstab

[root@rac01 mnt]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Fri Jun 2 18:14:31 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=636e6953-80e6-4505-beb4-73b40b1ef1e7 /boot xfs defaults 0 0

/dev/mapper/centos-u01 /u01 xfs defaults 0 0

/dev/mapper/centos-swap swap swap defaults 0 0

tmpfs /dev/shm tmpfs rw,exec,size=4G 0 0

/mnt/centos.iso /mnt/dvd iso9660 loop 0 0

Mount

[root@rac01 mnt]# mount -a

mount: /dev/loop0 is write-protected, mounting read-only

[root@rac01 mnt]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 4.0G 0 4.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 60G 8.8G 52G 15% /

/dev/sda1 xfs 1014M 172M 843M 17% /boot

/dev/mapper/centos-u01 xfs 130G 33M 130G 1% /u01

tmpfs tmpfs 394M 12K 394M 1% /run/user/42

tmpfs tmpfs 394M 0 394M 0% /run/user/0

/dev/loop0 iso9660 4.4G 4.4G 0 100% /mnt/dvd

[root@rac01 mnt]# ll /mnt/dvd/

total 696

-rw-r--r--. 3 root root 14 Oct 30 2020 CentOS_BuildTag

drwxr-xr-x. 3 root root 2048 Oct 27 2020 EFI

-rw-rw-r--. 21 root root 227 Aug 30 2017 EULA

-rw-rw-r--. 21 root root 18009 Dec 10 2015 GPL

drwxr-xr-x. 3 root root 2048 Oct 27 2020 images

drwxr-xr-x. 2 root root 2048 Nov 3 2020 isolinux

drwxr-xr-x. 2 root root 2048 Oct 27 2020 LiveOS

drwxr-xr-x. 2 root root 673792 Nov 4 2020 Packages

drwxr-xr-x. 2 root root 4096 Nov 4 2020 repodata

-rw-rw-r--. 21 root root 1690 Dec 10 2015 RPM-GPG-KEY-CentOS-7

-rw-rw-r--. 21 root root 1690 Dec 10 2015 RPM-GPG-KEY-CentOS-Testing-7

-r--r--r--. 1 root root 2883 Nov 4 2020 TRANS.TBL

[root@rac01 mnt]#

Do the same on rac02.

(2) Configure the yum repository

[root@rac01 mnt]# cd /etc/yum.repos.d/

[root@rac01 yum.repos.d]# ll

total 40

-rw-r--r--. 1 root root 1664 Oct 23 2020 CentOS-Base.repo

-rw-r--r--. 1 root root 1309 Oct 23 2020 CentOS-CR.repo

-rw-r--r--. 1 root root 649 Oct 23 2020 CentOS-Debuginfo.repo

-rw-r--r--. 1 root root 314 Oct 23 2020 CentOS-fasttrack.repo

-rw-r--r--. 1 root root 630 Oct 23 2020 CentOS-Media.repo

-rw-r--r--. 1 root root 1331 Oct 23 2020 CentOS-Sources.repo

-rw-r--r--. 1 root root 8515 Oct 23 2020 CentOS-Vault.repo

-rw-r--r--. 1 root root 616 Oct 23 2020 CentOS-x86_64-kernel.repo

[root@rac01 yum.repos.d]# mkdir bak

[root@rac01 yum.repos.d]# ll

total 40

drwxr-xr-x. 2 root root 6 Jun 3 18:49 bak

-rw-r--r--. 1 root root 1664 Oct 23 2020 CentOS-Base.repo

-rw-r--r--. 1 root root 1309 Oct 23 2020 CentOS-CR.repo

-rw-r--r--. 1 root root 649 Oct 23 2020 CentOS-Debuginfo.repo

-rw-r--r--. 1 root root 314 Oct 23 2020 CentOS-fasttrack.repo

-rw-r--r--. 1 root root 630 Oct 23 2020 CentOS-Media.repo

-rw-r--r--. 1 root root 1331 Oct 23 2020 CentOS-Sources.repo

-rw-r--r--. 1 root root 8515 Oct 23 2020 CentOS-Vault.repo

-rw-r--r--. 1 root root 616 Oct 23 2020 CentOS-x86_64-kernel.repo

[root@rac01 yum.repos.d]# mv *.repo bak

[root@rac01 yum.repos.d]# ll

total 0

drwxr-xr-x. 2 root root 220 Jun 3 18:50 bak

[root@rac01 yum.repos.d]# vi centos.repo

[root@rac01 yum.repos.d]# cat centos.repo

[centos]

name=centos

baseurl=file:///mnt/dvd

gpgcheck=0

[root@rac01 yum.repos.d]# yum clean all

Loaded plugins: fastestmirror, langpacks

Cleaning repos: centos

Cleaning up list of fastest mirrors

[root@rac01 yum.repos.d]# yum list

Do the same on rac02.

3.9. Install packages

(1) Check
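The repo file can also be written without opening vi, which makes the step easy to repeat on the second node. A small sketch, in which `write_local_repo` is a hypothetical helper and the content matches the centos.repo file shown above:

```shell
# write_local_repo REPO_DIR MOUNT_POINT  (hypothetical helper)
# Writes REPO_DIR/centos.repo pointing at the mounted ISO.
write_local_repo() {
    local repo_dir=$1 mount_point=$2
    cat > "$repo_dir/centos.repo" <<EOF
[centos]
name=centos
baseurl=file://$mount_point
gpgcheck=0
EOF
}

# As root:
#   write_local_repo /etc/yum.repos.d /mnt/dvd
#   yum clean all
```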

rpm -q --qf '%{NAME}-%{VERSION}-%{RELEASE} (%{ARCH})\n' \

bc \

binutils \

compat-libcap1 \

compat-libstdc++-33 \

elfutils-libelf \

elfutils-libelf-devel \

fontconfig-devel \

glibc \

gcc \

gcc-c++ \

glibc \

glibc-devel \

ksh \

libstdc++ \

libstdc++-devel \

libaio \

libaio-devel \

libXrender \

libXrender-devel \

libxcb \

libX11 \

libXau \

libXi \

libXtst \

libgcc \

libstdc++-devel \

make \

sysstat \

unzip \

readline \

smartmontools | grep "not installed"

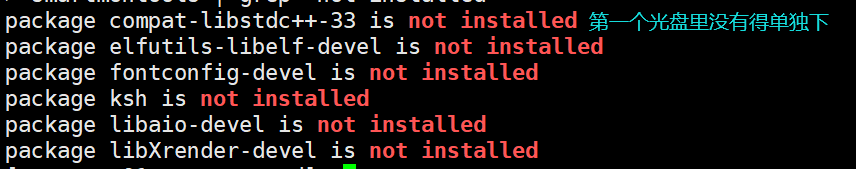

结果:

(2)安装软件包和工具包

[root@rac01 yum.repos.d]#

yum install -y bc* ntp* binutils* compat-libcap1* compat-libstdc++* dtrace-modules* dtrace-modules-headers* dtrace-modules-provider-headers* dtrace-utils* elfutils-libelf* elfutils-libelf-devel* fontconfig-devel* glibc* glibc-devel* ksh* libaio* libaio-devel* libdtrace-ctf-devel* libXrender* libXrender-devel* libX11* libXau* libXi* libXtst* libgcc* librdmacm-devel* libstdc++* libstdc++-devel* libxcb* make* net-tools* nfs-utils* python* python-configshell* python-rtslib* python-six* targetcli* smartmontools* sysstat* gcc* nscd* unixODBC* unzip readline tigervnc*

(3) Check again; one package is still not installed

package compat-libstdc++-33 is not installed

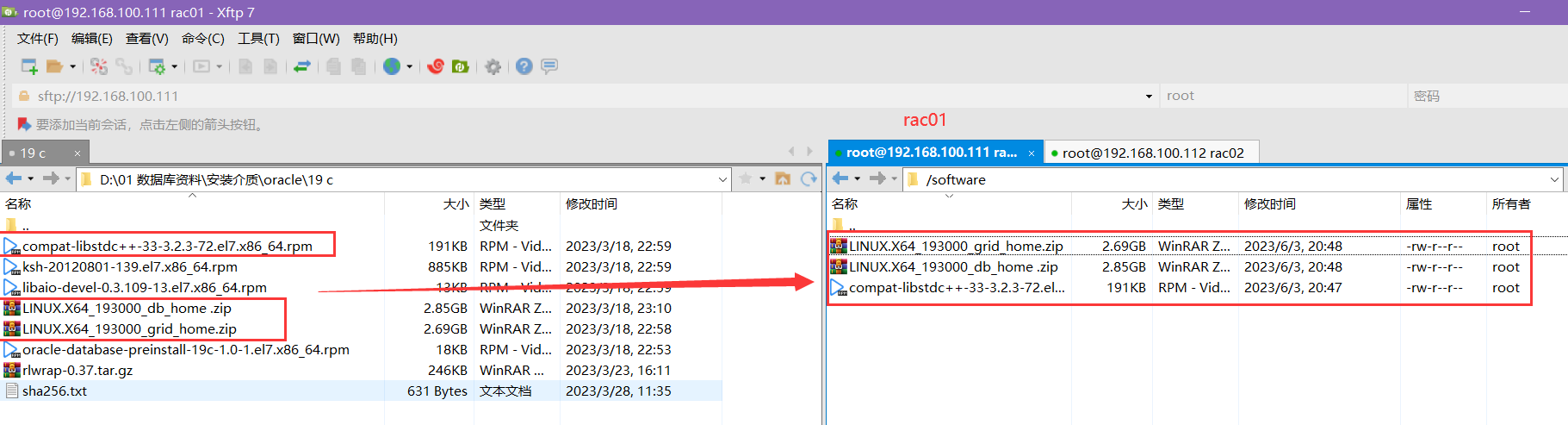
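To get just the names of the packages that are still missing, the `rpm -q` output can be filtered on its "is not installed" message. A minimal sketch; `list_missing` is a hypothetical helper that reads the rpm output from standard input.

```shell
# list_missing: read `rpm -q` output on stdin and print only the names
# from lines of the form "package NAME is not installed".
list_missing() {
    sed -n 's/^package \(.*\) is not installed$/\1/p'
}

# Example (package names taken from the check list above):
#   rpm -q bc binutils ksh compat-libstdc++-33 | list_missing
```

The resulting list can be handed straight to `yum install`, or used to decide which RPMs need to be uploaded manually.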

(4) Create the /software directory on the virtual machine

mkdir /software

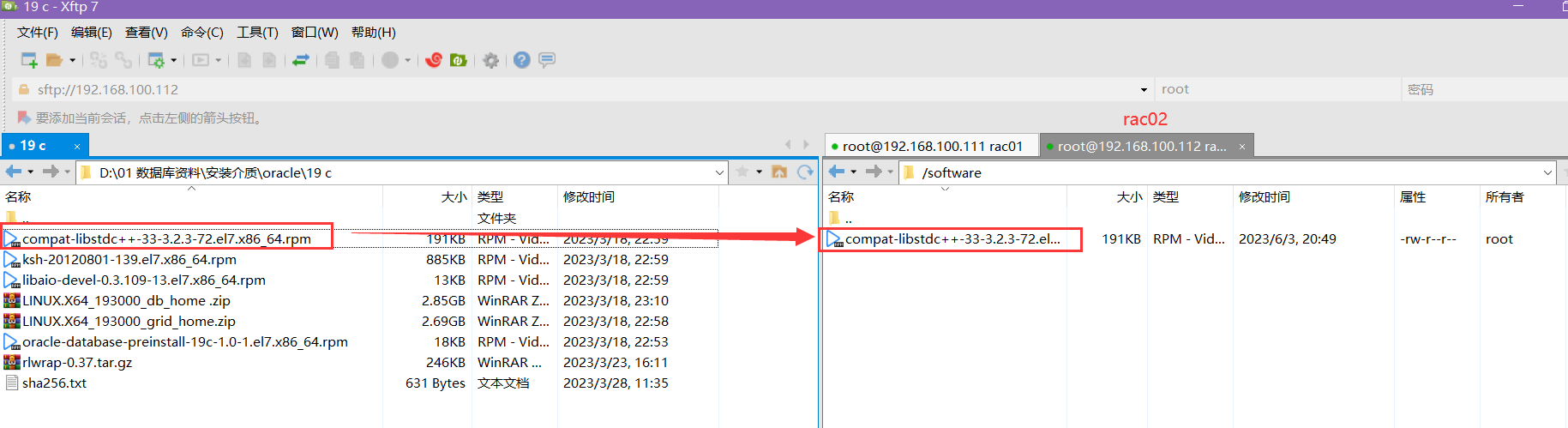

(5) Transfer the files with Xftp

[root@rac01 yum.repos.d]# cd /software

[root@rac01 software]# ll

total 5809660

-rw-r--r--. 1 root root 195388 Jun 3 20:47 compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

-rw-r--r--. 1 root root 3059705302 Jun 3 20:48 LINUX.X64_193000_db_home .zip

-rw-r--r--. 1 root root 2889184573 Jun 3 20:48 LINUX.X64_193000_grid_home.zip

[root@rac01 software]# rpm -ivh compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

warning: compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID f4a80eb5: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:compat-libstdc++-33-3.2.3-72.el7 ################################# [100%]

[root@rac01 software]#

Do the same on rac02.

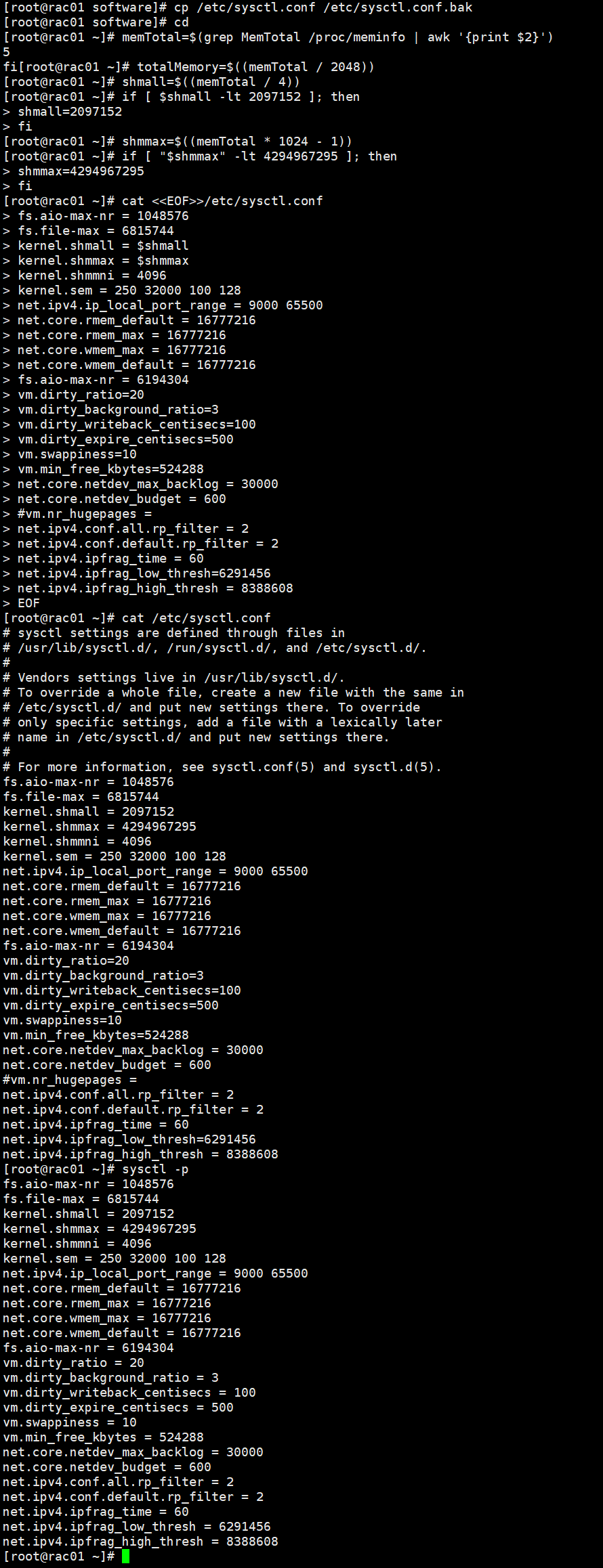

3.10. Configure kernel parameters

(1)

cp /etc/sysctl.conf /etc/sysctl.conf.bak

(2)

memTotal=$(grep MemTotal /proc/meminfo | awk '{print $2}')

totalMemory=$((memTotal / 2048))

shmall=$((memTotal / 4))

if [ $shmall -lt 2097152 ]; then

shmall=2097152

fi

shmmax=$((memTotal * 1024 - 1))

if [ "$shmmax" -lt 4294967295 ]; then

shmmax=4294967295

fi

(3)

cat <<EOF>>/etc/sysctl.conf

fs.file-max = 6815744

kernel.shmall = $shmall

kernel.shmmax = $shmmax

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 16777216

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.wmem_default = 16777216

fs.aio-max-nr = 6194304

vm.dirty_ratio=20

vm.dirty_background_ratio=3

vm.dirty_writeback_centisecs=100

vm.dirty_expire_centisecs=500

vm.swappiness=10

vm.min_free_kbytes=524288

net.core.netdev_max_backlog = 30000

net.core.netdev_budget = 600

#vm.nr_hugepages =

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

net.ipv4.ipfrag_time = 60

net.ipv4.ipfrag_low_thresh=6291456

net.ipv4.ipfrag_high_thresh = 8388608

EOF

(4)

sysctl -p

Do the same on rac02.

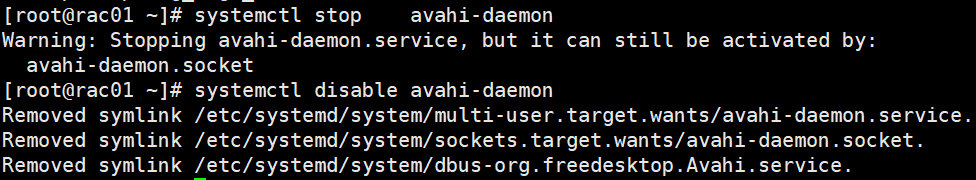
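The shmall and shmmax arithmetic in step (2) can be isolated into two functions, which makes the results easy to check for a given memory size before writing them to sysctl.conf. This sketch assumes 4 KiB memory pages, as the original division by 4 does; the function names are hypothetical.

```shell
# calc_shmall MEM_KB: kernel.shmall in 4 KiB pages, never below 2097152.
calc_shmall() {
    local pages=$(( $1 / 4 ))
    if [ "$pages" -lt 2097152 ]; then
        pages=2097152
    fi
    echo "$pages"
}

# calc_shmmax MEM_KB: kernel.shmmax in bytes, never below 4294967295.
calc_shmmax() {
    local bytes=$(( $1 * 1024 - 1 ))
    if [ "$bytes" -lt 4294967295 ]; then
        bytes=4294967295
    fi
    echo "$bytes"
}

# With the value from /proc/meminfo:
#   mem_kb=$(awk '/MemTotal/ {print $2}' /proc/meminfo)
#   echo "kernel.shmall = $(calc_shmall "$mem_kb")"
#   echo "kernel.shmmax = $(calc_shmmax "$mem_kb")"
```

On the 4 GB virtual machines used here both floors apply, so the values come out as 2097152 and 4294967295.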

3.11. Disable the avahi service

systemctl stop avahi-daemon

systemctl disable avahi-daemon

3.12. Disable other services

-- Disable at boot

systemctl disable accounts-daemon.service

systemctl disable atd.service

systemctl disable avahi-daemon.service

systemctl disable avahi-daemon.socket

systemctl disable bluetooth.service

systemctl disable brltty.service

--systemctl disable chronyd.service

systemctl disable colord.service

systemctl disable cups.service

systemctl disable debug-shell.service

systemctl disable firewalld.service

systemctl disable gdm.service

systemctl disable ksmtuned.service

systemctl disable ktune.service

systemctl disable libstoragemgmt.service

systemctl disable mcelog.service

systemctl disable ModemManager.service

--systemctl disable ntpd.service

systemctl disable postfix.service

systemctl disable rhsmcertd.service

systemctl disable rngd.service

systemctl disable rpcbind.service

systemctl disable rtkit-daemon.service

systemctl disable tuned.service

systemctl disable upower.service

systemctl disable wpa_supplicant.service

-- Stop the services

systemctl stop accounts-daemon.service

systemctl stop atd.service

systemctl stop avahi-daemon.service

systemctl stop avahi-daemon.socket

systemctl stop bluetooth.service

systemctl stop brltty.service

--systemctl stop chronyd.service

systemctl stop colord.service

systemctl stop cups.service

systemctl stop debug-shell.service

systemctl stop firewalld.service

systemctl stop gdm.service

systemctl stop ksmtuned.service

systemctl stop ktune.service

systemctl stop libstoragemgmt.service

systemctl stop mcelog.service

systemctl stop ModemManager.service

--systemctl stop ntpd.service

systemctl stop postfix.service

systemctl stop rhsmcertd.service

systemctl stop rngd.service

systemctl stop rpcbind.service

systemctl stop rtkit-daemon.service

systemctl stop tuned.service

systemctl stop upower.service

systemctl stop wpa_supplicant.service

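chrony and ntp should be disabled as well; remove the leading "--" from those lines.

The stop commands can be skipped, because the machines will be rebooted shortly.

Do the same on rac02.

3.13. Modify the login configuration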
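The long disable and stop lists can be produced from a single list of unit names. The sketch below only prints the commands so that they can be reviewed first; `print_disable_cmds` is a hypothetical helper, and the three units shown are a sample from the list above.

```shell
# print_disable_cmds UNIT...  (hypothetical helper, dry run only)
# Prints the systemctl commands instead of executing them.
print_disable_cmds() {
    local unit
    for unit in "$@"; do
        echo "systemctl disable $unit"
        echo "systemctl stop $unit"
    done
}

print_disable_cmds cups.service bluetooth.service postfix.service

# After reviewing the output, it can be piped to a root shell:
#   print_disable_cmds cups.service bluetooth.service postfix.service | sh
```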

cat >> /etc/pam.d/login <<EOF

session required pam_limits.so

EOF

Do the same on rac02.

3.14. Configure user limits

cat >> /etc/security/limits.conf <<EOF

grid soft nproc 2047

grid hard nproc 16384

grid soft nofile 1024

grid hard nofile 65536

grid soft stack 10240

grid hard stack 32768

grid soft memlock 3145728

grid hard memlock 3145728

oracle soft nproc 2047

oracle hard nproc 16384

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft stack 10240

oracle hard stack 32768

oracle soft memlock 3145728

oracle hard memlock 3145728

EOF

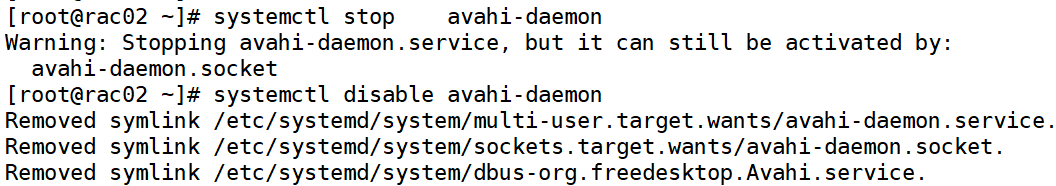
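A misspelled user name in limits.conf is not reported as an error; the line simply never applies to anyone. One way to catch this, sketched here with a hypothetical helper `unknown_limit_domains`, is to list every first field that is not an expected user.

```shell
# unknown_limit_domains FILE USER...  (hypothetical helper)
# Prints each distinct first field of FILE that is not one of USER...,
# ignoring comments and blank lines.
unknown_limit_domains() {
    local file=$1
    shift
    awk -v allowed="$*" '
        BEGIN { n = split(allowed, a, " "); for (i = 1; i <= n; i++) ok[a[i]] = 1 }
        /^[[:space:]]*(#|$)/ { next }
        !($1 in ok) { print $1 }
    ' "$file" | sort -u
}

# Example:
#   unknown_limit_domains /etc/security/limits.conf grid oracle
```

On a real limits.conf, wildcard or group entries such as `*` or `@group` are reported too, so the check is most useful on the block that is about to be appended.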

Move the chrony and NTP configuration files out of the way so that the services are fully disabled:

mv /etc/chrony.conf /etc/chrony.conf_bak

mv /etc/ntp.conf /etc/ntp.conf_bak

systemctl list-unit-files|grep -E 'ntp|chrony'

Do the same on rac02.

3.15. Reboot both virtual machines

reboot

3.16. Create groups and users

groupadd -g 54321 oinstall

groupadd -g 54322 dba

groupadd -g 54323 oper

groupadd -g 54324 backupdba

groupadd -g 54325 dgdba

groupadd -g 54326 kmdba

groupadd -g 54327 asmdba

groupadd -g 54328 asmoper

groupadd -g 54329 asmadmin

groupadd -g 54330 racdba

useradd -g oinstall -G dba,oper,backupdba,dgdba,kmdba,asmdba,racdba -u 10000 oracle

useradd -g oinstall -G dba,asmdba,asmoper,asmadmin,racdba -u 10001 grid

echo "oracle" | passwd --stdin oracle

echo "grid" | passwd --stdin grid

3.17. Create directories

mkdir -p /u01/app/19.3.0/grid

mkdir -p /u01/app/grid

mkdir -p /u01/app/oracle/product/19.3.0/dbhome_1

chown -R grid:oinstall /u01

chown -R oracle:oinstall /u01/app/oracle

chmod -R 775 /u01/

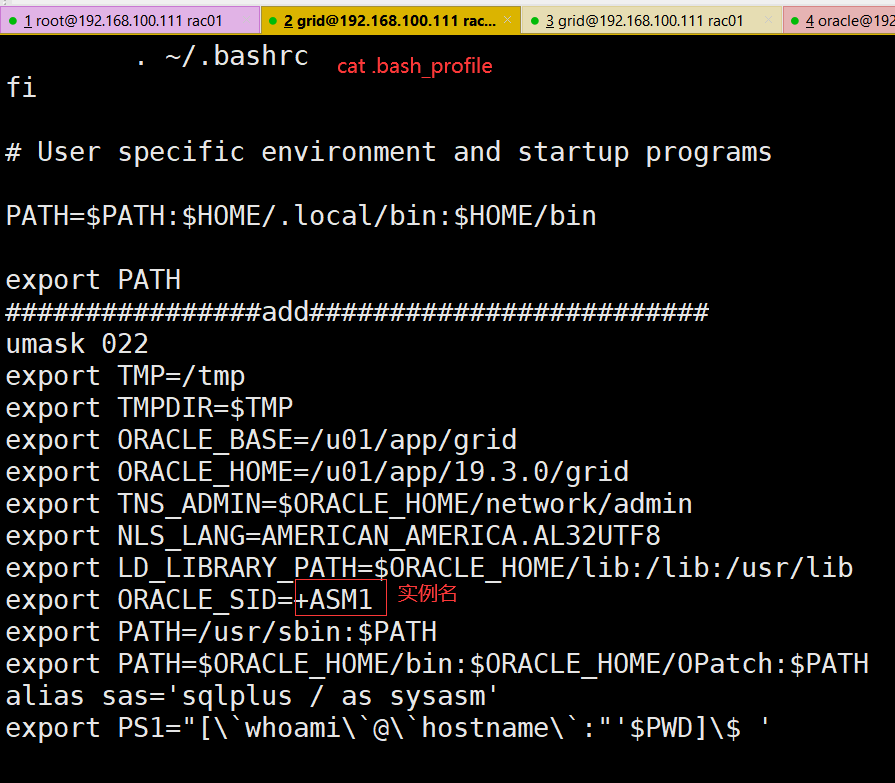

3.18. Configure user environment variables

3.18.1. grid user

(1)rac01

cat >> /home/grid/.bash_profile << "EOF"

################add#########################

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/19.3.0/grid

export TNS_ADMIN=$ORACLE_HOME/network/admin

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=+ASM1

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysasm'

export PS1="[\`whoami\`@\`hostname\`:"'$PWD]\$ '

EOF

(2)rac02

cat >> /home/grid/.bash_profile << "EOF"

################add#########################

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/19.3.0/grid

export TNS_ADMIN=$ORACLE_HOME/network/admin

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=+ASM2

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysasm'

export PS1="[\`whoami\`@\`hostname\`:"'$PWD]\$ '

EOF

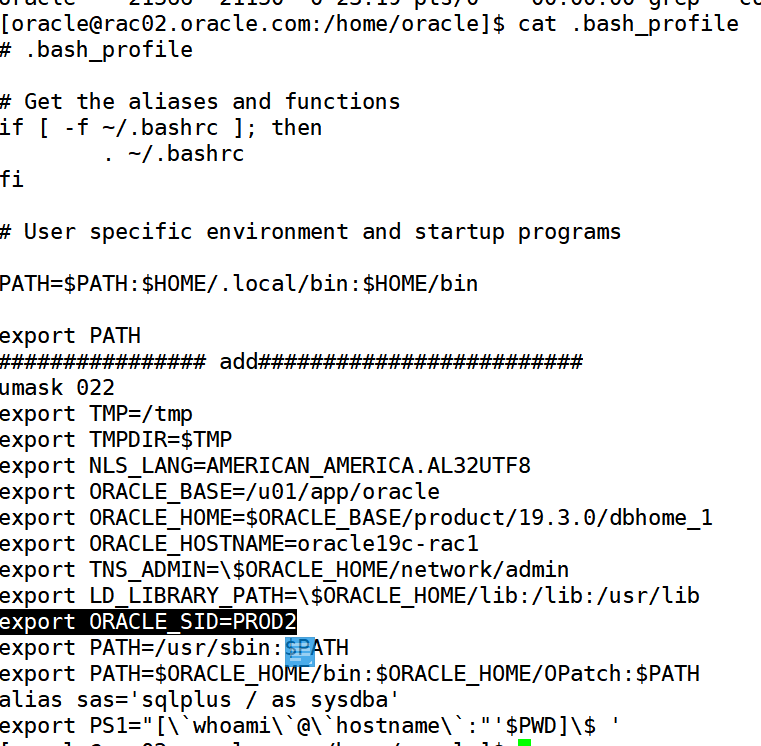

3.18.2. oracle user

(1)rac01

cat >> /home/oracle/.bash_profile << "EOF"

################ add#########################

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/19.3.0/dbhome_1

export ORACLE_HOSTNAME=rac01

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=PROD1

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysdba'

export PS1="[\`whoami\`@\`hostname\`:"'$PWD]\$ '

EOF

(2)rac02

cat >> /home/oracle/.bash_profile << "EOF"

################ add#########################

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/19.3.0/dbhome_1

export ORACLE_HOSTNAME=rac02

export TNS_ADMIN=$ORACLE_HOME/network/admin

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export ORACLE_SID=PROD2

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$ORACLE_HOME/OPatch:$PATH

alias sas='sqlplus / as sysdba'

export PS1="[\`whoami\`@\`hostname\`:"'$PWD]\$ '

EOF
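The profiles are written with a quoted heredoc delimiter (`<< "EOF"`), which tells the shell to copy the body verbatim. The short demonstration below shows the difference from an unquoted delimiter; the scratch files and the variable name `ORACLE_BIN` are made up for the example.

```shell
demo=$(mktemp)
ORACLE_HOME=/u01/app/19.3.0/grid   # set only so the contrast is visible

# Quoted delimiter: nothing is expanded, the file keeps the literal $VARS.
cat > "$demo" << "EOF"
export PATH=$ORACLE_HOME/bin:$PATH
EOF
cat "$demo"

# Unquoted delimiter: variables are expanded while the file is written.
cat > "$demo.expanded" << EOF
export ORACLE_BIN=$ORACLE_HOME/bin
EOF
cat "$demo.expanded"
```

With a quoted delimiter a backslash is not an escape character either, so writing `\$VAR` in the body would put the backslash into the profile and the variable would never be expanded at login.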

3.19. Configure shared storage (multipath + udev)

3.19.1.multipath

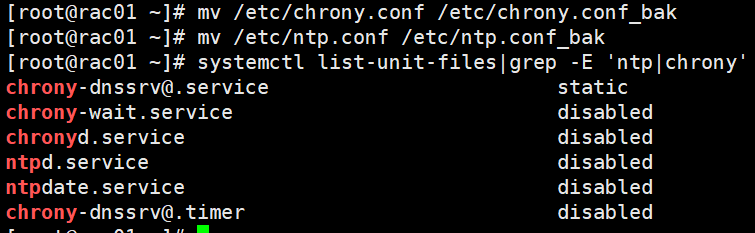

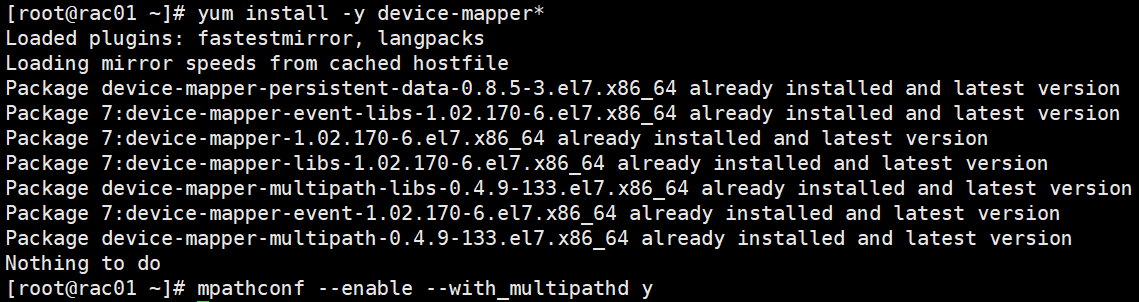

(1) Install multipath

yum install -y device-mapper*

mpathconf --enable --with_multipathd y

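(2) Get the scsi_id of each shared disk. If scsi_id prints nothing on a VMware guest, the usual cause is that disk.EnableUUID = "TRUE" is missing from the .vmx file.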

/usr/lib/udev/scsi_id -g -u /dev/sdb

/usr/lib/udev/scsi_id -g -u /dev/sdc

/usr/lib/udev/scsi_id -g -u /dev/sdd

/usr/lib/udev/scsi_id -g -u /dev/sde

/usr/lib/udev/scsi_id -g -u /dev/sdf

(3) Configure multipath. Each wwid is a scsi_id value obtained above, and the alias can be chosen freely. Three OCR disks and two DATA disks are configured here.

defaults {

user_friendly_names yes

}

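The existing file already contains the defaults block shown above, which would conflict with the settings added below, so back up the file and comment out every existing line first:

cp /etc/multipath.conf /etc/multipath.conf.bak

sed -i 's/^/#/' /etc/multipath.conf   # comment out every existing line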

cat <<EOF>> /etc/multipath.conf

defaults {

user_friendly_names yes

}

blacklist {

devnode "^sda"

}

multipaths {

multipath {

wwid "36000c29af3e8a022df507cbc4b6adf7e"

alias asm_ocr01

}

multipath {

wwid "36000c29c915c5cc2c4049fd023987535"

alias asm_ocr02

}

multipath {

wwid "36000c29701271874ae80f25559817100"

alias asm_ocr03

}

multipath {

wwid "36000c29185ae0737eccfd5046e6589c3"

alias asm_data01

}

multipath {

wwid "36000c29b3bb30499832406896631f223"

alias asm_data02

}

}

EOF
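Because the WWIDs are different in every environment, it can be less error-prone to generate the multipaths section from a list of "WWID alias" pairs than to edit the stanzas by hand. A rough sketch follows; `gen_multipaths` is a hypothetical helper, and the two sample pairs are taken from the configuration above.

```shell
# gen_multipaths: read "WWID ALIAS" pairs on stdin (one per line)
# and print a multipaths { ... } section for multipath.conf.
gen_multipaths() {
    local wwid alias_name
    echo "multipaths {"
    while read -r wwid alias_name; do
        [ -n "$wwid" ] || continue          # skip blank lines
        printf '    multipath {\n'
        printf '        wwid "%s"\n' "$wwid"
        printf '        alias %s\n' "$alias_name"
        printf '    }\n'
    done
    echo "}"
}

gen_multipaths <<'EOF'
36000c29af3e8a022df507cbc4b6adf7e asm_ocr01
36000c29b3bb30499832406896631f223 asm_data02
EOF
```

The defaults and blacklist sections are not generated and still need to be written as shown above.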

(4) Activate multipath:

multipath -F

multipath -v2

multipath -ll

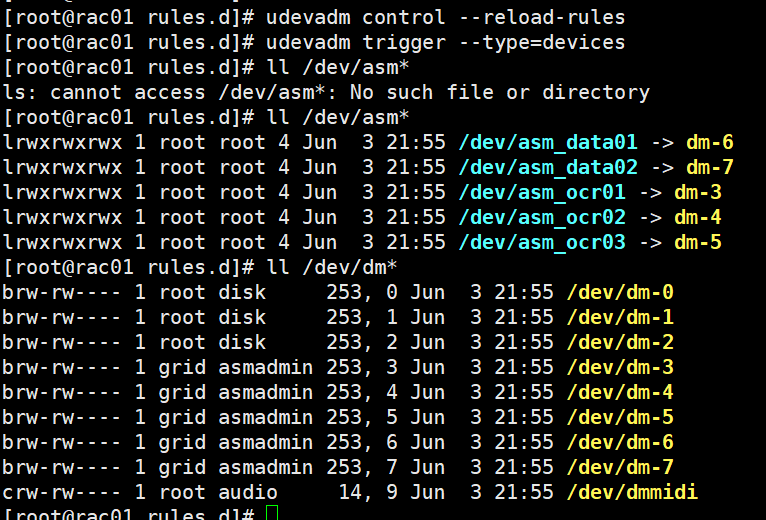

3.19.2. UDEV

cd /dev/mapper

for i in asm_*; do

printf "%s %s\n" "$i" "$(udevadm info --query=all --name=/dev/mapper/"$i" | grep -i dm_uuid)" >>/dev/mapper/udev_info

done

while read -r line; do

dm_uuid=$(echo "$line" | awk -F'=' '{print $2}')

disk_name=$(echo "$line" | awk '{print $1}')

echo "KERNEL==\"dm-*\",ENV{DM_UUID}==\"${dm_uuid}\",SYMLINK+=\"${disk_name}\",OWNER=\"grid\",GROUP=\"asmadmin\",MODE=\"0660\"" >>/etc/udev/rules.d/99-oracle-asmdevices.rules

done < /dev/mapper/udev_info

## Reload udev

udevadm control --reload-rules

udevadm trigger --type=devices

ll /dev/asm*

[root@oracle19c-rac2 dev]# more /etc/udev/rules.d/99-oracle-asmdevices.rules

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c29e516572af5c105d12e8c0db12",SYMLINK+="asm_data01",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c29d6be6679787ceadf23b29b180",SYMLINK+="asm_data02",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c29a961f7eb1208473713ca7b007",SYMLINK+="asm_ocr01",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c29f87ed61db71c60bd3d6e737dc",SYMLINK+="asm_ocr02",OWNER="grid",GROUP="asmadmin",MODE="0660"

KERNEL=="dm-*",ENV{DM_UUID}=="mpath-36000c297c53b91255620471a6deb6853",SYMLINK+="asm_ocr03",OWNER="grid",GROUP="asmadmin",MODE="0660"

[root@oracle19c-rac2 dev]#

[root@oracle19c-rac2 mapper]# ll

total 4

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_data01 -> ../dm-7

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_data02 -> ../dm-8

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_ocr01 -> ../dm-4

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_ocr02 -> ../dm-5

lrwxrwxrwx 1 root root 7 Jul 28 15:44 asm_ocr03 -> ../dm-6

crw------- 1 root root 10, 236 Jul 28 15:44 control

lrwxrwxrwx 1 root root 7 Jul 28 15:44 ol-root -> ../dm-0

lrwxrwxrwx 1 root root 7 Jul 28 15:44 ol-swap -> ../dm-1

lrwxrwxrwx 1 root root 7 Jul 28 15:44 ol-tmp -> ../dm-2

lrwxrwxrwx 1 root root 7 Jul 28 15:44 ol-u01 -> ../dm-3

-rw-r--r-- 1 root root 307 Jul 28 15:44 udev_info

[root@oracle19c-rac2 mapper]# ll /dev/dm*

brw-rw---- 1 root disk 252, 0 Jul 28 15:44 /dev/dm-0

brw-rw---- 1 root disk 252, 1 Jul 28 15:44 /dev/dm-1

brw-rw---- 1 root disk 252, 2 Jul 28 15:44 /dev/dm-2

brw-rw---- 1 root disk 252, 3 Jul 28 15:44 /dev/dm-3

brw-rw---- 1 grid asmadmin 252, 4 Jul 28 15:44 /dev/dm-4

brw-rw---- 1 grid asmadmin 252, 5 Jul 28 15:44 /dev/dm-5

brw-rw---- 1 grid asmadmin 252, 6 Jul 28 15:44 /dev/dm-6

brw-rw---- 1 grid asmadmin 252, 7 Jul 28 15:44 /dev/dm-7

brw-rw---- 1 grid asmadmin 252, 8 Jul 28 15:44 /dev/dm-8

crw-rw---- 1 root audio 14, 9 Jul 28 15:44 /dev/dmmidi
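Each generated rule follows the same pattern: match the device-mapper node by its DM_UUID, add a symlink with the alias, and hand ownership to grid:asmadmin. The sketch below builds one such rule from an alias and a DM_UUID; `make_asm_rule` is a hypothetical helper, and the sample values are copied from the rules file listed above.

```shell
# make_asm_rule ALIAS DM_UUID  (hypothetical helper)
# Prints one udev rule line in the same format as 99-oracle-asmdevices.rules.
make_asm_rule() {
    printf 'KERNEL=="dm-*",ENV{DM_UUID}=="%s",SYMLINK+="%s",OWNER="grid",GROUP="asmadmin",MODE="0660"\n' "$2" "$1"
}

make_asm_rule asm_data01 mpath-36000c29e516572af5c105d12e8c0db12
```

Redirecting the complete output with `>` rather than `>>` keeps the rules file free of duplicates when the step is repeated.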

Reboot.

4. Apply the GI RU and install the GRID software

4.1. Upload and unzip the software

(1) rac01, root user

[root@rac01 ~]# cd /software/

[root@rac01 software]# ll

total 5809660

-rw-r--r--. 1 root root 195388 Jun 3 20:47 compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

-rw-r--r--. 1 root root 3059705302 Jun 3 20:48 LINUX.X64_193000_db_home .zip

-rw-r--r--. 1 root root 2889184573 Jun 3 20:48 LINUX.X64_193000_grid_home.zip

[root@rac01 software]# chown grid:oinstall LINUX.X64_193000_grid_home.zip

[root@rac01 software]# chown oracle:oinstall LINUX.X64_193000_db_home\ .zip

[root@rac01 software]# ll

total 5809660

-rw-r--r--. 1 root root 195388 Jun 3 20:47 compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

-rw-r--r--. 1 oracle oinstall 3059705302 Jun 3 20:48 LINUX.X64_193000_db_home .zip

-rw-r--r--. 1 grid oinstall 2889184573 Jun 3 20:48 LINUX.X64_193000_grid_home.zip

(2) rac01, grid user

[grid@rac01.oracle.com:/home/grid]$ cd /software/

[grid@rac01.oracle.com:/software]$ ll

total 5809660

-rw-r--r--. 1 root root 195388 Jun 3 20:47 compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

-rw-r--r--. 1 oracle oinstall 3059705302 Jun 3 20:48 LINUX.X64_193000_db_home .zip

-rw-r--r--. 1 grid oinstall 2889184573 Jun 3 20:48 LINUX.X64_193000_grid_home.zip

[grid@rac01.oracle.com:/software]$ unzip LINUX.X64_193000_grid_home.zip -d $ORACLE_HOME

(3) rac01, oracle user

[oracle@rac01.oracle.com:/home/oracle]$ cd /software/

[oracle@rac01.oracle.com:/software]$ ll

total 5809660

-rw-r--r--. 1 root root 195388 Jun 3 20:47 compat-libstdc++-33-3.2.3-72.el7.x86_64.rpm

-rw-r--r--. 1 oracle oinstall 3059705302 Jun 3 20:48 LINUX.X64_193000_db_home .zip

-rw-r--r--. 1 grid oinstall 2889184573 Jun 3 20:48 LINUX.X64_193000_grid_home.zip

[oracle@rac01.oracle.com:/software]$ echo $ORACLE_HOME

/u01/app/oracle/product/19.3.0/dbhome_1

[oracle@rac01.oracle.com:/software]$ unzip LINUX.X64_193000_db_home\ .zip -d $ORACLE_HOME

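4.2. Install the cvuqdisk package

Once SSH user equivalence is configured between the two hosts, you can run the verification scripts ahead of time (for example, runcluvfy.sh in the Grid home) to check whether the installation prerequisites are met.

(1) rac01, grid user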

[grid@rac01.oracle.com:/software]$ cd $ORACLE_HOME

[grid@rac01.oracle.com:/u01/app/19.3.0/grid]$ pwd

/u01/app/19.3.0/grid

[grid@rac01.oracle.com:/u01/app/19.3.0/grid]$ cd cv/rpm/

[grid@rac01.oracle.com:/u01/app/19.3.0/grid/cv/rpm]$ pwd

/u01/app/19.3.0/grid/cv/rpm

[grid@rac01.oracle.com:/u01/app/19.3.0/grid/cv/rpm]$ ls

cvuqdisk-1.0.10-1.rpm

(2) rac01, root user

[root@rac01 software]# cd /u01/app/19.3.0/grid/cv/rpm

[root@rac01 rpm]# ll

total 12

-rw-r--r-- 1 grid oinstall 11412 Mar 13 2019 cvuqdisk-1.0.10-1.rpm

[root@rac01 rpm]#

[root@rac01 rpm]# rpm -ivh cvuqdisk-1.0.10-1.rpm

Preparing... ################################# [100%]

Using default group oinstall to install package

Updating / installing...

1:cvuqdisk-1.0.10-1 ################################# [100%]

[root@rac01 rpm]#

[root@rac01 rpm]#

[root@rac01 rpm]# scp cvuqdisk-1.0.10-1.rpm 192.168.100.112:/tmp

The authenticity of host '192.168.100.112 (192.168.100.112)' can't be established.

ECDSA key fingerprint is SHA256:4fppGXhshRhSlv1w62bh4J9FzFYMoL6SoVPOrOO3O5E.

ECDSA key fingerprint is MD5:1b:03:1b:a4:1c:79:59:92:bc:0b:a2:94:5d:29:3a:12.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.100.112' (ECDSA) to the list of known hosts.

root@192.168.100.112's password:

cvuqdisk-1.0.10-1.rpm 100% 11KB 10.9MB/s 00:00

[root@rac01 rpm]#

(3) rac02, root user

[root@rac02 ~]# cd /tmp/

[root@rac02 tmp]# ll

total 24

-rw-r--r--. 1 root root 2053 Jun 2 18:38 anaconda.log

-rw-r--r-- 1 root root 11412 Jun 4 16:47 cvuqdisk-1.0.10-1.rpm

drwxr-xr-x. 2 root root 18 Jun 2 18:16 hsperfdata_root

-rw-r--r--. 1 root root 1992 Jun 2 18:37 ifcfg.log

-rwx------. 1 root root 836 Jun 2 18:21 ks-script-mZKrxE

-rw-r--r--. 1 root root 0 Jun 2 18:22 packaging.log

-rw-r--r--. 1 root root 0 Jun 2 18:22 program.log

drwx------. 2 root root 6 Jun 2 18:37 pulse-BAcvmNtlXNhx

-rw-r--r--. 1 root root 0 Jun 2 18:22 sensitive-info.log

-rw-r--r--. 1 root root 0 Jun 2 18:22 storage.log

drwx------. 2 root root 6 Jun 2 18:40 tracker-extract-files.0

drwx------. 2 root root 6 Jun 2 11:02 vmware-root_757-4281843244

drwx------. 2 root root 6 Jun 2 15:02 vmware-root_763-4248287269

drwx------. 2 root root 6 Jun 2 11:12 vmware-root_772-2990547578

drwx------. 2 root root 6 Jun 2 11:14 vmware-root_788-2957517930

drwx------. 2 root root 6 Jun 2 18:22 vmware-root_791-4282302006

drwx------. 2 root root 6 Jun 2 16:46 vmware-root_830-2999133150

drwx------. 2 root root 6 Jun 3 16:41 vmware-root_833-3979642945

drwx------ 2 root root 6 Jun 3 21:29 vmware-root_840-2697008529

drwx------ 2 root root 6 Jun 3 22:09 vmware-root_890-2722107963

drwx------ 2 root root 6 Jun 3 22:15 vmware-root_913-4013723377

drwx------ 2 root root 6 Jun 4 15:43 vmware-root_920-2731086625

-rw-------. 1 root root 0 Jun 2 18:14 yum.log

[root@rac02 tmp]# rpm -ivh cvuqdisk-1.0.10-1.rpm

Preparing... ################################# [100%]

Using default group oinstall to install package

Updating / installing...

1:cvuqdisk-1.0.10-1 ################################# [100%]

[root@rac02 tmp]#

4.3. Configure SSH user equivalence between the two nodes

(1) rac01, grid user

[grid@rac01.oracle.com:/u01/app/19.3.0/grid/cv/rpm]$ cd

[grid@rac01.oracle.com:/home/grid]$ cd $ORACLE_HOME

[grid@rac01.oracle.com:/u01/app/19.3.0/grid]$ cd deinstall/

[grid@rac01.oracle.com:/u01/app/19.3.0/grid/deinstall]$ ls

bootstrap_files.lst deinstall deinstall.xml readme.txt sshUserSetup.sh

bootstrap.pl deinstall.pl jlib response utl

[grid@rac01.oracle.com:/u01/app/19.3.0/grid/deinstall]$ ./sshUserSetup.sh -user grid -hosts "rac01 rac01-priv rac02 rac02-priv" -advanced -noPromptPassphrase

(2) rac01, oracle user

[oracle@rac01.oracle.com:/home/oracle]$ cd

[oracle@rac01.oracle.com:/home/oracle]$ cd $ORACLE_HOME

[oracle@rac01.oracle.com:/u01/app/oracle/product/19.3.0/dbhome_1]$ cd deinstall/

[oracle@rac01.oracle.com:/u01/app/oracle/product/19.3.0/dbhome_1/deinstall]$ ls

bootstrap_files.lst deinstall deinstall.xml readme.txt sshUserSetup.sh

bootstrap.pl deinstall.pl jlib response utl

[oracle@rac01.oracle.com:/u01/app/oracle/product/19.3.0/dbhome_1/deinstall]$ ./sshUserSetup.sh -user oracle -hosts "rac01 rac01-priv rac02 rac02-priv" -advanced -noPromptPassphrase

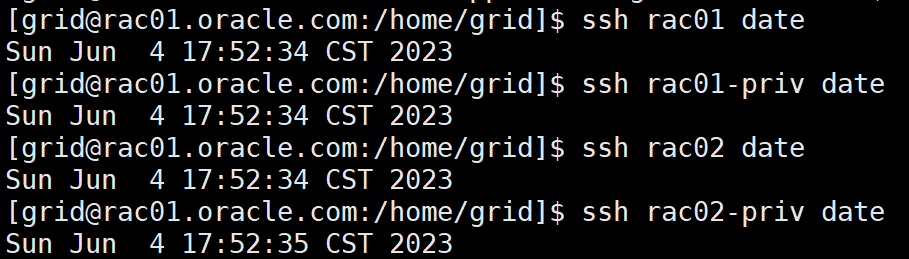

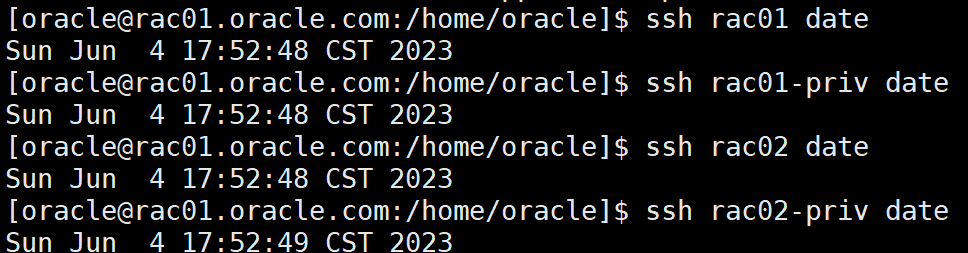

Verification

grid user:

su - grid

ssh rac01 date

ssh rac01-priv date

ssh rac02 date

ssh rac02-priv date

oracle user:

su - oracle

ssh rac01 date

ssh rac01-priv date

ssh rac02 date

ssh rac02-priv date
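The eight `ssh ... date` checks above can be wrapped in a loop that fails loudly if any host still asks for a password. This is a sketch under assumptions: `check_equivalence` and the `SSH_CMD` override are names invented here, and `BatchMode=yes` is used so that ssh fails instead of prompting.

```shell
# SSH_CMD can be overridden for a dry run; the default is the real ssh client.
SSH_CMD=${SSH_CMD:-ssh}

# check_equivalence is a hypothetical helper: it runs "date" on every host
# given as an argument and returns non-zero if any host cannot be reached
# without a password prompt.
check_equivalence() {
  failed=0
  for host in "$@"; do
    if $SSH_CMD -o BatchMode=yes -o ConnectTimeout=5 "$host" date >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}
```

Run it once as grid and once as oracle, on each node: `check_equivalence rac01 rac01-priv rac02 rac02-priv`. A host whose key is not yet in known_hosts will also show as FAIL, because BatchMode suppresses the confirmation prompt.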

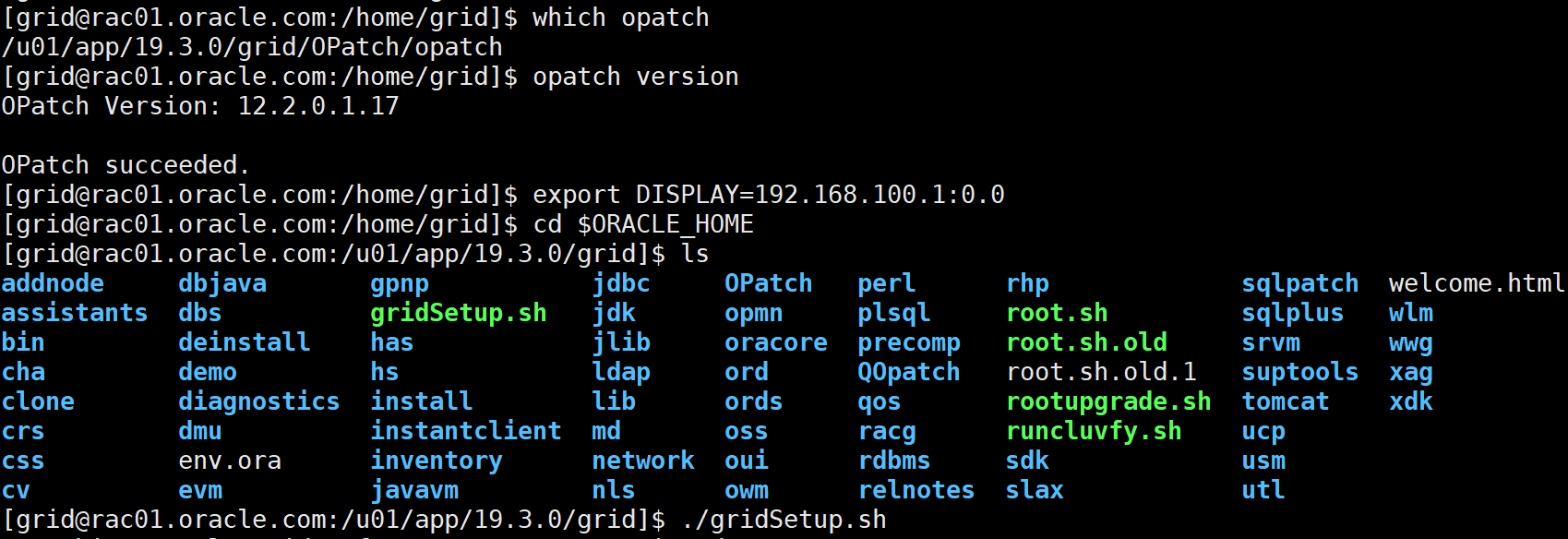

4.4. Update OPatch

On node 1, upload the OPatch utility (version 12.2.0.1.37), then unzip it into the Grid home as the grid user and into the database home as the oracle user

su - grid

mv $ORACLE_HOME/OPatch $ORACLE_HOME/OPatch_12.2.0.1.17

unzip /tmp/oracle19c/p6880880_190000_12.2.0.1.37_Linux-x86-64.zip -d $ORACLE_HOME

su - oracle

mv $ORACLE_HOME/OPatch $ORACLE_HOME/OPatch_12.2.0.1.17

unzip /tmp/oracle19c/p6880880_190000_12.2.0.1.37_Linux-x86-64.zip -d $ORACLE_HOME

Version before the update:

opatch version

OPatch Version: 12.2.0.1.17

Version after the update:

opatch version

OPatch Version: 12.2.0.1.37
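The before/after comparison can be scripted so that the RU is not attempted with an outdated OPatch. This is a sketch under assumptions: the helper names are invented here, the minimum version you pass in must come from the readme of the RU you are applying, and `sort -V` requires GNU coreutils (present on Oracle Linux 7).

```shell
# parse_opatch_version: read `opatch version` output on stdin and print
# only the version number from the "OPatch Version:" line.
parse_opatch_version() {
  sed -n 's/^OPatch Version: *//p'
}

# version_ge: succeed when $1 >= $2, comparing dotted versions with GNU sort -V.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

Example use: `version_ge "$($ORACLE_HOME/OPatch/opatch version | parse_opatch_version)" 12.2.0.1.37 || echo "OPatch too old"`.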

4.5. Apply the GI RU and install the Grid software

Start Xmanager

export DISPLAY=192.168.100.1:0.0

cd $ORACLE_HOME

./gridSetup.sh

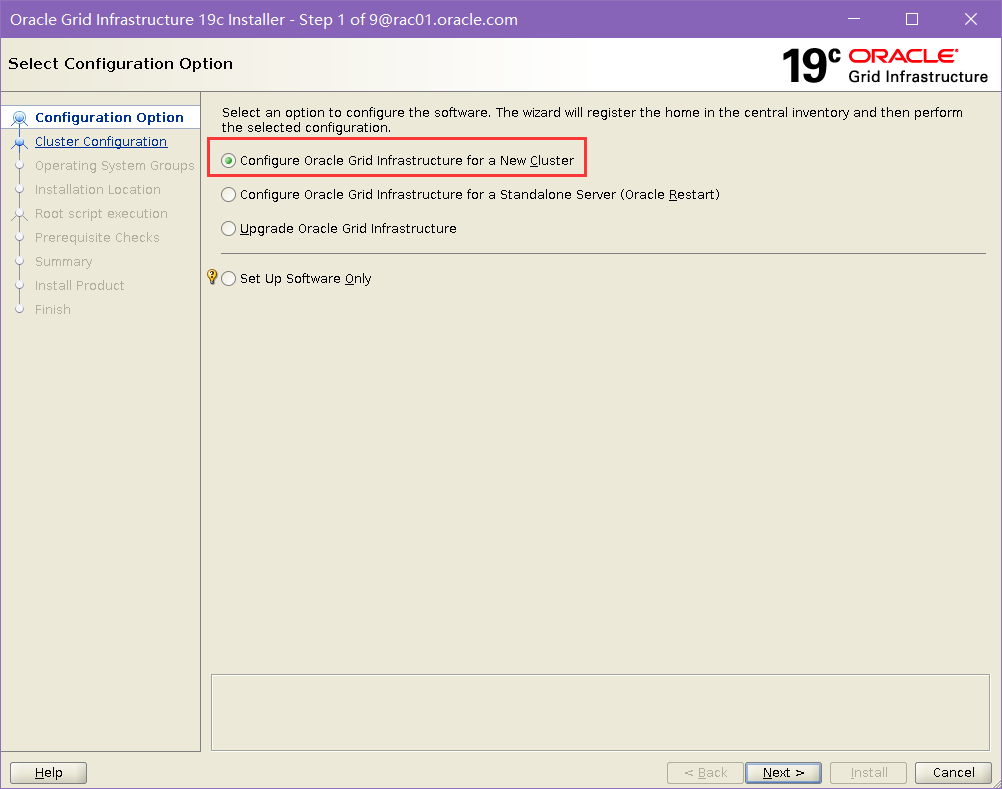

Choose to configure a new cluster

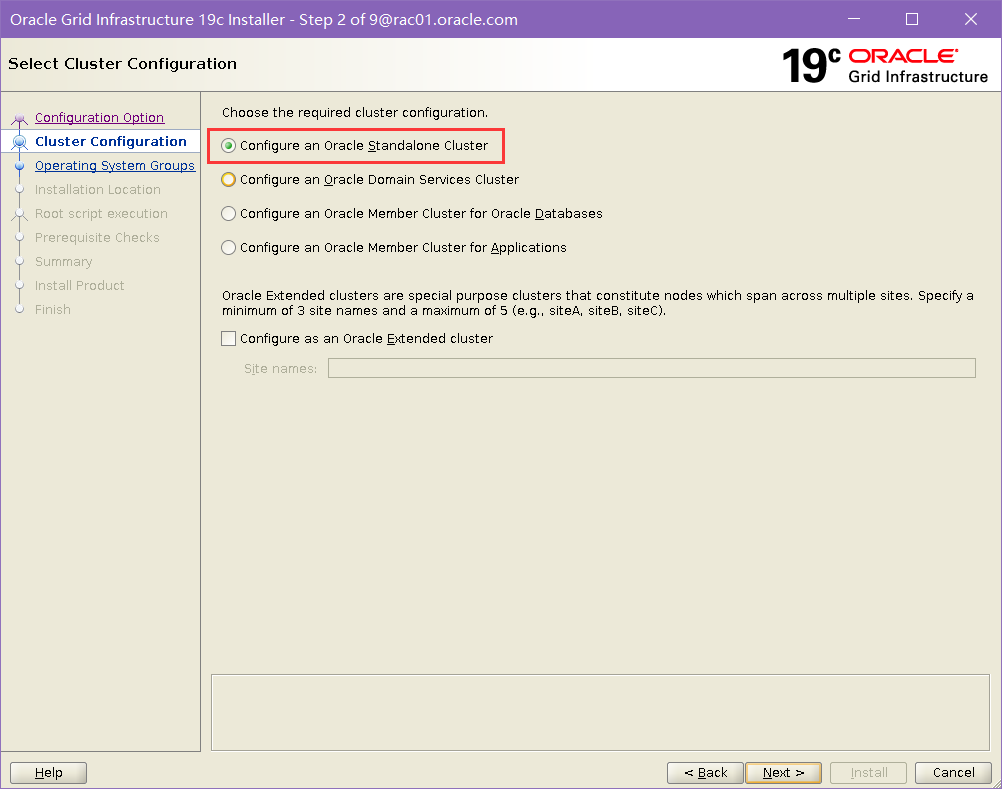

Select Standalone Cluster

Configure the SCAN

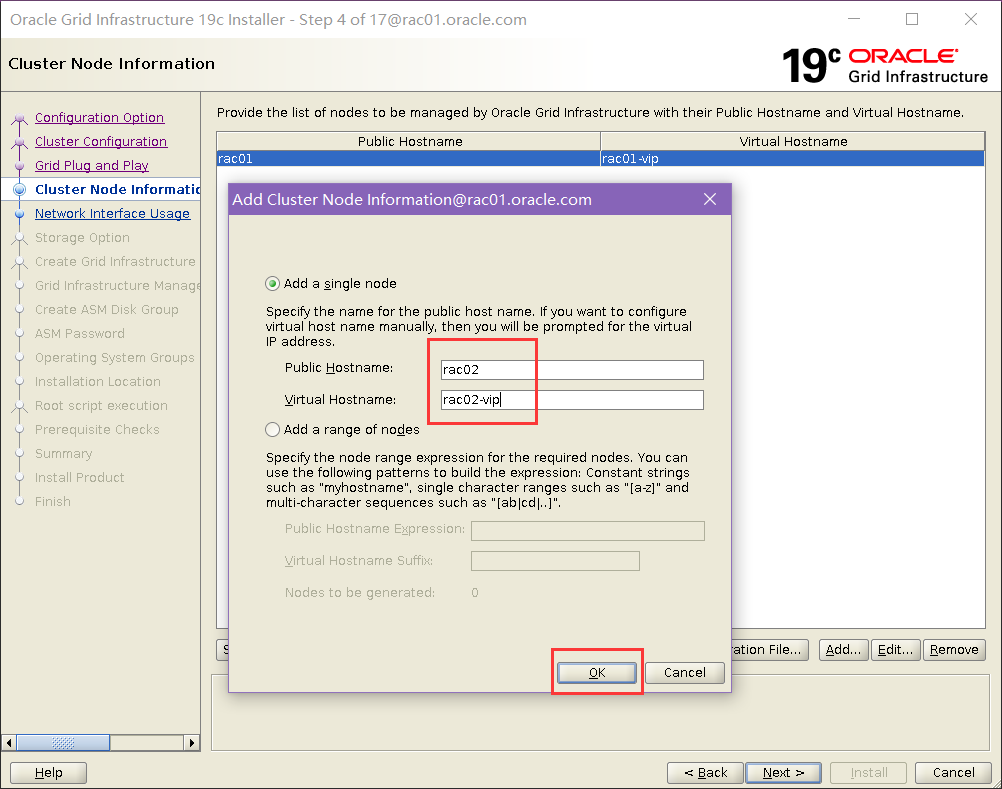

Click Add and enter the details for node 2 (they must match the contents of /etc/hosts exactly)

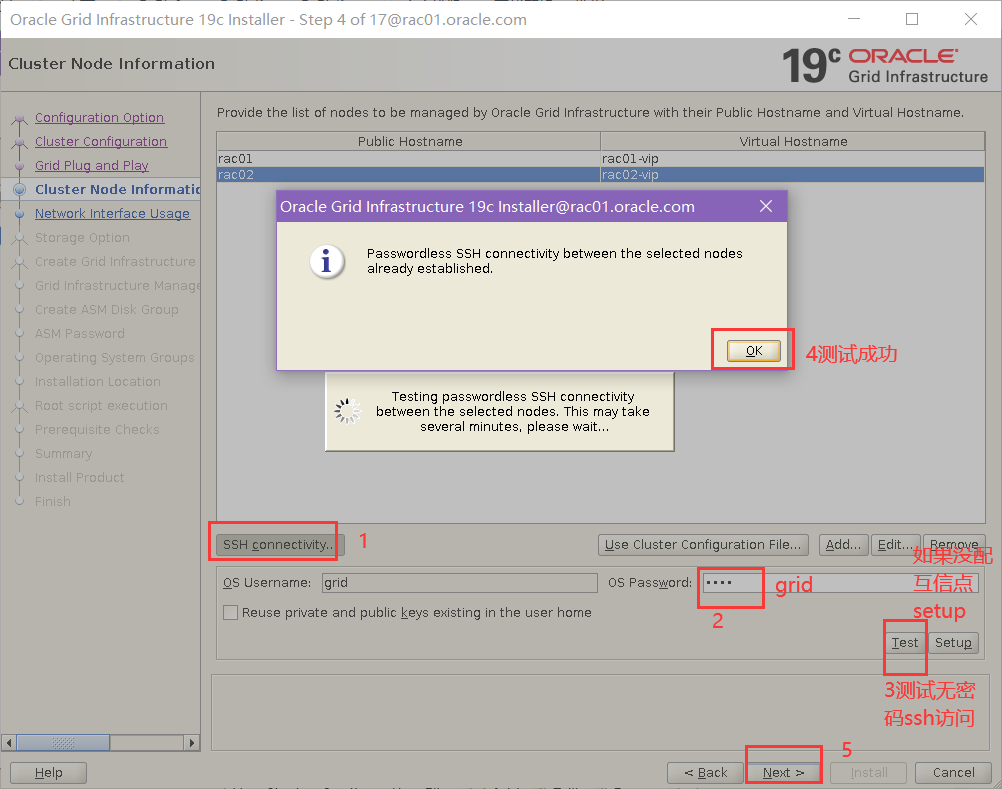

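Click "SSH Connectivity" to test user equivalence for the grid user.

Click Test. The window below should appear. If it does not, the SSH configuration is faulty: exit the installer, fix the SSH configuration, and repeat the steps above: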

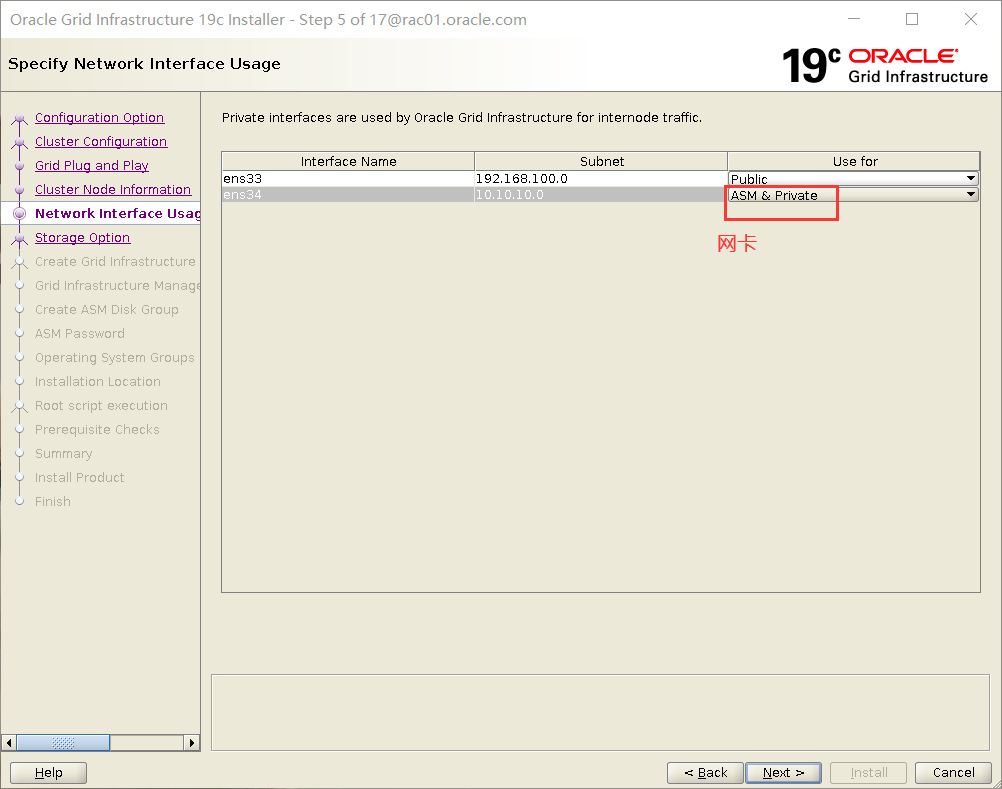

选择网卡

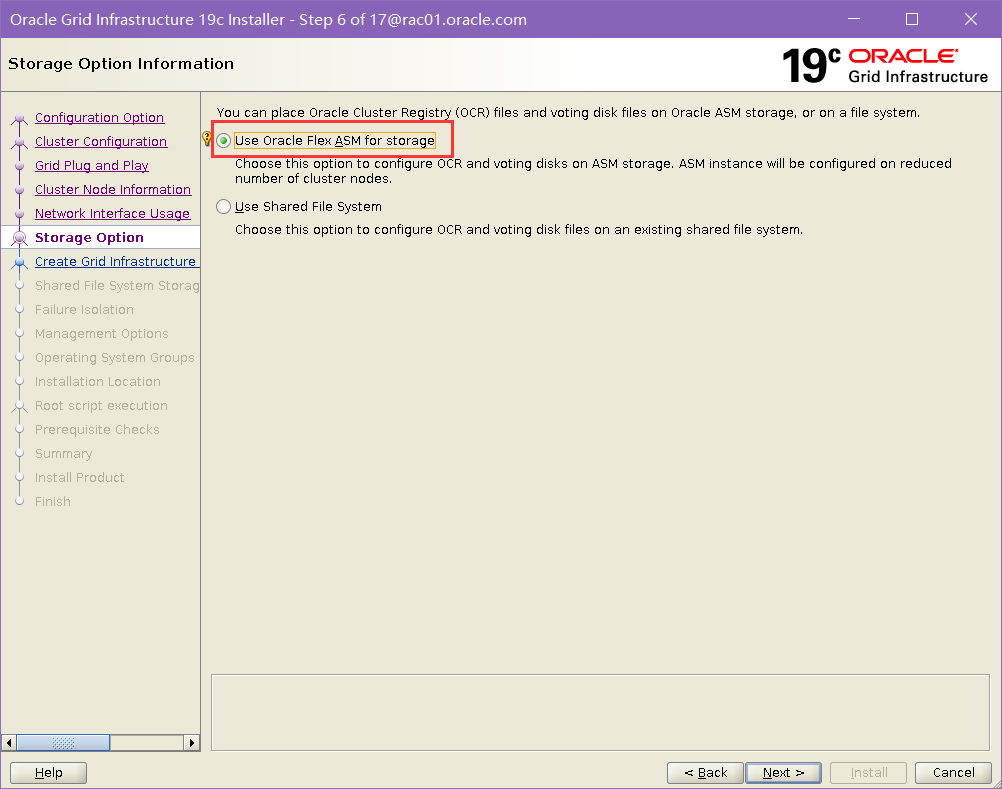

选择使用ASM

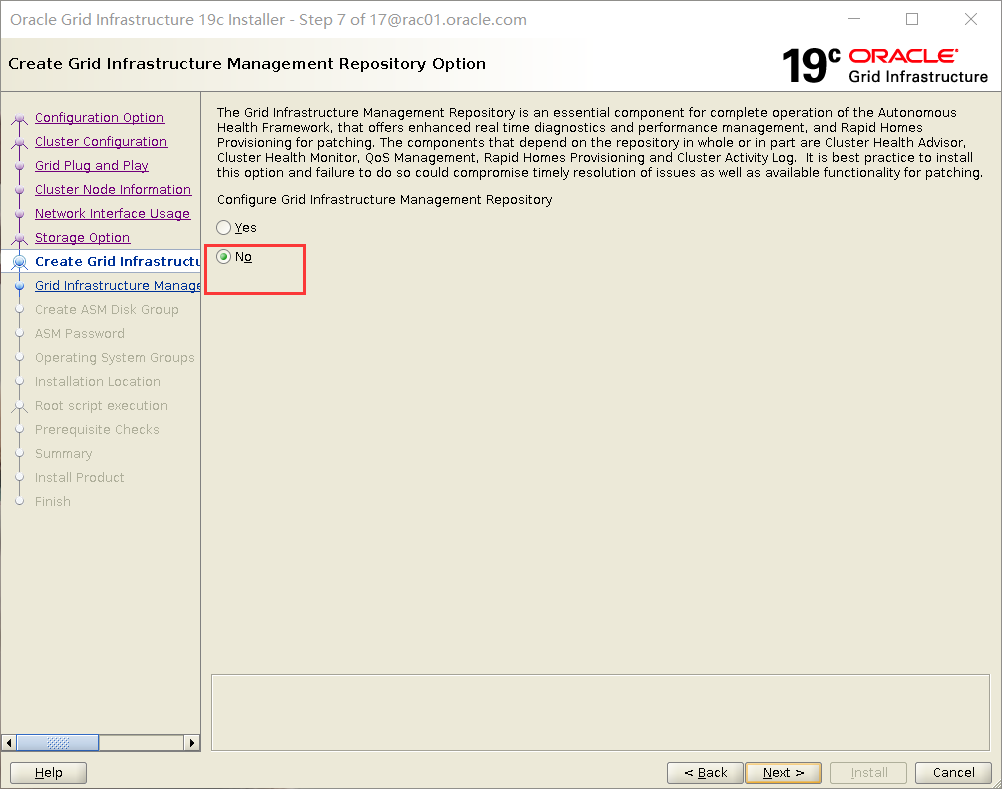

选择不使用 GI repository

不安装集群配置管理库。如果安装建议单独分配磁盘。在这有点区别,12 选 no 也会强制装,而且不能将 mgmtdb 单独装在一个磁盘,导致 ocr 磁盘不能少于 40g。18 的时候可以单独分,19 选择 no 不装。

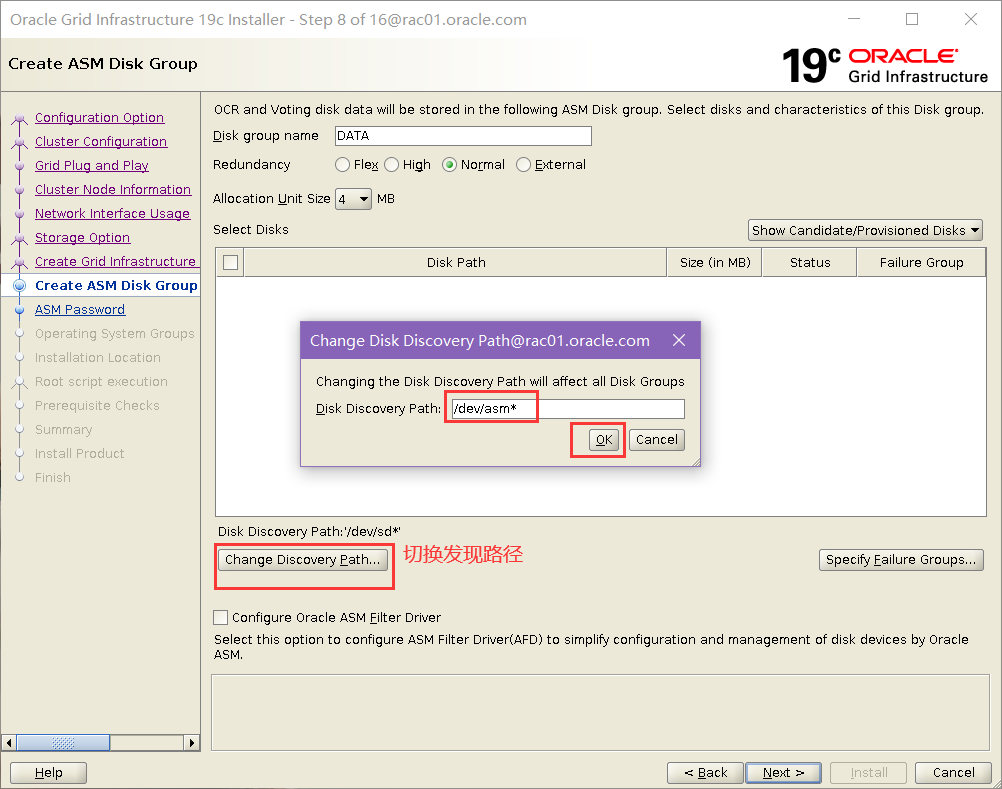

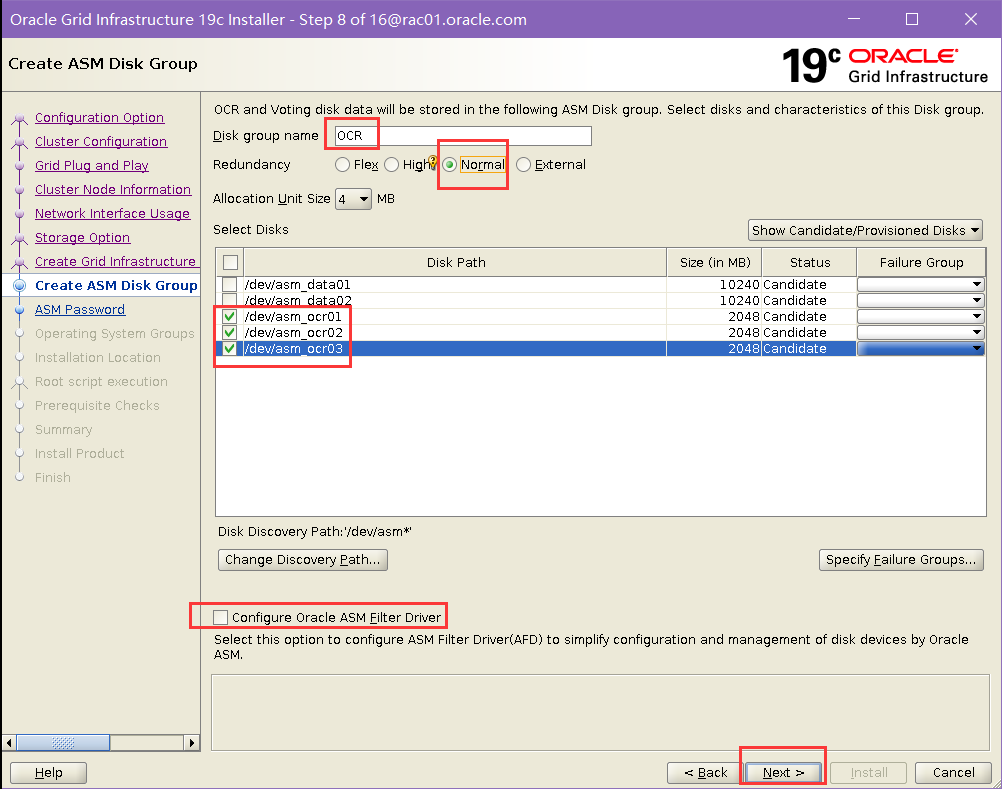

Configure the disk group

Click Change Discovery Path and adjust the disk discovery path to match your environment

Select the disks you need; do not select AFD

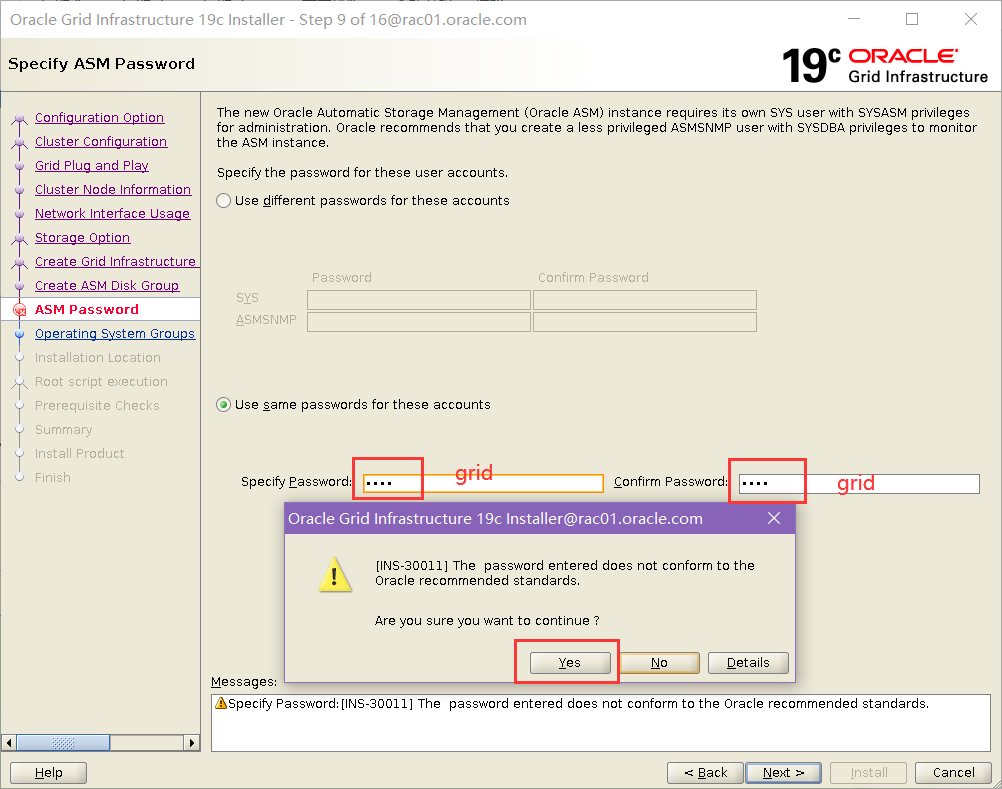

设置 ASM 口令

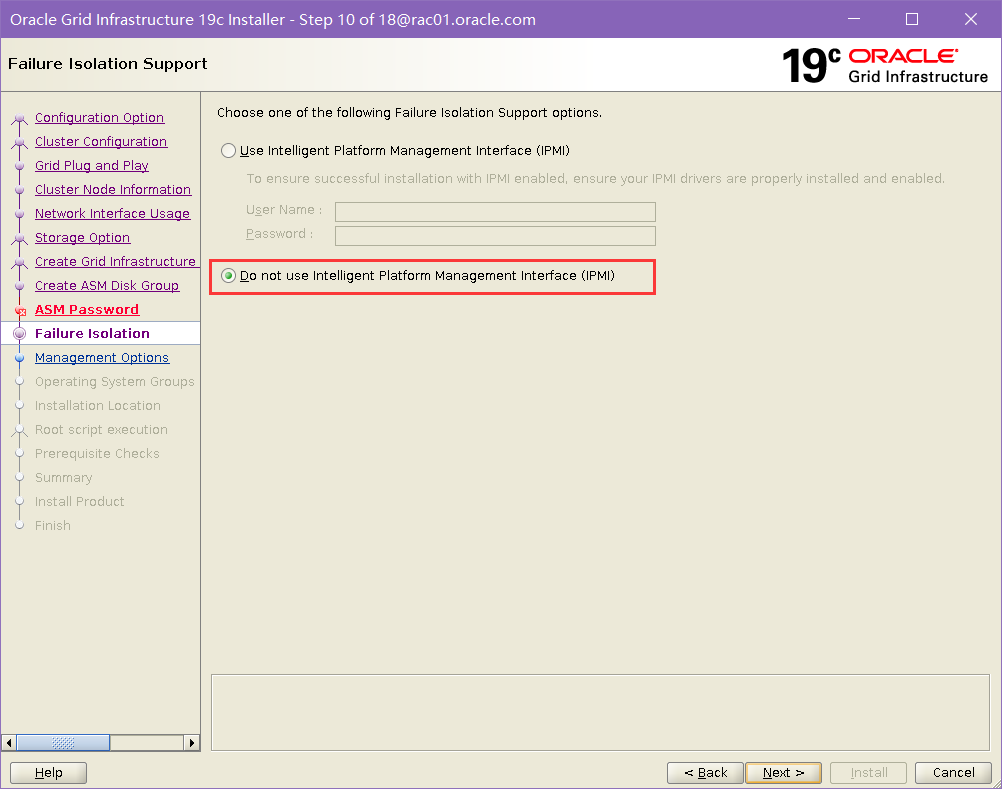

不使用 IPMI

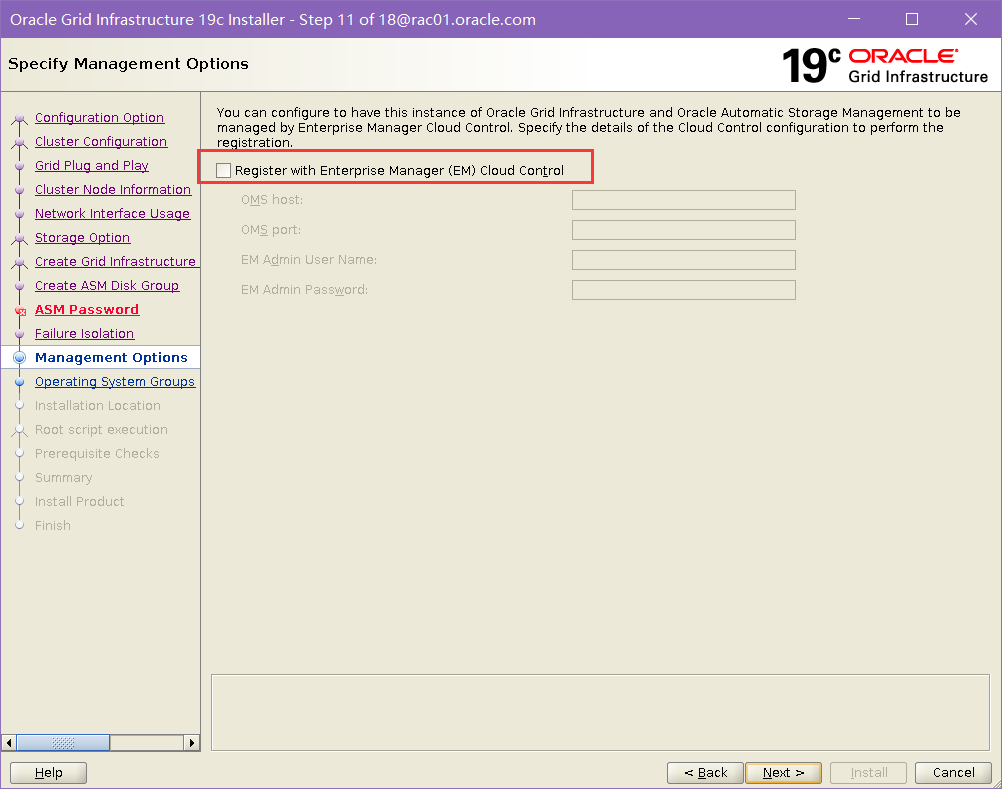

不向 EM 注册

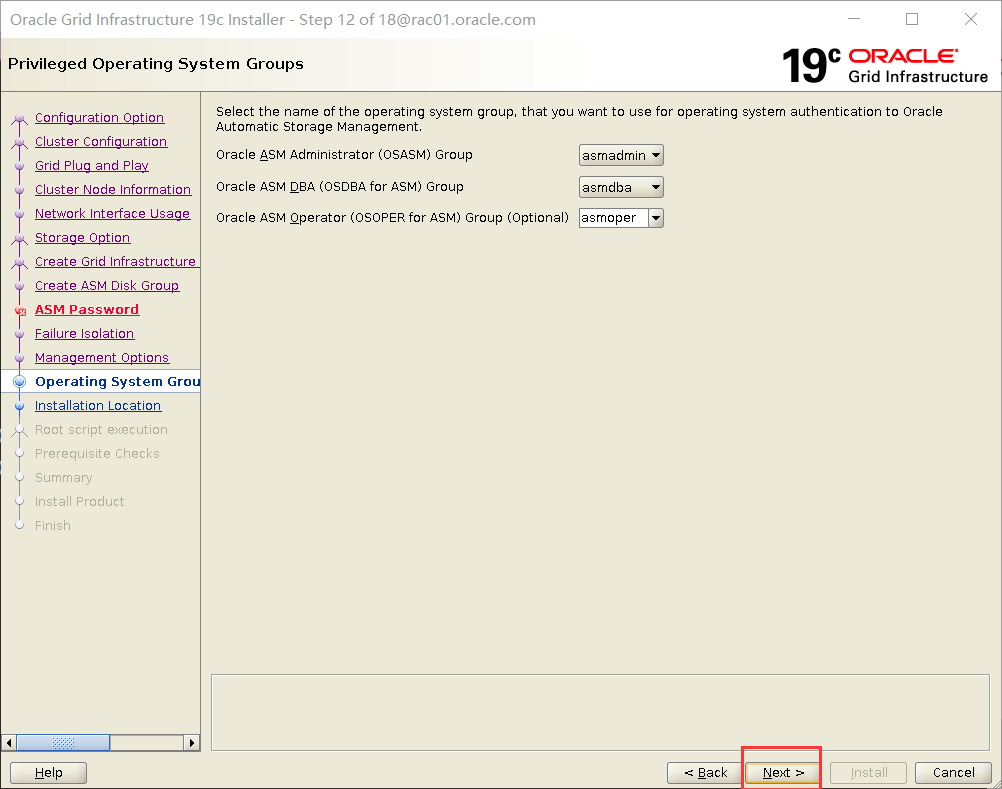

确认用户组

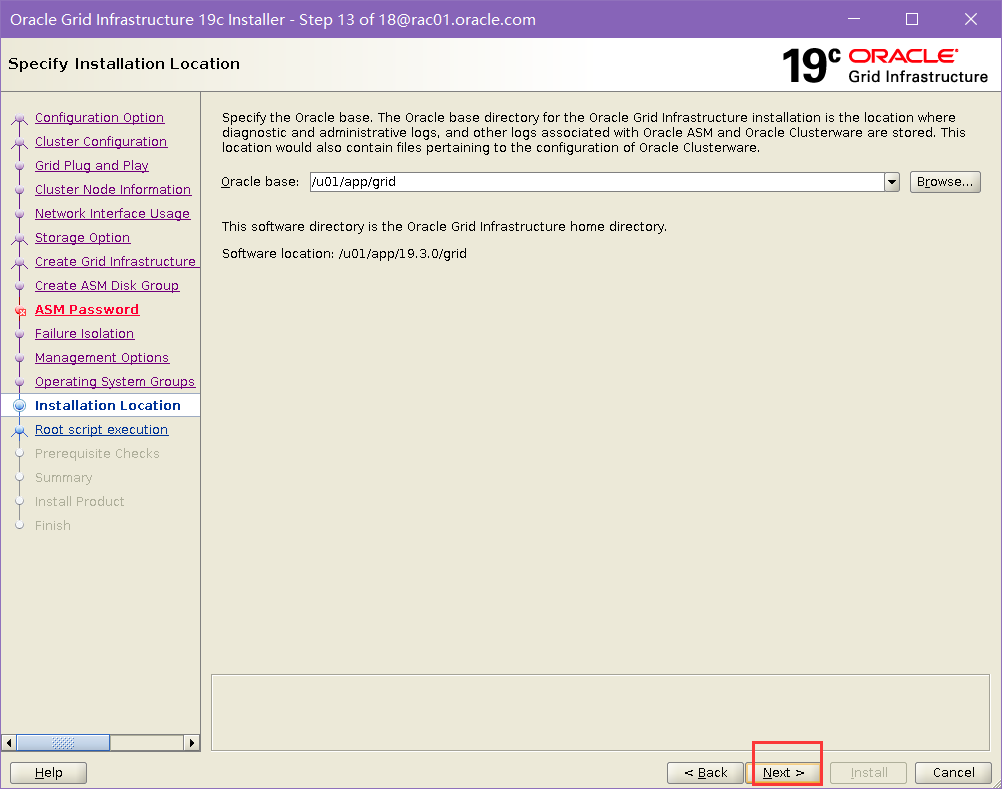

确认安装路径(应与 grid 环境变量中配置一致)

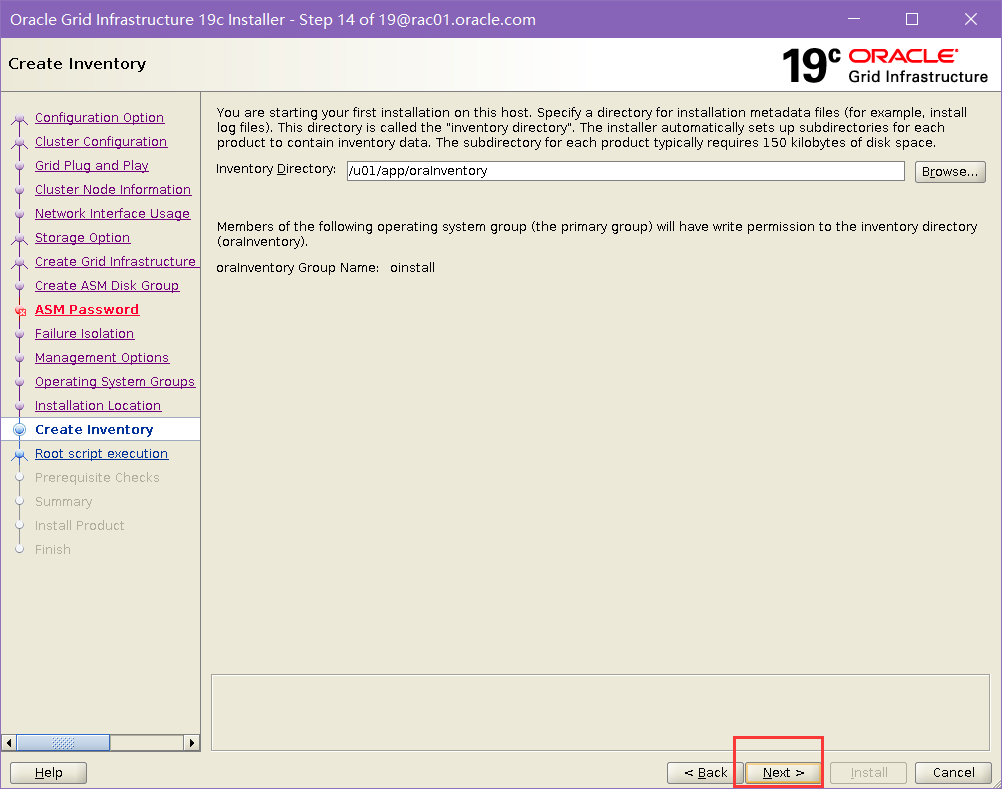

确认 inventory 目录(应与 grid 环境变量中配置一致)

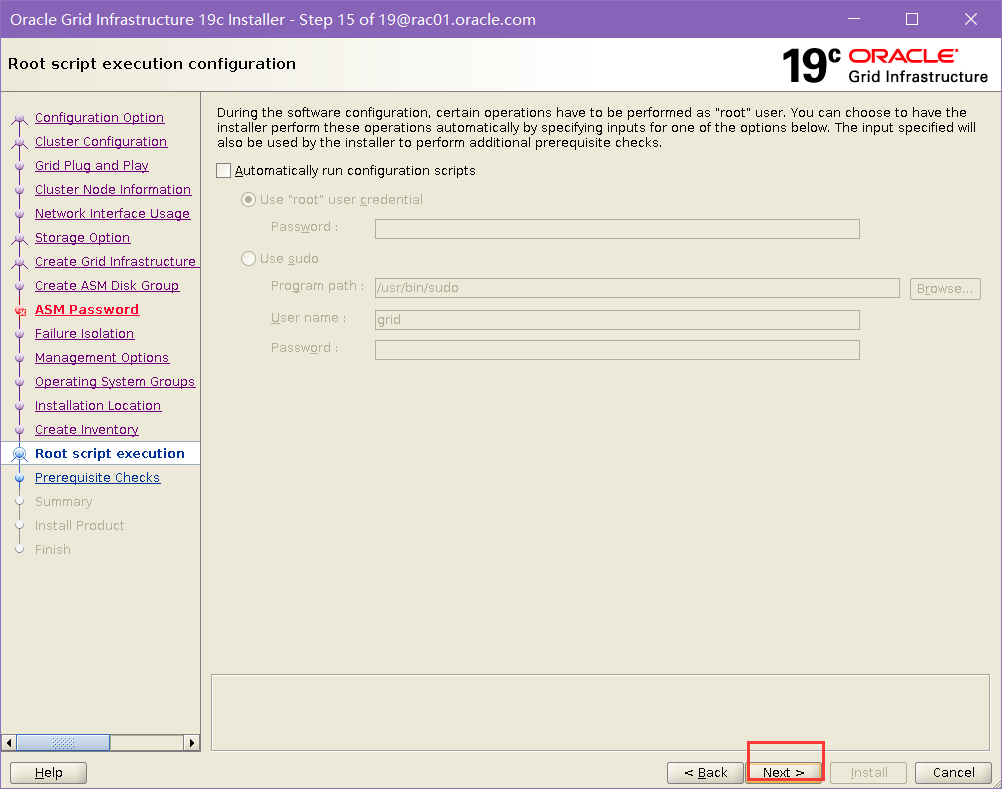

不自动跑脚本

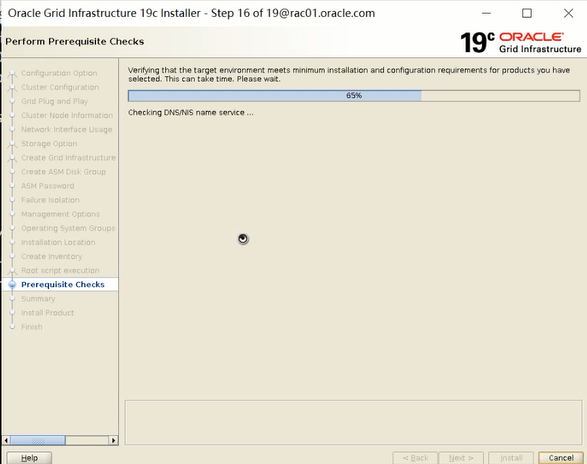

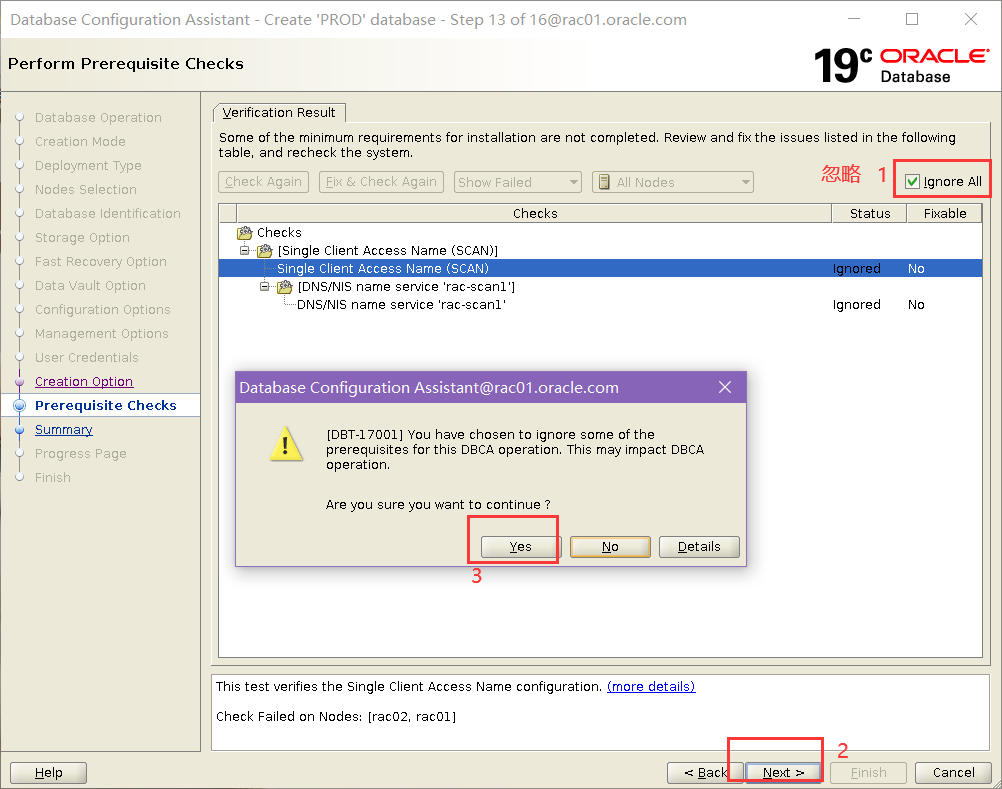

Prerequisite checks

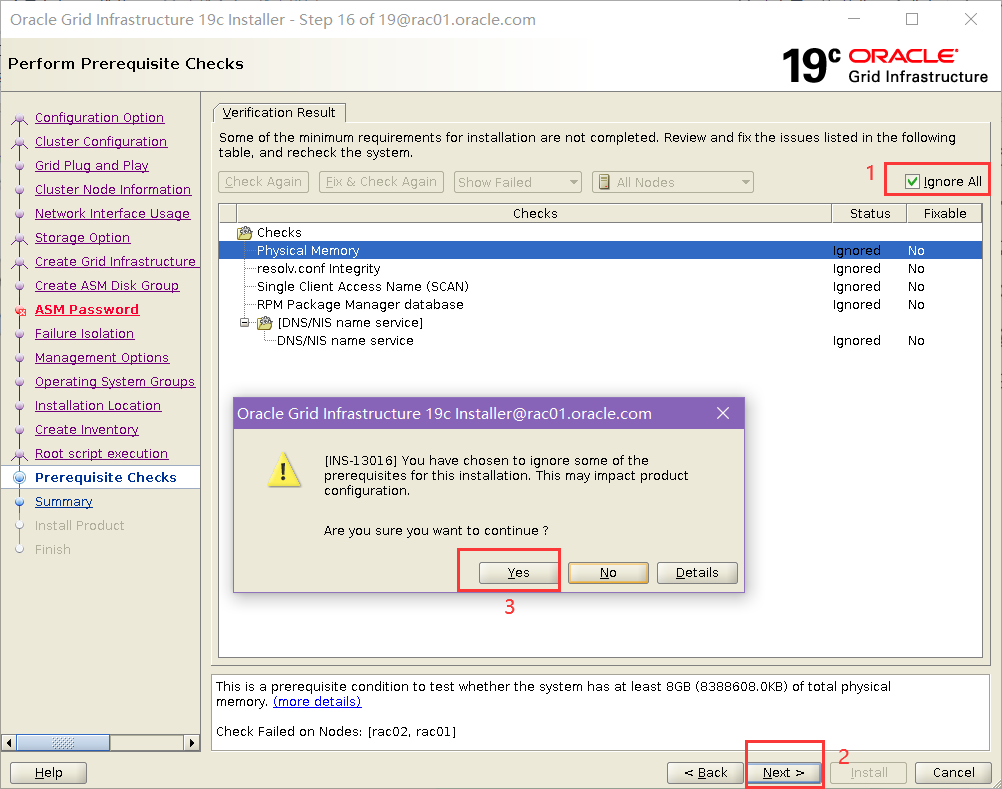

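Check results that can be ignored

Notes:

1) The limit settings were checked and confirmed to meet the requirements, so this finding can be ignored;

2) resolv.conf is the DNS resolver configuration, so this finding can be ignored;

3) NTP and DNS are not configured, so the related NTP, DNS, and SCAN findings can be ignored;

You can therefore select "Ignore All" and click "Next" to continue.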

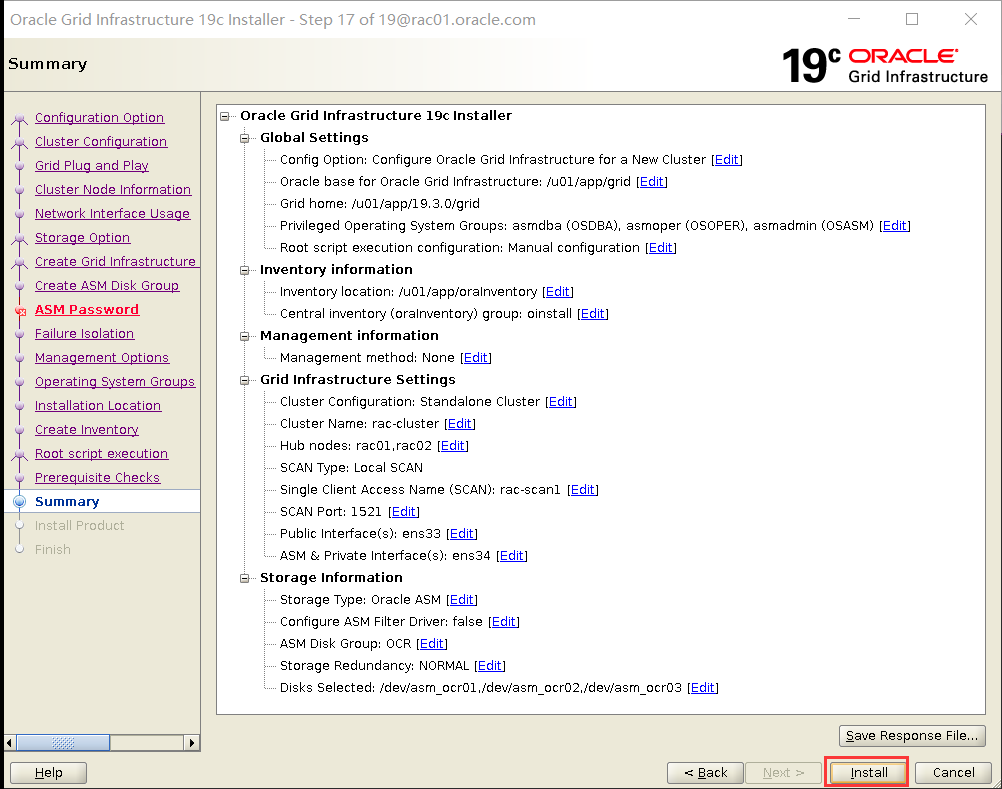

Summary before installation

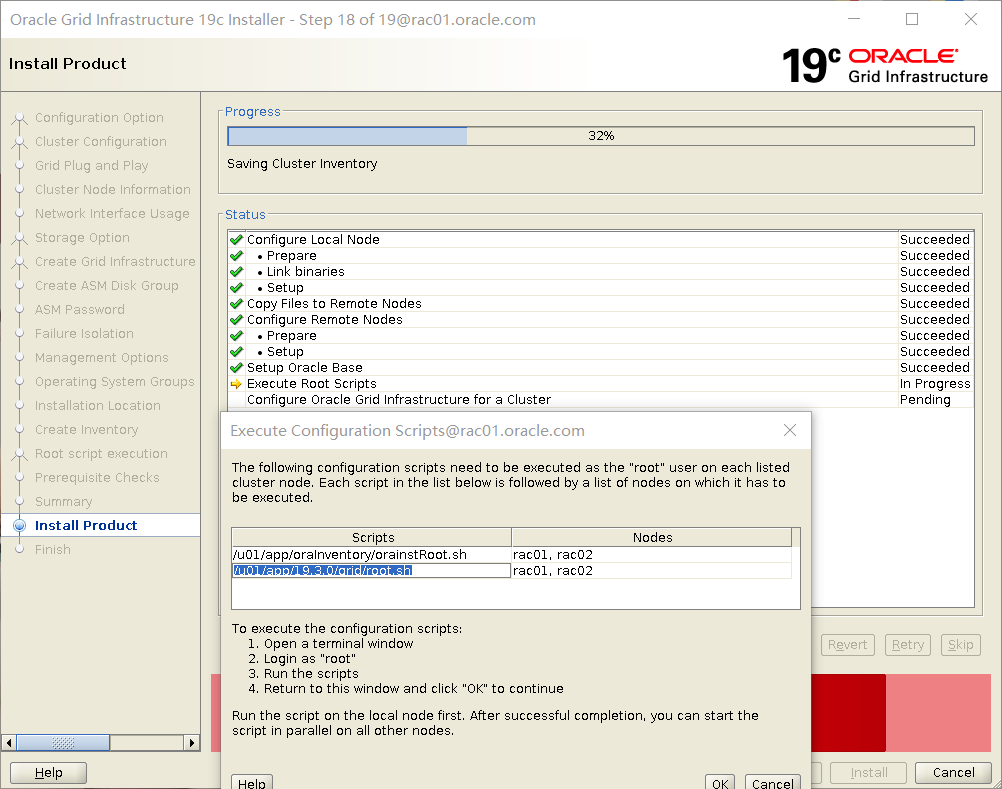

Start the installation

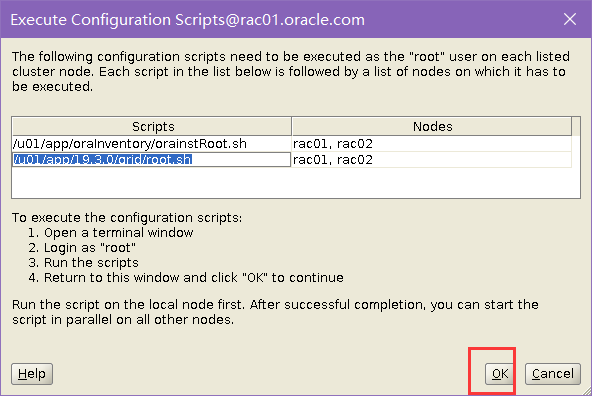

Run the scripts in order: top to bottom, left to right

rac01, root user

/u01/app/oraInventory/orainstRoot.sh

rac02, root user

/u01/app/oraInventory/orainstRoot.sh

rac01, root user

/u01/app/19.3.0/grid/root.sh

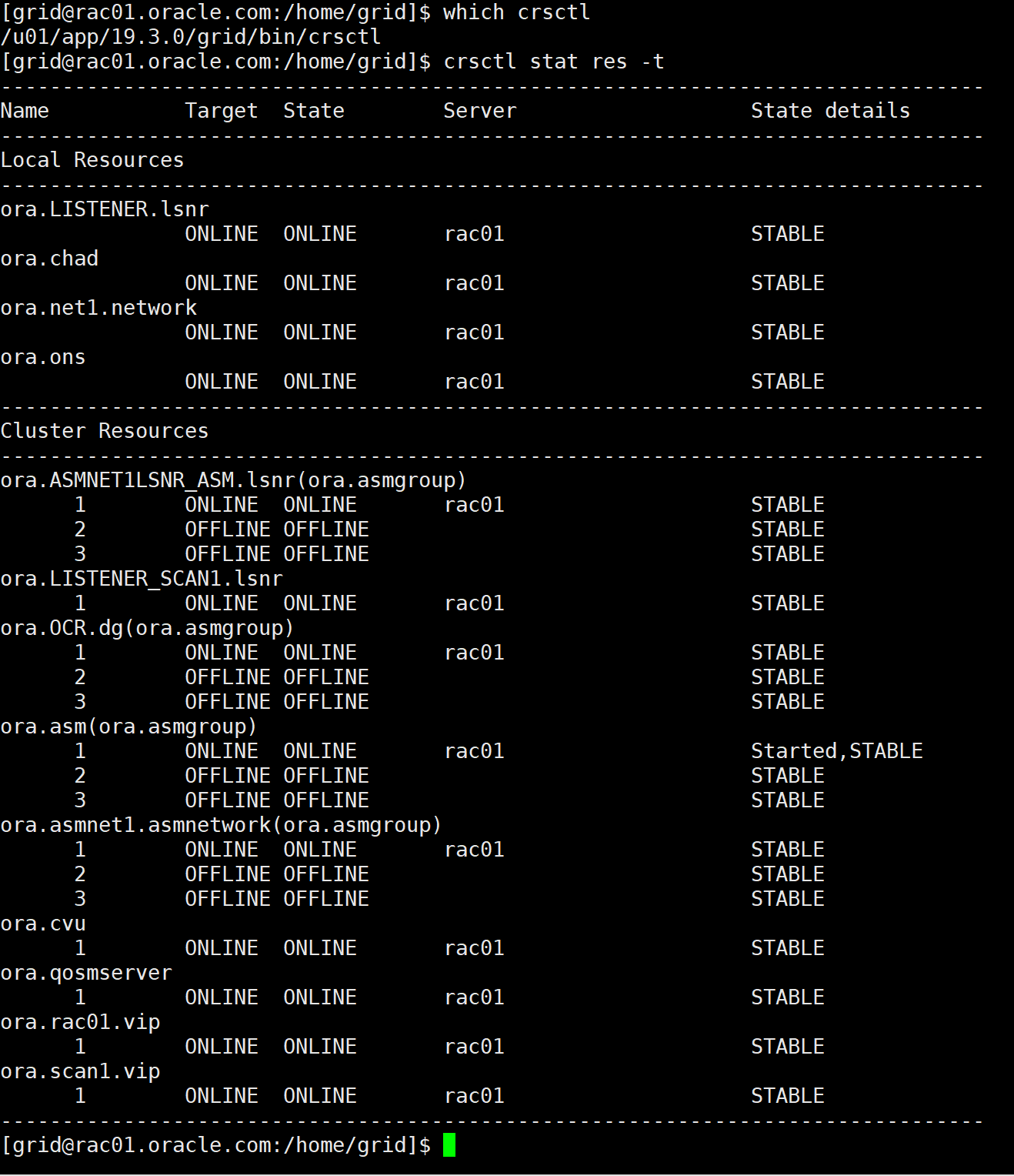

rac01, grid user

Check that the cluster has started; at this point it has only one node

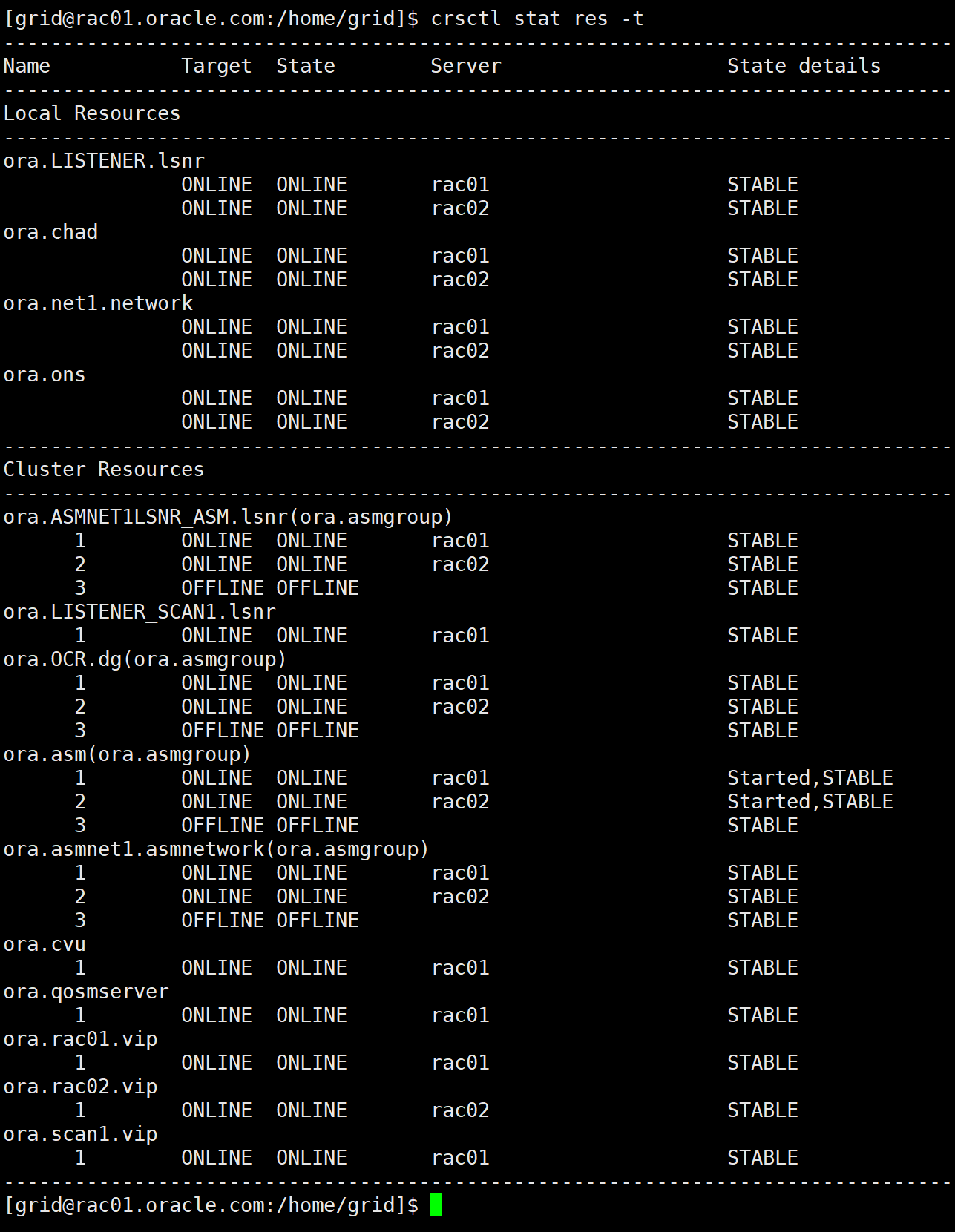

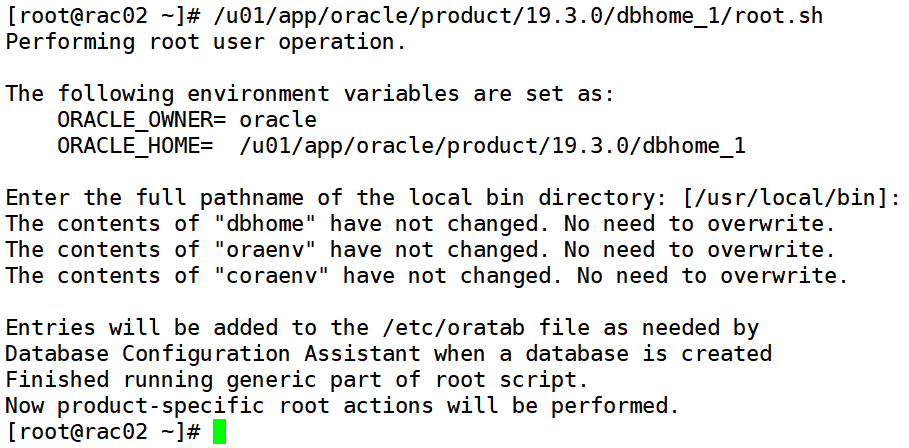

rac02, root user

/u01/app/19.3.0/grid/root.sh

The cluster now has two nodes

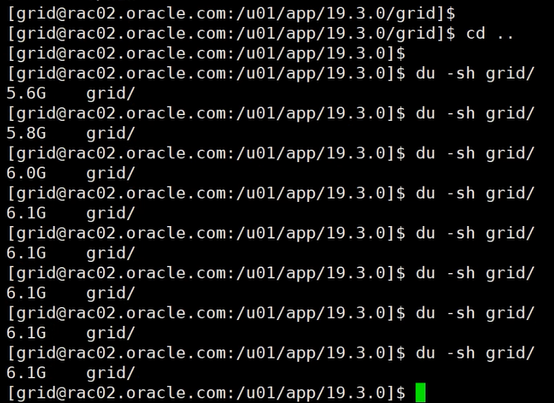

Meanwhile, as the grid user:

cd $ORACLE_HOME

After the scripts finish, click OK

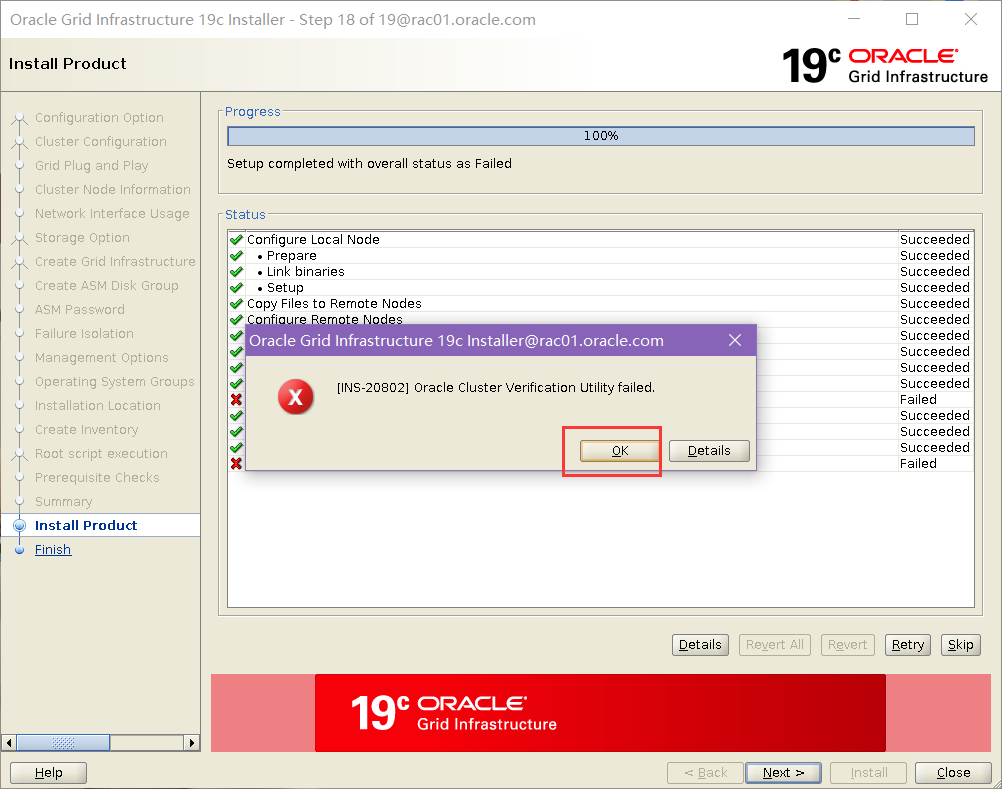

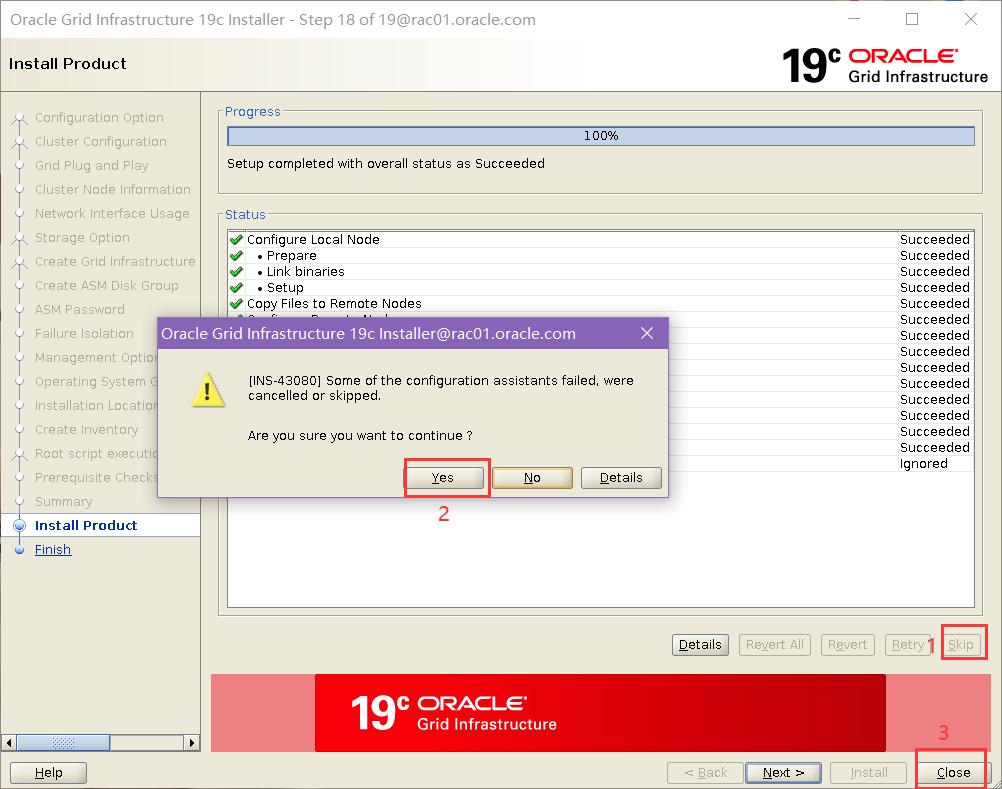

Click OK, then Next. This error is caused by the SCAN and can be ignored.

Click Close. The cluster installation is now complete.

Post-installation checks:

[grid@rac01.oracle.com:/home/grid]$ echo $ORACLE_HOME

/u01/app/19.3.0/grid

Check:

[root@rac01 ~]# which crsctl

/usr/bin/which: no crsctl in (/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/root/bin:/root/bin)

[root@rac01 ~]# vi /etc/profile

Append this line to the end of the file: export PATH=$PATH:/u01/app/19.3.0/grid/bin

[root@rac01 ~]# source /etc/profile

[root@rac01 ~]# which crsctl

/u01/app/19.3.0/grid/bin/crsctl
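Each time /etc/profile is sourced, a plain `PATH=$PATH:...` line appends the directory again; the `which` output above already shows /root/bin twice for the same reason. A minimal sketch of an idempotent alternative, using a hypothetical helper named `append_path_once`:

```shell
# append_path_once is a hypothetical helper: it adds a directory to PATH
# only if that directory is not already one of the PATH entries.
append_path_once() {
  case ":$PATH:" in
    *":$1:"*) ;;                 # already present, nothing to do
    *) PATH="$PATH:$1" ;;
  esac
}

append_path_once /u01/app/19.3.0/grid/bin
export PATH
```

Sourcing a profile that contains this block any number of times leaves a single Grid bin entry in PATH.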

[root@rac01 ~]# crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[root@rac01 ~]# crsctl check ctss

CRS-4701: The Cluster Time Synchronization Service is in Active mode.

CRS-4702: Offset (in msec): 0

[root@rac01 ~]#

[root@rac01 ~]# crs_stat -t

bash: crs_stat: command not found...

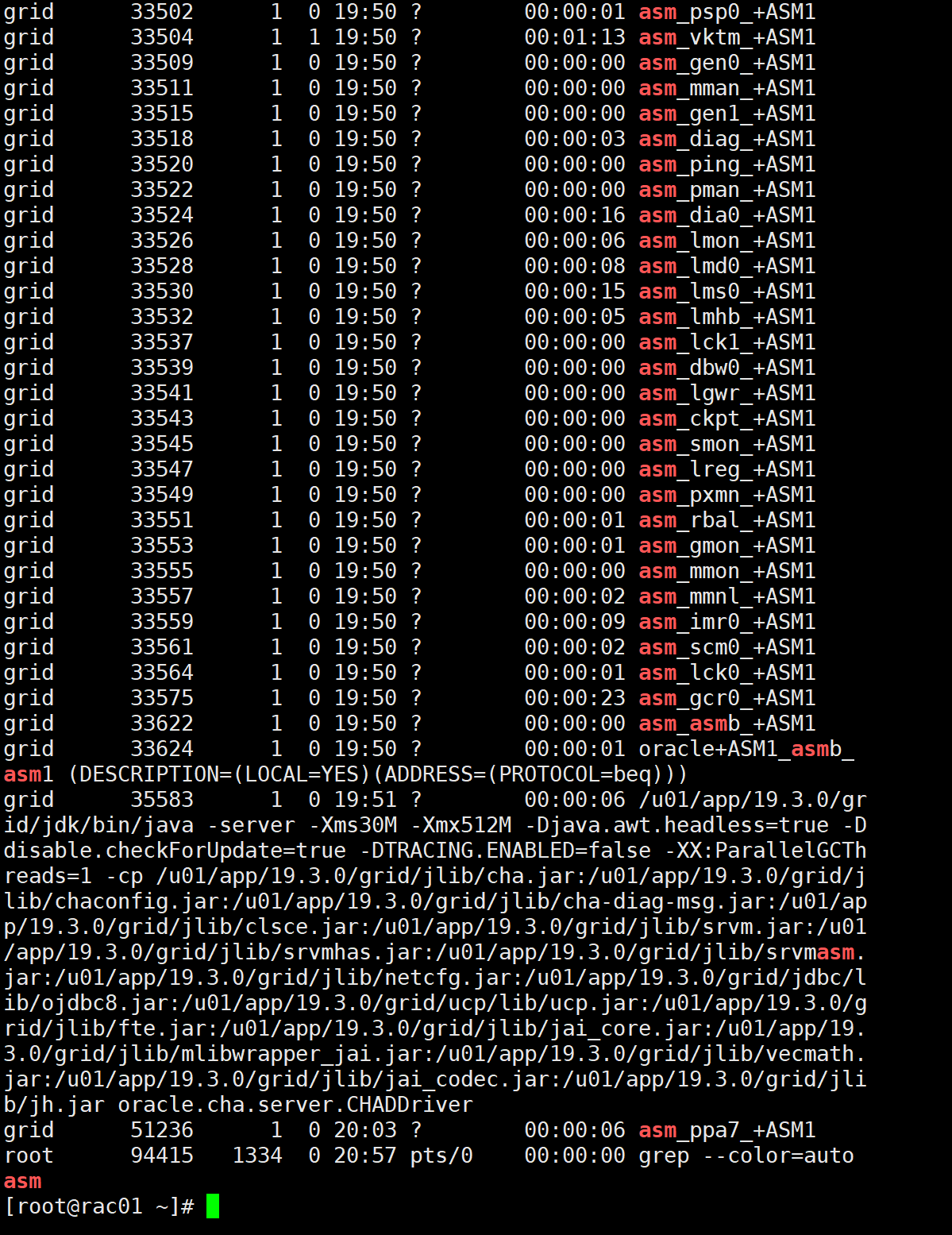

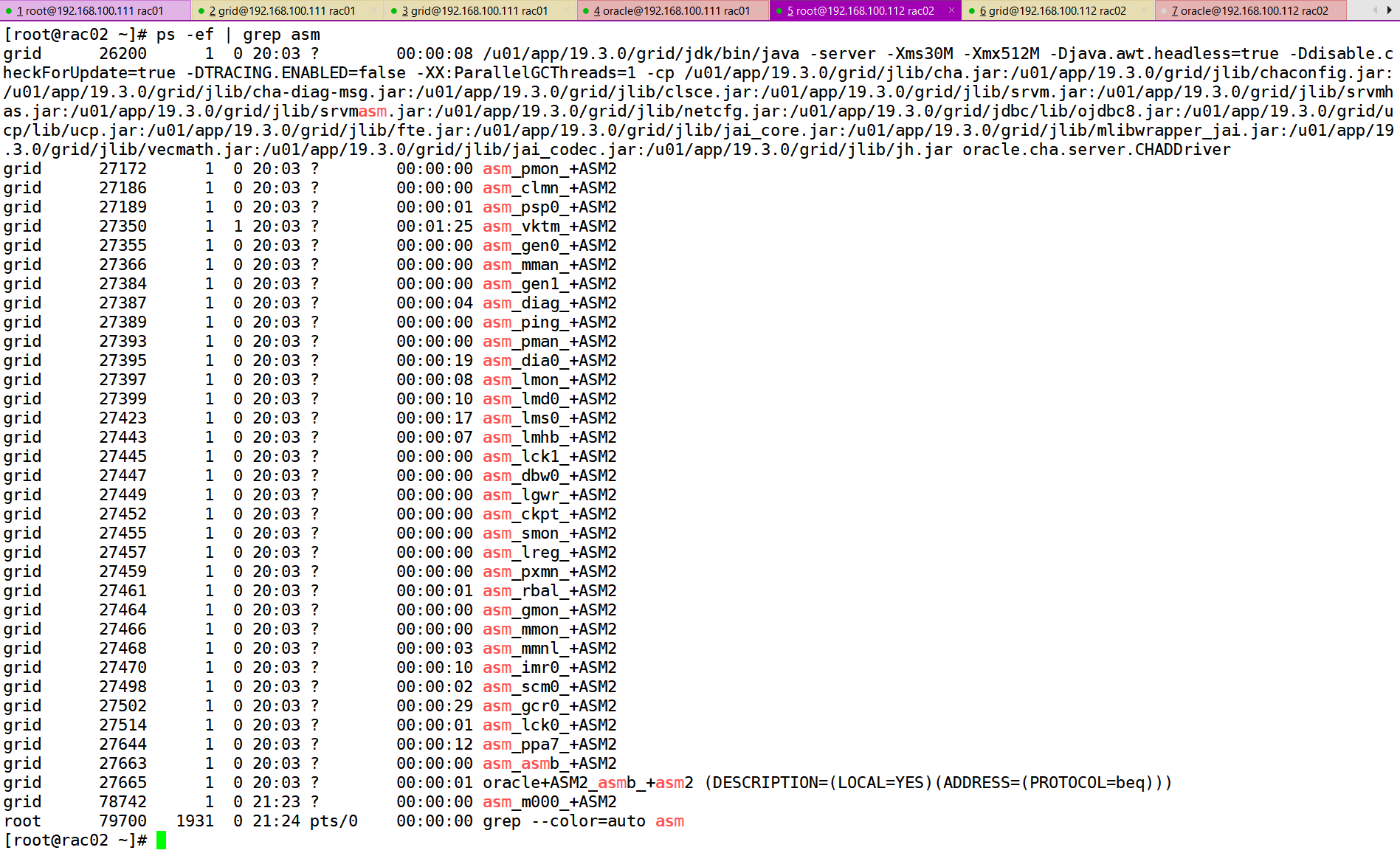

Check the background processes

[root@rac01 ~]# ps -ef | grep asm

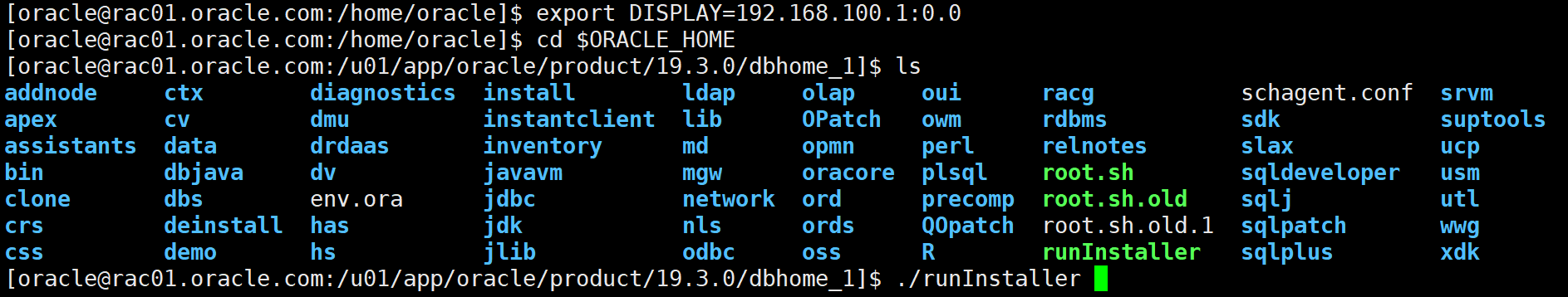

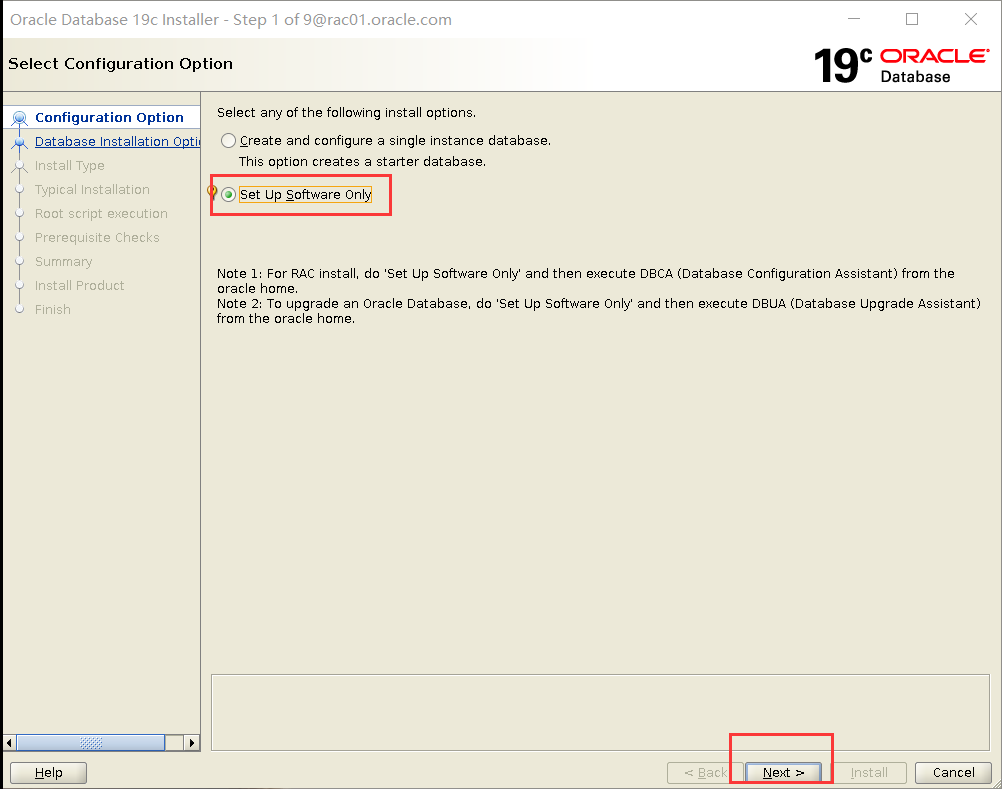

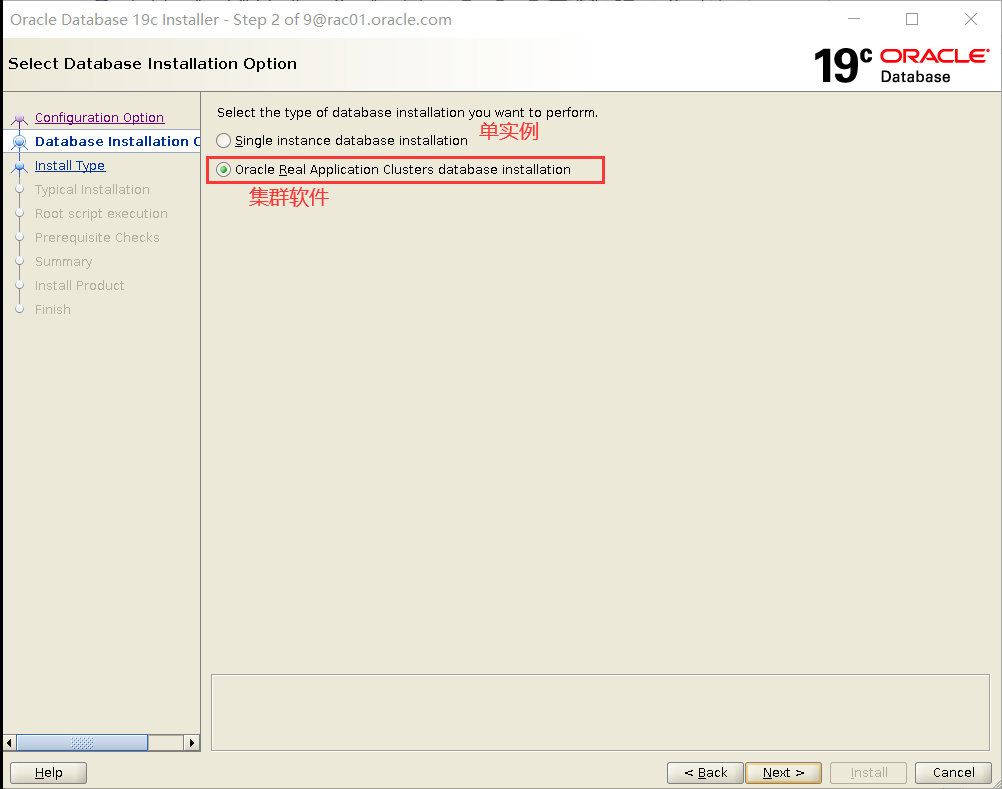

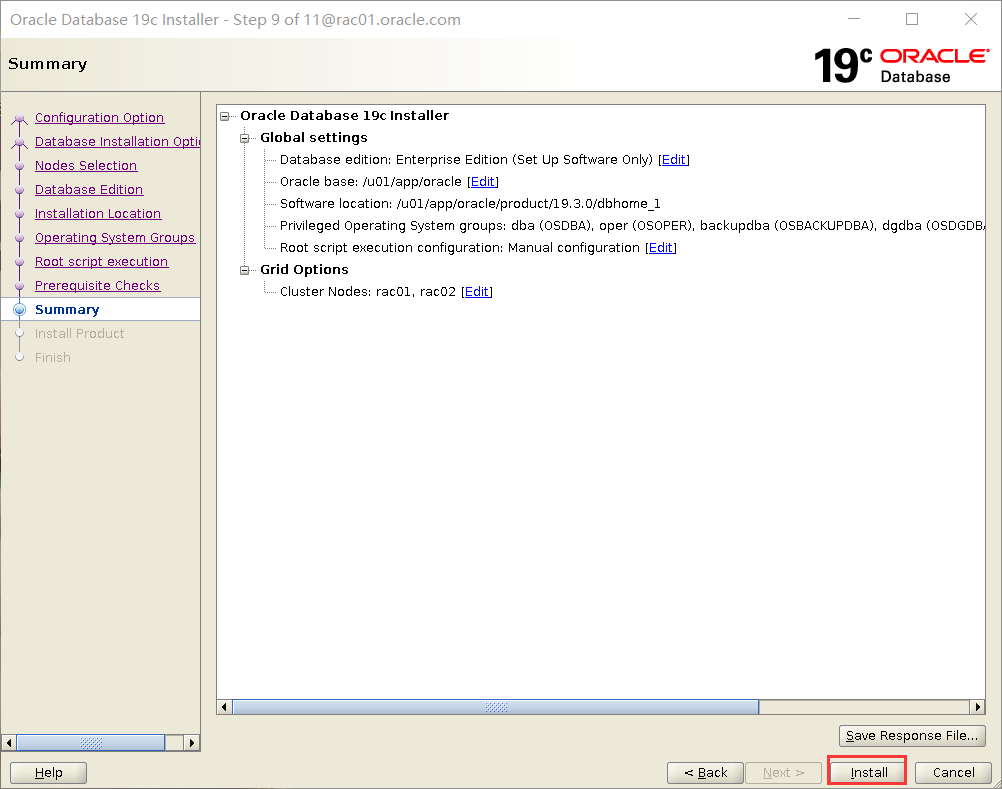

- Apply the DB RU and install the DB software

Start the installer

Install the software only

Choose the cluster (RAC) installation

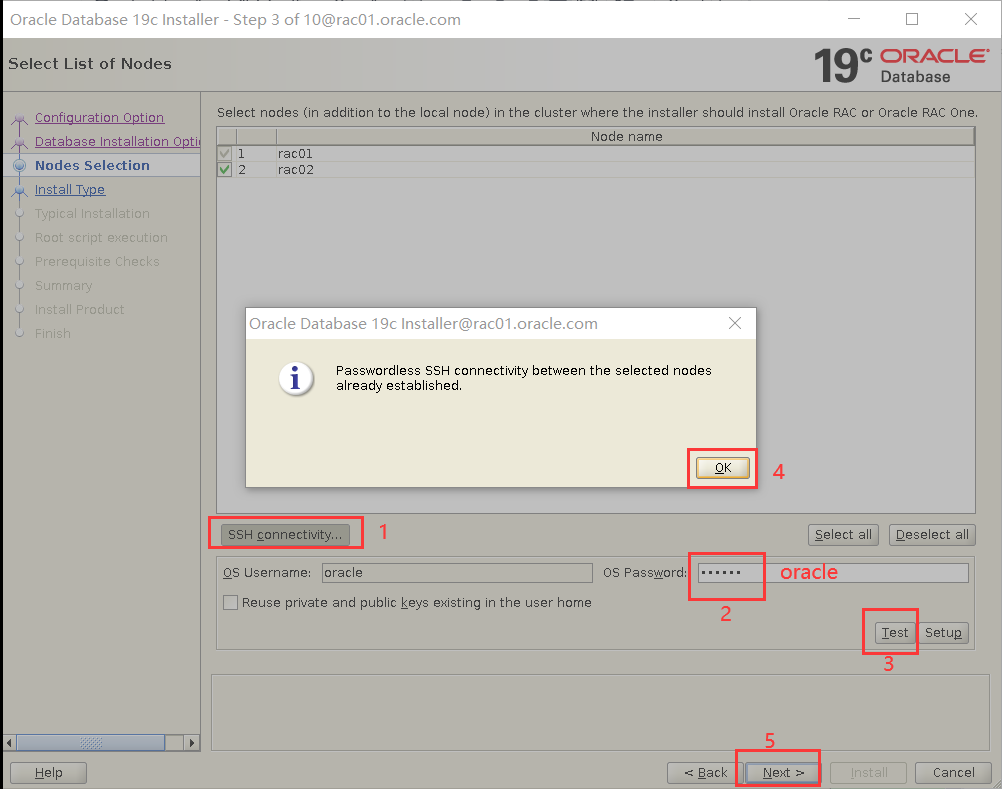

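Select all cluster nodes

Test user equivalence for the oracle user

Click "SSH Connectivity":

Click Test. The window below should appear. If it does not, the SSH configuration is faulty: exit the installer, fix the SSH configuration, and repeat the steps above: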

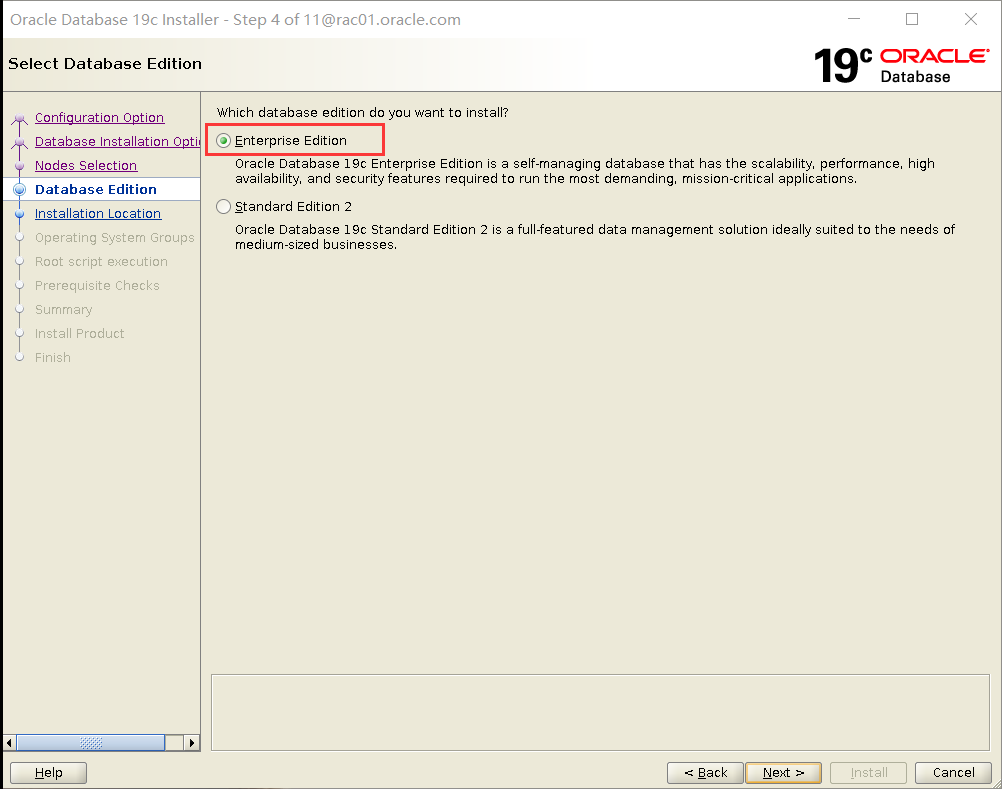

选择安装企业版

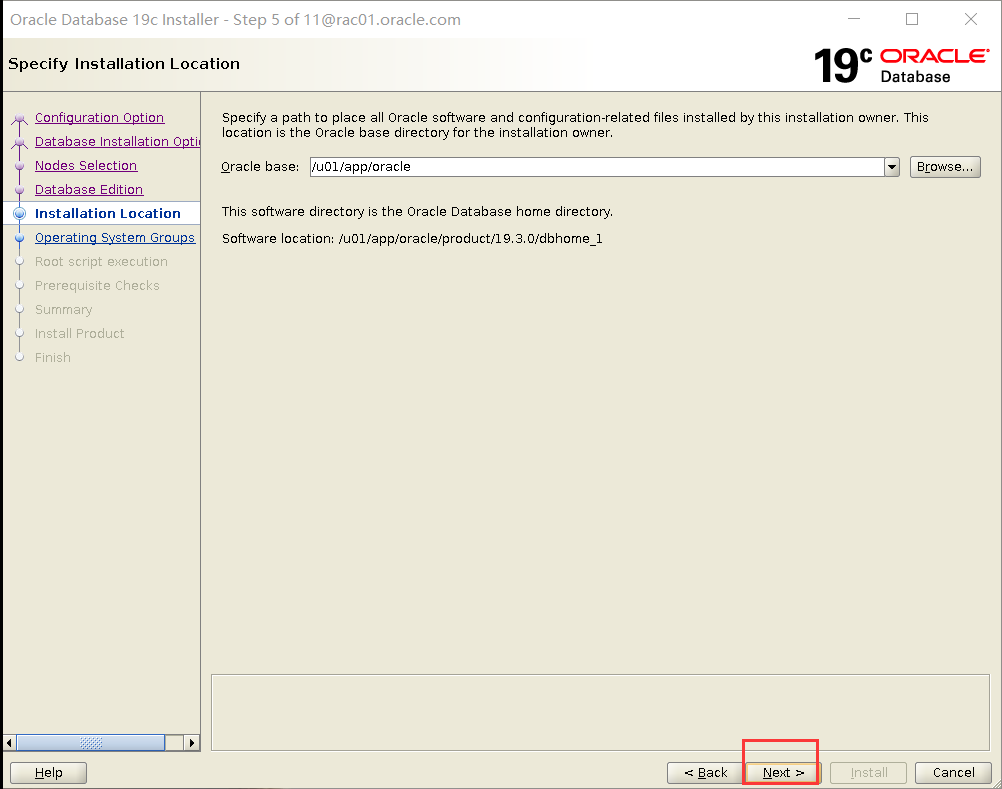

确认安装路径

安装路径应与 oracle 环境变量里设置相同

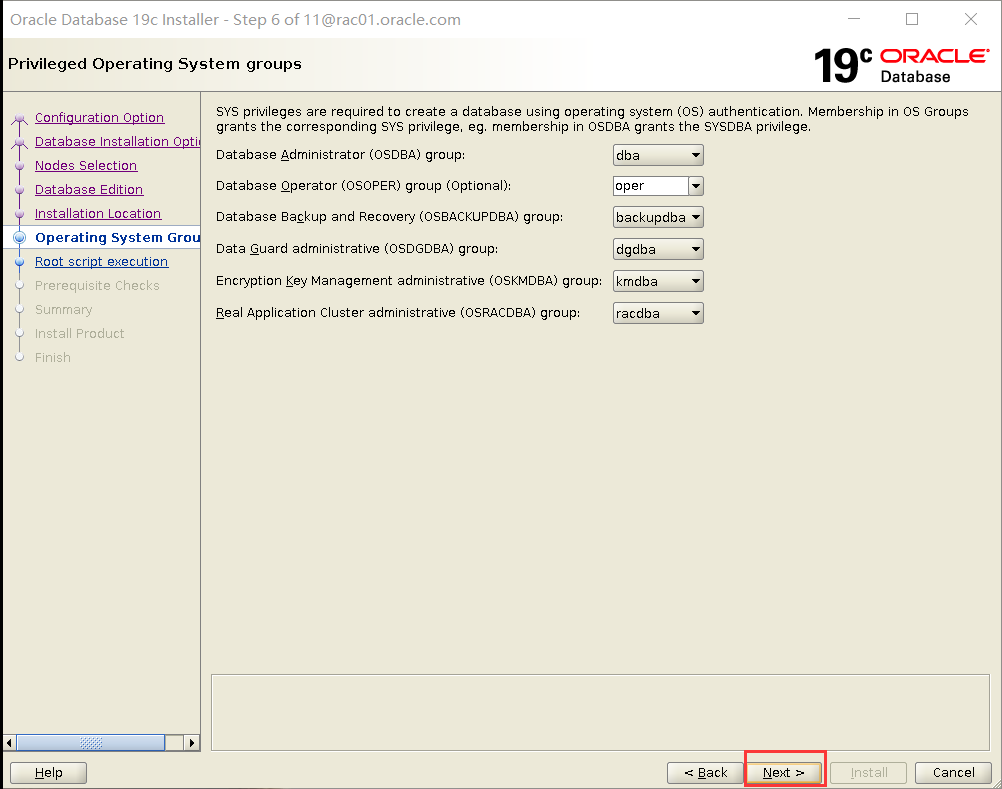

确认用户组

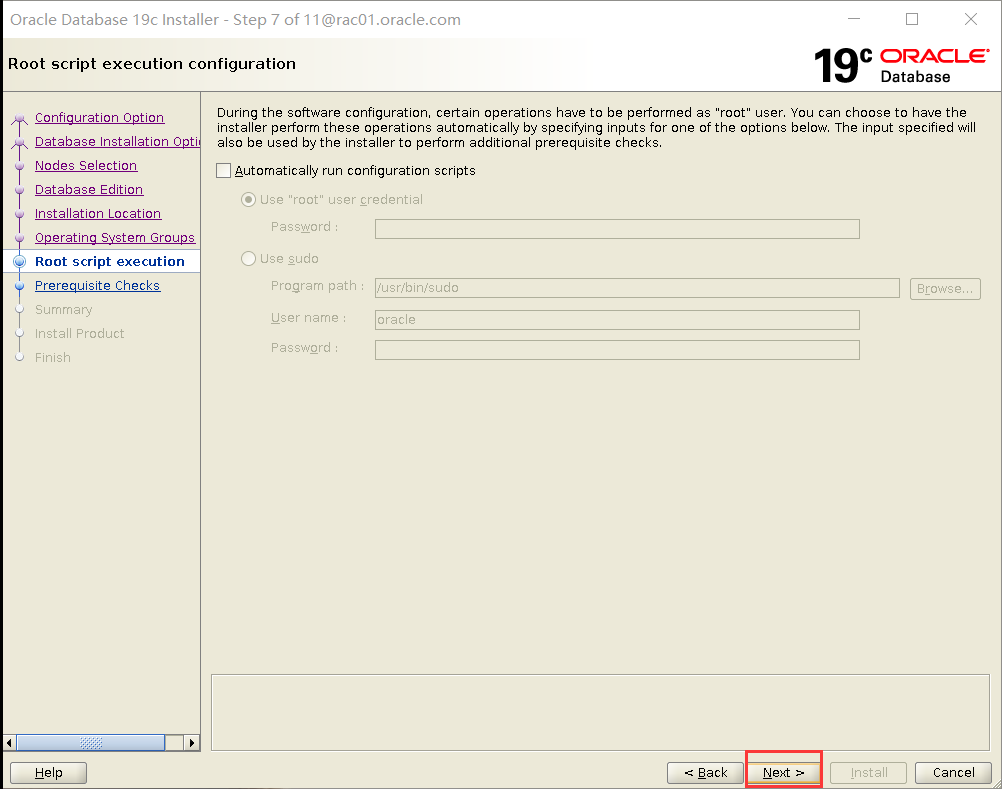

不自动运行脚本

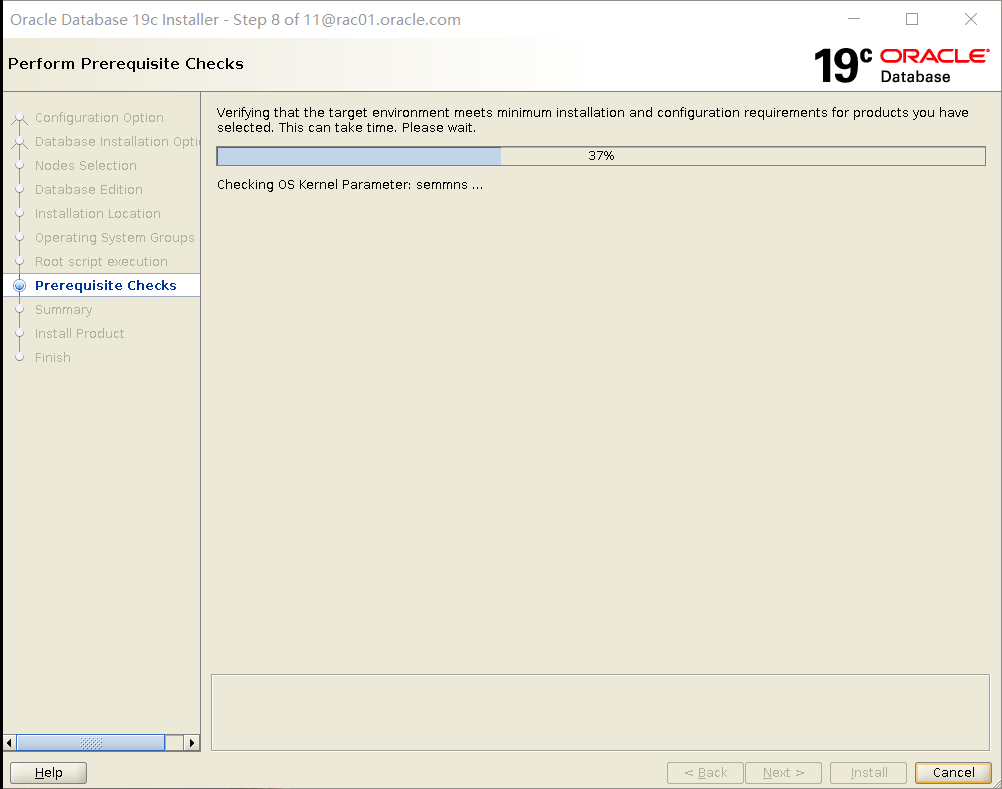

安装前环境检查

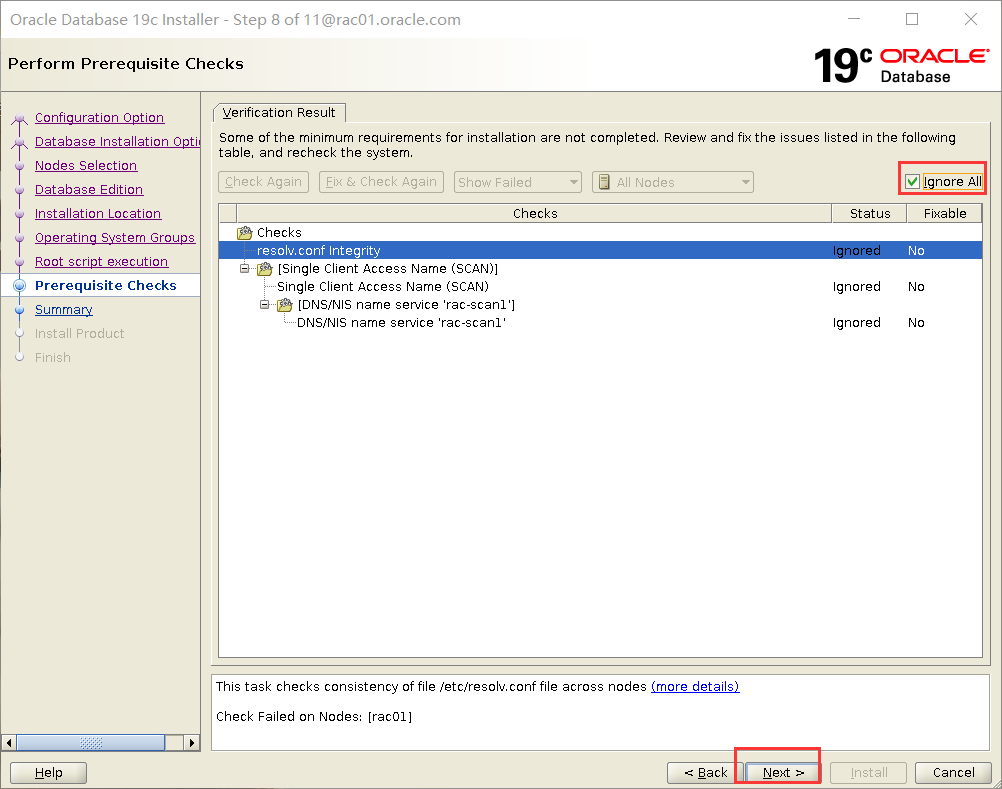

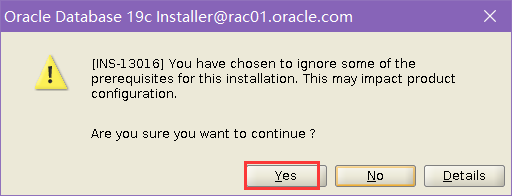

Items that can be ignored

Prerequisite check results

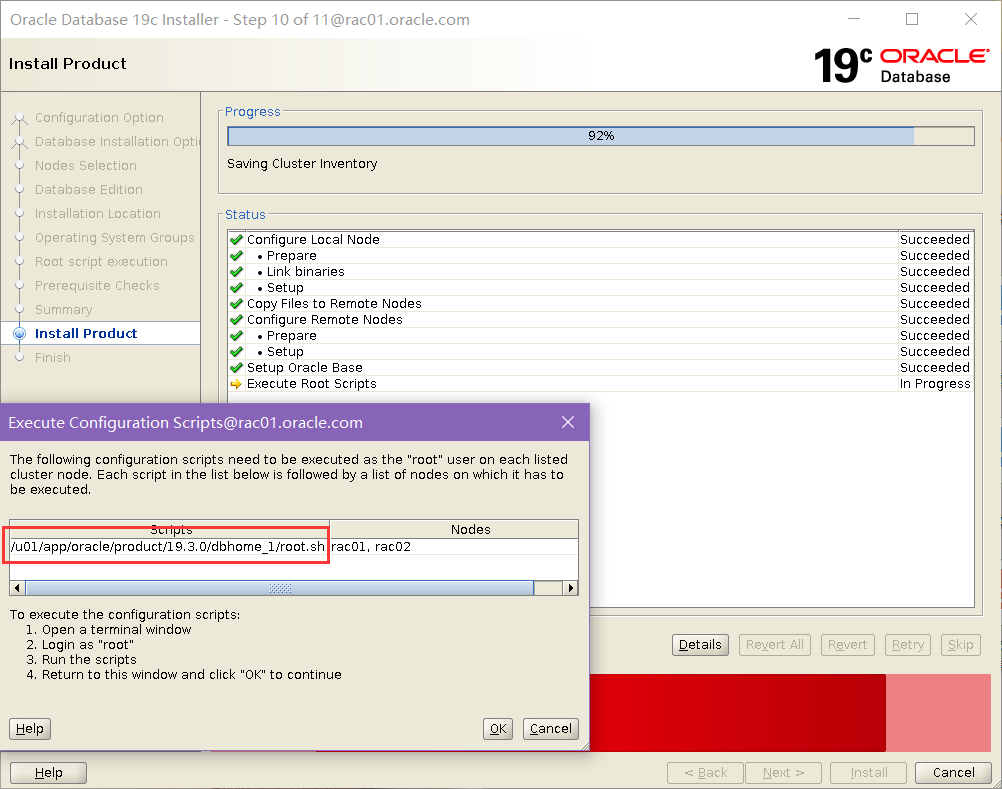

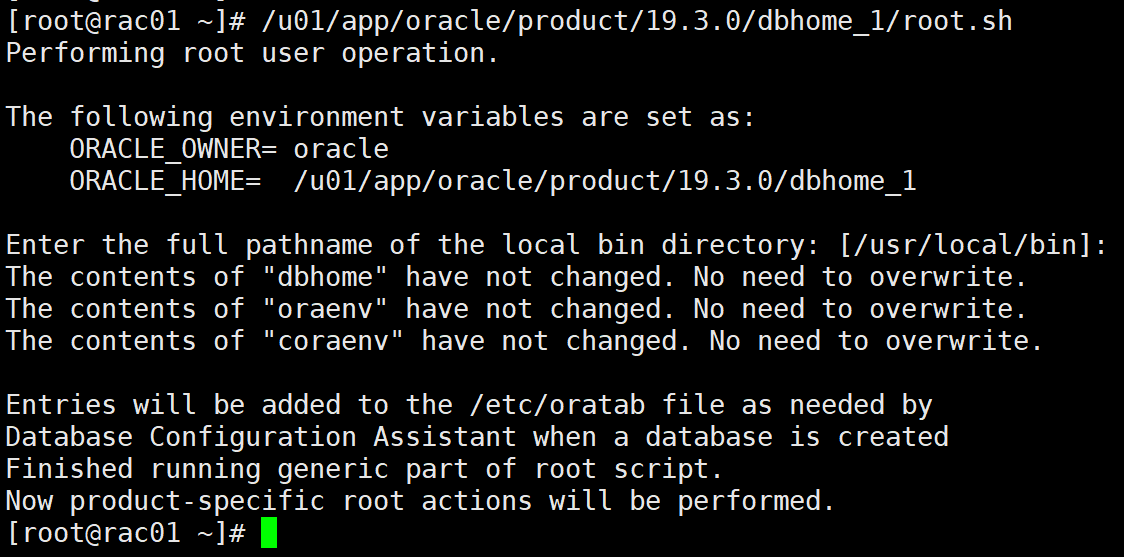

Start the installation and run root.sh when prompted

Click OK, then Close

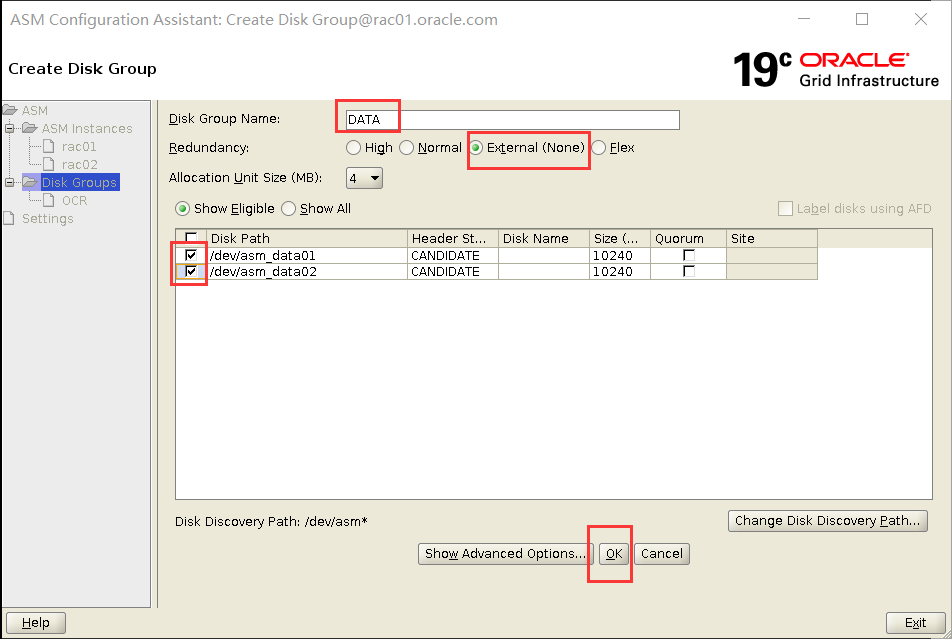

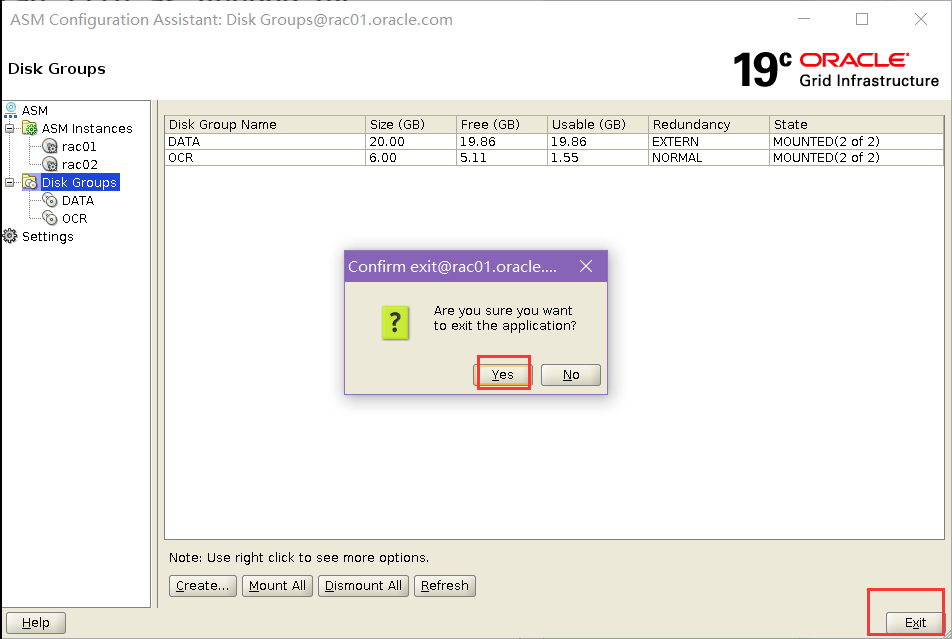

7. Create the disk group for the database

Start asmca

On node 1, run as the grid user

[grid@rac01.oracle.com:/home/grid]$ export DISPLAY=192.168.100.1:0.0

[grid@rac01.oracle.com:/home/grid]$ asmca

Disk Groups create

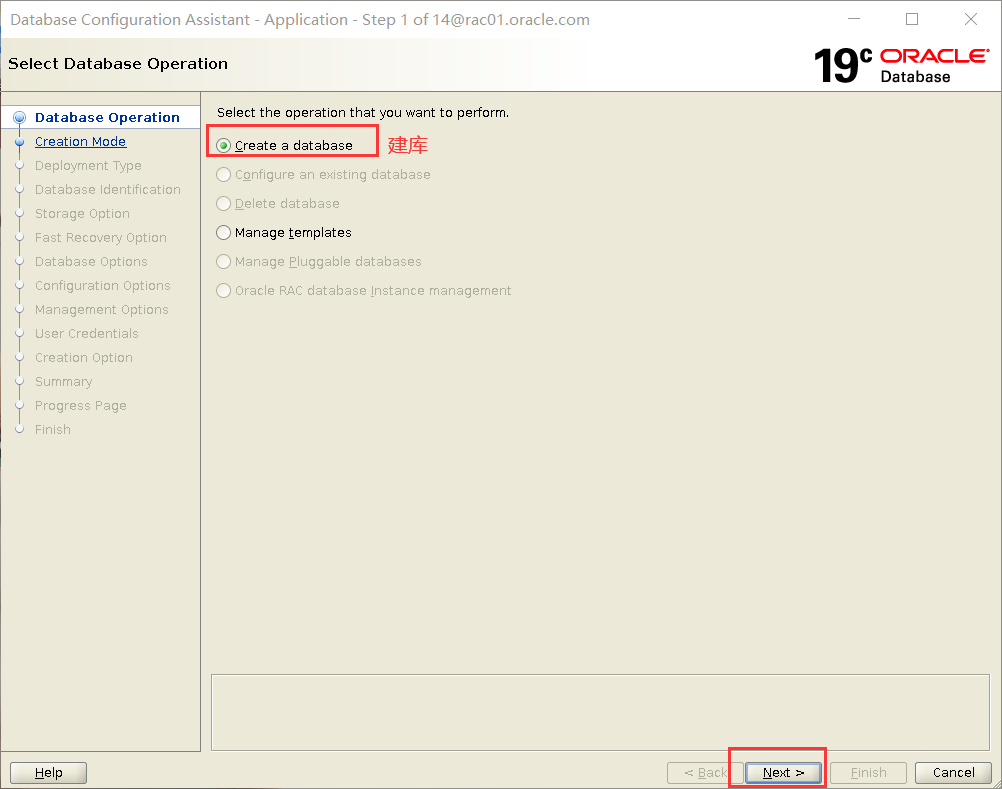

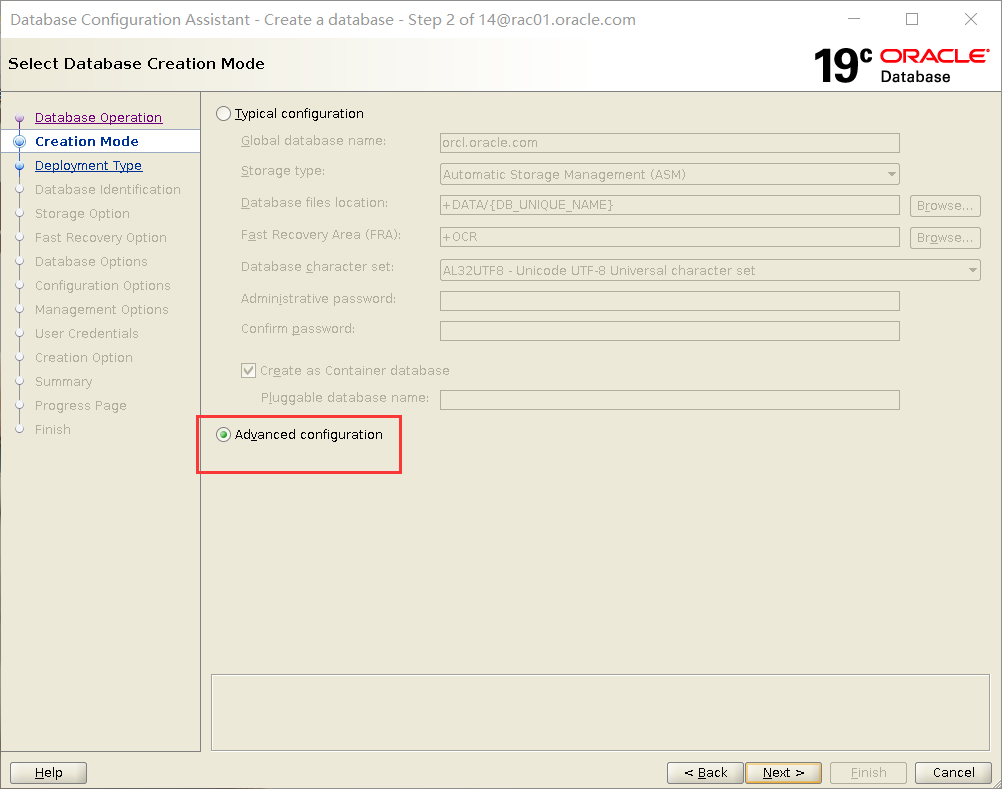

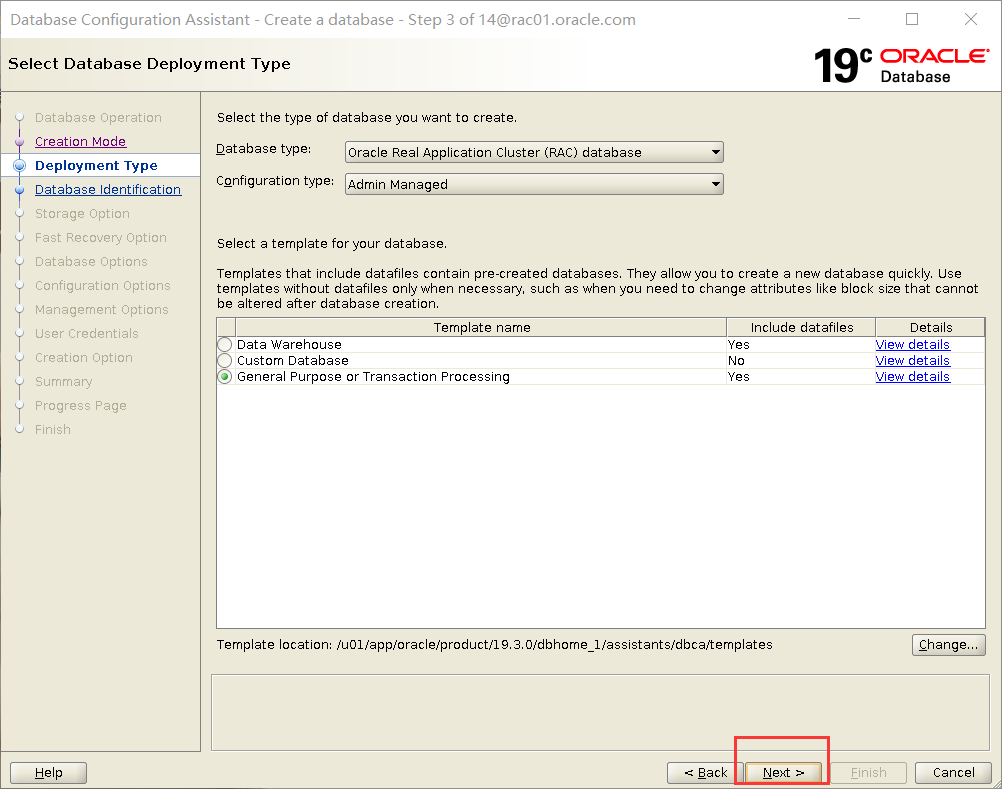

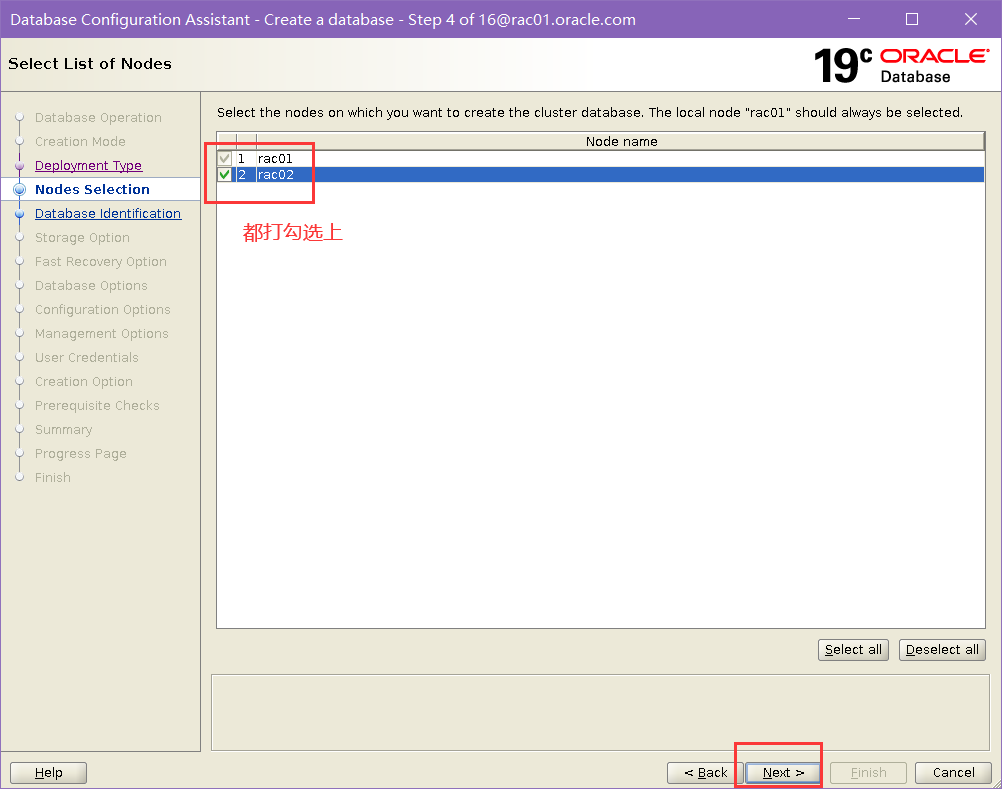

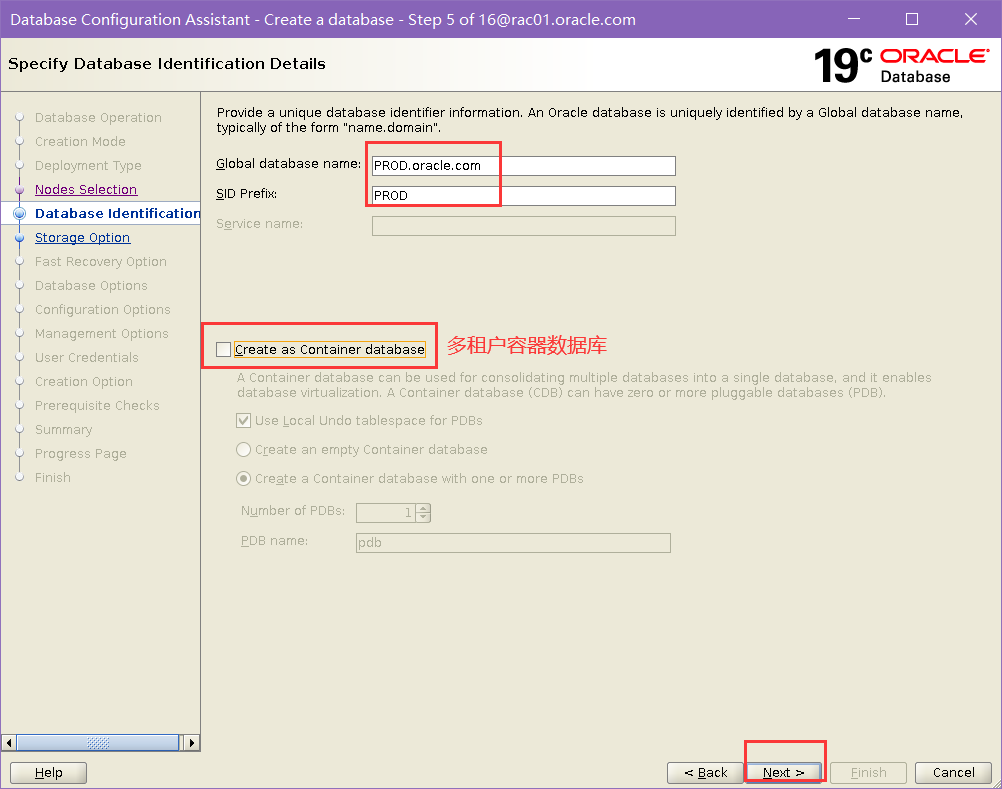

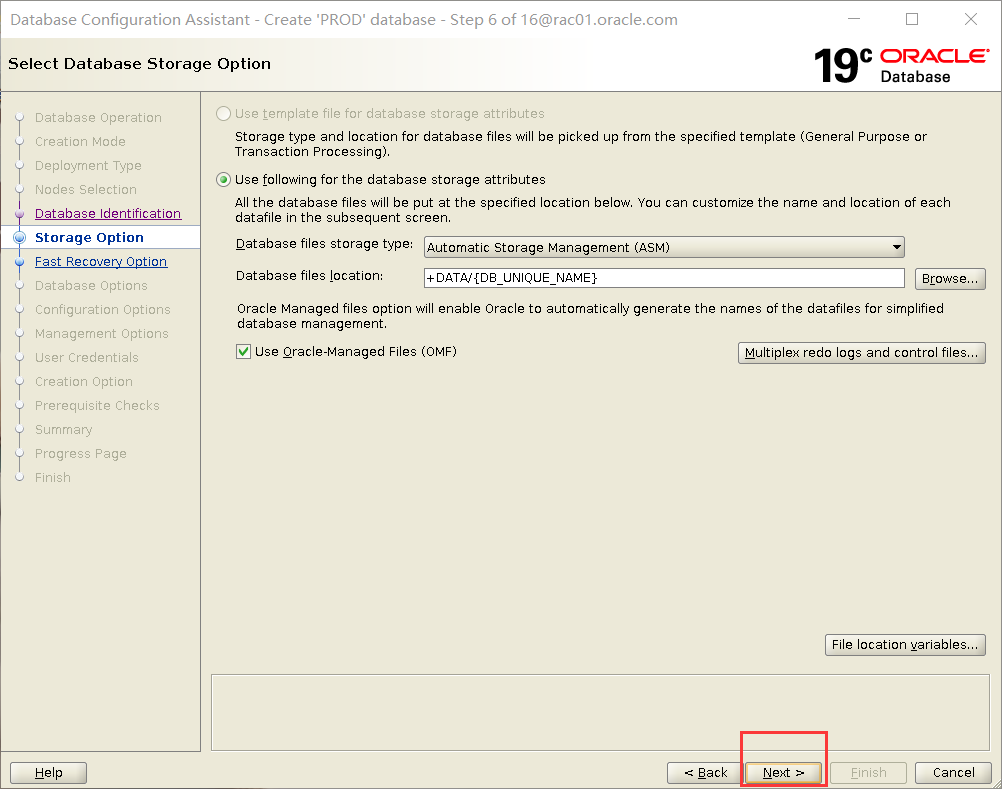

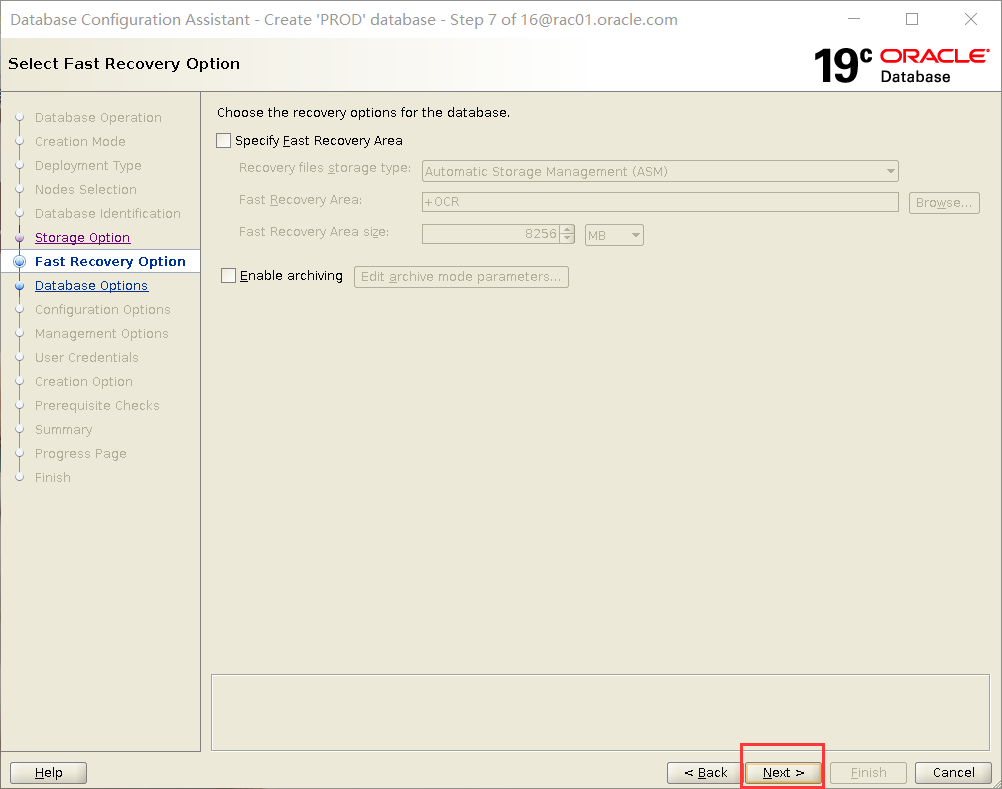

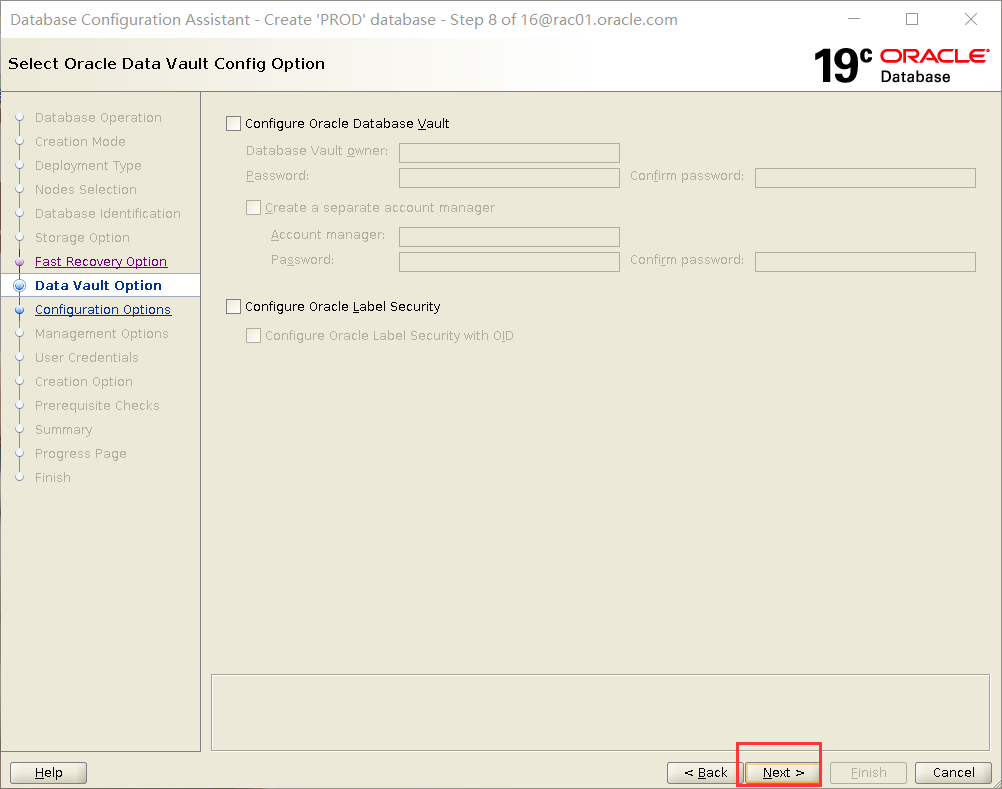

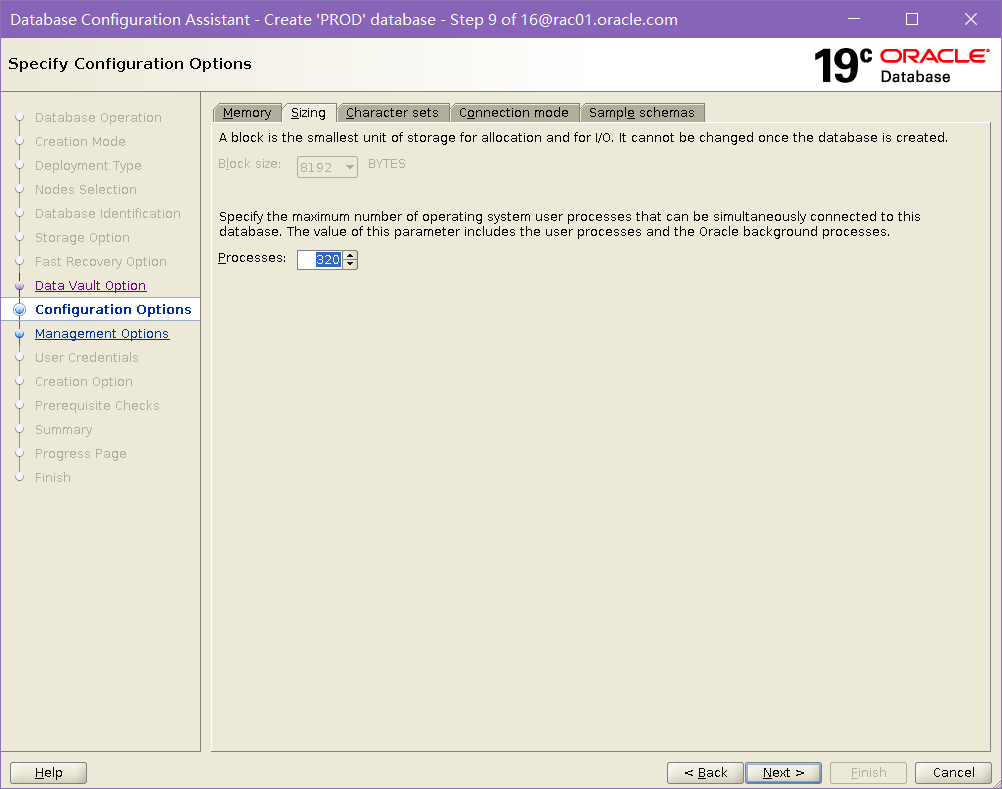

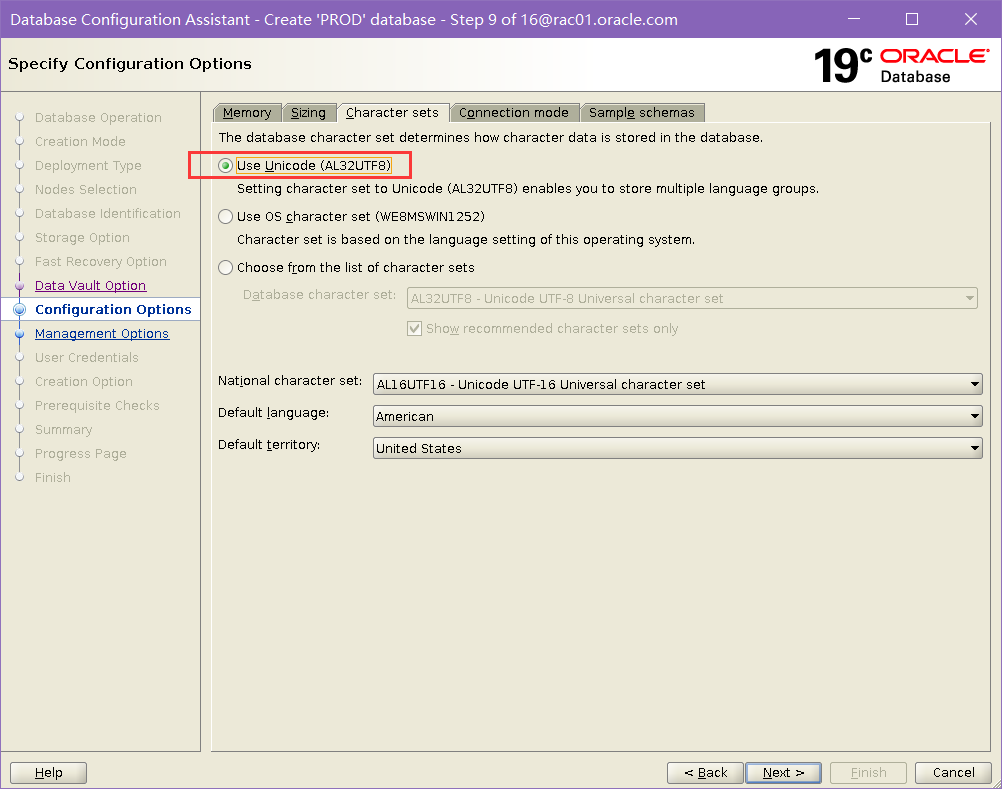

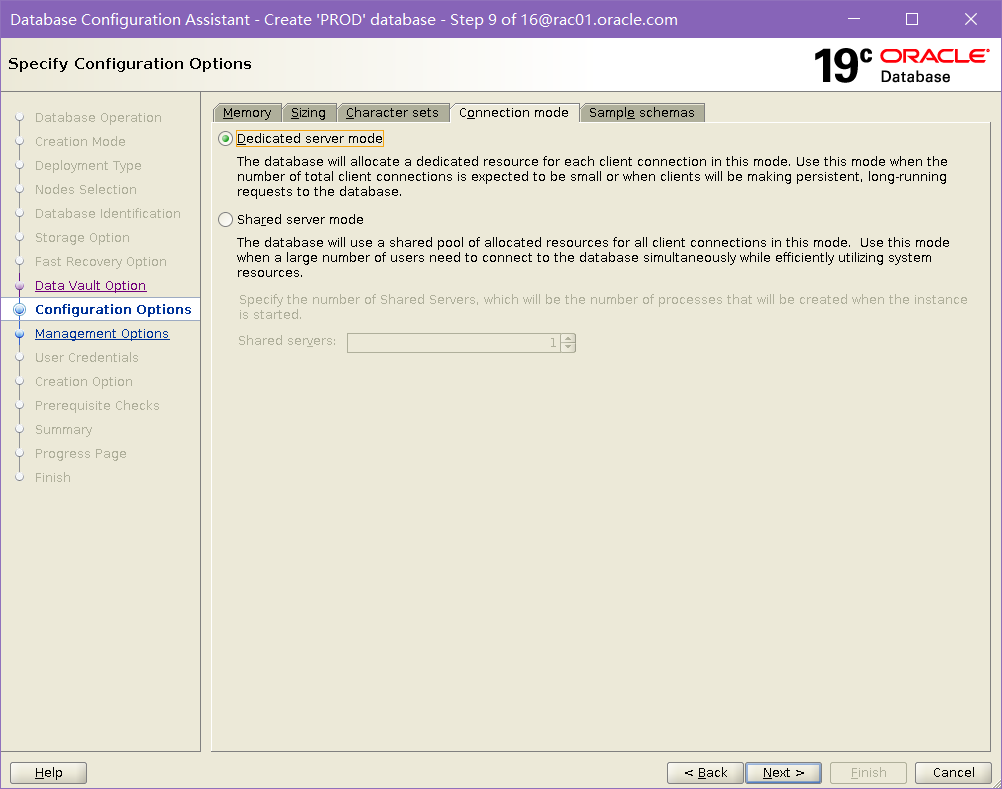

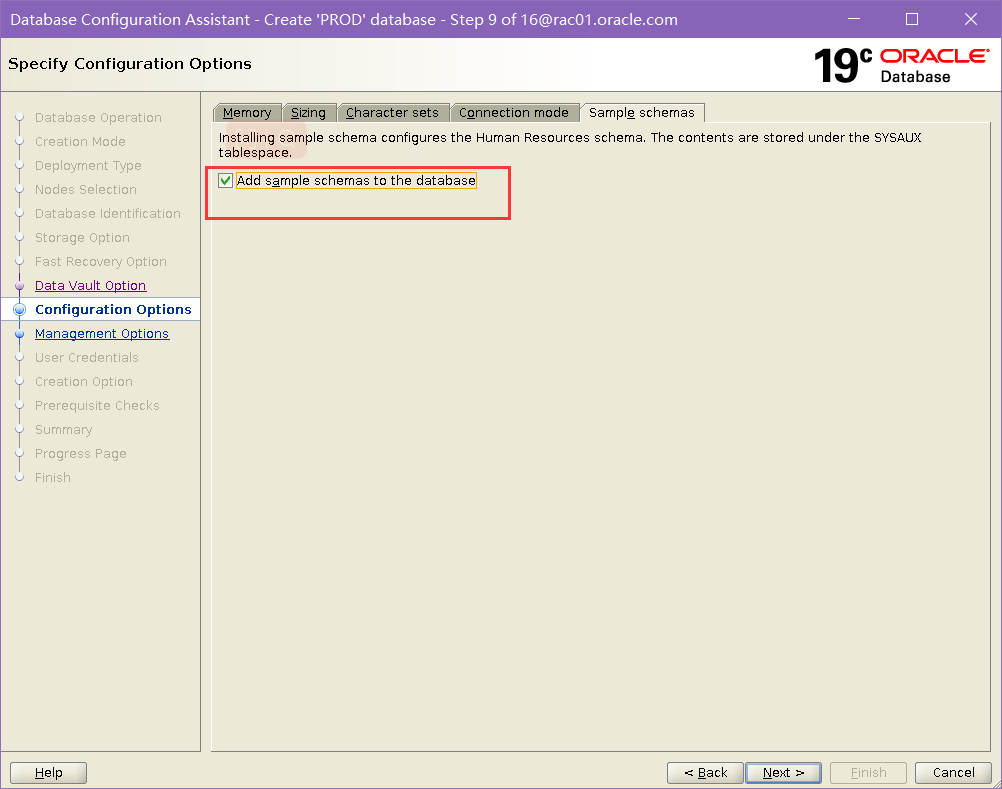

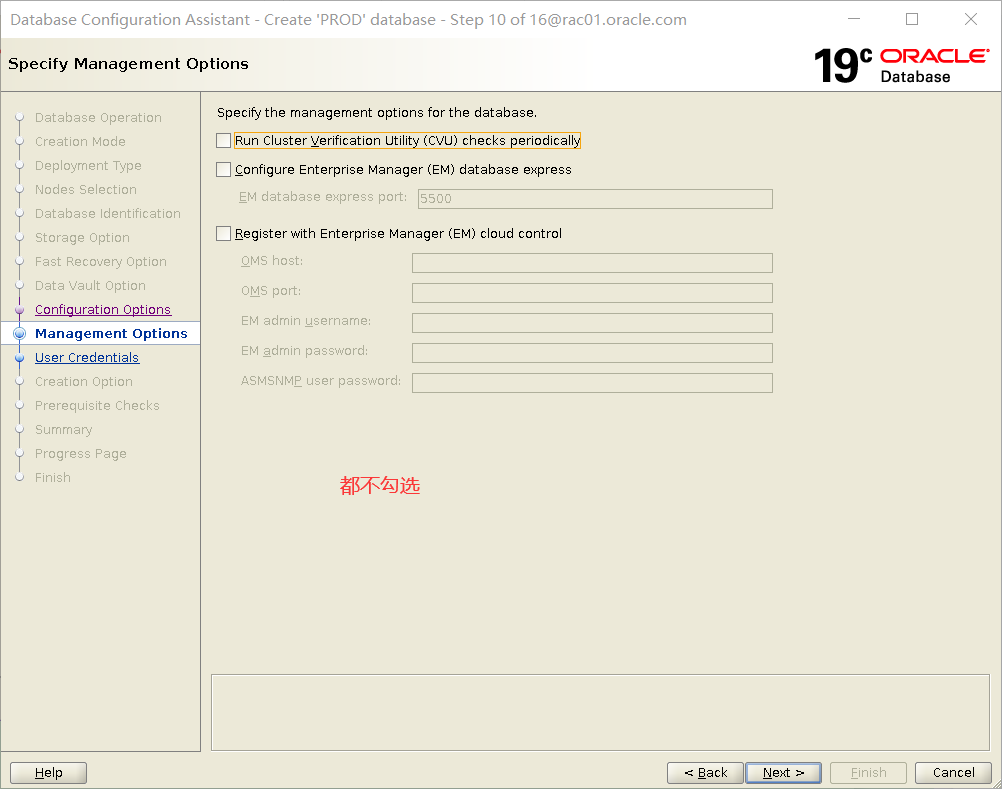

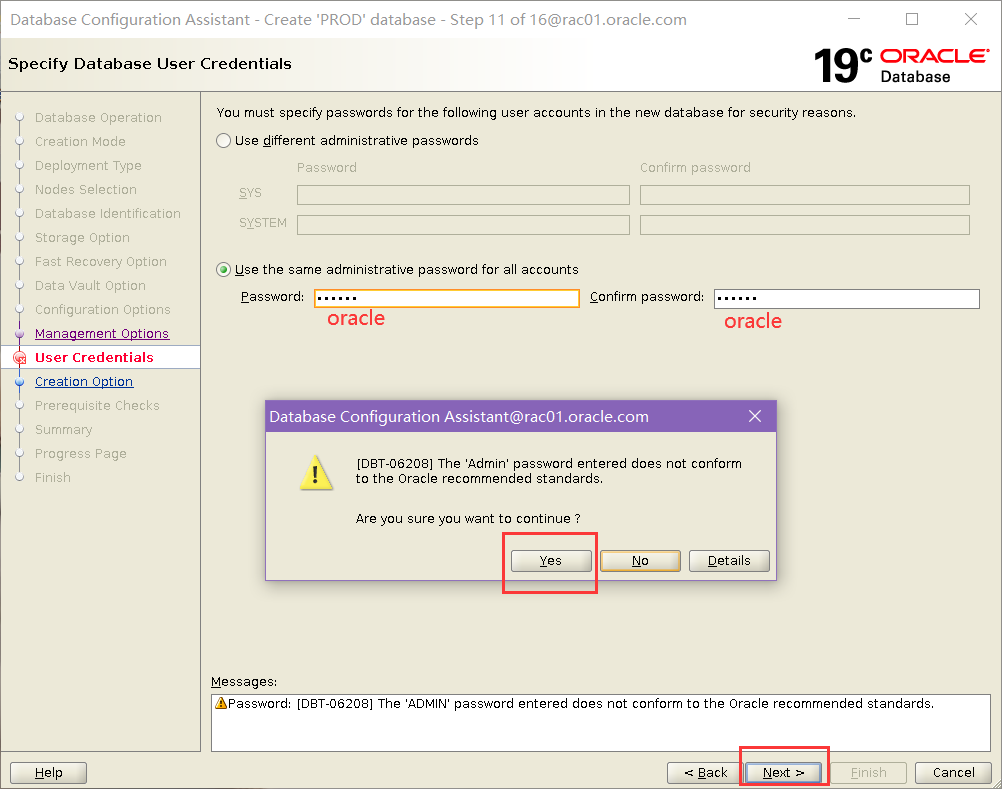

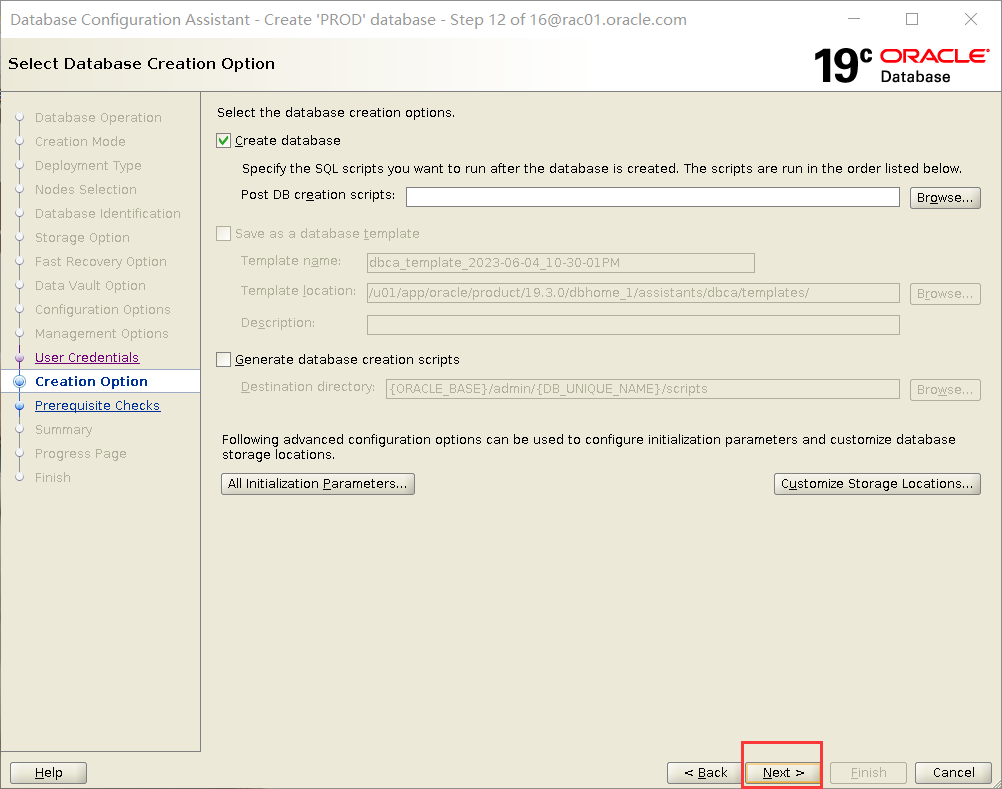

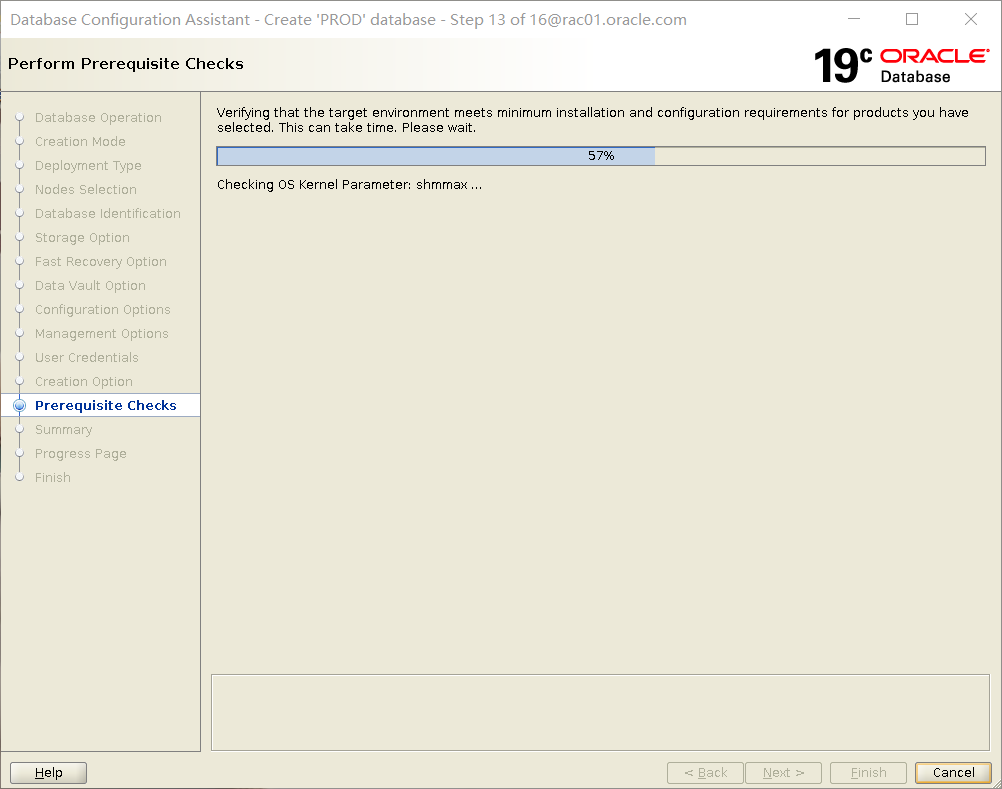

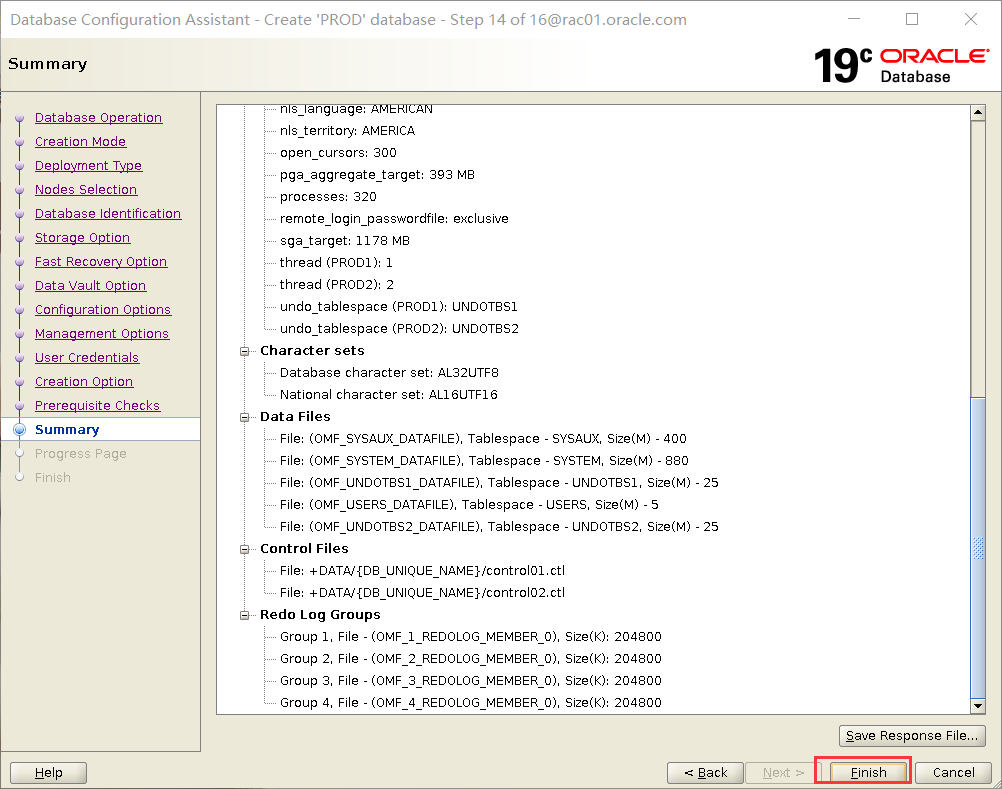

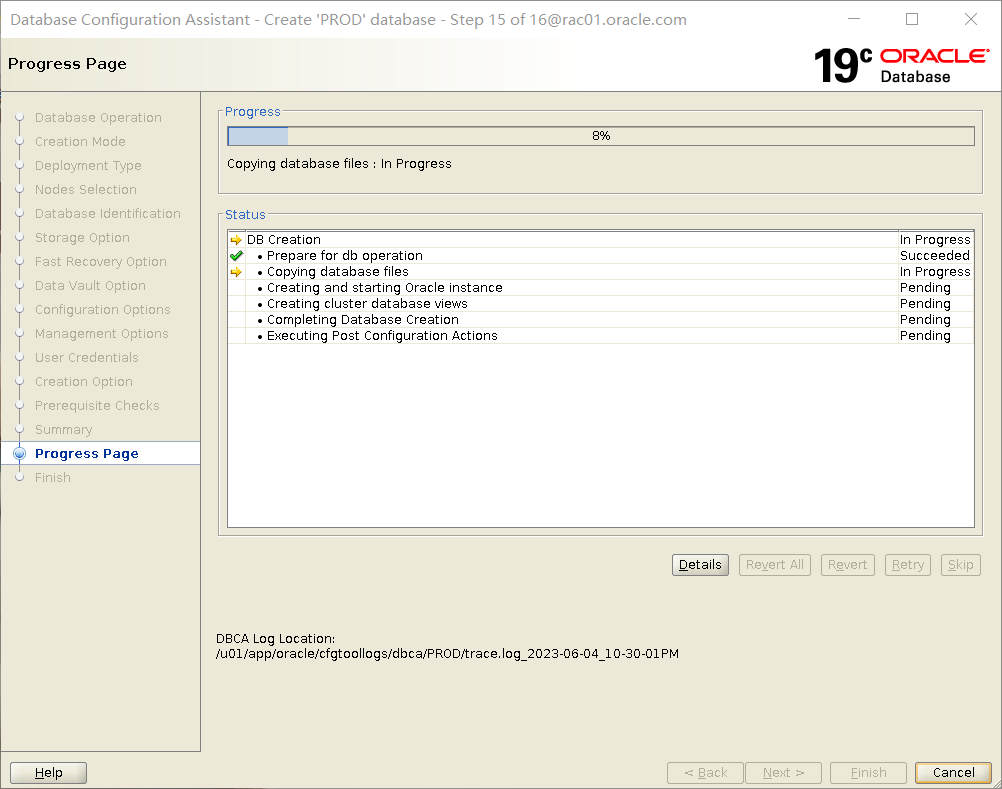

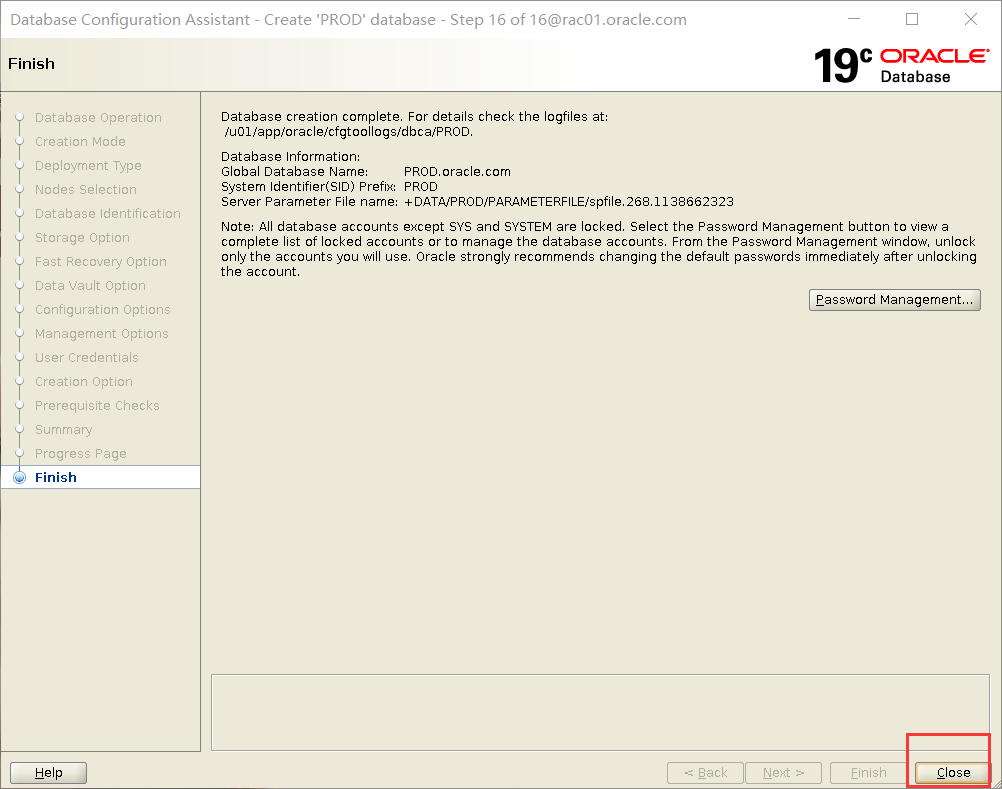

8. Create the database with DBCA

rac01, oracle user: create the database with DBCA

[oracle@rac01.oracle.com:/home/oracle]$ export DISPLAY=192.168.100.1:0.0

[oracle@rac01.oracle.com:/home/oracle]$ dbca

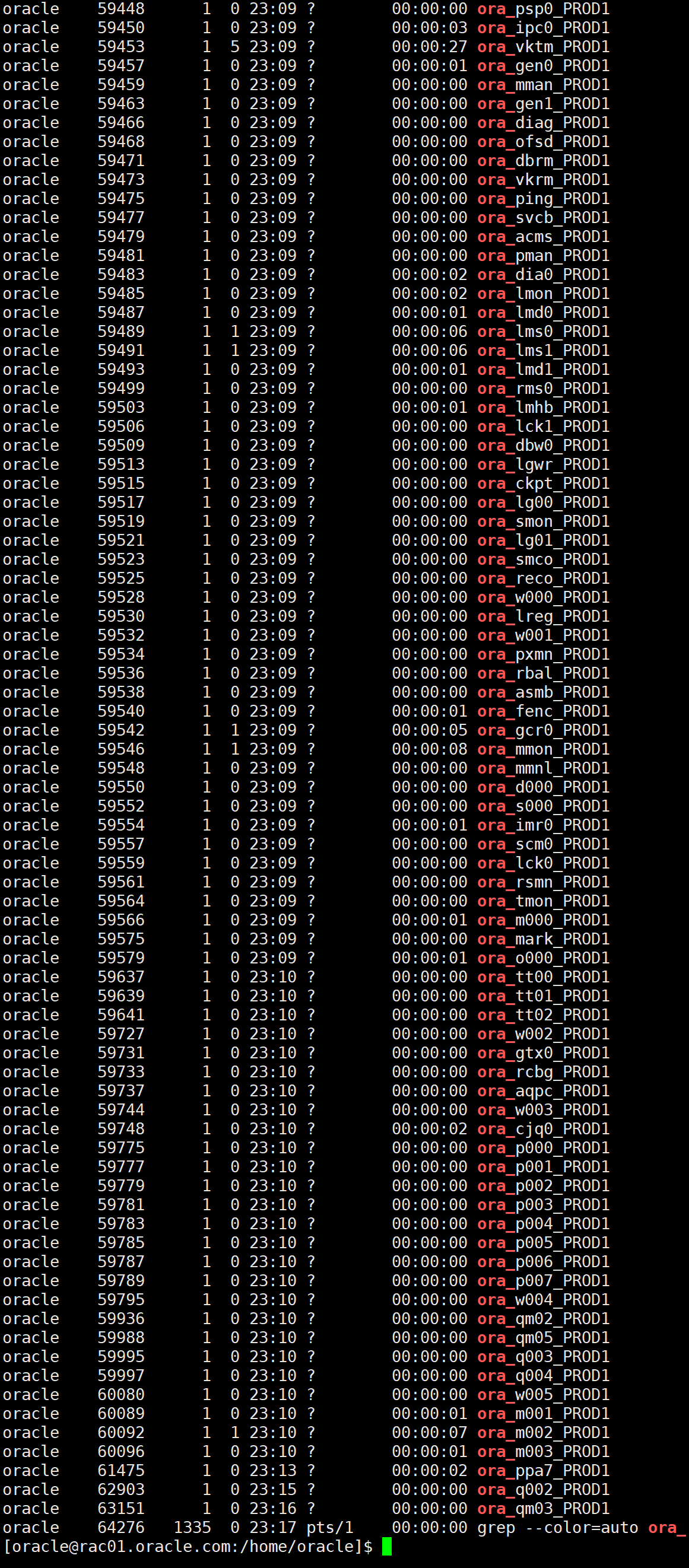

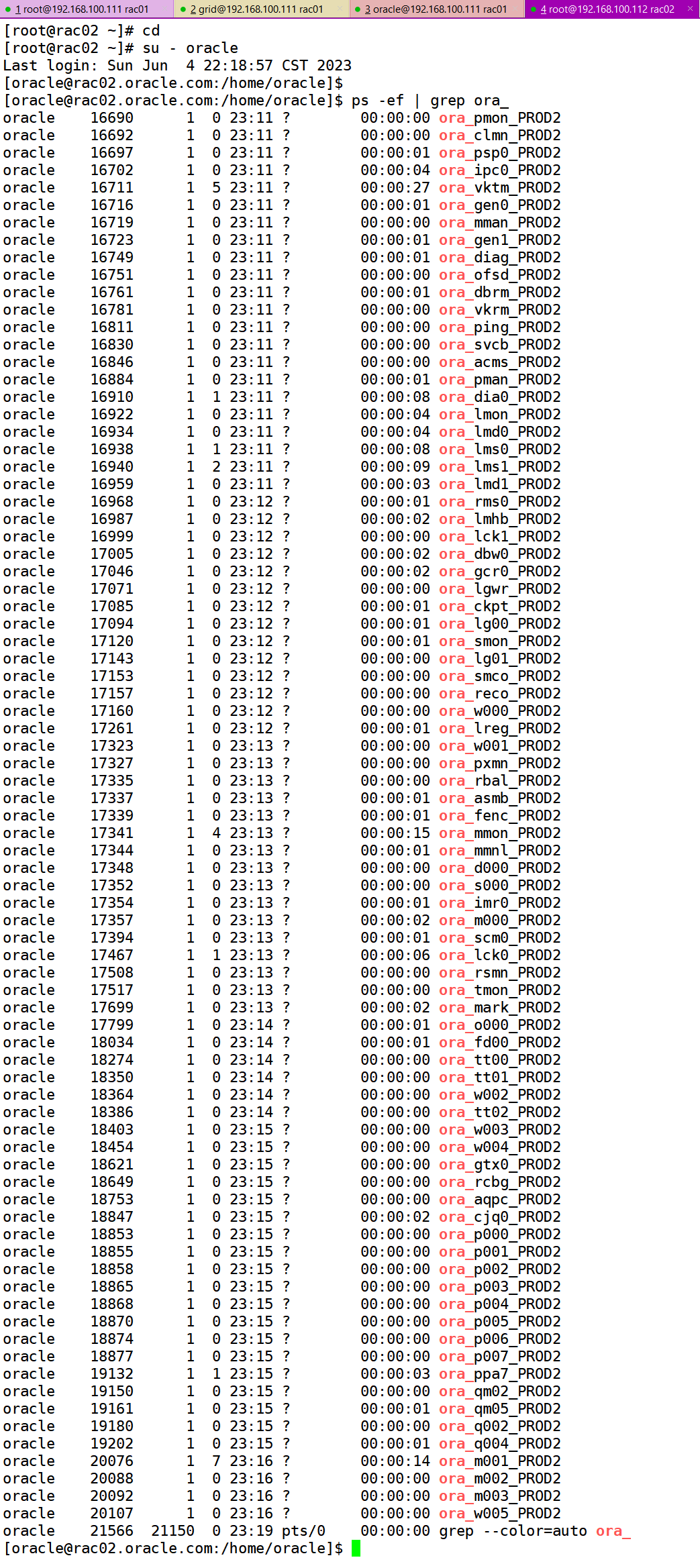

Check the background processes

The environment variables were configured earlier

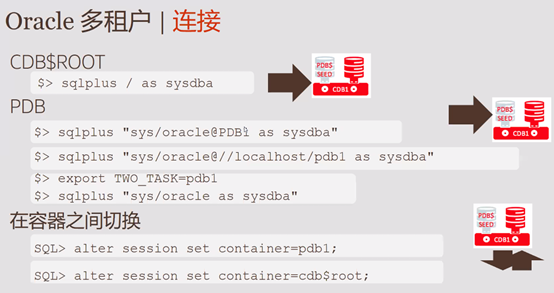

If you created a multitenant container database, test the connections

SQL> startup

ORACLE instance started.

Total System Global Area 1895824672 bytes

Fixed Size 9141536 bytes

Variable Size 1140850688 bytes

Database Buffers 738197504 bytes

Redo Buffers 7634944 bytes

Database mounted.

Database opened.

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 MOUNTED

SQL> select

2 'DB Name: ' ||Sys_Context('Userenv', 'DB_Name')||

3 ' / CDB?: ' ||case

4 when Sys_Context('Userenv', 'CDB_Name') is not null then 'YES'

5 else 'NO'

6 end||

7 ' / Auth-ID: ' ||Sys_Context('Userenv', 'Authenticated_Identity')||

8 ' / Sessn-User: '||Sys_Context('Userenv', 'Session_User')||

9 ' / Container: ' ||Nvl(Sys_Context('Userenv', 'Con_Name'), 'n/a')

10 "Who am I?"

11 from Dual

12 /

Who am I?

--------------------------------------------------------------------------------

DB Name: orcl / CDB?: YES / Auth-ID: oracle / Sessn-User: SYS / Container: CDB$R

OOT

SQL> set linesize 300

SQL> /

Who am I?

------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

DB Name: orcl / CDB?: YES / Auth-ID: oracle / Sessn-User: SYS / Container: CDB$ROOT

SQL>

SQL> alter pluggable database pdb1 open;

Pluggable database altered.

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

2 PDB$SEED READ ONLY NO

3 PDB1 READ WRITE NO

SQL>

(2) Connect to the PDB

Using ORACLE_PDB_SID

export ORACLE_PDB_SID=pdb1

[oracle@oracle19c-rac1:/home/oracle]$ export ORACLE_PDB_SID=pdb1

[oracle@oracle19c-rac1:/home/oracle]$ env|grep PDB

ORACLE_PDB_SID=pdb1

[oracle@oracle19c-rac1:/home/oracle]$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Aug 4 11:08:03 2021

Version 19.11.0.0.0

Copyright (c) 1982, 2020, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.11.0.0.0

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

3 PDB1 READ WRITE NO

SQL>

Using SET CONTAINER

ALTER SESSION SET CONTAINER = PDB1;

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.11.0.0.0

SQL> ALTER SESSION SET CONTAINER = PDB01;

ERROR:

ORA-65011: Pluggable database PDB01 does not exist.

SQL> ALTER SESSION SET CONTAINER = PDB1;

Session altered.

SQL> show pdbs

CON_ID CON_NAME OPEN MODE RESTRICTED

---------- ------------------------------ ---------- ----------

3 PDB1 READ WRITE NO

SQL>

Using a service with tnsnames.ora

[oracle@oracle19c-rac1:/home/oracle]$ lsnrctl status

LSNRCTL for Linux: Version 19.0.0.0.0 - Production on 04-AUG-2021 11:13:08

Copyright (c) 1991, 2021, Oracle. All rights reserved.

Connecting to (ADDRESS=(PROTOCOL=tcp)(HOST=)(PORT=1521))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 19.0.0.0.0 - Production

Start Date 04-AUG-2021 10:36:15

Uptime 0 days 0 hr. 36 min. 54 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/19.3.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/oracle19c-rac1/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.245.141)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.245.143)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcps)(HOST=oracle19c-rac1)(PORT=5500))(Security=(my_wallet_directory=/u01/app/oracle/admin/orcl/xdb_wallet))(Presentation=HTTP)(Session=RAW))

Services Summary...

Service "+ASM" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "+ASM_DATA" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "+ASM_OCRVOTE" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "86b637b62fdf7a65e053f706e80a27ca" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "c8a90ae7cd0a17b8e0538df5a8c0c88c" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "orcl" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "orclXDB" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "pdb1" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

The command completed successfully

tnsnames.ora entry

pdb1 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = oracle19c-rac1)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = pdb1)

)

)

sqlplus pdb1/pdb1@pdb1

show con_name
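The tnsnames.ora entry above has a fixed shape with four variable parts. As a sketch, it could be generated with a hypothetical helper named `make_tns_entry`; the layout mirrors the entry shown above and nothing else is assumed about the environment.

```shell
# make_tns_entry is a hypothetical helper that prints a tnsnames.ora entry.
# $1 = alias, $2 = listener host, $3 = listener port, $4 = service name.
make_tns_entry() {
  cat <<EOF
$1 =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = $2)(PORT = $3))
    (CONNECT_DATA =
      (SERVER = DEDICATED)
      (SERVICE_NAME = $4)
    )
  )
EOF
}

# Reproduce the pdb1 entry from this section.
make_tns_entry pdb1 oracle19c-rac1 1521 pdb1
```

Review the output before appending it to $ORACLE_HOME/network/admin/tnsnames.ora. In a RAC environment the host would typically be the SCAN name rather than a single node, so that either instance can serve the connection.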

TWO_TASK

export TWO_TASK=pdb1

Connection methods tested with TWO_TASK set:

A regular user.

SYS with a password.

SYS without a password.

[oracle@oracle19c-rac1:/home/oracle]$ export TWO_TASK=pdb1

[oracle@oracle19c-rac1:/home/oracle]$ sqlplus sys/oracle as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Aug 4 11:24:30 2021

Version 19.11.0.0.0

Copyright (c) 1982, 2020, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.11.0.0.0

SQL> show con_name

CON_NAME

------------------------------

PDB1

SQL> exit

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.11.0.0.0

[oracle@oracle19c-rac1:/home/oracle]$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Aug 4 11:24:49 2021

Version 19.11.0.0.0

Copyright (c) 1982, 2020, Oracle. All rights reserved.

ERROR:

ORA-01017: invalid username/password; logon denied

Enter user-name: ^C

[oracle@oracle19c-rac1:/home/oracle]$ sqlplus pdb1/pdb1

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Aug 4 11:24:59 2021

Version 19.11.0.0.0

Copyright (c) 1982, 2020, Oracle. All rights reserved.

Last Successful login time: Wed Aug 04 2021 11:23:35 +08:00

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.11.0.0.0

SQL> exit

Disconnected from Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.11.0.0.0

[oracle@oracle19c-rac1:/home/oracle]$ sqlplus pdb1/pdb1 as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Wed Aug 4 11:25:06 2021

Version 19.11.0.0.0

Copyright (c) 1982, 2020, Oracle. All rights reserved.

ERROR:

ORA-01017: invalid username/password; logon denied

Enter user-name: ^C

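Note: with TWO_TASK set, a non-SYS user, or SYS with a password, can log in, but sqlplus / as sysdba is rejected, because the connection goes through the listener, where OS authentication does not apply.

(3) Data files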

SQL> /

CON_ID Con_Name T'space_Na File_Name

---------- ---------- ---------- ----------------------------------------------------------------------------------------------------

1 CDB$ROOT SYSAUX +DATA/ORCL/DATAFILE/sysaux.258.1079636747

1 CDB$ROOT SYSTEM +DATA/ORCL/DATAFILE/system.257.1079636681

1 CDB$ROOT TEMP +DATA/ORCL/TEMPFILE/temp.264.1079636895

1 CDB$ROOT UNDOTBS1 +DATA/ORCL/DATAFILE/undotbs1.259.1079636773

1 CDB$ROOT UNDOTBS2 +DATA/ORCL/DATAFILE/undotbs2.269.1079639099

1 CDB$ROOT USERS +DATA/ORCL/DATAFILE/users.260.1079636775

3 PDB1 SYSAUX +DATA/ORCL/C8A90AE7CD0A17B8E0538DF5A8C0C88C/DATAFILE/sysaux.278.1079646975

3 PDB1 SYSTEM +DATA/ORCL/C8A90AE7CD0A17B8E0538DF5A8C0C88C/DATAFILE/system.277.1079646973

3 PDB1 TEMP +DATA/ORCL/C8A90AE7CD0A17B8E0538DF5A8C0C88C/TEMPFILE/temp.279.1079647223

3 PDB1 UNDOTBS1 +DATA/ORCL/C8A90AE7CD0A17B8E0538DF5A8C0C88C/DATAFILE/undotbs1.276.1079646973

10 rows selected.

SQL> l

1 with Containers as (

2 select PDB_ID Con_ID, PDB_Name Con_Name from DBA_PDBs

3 union

4 select 1 Con_ID, 'CDB$ROOT' Con_Name from Dual)

5 select

6 Con_ID,

7 Con_Name "Con_Name",

8 Tablespace_Name "T'space_Name",

9 File_Name "File_Name"

10 from CDB_Data_Files inner join Containers using (Con_ID)

11 union

12 select

13 Con_ID,

14 Con_Name "Con_Name",

15 Tablespace_Name "T'space_Name",

16 File_Name "File_Name"

17 from CDB_Temp_Files inner join Containers using (Con_ID)

18 order by 1, 3

19*

9. Routine RAC administration commands

9.1. Cluster resource status

[grid@oracle19c-rac1:/home/grid]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE oracle19c-rac1 STABLE

ONLINE ONLINE oracle19c-rac2 STABLE

ora.chad

ONLINE ONLINE oracle19c-rac1 STABLE

ONLINE ONLINE oracle19c-rac2 STABLE

ora.net1.network

ONLINE ONLINE oracle19c-rac1 STABLE

ONLINE ONLINE oracle19c-rac2 STABLE

ora.ons

ONLINE ONLINE oracle19c-rac1 STABLE

ONLINE ONLINE oracle19c-rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE oracle19c-rac1 STABLE

OFFLINE OFFLINE oracle19c-rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE oracle19c-rac1 STABLE

2 ONLINE ONLINE oracle19c-rac2 STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE oracle19c-rac1 STABLE

2 ONLINE ONLINE oracle19c-rac2 STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE oracle19c-rac1 STABLE

ora.OCRVOTE.dg(ora.asmgroup)

1 ONLINE ONLINE oracle19c-rac1 STABLE

2 ONLINE ONLINE oracle19c-rac2 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE oracle19c-rac1 Started,STABLE

2 ONLINE ONLINE oracle19c-rac2 Started,STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE oracle19c-rac1 STABLE

2 ONLINE ONLINE oracle19c-rac2 STABLE

ora.cvu

1 ONLINE ONLINE oracle19c-rac1 STABLE

ora.oracle19c-rac1.vip

1 ONLINE ONLINE oracle19c-rac1 STABLE

ora.oracle19c-rac2.vip

1 ONLINE ONLINE oracle19c-rac2 STABLE

ora.orcl.db

1 ONLINE ONLINE oracle19c-rac1 Open,HOME=/u01/app/o

racle/product/19.3.0

/dbhome_1,STABLE

2 OFFLINE OFFLINE Instance Shutdown,ST

ABLE

ora.qosmserver

1 ONLINE ONLINE oracle19c-rac1 STABLE

ora.scan1.vip

1 ONLINE ONLINE oracle19c-rac1 STABLE

--------------------------------------------------------------------------------

9.2. Cluster service status

[grid@oracle19c-rac1:/home/grid]$ crsctl check cluster -all

**************************************************************

oracle19c-rac1:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

oracle19c-rac2:

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

**************************************************************

[grid@oracle19c-rac1:/home/grid]$ crsctl check crs

CRS-4638: Oracle High Availability Services is online

CRS-4537: Cluster Ready Services is online

CRS-4529: Cluster Synchronization Services is online

CRS-4533: Event Manager is online

[grid@oracle19c-rac1:/home/grid]$
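For monitoring, the `crsctl check crs` output above can be reduced to a single exit status. This is a sketch that assumes every healthy line ends in "is online", as in the output shown; `crs_all_online` is a name invented here.

```shell
# crs_all_online is a hypothetical helper: it reads `crsctl check crs`
# output on stdin and succeeds only if there is at least one CRS- line
# and every CRS- line ends with "is online".
crs_all_online() {
  awk '/^CRS-/ { n++; if ($0 !~ /is online$/) bad++ }
       END { exit (n > 0 && bad == 0) ? 0 : 1 }'
}
```

Example use: `crsctl check crs | crs_all_online || echo "CRS stack is not fully online"`.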

9.3. Database status

[grid@oracle19c-rac1:/home/grid]$ srvctl status database -d orcl

Instance orcl1 is running on node oracle19c-rac1

Instance orcl2 is not running on node oracle19c-rac2

[grid@oracle19c-rac1:/home/grid]$
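In the output above, orcl2 is not running on the second node. A small filter can list such instances; this sketch assumes the message wording shown above, and `down_instances` is a hypothetical name.

```shell
# down_instances is a hypothetical helper: it reads
# `srvctl status database -d <db>` output on stdin and prints
# "<instance> <node>" for every instance reported as not running.
down_instances() {
  awk '/ is not running on node / { print $2, $NF }'
}
```

Example use: `srvctl status database -d orcl | down_instances`. An empty result means every instance is up.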

9.4. Listener status

[grid@oracle19c-rac1:/home/grid]$ lsnrctl status

LSNRCTL for Linux: Version 19.0.0.0.0 - Production on 04-AUG-2021 11:27:21

Copyright (c) 1991, 2021, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER)))

STATUS of the LISTENER

------------------------

Alias LISTENER

Version TNSLSNR for Linux: Version 19.0.0.0.0 - Production

Start Date 04-AUG-2021 10:36:15

Uptime 0 days 0 hr. 51 min. 7 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/19.3.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/oracle19c-rac1/listener/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.245.141)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.245.143)(PORT=1521)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcps)(HOST=oracle19c-rac1)(PORT=5500))(Security=(my_wallet_directory=/u01/app/oracle/admin/orcl/xdb_wallet))(Presentation=HTTP)(Session=RAW))

Services Summary...

Service "+ASM" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "+ASM_DATA" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "+ASM_OCRVOTE" has 1 instance(s).

Instance "+ASM1", status READY, has 1 handler(s) for this service...

Service "86b637b62fdf7a65e053f706e80a27ca" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "c8a90ae7cd0a17b8e0538df5a8c0c88c" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "orcl" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "orclXDB" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "pdb1" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

The command completed successfully

[grid@oracle19c-rac1:/home/grid]$ srvctl status listener

Listener LISTENER is enabled

Listener LISTENER is running on node(s): oracle19c-rac2,oracle19c-rac1

[grid@oracle19c-rac1:/home/grid]$

9.5. SCAN status

[grid@oracle19c-rac1:/home/grid]$ srvctl status scan

SCAN VIP scan1 is enabled

SCAN VIP scan1 is running on node oracle19c-rac1

[grid@oracle19c-rac1:/home/grid]$ srvctl status scan_listener

SCAN Listener LISTENER_SCAN1 is enabled

SCAN listener LISTENER_SCAN1 is running on node oracle19c-rac1

[grid@oracle19c-rac1:/home/grid]$ lsnrctl status LISTENER_SCAN1

LSNRCTL for Linux: Version 19.0.0.0.0 - Production on 04-AUG-2021 11:28:18

Copyright (c) 1991, 2021, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_SCAN1)))

STATUS of the LISTENER

------------------------

Alias LISTENER_SCAN1

Version TNSLSNR for Linux: Version 19.0.0.0.0 - Production

Start Date 04-AUG-2021 10:36:09

Uptime 0 days 0 hr. 52 min. 10 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/19.3.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/oracle19c-rac1/listener_scan1/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER_SCAN1)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=192.168.245.145)(PORT=1521)))

Services Summary...

Service "86b637b62fdf7a65e053f706e80a27ca" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "c8a90ae7cd0a17b8e0538df5a8c0c88c" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "orcl" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "orclXDB" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "pdb1" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

The command completed successfully

[grid@oracle19c-rac1:/home/grid]$

9.6. nodeapps status

[grid@oracle19c-rac1:/home/grid]$ srvctl status nodeapps

VIP 192.168.245.143 is enabled

VIP 192.168.245.143 is running on node: oracle19c-rac1

VIP 192.168.245.144 is enabled

VIP 192.168.245.144 is running on node: oracle19c-rac2

Network is enabled

Network is running on node: oracle19c-rac1

Network is running on node: oracle19c-rac2

ONS is enabled

ONS daemon is running on node: oracle19c-rac1

ONS daemon is running on node: oracle19c-rac2

[grid@oracle19c-rac1:/home/grid]$

9.7. VIP status

[grid@oracle19c-rac1:/home/grid]$ srvctl status vip -node oracle19c-rac1

VIP 192.168.245.143 is enabled

VIP 192.168.245.143 is running on node: oracle19c-rac1

[grid@oracle19c-rac1:/home/grid]$ srvctl status vip -node oracle19c-rac2

VIP 192.168.245.144 is enabled

VIP 192.168.245.144 is running on node: oracle19c-rac2

[grid@oracle19c-rac1:/home/grid]$

9.8. Database configuration

[grid@oracle19c-rac1:/home/grid]$ srvctl config database -d orcl

Database unique name: orcl

Database name: orcl

Oracle home: /u01/app/oracle/product/19.3.0/dbhome_1

Oracle user: oracle

Spfile: +DATA/ORCL/PARAMETERFILE/spfile.272.1079640143

Password file: +DATA/ORCL/PASSWORD/pwdorcl.256.1079636555

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools:

Disk Groups: DATA

Mount point paths:

Services:

Type: RAC

Start concurrency:

Stop concurrency:

OSDBA group: dba

OSOPER group: oper

Database instances: orcl1,orcl2

Configured nodes: oracle19c-rac1,oracle19c-rac2

CSS critical: no

CPU count: 0

Memory target: 0

Maximum memory: 0

Default network number for database services:

Database is administrator managed

[grid@oracle19c-rac1:/home/grid]$ crsctl status res ora.orcl.db -p |grep -i auto

AUTO_START=restore

MANAGEMENT_POLICY=AUTOMATIC

START_DEPENDENCIES_RTE_INTERNAL=<xml><Cond name="ASMClientMode">False</Cond><Cond name="ASMmode">remote</Cond><Arg name="dg" type="ResList">ora.DATA.dg</Arg><Arg name="acfs_or_nfs" type="ResList"></Arg><Cond name="OHResExist">False</Cond><Cond name="DATABASE_TYPE">RAC</Cond><Cond name="MANAGEMENT_POLICY">AUTOMATIC</Cond><Arg name="acfs_and_nfs" type="ResList"></Arg></xml>

[grid@oracle19c-rac1:/home/grid]$

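AUTO_START controls whether the database resource is started automatically when Clusterware starts; restore means the resource returns to the state it was in when Clusterware last stopped.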
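The AUTO_START attribute can be extracted from the resource profile and turned into a readable description. This is a sketch: both helper names are invented here, and the three values handled (always, restore, never) are the documented settings for this attribute.

```shell
# auto_start_value: read `crsctl status res <name> -p` output on stdin
# and print only the value of the AUTO_START attribute.
auto_start_value() {
  sed -n 's/^AUTO_START=//p'
}

# describe_auto_start: map an AUTO_START value to a short description.
describe_auto_start() {
  case "$1" in
    always)  echo "start whenever Clusterware starts" ;;
    restore) echo "return to the state before Clusterware stopped" ;;
    never)   echo "never start automatically" ;;
    *)       echo "unknown value: $1" ;;
  esac
}
```

Example use: `describe_auto_start "$(crsctl status res ora.orcl.db -p | auto_start_value)"`.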

9.9. OCR

[grid@oracle19c-rac1:/home/grid]$ ocrcheck

Status of Oracle Cluster Registry is as follows :

Version : 4

Total space (kbytes) : 491684

Used space (kbytes) : 84364

Available space (kbytes) : 407320

ID : 865494789

Device/File Name : +OCRVOTE

Device/File integrity check succeeded

Device/File not configured

Device/File not configured

Device/File not configured

Device/File not configured

Cluster registry integrity check succeeded

Logical corruption check bypassed due to non-privileged user

[grid@oracle19c-rac1:/home/grid]$ ocrconfig -showbackup

PROT-24: Auto backups for the Oracle Cluster Registry are not available

PROT-25: Manual backups for the Oracle Cluster Registry are not available

9.10.VOTEDISK

[grid@oracle19c-rac1:/home/grid]$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE b6fda7690d0e4fcdbfadc303580fb08d (/dev/asm_ocr01) [OCRVOTE]

2. ONLINE 8e02bf80da2e4fb8bf50d145561261c9 (/dev/asm_ocr02) [OCRVOTE]

3. ONLINE bca68836396f4fcdbf307746a22941c5 (/dev/asm_ocr03) [OCRVOTE]

Located 3 voting disk(s).

[grid@oracle19c-rac1:/home/grid]$
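A node must be able to access a strict majority of the voting files to stay in the cluster, so the listing above is worth checking automatically. This sketch assumes the column layout shown above; `votedisk_quorum` is a hypothetical name.

```shell
# votedisk_quorum is a hypothetical helper: it reads
# `crsctl query css votedisk` output on stdin, prints how many voting
# files are ONLINE, and succeeds only if more than half of them are.
votedisk_quorum() {
  awk '$1 ~ /^[0-9]+\.$/ { total++; if ($2 == "ONLINE") online++ }
       END {
         printf "%d/%d online\n", online, total
         exit (total > 0 && online * 2 > total) ? 0 : 1
       }'
}
```

Example use: `crsctl query css votedisk | votedisk_quorum || echo "voting file majority lost"`.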

9.11. GI version

[grid@oracle19c-rac1:/home/grid]$ crsctl query crs releaseversion

Oracle High Availability Services release version on the local node is [19.0.0.0.0]

[grid@oracle19c-rac1:/home/grid]$ crsctl query crs activeversion

Oracle Clusterware active version on the cluster is [19.0.0.0.0]

[grid@oracle19c-rac1:/home/grid]$

9.12.ASM

[grid@oracle19c-rac1:/home/grid]$ asmcmd

ASMCMD> lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED EXTERN N 512 512 4096 4194304 20480 14492 0 14492 0 N DATA/

MOUNTED NORMAL N 512 512 4096 4194304 6144 5228 2048 1590 0 Y OCRVOTE/

ASMCMD> lsof

DB_Name Instance_Name Path

+ASM +ASM1 +OCRVOTE.255.1079463727

orcl orcl1 +DATA/ORCL/86B637B62FE07A65E053F706E80A27CA/DATAFILE/sysaux.266.1079638609

orcl orcl1 +DATA/ORCL/86B637B62FE07A65E053F706E80A27CA/DATAFILE/system.265.1079638609

orcl orcl1 +DATA/ORCL/86B637B62FE07A65E053F706E80A27CA/DATAFILE/undotbs1.267.1079638609

orcl orcl1 +DATA/ORCL/C8A7183AA0841E82E0538DF5A8C0A5A9/TEMPFILE/temp.268.1079638657

orcl orcl1 +DATA/ORCL/C8A90AE7CD0A17B8E0538DF5A8C0C88C/DATAFILE/sysaux.278.1079646975

orcl orcl1 +DATA/ORCL/C8A90AE7CD0A17B8E0538DF5A8C0C88C/DATAFILE/system.277.1079646973

orcl orcl1 +DATA/ORCL/C8A90AE7CD0A17B8E0538DF5A8C0C88C/DATAFILE/undotbs1.276.1079646973

orcl orcl1 +DATA/ORCL/C8A90AE7CD0A17B8E0538DF5A8C0C88C/TEMPFILE/temp.279.1079647223

orcl orcl1 +DATA/ORCL/CONTROLFILE/current.261.1079636859

orcl orcl1 +DATA/ORCL/DATAFILE/sysaux.258.1079636747

orcl orcl1 +DATA/ORCL/DATAFILE/system.257.1079636681

orcl orcl1 +DATA/ORCL/DATAFILE/undotbs1.259.1079636773

orcl orcl1 +DATA/ORCL/DATAFILE/undotbs2.269.1079639099

orcl orcl1 +DATA/ORCL/DATAFILE/users.260.1079636775

orcl orcl1 +DATA/ORCL/ONLINELOG/group_1.263.1079636863

orcl orcl1 +DATA/ORCL/ONLINELOG/group_2.262.1079636863

orcl orcl1 +DATA/ORCL/ONLINELOG/group_3.270.1079640129

orcl orcl1 +DATA/ORCL/ONLINELOG/group_4.271.1079640135

orcl orcl1 +DATA/ORCL/TEMPFILE/temp.264.1079636895

ASMCMD> lsdsk

Path

/dev/asm_data01

/dev/asm_data02

/dev/asm_ocr01

/dev/asm_ocr02

/dev/asm_ocr03
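The `lsdg` listing above gives Total_MB and Free_MB per disk group, from which the used percentage follows as (Total_MB - Free_MB) / Total_MB. This is a sketch assuming the 14-column layout shown above for mounted disk groups; `dg_usage` is a hypothetical name.

```shell
# dg_usage is a hypothetical helper: it reads `asmcmd lsdg` output on
# stdin and prints "<diskgroup> <percent used>" for each mounted group.
# Column 8 is Total_MB, column 9 is Free_MB, column 14 is the name.
dg_usage() {
  awk '$1 == "MOUNTED" && NF == 14 && $8 > 0 {
         name = $14
         sub(/\/$/, "", name)          # drop the trailing slash from the name
         printf "%s %.1f\n", name, ($8 - $9) * 100 / $8
       }'
}
```

Example use, as the grid user: `asmcmd lsdg | dg_usage`. The figure is raw space; for a NORMAL redundancy group such as OCRVOTE, Usable_file_MB is the better guide to how much can still be stored.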

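9.13. Starting and stopping RAC

In the commands below, stop|start means run the command with either stop or start. racdb and racdb1 are example names; in this environment the database is orcl, with instances orcl1 and orcl2.

-- Stop or start a single instance

$ srvctl stop|start instance -d racdb -i racdb1

-- Stop or start all instances

$ srvctl stop|start database -d orcl

-- Stop or start CRS

$ crsctl stop|start crs

-- Stop or start the cluster services

$ crsctl stop|start cluster -all

crsctl start|stop crs manages a single node (the local one).

crsctl start|stop cluster [-all for all nodes] can manage multiple nodes.

crsctl start|stop crs manages the whole CRS stack, including the OHASD process.

crsctl start|stop cluster does not include the OHASD process; OHASD must already be running before this command can be used.

srvctl stop|start database stops or starts all instances and their enabled services.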

9.14. Node status

Instance status on each node

SQL> SELECT inst_id

,instance_number inst_no

,instance_name inst_name

,parallel

,STATUS

,database_status db_status

,active_state STATE

,host_name host

FROM gv$instance;

INST_ID INST_NO INST_NAME PARALLEL

---------- ---------- ------------------------------------------------ ---------

STATUS

------------------------------------

DB_STATUS STATE

--------------------------------------------------- ---------------------------

HOST

--------------------------------------------------------------------------------

1 1 orcl1 YES

OPEN

ACTIVE NORMAL

oracle19c-rac1

9.15. Relocate the SCAN listener

srvctl relocate scan_listener -i 1 -n oracle19c-rac2

9.16. Relocate a VIP

srvctl config network

srvctl relocate vip -vip oracle19c-rac2-vip -node oracle19c-rac2