
Introduction

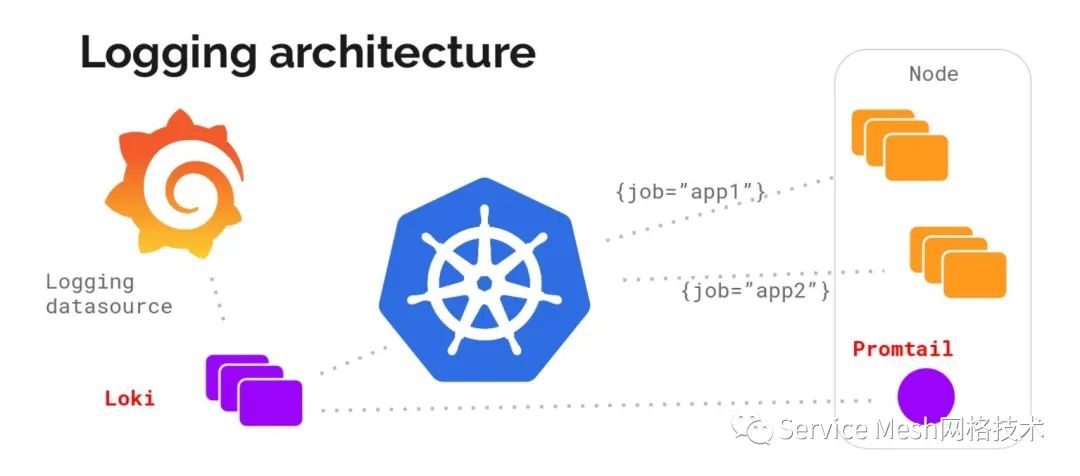

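Grafana Loki is a horizontally scalable, highly available, multi-tenant log aggregation system. It covers log collection, storage, visualization, and alerting.

Unlike other logging systems, Loki is built around the idea of indexing only the labels attached to logs and leaving the raw log messages unindexed. This makes Loki cheaper to operate and more efficient.

Loki is especially well suited to storing Kubernetes Pod logs. Metadata such as Pod labels is scraped and indexed automatically.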

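Comparison with EFK

The EFK stack (Elasticsearch, Fluentd/Filebeat, Kibana) is used to collect, visualize, and query logs from a variety of sources. Data in Elasticsearch is stored on disk as unstructured JSON objects. Both the keys of each object and the contents of each key are indexed. The data can then be queried either with a JSON object that defines the query or with the Lucene query language.

By comparison, Loki in single-binary mode can store data on disk, but in horizontally scalable mode the data lives in a cloud storage system such as S3, GCS, or Cassandra. Logs are stored as plain text together with a set of label names and values, and only the label pairs are indexed. This trade-off makes Loki cheaper to run than a full index and lets developers log aggressively from their applications. Logs in Loki are queried with LogQL. The cost of this design is that a LogQL query that filters on content (that is, on text within the log lines) has to load every chunk in the search window that matches the labels defined in the query.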
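The trade-off can be illustrated with a toy model. This is only a sketch of the idea, not Loki's storage engine: the class `TinyLogStore` and its methods are hypothetical names invented for this example. Label pairs go into an index, log lines are kept as plain text, and a content filter has to scan every line of every stream the labels select.

```python
from collections import defaultdict


class TinyLogStore:
    """Toy model: index label pairs only, keep log lines as unindexed text."""

    def __init__(self):
        self.chunks = {}               # stream key -> list of raw log lines
        self.index = defaultdict(set)  # (label, value) -> set of stream keys

    def push(self, labels, line):
        key = tuple(sorted(labels.items()))
        if key not in self.chunks:
            self.chunks[key] = []
            for pair in key:
                self.index[pair].add(key)
        self.chunks[key].append(line)

    def query(self, matchers, contains=None):
        # Step 1: cheap index lookup, intersecting the streams of each label pair.
        sets = [self.index.get(pair, set()) for pair in matchers.items()]
        streams = set.intersection(*sets) if sets else set(self.chunks)
        # Step 2: the content filter scans every line of every matched stream.
        result = []
        for key in sorted(streams):
            for line in self.chunks[key]:
                if contains is None or contains in line:
                    result.append(line)
        return result


store = TinyLogStore()
store.push({"app": "api", "env": "prod"}, "GET /health 200")
store.push({"app": "api", "env": "prod"}, "GET /orders 500 timeout")
store.push({"app": "web", "env": "prod"}, "timeout talking to api")
print(store.query({"app": "api"}, contains="timeout"))
```

The narrower the label selector, the fewer lines step 2 has to read, which is why label design matters so much for query speed in this model.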

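In addition, metrics and alerts can only reveal problems that were anticipated in advance; unknown problems still have to be tracked down in the logs. Keeping logs and metrics in two separate systems makes troubleshooting harder. Our logging and metrics systems need to be linked, and Loki, which takes its inspiration from Prometheus, solves exactly this problem.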

Loki architecture

The overall architecture of Loki is as follows:

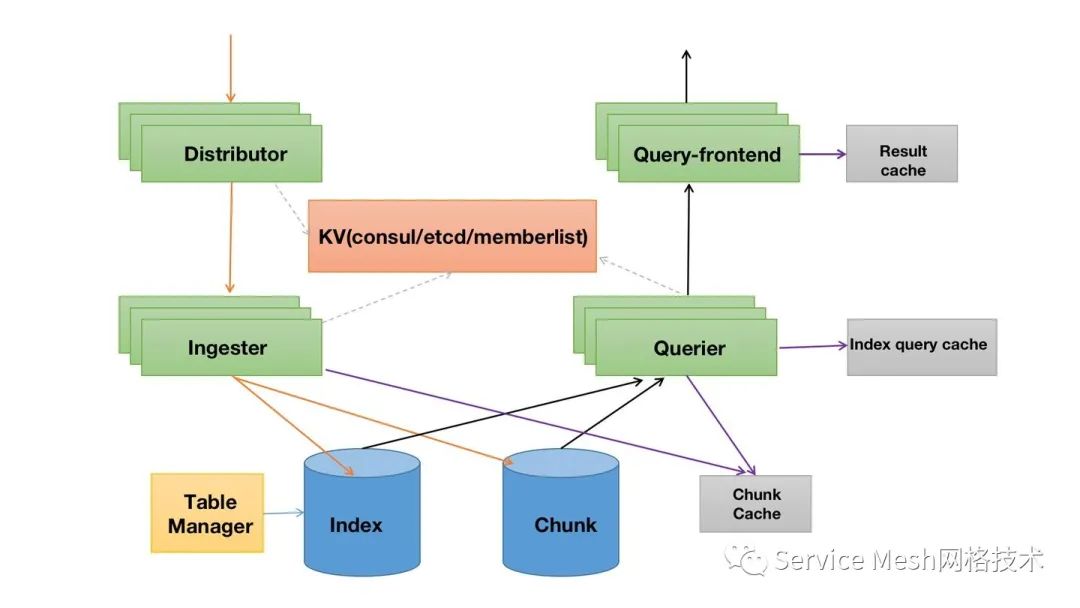

The core components are described below.

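Distributor

-- The Distributor service handles write streams coming from clients. It is the first stop on the write path for log data. Once the Distributor receives a set of streams, it validates each stream and checks that it is within the configured tenant (or global) limits. Valid chunks are then split into batches and sent to multiple ingesters in parallel.

The Distributor uses consistent hashing together with a configurable replication factor to decide which ingester instances should receive a given stream. A stream is a set of logs associated with a tenant and a unique label set. The stream is hashed using the tenant ID and the label set, and the hash is then used to look up the instances the stream is sent to.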
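A minimal sketch of that lookup, assuming a simplified token ring. The `Ring` class, the SHA-256 based `_hash32` helper, and the way the stream key is built are illustrative assumptions, not Loki's actual implementation; only the ideas (tokens, tenant ID plus label set as hash input, replication factor) come from the description above.

```python
import bisect
import hashlib


def _hash32(text):
    # Stable 32-bit hash for the sketch; Loki's real hash function is not shown here.
    return int.from_bytes(hashlib.sha256(text.encode()).digest()[:4], "big")


class Ring:
    def __init__(self, instances, num_tokens=512, replication_factor=3):
        self.replication_factor = replication_factor
        self.instance_count = len(set(instances))
        # Every instance owns num_tokens pseudo-random positions on the ring.
        self.tokens = sorted(
            (_hash32(f"{name}-{i}"), name)
            for name in instances
            for i in range(num_tokens)
        )
        self.keys = [token for token, _ in self.tokens]

    def lookup(self, tenant_id, labels):
        # The stream identity is the tenant ID plus the sorted label set.
        label_part = ",".join(f"{k}={v}" for k, v in sorted(labels.items()))
        position = bisect.bisect_right(self.keys, _hash32(f"{tenant_id}/{label_part}"))
        wanted = min(self.replication_factor, self.instance_count)
        owners = []
        # Walk clockwise, collecting distinct instances until we have enough replicas.
        while len(owners) < wanted:
            _, name = self.tokens[position % len(self.tokens)]
            if name not in owners:
                owners.append(name)
            position += 1
        return owners


ring = Ring(["ingester-0", "ingester-1", "ingester-2", "ingester-3"])
print(ring.lookup("tenant-a", {"app": "api", "env": "prod"}))
```

Because the label set is sorted before hashing, the same stream always maps to the same ingesters regardless of the order in which a client lists its labels.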

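Ingester

-- The Ingester service writes log data to the long-term storage backend (DynamoDB, S3, Cassandra, and so on) on the write path, and returns log data for in-memory queries on the read path.

The Ingester contains a lifecycler, which manages the lifecycle of the ingester in the hash ring. Each ingester is in one of the following states: PENDING, JOINING, ACTIVE, LEAVING, or UNHEALTHY.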

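Query frontend

-- The Query frontend is an optional service that exposes the Querier's API endpoints and can be used to speed up the read path. When a Query frontend is in place, incoming query requests should be directed to it rather than to the Queriers. The Querier service is still required in the cluster to execute the actual queries.

Internally, the Query frontend performs some query adjustments and holds queries in an internal queue. In this setup the Queriers act as workers: they pull jobs from the queue, execute them, and return the results to the Query frontend for aggregation. Each Querier must be configured with the Query frontend address (via the -querier.frontend-address CLI flag) so that it can connect to the Query frontend.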
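The queue-and-worker relationship can be sketched as a per-tenant queue that workers drain in round-robin order. This is an illustration of the general idea only: the `FairQueue` class is hypothetical, and the real scheduling logic inside the Query frontend is not described in this article. The limit is named after the `max_outstanding_per_tenant` setting that appears in the configuration later on.

```python
from collections import OrderedDict, deque


class FairQueue:
    """Per-tenant FIFO queues, served round-robin so no tenant starves the others."""

    def __init__(self, max_outstanding_per_tenant=200):
        self.max_outstanding = max_outstanding_per_tenant
        self.queues = OrderedDict()  # tenant -> deque of pending queries

    def enqueue(self, tenant, query):
        pending = self.queues.setdefault(tenant, deque())
        if len(pending) >= self.max_outstanding:
            return False  # the caller would reject the request
        pending.append(query)
        return True

    def dequeue(self):
        """Called by a querier worker to pull one unit of work."""
        if not self.queues:
            return None
        tenant, pending = next(iter(self.queues.items()))
        query = pending.popleft()
        # Move the tenant to the back of the line, or drop it once it is drained.
        del self.queues[tenant]
        if pending:
            self.queues[tenant] = pending
        return tenant, query


queue = FairQueue()
queue.enqueue("tenant-a", "query 1")
queue.enqueue("tenant-a", "query 2")
queue.enqueue("tenant-b", "query 3")
print([queue.dequeue() for _ in range(3)])
```

Even though tenant-a queued two queries first, tenant-b is served after tenant-a's first query rather than after both.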

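The Query frontend is stateless. However, because of how the internal queue works, it is recommended to run a few Query frontend replicas to get the full benefit of fair scheduling. In most cases two replicas are enough.

Querier

-- The Querier handles queries written in the LogQL query language, fetching logs from both the ingesters and long-term storage.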
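Since every stream is written to several ingesters (the replication factor), a Querier that reads from all of them and from the store can see the same entry more than once. Below is a minimal sketch of merging such results and dropping entries whose timestamp, label set, and line are all identical. The function name `merge_dedupe` and the tuple layout are assumptions made for this example, not Loki's internal code.

```python
import heapq


def merge_dedupe(*sources):
    """Merge time-sorted entry lists and drop exact duplicates.

    Each entry is (timestamp_ns, labels, line), where labels is a tuple of
    (name, value) pairs so that the whole entry is hashable.
    """
    merged = []
    current_ts = None
    seen_at_ts = set()
    for entry in heapq.merge(*sources, key=lambda e: e[0]):
        if entry[0] != current_ts:
            # New timestamp: duplicates can only occur within the same nanosecond.
            current_ts = entry[0]
            seen_at_ts = set()
        if entry in seen_at_ts:
            continue
        seen_at_ts.add(entry)
        merged.append(entry)
    return merged


labels = (("app", "api"),)
ingester_1 = [(1, labels, "a"), (2, labels, "b")]
ingester_2 = [(1, labels, "a"), (2, labels, "b"), (2, labels, "c")]
store = [(0, labels, "old")]
print(merge_dedupe(ingester_1, ingester_2, store))
```

Two different lines that share a timestamp are both kept; only entries that match on all three fields are collapsed.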

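The Querier first queries all ingesters for in-memory data and then falls back to running the same query against the backend store. Because of the replication factor, the Querier may receive duplicate data. To resolve this, the Querier internally deduplicates data that has the same nanosecond timestamp, label set, and log message.

Chunk Store

-- The Chunk Store is Loki's long-term data store, designed to support interactive queries and sustained writes without the need for background maintenance tasks. It consists of:

- An index for the chunks. This index can be backed by:
  - Amazon DynamoDB
  - Google Bigtable
  - Apache Cassandra
- A key-value (KV) store for the chunk data itself, which can be:
  - Amazon DynamoDB
  - Google Bigtable
  - Apache Cassandra
  - Amazon S3
  - Google Cloud Storage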

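A Loki deployment can also include the ruler component, which handles log-based alerting, and the table-manager, which creates periodic tables before their time period begins and deletes them once their data falls outside the retention period.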
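The table-manager's job can be sketched as simple arithmetic over time periods. The sketch below assumes that a periodic table is named by appending the period number (Unix time divided by the period length) to a prefix, and that a table is kept as long as any part of its time range is still inside the retention window. Both are simplifying assumptions for illustration, and `table_for` and `plan_tables` are hypothetical helper names. The default values mirror the `cassandra_table` prefix, the 168h period, the 168h retention, and the 3h creation grace period used in the configuration in the deployment section.

```python
PERIOD_S = 168 * 3600  # one table per 168h


def table_for(ts, prefix="cassandra_table", period_s=PERIOD_S):
    """Name of the table that holds data for Unix timestamp ts (assumed scheme)."""
    return f"{prefix}{ts // period_s}"


def plan_tables(now, existing, prefix="cassandra_table", period_s=PERIOD_S,
                retention_s=PERIOD_S, grace_s=3 * 3600):
    """Return (tables to create, tables to delete) at time now."""
    first = (now - retention_s) // period_s  # oldest period still within retention
    last = (now + grace_s) // period_s       # create the next table ahead of time
    wanted = {f"{prefix}{n}" for n in range(first, last + 1)}
    to_create = sorted(wanted - set(existing))
    to_delete = sorted(set(existing) - wanted)
    return to_create, to_delete


now = PERIOD_S * 2701 - 3600  # one hour before a period boundary
print(plan_tables(now, ["cassandra_table2698", "cassandra_table2699", "cassandra_table2700"]))
```

One hour before the boundary, the next period's table is already requested (because of the grace period), while the table that has aged out of retention is scheduled for deletion.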

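Deployment

Loki's microservices deployment mode involves quite a few components. We run it on Kubernetes in production; sensitive information has been removed from the manifests below.

We chose S3 for chunk storage and Cassandra for index storage.

1. Create an S3 bucket, then add the access key and secret key to the configuration file below. Setting up the Cassandra cluster is not covered here.

2. Deploy the Loki configuration file: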

    apiVersion: v1
    data:
      config.yaml: |
        chunk_store_config:
          chunk_cache_config:
            memcached:
              batch_size: 100
              parallelism: 100
            memcached_client:
              consistent_hash: true
              host: memcached.loki.svc.cluster.local
              service: memcached-client
          max_look_back_period: 0
          write_dedupe_cache_config:
            memcached:
              batch_size: 100
              parallelism: 100
            memcached_client:
              consistent_hash: true
              host: memcached-index-writes.loki.svc.cluster.local
              service: memcached-client
        auth_enabled: false
        distributor:
          ring:
            kvstore:
              store: memberlist
        frontend:
          compress_responses: true
          log_queries_longer_than: 5s
          max_outstanding_per_tenant: 200
        frontend_worker:
          frontend_address: query-frontend.loki.svc.cluster.local:9095
          grpc_client_config:
            max_send_msg_size: 1.048576e+08
          parallelism: 2
        ingester:
          chunk_block_size: 262144
          chunk_idle_period: 15m
          lifecycler:
            heartbeat_period: 5s
            interface_names:
            - eth0
            join_after: 30s
            num_tokens: 512
            ring:
              kvstore:
                store: memberlist
              replication_factor: 3
          max_transfer_retries: 0
        ingester_client:
          grpc_client_config:
            max_recv_msg_size: 6.7108864e+07
          remote_timeout: 1s
        limits_config:
          enforce_metric_name: false
          ingestion_burst_size_mb: 20
          ingestion_rate_mb: 10
          ingestion_rate_strategy: global
          max_cache_freshness_per_query: 10m
          max_global_streams_per_user: 10000
          max_query_length: 12000h
          max_query_parallelism: 16
          max_streams_per_user: 0
          reject_old_samples: true
          reject_old_samples_max_age: 168h
        querier:
          query_ingesters_within: 2h
        query_range:
          align_queries_with_step: true
          cache_results: true
          max_retries: 5
          results_cache:
            cache:
              memcached_client:
                consistent_hash: true
                host: memcached-frontend.loki.svc.cluster.local
                max_idle_conns: 16
                service: memcached-client
                timeout: 500ms
                update_interval: 1m
          split_queries_by_interval: 30m
        ruler: {}
        schema_config:
          configs:
          - from: "2020-05-15"
            index:
              period: 168h
              prefix: cassandra_table
            object_store: s3
            schema: v11
            store: cassandra
        server:
          graceful_shutdown_timeout: 5s
          grpc_server_max_concurrent_streams: 1000
          grpc_server_max_recv_msg_size: 1.048576e+08
          grpc_server_max_send_msg_size: 1.048576e+08
          http_listen_port: 3100
          http_server_idle_timeout: 120s
          http_server_write_timeout: 1m
        storage_config:
          cassandra:
            username: loki-superuser
            password: xxx
            addresses: loki-dc1-all-pods-service.cass-operator.svc.cluster.local
            auth: true
            keyspace: lokiindex
          aws:
            bucketnames: xx
            endpoint: s3.amazonaws.com
            region: ap-southeast-1
            access_key_id: xx
            secret_access_key: xx
            s3forcepathstyle: false
          index_queries_cache_config:
            memcached:
              batch_size: 100
              parallelism: 100
            memcached_client:
              consistent_hash: true
              host: memcached-index-queries.loki.svc.cluster.local
              service: memcached-client
        memberlist:
          abort_if_cluster_join_fails: false
          bind_port: 7946
          join_members:
          - loki-gossip-ring.loki.svc.cluster.local:7946
          max_join_backoff: 1m
          max_join_retries: 10
          min_join_backoff: 1s
        table_manager:
          creation_grace_period: 3h
          poll_interval: 10m
          retention_deletes_enabled: true
          retention_period: 168h
    kind: ConfigMap
    metadata:
      name: loki
      namespace: loki
    ---
    apiVersion: v1
    data:
      overrides.yaml: |
        overrides: {}
    kind: ConfigMap
    metadata:
      name: overrides
      namespace: loki

3. Deploy the four memcached instances that Loki depends on.

memcached-frontend.yaml:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      labels:
        app: memcached-frontend
      name: memcached-frontend
      namespace: loki
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: memcached-frontend
      serviceName: memcached-frontend
      template:
        metadata:
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "9150"
          labels:
            app: memcached-frontend
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: memcached-frontend
                topologyKey: kubernetes.io/hostname
          containers:
          - args:
            - -m 1024
            - -I 5m
            - -c 1024
            - -v
            image: memcached:1.5.17-alpine
            imagePullPolicy: IfNotPresent
            name: memcached
            ports:
            - containerPort: 11211
              name: client
            resources:
              limits:
                cpu: "3"
                memory: 1536Mi
              requests:
                cpu: 500m
                memory: 1329Mi
          - args:
            - --memcached.address=localhost:11211
            - --web.listen-address=0.0.0.0:9150
            image: prom/memcached-exporter:v0.6.0
            imagePullPolicy: IfNotPresent
            name: exporter
            ports:
            - containerPort: 9150
              name: http-metrics
      updateStrategy:
        type: RollingUpdate
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: memcached-frontend
      name: memcached-frontend
      namespace: loki
    spec:
      ports:
      - name: memcached-client
        port: 11211
        targetPort: 11211
      selector:
        app: memcached-frontend

memcached-index-queries.yaml:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      labels:
        app: memcached-index-queries
      name: memcached-index-queries
      namespace: loki
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: memcached-index-queries
      serviceName: memcached-index-queries
      template:
        metadata:
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "9150"
          labels:
            app: memcached-index-queries
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: memcached-index-queries
                topologyKey: kubernetes.io/hostname
          containers:
          - args:
            - -m 1024
            - -I 5m
            - -c 1024
            - -v
            image: memcached:1.5.17-alpine
            imagePullPolicy: IfNotPresent
            name: memcached
            ports:
            - containerPort: 11211
              name: client
            resources:
              limits:
                cpu: "3"
                memory: 1536Mi
              requests:
                cpu: 500m
                memory: 1329Mi
          - args:
            - --memcached.address=localhost:11211
            - --web.listen-address=0.0.0.0:9150
            image: prom/memcached-exporter:v0.6.0
            imagePullPolicy: IfNotPresent
            name: exporter
            ports:
            - containerPort: 9150
              name: http-metrics
      updateStrategy:
        type: RollingUpdate
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: memcached-index-queries
      name: memcached-index-queries
      namespace: loki
    spec:
      clusterIP: None
      ports:
      - name: memcached-client
        port: 11211
        targetPort: 11211
      selector:
        app: memcached-index-queries

memcached-index-writes.yaml:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      labels:
        app: memcached-index-writes
      name: memcached-index-writes
      namespace: loki
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: memcached-index-writes
      serviceName: memcached-index-writes
      template:
        metadata:
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "9150"
          labels:
            app: memcached-index-writes
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: memcached-index-writes
                topologyKey: kubernetes.io/hostname
          containers:
          - args:
            - -m 1024
            - -I 1m
            - -c 1024
            - -v
            image: memcached:1.5.17-alpine
            imagePullPolicy: IfNotPresent
            name: memcached
            ports:
            - containerPort: 11211
              name: client
            resources:
              limits:
                cpu: "3"
                memory: 1536Mi
              requests:
                cpu: 500m
                memory: 1329Mi
          - args:
            - --memcached.address=localhost:11211
            - --web.listen-address=0.0.0.0:9150
            image: prom/memcached-exporter:v0.6.0
            imagePullPolicy: IfNotPresent
            name: exporter
            ports:
            - containerPort: 9150
              name: http-metrics
      updateStrategy:
        type: RollingUpdate
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: memcached-index-writes
      name: memcached-index-writes
      namespace: loki
    spec:
      clusterIP: None
      ports:
      - name: memcached-client
        port: 11211
        targetPort: 11211
      selector:
        app: memcached-index-writes

memcached.yaml:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      labels:
        app: memcached
      name: memcached
      namespace: loki
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: memcached
      serviceName: memcached
      template:
        metadata:
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "9150"
          labels:
            app: memcached
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: memcached
                topologyKey: kubernetes.io/hostname
          containers:
          - args:
            - -m 4096
            - -I 2m
            - -c 1024
            - -v
            image: memcached:1.5.17-alpine
            imagePullPolicy: IfNotPresent
            name: memcached
            ports:
            - containerPort: 11211
              name: client
            resources:
              limits:
                cpu: "3"
                memory: 6Gi
              requests:
                cpu: 500m
                memory: 5016Mi
          - args:
            - --memcached.address=localhost:11211
            - --web.listen-address=0.0.0.0:9150
            image: prom/memcached-exporter:v0.6.0
            imagePullPolicy: IfNotPresent
            name: exporter
            ports:
            - containerPort: 9150
              name: http-metrics
      updateStrategy:
        type: RollingUpdate
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: memcached
      name: memcached
      namespace: loki
    spec:
      clusterIP: None
      ports:
      - name: memcached-client
        port: 11211
        targetPort: 11211
      selector:
        app: memcached

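The role of each of the four memcached instances can be read off the Loki configuration file and the architecture diagram: memcached is the chunk cache (chunk_cache_config), memcached-index-writes is the write dedupe cache (write_dedupe_cache_config), memcached-index-queries is the index query cache (index_queries_cache_config), and memcached-frontend is the query results cache (results_cache).

4. In Loki, the ring is a space divided into smaller segments by tokens. Each segment belongs to a single ingester and is used to shard series/logs across multiple ingesters. Besides its tokens, each instance also has an ID, an address, and a last-heartbeat timestamp that is updated periodically. This lets other components (distributors and queriers) discover which ingesters are available and healthy.

The ring can be backed by Consul, etcd, or memberlist (gossip). To avoid unnecessary dependencies, we chose memberlist.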
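How a component might decide which ingesters are usable from that ring data can be sketched as follows. The `InstanceDesc` dataclass and the `usable_instances` function are hypothetical names for this example, and the 60-second `heartbeat_timeout` is an assumed value, not one taken from the configuration above (which only sets `heartbeat_period: 5s`).

```python
from dataclasses import dataclass


@dataclass
class InstanceDesc:
    id: str
    addr: str
    state: str             # PENDING, JOINING, ACTIVE, LEAVING or UNHEALTHY
    tokens: list
    last_heartbeat: float  # Unix seconds, refreshed by the instance itself


def usable_instances(ring, now, heartbeat_timeout=60.0):
    """IDs of instances that are ACTIVE and have sent a recent heartbeat."""
    return sorted(
        inst.id
        for inst in ring
        if inst.state == "ACTIVE" and now - inst.last_heartbeat <= heartbeat_timeout
    )


ring = [
    InstanceDesc("ingester-0", "10.0.0.1:9095", "ACTIVE", [11, 42], 1000.0),
    InstanceDesc("ingester-1", "10.0.0.2:9095", "ACTIVE", [7, 99], 900.0),
    InstanceDesc("ingester-2", "10.0.0.3:9095", "LEAVING", [23], 1000.0),
]
print(usable_instances(ring, now=1005.0))
```

In this example ingester-1 is skipped because its heartbeat is stale, and ingester-2 is skipped because it is leaving the ring.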

Memberlist needs a Service through which the ring members can find each other.

gossip_ring.yaml:

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: loki-gossip-ring
      name: loki-gossip-ring
      namespace: loki
    spec:
      ports:
      - name: gossip-ring
        port: 7946
        targetPort: 7946
        protocol: TCP
      selector:
        gossip_ring_member: 'true'

5. Deploy the distributor:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: distributor
      namespace: loki
      labels:
        app: distributor
    spec:
      minReadySeconds: 10
      replicas: 3
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: distributor
      template:
        metadata:
          annotations:
            prometheus.io/path: /metrics
            prometheus.io/port: "3100"
            prometheus.io/scrape: "true"
          labels:
            app: distributor
            gossip_ring_member: 'true'
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: distributor
                topologyKey: kubernetes.io/hostname
          containers:
          - args:
            - -config.file=/etc/loki/config/config.yaml
            - -limits.per-user-override-config=/etc/loki/overrides/overrides.yaml
            - -target=distributor
            image: grafana/loki:1.6.1
            imagePullPolicy: IfNotPresent
            name: distributor
            ports:
            - containerPort: 3100
              name: http-metrics
            - containerPort: 9095
              name: grpc
            - containerPort: 7946
              name: gossip-ring
            readinessProbe:
              httpGet:
                path: /ready
                port: 3100
              initialDelaySeconds: 15
              timeoutSeconds: 1
            resources:
              limits:
                cpu: "1"
                memory: 1Gi
              requests:
                cpu: 500m
                memory: 500Mi
            volumeMounts:
            - mountPath: /etc/loki/config
              name: loki
            - mountPath: /etc/loki/overrides
              name: overrides
          volumes:
          - configMap:
              name: loki
            name: loki
          - configMap:
              name: overrides
            name: overrides
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: distributor
      name: distributor
      namespace: loki
    spec:
      ports:
      - name: distributor-http-metrics
        port: 3100
        targetPort: 3100
      - name: distributor-grpc
        port: 9095
        targetPort: 9095
      selector:
        app: distributor

6. Deploy the ingester:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      name: ingester
      namespace: loki
      labels:
        app: ingester
    spec:
      updateStrategy:
        type: RollingUpdate
      replicas: 3
      serviceName: ingester
      selector:
        matchLabels:
          app: ingester
      # The original also had a Deployment-style "strategy.rollingUpdate" block
      # (maxSurge: 0, maxUnavailable: 1). StatefulSet has no "strategy" field,
      # so it is dropped here; updateStrategy above controls the rollout.
      template:
        metadata:
          annotations:
            prometheus.io/path: /metrics
            prometheus.io/port: "3100"
            prometheus.io/scrape: "true"
          labels:
            # Was "name: ingester"; it must match spec.selector and the Service selector.
            app: ingester
            gossip_ring_member: 'true'
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          securityContext:
            fsGroup: 10001
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: ingester
                topologyKey: kubernetes.io/hostname
          containers:
          - args:
            - -config.file=/etc/loki/config/config.yaml
            - -limits.per-user-override-config=/etc/loki/overrides/overrides.yaml
            - -target=ingester
            image: grafana/loki:1.6.1
            imagePullPolicy: IfNotPresent
            name: ingester
            ports:
            - containerPort: 3100
              name: http-metrics
            - containerPort: 9095
              name: grpc
            - containerPort: 7946
              name: gossip-ring
            readinessProbe:
              httpGet:
                path: /ready
                port: 3100
              initialDelaySeconds: 15
              timeoutSeconds: 1
            resources:
              limits:
                cpu: "2"
                memory: 10Gi
              requests:
                cpu: "1"
                memory: 5Gi
            volumeMounts:
            - mountPath: /etc/loki/config
              name: loki
            - mountPath: /etc/loki/overrides
              name: overrides
            - mountPath: /data
              name: ingester-data
          terminationGracePeriodSeconds: 4800
          volumes:
          - configMap:
              name: loki
            name: loki
          - configMap:
              name: overrides
            name: overrides
      volumeClaimTemplates:
      - metadata:
          labels:
            # Was "app: querier", apparently copied from the querier manifest.
            app: ingester
          name: ingester-data
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 10Gi
          storageClassName: gp2
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: ingester
      name: ingester
      namespace: loki
    spec:
      ports:
      - name: ingester-http-metrics
        port: 3100
        targetPort: 3100
      - name: ingester-grpc
        port: 9095
        targetPort: 9095
      selector:
        app: ingester

7. Deploy the query-frontend:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: query-frontend
      namespace: loki
      labels:
        app: query-frontend
    spec:
      minReadySeconds: 10
      replicas: 2
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          # Was "name: query-frontend"; the selector must match the pod template labels.
          app: query-frontend
      template:
        metadata:
          annotations:
            prometheus.io/path: /metrics
            prometheus.io/port: "3100"
            prometheus.io/scrape: "true"
          labels:
            app: query-frontend
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: query-frontend
                topologyKey: kubernetes.io/hostname
          containers:
          - args:
            - -config.file=/etc/loki/config/config.yaml
            - -limits.per-user-override-config=/etc/loki/overrides/overrides.yaml
            - -log.level=debug
            - -target=query-frontend
            image: grafana/loki:master-92ace83
            imagePullPolicy: IfNotPresent
            name: query-frontend
            ports:
            - containerPort: 3100
              name: http-metrics
            - containerPort: 9095
              name: grpc
            readinessProbe:
              httpGet:
                path: /ready
                port: 3100
              initialDelaySeconds: 15
              timeoutSeconds: 1
            resources:
              limits:
                memory: 1200Mi
              requests:
                cpu: "2"
                memory: 600Mi
            volumeMounts:
            - mountPath: /etc/loki/config
              name: loki
            - mountPath: /etc/loki/overrides
              name: overrides
          volumes:
          - configMap:
              name: loki
            name: loki
          - configMap:
              name: overrides
            name: overrides
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: query-frontend
      name: query-frontend
      namespace: loki
    spec:
      clusterIP: None
      publishNotReadyAddresses: true
      ports:
      - name: query-frontend-http-metrics
        port: 3100
        targetPort: 3100
      - name: query-frontend-grpc
        port: 9095
        targetPort: 9095
      selector:
        app: query-frontend

8. Deploy the querier:

    apiVersion: apps/v1
    kind: StatefulSet
    metadata:
      labels:
        name: querier
      name: querier
      namespace: loki
    spec:
      updateStrategy:
        type: RollingUpdate
      replicas: 3
      serviceName: querier
      selector:
        matchLabels:
          app: querier
      template:
        metadata:
          annotations:
            prometheus.io/path: /metrics
            prometheus.io/port: "3100"
            prometheus.io/scrape: "true"
          labels:
            app: querier
            gossip_ring_member: 'true'
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          securityContext:
            fsGroup: 10001
          affinity:
            podAntiAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
              - labelSelector:
                  matchLabels:
                    app: querier
                topologyKey: kubernetes.io/hostname
          containers:
          - args:
            - -config.file=/etc/loki/config/config.yaml
            - -limits.per-user-override-config=/etc/loki/overrides/overrides.yaml
            - -target=querier
            image: grafana/loki:1.6.1
            imagePullPolicy: IfNotPresent
            name: querier
            ports:
            - containerPort: 3100
              name: http-metrics
            - containerPort: 9095
              name: grpc
            - containerPort: 7946
              name: gossip-ring
            readinessProbe:
              httpGet:
                path: /ready
                port: 3100
              initialDelaySeconds: 15
              timeoutSeconds: 1
            resources:
              requests:
                cpu: "4"
                memory: 2Gi
            volumeMounts:
            - mountPath: /etc/loki/config
              name: loki
            - mountPath: /etc/loki/overrides
              name: overrides
            - mountPath: /data
              name: querier-data
          volumes:
          - configMap:
              name: loki
            name: loki
          - configMap:
              name: overrides
            name: overrides
      volumeClaimTemplates:
      - metadata:
          labels:
            app: querier
          name: querier-data
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 10Gi
          storageClassName: gp2
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: querier
      name: querier
      namespace: loki
    spec:
      ports:
      - name: querier-http-metrics
        port: 3100
        targetPort: 3100
      - name: querier-grpc
        port: 9095
        targetPort: 9095
      selector:
        app: querier

9. Deploy the table-manager:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: table-manager
      namespace: loki
      labels:
        app: table-manager
    spec:
      minReadySeconds: 10
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          app: table-manager
      template:
        metadata:
          annotations:
            prometheus.io/path: /metrics
            prometheus.io/port: "3100"
            prometheus.io/scrape: "true"
          labels:
            app: table-manager
        spec:
          nodeSelector:
            group: loki
          tolerations:
          - effect: NoExecute
            key: app
            operator: Exists
          containers:
          - args:
            - -bigtable.backoff-on-ratelimits=true
            - -bigtable.grpc-client-rate-limit=5
            - -bigtable.grpc-client-rate-limit-burst=5
            - -bigtable.table-cache.enabled=true
            - -config.file=/etc/loki/config/config.yaml
            - -limits.per-user-override-config=/etc/loki/overrides/overrides.yaml
            - -target=table-manager
            image: grafana/loki:1.6.1
            imagePullPolicy: IfNotPresent
            name: table-manager
            ports:
            - containerPort: 3100
              name: http-metrics
            - containerPort: 9095
              name: grpc
            readinessProbe:
              httpGet:
                path: /ready
                port: 3100
              initialDelaySeconds: 15
              timeoutSeconds: 1
            resources:
              limits:
                cpu: 200m
                memory: 200Mi
              requests:
                cpu: 100m
                memory: 100Mi
            volumeMounts:
            - mountPath: /etc/loki/config
              name: loki
          volumes:
          - configMap:
              name: loki
            name: loki
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: table-manager
      name: table-manager
      namespace: loki
    spec:
      ports:
      - name: table-manager-grpc
        port: 9095
        targetPort: 9095
      selector:
        app: table-manager

Once everything is deployed, check the status of all pods:

    kubectl get pods -n loki
    NAME                              READY   STATUS    RESTARTS   AGE
    distributor-84747955fb-hhtzl      1/1     Running   0          8d
    distributor-84747955fb-pq9wn      1/1     Running   0          8d
    distributor-84747955fb-w66hp      1/1     Running   0          8d
    ingester-0                        1/1     Running   0          8d
    ingester-1                        1/1     Running   0          8d
    ingester-2                        1/1     Running   0          8d
    memcached-0                       2/2     Running   0          3d2h
    memcached-1                       2/2     Running   0          3d2h
    memcached-2                       2/2     Running   0          3d2h
    memcached-frontend-0              2/2     Running   0          3d2h
    memcached-frontend-1              2/2     Running   0          3d2h
    memcached-frontend-2              2/2     Running   0          3d2h
    memcached-index-queries-0         2/2     Running   0          3d2h
    memcached-index-queries-1         2/2     Running   0          3d2h
    memcached-index-queries-2         2/2     Running   0          3d2h
    memcached-index-writes-0          2/2     Running   0          3d3h
    memcached-index-writes-1          2/2     Running   0          3d3h
    memcached-index-writes-2          2/2     Running   0          3d3h
    querier-0                         1/1     Running   0          3d3h
    querier-1                         1/1     Running   0          3d3h
    querier-2                         1/1     Running   0          3d3h
    query-frontend-6c8ffc8667-qj5zq   1/1     Running   0          8d
    table-manager-c4fdf6475-zjzqg     1/1     Running   0          8d
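With this many pods, scanning the listing by eye gets tedious. A small helper along these lines can flag pods that are not fully ready. It is only a convenience sketch: `not_ready` is a hypothetical function that parses the default column layout of `kubectl get pods` (NAME, READY, STATUS first), and the sample text in it is made up for the example.

```python
def not_ready(kubectl_output):
    """Return names of pods that are not Running or have unready containers."""
    bad = []
    lines = kubectl_output.strip().splitlines()
    for line in lines[1:]:  # skip the header row
        name, ready, status = line.split()[:3]
        ready_count, total = ready.split("/")
        if status != "Running" or ready_count != total:
            bad.append(name)
    return bad


sample = """
NAME           READY   STATUS             RESTARTS   AGE
ingester-0     1/1     Running            0          8d
memcached-0    1/2     Running            0          3d2h
querier-0      0/1     CrashLoopBackOff   12         3d3h
"""
print(not_ready(sample))
```

In practice the text could come from `subprocess.run(["kubectl", "get", "pods", "-n", "loki"], capture_output=True, text=True).stdout`.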

That completes the deployment. Promtail is not covered in this article; a number of other log shippers, such as Fluent Bit, can also send data to Loki.

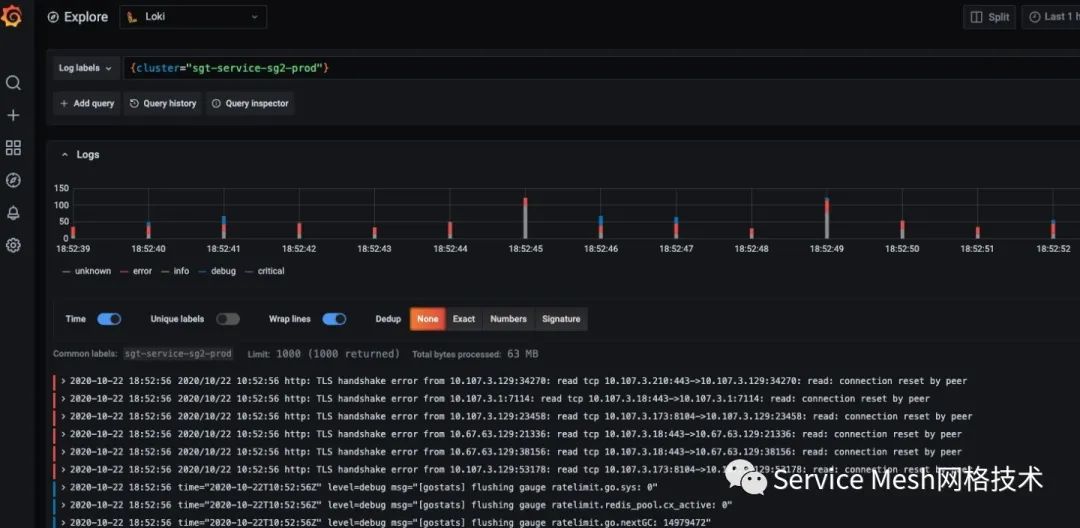

Visualization

Grafana supports querying Loki directly through its Explore view.

Queries are built from combinations of labels.
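The same label-based queries can also be issued outside Grafana through Loki's HTTP API. The sketch below only builds the LogQL selector and the request URL for the `/loki/api/v1/query_range` endpoint; it does not send anything. The helper names `logql_selector` and `query_range_url` are hypothetical, the base URL assumes the query-frontend Service from the manifests above on its HTTP port 3100, and label values containing double quotes are not escaped.

```python
from urllib.parse import urlencode


def logql_selector(labels, contains=None):
    """Build a LogQL stream selector, optionally with a line filter."""
    body = ", ".join(f'{name}="{value}"' for name, value in sorted(labels.items()))
    query = "{" + body + "}"
    if contains:
        query += f' |= "{contains}"'
    return query


def query_range_url(base, labels, start_ns, end_ns, contains=None, limit=100):
    """URL for a range query; start and end are Unix timestamps in nanoseconds."""
    params = {
        "query": logql_selector(labels, contains),
        "start": start_ns,
        "end": end_ns,
        "limit": limit,
    }
    return f"{base}/loki/api/v1/query_range?{urlencode(params)}"


base = "http://query-frontend.loki.svc.cluster.local:3100"  # assumed address
print(logql_selector({"namespace": "loki", "app": "ingester"}, contains="error"))
print(query_range_url(base, {"app": "ingester"}, 1600000000000000000, 1600003600000000000))
```

The selector is the part that hits the label index; the optional `|=` line filter is applied to the content of the matching streams afterwards.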

Summary

If full-text indexing is not a hard requirement, Loki is a good choice for a Kubernetes logging system.