Original title: Learning to Transfer Initializations for Bayesian Hyperparameter Optimization

Author: Jungtaek Kim, International Conference on Machine Learning, 2017

Keywords: SMBO, transfer learning, LSTM

Summary:

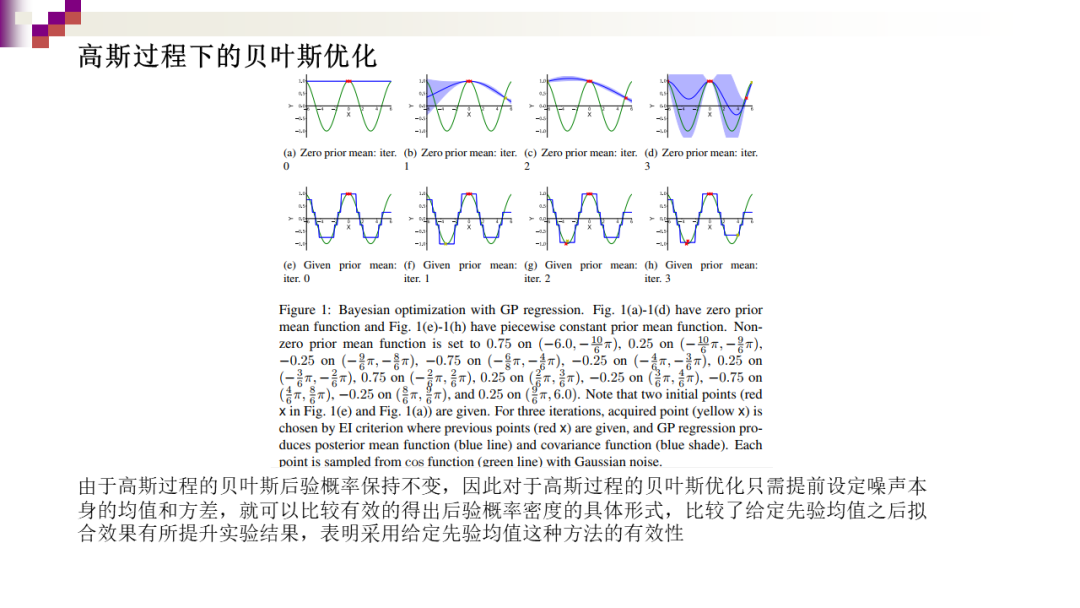

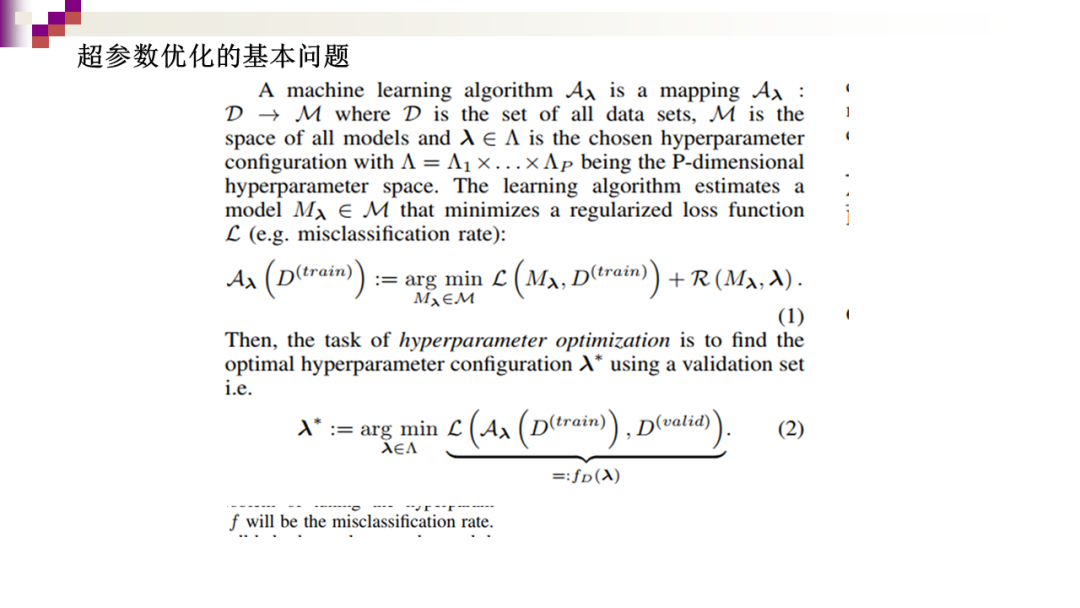

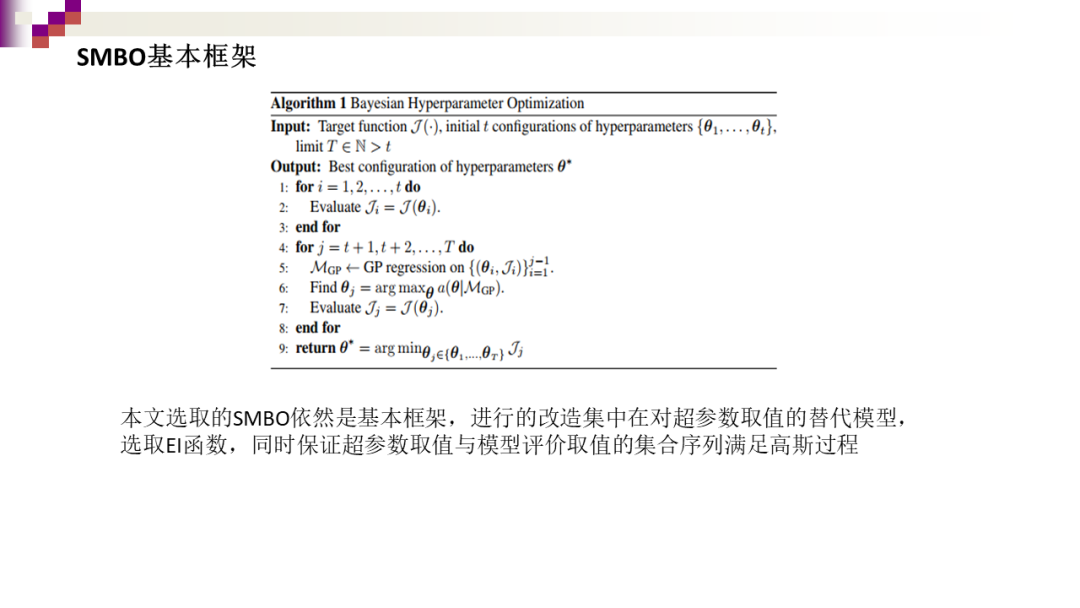

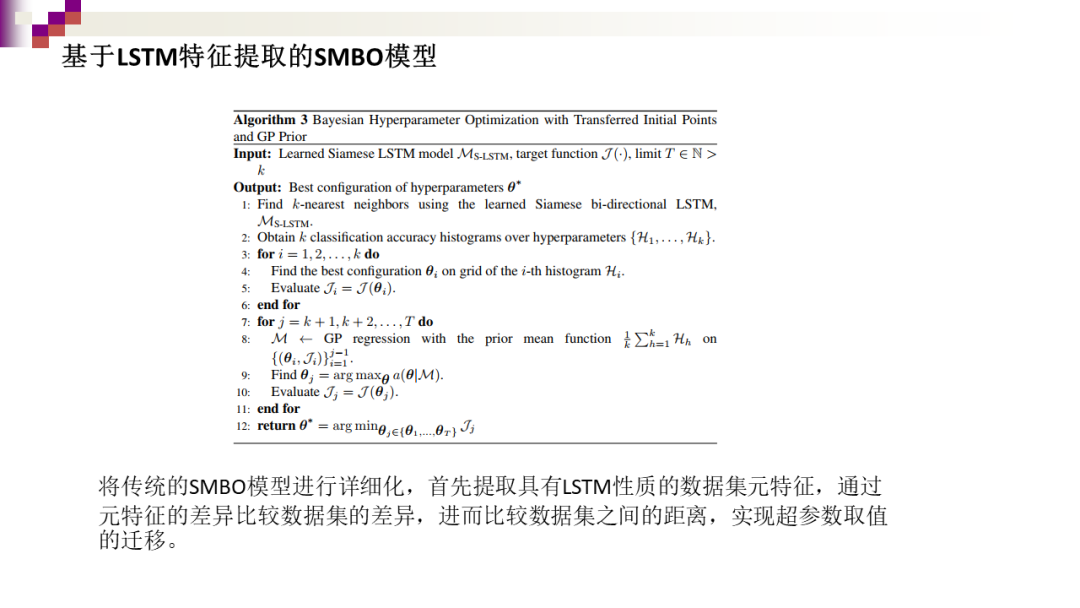

The paper is mainly a transfer-learning adaptation of conventional sequential model-based optimization (SMBO).

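Knowledge, in the form of sets of hyperparameter configurations, is transferred from previous experiments to a new experiment.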

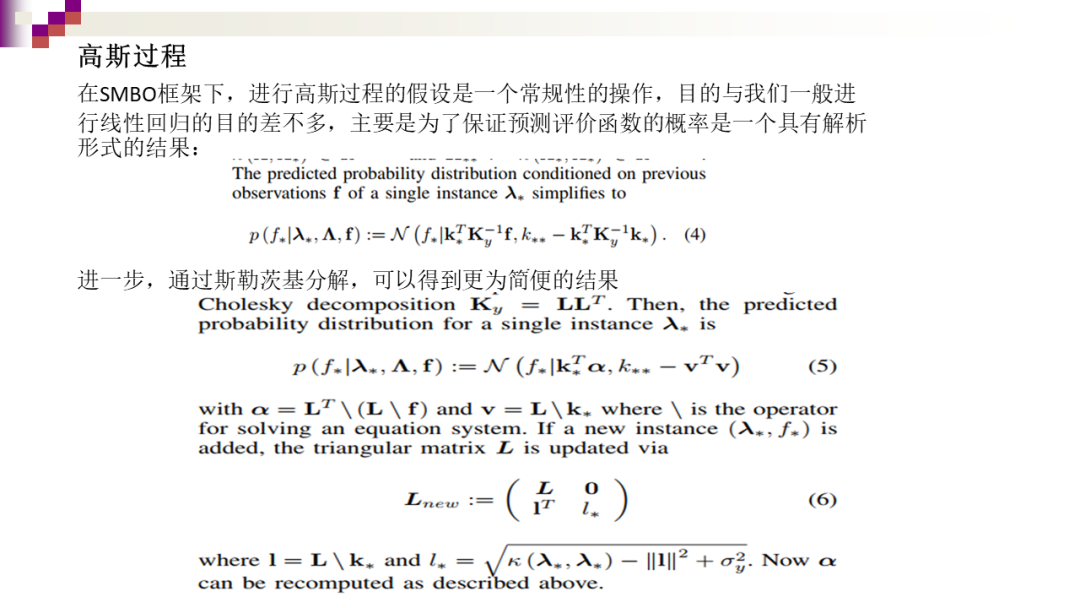

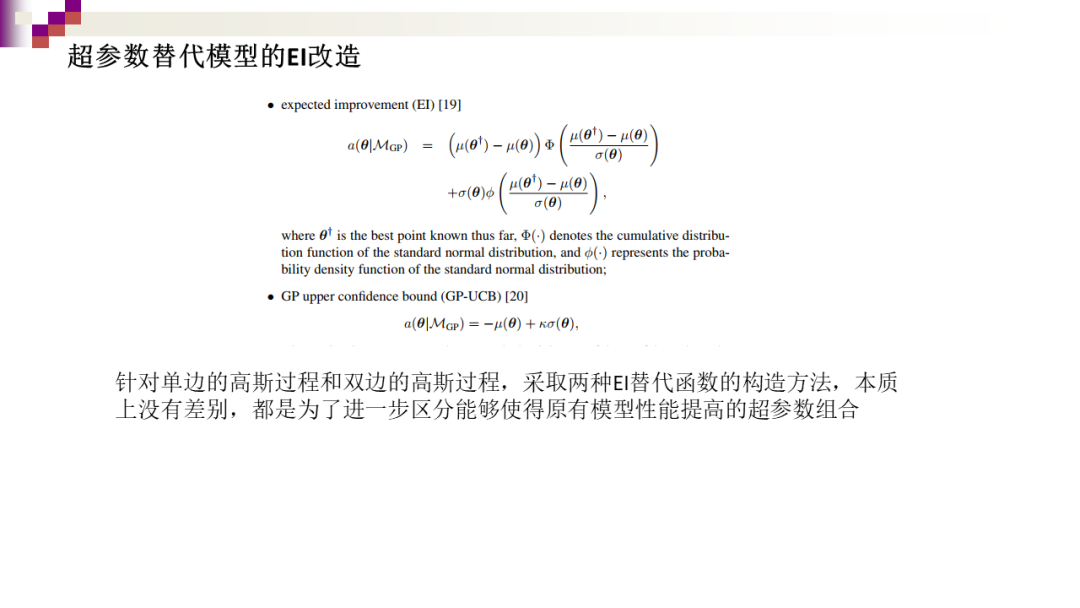

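The expected improvement (EI) function, which is evaluated on the surrogate model over hyperparameter values in the SMBO framework, is modified.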

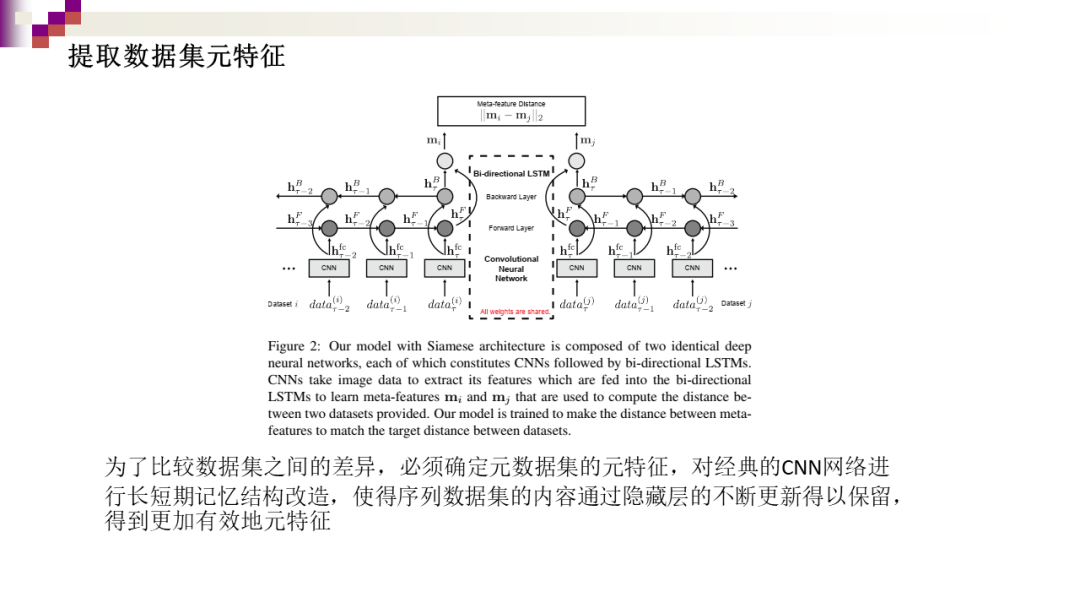

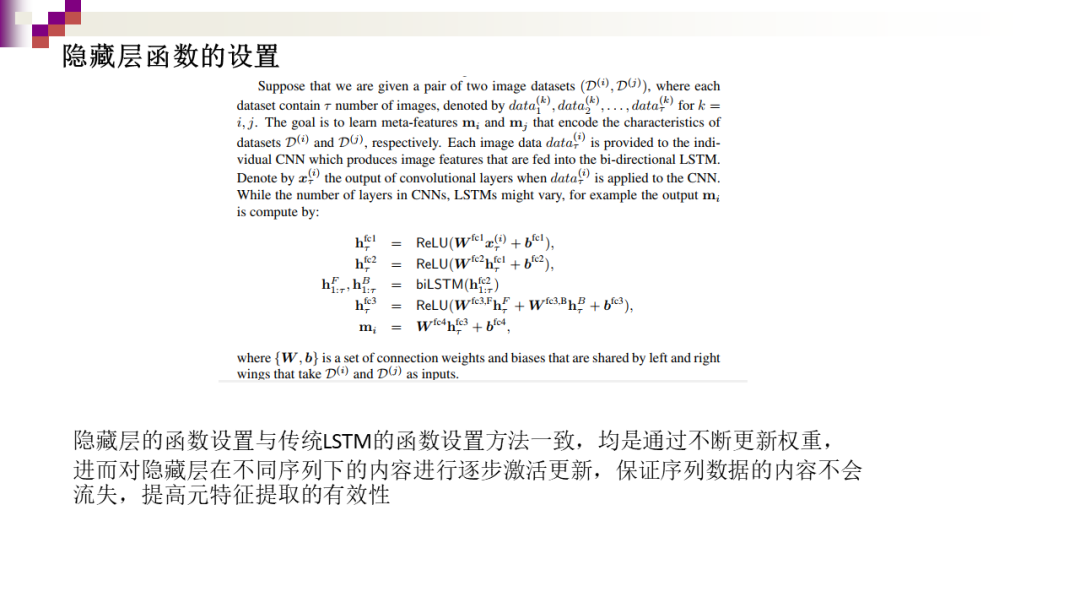

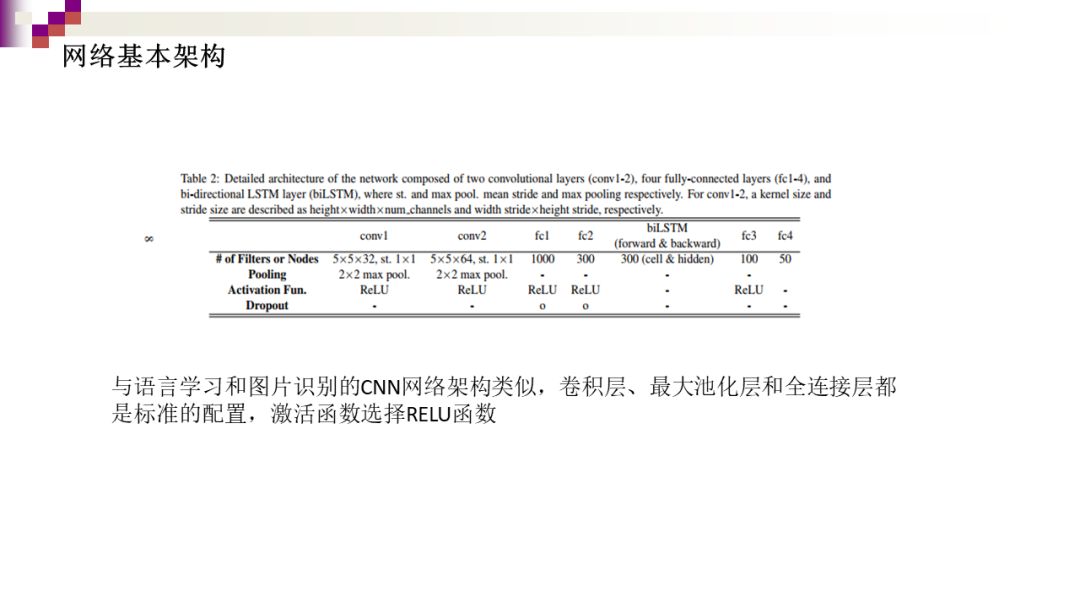
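For orientation, the standard closed-form EI under a Gaussian surrogate prediction can be sketched as below. This is the textbook form for minimizing validation error, not the modified version from the paper, whose exact definition is in the figures and is not reproduced here. The function names and the choice of minimization are assumptions made for illustration.

```python
import math


def normal_pdf(z: float) -> float:
    """Density of the standard normal distribution at z."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z: float) -> float:
    """Cumulative distribution of the standard normal at z."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu: float, sigma: float, best: float) -> float:
    """Standard EI for minimization (illustrative, not the paper's variant).

    mu, sigma: surrogate posterior mean and standard deviation at a candidate.
    best: lowest validation error observed so far.
    """
    if sigma <= 0.0:
        # No predictive uncertainty: improvement is deterministic.
        return max(best - mu, 0.0)
    z = (best - mu) / sigma
    return (best - mu) * normal_cdf(z) + sigma * normal_pdf(z)


if __name__ == "__main__":
    # Two candidates with the same predicted mean; the more uncertain one
    # receives a higher EI, which is what drives exploration.
    print(expected_improvement(mu=0.30, sigma=0.01, best=0.28))
    print(expected_improvement(mu=0.30, sigma=0.10, best=0.28))
```

In an SMBO loop, the candidate with the highest EI would be evaluated next and the surrogate refit on the enlarged set of observations.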

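An LSTM (long short-term memory) network architecture is introduced to extract meta-features of datasets. A loss function based on the differences between datasets is then constructed, which yields a Siamese bi-directional LSTM.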

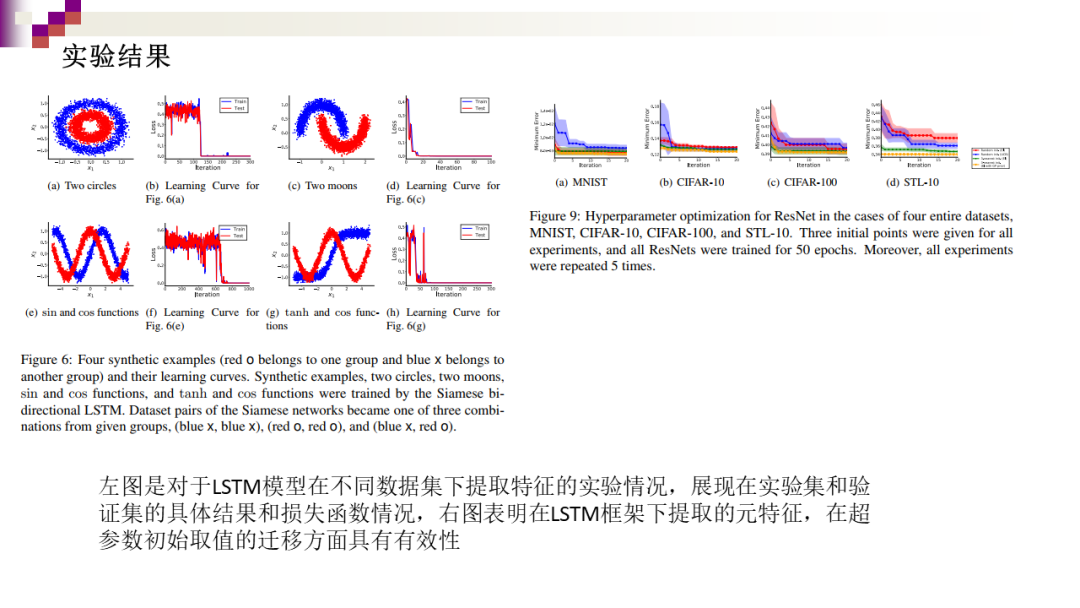
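Once meta-features are available, the transfer step described in the abstract (pick a few similar datasets and reuse their configurations as initial points) can be sketched as a nearest-neighbor lookup. This is a minimal sketch under assumptions: the meta-feature vectors are taken as given rather than produced by the Siamese network, Euclidean distance is assumed as the similarity measure, and the dataset names, hyperparameter names, and the helper `transfer_initializations` are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two meta-feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def transfer_initializations(
    new_meta: Sequence[float],
    known_meta: Dict[str, Sequence[float]],
    best_configs: Dict[str, dict],
    k: int = 2,
) -> List[dict]:
    """Return the tuned configurations of the k datasets closest to the new one.

    known_meta: meta-feature vector per previously tuned dataset.
    best_configs: one tuned hyperparameter configuration per dataset
                  (a simplification; a dataset could contribute several).
    """
    ranked = sorted(known_meta, key=lambda name: euclidean(new_meta, known_meta[name]))
    return [best_configs[name] for name in ranked[:k]]


if __name__ == "__main__":
    # Hypothetical meta-features and tuned configurations.
    known_meta = {"A": (0.1, 0.0), "B": (5.0, 5.0), "C": (0.0, 0.3)}
    best_configs = {
        "A": {"learning_rate": 0.10, "weight_decay": 1e-4},
        "B": {"learning_rate": 0.01, "weight_decay": 5e-4},
        "C": {"learning_rate": 0.05, "weight_decay": 1e-4},
    }
    init_points = transfer_initializations((0.0, 0.0), known_meta, best_configs, k=2)
    print(init_points)  # configurations of the two nearest datasets
```

The returned configurations would replace the random initial points that Bayesian optimization usually starts from; the rest of the SMBO loop is unchanged.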

Experiments are carried out on real data.

English abstract:

Hyperparameter optimization undergoes extensive evaluations of validation errors in order to find the best configuration of hyperparameters. Bayesian optimization is now popular for hyperparameter optimization, since it reduces the number of validation error evaluations required. Suppose that we are given a collection of datasets on which hyperparameters are already tuned by either humans with domain expertise or extensive trials of cross-validation. When a model is applied to a new dataset, it is desirable to let Bayesian hyperparameter optimization start from configurations that were successful on similar datasets. To this end, we construct a Siamese network with convolutional layers followed by bi-directional LSTM layers, to learn meta-features over datasets. Learned meta-features are used to select a few datasets that are similar to the new dataset, so that a set of configurations in similar datasets is adopted as initializations for Bayesian hyperparameter optimization. Experiments on image datasets demonstrate that our learned meta-features are useful in optimizing several hyperparameters in deep residual networks for image classification.

Original link:

https://www.baidu.com/s?word=Learning%20to%20Transfer%20Initializations%20for%20Bayesian&tn=25017023_6_pg&lm=-1&ssl_s=1&ssl_c=ssl1_171e312b4c4

Literature summary: