Original title: This Looks Like That: Deep Learning for Interpretable Image Recognition

Authors: Chaofan Chen, Oscar Li, Chaofan Tao, Alina Jade Barnett, Jonathan Su, Cynthia Rudin

Abstract (translated from the Chinese):

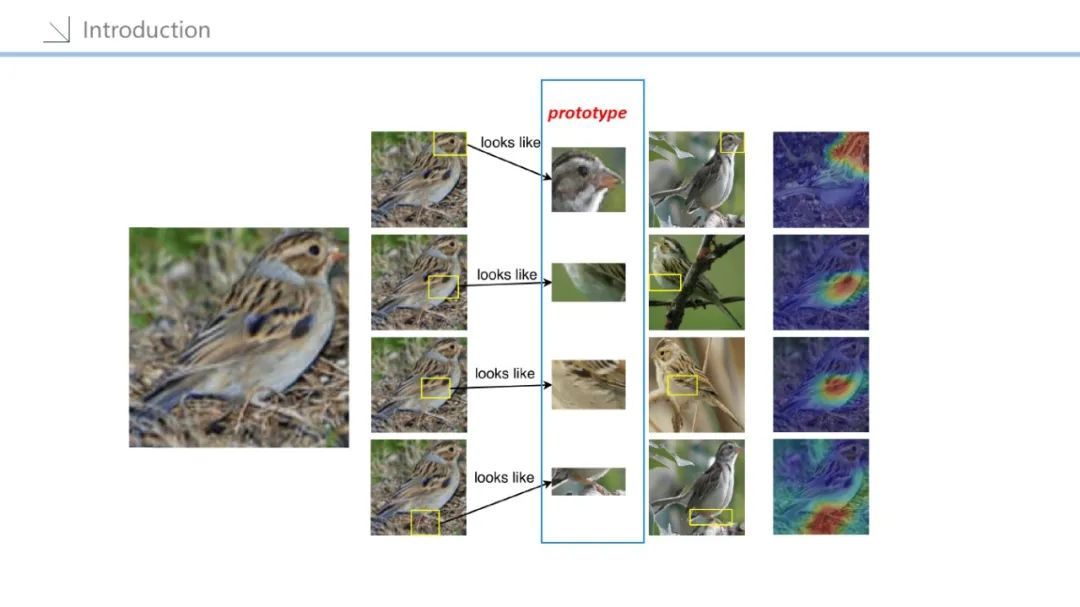

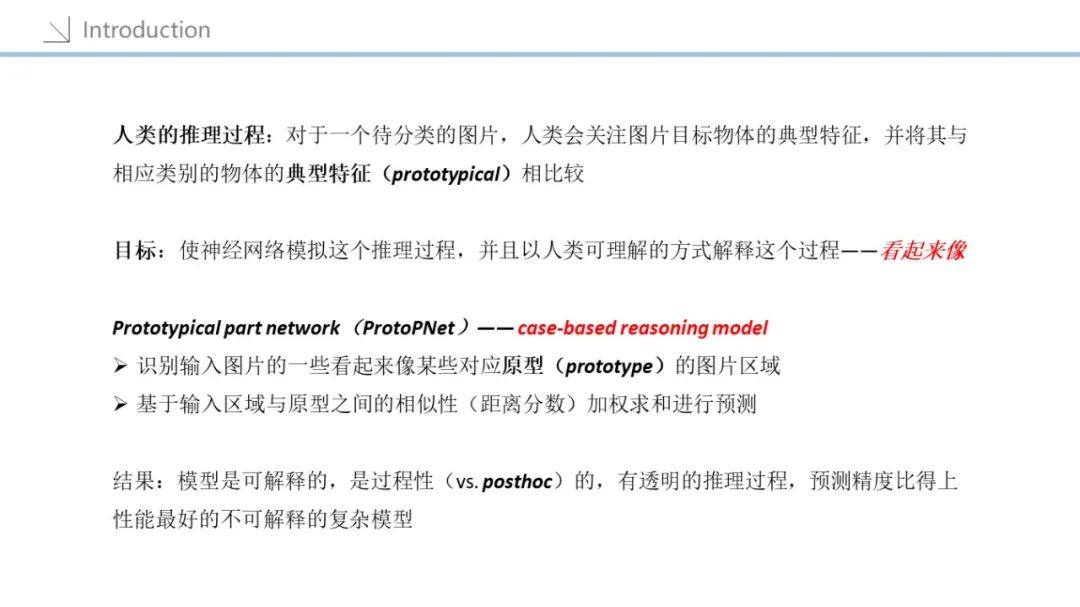

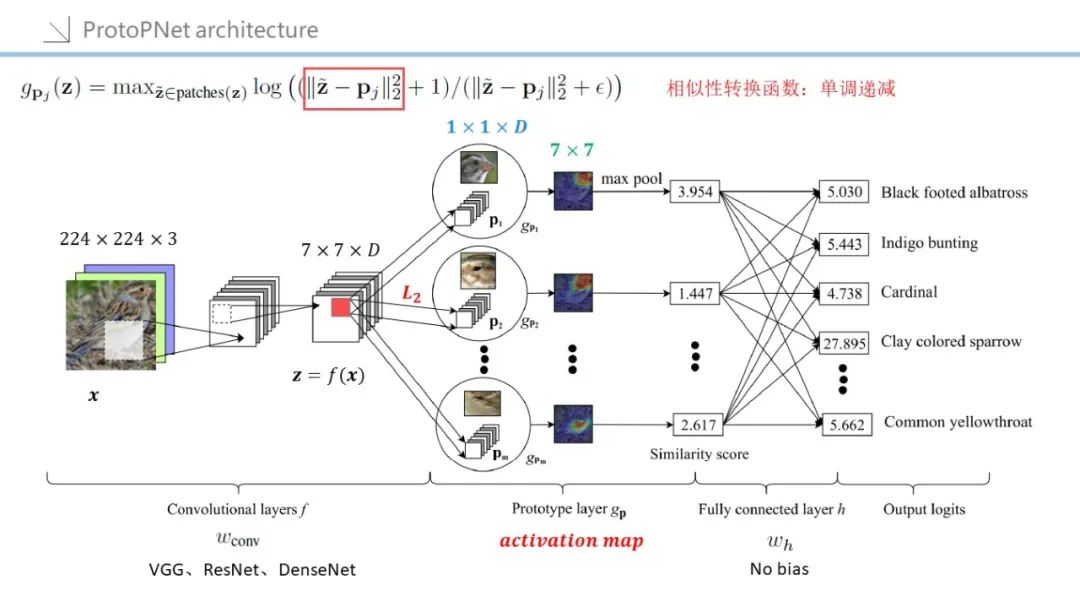

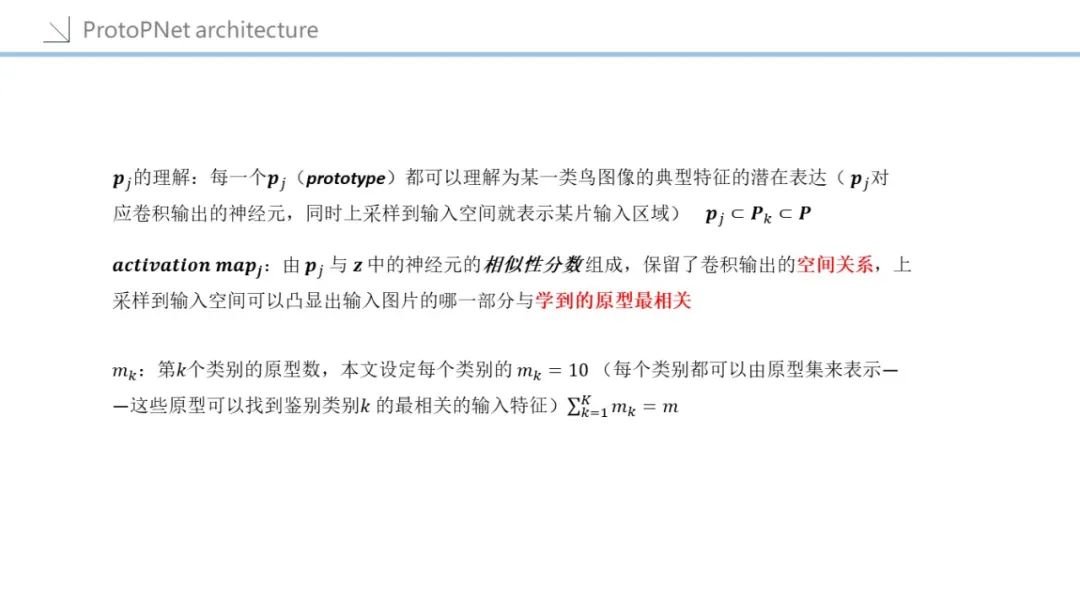

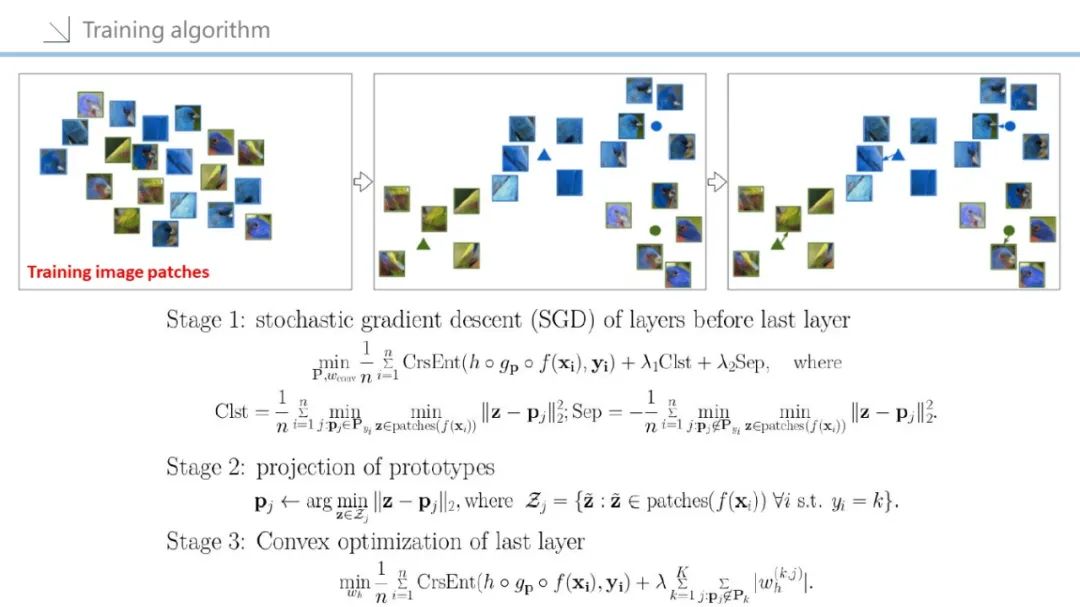

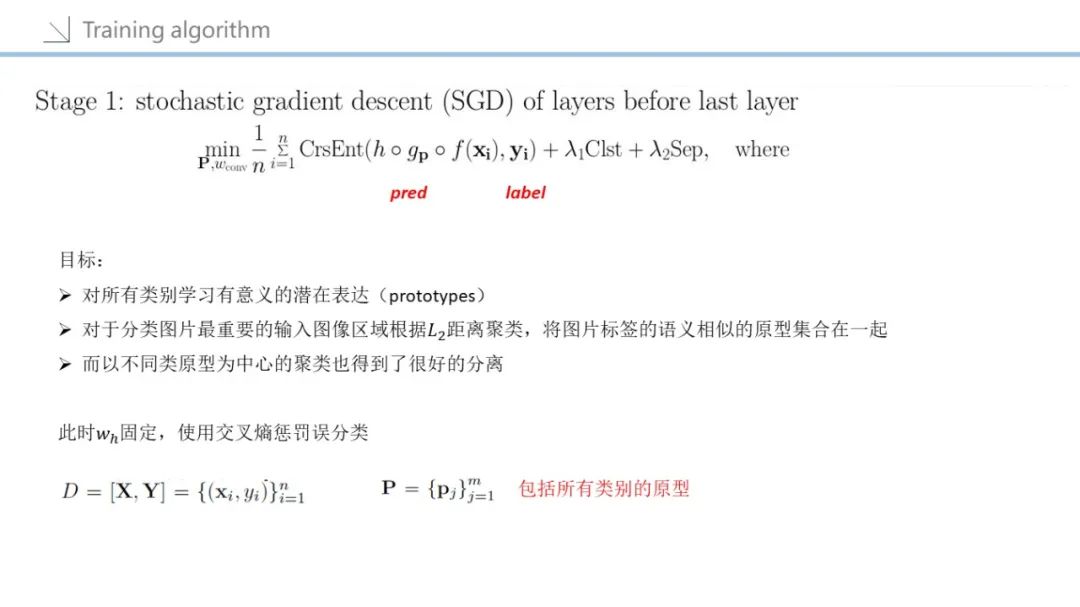

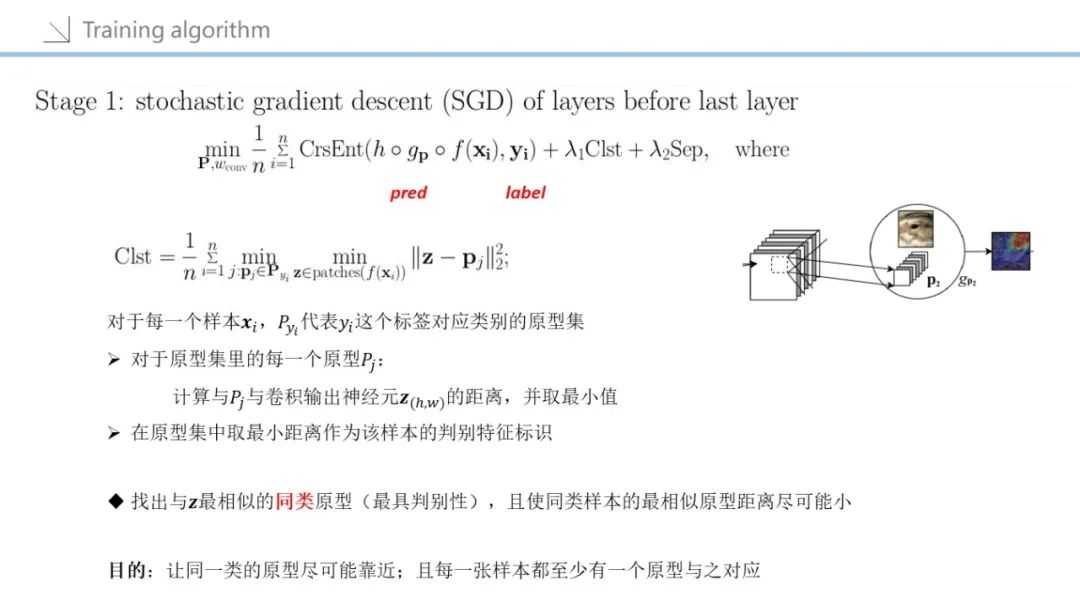

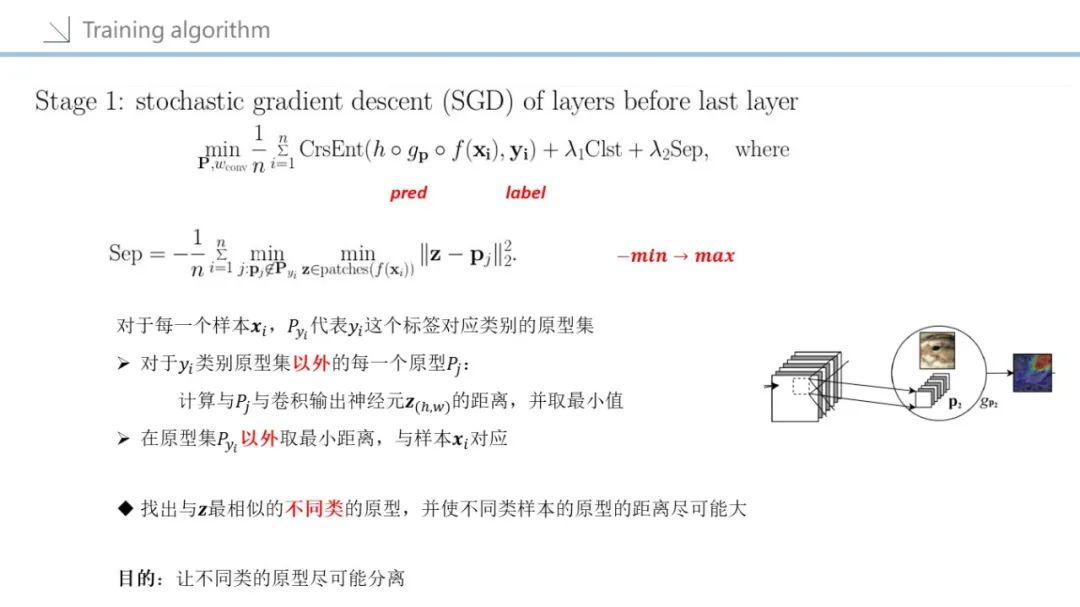

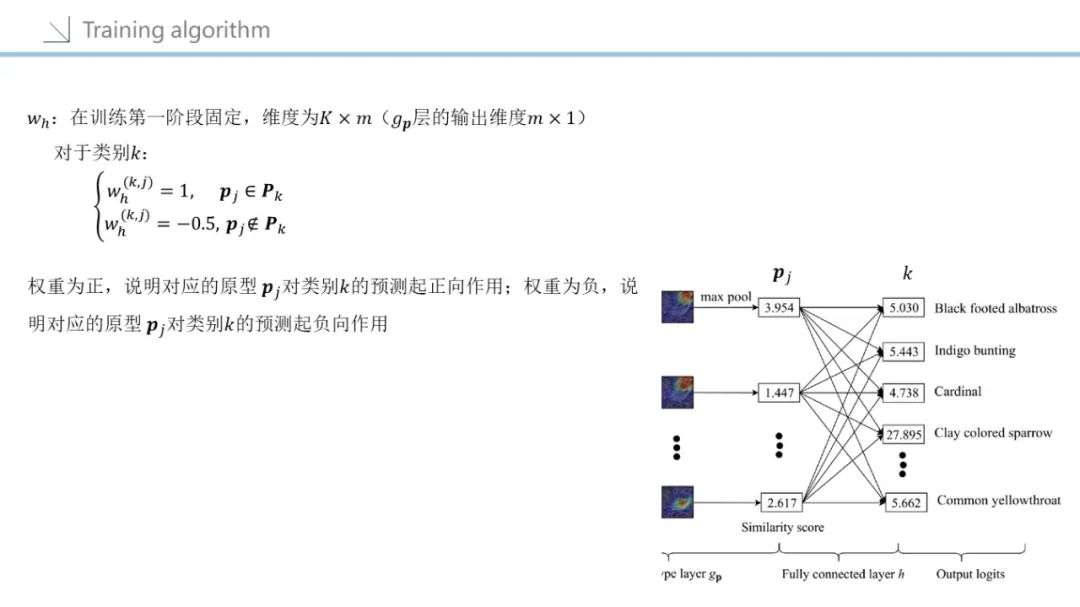

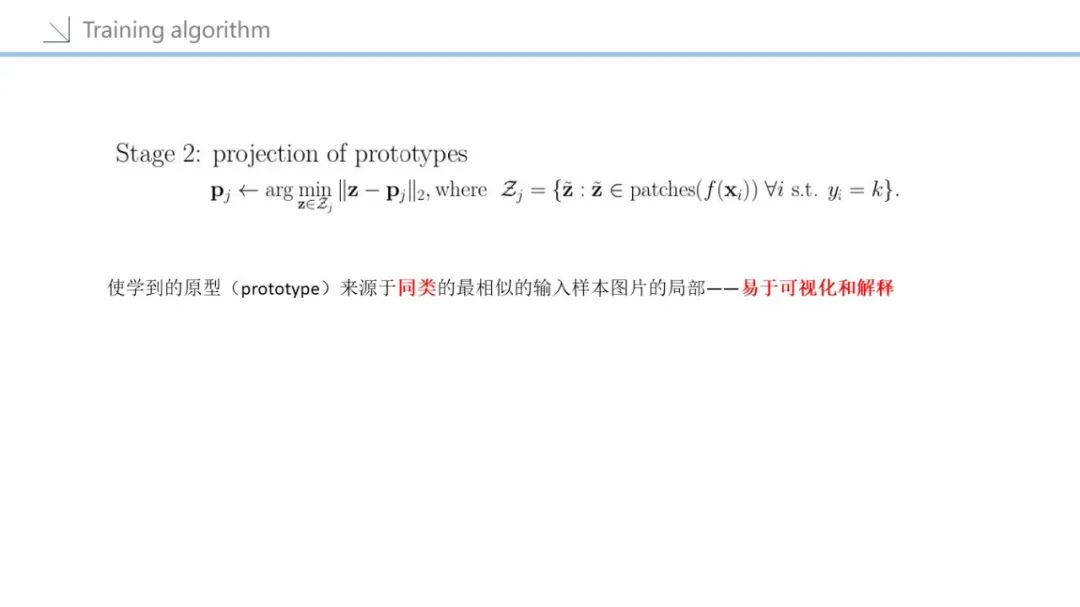

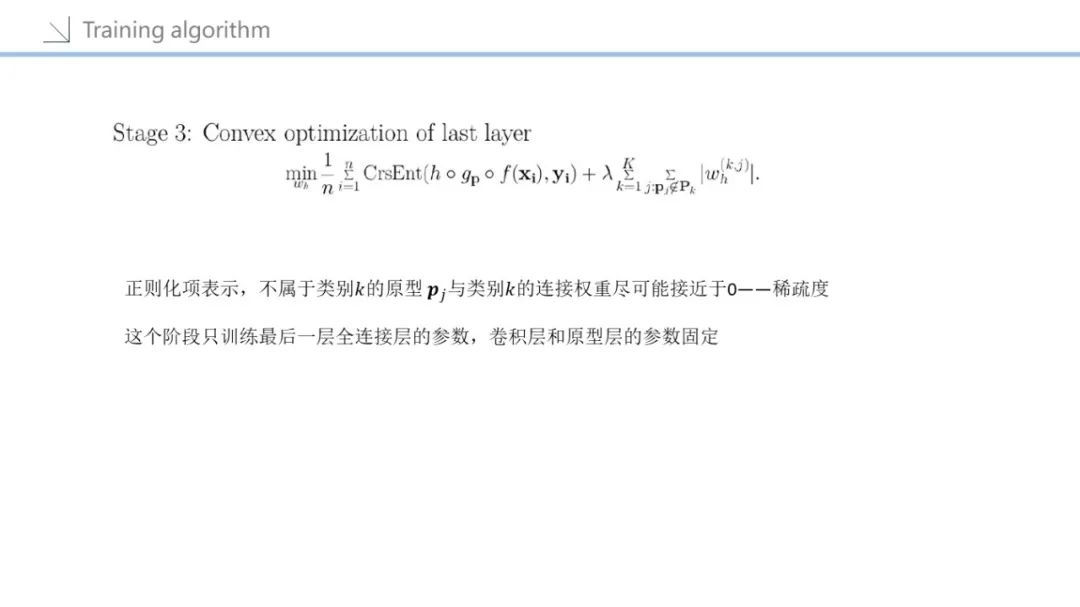

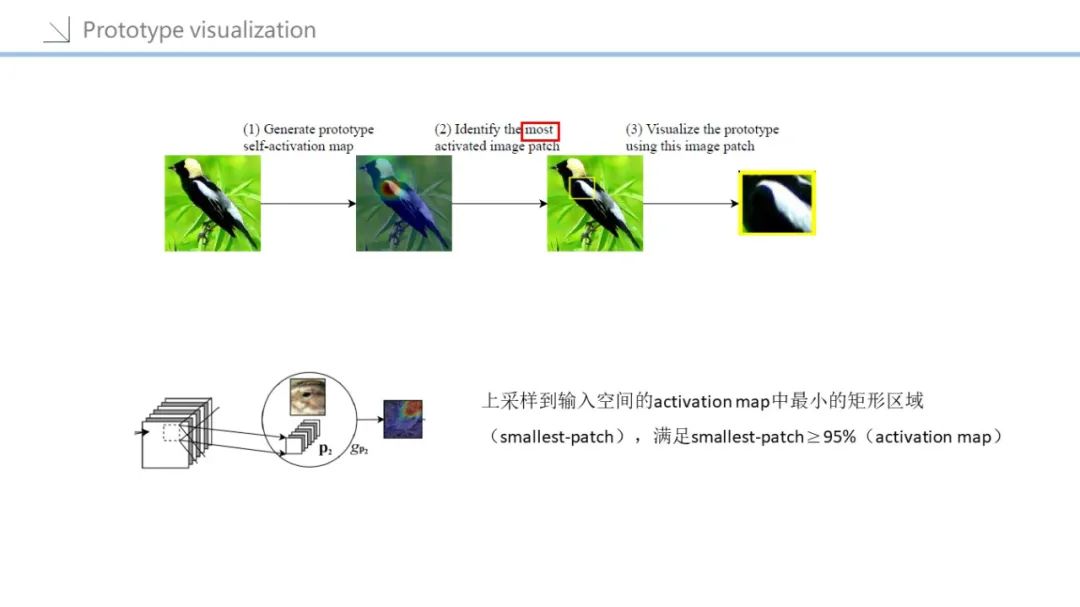

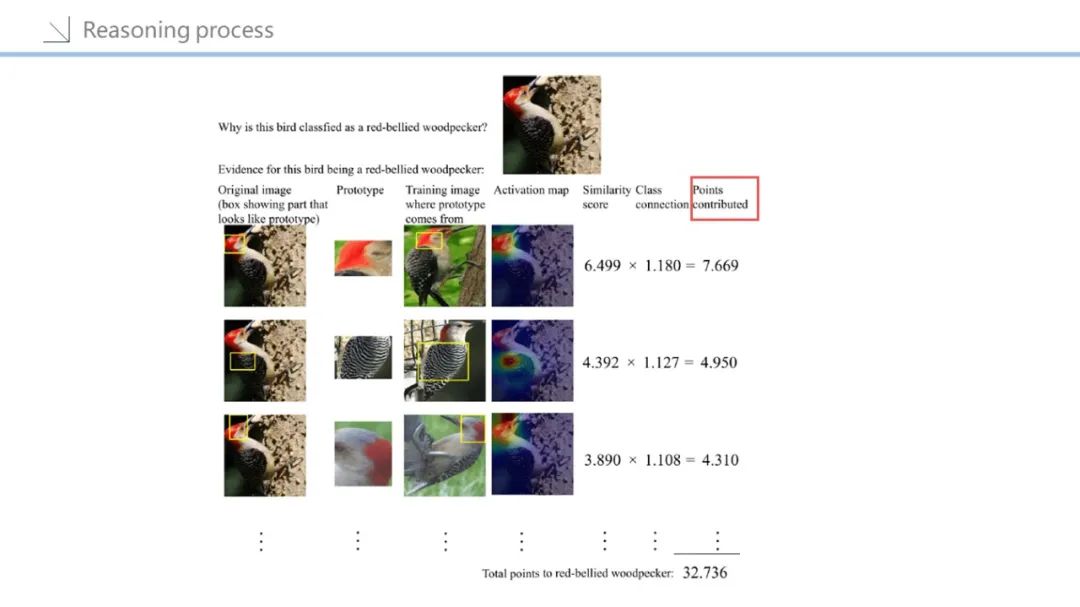

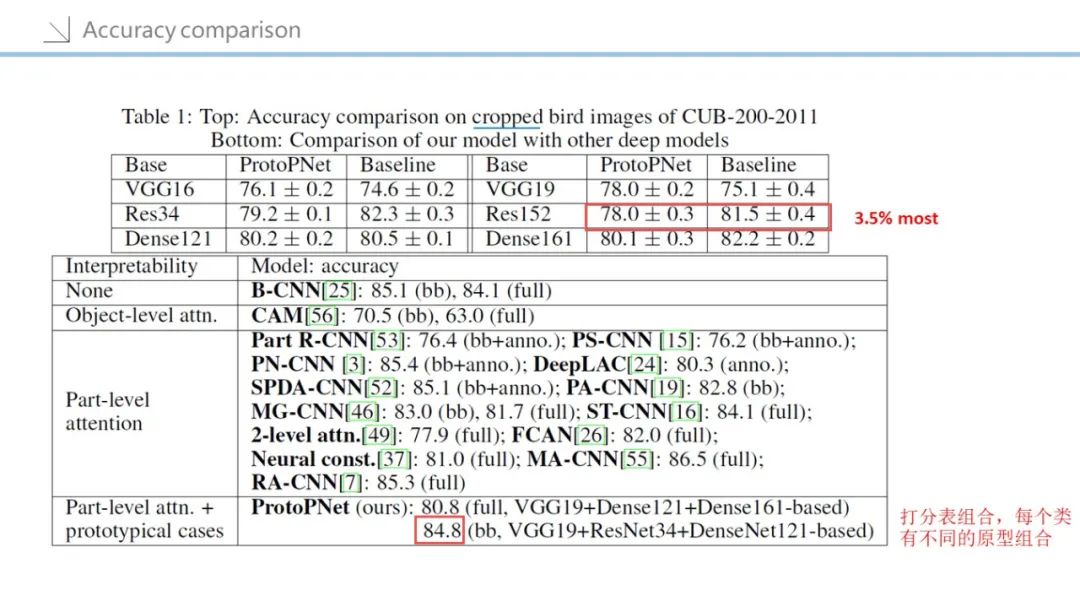

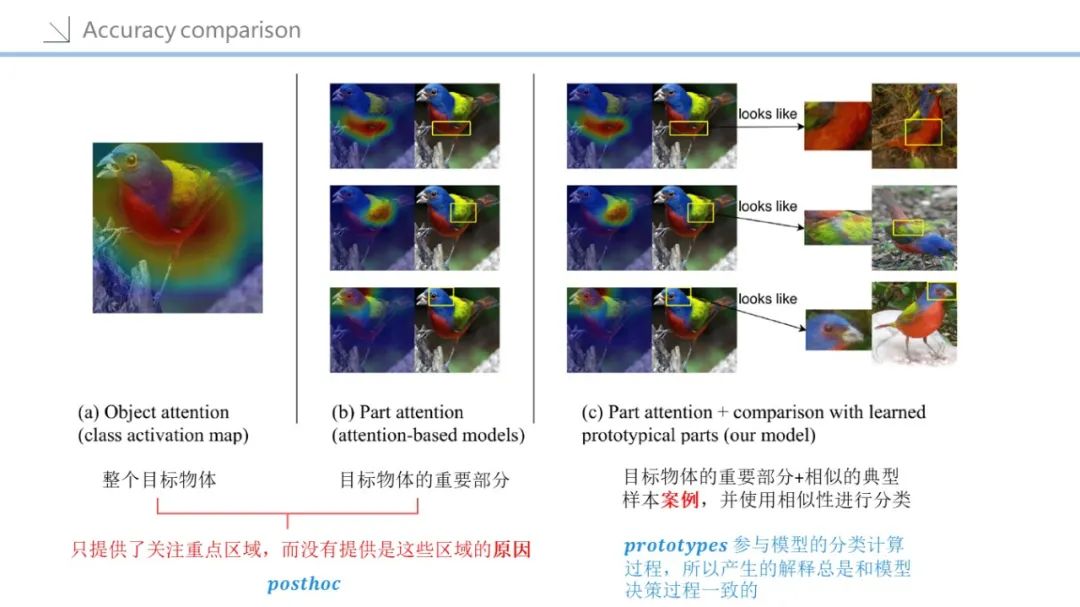

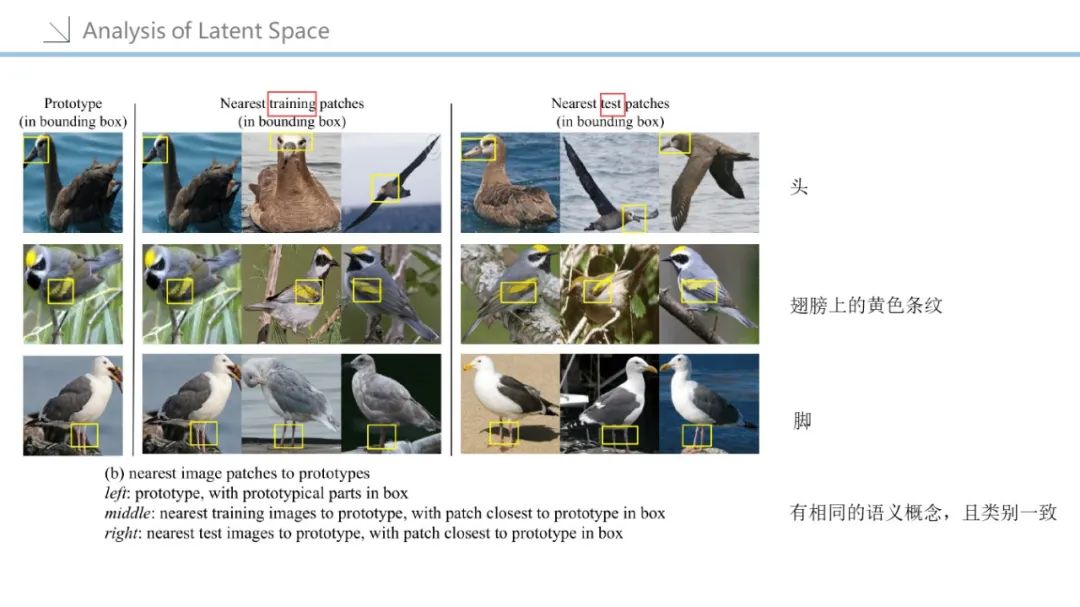

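When we face a challenging image classification task, we often explain our reasoning by dissecting the image and pointing out the typical features of one class or another. The more evidence there is for a class, the more it contributes to the final decision. In this work, we introduce a deep network architecture, the prototypical part network (ProtoPNet), whose reasoning process resembles that of humans: the network dissects the image by looking for prototypical parts and combines the evidence from the prototypes to make the final classification. The model's reasoning is therefore qualitatively similar to the way ornithologists, physicians, and others explain to people how to solve challenging image classification tasks. The network is trained with image-level labels only and uses no annotations of image parts. We demonstrate our method on the CUB-200-2011 dataset and the Stanford Cars dataset. Our experiments show that ProtoPNet can reach accuracy comparable to that of its analogous non-interpretable counterpart, and that when several ProtoPNets are combined into a larger network, it can reach accuracy comparable to that of some of the best-performing deep models. In addition, ProtoPNet provides a level of interpretability that other interpretable deep models do not offer.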

English abstract:

When we are faced with challenging image classification tasks, we often explain our reasoning by dissecting the image, and pointing out prototypical aspects of one class or another. The mounting evidence for each of the classes helps us make our final decision. In this work, we introduce a deep network architecture, prototypical part network (ProtoPNet), that reasons in a similar way: the network dissects the image by finding prototypical parts, and combines evidence from the prototypes to make a final classification. The model thus reasons in a way that is qualitatively similar to the way ornithologists, physicians, and others would explain to people on how to solve challenging image classification tasks. The network uses only image-level labels for training without any annotations for parts of images. We demonstrate our method on the CUB-200-2011 dataset and the Stanford Cars dataset. Our experiments show that ProtoPNet can achieve comparable accuracy with its analogous non-interpretable counterpart, and when several ProtoPNets are combined into a larger network, it can achieve an accuracy that is on par with some of the best-performing deep models. Moreover, ProtoPNet provides a level of interpretability that is absent in other interpretable deep models.
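The reasoning described in the abstract (find the image patch that best matches each prototypical part, then combine the per-prototype evidence into class scores) can be illustrated with a toy sketch. This is not the authors' implementation. The function names, the two-dimensional "patch features", and the weight matrix are all hypothetical, and the log-ratio similarity is an assumed choice that the abstract itself does not specify. In the actual model the patch features would come from a convolutional backbone rather than a hand-written list.

```python
import math

def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def prototype_scores(patches, prototypes, eps=1e-4):
    """For each prototype, score how strongly its closest patch matches it.

    The log-ratio below is an assumed similarity: it is large when the
    closest patch is near the prototype and close to 0 when it is far.
    """
    scores = []
    for proto in prototypes:
        d_min = min(squared_distance(patch, proto) for patch in patches)
        scores.append(math.log((d_min + 1.0) / (d_min + eps)))
    return scores

def classify(patches, prototypes, class_weights):
    """Combine prototype evidence with a linear layer and pick the top class."""
    scores = prototype_scores(patches, prototypes)
    logits = [sum(w * s for w, s in zip(row, scores)) for row in class_weights]
    return logits.index(max(logits)), logits

# Toy data (hypothetical): three patch feature vectors from one image.
patches = [[0.0, 0.0], [1.0, 0.0], [0.9, 0.1]]
# Prototype 0 is tied to class 0, prototype 1 to class 1.
prototypes = [[1.0, 0.0], [0.0, 5.0]]
class_weights = [[1.0, 0.0],   # class 0 listens only to prototype 0
                 [0.0, 1.0]]   # class 1 listens only to prototype 1

predicted, logits = classify(patches, prototypes, class_weights)
print(predicted, logits)
```

In this toy case one patch coincides with prototype 0, so class 0 receives far more evidence than class 1. The interpretability claim corresponds to being able to point at which patch matched which prototype when explaining the prediction.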

Literature summary:
