Original title: Interpretability Beyond Feature Attribution: Quantitative Testing with Concept Activation Vectors (TCAV)

Authors: Been Kim, Martin Wattenberg, Justin Gilmer, Carrie Cai, James Wexler, Fernanda Viegas, Rory Sayres

Chinese abstract:

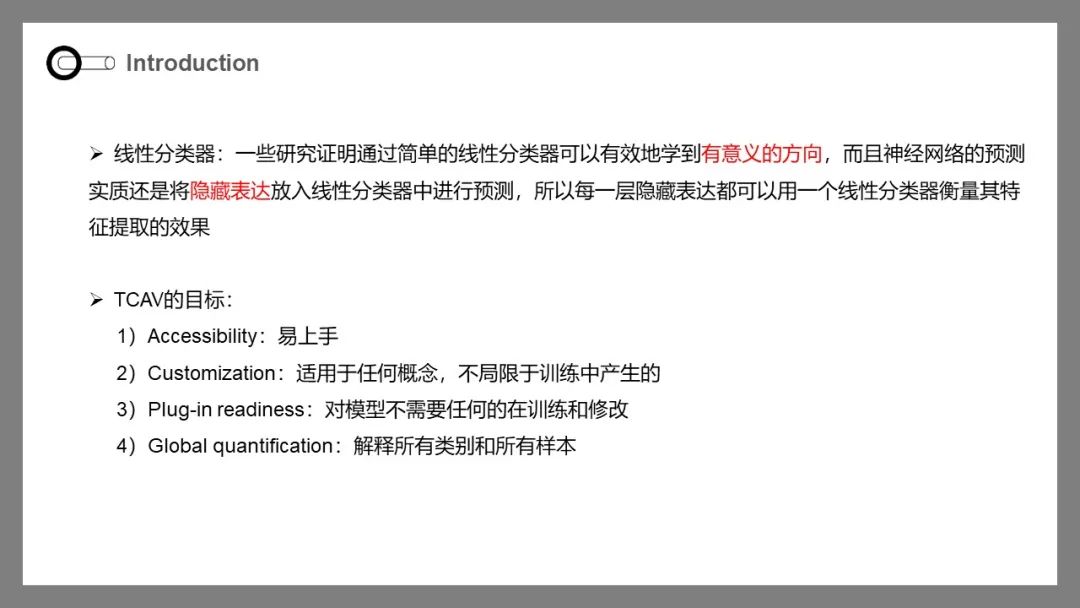

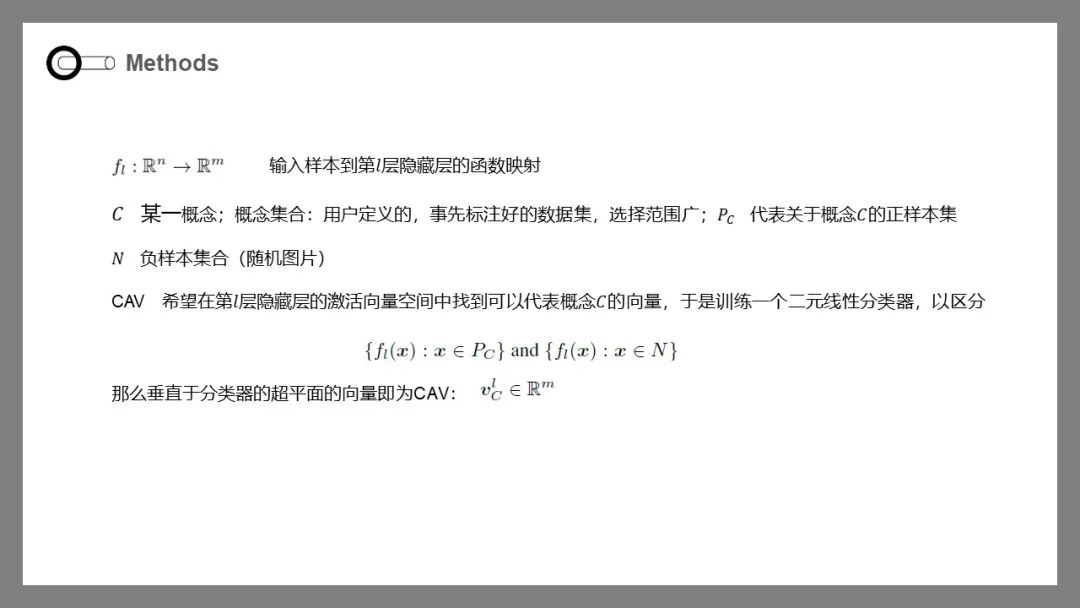

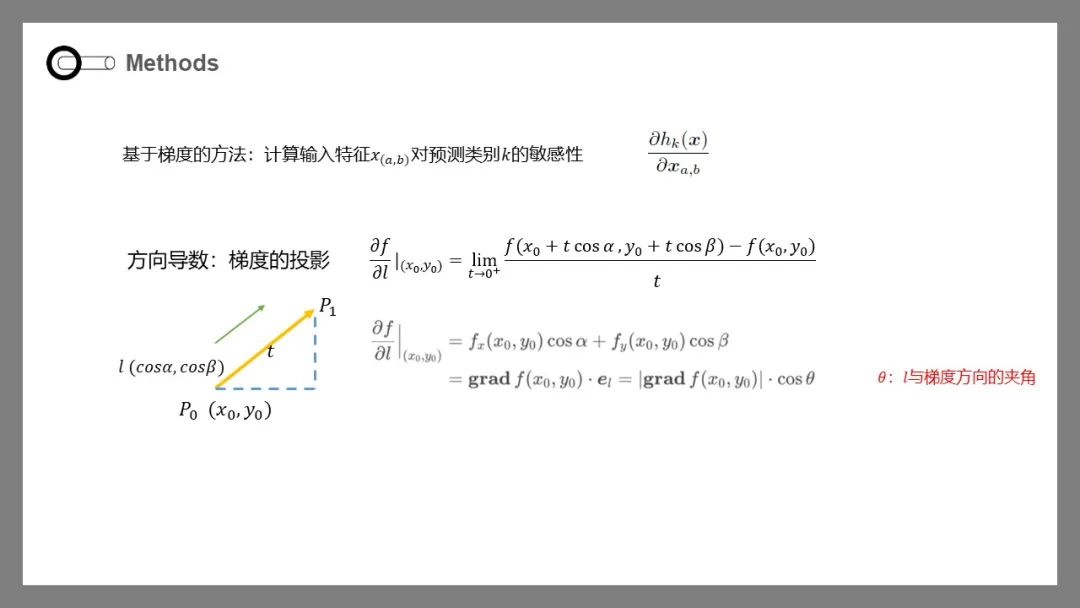

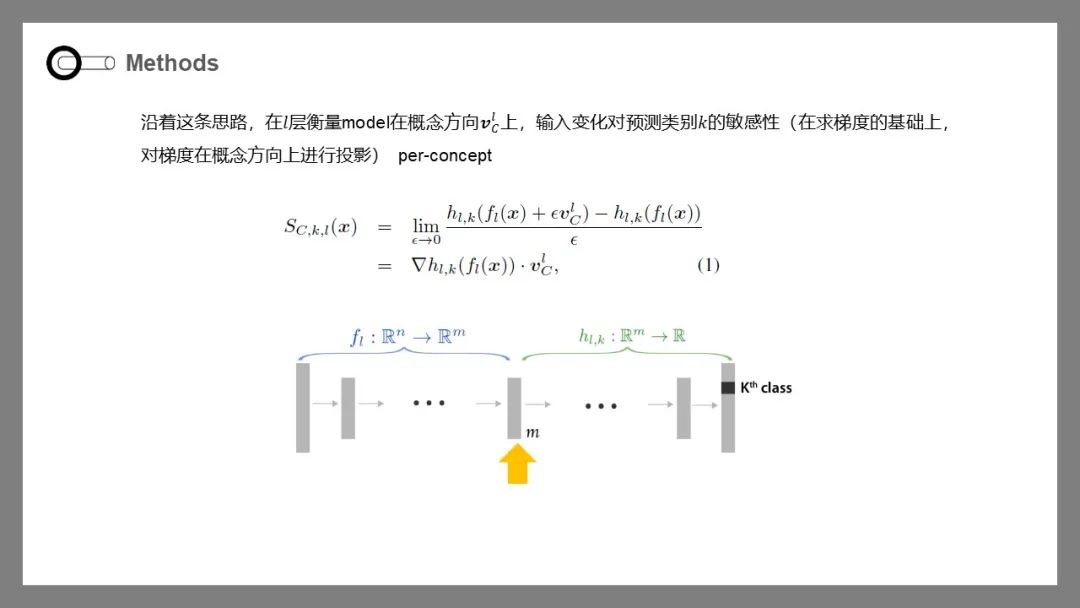

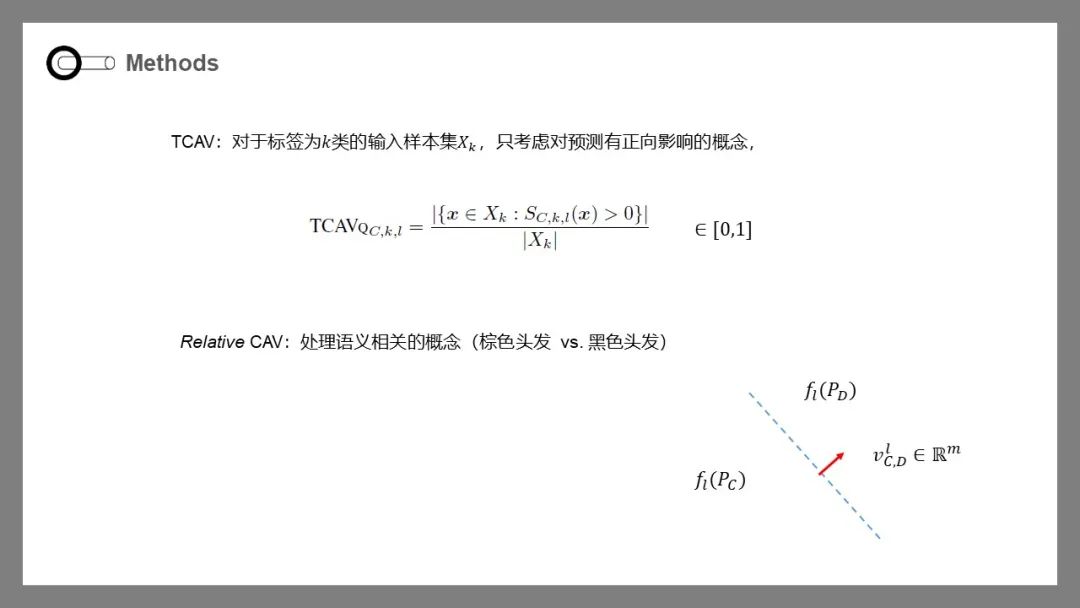

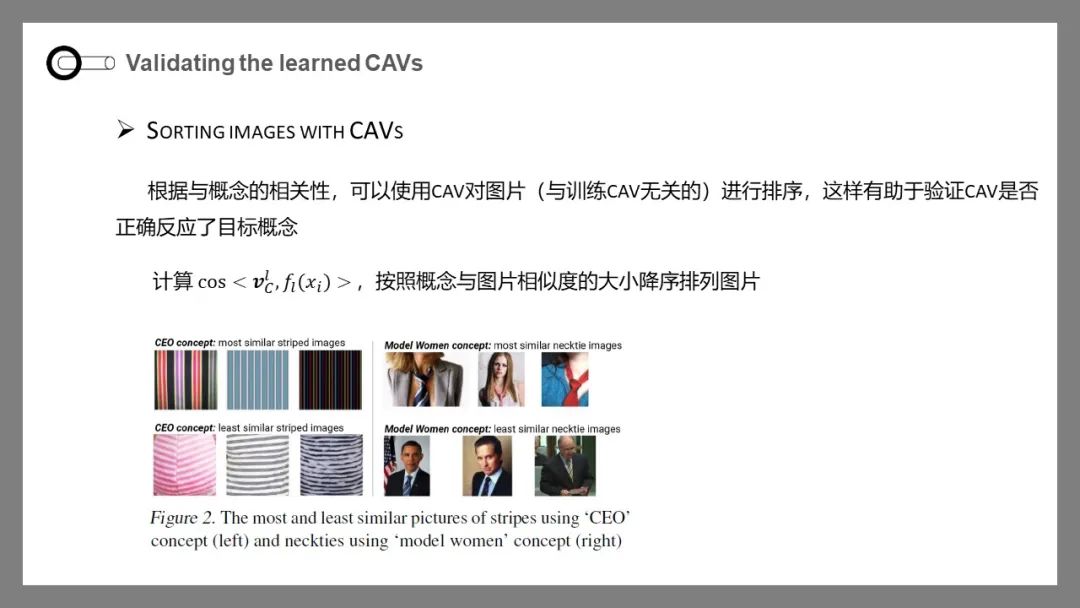

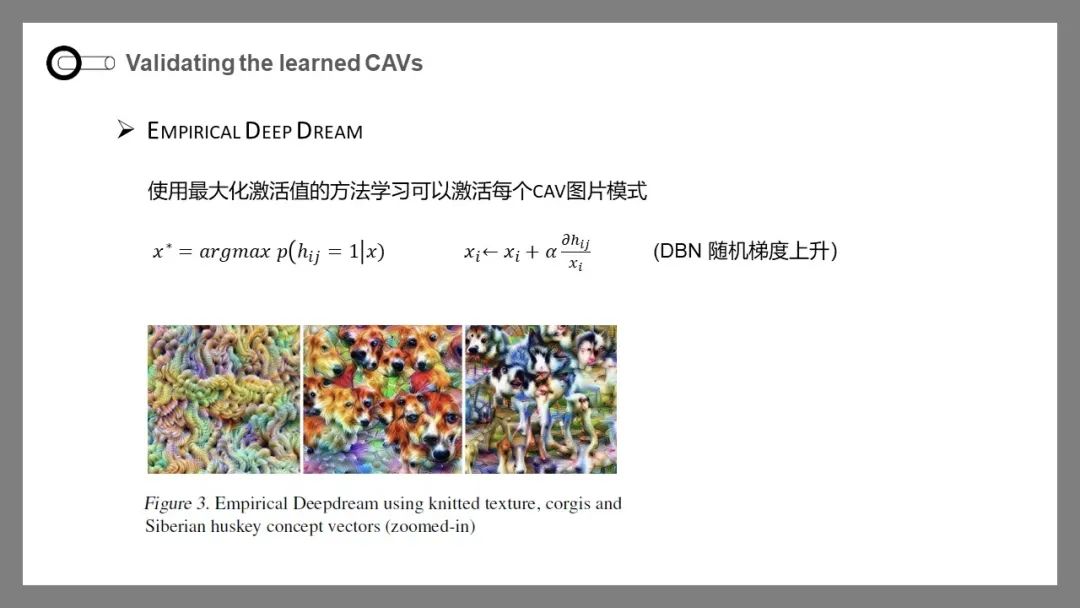

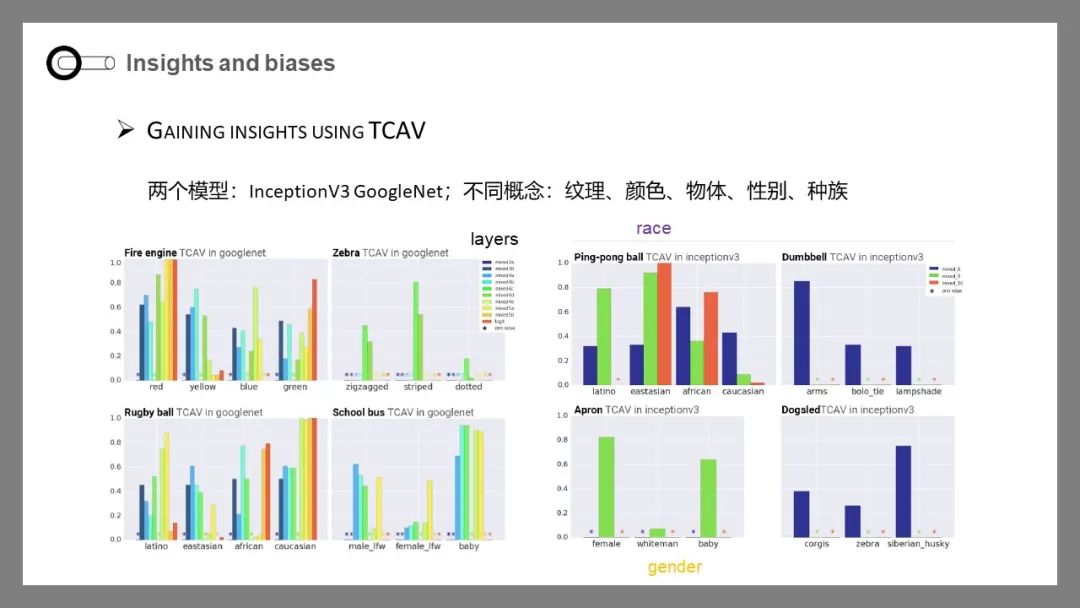

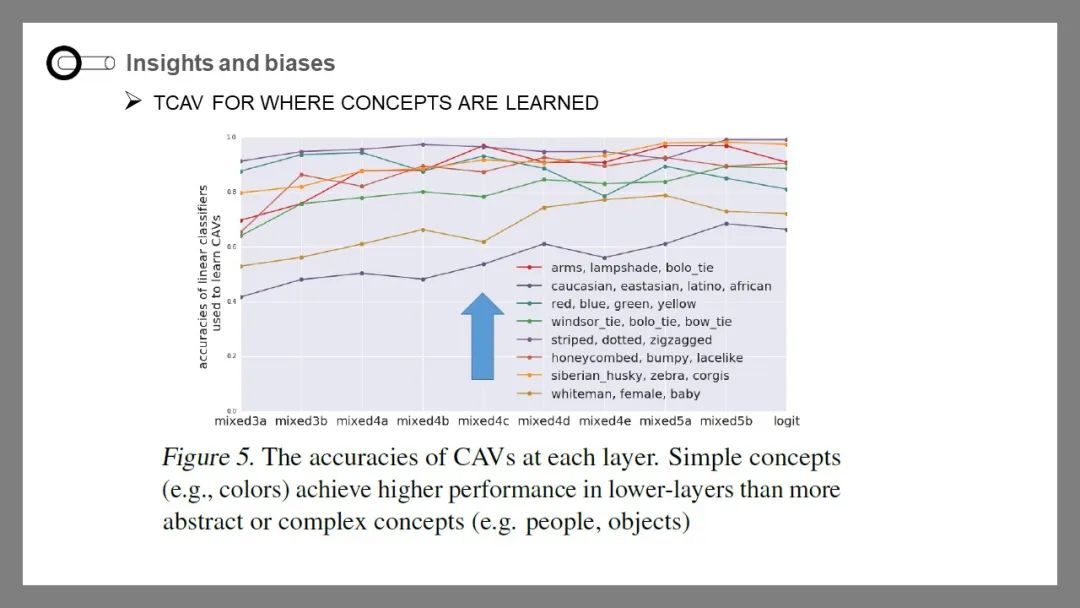

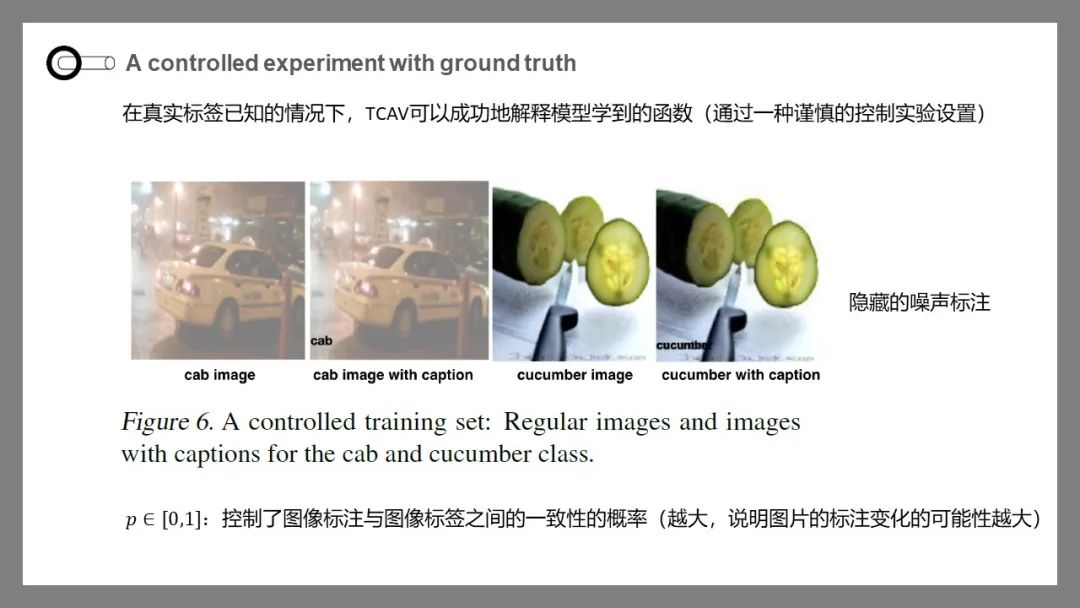

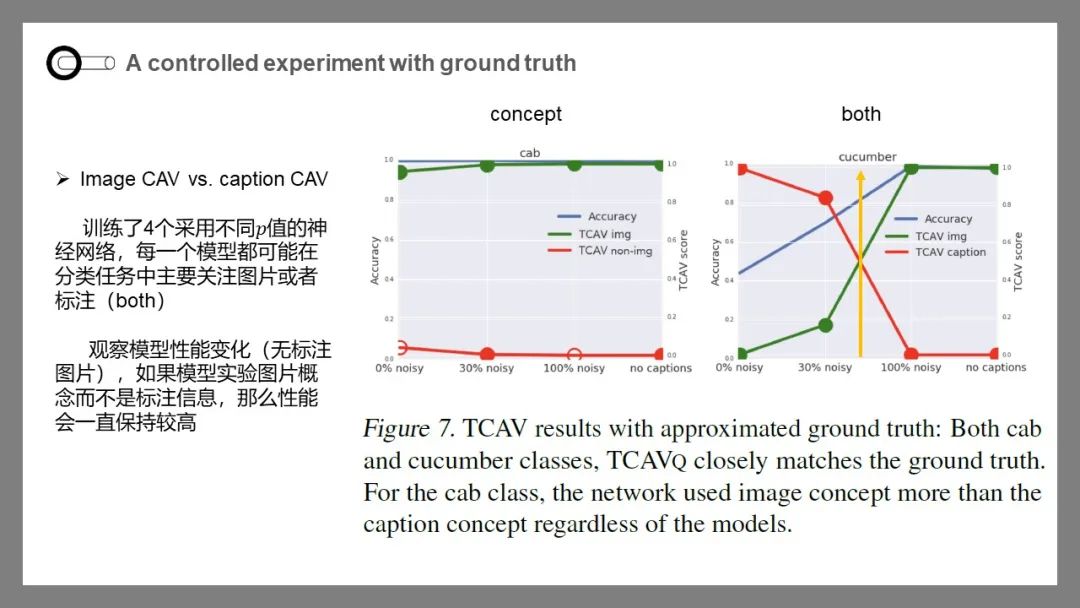

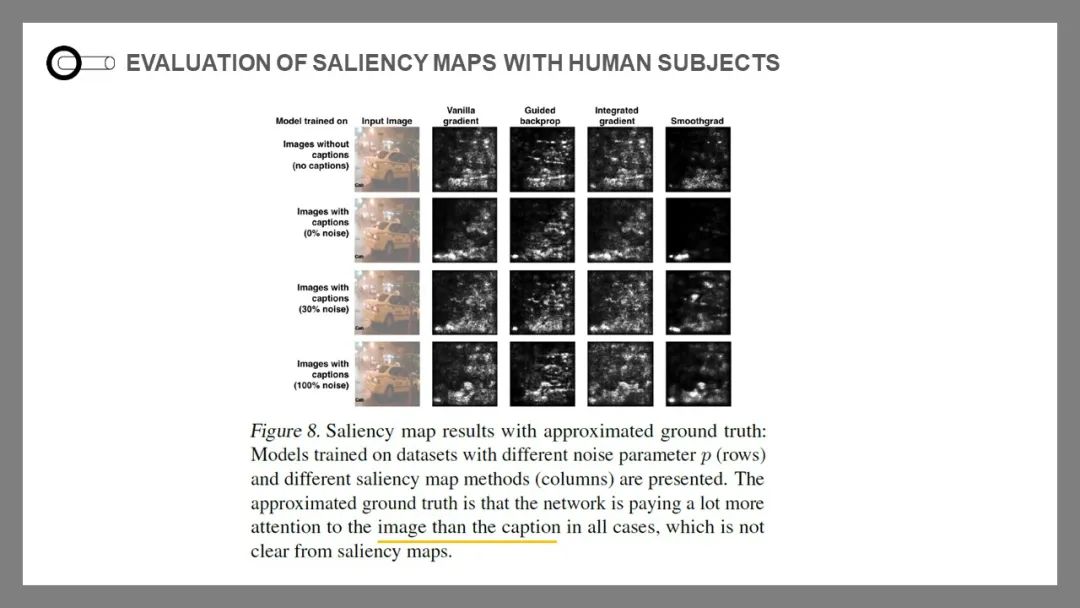

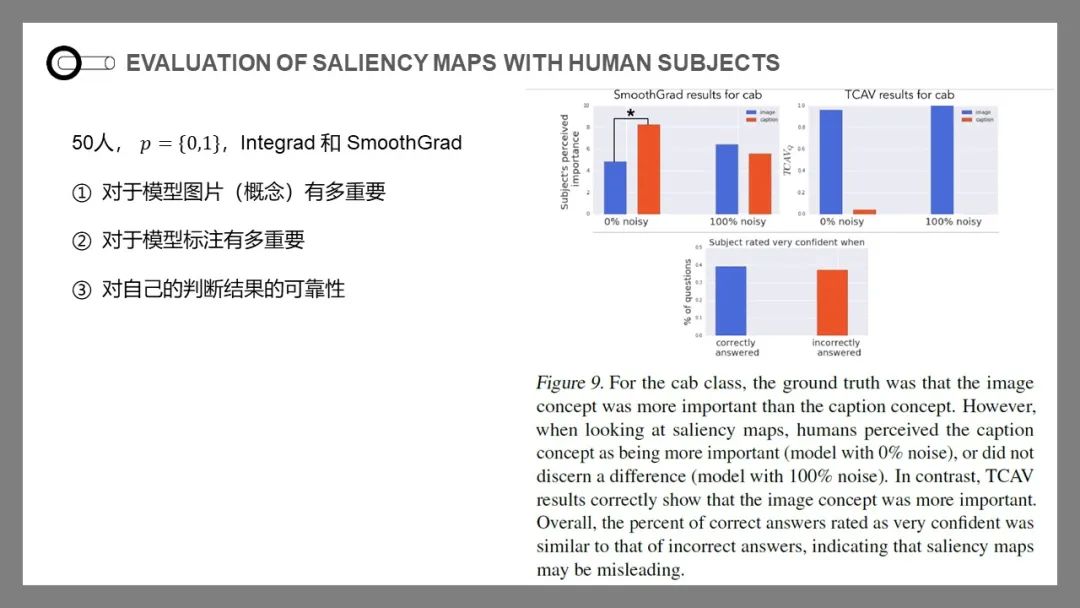

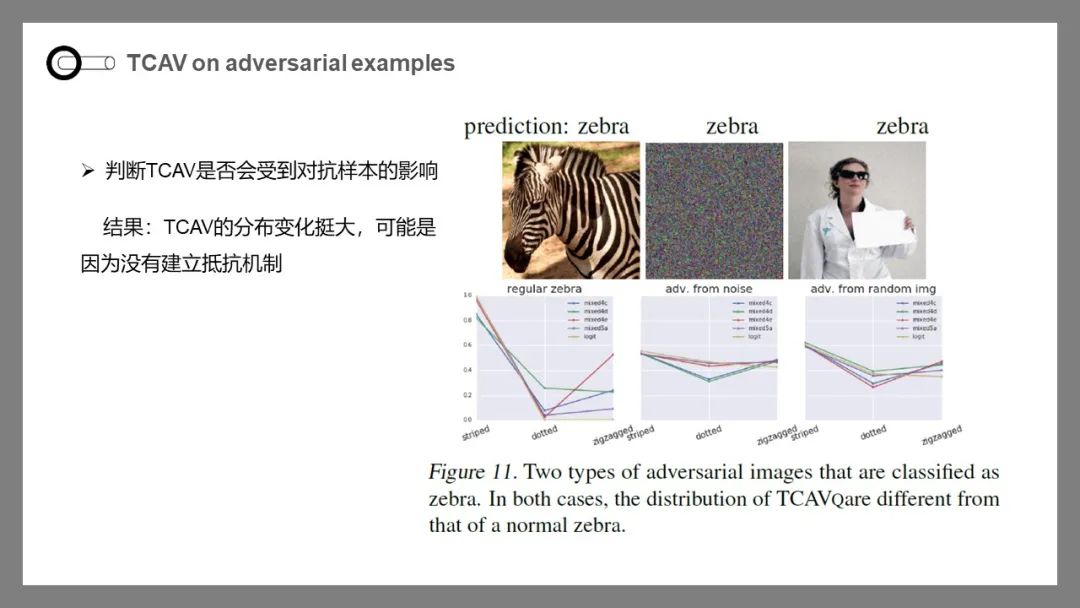

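Interpreting deep learning models has long been a challenge because of their size, complexity, and opaque internal state. In addition, many systems, such as image classifiers, operate on low-level features rather than high-level concepts. To address these challenges, we introduce Concept Activation Vectors (CAVs), which interpret a neural network's internal state in terms of human-friendly concepts. The key idea is to treat the network's high-dimensional internal state as an aid rather than an obstacle. We show how to use CAVs as part of a technique, Testing with CAVs (TCAV), that uses directional derivatives to quantify how important a user-defined concept is to a classification result, for example, how sensitive a prediction of zebra is to the presence of stripes. Using image classification as a testing ground, we describe how CAVs can be used to explore hypotheses and generate insights for a standard image classification network and for a medical application.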

English abstract:

The interpretation of deep learning models is a challenge due to their size, complexity, and often opaque internal state. In addition, many systems, such as image classifiers, operate on low-level features rather than high-level concepts. To address these challenges, we introduce Concept Activation Vectors (CAVs), which provide an interpretation of a neural net’s internal state in terms of human-friendly concepts. The key idea is to view the high-dimensional internal state of a neural net as an aid, not an obstacle. We show how to use CAVs as part of a technique, Testing with CAVs (TCAV), that uses directional derivatives to quantify the degree to which a user-defined concept is important to a classification result: for example, how sensitive a prediction of zebra is to the presence of stripes. Using the domain of image classification as a testing ground, we describe how CAVs may be used to explore hypotheses and generate insights for a standard image classification network as well as a medical application.
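The directional-derivative idea in the abstract can be made concrete with a small numerical sketch. The code below is an illustration under stated assumptions, not the authors' implementation: the "layer activations" are synthetic 8-dimensional vectors, the class logit is a made-up softplus head with an analytic gradient, and the linear separator is a plain NumPy logistic regression. The names `train_cav`, `tcav_score`, and `head_weights` are hypothetical. It fits a linear classifier that separates concept activations from random ones, takes the unit normal of its decision boundary as the CAV, and reports the fraction of class inputs whose logit increases when the activation moves along the CAV.

```python
import numpy as np


def train_cav(concept_acts, random_acts, steps=500, lr=0.1):
    """Fit a logistic-regression separator (concept vs. random activations)
    and return the unit normal of its decision boundary as the CAV."""
    X = np.vstack([concept_acts, random_acts])
    y = np.concatenate([np.ones(len(concept_acts)), np.zeros(len(random_acts))])
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(concept)
        w -= lr * (X.T @ (p - y)) / len(y)      # gradient step on the weights
        b -= lr * np.mean(p - y)                # gradient step on the bias
    return w / np.linalg.norm(w)


def tcav_score(logit_grads, cav):
    """Fraction of inputs whose directional derivative along the CAV is positive."""
    sensitivities = logit_grads @ cav  # one directional derivative per input
    return float(np.mean(sensitivities > 0))


# --- Toy setup (all values below are assumptions for illustration) ---
rng = np.random.default_rng(0)
dim = 8
concept_direction = np.zeros(dim)
concept_direction[0] = 1.0  # pretend "stripes" lives along axis 0

# Synthetic layer activations: concept examples are shifted along the concept axis.
concept_acts = rng.normal(size=(100, dim)) + 3.0 * concept_direction
random_acts = rng.normal(size=(100, dim))
class_acts = rng.normal(size=(50, dim)) + 3.0 * concept_direction  # e.g. "zebra" inputs

# Hypothetical class logit h(a) = sum_j head_weights[j] * softplus(a_j),
# whose gradient with respect to a is head_weights * sigmoid(a).
head_weights = np.full(dim, 0.1)
head_weights[0] = 2.0
logit_grads = head_weights * (1.0 / (1.0 + np.exp(-class_acts)))

cav = train_cav(concept_acts, random_acts)
score = tcav_score(logit_grads, cav)
print("cosine(CAV, concept axis):", float(cav @ concept_direction))
print("TCAV score:", score)
```

In a real network the gradients would come from automatic differentiation at the chosen layer rather than from a closed-form expression, and the score would normally be compared against CAVs trained on different random sets to check that it is not an artifact of one particular split.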

Literature summary:
