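1. Use Cases

When read pressure on the primary is high, or when you want to strengthen the database's disaster recovery capability, you need to add standby nodes. When some standby nodes in the cluster fail and cannot be repaired quickly, you can first remove the failed nodes so that the cluster state stays normal.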

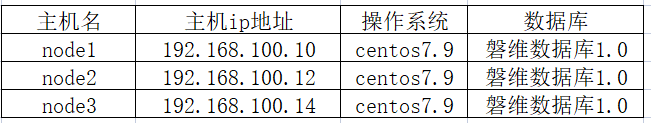

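2. Environment

I happened to have an already-built 3-node cluster on hand, so this walkthrough first shrinks the cluster by removing the standby node node3 (192.168.100.14), and then expands the cluster by adding node3 back.

3. Shrinking: Removing a Node

The shrink tool gs_dropnode:

- Supports shrinking a one-primary, multi-standby cluster down to a single node.

- Supports removing a standby that has failed.

- Supports adding and removing standby nodes online, that is, without affecting business on the primary.

Notes on shrinking:

- Removing a standby node can only be done from the primary node.

- During the operation, do not run a primary/standby switchover or failover on any other standby node.

- Do not run gs_expansion on the primary node at the same time.

- Do not run the same gs_dropnode command twice at the same time.

- Before removing a node, make sure SSH mutual trust for the database administration user is set up between the primary and standby nodes. The command must be run as the database administration user.

- Before running the command, load the primary host's database environment variables with the source command.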
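The mutual-trust requirement in the notes above can be checked before running gs_dropnode. The following is a minimal sketch, not part of the PanWeiDB tooling: the function name `check_ssh_trust` is hypothetical, and it only assumes that trust means passwordless SSH for the current user.

```shell
#!/usr/bin/env bash
# Report which hosts the current user can reach over passwordless SSH.
# Host names are passed as arguments; nothing is changed on any host.
check_ssh_trust() {
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true \
            </dev/null >/dev/null 2>&1; then
            echo "OK   $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}

# Example (run as the database administration user, omm in this article):
#   check_ssh_trust node1 node2 node3
```

A non-zero return value means at least one host would have prompted for a password or was unreachable.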

Check the cluster status

[omm@node1 ~]$ su - omm

[omm@node1 ~]$ gs_om -t status --detail

[ CMServer State ]

node node_ip instance state

---------------------------------------------------------------------

1 node1 192.168.100.10 1 /apps/data/cmserver/cm_server Standby

2 node2 192.168.100.12 2 /apps/data/cmserver/cm_server Primary

3 node3 192.168.100.14 3 /apps/data/cmserver/cm_server Standby

[ Cluster State ]

cluster_state : Normal

redistributing : No

balanced : No

current_az : AZ_ALL

[ Datanode State ]

node node_ip instance state

-----------------------------------------------------------------

1 node1 192.168.100.10 6001 /apps/data/data P Standby Normal

2 node2 192.168.100.12 6002 /apps/data/data S Primary Normal

3 node3 192.168.100.14 6003 /apps/data/data S Standby Normal
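Since gs_dropnode only runs on the primary, it helps to read the primary's node name out of the status output instead of guessing. A small sketch, assuming the `[ Datanode State ]` layout shown above, where each row ends with a role and a state; `find_primary_node` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the node name of the primary datanode, reading
# `gs_om -t status --detail` output on stdin.
find_primary_node() {
    awk '
        # Enter the datanode section.
        /^\[ *Datanode State *\]/ { in_dn = 1; next }
        # Any other section header leaves it.
        /^\[/ { in_dn = 0 }
        # Datanode rows end with a role and a state, e.g. "S Primary Normal".
        in_dn && NF >= 7 && $(NF - 1) == "Primary" { print $2 }
    '
}

# Example:
#   gs_om -t status --detail | find_primary_node
```

For the output above this prints node2, which is where the removal has to be run.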

Remove the standby node

[omm@node1 ~]$ gs_dropnode -U omm -G dbgrp -h 192.168.100.14

[GAUSS-35804] The dropnode operation can only be executed at the primary node.

Note: the gs_dropnode command must be run on the primary node. Log in to node2 (the primary) and remove node3 from there.

[omm@node2 ~]$ gs_dropnode -U omm -G dbgrp -h 192.168.100.14

The target node to be dropped is (['node3'])

Do you want to continue to drop the target node (yes/no)?yes

Drop node start with CM node.

Drop node with CM node is running.

[gs_dropnode]Start to drop nodes of the cluster.

[gs_dropnode]Start to stop the target node node3.

[gs_dropnode]End of stop the target node node3.

[gs_dropnode]Start to backup parameter config file on node1.

[gs_dropnode]End to backup parameter config file on node1.

[gs_dropnode]The backup file of node1 is /apps/tmp/gs_dropnode_backup20240320230307/parameter_node1.tar

[gs_dropnode]Start to parse parameter config file on node1.

[gs_dropnode]End to parse parameter config file on node1.

[gs_dropnode]Start to parse backup parameter config file on node1.

[gs_dropnode]End to parse backup parameter config file node1.

[gs_dropnode]Start to set openGauss config file on node1.

[gs_dropnode]End of set openGauss config file on node1.

[gs_dropnode]Start to backup parameter config file on node2.

[gs_dropnode]End to backup parameter config file on node2.

[gs_dropnode]The backup file of node2 is /apps/tmp/gs_dropnode_backup20240320230313/parameter_node2.tar

[gs_dropnode]Start to parse parameter config file on node2.

[gs_dropnode]End to parse parameter config file on node2.

[gs_dropnode]Start to parse backup parameter config file on node2.

[gs_dropnode]End to parse backup parameter config file node2.

[gs_dropnode]Start to set openGauss config file on node2.

[gs_dropnode]End of set openGauss config file on node2.

[gs_dropnode]Start of set pg_hba config file on node1.

[gs_dropnode]End of set pg_hba config file on node1.

[gs_dropnode]Start of set pg_hba config file on node2.

[gs_dropnode]End of set pg_hba config file on node2.

[gs_dropnode]Start to set repl slot on node2.

[gs_dropnode]Start to get repl slot on node2.

[gs_dropnode]End of set repl slot on node2.

Stopping node.

=========================================

Successfully stopped node.

=========================================

End stop node.

Generate drop flag file on drop node node3 successfully.

[gs_dropnode]Start to modify the cluster static conf.

[gs_dropnode]End of modify the cluster static conf.

Restart cluster ...

Stopping cluster.

=========================================

Successfully stopped cluster.

=========================================

End stop cluster.

Remove dynamic_config_file and CM metadata directory on all nodes.

Starting cluster.

======================================================================

Successfully started primary instance. Wait for standby instance.

======================================================================

.

Successfully started cluster.

======================================================================

cluster_state : Normal

redistributing : No

node_count : 2

Datanode State

primary : 1

standby : 1

secondary : 0

cascade_standby : 0

building : 0

abnormal : 0

down : 0

Failed to reset switchover the cluster. Command: "source /home/omm/.bashrc ; cm_ctl switchover -a -t 0".

Output: "cm_ctl: create ssl connection failed.

cm_ctl: socket is [4], 462 : create ssl failed:

cm_ctl: send switchover msg to cm_server, connect fail node_id:0, data_path:.".

[gs_dropnode] Success to drop the target nodes.

Check the cluster status again

[omm@node1 ~]$ gs_om -t status --detail

[ CMServer State ]

node node_ip instance state

---------------------------------------------------------------------

1 node1 192.168.100.10 1 /apps/data/cmserver/cm_server Standby

2 node2 192.168.100.12 2 /apps/data/cmserver/cm_server Primary

[ Cluster State ]

cluster_state : Normal

redistributing : No

balanced : No

current_az : AZ_ALL

[ Datanode State ]

node node_ip instance state

-----------------------------------------------------------------

1 node1 192.168.100.10 6001 /apps/data/data P Standby Normal

2 node2 192.168.100.12 6002 /apps/data/data S Primary Normal

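Note: node3 has been removed from the cluster.

Whether to perform the following steps depends on your situation:

Clean up the standby node's data

-- as the omm user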
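The same check can be scripted. This is a rough sketch that only tests whether a node name still appears as the second column of any status row; `node_in_status` is a hypothetical helper, and the column position is taken from the output shown in this article.

```shell
#!/usr/bin/env bash
# Exit 0 if the given node name still appears as a row in the
# `gs_om -t status --detail` output read from stdin, else exit 1.
node_in_status() {
    local node="$1"
    awk -v node="$node" '
        $2 == node { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Example:
#   if gs_om -t status --detail | node_in_status node3; then
#       echo "node3 is still listed"
#   else
#       echo "node3 is no longer listed"
#   fi
```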

[omm@node3 data]$ gs_uninstall --delete-data -L

This is a node where the gs_dropnode command has been executed. Uninstall a single node instead of the gs_dropnode command.

Checking uninstallation.

Successfully checked uninstallation.

Stopping the cluster.

Successfully stopped local node.

Successfully deleted instances.

Uninstalling application.

Successfully uninstalled application.

Uninstallation succeeded.

[omm@node3 data]$ pwd

/apps/data/data

[omm@node3 data]$ ls

[omm@node3 data]$

Remove SSH mutual trust

-- as the root and omm users

[omm@node3 ~]$ mv .ssh .sshbak

[omm@node3 ~]$ su - root

Password:

Last login: Thu Mar 20 15:09:45 CST 2024 from 192.168.100.1 on pts/0

[root@node3 ~]# mv .ssh .sshbak

Clean up the standby node's software

[root@node1 script]# scp gs_postuninstall root@node3:/opt/software/panweidb/script/

root@node3's password:

gs_postuninstall

-- log in to node3

[root@node3 script]# pwd

/opt/software/panweidb/script

[root@node3 script]# ./gs_postuninstall -U omm -X /opt/software/panweidb/cluster_config.xml --delete-user --delete-group -L

Parsing the configuration file.

Successfully parsed the configuration file.

Check log file path.

Successfully checked log file path.

Checking unpreinstallation.

Successfully checked unpreinstallation.

Deleting the instance's directory.

Successfully deleted the instance's directory.

Deleting the temporary directory.

Successfully deleted the temporary directory.

Deleting software packages and environmental variables of the local node.

Successfully deleted software packages and environmental variables of the local nodes.

Deleting local OS user.

Successfully deleted local OS user.

Deleting local node's logs.

Successfully deleted local node's logs.

Successfully cleaned environment.

Clean up the standby node's directories

Remove the standby's directories according to the cluster_config.xml file.
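To see which directories the file refers to before deleting anything, the path-valued parameters can be listed first. This is a sketch under an assumption: it expects the openGauss-style `<PARAM name="..." value="..."/>` layout on single lines, which you should confirm against your own cluster_config.xml. `list_config_paths` is a hypothetical helper and it only prints, it never removes anything.

```shell
#!/usr/bin/env bash
# Print name=value for every PARAM whose value starts with "/",
# i.e. the entries that look like directories or files.
# $1: path to the cluster configuration XML.
list_config_paths() {
    sed -n 's|.*<PARAM name="\([^"]*\)" value="\(/[^"]*\)".*|\1=\2|p' "$1"
}

# Example:
#   list_config_paths /opt/software/panweidb/cluster_config.xml
```

The listing covers every node in the file, so review it and remove only the directories that belong to the dropped standby.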

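4. Expansion: Adding a Node

The expansion tool gs_expansion:

- Supports expanding from a single node, or from a one-primary, multi-standby cluster, up to at most one primary and eight standbys.

- Supports adding cascade standbys.

- Supports adding standby nodes while the cluster contains a failed standby.

Notes on expansion:

- The PanWeiDB installation package must exist on the database primary host.

- On the new standby host, create the same user and user group as on the primary.

- SSH mutual trust for both the root user and the database administration user must be set up between the existing database nodes and the new node.

- Configure the XML file correctly: start from the configuration file of the installed database and add the information for the standby being added.

- The expansion command can only be run as the root user.

- Do not run gs_dropnode on the primary node to remove other standbys at the same time.

- Before running the expansion command, load the primary host's database environment variables.

- The new standby's operating system must match the primary's.

- During the operation, do not run a primary/standby switchover or failover on any other standby node.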
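Two of these prerequisites, running as root and having the environment variables loaded, are easy to check up front. A minimal sketch: it checks only GAUSSHOME and PGHOST, which are the two variables gs_expansion complained about in this walkthrough, so other variables may also be required. `check_expansion_env` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print one line per unmet prerequisite; return non-zero if any is unmet.
# $1: numeric uid to check (defaults to the current user's uid).
check_expansion_env() {
    local uid="${1:-$(id -u)}" rc=0 var val
    if [ "$uid" -ne 0 ]; then
        echo "not running as root (uid=$uid)"
        rc=1
    fi
    for var in GAUSSHOME PGHOST; do
        # Look up the value of the variable whose name is stored in $var.
        eval "val=\${$var:-}"
        if [ -z "$val" ]; then
            echo "environment variable $var is not set"
            rc=1
        fi
    done
    return "$rc"
}

# Example (run in the shell that will launch gs_expansion):
#   check_expansion_env && echo "prerequisites look fine"
```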

Add the standby node

[omm@node1 panweidb]$ gs_expansion -U omm -G dbgrp -h 192.168.100.14 -X ./clusterconfig.xml

[GAUSS-50104] : Only a user with the root permission can run this script.

[root@node1 script]# ./gs_expansion -U omm -G dbgrp -h 192.168.100.14 -X /opt/software/panweidb/cluster_config.xml

[GAUSS-51802] : Failed to obtain the environment variable "GAUSSHOME", please import environment variable.

[root@node1 script]# ./gs_expansion -U omm -G dbgrp -h 192.168.10.14 -X /opt/software/panweidb/cluster_config.xml

[GAUSS-51802] : Failed to obtain the environment variable "PGHOST", please import environment variable.

Note: expansion must be run as the root user, and the GAUSSHOME and PGHOST environment variables must be set.

export GAUSSHOME=/apps/app

export PGHOST=/apps/tmp

[root@node1 tool]# ./script/gs_expansion -U omm -G dbgrp -h 192.168.100.14 -X /opt/software/panweidb/cluster_config.xml

Start expansion with cluster manager component.

Start to send soft to each standby nodes.

End to send soft to each standby nodes.

Success to send XML to new nodes

Start to perform perinstall on nodes: ['node3']

Preinstall command is: /apps/tool/script/gs_preinstall -U omm -G dbgrp -X /opt/software/panweidb/cluster_config.xml -L --non-interactive 2>&1

Success to perform perinstall on nodes ['node3']

Success to change user to [omm]

Installing applications on all new nodes.

Install on new node output: [SUCCESS] node3:

Using omm:dbgrp to install database.

Using installation program path : /apps/app

Command for creating symbolic link: ln -snf /apps/app_9a7e96bc /apps/app.

Decompressing bin file.

Decompress CM package command: export LD_LIBRARY_PATH=$GPHOME/script/gspylib/clib:$LD_LIBRARY_PATH && tar -zxf "/apps/tool/PanWeiDB-1.0.0-CentOS-64bit-cm.tar.gz" -C "/apps/app"

Decompress CM package successfully.

Successfully decompressed bin file.

Modifying Alarm configuration.

Modifying user's environmental variable $GAUSS_ENV.

Successfully modified user's environmental variable $GAUSS_ENV.

Fixing file permission.

Set Cgroup config file to appPath.

Successfully Set Cgroup.

Successfully installed APP on nodes ['node3'].

success to send all CA file.

Success to change user to [omm]

Success to init instance on nodes ['node3']

Start to generate and send cluster static file.

End to generate and send cluster static file.

Ready to perform command on node [node3]. Command is : source /home/omm/.bashrc;gs_guc set -D /apps/data/data -h 'host all omm 192.168.100.14/32 trust' -h 'host all all 192.168.100.14/32 sha256'

Successfully set hba on all nodes.

Success to change user to [omm]

Stopping cluster.

=========================================

Successfully stopped cluster.

=========================================

End stop cluster.

Remove dynamic_config_file and CM metadata directory on all nodes.

Starting cluster.

======================================================================

[GAUSS-51607] : Failed to start cluster. Error:

cm_ctl: checking cluster status.

cm_ctl: checking cluster status.

cm_ctl: checking finished in 780 ms.

cm_ctl: start cluster.

cm_ctl: start nodeid: 1

cm_ctl: start nodeid: 2

cm_ctl: start nodeid: 3

............................

cm_ctl: start cluster failed in (30)s.

The cluster may continue to start in the background.

If you want to see the cluster status, please try command gs_om -t status.

If you want to stop the cluster, please try command gs_om -t stop.

Process Process-3:

Traceback (most recent call last):

File "/opt/python/Python-3.6.9/lib/python3.6/multiprocessing/process.py", line 258, in _bootstrap

self.run()

File "/opt/python/Python-3.6.9/lib/python3.6/multiprocessing/process.py", line 93, in run

self._target(*self._args, **self._kwargs)

File "/apps/tool/script/impl/expansion/expansion_impl_with_cm.py", line 476, in do_start

cm_component.startCluster(self.user, timeout=DefaultValue.TIMEOUT_EXPANSION_SWITCH)

File "/apps/tool/script/gspylib/component/CM/CM_OLAP/CM_OLAP.py", line 289, in startCluster

" Error: \n%s" % result_set[1])

Exception: [GAUSS-51607] : Failed to start cluster. Error:

cm_ctl: checking cluster status.

cm_ctl: checking cluster status.

cm_ctl: checking finished in 780 ms.

cm_ctl: start cluster.

cm_ctl: start nodeid: 1

cm_ctl: start nodeid: 2

cm_ctl: start nodeid: 3

...................

cm_ctl: start cluster successfully.
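In the run above, the first start attempt gave up after 30 seconds while the cluster kept starting in the background, so it is worth polling the status rather than trusting the first error. A sketch of such a wait loop: `wait_for_normal` is a hypothetical helper, and it assumes the status command prints a `cluster_state : Normal` line as gs_om does in this article.

```shell
#!/usr/bin/env bash
# Poll a status command until it reports "cluster_state : Normal".
# $1: maximum number of checks, $2: seconds between checks,
# remaining arguments: the status command to run.
wait_for_normal() {
    local attempts="$1" interval="$2" i=1
    shift 2
    while [ "$i" -le "$attempts" ]; do
        if "$@" 2>/dev/null |
            grep -Eq '^[[:space:]]*cluster_state[[:space:]]*:[[:space:]]*Normal'; then
            echo "cluster_state is Normal after $i check(s)"
            return 0
        fi
        # Do not sleep after the final check.
        [ "$i" -lt "$attempts" ] && sleep "$interval"
        i=$((i + 1))
    done
    echo "cluster_state is not Normal after $attempts check(s)" >&2
    return 1
}

# Example (as the omm user): check every 10 seconds, up to 30 times.
#   wait_for_normal 30 10 gs_om -t status
```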

Check the cluster status

[omm@node2 apps]$ gs_om -t status --detail

[ CMServer State ]

node node_ip instance state

---------------------------------------------------------------------

1 node1 192.168.100.10 1 /apps/data/cmserver/cm_server Standby

2 node2 192.168.100.12 2 /apps/data/cmserver/cm_server Primary

3 node3 192.168.100.14 3 /apps/data/cmserver/cm_server Standby

[ Cluster State ]

cluster_state : Normal

redistributing : No

balanced : No

current_az : AZ_ALL

[ Datanode State ]

node node_ip instance state

-----------------------------------------------------------------

1 node1 192.168.100.10 6001 /apps/data/data P Standby Normal

2 node2 192.168.100.12 6002 /apps/data/data S Primary Normal

3 node3 192.168.100.14 6003 /apps/data/data S Standby Normal

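The expansion is complete.

5. Summary

Always request a change window and perform node expansion and shrinking during off-peak business hours, so that high machine load during the operation does not adversely affect the business.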