1. Environment preparation

Three local VMs, each with 2 CPU cores and 4 GB of RAM

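Components needed on the master node

- docker

- kubectl: command-line tool for interacting with the cluster

- kubeadm: cluster bootstrap tool

Components needed on the worker nodes

- docker

- kubelet: manages the containers of each pod and keeps them running healthily

- kube-proxy: network proxy that handles networking for the node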

Installation

vi /etc/resolv.conf

nameserver 8.8.8.8

Hostname

Set the hostname of the three machines to master, node1, and node2 respectively

hostnamectl set-hostname master

or

vi /etc/hostname

master

Configure the hosts file (append, so that the existing localhost entries are kept)

cat >> /etc/hosts <<here
192.168.0.211 master
192.168.0.212 node1
192.168.0.213 node2
here
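Appending with a heredoc adds the same three lines again every time the step is rerun. A minimal sketch of an idempotent variant follows; the `add_host` helper and the `HOSTS_FILE` variable are hypothetical names introduced here, and the demo writes to a scratch file rather than `/etc/hosts`.

```shell
# Append "IP name" to a hosts file only when the name is not present yet.
# HOSTS_FILE defaults to a scratch file; set it to /etc/hosts on a real node.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
    local ip="$1" name="$2"
    # Look for the name as a whole field on any non-comment line.
    if ! awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$HOSTS_FILE"; then
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
    fi
}

add_host 192.168.0.211 master
add_host 192.168.0.212 node1
add_host 192.168.0.213 node2
add_host 192.168.0.211 master   # rerun: nothing is appended

cat "$HOSTS_FILE"
```

On a real node this would be called as `HOSTS_FILE=/etc/hosts` followed by the same `add_host` lines, run as root.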

ulimit

cat <<EOF>>/etc/security/limits.conf
* soft nofile 1000000
* hard nofile 1000000
* soft stack 32768
* hard stack 32768
EOF

Set the time zone

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

Configure the yum repository

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-\$basearch
enabled=1
gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl
EOF

sed -e "s|packages.cloud.google.com|mirrors.ustc.edu.cn/kubernetes|" \
-e "s|gpgcheck=1|gpgcheck=0|" \
-i /etc/yum.repos.d/kubernetes.repo

Upgrade the kernel

cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)

uname -r

3.10.0-1160.el7.x86_64

# Import the ELRepo repository public key

rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org

# Install ELRepo on CentOS 7

yum -y install https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm

# List the kernel packages available in elrepo-kernel

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

Install the long-term support kernel (kernel-lt)

yum --enablerepo=elrepo-kernel install -y kernel-lt

List the kernel boot entries currently installed on the system

awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg

Edit /etc/default/grub and change GRUB_DEFAULT=saved to

GRUB_DEFAULT=0
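To see why index 0 is the one to pick, it can help to run the same awk listing against a small sample file. The sketch below assumes the newly installed kernel-lt entry is listed first, which is the usual ordering on CentOS 7; the menu titles and the `5.4.0-example` version string are made up for illustration.

```shell
# Build a tiny stand-in for /etc/grub2.cfg with two hypothetical entries.
sample_cfg="$(mktemp)"
cat > "$sample_cfg" <<'EOF'
menuentry 'CentOS Linux (5.4.0-example.el7.elrepo.x86_64) 7 (Core)' --class centos {
}
menuentry 'CentOS Linux (3.10.0-1160.el7.x86_64) 7 (Core)' --class centos {
}
EOF

# Same awk program as above: split on single quotes, number each menuentry from 0.
list_entries() {
    awk -F\' '$1=="menuentry " {print i++ " : " $2}' "$1"
}

list_entries "$sample_cfg"
# 0 : CentOS Linux (5.4.0-example.el7.elrepo.x86_64) 7 (Core)
# 1 : CentOS Linux (3.10.0-1160.el7.x86_64) 7 (Core)
```

The number printed in front of each title is the value GRUB_DEFAULT expects, so if the new kernel shows up at a different position on your machine, use that index instead of 0.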

Regenerate the GRUB configuration

grub2-mkconfig -o /boot/grub2/grub.cfg

Reboot

reboot

Confirm that the new kernel version is running

uname -r

If the new kernel is not active after the reboot, try

grub2-set-default 0

grub2-mkconfig -o /boot/grub2/grub.cfg

Disable SELinux

setenforce 0

sed -i --follow-symlinks 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/sysconfig/selinux

Verify the SELinux state (it reports Permissive until the next reboot and Disabled afterwards)

getenforce
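The sed command above only rewrites a line that currently reads `SELINUX=enforcing`, so a host already set to `permissive` is left unchanged. A sketch of a variant that sets the mode regardless of the current value is shown below; `set_selinux_mode` is a hypothetical helper, GNU sed (as shipped with CentOS) is assumed, and the demo edits a scratch copy instead of the real config file.

```shell
# Rewrite the SELINUX= line to the requested mode, whatever it was before.
# The pattern requires "=" right after SELINUX, so SELINUXTYPE= is not touched.
set_selinux_mode() {
    local file="$1" mode="$2"
    sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}

# Demo on a scratch file that mimics /etc/selinux/config.
selinux_cfg="$(mktemp)"
printf '%s\n' '# sample config' 'SELINUX=permissive' 'SELINUXTYPE=targeted' > "$selinux_cfg"

set_selinux_mode "$selinux_cfg" disabled
cat "$selinux_cfg"
```

On a real node the target would be `/etc/selinux/config`, which is the file `/etc/sysconfig/selinux` links to.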

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

iptables

# Stop and disable the iptables service (if it is installed)

systemctl stop iptables

systemctl disable iptables

Check the status

systemctl status firewalld

Disable swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab
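The pattern `.*swap.*` comments out every line that mentions "swap" and adds another `#` each time it is rerun. A narrower sketch is given below: it only touches uncommented lines whose filesystem type (third field) is `swap`. The `disable_swap_entries` name and the sample fstab contents are illustrative assumptions, GNU sed is assumed, and the demo works on a scratch file.

```shell
# Comment out uncommented fstab lines whose third field is "swap".
disable_swap_entries() {
    sed -i -E '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$1"
}

# Demo on a scratch file shaped like a typical CentOS 7 /etc/fstab.
fstab_copy="$(mktemp)"
cat > "$fstab_copy" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

disable_swap_entries "$fstab_copy"
disable_swap_entries "$fstab_copy"   # second run changes nothing
cat "$fstab_copy"
```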

Configure the kernel so that packets forwarded by L2 bridges are filtered by the iptables FORWARD rules; CNI plugins need this setting

cat <<EOF | tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

modprobe overlay

modprobe br_netfilter

cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Apply the changes

modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf

Check the result

lsmod | grep br_netfilter

lsmod | grep overlay

sysctl \
net.bridge.bridge-nf-call-iptables \
net.bridge.bridge-nf-call-ip6tables \
net.ipv4.ip_forward
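When the same check has to be repeated on three machines, it may be easier to script it. The sketch below validates a sysctl-style file rather than the live kernel, so it can be tried without root; `check_k8s_sysctl` is a hypothetical helper and the two scratch files stand in for `/etc/sysctl.d/k8s.conf`.

```shell
# Return 0 only if all three keys are present in the file with value 1.
check_k8s_sysctl() {
    local file="$1" key missing=0
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        # Split each line on "=", strip whitespace, compare key and value.
        if ! awk -F= -v k="$key" '
                { name = $1; val = $2
                  gsub(/[[:space:]]/, "", name); gsub(/[[:space:]]/, "", val)
                  if (name == k && val == "1") ok = 1 }
                END { exit !ok }' "$file"; then
            echo "missing or not set to 1: $key"
            missing=1
        fi
    done
    return "$missing"
}

# Demo: one complete file, one with a missing key and a wrong value.
good_conf="$(mktemp)"; bad_conf="$(mktemp)"
printf '%s\n' 'net.bridge.bridge-nf-call-ip6tables = 1' \
              'net.bridge.bridge-nf-call-iptables = 1' \
              'net.ipv4.ip_forward = 1' > "$good_conf"
printf '%s\n' 'net.bridge.bridge-nf-call-iptables = 1' \
              'net.ipv4.ip_forward = 0' > "$bad_conf"

check_k8s_sysctl "$good_conf" && echo "good file: all keys set"
check_k8s_sysctl "$bad_conf" || echo "bad file: needs fixing"
```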

NTP synchronization

/usr/sbin/ntpdate -u ntp.tencent.com && /usr/sbin/hwclock -w # manual

# automatic (daily cron job)

cat <<EOF>>/var/spool/cron/root
00 12 * * * /usr/sbin/ntpdate -u ntp.tencent.com && /usr/sbin/hwclock -w
EOF

Install containerd


# Configure the docker-ce repository

# Remove any previously installed Docker packages

yum remove -y docker docker-common docker-selinux docker-engine

# Download the docker-ce repository file

curl -o /etc/yum.repos.d/docker-ce.repo \
https://download.docker.com/linux/centos/docker-ce.repo

# Replace the official repository address with a mirror in China

sed -e 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' \
-i.bak /etc/yum.repos.d/docker-ce.repo

# Rebuild the yum cache

yum makecache fast

# Install containerd

yum install -y containerd

Configure containerd

# Back up the packaged configuration file

mv /etc/containerd/config.toml /etc/containerd/config.toml.default

# Generate a fresh default configuration file

containerd config default > /etc/containerd/config.toml

sed -i 's|registry.k8s.io|registry.aliyuncs.com/google_containers|g' /etc/containerd/config.toml
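One setting the steps above do not touch is the cgroup driver. kubeadm configures the kubelet to use the systemd cgroup driver by default, while the configuration printed by `containerd config default` has `SystemdCgroup = false`; a mismatch is a common cause of control-plane pods restarting repeatedly. The sketch below is an addition rather than part of the original procedure: `enable_systemd_cgroup` is a hypothetical helper, and the demo runs on a scratch fragment instead of `/etc/containerd/config.toml`.

```shell
# Switch the runc runtime options to the systemd cgroup driver.
enable_systemd_cgroup() {
    sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' "$1"
}

# Demo on a scratch fragment shaped like the default containerd config.
toml_copy="$(mktemp)"
cat > "$toml_copy" <<'EOF'
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  SystemdCgroup = false
EOF

enable_systemd_cgroup "$toml_copy"
grep SystemdCgroup "$toml_copy"
```

On a real node, point the helper at `/etc/containerd/config.toml` before starting or restarting containerd.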

Start containerd

systemctl enable containerd

systemctl restart containerd

systemctl status containerd

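Install the Kubernetes components

Download and install Calico (the kubectl commands below need a running control plane, so run them only after kubeadm init has completed on the master)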

cd /root

mkdir -pv k8s/calico

cd k8s/calico

wget https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/tigera-operator.yaml

wget https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/custom-resources.yaml

sed -i "s|192.168|10.244|" custom-resources.yaml

kubectl create -f tigera-operator.yaml

kubectl create -f custom-resources.yaml

Check

kubectl get pods -A

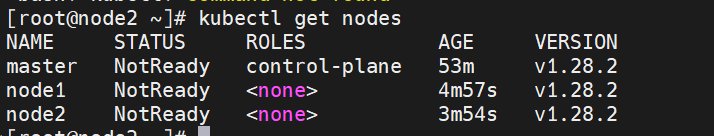

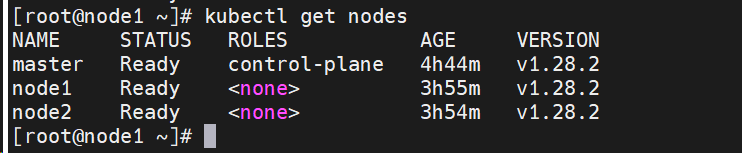

kubectl get nodes

Add the Kubernetes package repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Install kubelet, kubeadm, and kubectl

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

systemctl enable --now kubelet

Install docker-ce

yum install -y kubelet kubeadm kubectl docker-ce

Prepare the container images

kubeadm config images list --image-repository registry.aliyuncs.com/google_containers

Pull the images

kubeadm config images pull \
--image-repository registry.aliyuncs.com/google_containers

Initialize the cluster with kubeadm (run on the master node only)

First check the installed version

kubectl version

Client Version: v1.28.2

Kustomize Version: v5.0.4-0.20230601165947-6ce0bf390ce3

kubeadm init --kubernetes-version=1.28.2 \
--apiserver-advertise-address=192.168.0.211 \
--image-repository registry.aliyuncs.com/google_containers \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16
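The service CIDR and the pod CIDR passed to kubeadm init must not overlap, and the pod CIDR is the range the Calico IP pool has to match later. A small sketch for checking two ranges before running the command is shown below; the helper names `ip_to_int`, `cidr_range`, `cidrs_overlap`, and `report` are made up for this example, and only plain shell arithmetic is used.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    ( IFS=.; set -- $1; echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 )) )
}

# Print "first last" (as integers) for a CIDR such as 10.96.0.0/12.
cidr_range() {
    local ip="${1%/*}" bits="${1#*/}" base mask start
    base=$(ip_to_int "$ip")
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    start=$(( base & mask ))
    echo "$start $(( start | (~mask & 4294967295) ))"
}

# Succeed (exit status 0) when the two CIDR ranges share at least one address.
cidrs_overlap() {
    local r1 r2 s1 e1 s2 e2
    r1=$(cidr_range "$1"); r2=$(cidr_range "$2")
    s1=${r1% *}; e1=${r1#* }
    s2=${r2% *}; e2=${r2#* }
    [ "$s1" -le "$e2" ] && [ "$s2" -le "$e1" ]
}

report() {
    if cidrs_overlap "$1" "$2"; then
        echo "$1 and $2 overlap"
    else
        echo "$1 and $2 are disjoint"
    fi
}

report 10.96.0.0/12 10.244.0.0/16   # the values used with kubeadm init above
report 10.96.0.0/12 10.96.0.0/16    # a pod range inside the service range
```

The first pair is disjoint because 10.96.0.0/12 ends at 10.111.255.255; the second pair overlaps, which is why a Calico pool starting with 10.96 would clash with the service range.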

Set the environment variable

export KUBECONFIG=/etc/kubernetes/admin.conf

cat <<EOF | tee -a ~/.bash_profile
export KUBECONFIG=/etc/kubernetes/admin.conf
EOF

source ~/.bash_profile

# Copy the admin kubeconfig so that kubectl can be used later

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

Join the worker nodes

Get the join token

kubeadm token create --print-join-command

kubeadm token list

kubeadm join 192.168.0.211:6443 --token iz1j56.02jn7kq3o9ktpgim --discovery-token-ca-cert-hash sha256:c21e2012c39fd1632ce69bb9ad761d3323b6866890c8e4dcd955861c64291e93
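Join commands are often copied between terminals, and a truncated token or hash only shows up as a failure on the worker. The sketch below checks the shape of a pasted command before it is run: a bootstrap token has the form of 6 characters, a dot, and 16 characters (lowercase letters and digits), and the CA certificate hash is `sha256:` followed by 64 hexadecimal digits. `validate_join_command` is a hypothetical helper; it does not contact the cluster or check that the token is still valid.

```shell
# Check that a "kubeadm join" command carries a well-formed token and CA hash.
validate_join_command() {
    local cmd="$1" token hash
    token=$(printf '%s\n' "$cmd" | sed -n 's/.*--token \([^ ]*\).*/\1/p')
    hash=$(printf '%s\n' "$cmd" | sed -n 's/.*--discovery-token-ca-cert-hash \([^ ]*\).*/\1/p')
    if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "bad token: $token"
        return 1
    fi
    if ! printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
        echo "bad CA cert hash: $hash"
        return 1
    fi
    echo "join command looks well-formed"
}

# Demo with the join command shown above.
validate_join_command "kubeadm join 192.168.0.211:6443 --token iz1j56.02jn7kq3o9ktpgim --discovery-token-ca-cert-hash sha256:c21e2012c39fd1632ce69bb9ad761d3323b6866890c8e4dcd955861c64291e93"
```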

Set the environment variable on the worker

# On the master: copy admin.conf to the worker

scp /etc/kubernetes/admin.conf root@node1:/etc/kubernetes/

# On the worker:

export KUBECONFIG=/etc/kubernetes/admin.conf

cat <<EOF | tee -a ~/.bash_profile
export KUBECONFIG=/etc/kubernetes/admin.conf
EOF

source ~/.bash_profile

# Copy the admin kubeconfig so that kubectl can be used later

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get nodes # run on the worker node to verify

Install the Calico network plugin

cd /root

mkdir -pv k8s/calico

cd k8s/calico

wget https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/tigera-operator.yaml

wget https://raw.githubusercontent.com/projectcalico/calico/v3.25.0/manifests/custom-resources.yaml

sed -i "s|192.168|10.244|" custom-resources.yaml

kubectl create -f tigera-operator.yaml

kubectl create -f custom-resources.yaml

Monitor the status of the Calico pods

watch kubectl get pods -n calico-system

kubectl get pods -A
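Instead of watching the list by eye, the output can be piped through a small filter that counts pods which are not fully ready yet. The sketch below assumes the default column layout of `kubectl get pods -n calico-system` (NAME, READY, STATUS, RESTARTS, AGE); `count_not_ready` is a hypothetical helper and the pod names in the sample are invented.

```shell
# Read "kubectl get pods" output on stdin and print how many pods are
# either not Running or have fewer ready containers than expected.
count_not_ready() {
    awk 'NR > 1 { split($2, r, "/"); if ($3 != "Running" || r[1] != r[2]) n++ }
         END { print n + 0 }'
}

# Demo with captured sample output (pod names are made up).
count_not_ready <<'EOF'
NAME                            READY   STATUS     RESTARTS   AGE
calico-kube-controllers-aaaaa   1/1     Running    0          5m
calico-node-bbbbb               0/1     Init:0/2   0          5m
calico-typha-ccccc              1/1     Running    0          5m
EOF
# prints 1
```

Against a live cluster the call would be `kubectl get pods -n calico-system | count_not_ready`; `kubectl wait --for=condition=Ready pods --all -n calico-system` is another way to block until everything is up.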

Show the join command

kubeadm token create --print-join-command

Wait for the nodes to join and become Ready (the VMs are slow; this took at least 50 minutes)

Start kubelet and docker, and enable them at boot

systemctl enable kubelet

systemctl start kubelet

systemctl enable docker

systemctl start docker

Check the kubelet status (kubelet only starts successfully after kubeadm init has completed)

systemctl daemon-reload

systemctl status kubelet
