以下是几个典型的应用场景:

单纯的读写分离,此时配置最为简单,支持读写分离,主从切换;

分表分库,对于超过1000万的表进行分片,最大支持1000亿的单表分片;

多租户应用,每个应用一个库,但应用程序只连接Mycat,从而不改造程序本身,实现多租户化;

报表系统,借助于Mycat的分表能力,处理大规模报表的统计;

替代Hbase,分析大数据。

作为海量数据实时查询的一种简单有效方案,比如100亿条频繁查询的记录需要在3秒内查询出来结果,除了基于主键的查询,还可能存在范围查询或其他属性查询,此时Mycat可能是最简单有效的选择。

实验目的:

部署后端MMM集群,使用Mycat对写入MMM中的数据进行分片。

注:使用的是表中具有唯一性的字段如id. 身份证号....

例:

ID=1分到MMM_server1

ID=2分到MMM_server2

ID=3分到MMM_server1

ID=4分到MMM_server2

部署前端Haproxy,用来监测MMM集群是否正常,并部署Keepalived进行高可用*热备*负载均衡。

实验需求:

MMM_server1:

node01 10.15.200.101

node02 10.15.200.102

node03 10.15.200.103

MMM_server2:

node04 10.15.200.104

node05 10.15.200.105

node06 10.15.200.106

Mycat_server1:

tracker01 10.15.200.204

Mycat_server2:

tracker02 10.15.200.205

Keepalived主+Haproxy主:

ha01 10.15.200.115

Keepalived]从+Haproxy从:

ha02 10.15.200.118

VIP:

10.15.200.116

#每三个为一个集群。

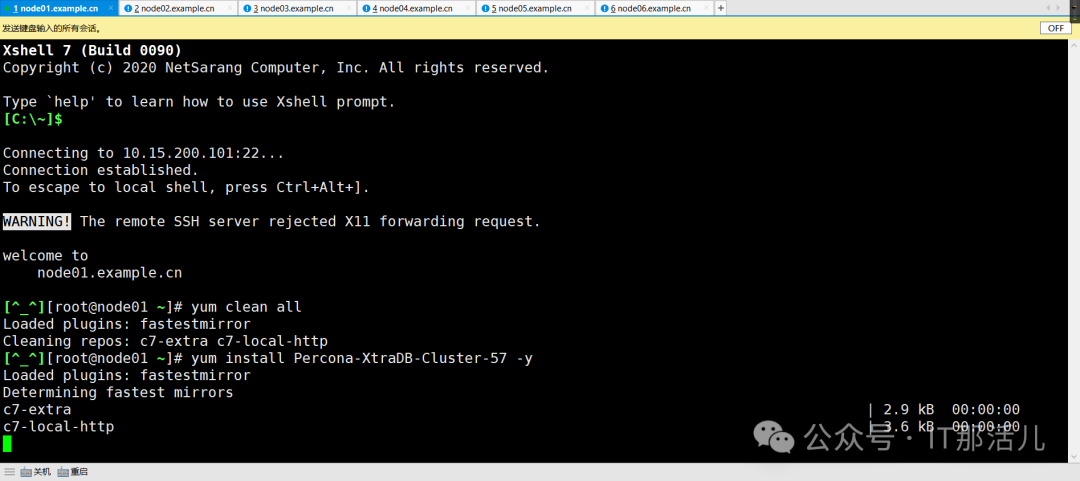

yum clean all

yum install Percona-XtraDB-Cluster-57 -y

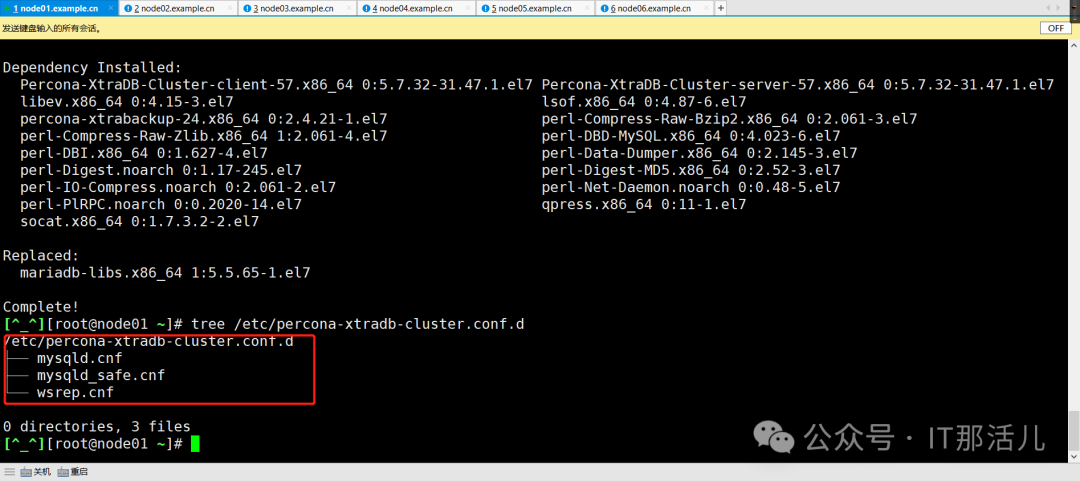

查看安装后是否有配置文件:

[root@node01 ~]# tree etc/percona-xtradb-cluster.conf.d

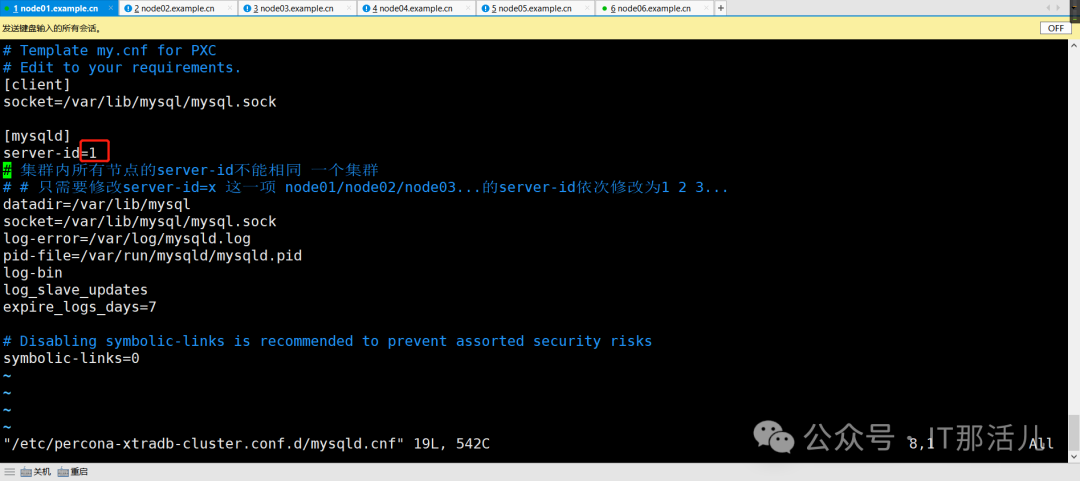

修改配置文件 #mysqld.cnf

vim /etc/percona-xtradb-cluster.conf.d/mysqld.cnf

[client]

socket=/var/lib/mysql/mysql.sock

[mysqld]

server-id=1

# 集群内所有节点的server-id不能相同

# 只需要修改server-id=x 这一项 node01/node02/node03的server-id依次修改为1 2 3

datadir=/var/lib/mysql

socket=/var/lib/mysql/mysql.sock

log-error=/var/log/mysqld.log

pid-file=/var/run/mysqld/mysqld.pid

log-bin

log_slave_updates

expire_logs_days=7

symbolic-links=0

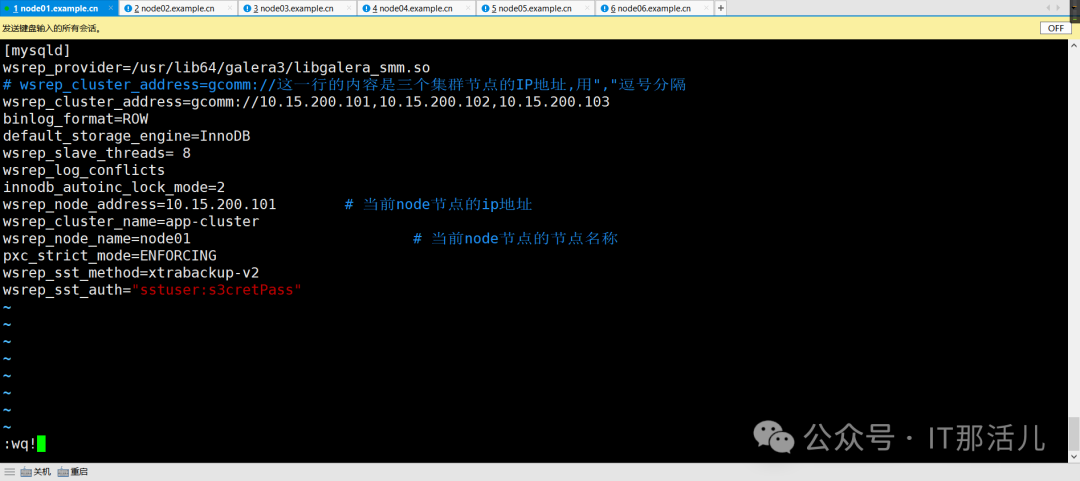

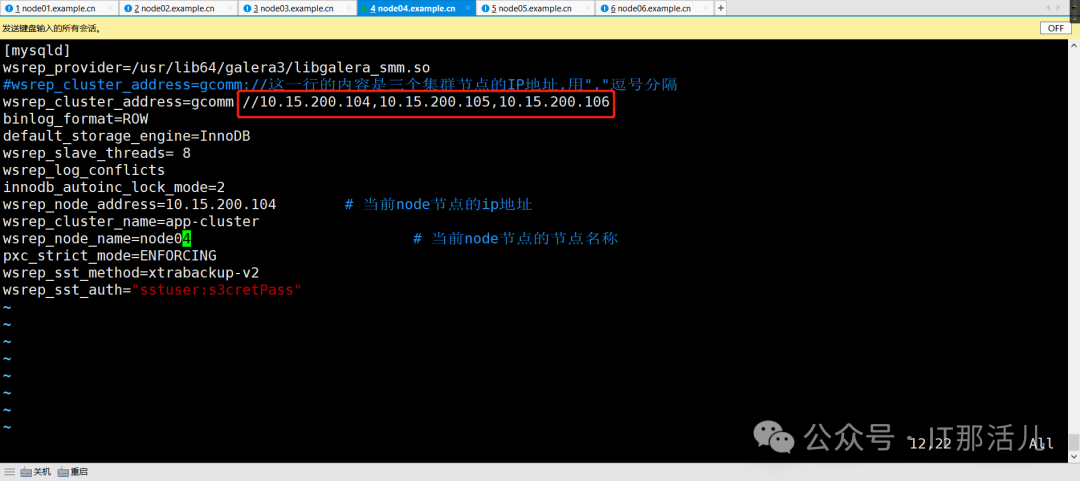

修改配置文件 # wsrep.cnf

vim /etc/percona-xtradb-cluster.conf.d/wsrep.cnf

[mysqld]

wsrep_provider=/usr/lib64/galera3/libgalera_smm.so

# wsrep_cluster_address=gcomm://这一行的内容是三个集群节点的IP地址,用","逗号分隔

wsrep_cluster_address=gcomm://10.15.200.101,10.15.200.102,10.15.200.103

binlog_format=ROW

default_storage_engine=InnoDB

wsrep_slave_threads= 8

wsrep_log_conflicts

innodb_autoinc_lock_mode=2

wsrep_node_address=10.15.200.101 # 当前node节点的ip地址

wsrep_cluster_name=app-cluster

wsrep_node_name=node01 # 当前node节点的节点名称

pxc_strict_mode=ENFORCING

wsrep_sst_method=xtrabackup-v2

wsrep_sst_auth="sstuser:s3cretPass"

在主节点(node01 node04)上启动服务 (node02 03 05 05不是这么的启动服务)

[root@node01 ~]# systemctl start mysql@bootstrap.service

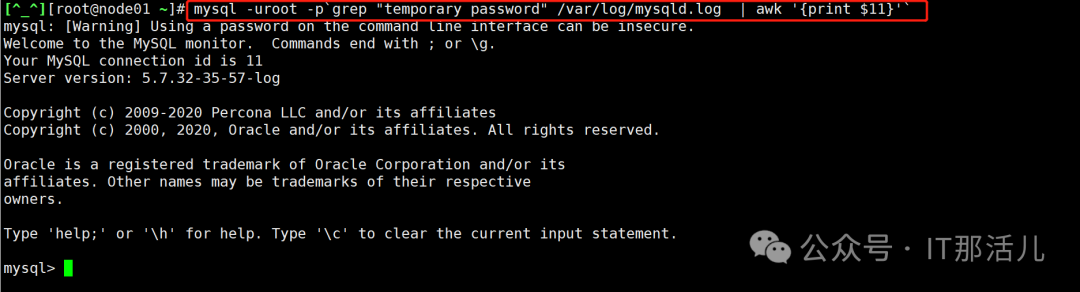

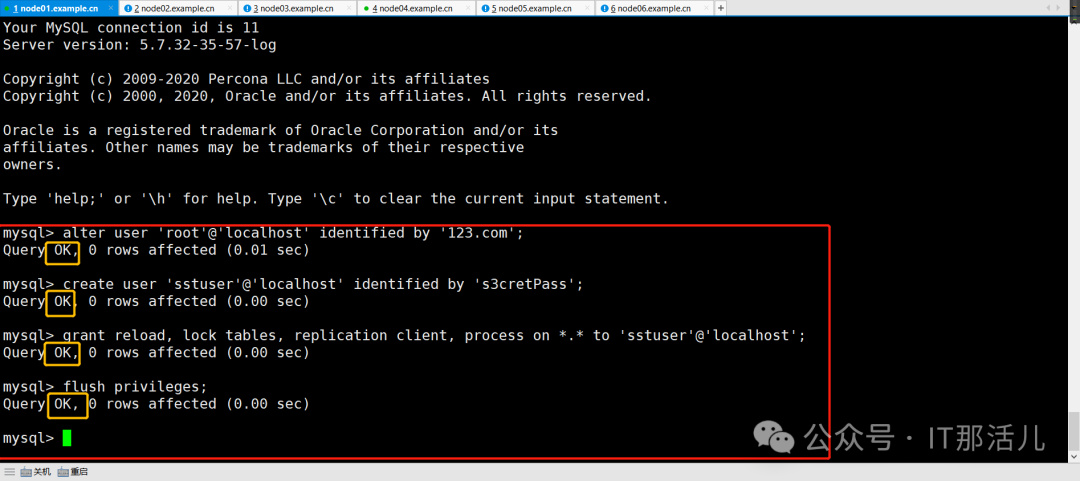

在主节点(node01 node04)上修改root密码并创建同步账号(同步账号需要跟/etc/percona-xtradb-cluster.conf.d/wsrep.cnf的键值wsrep_sst_auth对应)。

注: # 启动服务时 服务自动生成了一个随机密码 记录在/var/log/mysqld.log之中。

# 用临时密码进行登录:

[root@node01 ~]# mysql -uroot -p`grep "temporary password" /var/log/mysqld.log | awk '{print $11}'`

# 进行相关密码的修改或授权 (如果密码强度不够 设置复制的密码强度)

mysql> alter user 'root'@'localhost' identified by '123.com';

mysql> create user 'sstuser'@'localhost' identified by 's3cretPass';

mysql> grant reload, lock tables, replication client, process on *.* to 'sstuser'@'localhost';

mysql>grant all privileges on *.* to 'root'@'%' identified by '123.com';

mysql> flush privileges;

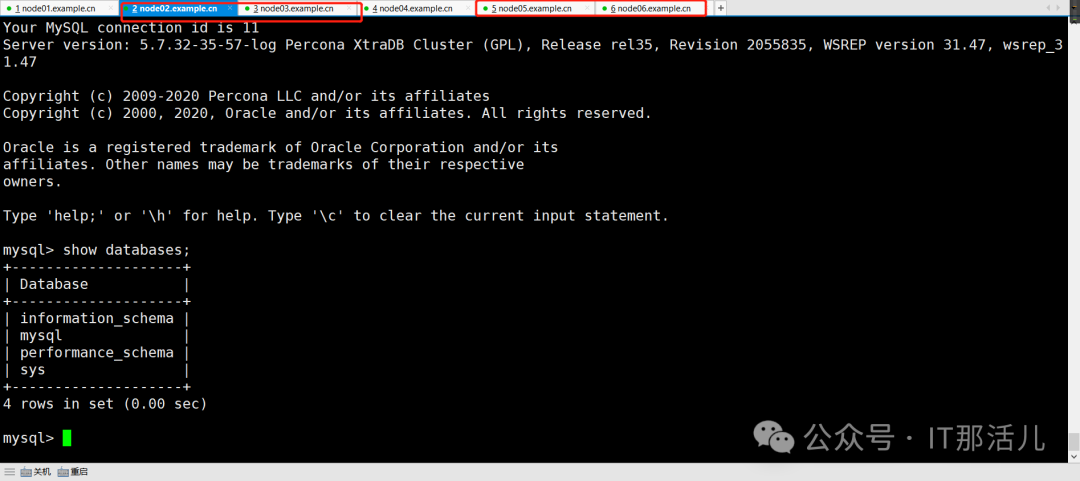

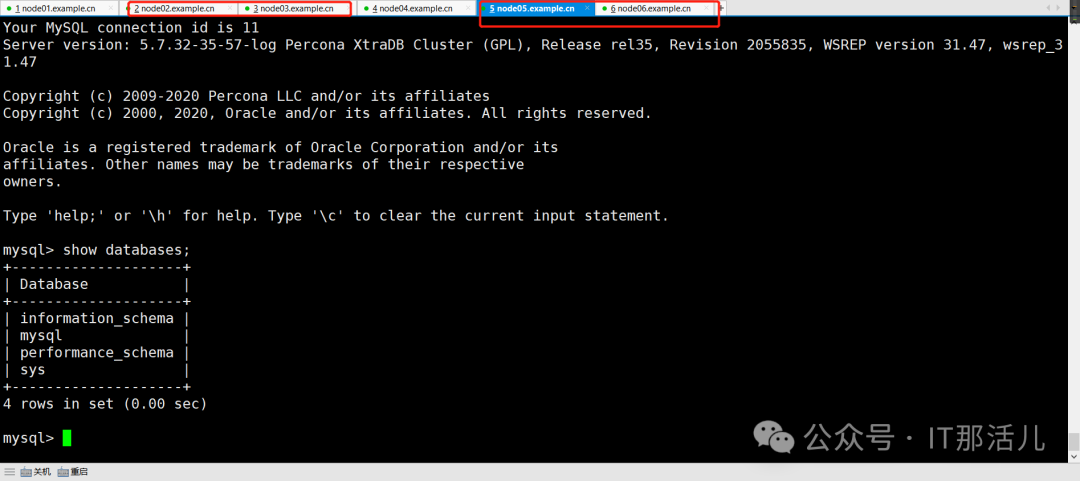

在node02 . node03和node05 . node06上分别启动数据库服务 (用修改好的密码)

[root@node02 ~]# systemctl start mysql

[root@node02 ~]# mysql -uroot -p'123.com'

mysql> show databases;

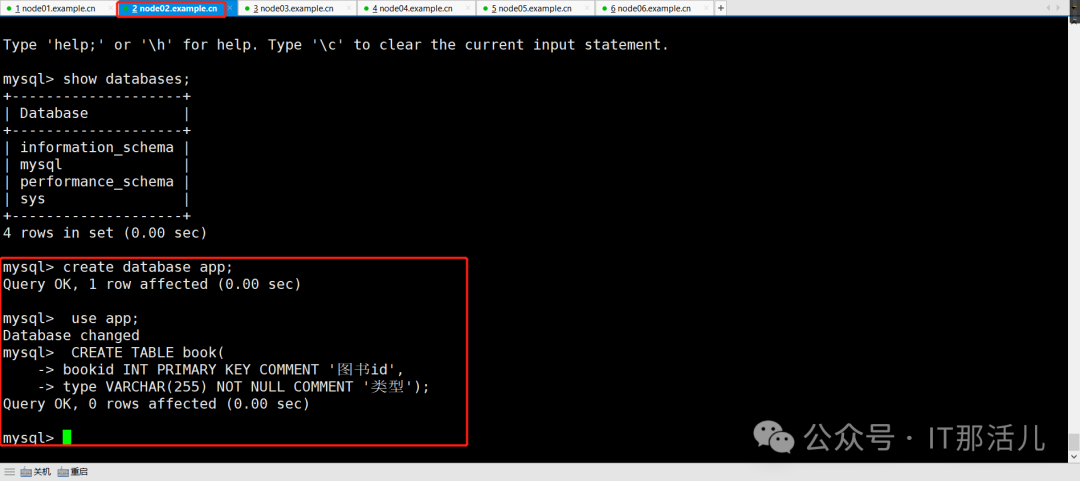

在任意节点创建一张表, 注意这里创建的表必须有主键, 查看其它节点是否有数据, 有就表示同步完成, 没有的话去看日志/var/log/mysqld.log查找原因。

在node02和node05上创建数据库和表:

[root@node02 ~]#

mysql> create database app;

mysql> use app;

mysql> CREATE TABLE book(

bookid INT PRIMARY KEY COMMENT '图书id',

type VARCHAR(255) NOT NULL COMMENT '类型');

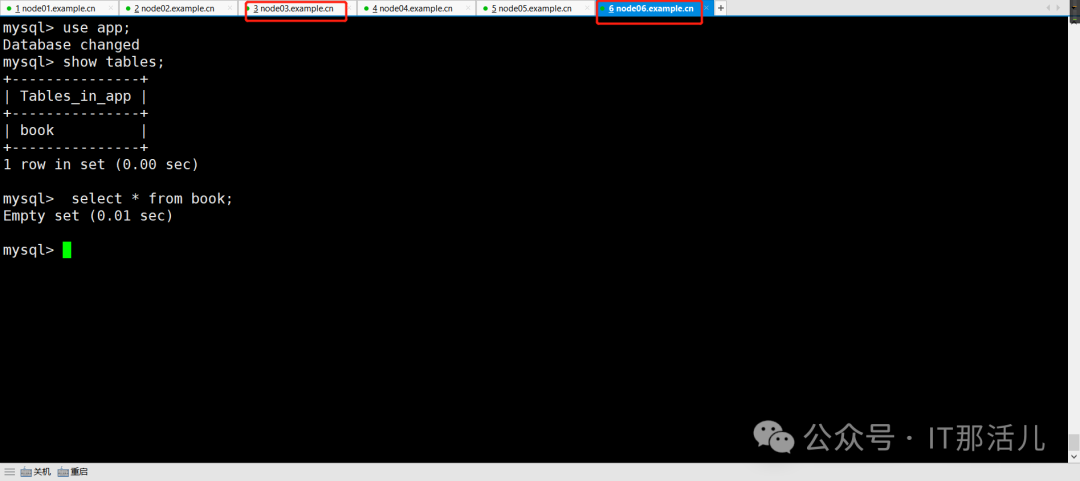

# 在node03和node06上进行校验:

[root@node03 ~]#

mysql> use app;

mysql> show tables;

mysql> select * from book;

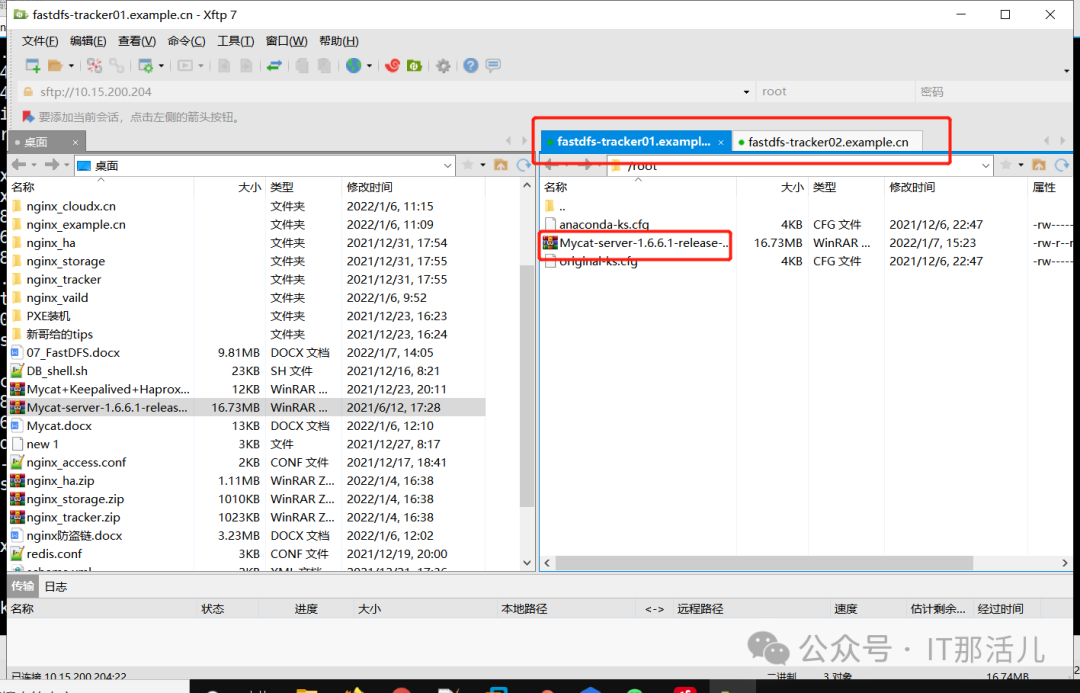

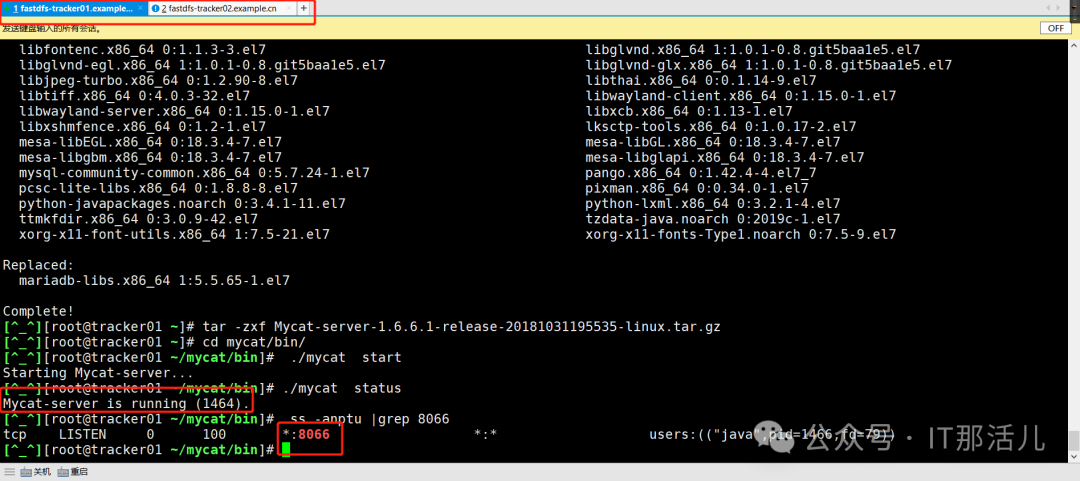

# mycat_server1和mycat_server2都需要安装:

yum -y install mariadb java

# 将mycat的安装包放入服务器或虚拟机中。

# 解压缩:

tar -zxf Mycat-server-1.6.6.1-release-20181031195535-linux.tar.gz

# 切换到mycat的bin路径下并启动:

[root@tracker02 ~]# cd mycat/bin/

[root@tracker02 ~]# /mycat/bin ]# ./mycat start

#启动后测试是否启动:

[root@tracker02 ~/mycat/bin ]# ./mycat status

Mycat-server is running (4025).

[root@tracker02 ~/mycat/bin ]# ss -anptu |grep 8066

#修改配置文件:

vim /root/mycat/conf/schema.xml

<mycat:schema xmlns:mycat="http://io.mycat/">

<!-- mycat 实例逻辑库 -->

<schema name="app" checkSQLschema="true" sqlMaxLimit="100">

<table name="book" primaryKey="id" dataNode="dn1,dn2" rule="role1"/>

<!-- schema name="逻辑库名" checkSQLschema="布尔值,是否拦截SQL语句中mycat相关字段" sqlMaxLimit="SQL返回条数,太大可能会卡死">

<table name="逻辑表名" primaryKey="主键" dataNode="数据节点" rule="分片规则" / -->

</schema>

<dataNode name="dn1" dataHost="mysql_host1" database="app"/>

<dataNode name="dn2" dataHost="mysql_host2" database="app"/>

<!-- dataNode name="数据节点名" dataHost="集群名" database="实例库"/ -->

<dataHost name="mysql_host1" maxCon="1000" minCon="10" balance="2" writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<!-- dataHost name="集群名,供上层使用" maxCon="定义实例连接池的最大连接" minCon="定义实例连接池的最小连接" balance="负载均衡类型

0 不开启读写分离机制

1 全部的 readHost 与 writeHost 参与 select 语句的负载均衡

2 所有读操作都随机的在 writeHost readhost上分发

3 所有读请求随机的分发到 wiriterHost 对应的 readhost 执行 writerHost 不负担读压力"

writeType="负载均衡类型

0 所有写操作发送到配置的第一个 writeHost 第一个挂了切到还生存的第二个writeHost 重新启动后已切换后的为准

1 所有写操作都随机的发送到配置的 writeHost"

dbType="指定后端连接的数据库类型"

dbDriver="指定连接后端数据库使用的Driver或JDBC"

switchType="

-1 不自动切换

1 默认值 自动切换

2 基于 MySQL 主从同步的状态决定是否切换

3 基于mysql galary cluster 的切换机制 "

slaveThreshold="100" -->

<heartbeat>select user()</heartbeat>

<!-- can have multi write hosts -->

<writeHost host="hostM1" url="10.15.200.101:3306" user="root" password="123.com">

<!-- writeHost host="集群的写入节点" url="IP+port" user="用户名 要和server.xml里的保持一致"

password="密码" -->

<!-- can have multi read hosts -->

<readHost host="hostS1" url="10.15.200.102:3306" user="root" password="123.com"/>

<readHost host="hostS2" url="10.15.200.103:3306" user="root" password="123.com"/>

<!-- readHost host="集群的读取节点" url="IP+port" user="用户名 和server.xml里的保持一致" password="密码" / -->

</writeHost>

</dataHost>

<dataHost name="mysql_host2" maxCon="1000" minCon="10" balance="2" writeType="0" dbType="mysql" dbDriver="native" switchType="1" slaveThreshold="100">

<heartbeat>select user()</heartbeat>

<!-- can have multi write hosts -->

<writeHost host="hostM2" url="10.15.200.104:3306" user="root" password="123.com">

<!-- can have multi read hosts -->

<readHost host="hostS1" url="10.15.200.105:3306" user="root" password="123.com"/>

<readHost host="hostS2" url="10.15.200.106:3306" user="root" password="123.com"/>

</writeHost>

</dataHost>

</mycat:schema>

vim /root/mycat/conf/server.xml

<!-- - - Licensed under the Apache License, Version 2.0 (the "License");

- you may not use this file except in compliance with the License. - You

may obtain a copy of the License at - - http://www.apache.org/licenses/LICENSE-2.0

- - Unless required by applicable law or agreed to in writing, software -

distributed under the License is distributed on an "AS IS" BASIS, - WITHOUT

WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. - See the

License for the specific language governing permissions and - limitations

under the License. -->

<mycat:server xmlns:mycat="http://io.mycat/">

<system>

<property name="nonePasswordLogin">0</property>

<!-- 0为需要密码登陆、1为不需要密码登陆 ,默认为0,设置为1则需要指定默认账户 -->

<property name="useHandshakeV10">1</property>

<property name="useSqlStat">0</property>

<!-- 1为开启实时统计、0为关闭 -->

<property name="useGlobleTableCheck">0</property>

<!-- 1为开启全加班一致性检测、0为关闭 -->

<property name="sequnceHandlerType">2</property>

<property name="subqueryRelationshipCheck">false</property>

<!-- 子查询中存在关联查询的情况下,检查关联字段中是否有分片字段 .默认 false -->

<!-- <property name="useCompression">1</property> -->

<!-- 1为开启mysql压缩协议 -->

<!-- <property name="fakeMySQLVersion">5.6.20</property> -->

<!-- 设置模拟的MySQL版本号 -->

<!-- <property name="processorBufferChunk">40960</property> -->

<!--

<property name="processors">1</property>

<property name="processorExecutor">32</property>

-->

<!-- 默认为type 0: DirectByteBufferPool | type 1 ByteBufferArena | type 2 NettyBufferPool -->

<property name="processorBufferPoolType">0</property>

<!-- 默认是65535 64K 用于sql解析时最大文本长度 -->

<!-- <property name="maxStringLiteralLength">65535</property> -->

<!-- <property name="sequnceHandlerType">0</property> -->

<!-- <property name="backSocketNoDelay">1</property> -->

<!-- <property name="frontSocketNoDelay">1</property> -->

<!-- <property name="processorExecutor">16</property> -->

<!--

<property name="serverPort">8066</property> <property name="managerPort">9066</property>

<property name="idleTimeout">300000</property> <property name="bindIp">0.0.0.0</property>

<property name="frontWriteQueueSize">4096</property> <property name="processors">32</property> -->

<!-- 分布式事务开关,0为不过滤分布式事务,1为过滤分布式事务(如果分布式事务内只涉及全局表,则不过滤),2为不过滤分布式事务,但是记录分布式事务日志 -->

<property name="handleDistributedTransactions">0</property>

<!--

off heap for merge/order/group/limit 1开启 0关闭

-->

<property name="useOffHeapForMerge">1</property>

<!--

单位为m

-->

<property name="memoryPageSize">64k</property>

<!--

单位为k

-->

<property name="spillsFileBufferSize">1k</property>

<property name="useStreamOutput">0</property>

<!--

单位为m

-->

<property name="systemReserveMemorySize">384m</property>

<!-- 是否采用zookeeper协调切换 -->

<property name="useZKSwitch">false</property>

<!-- XA Recovery Log日志路径 -->

<!-- <property name="XARecoveryLogBaseDir">./</property> -->

<!-- XA Recovery Log日志名称 -->

<!-- <property name="XARecoveryLogBaseName">tmlog</property> -->

<!-- 如果为 true的话 严格遵守隔离级别,不会在仅仅只有select语句的时候在事务中切换连接 -->

<property name="strictTxIsolation">false</property>

<property name="useZKSwitch">true</property>

</system>

<!-- 全局SQL防火墙设置 -->

<!-- 白名单可以使用通配符%或着* -->

<!-- 例如<host host="127.0.0.*" user="root"/> -->

<!-- 例如<host host="127.0.*" user="root"/> -->

<!-- 例如<host host="127.*" user="root"/> -->

<!-- 例如<host host="1*7.*" user="root"/> -->

<!-- 这些配置情况下对于127.0.0.1都能以root账户登录 -->

<!--

<firewall>

<whitehost>

<host host="1*7.0.0.*" user="root"/>

</whitehost>

<blacklist check="false">

</blacklist>

</firewall>

-->

<user name="root" defaultAccount="true">

<property name="password">123.com</property>

<property name="schemas">app</property>

<!-- user name="逻辑用户名,即登录mycat的用户名" defaultAccount="true" -->

<!-- property name="password">逻辑密码,即登录mycat的用户名对应的密码</property -->

<!-- property name="schemas">逻辑数据库,可配置多个,用英文逗号隔开,对应于schema.xml文件中配置的逻辑数据库,两者对应

</property -->

<!-- 表级 DML 权限设置 -->

<!--

<privileges check="false">

<schema name="TESTDB" dml="0110" >

<table name="tb01" dml="0000"></table>

<table name="tb02" dml="1111"></table>

</schema>

</privileges>

-->

</user>

</mycat:server>

vim /root/mycat/conf/rule.xml

<!-- - - Licensed under the Apache License, Version 2.0 (the "License");

- you may not use this file except in compliance with the License. - You

may obtain a copy of the License at - - http://www.apache.org/licenses/LICENSE-2.0

- - Unless required by applicable law or agreed to in writing, software -

distributed under the License is distributed on an "AS IS" BASIS, - WITHOUT

WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. - See the

License for the specific language governing permissions and - limitations

under the License. -->

<mycat:rule xmlns:mycat="http://io.mycat/">

<tableRule name="role1">

<rule>

<columns>bookid</columns>

<algorithm>mod-long</algorithm>

</rule>

</tableRule>

<!-- tableRule name="用户标识不同的分表规则 规则名">

<rule>

<columns>指定按哪一列进行拆分 主键</columns>

<algorithm>该属性值为下面function标签中name的属性值,定义了连接表规则的具体的路由算法,多个表规则可以连接到同一个路由算法上

引用下面的规则</algorithm>

</rule>

</tableRule -->

<function name="mod-long" class="io.mycat.route.function.PartitionByMod">

<!-- how many data nodes -->

<property name="count">2</property>

</function>

<!-- function name="标识算法的名字" class="指定路由算法具体的类名字">

<property name="count">具体算法用到的一些属性</property>

</function -->

</mycat:rule>

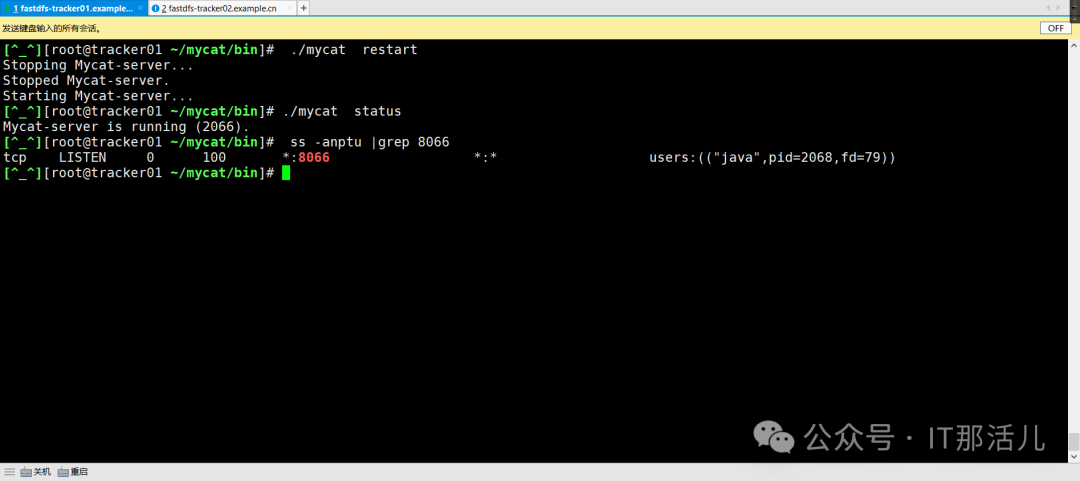

# 重启服务并查看状态和端口:

[root@tracker01 ~/mycat/bin]# ./mycat restart

[root@tracker01 ~/mycat/bin]# ./mycat status

[root@tracker01 ~/mycat/bin]# ss -anptu |grep 8066

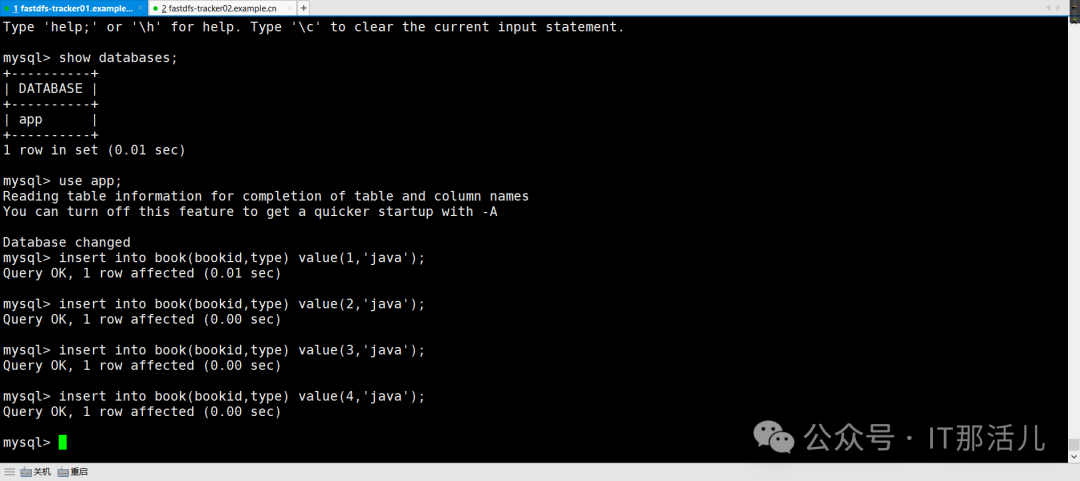

#进入本地数据库查看数据库并测试:

[root@tracker01 ~/mycat/bin]#mysql -uroot -p123.com -h127.0.0.1 -P8066

mysql> show databases;

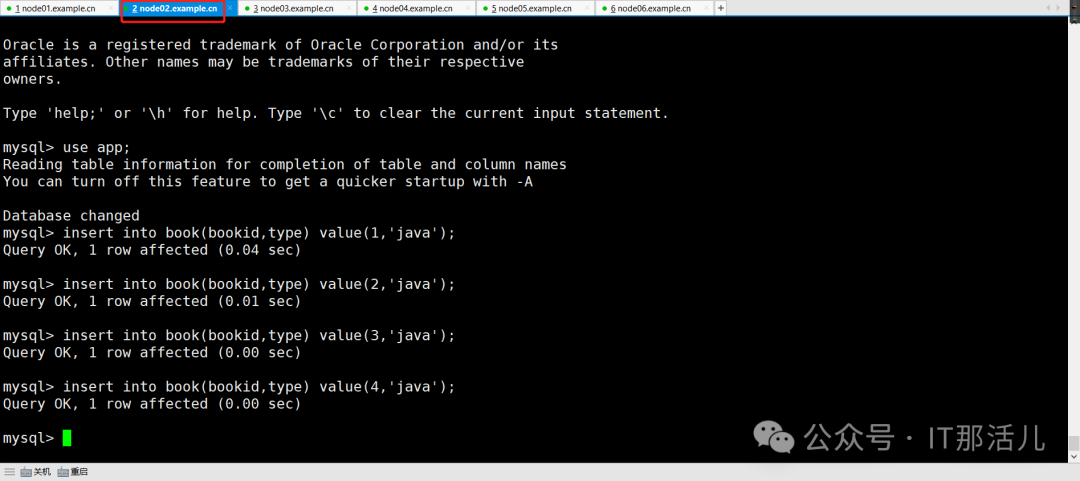

#插入四条数据测试:

mysql> use app;

insert into book(bookid,type) value(1,'java');

insert into book(bookid,type) value(2,'java');

insert into book(bookid,type) value(3,'java');

insert into book(bookid,type) value(4,'java');

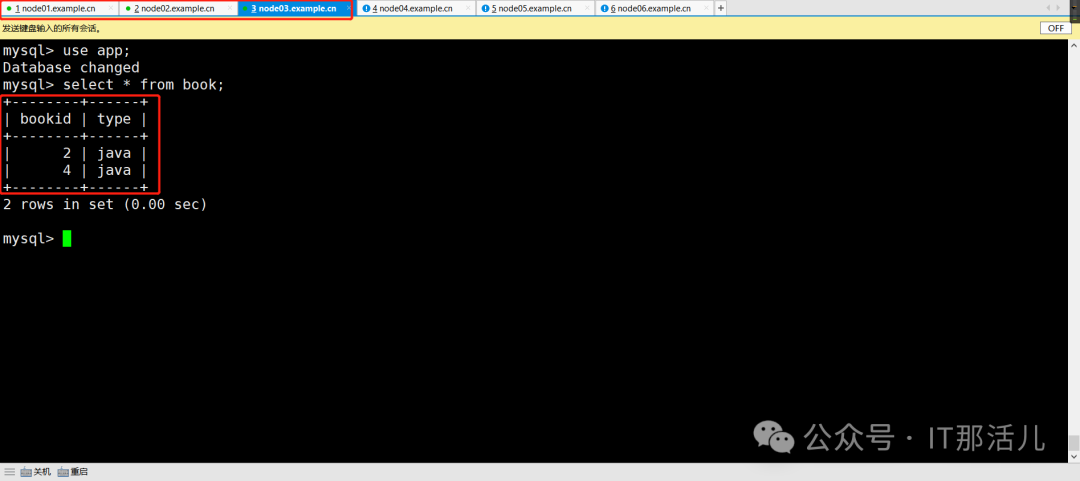

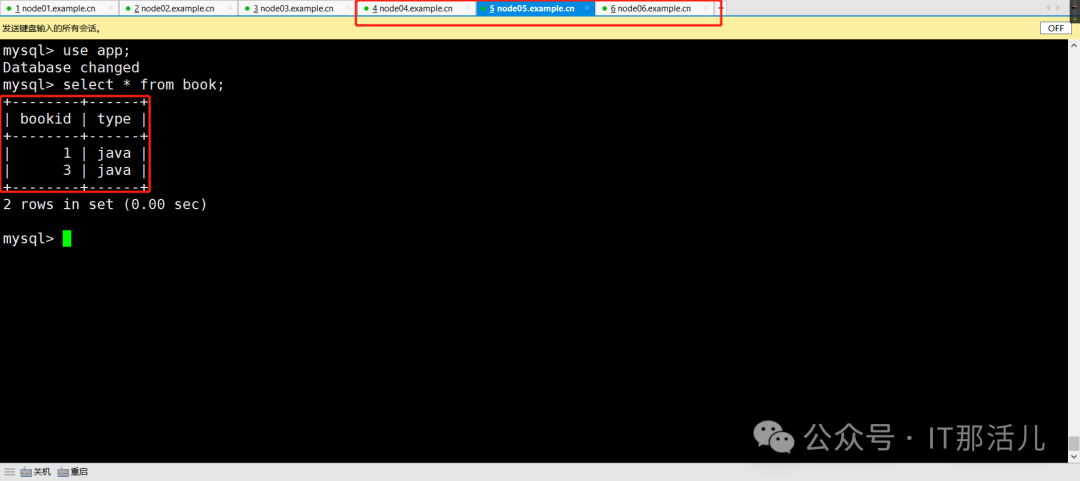

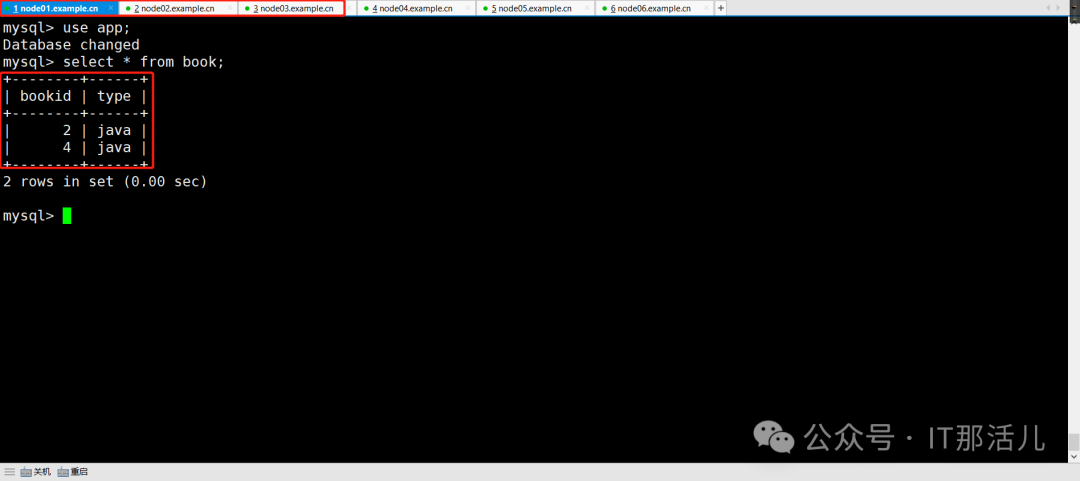

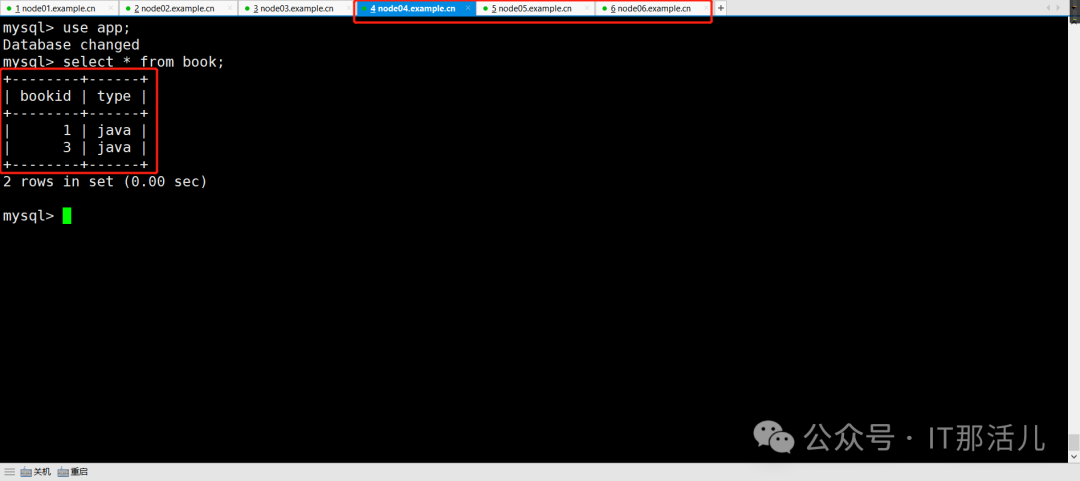

#到MMM集群中查看数据是否分片:

#node01-03和node04-06:

mysql> use app;

mysql> select * from book;

#清除数据等待下次使用:

mysql>truncate table book

#yum安装Keepalived和Haproxy:

yum -y install hapeoxy keepalived

#修改Keepalived主配置文件:

vim /etc/keepalived/keepalived.conf

global_defs {

router_id ha01.example.cn #ha01为ha01 ha02为ha02

}

vrrp_instance node_http {

state MASTER #两个都为BACKUP ha01配置为非抢占

nopreempt #ha01加这一行 ha02不加

interface ens33

virtual_router_id 116

priority 116 #主节点的优先级(1-255之间),备节点的优先级比主节点的低 ha02可以为1-115之间的任意数

advert_int 1

authentication {

auth_type PASS

auth_pass nodehttp

}

track_script {

chk_haproxy ## 检查 HAProxy 服务是否存活

}

virtual_ipaddress {

10.15.200.116

}

}

#修改hapeoxy主配置文件:

vim /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon no

defaults

mode tcp

log global

option dontlognull # 不记录健康检查日志信息

option http-server-close

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

listen admin_stats

bind 0.0.0.0:80

mode http

stats uri /haproxy-status

stats auth admin:admin

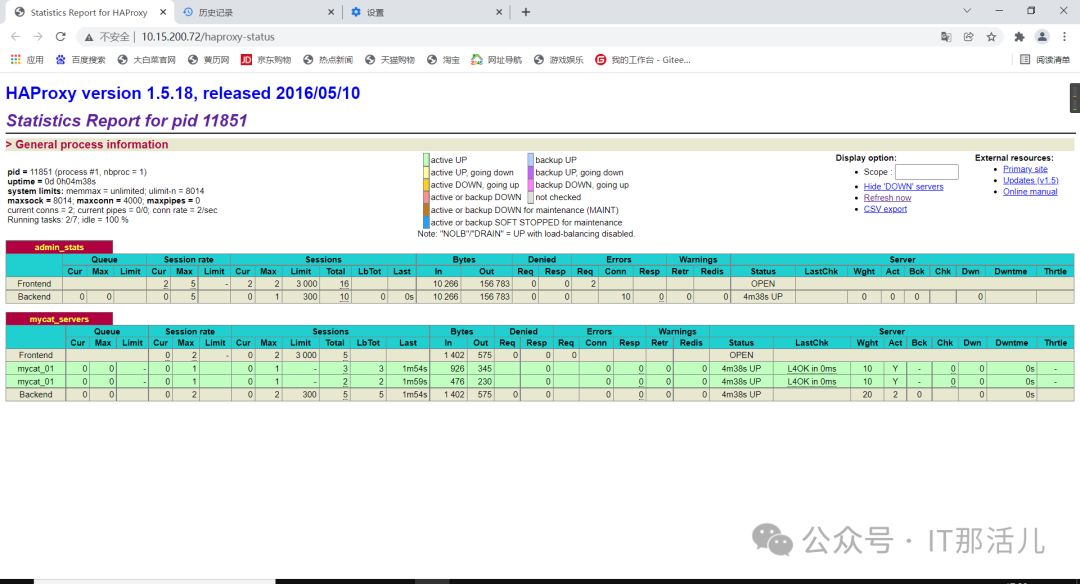

listen mycat_servers 0.0.0.0:8066

mode tcp

balance static-rr

server mycat_01 10.15.200.204:8066 check port 8066 inter 1000 rise 3 fall 3 weight 10

server mycat_01 10.15.200.205:8066 check port 8066 inter 1000 rise 3 fall 3 weight 10

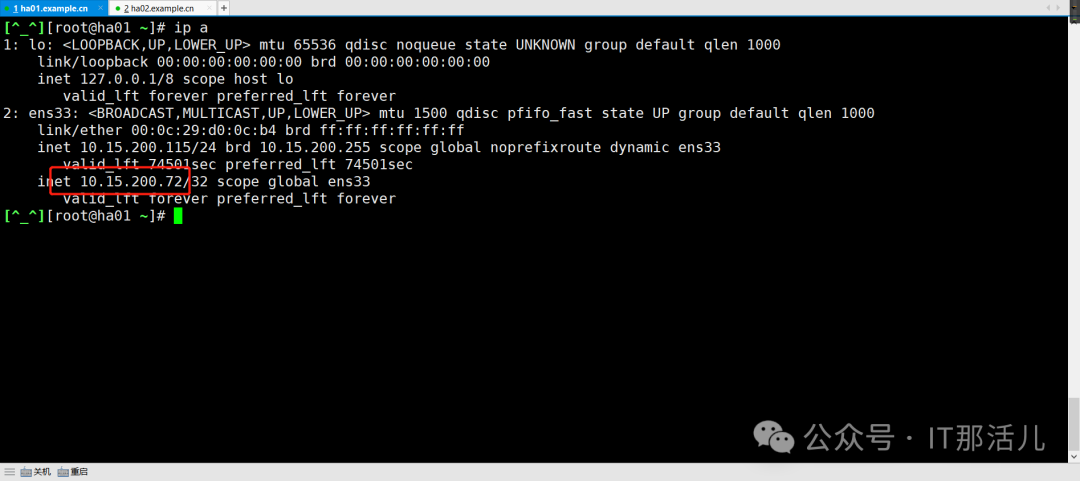

# 分别启动Keepalived和Haproxy:

systemctl restart haproxy

systemctl restart keepalived

查看漂移IP是否存在:

ip a

#使用漂移IP登录网页查看是否监控两个集群:

http://11.11.11.11/haproxy-status

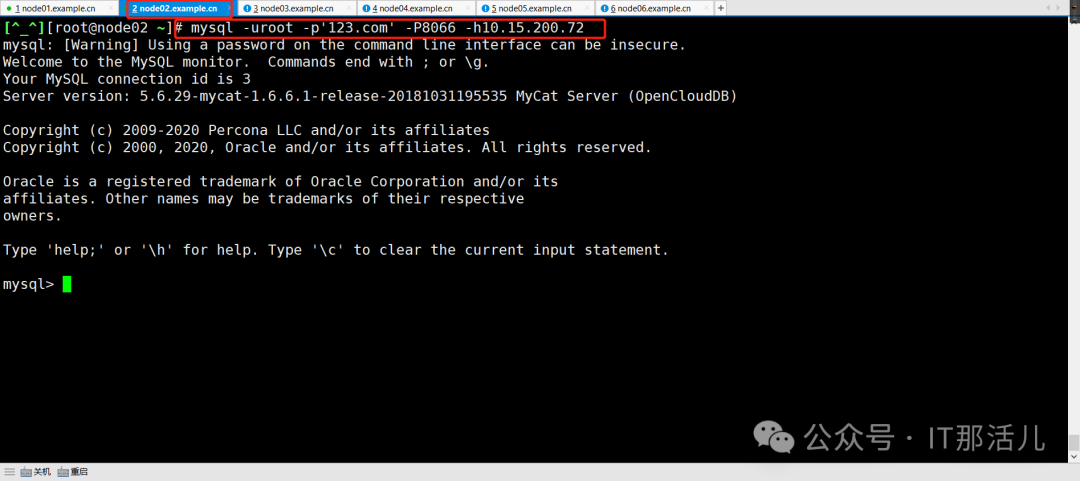

#在有数据库的主机上使用漂移IP连接数据库:

mysql -uroot -p'123456' -P8066 -h11.11.11.11

#连接上后插入四条数据,查看是否分片:

mysql> use app;

insert into book(bookid,type) value(1,'java');

insert into book(bookid,type) value(2,'java');

insert into book(bookid,type) value(3,'java');

insert into book(bookid,type) value(4,'java');

#到MMM集群中查看数据是否分片:

#node01-3:

mysql> use app;

mysql> select * from book;

#node04-6:

mysql> use app;

mysql> select * from book;

至此结束!!!!

本文作者:张 任(上海新炬中北团队)

本文来源:“IT那活儿”公众号