Base environment

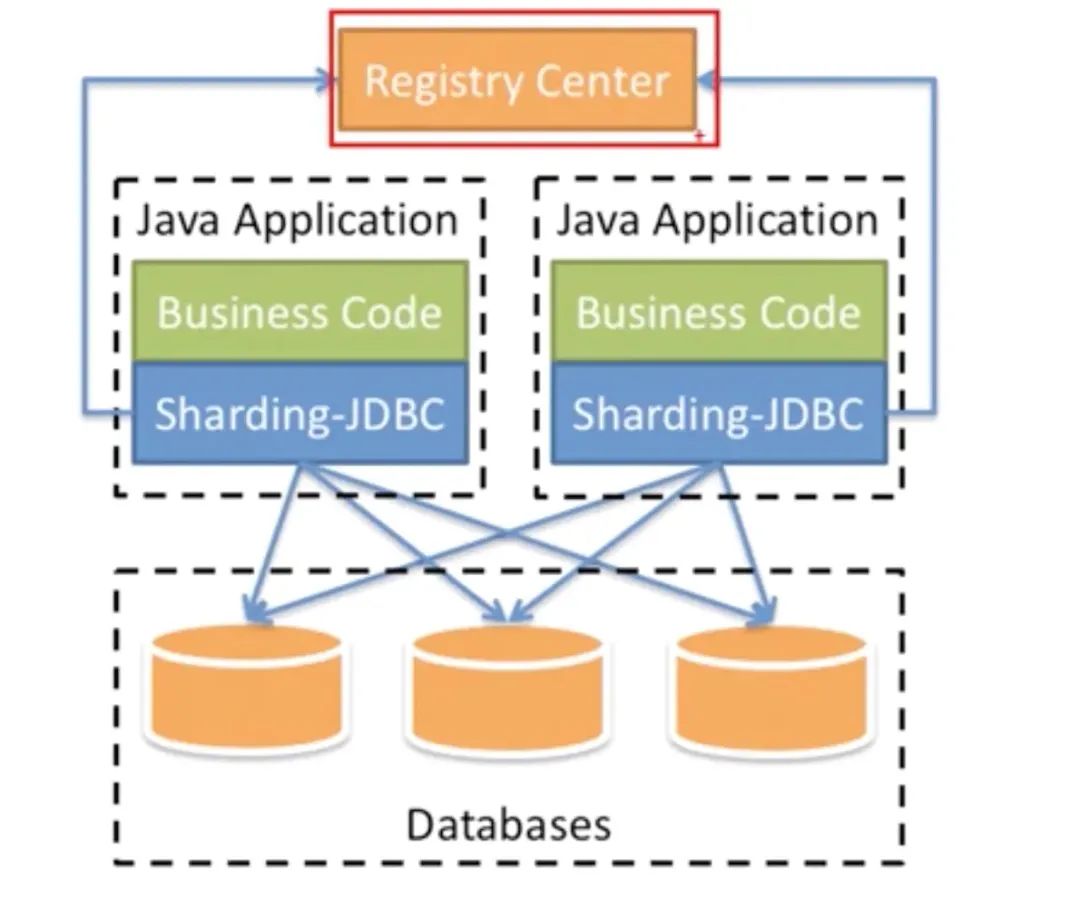

The project is built on Spring Boot + MyBatis + ShardingSphere.

pom

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-configuration-processor</artifactId>
    </dependency>
    <!-- MySQL driver, declared once with an explicit version and runtime scope -->
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.44</version>
        <scope>runtime</scope>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <optional>true</optional>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid</artifactId>
        <version>1.2.1</version>
    </dependency>
    <dependency>
        <groupId>org.mybatis.spring.boot</groupId>
        <artifactId>mybatis-spring-boot-starter</artifactId>
        <version>2.1.3</version>
    </dependency>
    <!-- MyBatis pagination plugin; optional, include it only if needed -->
    <dependency>
        <groupId>com.github.pagehelper</groupId>
        <artifactId>pagehelper</artifactId>
        <version>5.2.0</version>
    </dependency>

Table sharding only (single database)

YAML mode

pom

    <dependency>
        <groupId>org.apache.shardingsphere</groupId>
        <artifactId>sharding-jdbc-spring-boot-starter</artifactId>
        <version>4.1.0</version>
    </dependency>

yml

    spring:
      shardingsphere:
        datasource:
          names: m1 # data source name, arbitrary
          m1: # connection settings for data source m1
            type: com.alibaba.druid.pool.DruidDataSource
            driverClassName: com.mysql.jdbc.Driver
            url: jdbc:mysql://localhost:3306/test?useUnicode=true
            username: root
            password: 123456
        sharding:
          tables:
            t_student: # logical table name
              actualDataNodes: m1.t_student_$->{1..2}
              tableStrategy:
                inline: # sharding strategy for t_student: sharding key plus sharding algorithm
                  shardingColumn: id
                  algorithmExpression: t_student_$->{id % 2 + 1}
              keyGenerator: # primary key generation for t_student
                type: SNOWFLAKE # generate keys with SNOWFLAKE
                column: id # primary key column
            t_student2: # second logical table name
              actualDataNodes: m1.t_student2_$->{1..2}
              tableStrategy:
                inline: # sharding strategy for t_student2: sharding key plus sharding algorithm
                  shardingColumn: id
                  algorithmExpression: t_student2_$->{id % 2 + 1}
              keyGenerator: # primary key generation for t_student2
                type: SNOWFLAKE # generate keys with SNOWFLAKE
                column: id # primary key column
        props:
          sql:
            show: true
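To make the inline expression concrete, here is a minimal sketch of the arithmetic behind t_student_$->{id % 2 + 1}. The class InlineTableRouting and its method are hypothetical helpers written for illustration, not ShardingSphere APIs, and the sketch assumes id is a non-negative integer.

```java
// Hypothetical helper, not part of ShardingSphere: it only mirrors the
// arithmetic of the inline expression t_student_$->{id % 2 + 1}.
class InlineTableRouting {

    // Returns the physical table name for a logical table and a sharding key value.
    static String actualTable(String logicTable, long id) {
        return logicTable + "_" + (id % 2 + 1);
    }

    public static void main(String[] args) {
        // Odd ids map to suffix 2, even ids to suffix 1.
        for (long id = 1; id <= 4; id++) {
            System.out.println(id + " -> " + actualTable("t_student", id));
        }
    }
}
```

Under these assumptions, odd ids land in t_student_2 and even ids in t_student_1, which matches the two nodes listed in actualDataNodes.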

Java config mode

pom

    <dependency>
        <groupId>org.apache.shardingsphere</groupId>
        <artifactId>sharding-spring-boot-util</artifactId>
        <version>4.1.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.shardingsphere</groupId>
        <artifactId>sharding-jdbc-core</artifactId>
        <version>4.1.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.shardingsphere</groupId>
        <artifactId>sharding-transaction-spring</artifactId>
        <version>4.1.0</version>
    </dependency>

config

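    import com.alibaba.druid.pool.DruidDataSource;
    import org.apache.ibatis.session.SqlSessionFactory;
    import org.apache.shardingsphere.api.config.sharding.KeyGeneratorConfiguration;
    import org.apache.shardingsphere.api.config.sharding.ShardingRuleConfiguration;
    import org.apache.shardingsphere.api.config.sharding.TableRuleConfiguration;
    import org.apache.shardingsphere.api.config.sharding.strategy.InlineShardingStrategyConfiguration;
    import org.apache.shardingsphere.api.config.sharding.strategy.NoneShardingStrategyConfiguration;
    import org.apache.shardingsphere.core.rule.ShardingRule;
    import org.apache.shardingsphere.shardingjdbc.jdbc.core.datasource.ShardingDataSource;
    import org.mybatis.spring.SqlSessionFactoryBean;
    import org.mybatis.spring.mapper.MapperScannerConfigurer;
    import org.springframework.boot.context.properties.ConfigurationProperties;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.core.io.Resource;
    import org.springframework.core.io.support.PathMatchingResourcePatternResolver;
    import org.springframework.jdbc.datasource.DataSourceTransactionManager;
    import org.springframework.transaction.PlatformTransactionManager;

    import javax.sql.DataSource;
    import java.sql.SQLException;
    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.HashMap;
    import java.util.LinkedList;
    import java.util.List;
    import java.util.Map;
    import java.util.Properties;

    @Configuration
    public class DataSourceConfig {

        @Bean(name = "data1")
        @ConfigurationProperties(prefix = "spring.datasource")
        public DataSource dataSource() {
            return new DruidDataSource();
        }

        @Bean(name = "dataSource")
        public ShardingDataSource dataSource(DataSource data1) throws SQLException {
            // Map data source names to the physical data sources
            Map<String, DataSource> dataSourceMap = new HashMap<>();
            dataSourceMap.put("data1", data1);
            // Logical table names
            List<String> tableNames = Arrays.asList("t_student");
            // With two or more databases a default data source must be set (see shardingRule);
            // its name must be one of the keys of dataSourceMap
            return new ShardingDataSource(dataSourceMap, shardingRule(tableNames), getProperties());
        }

        /**
         * Assembles the ShardingRule configuration.
         */
        public ShardingRule shardingRule(List<String> tableNames) {
            // Concrete database and table sharding strategies
            ShardingRuleConfiguration shardingRuleConfig = new ShardingRuleConfiguration();
            shardingRuleConfig.setDefaultDataSourceName("data1");
            // Default table sharding rule
            shardingRuleConfig.setDefaultTableShardingStrategyConfig(new NoneShardingStrategyConfiguration());
            // Default database sharding rule
            shardingRuleConfig.setDefaultDatabaseShardingStrategyConfig(new NoneShardingStrategyConfiguration());
            // Default primary key generation strategy: SNOWFLAKE
            shardingRuleConfig.setDefaultKeyGeneratorConfig(new KeyGeneratorConfiguration("SNOWFLAKE", "id"));
            // Master-slave
            // shardingRuleConfig.setMasterSlaveRuleConfigs(null);
            // Per-table rules
            shardingRuleConfig.setTableRuleConfigs(tableRuleConfigurations(tableNames));
            // shardingRuleConfig.setBindingTableGroups(Arrays.asList("t_student"));
            // shardingRuleConfig.setBroadcastTables(Arrays.asList("t_config"));
            // Data source names
            List<String> dataSourceNames = new ArrayList<>();
            dataSourceNames.add("data1");
            return new ShardingRule(shardingRuleConfig, dataSourceNames);
        }

        /**
         * Builds the table rules.
         */
        public List<TableRuleConfiguration> tableRuleConfigurations(List<String> tableNames) {
            List<TableRuleConfiguration> tableRuleConfigs = new LinkedList<>();
            for (String table : tableNames) {
                String actualDataNodes = "data1.".concat(table).concat("_$->{1..2}");
                TableRuleConfiguration ruleConfiguration = new TableRuleConfiguration(table, actualDataNodes);
                // ruleConfiguration.setDatabaseShardingStrategyConfig(null);
                String algorithmExpression = table.concat("_$->{id % 2 + 1}");
                // Inline table strategy, the same one the YAML mode declares under tableStrategy.inline
                ruleConfiguration.setTableShardingStrategyConfig(
                        new InlineShardingStrategyConfiguration("id", algorithmExpression));
                // Primary key generation strategy for this table: SNOWFLAKE
                ruleConfiguration.setKeyGeneratorConfig(new KeyGeneratorConfiguration("SNOWFLAKE", "id"));
                tableRuleConfigs.add(ruleConfiguration);
            }
            return tableRuleConfigs;
        }

        /**
         * SqlSessionFactory
         */
        @Bean
        public SqlSessionFactory sqlSessionFactory(DataSource dataSource) throws Exception {
            SqlSessionFactoryBean factoryBean = new SqlSessionFactoryBean();
            factoryBean.setDataSource(dataSource);
            factoryBean.setTypeAliasesPackage("com.hzy.sharding.entity");
            // Location of the mapper XML files
            Resource[] resources = new PathMatchingResourcePatternResolver().getResources("classpath:mapper/*.xml");
            factoryBean.setMapperLocations(resources);
            return factoryBean.getObject();
        }

        /**
         * Transaction manager
         */
        @Bean
        public PlatformTransactionManager transactionManager(DataSource dataSource) {
            return new DataSourceTransactionManager(dataSource);
        }

        /**
         * MyBatis mapper scanning
         */
        @Bean
        public MapperScannerConfigurer scannerConfigurer() {
            MapperScannerConfigurer configurer = new MapperScannerConfigurer();
            configurer.setSqlSessionFactoryBeanName("sqlSessionFactory");
            configurer.setBasePackage("com.hzy.sharding.dao");
            return configurer;
        }

        private static Properties getProperties() {
            Properties properties = new Properties();
            // Whether to log SQL parsing and rewriting
            properties.setProperty("sql.show", Boolean.TRUE.toString());
            // Number of worker threads for SQL execution; 0 means unlimited
            properties.setProperty("executor.size", "4");
            // Maximum number of connections each physical database allocates per query
            properties.setProperty("max.connections.size.per.query", "1");
            // Whether to check sharded-table metadata consistency at startup
            properties.setProperty("check.table.metadata.enabled", "false");
            return properties;
        }
    }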

Table and database sharding

YAML mode

    spring:
      shardingsphere:
        datasource:
          names: data-0,data-1,data-2,data-3 # data source names, arbitrary
          data-0: # connection settings for data source data-0
            type: com.alibaba.druid.pool.DruidDataSource
            driverClassName: com.mysql.jdbc.Driver
            url: jdbc:mysql://localhost:3306/test0?useUnicode=true
            username: root
            password: 123456
          data-1: # connection settings for data source data-1
            type: com.alibaba.druid.pool.DruidDataSource
            driverClassName: com.mysql.jdbc.Driver
            url: jdbc:mysql://localhost:3306/test01?useUnicode=true
            username: root
            password: 123456
          data-2: # connection settings for data source data-2
            type: com.alibaba.druid.pool.DruidDataSource
            driverClassName: com.mysql.jdbc.Driver
            url: jdbc:mysql://localhost:3306/test1?useUnicode=true
            username: root
            password: 123456
          data-3: # connection settings for data source data-3
            type: com.alibaba.druid.pool.DruidDataSource
            driverClassName: com.mysql.jdbc.Driver
            url: jdbc:mysql://localhost:3306/test11?useUnicode=true
            username: root
            password: 123456
        sharding:
          # Master-slave configuration
          # master-slave-rules:
          #   ds_0:
          #     slave-data-source-names: master0slave0
          #     master-data-source-name: master0
          #     load-balance-algorithm-type: round_robin # load-balancing algorithm for slaves: ROUND_ROBIN or RANDOM; ignored if `loadBalanceAlgorithmClassName` is set
          #   ds_1:
          #     slave-data-source-names: master1slave1
          #     master-data-source-name: master1
          #     load-balance-algorithm-type: round_robin
          # Default database sharding strategy
          default-database-strategy:
            # # Standard database sharding
            # standard:
            #   sharding-column: id
            #   precise-algorithm-class-name: com.hzy.sharding.algorithm.UserIdDatabaseAlgorithm
            # Built-in inline strategy
            inline:
              sharding-column: create_date
              algorithm-expression: data-$->{create_date % 4}
          # Binding tables
          binding-tables: t_student
          tables:
            t_student: # data distribution of t_student: its data nodes
              actualDataNodes: data-$->{0..3}.t_student_$->{1..2}
              tableStrategy:
                inline: # sharding strategy for t_student: sharding key plus sharding algorithm
                  shardingColumn: create_date
                  algorithmExpression: t_student_$->{create_date % 2 + 1}
              keyGenerator: # primary key generation for t_student
                type: SNOWFLAKE # generate keys with SNOWFLAKE
                column: id # primary key column
        props:
          sql:
            show: true
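The following sketch combines the two inline expressions from the YAML above, data-$->{create_date % 4} and t_student_$->{create_date % 2 + 1}, to show which data node a row would be routed to. The class DatabaseAndTableRouting is a hypothetical illustration, not a ShardingSphere API, and it assumes create_date holds a non-negative integer.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical illustration of the two inline expressions; not ShardingSphere code.
class DatabaseAndTableRouting {

    // Combines the database expression (% 4) and the table expression (% 2 + 1).
    static String dataNode(long createDate) {
        String database = "data-" + (createDate % 4);
        String table = "t_student_" + (createDate % 2 + 1);
        return database + "." + table;
    }

    // Collects every node that receives at least one value in [from, to].
    static Set<String> reachableNodes(long from, long to) {
        Set<String> nodes = new TreeSet<>();
        for (long value = from; value <= to; value++) {
            nodes.add(dataNode(value));
        }
        return nodes;
    }

    public static void main(String[] args) {
        // prints [data-0.t_student_1, data-1.t_student_2, data-2.t_student_1, data-3.t_student_2]
        System.out.println(reachableNodes(0, 99));
    }
}
```

Because both expressions use the same column, the remainder modulo 4 already fixes the remainder modulo 2, so under these assumptions only four of the eight declared data nodes ever receive rows. Sharding the database and the table on different columns, or using an expression such as a division followed by a modulo for one of them, would be one way to spread rows over all eight nodes.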

Java config mode

    import com.alibaba.druid.pool.DruidDataSource;
    import org.apache.ibatis.session.SqlSessionFactory;
    import org.apache.shardingsphere.api.config.sharding.KeyGeneratorConfiguration;
    import org.apache.shardingsphere.api.config.sharding.ShardingRuleConfiguration;
    import org.apache.shardingsphere.api.config.sharding.TableRuleConfiguration;
    import org.apache.shardingsphere.api.config.sharding.strategy.InlineShardingStrategyConfiguration;
    import org.apache.shardingsphere.api.config.sharding.strategy.NoneShardingStrategyConfiguration;
    import org.apache.shardingsphere.core.rule.ShardingRule;
    import org.apache.shardingsphere.shardingjdbc.jdbc.core.datasource.ShardingDataSource;
    import org.mybatis.spring.SqlSessionFactoryBean;
    import org.mybatis.spring.mapper.MapperScannerConfigurer;
    import org.springframework.boot.context.properties.ConfigurationProperties;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;
    import org.springframework.core.io.Resource;
    import org.springframework.core.io.support.PathMatchingResourcePatternResolver;
    import org.springframework.jdbc.datasource.DataSourceTransactionManager;
    import org.springframework.transaction.PlatformTransactionManager;

    import javax.sql.DataSource;
    import java.sql.SQLException;
    import java.util.ArrayList;
    import java.util.Arrays;
    import java.util.HashMap;
    import java.util.LinkedList;
    import java.util.List;
    import java.util.Map;
    import java.util.Properties;

    @Configuration
    public class DataSourceConfig {

        @Bean(name = "data0")
        @ConfigurationProperties(prefix = "spring.datasource.data0")
        public DataSource dataSource0() {
            return new DruidDataSource();
        }

        @Bean(name = "data1")
        @ConfigurationProperties(prefix = "spring.datasource.data1")
        public DataSource dataSource1() {
            return new DruidDataSource();
        }

        @Bean(name = "data2")
        @ConfigurationProperties(prefix = "spring.datasource.data2")
        public DataSource dataSource2() {
            return new DruidDataSource();
        }

        @Bean(name = "data3")
        @ConfigurationProperties(prefix = "spring.datasource.data3")
        public DataSource dataSource3() {
            return new DruidDataSource();
        }

        @Bean(name = "dataSource")
        public ShardingDataSource dataSource(DataSource data0, DataSource data1,
                                             DataSource data2, DataSource data3) throws SQLException {
            // Map data source names to the physical data sources
            Map<String, DataSource> dataSourceMap = new HashMap<>();
            dataSourceMap.put("data0", data0);
            dataSourceMap.put("data1", data1);
            dataSourceMap.put("data2", data2);
            dataSourceMap.put("data3", data3);
            // Logical table names
            List<String> tableNames = Arrays.asList("t_student");
            // With two or more databases a default data source must be set (see shardingRule);
            // its name must be one of the keys of dataSourceMap
            return new ShardingDataSource(dataSourceMap, shardingRule(tableNames), getProperties());
        }

        /**
         * Assembles the ShardingRule configuration.
         */
        public ShardingRule shardingRule(List<String> tableNames) {
            // Concrete database and table sharding strategies
            ShardingRuleConfiguration shardingRuleConfig = new ShardingRuleConfiguration();
            // Default data source, used for tables that are not sharded
            shardingRuleConfig.setDefaultDataSourceName("data0");
            // Default table sharding rule
            shardingRuleConfig.setDefaultTableShardingStrategyConfig(new NoneShardingStrategyConfiguration());
            // Default database sharding rule
            shardingRuleConfig.setDefaultDatabaseShardingStrategyConfig(
                    new InlineShardingStrategyConfiguration("create_date", "data$->{create_date % 4}"));
            // Default primary key generation strategy: SNOWFLAKE
            shardingRuleConfig.setDefaultKeyGeneratorConfig(new KeyGeneratorConfiguration("SNOWFLAKE", "id"));
            // Master-slave
            // shardingRuleConfig.setMasterSlaveRuleConfigs(null);
            // Per-table rules
            shardingRuleConfig.setTableRuleConfigs(tableRuleConfigurations(tableNames));
            // Bind the logical tables
            shardingRuleConfig.setBindingTableGroups(tableNames);
            // shardingRuleConfig.setBroadcastTables(Arrays.asList("t_config"));
            // Data source names
            List<String> dataSourceNames = new ArrayList<>();
            dataSourceNames.add("data0");
            dataSourceNames.add("data1");
            dataSourceNames.add("data2");
            dataSourceNames.add("data3");
            return new ShardingRule(shardingRuleConfig, dataSourceNames);
        }

        /**
         * Builds the table rules.
         */
        public List<TableRuleConfiguration> tableRuleConfigurations(List<String> tableNames) {
            List<TableRuleConfiguration> tableRuleConfigs = new LinkedList<>();
            for (String table : tableNames) {
                String actualDataNodes = "data$->{0..3}.".concat(table).concat("_$->{1..2}");
                TableRuleConfiguration ruleConfiguration = new TableRuleConfiguration(table, actualDataNodes);
                // ruleConfiguration.setDatabaseShardingStrategyConfig(null);
                String algorithmExpression = table.concat("_$->{create_date % 2 + 1}");
                // Table sharding strategy
                ruleConfiguration.setTableShardingStrategyConfig(
                        new InlineShardingStrategyConfiguration("create_date", algorithmExpression));
                // Database sharding strategy
                // ruleConfiguration.setDatabaseShardingStrategyConfig(new InlineShardingStrategyConfiguration("id", "data$->{id % 4}"));
                // Primary key generation strategy for this table: SNOWFLAKE
                ruleConfiguration.setKeyGeneratorConfig(new KeyGeneratorConfiguration("SNOWFLAKE", "id"));
                tableRuleConfigs.add(ruleConfiguration);
            }
            return tableRuleConfigs;
        }

        /**
         * SqlSessionFactory
         */
        @Bean
        public SqlSessionFactory sqlSessionFactory(DataSource dataSource) throws Exception {
            SqlSessionFactoryBean factoryBean = new SqlSessionFactoryBean();
            factoryBean.setDataSource(dataSource);
            factoryBean.setTypeAliasesPackage("com.hzy.sharding.entity");
            // Location of the mapper XML files
            Resource[] resources = new PathMatchingResourcePatternResolver().getResources("classpath:mapper/*.xml");
            factoryBean.setMapperLocations(resources);
            return factoryBean.getObject();
        }

        /**
         * Transaction manager
         */
        @Bean
        public PlatformTransactionManager transactionManager(DataSource dataSource) {
            return new DataSourceTransactionManager(dataSource);
        }

        /**
         * MyBatis mapper scanning
         */
        @Bean
        public MapperScannerConfigurer scannerConfigurer() {
            MapperScannerConfigurer configurer = new MapperScannerConfigurer();
            configurer.setSqlSessionFactoryBeanName("sqlSessionFactory");
            configurer.setBasePackage("com.hzy.sharding.dao");
            return configurer;
        }

        // ShardingSphere properties
        private static Properties getProperties() {
            Properties properties = new Properties();
            // Whether to log SQL parsing and rewriting
            properties.setProperty("sql.show", Boolean.TRUE.toString());
            // Number of worker threads for SQL execution; 0 means unlimited
            properties.setProperty("executor.size", "4");
            // Maximum number of connections each physical database allocates per query
            properties.setProperty("max.connections.size.per.query", "1");
            // Whether to check sharded-table metadata consistency at startup
            properties.setProperty("check.table.metadata.enabled", "false");
            return properties;
        }
    }

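Notes

1. The class that automatically loads the YAML configuration is SpringBootPropertiesConfigurationProperties.

2. keyGenerator.type: SNOWFLAKE makes ShardingSphere generate the table's primary key automatically as a distributed ID.

3. The Java config is simply the YAML configuration translated into code.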
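To give a feel for what a SNOWFLAKE-style key from note 2 looks like, here is a minimal sketch of the general snowflake layout (timestamp bits, worker bits, sequence bits). It is not ShardingSphere's implementation: the class SimpleSnowflake, the epoch, and the bit widths (10 worker bits, 12 sequence bits) are assumptions chosen for illustration.

```java
// A simplified snowflake-style generator for illustration only.
// The epoch and bit widths are assumptions, not ShardingSphere's actual values.
class SimpleSnowflake {
    private static final long EPOCH_MILLIS = 1577836800000L; // 2020-01-01T00:00:00Z, arbitrary
    private static final int WORKER_BITS = 10;
    private static final int SEQUENCE_BITS = 12;
    private static final long MAX_SEQUENCE = (1L << SEQUENCE_BITS) - 1;

    private final long workerId;
    private long lastMillis = -1L;
    private long sequence = 0L;

    SimpleSnowflake(long workerId) {
        if (workerId < 0 || workerId >= (1L << WORKER_BITS)) {
            throw new IllegalArgumentException("workerId out of range");
        }
        this.workerId = workerId;
    }

    // Returns an id that is unique and strictly increasing for this generator.
    synchronized long nextId() {
        long now = System.currentTimeMillis();
        if (now < lastMillis) {
            throw new IllegalStateException("clock moved backwards");
        }
        if (now == lastMillis) {
            sequence = (sequence + 1) & MAX_SEQUENCE;
            if (sequence == 0) {
                // Sequence exhausted for this millisecond: spin until the next one.
                while ((now = System.currentTimeMillis()) <= lastMillis) {
                    // busy-wait
                }
            }
        } else {
            sequence = 0L;
        }
        lastMillis = now;
        // Layout: [timestamp offset][worker id][sequence]
        return ((now - EPOCH_MILLIS) << (WORKER_BITS + SEQUENCE_BITS))
                | (workerId << SEQUENCE_BITS)
                | sequence;
    }

    public static void main(String[] args) {
        SimpleSnowflake generator = new SimpleSnowflake(1);
        System.out.println(generator.nextId());
        System.out.println(generator.nextId());
    }
}
```

In this sketch the sequence restarts at 0 in every new millisecond, so at low insert rates most generated ids end in an even sequence value. When such ids are also used as the sharding key with an expression like id % 2 + 1, it is worth checking how evenly rows actually spread across the tables.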

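Common errors

No such property: id for class: Script1
Cause: actualDataNodes only enumerates the physical data nodes and is evaluated without any row values, so it cannot reference a sharding column such as id.
Wrong: String actualDataNodes = "data$->{id % 4}.".concat(table).concat("_$->{1..2}");
Correct: String actualDataNodes = "data$->{0..3}.".concat(table).concat("_$->{1..2}");

Data sources cannot be empty.
Cause: sharding-jdbc-spring-boot-starter applies its own auto-configuration as soon as it is on the classpath. In Java config mode, do not include this dependency.

If the executed SQL shows the table name without a numeric suffix, check that the table name (t_student2 in this example) is spelled identically in all three places:

    t_student2: # logical table name
      actualDataNodes: m1.t_student2_$->{1..2}
      algorithmExpression: t_student2_$->{id % 2 + 1}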
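For the first error above, it may help to see what a range expression such as data$->{0..3}.t_student_$->{1..2} stands for: a fixed list of physical nodes. The sketch below enumerates that list with plain loops. DataNodeExpansion and its parameters are hypothetical and only imitate the result of the expansion; ShardingSphere itself evaluates the inline expression when the configuration is loaded.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical illustration: enumerates the nodes that a range-based
// actualDataNodes expression describes. Not ShardingSphere code.
class DataNodeExpansion {

    static List<String> expand(String dbPrefix, int dbFrom, int dbTo,
                               String table, int tableFrom, int tableTo) {
        List<String> nodes = new ArrayList<>();
        for (int db = dbFrom; db <= dbTo; db++) {
            for (int suffix = tableFrom; suffix <= tableTo; suffix++) {
                nodes.add(dbPrefix + db + "." + table + "_" + suffix);
            }
        }
        return nodes;
    }

    public static void main(String[] args) {
        // Equivalent in spirit to data$->{0..3}.t_student_$->{1..2}: 4 databases x 2 tables
        for (String node : expand("data", 0, 3, "t_student", 1, 2)) {
            System.out.println(node);
        }
    }
}
```

The list is complete before any row is inserted, which is why a row-dependent value such as id has no meaning inside actualDataNodes and belongs in algorithmExpression instead.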

Reposted from Java技术学习笔记.