1. To call a Hadoop cluster running on Linux from a Windows environment, configure the following:

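1. Set the Hadoop environment variables on Windows (e.g. HADOOP_HOME pointing to the local Hadoop directory).
2. Add winutils.exe and hadoop.dll to hadoop/bin. Download: https://github.com/steveloughran/winutils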

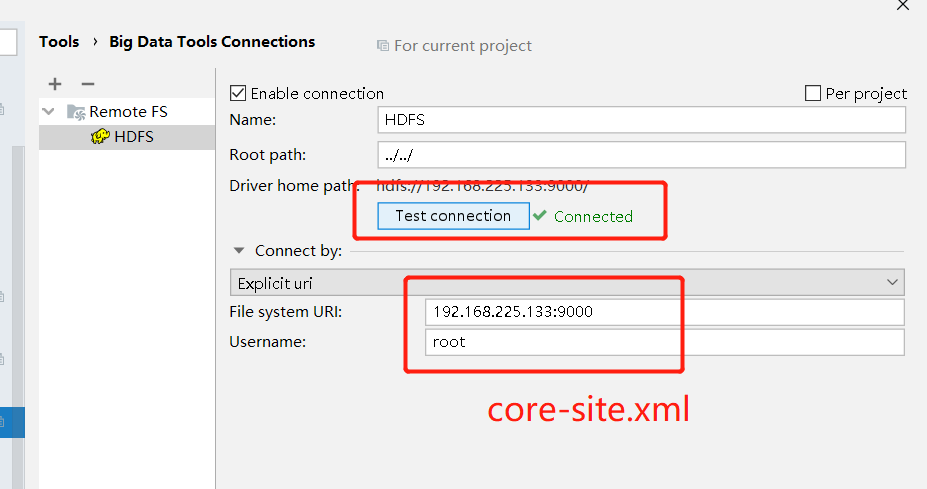
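Before testing a connection, it can help to confirm that both files actually sit in the right place. The sketch below is a hypothetical helper (`HadoopHomeCheck` is not part of Hadoop): it resolves the Hadoop home from the `hadoop.home.dir` system property, falling back to the `HADOOP_HOME` environment variable (to our knowledge the same order Hadoop itself uses), and lists which of the two files are missing from `bin`.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical pre-flight check for the Windows native helpers Hadoop needs. */
public class HadoopHomeCheck {

    // Files that must be present in hadoop/bin on Windows
    static final String[] REQUIRED = {"winutils.exe", "hadoop.dll"};

    /** Prefers the hadoop.home.dir system property, then the HADOOP_HOME environment variable. */
    static String resolveHadoopHome() {
        String home = System.getProperty("hadoop.home.dir");
        if (home == null || home.isEmpty()) {
            home = System.getenv("HADOOP_HOME");
        }
        return home;
    }

    /** Returns the required file names that are absent from <hadoopHome>/bin. */
    static List<String> missingBinaries(Path hadoopHome) {
        List<String> missing = new ArrayList<>();
        Path bin = hadoopHome.resolve("bin");
        for (String name : REQUIRED) {
            if (!Files.isRegularFile(bin.resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String home = resolveHadoopHome();
        if (home == null || home.isEmpty()) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
            return;
        }
        List<String> missing = missingBinaries(Paths.get(home));
        System.out.println(missing.isEmpty() ? "OK: " + home : "Missing in " + home + "\\bin: " + missing);
    }
}
```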

2. Install the Hadoop plugin in IntelliJ IDEA

Big Data Tools

Note:

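If clicking Test Connection fails, possible causes include:
1. The VM's firewall is still enabled, or the ports Hadoop needs are not open.
2. winutils.exe and hadoop.dll are missing.
3. On the first connection, the cluster may simply contain no data yet.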
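To separate the firewall cause from the others, a plain TCP probe from the Windows machine is often enough. This is a minimal sketch using only `java.net.Socket`; `PortCheck` is a hypothetical name, and the host and port are assumed from the `hdfsPath` used in the demo later in this article (the NameNode address `hdfs://192.168.225.133:9000`). Other ports, such as those of the DataNodes, may also need to be open.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical probe: can we open a TCP connection to host:port within the timeout? */
public class PortCheck {

    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true; // something is listening and the firewall let us through
        } catch (IOException e) {
            return false; // refused, timed out, or unroutable
        }
    }

    public static void main(String[] args) {
        // Assumed NameNode address, taken from hdfsPath in the yml demo
        String host = "192.168.225.133";
        int port = 9000;
        boolean ok = isReachable(host, port, 3000);
        System.out.println(host + ":" + port + (ok ? " is reachable" : " is NOT reachable"));
    }
}
```

If this probe fails while the NameNode is running on the VM, the firewall or port configuration is the likely culprit rather than the Windows binaries.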

3. Exception

```
Error: java.io.IOException: Filesystem closed
    at org.apache.hadoop.hdfs.DFSClient.checkOpen(DFSClient.java:823)
    at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:846)
    at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:907)
    at java.io.DataInputStream.readFully(DataInputStream.java:195)
    at java.io.DataInputStream.readFully(DataInputStream.java:169)
    at org.apache.parquet.hadoop.ParquetFileReader$ConsecutiveChunkList.readAll(ParquetFileReader.java:756)
    at org.apache.parquet.hadoop.ParquetFileReader.readNextRowGroup(ParquetFileReader.java:494)
    at org.apache.parquet.hadoop.InternalParquetRecordReader.checkRead(InternalParquetRecordReader.java:127)
    at org.apache.parquet.hadoop.InternalParquetRecordReader.nextKeyValue(InternalParquetRecordReader.java:208)
    at org.apache.parquet.hadoop.ParquetRecordReader.nextKeyValue(ParquetRecordReader.java:201)
    at org.apache.hadoop.mapreduce.lib.input.DelegatingRecordReader.nextKeyValue(DelegatingRecordReader.java:89)
```

Solutions:

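1. Do not call Hadoop's `FileSystem#close`.
2. Disable FileSystem's internal cache (this has a performance cost): `configuration.setBoolean("fs.hdfs.impl.disable.cache", true);`. With this option the project below cannot be used as-is, because each caller then has to create and close its own instances.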
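Both fixes follow from the same mechanism, which a toy model without any Hadoop dependency can illustrate. `FsCacheModel` and `ToyFs` are hypothetical classes, not Hadoop code: with the cache on, every caller receives the same shared instance, so one caller's `close()` makes the next read elsewhere fail just like `DFSClient.checkOpen`; with the cache off (the analogue of `fs.hdfs.impl.disable.cache=true`), each caller owns its own instance and is responsible for closing it.

```java
import java.io.Closeable;
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

/** Toy model (not Hadoop code) of a shared, cached FileSystem being closed under another caller. */
public class FsCacheModel {

    /** Stand-in for a FileSystem: every read fails once it has been closed. */
    static final class ToyFs implements Closeable {
        private boolean open = true;

        String read() throws IOException {
            if (!open) {
                throw new IOException("Filesystem closed");
            }
            return "data";
        }

        @Override
        public void close() {
            open = false;
        }
    }

    private final Map<String, ToyFs> cache = new HashMap<>();
    private final boolean disableCache;

    FsCacheModel(boolean disableCache) {
        this.disableCache = disableCache;
    }

    /** Returns the shared instance for the key, or a fresh one when caching is disabled. */
    synchronized ToyFs get(String key) {
        if (disableCache) {
            return new ToyFs();
        }
        return cache.computeIfAbsent(key, k -> new ToyFs());
    }

    /** Caller A uses and closes its FS, then caller B reads; returns what B sees. */
    static String scenario(boolean disableCache) {
        FsCacheModel fsCache = new FsCacheModel(disableCache);
        String key = "hdfs://192.168.225.133:9000";
        ToyFs readerFs = fsCache.get(key); // caller B, e.g. a record reader
        try (ToyFs callerA = fsCache.get(key)) {
            callerA.read(); // try-with-resources closes callerA's instance afterwards
        } catch (IOException e) {
            return "A failed: " + e.getMessage();
        }
        try {
            return readerFs.read();
        } catch (IOException e) {
            return e.getMessage();
        } finally {
            if (disableCache) {
                readerFs.close(); // without a cache, B owns its instance and closes it too
            }
        }
    }

    public static void main(String[] args) {
        System.out.println("cache enabled:  " + scenario(false)); // Filesystem closed
        System.out.println("cache disabled: " + scenario(true));  // data
    }
}
```

The trade-off matches the note above: disabling the cache removes the shared-close hazard, but every caller pays for creating its own instance and must remember to close it.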

4. HDFS Java API demo

yml:

```yaml
hdfs:
  hdfsPath: hdfs://192.168.225.133:9000
  hdfsName: root
  bufferSize: 67108864
```

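HDFSConfig:

```java
import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.springframework.context.annotation.Bean;

// Fragment of HDFSConfig; hdfsProperties holds the hdfs.* values from the yml above

public Configuration getConfiguration() {
    Configuration configuration = new Configuration();
    configuration.set("fs.defaultFS", hdfsProperties.getHdfsPath());
    // Disable the cache
    // configuration.setBoolean("fs.hdfs.impl.disable.cache", true);
    return configuration;
}

@Bean
public FileSystem getFileSystem() throws Exception {
    // Connects to hdfsPath as the user hdfsName; the bean is shared, so callers should not close it
    FileSystem fileSystem = FileSystem.get(new URI(hdfsProperties.getHdfsPath()), getConfiguration(), hdfsProperties.getHdfsName());
    return fileSystem;
}
```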

Project repository:

https://gitee.com/hzy100java/spring-hadoop-client.git

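Why you do not need to call close, from the underlying code: `getInternal` returns the cached instance when one already exists for the key, so every holder of that instance shares one object and closing it breaks them all. On a cache miss it creates a new instance, caches it, and, when `fs.automatic.close` is true (the default), registers it to be closed by a shutdown hook when the JVM exits.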

```java
// Cache.class
private FileSystem getInternal(URI uri, Configuration conf, Key key) throws IOException {
    FileSystem fs;
    synchronized (this) {
        fs = map.get(key);
    }
    if (fs != null) {
        return fs;
    }

    fs = createFileSystem(uri, conf);
    synchronized (this) { // refetch the lock again
        FileSystem oldfs = map.get(key);
        if (oldfs != null) { // a file system is created while lock is releasing
            fs.close(); // close the new file system
            return oldfs; // return the old file system
        }

        // now insert the new file system into the map
        if (map.isEmpty()
                && !ShutdownHookManager.get().isShutdownInProgress()) {
            ShutdownHookManager.get().addShutdownHook(clientFinalizer, SHUTDOWN_HOOK_PRIORITY);
        }
        fs.key = key;
        map.put(key, fs);
        if (conf.getBoolean("fs.automatic.close", true)) {
            toAutoClose.add(key);
        }
        return fs;
    }
}
```
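`getInternal` also shows a concurrency pattern worth noting: it looks up the map under the lock, creates the expensive object outside the lock, then re-checks under the lock and closes its own copy if another thread inserted one first. The sketch below reproduces that pattern generically; `CreateOutsideLockCache` and `Res` are hypothetical names, not Hadoop API, and the key string is only an illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.Supplier;

/** Generic sketch of the create-outside-the-lock, re-check-inside pattern used by getInternal. */
public class CreateOutsideLockCache<K, V extends AutoCloseable> {

    private final Map<K, V> map = new HashMap<>(); // only touched while holding "this"

    V get(K key, Supplier<V> factory) throws Exception {
        V value;
        synchronized (this) {
            value = map.get(key);
        }
        if (value != null) {
            return value; // fast path: every caller shares the cached instance
        }
        V created = factory.get(); // possibly slow creation, done without holding the lock
        synchronized (this) {
            V existing = map.get(key);
            if (existing != null) { // another thread won the race while we were creating
                created.close(); // discard our duplicate
                return existing;
            }
            map.put(key, created);
            return created;
        }
    }

    /** Toy resource that records whether it has been closed. */
    static final class Res implements AutoCloseable {
        volatile boolean closed;

        @Override
        public void close() {
            closed = true;
        }
    }

    /** Races several threads on one key; true if all received the same, still-open instance. */
    static boolean raceAllShareOne(int threads) throws Exception {
        CreateOutsideLockCache<String, Res> cache = new CreateOutsideLockCache<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        CountDownLatch start = new CountDownLatch(1);
        List<Future<Res>> results = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            results.add(pool.submit(() -> {
                start.await(); // release all threads at once to provoke the race
                return cache.get("hdfs://192.168.225.133:9000", Res::new);
            }));
        }
        start.countDown();
        Res first = results.get(0).get();
        boolean ok = !first.closed;
        for (Future<Res> f : results) {
            ok &= f.get() == first;
        }
        pool.shutdown();
        return ok;
    }

    public static void main(String[] args) throws Exception {
        System.out.println("all threads share one open instance: " + raceAllShareOne(8));
    }
}
```

Only the losers' freshly created objects are closed, and those are never handed out, which is why callers can always rely on the returned instance being open until someone closes the shared one.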

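This article is reposted from Java技术学习笔记 (Java Technology Study Notes). If you believe it infringes your rights, email contact@modb.pro with supporting evidence; once verified, 墨天轮 (Motianlun) will remove the content immediately.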