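In the previous articles we covered part of how Cilium works, so the background knowledge is now in place. In this installment, follow along as we analyze how network traffic flows to and from pods.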

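Before looking at the data flow, two notes about networking are needed.

Pod IPs are configured with a /32 mask. As a result, a pod reaches every other IP through L3 routing.

When the IP on an interface is not a /32, other machines share its subnet, and traffic to those machines uses the connected (on-link) route, leaving directly through that interface. Note that on Linux the connected route does not necessarily have the highest priority.

When the IP on an interface is a /32, no other machine shares its subnet, so every destination IP requires a routing table lookup to find the next hop.

Because a pod has a very simple interface configuration, testing shows that the observable behaviour is the same whatever prefix length the pod IP has. One thing does differ: the ARP table (analyzed in detail later when we look at the traffic).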
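The on-link rule described above can be illustrated with a minimal Python sketch. It only models the subnet membership test, not the kernel's full route lookup; the helper name `is_on_link` and the /24 variant of the pod address are assumptions made for illustration.

```python
import ipaddress


def is_on_link(local_cidr: str, destination: str) -> bool:
    """Return True if destination is inside the subnet configured on the interface."""
    iface = ipaddress.ip_interface(local_cidr)
    dest = ipaddress.ip_address(destination)
    # Our own address is local, not a neighbour on the link.
    return dest != iface.ip and dest in iface.network


# Pod address from this article: with a /32 no other address is ever on-link.
print(is_on_link("171.0.1.227/32", "171.0.1.234"))
# Hypothetical /24 variant of the same address: the peer would be on-link.
print(is_on_link("171.0.1.227/24", "171.0.1.234"))
```

With the /32 mask the check fails for every peer, which is why all traffic has to go through the routing table.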

rp_filter=0

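The common assumption is that traffic goes back out the way it came in. The system does not actually work that way: replies are routed like any other packet. When a packet arrives, the kernel looks up the route back to its source address, and if that route points to a different interface than the one the packet arrived on, rp_filter decides whether the packet is dropped.

When rp_filter is 0, the operating system performs no check.

When rp_filter is 1, the operating system performs a strict check: the interface the packet arrived on must be the interface that the route back to the source would use.

When rp_filter is 2, the system only checks that the source address is reachable through some route (the default route counts). When a default route exists, a value of 2 behaves almost like 0.

For every newly created interface, Linux automatically sets net.ipv4.conf.{interface}.rp_filter to the value of net.ipv4.conf.default.rp_filter. The policy actually applied to the interface, however, is the larger of the values in net.ipv4.conf.{all,interface}.rp_filter.

Therefore, to turn off ingress filtering, rp_filter must be set to 0 both for all and for the interface in question. To use the loose reverse path check, it is enough to set the interface's rp_filter to 2.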
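The "larger value wins" rule can be written down as a short Python sketch. The function and dictionary names are illustrative assumptions; the rule itself is the one stated above for net.ipv4.conf.{all,interface}.rp_filter.

```python
# Human-readable names for the three rp_filter modes described above.
MODES = {0: "no check", 1: "strict", 2: "loose"}


def effective_rp_filter(conf_all: int, conf_iface: int) -> int:
    """The mode applied to an interface is the larger of the 'all' and per-interface values."""
    return max(conf_all, conf_iface)


def describe(conf_all: int, conf_iface: int) -> str:
    return MODES[effective_rp_filter(conf_all, conf_iface)]


# Filtering is only off when both values are 0.
print(describe(0, 0))
# A strict 'all' value overrides an interface set to 0.
print(describe(1, 0))
# Setting only the interface to 2 is enough to get loose mode.
print(describe(0, 2))
```

This is why setting only the interface value to 0 does not disable the check when all is still 1.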

01

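Network data flow

Network data flows fall mainly into the following types (the official classification is coarser, see https://docs.cilium.io/en/stable/network/ebpf/lifeofapacket/):

1. Pod to pod on the same host

2. Pod to pod on different hosts

3. From a host to a pod on that host

4. From a host to a pod on another host

When the cluster's services are accessed from outside through a VIP or NodePort, there are again two cases:

The VIP and the target pod are on the same host

The VIP and the target pod are on different hosts

The traffic in these two cases is essentially the same as in cases 3 and 4 respectively.

Access to a VIP or NodePort from inside the cluster was described earlier under sock_conn: the destination IP is translated when the socket is established, so the traffic is still case 3 or case 4. This also means that the health of a VIP cannot be checked this way from inside the cluster.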
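To make the connect-time translation concrete, here is a minimal Python sketch. It is not Cilium's implementation: the service table, the VIP 10.96.0.100:80 and the function name are hypothetical, and only the backend 171.0.1.234:22 is taken from this article's test environment.

```python
import random

# Hypothetical service table: (VIP, port) -> list of backend (IP, port).
# The VIP and its port are made up for illustration.
SERVICES = {
    ("10.96.0.100", 80): [("171.0.1.234", 22)],
}


def translate_on_connect(dst_ip, dst_port, rng=random):
    """Rewrite the destination when the socket connects, before any packet exists."""
    backends = SERVICES.get((dst_ip, dst_port))
    if not backends:
        # Not a service address: leave the destination untouched.
        return dst_ip, dst_port
    # A real load balancer has its own selection policy; random choice is a stand-in.
    return rng.choice(backends)


print(translate_on_connect("10.96.0.100", 80))
print(translate_on_connect("171.0.1.227", 22))
```

Because the rewrite happens before the first packet is built, no packet addressed to the VIP ever appears on the wire, which matches the observation that an in-cluster probe only ever tests the backend.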

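Pod access to external addresses is a less common scenario and is not analyzed for now. Network policy will be covered separately later and is also left out here.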

Test environment

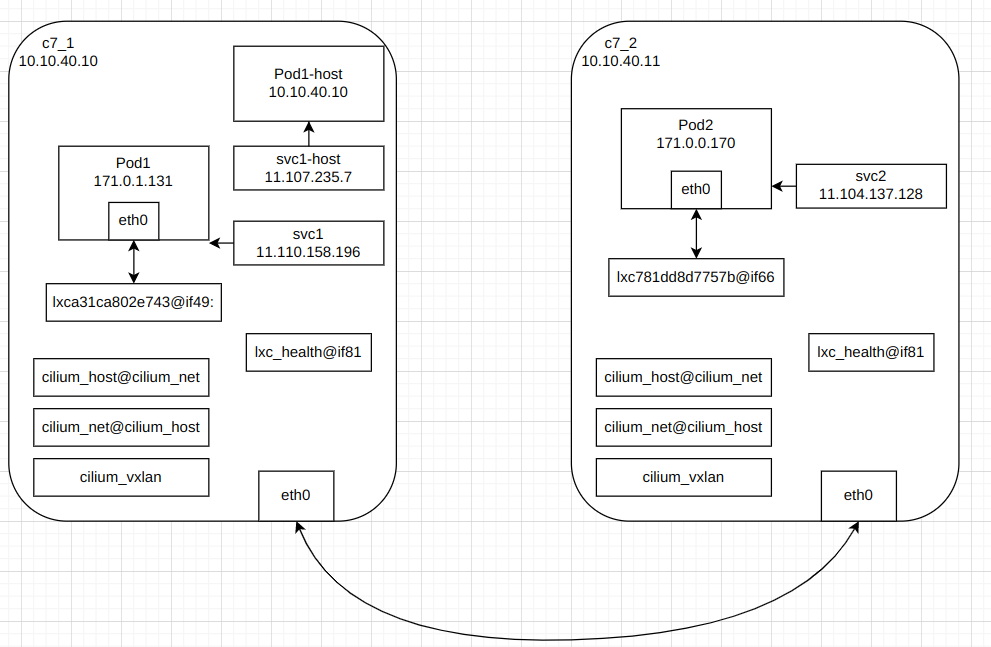

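Two CentOS 7 virtual machines with the kernel upgraded to 4.19. k8s: 1.18.17, cilium: 1.12.2 (without kube-proxy)

On c7_1, pod1 runs on the cluster network and pod1-host runs on the host network; svc1 points to pod1 and svc1-host points to pod1-host

On c7_2, pod2 runs on the cluster network and svc2 points to pod2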

02

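Data flow between pods on the same host

On newer kernels (>= 5.10) Cilium can enable host-routing, which performs better and changes the data path. That mode is not analyzed here.

Test environment

The Deployment used for the test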

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: c7-1
  name: c7-1
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      app: c7-1
  template:
    metadata:
      labels:
        app: c7-1
    spec:
      containers:
      - command:
        - sh
        - -c
        - |
          ln -s /usr/sbin/sshd /tmp/$POD_NAME
          /tmp/$POD_NAME -D
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              apiVersion: v1
              fieldPath: metadata.name
        image: centos:7
        name: centos
      dnsPolicy: ClusterFirst
      nodeSelector:
        kubernetes.io/hostname: c7-1

k get ciliumendpoint

NAME ENDPOINT ID IDENTITY ID INGRESS ENFORCEMENT EGRESS ENFORCEMENT VISIBILITY POLICY ENDPOINT STATE IPV4 IPV6

c7-1-8dc7cbb95-hrq7c 32 43561 <status disabled> <status disabled> <status disabled> ready 171.0.1.227

c7-1-8dc7cbb95-mbtvr 1777 43561 <status disabled> <status disabled> <status disabled> ready 171.0.1.234

k get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

c7-1-8dc7cbb95-hrq7c 1/1 Running 0 3m52s 171.0.1.227 c7-1 <none> <none>

c7-1-8dc7cbb95-mbtvr 1/1 Running 0 3m54s 171.0.1.234 c7-1 <none> <none>

# Process information

ps -ef|grep "/tmp/c7-1"

root 11764 11747 0 16:14 ? 00:00:00 /tmp/c7-1-8dc7cbb95-mbtvr -D

root 11929 11912 0 16:14 ? 00:00:00 /tmp/c7-1-8dc7cbb95-hrq7c -D

# Interface information (looked up by process ID)

nsenter -n -t 11764 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2871: eth0@if2872: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fe:ca:ae:42:4c:28 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 171.0.1.234/32 scope global eth0

valid_lft forever preferred_lft forever

nsenter -n -t 11929 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2873: eth0@if2874: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 52:c9:27:39:38:ec brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 171.0.1.227/32 scope global eth0

valid_lft forever preferred_lft forever

# Interface information on the host (based on the number after "if" above)

ip a show if2872

2872: lxc5a02905b2747@if2871: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 52:97:91:be:3d:b0 brd ff:ff:ff:ff:ff:ff link-netnsid 9

inet6 fe80::5097:91ff:febe:3db0/64 scope link

valid_lft forever preferred_lft forever

ip a show if2874

2874: lxc1ffdc61b0dd3@if2873: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c6:3a:d1:28:cb:a2 brd ff:ff:ff:ff:ff:ff link-netnsid 12

inet6 fe80::c43a:d1ff:fe28:cba2/64 scope link

valid_lft forever preferred_lft forever

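Based on the information above, the details are summarized in the following table:

| Pod | PID | Endpoint ID | Pod IP | Pod interface (ifindex, MAC) | Host-side interface (ifindex, MAC) |
| --- | --- | --- | --- | --- | --- |
| c7-1-8dc7cbb95-mbtvr | 11764 | 1777 | 171.0.1.234 | eth0 (2871, fe:ca:ae:42:4c:28) | lxc5a02905b2747 (2872, 52:97:91:be:3d:b0) |
| c7-1-8dc7cbb95-hrq7c | 11929 | 32 | 171.0.1.227 | eth0 (2873, 52:c9:27:39:38:ec) | lxc1ffdc61b0dd3 (2874, c6:3a:d1:28:cb:a2) |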
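The pairing between a pod's eth0 and its host-side lxc interface comes from the `@ifN` suffix in the `ip a` output above. A small Python sketch of that matching follows; the regular expression and helper names are assumptions for illustration, and the input lines are copied from the output above.

```python
import re

# Matches the header line of an interface, e.g. "2871: eth0@if2872: <...>".
LINK_RE = re.compile(r"^(\d+): ([^@:\s]+)@if(\d+):")


def parse_link(line):
    """Return (ifindex, name, peer_ifindex) or None if the line is not a veth header."""
    match = LINK_RE.match(line.strip())
    if match is None:
        return None
    return int(match.group(1)), match.group(2), int(match.group(3))


def is_veth_pair(a, b):
    """Two interfaces form a pair when each one names the other's index as its peer."""
    return a[0] == b[2] and b[0] == a[2]


pod_side = parse_link("2871: eth0@if2872: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500")
host_side = parse_link("2872: lxc5a02905b2747@if2871: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500")
other_host = parse_link("2874: lxc1ffdc61b0dd3@if2873: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500")

print(pod_side, host_side, is_veth_pair(pod_side, host_side))
print(is_veth_pair(pod_side, other_host))
```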

Packet test

# Access 171.0.1.234:22 from 171.0.1.227 (start the capture commands below first, then run curl)

nsenter -n -t 11929 curl 171.0.1.234:22

# On the host, capture packets related to 171.0.1.227 by endpoint ID

[root@c7-1 ~]# cilium monitor --related-to 32

Listening for events on 8 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

level=info msg="Initializing dissection cache..." subsys=monitor

<- endpoint 32 flow 0x0 , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 52:c9:27:39:38:ec -> ff:ff:ff:ff:ff:ff ARP

-> lxc1ffdc61b0dd3: c6:3a:d1:28:cb:a2 -> 52:c9:27:39:38:ec ARP

<- endpoint 32 flow 0x8aec6a59 , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.227:39276 -> 171.0.1.234:22 tcp SYN

-> endpoint 32 flow 0xc0c7968a , identity 43561->43561 state reply ifindex lxc1ffdc61b0dd3 orig-ip 171.0.1.234: 171.0.1.234:22 -> 171.0.1.227:39276 tcp SYN, ACK

<- endpoint 32 flow 0x8aec6a59 , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.227:39276 -> 171.0.1.234:22 tcp ACK

<- endpoint 32 flow 0x8aec6a59 , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.227:39276 -> 171.0.1.234:22 tcp ACK

-> endpoint 32 flow 0xc0c7968a , identity 43561->43561 state reply ifindex lxc1ffdc61b0dd3 orig-ip 171.0.1.234: 171.0.1.234:22 -> 171.0.1.227:39276 tcp ACK

-> endpoint 32 flow 0xc0c7968a , identity 43561->43561 state reply ifindex lxc1ffdc61b0dd3 orig-ip 171.0.1.234: 171.0.1.234:22 -> 171.0.1.227:39276 tcp ACK

<- endpoint 32 flow 0x8aec6a59 , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.227:39276 -> 171.0.1.234:22 tcp ACK

-> endpoint 32 flow 0xc0c7968a , identity 43561->43561 state reply ifindex lxc1ffdc61b0dd3 orig-ip 171.0.1.234: 171.0.1.234:22 -> 171.0.1.227:39276 tcp ACK

<- endpoint 32 flow 0x8aec6a59 , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.227:39276 -> 171.0.1.234:22 tcp ACK

-> endpoint 32 flow 0xc0c7968a , identity 43561->43561 state reply ifindex lxc1ffdc61b0dd3 orig-ip 171.0.1.234: 171.0.1.234:22 -> 171.0.1.227:39276 tcp ACK, RST

# On the host, capture packets related to 171.0.1.234 by endpoint ID

[root@c7-1 ~]# cilium monitor --related-to 1777

Listening for events on 8 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

level=info msg="Initializing dissection cache..." subsys=monitor

-> endpoint 1777 flow 0x8aec6a59 , identity 43561->43561 state new ifindex lxc5a02905b2747 orig-ip 171.0.1.227: 171.0.1.227:39276 -> 171.0.1.234:22 tcp SYN

<- endpoint 1777 flow 0x0 , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: fe:ca:ae:42:4c:28 -> ff:ff:ff:ff:ff:ff ARP

-> lxc5a02905b2747: 52:97:91:be:3d:b0 -> fe:ca:ae:42:4c:28 ARP

<- endpoint 1777 flow 0xc0c7968a , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.234:22 -> 171.0.1.227:39276 tcp SYN, ACK

-> endpoint 1777 flow 0x8aec6a59 , identity 43561->43561 state established ifindex lxc5a02905b2747 orig-ip 171.0.1.227: 171.0.1.227:39276 -> 171.0.1.234:22 tcp ACK

-> endpoint 1777 flow 0x8aec6a59 , identity 43561->43561 state established ifindex lxc5a02905b2747 orig-ip 171.0.1.227: 171.0.1.227:39276 -> 171.0.1.234:22 tcp ACK

<- endpoint 1777 flow 0xc0c7968a , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.234:22 -> 171.0.1.227:39276 tcp ACK

<- endpoint 1777 flow 0xc0c7968a , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.234:22 -> 171.0.1.227:39276 tcp ACK

-> endpoint 1777 flow 0x8aec6a59 , identity 43561->43561 state established ifindex lxc5a02905b2747 orig-ip 171.0.1.227: 171.0.1.227:39276 -> 171.0.1.234:22 tcp ACK

<- endpoint 1777 flow 0xc0c7968a , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.234:22 -> 171.0.1.227:39276 tcp ACK

-> endpoint 1777 flow 0x8aec6a59 , identity 43561->43561 state established ifindex lxc5a02905b2747 orig-ip 171.0.1.227: 171.0.1.227:39276 -> 171.0.1.234:22 tcp ACK

<- endpoint 1777 flow 0xc0c7968a , identity 43561->unknown state unknown ifindex 0 orig-ip 0.0.0.0: 171.0.1.234:22 -> 171.0.1.227:39276 tcp ACK, RST

Because ARP packets showed up during the capture, check the ARP table in each pod after the run:

[root@c7-1 ~]# nsenter -n -t 11764 arp -n

Address HWtype HWaddress Flags Mask Iface

171.0.1.95 ether 52:97:91:be:3d:b0 C eth0

[root@c7-1 ~]# nsenter -n -t 11929 arp -n

Address HWtype HWaddress Flags Mask Iface

171.0.1.95 ether c6:3a:d1:28:cb:a2 C eth0

The routing tables explain why the ARP table only contains 171.0.1.95:

[root@c7-1 ~]# nsenter -n -t 11764 ip r

default via 171.0.1.95 dev eth0 mtu 1450

171.0.1.95 dev eth0 scope link

[root@c7-1 ~]# nsenter -n -t 11929 ip r

default via 171.0.1.95 dev eth0 mtu 1450

171.0.1.95 dev eth0 scope link
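How these two routes lead to an ARP lookup for 171.0.1.95 can be sketched as a longest-prefix match in Python. This is a simplified model of route selection, not the kernel's algorithm; the function name and the on-link /24 route in the second example are hypothetical.

```python
import ipaddress


def arp_target(routes, destination):
    """Pick the most specific matching route and return the IP that must be resolved by ARP.

    routes is a list of (prefix, gateway) tuples; gateway is None for on-link routes.
    """
    dest = ipaddress.ip_address(destination)
    best = None
    for prefix, gateway in routes:
        net = ipaddress.ip_network(prefix)
        if dest in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, gateway)
    if best is None:
        raise LookupError("no route to " + destination)
    # Via a gateway: resolve the gateway. On-link: resolve the destination itself.
    return best[1] or destination


# The pod routing table shown above.
pod_routes = [("0.0.0.0/0", "171.0.1.95"), ("171.0.1.95/32", None)]
print(arp_target(pod_routes, "171.0.1.234"))

# Hypothetical table with an on-link /24 route, for comparison.
lan_routes = [("0.0.0.0/0", "171.0.1.95"), ("171.0.1.0/24", None)]
print(arp_target(lan_routes, "171.0.1.234"))
```

With the pod's real routes every destination resolves to the gateway, so the gateway is the only entry that can appear in the ARP table.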

cilium monitor does not print which IP an ARP request is asking for, so tcpdump can be used to verify it:

nsenter -n -t 11929 tcpdump -nnn -vvv arp

tcpdump: listening on eth0, link-type EN10MB (Ethernet), capture size 262144 bytes

16:54:14.177150 ARP, Ethernet (len 6), IPv4 (len 4), Request who-has 171.0.1.95 tell 171.0.1.227, length 28

16:54:14.177195 ARP, Ethernet (len 6), IPv4 (len 4), Reply 171.0.1.95 is-at c6:3a:d1:28:cb:a2, length 28

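ARP packet analysis

1. Why does the ARP request ask for the gateway rather than for 171.0.1.234?

Because the pod IP is a /32, no other IP is in its subnet, so the packet can only be sent to the next hop given by the routing table: the gateway. To fill in the destination MAC, the pod has to resolve the gateway's MAC address through ARP. This can be verified on another machine: if its IP is not a /32 (for example a /24), a request to another machine in the same subnet triggers an ARP request for that machine directly, and that IP is cached in the ARP table. The steps below show this verification.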

[root@c7-1 ~]# docker run -itd centos:7 sleep 12345

dfe7c39d0c88c5f32d64c4a7369c1c375071b82737066f3ea4c5757d3631ecb7

[root@c7-1 ~]# docker run -itd centos:7 sleep 22345

eb2070776843baf0ca124dfd7b6f731bedcdfb341e3cd4a854607c1a64117faa

[root@c7-1 ~]# ps -ef |grep 2345

root 3440 3420 0 17:07 pts/0 00:00:00 sleep 12345

root 3533 3515 1 17:07 pts/0 00:00:00 sleep 22345

root 3585 28332 0 17:07 pts/0 00:00:00 grep --color=auto 2345

[root@c7-1 ~]# nsenter -n -t 3440 ip -4 a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2885: eth0@if2886: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default link-netnsid 0

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@c7-1 ~]# nsenter -n -t 3533 ip -4 a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2887: eth0@if2888: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default link-netnsid 0

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@c7-1 ~]# nsenter -n -t 3440 bash

[root@c7-1 ~]# arp -n

[root@c7-1 ~]# ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.085 ms

^C

--- 172.17.0.3 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.085/0.085/0.085/0.000 ms

[root@c7-1 ~]# arp -n

Address HWtype HWaddress Flags Mask Iface

172.17.0.3 ether 02:42:ac:11:00:03 C eth0

2. As shown above, both pods use the same gateway address, and that address belongs to cilium_host:

ip addr show cilium_host

5: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fa:51:eb:ab:32:cd brd ff:ff:ff:ff:ff:ff

inet 171.0.1.95/32 scope global cilium_host

valid_lft forever preferred_lft forever

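Yet each pod resolves that address to a different MAC. This is handled by from_container, the program that Cilium attaches: it intercepts the ARP request and returns the ARP reply directly. The MAC in the reply is that of the host-side interface of the pod's veth pair. The function involved is ep_tail_call(ctx,CILIUM_CALL_ARP).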
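What such an ARP responder does to a request can be sketched in a few lines of Python using the standard ARP packet layout. This is only an illustration of the idea, not Cilium's eBPF code; the function names are assumptions, and the addresses are the ones seen in the tcpdump output above.

```python
import socket
import struct

# ARP payload: htype, ptype, hlen, plen, oper, sender MAC/IP, target MAC/IP (28 bytes).
ARP_FMT = "!HHBBH6s4s6s4s"
ARP_REQUEST, ARP_REPLY = 1, 2


def mac_bytes(mac):
    return bytes.fromhex(mac.replace(":", ""))


def build_request(sender_mac, sender_ip, target_ip):
    """Build a who-has request; the target MAC is unknown and left as zeros."""
    return struct.pack(ARP_FMT, 1, 0x0800, 6, 4, ARP_REQUEST,
                       mac_bytes(sender_mac), socket.inet_aton(sender_ip),
                       bytes(6), socket.inet_aton(target_ip))


def answer_arp(request, responder_mac):
    """Answer any request with responder_mac, whichever IP was asked for."""
    htype, ptype, hlen, plen, oper, sha, spa, _tha, tpa = struct.unpack(ARP_FMT, request)
    if oper != ARP_REQUEST:
        return None
    # The reply claims the requested IP (tpa) and is addressed back to the requester.
    return struct.pack(ARP_FMT, htype, ptype, hlen, plen, ARP_REPLY,
                       mac_bytes(responder_mac), tpa, sha, spa)


request = build_request("52:c9:27:39:38:ec", "171.0.1.227", "171.0.1.95")
reply = answer_arp(request, "c6:3a:d1:28:cb:a2")
fields = struct.unpack(ARP_FMT, reply)
print(fields[5].hex(":"), socket.inet_ntoa(fields[6]))
```

Since the responder answers with the MAC of the interface the request arrived on, two pods asking for the same gateway IP receive two different MAC addresses.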

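Pod traffic analysis

The same capture command is used, but with JSON output, which is more detailed. Below are the logs for a single SYN packet. The other packets follow a similar flow.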

cilium monitor --related-to 32 -j

{"cpu":"CPU 03:","type":"trace","mark":"0x992dc183","ifindex":"0","state":"unknown","observationPoint":"from-endpoint","traceSummary":"\u003c- endpoint 32","source":32,"bytes":74,"srcLabel":43561,"dstLabel":0,"dstID":0,"summary":{"ethernet":"Ethernet\t{Contents=[..14..] Payload=[..62..] SrcMAC=52:c9:27:39:38:ec DstMAC=c6:3a:d1:28:cb:a2 EthernetType=IPv4 Length=0}","ipv4":"IPv4\t{Contents=[..20..] Payload=[..40..] Version=4 IHL=5 TOS=0 Length=60 Id=8093 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=49489 SrcIP=171.0.1.227 DstIP=171.0.1.234 Options=[] Padding=[]}","tcp":"TCP\t{Contents=[..40..] Payload=[] SrcPort=50222 DstPort=22(ssh) Seq=2382719515 Ack=0 DataOffset=10 FIN=false SYN=true RST=false PSH=false ACK=false URG=false ECE=false CWR=false NS=false Window=64860 Checksum=23036 Urgent=0 Options=[..5..] Padding=[]}","l2":{"src":"52:c9:27:39:38:ec","dst":"c6:3a:d1:28:cb:a2"},"l3":{"src":"171.0.1.227","dst":"171.0.1.234"},"l4":{"src":"50222","dst":"22"}}}

{"cpu":"CPU 03:","type":"debug","message":"Conntrack lookup 1/2: src=171.0.1.227:50222 dst=171.0.1.234:22"}

{"cpu":"CPU 03:","type":"debug","message":"Conntrack lookup 2/2: nexthdr=6 flags=1"}

{"cpu":"CPU 03:","type":"debug","message":"CT verdict: New, revnat=0"}

{"cpu":"CPU 03:","type":"debug","message":"Successfully mapped addr=171.0.1.234 to identity=43561"}

{"cpu":"CPU 03:","type":"debug","message":"Conntrack create: proxy-port=0 revnat=0 src-identity=43561 lb=0.0.0.0"}

{"cpu":"CPU 03:","type":"debug","message":"Attempting local delivery for container id 1777 from seclabel 43561"}

{"cpu":"CPU 03:","type":"debug","message":"Conntrack lookup 1/2: src=171.0.1.234:22 dst=171.0.1.227:50222"}

{"cpu":"CPU 03:","type":"debug","message":"Conntrack lookup 2/2: nexthdr=6 flags=0"}

{"cpu":"CPU 03:","type":"debug","message":"CT entry found lifetime=6163982, revnat=0"}

{"cpu":"CPU 03:","type":"debug","message":"CT verdict: Reply, revnat=0"}

{"cpu":"CPU 03:","type":"trace","mark":"0xe5613621","ifindex":"lxc1ffdc61b0dd3","state":"reply","observationPoint":"to-endpoint","traceSummary":"-\u003e endpoint 32","source":32,"bytes":74,"srcLabel":43561,"dstLabel":43561,"dstID":32,"summary":{"ethernet":"Ethernet\t{Contents=[..14..] Payload=[..62..] SrcMAC=c6:3a:d1:28:cb:a2 DstMAC=52:c9:27:39:38:ec EthernetType=IPv4 Length=0}","ipv4":"IPv4\t{Contents=[..20..] Payload=[..40..] Version=4 IHL=5 TOS=0 Length=60 Id=0 Flags=DF FragOffset=0 TTL=63 Protocol=TCP Checksum=57838 SrcIP=171.0.1.234 DstIP=171.0.1.227 Options=[] Padding=[]}","tcp":"TCP\t{Contents=[..40..] Payload=[] SrcPort=22(ssh) DstPort=50222 Seq=358617332 Ack=2382719516 DataOffset=10 FIN=false SYN=true RST=false PSH=false ACK=true URG=false ECE=false CWR=false NS=false Window=64308 Checksum=23036 Urgent=0 Options=[..5..] Padding=[]}","l2":{"src":"c6:3a:d1:28:cb:a2","dst":"52:c9:27:39:38:ec"},"l3":{"src":"171.0.1.234","dst":"171.0.1.227"},"l4":{"src":"22","dst":"50222"}}}
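JSON output like the above is easy to post-process. The following Python sketch reduces each trace event to a one-line summary and skips debug events. The helper name is an assumption, and the sample lines are abbreviated copies of the events above that keep only the fields the code reads.

```python
import json


def summarize(line):
    """Return 'observationPoint src:port -> dst:port' for trace events, None otherwise."""
    event = json.loads(line)
    if event.get("type") != "trace":
        return None
    l3 = event["summary"]["l3"]
    l4 = event["summary"]["l4"]
    return "{} {}:{} -> {}:{}".format(
        event["observationPoint"], l3["src"], l4["src"], l3["dst"], l4["dst"])


# Abbreviated samples based on the monitor output above.
SAMPLE = [
    '{"type":"trace","observationPoint":"from-endpoint","source":32,'
    '"summary":{"l3":{"src":"171.0.1.227","dst":"171.0.1.234"},"l4":{"src":"50222","dst":"22"}}}',
    '{"cpu":"CPU 03:","type":"debug","message":"CT verdict: New, revnat=0"}',
    '{"type":"trace","observationPoint":"to-endpoint","source":32,'
    '"summary":{"l3":{"src":"171.0.1.234","dst":"171.0.1.227"},"l4":{"src":"22","dst":"50222"}}}',
]

summaries = [s for s in map(summarize, SAMPLE) if s is not None]
for s in summaries:
    print(s)
```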

The environment used for the trace below is as follows:

[root@lilh-cilium01 ~]# cilium endpoint list

ENDPOINT POLICY (ingress) POLICY (egress) IDENTITY LABELS (source:key[=value]) IPv6 IPv4 STATUS

ENFORCEMENT ENFORCEMENT

944 Disabled Disabled 1 k8s:node-role.kubernetes.io/control-plane ready

k8s:node-role.kubernetes.io/master

k8s:node.kubernetes.io/exclude-from-external-load-balancers

k8s:nodename=c7-1

k8s:vpc.id

reserved:host

1548 Disabled Disabled 4866 k8s:app=c7-2 171.0.0.182 ready

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=default

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

2055 Disabled Disabled 4 reserved:health 171.0.0.244 ready

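To make the function call flow easier to follow, I added debug statements to the code. The log line below is the output of cilium_dbg3(ctx, DBG_L4_CREATE, 1234, 4321, 22 << 16 | 22);. In that output, dport=5632 equals 22 × 2^8, that is, the 16-bit value 22 displayed with its two bytes swapped.

Also to make debugging easier, I extended the cpu field of the output: ty is the debug type, st is the debug subtype, s is the source endpoint ID, and h is the packet hash.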

{"cpu":"CPU 02:1718810640720145931,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=5632 proto=22"}
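The relation between the third argument of the marker call and the printed dport/proto values can be checked with a short Python sketch. The rendering rule used here (upper 16 bits byte-swapped as dport, lowest 8 bits as proto) is an assumption inferred from the outputs in this article, and the function names are made up for illustration.

```python
def swap16(value):
    """Swap the two bytes of a 16-bit value (network/host byte order conversion)."""
    return ((value & 0xFF) << 8) | ((value >> 8) & 0xFF)


def render_marker(arg3):
    """Reproduce the (dport, proto) pair printed for a marker argument (assumed rule)."""
    return swap16((arg3 >> 16) & 0xFFFF), arg3 & 0xFF


def marker_id(dport):
    """Recover the number placed in the upper 16 bits from the printed dport."""
    return swap16(dport)


# The example call: 22 << 16 | 22 is printed as dport=5632 proto=22.
print(render_marker(22 << 16 | 22))
# Markers seen later in the trace, e.g. dport=5120 proto=20 and dport=256 proto=22.
print(marker_id(5120), marker_id(256))
```

Reading the trace is easier with this in mind: the proto value (or the decoded dport) is simply the step number the author assigned to each instrumented function.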

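To show the call relationships, the output below uses the following format:

One line for the function being executed, followed by one line with the corresponding log output

| marks a call to a sub-function within the same function

|> marks entering a sub-function

<- marks the end of a sub-function and the return to the caller

[root@c7-1 ~]# cilium monitor -j # Some packets involve the host, so no endpoint ID is specified for the capture; alternatively, specify both the host and the pod endpoint IDs. From the list above, the host endpoint is 944.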

Listening for events on 8 CPUs with 64x4096 of shared memory

Press Ctrl-C to quit

# When the host accesses a pod, the packet starts from the host routing table, is routed to cilium_host, and triggers the eBPF program attached to cilium_host.

# cilium_host(6) clsact/ingress bpf_host.o:[to-host] id 1671

# cilium_host(6) clsact/egress bpf_host.o:[from-host] id 1680 The traffic is egress, so from-host is used

section: from-host

{"cpu":"CPU 02:1718810640720145931,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=22"}

|> handle_netdev

{"cpu":"CPU 02:1718810640720217336,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=20"}

|> do_netdev

{"cpu":"CPU 02:1718810640720233355,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=19"}

| inherit_identity_from_host

{"cpu":"CPU 02:1718810640720246529,ty:2,st:61,s:944,h:368041654","type":"debug","message":"Inheriting identity=1 from stack"}

| send_trace_notify

{"cpu":"CPU 02:1718810640720258958,ty:4,st:7,s:944,h:368041654","type":"trace","mark":"0x15efdeb6","ifindex":"0","state":"unknown","observationPoint":"from-host","traceSummary":"\u003c- host","source":944,"bytes":66,"srcLabel":1,"dstLabel":0,"dstID":0,"summary":{"ethernet":"Ethernet\t{Contents=[..14..] Payload=[..54..] SrcMAC=d6:d5:a2:5e:f5:8b DstMAC=d6:d5:a2:5e:f5:8b EthernetType=IPv4 Length=0}","ipv4":"IPv4\t{Contents=[..20..] Payload=[..32..] Version=4 IHL=5 TOS=0 Length=52 Id=14171 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=43976 SrcIP=171.0.0.234 DstIP=171.0.0.182 Options=[] Padding=[]}","tcp":"TCP\t{Contents=[..32..] Payload=[] SrcPort=41370 DstPort=22(ssh) Seq=2874142911 Ack=0 DataOffset=8 FIN=false SYN=true RST=false PSH=false ACK=false URG=false ECE=false CWR=false NS=false Window=64240 Checksum=22471 Urgent=0 Options=[..6..] Padding=[]}","l2":{"src":"d6:d5:a2:5e:f5:8b","dst":"d6:d5:a2:5e:f5:8b"},"l3":{"src":"171.0.0.234","dst":"171.0.0.182"},"l4":{"src":"41370","dst":"22"}}}

|> resolve_srcid_ipv4

{"cpu":"CPU 02:1718810640720499060,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=9"}

| DBG_IP_ID_MAP_SUCCEED4

{"cpu":"CPU 02:1718810640720514698,ty:2,st:58,s:944,h:368041654","type":"debug","message":"Successfully mapped addr=171.0.0.234 to identity=1"}

<-

| ep_tail_call(ctx, CILIUM_CALL_IPV4_FROM_HOST): dispatched through the tail-call rules to tail_handle_ipv4_from_host

{"cpu":"CPU 02:1718810640720528169,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=12"}

|> tail_handle_ipv4

{"cpu":"CPU 02:1718810640720540272,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=11"}

|> handle_ipv4

{"cpu":"CPU 02:1718810640720552398,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=10"}

| rewrite_dmac_to_host

{"cpu":"CPU 02:1718810640720565413,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=1"}

|> ipv4_local_delivery

{"cpu":"CPU 02:1718810640720590414,ty:2,st:2,s:944,h:368041654","type":"debug","message":"Attempting local delivery for container id 1548 from seclabel 1"}

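| tail_call_dynamic(ctx, &POLICY_CALL_MAP, ep->lxc_id): tail call through the entry defined in POLICY_CALL_MAP, which invokes handle_policy

# Every bpf_lxc program defines __section_tail(CILIUM_MAP_POLICY, TEMPLATE_LXC_ID), which ties the function to its entry in the map.

# From this log line on, s changes from 944 to 1548, because execution moves from the bpf_host code into bpf_lxc.

|> handle_policy # a tail call never returns; execution continues in this function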

{"cpu":"CPU 02:1718810640720604586,ty:2,st:55,s:1548,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=5632 proto=22"}

| invoke_tailcall_if(__and(is_defined(ENABLE_IPV4), is_defined(ENABLE_IPV6)),

CILIUM_CALL_IPV4_CT_INGRESS_POLICY_ONLY,

tail_ipv4_ct_ingress_policy_only);

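# invoke_tailcall_if is a macro with the following logic: when the condition holds, it performs the tail call named by the second argument; otherwise it calls the function given as the third argument directly.

# Because IPv4 is enabled but IPv6 is not, the function given as the third argument, tail_ipv4_ct_ingress_policy_only, is called directly. This is clearer in the macro-expanded C code.

# The function tail_ipv4_ct_ingress_policy_only is itself generated by the macro TAIL_CT_LOOKUP4(CILIUM_CALL_IPV4_CT_INGRESS_POLICY_ONLY,tail_ipv4_ct_ingress_policy_only....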

|> tail_ipv4_ct_ingress_policy_only

{"cpu":"CPU 02:1718810640720616974,ty:2,st:55,s:1548,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=0 proto=1"}

|> ct_lookup4:DBG_CT_LOOKUP4_1

{"cpu":"CPU 02:1718810640720632433,ty:2,st:48,s:1548,h:368041654","type":"debug","message":"Conntrack lookup 1/2: src=171.0.0.234:41370 dst=171.0.0.182:22"}

| DBG_CT_LOOKUP4_2

{"cpu":"CPU 02:1718810640720646426,ty:2,st:49,s:1548,h:368041654","type":"debug","message":"Conntrack lookup 2/2: nexthdr=6 flags=0"}

| DBG_CT_VERDICT

{"cpu":"CPU 02:1718810640720657835,ty:2,st:15,s:1548,h:368041654","type":"debug","message":"CT verdict: New, revnat=0"}

<-

| invoke_tailcall_if:CILIUM_CALL_IPV4_TO_LXC_POLICY_ONLY

|> tail_ipv4_policy

{"cpu":"CPU 02:1718810640720671626,ty:2,st:55,s:1548,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=5120 proto=20"}

|> ipv4_policy

{"cpu":"CPU 02:1718810640720683870,ty:2,st:55,s:1548,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=4864 proto=19"}

| ct_create4:DBG_CT_CREATED4

{"cpu":"CPU 02:1718810640720695668,ty:2,st:50,s:1548,h:368041654","type":"debug","message":"Conntrack create: proxy-port=0 revnat=0 src-identity=1 lb=0.0.0.0"}

| send_trace_notify4:TRACE_TO_LXC

{"cpu":"CPU 02:1718810640720709590,ty:4,st:0,s:1548,h:368041654","type":"trace","mark":"0x15efdeb6","ifindex":"lxc65e8a9b9c9ad","state":"new","observationPoint":"to-endpoint","traceSummary":"-\u003e endpoint 1548","source":1548,"bytes":66,"srcLabel":1,"dstLabel":4866,"dstID":1548,"summary":{"ethernet":"Ethernet\t{Contents=[..14..] Payload=[..54..] SrcMAC=e6:38:e0:d3:6d:6c DstMAC=5e:28:ab:5f:61:0b EthernetType=IPv4 Length=0}","ipv4":"IPv4\t{Contents=[..20..] Payload=[..32..] Version=4 IHL=5 TOS=0 Length=52 Id=14171 Flags=DF FragOffset=0 TTL=63 Protocol=TCP Checksum=44232 SrcIP=171.0.0.234 DstIP=171.0.0.182 Options=[] Padding=[]}","tcp":"TCP\t{Contents=[..32..] Payload=[] SrcPort=41370 DstPort=22(ssh) Seq=2874142911 Ack=0 DataOffset=8 FIN=false SYN=true RST=false PSH=false ACK=false URG=false ECE=false CWR=false NS=false Window=64240 Checksum=22471 Urgent=0 Options=[..6..] Padding=[]}","l2":{"src":"e6:38:e0:d3:6d:6c","dst":"5e:28:ab:5f:61:0b"},"l3":{"src":"171.0.0.234","dst":"171.0.0.182"},"l4":{"src":"41370","dst":"22"}}}

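| redirect_ep(ctx, ifindex, from_host): at this point the packet is redirected from cilium_host to the lxc interface

# Note: in the packet above SYN=true, so it is the first SYN of the TCP three-way handshake; in the packet below SYN=true and ACK=true, so it is the reply.

# So once the packet above has been redirected to the lxc interface, the server has received the SYN and answers with SYN+ACK.

# Handling of the reply packet on the lxc side: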

from-container:

handle_xgress

{"cpu":"CPU 02:1718810640720808035,ty:2,st:55,s:1548,h:3628285243","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=3840 proto=15"}

| send_trace_notify:TRACE_FROM_LXC

{"cpu":"CPU 02:1718810640720840214,ty:4,st:5,s:1548,h:3628285243","type":"trace","mark":"0xd8433d3b","ifindex":"0","state":"unknown","observationPoint":"from-endpoint","traceSummary":"\u003c- endpoint 1548","source":1548,"bytes":66,"srcLabel":4866,"dstLabel":0,"dstID":0,"summary":{"ethernet":"Ethernet\t{Contents=[..14..] Payload=[..54..] SrcMAC=5e:28:ab:5f:61:0b DstMAC=e6:38:e0:d3:6d:6c EthernetType=IPv4 Length=0}","ipv4":"IPv4\t{Contents=[..20..] Payload=[..32..] Version=4 IHL=5 TOS=0 Length=52 Id=0 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=58147 SrcIP=171.0.0.182 DstIP=171.0.0.234 Options=[] Padding=[]}","tcp":"TCP\t{Contents=[..32..] Payload=[] SrcPort=22(ssh) DstPort=41370 Seq=2405597616 Ack=2874142912 DataOffset=8 FIN=false SYN=true RST=false PSH=false ACK=true URG=false ECE=false CWR=false NS=false Window=64240 Checksum=22471 Urgent=0 Options=[..6..] Padding=[]}","l2":{"src":"5e:28:ab:5f:61:0b","dst":"e6:38:e0:d3:6d:6c"},"l3":{"src":"171.0.0.182","dst":"171.0.0.234"},"l4":{"src":"22","dst":"41370"}}}

| ep_tail_call(ctx, CILIUM_CALL_IPV4_FROM_LXC):tail_handle_ipv4

{"cpu":"CPU 02:1718810640720924576,ty:2,st:55,s:1548,h:3628285243","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=3328 proto=13"}

|> __tail_handle_ipv4

{"cpu":"CPU 02:1718810640720938823,ty:2,st:55,s:1548,h:3628285243","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=3072 proto=12"}

| invoke_tailcall_if(is_defined(ENABLE_PER_PACKET_LB),

CILIUM_CALL_IPV4_CT_EGRESS, tail_ipv4_ct_egress)

TAIL_CT_LOOKUP4(CILIUM_CALL_IPV4_CT_EGRESS, tail_ipv4_ct_egress, CT_EGRESS,

is_defined(ENABLE_PER_PACKET_LB),

CILIUM_CALL_IPV4_FROM_LXC_CONT, tail_handle_ipv4_cont)

{"cpu":"CPU 02:1718810640720951291,ty:2,st:55,s:1548,h:3628285243","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=0 proto=1"}

|> ct_lookup4

{"cpu":"CPU 02:1718810640720972199,ty:2,st:48,s:1548,h:3628285243","type":"debug","message":"Conntrack lookup 1/2: src=171.0.0.182:22 dst=171.0.0.234:41370"}

{"cpu":"CPU 02:1718810640720985494,ty:2,st:49,s:1548,h:3628285243","type":"debug","message":"Conntrack lookup 2/2: nexthdr=6 flags=1"}

|> __ct_lookup -> DBG_CT_MATCH

{"cpu":"CPU 02:1718810640721012263,ty:2,st:8,s:1548,h:3628285243","type":"debug","message":"CT entry found lifetime=15284, revnat=0"}

{"cpu":"CPU 02:1718810640721032958,ty:2,st:15,s:1548,h:3628285243","type":"debug","message":"CT verdict: Reply, revnat=0"}

<-

| invoke_tailcall_if -> tail_handle_ipv4_cont

{"cpu":"CPU 02:1718810640721056456,ty:2,st:55,s:1548,h:3628285243","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=2816 proto=11"}

|> handle_ipv4_from_lxc

{"cpu":"CPU 02:1718810640721071155,ty:2,st:55,s:1548,h:3628285243","type":"debug","message":"Matched L4 policy; creating conntrack src=1234 dst=4321 dport=2560 proto=10"}

| cilium_dbg(ctx, info ? DBG_IP_ID_MAP_SUCCEED4

{"cpu":"CPU 02:1718810640721083091,ty:2,st:58,s:1548,h:3628285243","type":"debug","message":"Successfully mapped addr=171.0.0.234 to identity=1"}

| send_trace_notify(ctx, TRACE_TO_STACK

{"cpu":"CPU 02:1718810640721095140,ty:4,st:3,s:1548,h:3628285243","type":"trace","mark":"0xd8433d3b","ifindex":"0","state":"reply","observationPoint":"to-stack","traceSummary":"-\u003e stack","source":1548,"bytes":66,"srcLabel":4866,"dstLabel":1,"dstID":0,"summary":{"ethernet":"Ethernet\t{Contents=[..14..] Payload=[..54..] SrcMAC=5e:28:ab:5f:61:0b DstMAC=e6:38:e0:d3:6d:6c EthernetType=IPv4 Length=0}","ipv4":"IPv4\t{Contents=[..20..] Payload=[..32..] Version=4 IHL=5 TOS=0 Length=52 Id=0 Flags=DF FragOffset=0 TTL=63 Protocol=TCP Checksum=58403 SrcIP=171.0.0.182 DstIP=171.0.0.234 Options=[] Padding=[]}","tcp":"TCP\t{Contents=[..32..] Payload=[] SrcPort=22(ssh) DstPort=41370 Seq=2405597616 Ack=2874142912 DataOffset=8 FIN=false SYN=true RST=false PSH=false ACK=true URG=false ECE=false CWR=false NS=false Window=64240 Checksum=22471 Urgent=0 Options=[..6..] Padding=[]}","l2":{"src":"5e:28:ab:5f:61:0b","dst":"e6:38:e0:d3:6d:6c"},"l3":{"src":"171.0.0.182","dst":"171.0.0.234"},"l4":{"src":"22","dst":"41370"}}}

| cilium_dbg_capture(ctx, DBG_CAPTURE_DELIVERY

{"cpu":"CPU 02:1718810640721208807,ty:3,st:4,s:1548,h:3628285243","type":"capture","mark":"0xd8433d3b","message":"Delivery to ifindex 0","prefix":"-\u003e 0","source":1548,"bytes":66,"summary":"171.0.0.182:22 -\u003e 171.0.0.234:41370 tcp SYN, ACK"}

# This completes the handling of the reply packet. The client (the host) then sends the final ACK of the handshake:

{"cpu":"CPU 02:1718810640721263430,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=22"}

{"cpu":"CPU 02:1718810640721288880,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=20"}

{"cpu":"CPU 02:1718810640721302331,ty:2,st:55,s:944,h:368041654","type":"debug","message":"Matched L4 policy; creating conntrack src=1235 dst=5321 dport=256 proto=19"}

{"cpu":"CPU 02:1718810640721314539,ty:2,st:61,s:944,h:368041654","type":"debug","message":"Inheriting identity=1 from stack"}

{"cpu":"CPU 02:1718810640721325678,ty:4,st:7,s:944,h:368041654","type":"trace","mark":"0x15efdeb6","ifindex":"0","state":"unknown","observationPoint":"from-host","traceSummary":"\u003c- host","source":944,"bytes":54,"srcLabel":1,"dstLabel":0,"dstID":0,"summary":{"ethernet":"Ethernet\t{Contents=[..14..] Payload=[..46..] SrcMAC=d6:d5:a2:5e:f5:8b DstMAC=d6:d5:a2:5e:f5:8b EthernetType=IPv4 Length=0}","ipv4":"IPv4\t{Contents=[..20..] Payload=[..20..] Version=4 IHL=5 TOS=0 Length=40 Id=14172 Flags=DF FragOffset=0 TTL=64 Protocol=TCP Checksum=43987 SrcIP=171.0.0.234 DstIP=171.0.0.182 Options=[] Padding=[]}","tcp":"TCP\t{Contents=[..20..] Payload=[] SrcPort=41370 DstPort=22(ssh) Seq=2874142912 Ack=2405597617 DataOffset=5 FIN=false SYN=false RST=false PSH=false ACK=true URG=false ECE=false CWR=false NS=false Window=502 Checksum=22459 Urgent=0 Options=[] Padding=[]}","l2":{"src":"d6:d5:a2:5e:f5:8b","dst":"d6:d5:a2:5e:f5:8b"},"l3":{"src":"171.0.0.234","dst":"171.0.0.182"},"l4":{"src":"41370","dst":"22"}}}
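The extended cpu field in the trace above makes it possible to separate the two directions of the connection by packet hash and to see which endpoint's program produced each event. A minimal Python sketch follows. The field layout is the author's custom format shown above; the parser, its names, and the label "stamp" for the long number after the CPU id are assumptions, and the sample lines are shortened copies of events from the trace.

```python
import json
import re
from collections import defaultdict

# Custom cpu field, e.g. "CPU 02:1718810640720145931,ty:2,st:55,s:944,h:368041654".
CPU_RE = re.compile(r"^CPU (\d+):(\d+),ty:(\d+),st:(\d+),s:(\d+),h:(\d+)$")


def parse_cpu(field):
    """Split the extended cpu field into its parts; return None for the stock format."""
    match = CPU_RE.match(field)
    if match is None:
        return None
    cpu, stamp, ty, st, endpoint, pkt_hash = map(int, match.groups())
    return {"cpu": cpu, "stamp": stamp, "type": ty, "subtype": st,
            "endpoint": endpoint, "hash": pkt_hash}


def endpoints_by_flow(lines):
    """Group events by packet hash and list the endpoint IDs that handled each packet."""
    flows = defaultdict(list)
    for line in lines:
        meta = parse_cpu(json.loads(line)["cpu"])
        if meta is not None:
            flows[meta["hash"]].append(meta["endpoint"])
    return dict(flows)


SAMPLE = [
    '{"cpu":"CPU 02:1718810640720145931,ty:2,st:55,s:944,h:368041654","type":"debug"}',
    '{"cpu":"CPU 02:1718810640720604586,ty:2,st:55,s:1548,h:368041654","type":"debug"}',
    '{"cpu":"CPU 02:1718810640720808035,ty:2,st:55,s:1548,h:3628285243","type":"debug"}',
]

flows = endpoints_by_flow(SAMPLE)
print(flows)
```

In the sample, the SYN (hash 368041654) is seen first by endpoint 944 and then by 1548, while the SYN+ACK (hash 3628285243) is only seen by 1548, mirroring the trace above.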

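After analyzing the packet flow above, a few questions remain:

Sending appears to be completed entirely within cilium_host, and receiving entirely within lxc. It feels as if something is missing.

What was analyzed is the three-way handshake, which the application does not see. Does the flow change once user-level data packets are involved?

In part two, we will use pwru, another tool from the Cilium project, together with the kernel's behaviour to fill in the answers to these questions.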

Author of this issue: Product R&D Department, 沃趣科技

Copyrighted work. Reproduction without permission is prohibited.
