关注+星标,干货第一时间送达

前面的一篇文章:

给大家带了一点新玩意儿,

但是,咱们的老本行不能变啊!

SQL boys 和 girls ,

Lets go!

其实,这道题和上一道SQL题:

简直是,异曲同工啊!

不得不佩服出题人,

是"机关算尽",

可是,咱们还能"刻舟求剑",

完美答题。

一个字,绝!

【温馨提示:建表语句及数据导入脚本,已经放在文章末尾】

一、需求场景

题目:

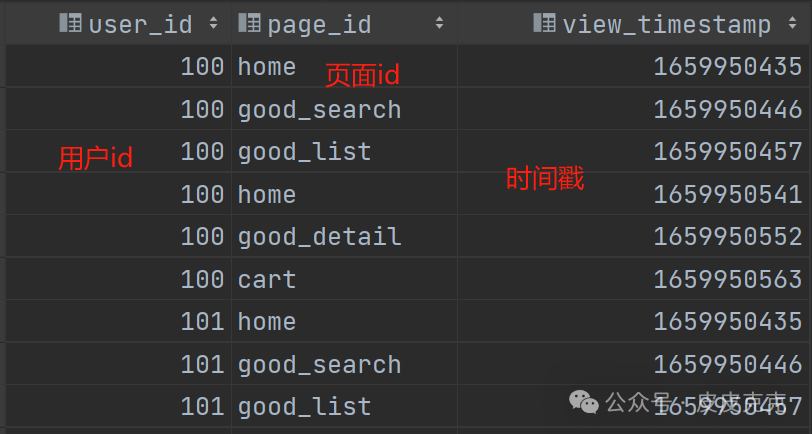

现有页面浏览记录表page_view_events,表中有每个用户的每次页面访问记录。

规定若同一用户相邻两次访问记录时间间隔小于60s,则认为这两次流量记录属于同一会话。

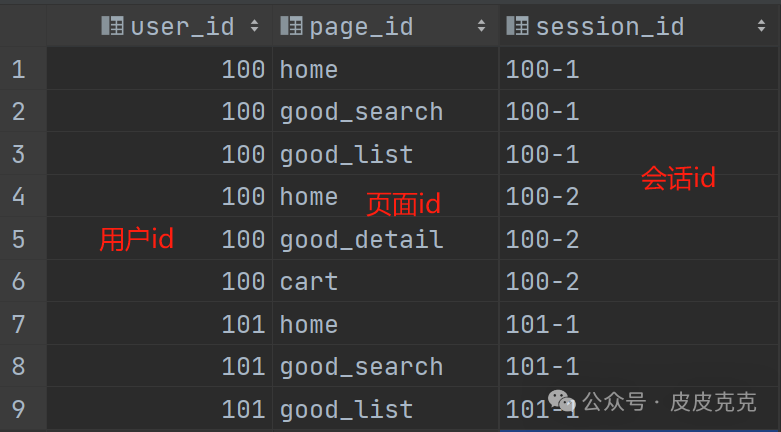

要求:为属于同一会话访问记录增加一个相同的会话id字段。

页面浏览记录表page_view_events:

正确结果:

二、解决方案

演示工具:Hive-3.1.3 + DataGrip2022

对于上面给出的页面浏览记录表page_view_events,

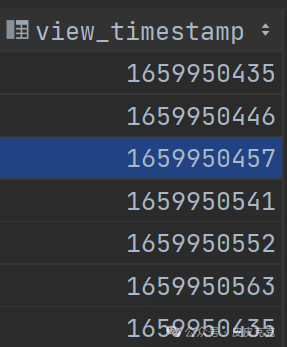

里面的view_timestamp字段:

大家要有个认识,

这个表示:

格林威治时间1970年01月01日00时00分00秒

(北京时间1970年01月01日08时00分00秒)起至现在的总秒数

知道了这个,

在计算会话访问差值60s的时候,

直接两列相减即可。

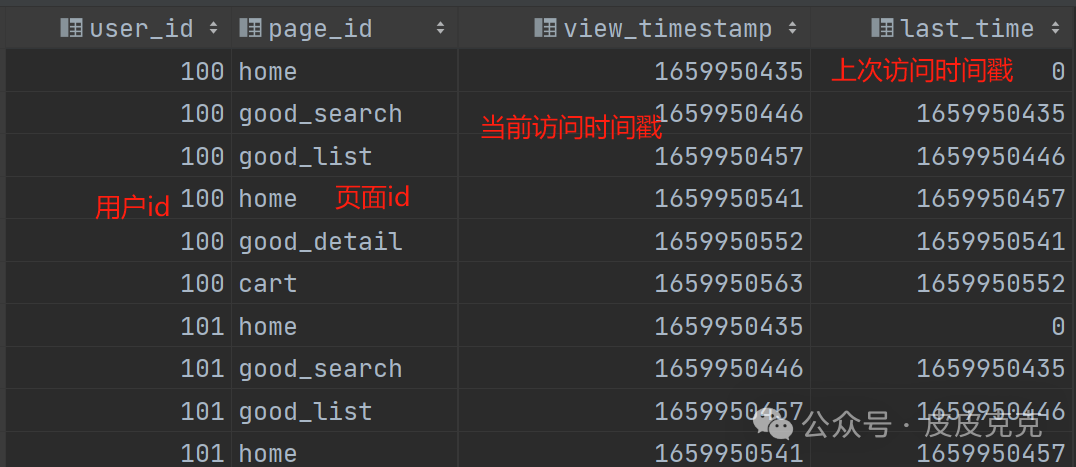

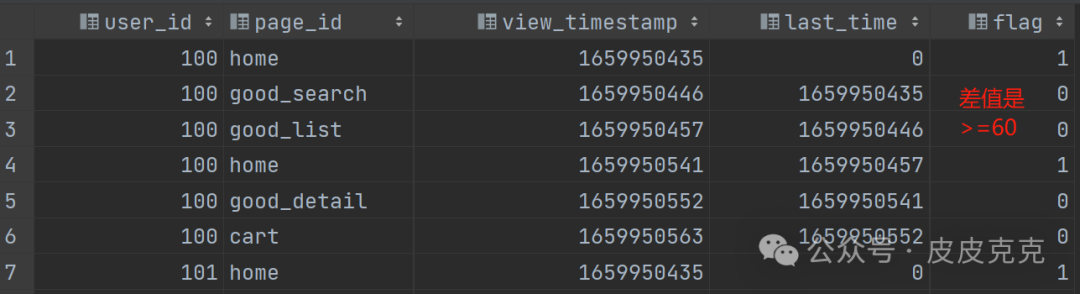

(1)按照user_id分组开窗,访问时间戳view_timestamp排序,新增每行数据的上一行

select user_id,page_id,view_timestamp,

lag(view_timestamp,1,0) over (partition by user_id order by view_timestamp) as last_time

from page_view_events

结果集:

这步应该简单,

主要用到函数:lag()

语法格式:

LAG(column, offset[, default]) OVER ([PARTITION BY partition_expression, ...] ORDER BY sort_expression [ASC|DESC], ...)

参数:column:指定要计算的列。

offset:指定要向前查找多少行。

offset 的默认值为 1。

default:当偏移量超过可用行数时,指定要返回的默认值。默认值为 NULL。

PARTITION BY:可选项,用于按照指定的表达式进行分区。ORDER BY:必须指定,用于根据指定的表达式排序。

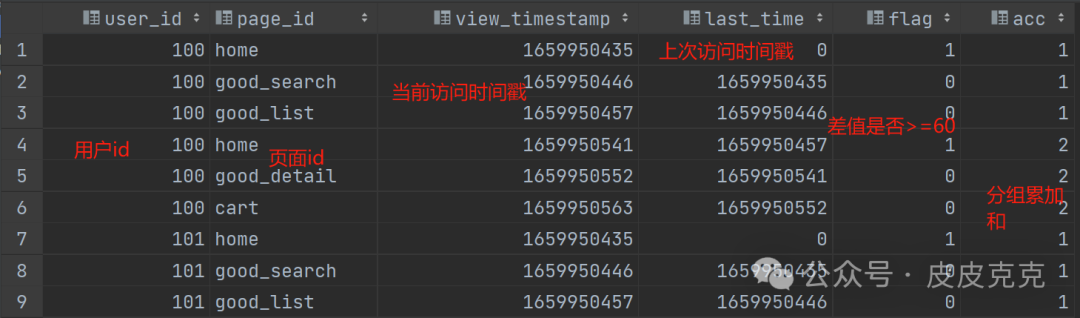

(2)计算每行数据中,当前访问时间戳time_stamp和上次访问时间戳last_time的差值

select user_id,page_id,view_timestamp,last_time,

`if`(view_timestamp-last_time>=60,1,0) as flag

from (

select user_id,page_id,view_timestamp,

lag(view_timestamp,1,0) over (partition by user_id order by view_timestamp) as last_time

from page_view_events

)t1

结果值:

代码中:

`if`(view_timestamp-last_time>=60,1,0) as flag

是增加一个标志位,为下面步骤所用。

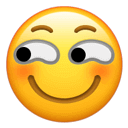

(3)计算每行数据中,当前访问时间戳time_stamp和上次访问时间戳last_time的差值

select *,

sum(flag) over (partition by user_id order by view_timestamp) as acc

from (

select user_id,page_id,view_timestamp,last_time,

`if`(view_timestamp-last_time>=60,1,0) as flag

from (

select user_id,page_id,view_timestamp,

lag(view_timestamp,1,0) over (partition by user_id order by view_timestamp) as last_time

from page_view_events

)t1

)t2

结果集:

代码中,巧妙的利用了前面步骤的flag列,

如果两次会话差值小于60s,则flag=0,

那么累加和acc就表示从这次会话session开始,

到当前行的和,

结果只等于会话开始的那次值1,

而会话session-id的增加,

也可以利用这个 sum() over() 完成,

秒不秒?

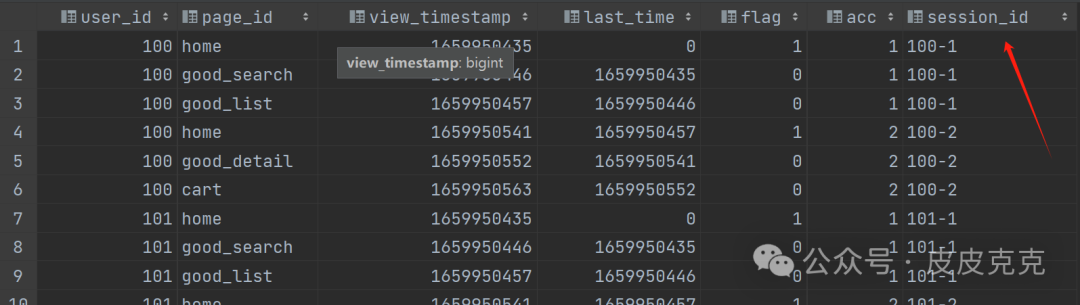

(4)拼接user_id 和acc,作为会话session-id

select *,concat(user_id,'-',acc) as session_id

from (

select *,

sum(flag) over (partition by user_id order by view_timestamp) as acc

from (

select user_id,page_id,view_timestamp,last_time,

`if`(view_timestamp-last_time>=60,1,0) as flag

from (

select user_id,page_id,view_timestamp,

lag(view_timestamp,1,0) over (partition by user_id order by view_timestamp) as last_time

from page_view_events

)t1

)t2

)t3

结果集:

当然,规范的代码中,

select 后面还是不要用 *

完整代码:

select user_id,page_id,concat(user_id,'-',acc) as session_id

from (

select user_id,page_id,

sum(flag) over (partition by user_id order by view_timestamp) as acc

from (

select user_id,page_id,view_timestamp,last_time,

`if`(view_timestamp-last_time>=60,1,0) as flag

from (

select user_id,page_id,view_timestamp,

lag(view_timestamp,1,0) over (partition by user_id order by view_timestamp) as last_time

from page_view_events

)t1

)t2

)t3

三、源数据

页面浏览记录表page_view_events:

create table page_view_events

(

user_id int comment '用户id',

page_id string comment '页面id',

view_timestamp bigint comment '访问时间戳'

)

row format serde 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

stored as

inputformat 'org.apache.hadoop.mapred.TextInputFormat'

outputformat 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

location 'hdfs://mycluster/user/hive/warehouse/hql_test2.db/page_view_events'

tblproperties ('bucketing_version' = '2');

alter table page_view_events

set tblproperties ('comment' = '页面访问记录');

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (100, 'home', 1659950435);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (100, 'good_search', 1659950446);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (100, 'good_list', 1659950457);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (100, 'home', 1659950541);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (100, 'good_detail', 1659950552);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (100, 'cart', 1659950563);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (101, 'home', 1659950435);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (101, 'good_search', 1659950446);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (101, 'good_list', 1659950457);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (101, 'home', 1659950541);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (101, 'good_detail', 1659950552);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (101, 'cart', 1659950563);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (102, 'home', 1659950435);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (102, 'good_search', 1659950446);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (102, 'good_list', 1659950457);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (103, 'home', 1659950541);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (103, 'good_detail', 1659950552);

INSERT INTO hql_test2.page_view_events (user_id, page_id, view_timestamp) VALUES (103, 'cart', 1659950563);

OK,这就是本期的内容了,下期再见!