点击关注公众号,干货第一时间送达

"签到领金币" 这玩意儿!

太熟悉不过了

不管是之前打王者荣耀,

还是,支付宝芭芭农场里面的浇水种植,

亦或是,某些银行app的抽奖活动,

无外乎是这种"签到机制",

讲究的就是一个,"人气儿"

用书面语来说,就是"活跃度"

是一个衡量流量价值的数据分析指标。

今天,小编就带着大家看看,

这个指标是怎么用SQL编写出来的,

以及"金币",是如何在"连续签到日"

实现"额外收益"的。

【温馨提示:建表语句及数据导入脚本,已经放在文章末尾】

一、需求场景

题目:

用户每天签到可以领取1个金币,连续签到的第3、7天,分别可以额外领取2个和6个金币。

每连续签到7天后重新累计签到天数。

从用户登录明显表user_login_detail中,查询每个用户获得的总金币数,并按照金币数倒序排列。

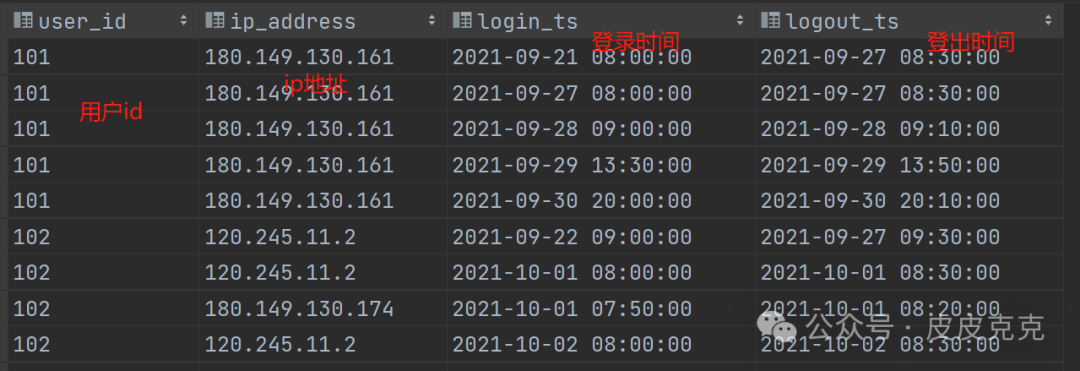

用户登录明细表user_login_detail:

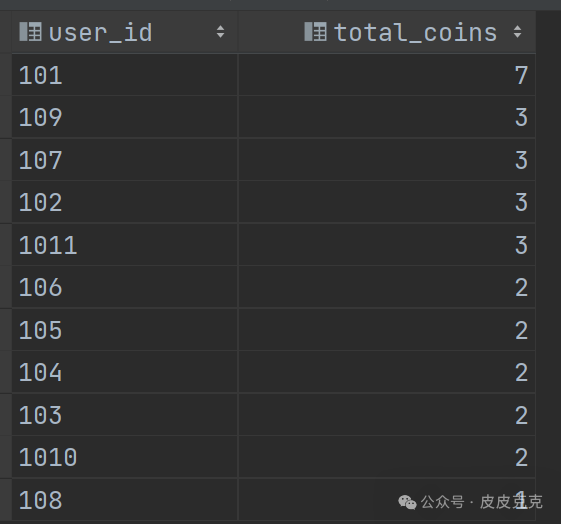

正确结果:

二、解决方案

演示工具:Hive-3.1.3 + DataGrip2022

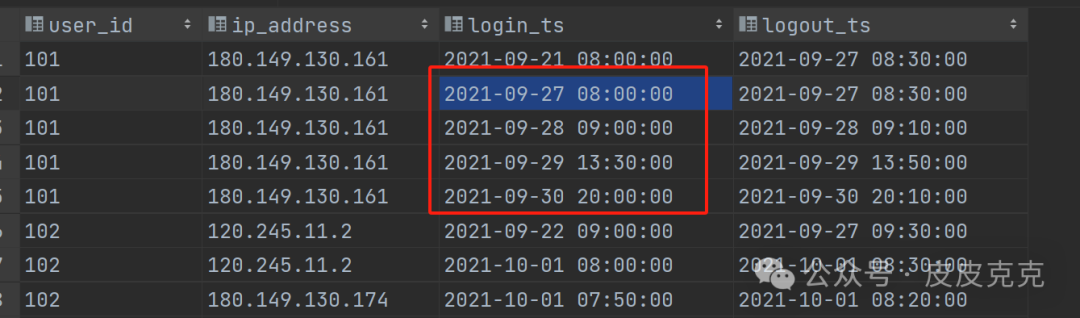

解题的思路,应该先考虑如何计算每个用户的"连续登录区间",

例如:

该用户在 2021-09-27 至 2021-09-30 连续登录4天,

是可以获得额外的2个金币。

"连续登录区间"问题,在前面的文章:

有类似演示,感兴趣的小伙伴可以点击翻看一下

接着,我们可以计算每个登录日期对应可以获得的金币数,

最后累加即可。

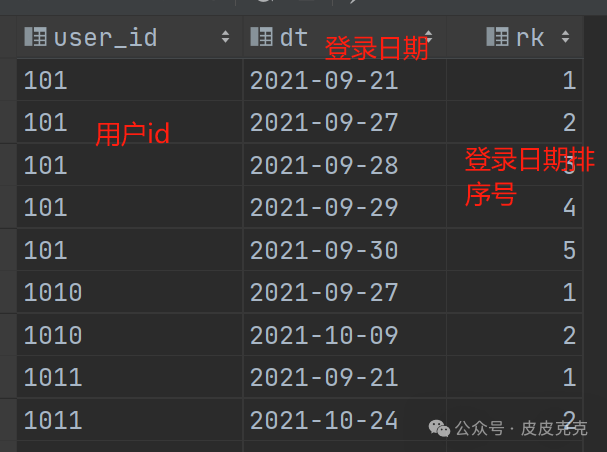

(1)按照用户user_id、登录日期login_ts分组,进行开窗排序

select

user_id,

date(login_ts) as dt,

rank() over (partition by user_id order by date(login_ts)) as rk

from user_login_detail

group by user_id, date(login_ts)

结果集:

有关"开窗排序"的演示,

可以翻看前面的文章:

这里不再赘述

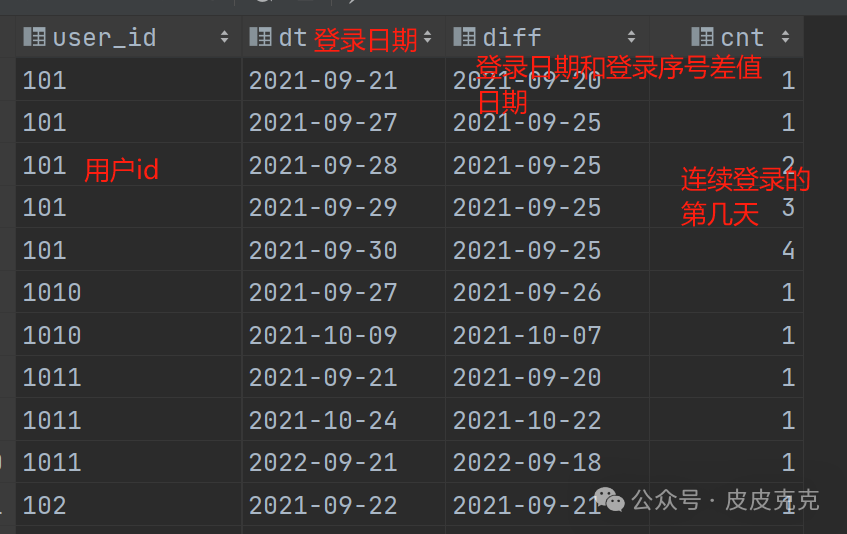

(2)计算每个用户连续登录区间,并标记每个日期是连续登录的第几天

select user_id,dt,

date_sub(dt, rk) as diff,

count(*) over (partition by user_id, date_sub(dt, rk) order by dt) as cnt

from (

select

user_id,

date(login_ts) as dt,

rank() over (partition by user_id order by date(login_ts)) as rk

from user_login_detail

group by user_id, date(login_ts)

)t1

结果集:

(3)计算每个用户在不同连续登录区间获得的金币数

select user_id,

max(cnt) + sum(`if`(cnt%3=0,2,0)) + sum(`if`(cnt%7=0,6,0)) as coins

from (

select user_id,dt,

date_sub(dt, rk) as diff,

count(*) over (partition by user_id, date_sub(dt, rk) order by dt) as cnt

from (

select

user_id,

date(login_ts) as dt,

rank() over (partition by user_id order by date(login_ts)) as rk

from user_login_detail

group by user_id, date(login_ts)

)t1

)t2

group by user_id, diff

结果集:

(4)计算每个用户总的金币数

select user_id, sum(coins) as total_coins

from (

select user_id,

max(cnt) + sum(`if`(cnt%3=0,2,0)) + sum(`if`(cnt%7=0,6,0)) as coins

from (

select user_id,dt,

date_sub(dt, rk) as diff,

count(*) over (partition by user_id, date_sub(dt, rk) order by dt) as cnt

from (

select

user_id,

date(login_ts) as dt,

rank() over (partition by user_id order by date(login_ts)) as rk

from user_login_detail

group by user_id, date(login_ts)

)t1

)t2

group by user_id, diff

)t3

group by user_id

order by total_coins desc

结果集:

三、源数据

用户登录明细表user_login_detail:

create table user_login_detail

(

user_id string,

ip_address string,

login_ts string,

logout_ts string

)

row format serde 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

with serdeproperties ('field.delim' = '\t') stored as

inputformat 'org.apache.hadoop.mapred.TextInputFormat'

outputformat 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

location 'hdfs://mycluster/user/hive/warehouse/hql_test2.db/user_login_detail'

tblproperties ('bucketing_version' = '2');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('101', '180.149.130.161', '2021-09-21 08:00:00', '2021-09-27 08:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('101', '180.149.130.161', '2021-09-27 08:00:00', '2021-09-27 08:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('101', '180.149.130.161', '2021-09-28 09:00:00', '2021-09-28 09:10:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('101', '180.149.130.161', '2021-09-29 13:30:00', '2021-09-29 13:50:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('101', '180.149.130.161', '2021-09-30 20:00:00', '2021-09-30 20:10:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('102', '120.245.11.2', '2021-09-22 09:00:00', '2021-09-27 09:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('102', '120.245.11.2', '2021-10-01 08:00:00', '2021-10-01 08:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('102', '180.149.130.174', '2021-10-01 07:50:00', '2021-10-01 08:20:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('102', '120.245.11.2', '2021-10-02 08:00:00', '2021-10-02 08:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('103', '27.184.97.3', '2021-09-23 10:00:00', '2021-09-27 10:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('103', '27.184.97.3', '2021-10-03 07:50:00', '2021-10-03 09:20:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('104', '27.184.97.34', '2021-09-24 11:00:00', '2021-09-27 11:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('104', '27.184.97.34', '2021-10-03 07:50:00', '2021-10-03 08:20:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('104', '27.184.97.34', '2021-10-03 08:50:00', '2021-10-03 10:20:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('104', '120.245.11.89', '2021-10-03 08:40:00', '2021-10-03 10:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('105', '119.180.192.212', '2021-10-04 09:10:00', '2021-10-04 09:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('106', '119.180.192.66', '2021-10-04 08:40:00', '2021-10-04 10:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('106', '119.180.192.66', '2021-10-05 21:50:00', '2021-10-05 22:40:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('107', '219.134.104.7', '2021-09-25 12:00:00', '2021-09-27 12:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('107', '219.134.104.7', '2021-10-05 22:00:00', '2021-10-05 23:00:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('107', '219.134.104.7', '2021-10-06 09:10:00', '2021-10-06 10:20:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('107', '27.184.97.46', '2021-10-06 09:00:00', '2021-10-06 10:00:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('108', '101.227.131.22', '2021-10-06 09:00:00', '2021-10-06 10:00:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('108', '101.227.131.22', '2021-10-06 22:00:00', '2021-10-06 23:00:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('109', '101.227.131.29', '2021-09-26 13:00:00', '2021-09-27 13:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('109', '101.227.131.29', '2021-10-06 08:50:00', '2021-10-06 10:20:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('109', '101.227.131.29', '2021-10-08 09:00:00', '2021-10-08 09:10:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('1010', '119.180.192.10', '2021-09-27 14:00:00', '2021-09-27 14:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('1010', '119.180.192.10', '2021-10-09 08:50:00', '2021-10-09 10:20:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('1011', '180.149.130.161', '2021-09-21 08:00:00', '2021-09-27 08:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('1011', '180.149.130.161', '2021-10-24 08:00:00', '2021-10-29 08:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('1011', '180.149.130.161', '2022-09-21 08:00:00', '2022-09-27 08:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('105', '119.180.192.212', '2021-10-06 09:10:00', '2021-10-04 09:30:00');

INSERT INTO hql_test2.user_login_detail (user_id, ip_address, login_ts, logout_ts) VALUES ('106', '119.180.192.66', '2021-10-05 08:50:00', '2021-10-05 12:40:00');

OK,这就是本期内容了,下期再见!

文章转载自皮皮克克,如果涉嫌侵权,请发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。