本文字数:16079;估计阅读时间:41 分钟 作者:尚雷的驿站平台 来源:尚雷的驿站

Meetup活动

ClickHouse Beijing User Group第2届 Meetup火热报名中,详见文末海报!

这篇文章主要是对近期异机环境备份恢复 clickhouse 遇到的问题及解决办法记录整理。

Part1背景描述

我目前负责管理维护一套基于 ClickHouse 数据库的业务系统。这套系统部署在同城的两个机房,两个机房均各部署了一套 ClickHouse 集群,数据完全同步,均对外提供业务服务。如果其中一个机房发生异常,可以迅速将流量切换到另一个机房,确保业务的连续性。

当前使用的 ClickHouse 版本为 22.5.1.2079。我们发现该版本存在一个 Bug,即在查询 Hive 外表数据时,ClickHouse 实例可能会发生宕机。而根据 ClickHouse 官网信息,版本 24.8.6.70 LTS 已修复此 Bug。为了解决这一问题,我申请了一套新的服务器,部署了 ClickHouse 22.5.1.2079 版本集群用于升级验证,并作为日常生产上线前的业务测试环境。该测试集群采用了 2 台 ClickHouse 集群节点、3 台 ZooKeeper 节点以及 2 台 chproxy-keepalived 节点混合组成一个高可用集群。

我们计划将原 ClickHouse 测试环境的数据备份恢复到新测试集群,但在恢复过程中,由于两边存储路径不一致,导致很多表在执行恢复时出错,报错内容为 "dstDataPaths=map[string]string not contains sdxxx"。经过多次测试和调整存储映射关系,最终成功将数据恢复到 ClickHouse 测试集群,以下是其中一个典型的报错信息:

2024/11/20 09:52:07.952910 error one of restoreDataRegular go-routine return error: can't copy data to detached 'xxxx.ck_dm_xxx_xxx_product_3': dstDataPaths=map[string]string{"default":"/database/clickhouse/store/08f/08f22370-b840-49f8-88f2-2370b840e9f8/"}, not contains sdb

Part2 过程记录

原有的 clickhouse 测试环境是由研发部的同事管理维护的,本次备份和恢复均使用 clickhouse-backup 工具,使用的版本为 2.5.9 (clickhouse-backup-linux-amd64.tar.gz)。

注:可以通过 GitHub 下载该工具,解压后将可执行文件放置于 usr/bin 目录下,这样便可以在任何目录下直接执行 clickhouse-backup 命令。

研发部同事通过 clickhouse-backup 将原有测试环境测试数据备份至一台中转机某个目录下。我需要将其从中转机上下载到本地并进行恢复,我在 etc/ 目录下创建了 clickhouse-backup 目录,并创建对应的 config.yml 文件,该文件内容如下:

general:

remote_storage: sftp # REMOTE_STORAGE, if `none` then `upload` and `download` command will fail

max_file_size: 1099511627776 # MAX_FILE_SIZE, 1G by default, useless when upload_by_part is true, use for split data parts files by archives

disable_progress_bar: false # DISABLE_PROGRESS_BAR, show progress bar during upload and download, have sense only when `upload_concurrency` and `download_concurrency` equal 1

backups_to_keep_local: 0 # BACKUPS_TO_KEEP_LOCAL, how much newest local backup should keep, 0 mean all created backups will keep on local disk

# you shall to run `clickhouse-backup delete local <backup_name>` command to avoid useless disk space allocations

backups_to_keep_remote: 0 # BACKUPS_TO_KEEP_REMOTE, how much newest backup should keep on remote storage, 0 mean all uploaded backups will keep on remote storage.

# if old backup is required for newer incremental backup, then it will don't delete. Be careful with long incremental backup sequences.

log_level: info # LOG_LEVEL

allow_empty_backups: false # ALLOW_EMPTY_BACKUPS

download_concurrency: 1 # DOWNLOAD_CONCURRENCY, max 255

upload_concurrency: 1 # UPLOAD_CONCURRENCY, max 255

restore_schema_on_cluster: "ck_replica1" # RESTORE_SCHEMA_ON_CLUSTER, execute all schema related SQL queries with `ON CLUSTER` clause as Distributed DDL, look to `system.clusters` table for proper cluster name

upload_by_part: true # UPLOAD_BY_PART

download_by_part: true # DOWNLOAD_BY_PART

restore_database_mapping: {} # RESTORE_DATABASE_MAPPING, restore rules from backup databases to target databases, which is useful on change destination database all atomic tables will create with new uuid.

retries_on_failure: 3 # RETRIES_ON_FAILURE, retry if failure during upload or download

retries_pause: 100ms # RETRIES_PAUSE, time duration pause after each download or upload fail

clickhouse:

username: default # CLICKHOUSE_USERNAME

password: "xxxx" # 待恢复的 clickhouse 集群 default 用户密码

host: localhost # CLICKHOUSE_HOST

port: 9000 # CLICKHOUSE_PORT, don't use 8123, clickhouse-backup doesn't support HTTP protocol

disk_mapping: {} # CLICKHOUSE_DISK_MAPPING, use it if your system.disks on restored servers not the same with system.disks on server where backup was created

skip_tables: # CLICKHOUSE_SKIP_TABLES

- system.*

- INFORMATION_SCHEMA.*

- information_schema.*

timeout: 5m # CLICKHOUSE_TIMEOUT

freeze_by_part: false # CLICKHOUSE_FREEZE_BY_PART, allows freeze part by part instead of freeze the whole table

freeze_by_part_where: "" # CLICKHOUSE_FREEZE_BY_PART_WHERE, allows parts filtering during freeze when freeze_by_part: true

secure: false # CLICKHOUSE_SECURE, use SSL encryption for connect

skip_verify: false # CLICKHOUSE_SKIP_VERIFY

sync_replicated_tables: true # CLICKHOUSE_SYNC_REPLICATED_TABLES

tls_key: "" # CLICKHOUSE_TLS_KEY, filename with TLS key file

tls_cert: "" # CLICKHOUSE_TLS_CERT, filename with TLS certificate file

tls_ca: "" # CLICKHOUSE_TLS_CA, filename with TLS custom authority file

log_sql_queries: true # CLICKHOUSE_LOG_SQL_QUERIES, enable log clickhouse-backup SQL queries on `system.query_log` table inside clickhouse-server

debug: false # CLICKHOUSE_DEBUG

config_dir: "/etc/clickhouse-server" # CLICKHOUSE_CONFIG_DIR

restart_command: "systemctl restart clickhouse-server" # CLICKHOUSE_RESTART_COMMAND, this command use when you try to restore with --rbac or --config options

ignore_not_exists_error_during_freeze: true # CLICKHOUSE_IGNORE_NOT_EXISTS_ERROR_DURING_FREEZE, allow avoiding backup failures when you often CREATE / DROP tables and databases during backup creation, clickhouse-backup will ignore `code: 60` and `code: 81` errors during execute `ALTER TABLE ... FREEZE`

check_replicas_before_attach: true # CLICKHOUSE_CHECK_REPLICAS_BEFORE_ATTACH, allow to avoid concurrent ATTACH PART execution when restore ReplicatedMergeTree tables

sftp:

address: "xxx.xxx.xxx.xxx" # SFTP_ADDRESS, 存放备份数据的中转机的 IP 地址

port: 22 # SFTP_PORT 中转机端口号

username: "" # SFTP_USERNAME 中转机的用户

password: "xxxx" # SFTP_PASSWORD 中转机对应用户密码

key: "" # SFTP_KEY

path: "/xxx/xxx/" # SFTP_PATH 中转机存放备份数据的目录

concurrency: 1 # SFTP_CONCURRENCY

compression_format: tar # SFTP_COMPRESSION_FORMAT

compression_level: 1 # SFTP_COMPRESSION_LEVEL

debug: false # SFTP_DEBUG

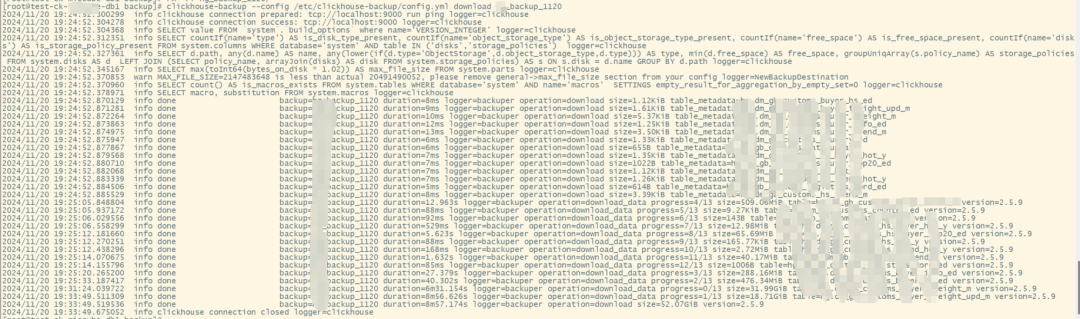

2.1 下载数据

首先通过如下命令将备份数据从中转机下载到 clickhouse 测试服务器本地,默认是下载到 clickhouse 安装目录的 backup 目录下,当前这套测试环境数据库安装目录为 database/clickhouse

通过如下命从中转机下载数据。

clickhouse-backup --config /etc/clickhouse-backup/config.yml download xxx_backup_1120

# xxx_backup_1120 是表示指定的备份名称

# download 操作用于将备份从远程存储下载到本地服务器

-- 执行部分结果如下

[root@test-ck-xxxx-db1 backup]# clickhouse-backup --config /etc/clickhouse-backup/config.yml download xxxxx_backup_1120

2024/11/20 19:24:52.300299 info clickhouse connection prepared: tcp://localhost:9000 run ping logger=clickhouse

2024/11/20 19:24:52.304278 info clickhouse connection success: tcp://localhost:9000 logger=clickhouse

2024/11/20 19:24:52.304368 info SELECT value FROM `system`.`build_options` where name='VERSION_INTEGER' logger=clickhouse

2024/11/20 19:24:52.312351 info SELECT countIf(name='type') AS is_disk_type_present, countIf(name='object_storage_type') AS is_object_storage_type_present, countIf(name='free_space') AS is_free_space_present, countIf(name='disks') AS is_storage_policy_present FROM system.columns WHERE database='system' AND table IN ('disks','storage_policies') logger=clickhouse

2024/11/20 19:24:52.327361 info SELECT d.path, any(d.name) AS name, any(lower(if(d.type='ObjectStorage',d.object_storage_type,d.type))) AS type, min(d.free_space) AS free_space, groupUniqArray(s.policy_name) AS storage_policies FROM system.disks AS d LEFT JOIN (SELECT policy_name, arrayJoin(disks) AS disk FROM system.storage_policies) AS s ON s.disk = d.name GROUP BY d.path logger=clickhouse

2024/11/20 19:24:52.345167 info SELECT max(toInt64(bytes_on_disk * 1.02)) AS max_file_size FROM system.parts logger=clickhouse

2024/11/20 19:24:52.370853 warn MAX_FILE_SIZE=2147483648 is less than actual 20491490052, please remove general->max_file_size section from your config logger=NewBackupDestination

2024/11/20 19:24:52.370960 info SELECT count() AS is_macros_exists FROM system.tables WHERE database='system' AND name='macros' SETTINGS empty_result_for_aggregation_by_empty_set=0 logger=clickhouse

2024/11/20 19:24:52.378971 info SELECT macro, substitution FROM system.macros logger=clickhouse

2024/11/20 19:24:52.870129 info done backup=xxxxx_backup_1120 duration=8ms logger=backuper operation=download size=1.12KiB table_metadata=xxxxx.dm_gb_xxxxx_buyer_hs_ed

。。。。。。。

2024/11/20 19:31:24.039722 info done backup=xxxxx_backup_1120 duration=6m31.154s logger=backuper operation=download_data progress=0/13 size=31.99GiB table=xxxxx.dm_gb_xxxxx_buyer_xxxxx_m version=2.5.9

2024/11/20 19:33:49.511309 info done backup=xxxxx_backup_1120 duration=8m56.626s logger=backuper operation=download_data progress=1/13 size=18.71GiB table=xxxxx.dm_gb_xxxxx_buyer_xxxxx_upd_m version=2.5.9

2024/11/20 19:33:49.519536 info done backup=xxxxx_backup_1120 duration=8m57.174s logger=backuper operation=download size=52.07GiB version=2.5.9

2024/11/20 19:33:49.675052 info clickhouse connection closed logger=clickhouse

这里带大家看下备份下载的数据都含有哪些内容,后面我会整理一篇文章详细介绍 clickhouse 数据库备份和恢复相关信息。

cd /database/clickhouse/backup/xxx_backup_1120

-- 内容如下

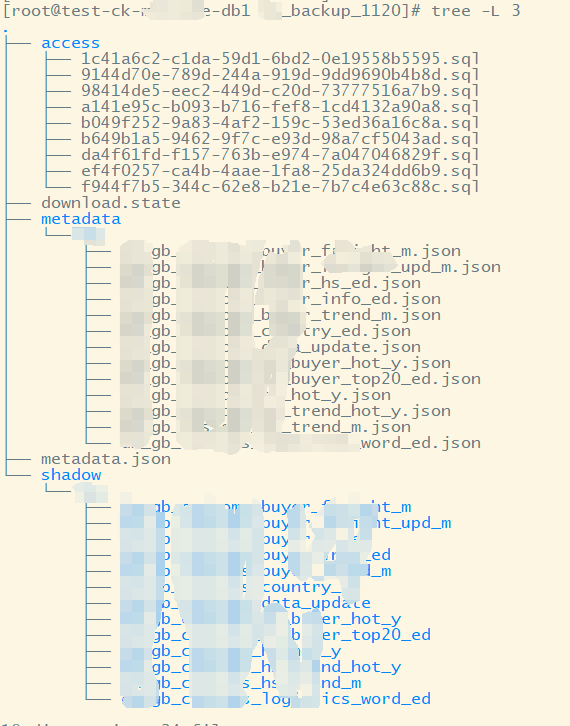

[root@test-ck-xxxx-db1 xxxxx_database_name_backup_1120]# tree -L 3

.

├── access

│ ├── 1c41a6c2-c1da-59d1-6bd2-0e19558b5595.sql

│ ├── 9144d70e-789d-244a-919d-9dd9690b4b8d.sql

│ ├── 98414de5-eec2-449d-c20d-73777516a7b9.sql

│ ├── a141e95c-b093-b716-fef8-1cd4132a90a8.sql

│ ├── b049f252-9a83-4af2-159c-53ed36a16c8a.sql

│ ├── b649b1a5-9462-9f7c-e93d-98a7cf5043ad.sql

│ ├── da4f61fd-f157-763b-e974-7a047046829f.sql

│ ├── ef4f0257-ca4b-4aae-1fa8-25da324dd6b9.sql

│ └── f944f7b5-344c-62e8-b21e-7b7c4e63c88c.sql

├── download.state

├── metadata

│ └── xxxxx_database_name

│ ├── xxx_gb_xxxxx_xxxxx_freight_m.json

│ ├── xxx_gb_xxxxx_xxxxx_freight_upd_m.json

│ ├── xxx_gb_xxxxx_xxxxx_hs_ed.json

│ ├── xxx_gb_xxxxx_xxxxx_info_ed.json

│ ├── xxx_gb_xxxxx_xxxxx_trend_m.json

│ ├── xxx_gb_xxxxx_country_ed.json

│ ├── xxx_gb_xxxxx_data_update.json

│ ├── xxx_gb_xxxxx_hs_xxxxx_hot_y.json

│ ├── xxx_gb_xxxxx_hs_xxxxx_top20_ed.json

│ ├── xxx_gb_xxxxx_hs_hot_y.json

│ ├── xxx_gb_xxxxx_hs_trend_hot_y.json

│ ├── xxx_gb_xxxxx_hs_trend_m.json

│ └── xxx_gb_xxxxx_logistics_word_ed.json

├── metadata.json

└── shadow

└── xxxxx_database_name

├── xxx_gb_xxxxx_xxxxx_freight_m

├── xxx_gb_xxxxx_xxxxx_freight_upd_m

├── xxx_gb_xxxxx_xxxxx_hs_ed

├── xxx_gb_xxxxx_xxxxx_info_ed

├── xxx_gb_xxxxx_xxxxx_trend_m

├── xxx_gb_xxxxx_country_ed

├── xxx_gb_xxxxx_data_update

├── xxx_gb_xxxxx_hs_xxxxx_hot_y

├── xxx_gb_xxxxx_hs_xxxxx_top20_ed

├── xxx_gb_xxxxx_hs_hot_y

├── xxx_gb_xxxxx_hs_trend_hot_y

├── xxx_gb_xxxxx_hs_trend_m

└── xxx_gb_xxxxx_logist_word_ed

--- 下面将对上面的目录和文件大致介绍下含义

1) access:这个目录包含了一些 SQL 文件,通常是备份过程中涉及的权限、用户、角色等信息。如果你在备份中启用了 RBAC(角色权限管理),这部分会用来存储 RBAC 配置的相关信息。每个 .sql 文件代表一个角色或权限的相关设置。

2) download.state:这个文件记录了备份过程的状态信息,尤其是在从远程存储中下载备份时使用。它可以帮助追踪备份的下载状态,确定是否完成或中途中断。

3) metadata:存储了每个表的元数据信息,以 .json 文件的形式保存,通常包括表的结构、字段类型、引擎设置等信息。

-- 文件名如 dm_gb_xxx_xxx_freight_m.json 表示了表的元数据,包含了表结构和相关设置,这些文件用来在恢复备份时重新创建表结构。

metadata.json 文件:通常包含整个数据库或集群的元数据概览,方便在恢复时构建整体的数据库结构。

4) shadow:这是存储表数据的目录,数据是以分片和各个表为单位存储的。

-- 在 shadow 目录下,可以看到各个表的分片数据目录,例如 hg/,这通常是表数据的物理备份。

-- 在 xxx_database_name 目录中,每个文件夹对应一个表,文件夹的名称通常是表的名字(例如 dm_gb_xxx_xxx_freight_m),里面存储了该表的所有数据分片和物理文件。

2.2 恢复数据

接下来就是要对从中转机 download 下载的数据进行restore 恢复,通过如下命令进行恢复。

[root@test-ck-xxxx-db1 clickhouse-backup]# clickhouse-backup --config /etc/clickhouse-backup/config.yml restore xxxx_back_20241120

# 执行的结果部分如下

2024/11/20 09:51:16.807835 info clickhouse connection prepared: tcp://localhost:9000 run ping logger=clickhouse

2024/11/20 09:51:16.810570 info clickhouse connection success: tcp://localhost:9000 logger=clickhouse

2024/11/20 09:51:16.810634 info SELECT value FROM `system`.`build_options` where name='VERSION_INTEGER' logger=clickhouse

2024/11/20 09:51:16.815316 info SELECT countIf(name='type') AS is_disk_type_present, countIf(name='object_storage_type') AS is_object_storage_type_present, countIf(name='free_space') AS is_free_space_present, countIf(name='disks') AS is_storage_policy_present FROM system.columns WHERE database='system' AND table IN ('disks','storage_policies') logger=clickhouse

2024/11/20 09:51:16.824338 info SELECT d.path, any(d.name) AS name, any(lower(if(d.type='ObjectStorage',d.object_storage_type,d.type))) AS type, min(d.free_space) AS free_space, groupUniqArray(s.policy_name) AS storage_policies FROM system.disks AS d LEFT JOIN (SELECT policy_name, arrayJoin(disks) AS disk FROM system.storage_policies) AS s ON s.disk = d.name GROUP BY d.path logger=clickhouse

2024/11/20 09:51:16.835123 info SELECT count() AS is_macros_exists FROM system.tables WHERE database='system' AND name='macros' SETTINGS empty_result_for_aggregation_by_empty_set=0 logger=clickhouse

2024/11/20 09:51:16.841223 info SELECT macro, substitution FROM system.macros logger=clickhouse

2024/11/20 09:51:16.845263 info CREATE DATABASE IF NOT EXISTS `xxx` ON CLUSTER 'xxxx_cluster' ENGINE = Atomic with args [[]] logger=clickhouse

2024/11/20 09:51:16.907465 info CREATE DATABASE IF NOT EXISTS `xxx` ON CLUSTER 'xxxx_cluster' ENGINE = Atomic with args [[]] logger=clickhouse

2024/11/20 09:51:17.092198 info CREATE DATABASE IF NOT EXISTS `xxx` ON CLUSTER 'xxxx_cluster' ENGINE = Atomic with args [[]] logger=clickhouse

。。。。。。。

2024/11/20 09:59:52.295433 info ALTER TABLE `xxx_xxxx`.`ck_xxxx_hive2ck_sync_alarm` ATTACH PART 'bc7fe0eb3fbe1f8a01d06cb882a68204_0_59_14' logger=clickhouse

2024/11/20 09:59:52.318718 info ALTER TABLE `xxx_xxxx`.`ck_xxxx_hive2ck_sync_alarm` ATTACH PART 'bf2f089b2ce218147979c8a80a107706_0_45_11' logger=clickhouse

。。。。。。。

2024/11/20 17:54:02.030459 error one of restoreDataRegular go-routine return error: can't copy data to detached 'xxx_xxxx.ck_hive_test_id_d_time': dstDataPaths=map[string]string{"default":"/database/clickhouse/store/089/089f8a4a-c0d8-4174-9f5d-57ce52f942c1/"}, not contains sdb

clickhouse 数据恢复部分表成功,但最后报错,导致数据恢复中断。什么原因导致报错呢,看到 sdb、sdc,我初步明白了原因,sdc、sdc 是测试环境分配的存储磁盘名,原测试环境为 clickhouse 数据安装目录和数据目录分别指定了对应的存储磁盘,这些信息是写到 clickhouse 配置策略文件 storage.xml 中,这次存储盘未做 LVM,是一个个物理磁盘,测试环境的 storage.xml 文件信息如下:

<yandex>

<storage_configuration>

<disks>

<sdb> <!-- disk_name可自己指定,只要不重复即可 -->

<path>/opt/clickhouse/</path> <!-- disk存储数据的路径,需要以'/'结尾,不要和默认的/var/lib/clickhouse/重复 -->

<keep_free_space_bytes>1048576000</keep_free_space_bytes> <!-- 需要保留的剩余磁盘空间,此处设置的是保留1000M -->

</sdb>

<sdc>

<path>/data/clickhouse/</path> <!-- disk存储数据的路径,需要以'/'结尾,不要和默认的/var/lib/clickhouse/重复 -->

<keep_free_space_bytes>1048576000</keep_free_space_bytes>

</sdc>

</disks>

<policies>

<jbod> <!-- 策略名称可自己指定,只要不重复即可,此处配置的是JBOD策略且只有一个disk -->

<volumes>

<single> <!-- 卷名称,可自己指定 -->

<disk>sdc</disk> <!-- 包含的disk, sdc和上面disks中定义的disk_name需保持一致 -->

</single>

</volumes>

</jbod>

<cold_hot> <!-- 此处配置的是冷热策略 -->

<volumes>

<hot> <!-- 卷名称,可自己指定 -->

<disk>sdb</disk> <!-- 热区是sdb盘 -->

<max_data_part_size_bytes>1073741824</max_data_part_size_bytes> <!-- 卷中可以存储的数据片最大bytes,此处配置的是1G -->

</hot>

<cold> <!-- 卷名称,可自己指定 -->

<disk>sdc</disk>

</cold>

</volumes>

<move_factor>0.1</move_factor> <!-- 卷中可用空间小于10%时,数据自动像冷区移动 -->

</cold_hot>

</policies>

</storage_configuration>

</yandex>

而新安装部署的 clickhouse 的安装目录和数据目录均使用的是 LVM 的文件系统,统一都在 database/clickhouse 目录下,导致两边的路径不对应。熟悉 oracle dg 的朋友都知道,如果 oracle 的主数据和归档路径不对应,主库的数据和归档日志无法传输到备库,需要做路径转换才行。

查询了相关资料,显示 clickhouse-backup 的 config.yml 文件 disk_mapping 这个参数可以进行相应转换策略设置。然后我将新测试环境的已恢复的相关表都进行删除,然后修改了 clickhouse-backup 的 config.yml 文件,修改为 disk_mapping: {sdb:/database/clickhouse},重新进行 restore,依然报之前的错误。接下来我又将其修改为:disk_mapping: {"sdb":"/database/clickhouse","sdc":"/database/clickhouse"} ,再次执行恢复,依然报错。

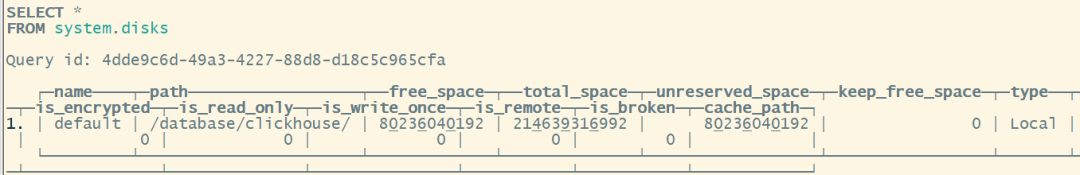

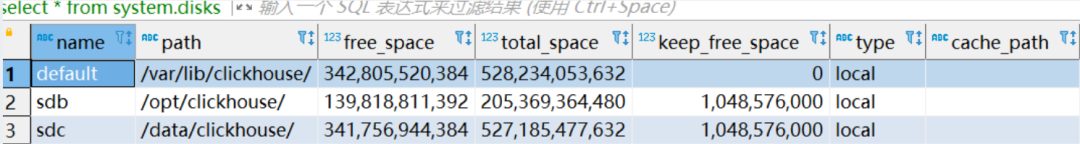

我让研发同事帮我查询了下原测试环境磁盘相关信息,提供给我的截图如下:

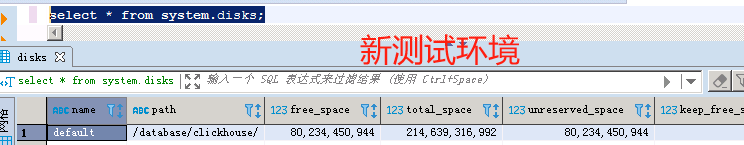

而新的测试环境磁盘相关信息如下:

我又查阅了相关资料,将 config.yml 文件修改为

disk_mapping: sdb: "/database/clickhouse" sdc: "/database/clickhouse" default: "/database/clickhouse" 这次执行 restore 恢复终于没有再报之前的错误。

为了更好的优化 download 和 restore 速度,并且将源端数据基于角色的访问控制全部同步过来,我执行了如下命令,并修改了 config.yml 文件,如下所示。

-- 数据恢复命令

clickhouse-backup --config /etc/clickhouse-backup/config.yml restore xxx_backup_1120 --rbac --configs

--- config.yml 文件如下

general:

remote_storage: sftp

max_file_size: 1073741824 # 1GB

disable_progress_bar: false

backups_to_keep_local: 0

backups_to_keep_remote: 0

log_level: info

allow_empty_backups: false

download_concurrency: 3 # 增加并发数

upload_concurrency: 3 # 增加并发数

restore_schema_on_cluster: "xxxx_cluster"

upload_by_part: true

download_by_part: true

restore_database_mapping:

source_db: target_db # 自定义数据库映射规则

retries_on_failure: 3

retries_pause: 100ms

clickhouse:

username: default

password: "xxxx"

host: localhost

port: 9000

disk_mapping:

sdb: "/database/clickhouse"

sdc: "/database/clickhouse"

default: "/database/clickhouse"

skip_tables:

- system.*

- INFORMATION_SCHEMA.*

- information_schema.*

timeout: 15m # 增加超时时间

freeze_by_part: false

freeze_by_part_where: ""

secure: false

skip_verify: false

sync_replicated_tables: true

tls_key: ""

tls_cert: ""

tls_ca: ""

log_sql_queries: true

debug: false

config_dir: "/etc/clickhouse-server"

restart_command: "systemctl restart clickhouse-server"

ignore_not_exists_error_during_freeze: true

check_replicas_before_attach: true

sftp:

address: "xxx.xxx.xxx.xxx"

port: 22

username: "root"

password: "root"

key: ""

path: "/backup/xxx/"

concurrency: 3 # 增加并发

compression_format: tar

compression_level: 3 # 调整压缩级别

debug: false

Part3 总结

之前做 clickhouse 的备份恢复,很多都是在一台机器上,或者是源端和目标的配置相同,但对于这次两边配置不同导致备份恢复失败,也是第一次遇到,也算是学到了一个知识点。

另外在本次恢复过程中,也出现因为源端和目标端数据库版本不同,导致部分表在更高的版本上不兼容,遇到这种情况,可以先首先排除该表,要删除备份文件 metadata 目录下该表的 json 文件,然后修改 metadata.json 文件,排除掉该表信息,最后还要删除 shadow 目录下该表对应的目录。

这次 ClickHouse 的备份恢复并不顺利,尤其是由于源端和目标端存储路径不一致的问题导致的恢复失败,但却给了我一次宝贵的学习机会。

好消息:ClickHouse Beijing User Group第2届 Meetup 已经开放报名了,将于2024年11月30日在北京朝阳区科荟路33号4幢1层 清泉(奥林匹克森林公园店)举行,扫码免费报名

注册ClickHouse中国社区大使,领取认证考试券

注册ClickHouse中国社区大使,领取认证考试券

试用阿里云 ClickHouse企业版

轻松节省30%云资源成本?阿里云数据库ClickHouse架构全新升级,推出和原厂独家合作的ClickHouse企业版,在存储和计算成本上带来双重优势,现诚邀您参与100元指定规格测一个月的活动,了解详情:https://t.aliyun.com/Kz5Z0q9G

征稿启示

面向社区长期正文,文章内容包括但不限于关于 ClickHouse 的技术研究、项目实践和创新做法等。建议行文风格干货输出&图文并茂。质量合格的文章将会发布在本公众号,优秀者也有机会推荐到 ClickHouse 官网。请将文章稿件的 WORD 版本发邮件至:Tracy.Wang@clickhouse.com