前天一个用户出现了一个较为奇怪的问题,统计信息也是最新的【客户跨年收集了统计信息】,但是如果不加Index,则不会走索引,主要来看就是评估的rows不准确。

当然客户也知道强制加index,效率更高,然而其通过工具查看执行计划发现走索引的情况下,Oracle cbo评估出来的cost非常之高,比走全表还要高。我想想这就是为啥默认不走索引的原因。【文章略长,想看解决方案,可以直接跳到文章最后】

SELECT c.LOTNAME, c.CARRIERNAME, c.PROCESSOPERATION, c.TIMEKEY

FROM ct_defectflagproduct c, (select *+INDEX(p LOT_IDX04)*/ * from lot p where LASTEVENTTIMEKEY >= to_char(sysdate - interval '2' day, 'yyyymmddhh24miss') || '000000') l,CT_IMRVREFERENCE PP

WHERE c.TIMEKEY >= to_char(sysdate - interval '2' day, 'yyyymmddhh24miss') || '000000'

AND c.TIMEKEY <= to_char(sysdate, 'yyyymmddhh24miss') || '999999'

AND c.CARRIERNAME = L.CARRIERNAME

AND c.LOTNAME = L.LOTNAME

AND PP.PREPROCESSOPERATIONNAME = c.PROCESSOPERATION

AND PP.PRODUCTSPECNAME = L.PRODUCTSPECNAME

AND L.PROCESSOPERATIONNAME = PP.PROCESSOPERATIONNAME

GROUP BY c.LOTNAME, c.CARRIERNAME, c.PROCESSOPERATION, c.TIMEKEY

上述是原始SQL语句,接下来我们看看执行计划【来自sqlhc报告】。

---------------------------------------------------------------------------------------------------------------------------------------------------

| Id | Operation | Name | E-Rows |E-Bytes| Cost (%CPU)| E-Time | OMem | 1Mem | Used-Mem |

---------------------------------------------------------------------------------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | | | 4042K(100)| | | | |

| 1 | HASH GROUP BY | | 121 | 15609 | 4042K (1)| 00:02:38 | 998K| 998K| 770K (0)|

|* 2 | FILTER | | | | | | | | |

| 3 | NESTED LOOPS | | 121 | 15609 | 4042K (1)| 00:02:38 | | | |

| 4 | NESTED LOOPS | | | | | | | | |

|* 5 | HASH JOIN | | 10636 | 851K| 4041K (1)| 00:02:38 | 1236K| 1236K| 2422K (0)|

| 6 | TABLE ACCESS FULL | CT_IMRVREFERENCE | 2720 | 68000 | 11 (0)| 00:00:01 | | | |

|* 7 | TABLE ACCESS BY INDEX ROWID BATCHED| LOT | 382K| 20M| 4041K (1)| 00:02:38 | | | |

|* 8 | INDEX RANGE SCAN | LOT_IDX04 | 3925K| | 161K (1)| 00:00:07 | | | |

|* 9 | INDEX RANGE SCAN | CT_DEFECTFLAGPRODUCT_INDEX | | | | | | | |

|* 10 | TABLE ACCESS BY INDEX ROWID | CT_DEFECTFLAGPRODUCT | 1 | 47 | 1622 (2)| 00:00:01 | | | |

---------------------------------------------------------------------------------------------------------------------------------------------------

Query Block Name Object Alias (identified by operation id):

-------------------------------------------------------------

1 - SEL$F5BB74E1

6 - SEL$F5BB74E1 / PP@SEL$1

7 - SEL$F5BB74E1 / P@SEL$2

8 - SEL$F5BB74E1 / P@SEL$2

9 - SEL$F5BB74E1 / C@SEL$1

10 - SEL$F5BB74E1 / C@SEL$1

Outline Data

-------------

*+

BEGIN_OUTLINE_DATA

INDEX(@"SEL$F5BB74E1""C"@"SEL$1" ("CT_DEFECTFLAGPRODUCT"."LOTNAME""CT_DEFECTFLAGPRODUCT"."TIMEKEY""CT_DEFECTFLAGPRODUCT"."CARRIERNAME"))

NLJ_BATCHING(@"SEL$F5BB74E1""C"@"SEL$1")

USE_NL(@"SEL$F5BB74E1""C"@"SEL$1")

BATCH_TABLE_ACCESS_BY_ROWID(@"SEL$F5BB74E1""P"@"SEL$2")

INDEX_RS_ASC(@"SEL$F5BB74E1""P"@"SEL$2" ("LOT"."LASTEVENTTIMEKEY"))

USE_HASH(@"SEL$F5BB74E1""P"@"SEL$2")

IGNORE_OPTIM_EMBEDDED_HINTS

OPTIMIZER_FEATURES_ENABLE('12.2.0.1')

DB_VERSION('12.2.0.1')

OPT_PARAM('_optimizer_use_feedback''false')

ALL_ROWS

OUTLINE_LEAF(@"SEL$F5BB74E1")

MERGE(@"SEL$2" >"SEL$1")

OUTLINE(@"SEL$1")

OUTLINE(@"SEL$2")

FULL(@"SEL$F5BB74E1""PP"@"SEL$1")

LEADING(@"SEL$F5BB74E1""PP"@"SEL$1""P"@"SEL$2""C"@"SEL$1")

USE_HASH_AGGREGATION(@"SEL$F5BB74E1")

END_OUTLINE_DATA

*/

Predicate Information (identified by operation id):

---------------------------------------------------

2 - filter(TO_CHAR(SYSDATE@!,'yyyymmddhh24miss')||'999999'>=TO_CHAR(SYSDATE@!-INTERVAL'+02 00:00:00' DAY(2) TO

SECOND(0),'yyyymmddhh24miss')||'000000')

5 - access("PP"."PRODUCTSPECNAME"="P"."PRODUCTSPECNAME" AND "P"."PROCESSOPERATIONNAME"="PP"."PROCESSOPERATIONNAME")

7 - filter("P"."CARRIERNAME" IS NOT NULL)

8 - access("LASTEVENTTIMEKEY">=TO_CHAR(SYSDATE@!-INTERVAL'+02 00:00:00' DAY(2) TO SECOND(0),'yyyymmddhh24miss')||'000000')

9 - access("C"."LOTNAME"="P"."LOTNAME" AND "C"."TIMEKEY">=TO_CHAR(SYSDATE@!-INTERVAL'+02 00:00:00' DAY(2) TO

SECOND(0),'yyyymmddhh24miss')||'000000' AND "C"."CARRIERNAME"="P"."CARRIERNAME" AND

"C"."TIMEKEY"<=TO_CHAR(SYSDATE@!,'yyyymmddhh24miss')||'999999')

filter("C"."CARRIERNAME"="P"."CARRIERNAME")

10 - filter("PP"."PREPROCESSOPERATIONNAME"="C"."PROCESSOPERATION")

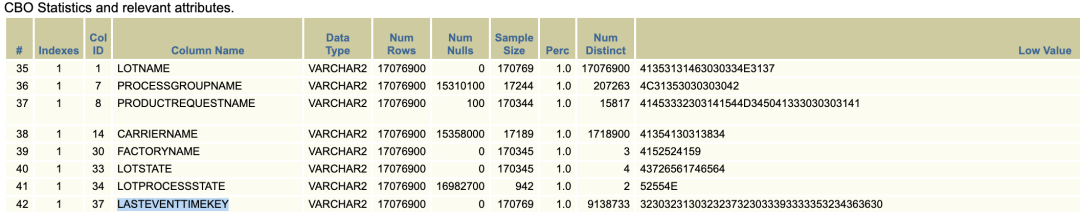

可以看到LOT这个表走index返回评估了几百万数据,这显示是有问题的,据了解这个表本身也哭1700万条数据而已。

后面检查发现这个表的where条件列实际上并不是date类型,而是varchar2.

对于varchar2用来存时间,这显然是有一些问题的。

SINGLE TABLE ACCESS PATH

Single Table Cardinality Estimation for LOT[P]

SPD: Directive valid: dirid = 8340008695565278526, state = 1, flags = 1, loc = 1, forDS = NO, forCG = YES {C(116490)[37]}

SPD: Return code in qosdDSDirSetup: EXISTS, estType = TABLE

kkecdn: Single Table Predicate:"P"."LASTEVENTTIMEKEY">=TO_CHAR(SYSDATE@!-INTERVAL'+02 00:00:00' DAY(2) TO SECOND(0),'yyyymmddhh24miss')||'000000'

Column (#37): LASTEVENTTIMEKEY(VARCHAR2)

AvgLen: 21 NDV: 9138733 Nulls: 0 Density: 0.000000

Estimated selectivity: 0.222452 , col: #37

SQL> select to_char(to_number(utl_raw.cast_to_raw('20241231113434000000'))) from dual;

TO_CHAR(TO_NUMBER(UTL_RAW.CAST_TO_RAW('20241231113434000000')))

--------------------------------------------------------------------------------

3230323431323331313133343334303030303030

SQL> select (3230323530313031313932373338313632333534 - 3230323431323331313133343334303030303030)/(3230323530313031313932373338313632333534-3230323130323237323033393333353234363630) from dual;

(3230323530313031313932373338313632333534-32303234313233313131333433343030303030

--------------------------------------------------------------------------------

.247480564

SQL> select 17076900*0.247480564 from dual;

17076900*0.247480564

--------------------

4226200.84

SQL> ```

根据cbo评估的选择性计算出来的rows确实是大几百万。

这里我们创建一个测试表在测试环境中验证一下。

SQL> conn roger/roger

Connected.

SQL> drop table test;

Table dropped.

SQL> CREATE TABLE test(

2 t_date TIMESTAMP,

v_date varchar2(100)

); 3 4

Table created.

SQL>

SQL> BEGIN

2 FOR i IN 1..1000000 LOOP

INSERT INTO test (t_date)

VALUES (

TO_TIMESTAMP(

TO_CHAR(

TO_DATE('2021-01-01', 'YYYY-MM-DD') +

3 4 5 6 7 8 DBMS_RANDOM.VALUE(0, (TO_DATE('2025-01-02', 'YYYY-MM-DD') - TO_DATE('2021-01-01', 'YYYY-MM-DD'))),

9 'YYYY-MM-DD HH24:MI:SS'

) || '.' || LPAD(ROUND(DBMS_RANDOM.VALUE(0, 1000000)), 6, '0'), 'YYYY-MM-DD HH24:MI:SS.FF6'

)

10 11 12 );

END LOOP;

COMMIT;

END;

/ 13 14 15 16

PL/SQL procedure successfully completed.

SQL> update testset v_date=to_char(t_date,'yyyymmddhh24missff6');

1000000 rows updated.

SQL>

SQL> BEGIN

2 FOR i IN 1..1000000 LOOP

INSERT INTO test (t_date)

VALUES (

TO_TIMESTAMP(

TO_CHAR(

TO_DATE('2021-01-01', 'YYYY-MM-DD') +

3 4 5 6 7 8 DBMS_RANDOM.VALUE(0, (TO_DATE('2025-01-02', 'YYYY-MM-DD') - TO_DATE('2021-01-01', 'YYYY-MM-DD'))),

9 'YYYY-MM-DD HH24:MI:SS'

) || '.' || LPAD(ROUND(DBMS_RANDOM.VALUE(0, 1000000)), 6, '0'), 'YYYY-MM-DD HH24:MI:SS.FF6'

)

10 11 12 );

END LOOP;

COMMIT;

END;

/ 13 14 15 16

PL/SQL procedure successfully completed.

SQL> update testset v_date=to_char(t_date,'yyyymmddhh24missff6');

1000000 rows updated.

SQL> EXEC dbms_stats.gather_table_stats(ownname => 'ROGER',tabname =>'TEST', estimate_percent => dbms_stats.auto_sample_size, CASCADE => true, method_opt => 'for all columns size 1', no_invalidate => false);

PL/SQL procedure successfully completed.

SQL> set autot traceonly exp

SQL> select * from testwhere t_date>=to_date('20241231','yyyymmdd');

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1366 | 43712 | 2467 (1)| 00:00:01 |

|* 1 | TABLE ACCESS FULL| TEST | 1366 | 43712 | 2467 (1)| 00:00:01 |

--------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("T_DATE">=TIMESTAMP' 2024-12-31 00:00:00')

Note

-----

- automatic DOP: Computed Degree of Parallelism is 1 because of parallel threshold

SQL> set autot off

SQL> select count(1) from testwhere t_date>=to_date('20241231','yyyymmdd');

COUNT(1)

----------

1410

SQL> set autot traceonly exp

SQL> select * from testwhere v_date>='20241231';

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 221K| 6929K| 2468 (1)| 00:00:01 |

|* 1 | TABLE ACCESS FULL| TEST | 221K| 6929K| 2468 (1)| 00:00:01 |

--------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("V_DATE">='20241231')

Note

-----

- automatic DOP: Computed Degree of Parallelism is 1 because of parallel threshold

SQL> set autot off

SQL> select count(1) from testwhere v_date>='20241231';

COUNT(1)

----------

1410

SQL>

大家可以看到,默认没有直方图的情况之下,对于date列的执行计划评估是准确的,test表的rows评估也是准确的。

但是对于v_date这个varchar2的列作为查询条件,我可以发现执行计划rows评估是完全不对的,高达22万【表只有100w条数据】。

实际上我们根据条件count可以发现只有1410条数据。

很显然,对于varchar2存存时间列的情况,Oracle CBO的计算评估是有些不妥的。

这里打开10053 event跟踪一下,看下Oracle cbo的成本计算。

***********************

Table Stats::

Table: TEST Alias: TEST

#Rows: 1000000 SSZ: 0 LGR: 0 #Blks: 9077 AvgRowLen: 32.00 NEB: 0 ChainCnt: 0.00 ScanRate: 0.00 SPC: 0 RFL: 0 RNF: 0 CBK: 0 CHR: 0 KQDFLG: 1

#IMCUs: 0 IMCRowCnt: 0 IMCJournalRowCnt: 0 #IMCBlocks: 0 IMCQuotient: 0.000000

try to generate single-table filter predicates from ORs for query block SEL$1 (#0)

finally: "TEST"."T_DATE">=TIMESTAMP' 2024-12-31 00:00:00'

=======================================

SPD: BEGIN context at query block level

=======================================

Query Block SEL$1 (#0)

Return code in qosdSetupDirCtx4QB: NOCTX

=====================================

SPD: END context at query block level

=====================================

Access path analysis for TEST

***************************************

SINGLE TABLE ACCESS PATH

Single Table Cardinality Estimation for TEST[TEST]

SPD: Return code in qosdDSDirSetup: NOCTX, estType = TABLE

kkecdn: Single Table Predicate:"TEST"."T_DATE">=TIMESTAMP' 2024-12-31 00:00:00'

Column (#1): T_DATE(TIMESTAMP)

AvgLen: 11 NDV: 1000000 Nulls: 0 Density: 0.000001 Min: 2459216.000957 Max: 2460677.995189

Estimated selectivity: 0.001366 , col: #1

Table: TEST Alias: TEST

Card: Original: 1000000.000000rsel = 0.001366 Rounded: 1366 Computed: 1365.703654 Non Adjusted: 1365.703654

Scan IO Cost (Disk) = 2460.000000

Scan CPU Cost (Disk) = 214668630.880000

Cost of predicates:

io = NOCOST, cpu = 50.000000, sel = 0.001366 flag = 2048 ("TEST"."T_DATE">=TIMESTAMP' 2024-12-31 00:00:00')

Total Scan IO Cost = 2460.000000 (scan (Disk))

+ 0.000000 (io filter eval) (= 0.000000 (per row) * 1000000.000000 (#rows))

= 2460.000000

Total Scan CPU Cost = 214668630.880000 (scan (Disk))

+ 50000000.000000 (cpu filter eval) (= 50.000000 (per row) * 1000000.000000 (#rows))

= 264668630.880000

Access Path: TableScan

Cost: 2467.098829 Resp: 2467.098829 Degree: 0

Cost_io: 2460.000000 Cost_cpu: 264668631

Resp_io: 2460.000000 Resp_cpu: 264668631

Best:: AccessPath: TableScan

Cost: 2467.098829 Degree: 1 Resp: 2467.098829 Card: 1365.703654 Bytes: 0.000000

***************************************

SINGLE TABLE ACCESS PATH

Single Table Cardinality Estimation for TEST[TEST]

SPD: Return code in qosdDSDirSetup: NOCTX, estType = TABLE

kkecdn: Single Table Predicate:"TEST"."V_DATE">='20241231'

Column (#2): V_DATE(VARCHAR2)

AvgLen: 21 NDV: 1000000 Nulls: 0 Density: 0.000001

Estimated selectivity: 0.221756 , col: #2

Table: TEST Alias: TEST

Card: Original: 1000000.000000rsel = 0.221756 Rounded: 221756 Computed: 221755.578793 Non Adjusted: 221755.578793

Scan IO Cost (Disk) = 2460.000000

Scan CPU Cost (Disk) = 234641310.880000

Cost of predicates:

io = NOCOST, cpu = 50.000000, sel = 0.221756 flag = 2048 ("TEST"."V_DATE">='20241231')

Total Scan IO Cost = 2460.000000 (scan (Disk))

+ 0.000000 (io filter eval) (= 0.000000 (per row) * 1000000.000000 (#rows))

= 2460.000000

Total Scan CPU Cost = 234641310.880000 (scan (Disk))

+ 50000000.000000 (cpu filter eval) (= 50.000000 (per row) * 1000000.000000 (#rows))

= 284641310.880000

Access Path: TableScan

Cost: 2467.634527 Resp: 2467.634527 Degree: 0

Cost_io: 2460.000000 Cost_cpu: 284641311

Resp_io: 2460.000000 Resp_cpu: 284641311

Best:: AccessPath: TableScan

Cost: 2467.634527 Degree: 1 Resp: 2467.634527 Card: 221755.578793 Bytes: 0.000000

很明显对于date列的选择性计算【Estimated selectivity: 0.001366 】是相对准确的;而varchar2这个列的评估就比较大了【Estimated selectivity: 0.221756】

参考基于Oracle成本优化一书中可以得知对于无直方图的请下,选择性计算大致是如下这个公式: Selectivity = (high_value – limit) (high_value – low_value) + 1/num_distinct

这里我可以大致计算一下:

SQL> l

1 select TABLE_NAME,COLUMN_NAME,NUM_DISTINCT,HIGH_VALUE,LOW_VALUE,DENSITY,NUM_NULLS

2* from dba_tab_col_statistics where table_name='TEST' and owner='ROGER'

SQL>

TABLE_NAME COLUMN_NAME NUM_DISTINCT HIGH_VALUE LOW_VALUE DENSITY NUM_NULLS

------------ --------------- ------------ ------------------------------------------- ----------------------------------------- ---------- ----------

TEST T_DATE 1000000 787D010118360512F40D00 7879010101021728400038 .000001 0

TEST V_DATE 1000000 3230323530313031323335333034333137393834 3230323130313031303030313232363735323833 .000001 0

SQL>

SQL> select to_char(to_number(utl_raw.cast_to_raw('20241231000000000000'))) from dual;

TO_CHAR(TO_NUMBER(UTL_RAW.CAST_TO_RAW('20241231')))

--------------------------------------------------------------------------------

3230323431323331303030303030303030303030

SQL>

SQL> select (3230323530313031323335333034333137393834 - 3230323431323331303030303030303030303030)/(3230323530313031323335333034333137393834-3230323130313031303030313232363735323833) from dual;

(3230323530313031323335333034333137393834-3230323431323331303030303030303030303030)/(3230323530313031323335333034333137393834-3230323130313031303030313232363735323833)

-----------------------------------------------------------------------------------------------------------------------------------------------------------------------

.24747425

SQL>

可以看到计算出来的值cbo评估的是接近的,总之。都不太对,这个数据太大太大。

如果存在直方图的情况下,这个问题会如何呢?

SQL> EXEC dbms_stats.gather_table_stats(ownname => 'ROGER',tabname =>'TEST', estimate_percent => dbms_stats.auto_sample_size, CASCADE => true, method_opt => 'for all columns size auto', no_invalidate => false);

PL/SQL procedure successfully completed.

SQL> set lines 230

SQL> @tab_stat

TABLE_NAME COLUMN_NAME NUM_DISTINCT HIGH_VALUE LOW_VALUE DENSITY NUM_NULLS NUM_BUCKETS HISTOGRAM

---------- ------------- ------------ --------------------------------------------- --------------------------------------------- ---------- ---------- ----------- -----------

TEST T_DATE 1000000 787D010118360512F40D00 7879010101021728400038 .000001 0 1 NONE

TEST V_DATE 1000000 3230323530313031323335333034333137393834 3230323130313031303030313232363735323833 .000001 0 254 HYBRID

SQL>

SQL> set autot traceonly exp

SQL> select * from testwhere v_date>='20241231';

Execution Plan

----------------------------------------------------------

Plan hash value: 1357081020

--------------------------------------------------------------------------

| Id | Operation | Name | Rows | Bytes | Cost (%CPU)| Time |

--------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 4064 | 127K| 2468 (1)| 00:00:01 |

|* 1 | TABLE ACCESS FULL| TEST | 4064 | 127K| 2468 (1)| 00:00:01 |

--------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter("V_DATE">='20241231')

Note

-----

- automatic DOP: Computed Degree of Parallelism is 1 because of parallel threshold

SQL>

可以看到有直方图的情况下,评估的信息是相对准确的【4064和实际的rows 1410 差距不大】。

同样此时的10053 event跟踪所看到的信息如下:

SINGLE TABLE ACCESS PATH

Single Table Cardinality Estimation for TEST[TEST]

SPD: Return code in qosdDSDirSetup: NOCTX, estType = TABLE

kkecdn: Single Table Predicate:"TEST"."V_DATE">='20241231'

Column (#2):

NewDensity:0.000001, OldDensity:0.000001 BktCnt:5411.000000, PopBktCnt:0.000000, PopValCnt:0, NDV:1000000

Column (#2): V_DATE(VARCHAR2)

AvgLen: 21 NDV: 1000000 Nulls: 0 Density: 0.000001

Histogram: Hybrid #Bkts: 254 UncompBkts: 5411 EndPtVals: 254 ActualVal: yes

Estimated selectivity: 0.004064 , col: #2

Table: TEST Alias: TEST

Card: Original: 1000000.000000rsel = 0.004064 Rounded: 4064 Computed: 4064.066982 Non Adjusted: 4064.066982

Scan IO Cost (Disk) = 2460.000000

Scan CPU Cost (Disk) = 234641310.880000

Cost of predicates:

io = NOCOST, cpu = 50.000000, sel = 0.004064 flag = 2048 ("TEST"."V_DATE">='20241231')

Total Scan IO Cost = 2460.000000 (scan (Disk))

+ 0.000000 (io filter eval) (= 0.000000 (per row) * 1000000.000000 (#rows))

= 2460.000000

Total Scan CPU Cost = 234641310.880000 (scan (Disk))

+ 50000000.000000 (cpu filter eval) (= 50.000000 (per row) * 1000000.000000 (#rows))

= 284641310.880000

Access Path: TableScan

Cost: 2467.634527 Resp: 2467.634527 Degree: 0

Cost_io: 2460.000000 Cost_cpu: 284641311

Resp_io: 2460.000000 Resp_cpu: 284641311

Best:: AccessPath: TableScan

Cost: 2467.634527 Degree: 1 Resp: 2467.634527 Card: 4064.066982 Bytes: 0.000000

根据cbo评估的选择性可以计算得到和执行计划一样的rows评估。

SQL> select 1000000*0.004064 from dual;

1000000*0.004064

----------------

4064

SQL>

当然对于这个,解决方案主要有2个:

1、固定执行计划,比如sql profile固定即可,强制走index。

2、针对该表的统计信息收集需要单独收集直方图。

3、where 条件列再套一层to_date函数,这样cbo是可以识别的,cbo评估相对准确。至少差异不会那么大。

不过从根因上来讲,这属于开发不规范,使用varchar2类存时间,而Oracle并不知道所存的是时间,那么评估则会存在异常。

~~~~~~~~~~~~~~~~~~~~~~~~~~~

最近和一部分行业数据库专家成了一个数据库交流付费群。

加入DataBase Fans付费群,您能有哪些收获?

1、大家可与顶级数据库专家互动,问题范围不限于Oracle,MySQL,PostgreSQL,openGauss等。

2、群里有哪些数据库专家?

- 某MySQL 源码专家xx{打个码}

- PostgreSQL实战专家灿灿

- Oracle专家 Roger以及另外2位Oracle 实战派ACE专家【匿名一下:擅长SQL优化,优化器】

- 是的,大家没有看错,全都是实战派高手!

- 最近付费群又加入了一个顶级SQL高手,传闻itpub论坛top 级别的存在

2、 入群可以获得群里专家们的收藏脚本。

3、 可提供数据库相关资料共享【包括xxxx账号,你们懂的】

4、 不定期组织直播案例分析【包括但不限于Oracle、MySQL、国产xxx数据库】

5、 付费群:365人/年 【2025/1/1 - 2025/12/31】

想加入的朋友,速进!前100名299,满100名后恢复原价!

扫码加管理员微信咨询 Roger_database