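“This article walks through setting up the clusters commonly used for big data: a Hadoop cluster, a ZooKeeper cluster, an HBase cluster and a Spark cluster.”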

01

—

Passwordless SSH login

The demo cluster consists of three machines, node01, node02 and node03. The SSH client used is SecureCRT (crt).

Add the user and user group:

    # On all three machines, switch to the root user, then:
    # Add the user group
    groupadd hadoop
    # Add the user
    useradd -g hadoop hadoop
    # Set the password
    passwd hadoop
    # Switch to the hadoop user
    su - hadoop

Set up passwordless login

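    # Run on each of the three machines: generate a public/private key pair
    ssh-keygen -t rsa
    # Copy each machine's public key to node01 (run on all three machines)
    ssh-copy-id -p 12324 node01
    # Then, on node01, switch to the .ssh directory
    cd /home/hadoop/.ssh/
    # Copy authorized_keys to the other two nodes
    scp -P 12324 authorized_keys node02:$PWD
    scp -P 12324 authorized_keys node03:$PWD
    # Note: because the default port 22 was changed, ssh and scp must be given the SSH port (-p for ssh, -P for scp)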
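Instead of collecting every key on node01 and copying `authorized_keys` around, each node can also push its own key to all three hosts. The loop below is a minimal sketch of that alternative. It assumes the hostnames node01 to node03 and the SSH port 12324 used above. `distribute_keys` and the `DRY_RUN` switch are hypothetical names introduced here; with `DRY_RUN=1` the function only prints the commands it would run.

```shell
#!/usr/bin/env bash
# Sketch: push this node's public key to every host, including itself.
# Assumptions: hostnames node01..node03 and sshd listening on port 12324.
SSH_PORT=12324
NODES="node01 node02 node03"

distribute_keys() {
  local host
  for host in $NODES; do            # unquoted on purpose: split into hostnames
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # Dry run: only print the command that would be executed
      echo "ssh-copy-id -p $SSH_PORT $host"
    else
      # Prompts once for $host's password, then appends the key to its authorized_keys
      ssh-copy-id -p "$SSH_PORT" "$host"
    fi
  done
}

# Usage, as the hadoop user on each node:
# DRY_RUN=1 distribute_keys   # preview
# distribute_keys             # actually copy the key
```

After this has run on all three nodes, `ssh -p 12324 node02` from any node should log in without a password prompt.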

02

—

Hadoop cluster setup

Download the Hadoop tarball

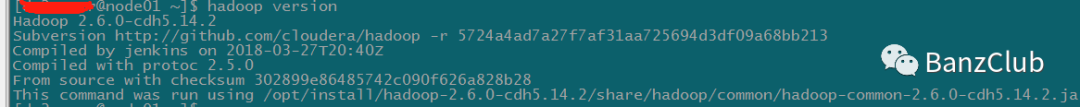

    # This article uses the CDH build
    wget http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.14.2.tar.gz
    # Extract
    tar -xzvf hadoop-2.6.0-cdh5.14.2.tar.gz -C /opt/install
    # Edit the environment variables
    cd ~
    vim .bash_profile
    # Add JAVA_HOME and HADOOP_HOME
    # Note: a JDK must be installed on all three machines beforehand; JDK 1.8 is used here
    export JAVA_HOME=/opt/install/jdk1.8.0_141
    export HADOOP_HOME=/opt/install/hadoop-2.6.0-cdh5.14.2
    # Update PATH
    export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
    # Apply the environment variables and check the Hadoop version
    source .bash_profile
    hadoop version
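The same `.bash_profile` is edited again for ZooKeeper, HBase and Spark, so re-running a setup step can leave duplicate lines behind. Here is a small sketch for anyone who prefers appending from a script over editing with vim. `add_line` is a hypothetical helper that appends a line only when that exact line is not already in the file.

```shell
#!/usr/bin/env bash
# Sketch: append a line to a profile file only if that exact line is missing,
# so repeating a setup step does not stack duplicate exports.
add_line() {
  local file="$1" line="$2"
  touch "$file"
  # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage (single quotes keep $PATH/$JAVA_HOME literal until the profile is sourced):
# add_line ~/.bash_profile 'export JAVA_HOME=/opt/install/jdk1.8.0_141'
# add_line ~/.bash_profile 'export HADOOP_HOME=/opt/install/hadoop-2.6.0-cdh5.14.2'
# add_line ~/.bash_profile 'export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin'
# source ~/.bash_profile
```

The match is on the whole line rather than on the variable name, because the later sections legitimately append several different `export PATH=...` lines.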

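    # Set permissions on .ssh, otherwise the Hadoop cluster may have problems
    chmod -R 755 .ssh/
    cd .ssh
    # Prevent tampering
    chmod 644 *
    chmod 600 id_rsa id_rsa.pub
    # Append the public key to this machine's authorized_keys; without it, starting the cluster asks for a password
    cat id_rsa.pub >> authorized_keys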
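OpenSSH cares less about the exact mode bits than about write and read access. With the default `StrictModes yes`, sshd rejects `authorized_keys` if it, `~/.ssh` or the home directory is writable by group or others. The ssh client refuses a private key that other users can read. The function below is a sketch that applies a commonly used tighter set: 700 for the directory, 600 for `authorized_keys` and `id_rsa`, and 644 for `id_rsa.pub`. `fix_ssh_perms` is a hypothetical name, and the 755/644 modes above also pass these checks.

```shell
#!/usr/bin/env bash
# Sketch: apply conservative permissions to an OpenSSH directory.
# Assumes the default RSA key names created by `ssh-keygen -t rsa`.
fix_ssh_perms() {
  local dir="$1"
  chmod 700 "$dir"                                          # only the owner may enter/list
  [ -f "$dir/authorized_keys" ] && chmod 600 "$dir/authorized_keys"
  [ -f "$dir/id_rsa" ]          && chmod 600 "$dir/id_rsa"  # private key
  [ -f "$dir/id_rsa.pub" ]      && chmod 644 "$dir/id_rsa.pub"
  return 0   # a missing optional file is not an error
}

# Usage: fix_ssh_perms /home/hadoop/.ssh
```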

Configure the Hadoop configuration files

    cd /opt/install/hadoop-2.6.0-cdh5.14.2/etc/hadoop
    # Configure hadoop-env.sh: set JAVA_HOME explicitly
    export JAVA_HOME=/opt/install/jdk1.8.0_141

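    # Configure core-site.xml
    <configuration>
      <property>
        <name>fs.defaultFS</name>
        <value>hdfs://node01:8020</value>
      </property>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/tempDatas</value>
      </property>
      <!-- Buffer size; in production, tune it to the server's capacity -->
      <property>
        <name>io.file.buffer.size</name>
        <value>4096</value>
      </property>
      <property>
        <name>fs.trash.interval</name>
        <value>10080</value>
        <description>Number of minutes after which the checkpoint gets deleted. If zero, the trash feature is disabled. This option may be configured both on the server and the client. If trash is disabled server side then the client side configuration is checked. If trash is enabled on the server side then the value configured on the server is used and the client configuration value is ignored.</description>
      </property>
      <property>
        <name>fs.trash.checkpoint.interval</name>
        <value>0</value>
        <description>Number of minutes between trash checkpoints. Should be smaller or equal to fs.trash.interval. If zero, the value is set to the value of fs.trash.interval. Every time the checkpointer runs it creates a new checkpoint out of current and removes checkpoints created more than fs.trash.interval minutes ago.</description>
      </property>
    </configuration>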
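Once Hadoop is installed, `hdfs getconf -confKey fs.defaultFS` prints the effective value of a key. Before that, or to check a file copied to another node, a rough text-based lookup is enough. This sketch assumes the layout shown above: one `<name>`/`<value>` pair per `<property>` and no whitespace inside those tags. `get_prop` is a hypothetical helper, not part of Hadoop. The usage lines also show that `fs.trash.interval` is in minutes, so 10080 minutes is 7 days.

```shell
#!/usr/bin/env bash
# Sketch: print the <value> of one property from a Hadoop *-site.xml file.
# Assumes one <name>/<value> pair per <property> and no whitespace inside the tags.
get_prop() {
  local file="$1" key="$2"
  tr -d '\n' < "$file" |
    sed 's#</property>#</property>\n#g' |   # one <property> per line (GNU sed)
    grep -F "<name>${key}</name>" |         # exact name match, dots are literal
    sed -n 's#.*<value>\([^<]*\)</value>.*#\1#p' |
    head -n 1
}

# Usage:
# get_prop $HADOOP_HOME/etc/hadoop/core-site.xml fs.defaultFS
# Trash retention in days (fs.trash.interval is in minutes):
# echo $(( $(get_prop $HADOOP_HOME/etc/hadoop/core-site.xml fs.trash.interval) / 60 / 24 ))
```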

For details on each setting, see: https://hadoop.apache.org/docs/r2.7.0/hadoop-project-dist/hadoop-common/core-default.xml

    # Configure hdfs-site.xml
    <configuration>
      <property>
        <name>dfs.namenode.secondary.http-address</name>
        <value>node01:50090</value>
      </property>
      <property>
        <name>dfs.namenode.http-address</name>
        <value>node01:50070</value>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:///opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/namenodeDatas</value>
      </property>
      <!-- Where DataNodes store their data. In production, decide the disk mount points first, then list several directories separated by commas -->
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:///opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/datanodeDatas</value>
      </property>
      <property>
        <name>dfs.namenode.edits.dir</name>
        <value>file:///opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/dfs/nn/edits</value>
      </property>
      <property>
        <name>dfs.namenode.checkpoint.dir</name>
        <value>file:///opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/dfs/snn/name</value>
      </property>
      <property>
        <name>dfs.namenode.checkpoint.edits.dir</name>
        <value>file:///opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/dfs/nn/snn/edits</value>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>3</value>
      </property>
      <property>
        <name>dfs.permissions</name>
        <value>false</value>
      </property>
      <property>
        <name>dfs.blocksize</name>
        <value>134217728</value>
      </property>
    </configuration>
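`dfs.blocksize` and `dfs.replication` together decide how a file lands on the DataNodes. Here is a worked sketch with the values above, where 134217728 bytes is 128 MiB and the replication factor is 3:
- A 300 MiB file is split into 3 blocks: 128 + 128 + 44 MiB.
- Each block is stored 3 times, so the file consumes about 900 MiB of raw disk.
- With exactly three DataNodes, every node holds one replica of every block.

The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: how dfs.blocksize and dfs.replication translate into HDFS usage.
BLOCK_SIZE=134217728   # dfs.blocksize: 128 * 1024 * 1024 bytes = 128 MiB
REPLICATION=3          # dfs.replication

# Number of blocks a file of $1 bytes is split into (ceiling division).
blocks_for() {
  echo $(( ($1 + BLOCK_SIZE - 1) / BLOCK_SIZE ))
}

# Raw bytes consumed across all DataNodes: every byte is stored REPLICATION times.
# (The last block only occupies its actual size, not a full 128 MiB.)
raw_bytes_for() {
  echo $(( $1 * REPLICATION ))
}

# Usage: a 300 MiB file
# blocks_for $((300 * 1024 * 1024))      # 3 blocks
# raw_bytes_for $((300 * 1024 * 1024))   # 943718400 bytes = 900 MiB
```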

For details on each setting, see: https://hadoop.apache.org/docs/r2.7.0/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

    # Configure mapred-site.xml
    <configuration>
      <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
      </property>
      <property>
        <name>mapreduce.job.ubertask.enable</name>
        <value>true</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.address</name>
        <value>node01:10020</value>
      </property>
      <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>node01:19888</value>
      </property>
    </configuration>

For details on each setting, see: https://hadoop.apache.org/docs/r2.7.0/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml

    # Configure yarn-site.xml
    <configuration>
      <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>node01</value>
      </property>
      <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
      </property>
      <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
      </property>
      <property>
        <name>yarn.log.server.url</name>
        <value>http://node01:19888/jobhistory/logs</value>
      </property>
      <!-- How long aggregated logs are kept before they are deleted -->
      <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>2592000</value><!-- 30 days -->
      </property>
      <!-- Time in seconds to retain user logs on the NodeManager; only applies if log aggregation is disabled -->
      <property>
        <name>yarn.nodemanager.log.retain-seconds</name>
        <value>604800</value><!-- 7 days -->
      </property>
      <!-- Compression type used for the aggregated logs -->
      <property>
        <name>yarn.nodemanager.log-aggregation.compression-type</name>
        <value>gz</value>
      </property>
      <!-- NodeManager local storage directory -->
      <property>
        <name>yarn.nodemanager.local-dirs</name>
        <value>/opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/yarn/local</value>
      </property>
      <!-- Maximum number of completed applications the ResourceManager keeps -->
      <property>
        <name>yarn.resourcemanager.max-completed-applications</name>
        <value>1000</value>
      </property>
    </configuration>
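The retention settings above are in seconds, which makes them easy to mistype. Here is a trivial sketch for deriving them from days. 30 days gives 2592000 and 7 days gives 604800, matching `yarn.log-aggregation.retain-seconds` and `yarn.nodemanager.log.retain-seconds`. `days_to_seconds` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: turn a retention period in days into the seconds YARN expects.
days_to_seconds() {
  echo $(( $1 * 24 * 60 * 60 ))
}

# days_to_seconds 30   # value for yarn.log-aggregation.retain-seconds
# days_to_seconds 7    # value for yarn.nodemanager.log.retain-seconds
```

`yarn.log.server.url` points at the MapReduce JobHistory server on node01:19888. `start-all.sh` does not start that server; in Hadoop 2.x it is started separately with `mr-jobhistory-daemon.sh start historyserver`.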

For details on each setting, see: https://hadoop.apache.org/docs/r2.7.0/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

    # Edit slaves: delete the localhost line and list the worker nodes
    vim slaves
    node01
    node02
    node03
    # Create the directories used by the configuration files
    mkdir -p /opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/tempDatas
    mkdir -p /opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/namenodeDatas
    mkdir -p /opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/datanodeDatas
    mkdir -p /opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/dfs/nn/edits
    mkdir -p /opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/dfs/snn/name
    mkdir -p /opt/install/hadoop-2.6.0-cdh5.14.2/hadoopDatas/dfs/nn/snn/edits
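Every directory in the mkdir list already appears in the XML files, so the list can also be derived from them. This sketch makes two assumptions. First, any `<value>` beginning with `file://` or `/` is treated as a local directory; that holds for the files in this article, but check your own. Second, comma-separated values, such as several `dfs.datanode.data.dir` disks, are each created. `mkdirs_from_conf` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: create every local directory referenced by <value> entries in the
# given Hadoop *-site.xml files, printing each directory it creates.
# Assumption: a value is a local directory if it starts with "file://" or "/";
# comma-separated values (several data disks) are split into separate entries.
mkdirs_from_conf() {
  local f dir
  for f in "$@"; do
    grep -o '<value>[^<]*</value>' "$f" |
      sed -e 's#<value>##' -e 's#</value>##' |
      tr ',' '\n'
  done |
    sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' -e 's#^file://##' |
    grep '^/' |
    sort -u |
    while read -r dir; do
      mkdir -p "$dir" && echo "$dir"
    done
}

# Usage:
# cd $HADOOP_HOME/etc/hadoop
# mkdirs_from_conf core-site.xml hdfs-site.xml yarn-site.xml
```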

Change Hadoop's pid directory

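    # The pid directory must be changed. It defaults to /tmp, which Linux cleans up periodically, and once the pid files are gone the cluster can no longer be stopped normally
    # Both scripts live in $HADOOP_HOME/sbin; add the setting near the top of each
    vim $HADOOP_HOME/sbin/hadoop-daemon.sh
    HADOOP_PID_DIR=/opt/install/hadoop-2.6.0-cdh5.14.2/pid
    vim $HADOOP_HOME/sbin/yarn-daemon.sh
    YARN_PID_DIR=/opt/install/hadoop-2.6.0-cdh5.14.2/pid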
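The location matters because the stop scripts find a daemon only through its pid file. The functions below sketch the naming used by the Hadoop 2.x `hadoop-daemon.sh` and `yarn-daemon.sh` scripts; check your copy, since distributions can patch them. The directory falls back to `/tmp` when `HADOOP_PID_DIR`/`YARN_PID_DIR` is empty, and the user part falls back to `$USER`. If the file is cleaned out of `/tmp`, the stop script reports that there is nothing to stop while the daemon keeps running. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of the pid file naming in Hadoop 2.x daemon scripts:
#   hadoop-daemon.sh: $HADOOP_PID_DIR/hadoop-$HADOOP_IDENT_STRING-<daemon>.pid
#   yarn-daemon.sh:   $YARN_PID_DIR/yarn-$YARN_IDENT_STRING-<daemon>.pid
# An empty directory falls back to /tmp, an empty ident string to $USER.
hadoop_pid_file() {
  echo "${HADOOP_PID_DIR:-/tmp}/hadoop-${HADOOP_IDENT_STRING:-$USER}-$1.pid"
}

yarn_pid_file() {
  echo "${YARN_PID_DIR:-/tmp}/yarn-${YARN_IDENT_STRING:-$USER}-$1.pid"
}

# Usage, as the hadoop user after the change above:
# HADOOP_PID_DIR=/opt/install/hadoop-2.6.0-cdh5.14.2/pid hadoop_pid_file namenode
# ls /opt/install/hadoop-2.6.0-cdh5.14.2/pid   # should list the running daemons' pid files
```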

Start and stop the Hadoop cluster

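    cd /opt
    # Delete the documentation first, otherwise copying is very slow
    cd install/hadoop-2.6.0-cdh5.14.2/share/
    rm -rf doc/
    # Copy this node's installation to every other node; run from /opt so that $PWD is /opt
    cd /opt
    scp -P 12324 -r install node02:$PWD
    scp -P 12324 -r install node03:$PWD
    # On every node, make sure all directories belong to the hadoop user (run as root)
    chown -R hadoop:hadoop /opt
    chmod -R 755 /opt
    chmod -R g+w /opt
    chmod -R o+w /opt
    # Format HDFS on node01 (only once, before the first start)
    hdfs namenode -format
    # Start the cluster (start-all.sh runs start-dfs.sh and start-yarn.sh)
    start-all.sh
    # Stop the cluster
    stop-all.sh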
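After `start-all.sh`, `jps` on each node should list the daemons implied by the configuration above:
- node01: NameNode, SecondaryNameNode and ResourceManager.
- Every host in slaves (node01 included): DataNode and NodeManager.

Here is a sketch of that check, assuming this article's role layout. `expected_daemons` and `check_daemons` are hypothetical helpers that read `jps` output from stdin.

```shell
#!/usr/bin/env bash
# Sketch: verify that the expected Java daemons appear in `jps` output.
# Roles follow this article's layout (assumption).
expected_daemons() {
  case "$1" in
    node01) echo "NameNode SecondaryNameNode ResourceManager DataNode NodeManager" ;;
    *)      echo "DataNode NodeManager" ;;
  esac
}

# $1 = hostname, stdin = output of `jps` on that host.
# Prints each missing daemon and returns non-zero if any is absent.
check_daemons() {
  local host="$1" jps_out daemon missing=0
  jps_out="$(cat)"
  for daemon in $(expected_daemons "$host"); do
    # jps lines look like "12345 NameNode"; compare the class name exactly
    if ! printf '%s\n' "$jps_out" | awk '{print $2}' | grep -qx "$daemon"; then
      echo "$host: missing $daemon"
      missing=1
    fi
  done
  return "$missing"
}

# Usage (full jps path, since a non-interactive ssh session does not read .bash_profile):
# ssh -p 12324 node02 /opt/install/jdk1.8.0_141/bin/jps | check_daemons node02
```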

03

—

ZooKeeper cluster setup

Download the ZooKeeper tarball

    wget http://archive.cloudera.com/cdh5/cdh/5/zookeeper-3.4.5-cdh5.14.2.tar.gz
    tar -zxvf zookeeper-3.4.5-cdh5.14.2.tar.gz -C /opt/install/

Edit the ZooKeeper configuration

    cd /opt/install/zookeeper-3.4.5-cdh5.14.2/conf
    # Configure zoo.cfg
    cp zoo_sample.cfg zoo.cfg
    mkdir -p /opt/install/zookeeper-3.4.5-cdh5.14.2/zkdatas
    vim zoo.cfg
    dataDir=/opt/install/zookeeper-3.4.5-cdh5.14.2/zkdatas
    autopurge.snapRetainCount=3
    autopurge.purgeInterval=1
    server.1=node01:2888:3888
    server.2=node02:2888:3888
    server.3=node03:2888:3888
    # Distribute to the other nodes (target /opt/install/, not $PWD, which is the conf directory here)
    scp -P 12324 -r /opt/install/zookeeper-3.4.5-cdh5.14.2/ node02:/opt/install/
    scp -P 12324 -r /opt/install/zookeeper-3.4.5-cdh5.14.2/ node03:/opt/install/
    # Write myid on each node
    # on node01
    echo 1 > /opt/install/zookeeper-3.4.5-cdh5.14.2/zkdatas/myid
    # on node02
    echo 2 > /opt/install/zookeeper-3.4.5-cdh5.14.2/zkdatas/myid
    # on node03
    echo 3 > /opt/install/zookeeper-3.4.5-cdh5.14.2/zkdatas/myid
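The `server.N` lines already map each id to a host, so the per-node `echo N > myid` step can be computed instead of typed. This sketch assumes the `server.N=host:2888:3888` form above and that the host part equals the node's short hostname (`hostname -s`). `myid_for_host` and `write_myid` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: derive this node's ZooKeeper myid from the server.N entries in zoo.cfg.
# Assumption: hosts are short names without dots, e.g. server.1=node01:2888:3888

# Print the N of the server.N line whose host equals $2.
myid_for_host() {
  local cfg="$1" host="$2"
  # Split "server.1=node01:2888:3888" on . = : -> server | 1 | node01 | 2888 | 3888
  awk -F'[.=:]' -v h="$host" '/^server\.[0-9]+=/ && $3 == h { print $2 }' "$cfg"
}

# Write the myid file for the current machine into the dataDir.
write_myid() {
  local cfg="$1" datadir="$2" id
  id="$(myid_for_host "$cfg" "$(hostname -s)")"
  [ -n "$id" ] || { echo "no server entry for $(hostname -s) in $cfg" >&2; return 1; }
  echo "$id" > "$datadir/myid"
}

# Usage, on each node after distribution:
# write_myid /opt/install/zookeeper-3.4.5-cdh5.14.2/conf/zoo.cfg /opt/install/zookeeper-3.4.5-cdh5.14.2/zkdatas
```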

Environment variables

    # Edit the environment variables on every machine in the cluster
    cd ~
    vim .bash_profile
    export ZK_HOME=/opt/install/zookeeper-3.4.5-cdh5.14.2
    export PATH=$PATH:$ZK_HOME/bin
    source .bash_profile

Start the cluster

    # Run on every node in the cluster
    zkServer.sh start
    # Check this node's status
    zkServer.sh status
    # Stop: run on every node in the cluster
    zkServer.sh stop
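`zkServer.sh status` prints a `Mode:` line on each node. In a healthy three-node ensemble, exactly one node reports `leader` and the other two `follower`. `standalone` usually means the `server.N` entries or `myid` were not picked up. Here is a sketch that checks the combined output. `check_quorum` is a hypothetical helper that takes the expected node count.

```shell
#!/usr/bin/env bash
# Sketch: check the concatenated `zkServer.sh status` output of all nodes.
# $1 = expected number of nodes (default 3); stdin = combined status output.
check_quorum() {
  local expected="${1:-3}" all leaders followers
  all="$(cat)"
  leaders=$(printf '%s\n' "$all" | grep -c '^Mode: leader')
  followers=$(printf '%s\n' "$all" | grep -c '^Mode: follower')
  echo "leaders=$leaders followers=$followers (expected 1 and $((expected - 1)))"
  [ "$leaders" -eq 1 ] && [ "$followers" -eq $((expected - 1)) ]
}

# Usage (bash -lc makes the remote shell read .bash_profile, so zkServer.sh is on PATH):
# for h in node01 node02 node03; do
#   ssh -p 12324 "$h" "bash -lc 'zkServer.sh status'" 2>&1
# done | check_quorum 3
```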

04

—

HBase cluster setup

Download the HBase tarball

    wget http://archive.cloudera.com/cdh5/cdh/5/hbase-1.2.0-cdh5.14.2.tar.gz
    tar -xzvf hbase-1.2.0-cdh5.14.2.tar.gz -C /opt/install/

Edit the HBase configuration

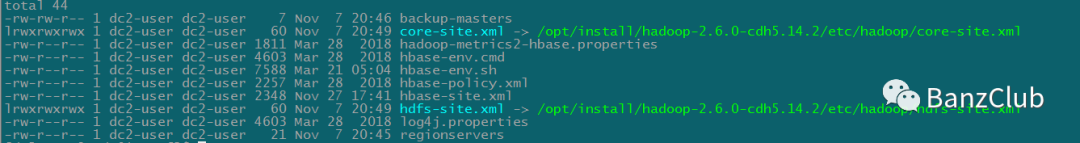

    cd /opt/install/hbase-1.2.0-cdh5.14.2/conf/
    # Configure hbase-env.sh
    vim hbase-env.sh
    export JAVA_HOME=/opt/install/jdk1.8.0_141
    # Use the external ZooKeeper ensemble instead of one managed by HBase
    export HBASE_MANAGES_ZK=false
    # SSH port (not the default 22)
    export HBASE_SSH_OPTS="-p 12324"
    # Change the location of HBase's pid files
    export HBASE_PID_DIR=/opt/install/hbase-1.2.0-cdh5.14.2/pids
    # Configure hbase-site.xml
    vim hbase-site.xml
    <configuration>
      <property>
        <name>hbase.rootdir</name>
        <value>hdfs://node01:8020/HBase</value>
      </property>
      <property>
        <name>hbase.cluster.distributed</name>
        <value>true</value>
      </property>
      <!-- Since HBase 1.0 the default master port is 16000; earlier releases defaulted to 60000 -->
      <property>
        <name>hbase.master.port</name>
        <value>16000</value>
      </property>
      <property>
        <name>hbase.zookeeper.quorum</name>
        <value>node01,node02,node03</value>
      </property>
      <property>
        <name>hbase.zookeeper.property.clientPort</name>
        <value>2181</value>
      </property>
      <property>
        <name>hbase.zookeeper.property.dataDir</name>
        <value>/opt/install/zookeeper-3.4.5-cdh5.14.2/zkdatas</value>
      </property>
      <property>
        <name>zookeeper.znode.parent</name>
        <value>/HBase</value>
      </property>
    </configuration>
    # Configure regionservers: the HBase worker nodes
    vim regionservers
    node01
    node02
    node03
    # Configure backup-masters: node02 acts as the backup HMaster
    vim backup-masters
    node02
    # Distribute to the other nodes (target /opt/install/, not $PWD, which is the conf directory here)
    scp -P 12324 -r /opt/install/hbase-1.2.0-cdh5.14.2/ node02:/opt/install/
    scp -P 12324 -r /opt/install/hbase-1.2.0-cdh5.14.2/ node03:/opt/install/
    # Create symlinks on every node: HBase needs to read Hadoop's core-site.xml and hdfs-site.xml
    ln -s /opt/install/hadoop-2.6.0-cdh5.14.2/etc/hadoop/core-site.xml /opt/install/hbase-1.2.0-cdh5.14.2/conf/core-site.xml
    ln -s /opt/install/hadoop-2.6.0-cdh5.14.2/etc/hadoop/hdfs-site.xml /opt/install/hbase-1.2.0-cdh5.14.2/conf/hdfs-site.xml
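`scp -r` follows symlinks and copies the target's contents, so links made on one node do not arrive as links on the others. It is simplest to create them on each node after distribution. Here is a sketch using `ln -sf`, so that re-running it replaces existing links instead of failing. `link_hadoop_conf` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: link Hadoop's client configs into HBase's conf directory.
# $1 = Hadoop conf dir, $2 = HBase conf dir. Safe to re-run.
link_hadoop_conf() {
  local hadoop_conf="$1" hbase_conf="$2" f
  for f in core-site.xml hdfs-site.xml; do
    # -s symbolic link, -f replace an existing file/link with the same name
    ln -sf "$hadoop_conf/$f" "$hbase_conf/$f"
  done
}

# Usage, on every node:
# link_hadoop_conf /opt/install/hadoop-2.6.0-cdh5.14.2/etc/hadoop /opt/install/hbase-1.2.0-cdh5.14.2/conf
```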

Environment variables

    # Edit the environment variables on every machine in the cluster
    cd ~
    vim .bash_profile
    export HBASE_HOME=/opt/install/hbase-1.2.0-cdh5.14.2
    export PATH=$PATH:$HBASE_HOME/bin
    source .bash_profile

Start the cluster

    # With HDFS and ZooKeeper already running, run on the master node node01
    start-hbase.sh
    # Stop the cluster
    stop-hbase.sh

05

—

Spark cluster setup

Download the Spark tarball

    wget https://archive.apache.org/dist/spark/spark-2.3.3/spark-2.3.3-bin-hadoop2.7.tgz
    # Extract
    tar -zxvf spark-2.3.3-bin-hadoop2.7.tgz -C /opt/install
    # Rename (the extracted directory is under /opt/install)
    cd /opt/install
    mv spark-2.3.3-bin-hadoop2.7 spark

Spark configuration

    cd /opt/install/spark/conf
    # Configure spark-env.sh
    mv spark-env.sh.template spark-env.sh
    vim spark-env.sh
    # Add the Java and ZooKeeper settings
    export JAVA_HOME=/opt/install/jdk1.8.0_141
    export SPARK_DAEMON_JAVA_OPTS="-Dspark.deploy.recoveryMode=ZOOKEEPER -Dspark.deploy.zookeeper.url=node01:2181,node02:2181,node03:2181 -Dspark.deploy.zookeeper.dir=/spark"
    # Set the master (Spark 2.x deprecates SPARK_MASTER_IP in favour of SPARK_MASTER_HOST)
    export SPARK_MASTER_IP=node01
    export SPARK_MASTER_PORT=7077
    # SSH port (not the default 22)
    export SPARK_SSH_OPTS="-p 12324"
    # Change the location of Spark's pid files
    export SPARK_PID_DIR=/opt/install/spark/pid

    # Configure slaves
    mv slaves.template slaves
    vim slaves
    # Worker nodes of the Spark cluster
    node02
    node03

Distribution and environment variables

    # Distribute to the other nodes
    scp -P 12324 -r /opt/install/spark node02:/opt/install
    scp -P 12324 -r /opt/install/spark node03:/opt/install
    # Edit the environment variables on every machine in the cluster
    cd ~
    vim .bash_profile
    export SPARK_HOME=/opt/install/spark
    export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin
    source .bash_profile

Start the cluster

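    # Full paths are used because Hadoop's sbin is also on PATH and has its own start-all.sh/stop-all.sh
    # Run on any one machine: starts a Master there plus the Workers listed in slaves
    $SPARK_HOME/sbin/start-all.sh
    # Start the standby Master separately on another node
    $SPARK_HOME/sbin/start-master.sh
    # Stop: on the node running the active Master
    $SPARK_HOME/sbin/stop-all.sh
    # Stop: on the node running the standby Master
    $SPARK_HOME/sbin/stop-master.sh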
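With ZooKeeper recovery mode and a standby Master, clients should be given every Master rather than a single one. Spark standalone accepts a comma-separated list of the form `spark://host1:port1,host2:port2` and registers with whichever Master is currently active. Here is a sketch that builds that URL, assuming Masters on node01 and node02 at port 7077. `spark_ha_url` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: build a standalone-HA master URL from a port and a list of Master hosts.
# $1 = port, remaining args = Master hostnames.
spark_ha_url() {
  local port="$1"; shift
  local url="" host
  for host in "$@"; do
    url="${url:+$url,}$host:$port"   # add a comma only after the first entry
  done
  echo "spark://$url"
}

# Usage:
# spark-shell --master "$(spark_ha_url 7077 node01 node02)"
```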

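This article is reposted from BanzClub. If you believe it infringes your rights, please email contact@modb.pro with supporting evidence; once the claim is verified, 墨天轮 will remove the content immediately.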