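A customer uses the GBase 8a database and expects us to support it, so I spent some time studying GBase. This article covers deployment and backup/restore of the GBase 8a MPP database. I am new to the product; these are personal views, for reference only.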

GBase 8a deployment

Introduction to the GBase 8a database

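GBase has several product series, and trial versions of all of them can be downloaded from the official website. That alone deserves credit: few Chinese database vendors make their products publicly downloadable.

The "a" in GBase 8a stands for analytic. It comes in two forms: single-node GBase 8a and the clustered GBase 8a MPP Cluster. Unless single-node is stated explicitly, the name refers to the cluster. This article uses the cluster edition, version V9.

The main market for the GBase 8a analytic database is business analytics and business intelligence. It is mainly used in government, Party committees, security-sensitive departments, national defense, statistics, audit, banking regulation and securities regulation, and in industries with massive business data such as telecom, finance and electric power.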

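Deployment preparation

The GBase 8a installer uses automation scripts and depends on Python2. At runtime GBase 8a supports resource management for different users, which depends on the cgroup component. These dependencies need to be installed in advance.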

yum -y install psmisc libcgroup libcgroup-tools

alternatives --set python /usr/bin/python2

In addition, all GBase processes run as the user gbase. The downloaded installation directory (/opt/gcinstall) must also be accessible to the gbase user.

useradd gbase

echo 'gbase:gbase'|chpasswd

chown -R gbase:gbase /opt/gcinstall

It is best to keep this deployment directory permanently, since later maintenance may need it.

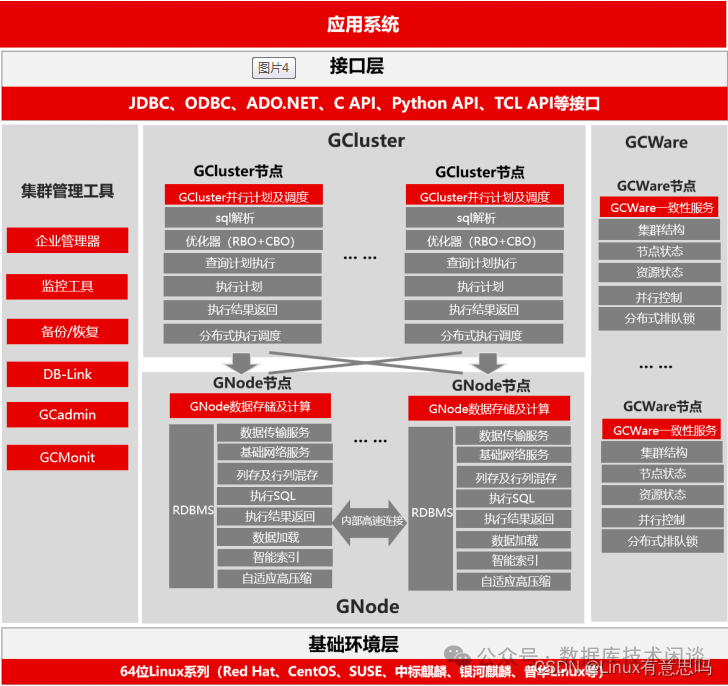

Product architecture

Note: this section is based on material from the GBase website.

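GBase 8a MPP Cluster uses a distributed federated architecture based on MPP + Shared Nothing, with nodes communicating over a TCP/IP network. Each node stores data on its local disks, and both symmetric and asymmetric deployment are supported.

The product consists of three core components plus auxiliary components. The core components are the distributed management cluster GCWare, the distributed scheduling cluster GCluster, and the distributed storage and compute cluster GNode. The functions of the components are: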

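GCWare

Forms the distributed management cluster and provides consistency services for the cluster. It records and stores the cluster structure, node states, node resource states, concurrency control and distributed queue lock information. During multi-replica data operations it records and looks up the nodes that can be operated on, and provides the data consistency state of each node.

GCluster

Forms the distributed scheduling cluster and is the unified entry point of the whole cluster. It accepts connections from applications and returns query results to them. GCluster receives SQL, parses and optimizes it, generates a distributed execution plan, selects operable nodes to run the distributed schedule, and returns the result to the application.

GNode

Forms the distributed storage cluster and is the storage and compute unit for cluster data. It stores the cluster data, receives and executes the SQL sent by GCluster and returns the results to GCluster, and receives data from the load server for data loading.

GCMonit

Monitors the running state of the GCluster and GNode core components in real time. When the state of a service process changes, it runs the service start command defined in its configuration file so that the service components keep running.

GCware_Monit

Monitors the running state of the GCware component in real time. When the state of the service process changes, it runs the service start command defined in its configuration file so that the service component keeps running.

GCRecover & GCSyncServer

Used for data synchronization between replicas. When the data files of replicas become inconsistent, these processes are called to synchronize them and keep the replica data files consistent.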

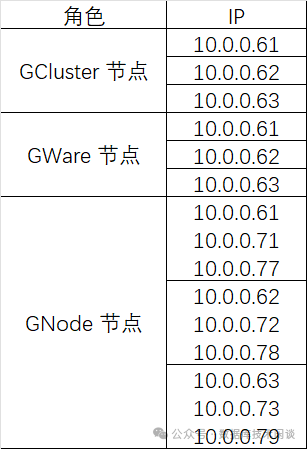

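Deployment planning

GBase 8a MPP Cluster supports symmetric and asymmetric deployment; production environments use symmetric deployment for the sake of standardization. A symmetric layout is also tidy and easier to study. To make full use of host hardware, GBase 8a also allows the roles above to be deployed on the same server, and a single machine can run multiple GNode instances. If the production cluster and data volume are very large, deploying the roles separately is still the better choice.

The customer's production deployment uses 6 nodes in a 3+3 layout, that is, a 2-replica architecture for the data nodes. To understand how GBase 8a works in one go, I used 3 virtual machines in the test environment to deploy three replicas, with 3 GNode instances on each virtual machine to simulate a 3+3+3 topology.

Running multiple GNode instances on one machine requires a separate IP and a separate storage path for each GNode.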

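The IP plan is as follows:

Each GBase 8a component has its own memory allocation policy. My virtual machines have little usable memory (16G), so some components may fail to start later. When that happens, the memory parameters in the component's configuration file need to be adjusted.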

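Deployment process

Deployment uses the parameter file demo.options in the installation directory. Fill in the nodes planned above and replace the passwords in the file with the real ones.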

[gbase@server061 gcinstall]$ cat demo.options

installPrefix= /home/gbase

coordinateHost = 10.0.0.61,10.0.0.62,10.0.0.63

coordinateHostNodeID = 61,62,63

dataHost = 10.0.0.61,10.0.0.62,10.0.0.63,10.0.0.71,10.0.0.72,10.0.0.73,10.0.0.77,10.0.0.78,10.0.0.79

gcwareHost = 10.0.0.61,10.0.0.62,10.0.0.63

gcwareHostNodeID = 61,62,63

dbaUser = gbase

dbaGroup = gbase

dbaPwd = 'gbase'

rootPwd = '******'
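Before running the installer it can help to sanity-check the options file. The following is a minimal Python sketch, not part of the GBase tooling: the function names and the specific checks (matching host and node ID counts, no duplicate data hosts, absolute install prefix) are assumptions made here for illustration, not rules taken from GBase documentation.

```python
def parse_options(text):
    """Parse 'key = value' lines of a gcinstall options file into a dict."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        options[key.strip()] = value.strip().strip("'")
    return options


def check_options(options):
    """Return a list of problems; an empty list means these checks passed.

    ASSUMPTION: the checks below are illustrative, not official GBase rules.
    """
    problems = []
    for host_key, id_key in (("coordinateHost", "coordinateHostNodeID"),
                             ("gcwareHost", "gcwareHostNodeID")):
        hosts = options.get(host_key, "").split(",")
        ids = options.get(id_key, "").split(",")
        if len(hosts) != len(ids):
            problems.append(f"{host_key} has {len(hosts)} entries, {id_key} has {len(ids)}")
    data_hosts = options.get("dataHost", "").split(",")
    if len(set(data_hosts)) != len(data_hosts):
        problems.append("dataHost contains duplicate IPs")
    if not options.get("installPrefix", "").startswith("/"):
        problems.append("installPrefix should be an absolute path")
    return problems


SAMPLE = """
installPrefix= /home/gbase
coordinateHost = 10.0.0.61,10.0.0.62,10.0.0.63
coordinateHostNodeID = 61,62,63
dataHost = 10.0.0.61,10.0.0.62,10.0.0.63,10.0.0.71,10.0.0.72,10.0.0.73,10.0.0.77,10.0.0.78,10.0.0.79
gcwareHost = 10.0.0.61,10.0.0.62,10.0.0.63
gcwareHostNodeID = 61,62,63
dbaUser = gbase
dbaGroup = gbase
"""

print(check_options(parse_options(SAMPLE)))
```

An empty list means nothing suspicious was found by these particular checks; it says nothing about whether the installer itself will accept the file.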

Deployment takes a single command:

su - gbase

cd /opt/gcinstall

./gcinstall.py --silent=demo.options

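Deployment usually goes smoothly, and at the end it starts all cluster components. Some components may fail to start; ignore that for now. Use the gcadmin command to check the state of the cluster nodes.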

[gbase@server061 ~]$ gcadmin

CLUSTER STATE: ACTIVE

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 10.0.0.61 | OPEN |

------------------------------------

| gcware2 | 10.0.0.62 | OPEN |

------------------------------------

| gcware3 | 10.0.0.63 | OPEN |

------------------------------------

===================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

===================================================

| NodeName | IpAddress | gcluster | DataState |

---------------------------------------------------

| coordinator1 | 10.0.0.61 | OPEN | 0 |

---------------------------------------------------

| coordinator2 | 10.0.0.62 | OPEN | 0 |

---------------------------------------------------

| coordinator3 | 10.0.0.63 | OPEN | 0 |

---------------------------------------------------

==========================================================

| GBASE CLUSTER FREE DATA NODE INFORMATION |

==========================================================

| NodeName | IpAddress | gnode | syncserver | DataState |

----------------------------------------------------------

| FreeNode1 | 10.0.0.61 | OPEN | OPEN | 0 |

----------------------------------------------------------

| FreeNode2 | 10.0.0.71 | OPEN | OPEN | 0 |

----------------------------------------------------------

| FreeNode3 | 10.0.0.77 | OPEN | OPEN | 0 |

----------------------------------------------------------

| FreeNode4 | 10.0.0.62 | OPEN | OPEN | 0 |

----------------------------------------------------------

| FreeNode5 | 10.0.0.72 | OPEN | OPEN | 0 |

----------------------------------------------------------

| FreeNode6 | 10.0.0.78 | OPEN | OPEN | 0 |

----------------------------------------------------------

| FreeNode7 | 10.0.0.63 | OPEN | OPEN | 0 |

----------------------------------------------------------

| FreeNode8 | 10.0.0.73 | OPEN | OPEN | 0 |

----------------------------------------------------------

| FreeNode9 | 10.0.0.79 | OPEN | OPEN | 0 |

----------------------------------------------------------

0 virtual cluster

3 coordinator node

9 free data node

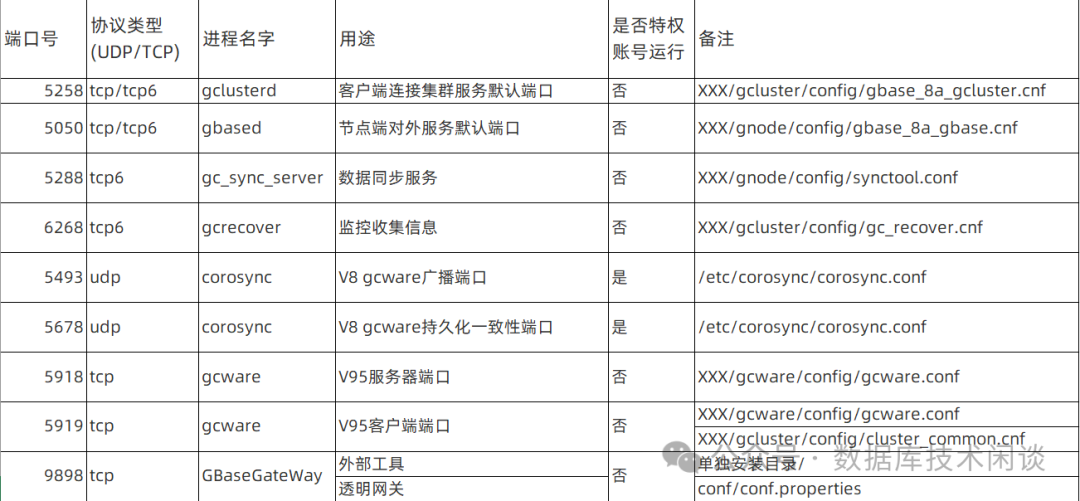

The listening sockets and processes are as follows:

[gbase@server061 config]$ netstat -ntlp |grep -v "-"

(Not all processes could be identified, non-owned process info

will not be shown, you would have to be root to see it all.)

Active Internet connections (only servers)

tcp 0 0 10.0.0.77:5050 0.0.0.0:* LISTEN 10208/gbased

tcp 0 0 10.0.0.71:5050 0.0.0.0:* LISTEN 9170/gbased

tcp 0 0 10.0.0.61:5050 0.0.0.0:* LISTEN 8130/gbased

tcp 0 0 0.0.0.0:6268 0.0.0.0:* LISTEN 11983/gcrecover

tcp 0 0 10.0.0.61:5918 0.0.0.0:* LISTEN 3576/gcware

tcp 0 0 10.0.0.61:5919 0.0.0.0:* LISTEN 3576/gcware

tcp 0 0 10.0.0.77:5288 0.0.0.0:* LISTEN 11351/gc_sync_serve

tcp 0 0 10.0.0.71:5288 0.0.0.0:* LISTEN 11332/gc_sync_serve

tcp 0 0 10.0.0.61:5288 0.0.0.0:* LISTEN 11317/gc_sync_serve

tcp6 0 0 :::5258 :::* LISTEN 11367/gclusterd

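The listening ports of the processes are described as follows:

Deployment directory analysis

All nodes share the same deployment directory layout. Strictly speaking, with multiple instances on one machine, each directory should be mapped to a different disk. One way is to deploy first, then stop the cluster services and use symbolic links to map the directories to separate file systems, each on its own disk.

The following mainly looks at the directory structure.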

[gbase@server061 ~]$ tree -L 2 /home/gbase/10*

/home/gbase/10.0.0.61

├── gbase_8a_5050.sock

├── gbase_profile

├── gcluster

│ ├── config

│ ├── log

│ ├── server

│ ├── tmpdata

│ ├── translog

│ └── userdata

├── gcluster_5258.sock

├── gcware

│ ├── bin

│ ├── config

│ ├── data

│ ├── gcware_server

│ ├── lib64

│ ├── libexec

│ ├── liblog

│ ├── log

│ ├── python

│ ├── run

│ └── sbin

├── gcware_profile

└── gnode

├── config

├── log

├── server

├── tmpdata

├── translog

└── userdata

/home/gbase/10.0.0.71

├── gbase_8a_5050.sock

├── gbase_profile

├── gcluster

│ ├── config

│ ├── log

│ └── server

├── gcware

│ └── lib64

└── gnode

├── config

├── log

├── server

├── tmpdata

├── translog

└── userdata

/home/gbase/10.0.0.77

├── gbase_8a_5050.sock

├── gbase_profile

├── gcluster

│ ├── config

│ ├── log

│ └── server

├── gcware

│ └── lib64

└── gnode

├── config

├── log

├── server

├── tmpdata

├── translog

└── userdata

52 directories, 8 files

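There is one directory per IP. Because three components are deployed on 61, its directory has more subdirectories. Each component has its own configuration directory config and runtime log directory log, which makes troubleshooting easier.

Process description

The status output of the two daemon processes (which are also the monitoring processes) gives a convenient view of the related processes: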

[gbase@server061 ~]$ gcmonit.sh status

+-----------------------------------------------------------------------------------------------------------------------------------+

|SEG_NAME PROG_NAME STATUS PID |

+-----------------------------------------------------------------------------------------------------------------------------------+

|gcluster gclusterd Running 11367 |

|gcrecover gcrecover Running 11983 |

|gbase_10.0.0.61 /home/gbase/10.0.0.61/gnode/server/bin/gbased Running 8130 |

|syncserver_10.0.0.61 /home/gbase/10.0.0.61/gnode/server/bin/gc_sync_server Running 11317 |

|gbase_10.0.0.71 /home/gbase/10.0.0.71/gnode/server/bin/gbased Running 9170 |

|syncserver_10.0.0.71 /home/gbase/10.0.0.71/gnode/server/bin/gc_sync_server Running 11332 |

|gbase_10.0.0.77 /home/gbase/10.0.0.77/gnode/server/bin/gbased Running 10208 |

|syncserver_10.0.0.77 /home/gbase/10.0.0.77/gnode/server/bin/gc_sync_server Running 11351 |

|gcmmonit gcmmonit Running 11362 |

+-----------------------------------------------------------------------------------------------------------------------------------+

[gbase@server061 ~]$ gcware_monit.sh status

+-----------------------------------------------------------------------------------------------------------------------------------+

|SEG_NAME PROG_NAME STATUS PID |

+-----------------------------------------------------------------------------------------------------------------------------------+

|gcware gcware Running 3576 |

|gcware_mmonit gcware_mmonit Running 4668 |

+-----------------------------------------------------------------------------------------------------------------------------------+

[gbase@server061 ~]$

Use the gcadmin command to check the status of the GBase 8a services. If a process is not running, you can start all services or just the relevant one.

[gbase@server061 ~]$ gcware_services all start

Starting gcware : [ OK ]

Starting GCWareMonit success!

[gbase@server061 ~]$ gcluster_services all start

Starting gbase : [ OK ]

Starting gbase : [ OK ]

Starting gbase : [ OK ]

Starting syncserver : [ OK ]

Starting syncserver : [ OK ]

Starting syncserver : [ OK ]

Starting gcluster : [ OK ]

Starting gcrecover : [ OK ]

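Troubleshooting deployment problems

The main problem is likely to be the gbase database process failing to start because of insufficient memory. If any node failed to start above, go to the config directory under the corresponding component directory on that node and modify the relevant configuration file.

Taking the database configuration file of the GNode instance on IP 71 as an example, set the gbase memory parameters (the default values are all set quite small); after that, startup no longer reports errors.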

[gbase@server061 config]$ pwd

/home/gbase/10.0.0.71/gnode/config

[gbase@server061 config]$ vim gbase_8a_gbase.cnf

gbase_memory_pct_target=0.8

gbase_heap_data=512M

gbase_heap_temp=256M

gbase_heap_large=256M

gbase_buffer_insert=256M

gbase_buffer_hgrby=10M

gbase_buffer_distgrby=10M

gbase_buffer_hj=10M

gbase_buffer_sj=10M

gbase_buffer_sort=10M

gbase_buffer_rowset=10M

gbase_buffer_result=10M
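To get a feel for whether such settings fit on a small host, the heap values can simply be added up. The sketch below is rough arithmetic only: it assumes the K/M/G suffixes are binary units and that the three heap settings are the dominant fixed allocations, neither of which is confirmed here, and it ignores how GBase actually accounts for memory (for example gbase_memory_pct_target and the per-operator buffers).

```python
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def to_bytes(size):
    """Convert a size such as '512M' to bytes (binary units assumed)."""
    size = size.strip().upper()
    if size[-1] in UNITS:
        return int(size[:-1]) * UNITS[size[-1]]
    return int(size)


# Heap settings copied from the configuration file shown above.
GNODE_HEAP_SETTINGS = {
    "gbase_heap_data": "512M",
    "gbase_heap_temp": "256M",
    "gbase_heap_large": "256M",
}

INSTANCES_PER_HOST = 3           # three GNode instances per virtual machine
HOST_MEMORY = to_bytes("16G")    # memory of each test virtual machine

heap_per_instance = sum(to_bytes(v) for v in GNODE_HEAP_SETTINGS.values())
heap_per_host = heap_per_instance * INSTANCES_PER_HOST

print(heap_per_instance // UNITS["M"], "MiB of heap per GNode instance")
print(heap_per_host // UNITS["M"], "MiB of heap for all instances on one host")
print(f"{heap_per_host / HOST_MEMORY:.1%} of host memory")
```

With these values the three instances together reserve about 3 GiB of heap, which leaves room for the coordinator, gcware and the operating system on a 16G host.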

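After modifying the configuration file, start the corresponding service separately. Note that the change and restart are needed on every node. Here I use the clush command on Linux to start the services in batch.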

[gbase@server061 ~]$ cat /etc/clustershell/groups

all:gbase0[1-9]

control:gbase0[1-3]

node:gbase0[1-9]

gene:gbase0[1-9]

other:gbase0[2-3]

[gbase@server061 ~]$ clush -g control gcluster_services gbase start

gbase01: Starting gbase : [ OK ]

gbase02: Starting gbase : [ OK ]

gbase01: Starting gbase : [ OK ]

gbase02: Starting gbase : [ OK ]

gbase01: Starting gbase : [ OK ]

gbase02: Starting gbase : [ OK ]

gbase03: Starting gbase : [ OK ]

gbase03: Starting gbase : [ OK ]

gbase03: Starting gbase : [ OK ]

Using GBase 8a MPP Cluster

Virtual clusters (VC) in GBase 8a

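The GBase 8a database supports the concept of a virtual cluster (Virtual Cluster, VC for short), similar to the tenant concept in Oracle and OceanBase. A GBase 8a cluster deployed with default settings has a default VC.

A cluster can grow very large. Running it with a single VC is not optimal; it is advisable to put different business data in separate VCs. VCs in GBase 8a are isolated from each other in terms of access, which also makes management somewhat easier.

To log in to the GBase 8a database, use the command-line client gccli or gbase.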

[gbase@server061 ~]$ gccli -u mq -p

Enter password:

GBase client Free Edition 9.5.3.28.12509af27. Copyright (c) 2004-2025, GBase. All Rights Reserved.

gbase> show databases;

ERROR 1818 (HY000): No VC selected.

gbase> use vc vc0;

Query OK, 0 rows affected (Elapsed: 00:00:00.00)

gbase> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| performance_schema |

| gbase |

| gctmpdb |

| gclusterdb |

| tpcds |

| tpcds2 |

+--------------------+

7 rows in set (Elapsed: 00:00:00.00)

gbase> show vcs;

+---------+------+---------+

| id | name | default |

+---------+------+---------+

| vc00003 | vc0 | |

+---------+------+---------+

1 row in set (Elapsed: 00:00:00.00)

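The experience above is very similar to MySQL. The one difference is that you first have to switch to the target VC, with the command use vc vc0. This assumes vc0 has already been created; with the default VC the switch is not needed. My guess is that the full path gbase uses for a user table is VC name.database name.table name, so a table can also be accessed as follows without switching VC.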

gbase> desc vc0.tpcds.warehouse;

+-------------------+--------------+------+-----+---------+-------+

| Field | Type | Null | Key | Default | Extra |

+-------------------+--------------+------+-----+---------+-------+

| w_warehouse_sk | int(11) | NO | PRI | NULL | |

| w_warehouse_id | char(16) | NO | | NULL | |

| w_warehouse_name | varchar(20) | YES | | NULL | |

| w_warehouse_sq_ft | int(11) | YES | | NULL | |

| w_street_number | char(10) | YES | | NULL | |

| w_street_name | varchar(60) | YES | | NULL | |

| w_street_type | char(15) | YES | | NULL | |

| w_suite_number | char(10) | YES | | NULL | |

| w_city | varchar(60) | YES | | NULL | |

| w_county | varchar(30) | YES | | NULL | |

| w_state | char(2) | YES | | NULL | |

| w_zip | char(10) | YES | | NULL | |

| w_country | varchar(20) | YES | | NULL | |

| w_gmt_offset | decimal(5,2) | YES | | NULL | |

+-------------------+--------------+------+-----+---------+-------+

14 rows in set (Elapsed: 00:00:00.01)

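Creating multiple VCs in GBase 8a

Multiple VCs in effect carve up the cluster's node resources: different VCs have different data nodes. A VC is also created from a parameter file. Below, the 9 data nodes are divided into two VCs; the parameter files are self-explanatory.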

[gbase@server061 ~]$ cat create_vc1.xml

<?xml version='1.0' encoding="utf-8"?>

<servers>

<rack><node ip="10.0.0.61"/></rack>

<rack><node ip="10.0.0.62"/></rack>

<rack><node ip="10.0.0.63"/></rack>

<vc_name name="vc1"/>

<comment message="vc1 comment."/>

</servers>

[gbase@server061 ~]$ cat create_vc2.xml

<?xml version='1.0' encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.0.71"/>

<node ip="10.0.0.77"/>

</rack>

<rack>

<node ip="10.0.0.72"/>

<node ip="10.0.0.78"/>

</rack>

<rack>

<node ip="10.0.0.73"/>

<node ip="10.0.0.79"/>

</rack>

<vc_name name="vc2"/>

<comment message="vc2 comment"/>

</servers>
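When there are many nodes, files like these can be generated rather than typed by hand. The following Python sketch builds the same element structure as the two files above (servers, rack, node, vc_name, comment) with the standard library. The function name build_vc_xml is made up here, and whether gcadmin is sensitive to whitespace or attribute quoting in the generated file is not verified.

```python
import xml.etree.ElementTree as ET
from xml.dom import minidom


def build_vc_xml(vc_name, racks, comment=""):
    """Return a create-VC parameter file as text.

    racks is a list of racks, each a list of node IPs.
    """
    servers = ET.Element("servers")
    for rack_nodes in racks:
        rack = ET.SubElement(servers, "rack")
        for ip in rack_nodes:
            ET.SubElement(rack, "node", ip=ip)
    ET.SubElement(servers, "vc_name", name=vc_name)
    ET.SubElement(servers, "comment", message=comment)
    raw = ET.tostring(servers, encoding="utf-8")
    # Pretty-print with an XML declaration that names the encoding.
    pretty = minidom.parseString(raw).toprettyxml(indent="  ", encoding="utf-8")
    return pretty.decode("utf-8")


VC2_RACKS = [
    ["10.0.0.71", "10.0.0.77"],
    ["10.0.0.72", "10.0.0.78"],
    ["10.0.0.73", "10.0.0.79"],
]

print(build_vc_xml("vc2", VC2_RACKS, comment="vc2 comment"))
```

The output can be redirected to a file such as create_vc2.xml and compared with the hand-written version before it is passed to gcadmin.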

The creation commands are as follows:

[gbase@server061 ~]$ gcadmin createvc create_vc1.xml

parse config file create_vc1.xml

generate vc id: vc00004

add vc information to cluster

add nodes to vc

gcadmin create vc [vc1] successful

[gbase@server061 ~]$ gcadmin createvc create_vc2.xml

parse config file create_vc2.xml

generate vc id: vc00005

add vc information to cluster

add nodes to vc

gcadmin create vc [vc2] successful

To view the information of each virtual cluster, use the command gcadmin showcluster vc [VC name].

[gbase@server061 ~]$ gcadmin showcluster vc vc1

CLUSTER STATE: ACTIVE

VIRTUAL CLUSTER MODE: NORMAL

================================================

| GBASE VIRTUAL CLUSTER INFORMATION |

================================================

| VcName | DistributionId | comment |

------------------------------------------------

| vc1 | | vc1 comment. |

------------------------------------------------

=========================================================================================================

| VIRTUAL CLUSTER DATA NODE INFORMATION |

=========================================================================================================

| NodeName | IpAddress | DistributionId | gnode | syncserver | DataState |

---------------------------------------------------------------------------------------------------------

| node1 | 10.0.0.61 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node2 | 10.0.0.62 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node3 | 10.0.0.63 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

3 data node

[gbase@server061 ~]$ gcadmin showcluster vc vc2

CLUSTER STATE: ACTIVE

VIRTUAL CLUSTER MODE: NORMAL

===============================================

| GBASE VIRTUAL CLUSTER INFORMATION |

===============================================

| VcName | DistributionId | comment |

-----------------------------------------------

| vc2 | | vc2 comment |

-----------------------------------------------

=========================================================================================================

| VIRTUAL CLUSTER DATA NODE INFORMATION |

=========================================================================================================

| NodeName | IpAddress | DistributionId | gnode | syncserver | DataState |

---------------------------------------------------------------------------------------------------------

| node1 | 10.0.0.71 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node2 | 10.0.0.77 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node3 | 10.0.0.72 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node4 | 10.0.0.78 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node5 | 10.0.0.73 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

| node6 | 10.0.0.79 | | OPEN | OPEN | 0 |

---------------------------------------------------------------------------------------------------------

6 data node

Now log in to the database to see the list of VCs.

[gbase@server061 ~]$ gccli -u gbase -pgbase

GBase client Free Edition 9.5.3.28.12509af27. Copyright (c) 2004-2025, GBase. All Rights Reserved.

gbase> show vcs;

+---------+------+---------+

| id | name | default |

+---------+------+---------+

| vc00004 | vc1 | |

| vc00005 | vc2 | |

+---------+------+---------+

2 rows in set (Elapsed: 00:00:00.00)

Before listing databases, you need to switch to a specific VC.

gbase> show databases;

ERROR 1818 (HY000): No VC selected.

gbase> use vc vc1;

Query OK, 0 rows affected (Elapsed: 00:00:00.00)

gbase> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| performance_schema |

| gbase |

| gctmpdb |

+--------------------+

4 rows in set (Elapsed: 00:00:00.00)

gbase> use vc vc2;

Query OK, 0 rows affected (Elapsed: 00:00:00.00)

gbase> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| performance_schema |

| gbase |

| gctmpdb |

+--------------------+

4 rows in set (Elapsed: 00:00:00.00)

Creating a distribution and a database

After the VC is created, creating a database directly still fails.

gbase> create database tpcds;

ERROR 1707 (HY000): gcluster command error: (GBA-02CO-0003) nodedatamap is not initialized.

The data directory also needs to be initialized, using the SQL command initnodedatamap.

gbase> initnodedatamap;

ERROR 1707 (HY000): gcluster command error: (GBA-02CO-0004) Can not get a distribution id from gcware.

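It still fails, saying that a distribution has to be created first. This appears to be something specific to GBase 8a, roughly describing how the multiple replicas of data are laid out. The VC was created earlier with three racks (rack), the intent being that the servers in these racks store multiple backups (replicas) of the data. But it is the distribution here that actually defines the multi-replica setup.

The distribution looks like the core of the MPP distributed design. Anyone familiar with other distributed database products will recognize the concept; it just goes by different names in different databases.

A distribution is also created from a configuration file. It is created within a given VC, so the nodes in the configuration file are a subset of the VC's nodes.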

<?xml version="1.0" encoding="utf-8"?>

<servers>

<rack><node ip="10.0.0.61"/></rack>

<rack><node ip="10.0.0.62"/></rack>

<rack><node ip="10.0.0.63"/></rack>

</servers>

[gbase@server061 ~]$ cat gcChangeInfo_vc2.xml

<?xml version="1.0" encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.0.71" />

<node ip="10.0.0.77" />

</rack>

<rack>

<node ip="10.0.0.72" />

<node ip="10.0.0.78" />

</rack>

<rack>

<node ip="10.0.0.73" />

<node ip="10.0.0.79" />

</rack>

</servers>

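A distribution is created with the command gcadmin distribution. Parameter description:

p: the number of primary segments stored on each data node. In pattern 1 mode, 1 <= p < number of nodes in a rack.

d: the number of replicas of each primary segment. Allowed values are 0, 1 and 2; the default is 1 and the maximum is 2.

pattern: the template that describes the sharding rule. 1 means rack-level high availability, with racks backing each other up; 2 means node-level high availability, with nodes backing each other up. The default is 1.

The first distribution created below is an ordinary one, with 1 primary and 1 replica.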

[gbase@server061 ~]$ gcadmin distribution gcChangeInfo_vc1.xml p 1 d 1 pattern 1 vc vc1 dba_os_password 123456

gcadmin generate distribution ...

NOTE: node [10.0.0.61] is coordinator node, it shall be data node too

NOTE: node [10.0.0.62] is coordinator node, it shall be data node too

NOTE: node [10.0.0.63] is coordinator node, it shall be data node too

gcadmin generate distribution successful

[gbase@server061 ~]$ gcadmin showdistribution vc vc1

Distribution ID: 6 | State: new | Total segment num: 3

Primary Segment Node IP Segment ID Duplicate Segment node IP

========================================================================================================================

| 10.0.0.61 | 1 | 10.0.0.62 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.0.62 | 2 | 10.0.0.63 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.0.63 | 3 | 10.0.0.61 |

========================================================================================================================

The second distribution created below has 1 primary and 2 replicas.

[gbase@server061 ~]$ gcadmin distribution gcChangeInfo_vc2.xml p 1 d 2 pattern 1 vc vc2 dba_os_password 123456

gcadmin generate distribution ...

check vc vc2 os password

copy system table to 10.0.0.71

copy system table to 10.0.0.73

copy system table to 10.0.0.79

copy system table to 10.0.0.72

copy system table to 10.0.0.77

copy system table to 10.0.0.78

gcadmin generate distribution successful

[gbase@server061 ~]$ gcadmin showdistribution vc vc2

Distribution ID: 7 | State: new | Total segment num: 6

Primary Segment Node IP Segment ID Duplicate Segment node IP

========================================================================================================================

| 10.0.0.71 | 1 | 10.0.0.78 |

| | | 10.0.0.79 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.0.77 | 2 | 10.0.0.72 |

| | | 10.0.0.73 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.0.72 | 3 | 10.0.0.79 |

| | | 10.0.0.77 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.0.78 | 4 | 10.0.0.73 |

| | | 10.0.0.71 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.0.73 | 5 | 10.0.0.77 |

| | | 10.0.0.78 |

------------------------------------------------------------------------------------------------------------------------

| 10.0.0.79 | 6 | 10.0.0.71 |

| | | 10.0.0.72 |

========================================================================================================================

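The placement of primary and replica segments shown in this distribution is hard to understand at first. GBase 8a appears to shard the stored data automatically and spread it across all nodes of the VC. For a given segment, the primary and replica copies are placed in a staggered fashion. In the result above, I had expected 71, 72 and 73 to be three servers in the same position that could form 1 primary and 2 replicas, yet GBase 8a placed the two replicas of segment 1 on 78 and 79 instead. I did not understand the intent at first. In TDSQL and OceanBase, the replicas of the same data are stored "horizontally".

Let's set this question aside for now and look at the data distribution again after loading some data.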
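One way to read the vc2 distribution table is to map every copy of every segment back to its rack. The Python sketch below does that with the values copied from the showdistribution output and the rack grouping from create_vc2.xml. The rack names rack1 to rack3 are labels invented here, and the interpretation is only an observation about this one output, not a statement of GBase's placement algorithm.

```python
from collections import Counter

# Rack membership as written in create_vc2.xml (rack names are made-up labels).
RACKS = {
    "rack1": ["10.0.0.71", "10.0.0.77"],
    "rack2": ["10.0.0.72", "10.0.0.78"],
    "rack3": ["10.0.0.73", "10.0.0.79"],
}

# segment id -> (primary node, [duplicate nodes]) from "gcadmin showdistribution vc vc2"
SEGMENTS = {
    1: ("10.0.0.71", ["10.0.0.78", "10.0.0.79"]),
    2: ("10.0.0.77", ["10.0.0.72", "10.0.0.73"]),
    3: ("10.0.0.72", ["10.0.0.79", "10.0.0.77"]),
    4: ("10.0.0.78", ["10.0.0.73", "10.0.0.71"]),
    5: ("10.0.0.73", ["10.0.0.77", "10.0.0.78"]),
    6: ("10.0.0.79", ["10.0.0.71", "10.0.0.72"]),
}

NODE_TO_RACK = {ip: rack for rack, ips in RACKS.items() for ip in ips}


def copies(segment):
    """All nodes holding a copy of the segment, primary first."""
    primary, duplicates = segment
    return [primary] + duplicates


def racks_used(segment):
    """Sorted list of racks that hold a copy of the segment."""
    return sorted(NODE_TO_RACK[ip] for ip in copies(segment))


def copies_per_node(segments):
    """How many segment copies (primary or duplicate) each node stores."""
    return Counter(ip for seg in segments.values() for ip in copies(seg))


def fallback_nodes(failed_ip, segments):
    """Other nodes that hold copies of the segments stored on failed_ip."""
    others = set()
    for seg in segments.values():
        nodes = copies(seg)
        if failed_ip in nodes:
            others.update(ip for ip in nodes if ip != failed_ip)
    return sorted(others)


for seg_id, seg in SEGMENTS.items():
    print(seg_id, racks_used(seg))
print(copies_per_node(SEGMENTS))
print(fallback_nodes("10.0.0.71", SEGMENTS))
```

In this output every segment has exactly one copy in each of the three racks, and every node stores three copies. The copies of the segments held by 10.0.0.71 are spread over four other nodes, so a plausible reading of the staggered placement is that losing one node spreads the extra load instead of sending it all to a single partner.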

Importing CSV files

Once the distribution has been created, initnodedatamap succeeds and the database can be created.

gbase> create database tpcds;

ERROR 1707 (HY000): gcluster command error: (GBA-02CO-0003) nodedatamap is not initialized.

gbase> initnodedatamap;

Query OK, 1 row affected (Elapsed: 00:00:00.32)

gbase> create database tpcds;

Query OK, 1 row affected (Elapsed: 00:00:00.02)

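Next, load the TPCDS data into the databases of both VCs. How the TPCDS data is generated is omitted here.

Two tables serve as examples of the DDL: one non-partitioned table and one partitioned table.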

create table catalog_sales

(

cs_sold_date_sk integer ,

cs_sold_time_sk integer ,

cs_ship_date_sk integer ,

cs_bill_customer_sk integer ,

cs_bill_cdemo_sk integer ,

cs_bill_hdemo_sk integer ,

cs_bill_addr_sk integer ,

cs_ship_customer_sk integer ,

cs_ship_cdemo_sk integer ,

cs_ship_hdemo_sk integer ,

cs_ship_addr_sk integer ,

cs_call_center_sk integer ,

cs_catalog_page_sk integer ,

cs_ship_mode_sk integer ,

cs_warehouse_sk integer ,

cs_item_sk integer not null,

cs_promo_sk integer ,

cs_order_number integer not null,

cs_quantity integer ,

cs_wholesale_cost decimal(7,2) ,

cs_list_price decimal(7,2) ,

cs_sales_price decimal(7,2) ,

cs_ext_discount_amt decimal(7,2) ,

cs_ext_sales_price decimal(7,2) ,

cs_ext_wholesale_cost decimal(7,2) ,

cs_ext_list_price decimal(7,2) ,

cs_ext_tax decimal(7,2) ,

cs_coupon_amt decimal(7,2) ,

cs_ext_ship_cost decimal(7,2) ,

cs_net_paid decimal(7,2) ,

cs_net_paid_inc_tax decimal(7,2) ,

cs_net_paid_inc_ship decimal(7,2) ,

cs_net_paid_inc_ship_tax decimal(7,2) ,

cs_net_profit decimal(7,2) ,

primary key (cs_item_sk, cs_order_number)

);

create table customer

(

c_customer_sk integer not null,

c_customer_id char(16) not null,

c_current_cdemo_sk integer ,

c_current_hdemo_sk integer ,

c_current_addr_sk integer ,

c_first_shipto_date_sk integer ,

c_first_sales_date_sk integer ,

c_salutation char(10) ,

c_first_name char(20) ,

c_last_name char(30) ,

c_preferred_cust_flag char(1) ,

c_birth_day integer ,

c_birth_month integer ,

c_birth_year integer ,

c_birth_country varchar(20) ,

c_login char(13) ,

c_email_address char(50) ,

c_last_review_date char(10) ,

primary key (c_customer_sk)

) partition by hash(c_customer_sk) partitions 8;

Data is loaded with the load command. The csv file only needs to be placed on one of the data nodes.

load data infile 'file://10.0.0.61/data/1/tpcds2g/customer.dat' into table customer fields terminated by '|';

load data infile 'file://10.0.0.61/data/1/tpcds2g/catalog_sales.dat' into table catalog_sales fields terminated by '|';

Then look at how the table's files are distributed, starting with the data files of the non-partitioned table in VC1.

Node 10.0.0.61:

[gbase@server061 ~]$ ls 10.0.0.*/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales*

10.0.0.61/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales_n1:

C00000.seg.1 C00004.seg.1 C00008.seg.1 C00012.seg.1 C00016.seg.1 C00020.seg.1 C00024.seg.1 C00028.seg.1 C00032.seg.1

C00001.seg.1 C00005.seg.1 C00009.seg.1 C00013.seg.1 C00017.seg.1 C00021.seg.1 C00025.seg.1 C00029.seg.1 C00033.seg.1

C00002.seg.1 C00006.seg.1 C00010.seg.1 C00014.seg.1 C00018.seg.1 C00022.seg.1 C00026.seg.1 C00030.seg.1

C00003.seg.1 C00007.seg.1 C00011.seg.1 C00015.seg.1 C00019.seg.1 C00023.seg.1 C00027.seg.1 C00031.seg.1

10.0.0.61/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales_n3:

C00000.seg.1 C00004.seg.1 C00008.seg.1 C00012.seg.1 C00016.seg.1 C00020.seg.1 C00024.seg.1 C00028.seg.1 C00032.seg.1

C00001.seg.1 C00005.seg.1 C00009.seg.1 C00013.seg.1 C00017.seg.1 C00021.seg.1 C00025.seg.1 C00029.seg.1 C00033.seg.1

C00002.seg.1 C00006.seg.1 C00010.seg.1 C00014.seg.1 C00018.seg.1 C00022.seg.1 C00026.seg.1 C00030.seg.1

C00003.seg.1 C00007.seg.1 C00011.seg.1 C00015.seg.1 C00019.seg.1 C00023.seg.1 C00027.seg.1 C00031.seg.1

Node 10.0.0.62:

[gbase@observer062 ~]$ ls 10.0.0.*/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales*

10.0.0.62/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales_n1:

C00000.seg.1 C00004.seg.1 C00008.seg.1 C00012.seg.1 C00016.seg.1 C00020.seg.1 C00024.seg.1 C00028.seg.1 C00032.seg.1

C00001.seg.1 C00005.seg.1 C00009.seg.1 C00013.seg.1 C00017.seg.1 C00021.seg.1 C00025.seg.1 C00029.seg.1 C00033.seg.1

C00002.seg.1 C00006.seg.1 C00010.seg.1 C00014.seg.1 C00018.seg.1 C00022.seg.1 C00026.seg.1 C00030.seg.1

C00003.seg.1 C00007.seg.1 C00011.seg.1 C00015.seg.1 C00019.seg.1 C00023.seg.1 C00027.seg.1 C00031.seg.1

10.0.0.62/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales_n2:

C00000.seg.1 C00004.seg.1 C00008.seg.1 C00012.seg.1 C00016.seg.1 C00020.seg.1 C00024.seg.1 C00028.seg.1 C00032.seg.1

C00001.seg.1 C00005.seg.1 C00009.seg.1 C00013.seg.1 C00017.seg.1 C00021.seg.1 C00025.seg.1 C00029.seg.1 C00033.seg.1

C00002.seg.1 C00006.seg.1 C00010.seg.1 C00014.seg.1 C00018.seg.1 C00022.seg.1 C00026.seg.1 C00030.seg.1

C00003.seg.1 C00007.seg.1 C00011.seg.1 C00015.seg.1 C00019.seg.1 C00023.seg.1 C00027.seg.1 C00031.seg.1

Node 10.0.0.63:

[gbase@server63 ~]$ ls 10.0.0.*/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales*

10.0.0.63/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales_n2:

C00000.seg.1 C00004.seg.1 C00008.seg.1 C00012.seg.1 C00016.seg.1 C00020.seg.1 C00024.seg.1 C00028.seg.1 C00032.seg.1

C00001.seg.1 C00005.seg.1 C00009.seg.1 C00013.seg.1 C00017.seg.1 C00021.seg.1 C00025.seg.1 C00029.seg.1 C00033.seg.1

C00002.seg.1 C00006.seg.1 C00010.seg.1 C00014.seg.1 C00018.seg.1 C00022.seg.1 C00026.seg.1 C00030.seg.1

C00003.seg.1 C00007.seg.1 C00011.seg.1 C00015.seg.1 C00019.seg.1 C00023.seg.1 C00027.seg.1 C00031.seg.1

10.0.0.63/gnode/userdata/gbase/tpcds/sys_tablespace/catalog_sales_n3:

C00000.seg.1 C00004.seg.1 C00008.seg.1 C00012.seg.1 C00016.seg.1 C00020.seg.1 C00024.seg.1 C00028.seg.1 C00032.seg.1

C00001.seg.1 C00005.seg.1 C00009.seg.1 C00013.seg.1 C00017.seg.1 C00021.seg.1 C00025.seg.1 C00029.seg.1 C00033.seg.1

C00002.seg.1 C00006.seg.1 C00010.seg.1 C00014.seg.1 C00018.seg.1 C00022.seg.1 C00026.seg.1 C00030.seg.1

C00003.seg.1 C00007.seg.1 C00011.seg.1 C00015.seg.1 C00019.seg.1 C00023.seg.1 C00027.seg.1 C00031.seg.1

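The files on the three nodes show that a non-partitioned table's data is split into multiple files, and each file has 1 corresponding backup on another node. A partitioned table is more complex: the files of each partition are split into multiple files.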

10.0.0.61/gnode/userdata/gbase/tpcds/sys_tablespace/customer_n3:

10.0.0.61/gnode/userdata/gbase/tpcds/sys_tablespace/customer_n3#P#p0:

C00000.seg.1 C00002.seg.1 C00004.seg.1 C00006.seg.1 C00008.seg.1 C00010.seg.1 C00012.seg.1 C00014.seg.1 C00017.seg.1

C00001.seg.1 C00003.seg.1 C00005.seg.1 C00007.seg.1 C00009.seg.1 C00011.seg.1 C00013.seg.1 C00016.seg.1

10.0.0.61/gnode/userdata/gbase/tpcds/sys_tablespace/customer_n3#P#p1:

C00000.seg.1 C00002.seg.1 C00004.seg.1 C00006.seg.1 C00008.seg.1 C00010.seg.1 C00012.seg.1 C00014.seg.1 C00017.seg.1

C00001.seg.1 C00003.seg.1 C00005.seg.1 C00007.seg.1 C00009.seg.1 C00011.seg.1 C00013.seg.1 C00016.seg.1
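The catalog_sales directory listings above can be cross-checked against the vc1 distribution. The Python sketch below assumes that the _nN suffix of a table directory is the segment ID N; that is an inference from the listings and from the suffix column of the metadata view, not something stated by GBase. The directory names and the distribution are copied from the outputs earlier in this article.

```python
# Table directories seen under each node's sys_tablespace for catalog_sales.
DIRS_ON_NODE = {
    "10.0.0.61": ["catalog_sales_n1", "catalog_sales_n3"],
    "10.0.0.62": ["catalog_sales_n1", "catalog_sales_n2"],
    "10.0.0.63": ["catalog_sales_n2", "catalog_sales_n3"],
}

# segment id -> (primary node, duplicate node) from "gcadmin showdistribution vc vc1"
VC1_DISTRIBUTION = {
    1: ("10.0.0.61", "10.0.0.62"),
    2: ("10.0.0.62", "10.0.0.63"),
    3: ("10.0.0.63", "10.0.0.61"),
}


def holders_from_dirs(dirs_on_node, table):
    """Map segment id -> set of nodes that have a '<table>_n<id>' directory."""
    prefix = table + "_n"
    holders = {}
    for node, dirs in dirs_on_node.items():
        for name in dirs:
            suffix = name[len(prefix):]
            # ASSUMPTION: the numeric suffix is the segment ID.
            if name.startswith(prefix) and suffix.isdigit():
                holders.setdefault(int(suffix), set()).add(node)
    return holders


def holders_from_distribution(distribution):
    """Map segment id -> set of nodes listed as primary or duplicate."""
    return {seg_id: set(nodes) for seg_id, nodes in distribution.items()}


on_disk = holders_from_dirs(DIRS_ON_NODE, "catalog_sales")
planned = holders_from_distribution(VC1_DISTRIBUTION)
for seg_id in sorted(planned):
    print(seg_id, sorted(on_disk.get(seg_id, [])), on_disk.get(seg_id) == planned[seg_id])
```

Under that assumption the directories on disk match the distribution exactly: each segment appears on its primary node and on its one duplicate node.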

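Overall, it feels as though GBase 8a hands the management of horizontally split data over to the file system. The operating system's limit on open file handles therefore has to be set very high.

Analyzing things this way becomes very tedious when there are many files; querying the metadata views in the database is more convenient.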

select table_vc, table_schema, table_name, suffix, host, round(table_data_size/1024/1024) table_data_size_mb, round(table_storage_size/1024/1024/1024) table_storage_size, data_percent

from information_schema.CLUSTER_TABLE_SEGMENTS a

where table_vc='vc1' and table_schema='tpcds' and table_name='catalog_sales'

order by suffix;

table_vc table_schema table_name suffix host table_data_size_mb table_storage_size data_percent

vc1 tpcds catalog_sales n1 10.0.0.61 71 0 33.3311%

vc1 tpcds catalog_sales n2 10.0.0.62 71 0 33.3329%

vc1 tpcds catalog_sales n3 10.0.0.63 71 0 33.3360%

select table_vc, table_schema, table_name, suffix, host, round(table_data_size/1024/1024) table_data_size_mb, round(table_storage_size/1024/1024/1024) table_storage_size, data_percent

from information_schema.CLUSTER_TABLE_SEGMENTS a

where table_vc='vc1' and table_schema='tpcds' and table_name='customer'

order by suffix;

table_vc table_schema table_name suffix host table_data_size_mb table_storage_size data_percent

vc1 tpcds customer n1 10.0.0.61 3 0 33.3344%

vc1 tpcds customer n2 10.0.0.62 3 0 33.3456%

vc1 tpcds customer n3 10.0.0.63 3 0 33.3200%

Now look at the data distribution in VC2.

select table_vc, table_schema, table_name, suffix, host, round(table_data_size/1024/1024) table_data_size_mb, round(table_storage_size/1024/1024/1024) table_storage_size, data_percent

from information_schema.CLUSTER_TABLE_SEGMENTS a

where table_vc='vc2' and table_schema='tpcds' and table_name='customer'

order by suffix;

table_vc table_schema table_name suffix host table_data_size_mb table_storage_size data_percent

vc2 tpcds customer n1 10.0.0.71 2 0 16.6812%

vc2 tpcds customer n2 10.0.0.77 2 0 16.6704%

vc2 tpcds customer n3 10.0.0.72 2 0 16.6545%

vc2 tpcds customer n4 10.0.0.78 2 0 16.6698%

vc2 tpcds customer n5 10.0.0.73 2 0 16.6855%

vc2 tpcds customer n6 10.0.0.79 2 0 16.6386%

select table_vc, table_schema, table_name, suffix, host, round(table_data_size/1024/1024) table_data_size_mb, round(table_storage_size/1024/1024/1024) table_storage_size, data_percent

from information_schema.CLUSTER_TABLE_SEGMENTS a

where table_vc='vc2' and table_schema='tpcds' and table_name='catalog_sales'

order by suffix;

table_vc table_schema table_name suffix host table_data_size_mb table_storage_size data_percent

vc2 tpcds catalog_sales n1 10.0.0.71 41 0 16.6641%

vc2 tpcds catalog_sales n2 10.0.0.77 41 0 16.6679%

vc2 tpcds catalog_sales n3 10.0.0.72 41 0 16.6656%

vc2 tpcds catalog_sales n4 10.0.0.78 41 0 16.6680%

vc2 tpcds catalog_sales n5 10.0.0.73 41 0 16.6665%

vc2 tpcds catalog_sales n6 10.0.0.79 41 0 16.6679%

Likewise, every node IP holds the primary copy of some of the table's segments, and also the data of two replica copies.
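How evenly the rows are spread can be quantified from the data_percent column. The small Python sketch below uses the catalog_sales values for vc2 copied from the query result above; the function name max_deviation and the choice of "largest distance from an even share" as the skew measure are assumptions made here for illustration.

```python
# data_percent per host for vc2.tpcds.catalog_sales, copied from the query result.
CATALOG_SALES_VC2 = {
    "10.0.0.71": 16.6641,
    "10.0.0.77": 16.6679,
    "10.0.0.72": 16.6656,
    "10.0.0.78": 16.6680,
    "10.0.0.73": 16.6665,
    "10.0.0.79": 16.6679,
}


def max_deviation(percent_by_host):
    """Largest distance, in percentage points, from a perfectly even share."""
    even_share = 100.0 / len(percent_by_host)
    return max(abs(p - even_share) for p in percent_by_host.values())


total = sum(CATALOG_SALES_VC2.values())
print("total percent:", round(total, 4))
print("max deviation from even share:", round(max_deviation(CATALOG_SALES_VC2), 4))
```

The shares add up to 100% and stay within a few thousandths of a percentage point of an even one-sixth split, so this table shows no meaningful skew across the six nodes.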

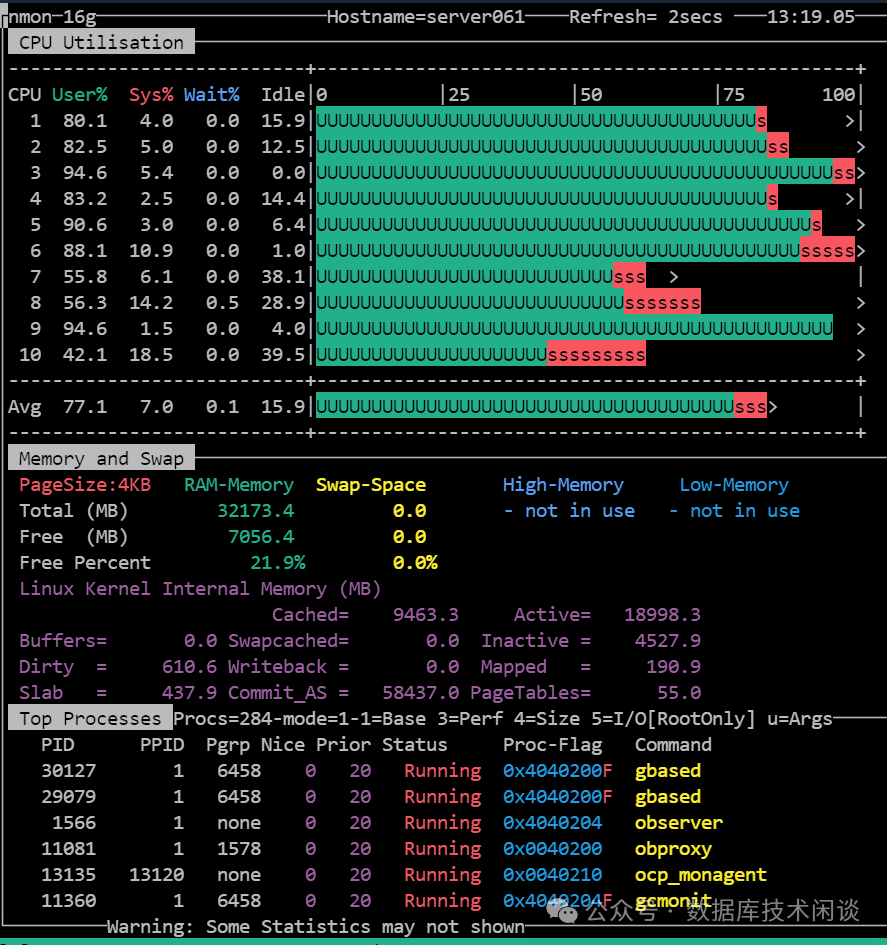

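After importing the TPCDS data I wanted to run it to see the performance. With virtual machines this weak, though, the performance numbers are not worth looking at. During the run I noticed that CPU utilization on the nodes was at times very high, which may simply reflect what a data warehouse product does. Data warehouse queries typically read and process data in large batches, and maximum performance is only possible if the host CPUs are fully used. This is a personal view and not necessarily correct.

GBase 8a operations

Operations also include online scale-out and scale-in, which I have not looked into yet. The focus here is backup and restore in GBase 8a.

Backup and restore in GBase 8a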

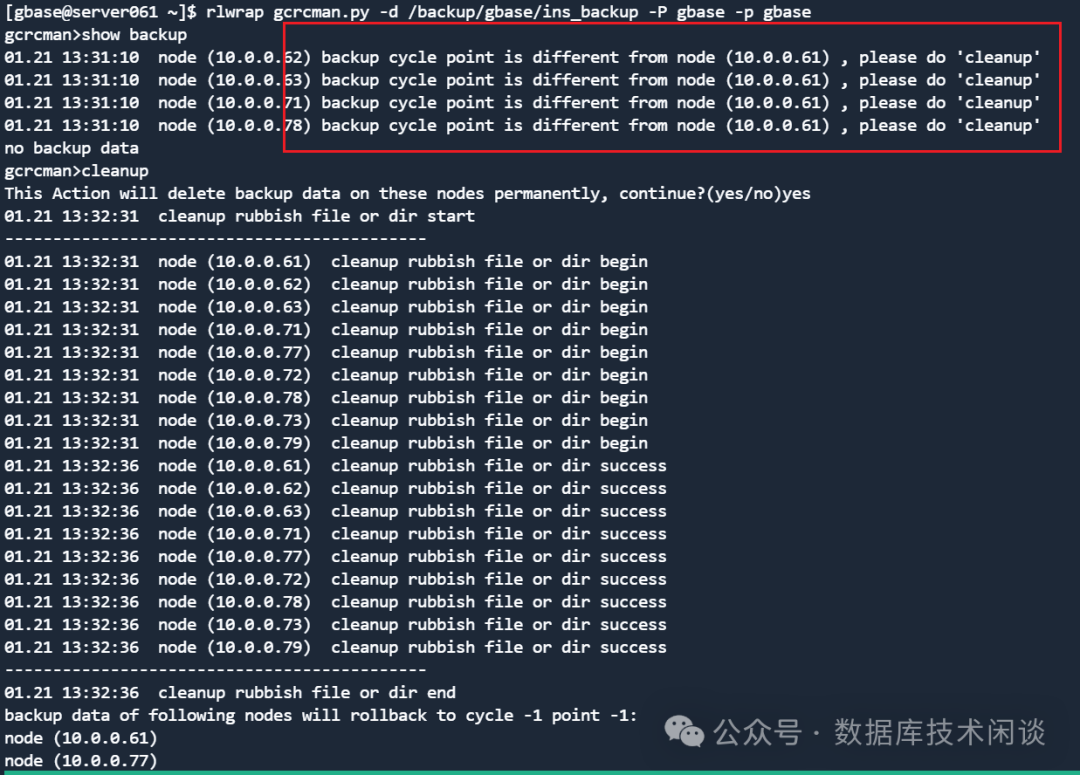

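The backup command is gcrcman. Its usage style resembles Oracle's RMAN, and it has inherited RMAN's weakness too: command-line editing is poor. Here I use it together with another command, rlwrap.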

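gcrcman.py supports backup and restore at three levels.

Cluster-level backup

A full or incremental backup of all data on all nodes of the cluster, regardless of VC. Before the backup, the 8a cluster must be set to the readonly state; after the backup completes, set the cluster back to the normal state for regular operation. Before a cluster-level restore, the 8a cluster must be set to the recovery state; after the restore completes, set the cluster back to the normal state for regular operation.

Command: backup level < 0 | 1 >

Database-level backup

A full or incremental backup of all tables in one database of the GBase 8a MPP Cluster to the specified path.

Command: backup database [vc_name].<database_name> level < 0 | 1 >

Table-level backup

A full backup of one table in the GBase 8a MPP Cluster to the specified path.

Command: backup table [vcname].. level < 0 | 1 >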

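GBase 8a backup supports the cluster level, the database level, and the table level. Between cluster and database there is also the VC, but VC-level backup is not supported yet. Each backup level you use needs its own dedicated directory; levels cannot share one.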

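Each level's backup strategy supports full backup (level 0) and incremental backup (level 1). I did not see support for transaction log backup. The commands are simple to use.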

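The backup directory should be the same path on all nodes wherever possible. An NFS directory or distributed storage suits this best, as is typical of backups for distributed database products. However, a GBase 8a backup appears to back up the data of every node, so if the data has multiple replicas, every replica is backed up. Backups are not compressed by default, though a compression level can be specified.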

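Because a GBase 8a backup covers the data of all nodes, a new full backup is needed after the cluster is scaled out. If the cluster is rebuilt, earlier backups cannot be used with the new cluster instance.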

To back up the whole cluster, first switch every VC in the cluster to read-only mode. This backup covers all VCs at the same time.

[gbase@server061 ~]$ gcadmin switchmode readonly vc vc2

========== switch cluster mode...

switch pre mode: [NORMAL]

switch mode to [READONLY]

switch after mode: [READONLY]

[gbase@server061 ~]$ rlwrap gcrcman.py -d /backup/gbase/ins_backup -P gbase -p gbase

gcrcman>backup level 0

The gcware not in 'READONLY' mode, please switch this mode by hand!

gcrcman>gcrcman Critical error:

[gbase@server061 ~]$ gcadmin switchmode readonly vc vc1

========== switch cluster mode...

switch pre mode: [NORMAL]

switch mode to [READONLY]

switch after mode: [READONLY]
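The transcript above shows that the backup is refused until every VC is read-only. The ordering can be sketched as a small Python helper that only builds the list of steps and executes nothing. The function name `cluster_backup_plan` is hypothetical, and the `gcadmin switchmode normal vc <name>` form used to switch back is my assumption, mirrored from the readonly command; it does not appear in the transcript.

```python
def cluster_backup_plan(vcs, backup_dir, level=0):
    """Return the ordered steps of a cluster-level backup as strings.

    Nothing is executed; this only spells out the order of operations.
    """
    if level not in (0, 1):
        raise ValueError("level must be 0 (full) or 1 (incremental)")

    # 1. Every VC must be read-only before gcrcman accepts a cluster backup.
    steps = [f"gcadmin switchmode readonly vc {vc}" for vc in vcs]

    # 2. Start gcrcman (wrapped in rlwrap for line editing) and run the backup
    #    at its prompt. User and password are the ones used in the transcript.
    steps.append(f"rlwrap gcrcman.py -d {backup_dir} -P gbase -p gbase")
    steps.append(f"gcrcman> backup level {level}")

    # 3. ASSUMPTION: switching back to normal mirrors the readonly command form.
    steps += [f"gcadmin switchmode normal vc {vc}" for vc in vcs]
    return steps


plan = cluster_backup_plan(["vc1", "vc2"], "/backup/gbase/ins_backup")
for step in plan:
    print(step)
```

Keeping the plan as data makes it easy to review the steps before anyone types them on a real cluster.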

Once every VC is read-only, full and incremental backups can be started normally. The commands are simple.

Full backup:

[gbase@server061 ~]$ rlwrap gcrcman.py -d /backup/gbase/ins_backup -P gbase -p gbase

gcrcman>backup level 0

01.21 13:36:22 BackUp start

--------------------------------------------

01.21 13:36:23 node (10.0.0.61) backup begin

01.21 13:36:23 node (10.0.0.62) backup begin

01.21 13:36:23 node (10.0.0.63) backup begin

01.21 13:36:23 node (10.0.0.71) backup begin

01.21 13:36:23 node (10.0.0.77) backup begin

01.21 13:36:23 node (10.0.0.72) backup begin

01.21 13:36:23 node (10.0.0.78) backup begin

01.21 13:36:23 node (10.0.0.73) backup begin

01.21 13:36:23 node (10.0.0.79) backup begin

01.21 13:38:09 node (10.0.0.61) backup success

01.21 13:38:09 node (10.0.0.62) backup success

01.21 13:38:09 node (10.0.0.63) backup success

01.21 13:38:09 node (10.0.0.71) backup success

01.21 13:38:09 node (10.0.0.77) backup success

01.21 13:38:09 node (10.0.0.72) backup success

01.21 13:38:09 node (10.0.0.78) backup success

01.21 13:38:09 node (10.0.0.73) backup success

01.21 13:38:09 node (10.0.0.79) backup success

--------------------------------------------

01.21 13:38:09 BackUp end

Incremental backup:

gcrcman>backup level 1

01.21 13:41:13 check cluster topology begin

01.21 13:41:13 node (10.0.0.61) check topology begin

01.21 13:41:16 node (10.0.0.61) check topology success

01.21 13:41:16 check cluster topology end

01.21 13:41:16 BackUp start

--------------------------------------------

01.21 13:41:16 node (10.0.0.61) backup begin

01.21 13:41:16 node (10.0.0.62) backup begin

01.21 13:41:16 node (10.0.0.63) backup begin

01.21 13:41:16 node (10.0.0.71) backup begin

01.21 13:41:16 node (10.0.0.77) backup begin

01.21 13:41:16 node (10.0.0.72) backup begin

01.21 13:41:16 node (10.0.0.78) backup begin

01.21 13:41:16 node (10.0.0.73) backup begin

01.21 13:41:16 node (10.0.0.79) backup begin

01.21 13:42:06 node (10.0.0.61) backup success

01.21 13:42:06 node (10.0.0.62) backup success

01.21 13:42:06 node (10.0.0.63) backup success

01.21 13:42:06 node (10.0.0.71) backup success

01.21 13:42:06 node (10.0.0.77) backup success

01.21 13:42:06 node (10.0.0.72) backup success

01.21 13:42:06 node (10.0.0.78) backup success

01.21 13:42:06 node (10.0.0.73) backup success

01.21 13:42:06 node (10.0.0.79) backup success

--------------------------------------------

01.21 13:42:07 BackUp end
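The begin and success lines in these transcripts are enough to work out how long each node took. The following Python sketch assumes the log line format is exactly what is shown above. The log timestamps carry no year, so the `year` parameter is supplied by the caller. The function name `node_durations` is my own.

```python
import re
from datetime import datetime

# Matches lines such as: 01.21 13:36:23 node (10.0.0.61) backup begin
LINE = re.compile(
    r"^(\d\d\.\d\d \d\d:\d\d:\d\d) node \(([\d.]+)\) backup (begin|success)$"
)


def node_durations(log_text, year=2025):
    """Return {host: seconds between 'backup begin' and 'backup success'}."""
    begun = {}
    durations = {}
    for line in log_text.splitlines():
        match = LINE.match(line.strip())
        if match is None:
            continue  # separators, "BackUp start/end", blank lines
        stamp, host, event = match.groups()
        when = datetime.strptime(f"{year}.{stamp}", "%Y.%m.%d %H:%M:%S")
        if event == "begin":
            begun[host] = when
        elif host in begun:
            durations[host] = (when - begun[host]).total_seconds()
    return durations


SAMPLE = """\
01.21 13:36:22 BackUp start
01.21 13:36:23 node (10.0.0.61) backup begin
01.21 13:36:23 node (10.0.0.62) backup begin
01.21 13:38:09 node (10.0.0.61) backup success
01.21 13:38:09 node (10.0.0.62) backup success
01.21 13:38:09 BackUp end
"""

durations = node_durations(SAMPLE)
print(durations)
```

For the full backup above, each node in the sample took 106 seconds.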

View the backups:

gcrcman>show backup

cycle point level time

0 0 0 2025-01-21 13:51:51

0 1 1 2025-01-21 13:54:12

gcrcman>
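If the `show backup` listing needs to be processed by a script, its four columns can be turned into records. This is a minimal Python sketch that assumes the layout is exactly as printed above. The names `BackupPoint` and `parse_show_backup` are hypothetical.

```python
from collections import namedtuple
from datetime import datetime

BackupPoint = namedtuple("BackupPoint", "cycle point level time")


def parse_show_backup(text):
    """Parse the 'cycle point level time' listing into BackupPoint records."""
    points = []
    for line in text.splitlines():
        parts = line.split()
        # Data rows have five tokens: cycle, point, level, date, time.
        if len(parts) != 5 or not parts[0].isdigit():
            continue  # header, prompt, or blank line
        cycle, point, level = (int(value) for value in parts[:3])
        when = datetime.strptime(" ".join(parts[3:]), "%Y-%m-%d %H:%M:%S")
        points.append(BackupPoint(cycle, point, level, when))
    return points


LISTING = """\
cycle point level time
0 0 0 2025-01-21 13:51:51
0 1 1 2025-01-21 13:54:12
gcrcman>
"""

points = parse_show_backup(LISTING)
for p in points:
    kind = "full" if p.level == 0 else "incremental"
    print(p.cycle, p.point, kind, p.time)
```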

Look at the backup directory. Below are the directories for each node.

--- /backup/gbase/ins_backup -----------------------------------------------------

560.7 MiB [#######################] 7,533 /GclusterData_coordinator2_node2

560.7 MiB [###################### ] 7,541 /GclusterData_coordinator1_node1

558.5 MiB [###################### ] 7,529 /GclusterData_coordinator3_node3

439.4 MiB [################## ] 8,015 /GclusterData_node2

439.4 MiB [################## ] 8,003 /GclusterData_node5

439.4 MiB [################## ] 8,001 /GclusterData_node3

439.3 MiB [################## ] 7,973 /GclusterData_node4

439.2 MiB [################## ] 7,971 /GclusterData_node6

439.2 MiB [################## ] 7,959 /GclusterData_node1

Below that, directories are named by the backup cycle number, and inside those are directories named by level.

--- /backup/gbase/ins_backup/GclusterData_node5/BackupCycle_0/gnode -----

/..

429.8 MiB [#######################] 6,799 /20250121135151.lv0

9.6 MiB [ ] 1,193 /20250121135412.lv1

4.0 KiB [ ] backuppoint.ini.B

4.0 KiB [ ] backuppoint.ini.A

4.0 KiB [ ] bk_lock
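The directory names in this listing appear to encode the backup time and level: 20250121135151.lv0 lines up with the 2025-01-21 13:51:51 level 0 entry in the `show backup` output. The Python sketch below decodes such a name under that assumption. The naming scheme is inferred from this one listing, not taken from GBase documentation, and `parse_backup_dir` is a name of my own.

```python
import re
from datetime import datetime

# A 14-digit timestamp, then ".lv" and the backup level; the leading "/" is
# how the directory listing marks directories, so it is optional here.
NAME = re.compile(r"^/?(\d{14})\.lv([01])$")


def parse_backup_dir(name):
    """Return (backup time, level) decoded from a name like '20250121135151.lv0'."""
    match = NAME.match(name)
    if match is None:
        raise ValueError(f"not a backup point directory: {name!r}")
    when = datetime.strptime(match.group(1), "%Y%m%d%H%M%S")
    return when, int(match.group(2))


full = parse_backup_dir("/20250121135151.lv0")
incremental = parse_backup_dir("/20250121135412.lv1")
print(full, incremental)
```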

The deepest directories are the per-table directories.

--- /backup/gbase/ins_backup/GclusterData_node5/BackupCycle_0/gnode/20250121135151.lv0/tpcds/data ---

/..

50.7 MiB [#######################] 46 /store_sales_n5

50.6 MiB [###################### ] 46 /store_sales_n4

50.6 MiB [###################### ] 46 /store_sales_n2

41.0 MiB [################## ] 68 /catalog_sales_n4

41.0 MiB [################## ] 68 /catalog_sales_n2

41.0 MiB [################## ] 68 /catalog_sales_n5

...

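Restoring the GBase 8a cluster requires switching the cluster state to recovery; restoring database and table backups does not. My guess is that a GBase 8a restore simply copies the table files from the backup to the corresponding nodes (decompressing first if the backup was compressed). It is not shown here.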

Summary

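Overall, as a distributed database with an MPP architecture, GBase 8a has a very clean architecture and is simple to deploy and use. The multi-cluster (VC) mode mainly serves to isolate business data. This isolation is not absolute: data can be accessed across VCs by qualifying it with the VC name. High availability in GBase 8a relies on daemon processes, and backup and restore are fairly simple features that are correspondingly simple to use.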