Click the card below to follow “慢慢学AIGC”

Image generated by DALL·E 3 with the prompt: A person and a machine are engaged in two-way communication through a microphone and speakers. The person, standing on the left, speaks into the microphone while the machine on the right, resembling a sleek, futuristic robot, responds through speakers. The setting is a modern, well-lit room with a professional atmosphere. The person looks focused and engaged, and the machine's digital display shows sound waves indicating speech.

Introduction to Voice Interaction Systems

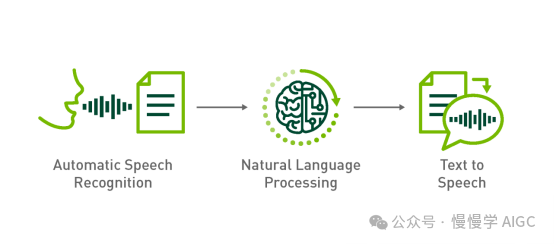

A voice interaction system consists of three stages: automatic speech recognition (ASR), natural language processing (NLP), and text-to-speech synthesis (TTS). ASR plays the role of the human auditory system, NLP the language areas of the brain, and TTS the vocal apparatus.

How to Build a Voice Chatbot

This article builds the voice chatbot entirely from open-source components.

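ASR uses OpenAI Whisper, which handles both Chinese and English. For more technical details, see the earlier article 《跟着 Whisper 学说正宗河南话》 ("Learning Authentic Henan Dialect with Whisper").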
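Whisper works on mono floating-point audio, while Gradio's microphone component hands over a `(sample_rate, numpy_array)` tuple, typically of 16-bit integers and sometimes with two channels. A minimal sketch of that conversion, assuming the Hugging Face `transformers` ASR pipeline used in the WebUI code later on (it accepts a `{"sampling_rate": ..., "raw": ...}` dict and resamples as needed); the helper name `prepare_for_whisper` is hypothetical:

```python
import numpy as np


def prepare_for_whisper(sr: int, y: np.ndarray) -> dict:
    """Turn a Gradio microphone tuple (sr, samples) into the dict form
    accepted by the transformers ASR pipeline (hypothetical helper)."""
    # Stereo recordings arrive as (n_samples, n_channels); average to mono.
    if y.ndim > 1:
        y = y.mean(axis=1)
    y = y.astype(np.float32)
    # Peak-normalize to [-1, 1]; leave all-zero (silent) input unchanged
    # instead of dividing by zero and producing NaNs.
    peak = float(np.max(np.abs(y))) if y.size else 0.0
    if peak > 0:
        y = y / peak
    return {"sampling_rate": sr, "raw": y}
```

The result can be passed straight to the pipeline, e.g. `transcriber(prepare_for_whisper(sr, y))["text"]`. The `peak > 0` guard matters because a completely silent recording would otherwise turn into an array of NaNs.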

NLP uses DeepSeek v2. Because we lack the GPU resources to run it locally, this step calls the cloud API.
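DeepSeek documents its API as OpenAI-compatible (base URL `https://api.deepseek.com`, model `deepseek-chat`), so the `openai` Python package (1.6.1 appears in the environment list below) can call it directly. The WebUI code in this article goes through ChatTTS's `llm_api` helper instead; the sketch below is an alternative that also keeps multi-turn history. The names `build_messages`, `ask_deepseek`, and `max_turns`, and the policy of keeping only the latest turns, are assumptions for illustration:

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def build_messages(history: List[Message], user_text: str,
                   system_prompt: Optional[str] = None,
                   max_turns: int = 10) -> List[Message]:
    """Assemble a chat-completions message list: optional system prompt,
    the latest `max_turns` exchanges from `history`, then the new question."""
    messages: List[Message] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    if max_turns > 0:
        # One turn = one user message + one assistant reply; keep the latest ones.
        messages.extend(history[-2 * max_turns:])
    messages.append({"role": "user", "content": user_text})
    return messages


def ask_deepseek(messages: List[Message], api_key: str,
                 temperature: float = 1.0) -> str:
    """Send the messages to DeepSeek's OpenAI-compatible endpoint."""
    from openai import OpenAI  # imported lazily so build_messages works without openai
    client = OpenAI(api_key=api_key, base_url="https://api.deepseek.com")
    resp = client.chat.completions.create(
        model="deepseek-chat", messages=messages, temperature=temperature)
    return resp.choices[0].message.content
```

After each reply, appending `{"role": "user", ...}` and `{"role": "assistant", ...}` to `history` gives the bot conversational memory; trimming to recent turns keeps the request from growing without bound.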

TTS uses ChatTTS, a text-to-speech model designed specifically for dialogue scenarios that supports both English and Chinese.
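ChatTTS returns the waveform as floating-point samples at 24 kHz (the WebUI code later wraps `wav[0]` with that rate). Gradio can play the `(24000, array)` tuple directly; to save a reply to disk or hand it to another player, one option is to encode it as 16-bit PCM WAV with the standard-library `wave` module. A sketch, assuming the samples lie roughly in [-1, 1]; `float_to_wav_bytes` is a hypothetical helper:

```python
import io
import wave

import numpy as np


def float_to_wav_bytes(samples: np.ndarray, sample_rate: int = 24000) -> bytes:
    """Encode a mono float waveform as 16-bit PCM WAV bytes (hypothetical helper)."""
    # ChatTTS output has shape (1, n); flatten to a 1-D array of samples.
    mono = np.asarray(samples, dtype=np.float32).flatten()
    # Clip to [-1, 1] and scale to little-endian signed 16-bit integers.
    pcm = (np.clip(mono, -1.0, 1.0) * 32767).astype("<i2")
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wf.setframerate(sample_rate)
        wf.writeframes(pcm.tobytes())
    return buf.getvalue()
```

Writing the bytes to a file (`open("reply.wav", "wb").write(...)`) gives a WAV that any standard player can open.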

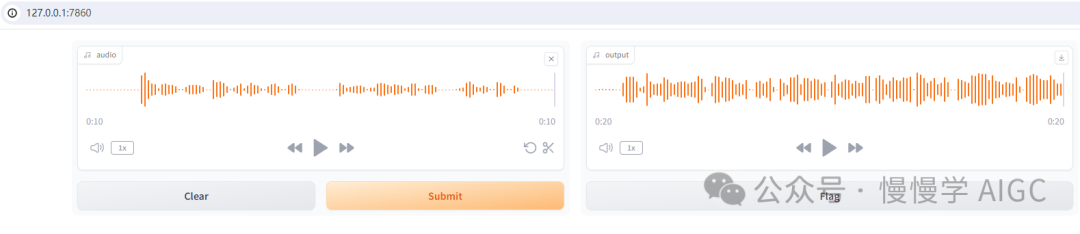

The interactive interface built with Gradio for this article is shown in the figure:

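You record audio through the system microphone, Whisper transcribes it to text, the text is sent to the DeepSeek v2 API, and ChatTTS synthesizes the reply into speech. Click play to hear the robot's voice.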
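The round trip just described is a composition of the three stages from the overview: ears (ASR), brain (NLP), and mouth (TTS). The sketch below makes that wiring explicit with stub stages, so the data flow can be checked without loading any model. `voice_turn` and the stubs are hypothetical; in the real demo the stages are Whisper, DeepSeek, and ChatTTS.

```python
from typing import Callable, List, Tuple

# Audio as (sample_rate, samples), mirroring the tuple Gradio passes around.
Audio = Tuple[int, List[float]]


def voice_turn(audio: Audio,
               asr: Callable[[Audio], str],
               nlp: Callable[[str], str],
               tts: Callable[[str], Audio]) -> Tuple[str, str, Audio]:
    """Run one conversational turn: listen (ASR), think (NLP), speak (TTS)."""
    heard = asr(audio)   # speech -> text ("ears")
    reply = nlp(heard)   # text -> reply text ("language areas of the brain")
    spoken = tts(reply)  # reply text -> speech ("vocal apparatus")
    return heard, reply, spoken


if __name__ == "__main__":
    # Stub stages standing in for Whisper, DeepSeek and ChatTTS.
    fake_asr = lambda audio: "what's the weather"
    fake_nlp = lambda text: "Sunny. You asked: " + text
    fake_tts = lambda text: (24000, [0.0] * len(text))
    heard, reply, (sr, wav) = voice_turn((16000, [0.0] * 16000),
                                         fake_asr, fake_nlp, fake_tts)
    print(reply, sr, len(wav))
```

Keeping each stage behind a plain function signature is what makes it easy to swap models later, for example a larger Whisper checkpoint, without touching the rest of the loop.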

Hardware: RTX 3060 with 12 GB of VRAM

Software environment (Miniconda3 + Python 3.8.19):

```
pip list
Package                       Version
----------------------------- --------------
absl-py                       2.0.0
accelerate                    0.25.0
aiofiles                      23.2.1
aiohttp                       3.8.6
aiosignal                     1.3.1
altair                        5.1.2
annotated-types               0.6.0
antlr4-python3-runtime        4.9.3
anyio                         4.0.0
argon2-cffi                   23.1.0
argon2-cffi-bindings          21.2.0
arrow                         1.3.0
asttokens                     2.4.1
astunparse                    1.6.3
async-lru                     2.0.4
async-timeout                 4.0.3
attrs                         23.1.0
audioread                     3.0.1
Babel                         2.15.0
backcall                      0.2.0
backports.zoneinfo            0.2.1
beautifulsoup4                4.12.3
bitarray                      2.8.2
bitsandbytes                  0.41.1
bleach                        6.1.0
blinker                       1.6.3
cachetools                    5.3.1
cdifflib                      1.2.6
certifi                       2023.7.22
cffi                          1.16.0
charset-normalizer            2.1.1
click                         8.1.7
colorama                      0.4.6
comm                          0.2.2
contourpy                     1.1.1
cpm-kernels                   1.0.11
cycler                        0.12.1
Cython                        3.0.3
debugpy                       1.8.1
decorator                     5.1.1
defusedxml                    0.7.1
distro                        1.9.0
dlib                          19.24.2
edge-tts                      6.1.8
editdistance                  0.8.1
einops                        0.8.0
einx                          0.2.2
encodec                       0.1.1
exceptiongroup                1.1.3
executing                     2.0.1
face-alignment                1.4.1
fairseq                       0.12.2
faiss-cpu                     1.7.4
fastapi                       0.108.0
fastjsonschema                2.19.1
ffmpeg                        1.4
ffmpeg-python                 0.2.0
ffmpy                         0.3.1
filelock                      3.12.4
Flask                         2.1.2
Flask-Cors                    3.0.10
flatbuffers                   23.5.26
fonttools                     4.43.1
fqdn                          1.5.1
frozendict                    2.4.4
frozenlist                    1.4.0
fsspec                        2023.9.2
future                        0.18.3
gast                          0.4.0
gitdb                         4.0.10
GitPython                     3.1.37
google-auth                   2.23.3
google-auth-oauthlib          1.0.0
google-pasta                  0.2.0
gradio                        4.32.2
gradio_client                 0.17.0
grpcio                        1.59.0
h11                           0.14.0
h5py                          3.10.0
httpcore                      0.18.0
httpx                         0.25.0
huggingface-hub               0.23.2
hydra-core                    1.0.7
idna                          3.4
imageio                       2.31.5
importlib-metadata            6.8.0
importlib-resources           6.1.0
inflect                       7.2.1
ipykernel                     6.29.4
ipython                       8.12.3
ipywidgets                    8.1.3
isoduration                   20.11.0
itsdangerous                  2.1.2
jedi                          0.19.1
Jinja2                        3.1.2
joblib                        1.3.2
json5                         0.9.25
jsonpointer                   2.4
jsonschema                    4.19.1
jsonschema-specifications     2023.7.1
jupyter                       1.0.0
jupyter_client                8.6.2
jupyter-console               6.6.3
jupyter_core                  5.7.2
jupyter-events                0.10.0
jupyter-lsp                   2.2.5
jupyter_server                2.14.1
jupyter_server_terminals      0.5.3
jupyterlab                    4.2.1
jupyterlab_pygments           0.3.0
jupyterlab_server             2.27.2
jupyterlab_widgets            3.0.11
keras                         2.13.1
kiwisolver                    1.4.5
langdetect                    1.0.9
latex2mathml                  3.77.0
lazy_loader                   0.3
libclang                      16.0.6
librosa                       0.9.1
llvmlite                      0.41.0
loguru                        0.7.2
lxml                          4.9.3
Markdown                      3.5
markdown-it-py                3.0.0
MarkupSafe                    2.1.3
matplotlib                    3.7.3
matplotlib-inline             0.1.7
mdtex2html                    1.2.0
mdurl                         0.1.2
mistune                       3.0.2
more-itertools                10.1.0
mpmath                        1.3.0
multidict                     6.0.4
nbclient                      0.10.0
nbconvert                     7.16.4
nbformat                      5.10.4
nemo_text_processing          1.0.2
nest-asyncio                  1.6.0
networkx                      3.1
notebook                      7.2.0
notebook_shim                 0.2.4
numba                         0.58.0
numpy                         1.22.4
oauthlib                      3.2.2
omegaconf                     2.3.0
onnx                          1.14.1
onnxoptimizer                 0.3.13
onnxsim                       0.4.33
openai                        1.6.1
openai-whisper                20230918
opencv-python                 4.8.1.78
opt-einsum                    3.3.0
orjson                        3.9.9
overrides                     7.7.0
packaging                     23.2
pandas                        2.0.3
pandocfilters                 1.5.1
parso                         0.8.4
peft                          0.7.1
pickleshare                   0.7.5
Pillow                        10.0.1
pip                           24.0
pkgutil_resolve_name          1.3.10
platformdirs                  3.11.0
playsound                     1.3.0
pooch                         1.7.0
portalocker                   2.8.2
praat-parselmouth             0.4.3
prometheus_client             0.20.0
prompt_toolkit                3.0.45
protobuf                      4.25.1
psutil                        5.9.5
pure-eval                     0.2.2
pyarrow                       13.0.0
pyasn1                        0.5.0
pyasn1-modules                0.3.0
PyAudio                       0.2.12
pycparser                     2.21
pydantic                      2.5.3
pydantic_core                 2.14.6
pydeck                        0.8.1b0
pydub                         0.25.1
Pygments                      2.16.1
pynini                        2.1.5
pynvml                        11.5.0
PyOpenGL                      3.1.7
pyparsing                     3.1.1
python-dateutil               2.8.2
python-json-logger            2.0.7
python-multipart              0.0.9
pytz                          2023.3.post1
PyWavelets                    1.4.1
pywin32                       306
pywinpty                      2.0.13
pyworld                       0.3.0
PyYAML                        6.0.1
pyzmq                         26.0.3
qtconsole                     5.5.2
QtPy                          2.4.1
referencing                   0.30.2
regex                         2023.10.3
requests                      2.32.3
requests-oauthlib             1.3.1
resampy                       0.4.2
rfc3339-validator             0.1.4
rfc3986-validator             0.1.1
rich                          13.6.0
rpds-py                       0.10.4
rsa                           4.9
ruff                          0.4.7
sacrebleu                     2.3.1
sacremoses                    0.1.1
safetensors                   0.4.3
scikit-image                  0.18.1
scikit-learn                  1.3.1
scikit-maad                   1.3.12
scipy                         1.7.3
semantic-version              2.10.0
Send2Trash                    1.8.3
sentencepiece                 0.1.99
setuptools                    69.5.1
shellingham                   1.5.4
six                           1.16.0
smmap                         5.0.1
sniffio                       1.3.0
sounddevice                   0.4.5
SoundFile                     0.10.3.post1
soupsieve                     2.5
sse-starlette                 1.8.2
stack-data                    0.6.3
starlette                     0.32.0.post1
streamlit                     1.29.0
sympy                         1.12
tabulate                      0.9.0
tenacity                      8.2.3
tensorboard                   2.13.0
tensorboard-data-server       0.7.1
tensorboardX                  2.6.2.2
tensorflow                    2.13.0
tensorflow-estimator          2.13.0
tensorflow-intel              2.13.0
tensorflow-io-gcs-filesystem  0.31.0
termcolor                     2.3.0
terminado                     0.18.1
threadpoolctl                 3.2.0
tifffile                      2023.7.10
tiktoken                      0.3.3
timm                          0.9.12
tinycss2                      1.3.0
tokenizers                    0.19.1
toml                          0.10.2
tomli                         2.0.1
tomlkit                       0.12.0
toolz                         0.12.0
torch                         2.1.0+cu121
torchaudio                    2.1.0+cu121
torchcrepe                    0.0.22
torchvision                   0.16.0+cu121
tornado                       6.3.3
tqdm                          4.63.0
traitlets                     5.14.3
transformers                  4.41.2
transformers-stream-generator 0.0.4
trimesh                       4.0.0
typeguard                     4.3.0
typer                         0.12.3
types-python-dateutil         2.9.0.20240316
typing_extensions             4.12.0
tzdata                        2023.3
tzlocal                       5.1
uri-template                  1.3.0
urllib3                       2.2.1
uvicorn                       0.25.0
validators                    0.22.0
vector_quantize_pytorch       1.14.8
vocos                         0.1.0
watchdog                      3.0.0
wcwidth                       0.2.13
webcolors                     1.13
webencodings                  0.5.1
websocket-client              1.8.0
websockets                    11.0.3
Werkzeug                      3.0.0
WeTextProcessing              0.1.12
wget                          3.2
wheel                         0.43.0
widgetsnbextension            4.0.11
win32-setctime                1.1.0
wrapt                         1.15.0
yarl                          1.9.2
zipp                          3.17.0
```
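The list mixes in many packages this demo never touches. A small check of the main packages the WebUI code depends on, using the standard-library `importlib.metadata` (available since Python 3.8), can help confirm that another machine matches. The `EXPECTED` versions are copied from the list above; `check_versions` is a hypothetical helper. ChatTTS itself does not appear in the list, so it is not checked here.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Optional

# Versions copied from the `pip list` output above.
EXPECTED = {
    "gradio": "4.32.2",
    "transformers": "4.41.2",
    "torch": "2.1.0+cu121",
    "numpy": "1.22.4",
}


def check_versions(expected: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Return the installed version of each package (None if missing)
    and print a note wherever it differs from the expected version."""
    found: Dict[str, Optional[str]] = {}
    for name, want in expected.items():
        try:
            have: Optional[str] = version(name)
        except PackageNotFoundError:
            have = None
        found[name] = have
        if have != want:
            print(f"{name}: expected {want}, found {have or 'not installed'}")
    return found


if __name__ == "__main__":
    check_versions(EXPECTED)
```

A mismatch is not necessarily fatal, but it is a good first thing to look at when the WebUI code fails to start.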

The WebUI code is below (it only demonstrates the basic functionality and is fairly bare-bones):

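```python
import gradio as gr
import numpy as np
from transformers import pipeline

import ChatTTS
from ChatTTS.experimental.llm import llm_api

# TTS: load ChatTTS
chat = ChatTTS.Chat()
chat.load_models(compile=False)  # set to True for faster inference

# NLP: DeepSeek v2 through the cloud API
API_KEY = 'sk-xxxxxxxx'  # apply for your own key at https://platform.deepseek.com/api_keys
client = llm_api(api_key=API_KEY,
                 base_url="https://api.deepseek.com",
                 model="deepseek-chat")

# ASR: Whisper through the transformers pipeline
transcriber = pipeline("automatic-speech-recognition", model="openai/whisper-base")


def transcribe(audio):
    # Gradio delivers microphone input as a (sample_rate, numpy array) tuple
    sr, y = audio
    if y.ndim > 1:  # down-mix stereo to mono
        y = y.mean(axis=1)
    y = y.astype(np.float32)
    peak = np.max(np.abs(y))
    if peak > 0:  # peak-normalize to [-1, 1]; skip silent input to avoid dividing by zero
        y /= peak

    # 1. Speech -> text (Whisper)
    user_question = transcriber({"sampling_rate": sr, "raw": y})["text"]
    # 2. Text -> reply (DeepSeek v2)
    text = client.call(user_question, prompt_version='deepseek')
    # 3. Reply -> speech (ChatTTS)
    wav = chat.infer(text, use_decoder=True)
    audio_data = np.array(wav[0]).flatten()
    sample_rate = 24000  # ChatTTS generates 24 kHz audio
    return (sample_rate, audio_data)


demo = gr.Interface(
    transcribe,
    gr.Audio(sources=["microphone"]),
    "audio",
)
demo.launch()
```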

Building on this, more features could be added:

The ASR step only uses openai/whisper-base here; the page could let the user choose among several models.

The DeepSeek v2 API is called with its default parameters; the page could expose extra parameters such as temperature and a system prompt.

ChatTTS could be given controls such as speaker identity, pauses, and laughter for richer output.

Streaming conversation could be supported, with natural interruption like GPT-4o.
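For the streaming item, one common approach (an assumption here, not something this article implements, and not a claim about how GPT-4o works) is to stream the LLM reply and send each sentence to TTS as soon as it is complete, so playback can begin before the whole reply has arrived. A minimal sentence splitter for streamed text; `sentences_from_stream` and the chosen punctuation set are hypothetical:

```python
import re
from typing import Iterable, Iterator

# Hypothetical sentence boundaries: Chinese/English end punctuation, newlines,
# and an ASCII period followed by whitespace (so "3.5" is not split).
_SENTENCE_END = re.compile(r"[。!?!?\n]|\.(?=\s)")


def sentences_from_stream(deltas: Iterable[str]) -> Iterator[str]:
    """Buffer streamed text fragments and yield each sentence once it is complete."""
    buf = ""
    for delta in deltas:
        buf += delta
        match = _SENTENCE_END.search(buf)
        while match is not None:
            sentence, buf = buf[: match.end()].strip(), buf[match.end():]
            if sentence:
                yield sentence
            match = _SENTENCE_END.search(buf)
    if buf.strip():
        yield buf.strip()  # flush the tail when the stream ends


if __name__ == "__main__":
    stream = ["你好", "!今天", "天气不错。It is 3.", "5 degrees. Bye"]
    for s in sentences_from_stream(stream):
        print(s)  # each sentence could be passed to chat.infer() here
```

With the `openai` client, passing `stream=True` to `client.chat.completions.create` yields chunks whose `choices[0].delta.content` carries each text fragment (it can be `None`, so filter those out before feeding the generator). Natural interruption would additionally require detecting user speech during playback, which this splitter does not address.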

If you run into trouble setting up the environment, send a private message to get the complete project.
