I. Environment

Oracle side: IP: 192.168.129.73

Character set: ZHS16GBK

PDB name: PDB_CRW

Version: 19.3.0.0.0

Schema to migrate: CRW

Create the PDB:

sqlplus / as sysdba

-- alter pluggable database PDB_CRW close immediate;

-- drop pluggable database PDB_CRW including datafiles;

show pdbs;

create pluggable database PDB_CRW

admin user pdb_admin identified by Crw_1234

file_name_convert = ('/u01/app/oradata/xxxxxx/pdbseed', '/u01/app/oradata/xxxxxx/pdb_crw')

default tablespace users

datafile '/u01/app/oradata/pdb_crw_crw.dbf' size 100m autoextend on

path_prefix = '/u01/app/oradata/pdb_crw'

storage (maxsize unlimited);

alter pluggable database PDB_CRW open;

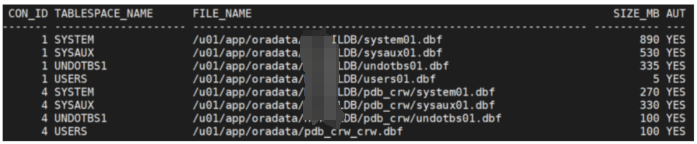

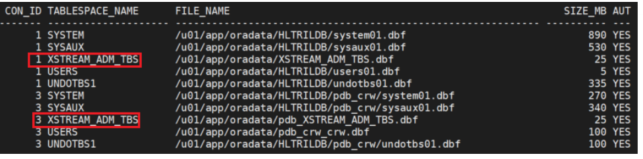
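`FILE_NAME_CONVERT` tells Oracle how to derive the new PDB's data file names from the seed's files. Below is a simplified Python model of that rewrite. It assumes plain substring replacement where the first matching pair wins; Oracle's actual matching rules cover more cases. The seed file names are hypothetical, and `xxxxxx` is the CDB directory name left elided in the statement above.

```python
def convert_file_name(name, pairs):
    """Rewrite name using the first (pattern, replacement) pair whose pattern occurs in it.

    Simplified model of FILE_NAME_CONVERT: substring replacement, first match wins.
    """
    for pattern, replacement in pairs:
        if pattern in name:
            return name.replace(pattern, replacement, 1)
    return name  # no pattern matched: the name is left unchanged


# The pair from the CREATE PLUGGABLE DATABASE statement above.
CONVERT = [("/u01/app/oradata/xxxxxx/pdbseed", "/u01/app/oradata/xxxxxx/pdb_crw")]

# Hypothetical seed file names, for illustration only.
for seed_file in ("/u01/app/oradata/xxxxxx/pdbseed/system01.dbf",
                  "/u01/app/oradata/xxxxxx/pdbseed/sysaux01.dbf"):
    print(seed_file, "->", convert_file_name(seed_file, CONVERT))
```

In this model, a file whose path matches no pattern keeps its name unchanged.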

Query the data files. As shown below, CON_ID=3 is PDB_CRW, and its default user tablespace is USERS:

col file_name for a60

select con_id,tablespace_name, file_name, bytes/1024/1024 as size_mb, autoextensible from cdb_data_files;

Create the application user:

alter session set container = PDB_CRW;

create user CRW identified by Crw_1234 default tablespace USERS quota unlimited on USERS;

grant connect,resource to CRW;

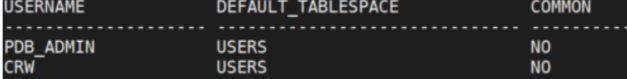

col username for a20

col common for a10

select username,default_tablespace,common from DBA_USERS where username in ('PDB_ADMIN','CRW');

As shown below, the users have been created:

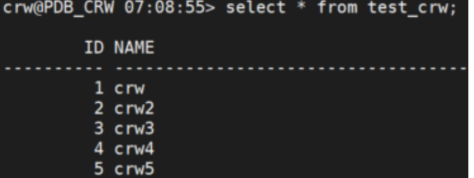

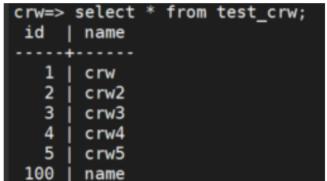

Log in as the application user and create a test table:

sqlplus 'crw/"Crw_1234"'@192.168.129.73:1521/PDB_CRW

create table TEST_CRW(id int primary key,name varchar(22));

insert into TEST_CRW values(1,'crw');

commit;

insert into TEST_CRW values(2,'crw2');

commit;

insert into TEST_CRW values(3,'crw3');

commit;

insert into TEST_CRW values(4,'crw4');

commit;

insert into TEST_CRW values(5,'crw5');

commit;

select * from TEST_CRW;

PanWei side:

IP: 192.168.129.221

Version: gsql (PanWeiDB_V2.0-S3.0.2_B02) compiled at 2024-11-05 00:49:54 commit a25acd9

For convenience in this test, allow connections from all hosts:

gs_guc reload -N all -I all -h "host all all 0.0.0.0/0 sha256"
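The entry `host all all 0.0.0.0/0 sha256` admits any IPv4 client. The Python sketch below models only the address-column check, which is useful if you later narrow the CIDR. It ignores the database, user and authentication-method columns.

```python
import ipaddress


def hba_allows(entry, client_ip):
    """Return True if the address column of a 'host DB USER CIDR METHOD' entry covers client_ip.

    Only the address is checked; database, user and auth method are ignored.
    """
    fields = entry.split()
    if len(fields) != 5 or fields[0] != "host":
        raise ValueError(f"unexpected entry: {entry!r}")
    network = ipaddress.ip_network(fields[3], strict=False)
    return ipaddress.ip_address(client_ip) in network


rule = "host all all 0.0.0.0/0 sha256"  # the entry added above
print(hba_allows(rule, "192.168.129.73"))  # True: 0.0.0.0/0 matches every IPv4 address
```

A narrower, hypothetical entry such as `host all all 192.168.129.0/24 sha256` would still admit the hosts in this walkthrough while rejecting other networks.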

Create the application database and user:

gsql -r

create database crw;

\c crw

-- drop schema crw cascade; -- if you drop and recreate the schema, redo the grants in the next step

create user crw identified by 'Crw_1234';

create schema crw authorization crw;

Create the DTP migration user:

create user panwei_dtp identified by 'Crw_1234';

--alter role panwei_dtp sysadmin;

\c crw

grant connect on database crw to panwei_dtp;

grant create on database crw to panwei_dtp;

grant all privileges on schema crw to panwei_dtp;

grant crw TO panwei_dtp;

DTP platform:

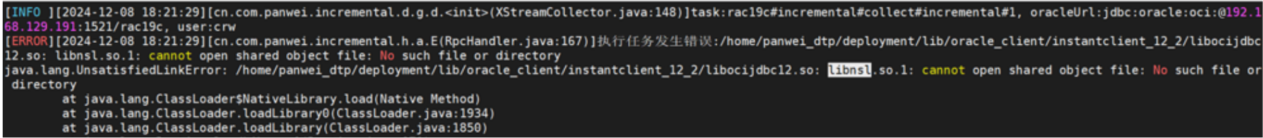

This DTP version reports an error about the location of libnsl. Creating the corresponding symbolic link resolves it:

ln -s /usr/lib64/libnsl.so.2 /usr/lib64/libnsl.so.1
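The dry-run helper below decides whether the link is needed before you run `ln -s` as root. It is a sketch that only prints the command. It assumes the libraries live in /usr/lib64, as in the command above, and that the missing file is libnsl.so.1, as the link name implies.

```python
import os


def libnsl_link_command(present, libdir="/usr/lib64"):
    """Given the file names present in libdir, return the ln -s command to run, or None."""
    if "libnsl.so.1" in present:
        return None  # the file the link would create already exists
    if "libnsl.so.2" not in present:
        return None  # nothing to link to; this workaround does not apply
    return f"ln -s {libdir}/libnsl.so.2 {libdir}/libnsl.so.1"


if __name__ == "__main__":
    libdir = "/usr/lib64"
    present = set(os.listdir(libdir)) if os.path.isdir(libdir) else set()
    print(libnsl_link_command(present, libdir) or "no link needed (or libnsl.so.2 is missing)")
```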

Note: check the time (or NTP) on every machine and make sure all clocks agree.
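One way to compare clocks is to collect `date +%s` from every machine at about the same moment and compute the spread. The sketch below does only the comparison and leaves out the collection step (ssh, etc.). The host labels, the readings and the 1-second tolerance are all hypothetical.

```python
def clock_skew(readings):
    """Return (spread_seconds, earliest_host, latest_host) for {host: epoch_seconds}."""
    earliest = min(readings, key=readings.get)
    latest = max(readings, key=readings.get)
    return readings[latest] - readings[earliest], earliest, latest


# Hypothetical readings of `date +%s` taken on each machine at about the same moment.
readings = {"oracle": 1000.0, "panwei": 1000.4, "dtp": 1002.0}
spread, early, late = clock_skew(readings)
TOLERANCE = 1.0  # hypothetical tolerance in seconds
if spread > TOLERANCE:
    print(f"clocks differ by {spread:.1f}s ({early} behind {late}); check NTP")
```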

II. Detailed Steps

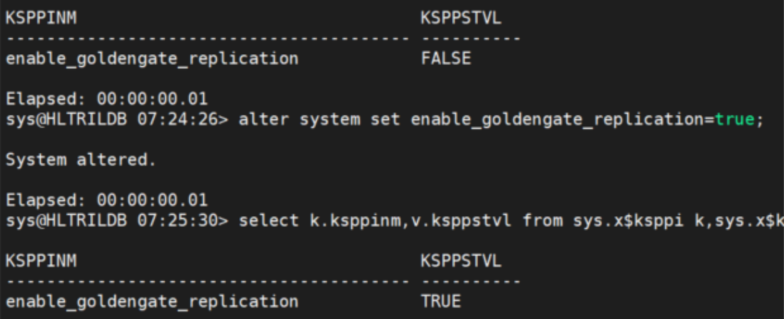

1. Enable XStream

$ sqlplus / as sysdba

-- Check the current value

col ksppinm for a40

col ksppstvl for a10

select k.ksppinm,v.ksppstvl from sys.x$ksppi k,sys.x$ksppsv v where k.indx=v.indx and upper(k.ksppinm) = 'ENABLE_GOLDENGATE_REPLICATION';

-- Enable

alter system set enable_goldengate_replication=true;

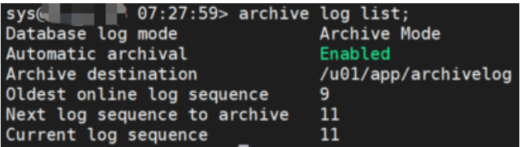

2. Archiving

$ sqlplus / as sysdba

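-- Check whether archiving is enabled:

archive log list;

-- Archived logs usually go to the fast recovery area:

show parameter db_recovery_file_dest;

-- If the fast recovery area is not used, check the custom destination:

show parameter log_archive_dest;

-- Note: PDBs inherit the CDB's archiving settings; no separate configuration is needed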

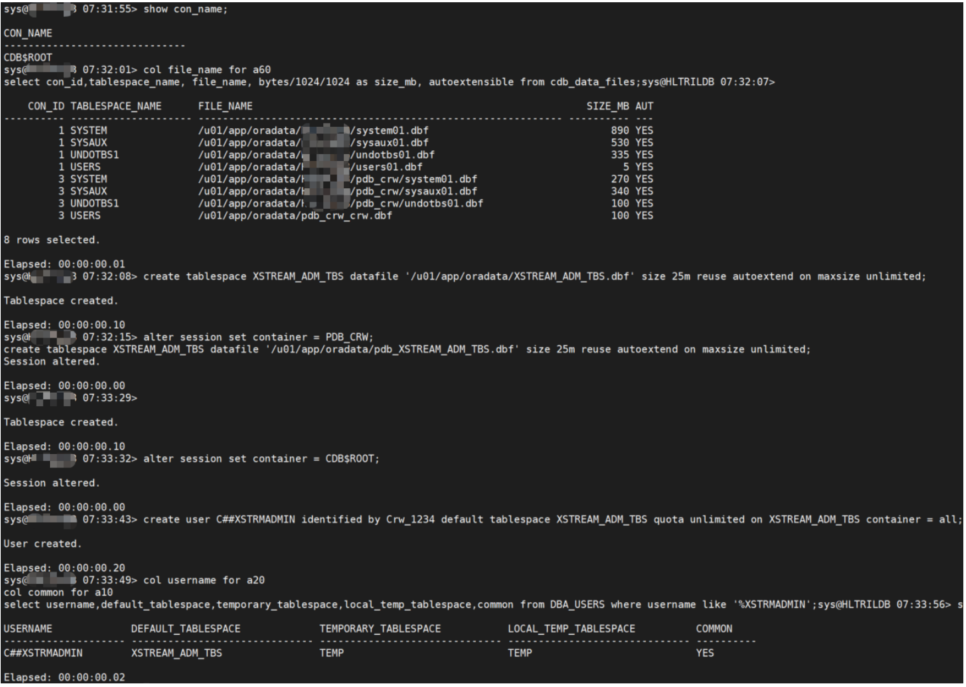
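If you script this check, the `archive log list` output can be parsed into label/value pairs. The Python sketch below assumes labels and values are separated by runs of two or more spaces, as in the SQL*Plus layout. It treats "Archive Mode" as enabled; "No Archive Mode" is what SQL*Plus reports when archiving is off. The sequence numbers in the sample are made up.

```python
import re

# Illustrative output of `archive log list` (sequence numbers are made up).
SAMPLE = """Database log mode              Archive Mode
Automatic archival             Enabled
Archive destination            USE_DB_RECOVERY_FILE_DEST
Oldest online log sequence     10
Next log sequence to archive   12
Current log sequence           12"""


def parse_archive_log_list(output):
    """Split each line on the first run of 2+ spaces into a {label: value} dict."""
    info = {}
    for line in output.splitlines():
        parts = re.split(r"\s{2,}", line.strip(), maxsplit=1)
        if len(parts) == 2:
            info[parts[0]] = parts[1]
    return info


def archiving_enabled(output):
    # "No Archive Mode" is reported when archiving is off, so compare exactly.
    return parse_archive_log_list(output).get("Database log mode") == "Archive Mode"


print(archiving_enabled(SAMPLE))
```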

3. Create the XStream administrators

3.1 Create the XStream administrator common user

Common users, whose names start with C##, can be used in the CDB and in all PDBs:

$ sqlplus / as sysdba

-- Show the name of the current container:

show con_name;

col file_name for a60

select con_id,tablespace_name, file_name, bytes/1024/1024 as size_mb, autoextensible from cdb_data_files;

-- Queried at the CDB level, this shows all common tablespaces plus each PDB's local tablespaces; con_id=1 (CDB$ROOT) marks the CDB-level tablespaces.

create tablespace XSTREAM_ADM_TBS datafile '/u01/app/oradata/XSTREAM_ADM_TBS.dbf' size 25m reuse autoextend on maxsize unlimited;

-- Create the XSTREAM_ADM_TBS tablespace at the CDB level

alter session set container = PDB_CRW;

create tablespace XSTREAM_ADM_TBS datafile '/u01/app/oradata/pdb_XSTREAM_ADM_TBS.dbf' size 25m reuse autoextend on maxsize unlimited;

-- Create the XSTREAM_ADM_TBS tablespace at the PDB level. Without it, creating the common user below fails with: error encountered when processing the current ddl statement in pluggable database PDB_CRW

alter session set container = CDB$ROOT;

create user C##XSTRMADMIN identified by Crw_1234 default tablespace XSTREAM_ADM_TBS quota unlimited on XSTREAM_ADM_TBS container = all;

-- If there are PDBs other than PDB_CRW, create the tablespace in each of them too

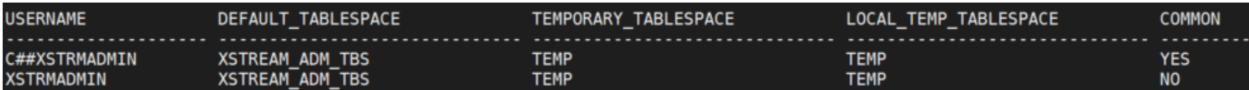

col username for a20

col common for a10

select username,default_tablespace,temporary_tablespace,local_temp_tablespace,common from DBA_USERS where username like '%XSTRMADMIN';

3.2 Create the XStream administrator PDB user

alter session set container = PDB_CRW;

create user XSTRMADMIN identified by Crw_1234 default tablespace XSTREAM_ADM_TBS quota unlimited on XSTREAM_ADM_TBS;
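The two administrators follow Oracle's naming rule for multitenant users. A common user (`container = all`) must carry the common-user prefix, while a local user created inside a PDB must not. The Python sketch below encodes that rule. It assumes the default prefix `C##`, since the prefix is configurable through the COMMON_USER_PREFIX parameter.

```python
COMMON_USER_PREFIX = "C##"  # assumed default value of the COMMON_USER_PREFIX parameter


def user_name_problem(name, container_all):
    """Return a description of the naming problem, or None if the name fits the user type."""
    has_prefix = name.upper().startswith(COMMON_USER_PREFIX)
    if container_all and not has_prefix:
        return f"common user {name} must start with {COMMON_USER_PREFIX}"
    if not container_all and has_prefix:
        return f"local user {name} must not start with {COMMON_USER_PREFIX}"
    return None


print(user_name_problem("C##XSTRMADMIN", container_all=True))  # None: valid common user
print(user_name_problem("XSTRMADMIN", container_all=False))    # None: valid local user
```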

4. Grant privileges to the XStream administrators

4.1 Grant privileges to the XStream administrator common user:

sqlplus / as sysdba

grant create session to C##XSTRMADMIN;

begin

dbms_xstream_auth.grant_admin_privilege(

grantee =>'C##XSTRMADMIN',

privilege_type => 'CAPTURE',

grant_select_privileges => TRUE,

container=> 'ALL'

);

end;

/

4.2 Grant privileges to the XStream administrator PDB user:

alter session set container = PDB_CRW;

grant create session to XSTRMADMIN;

begin

dbms_xstream_auth.grant_admin_privilege(

grantee =>'XSTRMADMIN',

privilege_type => 'CAPTURE',

grant_select_privileges => TRUE

);

end;

/

5. Grant privileges to the xstrmadmin data source user

alter session set container = PDB_CRW;

grant create session to xstrmadmin;

grant select on v_$database to xstrmadmin;

grant flashback any table to xstrmadmin;

grant select any table to xstrmadmin;

grant lock any table to xstrmadmin;

grant select_catalog_role to xstrmadmin;

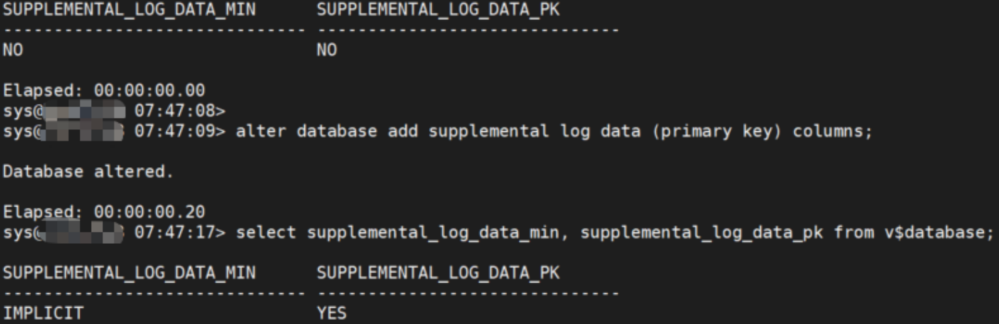

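6. Configure logging parameters

Supplemental logging enabled at the CDB level applies to the whole CDB and all PDBs.

sqlplus / as sysdba

-- Check whether supplemental logging is enabled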

col supplemental_log_data_min for a30

col supplemental_log_data_pk for a30

select supplemental_log_data_min, supplemental_log_data_pk from v$database;

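-- Enable primary key supplemental logging (this also implicitly enables minimal supplemental logging)

alter database add supplemental log data (primary key) columns;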

--alter database drop supplemental log data (primary key) columns;

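7. Create the incremental prerequisite table on PanWei

-- Run the following before starting the full migration task. Otherwise the incremental task fails because it cannot look up when the full migration finished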

\c crw

drop table if exists public.kafkaoffset;

create table public.kafkaoffset (

jobid varchar (128) primary key,

topic varchar (64),

lastoffset bigint,

lastsuboffset bigint,

last_scn_number bigint,

scnnumber bigint,

transaction_id text,

applytime timestamp,

updatetime timestamp);

grant select,insert,update,delete on public.kafkaoffset to panwei_dtp;
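The table keys its checkpoint columns by `jobid`. How DTP actually reads and writes this table is not described here. The SQLite sketch below only illustrates the table's shape: one row per job, overwritten at each checkpoint. The write pattern, the `save_offset` helper and the job/topic values are hypothetical. SQLite is used purely as a local model and is not PanWei.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Same columns as public.kafkaoffset above.
conn.execute("""
    create table kafkaoffset (
        jobid varchar(128) primary key,
        topic varchar(64),
        lastoffset bigint,
        lastsuboffset bigint,
        last_scn_number bigint,
        scnnumber bigint,
        transaction_id text,
        applytime timestamp,
        updatetime timestamp)""")


def save_offset(conn, jobid, topic, offset, scn):
    # Hypothetical checkpoint write: the primary key on jobid keeps one row per job.
    # "insert or replace" deletes the old row, so columns not listed here become NULL.
    conn.execute(
        "insert or replace into kafkaoffset (jobid, topic, lastoffset, scnnumber, updatetime) "
        "values (?, ?, ?, ?, datetime('now'))",
        (jobid, topic, offset, scn),
    )


save_offset(conn, "job1", "topic1", 10, 2175627)
save_offset(conn, "job1", "topic1", 42, 2175700)
print(conn.execute("select jobid, lastoffset, scnnumber from kafkaoffset").fetchall())
```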

8. Grant privileges to the Oracle users

Both data source users, c##xstrmadmin and xstrmadmin, need the following grants:

sqlplus / as sysdba

grant connect to c##xstrmadmin;

grant select any table to c##xstrmadmin;

grant select any dictionary to c##xstrmadmin;

grant select_catalog_role to c##xstrmadmin;

grant select any sequence to c##xstrmadmin;

grant select on system.logmnrc_gtlo to c##xstrmadmin;

grant select on system.logmnrc_dbname_uid_map to c##xstrmadmin;

grant execute on dbms_metadata to c##xstrmadmin;

grant execute on dbms_xstream_adm to c##xstrmadmin;

grant execute on dbms_capture_adm to c##xstrmadmin;

grant alter any trigger to c##xstrmadmin;

alter session set container = PDB_CRW;

grant connect to xstrmadmin;

grant select any table to xstrmadmin;

grant select any dictionary to xstrmadmin;

grant select_catalog_role to xstrmadmin;

grant select any sequence to xstrmadmin;

grant select on system.logmnrc_gtlo to xstrmadmin;

grant select on system.logmnrc_dbname_uid_map to xstrmadmin;

grant execute on dbms_metadata to xstrmadmin;

grant execute on dbms_xstream_adm to xstrmadmin;

grant execute on dbms_capture_adm to xstrmadmin;

grant alter any trigger to xstrmadmin;

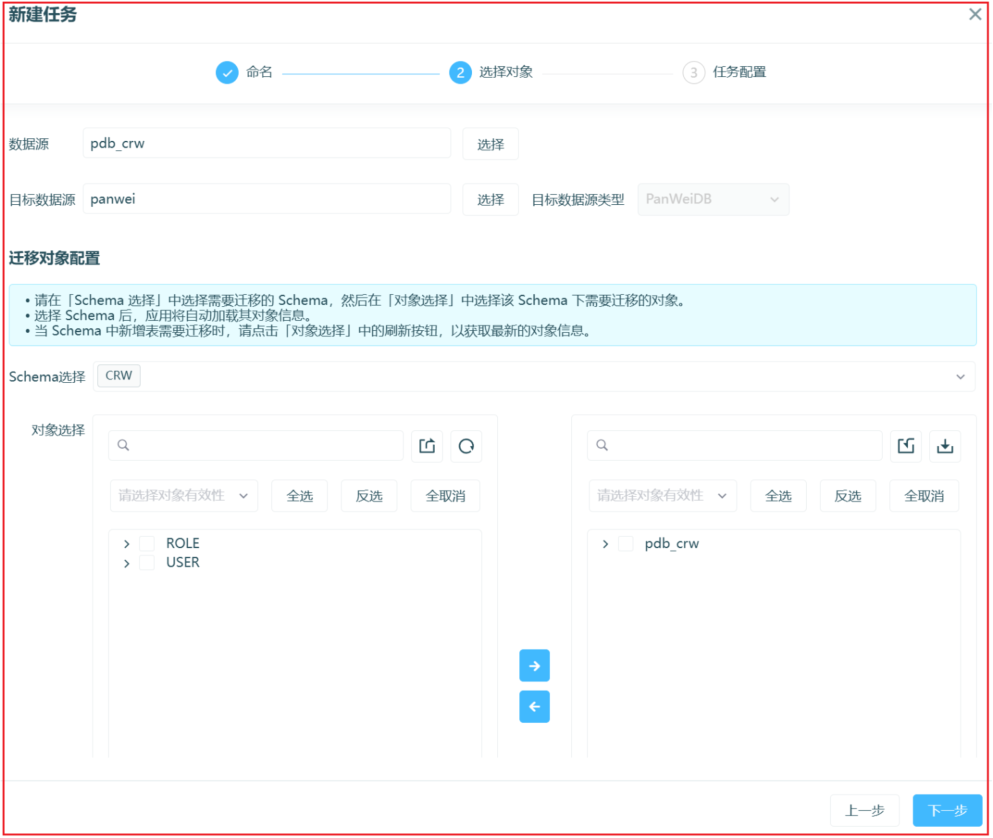
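The two grant lists above are identical apart from the grantee. The Python sketch below generates both from a single list so they cannot drift apart. It only produces the script text, in the same order as above; you still run the output in SQL*Plus as sysdba.

```python
GRANTS = [
    "grant connect to {u};",
    "grant select any table to {u};",
    "grant select any dictionary to {u};",
    "grant select_catalog_role to {u};",
    "grant select any sequence to {u};",
    "grant select on system.logmnrc_gtlo to {u};",
    "grant select on system.logmnrc_dbname_uid_map to {u};",
    "grant execute on dbms_metadata to {u};",
    "grant execute on dbms_xstream_adm to {u};",
    "grant execute on dbms_capture_adm to {u};",
    "grant alter any trigger to {u};",
]


def grant_script():
    """Common user grants in CDB$ROOT, then the same grants for the local user in PDB_CRW."""
    lines = ["-- run as sysdba, starting in CDB$ROOT"]
    lines += [g.format(u="c##xstrmadmin") for g in GRANTS]
    lines.append("alter session set container = PDB_CRW;")
    lines += [g.format(u="xstrmadmin") for g in GRANTS]
    return lines


print("\n".join(grant_script()))
```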

III. DTP Platform Operations

1. Add the Oracle CDB and PDB data sources:

After the connection test passes, set “Migrate PDB” to “Yes”.

Add the PanWei data source:

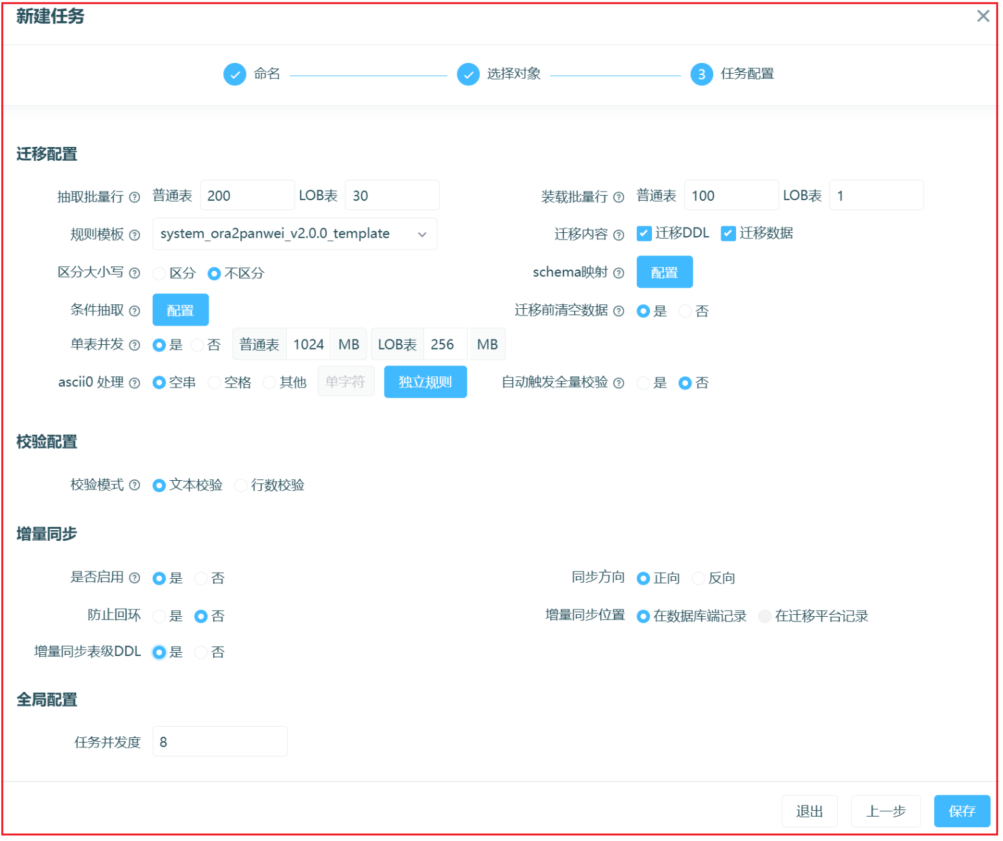

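2. Create the migration task:

3. Preparation before the full + incremental task

Before running the Oracle full + incremental task, perform the following in the CDB: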

sqlplus / as sysdba

-- Check for open transactions first:

select inst_id,start_time,status,used_urec,ses_addr from gv$transaction;

ALTER SESSION SET CONTAINER = CDB$ROOT;

-- Extract the LogMiner data dictionary to the redo log:

exec dbms_capture_adm.build();

begin

dbms_capture_adm.prepare_schema_instantiation(

schema_name => 'CRW',

supplemental_logging => 'KEYS',

container => 'PDB_CRW'

);

end;

/
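When you review the `gv$transaction` result before the build, it helps to spot transactions that have been open for a long time. The Python sketch below filters rows by age. It assumes START_TIME comes back in V$TRANSACTION's `MM/DD/YY HH24:MI:SS` string format and keeps only three of the selected columns. The 10-minute threshold and the sample rows are hypothetical.

```python
from datetime import datetime, timedelta

# V$TRANSACTION.START_TIME is a string in MM/DD/YY HH24:MI:SS format.
START_TIME_FORMAT = "%m/%d/%y %H:%M:%S"


def long_running(rows, now, max_age=timedelta(minutes=10)):
    """Return the (inst_id, start_time, status) rows that have been open longer than max_age."""
    old = []
    for inst_id, start_time, status in rows:
        started = datetime.strptime(start_time, START_TIME_FORMAT)
        if now - started > max_age:
            old.append((inst_id, start_time, status))
    return old


# Hypothetical rows, as if copied from the gv$transaction query above.
rows = [(1, "02/28/25 18:30:00", "ACTIVE"), (1, "02/28/25 18:58:00", "ACTIVE")]
print(long_running(rows, now=datetime(2025, 2, 28, 19, 0, 0)))
```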

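4. Run the full migration task (complete the previous step first)

DTP full migration log output. The error 'schema "crw" already exists' appears because the crw schema was created in advance on the PanWei side. In this run, the task carried on, reported 0 failed objects and read all 5 rows of TEST_CRW: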

[19:08:09:259] [INFO] - dts.schedule.IncrementalServiceHeartbeat.heartbeat(IncrementalServiceHeartbeat.java:61) - 心跳检测启动...

[19:08:09:261] [INFO] - dts.helper.u.b(IncrementalTaskHelper.java:53) - Invoke the incremental service remotely! request: host:[192.168.129.225] port:[31005] body:[{tasktype=heartbeat, taskid=heartbeat}]

[19:08:09:263] [INFO] - dts.helper.u.b(IncrementalTaskHelper.java:66) - Invoke the incremental service remotely success! response: [{msg=success, result=ok, code=0}]

[19:08:10:559] [INFO] - dts.module.SystemConfig.refreshDataIsolationLevel(SystemConfig.java:432) - 获取数据隔离级别最新配置:none

[19:08:10:987] [INFO] - dts.module.Job.run(Job.java:4150) - jobId:cccccccccccccc#migration job begin!

[19:08:10:995] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:08:10:996] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:08:11:000] [INFO] - dts.module.Job.run(Job.java:4400) - jobId:cccccccccccccc#migration begin run!

[19:08:11:001] [INFO] - dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:93) - jobId:cccccccccccccc#migration#1740740891,operate:migration begin to run.

[19:08:11:002] [INFO] - dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:100) - test log, jobId:cccccccccccccc#migration#1740740891, jobType:migration

[19:08:11:005] [INFO] - dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:110) - jobId:cccccccccccccc#migration#1740740891,operate:migration insert tb_run_jobinfo.

[19:08:11:010] [INFO] - dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:127) - jobId:cccccccccccccc#migration#1740740891,operate:migration insert tb_run_jobinfo success.

[19:08:11:010] [INFO] - dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:131) - jobId:cccccccccccccc#migration#1740740891,operate:migration get dataSourceConf src&target.

[19:08:11:033] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:08:11:070] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:08:11:072] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:08:11:085] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:08:11:093] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:08:11:093] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:08:11:093] [INFO] - dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:136) - jobId:cccccccccccccc#migration#1740740891,operate:migration get dataSourceConf src&target success.

[19:08:11:093] [INFO] - dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:143) - jobId:[cccccccccccccc#migration#1740740891],operate:migration update tb_portal_job.

[19:08:11:099] [INFO] - dts.helper.w.n(JobInfoHelper.java:354) - update dts.tb_portal_job 1 row,jobName:cccccccccccccc,jobType:migration,

[19:08:11:099] [INFO] - dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:147) - jobId:cccccccccccccc#migration#1740740891,operate:migration update tb_portal_job success.

[19:08:11:119] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:08:11:144] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:08:11:147] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:08:11:157] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:08:11:166] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:08:11:166] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:08:11:169] [INFO] - cn.com.mt.businessHelper.jdbc.ConnectionPoolManager.initPool(ConnectionPoolManager.java:110) - dbypte is null ->org.panweidb.Driver,,,jdbc:panweidb://192.168.129.221:17700/crw?allowEncodingChanges=true&characterEncoding=UTF-8

[19:08:11:169] [INFO] - cn.com.mt.businessHelper.jdbc.ConnectionPoolManager.initPool(ConnectionPoolManager.java:205) - Init connection successed

[19:08:11:632] [INFO] - com.alibaba.druid.pool.DruidDataSource.init(DruidDataSource.java:889) - {dataSource-8} inited

[19:08:11:641] [INFO] - dts.taskconf.g.x(MigrateTaskConf.java:1095) - JobId:cccccccccccccc#migration#1740740891,parallel:8

[19:08:11:927] [INFO] - dts.helper.w.g(JobInfoHelper.java:366) - init process job:cccccccccccccc#migration#1740740891

[19:08:11:931] [INFO] - dts.helper.w.a(JobInfoHelper.java:407) - update process job:cccccccccccccc#migration#1740740891,object,total,batch=2

[19:08:11:933] [INFO] - dts.helper.w.a(JobInfoHelper.java:407) - update process job:cccccccccccccc#migration#1740740891,data,total,batch=1

[19:08:11:935] [INFO] - dts.helper.w.a(JobInfoHelper.java:407) - update process job:cccccccccccccc#migration#1740740891,prepare,handled,batch=1

[19:08:11:942] [INFO] - dts.task.h.l(MigrateTask.java:591) - taskId:cccccccccccccc#migration#1740740891, init migrate config info end!

[19:08:12:334] [INFO] - dts.task.h.run(MigrateTask.java:270) - taskId:cccccccccccccc#migration#1740740891, migrate schemalist:{pdb_crw=[CRW]}

[19:08:12:334] [INFO] - dts.task.h.a(MigrateTask.java:1372) - taskId:cccccccccccccc#migration#1740740891, migrate user begin!

[19:08:12:336] [INFO] - dts.task.h.a(MigrateTask.java:1375) - taskId:cccccccccccccc#migration#1740740891, migrate user finished,using=2, count=0

[19:08:12:339] [INFO] - dts.task.h.fO(MigrateTask.java:656) - 修改事件触发器完成: DISABLE

[19:08:12:353] [ERROR] - dts.helper.q.a(dtsHelper.java:3698) - Fail to execute sql

org.panweidb.util.PSQLException: ERROR: schema "crw" already exists

at org.panweidb.core.v3.QueryExecutorImpl.receiveErrorResponse(QueryExecutorImpl.java:2903) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.core.v3.QueryExecutorImpl.processResults(QueryExecutorImpl.java:2632) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.core.v3.QueryExecutorImpl.execute(QueryExecutorImpl.java:341) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.jdbc.PgStatement.runQueryExecutor(PgStatement.java:570) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.jdbc.PgStatement.executeInternal(PgStatement.java:547) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.jdbc.PgStatement.execute(PgStatement.java:405) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.jdbc.PgStatement.executeWithFlags(PgStatement.java:347) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.jdbc.PgStatement.executeCachedSql(PgStatement.java:333) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.jdbc.PgStatement.executeWithFlags(PgStatement.java:310) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at org.panweidb.jdbc.PgStatement.execute(PgStatement.java:306) ~[panweidb-jdbc-2.0_pw_2024041716.jar:?]

at com.alibaba.druid.pool.DruidPooledStatement.execute(DruidPooledStatement.java:497) ~[druid-1.1.3.jar:1.1.3]

at dts.helper.q.a(dtsHelper.java:3692) ~[classes!/:trunk]

at dts.task.h.fM(MigrateTask.java:103) ~[classes!/:trunk]

at dts.task.h.a(MigrateTask.java:395) ~[classes!/:trunk]

at dts.task.h.run(MigrateTask.java:275) ~[classes!/:trunk]

at dts.module.thread.MigrateRunnable.run(MigrateRunnable.java:229) ~[classes!/:trunk]

[19:08:12:355] [INFO] - dts.task.h.a(MigrateTask.java:1101) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, migrate object before data begin!

[19:08:12:355] [INFO] - dts.task.h.a(MigrateTask.java:1317) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, migrate table begin!

[19:08:12:356] [INFO] - dts.task.h.a(MigrateTask.java:1324) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, table count=1

[19:08:12:356] [INFO] - dts.transform.f.migrateTable(MigrateUsingSqlParser.java:123) - start migrate table ddl:tablecount=1

[19:08:12:537] [INFO] - dts.dbexport.o.cO(ExportTableInfo.java:137) - get table metaData

[19:08:12:537] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:163) - taskId:cccccccccccccc#migration#1740740891,getIdentityInfoMap start.

[19:08:12:553] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:166) - taskId:cccccccccccccc#migration#1740740891,getIdentityInfoMap end!using=16

[19:08:12:553] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:168) - taskId:cccccccccccccc#migration#1740740891,getTableExtendInfoMap,getObjectTables start.

[19:08:12:808] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:178) - taskId:cccccccccccccc#migration#1740740891,getTableExtendInfoMap,getObjectTables end!using=255

[19:08:12:808] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:184) - taskId:cccccccccccccc#migration#1740740891,getUniqueKeyInfoMap,getPrimaryKeyInfoMap start.

[19:08:12:808] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:192) - taskId:cccccccccccccc#migration#1740740891,getUniqueKeyInfoMap,getPrimaryKeyInfoMap end!using=0

[19:08:12:809] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:194) - taskId:cccccccccccccc#migration#1740740891,getTablePartitionInfoMap,getObjectTables start.

[19:08:12:809] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:202) - taskId:cccccccccccccc#migration#1740740891,getTablePartitionInfoMap,getObjectTables end!using=0

[19:08:12:809] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:211) - taskId:cccccccccccccc#migration#1740740891,get column info.

[19:08:12:809] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:52) - start task Oracle-export-metadata-get-columninfo!

[19:08:12:810] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:54) - taskId:cccccccccccccc#migration#1740740891 start to do spilt ...

[19:08:12:810] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:58) - taskId:cccccccccccccc#migration#1740740891 start to do schedule, there is 1 tasks, spilt to 1 groups!

[19:08:12:810] [INFO] - dts.thread.Container.c.jP(ObjectContainer.java:88) - taskId:cccccccccccccc#migration#1740740891,wait for thread:0

[19:08:13:350] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:60) - taskId:cccccccccccccc#migration#1740740891, do Oracle-export-metadata-get-columninfo end! total=1, using=540

[19:08:13:351] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:214) - taskId:cccccccccccccc#migration#1740740891,get column info using=542

[19:08:13:351] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:223) - taskId:cccccccccccccc#migration#1740740891,get table DDL begin!

[19:08:13:351] [INFO] - dts.ddlhandler.export.expression.table.i.h(OracleExportTableMetadataHandler.java:985) - 开始获取DDL

[19:08:13:351] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:52) - start task Oracle-get-table!

[19:08:13:352] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:54) - taskId:cccccccccccccc#migration#1740740891 start to do spilt ...

[19:08:13:352] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:58) - taskId:cccccccccccccc#migration#1740740891 start to do schedule, there is 1 tasks, spilt to 1 groups!

[19:08:16:382] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:60) - taskId:cccccccccccccc#migration#1740740891, do Oracle-get-table end! total=0, using=3030

[19:08:16:383] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:225) - taskId:cccccccccccccc#migration#1740740891,get table DDL end!using=3032

[19:08:16:383] [INFO] - dts.dbexport.o.cO(ExportTableInfo.java:146) - get table metaData using:3846ms

[19:08:16:384] [INFO] - dts.transform.f.migrateTable(MigrateUsingSqlParser.java:145) - 获取表DDL using:4028ms

[19:08:16:384] [INFO] - dts.thread.Container.b.start(ApplyContainer.java:48) - start task migrate-table-apply!

[19:08:16:384] [INFO] - dts.thread.Container.b.start(ApplyContainer.java:50) - taskId:cccccccccccccc#migration#1740740891 start to do spilt ...

[19:08:16:384] [INFO] - dts.thread.Container.b.start(ApplyContainer.java:54) - taskId:cccccccccccccc#migration#1740740891 start to do schedule, there is 1 tasks, spilt to 1 groups!

[19:08:16:384] [INFO] - dts.thread.Container.b.jP(ApplyContainer.java:86) - taskId:cccccccccccccc#migration#1740740891,wait for thread:0

[19:08:16:456] [INFO] - cn.com.mt.businessHelper.jdbc.ConnectionPoolManager.initPool(ConnectionPoolManager.java:110) - dbypte is null ->org.panweidb.Driver,,,jdbc:panweidb://192.168.129.221:17700/crw?allowEncodingChanges=true&characterEncoding=UTF-8

[19:08:16:456] [INFO] - cn.com.mt.businessHelper.jdbc.ConnectionPoolManager.initPool(ConnectionPoolManager.java:205) - Init connection successed

[19:08:16:909] [INFO] - com.alibaba.druid.pool.DruidDataSource.init(DruidDataSource.java:889) - {dataSource-9} inited

[19:08:16:953] [INFO] - dts.helper.w.a(JobInfoHelper.java:407) - update process job:cccccccccccccc#migration#1740740891,object,handled,batch=1

[19:08:16:958] [INFO] - dts.thread.Container.b.start(ApplyContainer.java:56) - taskId:cccccccccccccc#migration#1740740891, do migrate-table-apply end!successGroups=1,using=574

[19:08:16:959] [INFO] - dts.transform.f.migrateTable(MigrateUsingSqlParser.java:187) - migrate table ddl end!

[19:08:16:959] [INFO] - dts.task.h.a(MigrateTask.java:1336) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, migrate table finished,using=4604

[19:08:16:960] [INFO] - dts.task.h.a(MigrateTask.java:1125) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, migrate object before data end!

[19:08:16:960] [INFO] - dts.task.h.a(MigrateTask.java:1372) - taskId:cccccccccccccc#migration#1740740891, migrate role begin!

[19:08:16:963] [INFO] - dts.task.h.a(MigrateTask.java:1375) - taskId:cccccccccccccc#migration#1740740891, migrate role finished,using=3, count=0

[19:08:16:965] [INFO] - dts.task.h.u(MigrateTask.java:170) - 失败对象个数: 0

[19:08:16:967] [INFO] - dts.task.h.b(MigrateTask.java:436) - taskId:cccccccccccccc#migration#1740740891, migrate data begin.

[19:08:16:975] [INFO] - dts.datatransfer.data.e.bw(MigrateData.java:79) - taskId:cccccccccccccc#migration#1740740891, start migrate data.

[19:08:16:975] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:168) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, get MigrateTableInfo!

[19:08:16:978] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:182) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, get table conditions.

[19:08:16:979] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:193) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, export source table info.

[19:08:16:988] [INFO] - dts.dbexport.o.cO(ExportTableInfo.java:137) - get table metaData

[19:08:16:988] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:157) - taskId:cccccccccccccc#migration#1740740891,getTableSize start.

[19:08:17:449] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:160) - taskId:cccccccccccccc#migration#1740740891,getTableSize end!using=461

[19:08:17:450] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:163) - taskId:cccccccccccccc#migration#1740740891,getIdentityInfoMap start.

[19:08:17:451] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:166) - taskId:cccccccccccccc#migration#1740740891,getIdentityInfoMap end!using=1

[19:08:17:452] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:168) - taskId:cccccccccccccc#migration#1740740891,getTableExtendInfoMap,getObjectTables start.

[19:08:17:452] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:178) - taskId:cccccccccccccc#migration#1740740891,getTableExtendInfoMap,getObjectTables end!using=0

[19:08:17:452] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:184) - taskId:cccccccccccccc#migration#1740740891,getUniqueKeyInfoMap,getPrimaryKeyInfoMap start.

[19:08:17:452] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:192) - taskId:cccccccccccccc#migration#1740740891,getUniqueKeyInfoMap,getPrimaryKeyInfoMap end!using=0

[19:08:17:452] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:194) - taskId:cccccccccccccc#migration#1740740891,getTablePartitionInfoMap,getObjectTables start.

[19:08:17:494] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:202) - taskId:cccccccccccccc#migration#1740740891,getTablePartitionInfoMap,getObjectTables end!using=42

[19:08:17:495] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:205) - taskId:cccccccccccccc#migration#1740740891,getIsTemporary start.

[19:08:17:558] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:208) - taskId:cccccccccccccc#migration#1740740891,getIsTemporary end!using=63

[19:08:17:558] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:211) - taskId:cccccccccccccc#migration#1740740891,get column info.

[19:08:17:993] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:52) - start task Oracle-export-metadata-get-columninfo!

[19:08:17:993] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:54) - taskId:cccccccccccccc#migration#1740740891 start to do spilt ...

[19:08:17:993] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:58) - taskId:cccccccccccccc#migration#1740740891 start to do schedule, there is 1 tasks, spilt to 1 groups!

[19:08:17:993] [INFO] - dts.thread.Container.c.jP(ObjectContainer.java:88) - taskId:cccccccccccccc#migration#1740740891,wait for thread:0

[19:08:18:190] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:60) - taskId:cccccccccccccc#migration#1740740891, do Oracle-export-metadata-get-columninfo end! total=1, using=197

[19:08:18:191] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:214) - taskId:cccccccccccccc#migration#1740740891,get column info using=632

[19:08:18:191] [INFO] - dts.dbexport.o.cO(ExportTableInfo.java:146) - get table metaData using:1203ms

[19:08:18:191] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:506) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, export source table info finished,using=1212

[19:08:18:191] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:511) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, get specialchar of each table.

[19:08:18:193] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:206) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, export target table info.

[19:08:18:195] [INFO] - dts.dbexport.o.cO(ExportTableInfo.java:137) - get table metaData

[19:08:18:195] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:163) - taskId:cccccccccccccc#migration#1740740891,getIdentityInfoMap start.

[19:08:18:195] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:166) - taskId:cccccccccccccc#migration#1740740891,getIdentityInfoMap end!using=0

[19:08:18:196] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:168) - taskId:cccccccccccccc#migration#1740740891,getTableExtendInfoMap,getObjectTables start.

[19:08:18:196] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:178) - taskId:cccccccccccccc#migration#1740740891,getTableExtendInfoMap,getObjectTables end!using=0

[19:08:18:196] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:184) - taskId:cccccccccccccc#migration#1740740891,getUniqueKeyInfoMap,getPrimaryKeyInfoMap start.

[19:08:18:196] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:192) - taskId:cccccccccccccc#migration#1740740891,getUniqueKeyInfoMap,getPrimaryKeyInfoMap end!using=0

[19:08:18:197] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:194) - taskId:cccccccccccccc#migration#1740740891,getTablePartitionInfoMap,getObjectTables start.

[19:08:18:197] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:202) - taskId:cccccccccccccc#migration#1740740891,getTablePartitionInfoMap,getObjectTables end!using=0

[19:08:18:197] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:205) - taskId:cccccccccccccc#migration#1740740891,getIsTemporary start.

[19:08:18:198] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:208) - taskId:cccccccccccccc#migration#1740740891,getIsTemporary end!using=0

[19:08:18:198] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:211) - taskId:cccccccccccccc#migration#1740740891,get column info.

[19:08:18:223] [INFO] - dts.ddlhandler.export.expression.table.c.i(DBExportTableMetadataHandler.java:214) - taskId:cccccccccccccc#migration#1740740891,get column info using=25

[19:08:18:224] [INFO] - dts.dbexport.o.cO(ExportTableInfo.java:146) - get table metaData using:29ms

[19:08:18:224] [INFO] - dts.dbexport.o.cO(ExportTableInfo.java:158) - get check constraint info on tables

[19:08:18:224] [INFO] - dts.dbexport.o.cO(ExportTableInfo.java:170) - get check constraint info using:0ms

[19:08:18:224] [INFO] - dts.datatransfer.data.e.b(MigrateData.java:624) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, export target table info finished,using=31.

[19:08:18:224] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:280) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, start to truncate table.

[19:08:18:228] [INFO] - dts.helper.q.a(dtsHelper.java:1504) - Set db and schema sql:set search_path=CRW,oracle,public

[19:08:18:236] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:331) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, truncate table finished,using=11

[19:08:18:236] [INFO] - dts.datatransfer.data.e.f(MigrateData.java:274) - taskId:cccccccccccccc#migration#1740740891, sort table finished,using=0

[19:08:18:237] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:466) - taskId:cccccccccccccc#migration#1740740891, start to migrate schema data.

[19:08:18:237] [INFO] - dts.datatransfer.data.e.a(MigrateData.java:471) - taskId:cccccccccccccc#migration#1740740891, migrate data with index&constrain.

[19:08:18:237] [INFO] - dts.transform.d.migrateDataWithIndex(MigrateUsingExpression.java:274) - taskId:cccccccccccccc#migration#1740740891, start to transform.

[19:08:18:237] [INFO] - dts.datatransfer.data.f.startTransform(TransformDataHandler.java:311) - TaskId:cccccccccccccc#migration#1740740891,start data Transform!

[19:08:18:237] [INFO] - dts.helper.k.b(DataTransformHelper.java:556) - TaskId:cccccccccccccc#migration#1740740891,begin to get status of trigger&index&constraint!

[19:08:18:237] [INFO] - dts.helper.k.a(DataTransformHelper.java:688) - TaskId:cccccccccccccc#migration#1740740891,add getTrigger&index&constraint to thread:CRW.TEST_CRW,task index:0

[19:08:18:237] [INFO] - dts.helper.k.a(DataTransformHelper.java:640) - TaskId:cccccccccccccc#migration#1740740891,exec getTrigger&index&constraint,tabname:CRW.TEST_CRW

[19:08:18:243] [INFO] - dts.helper.k.b(DataTransformHelper.java:610) - task id:cccccccccccccc#migration#1740740891,getTrigger&index&constraint task finished:1, active threads:0,table:CRW.TEST_CRW,sqlListSize:0

[19:08:18:245] [INFO] - dts.datatransfer.data.f.startTransform(TransformDataHandler.java:341) - TaskId:cccccccccccccc#migration#1740740891,start data Transform with incrmental!

[19:08:18:251] [INFO] - dts.incremental.job.c.a(OldHelper.java:366) - 启用了归档,开始获取firstScn!

[19:08:18:263] [INFO] - dts.helper.m.h(DatabaseSnapshotUtil.java:167) - taskId:cccccccccccccc#migration#1740740891, get firstScn of lastest in 1 day! firstScn=2173895

[19:08:18:273] [INFO] - dts.helper.m.a(DatabaseSnapshotUtil.java:195) - taskId: cccccccccccccc#migration#1740740891, get start scn, current scn: 2175627

[19:08:18:285] [INFO] - dts.helper.m.a(DatabaseSnapshotUtil.java:232) - taskId: cccccccccccccc#migration#1740740891, start scn: 2175627

[19:08:18:286] [INFO] - dts.helper.m.a(DatabaseSnapshotUtil.java:245) - init startScn success! startScn=2175627

[19:08:18:286] [INFO] - dts.helper.m.a(DatabaseSnapshotUtil.java:327) - TaskId:cccccccccccccc#migration#1740740891,begin to update offset info! offsetTable:dts.incremental_offset_oracle

[19:08:18:289] [INFO] - dts.helper.m.a(DatabaseSnapshotUtil.java:385) - taskId: cccccccccccccc#migration#1740740891, get current Oracle scn: 2175627

[19:08:18:291] [INFO] - dts.datatransfer.data.f.startTransform(TransformDataHandler.java:368) - TaskId;cccccccccccccc#migration#1740740891,init transfer thread pool finished, core pool size: 8, max pool size: 8

[19:08:18:291] [INFO] - dts.datatransfer.data.f.a(TransformDataHandler.java:1515) - TaskId:cccccccccccccc#migration#1740740891,add table to transfer thread:CRW.TEST_CRW,none,task index:0

[19:08:18:294] [INFO] - dts.datatransfer.data.a.bq(DataTransfer.java:81) - task:cccccccccccccc#migration#1740740891,start transform table:CRW.TEST_CRW,none

[19:08:18:295] [INFO] - dts.datatransfer.data.a.bq(DataTransfer.java:124) - srcDbId:ccccccc,targetDbId:panwei_crw,table:CRW.TEST_CRW,none,fetchSize:200,batchSize:100

[19:08:18:295] [INFO] - dts.datatransfer.data.a.bq(DataTransfer.java:126) - job:cccccccccccccc#migration#1740740891,is using copy:true

[19:08:18:296] [WARN] - dts.datatransfer.data.read.d.bM(JdbcReader.java:195) - Can not change Catalog!

[19:08:18:297] [INFO] - dts.datatransfer.data.read.h.bK(OracleJdbcReader.java:170) - task:cccccccccccccc#migration#1740740891,sql:select "ID","NAME" from "CRW"."TEST_CRW" AS OF SCN 2175628

[19:08:18:302] [INFO] - dts.datatransfer.data.copyapiwrite.d.init(mtAsyncCopyApiWriter.java:75) - sql:copy CRW.TEST_CRW(ID,NAME ) FROM STDIN WITH CSV

[19:08:18:302] [INFO] - dts.datatransfer.data.a.b(DataTransfer.java:418) - Taskid:cccccccccccccc#migration#1740740891,read table:CRW.TEST_CRW, finished,rows:5

[19:08:18:302] [INFO] - dts.datatransfer.execute.e.ch(TransferExecutor.java:331) - last flush to targetDb!!!!!!!!!!!!!

[19:08:18:305] [INFO] - dts.datatransfer.execute.e.a(TransferExecutor.java:351) - last copy to target:[copy CRW.TEST_CRW(ID,NAME ) FROM STDIN WITH CSV]

[19:08:18:312] [INFO] - dts.datatransfer.data.a.bq(DataTransfer.java:193) - Taskid:cccccccccccccc#migration#1740740891,transform table:CRW.TEST_CRW,none finish,rows:5

[19:08:18:312] [INFO] - dts.datatransfer.data.f.startTransform(TransformDataHandler.java:449) - task id:cccccccccccccc#migration#1740740891,table task finished:1, active threads:0,table:CRW.TEST_CRW,rows:5

[19:08:18:314] [INFO] - dts.datatransfer.data.f.a(TransformDataHandler.java:1710) - TaskId:cccccccccccccc#migration#1740740891,there is no trigger need to be enabled! table:TEST_CRW

[19:08:18:314] [INFO] - dts.datatransfer.data.f.a(TransformDataHandler.java:1668) - TaskId:cccccccccccccc#migration#1740740891,migrate data with index&constraint begin,for table:TEST_CRW

[19:08:18:319] [INFO] - dts.helper.w.a(JobInfoHelper.java:407) - update process job:cccccccccccccc#migration#1740740891,data,handled,batch=1

[19:08:18:321] [INFO] - dts.dbexport.b.cO(ExportConstraintInfo.java:105) - taskId:cccccccccccccc#migration#1740740891,get primary key DDL begin!

[19:08:18:321] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:52) - start task Oracle-get-primary-key!

[19:08:18:321] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:54) - taskId:cccccccccccccc#migration#1740740891 start to do spilt ...

[19:08:18:321] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:58) - taskId:cccccccccccccc#migration#1740740891 start to do schedule, there is 1 tasks, spilt to 1 groups!

[19:08:18:322] [INFO] - dts.thread.Container.c.jP(ObjectContainer.java:88) - taskId:cccccccccccccc#migration#1740740891,wait for thread:0

[19:08:18:322] [INFO] - dts.ddlhandler.export.expression.constraint.e.m(OracleExportConstraintMetadataHandler.java:135) - get pk ddl batchSize=1

[19:08:20:013] [INFO] - dts.ddlhandler.export.expression.constraint.e.m(OracleExportConstraintMetadataHandler.java:224) - get pk ddl of this batch end!

[19:08:20:013] [INFO] - dts.thread.Container.c.start(ObjectContainer.java:60) - taskId:cccccccccccccc#migration#1740740891, do Oracle-get-primary-key end! total=1, using=1692

[19:08:20:013] [INFO] - dts.dbexport.b.cO(ExportConstraintInfo.java:107) - taskId:cccccccccccccc#migration#1740740891,get primary key DDL end!using:1692

[19:08:20:130] [INFO] - dts.helper.w.a(JobInfoHelper.java:407) - update process job:cccccccccccccc#migration#1740740891,object,handled,batch=1

[19:08:20:135] [INFO] - dts.transform.d.migrateObjToTarget(MigrateUsingExpression.java:1139) - object : SYS_C007546 for table : TEST_CRW is finished

[19:08:20:137] [INFO] - dts.datatransfer.data.f.a(TransformDataHandler.java:1671) - TaskId:cccccccccccccc#migration#1740740891,migrate index&constraint finish,for table:TEST_CRW

[19:08:20:138] [INFO] - dts.datatransfer.data.f.startTransform(TransformDataHandler.java:590) - TaskId:cccccccccccccc#migration#1740740891,data transfer finish

[19:08:20:138] [INFO] - dts.datatransfer.data.e.bw(MigrateData.java:118) - taskId:cccccccccccccc#migration#1740740891, migrate data finished, using=3163

[19:08:20:139] [INFO] - dts.helper.w.a(JobInfoHelper.java:407) - update process job:cccccccccccccc#migration#1740740891,data,finish,batch=1

[19:08:20:141] [INFO] - dts.task.h.b(MigrateTask.java:474) - taskId:cccccccccccccc#migration#1740740891, update migrate data process success!

[19:08:20:142] [INFO] - dts.helper.u.a(IncrementalTaskHelper.java:79) - Invoke the incremental service remotely! request: host:[192.168.129.225] port:[31005] body:[{srcdbtype=oracle, jobid=cccccccccccccc#incremental, targetdbid=panwei_crw, tasktype=resetapplyconf, srcdbid=ccccccc, jobname=cccccccccccccc}]

[19:08:21:666] [INFO] - dts.helper.u.a(IncrementalTaskHelper.java:91) - Invoke the incremental service remotely success! response: [{msg=success, result=ok, code=0}]

[19:08:21:668] [INFO] - dts.task.h.b(MigrateTask.java:1142) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, migrate object after migrate data begin!

[19:08:21:668] [INFO] - dts.task.h.b(MigrateTask.java:1455) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, migrate constrain begin!

[19:08:21:669] [INFO] - dts.task.h.b(MigrateTask.java:1461) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, constraintCount:1

[19:08:21:670] [INFO] - dts.task.h.b(MigrateTask.java:1466) - 命名冲突的约束:[]

[19:08:21:670] [INFO] - dts.task.h.b(MigrateTask.java:1469) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, remain to migrate constraintCount:0

[19:08:21:670] [INFO] - dts.transform.d.migrateConstraint(MigrateUsingExpression.java:453) - no table constraint to migrate.

[19:08:21:670] [INFO] - dts.task.h.b(MigrateTask.java:1476) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, migrate constrain finished,using=2

[19:08:21:670] [INFO] - dts.task.h.b(MigrateTask.java:1186) - taskId:cccccccccccccc#migration#1740740891, schema:CRW, migrate object after migrate data end!

[19:08:21:670] [INFO] - dts.task.h.b(MigrateTask.java:566) - 尝试重新执行失败的对象

[19:08:21:671] [INFO] - dts.task.h.u(MigrateTask.java:170) - 失败对象个数: 0

[19:08:21:674] [INFO] - dts.helper.w.a(JobInfoHelper.java:407) - update process job:cccccccccccccc#migration#1740740891,object,handled,batch=2

[19:08:21:677] [INFO] - dts.task.h.fO(MigrateTask.java:656) - 修改事件触发器完成: ENABLE

[19:08:21:677] [INFO] - dts.task.h.run(MigrateTask.java:326) - 完成时间:10578

[19:08:21:679] [INFO] - dts.transform.result.a.jX(RecordingMigrateResult.java:354) - Taskid:cccccccccccccc#migration#1740740891,get result from object migrate result success!

[19:08:21:680] [INFO] - dts.transform.result.a.jY(RecordingMigrateResult.java:402) - Taskid:cccccccccccccc#migration#1740740891,get result of every schema from object migrate result success!

[19:08:21:682] [INFO] - dts.transform.result.a.jW(RecordingMigrateResult.java:317) - Taskid:cccccccccccccc#migration#1740740891,get result from object migrate result success!

[19:08:21:687] [INFO] - dts.helper.w.ai(JobInfoHelper.java:591) - no need to delete jobName:cccccccccccccc, jobType:migration

[19:08:21:689] [INFO] - dts.helper.w.f(JobInfoHelper.java:180) - job:cccccccccccccc#migration#1740740891,success,

[19:08:21:693] [INFO] - dts.helper.w.f(JobInfoHelper.java:234) - update dts.tb_run_jobinfo 1 row,jobid:cccccccccccccc#migration#1740740891,status:success,msg:
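The full-migration log is long even for a single five-row table. The sketch below pulls the per-table row counts out of it with `grep` and `sed`. It assumes the log keeps the `transform table:<schema>.<table>,none finish,rows:<n>` wording seen above. The function name `summarize_rows` is hypothetical and not part of the DTS tool.

```shell
#!/usr/bin/env bash
# Sketch: list "<schema>.<table> <rows>" for every table the DTS full migration finished.
# Assumption: finished tables are logged as "transform table:<name>,none finish,rows:<n>".
set -euo pipefail

summarize_rows() {
  # Reads the full-migration log on stdin; "start transform table:..." lines do not match.
  grep -o 'transform table:[^,]*,none finish,rows:[0-9]*' |
    sed -E 's/transform table:([^,]*),none finish,rows:([0-9]+)/\1 \2/'
}

# Usage with two lines copied from the log above; prints "CRW.TEST_CRW 5".
summarize_rows <<'EOF'
[19:08:18:294] [INFO] - dts.datatransfer.data.a.bq(DataTransfer.java:81) - task:cccccccccccccc#migration#1740740891,start transform table:CRW.TEST_CRW,none
[19:08:18:312] [INFO] - dts.datatransfer.data.a.bq(DataTransfer.java:193) - Taskid:cccccccccccccc#migration#1740740891,transform table:CRW.TEST_CRW,none finish,rows:5
EOF
```

The printed count can be compared with a `select count(*)` on the source table. TEST_CRW held 5 rows when the full migration ran.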

Incremental log output:

[INFO ][2025-02-28 19:08:20][cn.com.panwei.incremental.h.a.E(RpcHandler.java:76)]begin:RpcCallHandler-->runningTaskCount:1,param:{srcdbtype=oracle, jobid=cccccccccccccc#incremental, targetdbid=panwei_crw, tasktype=resetapplyconf, srcdbid=ccccccc, jobname=cccccccccccccc}

[INFO ][2025-02-28 19:08:20][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:ccccccc,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:08:20][cn.com.panwei.businessHelper.h.a.a(ConnectionPoolManager.java:112)]dbypte ->oracle

[INFO ][2025-02-28 19:08:20][cn.com.panwei.businessHelper.h.a.a(ConnectionPoolManager.java:205)]Init connection successed

[INFO ][2025-02-28 19:08:20][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:oracle,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:08:20][cn.com.panwei.businessHelper.h.a.a(ConnectionPoolManager.java:112)]dbypte ->oracle

[INFO ][2025-02-28 19:08:20][cn.com.panwei.businessHelper.h.a.a(ConnectionPoolManager.java:205)]Init connection successed

[INFO ][2025-02-28 19:08:21][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:panwei_crw,isTargetDb:true,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:08:21][cn.com.panwei.businessHelper.h.a.a(ConnectionPoolManager.java:110)]dbypte is null ->org.panweidb.Driver,,,jdbc:panweidb://192.168.129.221:17700/crw?allowEncodingChanges=true&characterEncoding=UTF-8

[INFO ][2025-02-28 19:08:21][cn.com.panwei.businessHelper.h.a.a(ConnectionPoolManager.java:205)]Init connection successed

[INFO ][2025-02-28 19:08:21][cn.com.panwei.incremental.h.a.E(RpcHandler.java:166)]end:RpcCallHandler-->runningTaskCount:0,hasRanTaskCount:14

[INFO ][2025-02-28 19:08:21][cn.com.panwei.incremental.h.a.E(RpcHandler.java:168)]end:task:null,taskType:resetapplyconf,RPC请求参数:{srcdbtype=oracle, jobid=cccccccccccccc#incremental, targetdbid=panwei_crw, tasktype=resetapplyconf, srcdbid=ccccccc, jobname=cccccccccccccc},应答参数:{msg=success, result=ok, code=0}

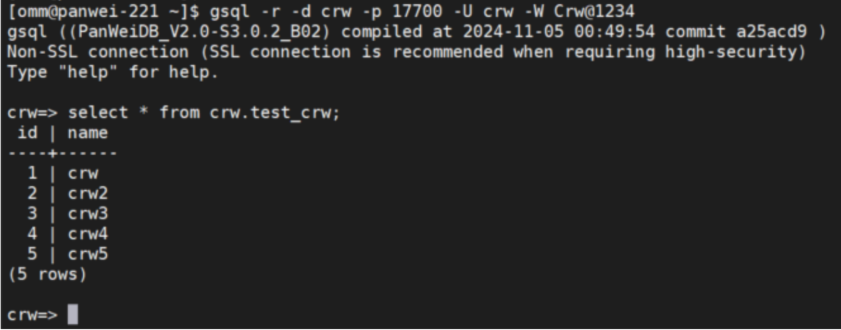
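Each call from the migration service to the incremental service ends with an `end:task:...` line that carries the response map. In this log, the successful `resetapplyconf` call returns `{msg=success, result=ok, code=0}`. The hypothetical helper `rpc_results` below prints the task type and response code of every finished call. It is a sketch that assumes the line layout shown above.

```shell
#!/usr/bin/env bash
# Sketch: print "<taskType> <code>" for each finished RPC in the incremental-service log.
# Assumption: finished calls are logged as "end:task:<id>,taskType:<type>,...code=<n>...".
set -euo pipefail

rpc_results() {
  # The greedy ".*" before "code=" selects the last code= on the line, i.e. the response map.
  sed -nE 's/.*end:task:[^,]*,taskType:([^,]+),.*code=(-?[0-9]+).*/\1 \2/p'
}

# Usage with lines copied from the log above; prints "resetapplyconf 0".
rpc_results <<'EOF'
[INFO ][2025-02-28 19:08:21][cn.com.panwei.incremental.h.a.E(RpcHandler.java:166)]end:RpcCallHandler-->runningTaskCount:0,hasRanTaskCount:14
[INFO ][2025-02-28 19:08:21][cn.com.panwei.incremental.h.a.E(RpcHandler.java:168)]end:task:null,taskType:resetapplyconf,RPC请求参数:{srcdbtype=oracle, jobid=cccccccccccccc#incremental, targetdbid=panwei_crw, tasktype=resetapplyconf, srcdbid=ccccccc, jobname=cccccccccccccc},应答参数:{msg=success, result=ok, code=0}
EOF
```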

Verify that the data has arrived on the PanWeiDB target:

gsql -r -d crw -p 17700 -U crw -W Crw_1234

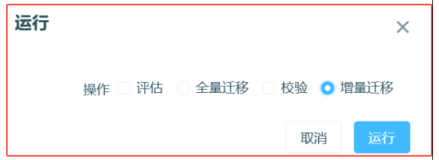

Start the incremental task:

Incremental log output:

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.h.a.Rpc(RpcHandler.java:233)]task:cccccccccccccc#incremental#apply#incremental#1,taskType:apply,异步RPC请求参数:{jobName=cccccccccccccc, jobid=cccccccccccccc#incremental, tasktype=apply, thread=Y, jobType=incremental, jobtype=incremental, jobname=cccccccccccccc, taskid=cccccccccccccc#incremental#apply#incremental#1},应答参数:{result=ok, msg=成功, code=0}

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.h.a.E(RpcHandler.java:76)]begin:RpcCallHandler-->runningTaskCount:1,param:{jobName=cccccccccccccc, jobid=cccccccccccccc#incremental, tasktype=apply, thread=Y, jobType=incremental, jobtype=incremental, jobname=cccccccccccccc, taskid=cccccccccccccc#incremental#apply#incremental#1}

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.d.I(IncrementalApplyTask.java:133)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:开始执行! rpcCall:{{jobName=cccccccccccccc, jobid=cccccccccccccc#incremental, tasktype=apply, thread=Y, jobType=incremental, jobtype=incremental, jobname=cccccccccccccc, taskid=cccccccccccccc#incremental#apply#incremental#1}}

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.d.ox(IncrementalApplyTask.java:210)]PG增量重启周期更新

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.h.a.Rpc(RpcHandler.java:233)]task:cccccccccccccc#incremental#collect#incremental#1,taskType:collect,异步RPC请求参数:{jobName=cccccccccccccc, jobid=cccccccccccccc#incremental, tasktype=collect, thread=Y, jobType=incremental, jobtype=incremental, jobname=cccccccccccccc, taskid=cccccccccccccc#incremental#collect#incremental#1},应答参数:{result=ok, msg=成功, code=0}

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.h.a.E(RpcHandler.java:76)]begin:RpcCallHandler-->runningTaskCount:2,param:{jobName=cccccccccccccc, jobid=cccccccccccccc#incremental, tasktype=collect, thread=Y, jobType=incremental, jobtype=incremental, jobname=cccccccccccccc, taskid=cccccccccccccc#incremental#collect#incremental#1}

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.J(IncrementalTaskConf.java:686)]查询作业数据源sql:[select dbid, targetdbid, targetdbtype, jobparameters from panwei_dtp.tb_portal_job where jobname = 'cccccccccccccc' limit 1], jobName:[cccccccccccccc]

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.J(IncrementalTaskConf.java:686)]查询作业数据源sql:[select dbid, targetdbid, targetdbtype, jobparameters from panwei_dtp.tb_portal_job where jobname = 'cccccccccccccc' limit 1], jobName:[cccccccccccccc]

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:ccccccc,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:ccccccc,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:oracle,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:oracle,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:panwei_crw,isTargetDb:true,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:panwei_crw,isTargetDb:true,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.J(IncrementalTaskConf.java:750)]采集库初始化完成,源数据库类型【oracle】

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.c.b.ox(OracleIncrementalCollectTask.java:289)]Oracle增量重启周期限制次数更新

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.J(IncrementalTaskConf.java:686)]查询作业数据源sql:[select dbid, targetdbid, targetdbtype, jobparameters from panwei_dtp.tb_portal_job where jobname = 'cccccccccccccc' limit 1], jobName:[cccccccccccccc]

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:ccccccc,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.J(IncrementalTaskConf.java:750)]采集库初始化完成,源数据库类型【oracle】

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.G(IncrementalTaskConf.java:668)]初始化运行配置完成

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.a(IncrementalTaskConf.java:882)]oracle

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:oracle,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:panwei_crw,isTargetDb:true,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.J(IncrementalTaskConf.java:750)]采集库初始化完成,源数据库类型【oracle】

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.G(IncrementalTaskConf.java:668)]初始化运行配置完成

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.g.G(IncrementalCollectTaskConf.java:121)]jobId:cccccccccccccc#incremental获取到collectConf的信息:OracleCollectConf{topic='69453244b661e45f93899f355f6ba182-1', dbType='oracle', collectOffset=0, sourceTime=null, startScn=2175627, collectCommitScn=0, collectLastCommitScn=0, transactionIds=[], serverName=CCCCCCCCCCCCCC_SERVERNAME}

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.c.c.c(OracleIncrementalCollectTaskConf.java:172)]task:cccccccccccccc#incremental#collect#incremental#1,作业上次结束采集的位置:scn:2175627

[INFO ][2025-02-28 19:11:55][cn.com.panwei.incremental.x.h.a(IncrementalTaskConf.java:882)]oracle

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.h.a(IncrementalTaskConf.java:882)]panweidb

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.h.a(IncrementalTaskConf.java:882)]panweidb

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.h.I(IncrementalTaskConf.java:482)]初始化迁移对象信息完成,数量:【1】

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.h.I(IncrementalTaskConf.java:482)]初始化迁移对象信息完成,数量:【1】

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.a.<init>(CollectTaskExecutor.java:43)]task:cccccccccccccc#incremental#collect#incremental#1,[采集端]子线程3[数据发送器]创建成功

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.e.S(IncrementalApplyTaskConf.java:671)]job:cccccccccccccc#incremental,初始写入kafkaOffsetTable:public.kafkaoffset

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.e.s(IncrementalApplyTaskConf.java:205)]采集库类型:oracle

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.e.s(IncrementalApplyTaskConf.java:255)]tabMeta:pdb_crw.CRW.TEST_CRW, tabMeta.pkCount:1

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:1848)]task:cccccccccccccc#incremental#apply#incremental#1,表crw.test_crw禁用触发器成功

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.d.I(IncrementalApplyTask.java:173)]task:cccccccccccccc#incremental#apply#incremental#1, apply buffer size:32000

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.o.b.c(RecordReceiverV1.java:197)]taskId:cccccccccccccc#incremental#apply#incremental#1, initFilterParameter dbTranCommitScn:0,dbTranCommitLsn:0:0, dbTranSourceTime:null, srcCommitTs: 0

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.d.I(IncrementalApplyTask.java:179)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:创建Kafka数据读取器(<应用端>子线程1[数据读取器])成功!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.a.c.eB(ApplyFilter.java:278)]filetrConfig is not set

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.d.I(IncrementalApplyTask.java:188)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:创建数据应用器(<应用端>子线程1[数据读取器])成功!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.a.start(ApplyListener.java:73)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:数据读取和数据应用启动成功,开启任务监听!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.a.b(ApplyListener.java:80)]应用端数据读取线程:Thread-Read-cccccccccccccc#incremental#apply#incremental#1_1740741119474启动!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.a.a(ApplyListener.java:97)]数据应用线程:Thread-Apply-cccccccccccccc#incremental#apply#incremental#1_1740741119474启动!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.a.a(ApplyListener.java:115)]kafka数据清理检测线程:Thread-KafkaCleanerMonitor-cccccccccccccc#incremental#apply#incremental#1_1740741119474启动!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.k.a.U(Consumer.java:61)]task:cccccccccccccc#incremental#apply#incremental#1,connect to kafka success,server:127.0.0.1:9092,topic:69453244b661e45f93899f355f6ba182-1

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.o.f.start(RecordReceiverV2.java:185)]taskId:cccccccccccccc#incremental#apply#incremental#1,应用端初始化kafkaOffset:0

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.k.a.G(Consumer.java:149)]taskId:cccccccccccccc#incremental#apply#incremental#1, 重置offset0

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.o.f.start(RecordReceiverV2.java:188)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:数据读取器启动成功,开始从Kafka读取数据!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.k.a.ky(Consumer.java:73)]task:cccccccccccccc#incremental#apply#incremental#1,connect to kafka success,server:127.0.0.1:9092,topic:69453244b661e45f93899f355f6ba182-1

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.a.h.eC(ApplyMasterTask.java:181)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:数据应用器启动成功!开始将数据写入目标库

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.p.b.run(KafkaTopicLogCleaner.java:68)]task:cccccccccccccc#incremental#apply#incremental#1, retention:20000, lastoffset:0, no need to clear data

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.c.b.c(OracleIncrementalCollectTask.java:302)]task:cccccccccccccc#incremental#collect#incremental#1,collectOffset:0,topic:69453244b661e45f93899f355f6ba182-1,lastOffset:0,lastRecord:{"offset":"0-0","commitScn":0,"time":"2025-02-28 19:11:59.587","hasChunkData":false,"table":"null.null.null","scn":0}

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.c.b.c(OracleIncrementalCollectTask.java:306)]task:cccccccccccccc#incremental#collect#incremental#1,lastOffset == 0

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.q.c.nD(RecordSender.java:307)]采集任务#jobId:【cccccccccccccc#incremental】, 无commit,仅更新collectOffset【0】

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:1336)]task:cccccccccccccc#incremental#collect#incremental#1,sourceTime为null,原startScn:2175627,dbStartScn:2176265,返回startScn:2175627

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.c.b.I(OracleIncrementalCollectTask.java:236)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 存在历史采集任务

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.c.a.oq(OracleCollectTaskExecutor.java:70)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 启动采集端,起点快照信息:【scn:2175627】

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.c.a.oq(OracleCollectTaskExecutor.java:96)]task:cccccccccccccc#incremental#collect#incremental#1, TablesFilter中的表名: [crw.test_crw]

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.d.g.d.<init>(XStreamCollector.java:125)]task:cccccccccccccc#incremental#collect#incremental#1, 等待采集端关闭

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.d.g.d.<init>(XStreamCollector.java:134)]task:cccccccccccccc#incremental#collect#incremental#1, 采集端关闭完成

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.d.g.d.D(XStreamCollector.java:226)]task:cccccccccccccc#incremental#collect#incremental#1,出站服务器规则: 总表数:1,表规则数:1,schema规则数:0,表规则:["CRW"."TEST_CRW"],schema规则:[]

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.d.g.d.<init>(XStreamCollector.java:147)]task:cccccccccccccc#incremental#collect#incremental#1,启用outbound规则参数压缩

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.d.g.d.<init>(XStreamCollector.java:153)]task:cccccccccccccc#incremental#collect#incremental#1, oracleUrl:jdbc:oracle:oci:@192.168.129.73:1521/HLTRILDB, user:c##xstrmadmin

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.x.c.a.oq(OracleCollectTaskExecutor.java:109)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 采集器【d】创建成功

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.b.i(CollectListener.java:94)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 采集端开始采集, 源库【pdb_crw】

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.e.kF(OracleCollectListener.java:80)]task:cccccccccccccc#incremental#collect#incremental#1,[采集端]子线程5[Oracle trace文件清空器]启动成功,开始每30分钟清空Oracle trace文件

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.b.start(CollectListener.java:179)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 【采集端】开启任务监听

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.b.i(CollectListener.java:96)]采集端数据采集线程:Thread-Collect-cccccccccccccc#incremental#collect#incremental#1_1740741119616启动!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.b.c(CollectListener.java:118)]采集端数据序列化线程:Thread-Stream-cccccccccccccc#incremental#collect#incremental#1_1740741119616启动!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.b.b(CollectListener.java:135)]采集端数据发送线程:Thread-Send-cccccccccccccc#incremental#collect#incremental#1_1740741119616启动!

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.d.g.d.start(XStreamCollector.java:512)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 数据采集器线程【Thread-Collect-cccccccccccccc#incremental#collect#incremental#1_1740741119616】启动成功, 开始从XStream采集数据

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.p.a.<init>(DictionaryBuilder.java:52)]task:cccccccccccccc#incremental#collect#incremental#1, config dictionary start time:[22, 7]

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.e.go(OracleCollectListener.java:60)]task:cccccccccccccc#incremental#collect#incremental#1,[采集端]子线程4[事务记录器]启动成功,开始每5分钟更新startScn

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.f.run(OracleCollectListener.java:38)]taskId:【cccccccccccccc#incremental#collect#incremental#1】, Oracle Collect 【XSTREAM】 Waiting to parse the message...

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:1190)]task:cccccccccccccc#incremental#collect#incremental#1,[采集端]子线程8[Oracle streams pool size检测器]启动成功,开始每60分钟检测Oracle streams pool size已使用率,检测是否达到阈值:0.85

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.v.a.start(RecordStream.java:68)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 序列化器线程【Thread-Stream-cccccccccccccc#incremental#collect#incremental#1_1740741119616】启动成功, 准备数据序列化

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.p.e.hq(TraceFileCleaner.java:90)]清空Oracle trace文件完成,共清空8个文件

[INFO ][2025-02-28 19:11:59][cn.com.panwei.incremental.l.b.h(CollectListener.java:155)]采集端Kafka日志大小检测线程:Thread-kafkaDataSizeChecker-cccccccccccccc#incremental#collect#incremental#1_1740741119616启动!

[INFO ][2025-02-28 19:12:01][cn.com.panwei.incremental.d.g.d.kg(XStreamCollector.java:800)]task:cccccccccccccc#incremental#collect#incremental#1,出站服务器:CCCCCCCCCCCCCC_SERVERNAME不存在

[INFO ][2025-02-28 19:12:01][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:917)]serverName:CCCCCCCCCCCCCC_SERVERNAME, 开始创建队列:C##XSTRMADMIN.Q$_F033E31740741119615

[INFO ][2025-02-28 19:12:03][cn.com.panwei.incremental.d.g.h.l(XStreamUtil.java:264)]XStream创建队列:C##XSTRMADMIN.Q$_F033E31740741119615成功

[INFO ][2025-02-28 19:12:03][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:924)]serverName:CCCCCCCCCCCCCC_SERVERNAME, 查找到可用的first scn:[2173895]

[INFO ][2025-02-28 19:12:03][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:929)]serverName:CCCCCCCCCCCCCC_SERVERNAME, 开始创建捕获过程:CAP$_F033E31740741119615

[INFO ][2025-02-28 19:12:04][cn.com.panwei.incremental.d.g.h.a(XStreamUtil.java:397)]XStream创建捕获过程:CAP$_F033E31740741119615成功,队列:C##XSTRMADMIN.Q$_F033E31740741119615,firstScn:2173895,startScn:2175627

[INFO ][2025-02-28 19:12:04][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:934)]serverName:开始创建出站服务器

[INFO ][2025-02-28 19:12:05][cn.com.panwei.incremental.d.g.h.a(XStreamUtil.java:201)]XStream创建出站服务器成功,server:CCCCCCCCCCCCCC_SERVERNAME,队列:C##XSTRMADMIN.Q$_F033E31740741119615

[INFO ][2025-02-28 19:12:05][cn.com.panwei.incremental.d.g.h.a(XStreamUtil.java:172)]XStream创建出站服务器全部成功:CCCCCCCCCCCCCC_SERVERNAME成功,队列:C##XSTRMADMIN.Q$_F033E31740741119615

[INFO ][2025-02-28 19:12:05][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:938)]XStream手动创建出站服务器:CCCCCCCCCCCCCC_SERVERNAME成功,捕获过程:CAP$_F033E31740741119615,队列:C##XSTRMADMIN.Q$_F033E31740741119615,firstScn:2173895,startScn:2175627

[INFO ][2025-02-28 19:12:05][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:941)]XStream start check dict instantiation

[INFO ][2025-02-28 19:12:05][cn.com.panwei.incremental.d.g.h.a(XStreamUtil.java:1374)]XStream准备table实例化成功

[INFO ][2025-02-28 19:12:05][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:1001)]check prepare dict, task:cccccccccccccc#incremental#collect#incremental#1

[WARN ][2025-02-28 19:12:05][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:1004)]prepare dict fail, task:cccccccccccccc#incremental#collect#incremental#1

[WARN ][2025-02-28 19:12:05][cn.com.panwei.incremental.d.g.d.a(XStreamCollector.java:1009)]miss dict for table "CRW"."TEST_CRW", task:cccccccccccccc#incremental#collect#incremental#1

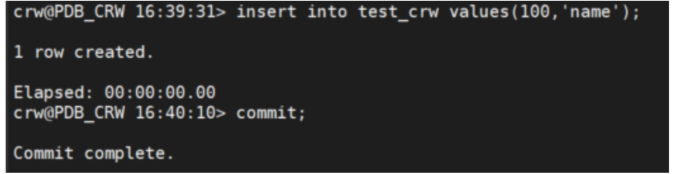
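When the collector creates the XStream capture process, Oracle requires the capture's start SCN to be greater than or equal to its first SCN. Here the log reports `firstScn:2173895` and `startScn:2175627`. The start SCN matches the one the full migration initialized (`startScn=2175627`). The hypothetical function `check_scn_order` below extracts both values from such a line and checks the ordering. It is a minimal sketch that assumes the `firstScn:<n>,startScn:<n>` wording shown above.

```shell
#!/usr/bin/env bash
# Sketch: verify firstScn <= startScn on an XStream capture-creation log line.
# Assumption: the line contains "firstScn:<n>" and "startScn:<n>" as in the log above.
set -euo pipefail

check_scn_order() {
  local line first start
  line=$(cat)   # exactly one log line on stdin
  first=$(printf '%s\n' "$line" | sed -nE 's/.*firstScn:([0-9]+).*/\1/p')
  start=$(printf '%s\n' "$line" | sed -nE 's/.*startScn:([0-9]+).*/\1/p')
  if [ -z "$first" ] || [ -z "$start" ]; then
    echo "missing SCN fields" >&2
    return 2
  fi
  if [ "$start" -ge "$first" ]; then
    echo "ok first=$first start=$start"
  else
    echo "bad first=$first start=$start"
    return 1
  fi
}

# Usage with the capture-creation line from the log above;
# prints "ok first=2173895 start=2175627".
check_scn_order <<'EOF'
[INFO ][2025-02-28 19:12:04][cn.com.panwei.incremental.d.g.h.a(XStreamUtil.java:397)]XStream创建捕获过程:CAP$_F033E31740741119615成功,队列:C##XSTRMADMIN.Q$_F033E31740741119615,firstScn:2173895,startScn:2175627
EOF
```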

Insert a row on the Oracle side:

insert into test_crw values(100,'name');

commit;

Incremental log output:

[INFO ][2025-02-28 19:18:30][cn.com.panwei.incremental.t.a.a(CollectConfDao.java:597)]update collectinfo, jobId:cccccccccccccc#incremental, collectCommitScn:2218370, lastCollectCommitScn:2180567, collectOffset5, sourceTime:2025-02-28 19:18:27.0, transactionIds:[10.4.623]

[INFO ][2025-02-28 19:18:35][cn.com.panwei.incremental.a.h.b(ApplyMasterTask.java:817)]init applySubTask, applyTaskId:cccccccccccccc#incremental#apply#incremental#1_1740741515165, queue size:2, firstRecord:{"xid":"10.4.623","offset":"4-1","commitScn":2218370,"time":"2025-02-28 19:18:25.0","hasChunkData":false,"command":"INSERT","table":"PDB_CRW.CRW.TEST_CRW","scn":2218366}, lastRecord:{"xid":"10.4.623","offset":"5-1","commitScn":2218370,"time":"2025-02-28 19:18:27.0","hasChunkData":false,"command":"COMMIT","db":"PDB_CRW","scn":2218370}

[INFO ][2025-02-28 19:18:35][cn.com.panwei.incremental.a.k.B(ApplySubTask.java:174)]taskId:cccccccccccccc#incremental#apply#incremental#1,applyTaskId:cccccccccccccc#incremental#apply#incremental#1_1740741515165。事务开始,执行RecordList,size:2

[INFO ][2025-02-28 19:18:35][cn.com.panwei.incremental.a.k.B(ApplySubTask.java:219)]recordList应用完成, 即将提交, firstRecord:{"xid":"10.4.623","offset":"4-1","commitScn":2218370,"time":"2025-02-28 19:18:25.0","hasChunkData":false,"command":"INSERT","table":"PDB_CRW.CRW.TEST_CRW","scn":2218366}, lastReocrd:{"xid":"10.4.623","offset":"5-1","commitScn":2218370,"time":"2025-02-28 19:18:27.0","hasChunkData":false,"command":"COMMIT","db":"PDB_CRW","scn":2218370}, lastNonCommitRecord:{"xid":"10.4.623","offset":"4-1","commitScn":2218370,"time":"2025-02-28 19:18:25.0","hasChunkData":false,"command":"INSERT","table":"PDB_CRW.CRW.TEST_CRW","scn":2218366}

[INFO ][2025-02-28 19:18:35][cn.com.panwei.incremental.a.D.b(Transaction.java:320)]taskId:cccccccccccccc#incremental#apply#incremental#1, thread:Thread-Apply-cccccccccccccc#incremental#apply#incremental#1_1740741405034, applyConf:ApplyConf{jobId='cccccccccccccc#incremental', topic='null', lastOffset=5, lastSubOffset=1, lastScnNumber=0, scnNumber=2218370, transactionId=[10.4.623], lastCommitPosition='0:0', applyTime=2025-02-28 19:18:27.0, srcCommitTime=0}, 提交事务, 花费时长:3ms, 执行条数:1

[INFO ][2025-02-28 19:18:35][cn.com.panwei.incremental.a.h.eL(ApplyMasterTask.java:915)]task:cccccccccccccc#incremental#apply#incremental#1, parallel apply speed:0.017 record/sec

[INFO ][2025-02-28 19:18:46][cn.com.panwei.incremental.l.f.run(OracleCollectListener.java:38)]taskId:【cccccccccccccc#incremental#collect#incremental#1】, Oracle Collect 【XSTREAM】 Waiting to parse the message...

[INFO ][2025-02-28 19:19:46][cn.com.panwei.incremental.l.f.run(OracleCollectListener.java:38)]taskId:【cccccccccccccc#incremental#collect#incremental#1】, Oracle Collect 【XSTREAM】 Waiting to parse the message...
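The apply side logs each change it replays as a JSON record. In the `init applySubTask` line above, the first record is the `INSERT` on `PDB_CRW.CRW.TEST_CRW` with `commitScn` 2218370. That SCN is later than the start SCN 2175627 the capture began from, so the row inserted on Oracle falls inside the captured range. The rough sketch below extracts the command, table and commit SCN from the `firstRecord` field. It uses plain `sed` rather than a JSON parser and assumes the record is a flat JSON object as shown above. The function name `first_record_summary` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: summarize the firstRecord of an "init applySubTask" line as
# "<command> <table> <commitScn>".
# Assumption: firstRecord is a flat JSON object (no nested braces), as in the log above;
# use a real JSON parser for anything less regular.
set -euo pipefail

first_record_summary() {
  local rec cmd tbl scn
  # Cut out the {...} that follows "firstRecord:" on the line read from stdin.
  rec=$(sed -nE 's/.*firstRecord:(\{[^}]*\}).*/\1/p')
  cmd=$(printf '%s\n' "$rec" | sed -nE 's/.*"command":"([^"]*)".*/\1/p')
  tbl=$(printf '%s\n' "$rec" | sed -nE 's/.*"table":"([^"]*)".*/\1/p')
  scn=$(printf '%s\n' "$rec" | sed -nE 's/.*"commitScn":([0-9]+).*/\1/p')
  printf '%s %s %s\n' "$cmd" "$tbl" "$scn"
}

# Usage with the line from the apply log above;
# prints "INSERT PDB_CRW.CRW.TEST_CRW 2218370".
first_record_summary <<'EOF'
[INFO ][2025-02-28 19:18:35][cn.com.panwei.incremental.a.h.b(ApplyMasterTask.java:817)]init applySubTask, applyTaskId:cccccccccccccc#incremental#apply#incremental#1_1740741515165, queue size:2, firstRecord:{"xid":"10.4.623","offset":"4-1","commitScn":2218370,"time":"2025-02-28 19:18:25.0","hasChunkData":false,"command":"INSERT","table":"PDB_CRW.CRW.TEST_CRW","scn":2218366}, lastRecord:{"xid":"10.4.623","offset":"5-1","commitScn":2218370,"time":"2025-02-28 19:18:27.0","hasChunkData":false,"command":"COMMIT","db":"PDB_CRW","scn":2218370}
EOF
```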

Full-migration log:

[19:34:59:285] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:34:59:315] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:34:59:319] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:34:59:330] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:34:59:339] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:34:59:340] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:35:04:374] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!

[19:35:04:408] [INFO] - dts.helper.q.a(dtsHelper.java:738) - get Oracle nchar Characterset!

[19:35:04:411] [INFO] - dts.helper.q.a(dtsHelper.java:734) - get Oracle System Dictionaries!
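The full-load log above contains only two repeating messages: the dictionary lookup at `dtsHelper.java:734` and the nchar character set lookup at `dtsHelper.java:738`. In a longer log, a frequency count makes such patterns easier to spot. This is a sketch. `summarize_full_log` is a hypothetical name, and the function assumes every line starts with the `[HH:MM:SS:mmm] [LEVEL] - ` prefix seen above.

```shell
# Hypothetical helper: count each distinct message in the full-load (dts) log,
# most frequent first. The leading "[HH:MM:SS:mmm] [LEVEL] - " prefix is stripped
# so that identical messages with different timestamps are grouped together.
# Lines without that prefix (e.g. blank lines) are skipped.
summarize_full_log() {
  sed -n 's/^\[[0-9:]*] \[[A-Z]*] - //p' "$1" | sort | uniq -c | sort -rn
}
```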

The PanWei target has received the data through real-time synchronization:

4. Deleting the task

Click Stop Task:

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.h.a.E(RpcHandler.java:76)]begin:RpcCallHandler-->runningTaskCount:3,param:{stoptype=stop, aborttaskid=cccccccccccccc#incremental#collect#incremental#1, tasktype=StopTask, taskid=stopsubtask}

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.x.l.r(StopTask.java:40)]TaskID:【cccccccccccccc#incremental#collect#incremental#1】#停止任务:开始执行! rpcCall:{{stoptype=stop, aborttaskid=cccccccccccccc#incremental#collect#incremental#1, tasktype=StopTask, taskid=stopsubtask}}

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.x.l.d(StopTask.java:100)]TaskID:【cccccccccccccc#incremental#collect#incremental#1】#停止任务:任务:{[采集端]}即将{stop}!

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.x.n.hR(TaskStateBroadcast.java:76)]task:cccccccccccccc#incremental#collect#incremental#1任务状态设为停止

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.x.l.r(StopTask.java:47)]TaskID:【cccccccccccccc#incremental#collect#incremental#1】#停止任务:停止成功!

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.h.a.E(RpcHandler.java:166)]end:RpcCallHandler-->runningTaskCount:2,hasRanTaskCount:19

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.h.a.E(RpcHandler.java:168)]end:task:cccccccccccccc#incremental#collect#incremental#1,taskType:StopTask,RPC请求参数:{stoptype=stop, aborttaskid=cccccccccccccc#incremental#collect#incremental#1, tasktype=StopTask, taskid=stopsubtask},应答参数:{msg=success, result=ok, code=0, status=stop}

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.h.a.E(RpcHandler.java:76)]begin:RpcCallHandler-->runningTaskCount:3,param:{stoptype=stop, aborttaskid=cccccccccccccc#incremental#apply#incremental#1, tasktype=StopTask, taskid=stopsubtask}

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.x.l.r(StopTask.java:40)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#停止任务:开始执行! rpcCall:{{stoptype=stop, aborttaskid=cccccccccccccc#incremental#apply#incremental#1, tasktype=StopTask, taskid=stopsubtask}}

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.x.l.d(StopTask.java:100)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#停止任务:任务:{<应用端>}即将{stop}!

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.x.n.hR(TaskStateBroadcast.java:76)]task:cccccccccccccc#incremental#apply#incremental#1任务状态设为停止

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.x.l.r(StopTask.java:47)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#停止任务:停止成功!

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.h.a.E(RpcHandler.java:166)]end:RpcCallHandler-->runningTaskCount:2,hasRanTaskCount:20

[INFO ][2025-02-28 19:22:04][cn.com.panwei.incremental.h.a.E(RpcHandler.java:168)]end:task:cccccccccccccc#incremental#apply#incremental#1,taskType:StopTask,RPC请求参数:{stoptype=stop, aborttaskid=cccccccccccccc#incremental#apply#incremental#1, tasktype=StopTask, taskid=stopsubtask},应答参数:{msg=success, result=ok, code=0, status=stop}

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.a.start(ApplyListener.java:162)]taskId:cccccccccccccc#incremental#apply#incremental#1任务结束,task.isStopeed:true, TaskHelper.isTaskStop(taskId): true, countDownLatch.count:1

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.o.f.close(RecordReceiverV2.java:322)]taskId:cccccccccccccc#incremental#apply#incremental#1, RecordReceiverV2调用receive.wakeup

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.o.f.start(RecordReceiverV2.java:270)]taskId:cccccccccccccc#incremental#apply#incremental#1,准备关闭consumer

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.o.f.start(RecordReceiverV2.java:289)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:数据读取器 Kafka 已经停止!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.a.b(ApplyListener.java:90)]应用端数据读取线程:Thread-Read-cccccccccccccc#incremental#apply#incremental#1_1740741405034结束!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.a.h.close(ApplyMasterTask.java:405)]task:cccccccccccccc#incremental#apply#incremental#1,<应用端>主应用线程]关闭成功

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.a.a(ApplyListener.java:131)]kafka数据清理检测线程:Thread-KafkaCleanerMonitor-cccccccccccccc#incremental#apply#incremental#1_1740741405034结束!

[WARN ][2025-02-28 19:22:05][cn.com.panwei.incremental.a.h.eC(ApplyMasterTask.java:271)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务中断

java.lang.InterruptedException

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.reportInterruptAfterWait(AbstractQueuedSynchronizer.java:2014)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.awaitNanos(AbstractQueuedSynchronizer.java:2088)

at java.util.concurrent.LinkedBlockingQueue.poll(LinkedBlockingQueue.java:467)

at cn.com.atlasdata.incremental.a.h.eC(ApplyMasterTask.java:190)

at cn.com.atlasdata.incremental.l.a.a(ApplyListener.java:99)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.a.h.eC(ApplyMasterTask.java:278)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:数据应用任务已结束!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.a.a(ApplyListener.java:109)]数据应用线程:Thread-Apply-cccccccccccccc#incremental#apply#incremental#1_1740741405034结束!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.a.start(ApplyListener.java:175)]task:cccccccccccccc#incremental#apply#incremental#1 executorService has been terminated!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.a.start(ApplyListener.java:185)]task:cccccccccccccc#incremental#apply#incremental#1 scheduledService has been terminated!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:1848)]task:cccccccccccccc#incremental#apply#incremental#1,表crw.test_crw启用触发器成功

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.a.start(ApplyListener.java:197)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务:<应用端>停止,任务监听停止!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.x.d.I(IncrementalApplyTask.java:200)]TaskID:【cccccccccccccc#incremental#apply#incremental#1】#应用任务运行结束!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.h.a.b(RpcHandler.java:246)]任务结束,task:cccccccccccccc#incremental#apply#incremental#1,taskType:apply,status:stop,msg:success

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.h.a.E(RpcHandler.java:166)]end:RpcCallHandler-->runningTaskCount:1,hasRanTaskCount:21

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.h.a.E(RpcHandler.java:168)]end:task:cccccccccccccc#incremental#apply#incremental#1,taskType:apply,RPC请求参数:{jobName=cccccccccccccc, jobid=cccccccccccccc#incremental, tasktype=apply, thread=Y, jobType=incremental, jobtype=incremental, jobname=cccccccccccccc, taskid=cccccccccccccc#incremental#apply#incremental#1},应答参数:{msg=success, result=ok, code=0, status=stop}

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.b.start(CollectListener.java:209)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 【采集端】停止任务监听

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.d.g.d.close(XStreamCollector.java:625)]task:cccccccccccccc#incremental#collect#incremental#1,采集器关闭

[ERROR][2025-02-28 19:22:05][cn.com.panwei.incremental.v.a.od(RecordStream.java:125)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 采集因中断而停止

java.lang.InterruptedException

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.reportInterruptAfterWait(AbstractQueuedSynchronizer.java:2014)

at java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.await(AbstractQueuedSynchronizer.java:2048)

at java.util.concurrent.LinkedBlockingQueue.take(LinkedBlockingQueue.java:442)

at cn.com.atlasdata.incremental.v.a.od(RecordStream.java:100)

at cn.com.atlasdata.incremental.v.a.start(RecordStream.java:71)

at cn.com.atlasdata.incremental.l.b.c(CollectListener.java:119)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:750)

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.b.c(CollectListener.java:127)]采集端数据序列化线程:Thread-Stream-cccccccccccccc#incremental#collect#incremental#1_1740741406065结束!

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.l.b.b(CollectListener.java:144)]采集端数据发送线程:Thread-Send-cccccccccccccc#incremental#collect#incremental#1_1740741406065结束!

[WARN ][2025-02-28 19:22:05][cn.com.panwei.incremental.d.g.d.start(XStreamCollector.java:561)]采集任务#taskID:【cccccccccccccc#incremental#collect#incremental#1】, 采集因中断而停止

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.d.g.d.kf(XStreamCollector.java:685)]task:cccccccccccccc#incremental#collect#incremental#1,[采集端]子线程1[数据采集器]即将关闭

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.d.g.d.kf(XStreamCollector.java:689)]task:cccccccccccccc#incremental#collect#incremental#1,出站服务器:CCCCCCCCCCCCCC_SERVERNAME,即将断开

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.d.g.d.kf(XStreamCollector.java:692)]task:cccccccccccccc#incremental#collect#incremental#1,出站服务器:CCCCCCCCCCCCCC_SERVERNAME,已断开

[INFO ][2025-02-28 19:22:05][cn.com.panwei.incremental.d.g.d.kf(XStreamCollector.java:694)]task:cccccccccccccc#incremental#collect#incremental#1,出站服务器:CCCCCCCCCCCCCC_SERVERNAME,捕获过程:CAP$_F033E31740741119615,即将停止

[INFO ][2025-02-28 19:22:08][cn.com.panwei.incremental.d.g.h.T(XStreamUtil.java:663)]XStream停止捕获过程:CAP$_F033E31740741119615成功

[INFO ][2025-02-28 19:22:08][cn.com.panwei.incremental.d.g.d.kf(XStreamCollector.java:696)]task:cccccccccccccc#incremental#collect#incremental#1,出站服务器:CCCCCCCCCCCCCC_SERVERNAME,捕获过程:CAP$_F033E31740741119615,已停止

[INFO ][2025-02-28 19:22:08][cn.com.panwei.incremental.d.g.d.kf(XStreamCollector.java:698)]task:cccccccccccccc#incremental#collect#incremental#1,出站服务器:CCCCCCCCCCCCCC_SERVERNAME,apply:CCCCCCCCCCCCCC_SERVERNAME,即将停止

[INFO ][2025-02-28 19:22:13][cn.com.panwei.incremental.d.g.h.U(XStreamUtil.java:712)]XStream停止应用:CCCCCCCCCCCCCC_SERVERNAME完成

[INFO ][2025-02-28 19:22:13][cn.com.panwei.incremental.d.g.d.kf(XStreamCollector.java:700)]task:cccccccccccccc#incremental#collect#incremental#1,出站服务器:CCCCCCCCCCCCCC_SERVERNAME,apply:CCCCCCCCCCCCCC_SERVERNAME,已停止

[INFO ][2025-02-28 19:22:13][cn.com.panwei.incremental.l.b.i(CollectListener.java:110)]采集端数据采集线程:Thread-Collect-cccccccccccccc#incremental#collect#incremental#1_1740741406065结束!

[INFO ][2025-02-28 19:22:13][cn.com.panwei.incremental.l.b.start(CollectListener.java:221)]采集端Kafka日志大小检测线程:Thread-17结束!

[INFO ][2025-02-28 19:22:13][cn.com.panwei.incremental.l.b.start(CollectListener.java:226)]task:cccccccccccccc#incremental#collect#incremental#1 executorService has been terminated!

[WARN ][2025-02-28 19:22:13][cn.com.panwei.incremental.d.g.d.close(XStreamCollector.java:618)]task:cccccccccccccc#incremental#collect#incremental#1,采集器已关闭,忽略close

[INFO ][2025-02-28 19:22:13][cn.com.panwei.incremental.h.a.b(RpcHandler.java:246)]任务结束,task:cccccccccccccc#incremental#collect#incremental#1,taskType:collect,status:stop,msg:success

[INFO ][2025-02-28 19:22:13][cn.com.panwei.incremental.h.a.E(RpcHandler.java:166)]end:RpcCallHandler-->runningTaskCount:0,hasRanTaskCount:22

[INFO ][2025-02-28 19:22:13][cn.com.panwei.incremental.h.a.E(RpcHandler.java:168)]end:task:cccccccccccccc#incremental#collect#incremental#1,taskType:collect,RPC请求参数:{jobName=cccccccccccccc, jobid=cccccccccccccc#incremental, tasktype=collect, thread=Y, jobType=incremental, jobtype=incremental, jobname=cccccccccccccc, taskid=cccccccccccccc#incremental#collect#incremental#1},应答参数:{msg=success, result=ok, code=0, status=stop}
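After Stop Task, each subtask writes a task-end line from `RpcHandler.java:246` of the form `taskType:<type>,status:<status>,msg:<msg>`. Above, both `apply` and `collect` end with `status:stop,msg:success`.

The `java.lang.InterruptedException` WARN/ERROR entries in between appear to be logged while worker threads are interrupted during shutdown. Checking these final status lines is therefore more informative than scanning for ERROR.

The following is a minimal sketch that extracts those fields and checks that every subtask stopped. It assumes the log is saved to a file and keeps this line format. Both function names are hypothetical.

```shell
# Hypothetical helper: print "<taskType> <status> <msg>" for every task-end line.
# Only lines containing "taskType:X,status:Y,msg:Z" match; RPC summary lines such as
# "taskType:StopTask,RPC..." are ignored.
task_end_status() {
  sed -n 's/.*taskType:\([A-Za-z]*\),status:\([A-Za-z]*\),msg:\([A-Za-z]*\).*/\1 \2 \3/p' "$1"
}

# Hypothetical check: succeed only if at least one task-end line exists
# and every one of them reports status "stop".
all_tasks_stopped() {
  local statuses
  statuses=$(task_end_status "$1" | awk '{print $2}')
  [ -n "$statuses" ] || return 1                 # no task-end lines found
  ! printf '%s\n' "$statuses" | grep -qv '^stop$'  # fail if any status is not "stop"
}
```

For the log above, `task_end_status` would print `apply stop success` followed by `collect stop success`.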

Delete the task:

[INFO ][2025-02-28 19:23:01][cn.com.panwei.incremental.h.a.E(RpcHandler.java:76)]begin:RpcCallHandler-->runningTaskCount:1,param:{deletereplicationslot=false, jobid=cccccccccccccc#incremental, tasktype=removecollectserver, thread=N, jobtype=incremental, taskid=removecollectserver, jobname=cccccccccccccc}

[INFO ][2025-02-28 19:23:01][cn.com.panwei.incremental.x.h.J(IncrementalTaskConf.java:686)]查询作业数据源sql:[select dbid, targetdbid, targetdbtype, jobparameters from panwei_dtp.tb_portal_job where jobname = 'cccccccccccccc' limit 1], jobName:[cccccccccccccc]

[INFO ][2025-02-28 19:23:01][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:ccccccc,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb

[INFO ][2025-02-28 19:23:01][cn.com.panwei.incremental.util.j.a(IncrementalHelper.java:98)]getDataSourceConf dbId:oracle,isTargetDb:false,pgConn:jdbc:postgresql://127.0.0.1:31003/panweidb