
1

Using Single Quotes ('), Double Quotes ("), and Backticks (`) in Shell Scripts

1.1

Single quotes (')

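Purpose: full (strong) quoting. It protects every character in the string; no variable substitution or command substitution takes place.

Behavior: every character between the quotes is output exactly as written, without any interpretation.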

VAR="world"

echo 'Hello, $VAR'  # Output: Hello, $VAR
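The following bash sketch is a quick way to check this behavior. The function name `show_single` is only for illustration. It also covers a frequent stumbling block: a single quote cannot appear inside single quotes, not even escaped with a backslash. The usual workaround is to close the quoted string, add an escaped quote `\'`, and then reopen it.

```bash
#!/usr/bin/env bash
# Single quotes: no expansion of any kind happens between them.
show_single() {
  local VAR="world"
  echo 'Hello, $VAR'          # variable is not expanded
  echo 'Today is `date`'      # command substitution is not performed
  echo 'It'\''s literal'      # close quote, escaped quote, reopen quote
}

show_single
```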

1.2

Double quotes (")

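Purpose: partial (weak) quoting. It protects most characters in the string, but variable substitution and command substitution still take place.

Behavior: variables and commands inside the quotes are expanded (`$`, backticks, and `\` keep their special meaning); all other characters are output literally.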

VAR="world"

echo "Hello, $VAR"  # Output: Hello, world
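This sketch shows what "partial" quoting means in practice. The function and variable names are illustrative. Inside double quotes, `$` and backticks are still interpreted, and a backslash can escape them. Quoting a variable also protects its value from word splitting, which is a common reason to write `"$VAR"` rather than `$VAR`.

```bash
#!/usr/bin/env bash
# Double quotes: $ and backticks are still interpreted; \ can escape them.
show_double() {
  local VAR="world"
  local SPACED="a    b"     # four spaces between a and b
  echo "Hello, $VAR"        # variable expanded: Hello, world
  echo "Cost: \$5"          # \$ keeps the dollar sign literal: Cost: $5
  echo "$SPACED"            # quoted: the four spaces survive
  echo $SPACED              # unquoted: word splitting leaves a single space
}

show_double
```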

1.3

Backticks (`)

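Purpose: command substitution. The command between the backticks is executed, and its output is used as the result.

Behavior: the command runs, and its standard output replaces the whole backtick expression.

Note: backticks are the legacy form of command substitution; `$()` is preferred.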

DATE=`date`

echo "Current date and time: $DATE"  # prints the current date and time
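Backticks and `$()` share one behavior that often surprises people: the shell removes all trailing newlines from the captured output. Newlines in the middle of the output are kept. The following is a minimal sketch with an illustrative function name:

```bash
#!/usr/bin/env bash
# Command substitution captures stdout and strips every trailing newline.
demo_trailing_newlines() {
  local a b
  a=`printf 'abc\n\n\n'`      # legacy backtick form
  b=$(printf 'abc\n\n\n')     # modern form, same behavior
  echo "${#a} ${#b}"          # both lengths are 3: the newlines are gone
}

demo_trailing_newlines
```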

1.4

Prefer $() for command substitution

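It is more modern and easier to read.

It nests cleanly, whereas nesting backticks is awkward because each inner backtick has to be escaped with a backslash.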

DATE=$(date)

echo "Current date and time: $DATE"  # prints the current date and time

# Nested command substitution example

OUTER=$(echo "Outer $(echo "Inner")")

echo "$OUTER"  # Output: Outer Inner
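The following sketch writes the same substitution both ways so the nesting difference is concrete. The function names are illustrative. With backticks, the inner pair must be escaped; otherwise the shell would end the outer substitution at the second backtick. `$()` needs no escaping at any depth.

```bash
#!/usr/bin/env bash
nested_legacy() {
  local OUTER
  # The inner backticks are escaped; an unescaped ` would close the outer substitution.
  OUTER=`echo Outer \`echo Inner\``
  echo "$OUTER"
}

nested_modern() {
  local OUTER
  # $() nests naturally, no escaping required.
  OUTER=$(echo "Outer $(echo "Inner")")
  echo "$OUTER"
}

nested_legacy   # Outer Inner
nested_modern   # Outer Inner
```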

1.5

Use cases

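Single quotes: use them when nothing in the string should be interpreted, for example regular expressions or text containing special characters.

Double quotes: use them when the string needs variable or command substitution.

Backticks / $(): use them when you need to run a command and use its output.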
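The following sketch applies these rules side by side. The names are illustrative. The `$5` line shows a typical trap: inside double quotes, the shell reads `$5` as the fifth positional parameter. That parameter is empty here, so only the single-quoted version prints the text as written.

```bash
#!/usr/bin/env bash
quote_choices() {
  set --                                   # clear positional parameters so $5 is empty
  local user="alice"
  echo "Price: $5"                         # double quotes: $5 expands (to nothing here)
  echo 'Price: $5'                         # single quotes: printed literally
  echo "User: $(printf '%s' "$user" | tr '[:lower:]' '[:upper:]')"  # $() inside double quotes
  echo 'ab12' | grep -E '^[a-z]+[0-9]+$'   # single quotes keep the regex away from the shell
}

quote_choices
```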

2

Output Variables and File Passing in DolphinScheduler

2.1

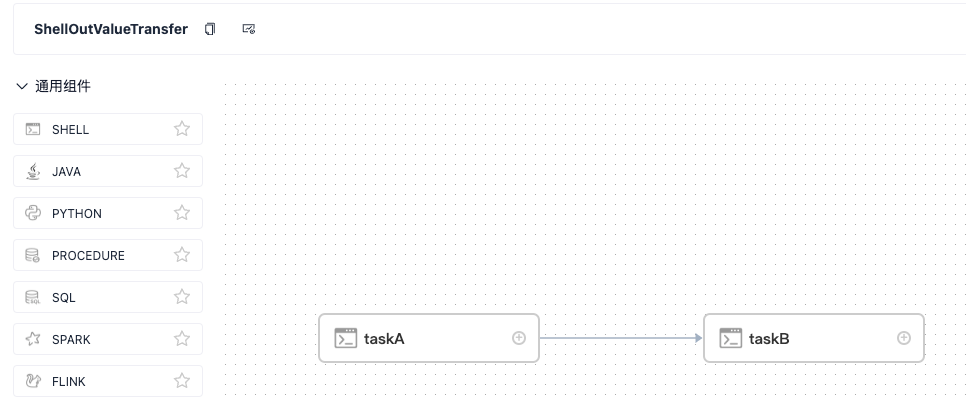

Shell output variables

2.1.1 Workflow

2.1.2 Task configuration

taskA

echo 'taskA'

echo "#{setValue(linesNum=${lines_num})}"

echo '${setValue(words=20)}'

taskB

echo 'taskB'

echo ${linesNum}

echo ${words}
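The two setValue lines in taskA apply section 1 directly, and you can check their effect by running the same echo statements in plain bash. This sketch assumes `lines_num=100` to match the value in the log. In the real task, the value presumably comes from a DolphinScheduler parameter, and the output parameters are typically declared in the task's custom parameters with direction OUT.

Double quotes let `${lines_num}` expand inside the `#{setValue(...)}` form. The `${setValue(...)}` form needs single quotes, because in double quotes bash tries to expand it itself and fails with a "bad substitution" error. The `grep` filter is only a hypothetical way to pick out the marker lines, not how DolphinScheduler parses the output.

```bash
#!/usr/bin/env bash
# Reproduce taskA's stdout locally; lines_num=100 is an assumption taken from the log.
taska_stdout() {
  local lines_num=100
  echo 'taskA'
  echo "#{setValue(linesNum=${lines_num})}"   # double quotes: ${lines_num} expands, #{...} stays literal
  echo '${setValue(words=20)}'                # single quotes: ${...} reaches stdout untouched
}

# Hypothetical filter: keep only lines that look like output-parameter markers.
taska_stdout | grep -E '^[$#]\{setValue\(.*\)\}$'

# In double quotes, bash parses the ${...} form itself and rejects it.
if bash -c 'echo "${setValue(words=20)}"' 2>/dev/null; then
  echo "unexpected: expansion succeeded"
else
  echo "double-quoted \${setValue(...)} is rejected by bash"
fi
```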

2.1.3 Result output

The part to focus on is taskB's output:

[INFO] 2024-07-05 10:09:54.539 +0800 - Success initialized task plugin instance successfully

[INFO] 2024-07-05 10:09:54.539 +0800 - Set taskVarPool: [{"prop":"linesNum","direct":"IN","type":"VARCHAR","value":"100"},{"prop":"words","direct":"IN","type":"VARCHAR","value":"20"}] successfully

[INFO] 2024-07-05 10:09:54.539 +0800 - ***********************************************************************************************

[INFO] 2024-07-05 10:09:54.539 +0800 - ********************************* Execute task instance *************************************

[INFO] 2024-07-05 10:09:54.539 +0800 - ***********************************************************************************************

[INFO] 2024-07-05 10:09:54.540 +0800 - Final Shell file is:

[INFO] 2024-07-05 10:09:54.540 +0800 - ****************************** Script Content *****************************************************************

[INFO] 2024-07-05 10:09:54.540 +0800 - #!/bin/bash

BASEDIR=$(cd `dirname $0`; pwd)

cd $BASEDIR

source /etc/profile

export HADOOP_HOME=${HADOOP_HOME:-/home/hadoop-3.3.1}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-/opt/soft/hadoop/etc/hadoop}

export SPARK_HOME=${SPARK_HOME:-/home/spark-3.2.1-bin-hadoop3.2}

export PYTHON_HOME=${PYTHON_HOME:-/opt/soft/python}

export HIVE_HOME=${HIVE_HOME:-/home/hive-3.1.2}

export FLINK_HOME=/home/flink-1.18.1

export DATAX_HOME=${DATAX_HOME:-/opt/soft/datax}

export SEATUNNEL_HOME=/opt/software/seatunnel

export CHUNJUN_HOME=${CHUNJUN_HOME:-/opt/soft/chunjun}

export PATH=$HADOOP_HOME/bin:$SPARK_HOME/bin:$PYTHON_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin:$FLINK_HOME/bin:$DATAX_HOME/bin:$SEATUNNEL_HOME/bin:$CHUNJUN_HOME/bin:$PATH

echo 'taskB'

echo 100

echo 20

[INFO] 2024-07-05 10:09:54.540 +0800 - ****************************** Script Content *****************************************************************

[INFO] 2024-07-05 10:09:54.540 +0800 - Executing shell command : sudo -u root -i /tmp/dolphinscheduler/exec/process/root/13850571680800/14171397340576_3/1961/1454/1961_1454.sh

[INFO] 2024-07-05 10:09:54.544 +0800 - process start, process id is: 588336

[INFO] 2024-07-05 10:09:56.544 +0800 - ->

taskB

100

20

[INFO] 2024-07-05 10:09:56.546 +0800 - process has exited. execute path:/tmp/dolphinscheduler/exec/process/root/13850571680800/14171397340576_3/1961/1454, processId:588336 ,exitStatusCode:0 ,processWaitForStatus:true ,processExitValue:0
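The Script Content in this log shows that `echo ${linesNum}` had already become `echo 100` before bash started. The values from the task's var pool are substituted into the script text before execution rather than exported as shell variables. The sketch below mimics that step with plain bash string replacement so you can try the effect locally. `render` and its `name=value` arguments are hypothetical, and this is not DolphinScheduler's implementation.

```bash
#!/usr/bin/env bash
# Hypothetical stand-in for the placeholder substitution done before a Shell task runs:
# every literal ${name} in the template is replaced by its value.
render() {
  local text=$1 pair name value ph
  shift
  for pair in "$@"; do              # each argument has the form name=value
    name=${pair%%=*}
    value=${pair#*=}
    ph="\${$name}"                  # the literal text ${name}
    text=${text//"$ph"/"$value"}    # quoted pattern and replacement are used literally
  done
  printf '%s\n' "$text"
}

template=$'echo \'taskB\'\necho ${linesNum}\necho ${words}'
render "$template" linesNum=100 words=20
```

Because the value lands in the script as raw text, bash then interprets any quotes or special characters it contains. The LIST output in section 2.3 shows exactly this.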

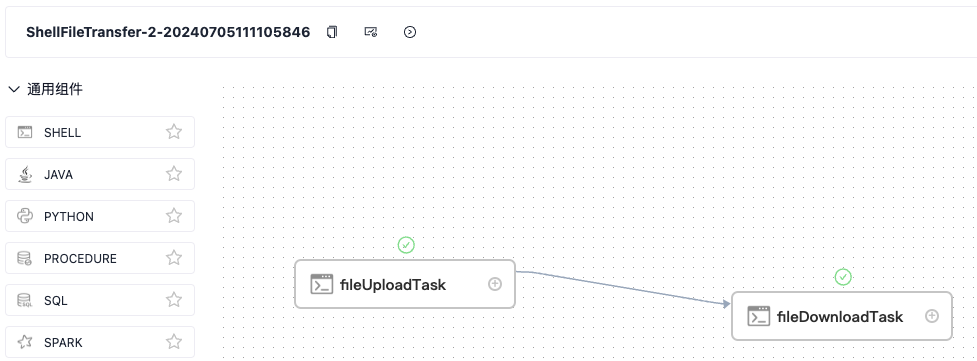

2.2

Shell file passing

2.2.1 Workflow

2.2.2 Task configuration

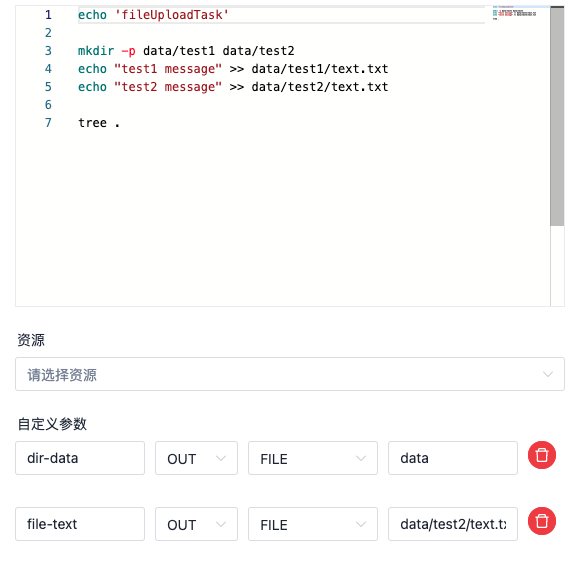

fileUploadTask

echo 'fileUploadTask'

mkdir -p data/test1 data/test2

echo "test1 message" >> data/test1/text.txt

echo "test2 message" >> data/test2/text.txt

tree .

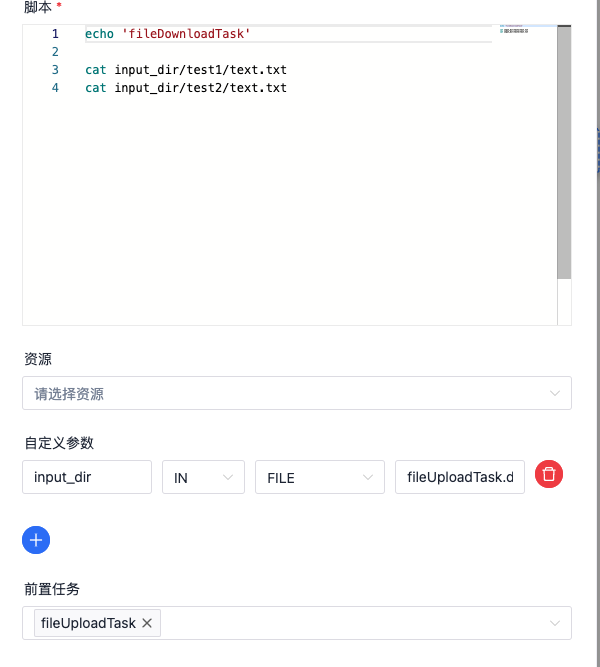

fileDownloadTask

echo 'fileDownloadTask'

cat input_dir/test1/text.txt

cat input_dir/test2/text.txt
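You can rehearse the file-passing round trip locally. Judging from the taskVarPool entry in the log, where `fileUploadTask.dir-data` points at a `..._data_ds_pack.zip` file, the `data` directory is packed as a zip archive on the upload side. The download side then reads the unpacked contents from `input_dir`. This sketch uses `tar` instead of zip only because tar is available almost everywhere. The directory names come from the article; `rehearse_file_passing` and the temporary directory are hypothetical.

```bash
#!/usr/bin/env bash
# Local rehearsal of the 2.2 workflow; tar stands in for DolphinScheduler's zip packing.
rehearse_file_passing() (
  set -e
  work=$(mktemp -d)
  trap 'rm -rf "$work"' EXIT
  cd "$work"

  # fileUploadTask side: build the directory tree that is shipped downstream.
  mkdir -p data/test1 data/test2
  echo "test1 message" >> data/test1/text.txt
  echo "test2 message" >> data/test2/text.txt
  tar -cf "$work/data_pack.tar" -C data .      # pack the contents of data/

  # fileDownloadTask side: unpack into input_dir and read the files as the task does.
  mkdir -p input_dir
  tar -xf "$work/data_pack.tar" -C input_dir
  cat input_dir/test1/text.txt
  cat input_dir/test2/text.txt
)

rehearse_file_passing
```

The function body runs in a subshell `( ... )`, so the `cd`, `set -e`, and cleanup trap do not leak into the calling shell.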

2.2.3 Result output

[INFO] 2024-07-05 11:11:08.160 +0800 - Success initialized task plugin instance successfully

[INFO] 2024-07-05 11:11:08.160 +0800 - Set taskVarPool: [{"prop":"fileUploadTask.file-text","direct":"IN","type":"FILE","value":"DATA_TRANSFER/20240705/14171986797856/2_1962/fileUploadTask_1455_text.txt"},{"prop":"fileUploadTask.dir-data","direct":"IN","type":"FILE","value":"DATA_TRANSFER/20240705/14171986797856/2_1962/fileUploadTask_1455_data_ds_pack.zip"}] successfully

[INFO] 2024-07-05 11:11:08.160 +0800 - ***********************************************************************************************

[INFO] 2024-07-05 11:11:08.160 +0800 - ********************************* Execute task instance *************************************

[INFO] 2024-07-05 11:11:08.160 +0800 - ***********************************************************************************************

[INFO] 2024-07-05 11:11:08.160 +0800 - Final Shell file is:

[INFO] 2024-07-05 11:11:08.160 +0800 - ****************************** Script Content *****************************************************************

[INFO] 2024-07-05 11:11:08.160 +0800 - #!/bin/bash

BASEDIR=$(cd `dirname $0`; pwd)

cd $BASEDIR

source /etc/profile

export HADOOP_HOME=${HADOOP_HOME:-/home/hadoop-3.3.1}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-/opt/soft/hadoop/etc/hadoop}

export SPARK_HOME=${SPARK_HOME:-/home/spark-3.2.1-bin-hadoop3.2}

export PYTHON_HOME=${PYTHON_HOME:-/opt/soft/python}

export HIVE_HOME=${HIVE_HOME:-/home/hive-3.1.2}

export FLINK_HOME=/home/flink-1.18.1

export DATAX_HOME=${DATAX_HOME:-/opt/soft/datax}

export SEATUNNEL_HOME=/opt/software/seatunnel

export CHUNJUN_HOME=${CHUNJUN_HOME:-/opt/soft/chunjun}

export PATH=$HADOOP_HOME/bin:$SPARK_HOME/bin:$PYTHON_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin:$FLINK_HOME/bin:$DATAX_HOME/bin:$SEATUNNEL_HOME/bin:$CHUNJUN_HOME/bin:$PATH

echo 'fileDownloadTask'

cat input_dir/test1/text.txt

cat input_dir/test2/text.txt

[INFO] 2024-07-05 11:11:08.161 +0800 - ****************************** Script Content *****************************************************************

[INFO] 2024-07-05 11:11:08.161 +0800 - Executing shell command : sudo -u root -i /tmp/dolphinscheduler/exec/process/root/13850571680800/14171986797856_2/1962/1456/1962_1456.sh

[INFO] 2024-07-05 11:11:08.164 +0800 - process start, process id is: 590323

[INFO] 2024-07-05 11:11:10.164 +0800 - ->

fileDownloadTask

test1 message

test2 message

[INFO] 2024-07-05 11:11:10.166 +0800 - process has exited. execute path:/tmp/dolphinscheduler/exec/process/root/13850571680800/14171986797856_2/1962/1456, processId:590323 ,exitStatusCode:0 ,processWaitForStatus:true ,processExitValue:0

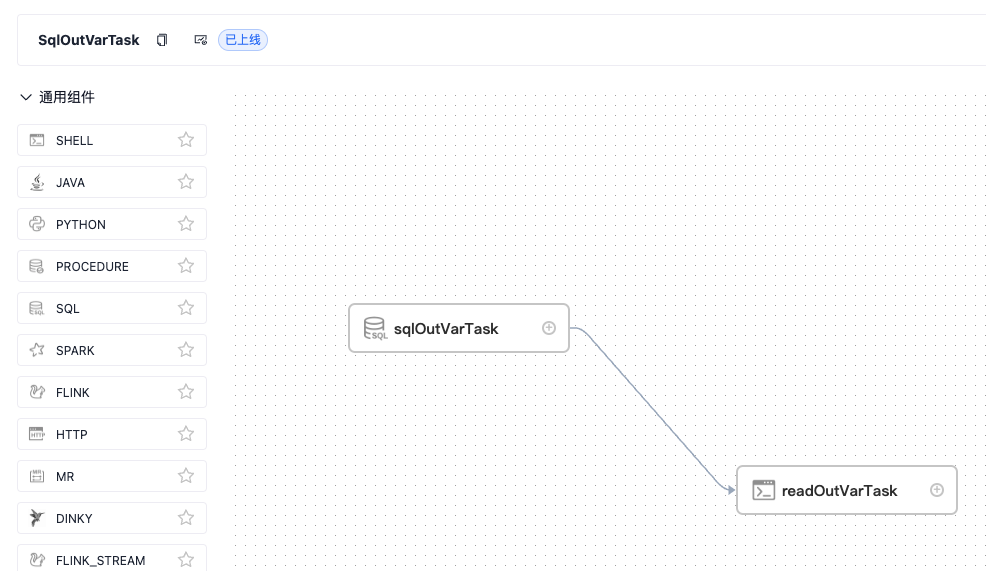

2.3

SQL task output variables

2.3.2 Task configuration

sqlOutVarTask

select user_name as userNameList from t_ds_user

readOutVarTask

echo 'readOutVarTask'

echo ${userNameList}

2.3.3 Result output

readOutVarTask

[INFO] 2024-07-05 11:19:00.294 +0800 - Success initialized task plugin instance successfully

[INFO] 2024-07-05 11:19:00.294 +0800 - Set taskVarPool: [{"prop":"userNameList","direct":"IN","type":"LIST","value":"[\"admin\",\"qiaozhanwei\",\"test\"]"}] successfully

[INFO] 2024-07-05 11:19:00.294 +0800 - ***********************************************************************************************

[INFO] 2024-07-05 11:19:00.294 +0800 - ********************************* Execute task instance *************************************

[INFO] 2024-07-05 11:19:00.294 +0800 - ***********************************************************************************************

[INFO] 2024-07-05 11:19:00.295 +0800 - Final Shell file is:

[INFO] 2024-07-05 11:19:00.295 +0800 - ****************************** Script Content *****************************************************************

[INFO] 2024-07-05 11:19:00.295 +0800 - #!/bin/bash

BASEDIR=$(cd `dirname $0`; pwd)

cd $BASEDIR

source /etc/profile

export HADOOP_HOME=${HADOOP_HOME:-/home/hadoop-3.3.1}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-/opt/soft/hadoop/etc/hadoop}

export SPARK_HOME=${SPARK_HOME:-/home/spark-3.2.1-bin-hadoop3.2}

export PYTHON_HOME=${PYTHON_HOME:-/opt/soft/python}

export HIVE_HOME=${HIVE_HOME:-/home/hive-3.1.2}

export FLINK_HOME=/home/flink-1.18.1

export DATAX_HOME=${DATAX_HOME:-/opt/soft/datax}

export SEATUNNEL_HOME=/opt/software/seatunnel

export CHUNJUN_HOME=${CHUNJUN_HOME:-/opt/soft/chunjun}

export PATH=$HADOOP_HOME/bin:$SPARK_HOME/bin:$PYTHON_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin:$FLINK_HOME/bin:$DATAX_HOME/bin:$SEATUNNEL_HOME/bin:$CHUNJUN_HOME/bin:$PATH

echo 'readOutVarTask'

echo ["admin","qiaozhanwei","test"]

[INFO] 2024-07-05 11:19:00.295 +0800 - ****************************** Script Content *****************************************************************

[INFO] 2024-07-05 11:19:00.295 +0800 - Executing shell command : sudo -u root -i /tmp/dolphinscheduler/exec/process/root/13850571680800/14172048617888_1/1963/1458/1963_1458.sh

[INFO] 2024-07-05 11:19:00.299 +0800 - process start, process id is: 590781

[INFO] 2024-07-05 11:19:02.299 +0800 - ->

readOutVarTask

[admin,qiaozhanwei,test]

[INFO] 2024-07-05 11:19:02.301 +0800 - process has exited. execute path:/tmp/dolphinscheduler/exec/process/root/13850571680800/14172048617888_1/1963/1458, processId:590781 ,exitStatusCode:0 ,processWaitForStatus:true ,processExitValue:0
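The last two output lines of this log apply section 1 directly. The LIST value was inserted into the script text as `["admin","qiaozhanwei","test"]`. Bash then removed the unescaped double quotes during quote removal, so `echo` printed `[admin,qiaozhanwei,test]`. The unquoted word is also a glob pattern; it only printed as-is because no one-character file name in the working directory matched it.

The sketch below reproduces this locally and shows one way to iterate over the names. It assumes the names contain no commas, spaces, or embedded quotes, and `list_names` is a hypothetical helper, not a DolphinScheduler feature. In a real task, writing `echo '${userNameList}'` should likewise keep the quotes, assuming the substitution stays purely textual as the Script Content logs suggest.

```bash
#!/usr/bin/env bash
# Split a simple JSON-style list such as ["a","b"] into one name per line.
list_names() {
  local s=$1 nl=$'\n'
  s=${s#\[}                     # drop the leading [
  s=${s%\]}                     # drop the trailing ]
  s=${s//\"/}                   # drop every double quote
  printf '%s\n' "${s//,/$nl}"   # turn commas into newlines
}

echo ["admin","qiaozhanwei","test"]     # unquoted: quote removal gives [admin,qiaozhanwei,test]
echo '["admin","qiaozhanwei","test"]'   # single-quoted: the JSON text survives intact

list_names '["admin","qiaozhanwei","test"]' | while read -r name; do
  echo "user: $name"
done
```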

Original article: https://segmentfault.com/a/1190000045035406


Join the community

There are many ways to follow the community:

GitHub: https://github.com/apache/dolphinscheduler

Website: https://dolphinscheduler.apache.org/en-us

Developer mailing list: dev@dolphinscheduler.apache.org

X.com: @DolphinSchedule

YouTube: https://www.youtube.com/@apachedolphinscheduler

Slack: https://join.slack.com/t/asf-dolphinscheduler/shared_invite/zt-1cmrxsio1-nJHxRJa44jfkrNL_Nsy9Qg

Likewise, there are many ways to contribute to Apache DolphinScheduler, falling into two broad categories: code and non-code contributions.

📂 Non-code contributions include:

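Improving and translating documentation; translating technical and hands-on articles; contributing articles on practice or internals; becoming an evangelist; community management and answering questions; giving talks; test feedback; user feedback; and more.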

👩‍💻 Code contributions include:

Finding bugs; writing fixes; developing new features; submitting code contributions; taking part in code review; and more.


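This article was reposted from 海豚调度. If you believe it infringes your rights, please email contact@modb.pro with supporting evidence; once the claim is verified, 墨天轮 (modb.pro) will remove the content immediately.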