I. Basic Information

--hosts file

[grid@rac1 ~]$ cat /etc/hosts

#public IP

192.168.115.155 rac1

192.168.115.156 rac2

#VIP

192.168.115.157 rac1-vip

192.168.115.158 rac2-vip

#private IP

192.168.152.155 rac1-priv

192.168.152.156 rac2-priv

#scan

192.168.115.160 rac-scan
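Before touching the cluster, it can help to confirm that every name above is present in the hosts file on each node. The helper below is a hypothetical sketch (`hosts_missing` is not an Oracle tool). It only inspects a hosts-format file and does not query DNS, so if SCAN is resolved through DNS the check does not apply to rac-scan.

```shell
# Hypothetical helper (not an Oracle tool): report which host names are absent
# from a hosts-format file. Comments are stripped; field 1 is the IP address and
# the remaining fields are host names/aliases.
hosts_missing() {
  local file=$1 name
  shift
  local missing=()
  for name in "$@"; do
    if ! awk -v n="$name" '{ sub(/#.*/, ""); for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                           END { exit (found ? 0 : 1) }' "$file"; then
      missing+=("$name")
    fi
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    printf 'missing: %s\n' "${missing[@]}"
    return 1
  fi
}

# Usage on each node (names taken from the hosts file above):
# hosts_missing /etc/hosts rac1 rac2 rac1-vip rac2-vip rac1-priv rac2-priv rac-scan
```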

This test deletes node 2 (rac2).

II. Deleting a Node

2.1 Pre-deletion Environment Checks

2.1.1 Cluster Status Check

--crsctl output

[grid@rac1 ~]$ crsctl status res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 ONLINE OFFLINE STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1 STABLE

ora.qosmserver

1 ONLINE ONLINE rac1 STABLE

ora.rac.db

1 ONLINE ONLINE rac1 Open,HOME=/u01/app/o

racle/product/19.3.0

.0/db_1,STABLE

2 ONLINE ONLINE rac2 Open,HOME=/u01/app/o

racle/product/19.3.0

.0/db_1,STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac1 STABLE

--------------------------------------------------------------------------------

2.1.2 Cluster Membership Check

[grid@rac1 ~]$ olsnodes -s -t

rac1 Active Unpinned

rac2 Active Unpinned
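This check can be scripted so that it fails when any node is not Active. The hypothetical helper below assumes the three-column `olsnodes -s -t` layout shown above (node, status, pin state).

```shell
# Hypothetical helper: read `olsnodes -s -t` output on stdin and fail if any
# node's status (field 2) is not "Active".
all_nodes_active() {
  awk 'NF >= 2 && $2 != "Active" { print "not active: " $1; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}

# Usage (as grid): olsnodes -s -t | all_nodes_active && echo "all nodes active"
```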

2.2 Backups

2.2.1 OCR Backup

[grid@rac1 ~]$ ocrconfig -showbackup

rac1 2023/09/06 12:58:34 +DATA:/rac/OCRBACKUP/backup00.ocr.275.1146833891 724960844

rac2 2023/09/05 10:21:10 +DATA:/rac/OCRBACKUP/backup01.ocr.258.1146738059 724960844

rac2 2023/09/05 10:21:10 +DATA:/rac/OCRBACKUP/day.ocr.259.1146738071 724960844

rac2 2023/09/05 10:21:10 +DATA:/rac/OCRBACKUP/week.ocr.260.1146738071 724960844

[root@rac1 ~]# /u01/app/19.3.0.0/grid/bin/ocrconfig -manualbackup

rac1 2023/09/11 14:38:51 +DATA:/rac/OCRBACKUP/backup_20230911_143851.ocr.280.1147271933 724960844
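Recording the location of the newest OCR backup makes a later restore easier. The hypothetical helper below reduces `ocrconfig -showbackup` output to a single path. It assumes the line layout shown above (node, date, time, path, ID) and skips any line that does not start that way.

```shell
# Hypothetical helper: print the path of the most recent backup listed in
# `ocrconfig -showbackup` output read from stdin. Assumes lines of the form
#   <node> YYYY/MM/DD HH:MM:SS <path> <id>
# Zero-padded dates and times sort correctly as plain strings.
latest_ocr_backup() {
  awk '$2 ~ /^[0-9]+\/[0-9]+\/[0-9]+$/ && NF >= 4 { print $2, $3, $4 }' |
    sort | tail -n 1 | awk '{ print $3 }'
}

# Usage:
# /u01/app/19.3.0.0/grid/bin/ocrconfig -showbackup | latest_ocr_backup
```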

2.2.2 Database Backup

Back up the database with RMAN, or export it with expdp (Data Pump).

2.3 Delete the Database Instance

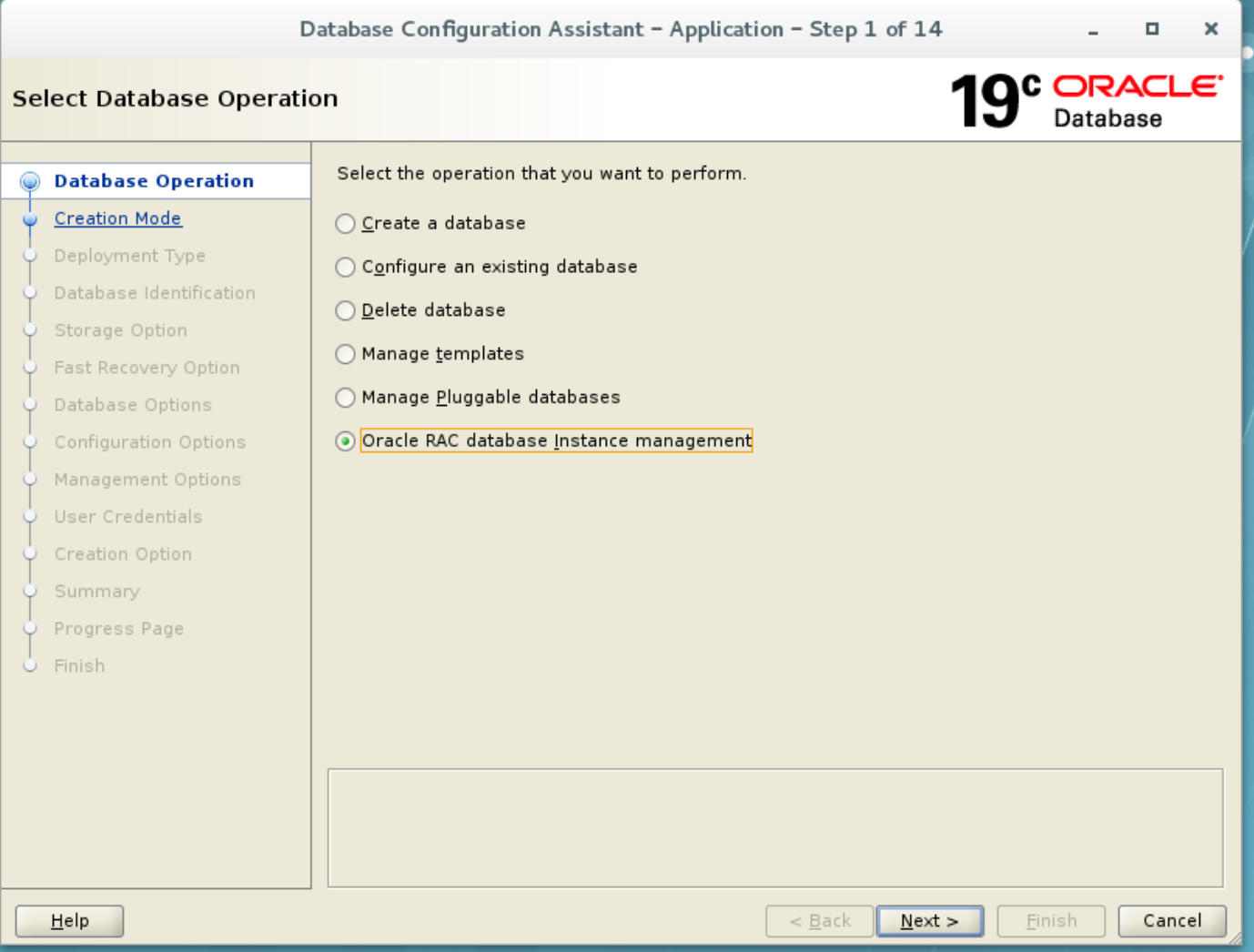

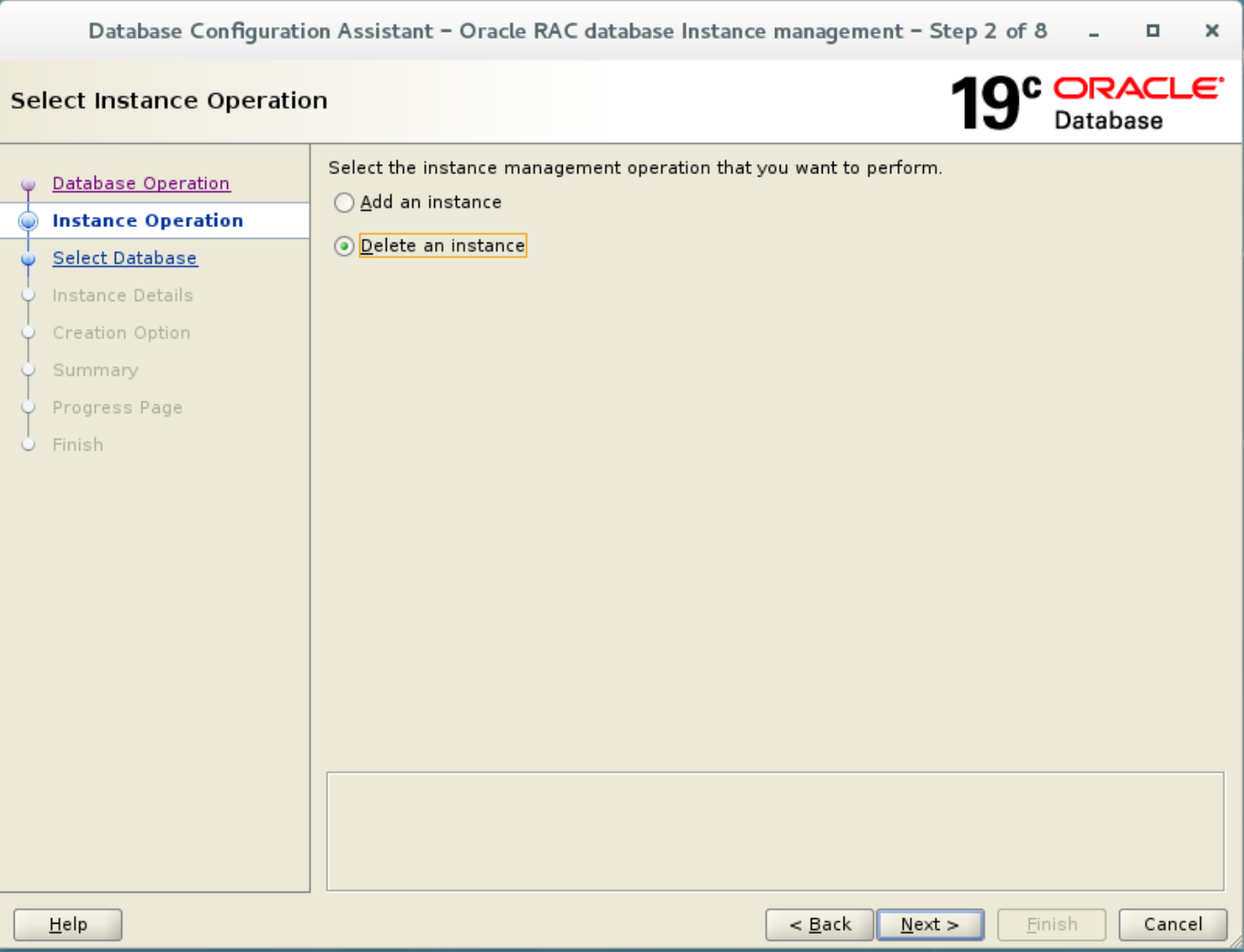

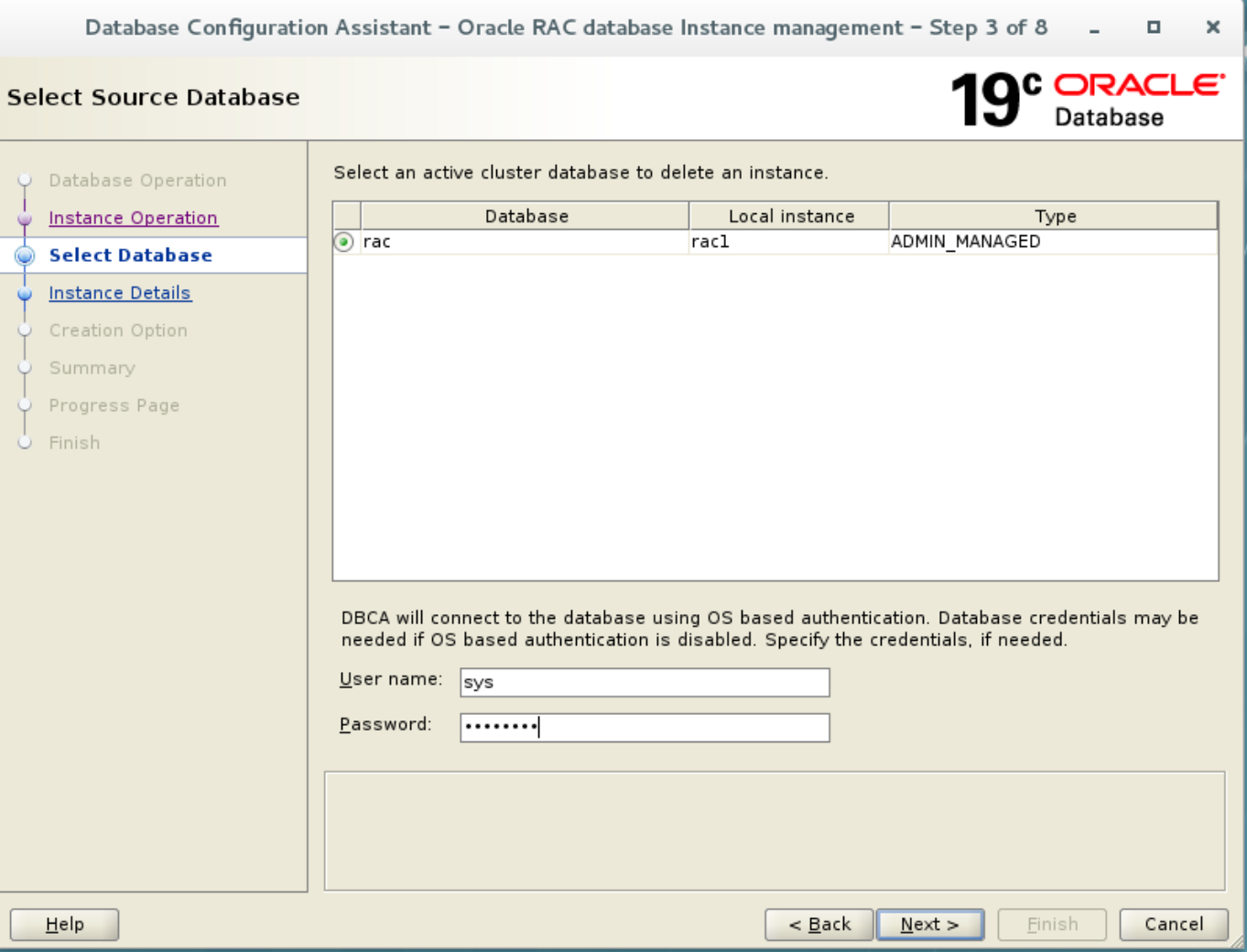

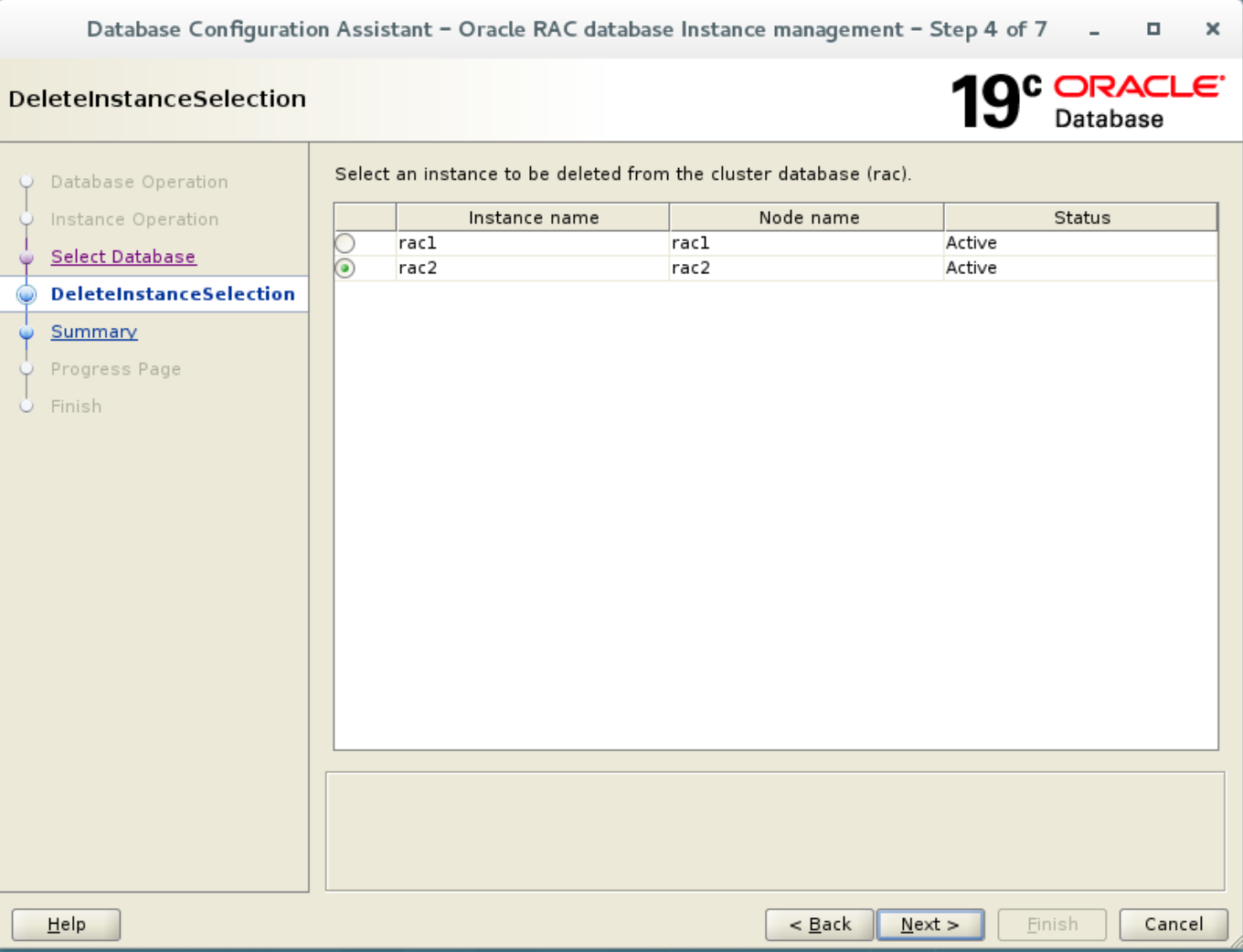

The instance can be deleted either with the DBCA GUI or in silent mode.

2.3.1 Instance Status Check

SQL> select instance_name,status from gv$instance;

INSTANCE_NAME STATUS

---------------- ------------

rac2 OPEN

rac1 OPEN
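The instance check can be scripted so that it fails when an instance is not OPEN. This is a hypothetical helper. The usage comment assumes SQL*Plus runs as the oracle user with OS authentication, with headings turned off so that only data rows reach the filter.

```shell
# Hypothetical helper: read "INSTANCE_NAME STATUS" rows on stdin and print every
# instance whose status is not OPEN; returns non-zero if any is found.
# Header and dashed separator lines are ignored.
non_open_instances() {
  awk 'NF == 2 && $1 != "INSTANCE_NAME" && $1 !~ /^-+$/ && $2 != "OPEN" { print $1; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}

# Usage (as oracle):
# sqlplus -s / as sysdba <<'EOF' | non_open_instances
# set heading off feedback off pagesize 0
# select instance_name, status from gv$instance;
# EOF
```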

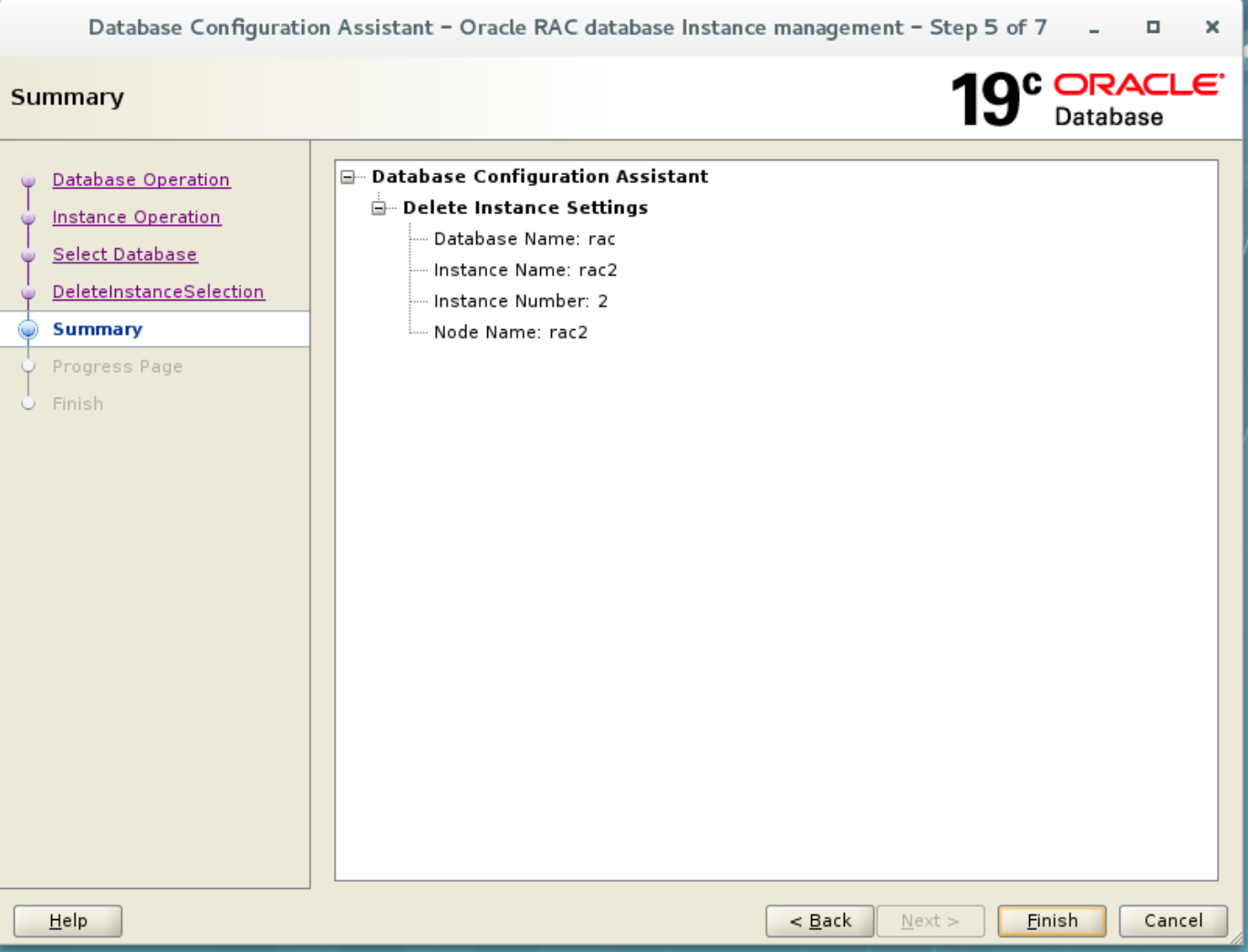

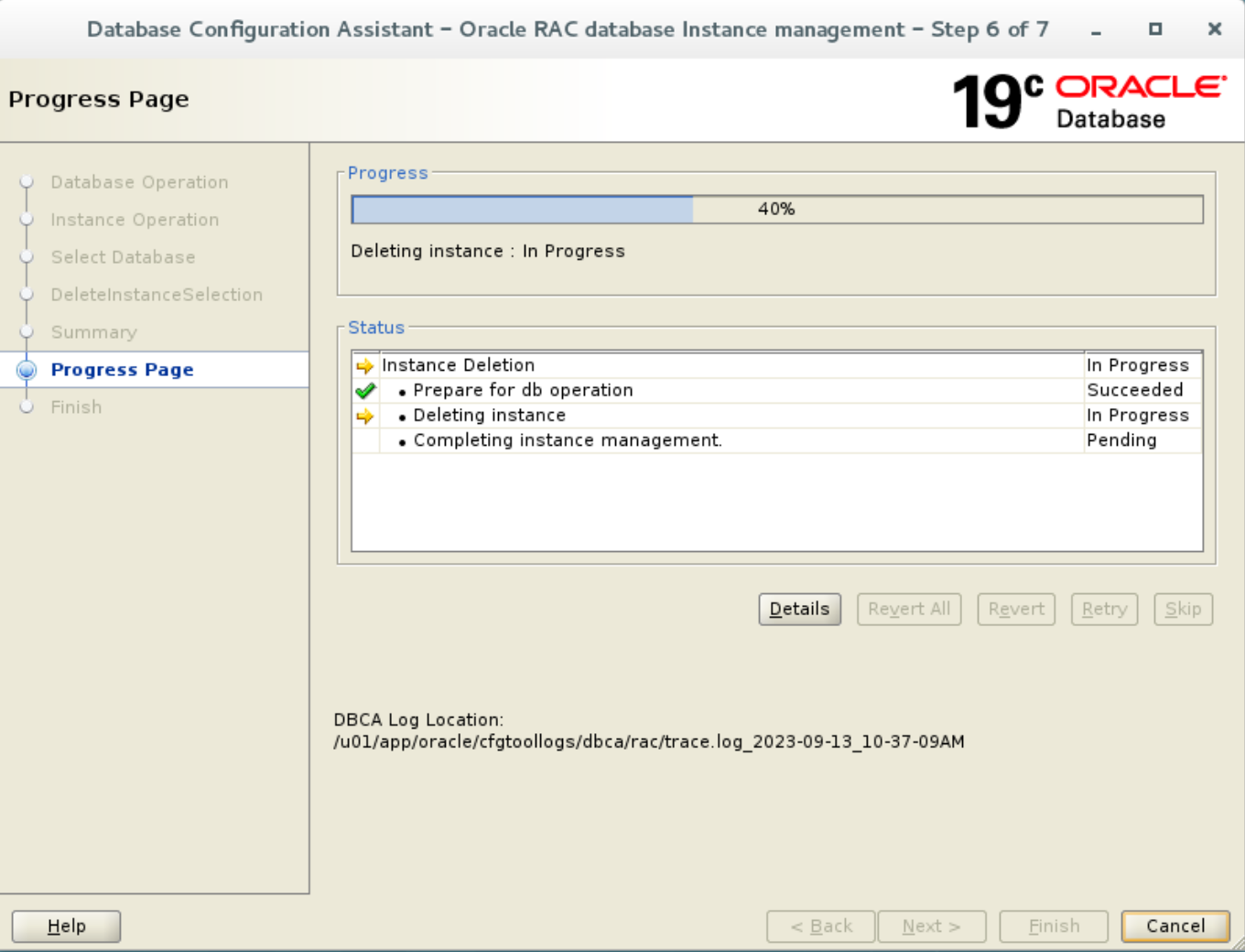

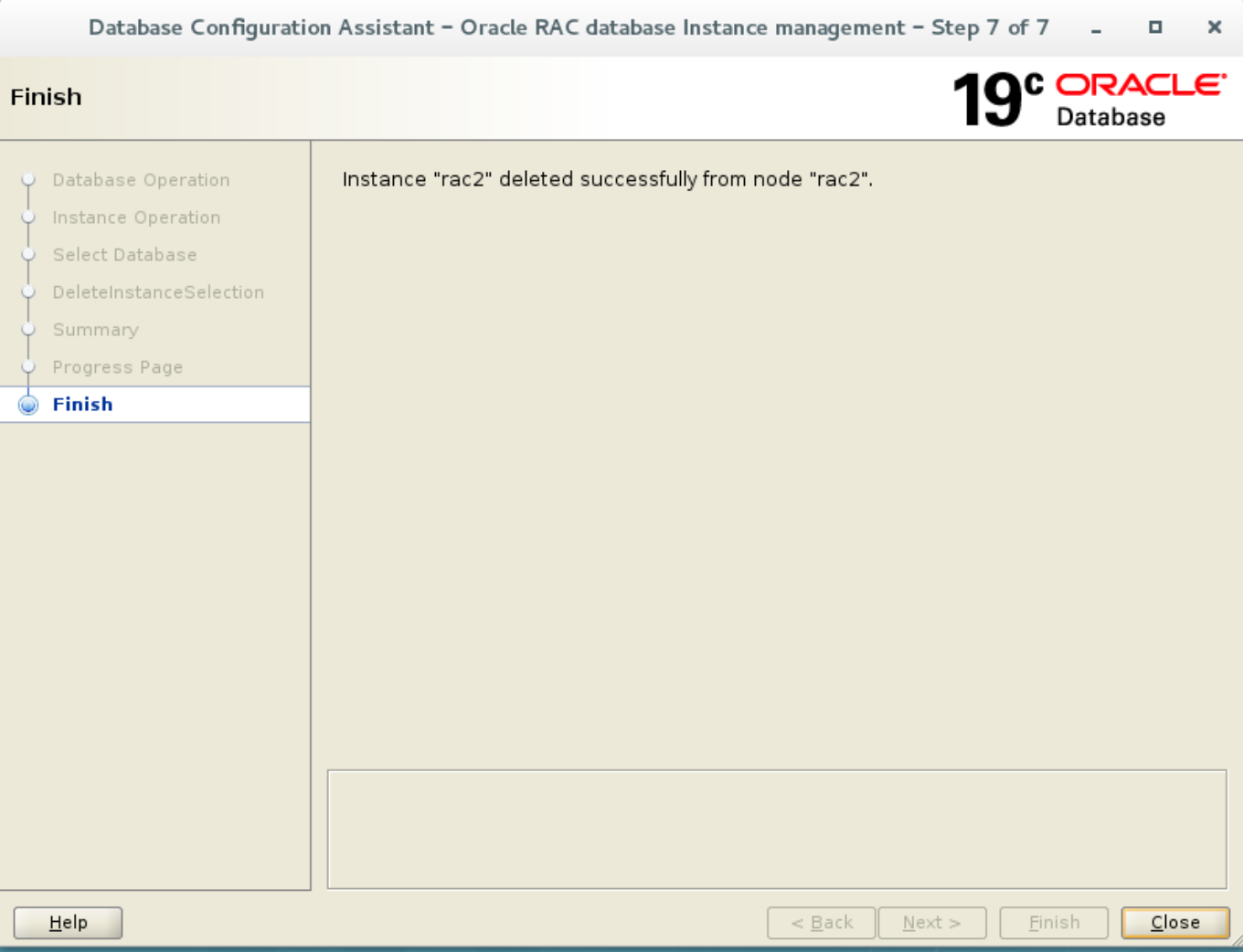

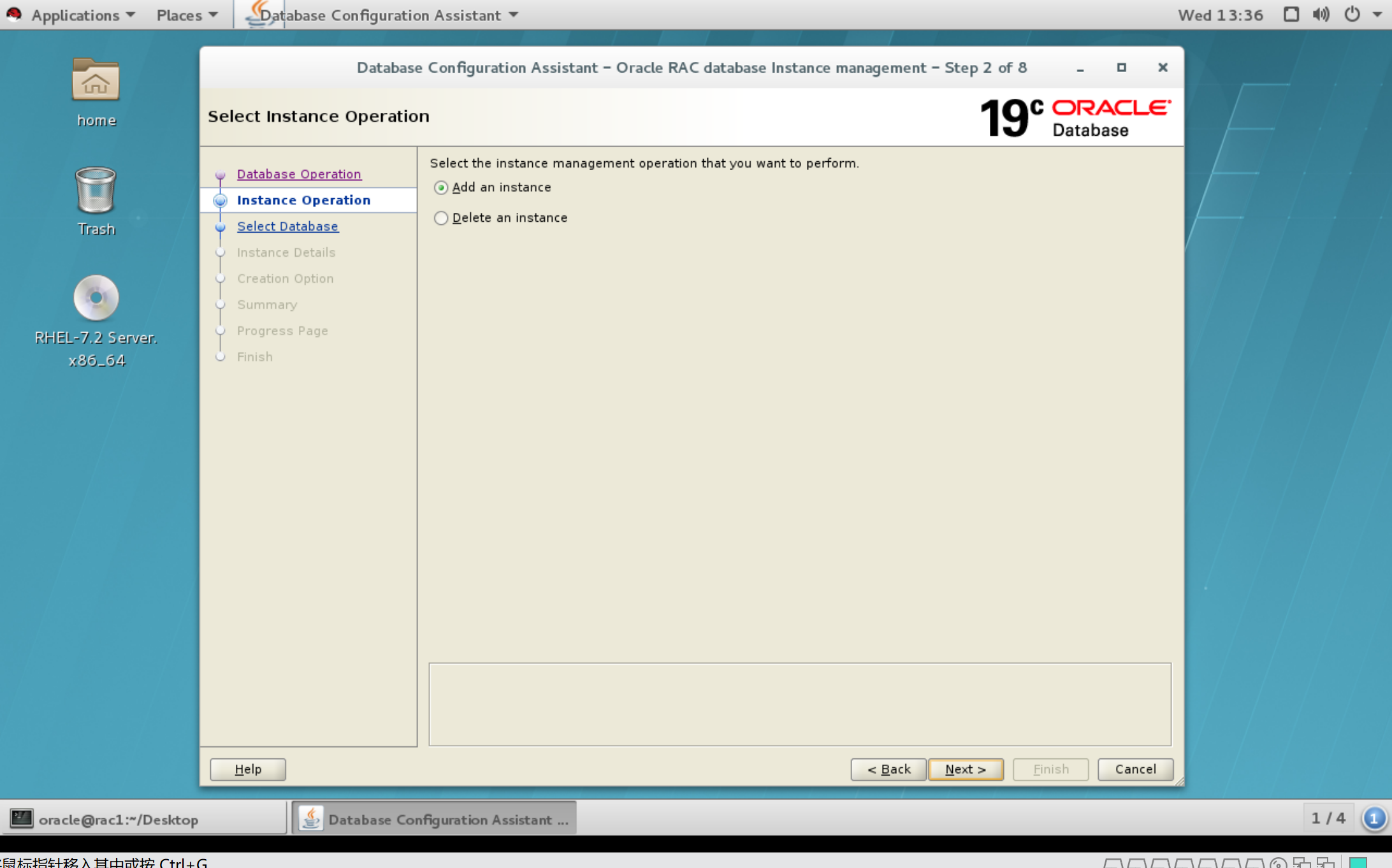

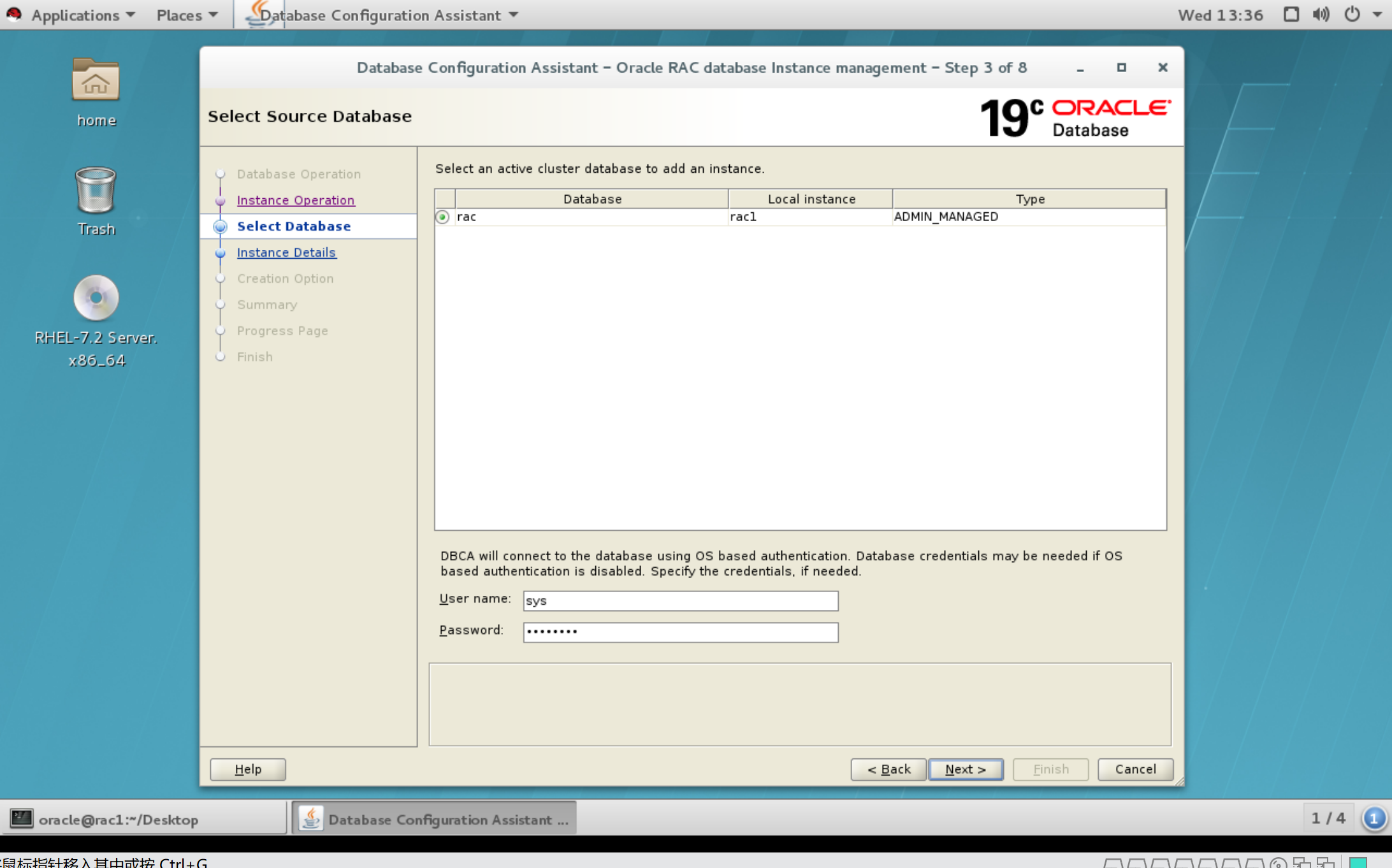

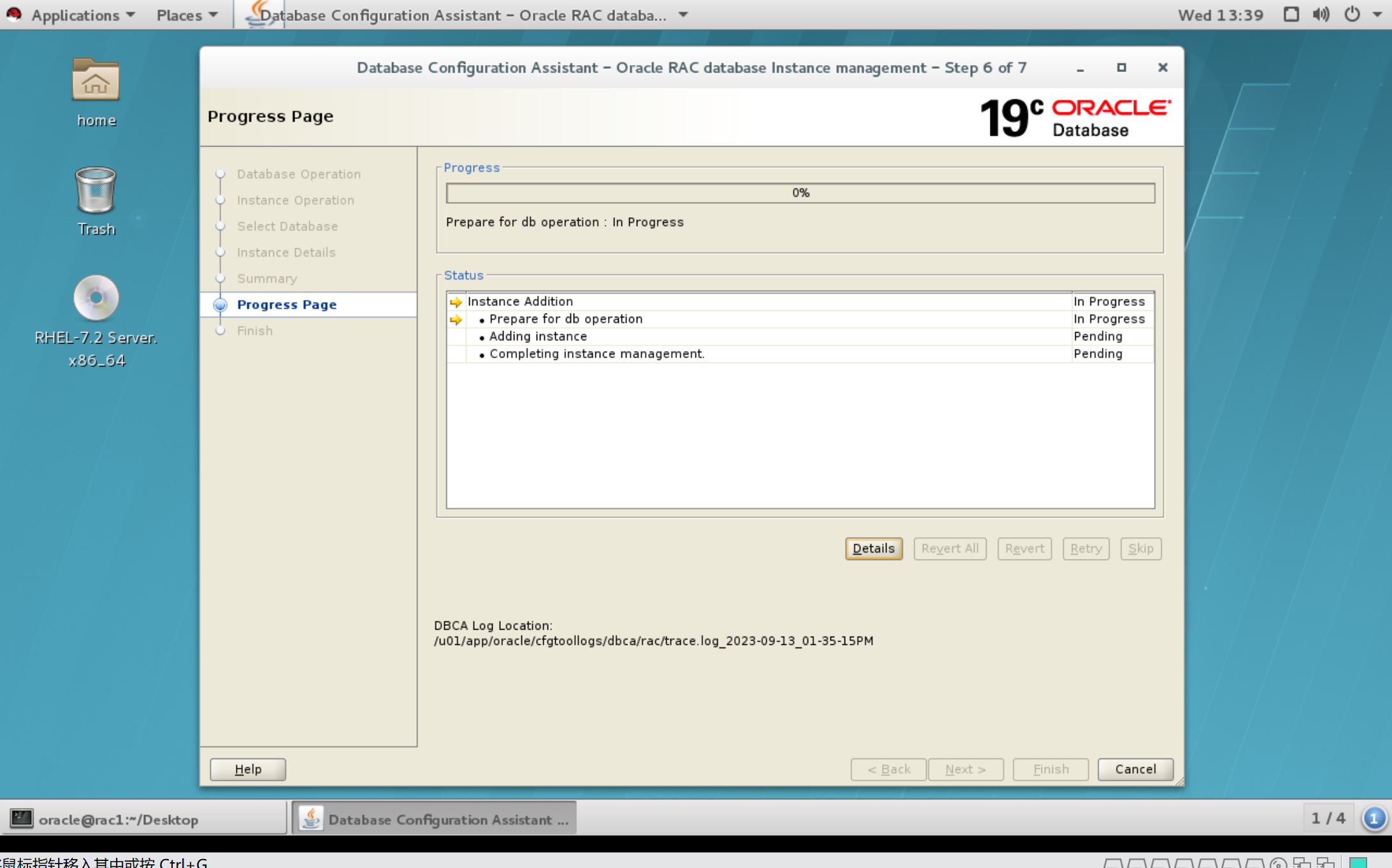

2.3.2 Delete the Database Instance (run on a surviving node)

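Because node 2 is being deleted, the operation is performed on node 1, which remains in the cluster. Here the DBCA GUI is used to delete the node 2 instance.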

If no graphical environment is available, the command line can be used instead:

[oracle@rac1 ~]$ dbca -silent -deleteInstance -nodeList rac2 -gdbName rac -instanceName rac2 -sysDBAUserName sys -sysDBAPassword welcome1

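------------------------------------------------Parameter reference

Delete the instance as the oracle user, running on a surviving node. The parameters are:

-nodeList (the node that hosts the instance to delete)

-gdbName (the global database name)

-instanceName (the name of the instance to delete)

-sysDBAUserName (the SYSDBA administrative user)

-sysDBAPassword (that user's password)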
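A small wrapper keeps these values in one place and keeps the SYS password out of the shell history. In this hypothetical sketch the password comes from a `DBCA_SYS_PASSWORD` environment variable. That variable is an assumption of the sketch, not a DBCA feature. The password is still passed to dbca on the command line, so it remains visible in `ps` output while dbca runs. With `DRY_RUN=1` the command is only printed, with the password masked.

```shell
# Hypothetical wrapper around the silent dbca -deleteInstance call shown above.
# Defaults match this document: node rac2, database rac, instance rac2.
dbca_delete_instance() {
  local node=${1:-rac2} db=${2:-rac} inst=${3:-rac2}
  if [ -z "${DBCA_SYS_PASSWORD:-}" ]; then
    echo "DBCA_SYS_PASSWORD is not set" >&2
    return 1
  fi
  local cmd=(dbca -silent -deleteInstance -nodeList "$node" -gdbName "$db"
             -instanceName "$inst" -sysDBAUserName sys)
  if [ "${DRY_RUN:-0}" = 1 ]; then
    # Print the command with the password masked.
    echo "${cmd[*]} -sysDBAPassword ****"
  else
    "${cmd[@]}" -sysDBAPassword "$DBCA_SYS_PASSWORD"
  fi
}

# Usage (as oracle on rac1):
# read -rs -p 'SYS password: ' DBCA_SYS_PASSWORD; export DBCA_SYS_PASSWORD
# DRY_RUN=1 dbca_delete_instance rac2 rac rac2   # review the command first
# dbca_delete_instance rac2 rac rac2
```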

2.3.3 Disable and Stop Node 2 Resources

[grid@rac1 ~]$ srvctl disable listener -l listener -n rac2

[grid@rac1 ~]$ srvctl stop listener -l listener -n rac2

2.3.4 Verify the Deletion

[oracle@rac1 ~]$ srvctl config database -d rac

Database unique name: rac

Database name: rac

Oracle home: /u01/app/oracle/product/19.3.0.0/db_1

Oracle user: oracle

Spfile: +DATA/RAC/PARAMETERFILE/spfile.273.1146826135

Password file: +DATA/RAC/PASSWORD/pwdrac.261.1146825489

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Server pools:

Disk Groups: DATA

Mount point paths:

Services:

Type: RAC

Start concurrency:

Stop concurrency:

OSDBA group: dba

OSOPER group: oper

Database instances: rac1

Configured nodes: rac1

CSS critical: no

CPU count: 0

Memory target: 0

Maximum memory: 0

Default network number for database services:

Database is administrator managed
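The key lines above are "Database instances: rac1" and "Configured nodes: rac1". The hypothetical helper below turns that into a pass/fail check. It fails if the instance is still listed, and also fails if no "Database instances:" line is found at all, for example when srvctl returned an error.

```shell
# Hypothetical helper: read `srvctl config database -d <db>` output on stdin and
# succeed only if a "Database instances:" line exists and does not list the
# given instance.
instance_removed() {
  awk -v inst="$1" -F': *' '
    $1 == "Database instances" {
      seen = 1
      gsub(/ /, "", $2)
      n = split($2, names, ",")
      for (i = 1; i <= n; i++) if (names[i] == inst) found = 1
    }
    END { exit (seen && !found ? 0 : 1) }'
}

# Usage (as oracle): srvctl config database -d rac | instance_removed rac2 && echo "rac2 removed"
```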

[grid@rac1 ~]$ crsctl status res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 ONLINE OFFLINE STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1 STABLE

ora.qosmserver

1 ONLINE ONLINE rac1 STABLE

ora.rac.db

1 ONLINE ONLINE rac1 Open,HOME=/u01/app/o

racle/product/19.3.0

.0/db_1,STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac1 STABLE

--------------------------------------------------------------------------------

2.4 Remove the Database Software

2.4.1 Update the Inventory (on the node being deleted)

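--Note: this is the runInstaller under $ORACLE_HOME/oui/bin, not the runInstaller used to install the database software (in 19c that one sits directly under $ORACLE_HOME).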

[oracle@rac2 ~]$ cd $ORACLE_HOME/oui/bin

[oracle@rac2 bin]$ ./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES={rac2}" -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 2602 MB Passed

The inventory pointer is located at /etc/oraInst.loc

2.4.2 Remove the Database Software (on the node being deleted)

[oracle@rac2 bin]$ cd $ORACLE_HOME

[oracle@rac2 db_1]$ cd deinstall/

[oracle@rac2 deinstall]$ ./deinstall -local

Checking for required files and bootstrapping ...

Please wait ...

Location of logs /u01/app/oraInventory/logs/

############ ORACLE DECONFIG TOOL START ############

######################### DECONFIG CHECK OPERATION START #########################

## [START] Install check configuration ##

Checking for existence of the Oracle home location /u01/app/oracle/product/19.3.0.0/db_1

Oracle Home type selected for deinstall is: Oracle Real Application Cluster Database

Oracle Base selected for deinstall is: /u01/app/oracle

Checking for existence of central inventory location /u01/app/oraInventory

Checking for existence of the Oracle Grid Infrastructure home /u01/app/19.3.0.0/grid

The following nodes are part of this cluster: rac2,rac1

Checking for sufficient temp space availability on node(s) : 'rac2'

## [END] Install check configuration ##

Network Configuration check config START

Network de-configuration trace file location: /u01/app/oraInventory/logs/netdc_check2023-09-13_10-46-55AM.log

Network Configuration check config END

Database Check Configuration START

Database de-configuration trace file location: /u01/app/oraInventory/logs/databasedc_check2023-09-13_10-46-55AM.log

Use comma as separator when specifying list of values as input

Specify the list of database names that are configured locally on this node for this Oracle home. Local configurations of the discovered databases will be removed []:

Database Check Configuration END

######################### DECONFIG CHECK OPERATION END #########################

####################### DECONFIG CHECK OPERATION SUMMARY #######################

Oracle Grid Infrastructure Home is: /u01/app/19.3.0.0/grid

The following nodes are part of this cluster: rac2,rac1

The cluster node(s) on which the Oracle home deinstallation will be performed are:rac2

Oracle Home selected for deinstall is: /u01/app/oracle/product/19.3.0.0/db_1

Inventory Location where the Oracle home registered is: /u01/app/oraInventory

Do you want to continue (y - yes, n - no)? [n]: y

A log of this session will be written to: '/u01/app/oraInventory/logs/deinstall_deconfig2023-09-13_10-46-41-AM.out'

Any error messages from this session will be written to: '/u01/app/oraInventory/logs/deinstall_deconfig2023-09-13_10-46-41-AM.err'

######################## DECONFIG CLEAN OPERATION START ########################

Database de-configuration trace file location: /u01/app/oraInventory/logs/databasedc_clean2023-09-13_10-46-55AM.log

Network Configuration clean config START

Network de-configuration trace file location: /u01/app/oraInventory/logs/netdc_clean2023-09-13_10-46-55AM.log

Network Configuration clean config END

######################### DECONFIG CLEAN OPERATION END #########################

####################### DECONFIG CLEAN OPERATION SUMMARY #######################

#######################################################################

############# ORACLE DECONFIG TOOL END #############

Using properties file /tmp/deinstall2023-09-13_10-46-20AM/response/deinstall_2023-09-13_10-46-41-AM.rsp

Location of logs /u01/app/oraInventory/logs/

############ ORACLE DEINSTALL TOOL START ############

####################### DEINSTALL CHECK OPERATION SUMMARY #######################

A log of this session will be written to: '/u01/app/oraInventory/logs/deinstall_deconfig2023-09-13_10-46-41-AM.out'

Any error messages from this session will be written to: '/u01/app/oraInventory/logs/deinstall_deconfig2023-09-13_10-46-41-AM.err'

######################## DEINSTALL CLEAN OPERATION START ########################

## [START] Preparing for Deinstall ##

Setting LOCAL_NODE to rac2

Setting CLUSTER_NODES to rac2

Setting CRS_HOME to false

Setting oracle.installer.invPtrLoc to /tmp/deinstall2023-09-13_10-46-20AM/oraInst.loc

Setting oracle.installer.local to true

## [END] Preparing for Deinstall ##

Setting the force flag to false

Setting the force flag to cleanup the Oracle Base

Oracle Universal Installer clean START

Detach Oracle home '/u01/app/oracle/product/19.3.0.0/db_1' from the central inventory on the local node : Done

Delete directory '/u01/app/oracle/product/19.3.0.0/db_1' on the local node : Done

Failed to delete the directory '/u01/app/oracle'. Either user has no permission to delete or it is in use.

Delete directory '/u01/app/oracle' on the local node : Failed <<<<

Oracle Universal Installer cleanup completed with errors.

Oracle Universal Installer clean END

## [START] Oracle install clean ##

## [END] Oracle install clean ##

######################### DEINSTALL CLEAN OPERATION END #########################

####################### DEINSTALL CLEAN OPERATION SUMMARY #######################

Successfully detached Oracle home '/u01/app/oracle/product/19.3.0.0/db_1' from the central inventory on the local node.

Successfully deleted directory '/u01/app/oracle/product/19.3.0.0/db_1' on the local node.

Failed to delete directory '/u01/app/oracle' on the local node due to error : Either user has no permission to delete or file is in use.

Review the permissions and manually delete '/u01/app/oracle' on local node.

Oracle Universal Installer cleanup completed with errors.

Review the permissions and contents of '/u01/app/oracle' on nodes(s) 'rac2'.

If there are no Oracle home(s) associated with '/u01/app/oracle', manually delete '/u01/app/oracle' and its contents.

Oracle deinstall tool successfully cleaned up temporary directories.

#######################################################################

############# ORACLE DEINSTALL TOOL END #############

==========================The database home directory on node 2 no longer exists

[oracle@rac2 deinstall]$ cd $ORACLE_HOME

-bash: cd: /u01/app/oracle/product/19.3.0.0/db_1: No such file or directory

2.4.3 Update the Inventory (on the surviving node)

[oracle@rac1 trace]$ cd $ORACLE_HOME/oui/bin

[oracle@rac1 bin]$ ./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES=rac1" -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 2572 MB Passed

The inventory pointer is located at /etc/oraInst.loc
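To confirm that `-updateNodeList` did what was intended, you can print the node list recorded for each home in the central inventory. This hypothetical sketch works line by line and is not an XML parser. It assumes the usual layout of `ContentsXML/inventory.xml` (a `<HOME ... LOC="...">` element containing `<NODE NAME="..."/>` entries) and the inventory location `/u01/app/oraInventory` seen in the logs above. After this step, the database home should list only rac1.

```shell
# Hypothetical helper: print the NODE NAME entries recorded for one Oracle home
# in the central inventory. Assumes the usual inventory.xml layout; this is a
# line-oriented sketch, not an XML parser.
inventory_nodes() {
  local home=$1 xml=${2:-/u01/app/oraInventory/ContentsXML/inventory.xml}
  awk -v home="$home" '
    /<HOME / && index($0, "LOC=\"" home "\"") { inhome = 1; next }
    inhome && /<\/HOME>/ { inhome = 0 }
    inhome && /<NODE NAME=/ {
      sub(/.*<NODE NAME="/, ""); sub(/".*/, ""); print
    }' "$xml"
}

# Usage: inventory_nodes /u01/app/oracle/product/19.3.0.0/db_1
```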

2.4.4 Check Instance Status

SQL> select instance_name,status from gv$instance;

INSTANCE_NAME STATUS

---------------- ------------

rac1 OPEN

2.5 Remove the Grid Infrastructure Software

2.5.1 Cluster Membership Check

[grid@rac1 ~]$ olsnodes -s -t

rac1 Active Unpinned

rac2 Active Unpinned

2.5.2 Deconfigure the Clusterware Stack (as root on the node being deleted)

[root@rac2 ~]# cd /u01/app/19.3.0.0/grid/crs/install/

[root@rac2 install]# ./rootcrs.sh -deconfig -force

Using configuration parameter file: /u01/app/19.3.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/rac2/crsconfig/crsdeconfig_rac2_2023-09-13_10-52-12AM.log

2023/09/13 10:53:50 CLSRSC-4006: Removing Oracle Trace File Analyzer (TFA) Collector.

2023/09/13 10:55:45 CLSRSC-4007: Successfully removed Oracle Trace File Analyzer (TFA) Collector.

2023/09/13 10:55:47 CLSRSC-336: Successfully deconfigured Oracle Clusterware stack on this node

2.5.3 Update the Inventory (on the node being deleted)

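Note that the directory is $ORACLE_HOME/oui/bin, where ORACLE_HOME for the grid user is the Grid home, /u01/app/19.3.0.0/grid.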

[root@rac2 install]# su - grid

Last login: Mon Sep 11 15:10:31 CST 2023 on pts/0

[grid@rac2 ~]$ cd $ORACLE_HOME/oui/bin

[grid@rac2 bin]$ ./runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES=rac2" CRS=TRUE -silent -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 3859 MB Passed

The inventory pointer is located at /etc/oraInst.loc

You can find the log of this install session at:

/u01/app/oraInventory/logs/UpdateNodeList2023-09-13_10-57-08AM.log

'UpdateNodeList' was successful.

2.5.4 Remove the Grid Software (on the node being deleted)

[grid@rac2 bin]$ cd $ORACLE_HOME

[grid@rac2 grid]$ cd deinstall/

[grid@rac2 deinstall]$ ./deinstall -local

...........................

######################### DEINSTALL CLEAN OPERATION END #########################

####################### DEINSTALL CLEAN OPERATION SUMMARY #######################

Successfully detached Oracle home '/u01/app/19.3.0.0/grid' from the central inventory on the local node.

Failed to delete directory '/u01/app/19.3.0.0/grid' on the local node due to error : Either user has no permission to delete or file is in use.

Review the permissions and manually delete '/u01/app/19.3.0.0/grid' on local node.

Failed to delete directory '/u01/app/oraInventory' on the local node due to error : Either user has no permission to delete or file is in use.

Review the permissions and manually delete '/u01/app/oraInventory' on local node.

Oracle Universal Installer cleanup completed with errors.

Run 'rm -r /etc/oraInst.loc' as root on node(s) 'rac2' at the end of the session.

Run 'rm -r /opt/ORCLfmap' as root on node(s) 'rac2' at the end of the session.

Run 'rm -r /etc/oratab' as root on node(s) 'rac2' at the end of the session.

Review the permissions and contents of '/u01/app/grid' on nodes(s) 'rac2'.

If there are no Oracle home(s) associated with '/u01/app/grid', manually delete '/u01/app/grid' and its contents.

Oracle deinstall tool successfully cleaned up temporary directories.

#######################################################################

############# ORACLE DEINSTALL TOOL END #############

2.5.5 Clean Up Leftover Files

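-------------------------Kill the related processes. The "No such process" messages are harmless: each PID belongs to the grep in the same pipeline, which has already exited when kill runs. Each pattern matches any command line containing that string (for example, "ora" also matches "storage"), so review the ps -ef output first.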

[root@rac2 install]# ps -ef |grep ora |awk '{print $2}' |xargs kill -9

kill: sending signal to 65659 failed: No such process

[root@rac2 install]# ps -ef |grep grid |awk '{print $2}' |xargs kill -9

kill: sending signal to 65763 failed: No such process

[root@rac2 install]# ps -ef |grep asm |awk '{print $2}' |xargs kill -9

kill: sending signal to 65768 failed: No such process

[root@rac2 install]# ps -ef |grep storage |awk '{print $2}' |xargs kill -9

kill: sending signal to 65773 failed: No such process

[root@rac2 install]# ps -ef |grep ohasd |awk '{print $2}' |xargs kill -9

kill: sending signal to 65778 failed: No such process
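A narrower alternative to substring matching is to select processes by owner. This hypothetical sketch picks processes owned by the listed users, plus anything whose command name contains `ohasd` (that daemon runs as root, which is why the author targets it separately). Other root-owned clusterware daemons are not caught by the owner filter, so check `ps` output again afterwards. When run as root, the pipeline's own ps/awk/xargs processes are not matched.

```shell
# Hypothetical helper: read "PID USER COMMAND" lines, as produced by
#   ps -eo pid=,user=,comm=
# and print the PIDs owned by any of the given users, plus any process whose
# command name contains "ohasd".
select_pids() {
  awk -v users=" $* " 'index(users, " " $2 " ") || $3 ~ /ohasd/ { print $1 }'
}

# Usage (as root on rac2; review the list before killing):
# ps -eo pid=,user=,comm= | select_pids grid oracle
# ps -eo pid=,user=,comm= | select_pids grid oracle | xargs -r kill -9
```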

-------------------------Remove the related directories, as root

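export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/19.3.0.0/grid/

# "&&" ensures rm runs only if the cd succeeded. Note that * does not match hidden entries such as .patch_storage.

cd "$ORACLE_HOME" && rm -rf ./*

cd "$ORACLE_BASE" && rm -rf ./*

rm -rf /etc/rc5.d/S96ohasd

rm -rf /etc/rc3.d/S96ohasd

rm -rf /etc/rc.d/init.d/ohasd

rm -rf /etc/oracle

rm -rf /etc/ora*

rm -rf /etc/oratab

rm -rf /etc/oraInst.loc

rm -rf /opt/ORCLfmap/

# Central inventory (its location in this environment, per the deinstall output above)

rm -rf /u01/app/oraInventory

rm -rf /usr/local/bin/dbhome

rm -rf /usr/local/bin/oraenv

rm -rf /usr/local/bin/coraenv

# /tmp/* and /tmp/.* (below) empty all of /tmp, including other users' files; acceptable only on a node being decommissioned.

rm -rf /tmp/*

# Remove only Oracle's socket directory; /var/tmp itself is a system directory and must stay.

rm -rf /var/tmp/.oracle

rm -rf /home/grid/*

rm -rf /home/oracle/*

rm -rf /etc/init/oracle*

rm -rf /etc/init.d/ora

rm -rf /tmp/.*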

2.5.6 Remove Node 2 from the Cluster (on node 1)

[root@rac1 ~]# cd /u01/app/19.3.0.0/grid/bin/

[root@rac1 bin]# ./crsctl delete node -n rac2

CRS-4661: Node rac2 successfully deleted.

2.5.7 Update the Inventory (on node 1)

[root@rac1 bin]# su - grid

Last login: Mon Sep 11 15:15:26 CST 2023

[grid@rac1 ~]$ $ORACLE_HOME/oui/bin/runInstaller -updateNodeList ORACLE_HOME=$ORACLE_HOME "CLUSTER_NODES=rac1" CRS=TRUE -silent -local

Starting Oracle Universal Installer...

Checking swap space: must be greater than 500 MB. Actual 2402 MB Passed

The inventory pointer is located at /etc/oraInst.loc

You can find the log of this install session at:

/u01/app/oraInventory/logs/UpdateNodeList2023-09-13_11-02-12AM.log

'UpdateNodeList' was successful.

2.5.8 Verify with CVU That the Node Was Removed from the Cluster

[grid@rac1 ~]$ cluvfy stage -post nodedel -n rac2 -verbose

Verifying Node Removal ...

Verifying CRS Integrity ...PASSED

Verifying Clusterware Version Consistency ...PASSED

Verifying Node Removal ...PASSED

Post-check for node removal was successful.

CVU operation performed: stage -post nodedel

Date: Sep 13, 2023 11:02:34 AM

CVU home: /u01/app/19.3.0.0/grid/

User: grid
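cluvfy ends with a one-line summary. A hypothetical check like the one below turns that summary into an exit status for scripting. It assumes the wording shown above ("Post-check for node removal was successful.") and treats any FAILED marker as a failure. The same function could be applied to the `-pre nodeadd` and `-post nodeadd` runs in section III, provided their summaries follow the same pattern.

```shell
# Hypothetical helper: read cluvfy output on stdin; succeed only if it contains a
# "Pre-check/Post-check for ... was successful" line and no FAILED marker.
cvu_passed() {
  awk '/FAILED/ { bad = 1 }
       /^(Pre|Post)-check for .* was successful/ { ok = 1 }
       END { exit (ok && !bad ? 0 : 1) }'
}

# Usage (as grid): cluvfy stage -post nodedel -n rac2 -verbose | cvu_passed
```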

2.5.9 Check Cluster Status

------------------------------Cluster membership check

[grid@rac1 ~]$ olsnodes -s -t

rac1 Active Unpinned

------------------------------Cluster status check

[grid@rac1 ~]$ crsctl status res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac1 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE OFFLINE STABLE

3 ONLINE OFFLINE STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE OFFLINE STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE OFFLINE STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE OFFLINE STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1 STABLE

ora.qosmserver

1 ONLINE ONLINE rac1 STABLE

ora.rac.db

1 ONLINE ONLINE rac1 Open,HOME=/u01/app/o

racle/product/19.3.0

.0/db_1,STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac1 STABLE

--------------------------------------------------------------------------------

If a 169.254.x.x (HAIP) address appears and interferes with the interconnect heartbeat, disable HAIP as follows (run as root):

/u01/app/19.3.0.0/grid/bin/crsctl start crs -excl -nocrs

/u01/app/19.3.0.0/grid/bin/crsctl stop res ora.asm -init

/u01/app/19.3.0.0/grid/bin/crsctl modify res ora.cluster_interconnect.haip -attr "ENABLED=0" -init

/u01/app/19.3.0.0/grid/bin/crsctl modify res ora.asm -attr "START_DEPENDENCIES='hard(ora.cssd,ora.ctssd)pullup(ora.cssd,ora.ctssd)weak(ora.drivers.acfs)',STOP_DEPENDENCIES='hard(intermediate:ora.cssd)'" -init

/u01/app/19.3.0.0/grid/bin/crsctl stop crs

reboot
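HAIP assigns addresses from 169.254.0.0/16 to the private interconnect interface. Filtering the output of `ip -o -4 addr show` shows which interfaces carry such an address before and after the procedure above. The helper is a hypothetical sketch.

```shell
# Hypothetical helper: read `ip -o -4 addr show` output on stdin and print
# "interface address/prefix" for every IPv4 address in 169.254.0.0/16.
linklocal_addrs() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "inet" && $(i + 1) ~ /^169\.254\./) print $2, $(i + 1) }'
}

# Usage: ip -o -4 addr show | linklocal_addrs
```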

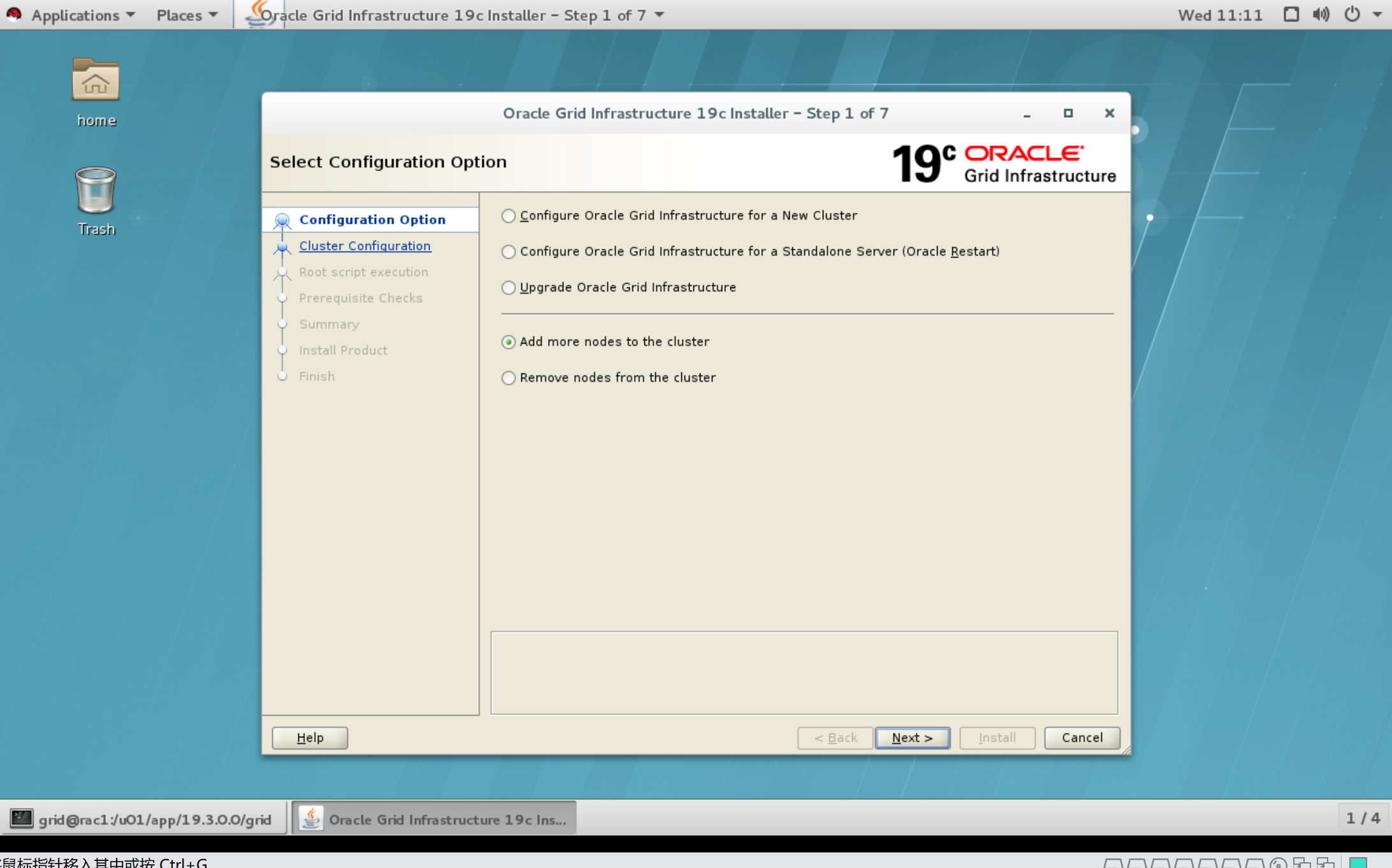

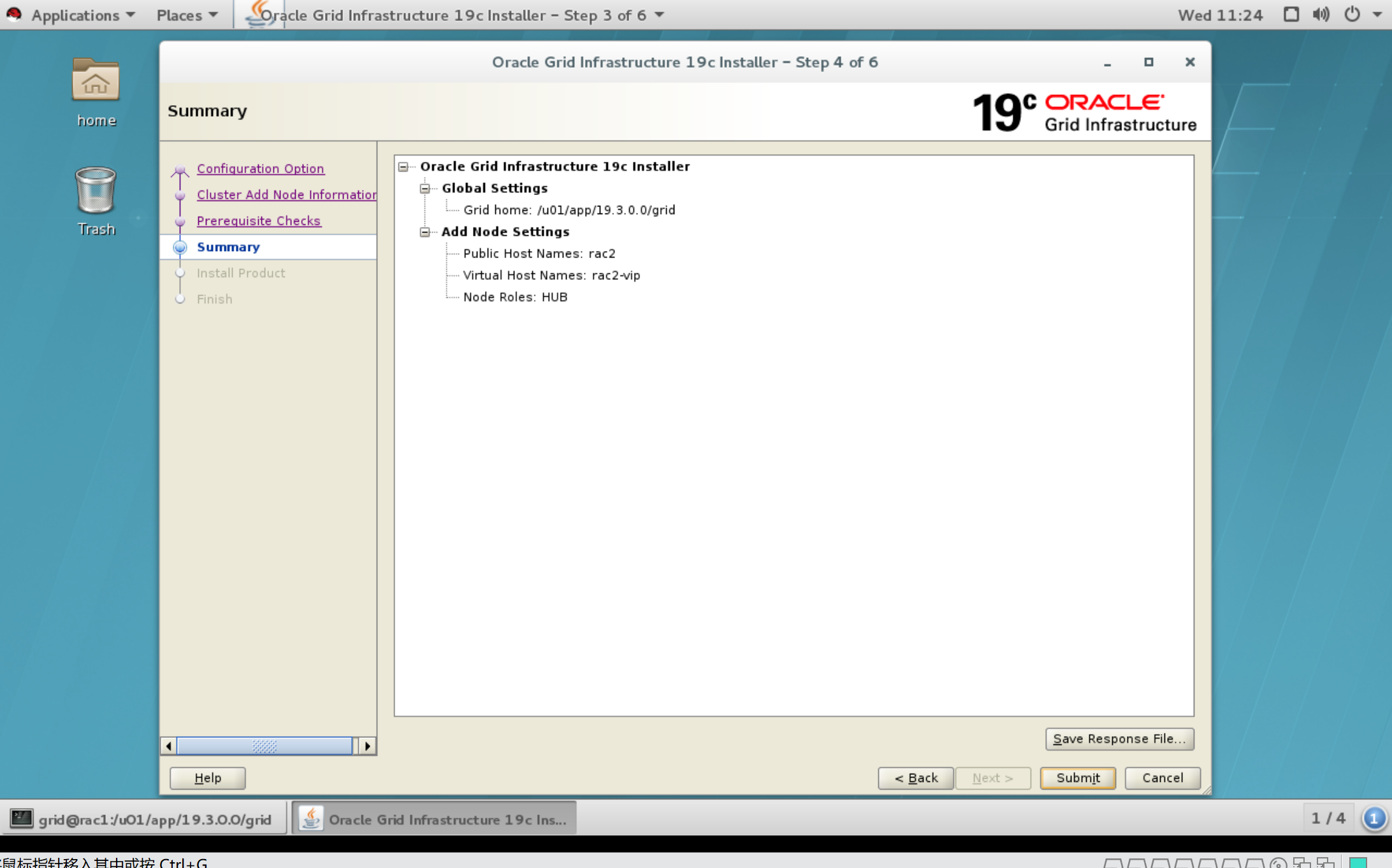

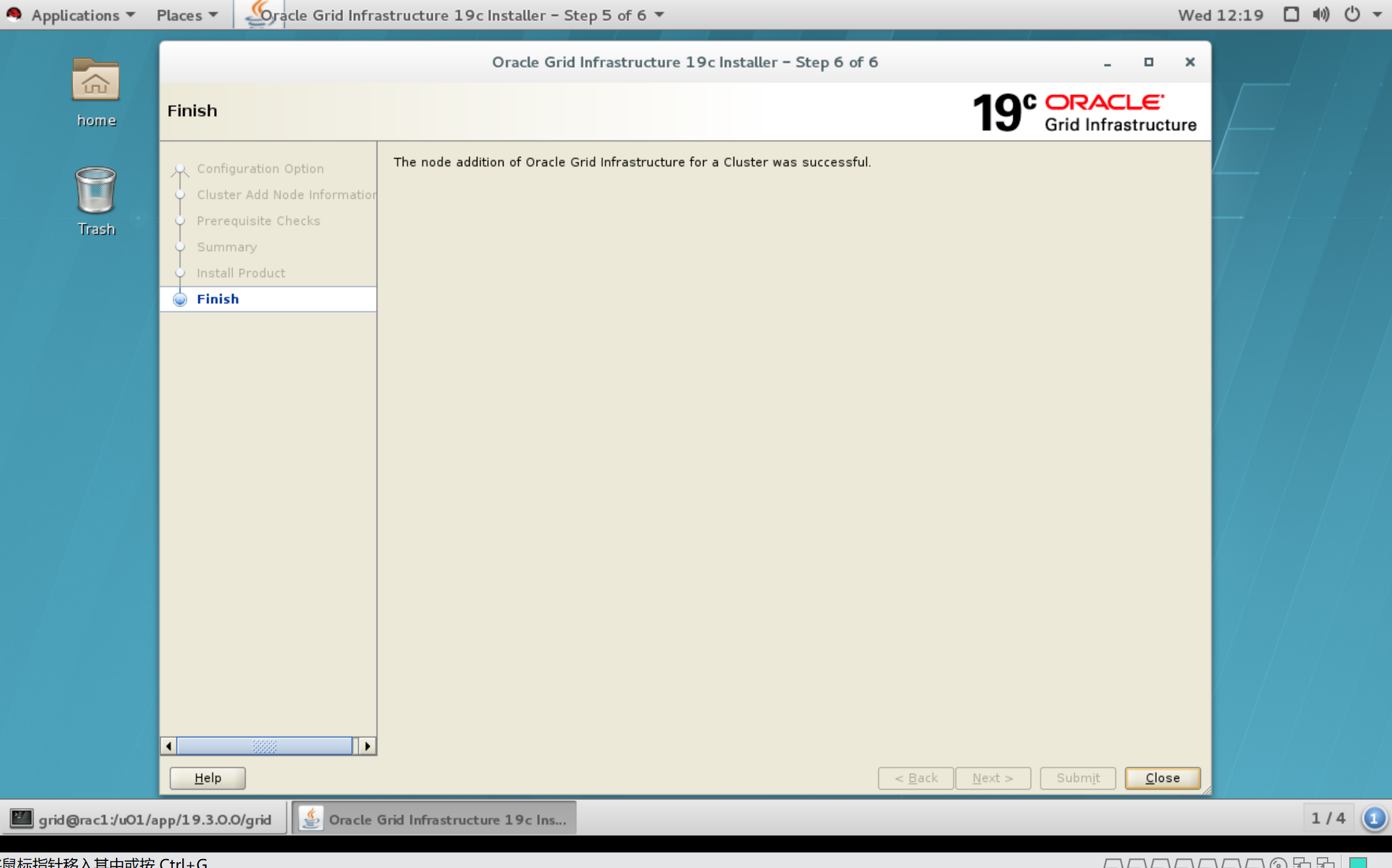

III. Adding a Node

The previous part deleted node 2; this part adds node 2 back to the cluster.

3.1 Pre-addition Check (on node 1)

[grid@rac1 ~]$ cluvfy stage -pre nodeadd -n rac2

If none of the failed checks needs to be fixed, set export IGNORE_PREADDNODE_CHECKS=Y before adding the node (not needed when using the GUI).

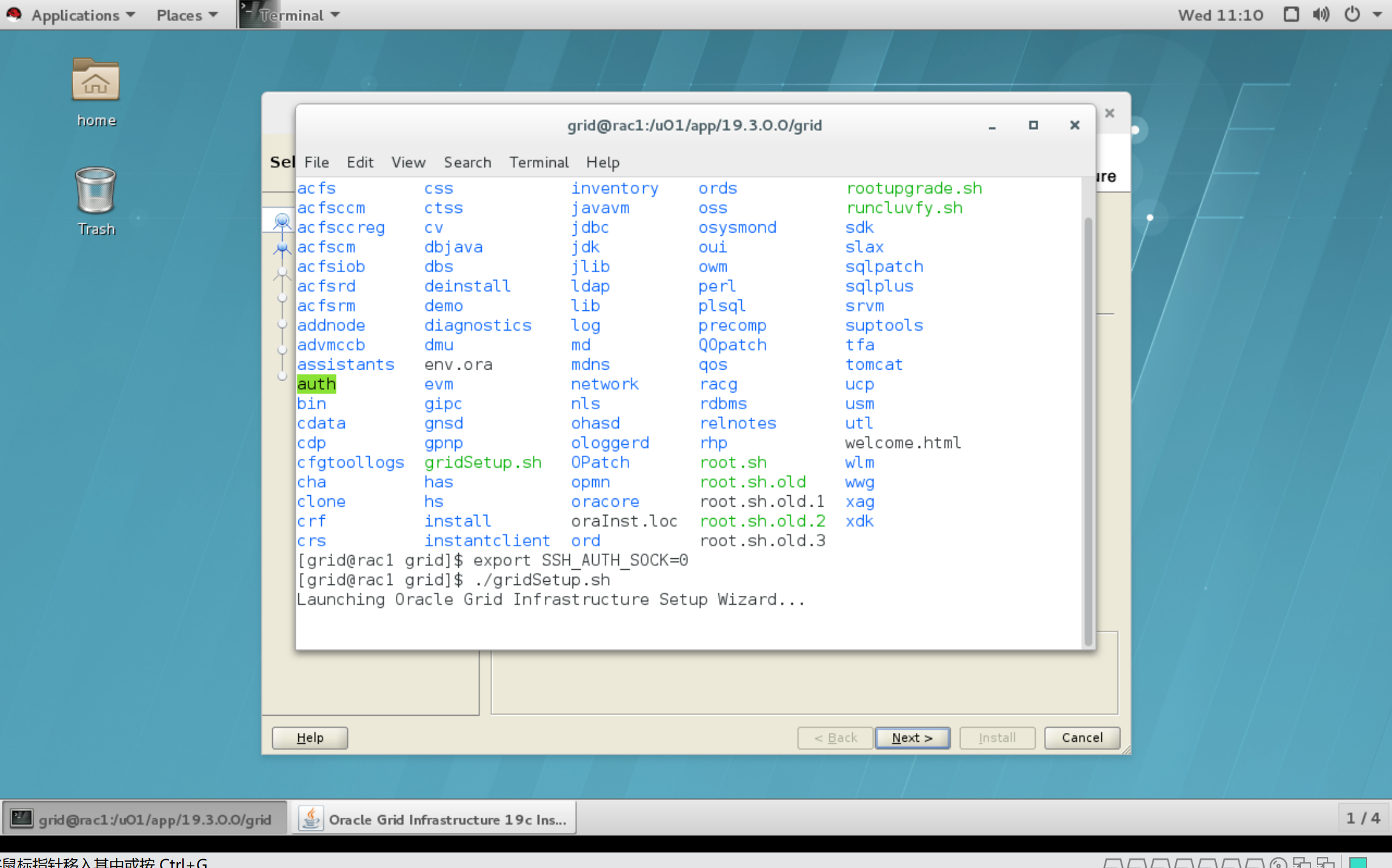

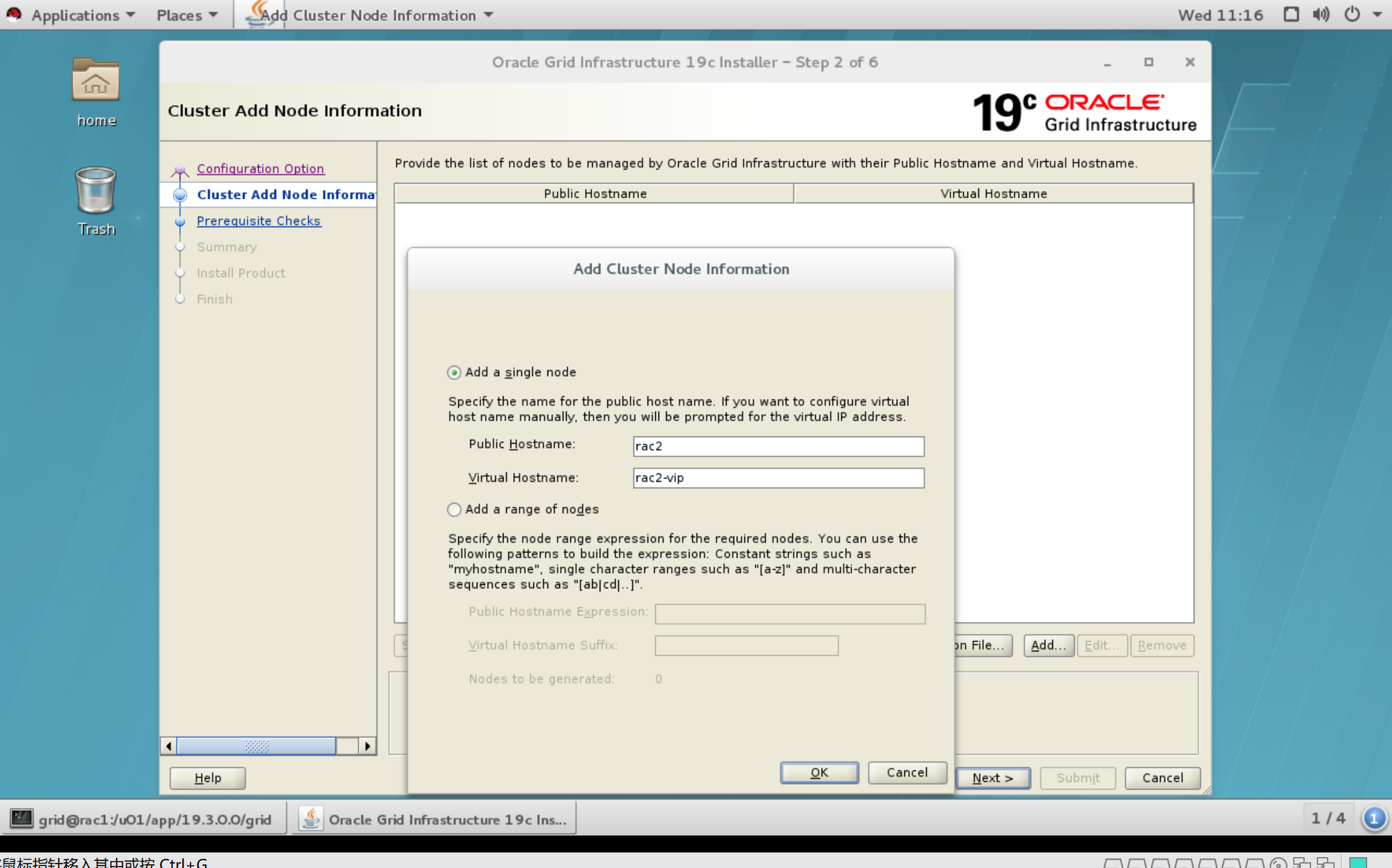

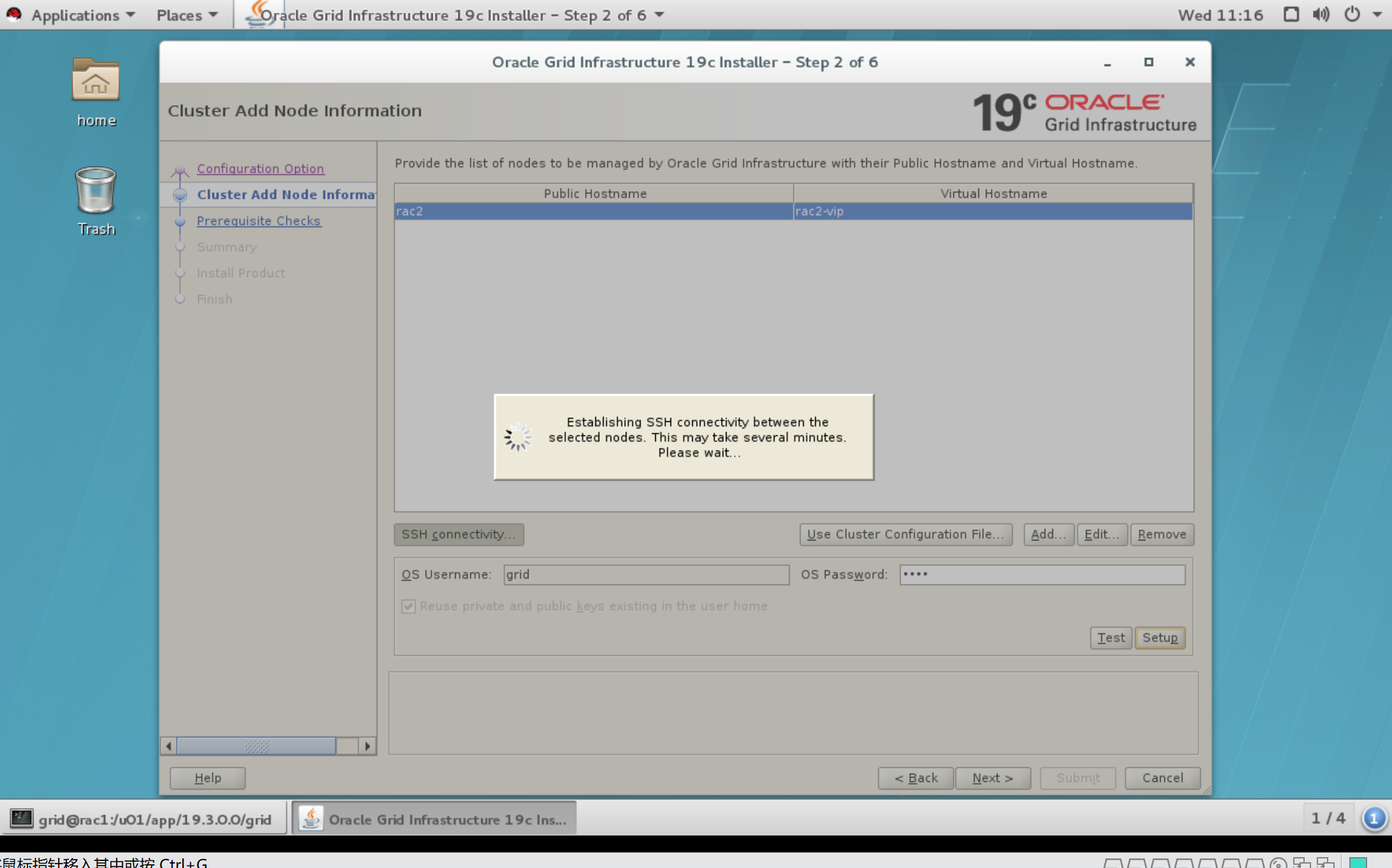

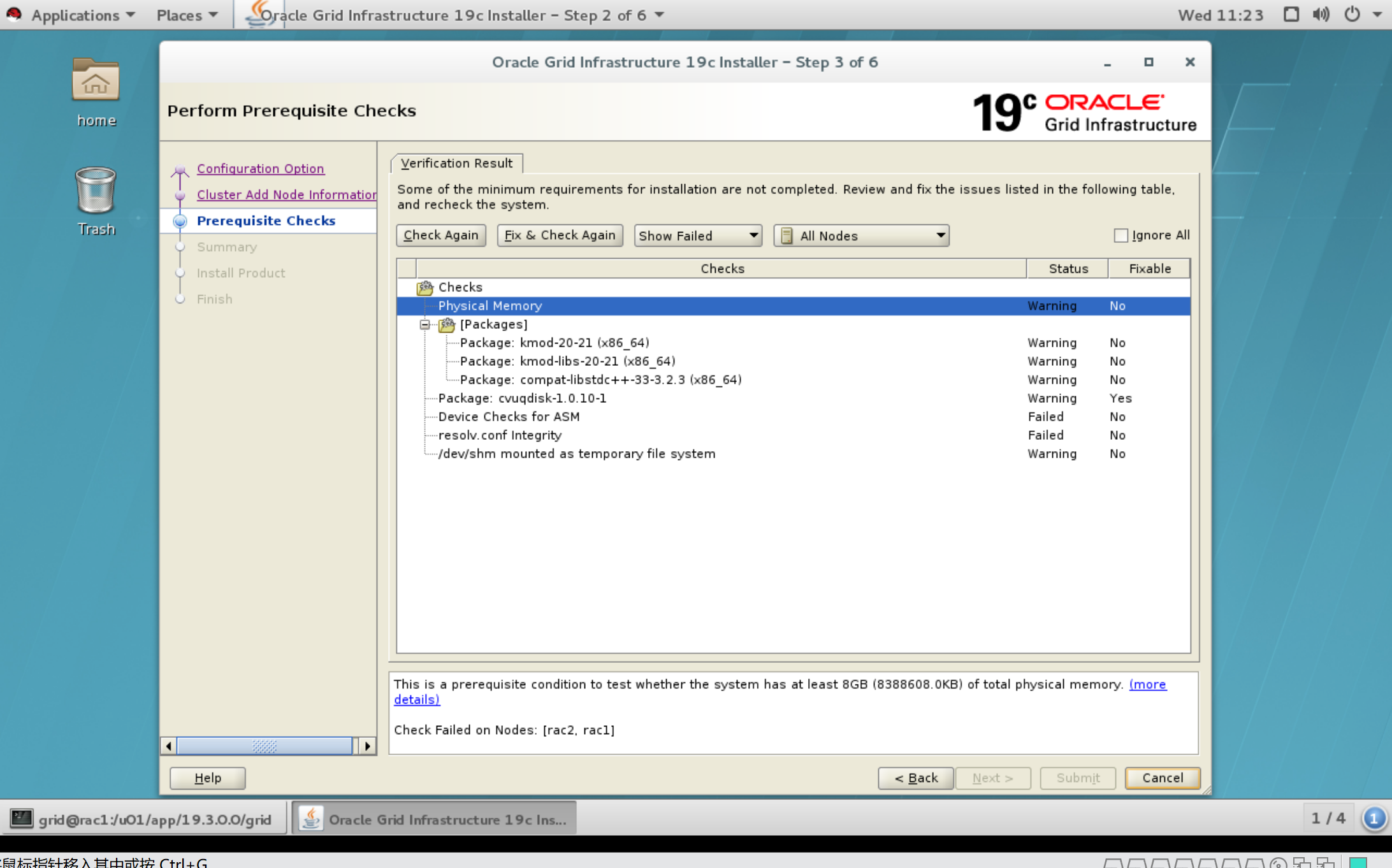

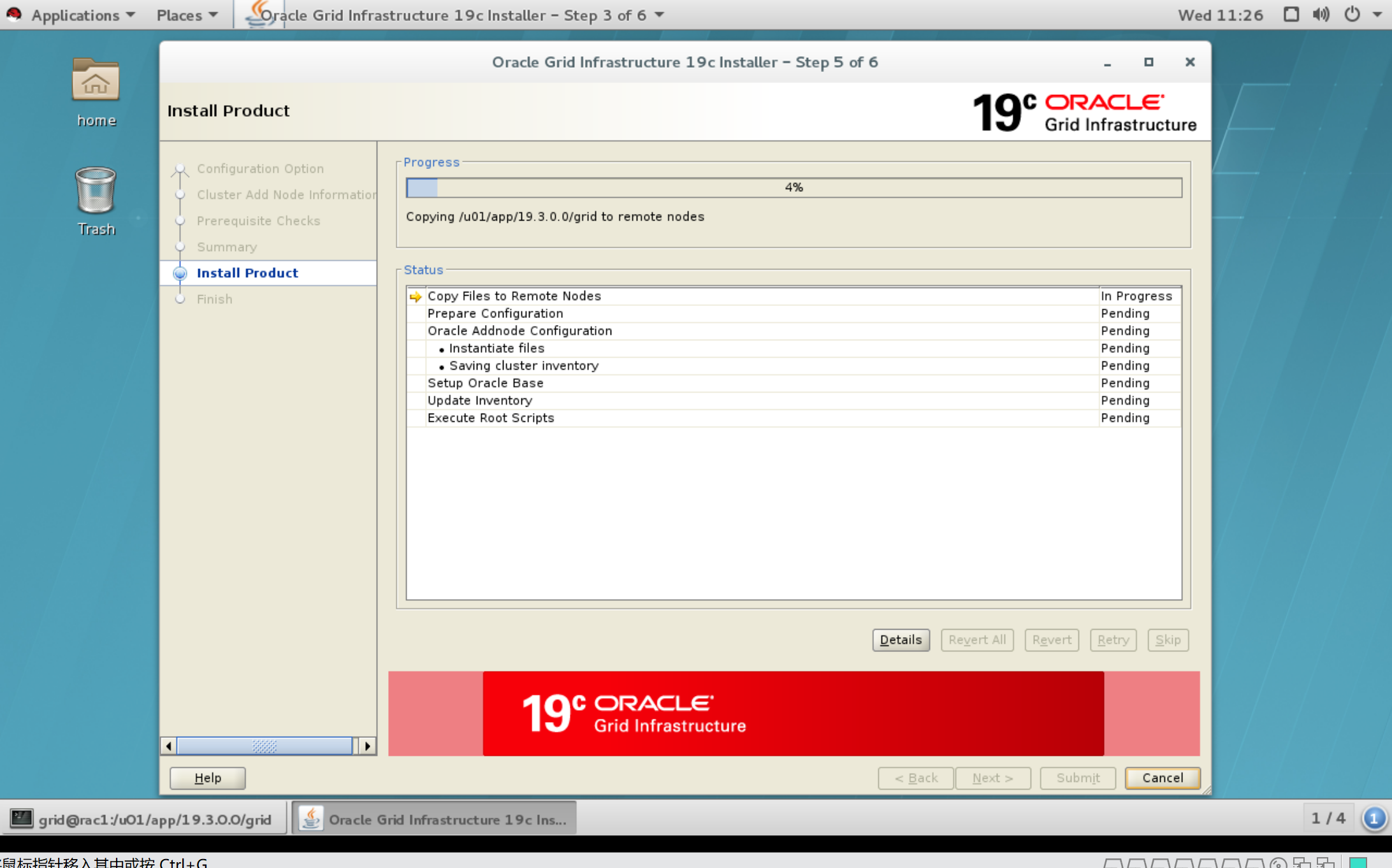

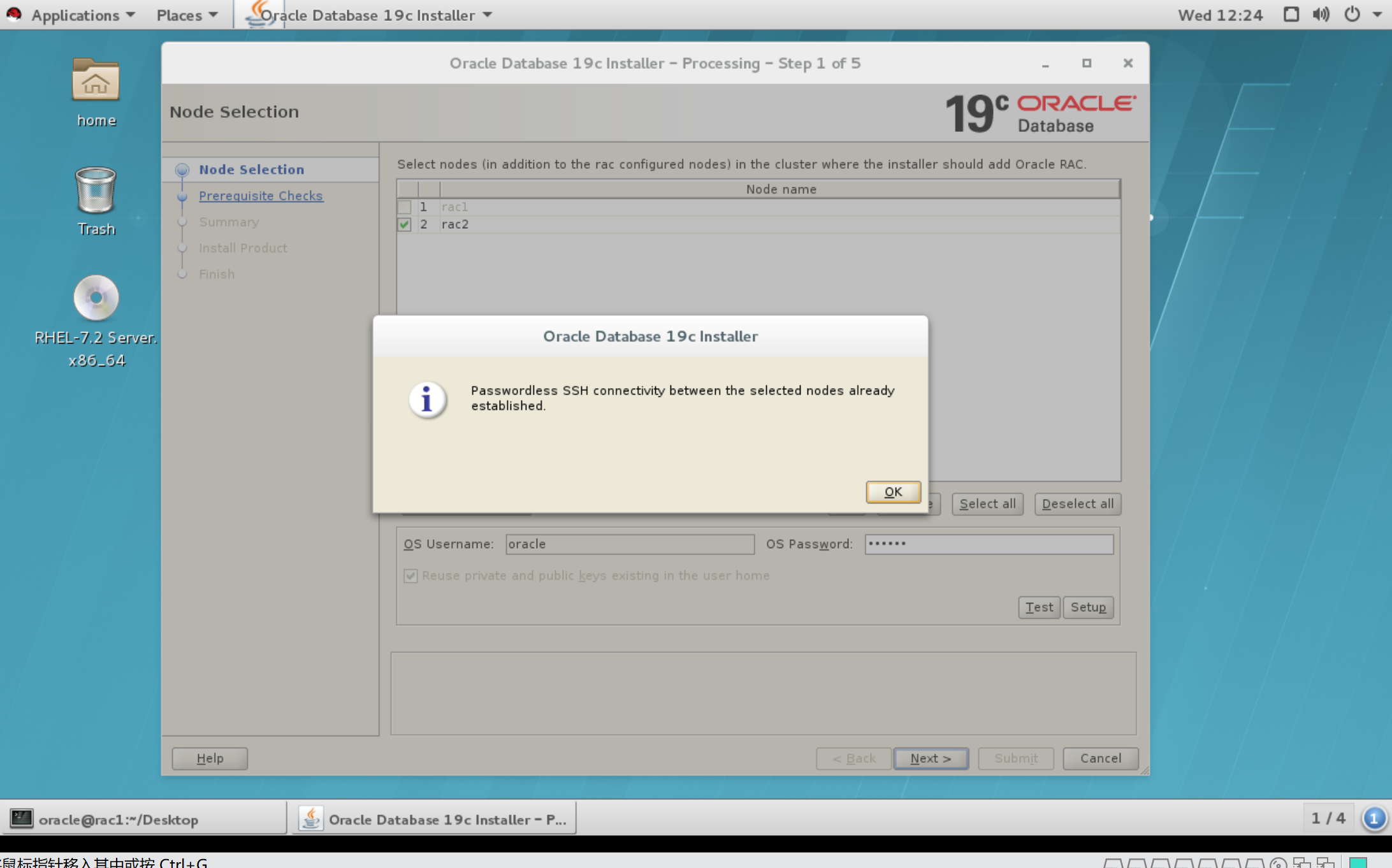

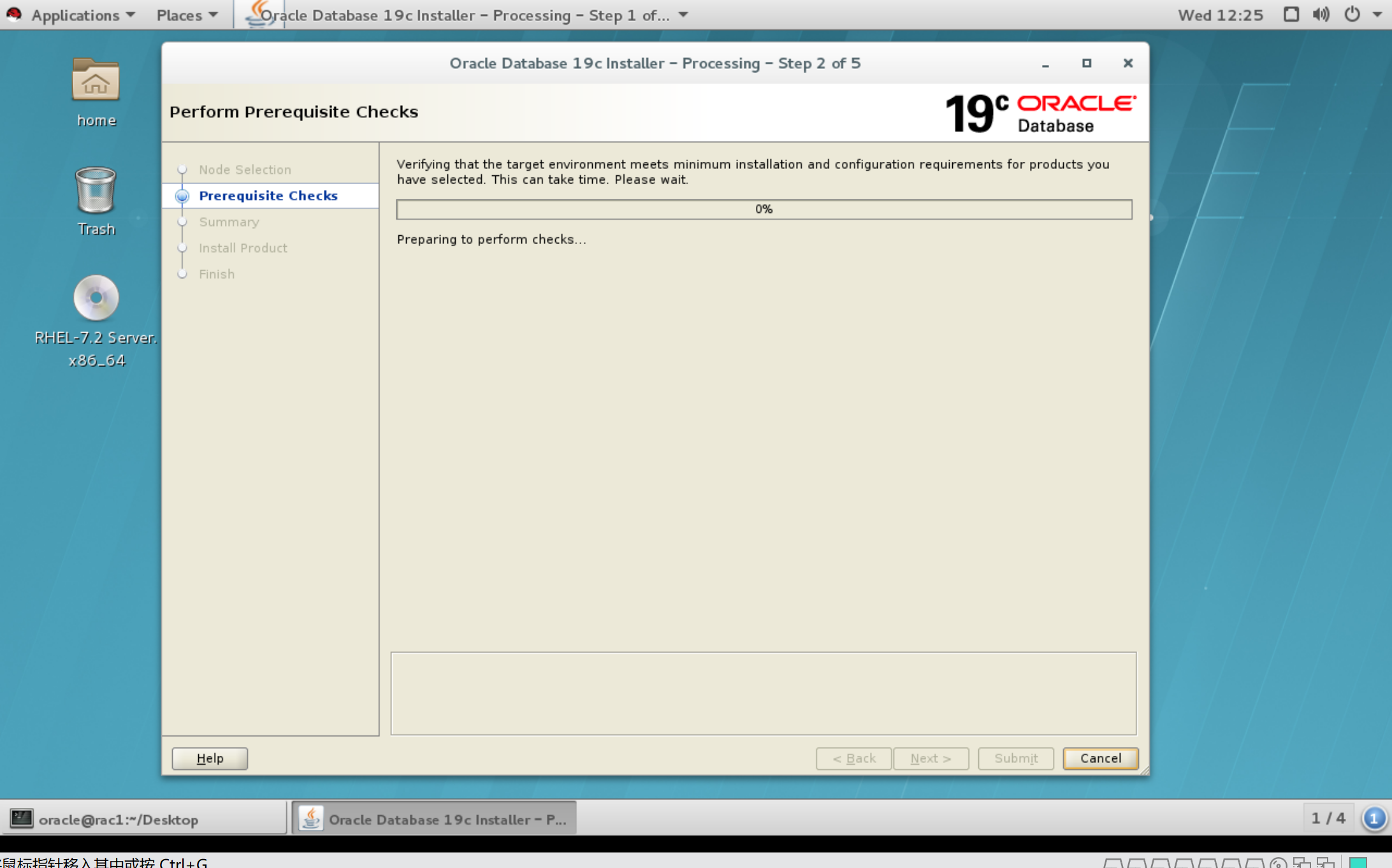

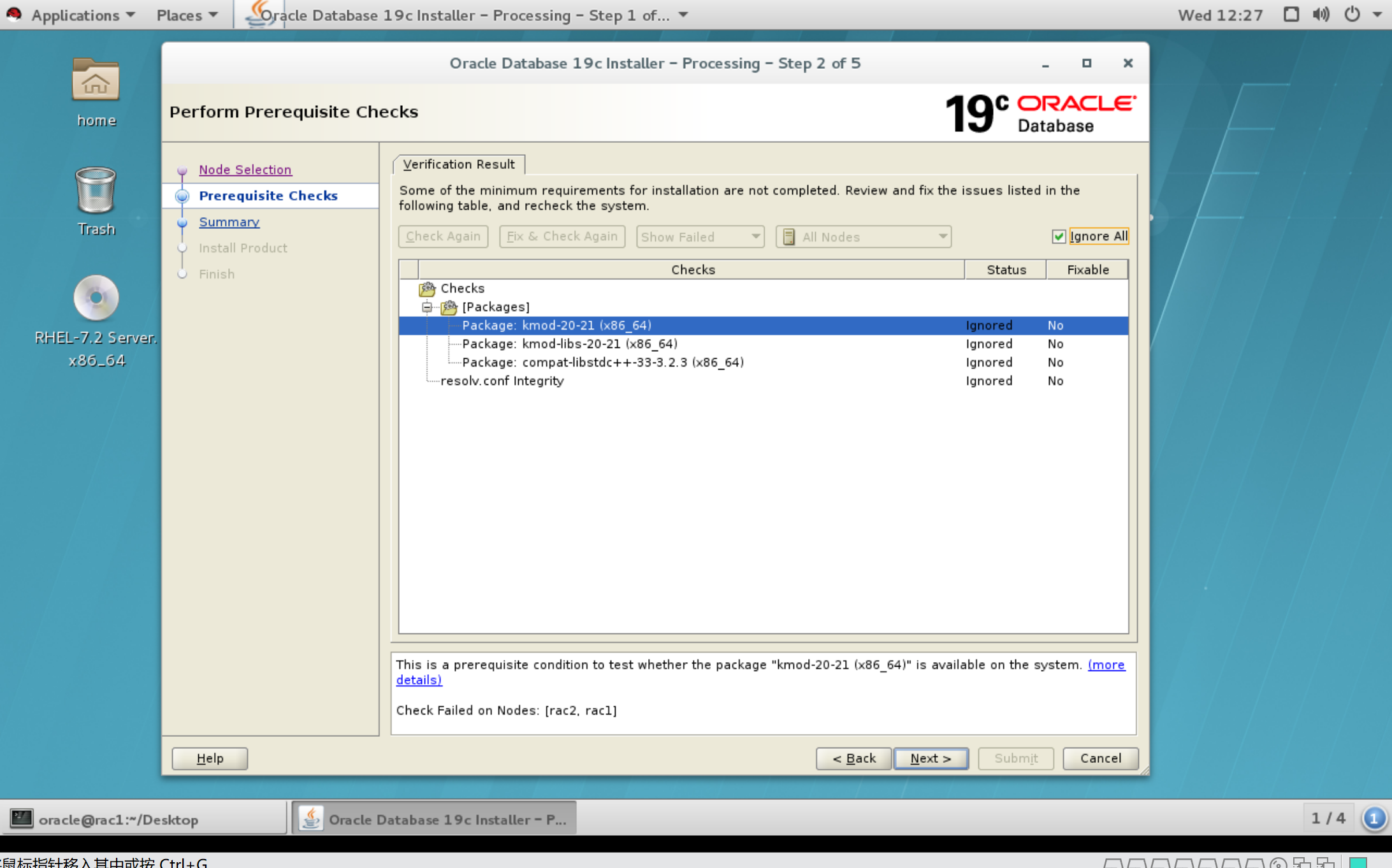

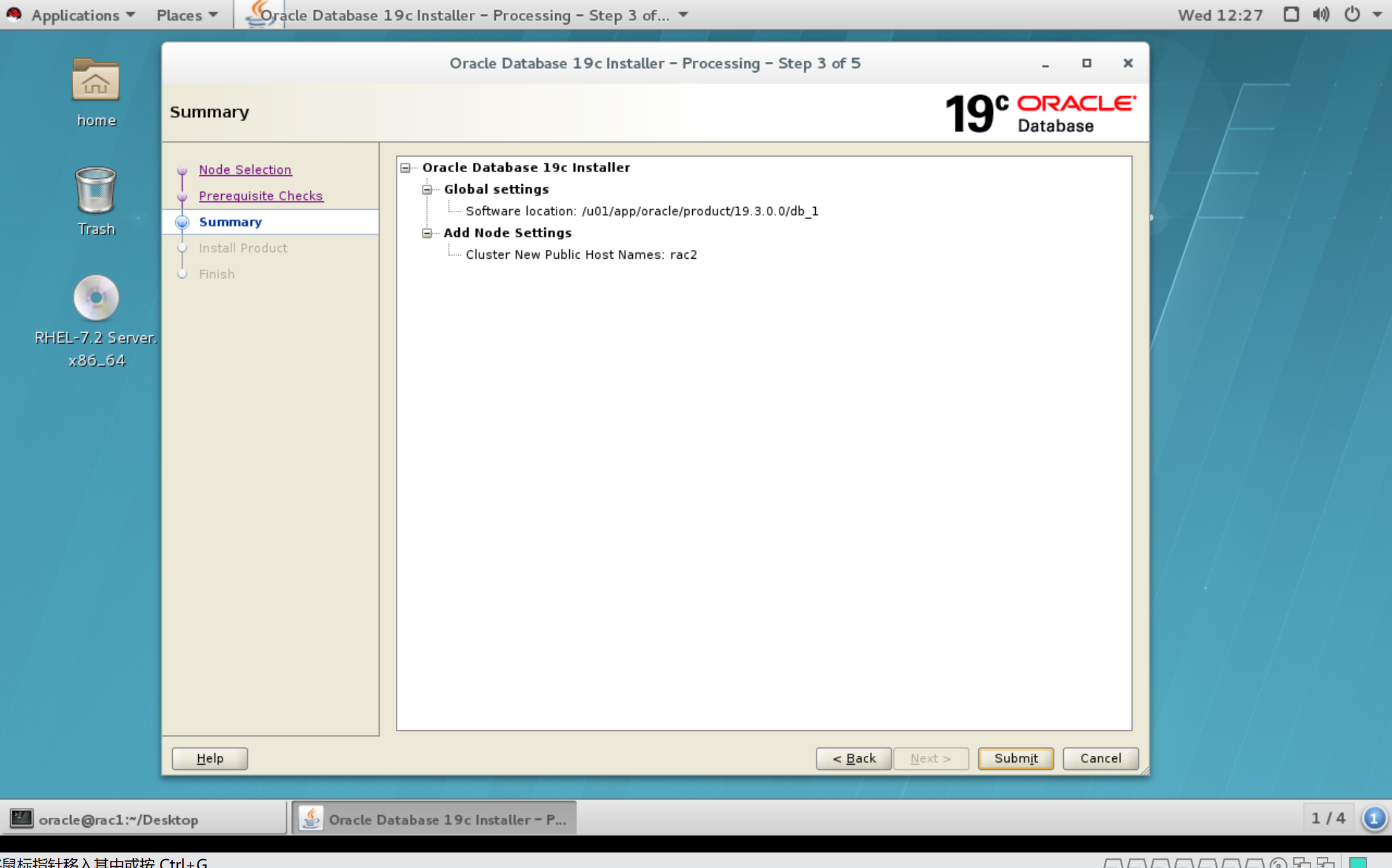

3.2 Add rac2 to Grid Infrastructure (on node 1)

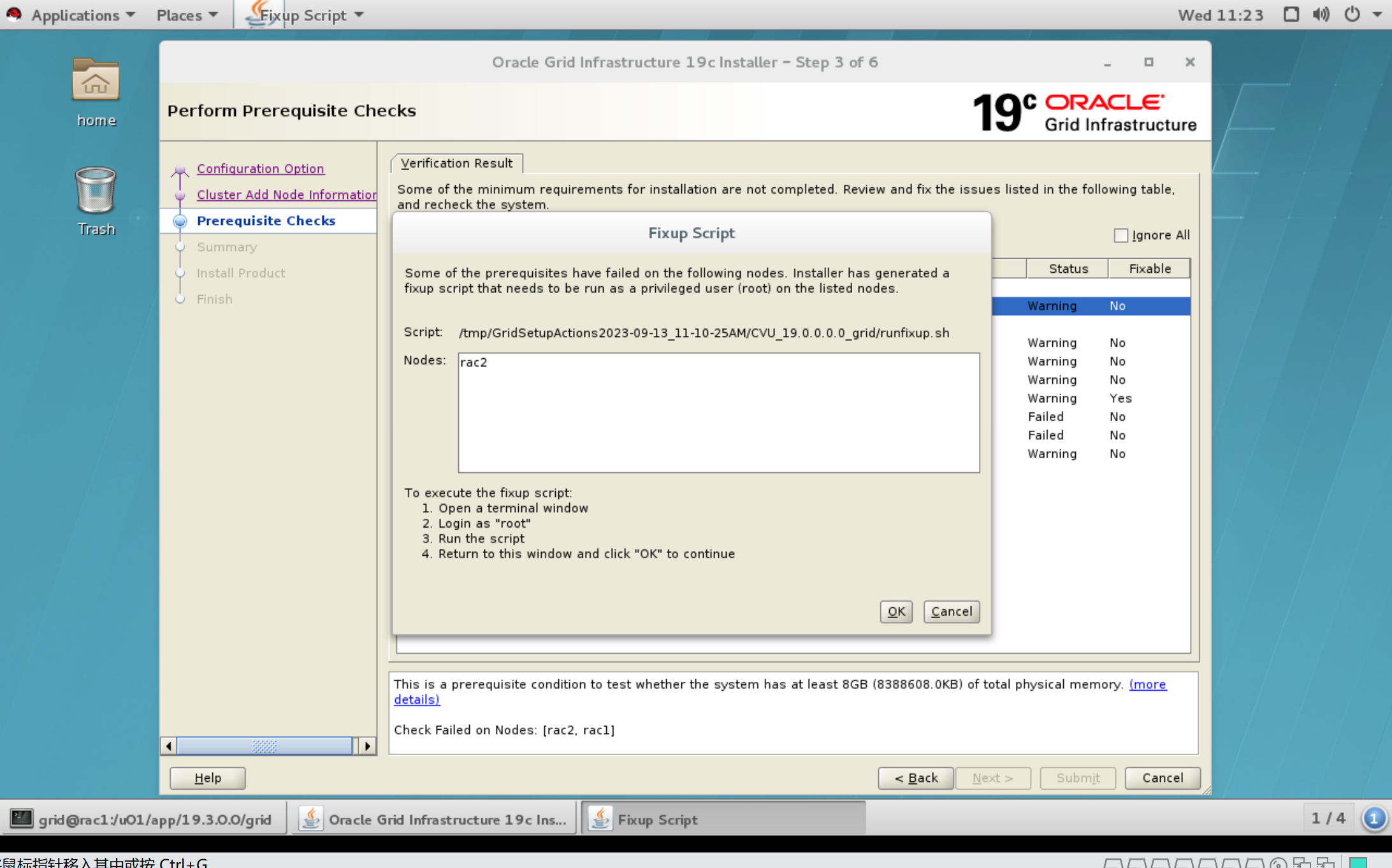

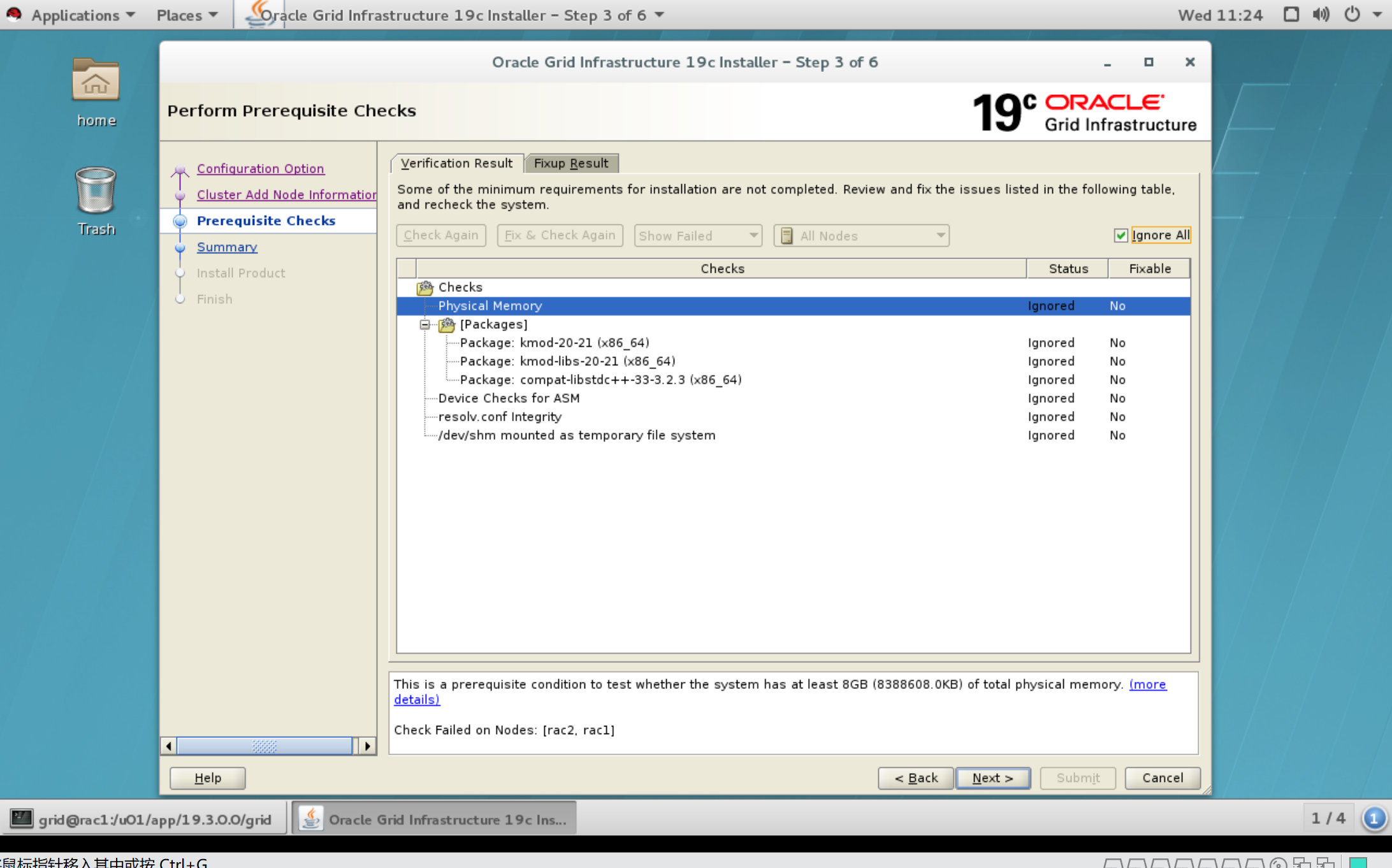

The SSH user-equivalence (passwordless SSH) step reports an error:

------------------------------------Error message

PRKN-1038 : The command "/usr/bin/ssh -o FallBackToRsh=no -o PasswordAuthentication=no -o StrictHostKeyChecking=yes -o NumberOfPasswordPrompts=0 rac2 -n /bin/true" run on node "rac1" gave an unexpected output: "Agent admitted failure to sign using the key.Permission denied (publickey,gssapi-keyex,gssapi-with-mic,password)."

------------------------------------Reference

GI runInstaller Fails with INS-6006 in VNC During SSH test even though SSH Setup is successful (Doc ID 2070270.1)

------------------------------------Resolution:

On node 1, run export SSH_AUTH_SOCK=0, then run gridSetup.sh again.
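Before rerunning gridSetup.sh, you can test passwordless SSH non-interactively with the agent disabled, in a way similar to the command in the error message above. This hypothetical sketch removes SSH_AUTH_SOCK from the environment for the test (the author instead sets it to 0). `SSH_BIN` exists only so the function can be tried without a cluster. Run it as both grid and oracle on each node, including each node against itself.

```shell
# Hypothetical helper: check non-interactive SSH to each host with the SSH agent
# disabled. BatchMode=yes makes ssh fail instead of prompting for a password.
check_ssh_equivalence() {
  local ssh=${SSH_BIN:-ssh} host rc=0
  for host in "$@"; do
    if env -u SSH_AUTH_SOCK "$ssh" -o BatchMode=yes -o StrictHostKeyChecking=yes \
         -o ConnectTimeout=5 "$host" /bin/true </dev/null; then
      echo "ok: $host"
    else
      echo "FAILED: $host"
      rc=1
    fi
  done
  return "$rc"
}

# Usage (as grid, then as oracle, on each node):
# check_ssh_equivalence rac1 rac2 rac1-priv rac2-priv
```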

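Hidden directories under the Grid home had not been deleted, because rm -rf * in 2.5.5 does not match names that begin with a dot. The ownership and permissions of the /u01 directories had also not been restored. These problems do not occur when adding a brand-new node. They appear here only because the node was first deleted and then added back, so the following steps are needed before the installation can continue: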

[root@rac2 grid]# cd /u01/app/19.3.0.0/grid

[root@rac2 grid]# rm -rf .opatchauto_storage/

[root@rac2 grid]# rm -rf .patch_storage/

[root@rac2 grid]# chown -R grid:oinstall /u01

[root@rac2 grid]# chown -R oracle:oinstall /u01/app/oracle

[root@rac2 grid]# chmod -R 775 /u01

[root@rac2 grid]# /tmp/GridSetupActions2023-09-13_11-10-25AM/CVU_19.0.0.0.0_grid/runfixup.sh

All Fix-up operations were completed successfully.

[root@rac1 bin]# vi /etc/systemd/logind.conf

RemoveIPC=no
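Unless RemoveIPC=no is set, systemd-logind removes System V IPC objects (shared memory, semaphores) owned by a non-system user when that user's last session ends. This can take down ASM or database instances, so the setting matters on every node. The helper below is a hypothetical sketch. It assumes the file has only the default [Login] section, so an appended line lands in that section. Restart systemd-logind afterwards.

```shell
# Hypothetical helper: ensure a logind.conf-style file sets RemoveIPC=no.
# An existing RemoveIPC line (commented out or not) is replaced; otherwise the
# setting is appended.
set_removeipc_no() {
  local conf=${1:-/etc/systemd/logind.conf}
  if grep -Eq '^[#[:space:]]*RemoveIPC=' "$conf"; then
    sed -i -E 's/^[#[:space:]]*RemoveIPC=.*/RemoveIPC=no/' "$conf"
  else
    printf 'RemoveIPC=no\n' >> "$conf"
  fi
}

# Usage (as root on every node):
# set_removeipc_no && systemctl restart systemd-logind
```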

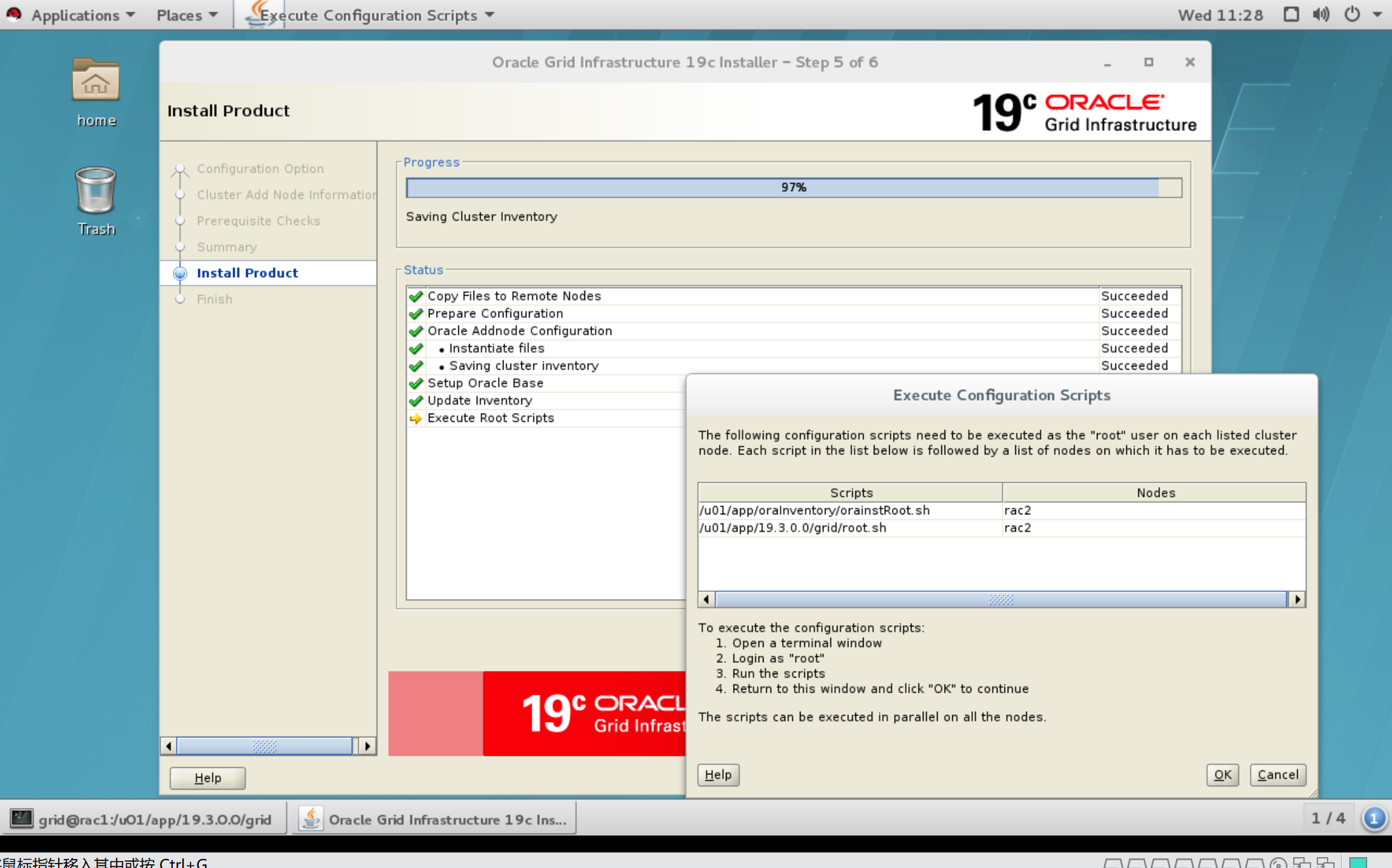

-----------------------------------------Run orainstRoot.sh

[root@rac2 grid]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

-----------------------------------------Run root.sh

[root@rac2 grid]# /u01/app/19.3.0.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.3.0.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/19.3.0.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/rac2/crsconfig/rootcrs_rac2_2023-09-13_11-36-33AM.log

2023/09/13 11:36:46 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2023/09/13 11:36:46 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2023/09/13 11:36:47 CLSRSC-363: User ignored prerequisites during installation

2023/09/13 11:36:47 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2023/09/13 11:36:47 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2023/09/13 11:36:48 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2023/09/13 11:36:51 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2023/09/13 11:36:51 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2023/09/13 11:36:56 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2023/09/13 11:36:59 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2023/09/13 11:37:00 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2023/09/13 11:37:02 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2023/09/13 11:37:02 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2023/09/13 11:37:06 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2023/09/13 11:37:18 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2023/09/13 11:37:20 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2023/09/13 11:37:25 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2023/09/13 11:37:28 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

2023/09/13 11:37:51 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2023/09/13 11:39:22 CLSRSC-343: Successfully started Oracle Clusterware stack

2023/09/13 11:39:23 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

clscfg: EXISTING configuration version 19 detected.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

2023/09/13 11:40:11 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2023/09/13 11:40:41 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded
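If root.sh fails part-way, the last CLSRSC-594 line shows where it stopped. The hypothetical helper below prints the last installation step reached. It succeeds only if the CLSRSC-325 success message (last line above) is present. It reads saved root.sh output. Assuming the same messages are written there, it should also work on the rootcrs_<node>_<timestamp>.log file named above.

```shell
# Hypothetical helper: summarise root.sh output (file argument or stdin).
# Prints the last "installation step N of M: 'Name'" reached (CLSRSC-594 lines)
# and returns 0 only if the success message CLSRSC-325 is present.
root_sh_summary() {
  local log=${1:-/dev/stdin} text
  text=$(cat "$log")
  printf '%s\n' "$text" |
    sed -n -E "s/.*CLSRSC-594: Executing installation step ([0-9]+) of ([0-9]+): '([^']+)'.*/step \1\/\2 \3/p" |
    tail -n 1
  printf '%s\n' "$text" | grep -q 'CLSRSC-325:'
}

# Usage: root_sh_summary /u01/app/grid/crsdata/rac2/crsconfig/rootcrs_rac2_<timestamp>.log
```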

If no graphical environment is available, the same can be done from the command line:

[grid@rac1 ~]$ cd $ORACLE_HOME

[grid@rac1 grid]$ cd oui/bin/

[grid@rac1 bin]$ export IGNORE_PREADDNODE_CHECKS=Y

[grid@rac1 bin]$ ./addNode.sh -silent "CLUSTER_NEW_NODES={rac2}" "CLUSTER_NEW_VIRTUAL_HOSTNAMES={rac2-vip}"

[grid@rac1 bin]$ cluvfy stage -post nodeadd -n rac2 -verbose

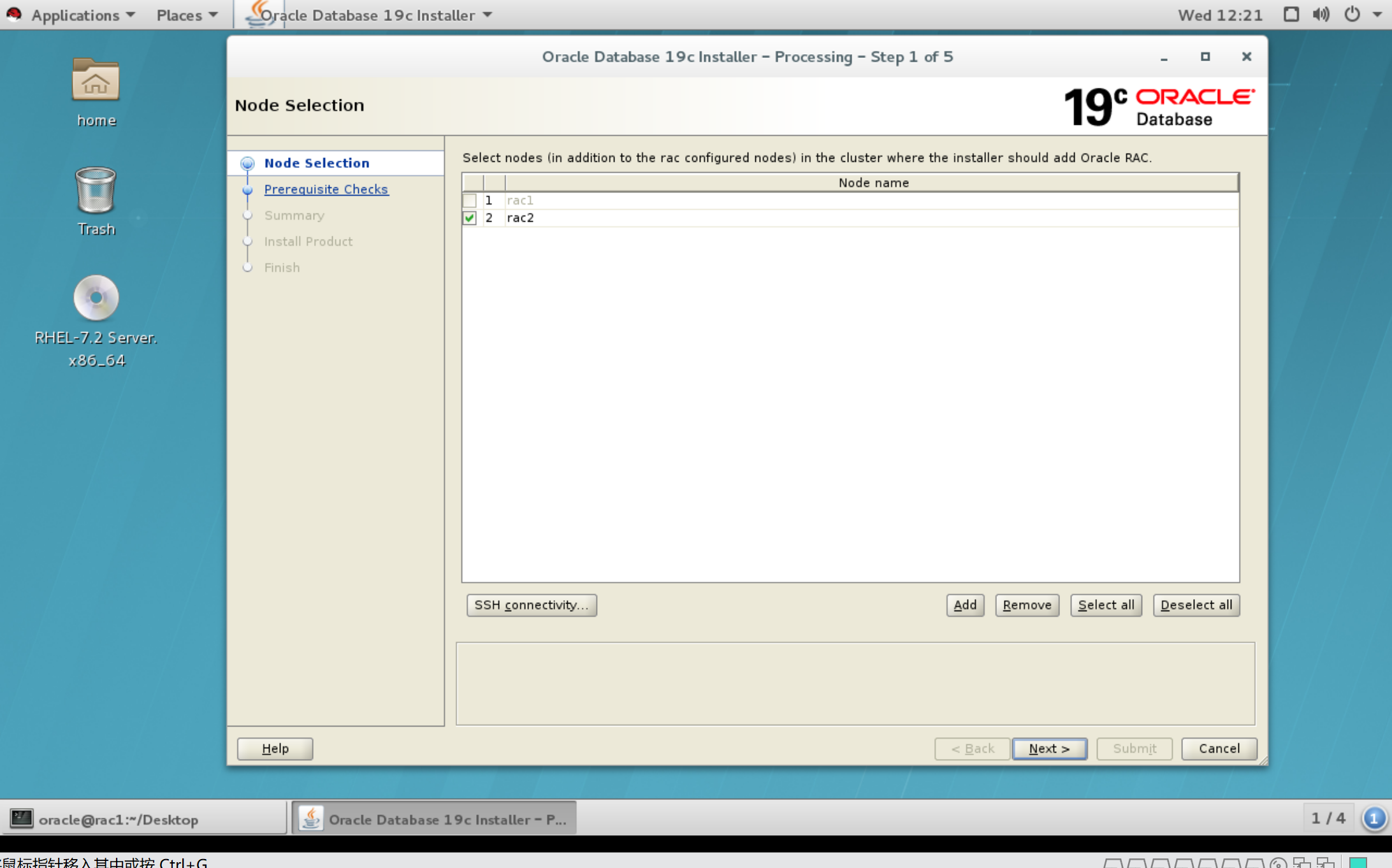

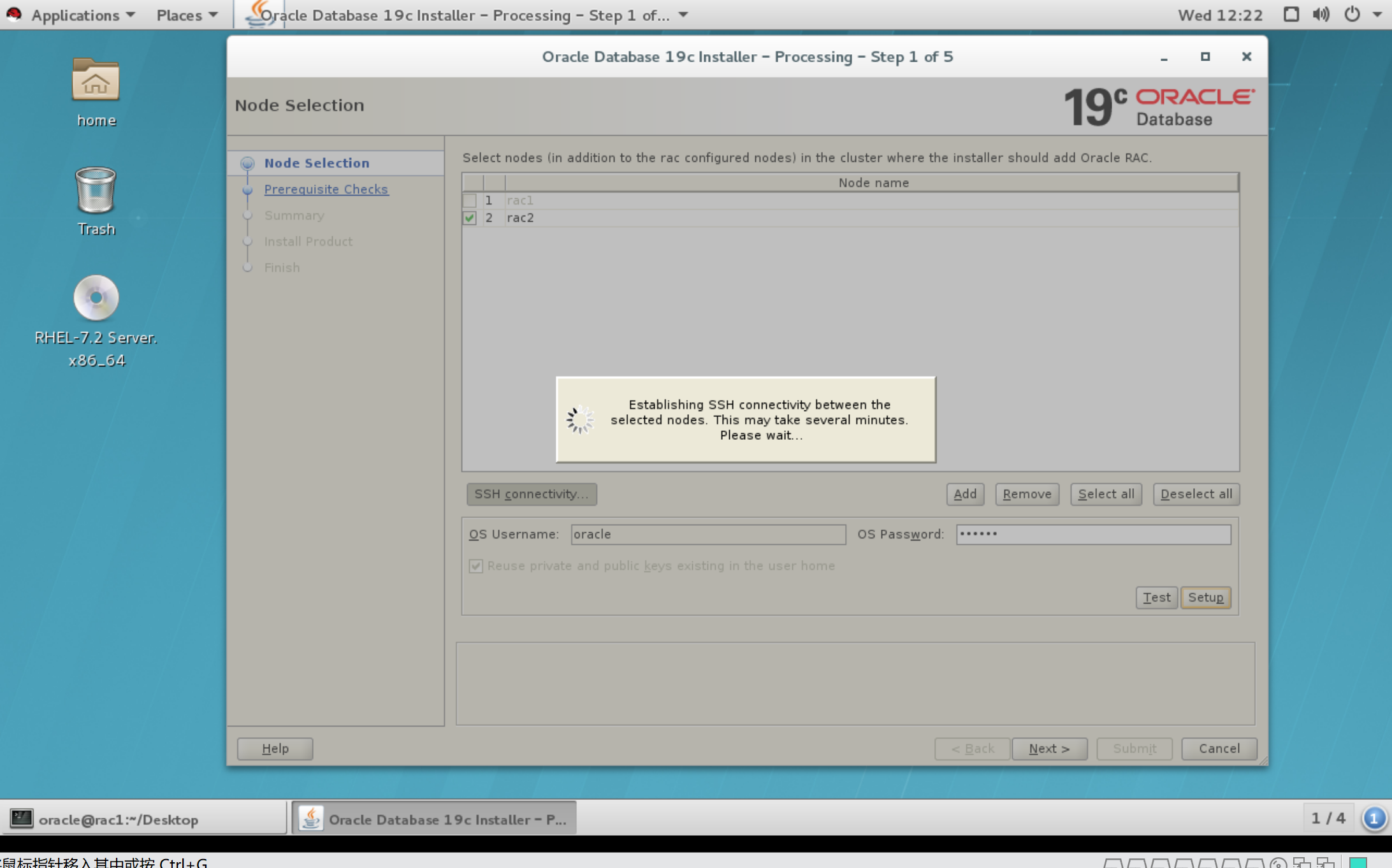

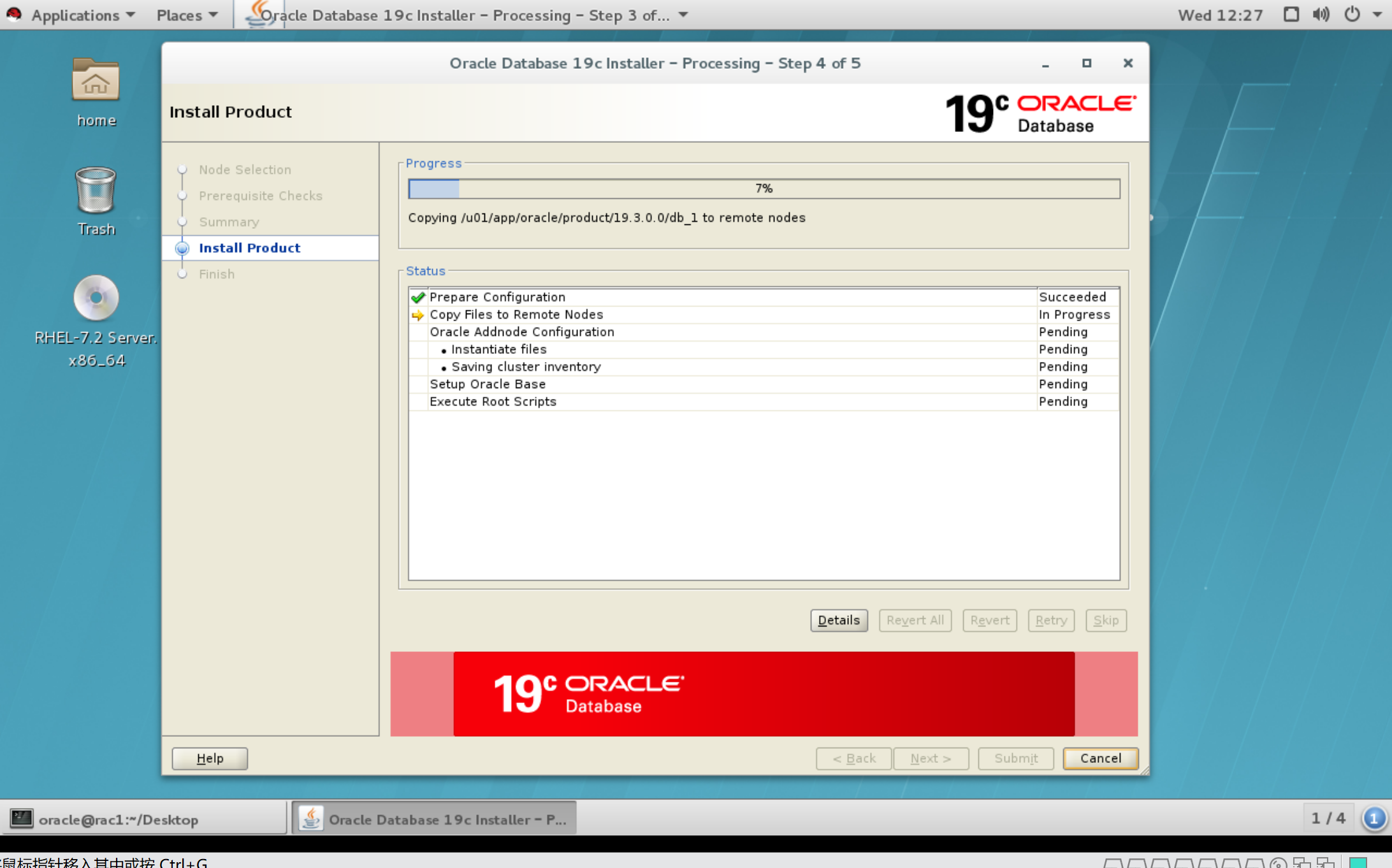

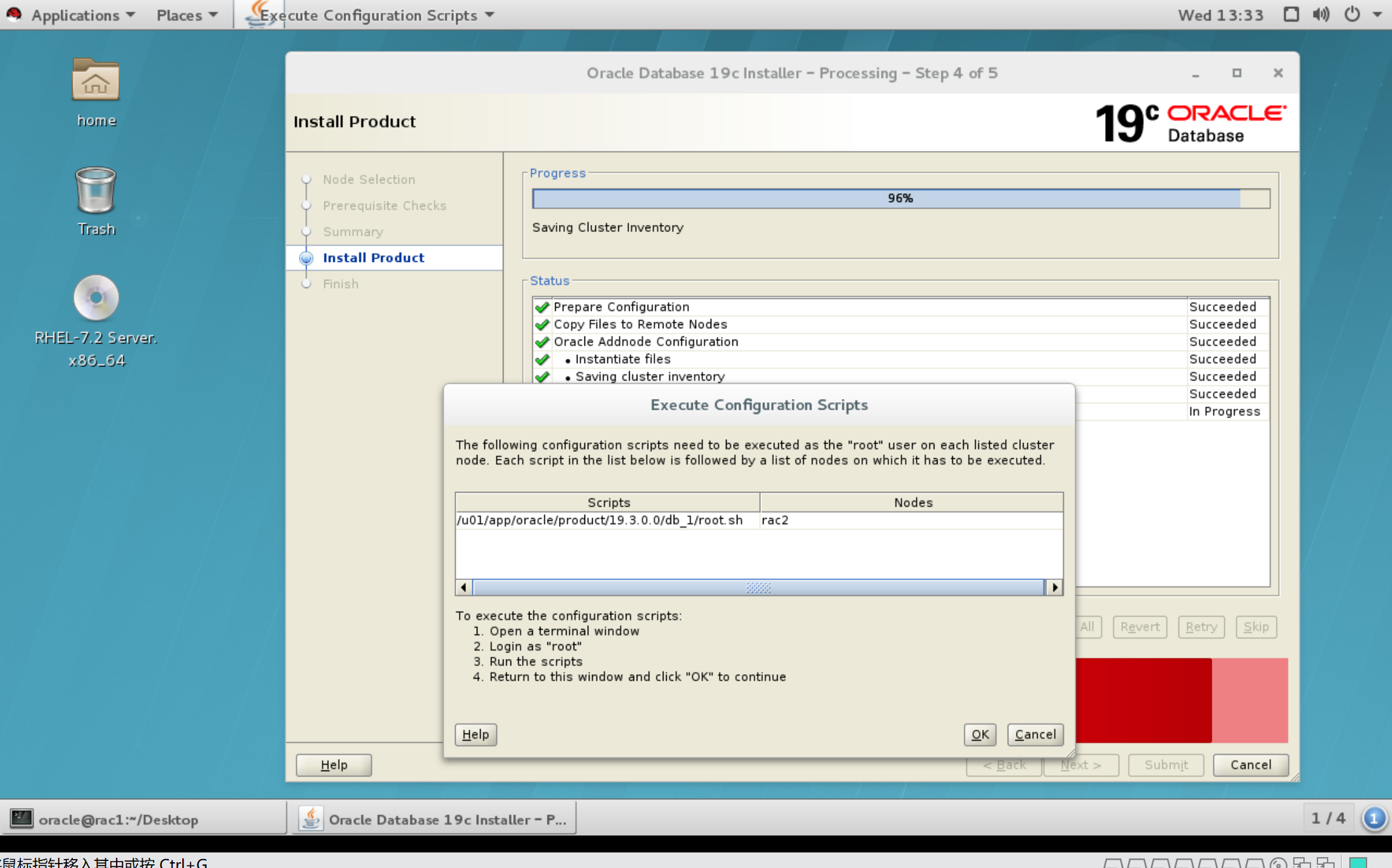

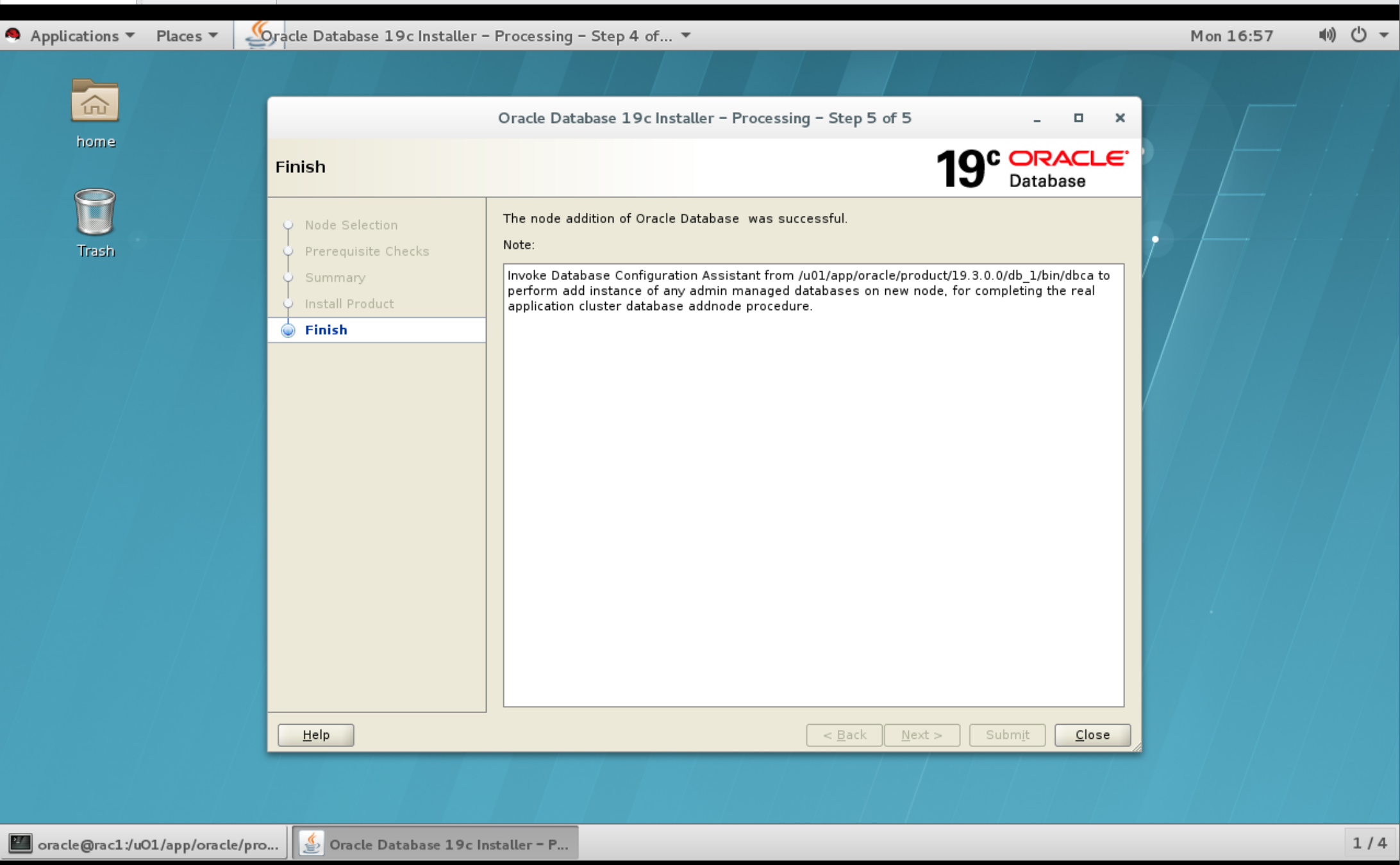

3.3 Add rac2 to the Oracle Database Home (on node 1)

export SSH_AUTH_SOCK=0

$ORACLE_HOME/addnode/addnode.sh "CLUSTER_NEW_NODES={rac2}"

[root@rac2 product]# /u01/app/oracle/product/19.3.0.0/db_1/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= oracle

ORACLE_HOME= /u01/app/oracle/product/19.3.0.0/db_1

Enter the full pathname of the local bin directory: [/usr/local/bin]:

The contents of "dbhome" have not changed. No need to overwrite.

The contents of "oraenv" have not changed. No need to overwrite.

The contents of "coraenv" have not changed. No need to overwrite.

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

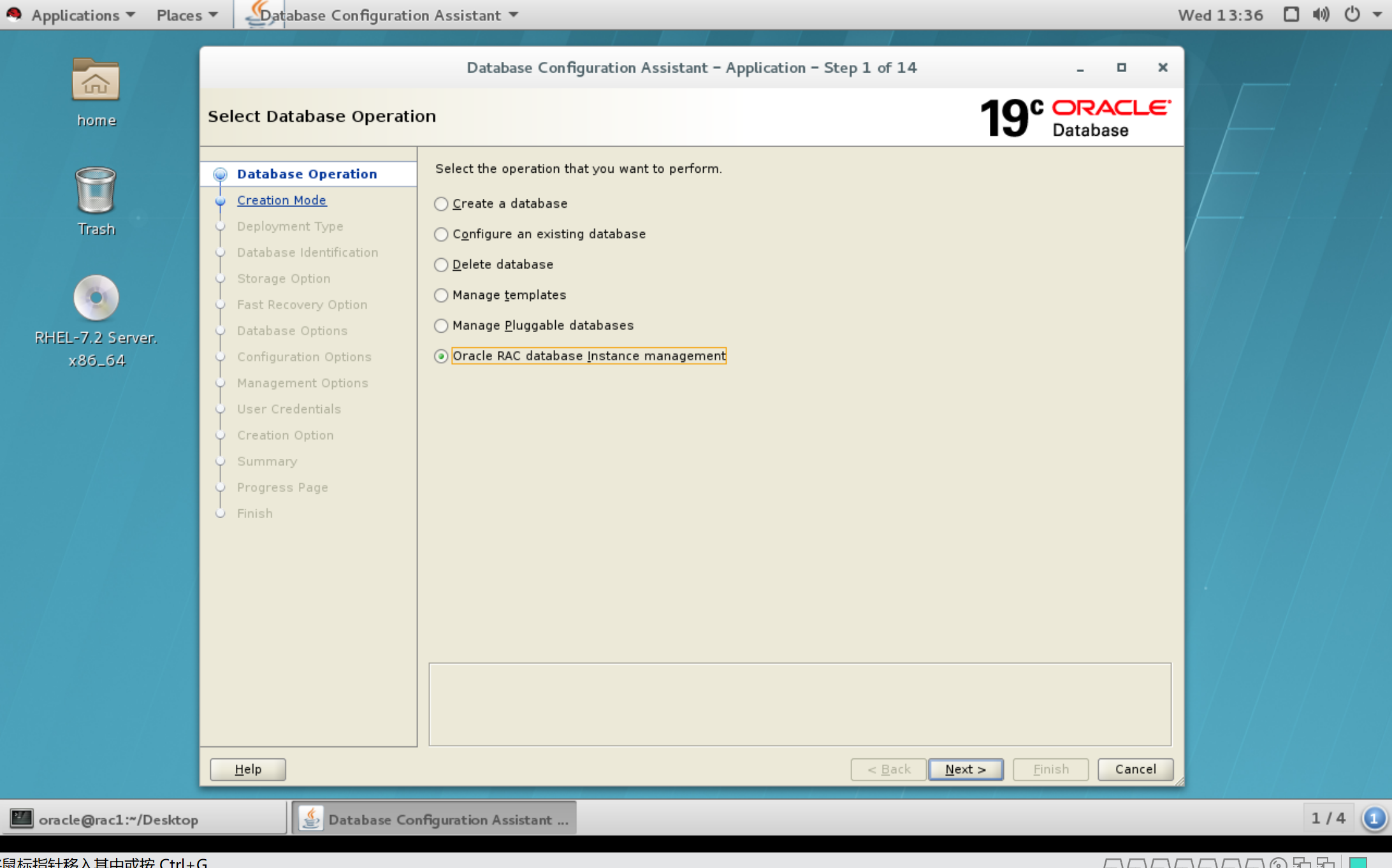

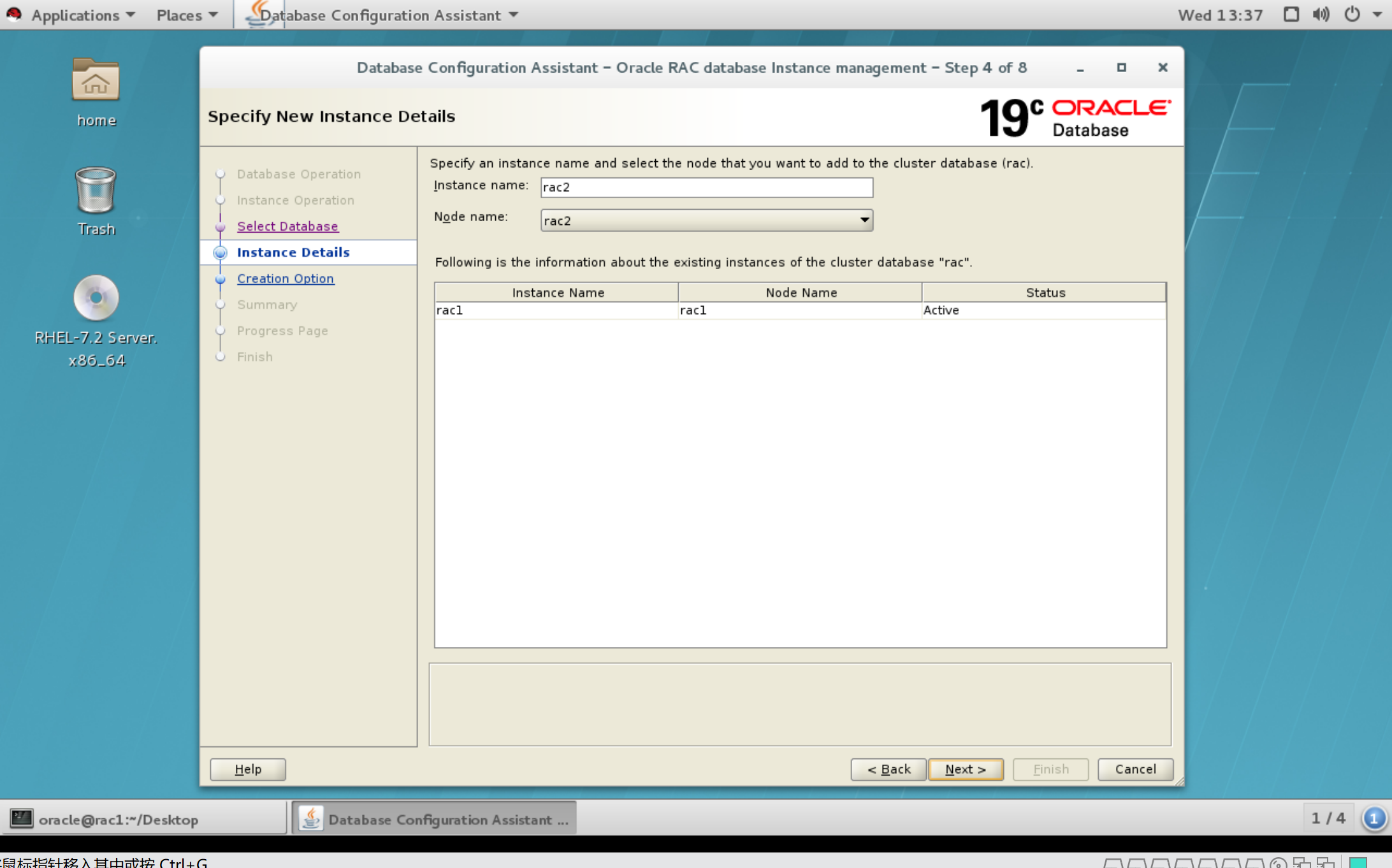

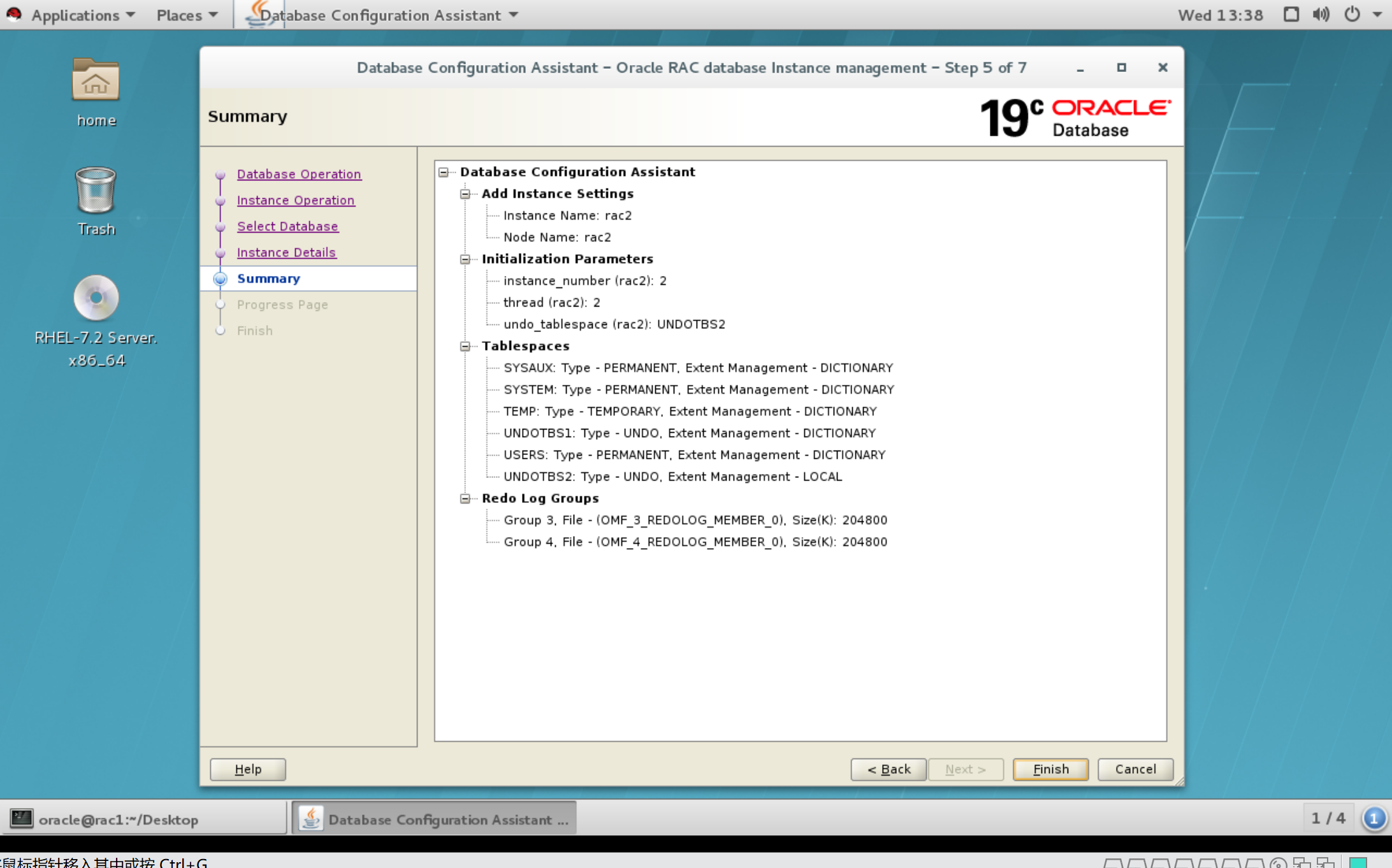

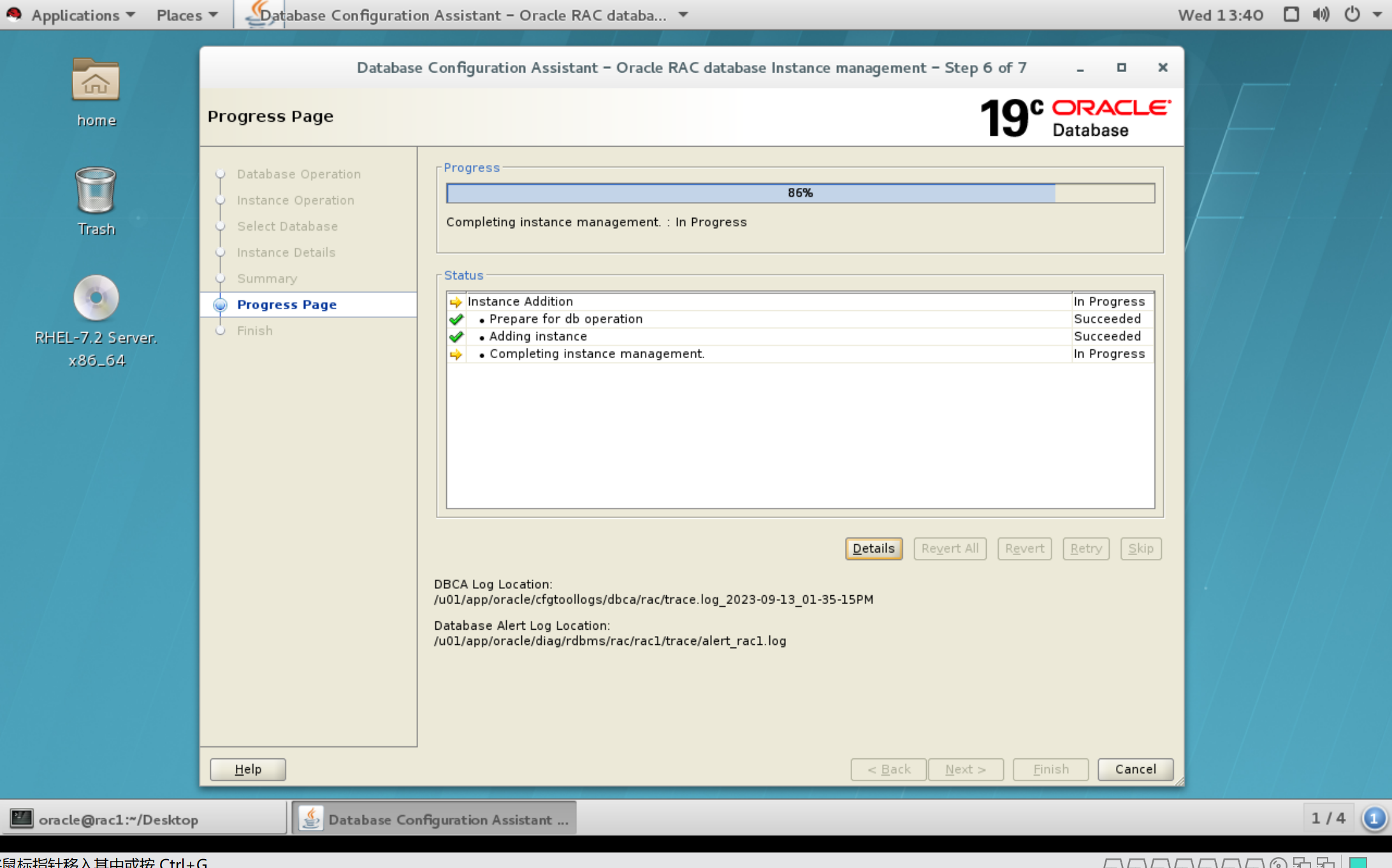

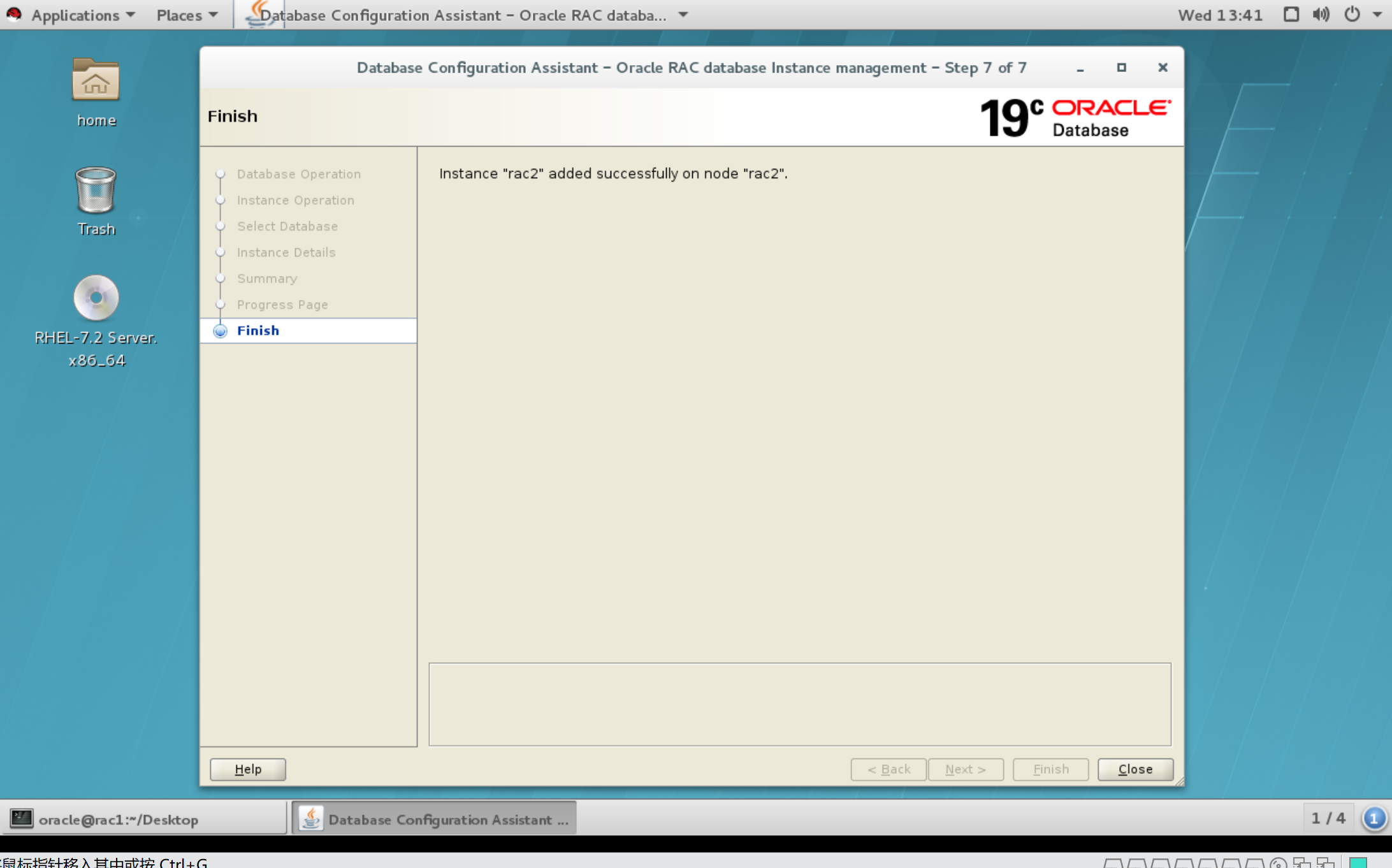

3.4 Add the Database Instance with DBCA

Run the DBCA GUI on node 1, choose Instance Management, and add the instance.

If no graphical environment is available, the following command does the same:

[oracle@rac1 ~]$ dbca -silent -addInstance -nodeName rac2 -gdbName rac -instanceName rac2 -sysDBAUserName sys -sysDBAPassword welcome1

---------------------------------------------Re-adding resources

[grid@rac1 ~]$ crsctl modify serverpool ora.rac -attr SERVER_NAMES="rac1"

You cannot directly convert a policy-managed database to an administrator-managed database. Instead, remove the policy-managed configuration and then register the same database as an administrator-managed database.

1. Remove the database and services

srvctl remove database -d <dbname>

srvctl remove service -d <dbname> -s <service name>

2. Register the same database as an administrator-managed database

srvctl add database -d <dbname> -o <RDBMS_HOME>

srvctl add instance -d <dbname> -i <instance_name> -n <nodename>

srvctl add service -d <db_unique_name> -s <service_name> -u {-r <new_pref_inst> | -a <new_avail_inst>}

3.5 Status Check

[grid@rac2 ~]$ crsctl status res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.chad

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.ons

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.proxy_advm

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 ONLINE OFFLINE STABLE

ora.DATA.dg(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE rac1 Started,STABLE

2 ONLINE ONLINE rac2 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE rac1 STABLE

2 ONLINE ONLINE rac2 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE rac1 STABLE

ora.qosmserver

1 ONLINE ONLINE rac1 STABLE

ora.rac.db

1 ONLINE ONLINE rac1 Open,HOME=/u01/app/o

racle/product/19.3.0

.0/db_1,STABLE

2 ONLINE ONLINE rac2 Open,HOME=/u01/app/o

racle/product/19.3.0

.0/db_1,STABLE

ora.rac1.vip

1 ONLINE ONLINE rac1 STABLE

ora.rac2.vip

1 ONLINE ONLINE rac2 STABLE

ora.scan1.vip

1 ONLINE ONLINE rac1 STABLE

--------------------------------------------------------------------------------

SQL> select instance_name,status from gv$instance;

INSTANCE_NAME STATUS

---------------- ------------

rac1 OPEN

rac_2 OPEN

[oracle@rac2 ~]$ olsnodes -s -t

rac1 Active Unpinned

rac2 Active Unpinned

This completes all of the tests.