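OceanBase Community Edition Deployment Notes

VMware Workstation 17 Pro

Oracle Linux 8.9

OceanBase-CE 4.3.5.1

Offline single-node (all-in-one) installation of obd as root, then deploying the demo cluster with obd

First create a VM named oceanbase41: 150 GB system disk, 150 GB data disk (not pre-allocated), one network adapter.

Configure the network interface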

vi /etc/sysconfig/network-scripts/ifcfg-ens160

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=eui64

NAME=ens160

UUID=233d354c-5970-4256-96b4-49a82653e7fb

DEVICE=ens160

ONBOOT=yes

IPADDR=192.168.229.193

DNS1=192.168.229.2

GATEWAY=192.168.229.2

Save and exit. The key settings are BOOTPROTO=static, ONBOOT=yes, IPADDR=192.168.229.193, DNS1=192.168.229.2, and GATEWAY=192.168.229.2.
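The static addressing above can be sanity-checked before the network is restarted. As a rough sketch, the function below reads IPADDR and GATEWAY from an ifcfg-style file and confirms that they share the first three octets and that the gateway is a usable host address. It assumes a /24 network, which these notes do not state (the file sets neither PREFIX nor NETMASK), and `check_ifcfg` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sanity-check IPADDR and GATEWAY in an ifcfg-style file.
# Assumption: the network is a /24 (not stated in the notes).
check_ifcfg() {
    local file=$1 ip gw
    ip=$(sed -n 's/^IPADDR=//p' "$file" | head -n 1)
    gw=$(sed -n 's/^GATEWAY=//p' "$file" | head -n 1)
    if [ -z "$ip" ] || [ -z "$gw" ]; then
        echo "IPADDR or GATEWAY missing in $file"
        return 1
    fi
    # Compare the first three octets (the network part of a /24).
    if [ "${ip%.*}" != "${gw%.*}" ]; then
        echo "gateway $gw is not in the same /24 as $ip"
        return 1
    fi
    # In a /24, .0 is the network address and .255 the broadcast address.
    case "${gw##*.}" in
        0|255)
            echo "gateway $gw is not a usable host address"
            return 1
            ;;
    esac
    echo "ok: $ip via $gw"
}
```

Usage: `check_ifcfg /etc/sysconfig/network-scripts/ifcfg-ens160`.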

For a web-console (GUI) installation, the VM's firewall must be turned off.

systemctl stop firewalld

systemctl status firewalld
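Note that `systemctl stop` only affects the running system: a unit that is still enabled starts again at the next boot, and this procedure reboots the VM later on. A small sketch for seeing both states at once; `firewalld_summary` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print whether firewalld is running now and whether it will start at boot.
firewalld_summary() {
    local active enabled
    # Both subcommands exit non-zero for "inactive"/"disabled", hence "|| true".
    active=$(systemctl is-active firewalld 2>/dev/null || true)
    enabled=$(systemctl is-enabled firewalld 2>/dev/null || true)
    echo "active=${active:-unknown} enabled=${enabled:-unknown}"
}
```

If `firewalld_summary` reports the unit as inactive but still enabled, the firewall will be back after a reboot; `systemctl disable --now firewalld` stops it and keeps it off.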

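Configure the data disk (a single plain partition formatted as XFS; no LVM volume is created)

fdisk /dev/nvme0n2

Type n and press Enter, press Enter to accept the default at each prompt that follows, then type w and press Enter to write the partition table.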

mkfs.xfs /dev/nvme0n2p1

mkdir /OBdata

mount /dev/nvme0n2p1 /OBdata

blkid /dev/nvme0n2p1

vi /etc/fstab

Add this line:

UUID=fba9c72b-0aff-40a5-9098-51659cbbdcea /OBdata xfs defaults 0 0

Save and exit.
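Copying the UUID by hand from the blkid output into /etc/fstab is easy to get wrong. A minimal sketch of building the entry from the device instead; `fstab_entry` and `add_fstab_entry` are hypothetical helper names, and the commented usage lines assume root and the device and mount point used above.

```shell
#!/usr/bin/env bash
# Build an /etc/fstab line for an XFS filesystem from its UUID.
fstab_entry() {
    local uuid=$1 mountpoint=$2
    printf 'UUID=%s %s xfs defaults 0 0\n' "$uuid" "$mountpoint"
}

# Append the line to a file only if that mount point is not listed yet.
add_fstab_entry() {
    local file=$1 uuid=$2 mountpoint=$3
    if awk -v mp="$mountpoint" \
        '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$file"; then
        echo "$mountpoint already present in $file"
        return 0
    fi
    fstab_entry "$uuid" "$mountpoint" >> "$file"
}

# Usage as root (not run here):
#   uuid=$(blkid -s UUID -o value /dev/nvme0n2p1)
#   add_fstab_entry /etc/fstab "$uuid" /OBdata
#   mount -a && findmnt /OBdata   # confirm the entry mounts before rebooting
```

Running `mount -a` before the reboot catches a bad entry while the system is still reachable.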

chmod 777 /OBdata

mkdir /home/OBlog

chmod 777 /home/OBlog

Upload oceanbase-all-in-one-4.3.5_bp1_20250320.el8.x86_64.tar.gz to /root

chmod 777 oceanbase-all-in-one-4.3.5_bp1_20250320.el8.x86_64.tar.gz

Reboot, then shut down.

Clone the VM at this point.

Then go back to oceanbase41.

Deployment method 1: offline all-in-one installation as root, demo cluster created with obd

cd /root/

tar -zxvf oceanbase-all-in-one-4.3.5_bp1_20250320.el8.x86_64.tar.gz

cd oceanbase-all-in-one/bin

./install.sh

source ~/.oceanbase-all-in-one/bin/env.sh

[root@oceanbase4 bin]# obd demo

Package obproxy-ce-4.3.3.0-5.el8 is available.

Package obagent-4.2.2-100000042024011120.el8 is available.

Package oceanbase-ce-4.3.5.1-101000042025031818.el8 is available.

Package prometheus-2.37.1-10000102022110211.el8 is available.

Package grafana-7.5.17-1 is available.

install obproxy-ce-4.3.3.0 for local ok

install obagent-4.2.2 for local ok

install oceanbase-ce-4.3.5.1 for local ok

install prometheus-2.37.1 for local ok

install grafana-7.5.17 for local ok

Cluster param config check ok

Open ssh connection ok

Generate obproxy configuration ok

Generate obagent configuration ok

Generate prometheus configuration ok

Generate grafana configuration ok

+--------------------------------------------------------------------------------------------+

| Packages |

+--------------+---------+------------------------+------------------------------------------+

| Repository | Version | Release | Md5 |

+--------------+---------+------------------------+------------------------------------------+

| obproxy-ce | 4.3.3.0 | 5.el8 | 3e5179c4e9864a29704d6b4c2a35a4ad40e7ad56 |

| obagent | 4.2.2 | 100000042024011120.el8 | bf152b880953c2043ddaf80d6180cf22bb8c8ac2 |

| oceanbase-ce | 4.3.5.1 | 101000042025031818.el8 | 3a4f23adb7973d6d1d6969bcd9ae108f8c564b66 |

| prometheus | 2.37.1 | 10000102022110211.el8 | e4f8a3e784512fca75bf1b3464247d1f31542cb9 |

| grafana | 7.5.17 | 1 | 1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6 |

+--------------+---------+------------------------+------------------------------------------+

Repository integrity check ok

Load param plugin ok

Open ssh connection ok

Initializes obagent work home ok

Initializes observer work home ok

Initializes obproxy work home ok

Initializes prometheus work home ok

Initializes grafana work home ok

Parameter check ok

Remote obproxy-ce-4.3.3.0-5.el8-3e5179c4e9864a29704d6b4c2a35a4ad40e7ad56 repository install ok

Remote obproxy-ce-4.3.3.0-5.el8-3e5179c4e9864a29704d6b4c2a35a4ad40e7ad56 repository lib check ok

Remote obagent-4.2.2-100000042024011120.el8-bf152b880953c2043ddaf80d6180cf22bb8c8ac2 repository install ok

Remote obagent-4.2.2-100000042024011120.el8-bf152b880953c2043ddaf80d6180cf22bb8c8ac2 repository lib check ok

Remote oceanbase-ce-4.3.5.1-101000042025031818.el8-3a4f23adb7973d6d1d6969bcd9ae108f8c564b66 repository install ok

Remote oceanbase-ce-4.3.5.1-101000042025031818.el8-3a4f23adb7973d6d1d6969bcd9ae108f8c564b66 repository lib check ok

Remote prometheus-2.37.1-10000102022110211.el8-e4f8a3e784512fca75bf1b3464247d1f31542cb9 repository install ok

Remote prometheus-2.37.1-10000102022110211.el8-e4f8a3e784512fca75bf1b3464247d1f31542cb9 repository lib check ok

Remote grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6 repository install ok

Remote grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6 repository lib check ok

demo deployed

Get local repositories ok

Load cluster param plugin ok

Open ssh connection ok

[ERROR] OBD-1007: (127.0.0.1) The value of the ulimit parameter "open files" must not be less than 20000 (Current value: 1024)

[WARN] OBD-1007: (127.0.0.1) The recommended number of max user processes is 655350 (Current value: 127171)

[WARN] OBD-1007: (127.0.0.1) The recommended number of core file size is unlimited (Current value: 0)

[WARN] OBD-1007: (127.0.0.1) The recommended number of stack size is unlimited (Current value: 8192)

[WARN] OBD-1017: (127.0.0.1) The value of the "vm.max_map_count" must be within [327600, 1310720] (Current value: 65530, Recommended value: 655360)

[WARN] OBD-1017: (127.0.0.1) The value of the "fs.file-max" must be greater than 6573688 (Current value: 3246810, Recommended value: 6573688)

[WARN] OBD-1012: (127.0.0.1) clog and data use the same disk (/)

See https://www.oceanbase.com/product/ob-deployer/error-codes .

Trace ID: 9a6740ce-1132-11f0-adb9-000c293d071f

If you want to view detailed obd logs, please run: obd display-trace 9a6740ce-1132-11f0-adb9-000c293d071f

vi /etc/security/limits.conf

* soft nofile unlimited

* hard nofile unlimited

* soft nproc unlimited

* hard nproc unlimited

* soft core unlimited

* hard core unlimited

# End of file

Save and exit.

vi /etc/sysctl.conf

vm.max_map_count=655360

fs.file-max=6573688

Save and exit.

reboot
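After logging back in, the values obd complained about can be checked before rerunning `obd demo`. This is a sketch only: the thresholds are copied from the obd messages above, `meets_min` and `preflight` are hypothetical helper names, and only lower bounds are checked (obd also reported an upper bound for vm.max_map_count). As a side note, `sysctl -p` reloads /etc/sysctl.conf without a reboot, while limits.conf is read when a new login session starts.

```shell
#!/usr/bin/env bash
# Compare a current value against a minimum; "unlimited" always passes.
meets_min() {
    local name=$1 current=$2 min=$3
    if [ "$current" = "unlimited" ] || [ "$current" -ge "$min" ]; then
        echo "ok: $name=$current"
    else
        echo "low: $name=$current (want >= $min)"
        return 1
    fi
}

# Check the settings named in the obd output; returns 1 if any is too low.
preflight() {
    local rc=0
    meets_min "open files" "$(ulimit -n)" 20000 || rc=1
    meets_min "vm.max_map_count" "$(cat /proc/sys/vm/max_map_count)" 327600 || rc=1
    meets_min "fs.file-max" "$(cat /proc/sys/fs/file-max)" 6573688 || rc=1
    return "$rc"
}
```

Run `preflight` in the same shell that will run obd, since ulimit values belong to the login session.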

source ~/.oceanbase-all-in-one/bin/env.sh

[root@oceanbase4 bin]# obd demo

Get local repositories ok

Open ssh connection ok

Get deployment connections ok

Get standbys info ok

Cluster status check ok

oceanbase-ce work dir cleaning ok

obproxy-ce work dir cleaning ok

obagent work dir cleaning ok

prometheus work dir cleaning ok

grafana work dir cleaning ok

demo destroyed

Cluster param config check ok

Open ssh connection ok

Generate obagent configuration ok

Generate obproxy configuration ok

Generate prometheus configuration ok

Generate grafana configuration ok

+--------------------------------------------------------------------------------------------+

| Packages |

+--------------+---------+------------------------+------------------------------------------+

| Repository | Version | Release | Md5 |

+--------------+---------+------------------------+------------------------------------------+

| obagent | 4.2.2 | 100000042024011120.el8 | bf152b880953c2043ddaf80d6180cf22bb8c8ac2 |

| obproxy-ce | 4.3.3.0 | 5.el8 | 3e5179c4e9864a29704d6b4c2a35a4ad40e7ad56 |

| oceanbase-ce | 4.3.5.1 | 101000042025031818.el8 | 3a4f23adb7973d6d1d6969bcd9ae108f8c564b66 |

| prometheus | 2.37.1 | 10000102022110211.el8 | e4f8a3e784512fca75bf1b3464247d1f31542cb9 |

| grafana | 7.5.17 | 1 | 1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6 |

+--------------+---------+------------------------+------------------------------------------+

Repository integrity check ok

Load param plugin ok

Open ssh connection ok

Initializes obagent work home ok

Initializes observer work home ok

Initializes obproxy work home ok

Initializes prometheus work home ok

Initializes grafana work home ok

Parameter check ok

Remote obagent-4.2.2-100000042024011120.el8-bf152b880953c2043ddaf80d6180cf22bb8c8ac2 repository install ok

Remote obagent-4.2.2-100000042024011120.el8-bf152b880953c2043ddaf80d6180cf22bb8c8ac2 repository lib check ok

Remote obproxy-ce-4.3.3.0-5.el8-3e5179c4e9864a29704d6b4c2a35a4ad40e7ad56 repository install ok

Remote obproxy-ce-4.3.3.0-5.el8-3e5179c4e9864a29704d6b4c2a35a4ad40e7ad56 repository lib check ok

Remote oceanbase-ce-4.3.5.1-101000042025031818.el8-3a4f23adb7973d6d1d6969bcd9ae108f8c564b66 repository install ok

Remote oceanbase-ce-4.3.5.1-101000042025031818.el8-3a4f23adb7973d6d1d6969bcd9ae108f8c564b66 repository lib check ok

Remote prometheus-2.37.1-10000102022110211.el8-e4f8a3e784512fca75bf1b3464247d1f31542cb9 repository install ok

Remote prometheus-2.37.1-10000102022110211.el8-e4f8a3e784512fca75bf1b3464247d1f31542cb9 repository lib check ok

Remote grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6 repository install ok

Remote grafana-7.5.17-1-1bf1f338d3a3445d8599dc6902e7aeed4de4e0d6 repository lib check ok

demo deployed

Get local repositories ok

Load cluster param plugin ok

Open ssh connection ok

[WARN] OBD-1007: (127.0.0.1) The recommended number of stack size is unlimited (Current value: 8192)

[WARN] OBD-1012: (127.0.0.1) clog and data use the same disk (/)

Check before start obagent ok

Check before start prometheus ok

Check before start grafana ok

cluster scenario: express_oltp

Start observer ok

observer program health check ok

Connect to observer 127.0.0.1:2881 ok

Cluster bootstrap ok

obshell start ok

obshell program health check ok

obshell bootstrap ok

Start obagent ok

obagent program health check ok

start obproxy ok

obproxy program health check ok

Connect to obproxy ok

Start promethues ok

prometheus program health check ok

Start grafana ok

grafana program health check ok

Connect to observer 127.0.0.1:2881 ok

Wait for observer init ok

+---------------------------------------------+

| oceanbase-ce |

+-----------+---------+------+-------+--------+

| ip | version | port | zone | status |

+-----------+---------+------+-------+--------+

| 127.0.0.1 | 4.3.5.1 | 2881 | zone1 | ACTIVE |

+-----------+---------+------+-------+--------+

obclient -h127.0.0.1 -P2881 -uroot -p'Nv2e2CCj4pWCGPw0aOck' -Doceanbase -A

cluster unique id: 04314dca-1166-52c4-a70b-882cfc3e40e6-196004425db-01050304

Connect to Obagent ok

+--------------------------------------------------------------------+

| obagent |

+-----------------+--------------------+--------------------+--------+

| ip | mgragent_http_port | monagent_http_port | status |

+-----------------+--------------------+--------------------+--------+

| 192.168.229.193 | 8089 | 8088 | active |

+-----------------+--------------------+--------------------+--------+

Connect to obproxy ok

+---------------------------------------------------------------+

| obproxy-ce |

+-----------+------+-----------------+-----------------+--------+

| ip | port | prometheus_port | rpc_listen_port | status |

+-----------+------+-----------------+-----------------+--------+

| 127.0.0.1 | 2883 | 2884 | 2885 | active |

+-----------+------+-----------------+-----------------+--------+

obclient -h127.0.0.1 -P2883 -uroot -p'Nv2e2CCj4pWCGPw0aOck' -Doceanbase -A

Connect to Prometheus ok

+--------------------------------------------------------+

| prometheus |

+-----------------------------+------+----------+--------+

| url | user | password | status |

+-----------------------------+------+----------+--------+

| http://192.168.229.193:9090 | | | active |

+-----------------------------+------+----------+--------+

Connect to grafana ok

+---------------------------------------------------------------------+

| grafana |

+-----------------------------------------+-------+----------+--------+

| url | user | password | status |

+-----------------------------------------+-------+----------+--------+

| http://192.168.229.193:3000/d/oceanbase | admin | admin | active |

+-----------------------------------------+-------+----------+--------+

demo running

Trace ID: 45ca6eec-113c-11f0-8783-000c293d071f

If you want to view detailed obd logs, please run: obd display-trace 45ca6eec-113c-11f0-8783-000c293d071f

[root@oceanbase4 bin]#
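The second run still warns about stack size because the limits.conf entries above cover nofile, nproc, and core but not stack. A sketch of adding the two missing lines in the same wildcard style; `add_stack_limits` is a hypothetical helper, and it takes the file path as an argument so it can be tried on a copy first.

```shell
#!/usr/bin/env bash
# Append soft and hard stack limits to a limits.conf-style file
# unless a matching wildcard entry is already present.
add_stack_limits() {
    local file=$1 kind
    for kind in soft hard; do
        if ! grep -Eq "^\*[[:space:]]+$kind[[:space:]]+stack[[:space:]]" "$file"; then
            printf '* %s stack unlimited\n' "$kind" >> "$file"
        fi
    done
}

# Usage as root (not run here):
#   add_stack_limits /etc/security/limits.conf
#   then log out and back in, and check with: ulimit -s
```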

Restarting the demo cluster with obd

obd cluster display demo

obd cluster stop demo

obd cluster start demo

obd cluster display demo

Alternatively:

obd cluster restart demo

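Pitfalls:

1. Adjust the OS parameters before running obd demo, and reboot so they take effect; otherwise obd reports errors. It would help if the official documentation covered this.

2. With the official quick-start demo deployment, the data and log directories are created at /root/oceanbase-ce/store/sstable and /root/oceanbase-ce/store/clog when running as root.

3. I could not find which configuration or parameter file sets the data directory (data_dir) and the log directories (clog_dir, slog_dir), and the official site does not say which file takes effect. Pointers welcome.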
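On the data and log directory question: in obd's YAML deployment configuration, the oceanbase-ce component accepts `data_dir` and `redo_dir` under `global` (obd's sample configuration files list both, commented out). The sketch below only writes such a fragment to a scratch file for review. The paths /OBdata/data and /OBdata/log, the output file, and the helper name `write_dir_fragment` are assumptions, and whether `obd demo` itself honors these keys is not confirmed here. The configuration of an existing deployment can be opened with `obd cluster edit-config demo`.

```shell
#!/usr/bin/env bash
# Write a partial obd config fragment showing where data_dir and redo_dir go.
# Not a complete deployment file: servers, ports and passwords are omitted.
write_dir_fragment() {
    local out=$1 data_dir=$2 redo_dir=$3
    cat > "$out" <<EOF
oceanbase-ce:
  global:
    home_path: /root/oceanbase-ce
    data_dir: $data_dir
    redo_dir: $redo_dir
EOF
}

# Example (paths are assumptions):
#   write_dir_fragment /tmp/ob-dirs.yaml /OBdata/data /OBdata/log
```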

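- Offline single-node (all-in-one) installation of obd as the admin user, deploying the demo cluster plus ocp-express, obagent, and obproxy with obd, then creating the tenant mysqltestten1 in the ocp-express web console

Offline all-in-one installation as admin (with sudo privileges), demo cluster created with obd

For the OS parameter changes, see deployment method 1.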

useradd admin

passwd admin

Enter 123456 as the password.

vi /etc/sudoers

admin ALL=(ALL) NOPASSWD:ALL

Save and exit.

su - admin

sudo mv /root/oceanbase-all-in-one-4.3.5_bp1_20250320.el8.x86_64.tar.gz .

sudo chmod 777 /home/admin/oceanbase-all-in-one-4.3.5_bp1_20250320.el8.x86_64.tar.gz

sudo chown admin /home/admin/oceanbase-all-in-one-4.3.5_bp1_20250320.el8.x86_64.tar.gz

cd /home/admin

tar -zxvf oceanbase-all-in-one-4.3.5_bp1_20250320.el8.x86_64.tar.gz

cd oceanbase-all-in-one/bin

./install.sh

source ~/.oceanbase-all-in-one/bin/env.sh

sudo systemctl stop firewalld

sudo systemctl disable firewalld

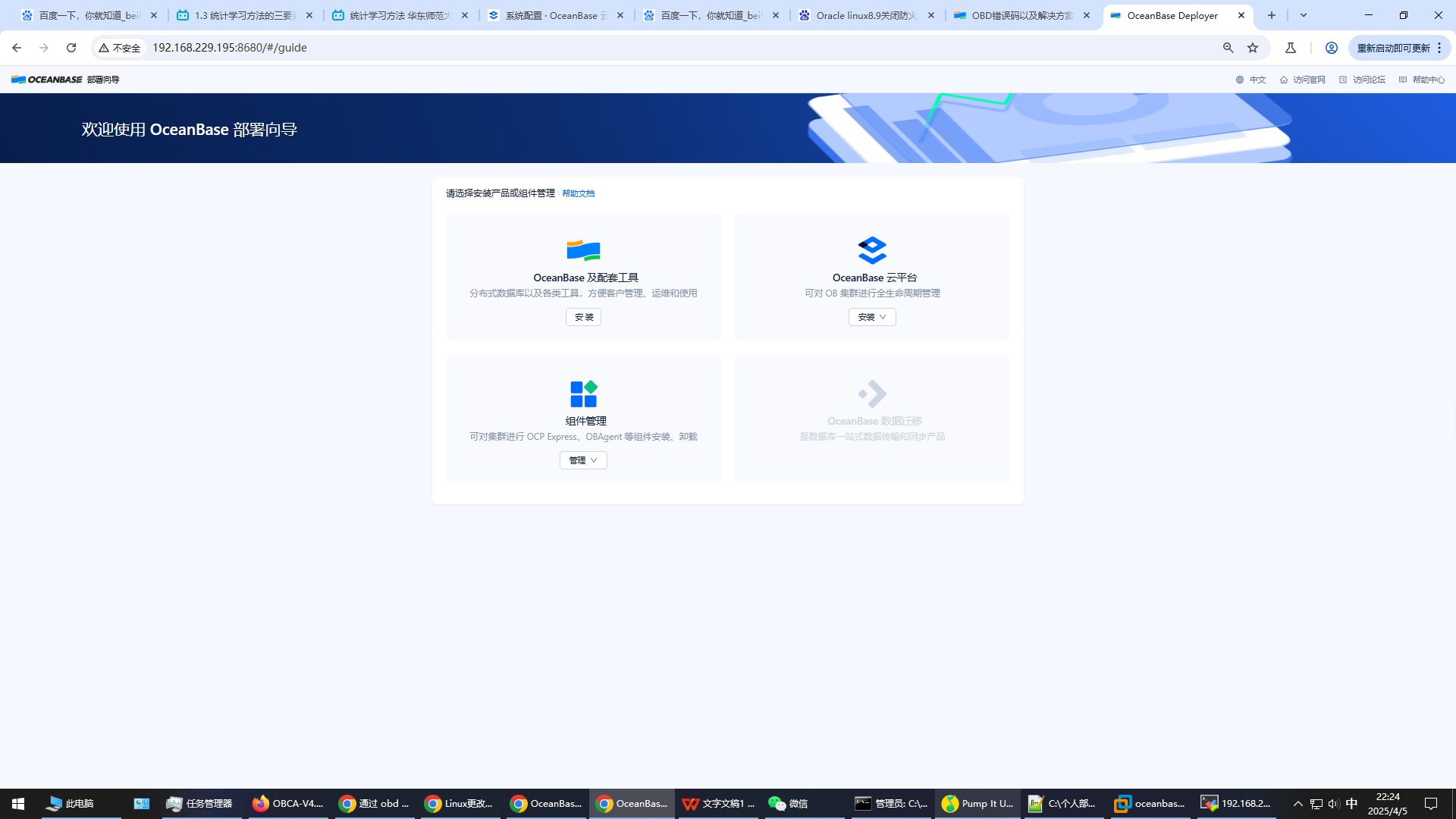

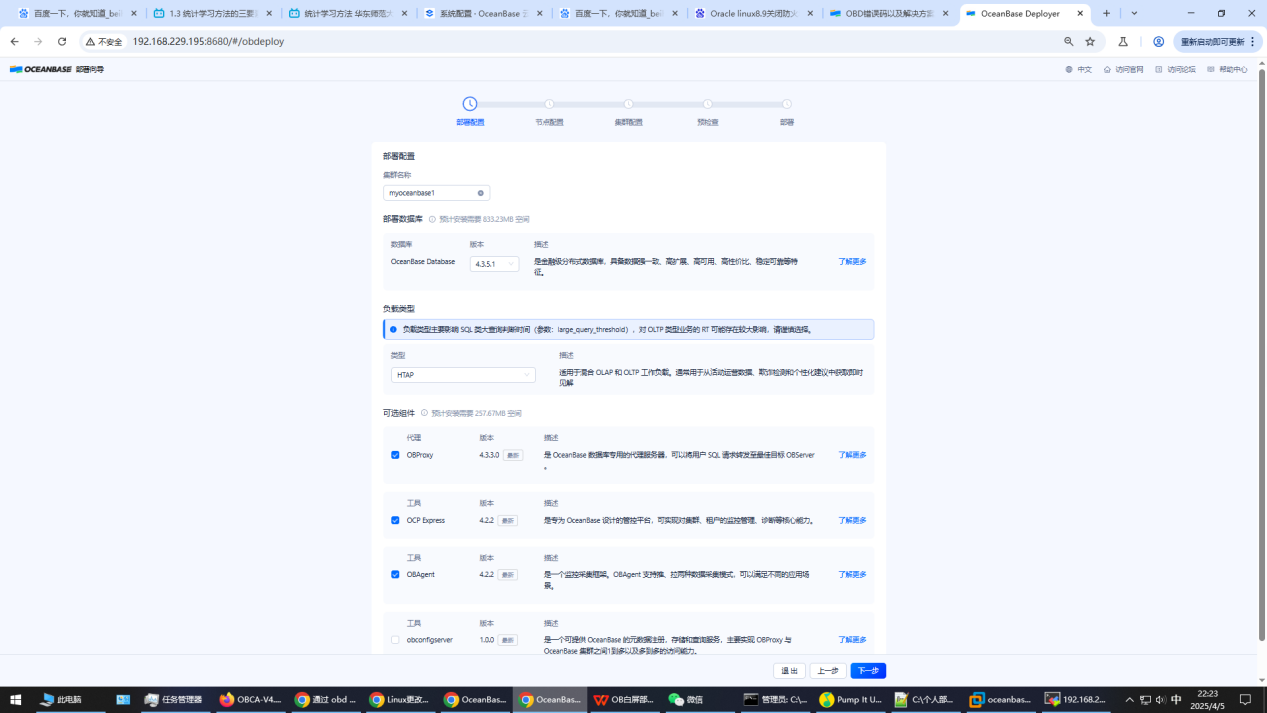

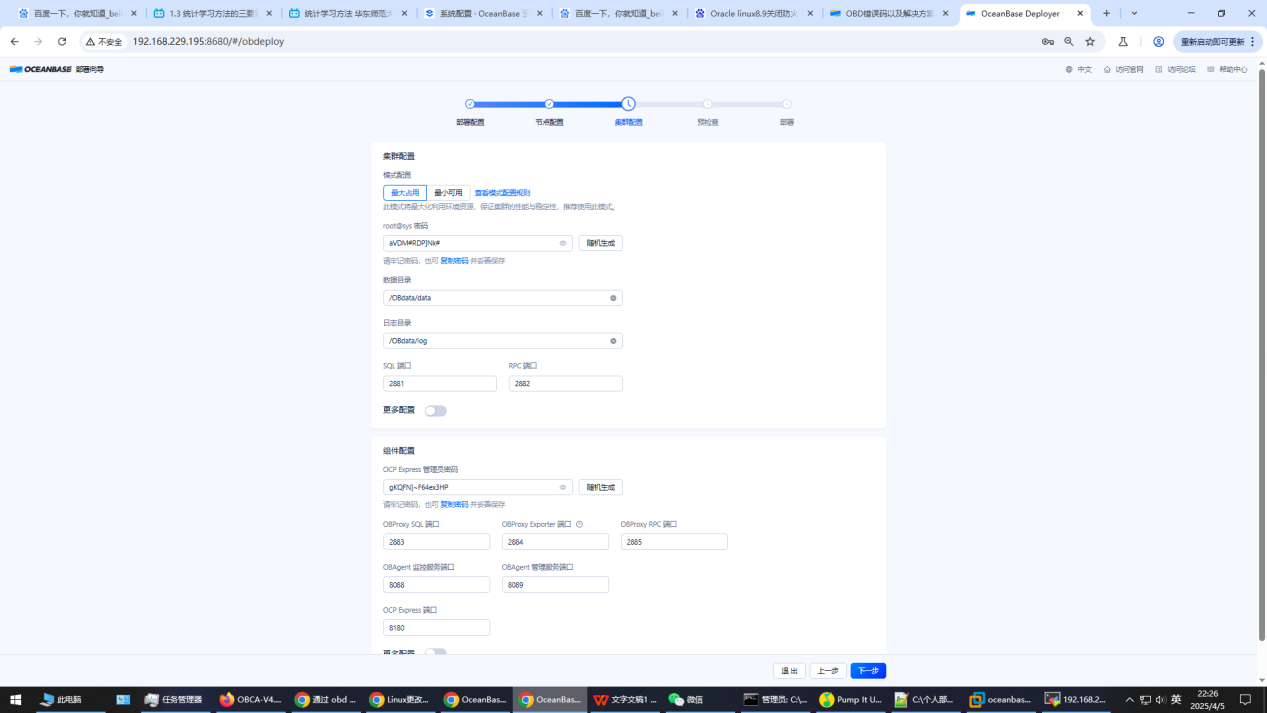

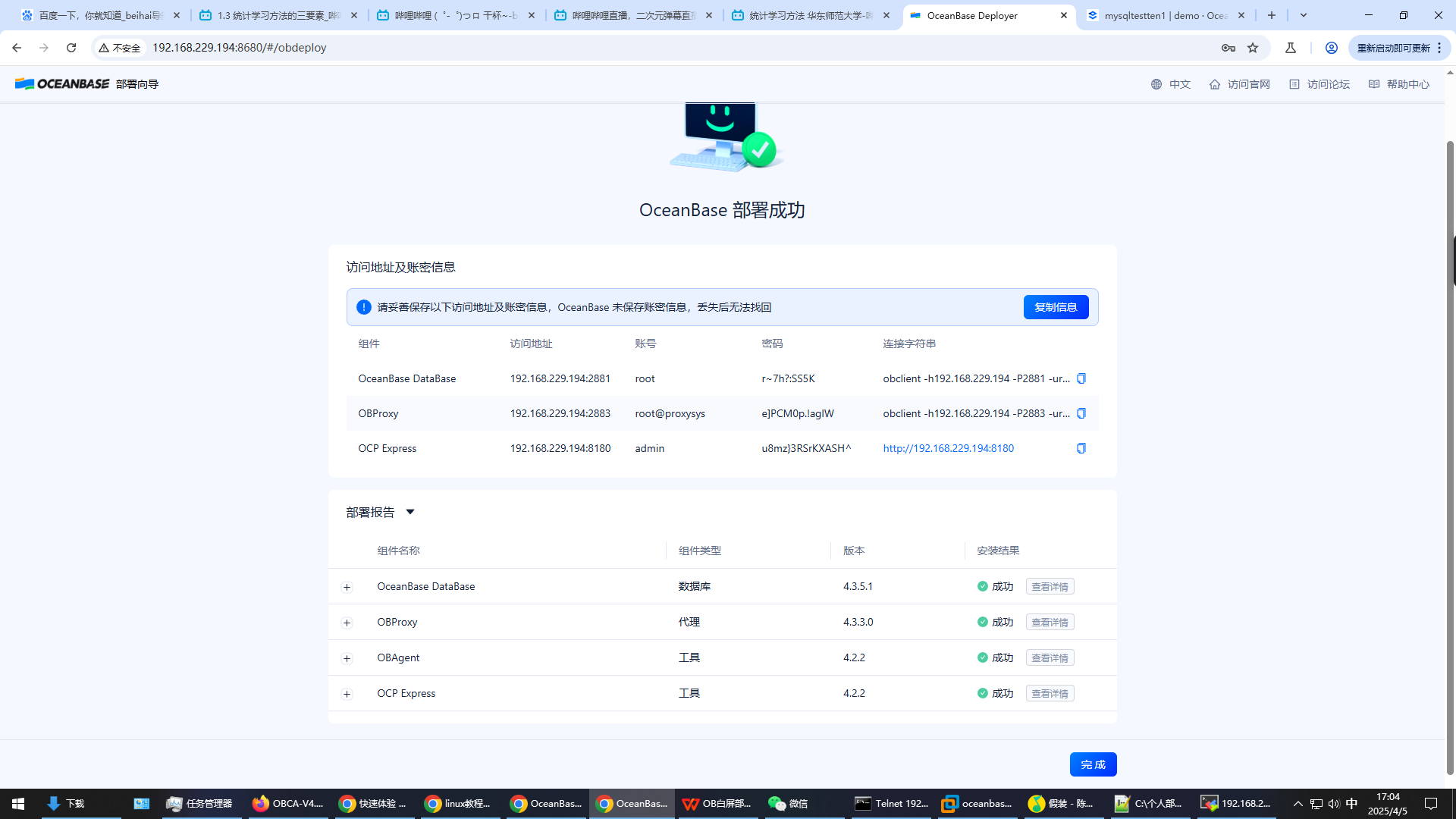

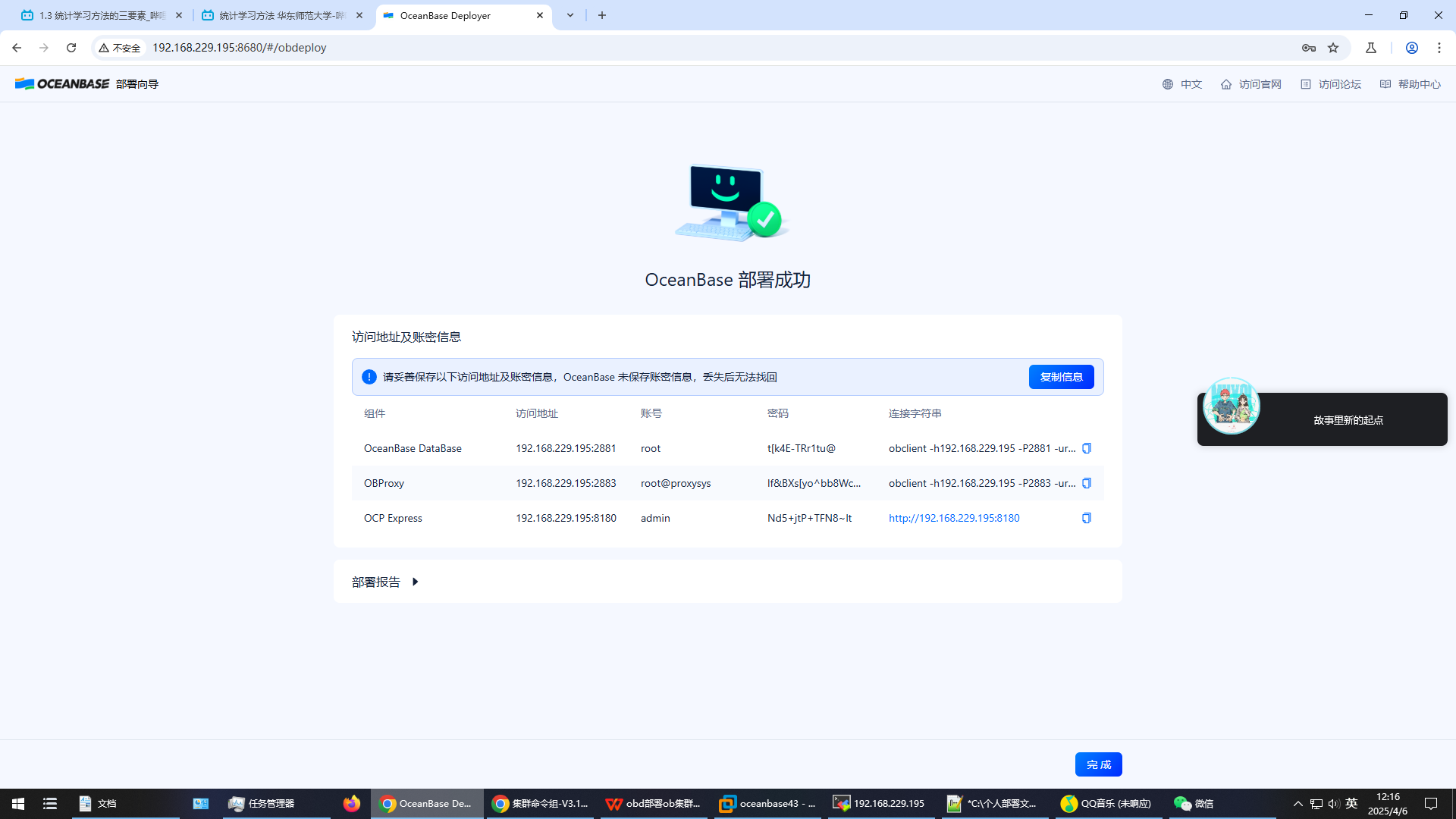

obd web

root@sys password: r~7h?:SS5K

OCP Express administrator: admin, password: u8mz}3RSrKXASH^

OCP Express port: 8180

Data directory: /OBdata/data; log directory: /OBdata/log

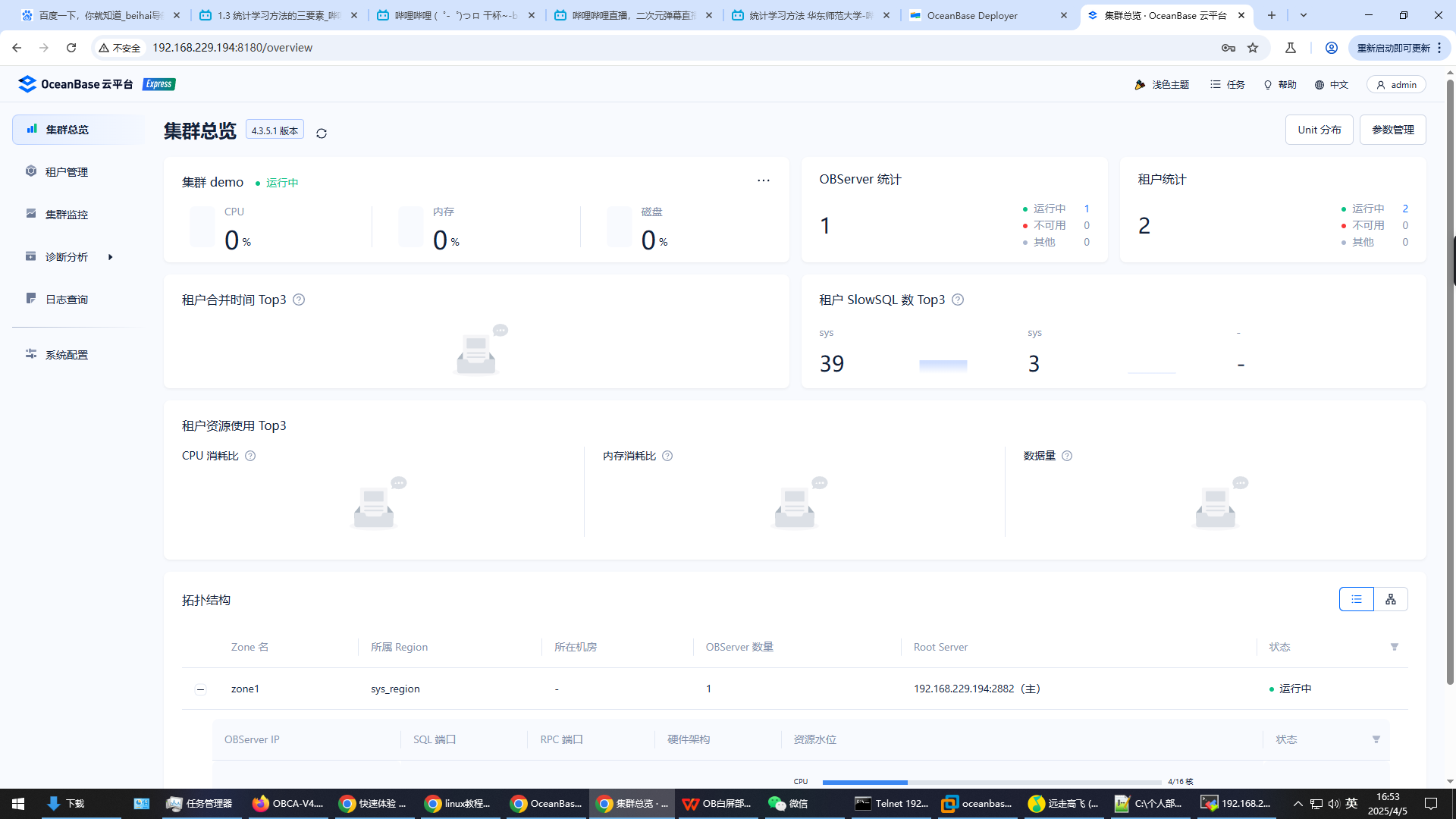

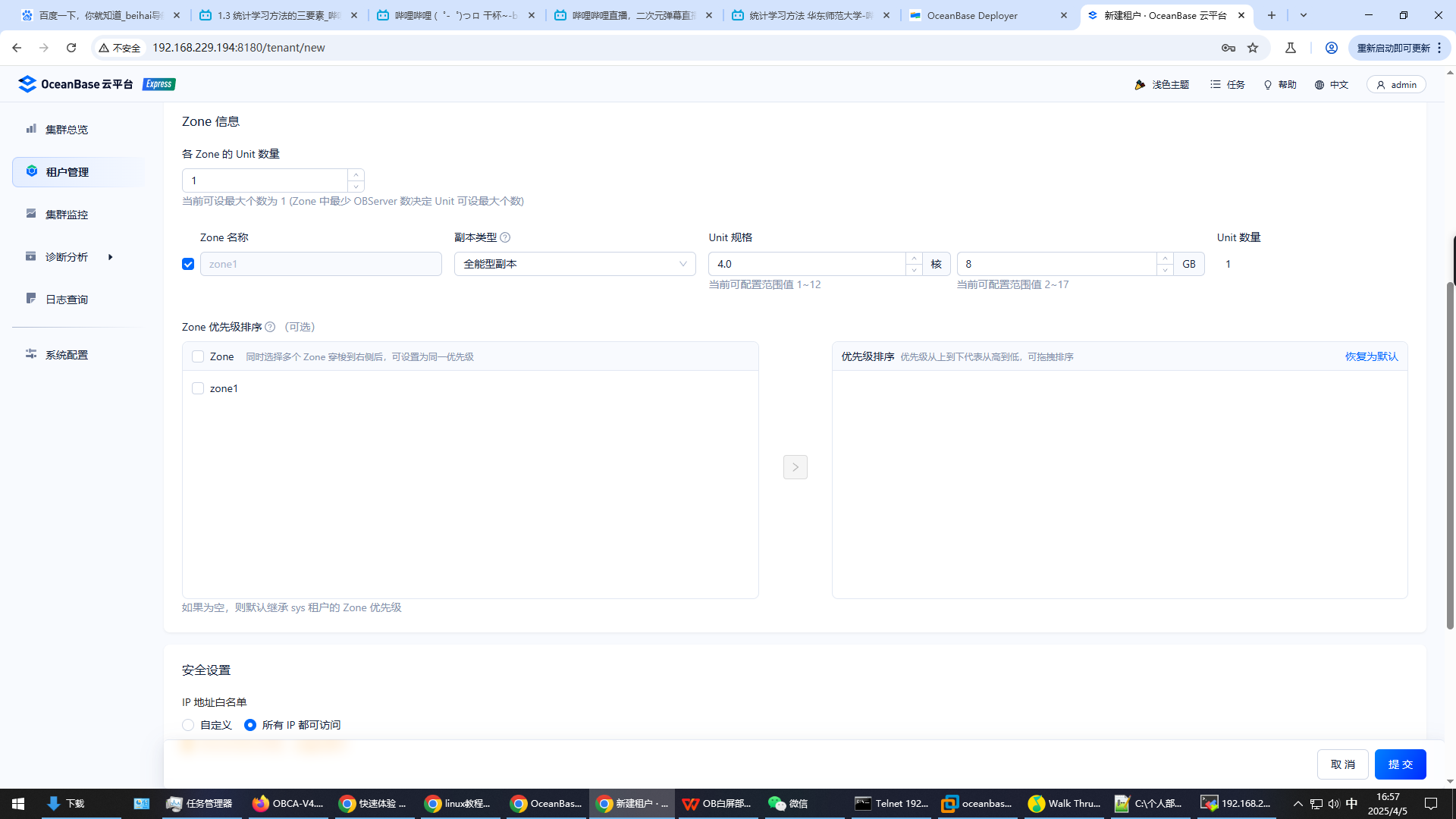

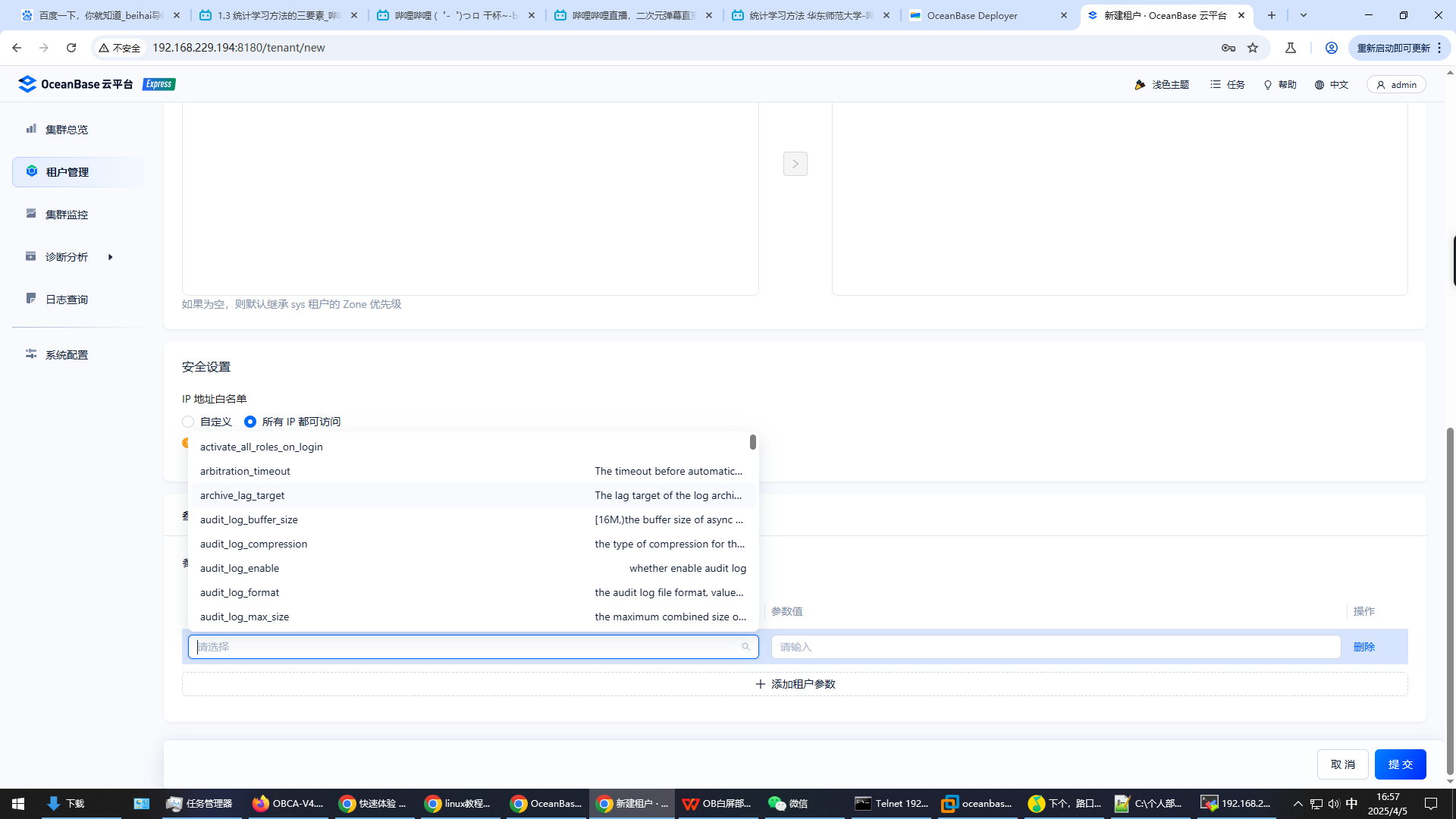

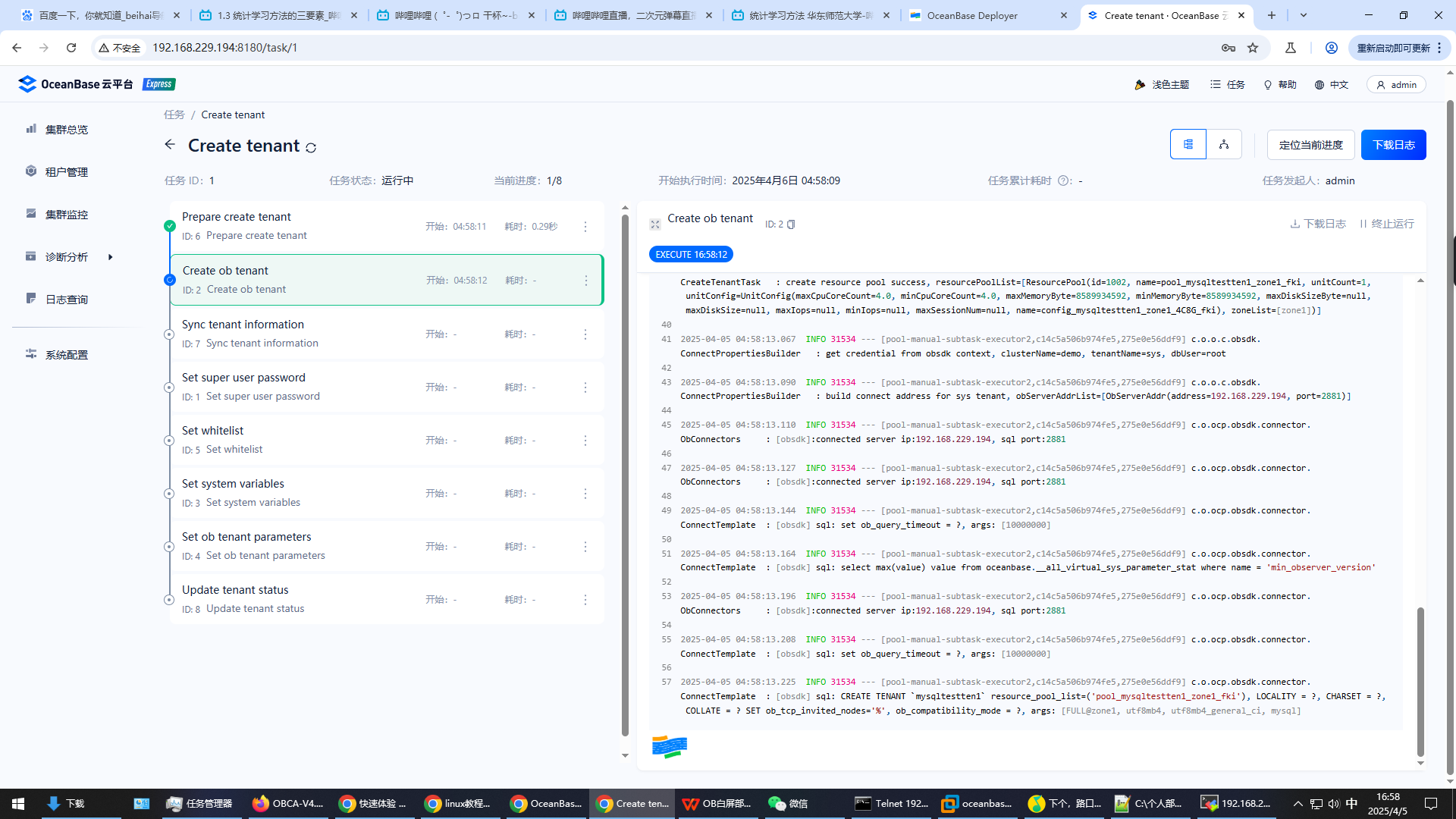

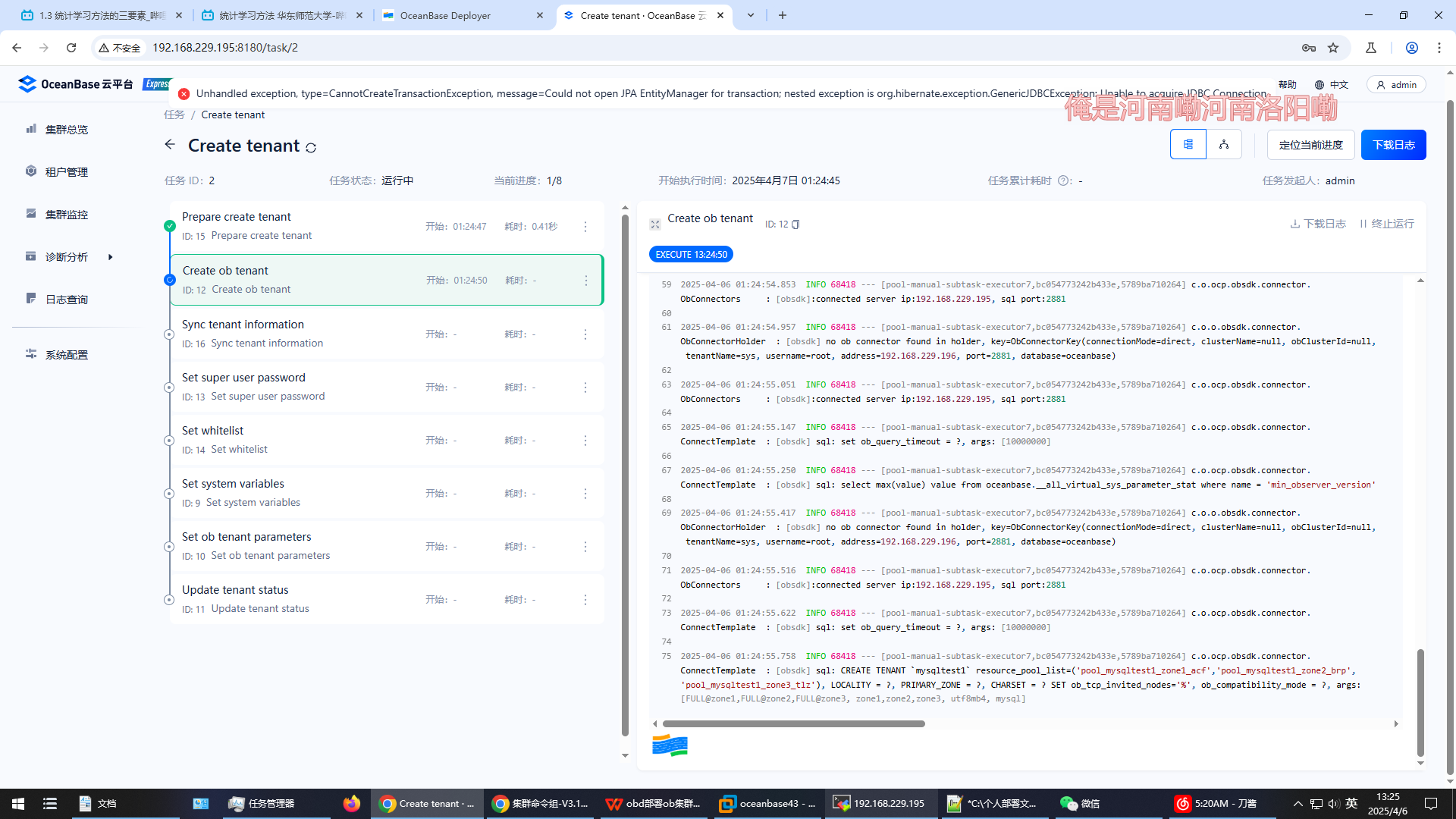

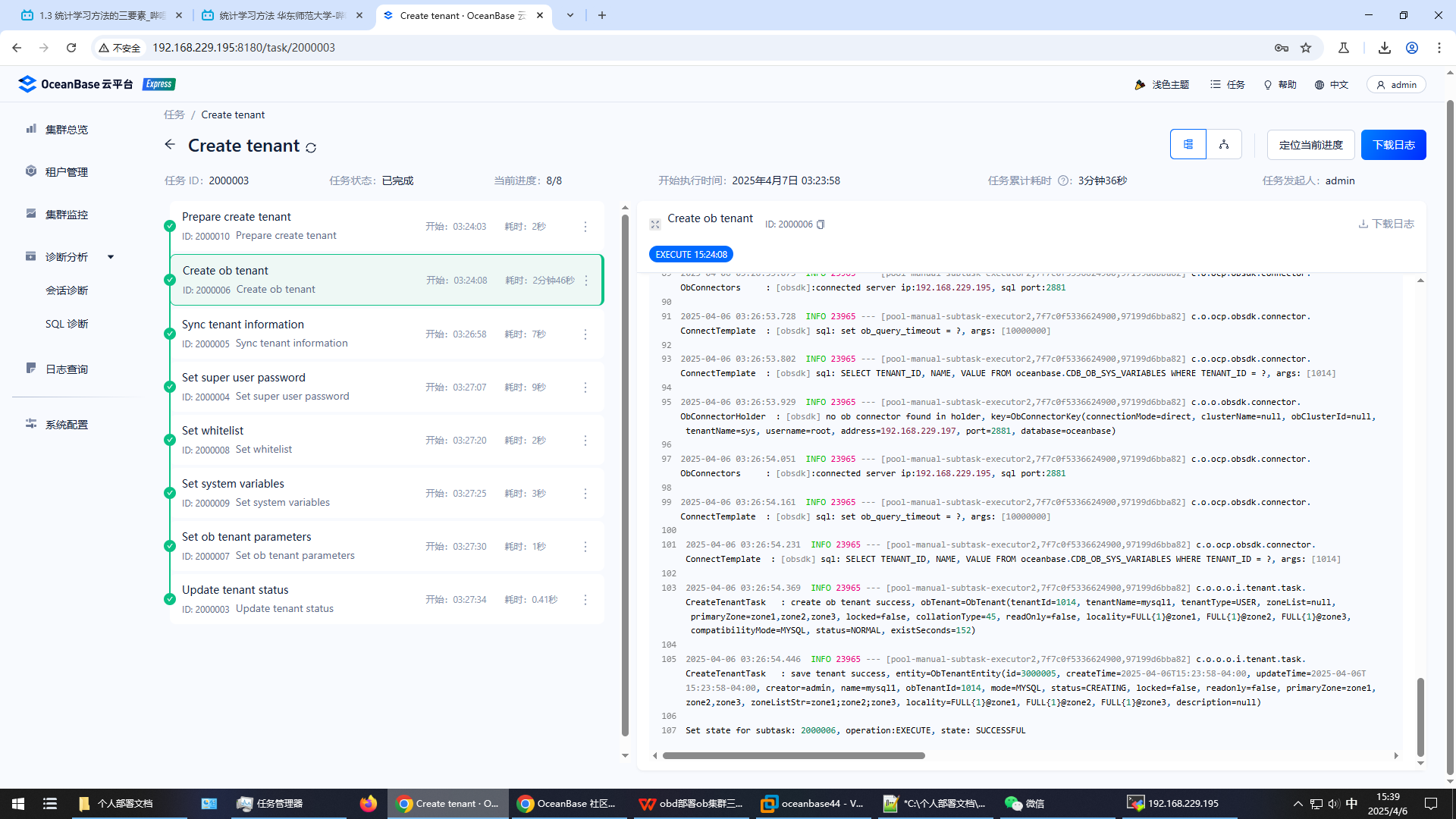

To create a MySQL tenant in ocp-express, click Tenant Management, then Create Tenant.

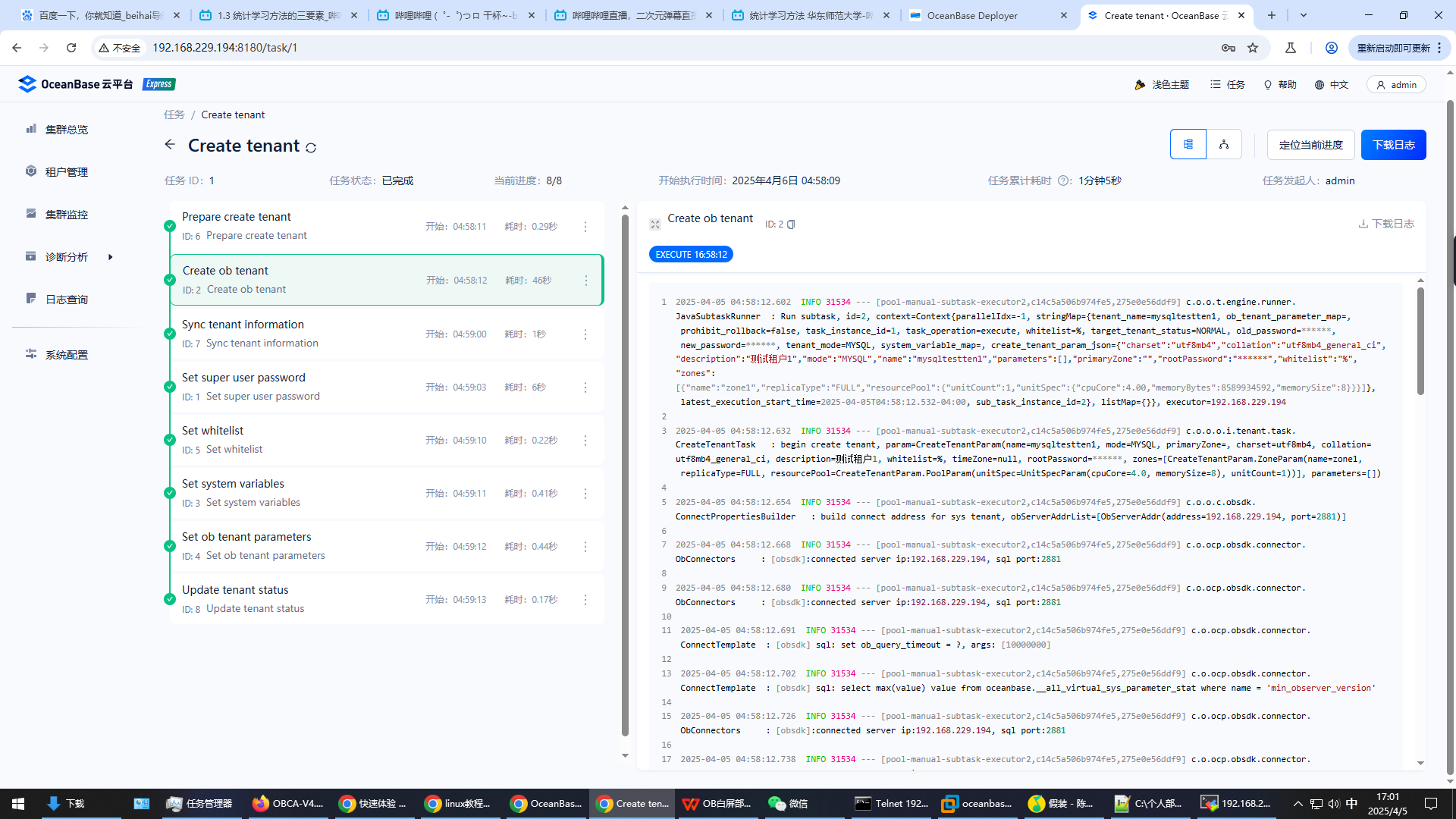

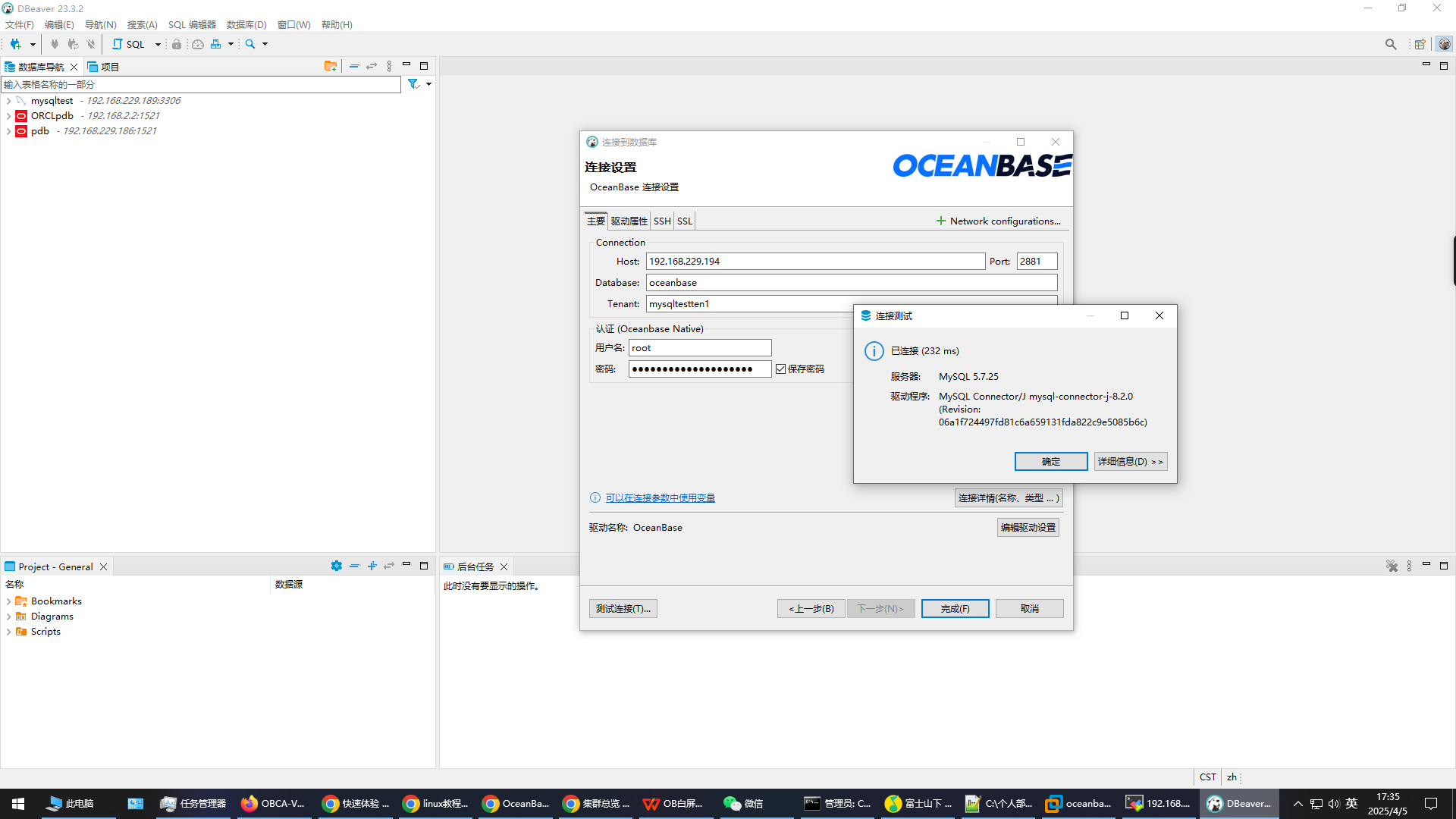

Create the MySQL tenant mysqltestten1

Administrator password: ,IO6mFcmQ#Be^)8I(.E!

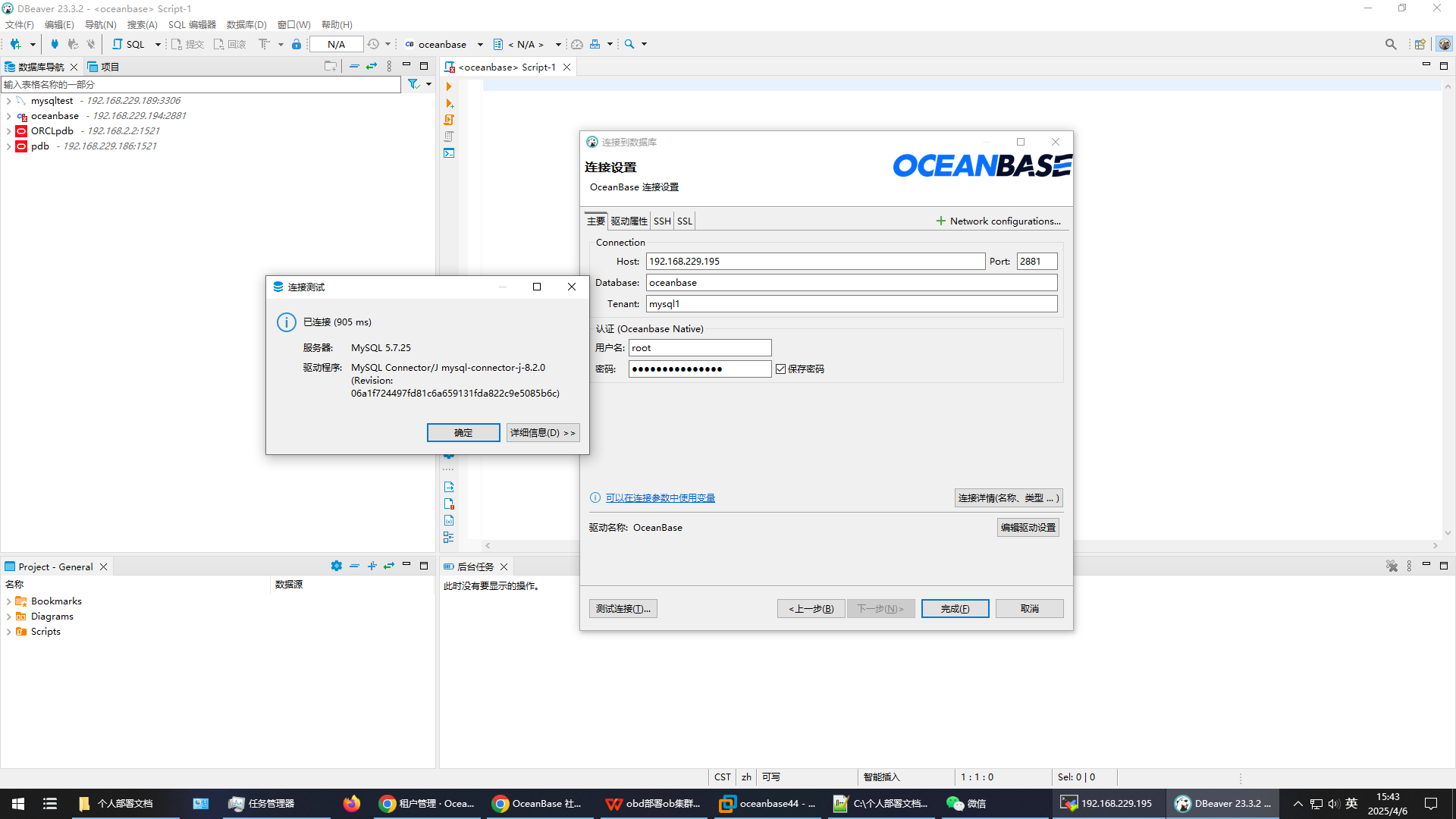

obclient -h192.168.229.194 -P2881 -uroot@mysqltestten1 -p',IO6mFcmQ#Be^)8I(.E!'

obclient -h192.168.229.194 -P2881 -uroot -p'r~7h?:SS5K' -Doceanbase -A

obclient -h192.168.229.194 -P2883 -uroot@proxysys -p'e]PCM0p.!aglW' -Doceanbase -A

http://192.168.229.194:8180 admin u8mz}3RSrKXASH^

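obd cluster stop demo

obd cluster start demo

This fails with the error below, even though port 2883 does not appear to be in use:

[2025-04-05 06:08:55.617] [ERROR] OBD-1001: 192.168.229.194:2883 port is already used

Workaround: restart the cluster several times and reboot the server.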
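One way to double-check whether anything is really listening on the port is to read the kernel's socket table directly. The sketch below parses the /proc/net/tcp format, where column 2 is the local address as HEXIP:HEXPORT and column 4 is the socket state (0A means LISTEN). `port_is_listening` is a hypothetical helper; how obd itself decides that a port is "already used" is not covered here.

```shell
#!/usr/bin/env bash
# Return 0 if a socket in LISTEN state is bound to the given TCP port.
# The second argument is a table in /proc/net/tcp format (default: /proc/net/tcp).
port_is_listening() {
    local port=$1 table=${2:-/proc/net/tcp} hex
    hex=$(printf '%04X' "$port")
    awk -v want="$hex" '
        NR > 1 {
            split($2, local_addr, ":")
            if (toupper(local_addr[2]) == want && $4 == "0A") found = 1
        }
        END { exit !found }
    ' "$table"
}

# Usage (not run here):
#   port_is_listening 2883 && echo "2883 has a listener"
#   port_is_listening 2883 /proc/net/tcp6   # IPv6 listeners live in a separate table
#   ss -ltnp                                # same information, with process names (as root)
```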

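Pitfalls:

- The server firewall must be turned off before the obd web console is reachable.

- When creating a cluster through the obd web console, the data and log directories can be chosen.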

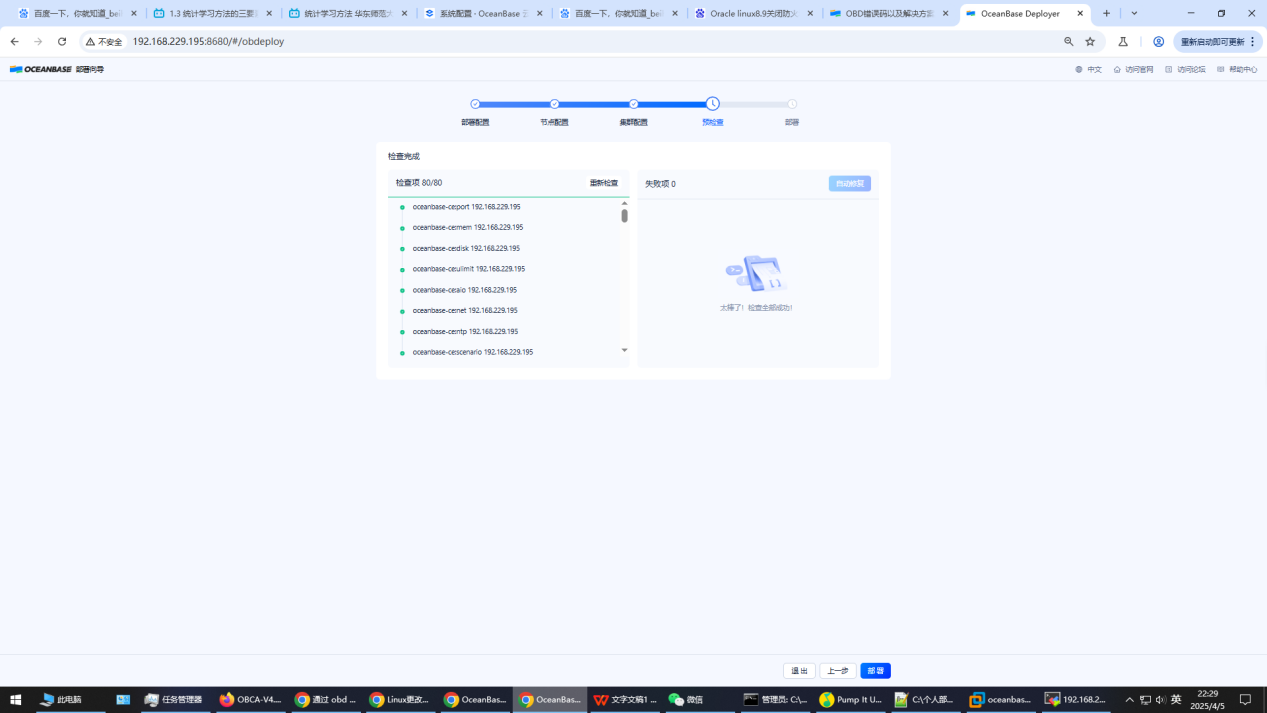

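- Offline (all-in-one) installation of obd as the admin user, deploying the three-node myoceanbase1 cluster (3-1-1) plus ocp-express, obagent, and obproxy-ce with obd, then creating the tenant mysql1

obd only needs to be installed on one node; the data and log directories must be created on all three nodes.

For the zone selection, simply enter the IP address.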

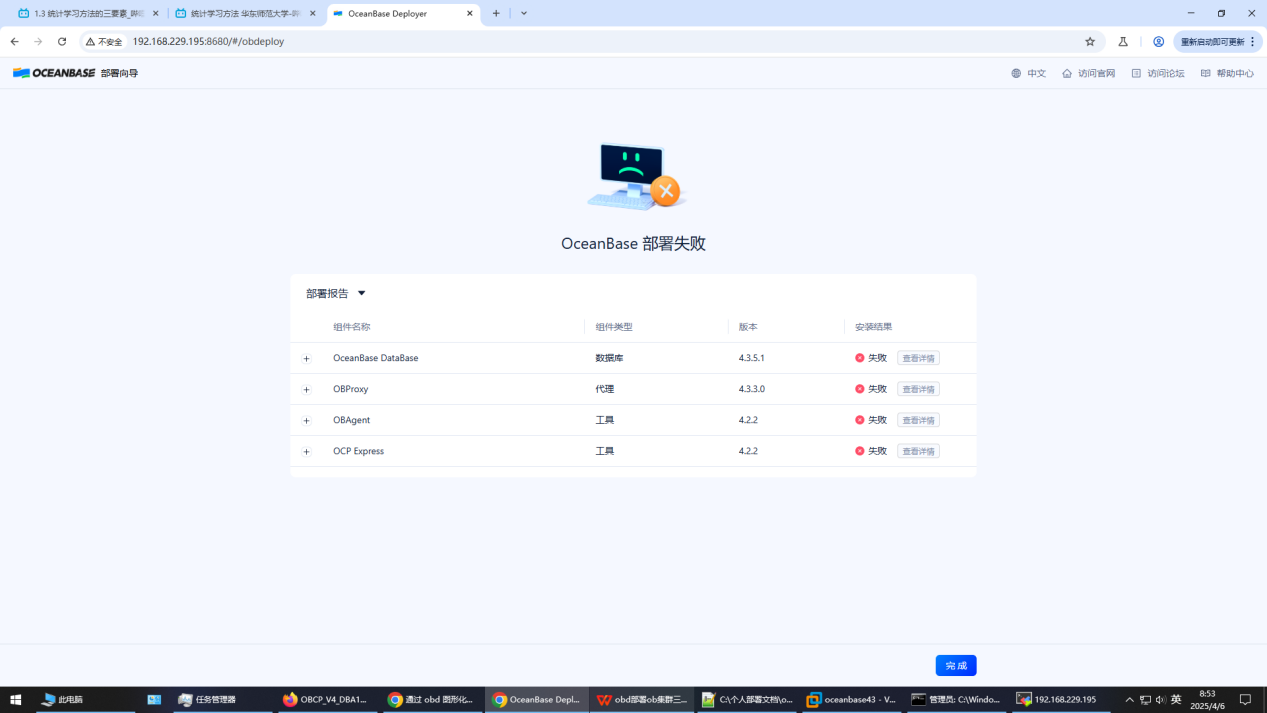

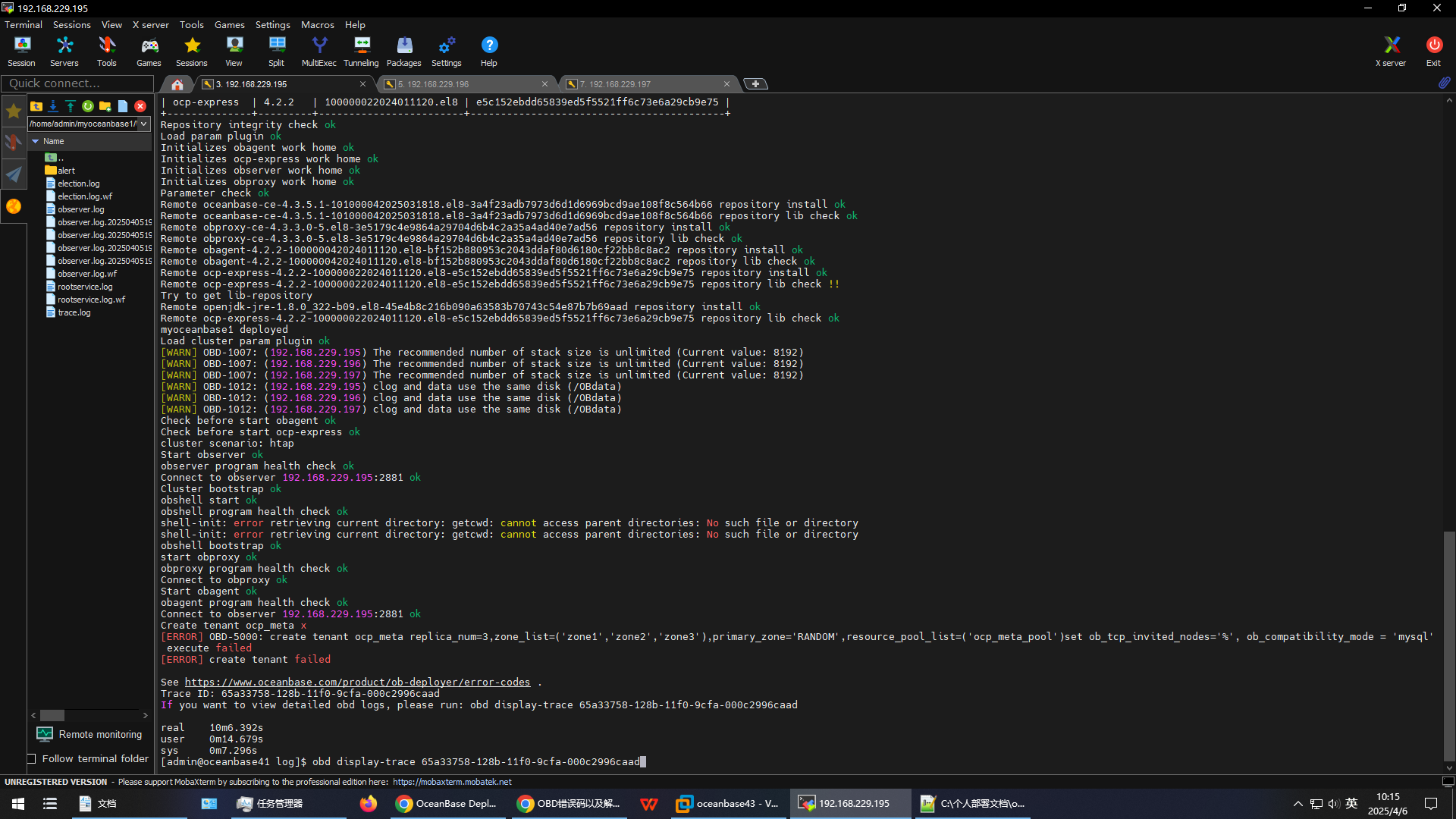

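The cluster deployment failed here; the log error is shown below. Looking up the OBD error code did not help. I then ran this on the command line: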

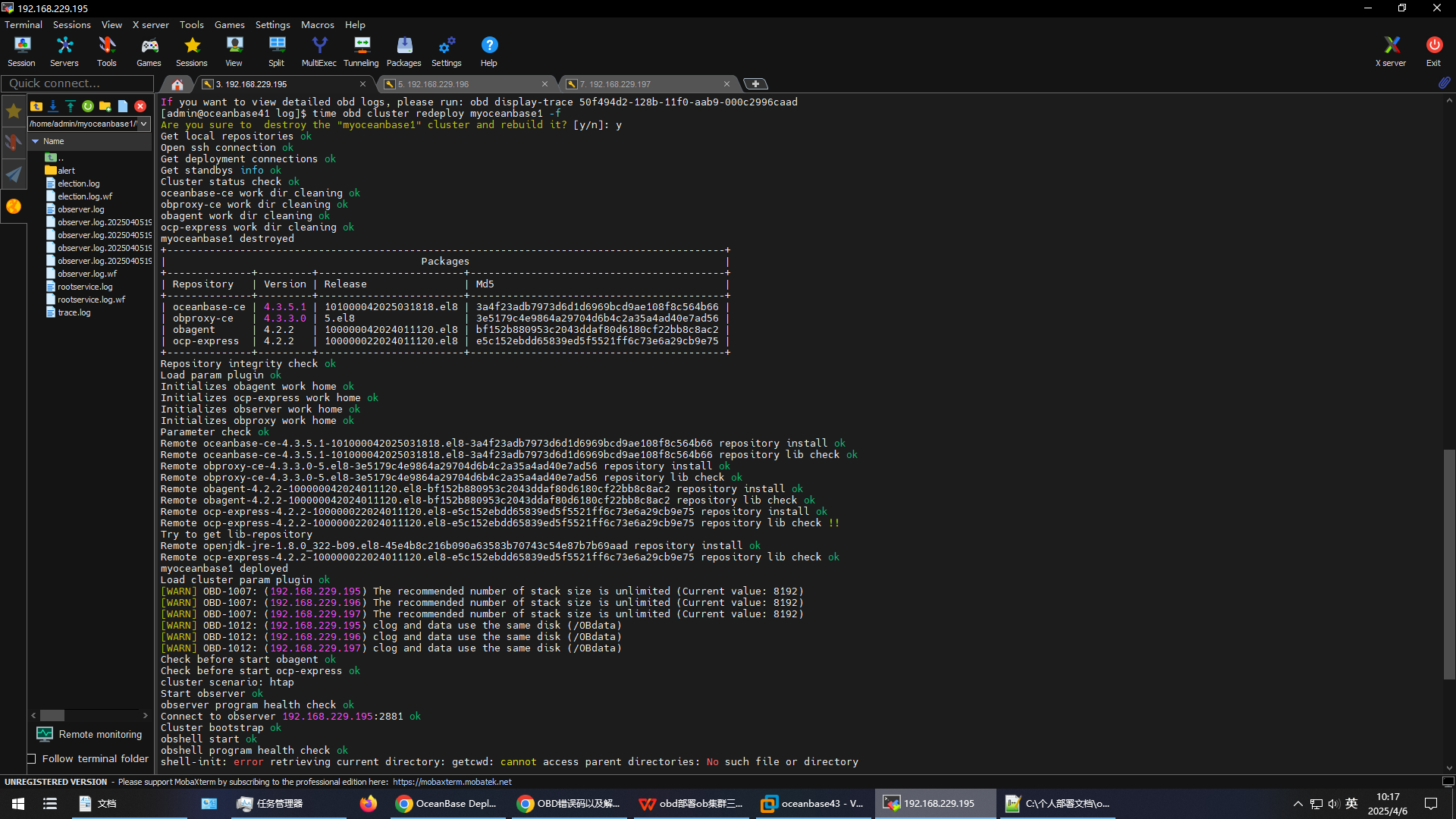

obd cluster redeploy myoceanbase1 -f

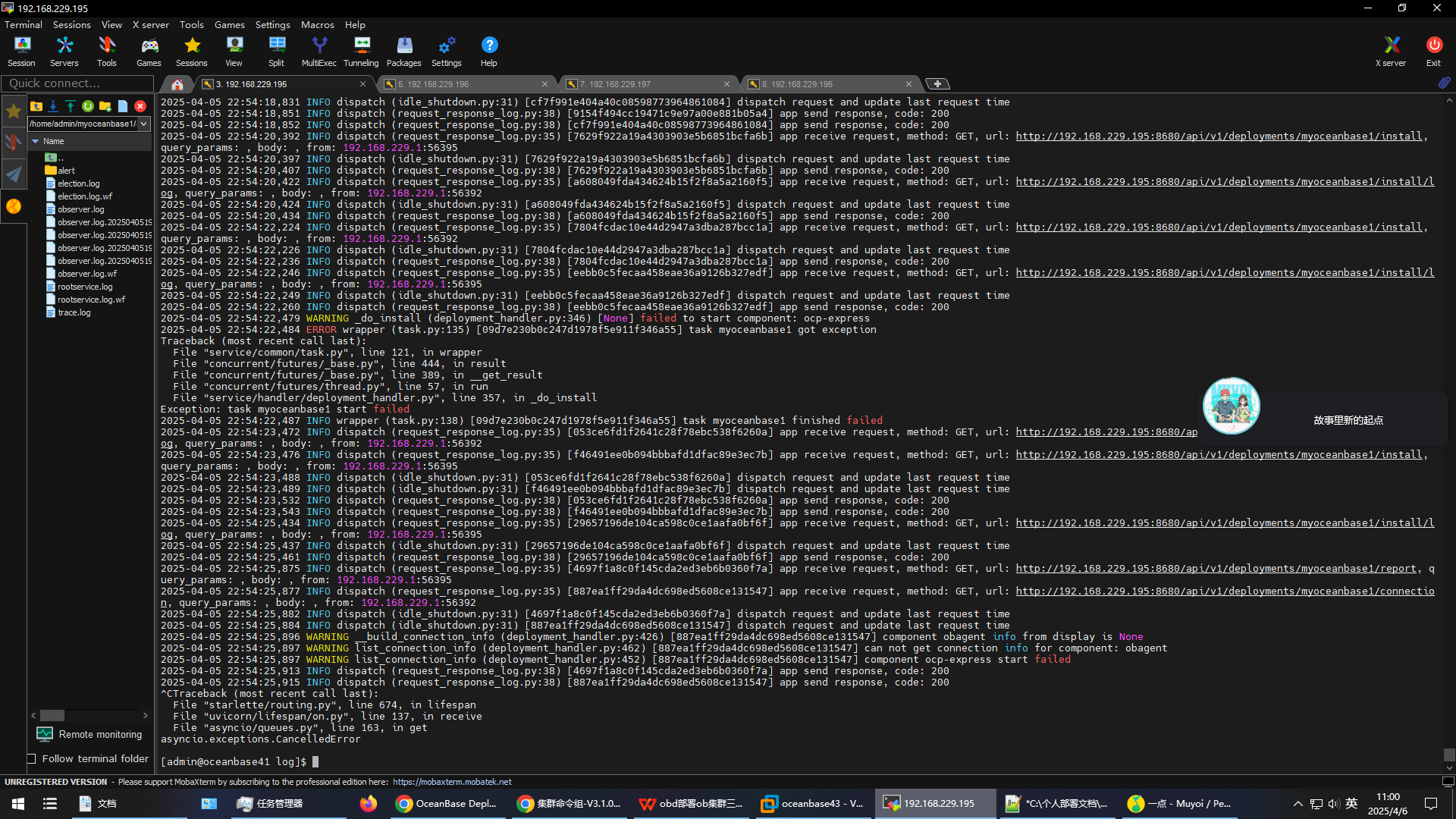

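Only then could the ocp_meta tenant be created, but starting ocp-express failed with another error.

In the end, reinstalling obd was what resolved it.

Creating a user tenant in ocp-express failed yet again; it only succeeded after several attempts.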