Case Development: Fetching Data Statically

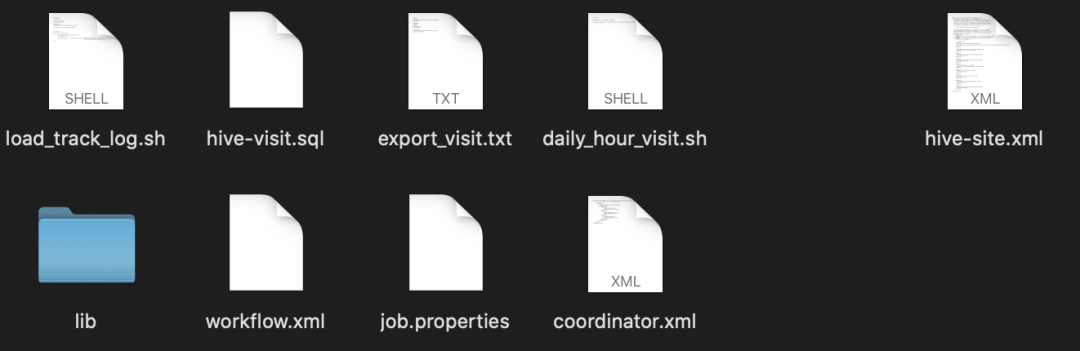

Main files involved in this case:

1. The job.properties file, with the following contents:

```properties
nameNode=hdfs://bigdata-pro-m01.kfk.com:9000
jobTracker=bigdata-pro-m01.kfk.com:8032
queueName=default
oozieAppRoot=user/kfk/oozie-apps
oozieDataRoot=user/kfk/oozie/datas
script=load_track_log.sh
EXEC=export_visit.txt
SQL=hive-visi.sql
shellHive=daily_hour_visit.sh
#oozie.coord.application.path=${nameNode}/${oozieAppRoot}/project
start=2018-10-27T15:00+0800
end=2018-10-27T16:20+0800
oozie.use.system.libpath=true
workflowAppUri=${nameNode}/${oozieAppRoot}/project
oozie.wf.application.path=${nameNode}/${oozieAppRoot}/project
```
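Oozie expands the `${...}` references in job.properties when the job is submitted. As a rough illustration only (this is plain shell, not Oozie's own expansion), the sketch below resolves the path variables to show which HDFS directory the application (workflow.xml, coordinator.xml, the scripts and lib/) has to be uploaded to. Note also that, as written, `oozie.wf.application.path` is active and `oozie.coord.application.path` is commented out, so `-run` submits the workflow directly rather than through the coordinator; swap which line is commented out to have coordinator.xml schedule it.

```shell
#!/bin/bash
# Illustration only: mimics how the job.properties path references resolve.
# The two values below are copied from job.properties.
nameNode=hdfs://bigdata-pro-m01.kfk.com:9000
oozieAppRoot=user/kfk/oozie-apps

# workflowAppUri / oozie.wf.application.path both expand to this directory
workflowAppUri="${nameNode}/${oozieAppRoot}/project"
echo "$workflowAppUri"

# A local project directory (hypothetical path oozie-apps/project) could then be
# uploaded with, e.g.:  hdfs dfs -put oozie-apps/project /user/kfk/oozie-apps/
```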

2. The workflow.xml file

Based on the requirements, we implemented the workflow in two ways:

Approach 1 (fetching data statically):

(1) Data loading (load data) -> shell action

The load_track_log.sh script:

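```shell
#!/bin/bash
# bash rather than sh: ${var:offset:length} substring expansion is a bash feature
. /etc/profile
## track log dir path
LOD_DIR=/opt/track/
## hive home
HIVE_HOME=/opt/modules/apache-hive-2.3.6-bin
# yesterday's date as YYYYMMDD; also the name of the log sub-directory
yesterday=`date -d "1 day ago" +"%Y%m%d"`
cd $LOD_DIR || exit 1
for line in `ls $yesterday`;
do
    # the file name starts with YYYYMMDDHH: take the day and the hour
    date=${line:0:4}${line:4:2}${line:6:2}
    hour=${line:8:2}
    $HIVE_HOME/bin/hive -e "load data local inpath '$LOD_DIR/$yesterday/$line' overwrite into table track.track_log partition(p_day='$date',p_hour='$hour')"
done
```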
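To check the loop logic without Hive or /opt/track, here is a dry-run sketch: it builds a throwaway directory, assumes (hypothetically) one log file per hour named `YYYYMMDDHH`, and prints the `LOAD DATA` statements instead of executing them.

```shell
#!/bin/bash
# Dry run of the load_track_log.sh loop: prints the Hive statements instead of
# running them. The temp dir and file names are hypothetical stand-ins.
set -eu
LOD_DIR=$(mktemp -d)                           # stands in for /opt/track/
yesterday=$(date -d "1 day ago" +"%Y%m%d")     # GNU date syntax
mkdir -p "$LOD_DIR/$yesterday"
# Assumption: one file per hour, named YYYYMMDDHH
touch "$LOD_DIR/$yesterday/${yesterday}00" "$LOD_DIR/$yesterday/${yesterday}01"

cd "$LOD_DIR"
for line in $(ls "$yesterday"); do
  date=${line:0:8}     # same result as ${line:0:4}${line:4:2}${line:6:2}
  hour=${line:8:2}
  echo "load data local inpath '$LOD_DIR/$yesterday/$line' overwrite into table track.track_log partition(p_day='$date',p_hour='$hour')"
done > "$LOD_DIR/statements.txt"

cat "$LOD_DIR/statements.txt"
```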

(2) Hive data analysis -> hive action

The hive-visi.sql script:

```sql
use track;
truncate table daily_hour_visit;
insert into daily_hour_visit
select date, hour, count(url) pv, count(distinct guid) uv
from track_log
where date = '${YESTERDAY}'
group by date, hour;
```
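For each (date, hour) of the given day, the query counts page views (pv, every row with a url) and unique visitors (uv, distinct guid values). The sketch below reproduces that aggregation with awk on a tiny hypothetical sample, purely to make the pv/uv semantics concrete; it is not how Hive executes the query, and it ignores NULL handling.

```shell
#!/bin/bash
# Reproduces the pv/uv aggregation of hive-visi.sql on a hypothetical sample.
# Columns (tab-separated): date, hour, url, guid. NULL handling is ignored.
YESTERDAY=20181026
sample=$(mktemp)
out=$(mktemp)
printf '%s\t%s\t%s\t%s\n' \
  20181026 15 /index g1 \
  20181026 15 /list g1 \
  20181026 15 /index g2 \
  20181026 16 /index g3 \
  20181025 15 /index g9 > "$sample"

awk -F'\t' -v d="$YESTERDAY" '
  $1 == d {                             # where date = YESTERDAY
    key = $1 "\t" $2                    # group by date, hour
    pv[key]++                           # count(url)
    if (!seen[key, $4]++) uv[key]++     # count(distinct guid)
  }
  END { for (k in pv) print k "\t" pv[k] "\t" uv[k] }
' "$sample" | sort > "$out"

cat "$out"
```

Hour 15 has three rows but only two distinct guids, so it yields pv=3, uv=2; the 20181025 row is filtered out by the date condition.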

(3) Sqoop data export -> sqoop action

The export_visit.txt options file:

```text
export
--connect
jdbc:mysql://bigdata-pro-m01.kfk.com:3306/track
--username
root
--password
12345678
--table
daily_hour_visit
--num-mappers
1
--export-dir
/user/hive/warehouse/track.db/daily_hour_visit
--fields-terminated-by
'\t'
```
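export_visit.txt uses Sqoop's options-file format: the tool name and each option and value sit on their own line, and Sqoop reads the file via `--options-file`. A minimal sketch, assuming you want to try the same export by hand outside Oozie: it writes the file to a temporary directory and prints the command that would consume it (actually running it needs Sqoop plus the MySQL JDBC driver).

```shell
#!/bin/bash
# Writes export_visit.txt in Sqoop's options-file format (one token per line)
# and prints the command that would use it. The temp directory is arbitrary.
workdir=$(mktemp -d)
cat > "$workdir/export_visit.txt" <<'EOF'
export
--connect
jdbc:mysql://bigdata-pro-m01.kfk.com:3306/track
--username
root
--password
12345678
--table
daily_hour_visit
--num-mappers
1
--export-dir
/user/hive/warehouse/track.db/daily_hour_visit
--fields-terminated-by
'\t'
EOF

echo "sqoop --options-file $workdir/export_visit.txt"
```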

3. The coordinator.xml file

```xml
<coordinator-app name="cron-coord" frequency="${coord:minutes(10)}" start="${start}" end="${end}" timezone="GMT+0800"
                 xmlns="uri:oozie:coordinator:0.4">
    <action>
        <workflow>
            <app-path>${workflowAppUri}</app-path>
            <configuration>
                <property>
                    <name>jobTracker</name>
                    <value>${jobTracker}</value>
                </property>
                <property>
                    <name>nameNode</name>
                    <value>${nameNode}</value>
                </property>
                <property>
                    <name>queueName</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
        </workflow>
    </action>
</coordinator-app>
```
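With `frequency="${coord:minutes(10)}"`, start=2018-10-27T15:00+0800 and end=2018-10-27T16:20+0800, the coordinator creates one workflow run per 10-minute slot. The arithmetic sketch below lists those nominal times, assuming Oozie treats `end` as exclusive (so 16:20 itself is not scheduled).

```shell
#!/bin/bash
# Lists the nominal times the coordinator would materialize, assuming
# start=15:00, end=16:20 (same day, +0800), frequency=10 minutes, end exclusive.
start_min=$((15 * 60 + 0))
end_min=$((16 * 60 + 20))
freq=10
runs=0
for ((t = start_min; t < end_min; t += freq)); do
  printf '2018-10-27T%02d:%02d+0800\n' $((t / 60)) $((t % 60))
  runs=$((runs + 1))
done
echo "total runs: $runs"
```

This prints 15:00, 15:10, ..., 16:10, i.e. 8 runs.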

4. The lib directory (holds the MySQL JDBC driver jar needed by the Sqoop export)

5. Note:

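When a shell action depends on local services or local data, those services and data must be present on the DataNode (worker) nodes: the shell action runs on whichever node the cluster schedules it on, not necessarily the node you submitted the job from.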
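A simple way to catch this before submitting is to verify, on each worker node, that the local paths the shell actions touch actually exist there. The sketch below is a hypothetical helper (`check_paths` is not part of Oozie); the paths come from load_track_log.sh and daily_hour_visit.sh.

```shell
#!/bin/bash
# Hypothetical pre-flight check: verifies that local paths used by the shell
# actions exist on *this* node. Run it on every worker node.
check_paths() {
  local missing=0
  for p in "$@"; do
    if [ ! -e "$p" ]; then
      echo "$HOSTNAME: missing $p" >&2
      missing=$((missing + 1))
    fi
  done
  return "$missing"   # 0 means every path was found
}

# Paths taken from load_track_log.sh and daily_hour_visit.sh
check_paths /opt/track /opt/modules/apache-hive-2.3.6-bin/bin/hive && echo "$HOSTNAME: OK"
```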

6. Command to submit and run the job:

```shell
bin/oozie job -oozie http://bigdata-pro-m01.kfk.com:11000/oozie -config oozie-apps/project/job.properties -run
```

Case Development: Fetching Data Dynamically

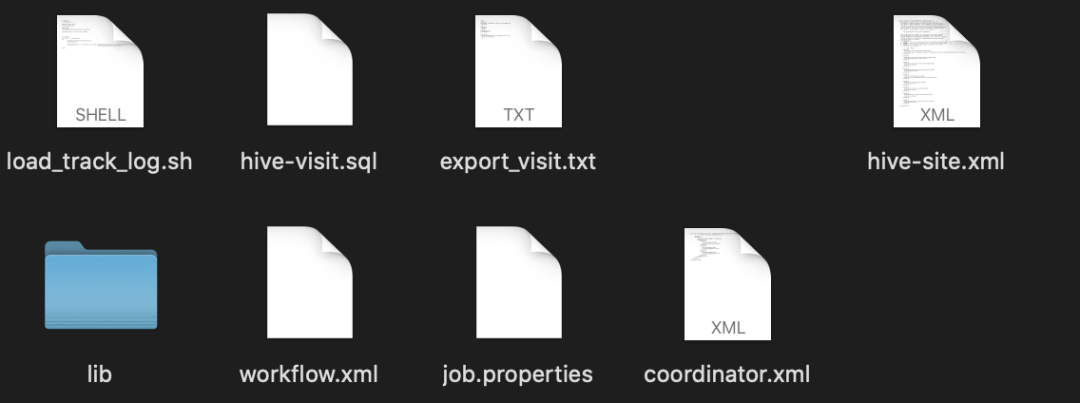

Main files involved in this case:

1. The job.properties file, with the following contents:

```properties
nameNode=hdfs://bigdata-pro-m01.kfk.com:9000
jobTracker=bigdata-pro-m01.kfk.com:8032
queueName=default
oozieAppRoot=user/kfk/oozie-apps
oozieDataRoot=user/kfk/oozie/datas
script=load_track_log.sh
EXEC=export_visit.txt
SQL=hive-visi.sql
shellHive=daily_hour_visit.sh
#oozie.coord.application.path=${nameNode}/${oozieAppRoot}/project
start=2018-10-27T15:00+0800
end=2018-10-27T16:20+0800
oozie.use.system.libpath=true
workflowAppUri=${nameNode}/${oozieAppRoot}/project
oozie.wf.application.path=${nameNode}/${oozieAppRoot}/project
```

2. The workflow.xml file

Based on the requirements, we implemented the workflow in two ways:

Approach 2 (fetching data dynamically, which solves the problem of working out the previous day's date at run time):

(1) Data loading (load data) -> shell action

The load_track_log.sh script:

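```shell
#!/bin/bash
# bash rather than sh: ${var:offset:length} substring expansion is a bash feature
. /etc/profile
## track log dir path
LOD_DIR=/opt/track/
## hive home
HIVE_HOME=/opt/modules/apache-hive-2.3.6-bin
# yesterday's date as YYYYMMDD; also the name of the log sub-directory
yesterday=`date -d "1 day ago" +"%Y%m%d"`
cd $LOD_DIR || exit 1
for line in `ls $yesterday`;
do
    # the file name starts with YYYYMMDDHH: take the day and the hour
    date=${line:0:4}${line:4:2}${line:6:2}
    hour=${line:8:2}
    $HIVE_HOME/bin/hive -e "load data local inpath '$LOD_DIR/$yesterday/$line' overwrite into table track.track_log partition(p_day='$date',p_hour='$hour')"
done
```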

(2) Hive data analysis -> shell action

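This step combines two files, daily_hour_visit.sh and hive-visi.sql: the shell script computes yesterday's date and passes it into the SQL script.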

The daily_hour_visit.sh script:

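```shell
#!/bin/sh
# compute yesterday's date (YYYYMMDD) each time the action runs
yesterday=`date -d "1 day ago" +"%Y%m%d"`
# hand it to the HiveQL script as the hiveconf variable "yesterday"
/opt/modules/apache-hive-2.3.6-bin/bin/hive --hiveconf yesterday=${yesterday} -f $
```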
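Because the date is computed inside the shell action every time it runs, the workflow no longer needs a hard-coded date, which is the point of Approach 2. The dry-run sketch below computes the date the same way and prints the Hive command instead of running it; it assumes the file passed to `-f` is hive-visi.sql, the script this step pairs with.

```shell
#!/bin/bash
# Dry run of daily_hour_visit.sh: computes yesterday's date the same way and
# prints the Hive command instead of executing it.
# Assumption: the SQL file given to -f is hive-visi.sql.
yesterday=$(date -d "1 day ago" +"%Y%m%d")   # GNU date; e.g. 20181026 on 2018-10-27
cmd=(/opt/modules/apache-hive-2.3.6-bin/bin/hive --hiveconf "yesterday=${yesterday}" -f hive-visi.sql)
printf '%s ' "${cmd[@]}"; echo
```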

The hive-visi.sql script:

```sql
use track;
truncate table daily_hour_visit;
insert into daily_hour_visit
select date, hour, count(url) pv, count(distinct guid) uv
from track_log
where date = '${hiveconf:yesterday}'
group by date, hour;
```
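Before executing the script, Hive replaces `${hiveconf:yesterday}` with the value supplied through `--hiveconf yesterday=...`. The snippet below only emulates that substitution with sed (it is not Hive's mechanism), so you can preview the final WHERE clause for a hypothetical date without a Hive installation.

```shell
#!/bin/bash
# Illustration only: emulates Hive's ${hiveconf:yesterday} substitution with sed
# to preview the final SQL. The date value is hypothetical.
yesterday=20181026
sql="select date,hour,count(url) pv,count(distinct guid) uv from track_log where date='\${hiveconf:yesterday}' group by date,hour;"
# in a basic regex, a mid-pattern '$' and '{' are literal characters
preview=$(printf '%s\n' "$sql" | sed "s/\${hiveconf:yesterday}/${yesterday}/g")
echo "$preview"
```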

(3) Sqoop data export -> sqoop action

The export_visit.txt options file:

```text
export
--connect
jdbc:mysql://bigdata-pro-m01.kfk.com:3306/track
--username
root
--password
12345678
--table
daily_hour_visit
--num-mappers
1
--export-dir
/user/hive/warehouse/track.db/daily_hour_visit
--fields-terminated-by
'\t'
```

3. The coordinator.xml file

```xml
<coordinator-app name="cron-coord" frequency="${coord:minutes(10)}" start="${start}" end="${end}" timezone="GMT+0800"
                 xmlns="uri:oozie:coordinator:0.4">
    <action>
        <workflow>
            <app-path>${workflowAppUri}</app-path>
            <configuration>
                <property>
                    <name>jobTracker</name>
                    <value>${jobTracker}</value>
                </property>
                <property>
                    <name>nameNode</name>
                    <value>${nameNode}</value>
                </property>
                <property>
                    <name>queueName</name>
                    <value>${queueName}</value>
                </property>
            </configuration>
        </workflow>
    </action>
</coordinator-app>
```

4. The lib directory (holds the MySQL JDBC driver jar needed by the Sqoop export)

5. Note:

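When a shell action depends on local services or local data, those services and data must be present on the DataNode (worker) nodes: the shell action runs on whichever node the cluster schedules it on, not necessarily the node you submitted the job from.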

6. Command to submit and run the job:

```shell
bin/oozie job -oozie http://bigdata-pro-m01.kfk.com:11000/oozie -config oozie-apps/project/job.properties -run
```