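As usual, the last part of this article is a backup of the code notes I took during the analysis. It is long and not very readable; it is kept here mainly so that it does not get lost, and can be skipped. My knowledge is limited, so treat this article as a reference only.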

I recently ran into a backup failure with xtrabackup 8.0.34. The log was as follows:

[ERROR] [MY-011825] [Xtrabackup] log block numbers mismatch:

[ERROR] [MY-011825] [Xtrabackup] expected log block no. 2281182, but got no. 1069731678 from the log file.

[ERROR] [MY-011825] [Xtrabackup] read_logfile() failed.

[ERROR] [MY-011825] [Xtrabackup] log copying failed.

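This is a known bug, and an intermittent one: https://jira.percona.com/browse/PXB-3168

Below we analyze the conditions under which this intermittent bug occurs. The analysis mainly involves the new redo log design introduced in 8.0.30.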

1. New redo parameters

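From 8.0.30 onwards, the redo log is split into 32 files stored in #innodb_redo, and its size is controlled by the parameter innodb_redo_log_capacity. Because the number of redo files is fixed, only the total size needs to be controlled. When innodb_redo_log_capacity is set, the old parameters innodb_log_files_in_group and innodb_log_file_size are ignored. If only innodb_log_files_in_group and innodb_log_file_size are set, their product is used as the total, that is, innodb_redo_log_capacity = innodb_log_files_in_group * innodb_log_file_size. If neither of the old parameters is set, innodb_redo_log_capacity takes effect with its default value of 100M. This design also makes it possible to change the redo log size online.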
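The precedence rules above can be sketched as a small C function. This is only an illustration and not MySQL code: the names effective_redo_capacity and redo_file_size, and the convention that 0 means "not set", are assumptions made here. The sketch also simplifies by requiring both old parameters to be set. The per-file size of capacity / 32 agrees with the 262144-byte files listed later for a capacity of 8388608.

```c
#include <stdint.h>

#define REDO_FILE_COUNT 32                  /* files kept in #innodb_redo */
#define DEFAULT_REDO_CAPACITY 104857600ULL  /* 100M default */

/* Hypothetical helper: a value of 0 means "not set by the user". */
static uint64_t effective_redo_capacity(uint64_t redo_log_capacity,
                                        uint64_t log_files_in_group,
                                        uint64_t log_file_size) {
  /* innodb_redo_log_capacity wins; the old parameters are ignored. */
  if (redo_log_capacity != 0) return redo_log_capacity;
  /* Only the old parameters are set: use their product as the total. */
  if (log_files_in_group != 0 && log_file_size != 0)
    return log_files_in_group * log_file_size;
  /* Nothing set: fall back to the default. */
  return DEFAULT_REDO_CAPACITY;
}

/* Size of one redo file, assuming the capacity is split evenly. */
static uint64_t redo_file_size(uint64_t capacity) {
  return capacity / REDO_FILE_COUNT;
}
```

For example, redo_file_size(8388608) gives 262144, the file size seen in the directory listing in section 3.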

2. Related data structures

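This part mainly adds some members to log_sys to support the change (my notes on them are in the log0sys.h part of the code backup at the end).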

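Here redo writing is treated as the producer, which is mainly the log_writer thread. The main purpose of redo is to recover dirty data after a crash, but some features also need to read redo during normal operation; these are treated as consumers. In other words, redo must not be cleaned up until every consumer has consumed it. The main consumers are: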

the MEB (enterprise backup) consumer

the redo archiving consumer

the checkpoint consumer

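Normally there is only one consumer, the checkpoint consumer. For redo generated at some point in time, until a checkpoint has passed it we cannot know whether crash recovery still needs it, so it cannot be cleaned up. The checkpoint consumer obtains the current checkpoint position, which is stored in last_checkpoint_lsn; when log_governor cleans up, it compares the LSN of the file to be cleaned with this LSN to decide whether the file can be cleaned.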
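The consumer rule can be sketched as follows. This is a simplified model, not the InnoDB implementation: the function names and the array of consumed LSNs are assumptions for illustration, and the real check in the governor code may look different. The idea is only that a file can be reclaimed once the slowest consumer has moved past the end of the file.

```c
#include <stddef.h>
#include <stdint.h>

typedef uint64_t lsn_t;

/* Smallest LSN that every registered consumer has already consumed. */
static lsn_t min_consumed_lsn(const lsn_t *consumed, size_t n) {
  lsn_t min = UINT64_MAX;
  for (size_t i = 0; i < n; i++) {
    if (consumed[i] < min) min = consumed[i];
  }
  return min;
}

/* A file whose data ends at file_end_lsn can be reclaimed only when all
   consumers (normally just the checkpoint consumer) have passed that LSN. */
static int file_is_consumed(lsn_t file_end_lsn, const lsn_t *consumed,
                            size_t n) {
  return n > 0 && file_end_lsn <= min_consumed_lsn(consumed, n);
}
```

With a single checkpoint consumer, the array has one element holding the last checkpoint LSN, and the comparison reduces to file_end_lsn <= last_checkpoint_lsn.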

3. The new log_governor thread

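Initially only one of the 32 files is in use; unused redo files are marked with the _tmp suffix. As data changes, the log_writer thread keeps writing redo into the redo file. When the current file is full, it looks for the next available redo file, removes the _tmp suffix and continues with that file, and adds the file's information to m_files (Log_files_write_impl::start_next_file). For example, at some moment the redo files look like this: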

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo355_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo356_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo357_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo358_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo359_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo360_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo361_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo362_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo363_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo364_tmp

...

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo383_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo384_tmp

-rw-r----- 1 mysql mysql 262144 May 22 09:52 #ib_redo353

-rw-r----- 1 mysql mysql 262144 May 22 09:55 #ib_redo354

Here #ib_redo354 is the redo file currently in use, and #ib_redo355_tmp is the next redo file the log_writer will use. 354 is an auto-incrementing sequence number, i.e. the Log_file_id.

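Once all 32 files have been used and no file with the _tmp suffix is left, a new thread comes into play: log_governor. It wakes up every 10 milliseconds to monitor for this situation. It mainly checks: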

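A: Whether the log_writer thread is unable to write redo, which means the whole redo space is full and files need to be reset to temporary files with the _tmp suffix. The log_writer thread notifies the log_governor thread of this by setting the m_requested_files_consumption variable.

B: Whether no temporary file is left, i.e. m_unused_files_count is 0, which means every redo file has been used in this round, although the current redo file may still have space.

C: Whether the redo size was changed online, which triggers a cleanup and a resize of the redo files.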

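Next, log_governor checks the files in m_files. Only redo files that all consumers have finished consuming can be cleaned; the main check is against the checkpoint consumer. If a file can be cleaned, it is flagged, and the flagged redo files are then cleaned in a batch. Cleaning takes roughly 4 steps: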

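1. mark file as unused: add the _tmp suffix, e.g. ib_redo384 becomes ib_redo384_tmp.

2. change next unused id: rename ib_redo384_tmp to ib_redo416_tmp, because 416 is the Log_file_id the file will have the next time it is used.

3. resize unused file: if the redo size was changed online, it takes effect here and the file ib_redo416_tmp is resized.

4. Remove the file from m_files and increase m_unused_files_count by 1.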

After that, the file is available to the log_writer thread again.
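The renaming in steps 1 and 2 can be illustrated with a short sketch. The helper names are hypothetical, and the rule "new id = old id + 32" is an assumption inferred from the examples in this article (384 becomes 416, 353 becomes 385); it holds when all 32 files are in rotation and the oldest file is recycled first.

```c
#include <stddef.h>
#include <stdio.h>

#define REDO_FILE_COUNT 32

/* Writes "#ib_redo<ID>" or "#ib_redo<ID>_tmp" into buf. */
static void redo_file_name(char *buf, size_t size, unsigned long id,
                           int unused) {
  snprintf(buf, size, "#ib_redo%lu%s", id, unused ? "_tmp" : "");
}

/* Assumed rule: a recycled file moves to the end of the rotation. */
static unsigned long next_unused_id(unsigned long consumed_id) {
  return consumed_id + REDO_FILE_COUNT;
}
```

Calling redo_file_name with next_unused_id(384) and unused set to 1 yields #ib_redo416_tmp, the name shown in step 2.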

4. The block no in redo files

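Each block in a redo file is 512 bytes, of which 12 bytes are the header. The first 4 bytes of the header hold LOG_BLOCK_HDR_NO and LOG_BLOCK_FLUSH_BIT_MASK. LOG_BLOCK_FLUSH_BIT_MASK is the highest bit of these 4 bytes and indicates that the redo before this block has been written to disk, though it seems to be used only in older versions. That leaves 31 bits, and LOG_BLOCK_HDR_NO uses at most 30 bits, i.e. about 1G. Each time an LSN is converted to a block no, the following formula is used: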

1 + (lsn / 512) % 1073741824

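The 1 is presumably added so that the block no is never 0. The block no therefore wraps every 1G blocks, which corresponds to 1G*512 = 512G of redo: every time the LSN advances past a multiple of 512G, the block no starts again from 1. Block numbers alone can therefore repeat, so the epoch no was introduced. It is stored in the last 4 bytes of the 12-byte header and is computed as: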

1 + (lsn / 512) / 1073741824

So each time the block no wraps after 512G, the epoch no increases by 1. For example, block 2 with epoch no 1 and block 2 with epoch no 2 are not the same block.
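The two formulas can be checked with a few lines of C. The sketch below mirrors the conversion functions kept in the code backup at the end of this article, but uses shortened names of my own (lsn_to_hdr_no, lsn_to_epoch_no) so that it compiles on its own.

```c
#include <stdint.h>

#define OS_FILE_LOG_BLOCK_SIZE 512ULL
#define LOG_BLOCK_MAX_NO 0x40000000ULL /* 1G = 1073741824 */

typedef uint64_t lsn_t;

/* Block no: always in the range 1..1G, wraps every 512G of LSN. */
static uint32_t lsn_to_hdr_no(lsn_t lsn) {
  return 1 + (uint32_t)(lsn / OS_FILE_LOG_BLOCK_SIZE % LOG_BLOCK_MAX_NO);
}

/* Epoch no: starts at 1 and grows by 1 every 512G of LSN. */
static uint32_t lsn_to_epoch_no(lsn_t lsn) {
  return 1 + (uint32_t)(lsn / OS_FILE_LOG_BLOCK_SIZE / LOG_BLOCK_MAX_NO);
}
```

At the LSN 512 * 1073741824 (exactly 512G) the block no goes back to 1 while the epoch no moves from 1 to 2, which is the wrap that matters for the rest of this article.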

5. Old and new redo data in the same file

From the analysis above, a single redo file, say ib_redo353_tmp, is reused again and again through the following steps.

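1. ib_redo353_tmp is taken by the log_writer thread, renamed to ib_redo353, and added to m_files.

2. When ib_redo353 is full, the log_writer thread moves on to the next file, ib_redo354_tmp.

3. When the log_writer thread has used up all ib_*_tmp files, the log_governor thread starts cleaning.

4. log_governor finds that all dirty data covered by the redo in ib_redo353 has been flushed to disk and that a checkpoint has passed it.

5. log_governor marks ib_redo353 as cleanable.

6. ib_redo353 is reset to ib_redo385_tmp and is again available to the log_writer thread.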

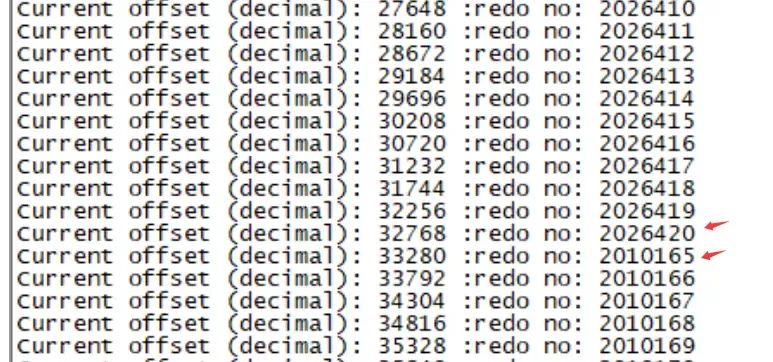

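In this process, ib_redo385 may still contain data left over from ib_redo353. To verify this, we first set innodb_redo_log_capacity to its minimum value, 8388608, and restart the database, so that even small operations rotate the redo files quickly. We then scan the header no of each redo block with a small program (the program and an excerpt of its output are in the code backup at the end). In the output, block no 2026420 is followed directly by block no 2010165.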

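(2026420-2010165)*512 is roughly 8388608, the size configured by innodb_redo_log_capacity, which shows that 2010165 is a redo block left over from the previous use of the file. So after reading block no 2026420, the next block read is 2010165, which is smaller than the current block no. This raises a question: once the redo has reached 512G and the block no has to wrap, the following can happen: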

Current redo block no: 1

Next redo block no: 1073731451

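This is because in the previous round the block no in this redo file had not yet reached the wrap point, while in the current round it has wrapped and started again from 1.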

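In other words, when reading redo blocks sequentially in chunks of 16K*4 (the size xtrabackup reads at a time), three different situations can appear in the data read at any given moment: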

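The current block no is larger than the next block no: this is the usual case at the end of the newly written redo, where the next block was written in a previous round.

The current block no is far smaller than the next block no: this occurs each time the LSN reaches a multiple of 512G and the block no wraps.

The current block no is smaller than the next block no by exactly 1: this is the normal case.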
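The three situations can be written down as a small classifier. This is an illustrative sketch only; the enum and function names are my own and do not appear in MySQL or xtrabackup. It treats the step from the maximum block no back to 1 as contiguous as well.

```c
#include <stdint.h>

#define LOG_BLOCK_MAX_NO 0x40000000u /* 1G = 1073741824 */

enum next_block_kind {
  NEXT_IS_CONTIGUOUS, /* next == current + 1 (or the wrap from 1G to 1) */
  NEXT_IS_OLD_SMALLER, /* next <= current: left over from an earlier round */
  NEXT_IS_OLD_LARGER   /* next > current + 1: left over from before a wrap */
};

static enum next_block_kind classify_next(uint32_t current, uint32_t next) {
  /* The block no that a freshly written next block would carry. */
  uint32_t expected = (current == LOG_BLOCK_MAX_NO) ? 1 : current + 1;
  if (next == expected) return NEXT_IS_CONTIGUOUS;
  if (next <= current) return NEXT_IS_OLD_SMALLER;
  return NEXT_IS_OLD_LARGER;
}
```

The pair (2026420, 2010165) from the scan falls into NEXT_IS_OLD_SMALLER, and the pair (1, 1073731451) falls into NEXT_IS_OLD_LARGER, which is the case the next section is about.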

6. The bug in Xtrabackup

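Percona fixed this bug in 8.0.35, and it is related to the analysis above. In the Xtrabackup 8.0.34 code, each pass reads 16K*4 of redo blocks and then validates them one redo block at a time: the expected block no is computed from the LSN of the block being scanned and compared with the block no read from the block header. In theory they should match, but if: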

The expected block no is larger than the block no read: an old block was scanned, so this scan is finished. The code is as follows:

if (block_header.m_hdr_no != expected_hdr_no && checksum_is_ok) {
  /* old log block, do nothing */
  if (block_header.m_hdr_no < expected_hdr_no) {
    *finished = true;
    break;
  }
  xb::error() << "log block numbers mismatch:";
  xb::error() << "expected log block no. " << expected_hdr_no
              << ", but got no. " << block_header.m_hdr_no
              << " from the log file.";
  return (-1);
}

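The expected block no is far smaller than the block no read: this is not handled. It can happen when the LSN has advanced across a 512G boundary and the block no has wrapped, and it is the root cause of this bug.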
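To make the unhandled case concrete, here is one possible way to recognize an old block across a wrap. It is only a sketch of the idea and is not the fix Percona shipped, which I have not compared against; the function name is_old_block and the "less than half the number space behind" rule are assumptions of mine. Comparing the epoch no in the block header would be another way to tell the blocks apart.

```c
#include <stdint.h>

#define LOG_BLOCK_MAX_NO 0x40000000u /* 1G = 1073741824 */

/* Returns 1 if the block no found in the file lies "behind" the expected
   block no when block numbers are compared modulo 1G. A block that is
   behind by less than half of the number space is treated as old data
   left in a reused file, even if the block no has wrapped in between. */
static int is_old_block(uint32_t found, uint32_t expected) {
  uint32_t behind = (expected + LOG_BLOCK_MAX_NO - found) % LOG_BLOCK_MAX_NO;
  return behind != 0 && behind < LOG_BLOCK_MAX_NO / 2;
}
```

With the values from the failing backup, is_old_block(1069731678, 2281182) returns 1, whereas the plain comparison found < expected in the 8.0.34 code is false and leads to the error.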

The bug report: https://jira.percona.com/browse/PXB-3168

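In our log the expected block no is 2281182, but the block no actually read is 1069731678. 1069731678 is just below the wrap point of 1073741824, so the block no had probably wrapped. (2281182+1073741824 - 1069731678)*512 is about 3G, which suggests that innodb_redo_log_capacity is about 3G.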
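The arithmetic can be reproduced with a small helper. The function name bytes_between is hypothetical; it simply measures how far the block no found in the file lies behind the expected one, modulo 1G, and converts that to bytes.

```c
#include <stdint.h>

#define LOG_BLOCK_MAX_NO 0x40000000ULL /* 1G = 1073741824 */
#define LOG_BLOCK_SIZE 512ULL

/* Distance in bytes from the old block found in the file to the expected
   block, taking the wrap of the block no into account. */
static uint64_t bytes_between(uint32_t found, uint32_t expected) {
  uint64_t blocks =
      ((uint64_t)expected + LOG_BLOCK_MAX_NO - found) % LOG_BLOCK_MAX_NO;
  return blocks * LOG_BLOCK_SIZE;
}
```

For the failing backup, bytes_between(1069731678, 2281182) is 3221159936, just under 3G (3221225472). For the 8M test in section 5, bytes_between(2010165, 2026420) is 8322560, just under 8388608.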

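Once the 3G of redo configured by innodb_redo_log_capacity has been completely overwritten with blocks carrying post-wrap block numbers, the backup can run normally again. In other words, after every 512G of redo generated, a backup may hit this bug during the time it takes to fill innodb_redo_log_capacity worth of redo.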

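7. Afterword

This problem comes from the new redo design introduced in 8.0.30, which xtrabackup had not fully caught up with. So when a database introduces an important new feature that changes core components, it is usually wise to wait and see, and adopt it a few versions later; by then most of the bugs, both in the server and in the surrounding tools, are likely to have been fixed. Likewise, rarely used features may have gone through many releases, but because few people use them their bugs may remain undiscovered, so they too are best used sparingly. This is something my friends and I often bring up when we talk.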

8. Code backup

A small program that reads the header no of each redo block

#include <stdio.h>
#include <stdint.h>
#include <stdlib.h>
#include <unistd.h>
#include <fcntl.h>

#define CHUNK_SIZE 512

typedef unsigned char byte;

/* Highest bit of the first 4 header bytes; the remaining bits hold the block no. */
static const uint32_t LOG_BLOCK_FLUSH_BIT_MASK = 0x80000000UL;

/* Read 4 bytes in big-endian order (most significant byte first). */
static uint32_t mach_read_from_4(const byte *b) {
  return (((uint32_t)(b[0]) << 24) | ((uint32_t)(b[1]) << 16) |
          ((uint32_t)(b[2]) << 8) | (uint32_t)(b[3]));
}

static void process_chunk(const uint8_t *chunk) {
  if (chunk == NULL) return;
  /* Read the first 4 bytes of the block as a uint32. */
  uint32_t value = mach_read_from_4(chunk);
  /* Clear the highest bit (the flush bit), keeping the low 31 bits. */
  uint32_t masked_value = value & ~LOG_BLOCK_FLUSH_BIT_MASK;
  printf("redo no: %u \n", masked_value);
}

int main(int argc, char **argv) {
  if (argc != 2) {
    fprintf(stderr, "Usage: %s <file>\n", argv[0]);
    return EXIT_FAILURE;
  }
  int fd = open(argv[1], O_RDONLY);
  if (fd == -1) {
    perror("failed to open file");
    return EXIT_FAILURE;
  }
  uint8_t buffer[CHUNK_SIZE];
  ssize_t bytes_read;
  /* Walk the file in 512-byte chunks, one redo block at a time. */
  while ((bytes_read = read(fd, buffer, sizeof(buffer))) > 0) {
    /* Offset just past the chunk that was read. */
    off_t offset = lseek(fd, 0, SEEK_CUR);
    printf("Current offset (decimal): %ld :", (long)offset);
    if (bytes_read >= 4) {
      process_chunk(buffer);
    } else {
      printf("\n");
    }
  }
  if (bytes_read == -1) {
    perror("failed to read file");
    close(fd);
    return EXIT_FAILURE;
  }
  close(fd);
  return EXIT_SUCCESS;
}

xtrabackup 8.0.34 bug

Redo_Log_Data_Manager::copy_once

-> start_lsn = reader.get_contiguous_lsn();
the last read lsn

-> archive_reader.seek_logfile(start_lsn)
set log_scanned_lsn to start_lsn

->len = archive_reader.read_logfile(finished)

Redo_Log_Reader::read_logfile

->size_t len = 0;*finished = false;
initialize len to 0 and *finished to false

->start_lsn =ut_uint64_align_down(log_scanned_lsn, OS_FILE_LOG_BLOCK_SIZE)
the last scanned LSN

->while (!*finished && len <= redo_log_read_buffer_size - RECV_SCAN_SIZE)
loop: read and parse

->end_lsn = read_log_seg(log, log_buf + len, start_lsn, start_lsn + RECV_SCAN_SIZE)

Redo_Log_Reader::read_log_seg
each call reads RECV_SCAN_SIZE (16K*4) of redo; start_lsn is the lsn where the previous scan ended

->if (log.m_files.ctx().m_files_ruleset == Log_files_ruleset::CURRENT)
if the log files use the new format

->return(read_log_seg_8030(log, buf, start_lsn, end_lsn))
start_lsn is the lsn where the previous scan ended, end_lsn is the expected end point of this scan

->Redo_Log_Reader::read_log_seg_8030

->auto file = log.m_files.find(start_lsn)
find the file to read (the redo from the previous read has already been processed); Log_files_dict::find returns a Log_file

->file_handle = file->open(Log_file_access_mode::READ_ONLY)
open the file, Log_file::open

->do { } while (start_lsn != end_lsn)
loop until the computed expected end point is reached

->os_offset_t source_offset = file->offset(start_lsn)
translate start_lsn into the read position within the file and return the offset

->os_offset_t len = end_lsn - start_lsn;
expected length to read

->bool switch_to_next_file = false
whether the file has to be switched

->if (source_offset + len > file->m_size_in_bytes)
if the range to read extends past the end of the current redo file

->len = file->m_size_in_bytes - source_offset;
read the rest of the current file first

->switch_to_next_file = true
set the switch flag to true

->const dberr_t err = log_data_blocks_read(file_handle, source_offset, len, buf);
read the data: len bytes starting at source_offset of the file, into buf

->start_lsn += len ; buf += len;
advance the starting lsn by len and the buf pointer by len

->if (switch_to_next_file)

->next_id = file->next_id()
get the id of the next file

->const auto next_file = log.m_files.file(next_id)
get the next file

->if (next_file == log.m_files.end() || !next_file->contains(start_lsn)) {
A: there are no more files; B: the next file does not contain the lsn to read, i.e. start_lsn

-> return start_lsn;
return this lsn. Note: the function returns here

->otherwise

->file_handle.close()
close the current file

->file = next_file;
switch to the next file

->file_handle = file->open(Log_file_access_mode::READ_ONLY)
open the file and continue reading

->return end_lsn
reading finished, return the lsn of the end point

->auto size = scan_log_recs(log_buf + len, is_last, start_lsn, &scanned_lsn,log_scanned_lsn, finished);

Redo_Log_Reader::scan_log_recs
validate the block no of the redo that was read

->Redo_Log_Reader::scan_log_recs_8030

lsn_t scanned_lsn{start_lsn};const byte *log_block{buf};*finished = false;scanned_epoch_no{0}
initialization

->while (log_block < buf + RECV_SCAN_SIZE && !*finished)

->Log_data_block_header block_header;

->log_data_block_header_deserialize(log_block, block_header)
parse the block header from the data read into the buffer

->expected_hdr_no = log_block_convert_lsn_to_hdr_no(scanned_lsn)
convert the scanned lsn to the expected block no

-> checksum_is_ok = log_block_checksum_is_ok(log_block)
verify the checksum of the block

->if (block_header.m_hdr_no != expected_hdr_no && checksum_is_ok)
A: the block no read from the block header differs from the block no computed from the lsn
B: the checksum is OK

->if (block_header.m_hdr_no < expected_hdr_no)
if it is smaller, the read is finished

->*finished = true

->break;

otherwise report an error

->log block numbers mismatch:

->return (-1);
return -1

->else if (!checksum_is_ok)
if the checksum is wrong
report "Log block checksum mismatch"

-> *finished = true;break;
exit the loop

-> const auto data_len = log_block_get_data_len(log_block)
length of the data; 512 bytes when the block is full

->scanned_lsn = scanned_lsn + data_len;
the LSN advances by the data length; one block is 512 bytes, laid out as header|data|trailer

-> if (data_len < OS_FILE_LOG_BLOCK_SIZE)
if the data len is less than 512, the read is finished

->*finished = true

otherwise

->log_block += OS_FILE_LOG_BLOCK_SIZE;
move on to validate the next 512-byte block

->*read_upto_lsn = scanned_lsn
at the end of this read, update read_upto_lsn

->if (!*finished)
if this read did not reach the end, i.e. we are in the middle of the redo, a full 16K*4 of redo must have been read

->write_size = RECV_SCAN_SIZE
16K*4 of redo

->else
otherwise compute the size read this time

->return the size read

return (write_size)

Redo_Log_Data_Manager::copy_func

there is a big loop here

->Redo_Log_Data_Manager::copy_once

->

#0 Log_files_dict::add () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/log/log0files_dict.cc:104

#1 0x000000000154f31c in log_files_find_and_analyze () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/log/log0files_finder.cc:416

#2 0x000000000156344e in log_sys_init () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/log/log0log.cc:1874

#3 0x0000000000cd33f9 in Redo_Log_Data_Manager::init () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:1193

#4 0x0000000000c72b04 in xtrabackup_backup_func () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/xtrabackup.cc:4128

#5 0x0000000000c26d7f in main () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/xtrabackup.cc:8033

#0 Log_files_dict::add () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/log/log0files_dict.cc:104

#1 0x000000000154f31c in log_files_find_and_analyze () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/log/log0files_finder.cc:416

#2 0x0000000000ccf915 in reopen_log_files () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:148

#3 0x0000000000cd0098 in Redo_Log_Reader::read_log_seg_8030 () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:249

#4 0x0000000000cd1879 in Redo_Log_Reader::read_log_seg () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:321

#5 Redo_Log_Reader::read_logfile () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:340

#6 0x0000000000cd1a85 in Redo_Log_Data_Manager::copy_once () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:1375

#7 0x0000000000cd1f72 in Redo_Log_Data_Manager::copy_func () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:1411

#8 0x0000000000cd2337 in operator() () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:1175

#9 __invoke_impl<void, Redo_Log_Data_Manager::init()::<lambda()>&> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/invoke.h:61

#10 __invoke<Redo_Log_Data_Manager::init()::<lambda()>&> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/invoke.h:96

#11 __call<void> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/functional:495

#12 operator()<> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/functional:580

#13 operator()<Redo_Log_Data_Manager::init()::<lambda()> > () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/include/os0thread-create.h:194

#14 __invoke_impl<void, Detached_thread, Redo_Log_Data_Manager::init()::<lambda()> > () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/invoke.h:61

#15 __invoke<Detached_thread, Redo_Log_Data_Manager::init()::<lambda()> > () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/invoke.h:96

#16 _M_invoke<0, 1> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/std_thread.h:258

#17 operator() () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/std_thread.h:265

#18 _M_run () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/std_thread.h:210

#19 0x00007ffff5fc1b23 in execute_native_thread_routine () from lib64/libstdc++.so.6

#20 0x00007ffff7bb51ca in start_thread () from lib64/libpthread.so.0

#21 0x00007ffff55c88d3 in clone () from lib64/libc.so.6

Current offset (decimal): 30720 :redo no: 2026416

Current offset (decimal): 31232 :redo no: 2026417

Current offset (decimal): 31744 :redo no: 2026418

Current offset (decimal): 32256 :redo no: 2026419

Current offset (decimal): 32768 :redo no: 2026420

Current offset (decimal): 33280 :redo no: 2010165

Current offset (decimal): 33792 :redo no: 2010166

Current offset (decimal): 34304 :redo no: 2010167

Current offset (decimal): 34816 :redo no: 2010168

Current offset (decimal): 35328 :redo no: 2010169

Current offset (decimal): 35840 :redo no: 2010170

#0 Log_files_dict::add () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/log/log0files_dict.cc:110

#1 0x000000000154cfa6 in Log_files_dict::add () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/log/log0files_dict.cc:105

#2 0x000000000154f31c in log_files_find_and_analyze () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/log/log0files_finder.cc:416

#3 0x0000000000ccf915 in reopen_log_files () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:148

#4 0x0000000000cd0098 in Redo_Log_Reader::read_log_seg_8030 () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:249

#5 0x0000000000cd1879 in Redo_Log_Reader::read_log_seg () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:321

#6 Redo_Log_Reader::read_logfile () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:340

#7 0x0000000000cd1a85 in Redo_Log_Data_Manager::copy_once () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:1375

#8 0x0000000000cd1f72 in Redo_Log_Data_Manager::copy_func () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:1411

#9 0x0000000000cd2337 in operator() () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/xtrabackup/src/redo_log.cc:1175

#10 __invoke_impl<void, Redo_Log_Data_Manager::init()::<lambda()>&> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/invoke.h:61

#11 __invoke<Redo_Log_Data_Manager::init()::<lambda()>&> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/invoke.h:96

#12 __call<void> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/functional:495

#13 operator()<> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/functional:580

#14 operator()<Redo_Log_Data_Manager::init()::<lambda()> > () at home/source/percona8034/percona-xtrabackup-8.0.34-29/storage/innobase/include/os0thread-create.h:194

#15 __invoke_impl<void, Detached_thread, Redo_Log_Data_Manager::init()::<lambda()> > () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/invoke.h:61

#16 __invoke<Detached_thread, Redo_Log_Data_Manager::init()::<lambda()> > () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/invoke.h:96

#17 _M_invoke<0, 1> () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/std_thread.h:258

#18 operator() () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/std_thread.h:265

#19 _M_run () at opt/rh/gcc-toolset-12/root/usr/include/c++/12/bits/std_thread.h:210

#20 0x00007ffff5fc1b23 in execute_native_thread_routine () from lib64/libstdc++.so.6

#21 0x00007ffff7bb51ca in start_thread () from lib64/libpthread.so.0

#22 0x00007ffff55c88d3 in clone () from lib64/libc.so.6

Setting epoch_no

void Log_data_block_header::set_lsn(lsn_t lsn) {

m_epoch_no = log_block_convert_lsn_to_epoch_no(lsn);

m_hdr_no = log_block_convert_lsn_to_hdr_no(lsn);

}

/** Converts a lsn to a log block epoch number.
For details @see LOG_BLOCK_EPOCH_NO.
@param[in] lsn lsn of a byte within the block
@return log block epoch number, it is > 0 */
inline uint32_t log_block_convert_lsn_to_epoch_no(lsn_t lsn) {
  return 1 +
         static_cast<uint32_t>(lsn / OS_FILE_LOG_BLOCK_SIZE / LOG_BLOCK_MAX_NO);
}

/** Converts a lsn to a log block number. Consecutive log blocks have
consecutive numbers (unless the sequence wraps). It is guaranteed that
the calculated number is greater than zero.
@param[in] lsn lsn of a byte within the block
@return log block number, it is > 0 and <= 1G */
inline uint32_t log_block_convert_lsn_to_hdr_no(lsn_t lsn) {
  return 1 +
         static_cast<uint32_t>(lsn / OS_FILE_LOG_BLOCK_SIZE % LOG_BLOCK_MAX_NO);
}

log_governor thread

dberr_t log_list_existing_unused_files(const Log_files_context &ctx,

ut::vector<Log_file_id> &ret) {

/* Possible error is emitted to the log inside function called below. */

return log_list_existing_files_low(ctx, "_tmp", ret);

}

std::string log_file_path_for_unused_file(const Log_files_context &ctx,

Log_file_id file_id) {

return log_file_path(ctx, file_id) + "_tmp";

}

dberr_t log_mark_file_as_in_use(const Log_files_context &ctx,

Log_file_id file_id) {

return log_rename_file_low(ctx, log_file_path_for_unused_file(ctx, file_id),

log_file_path(ctx, file_id),

ER_IB_MSG_LOG_FILE_UNUSED_MARK_AS_IN_USE_FAILED);

}

dberr_t log_mark_file_as_unused(const Log_files_context &ctx,

Log_file_id file_id) {

return log_rename_file_low(ctx, log_file_path(ctx, file_id),

log_file_path_for_unused_file(ctx, file_id),

ER_IB_MSG_LOG_FILE_MARK_AS_UNUSED_FAILED);

}

log_write_buffer

->Log_files_write_impl::start_next_file

log_files_governor_iteration_low

->

log0sys.h

- Log_file m_current_file{m_files_ctx, m_encryption_metadata} the Log_file of the current redo file

- alignas(ut::INNODB_CACHE_LINE_SIZE) mutable ib_mutex_t m_files_mutex;

- Log_files_context m_files_ctx;

-- std::string m_root_path

-- Log_files_ruleset m_files_ruleset

-- PRE_8_0_30

-- CURRENT

- Log_files_dict m_files{m_files_ctx}; Protected by: m_files_mutex.

-- using Log_files_map = ut::map<Log_file_id, Log_file>;

-- using Log_files_map_iterator = typename Log_files_map::const_iterator;

-- const Log_files_context &m_files_ctx; context

-- Log_files_map m_files_by_id; a sorted map whose two elements are Log_file_id and Log_file

--key size_t Log_file_id

--value struct Log_file

-- const Log_files_context &m_files_ctx

-- Log_file_id m_id //ID of the file.

-- bool m_consumed //Set to true when file becomes consumed

-- bool m_full //Set to true when file became full and next file exists.

-- os_offset_t m_size_in_bytes //Size, expressed in bytes, including LOG_FILE_HDR_SIZE.

-- lsn_t m_start_lsn //LSN of the first byte within the file, aligned to OS_FILE_LOG_BLOCK_SIZE

-- lsn_t m_end_lsn //LSN of the first byte after the file, aligned to OS_FILE_LOG_BLOCK_SIZE

- size_t m_unused_files_count; //Protected by: m_files_mutex. Number of files that currently carry the _tmp suffix

- os_offset_t m_unused_file_size; Size of each unused redo log file, i.e. of each file with the _tmp suffix

- Log_files_capacity m_capacity; //Capacity limits for the redo log. Responsible for resize Mutex protection is decided per each Log_files_capacity method

-- Exposed m_exposed{}

-- atomic_lsn_t m_soft_logical_capacity{0} // Value returned by @see soft_logical_capacity

-- atomic_lsn_t m_hard_logical_capacity{0} Value returned by @see hard_logical_capacity

-- atomic_lsn_t m_adaptive_flush_min_age{0} // Value returned by @see adaptive_flush_min_age

-- atomic_lsn_t m_adaptive_flush_max_age{0} Value returned by @see adaptive_flush_max_age

-- atomic_lsn_t m_agressive_checkpoint_min_age{0} Value returned by @see aggressive_checkpoint_min_age

-- os_offset_t m_current_physical_capacity{0} This is limitation for space on disk we are never allowed to exceed. This is the guard of disk space - current size of all log files on disk is always not greater than this value

-- os_offset_t m_target_physical_capacity{0} Goal we are trying to achieve for m_current_physical_capacity when resize operation is in progress

-- Log_resize_mode m_resize_mode{Log_resize_mode::NONE} Current resize direction. When user decides to resize down the redo log, it becomes Log_resize_mode::RESIZING_DOWN until the resize is finished or user decides to stop it (providing other capacity)

- bool m_requested_files_consumption; set when the log writer thread cannot write redo, i.e. the redo space is used up, to notify the log governor thread to clean up

- Log_files_stats m_files_stats; Statistics related to redo log files consumption and creation Protected by: m_files_mutex.

Log_clock_point m_last_update_time // Last time stats were updated

lsn_t m_lsn_consumption_per_1s{0} //LSN difference by which result of log_files_oldest_needed_lsn() advanced during last second

lsn_t m_lsn_production_per_1s{0} //LSN difference by which result of log_files_newest_needed_lsn() advanced during last second

lsn_t m_oldest_lsn_on_update{0} Oldest LSN returned by log_files_oldest_needed_lsn() during last successful call to @see update()

lsn_t m_newest_lsn_on_update{0} Newest LSN returned by log_files_newest_needed_lsn() during last successful call to @see update()

- os_event_t m_files_governor_event; Event used by log files governor thread to wait

- os_event_t m_files_governor_iteration_event; Event used by other threads to wait until log files governor finished its next iteration

//This is useful when some sys_var gets changed to wait until log files governor re-computed everything and then check if the concurrency_margin is safe to emit warning if needed

- std::atomic_bool m_no_more_dummy_records_requested; //False if log files governor thread is allowed to add new redo records

- std::atomic_bool m_no_more_dummy_records_promised; False if the log files governor thread is allowed to add new dummy redo records

- os_event_t m_file_removed_event; Event used for waiting on next file available. Used by log writer thread to wait when it needs to produce a next log file but there are no free (consumed) log files available.

- byte m_encryption_buf[OS_FILE_LOG_BLOCK_SIZE]; // Buffer that contains encryption meta data encrypted with master key Protected by: m_files_mutex

- Encryption_metadata m_encryption_metadata;

- ut::unordered_set<Log_consumer *> m_consumers; //registered consumers: 1. the reset consumer, 2. the checkpoint consumer, 3. the archiving consumer

class Log_user_consumer : public Log_consumer {

public:

explicit Log_user_consumer(const std::string &name);

const std::string &get_name() const override;

/** Set the lsn reported by get_consumed_lsn() to the given value.

It is required that the given value is greater or equal to the value

currently reported by the get_consumed_lsn().

@param[in] consumed_lsn the given lsn to report */

void set_consumed_lsn(lsn_t consumed_lsn);

lsn_t get_consumed_lsn() const override;

void consumption_requested() override;

private:

/** Name of this consumer (saved value from ctor). */

const std::string m_name;

/** Value reported by get_consumed_lsn().

Set by set_consumed_lsn(lsn). */

lsn_t m_consumed_lsn{};

};

Log_files_dict::add

#0 Log_files_dict::erase (this=0x7fffe1f2fc88, file_id=168) at pxc/mysql-8.0.36/storage/innobase/log/log0files_dict.cc:71

#1 0x0000000004ad8208 in log_files_recycle_file (log=..., file_id=168, unused_file_size=262144) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:976

#2 0x0000000004ad834a in log_files_process_consumed_file (log=..., file_id=168) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:991

#3 0x0000000004ad8430 in log_files_process_consumed_files (log=...) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1014

#4 0x0000000004ad909e in log_files_governor_iteration_low (log=..., has_writer_mutex=true) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1241

#5 0x0000000004ad9316 in log_files_governor_iteration (log=...) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1334

#6 0x0000000004ad9536 in log_files_governor (log_ptr=0x7fffe1f2f340) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1371

#7 0x0000000004afc49b in std::__invoke_impl<void, void (*&)(log_t*), log_t*&> (__f=@0x7fff97ffd9e0: 0x4ad943f <log_files_governor(log_t*)>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:61

#8 0x0000000004afc405 in std::__invoke<void (*&)(log_t*), log_t*&> (__fn=@0x7fff97ffd9e0: 0x4ad943f <log_files_governor(log_t*)>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:96

#9 0x0000000004afc34c in std::_Bind<void (*(log_t*))(log_t*)>::__call<void, , 0ul>(std::tuple<>&&, std::_Index_tuple<0ul>) (this=0x7fff97ffd9e0, __args=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd7fff00>) at opt/rh/devtoolset-11/root/usr/include/c++/11/functional:420

#10 0x0000000004afc2ae in std::_Bind<void (*(log_t*))(log_t*)>::operator()<, void>() (this=0x7fff97ffd9e0) at opt/rh/devtoolset-11/root/usr/include/c++/11/functional:503

#11 0x0000000004afc1f6 in Detached_thread::operator()<void (*)(log_t*), log_t*>(void (*&&)(log_t*), log_t*&&) (this=0x7fffe27f2178, f=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd80012f>) at pxc/mysql-8.0.36/storage/innobase/include/os0thread-create.h:194

#12 0x0000000004afc0c3 in std::__invoke_impl<void, Detached_thread, void (*)(log_t*), log_t*>(std::__invoke_other, Detached_thread&&, void (*&&)(log_t*), log_t*&&) (__f=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd800233>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:61

#13 0x0000000004afc000 in std::__invoke<Detached_thread, void (*)(log_t*), log_t*>(Detached_thread&&, void (*&&)(log_t*), log_t*&&) (__fn=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd8003f3>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:96

#14 0x0000000004afbf33 in std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> >::_M_invoke<0ul, 1ul, 2ul> (this=0x7fffe27f2168) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:253

#15 0x0000000004afbed0 in std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> >::operator() (this=0x7fffe27f2168) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:260

#16 0x0000000004afbeb4 in std::thread::_State_impl<std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> > >::_M_run (this=0x7fffe27f2160) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:211

#17 0x00007ffff6c12fdf in execute_native_thread_routine () at ../../../../../libstdc++-v3/src/c++11/thread.cc:80

#18 0x00007ffff7bc6ea5 in start_thread () from lib64/libpthread.so.0

#19 0x00007ffff6370b0d in clone () from lib64/libc.so.6

Breakpoint 2, Log_files_dict::add (this=0x7fffe1f2fc88, file_id=161, size_in_bytes=262144, start_lsn=994580480, full=false, encryption_metadata=...) at pxc/mysql-8.0.36/storage/innobase/log/log0files_dict.cc:105

105 add(file_id, size_in_bytes, start_lsn, full, false, encryption_metadata);

(gdb) bt

#0 Log_files_dict::add (this=0x7fffe1f2fc88, file_id=161, size_in_bytes=262144, start_lsn=994580480, full=false, encryption_metadata=...) at pxc/mysql-8.0.36/storage/innobase/log/log0files_dict.cc:105

#1 0x0000000004ad9f48 in log_files_create_file (log=..., file_id=161, start_lsn=994580480, checkpoint_lsn=0, create_first_data_block=false) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1528

#2 0x0000000004ad8775 in log_files_produce_file (log=...) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1068

#3 0x0000000004b2bce1 in Log_files_write_impl::start_next_file (log=..., start_lsn=994580480) at pxc/mysql-8.0.36/storage/innobase/log/log0write.cc:1374

#4 0x0000000004b2cdb7 in log_write_buffer (log=..., buffer=0x7fff9b480c00 "", buffer_size=53, start_lsn=994580480) at pxc/mysql-8.0.36/storage/innobase/log/log0write.cc:1737

#5 0x0000000004b2ee36 in log_writer_write_buffer (log=..., next_write_lsn=994580533) at pxc/mysql-8.0.36/storage/innobase/log/log0write.cc:2195

#6 0x0000000004b2f42b in log_writer (log_ptr=0x7fffe1f2f340) at pxc/mysql-8.0.36/storage/innobase/log/log0write.cc:2292

#7 0x0000000004afc49b in std::__invoke_impl<void, void (*&)(log_t*), log_t*&> (__f=@0x7fffa0ff99e0: 0x4b2f156 <log_writer(log_t*)>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:61

#8 0x0000000004afc405 in std::__invoke<void (*&)(log_t*), log_t*&> (__fn=@0x7fffa0ff99e0: 0x4b2f156 <log_writer(log_t*)>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:96

#9 0x0000000004afc34c in std::_Bind<void (*(log_t*))(log_t*)>::__call<void, , 0ul>(std::tuple<>&&, std::_Index_tuple<0ul>) (this=0x7fffa0ff99e0, __args=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd7fff00>) at opt/rh/devtoolset-11/root/usr/include/c++/11/functional:420

#10 0x0000000004afc2ae in std::_Bind<void (*(log_t*))(log_t*)>::operator()<, void>() (this=0x7fffa0ff99e0) at opt/rh/devtoolset-11/root/usr/include/c++/11/functional:503

#11 0x0000000004afc1f6 in Detached_thread::operator()<void (*)(log_t*), log_t*>(void (*&&)(log_t*), log_t*&&) (this=0x7fffe27ecd58, f=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd80012f>) at pxc/mysql-8.0.36/storage/innobase/include/os0thread-create.h:194

#12 0x0000000004afc0c3 in std::__invoke_impl<void, Detached_thread, void (*)(log_t*), log_t*>(std::__invoke_other, Detached_thread&&, void (*&&)(log_t*), log_t*&&) (__f=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd800233>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:61

#13 0x0000000004afc000 in std::__invoke<Detached_thread, void (*)(log_t*), log_t*>(Detached_thread&&, void (*&&)(log_t*), log_t*&&) (__fn=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd8003f3>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:96

#14 0x0000000004afbf33 in std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> >::_M_invoke<0ul, 1ul, 2ul> (this=0x7fffe27ecd48) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:253

#15 0x0000000004afbed0 in std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> >::operator() (this=0x7fffe27ecd48) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:260

#16 0x0000000004afbeb4 in std::thread::_State_impl<std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> > >::_M_run (this=0x7fffe27ecd40) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:211

#17 0x00007ffff6c12fdf in execute_native_thread_routine () at ../../../../../libstdc++-v3/src/c++11/thread.cc:80

#18 0x00007ffff7bc6ea5 in start_thread () from lib64/libpthread.so.0

#19 0x00007ffff6370b0d in clone () from lib64/libc.so.6

(gdb) b Log_files_dict::erase

Breakpoint 3 at 0x4ad2fbc: file pxc/mysql-8.0.36/storage/innobase/log/log0files_dict.cc, line 71.

log_files_create_file

log.m_files.add(file_id, file_size, start_lsn, false, log.m_encryption_metadata);

log.m_unused_files_count--;

log_files_prepare_unused_file

Log_files_capacity::update

->Log_files_capacity::update_exposed

#0 Log_files_capacity::update (this=0x7fffe1f2fcd0, files=..., current_logical_size=512, current_checkpoint_age=0) at pxc/mysql-8.0.36/storage/innobase/log/log0files_capacity.cc:192

#1 0x0000000004ad89e1 in log_files_update_capacity_limits (log=...) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1117

#2 0x0000000004ad9048 in log_files_governor_iteration_low (log=..., has_writer_mutex=false) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1233

#3 0x0000000004ad92c7 in log_files_governor_iteration (log=...) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1330

#4 0x0000000004ad9536 in log_files_governor (log_ptr=0x7fffe1f2f340) at pxc/mysql-8.0.36/storage/innobase/log/log0files_governor.cc:1371

#5 0x0000000004afc49b in std::__invoke_impl<void, void (*&)(log_t*), log_t*&> (__f=@0x7fff97ffd9e0: 0x4ad943f <log_files_governor(log_t*)>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:61

#6 0x0000000004afc405 in std::__invoke<void (*&)(log_t*), log_t*&> (__fn=@0x7fff97ffd9e0: 0x4ad943f <log_files_governor(log_t*)>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:96

#7 0x0000000004afc34c in std::_Bind<void (*(log_t*))(log_t*)>::__call<void, , 0ul>(std::tuple<>&&, std::_Index_tuple<0ul>) (this=0x7fff97ffd9e0, __args=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd7fff00>) at opt/rh/devtoolset-11/root/usr/include/c++/11/functional:420

#8 0x0000000004afc2ae in std::_Bind<void (*(log_t*))(log_t*)>::operator()<, void>() (this=0x7fff97ffd9e0) at opt/rh/devtoolset-11/root/usr/include/c++/11/functional:503

#9 0x0000000004afc1f6 in Detached_thread::operator()<void (*)(log_t*), log_t*>(void (*&&)(log_t*), log_t*&&) (this=0x7fffe27edbc8, f=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd80012f>) at pxc/mysql-8.0.36/storage/innobase/include/os0thread-create.h:194

#10 0x0000000004afc0c3 in std::__invoke_impl<void, Detached_thread, void (*)(log_t*), log_t*>(std::__invoke_other, Detached_thread&&, void (*&&)(log_t*), log_t*&&) (__f=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd800233>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:61

#11 0x0000000004afc000 in std::__invoke<Detached_thread, void (*)(log_t*), log_t*>(Detached_thread&&, void (*&&)(log_t*), log_t*&&) (__fn=<unknown type in pxc/mysql8036/bin/mysqld, CU 0xd7901da, DIE 0xd8003f3>) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/invoke.h:96

#12 0x0000000004afbf33 in std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> >::_M_invoke<0ul, 1ul, 2ul> (this=0x7fffe27edbb8) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:253

#13 0x0000000004afbed0 in std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> >::operator() (this=0x7fffe27edbb8) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:260

#14 0x0000000004afbeb4 in std::thread::_State_impl<std::thread::_Invoker<std::tuple<Detached_thread, void (*)(log_t*), log_t*> > >::_M_run (this=0x7fffe27edbb0) at opt/rh/devtoolset-11/root/usr/include/c++/11/bits/std_thread.h:211

#15 0x00007ffff6c12fdf in execute_native_thread_routine () at ../../../../../libstdc++-v3/src/c++11/thread.cc:80

#16 0x00007ffff7bc6ea5 in start_thread () from lib64/libpthread.so.0

#17 0x00007ffff6370b0d in clone () from lib64/libc.so.6

log_files_logical_size_and_checkpoint_age

->

Log_consumer

->Arch_log_consumer

Related to redo archiving; not considered for now.

->Log_user_consumer

Related to MySQL MEB (MySQL Enterprise Backup).

->get_consumed_lsn

return m_consumed_lsn

->consumption_requested

Empty function.

->Log_checkpoint_consumer

->get_consumed_lsn

->log_get_checkpoint_lsn(m_log)

->return log.last_checkpoint_lsn.load()

Updated by: log_checkpointer

->consumption_requested

This function mainly sends a wake-up signal to the checkpointer.

->log_request_checkpoint_in_next_file

->log_request_checkpoint_in_next_file_low

->log_request_checkpoint_validate

Used only in debug builds.

->auto oldest_file = log.m_files.begin()

Get the oldest file.

->if (oldest_file == log.m_files.end())

If begin() equals end(), log.m_files holds no redo file at all, so there is nothing to request a checkpoint for.

Return directly.

->const lsn_t checkpoint_lsn = log.last_checkpoint_lsn.load()

Get the checkpoint LSN.

->const lsn_t current_lsn = log_get_lsn(log)

Get the current LSN.

->if (oldest_file->m_end_lsn > checkpoint_lsn && current_lsn >= oldest_file->m_end_lsn)

If the checkpoint has not yet advanced past the end of this file, while the current LSN has already reached or passed it.

->const lsn_t request_lsn = oldest_file->m_end_lsn + LOG_BLOCK_HDR_SIZE

Add the size of the log block header.

->log_request_checkpoint_low(log, request_lsn)

Trigger a wake-up of the checkpointer thread.

->if (requested_lsn > log.requested_checkpoint_lsn)

->log.requested_checkpoint_lsn = requested_lsn

->if (requested_lsn > log.last_checkpoint_lsn.load())

->os_event_set(log.checkpointer_event)
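
The decision traced above can be condensed into a small standalone sketch. It is not the MySQL source: OldestFile, checkpoint_request_lsn and kLogBlockHdrSize are hypothetical names made up for illustration, and the header size of 12 bytes is an assumption about LOG_BLOCK_HDR_SIZE. It only shows when a checkpoint request is produced and at which LSN.

```cpp
#include <cassert>
#include <cstdint>
#include <optional>

using lsn_t = std::uint64_t;

// Assumption: stands in for InnoDB's LOG_BLOCK_HDR_SIZE (taken to be 12 bytes).
constexpr lsn_t kLogBlockHdrSize = 12;

// Hypothetical, simplified view of the oldest redo file in log.m_files.
struct OldestFile {
  lsn_t end_lsn;  // LSN at which this file ends
};

// Returns the LSN at which a checkpoint should be requested, or
// std::nullopt when no request is needed.
std::optional<lsn_t> checkpoint_request_lsn(
    const std::optional<OldestFile> &oldest_file, lsn_t checkpoint_lsn,
    lsn_t current_lsn) {
  // The checkpoint can never be ahead of the current LSN.
  assert(current_lsn >= checkpoint_lsn);

  // No redo file registered: nothing to request.
  if (!oldest_file) return std::nullopt;

  // The checkpoint is still inside the oldest file, but the writer has
  // already moved past its end: ask for a checkpoint in the next file.
  if (oldest_file->end_lsn > checkpoint_lsn &&
      current_lsn >= oldest_file->end_lsn) {
    return oldest_file->end_lsn + kLogBlockHdrSize;
  }
  return std::nullopt;
}
```

With an oldest file ending at LSN 1000, a checkpoint at 900 and a current LSN of 1500, the sketch asks for a checkpoint at 1012, just past the header of the first block of the next file. In the real code the resulting LSN is then handed to log_request_checkpoint_low, which sets checkpointer_event.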

Once redo has been generated, there are three consumers in total:

1. MEB (MySQL Enterprise Backup) consumer

2. redo archiving consumer

3. checkpoint consumer
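
As a rough illustration of how the slowest of these consumers limits which redo can be cleaned up, here is a minimal sketch. Consumer and oldest_consumer are hypothetical stand-ins for Log_consumer and log_consumer_get_oldest, not the real classes; the only behaviour assumed is that each consumer reports a consumed LSN and the smallest one wins.

```cpp
#include <cassert>
#include <cstdint>
#include <limits>
#include <string>
#include <vector>

using lsn_t = std::uint64_t;

// Hypothetical stand-in for a Log_consumer: a name plus the LSN up to
// which this consumer no longer needs the redo.
struct Consumer {
  std::string name;
  lsn_t consumed_lsn;
};

// Returns the consumer that lags furthest behind (nullptr if there is
// none) and stores its consumed LSN in oldest_needed_lsn.
const Consumer *oldest_consumer(const std::vector<Consumer> &consumers,
                                lsn_t &oldest_needed_lsn) {
  const Consumer *oldest = nullptr;
  oldest_needed_lsn = std::numeric_limits<lsn_t>::max();
  for (const Consumer &c : consumers) {
    if (oldest == nullptr || c.consumed_lsn < oldest_needed_lsn) {
      oldest_needed_lsn = c.consumed_lsn;
      oldest = &c;
    }
  }
  // Postcondition: the reported LSN belongs to the returned consumer.
  assert(oldest == nullptr || oldest->consumed_lsn == oldest_needed_lsn);
  return oldest;
}
```

Redo below the returned LSN is no longer needed by anyone; redo at or above it must be kept. With only the checkpoint consumer registered, that LSN is simply last_checkpoint_lsn.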

Log_files_stats::update

->log_files_oldest_needed_lsn

->log_consumer_get_oldest

log_files_mark_consumed_file

->log_files_oldest_needed_lsn

(gdb) p log_sys->m_files->m_files_by_id

Python Exception <class 'gdb.error'> There is no member or method named _M_value_field.:

$5 = std::map with 1 elements

redo_log_consumer_register

redo_log_consumer_unregister

Recycling is performed by the governor thread.

log_files_governor

-> const auto sig_count = os_event_reset(log.m_files_governor_event)

Reset the log.m_files_governor_event event and record the signal count.

-> log_files_governor_iteration

->Iteration_result result = log_files_governor_iteration_low(log, false)

->log_files_update_capacity_limits(log)

->log_files_adjust_unused_files_sizes

-> if (log_files::is_consumption_needed(log))

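Decide whether old redo log files need to be recycled, i.e. whether old redo log files can be removed.

The key question is whether any redo space is still available, i.e. whether any unused file with the _tmp suffix exists.

A: whether log_writer cannot write to a file, which means all redo space is occupied and files must be recycled into _tmp files.

B: whether m_unused_files_count is already 0, i.e. every redo file has been used in this round,

although the current redo file may still have free space.

C and D relate to resizing: changing the redo size online triggers a cleanup and a resize of

the redo files, but this only applies to redo files that have been fully consumed.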

->log_files_mark_consumed_files(log)

If a new redo file is needed, log_files_mark_consumed_files is called

to mark the redo files that can be cleaned up; they are then renamed to unused files with the _tmp suffix.

->log_files_mark_consumed_files

const lsn_t oldest_lsn = log_files_oldest_needed_lsn(log)

->log_consumer_get_oldest(log, oldest_need_lsn)

->for (auto consumer : log.m_consumers)

Loop over every consumer (normally only the checkpoint consumer exists) and find the consumer with the oldest LSN.

oldest_consumer

->return oldest_consumer

Return this consumer; its LSN is passed back through the oldest_need_lsn argument.

->log_files_for_each..

Call the following function for each file in log.m_files.

if (!file.m_consumed && file.m_end_lsn <= oldest_lsn){

log_files_mark_consumed_file(log, file.m_id)}

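A: the file currently has m_consumed=false, i.e. it has not yet been marked as cleanable.

B: the file's end LSN is less than or equal to oldest_lsn.

If both hold, the file can be cleaned up, so it is marked.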

->log_files_mark_consumed_file(log, file.m_id)

-> log.m_files.set_consumed(file_id)

Log_files_dict::set_consumed

->it->second.m_consumed = true

Set m_consumed to true.

->if (log_files_number_of_consumed_files(log.m_files) != 0)

->std::count_if(files.begin(), files.end()

Iterate over file.m_consumed of every file

and count the ones that are true.

->If any redo file needs to be cleaned up, call

log_files_process_consumed_files

->log_files_for_each...

Call the following function for each file in log.m_files.

if (file.m_consumed){to_process.push_back(file.m_id);}

Push the file id into the vector to_process.

->for (Log_file_id file_id : to_process)

Iterate over the file_ids that need processing.

-> if (!log_files_process_consumed_file(log, file_id))

->const os_offset_t unused_file_size = log.m_capacity.next_file_size();

The file size, i.e. the size of the redo file after recycling.

->if (log_files::might_recycle_file(log, file->m_size_in_bytes,unused_file_size))

Decide whether the file can be recycled; if so, recycle it.

->return log_files_recycle_file(log, file_id, unused_file_size)

->const auto file = log.m_files.file(file_id)

Get the file's attributes.

->dberr_t err = log_mark_file_as_unused(log.m_files_ctx, file_id)

->log_rename_file_low

Rename the file so that it carries the _tmp suffix.

-rw-r----- 1 mysql mysql 262144 May 19 11:25 #ib_redo236_tmp

->const auto next_unused_id = log_files_next_unused_id(log)

Give the _tmp file the next unused file number.

-rw-r----- 1 mysql mysql 262144 May 19 11:25 #ib_redo268_tmp

->log_resize_unused_file(log.m_files_ctx, next_unused_id, unused_file_size)

Resize the file; this only matters when the redo size has been changed.

Otherwise remove the file directly.

->return log_files_remove_consumed_file(log, file_id)

->log.m_unused_files_count++

Increment the count of unused _tmp files.

->if (log.m_requested_files_consumption && log_files_next_file_size(log) != 0)

->log.m_requested_files_consumption = false

Tell log_writer that recycling is done and a file is available.

->log.m_files_stats.update(log)

-> if (log_files_should_rush_oldest_file_consumption(log))

If that still did not help, i.e. there is no redo file that can be cleaned up.

->if (log_files_consuming_oldest_file_takes_too_long(log))

Whether consumption of the oldest file has not finished within 10 seconds.

->if (auto *consumer = log_consumer_get_oldest(log, oldest_needed_lsn))

->consumer->consumption_requested()

Signal the consumer that lags furthest behind, reminding it again to consume.

->if (log_files_filling_oldest_file_takes_too_long(log))

Whether filling the oldest file has not finished within 10 seconds.

-> if (log_files_is_truncate_allowed(log))

Only when the checkpoint consumer is the sole consumer.

-> if (has_writer_mutex)

If the writer mutex is held, the current redo file can be truncated ???

->log_files_truncate(log)

->Otherwise retry

return Iteration_result::RETRY_WITH_WRITER_MUTEX

.....

->os_event_wait_time_low(log.m_files_governor_event, std::chrono::milliseconds{10}, sig_count);

Wait for up to 10 milliseconds.
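
The mark-then-recycle part of the governor iteration traced above can be modelled roughly as follows. This is only a sketch under simplifying assumptions: RedoFile and RedoLog are hypothetical, heavily reduced versions of the real structures, no file is actually renamed or resized, and recycling is reduced to removing the entry from the in-use map and incrementing the unused counter.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

using lsn_t = std::uint64_t;
using file_id_t = std::uint64_t;

// Hypothetical, simplified redo file entry.
struct RedoFile {
  lsn_t end_lsn = 0;      // LSN at which the file ends
  bool consumed = false;  // true once every consumer is past end_lsn
};

// Hypothetical, simplified slice of log_t.
struct RedoLog {
  std::map<file_id_t, RedoFile> files;  // files in use, ordered by id
  std::size_t unused_files_count = 0;   // number of *_tmp files
};

// Step 1: mark every file whose end LSN is not above the oldest LSN
// still needed by any consumer. Returns how many files were newly marked.
std::size_t mark_consumed_files(RedoLog &log, lsn_t oldest_needed_lsn) {
  std::size_t marked = 0;
  for (auto &entry : log.files) {
    RedoFile &file = entry.second;
    if (!file.consumed && file.end_lsn <= oldest_needed_lsn) {
      file.consumed = true;
      ++marked;
    }
  }
  return marked;
}

// Step 2: collect the marked ids first, then turn each one into an unused
// file. Collecting first avoids erasing from the map while iterating it.
std::vector<file_id_t> process_consumed_files(RedoLog &log) {
  std::vector<file_id_t> to_process;
  for (const auto &entry : log.files) {
    if (entry.second.consumed) to_process.push_back(entry.first);
  }
  for (file_id_t id : to_process) {
    assert(log.files.count(id) == 1);
    log.files.erase(id);        // no longer an in-use redo file
    ++log.unused_files_count;   // now counted as a *_tmp file
  }
  return to_process;
}
```

For example, with three files ending at LSN 1000, 2000 and 3000 and an oldest needed LSN of 2000, the first two are marked and recycled while the third stays in use. A reader such as a backup tool that is still reading one of the recycled files would then find its content replaced, which is the kind of window the mismatch error comes from.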

Adding files and notification

log_writer_write_buffer

-> const lsn_t checkpoint_limited_lsn =

log_writer_wait_on_checkpoint(log, last_write_lsn, next_write_lsn)

->log_writer_wait_on_archiver(log, next_write_lsn)

->log_writer_wait_on_consumers

->log_write_buffer

->Log_files_write_impl::start_next_file

->log_files_produce_file

->log_files_create_file

->Log_files_dict::add

->log_writer_write_failed

->log_files_wait_for_next_file_available

->log.m_requested_files_consumption =