
Building the k8s cluster

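Obstacles in this environment: 1) the GFW; 2) Internet access has to go through a proxy (few personal PCs can match production-grade hardware, which often has hundreds of GB of RAM, and for network-security reasons applications are unlikely to be placed on a personal computer, so most work depends on the company's intranet servers); 3) strict software version requirements (to follow a tutorial, every version has to match it exactly).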

Nodes (hostname, IP):

cent7-k8s-master *.*.206.40

cent7-k8s-slave01 *.*.206.41

cent7-k8s-slave02 *.*.206.42

cent7-k8s-slave03 *.*.206.24

cent7-k8s-slave04 *.*.206.25

Export each VM to OVF with ovftool, then start the VMs with vmrun:

ovftool /home/wmare/cent7-k8s-master/cent7-k8s-master.vmx /home/virtual/cent7-k8s-master.ovf

ovftool /home/wmare/cent7-k8s-slave01/cent7-k8s-slave01.vmx /home/virtual/cent7-k8s-slave01.ovf

ovftool /home/wmare/cent7-k8s-slave02/cent7-k8s-slave02.vmx /home/virtual/cent7-k8s-slave02.ovf

ovftool /home/wmare/cent7-k8s-slave03/cent7-k8s-slave03.vmx /home/virtual/cent7-k8s-slave03.ovf

ovftool /home/wmare/cent7-k8s-slave04/cent7-k8s-slave04.vmx /home/virtual/cent7-k8s-slave04.ovf

vmrun -T ws start /home/wmare/cent7-k8s-master/cent7-k8s-master.vmx

vmrun -T ws start /home/wmare/cent7-k8s-slave01/cent7-k8s-slave01.vmx

vmrun -T ws start /home/wmare/cent7-k8s-slave02/cent7-k8s-slave02.vmx

vmrun -T ws start /home/wmare/cent7-k8s-slave03/cent7-k8s-slave03.vmx

vmrun -T ws start /home/wmare/cent7-k8s-slave04/cent7-k8s-slave04.vmx

cat >> /etc/hosts <<EOF
*.*.206.40 cent7-k8s-master
*.*.206.41 cent7-k8s-slave01
*.*.206.42 cent7-k8s-slave02
EOF
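Running `cat >> /etc/hosts` a second time appends duplicate entries. A minimal sketch of an idempotent alternative, assuming a hypothetical helper `add_host_entry` and a `HOSTS_FILE` variable (default `/etc/hosts`) that exists only so the function can be tried on a scratch file:

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless HOSTNAME is already
# listed on a non-comment line. HOSTS_FILE defaults to /etc/hosts.
add_host_entry() {
    ip=$1
    name=$2
    file=${HOSTS_FILE:-/etc/hosts}
    # awk exits 0 when the hostname appears as any field after the IP, 1 otherwise
    # (and non-zero as well if the file does not exist yet).
    if awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Call it once per node with the real address, e.g. `add_host_entry <master-ip> cent7-k8s-master`; re-running the same call leaves the file unchanged.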

Generate an RSA key (OpenSSH 7.0 and later disable DSA keys by default) and copy it to every host:

ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub root@cent7-k8s-master

ssh-copy-id -i ~/.ssh/id_rsa.pub root@cent7-k8s-slave01

ssh-copy-id -i ~/.ssh/id_rsa.pub root@cent7-k8s-slave02

yum install -y epel-release

yum install -y ansible

cat >> /etc/ansible/hosts <<EOF
[k8scluster]
cent7-k8s-master
cent7-k8s-slave01
cent7-k8s-slave02
[node]
cent7-k8s-slave01
cent7-k8s-slave02
EOF

ansible node -mcopy -a'src=/etc/hosts dest=/etc/hosts'

ansible all -i cent7-k8s-master, -mshell -a'hostnamectl set-hostname cent7-k8s-master' -u root

ansible all -i cent7-k8s-slave01, -mshell -a'hostnamectl set-hostname cent7-k8s-slave01' -u root

ansible all -i cent7-k8s-slave02, -mshell -a'hostnamectl set-hostname cent7-k8s-slave02' -u root

ansible all -i cent7-k8s-slave03, -mshell -a'hostnamectl set-hostname cent7-k8s-slave03' -u root

ansible all -i cent7-k8s-slave04, -mshell -a'hostnamectl set-hostname cent7-k8s-slave04' -u root
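The five hostname commands above differ only in the host name, so they can be folded into a loop. A sketch, assuming a hypothetical function `set_hostnames` and an `ANSIBLE` variable (default `ansible`) that can be set to `echo` for a dry run:

```shell
# Set each node's hostname with one ansible call per host.
# ANSIBLE defaults to "ansible"; ANSIBLE=echo prints the commands instead of running them.
set_hostnames() {
    for h in "$@"; do
        # The trailing comma makes ansible treat "$h," as an inline one-host inventory.
        ${ANSIBLE:-ansible} all -i "$h," -m shell -a "hostnamectl set-hostname $h" -u root
    done
}
```

Usage: `set_hostnames cent7-k8s-master cent7-k8s-slave01 cent7-k8s-slave02 cent7-k8s-slave03 cent7-k8s-slave04`.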

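ansible all -mcommand -a'sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config'

(/etc/sysconfig/selinux is a symlink to /etc/selinux/config; sed -i on the symlink would replace the link with a plain file and leave the real config unchanged, so edit the target directly.)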

ansible all -mcommand -a'setenforce 0'

ansible all -mcommand -a'systemctl stop firewalld'

ansible all -mcommand -a'systemctl disable firewalld'

ansible all -mcommand -a'swapoff -a'

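ansible all -m shell -a 'echo "vm.swappiness = 0" >> /etc/sysctl.conf'

(The command module does not pass arguments through a shell, so redirections such as >> need the shell module.)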

ansible all -mcommand -a'sysctl -p'

ansible all -mcommand -a'sed -i "s/^.*centos_centos7-swap/#&/g" /etc/fstab'
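The sed above only matches the swap LV name of this particular install (centos_centos7-swap). A more general sketch, assuming a hypothetical function `comment_swap_entries` and an `FSTAB` variable (default `/etc/fstab`), comments out any fstab entry whose filesystem type is `swap`:

```shell
# Comment out every active swap entry in an fstab-format file, whatever the device is
# called, keeping a .bak copy. FSTAB defaults to /etc/fstab.
comment_swap_entries() {
    f=${FSTAB:-/etc/fstab}
    cp "$f" "$f.bak" || return 1
    # Field 3 of an fstab line is the filesystem type; prefix "#" when it is "swap".
    # Lines that are already comments are passed through untouched.
    awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$f.bak" > "$f"
}
```

Together with `swapoff -a`, this keeps swap disabled after a reboot.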

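Kubernetes aims to pack instances as close to 100% utilization as possible, and every deployment should be pinned to fixed CPU and memory limits. So when the scheduler places a pod on a machine, that pod should not use swap; the designers did not want swapping because it slows everything down. Disabling swap is therefore mainly a performance measure. In resource-saving scenarios, for example when running a large number of containers, you can instead add the kubelet flag --fail-swap-on=false.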
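A sketch of setting that flag, assuming the RPM-installed kubelet reads `KUBELET_EXTRA_ARGS` from `/etc/sysconfig/kubelet` (as the kubeadm systemd drop-in does on CentOS). The function name `allow_swap_for_kubelet` and the `KUBELET_SYSCONFIG` override are hypothetical:

```shell
# Write KUBELET_EXTRA_ARGS=--fail-swap-on=false, replacing (not merging with) any
# existing KUBELET_EXTRA_ARGS line. KUBELET_SYSCONFIG defaults to /etc/sysconfig/kubelet.
allow_swap_for_kubelet() {
    f=${KUBELET_SYSCONFIG:-/etc/sysconfig/kubelet}
    if grep -q '^KUBELET_EXTRA_ARGS=' "$f" 2>/dev/null; then
        sed -i 's/^KUBELET_EXTRA_ARGS=.*/KUBELET_EXTRA_ARGS=--fail-swap-on=false/' "$f"
    else
        echo 'KUBELET_EXTRA_ARGS=--fail-swap-on=false' >> "$f"
    fi
}
```

Restart kubelet afterwards. kubeadm's own preflight check also rejects enabled swap, so `kubeadm init` / `kubeadm join` would additionally need `--ignore-preflight-errors=Swap` in that setup.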

ansible all -mcommand -a'yum install -y telnet'

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

sysctl -p /etc/sysctl.d/k8s.conf

ansible node -mcopy -a'src=/etc/sysctl.d/k8s.conf dest=/etc/sysctl.d/k8s.conf'

ansible node -mcommand -a'sysctl -p /etc/sysctl.d/k8s.conf'
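The `net.bridge.bridge-nf-call-*` keys only exist once the `br_netfilter` kernel module is loaded, so `sysctl` can fail on a fresh node. A sketch that makes systemd load the module at every boot, assuming a hypothetical function `persist_br_netfilter`, a file name `k8s.conf` of our choosing, and a `MODULES_DIR` override (default `/etc/modules-load.d`):

```shell
# Register br_netfilter with systemd-modules-load so it is loaded on every boot.
# MODULES_DIR defaults to /etc/modules-load.d; the file name k8s.conf is arbitrary.
persist_br_netfilter() {
    dir=${MODULES_DIR:-/etc/modules-load.d}
    mkdir -p "$dir" || return 1
    echo br_netfilter > "$dir/k8s.conf"
}
```

For the running system, load it right away before applying the sysctl file, e.g. `ansible all -m command -a 'modprobe br_netfilter'`.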

ansible all -mcommand -a'yum install -y ntpdate'

ansible all -mcommand -a'ntpdate ntp1.aliyun.com'

ansible all -mcommand -a'yum install -y ntp'

ansible all -mcommand -a'systemctl enable ntpd'

ansible all -mcommand -a'systemctl start ntpd'

ansible all -mcommand -a'timedatectl set-timezone Asia/Shanghai'

ansible all -mcommand -a'timedatectl set-ntp yes'

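ansible all -mcommand -a'hwclock --systohc'

(--systohc writes the NTP-synchronized system time to the hardware clock; --hctosys would do the opposite and overwrite the synchronized time with the hardware clock.)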

ansible all -mcommand -a'timedatectl set-local-rtc 0'

ansible all -mcommand -a'hwclock --show'

ansible all -mcommand -a'yum install -y yum-utils device-mapper-persistent-data lvm2'

ansible all -mcommand -a'yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo'

ansible all -mcommand -a'yum makecache fast'

ansible all -mcommand -a'yum -y update'

ansible all -mcommand -a'yum -y install docker-ce-18.06.2.ce'

ansible all -mcommand -a'systemctl start docker'

cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "registry-mirrors": ["https://ya78xgj1.mirror.aliyuncs.com"]
}
EOF

ansible node -mcopy -a'src=/etc/docker/daemon.json dest=/etc/docker/daemon.json'

ansible all -mcommand -a'systemctl daemon-reload'

ansible all -mcommand -a'systemctl enable docker'

ansible all -mcommand -a'systemctl restart docker'

ansible all -mcommand -a'docker images'
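kubelet and Docker have to agree on the cgroup driver, and daemon.json sets it to `systemd`. A small sketch for confirming that after the restart, assuming a hypothetical filter `cgroup_driver` that reads `docker info` output on stdin:

```shell
# Print the value of the "Cgroup Driver" line from `docker info` output on stdin.
cgroup_driver() {
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}
```

Usage on a node: `docker info 2>/dev/null | cgroup_driver` should print `systemd`.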

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

ansible node -mcopy -a'src=/etc/yum.repos.d/kubernetes.repo dest=/etc/yum.repos.d/kubernetes.repo'

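ansible all -mcommand -a'yum install -y kubelet-1.16.1 kubeadm-1.16.1 kubectl-1.16.1'

(The packages are pinned to the version passed to kubeadm init below, v1.16.1; an unpinned install pulls the newest release, whose kubeadm may refuse to deploy a control plane that old.)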

ansible all -mcommand -a'systemctl enable kubelet'

ansible all -mcommand -a'systemctl start kubelet'

ansible all -m command -a 'cp /etc/yum.conf /etc/yum.conf.old'

cat <<EOF >> /etc/yum.conf
exclude=docker* kube*
EOF

ansible node -m copy -a 'src=/etc/yum.conf dest=/etc/yum.conf'

kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.16.1 --pod-network-cidr=10.244.0.0/16

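kubeadm init ends by printing the command that worker nodes use to join the cluster:

kubeadm join *.*.206.40:6443 --token 6r4dak.9409ob3rqcwo0091 \
    --discovery-token-ca-cert-hash sha256:fe694b09bdf11ddee1328cc52868eb55e62022aa633f3c1ea06a2a3e1aaac669

Note: if you forgot to save the token, kubeadm token list shows the existing tokens. Tokens expire after 24 hours by default; kubeadm token create --print-join-command creates a new token and prints the complete join command, including the CA certificate hash.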
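The `--discovery-token-ca-cert-hash` value can also be recomputed from the cluster CA. A sketch following the openssl pipeline given in the kubeadm join documentation, assuming an RSA CA key (kubeadm's default) and a hypothetical function name `ca_cert_hash`:

```shell
# Print the SHA-256 hash of the CA certificate's public key (SubjectPublicKeyInfo, DER),
# which is what kubeadm expects after "sha256:". The first argument defaults to the
# kubeadm CA path.
ca_cert_hash() {
    cert=${1:-/etc/kubernetes/pki/ca.crt}
    openssl x509 -pubkey -noout -in "$cert" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

On the master, `echo "sha256:$(ca_cert_hash)"` gives the value to pair with a token from `kubeadm token create`.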

Run docker search flannel to look up flannel images in the Docker registry, then pull the latest version:

docker pull jmgao1983/flannel:v0.11.0-amd64

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

ansible node -m command -a 'mkdir -p $HOME/.kube'

ansible node -m copy -a 'src=/root/.kube/config dest=/root/.kube/'

cd /root/.kube

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

sed -i 's#quay.io/coreos/flannel:v0.11.0-amd64#jmgao1983/flannel:v0.11.0-amd64#g' kube-flannel.yml

kubectl apply -f kube-flannel.yml
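If the upstream kube-flannel.yml no longer references `quay.io/coreos/flannel:v0.11.0-amd64`, the sed above silently changes nothing and the nodes still try to pull from quay.io. A sketch of a checked replacement, assuming a hypothetical function `replace_image`:

```shell
# Replace one image reference with another in a manifest, failing if the old
# reference is not present. FROM is used as a sed regex, so "." matches any character.
replace_image() {
    file=$1 from=$2 to=$3
    grep -qF "$from" "$file" || { echo "image $from not found in $file" >&2; return 1; }
    # "#" is the sed delimiter because image names contain "/".
    sed -i "s#$from#$to#g" "$file"
}
```

Usage: `replace_image kube-flannel.yml quay.io/coreos/flannel:v0.11.0-amd64 jmgao1983/flannel:v0.11.0-amd64 && kubectl apply -f kube-flannel.yml`.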

kubectl taint nodes --all node-role.kubernetes.io/master-

ansible node -m command -a 'kubeadm join *.*.206.40:6443 --token 3k99j7.d0ovp7pwnlmkfmdp --discovery-token-ca-cert-hash sha256:b65bcaa046d002ed167f00692755020248eb6c1d52bee409c13f4a067a285f85'

kubectl get nodes

NAME STATUS ROLES AGE VERSION
cent7-k8s-master Ready master 2d22h v1.16.1
cent7-k8s-slave01 Ready <none> 2d22h v1.16.1
cent7-k8s-slave02 Ready <none> 2d22h v1.16.1
cent7-k8s-slave03 Ready <none> 2d22h v1.16.1
cent7-k8s-slave04 Ready <none> 2d22h v1.16.1


docker pull rancher/rancher

docker run -d --name=rancher-ui --restart=unless-stopped -p 80:80 -p 443:443 rancher/rancher

(Rancher v2.5 and later must also be started with --privileged.)

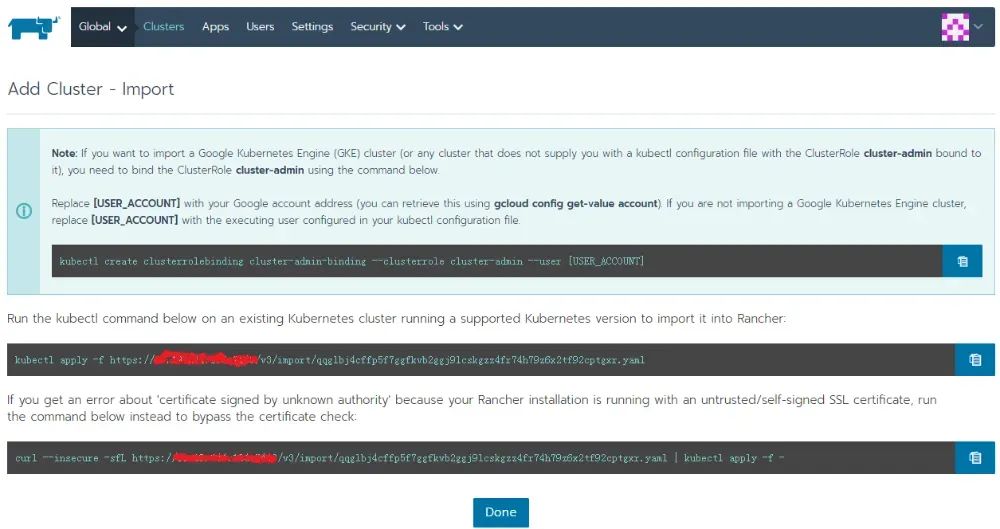

In the Rancher UI, choose Add Cluster > Import an existing cluster and set Cluster Name to Kubernetes. Rancher then shows an import command; run it on the master:

curl --insecure -sfL https://*.*.206.40/v3/import/qqglbj4cffp5f7ggfkvb2ggj9lcskgzz4fr74h79z6x2tf92cptgxr.yaml | kubectl apply -f -

kubectl get ns

kubectl get pods -n cattle-system -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
cattle-system cattle-cluster-agent-5f49cbcb67-r8wbl 1/1 Running 0 47s 10.244.1.29 cent7-k8s-slave03 <none> <none>
cattle-system cattle-node-agent-4mzvh 1/1 Running 0 9s *.*.133.25 cent7-k8s-slave04 <none> <none>
cattle-system cattle-node-agent-dmg96 1/1 Running 0 5s *.*.133.41 cent7-k8s-slave01 <none> <none>
cattle-system cattle-node-agent-fx59q 1/1 Running 0 25s *.*.133.40 cent7-k8s-master <none> <none>
cattle-system cattle-node-agent-j57sn 1/1 Running 0 39s *.*.133.42 cent7-k8s-slave02 <none> <none>
cattle-system cattle-node-agent-w55nt 1/1 Running 0 18s *.*.133.24 cent7-k8s-slave03 <none> <none>
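With more pods it is easier to list only the ones that are not healthy yet. A sketch, assuming a hypothetical filter `not_ready_pods` that reads `kubectl get pods` output on stdin; it locates the NAME, READY and STATUS columns from the header, so it works with or without the NAMESPACE column:

```shell
# Print the name of every pod whose READY count is incomplete or whose STATUS is not
# Running. Note that finished Job pods (STATUS Completed) are reported as well.
not_ready_pods() {
    awk '
        NR == 1 {
            for (i = 1; i <= NF; i++) {
                if ($i == "NAME") n = i
                if ($i == "READY") r = i
                if ($i == "STATUS") s = i
            }
            next
        }
        {
            split($r, c, "/")
            if (c[1] != c[2] || $s != "Running") print $n
        }'
}
```

Usage: `kubectl get pods -n cattle-system | not_ready_pods`; empty output means every agent is up.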

Relocating the k8s cluster

Two approaches: change the IP addresses of the existing cluster in place, or rebuild the cluster.

sed -i "s/*.*.206.40/*.*.133.40/g" `grep -rl "*.*.206.40" /etc/`

sed -i "s/*.*.206.40/*.*.133.40/g" `grep -rl "*.*.206.40" $HOME/`

mv $HOME/.kube/cache/discovery/*.*.206.40_6443 $HOME/.kube/cache/discovery/*.*.133.40_6443

ansible all -mcommand -a'systemctl daemon-reload'

ansible node -mcommand -a'reboot'

reboot

mv /etc/kubernetes/pki/apiserver.key /etc/kubernetes/pki/apiserver.key.old

mv /etc/kubernetes/pki/apiserver.crt /etc/kubernetes/pki/apiserver.crt.old

mv /etc/kubernetes/pki/apiserver-kubelet-client.crt /etc/kubernetes/pki/apiserver-kubelet-client.crt.old

mv /etc/kubernetes/pki/apiserver-kubelet-client.key /etc/kubernetes/pki/apiserver-kubelet-client.key.old

mv /etc/kubernetes/pki/front-proxy-client.crt /etc/kubernetes/pki/front-proxy-client.crt.old

mv /etc/kubernetes/pki/front-proxy-client.key /etc/kubernetes/pki/front-proxy-client.key.old

kubeadm init phase certs all
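The regenerated apiserver certificate should now list the new master IP among its Subject Alternative Names. A sketch of that check, assuming a hypothetical filter `cert_lists_ip` that reads `openssl x509 -text` output on stdin and matches the IP exactly (so 192.0.2.4 does not match 192.0.2.40):

```shell
# Succeed only if the certificate text on stdin contains "IP Address:<ip>" as a
# complete SAN entry. SAN entries are comma-separated on one line.
cert_lists_ip() {
    tr ',' '\n' | sed 's/^[[:space:]]*//' | grep -qxF "IP Address:$1"
}
```

Usage: `openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | cert_lists_ip <new-master-ip> && echo ok`.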

kubectl get configmaps -n kube-system -o yaml > kube-system_configmaps.yaml

kubectl get configmaps -n kube-public -o yaml > kube-public_configmaps.yaml

perl -p -i -e's/*.*.206.40/*.*.133.40/g' kube-system_configmaps.yaml

perl -p -i -e's/*.*.206.40/*.*.133.40/g' kube-public_configmaps.yaml

kubectl apply -f kube-system_configmaps.yaml

kubectl apply -f kube-public_configmaps.yaml

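ansible node -mcopy -a'src=/etc/ntp.conf dest=/etc/ntp.conf'

ansible all -mcommand -a'systemctl restart ntpd'

ansible all -mcommand -a'ntpdate -u XX.XX.244.52'

(ntpd is restarted so that it rereads the copied ntp.conf; -u lets ntpdate run while ntpd already holds the NTP port.)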

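If changing the IPs in place does not work, rebuild the cluster instead: reset each node, restart kubelet, and then repeat the kubeadm init and kubeadm join steps from the build section.

kubeadm reset

systemctl daemon-reload && systemctl restart kubelet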

Migrating k8s applications

Resources to migrate: configmaps, deployments, endpoints, limitranges, namespaces, secrets, services.

kubectl get configmaps -n business -o yaml > configmaps_default.yaml

kubectl get deployments -n business -o yaml > deployments_default.yaml

kubectl get secrets sc-first -n business -o yaml > sc-first.yaml

kubectl get secrets sc-second -n business -o yaml > sc-second.yaml

kubectl get secrets sc-third -n default -o yaml > sc-third.yaml
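The exports above can be collected in one loop per namespace. A sketch, assuming a hypothetical function `export_namespace`, a `<namespace>_<kind>.yaml` file-naming convention of our own, and a `KUBECTL` override (default `kubectl`) for dry runs. Secrets are left out on purpose, matching the article's approach of exporting named secrets only, so auto-generated service-account token secrets are not copied:

```shell
# Export the namespace object and its configmaps, deployments, endpoints, limitranges
# and services, one YAML file per type, into OUT (default: current directory).
export_namespace() {
    ns=$1
    out=${2:-.}
    mkdir -p "$out" || return 1
    ${KUBECTL:-kubectl} get namespace "$ns" -o yaml > "$out/${ns}_namespace.yaml" || return 1
    for kind in configmaps deployments endpoints limitranges services; do
        ${KUBECTL:-kubectl} get "$kind" -n "$ns" -o yaml > "$out/${ns}_${kind}.yaml" || return 1
    done
}
```

The exported YAML keeps cluster-specific fields (uid, resourceVersion, status, and the services' clusterIP), which may need to be removed before applying it to the new cluster.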

kubectl apply -f configmaps_default.yaml --validate=false

kubectl apply -f deployments_default.yaml --validate=false

kubectl apply -f sc-first.yaml --validate=false

kubectl label node cent7-k8s-slave03 zone="xxx"

docker save -o business_app.tar *.*.133.100:20202/business/business_app:1.1

docker load -i business_app.tar

kubectl expose deployment business_app -n business --port=19001 --target-port=19002

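--port is the Service port (the port on the automatically allocated ClusterIP); --target-port is the port the container listens on.

To make the service reachable from outside the cluster, expose it as a NodePort service instead (delete the ClusterIP service created above first, since both use the same name):

kubectl expose deployment business_app -n business --port=19001 --target-port=19002 --type=NodePort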
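To see where each flag lands, here is a sketch that prints a Service manifest roughly equivalent to the `kubectl expose` call. The function `render_service` is hypothetical, and the selector `app: <name>` is an assumption: `kubectl expose` copies the deployment's actual selector instead.

```shell
# Print a ClusterIP Service manifest: --port maps to spec.ports[].port and
# --target-port maps to spec.ports[].targetPort. The "app: NAME" selector is assumed.
render_service() {
    name=$1 ns=$2 port=$3 target=$4
    cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $name
  namespace: $ns
spec:
  selector:
    app: $name
  ports:
  - port: $port
    targetPort: $target
EOF
}
```

Usage: `render_service <service-name> business 19001 19002 | kubectl apply -f -`, after checking that the selector matches the deployment's pod labels.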

kubectl get svc -n business business_app -o yaml > business_app.yaml

perl -p -i -e's/nodePort\: 30142/nodePort\: 19001/g' business_app.yaml

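kubectl apply -f business_app.yaml

Note that 19001 is outside the default NodePort range (30000-32767); the apply only succeeds if kube-apiserver runs with a --service-node-port-range that includes it.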
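On a kubeadm cluster, kube-apiserver runs as a static pod, and the kubelet restarts it whenever its manifest changes. A sketch that sets `--service-node-port-range` in that manifest, assuming a hypothetical function `widen_nodeport_range`, GNU sed, an `APISERVER_MANIFEST` override, and an example range of 10000-32767 (pick your own):

```shell
# Add or update --service-node-port-range in the kube-apiserver static-pod manifest.
# APISERVER_MANIFEST defaults to the kubeadm path; the range argument is an example.
widen_nodeport_range() {
    f=${APISERVER_MANIFEST:-/etc/kubernetes/manifests/kube-apiserver.yaml}
    range=${1:-10000-32767}
    if grep -q -- '--service-node-port-range=' "$f"; then
        sed -i "s/--service-node-port-range=.*/--service-node-port-range=$range/" "$f"
    else
        # Insert a new list item right after "- kube-apiserver", reusing its indentation
        # (GNU sed turns \n in the replacement into a newline).
        sed -i "s/^\([[:space:]]*\)- kube-apiserver\$/&\n\1- --service-node-port-range=$range/" "$f"
    fi
}
```

Keep any backup copy of the manifest outside /etc/kubernetes/manifests; the kubelet would otherwise try to run it as a second apiserver pod.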

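Author: 徐兴强 (Xu Xingqiang), 上海新炬中北团队 (Shanghai Xinju, Zhongbei team)

Source: the "IT那活儿" WeChat official account

This article is reprinted from IT那活儿. If you believe it infringes your rights, email contact@modb.pro with supporting evidence; once verified, 墨天轮 (modb.pro) will remove the content immediately.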