提问:作为DBA运维的你是否遇到过这些烦恼

1、现场进行项目部署测试时,突然发生slave节点的sql线程异常?

2、唯一索引列中居然存在重复数据,且因唯一键重复导致数据无法插入?

心中有章,遇事不慌

作为DBA的你,遇到问题无从下手,除了在问题面前徘徊,还能如何选择?如果你一次或多次遇到该问题还是无法解决,又很懊恼,该如何排忧呢?关注公众号,关注《包拯断案》专栏,让小编为你排忧解难~

一整套故障排错及应对策略送给你,让你像包拯一样断案如神:

#首先

遇到此类问题后,我们要做到心中有章(章程),遇事不慌。一定要冷静,仔细了解故障现象(与研发/用户仔细沟通其反馈的问题,了解故障现象、操作流程、数据库架构等信息)

#其次

我们要根据故障现象进行初步分析。心中要想:什么情况下,客户会禁用“会话级唯一性检查”?例如:是客户输入了重复数据,还是因为误操作导致的?

#然后

针对上述思考,我们需要逐步验证并排除,确定问题排查方向。

#接着

确定了问题方向,进行具体分析。通过现象得出部分结论,通过部分结论继续排查并论证。

#最后

针对问题有了具体分析后,再进行线下复现,最终梳理故障报告。

真刀实战,我们能赢

说了这么多理论,想必实战更让你心动。那我们就拿一个真实案例进行分析——某商业银行客户的开发测试环境,使用GreateRouter三节点集中式数据库产品,后端的数据节点是1主2从半同步复制GreatDB。

现场DBA发现slave节点的sql线程异常,show slave status检查Slave_SQL_Running=No,Last_Errno=1062。

greatdb> show slave status \G*************************** 1. row ***************************Slave_IO_State: Waiting for source to send event......Last_Errno: 1062Last_Error: Coordinator stopped because there were error(s) in the worker(s). The most recent failure being: Worker 1 failed executing transaction '1b79061d-71ae-11ef-9633-00163e2cb875:17436241' at master log greatdb-bin.000288, end_log_pos 362599392.. See error log and/or performance_schema.replication_applier_status_by_worker table for more details about this failure or others, if any.......

检查表performance_schema.replication_applier_status_by_worker,显示信息如下:

greatdb> select * from performanceschema.replication_applier_status \G....LAST_ERROR_NUMBER: 1062LAST_ERROR_MESSAGE: Wroker 1 failed executing transaction '1b79061d-71ae-11ef-9633-00163e2cb875:17436241' at master log greatdb-bin.000288, end_log_pos 362599392; Could not execute Write_rows event on table xxxx; Duplicate entry 'Ms001-0xx-20230901-1' for key 'fnd_rixin_intrt.UK0_Fnd_Rixin_intrt', Error_code: 1062; handler error HA_ERR_FOUND_DUPP_KEY; the event's master log First, end_log_pos 362599392...

提示表nfesp_fesl1_db_jy.fnd_rixin_intrt的唯一索引UK0_fnd_Rixin_Intrt有重复数据。

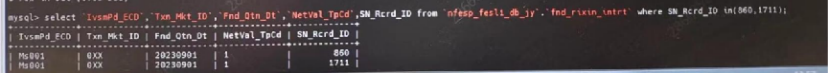

检查表nfesp_fesl1_db_jy.fnd_rixin_intrt的信息,有唯一索引UK0_fnd_Rixin_Intrt ( IvsmPd_ECD , Txn_Mkt_ID , Fnd_Qtn_Dt , NetVal_TpCd ) ,检查mysql主库中的数据,发现SN_Rcrd_ID=860和1711的2条数据唯一索引列完全相同。

问题总结

1主2从GreatDB架构中,nfesp_fesl1_db_jy.fnd_rixin_intrt表在master节点中,唯一索引列存在2条重复数据;重复数据的第2条在slave节点上回放时,由于唯一键重复而无法插入,导致sql_thread停止。

问题分析

使用greatsqlbinlog解析master节点的binlog文件greatdb-bin.000288,检查该事务是一个多值insert语句。在gtid事务中,有如下设置会话变量的信息:

SET @@session.foreign_key_checks=1, @@session.sql_auto_is_null=0,@@session.unique_checks=0, @@session.autocommit=1/*!*/;

该信息表明,在执行该事务时,用户修改了会话级变量unique_checks,禁用了唯一性检查。

2.1 源码分析

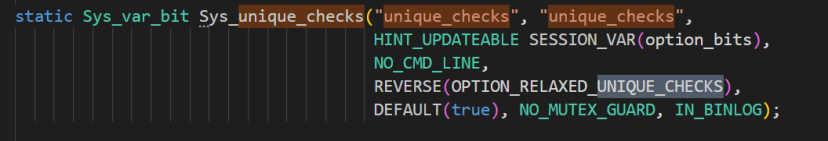

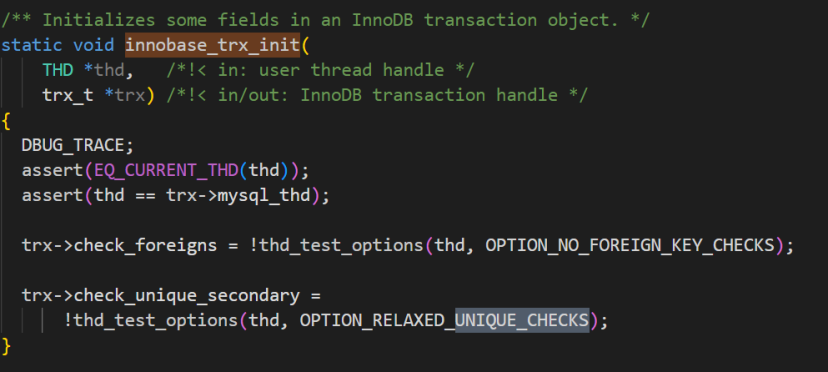

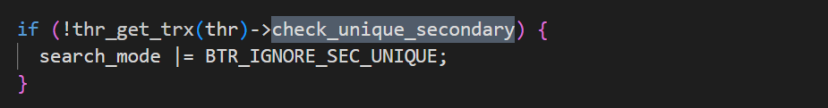

unique_checks 的设置是改变option_bits变量第OPTION_RELAXED_UNIQUE_CHECKS位的值,该值最终会保存到事务结构trx->check_unique_secondary上:

在函数row_ins_sec_index_entry_low中,转换为

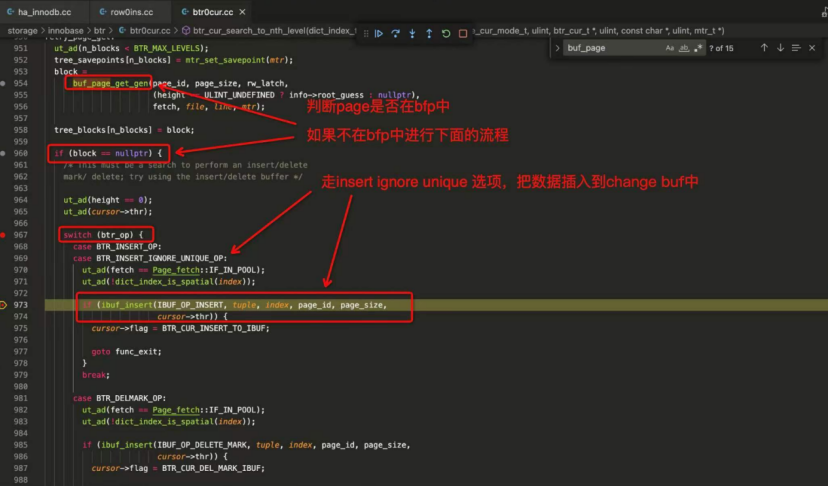

在函数btr_cur_search_to_nth_level(Searches an index tree and positions a tree cursor on a given level.)中的处理如下:

先在buffer pool中扫描寻找对应的页面,如果找不到(即block =nullptr),btr_op=BTR_INSERT_IGNORE_UNIQUE_OP,则不检查数据的唯一性,直接插入到change buffer中。

总结:从源码上看,满足以下几个条件可能导致唯一索引插入重复值

禁用唯一索引检查unique_checks=OFF

使用了change buffer

要更新的唯一索引页不在buffer pool中

2.2 问题复现

1.检查环境变量,使用change buffer

greatdb> show variables like 'unique%';+---------------+-------+| Variable_name | Value |+---------------+-------+| unique_checks | ON |+---------------+-------+1 row in set (0.01 sec)greatdb> show variables like 'innodb_change_buffering';+-------------------------+-------+| Variable_name | Value |+-------------------------+-------+| innodb_change_buffering | all |+-------------------------+-------+1 row in set (0.01 sec)

2.创建测试表sbtest_uq,在(c,pad)上创建唯一索引

CREATE TABLE `sbtest_uq` (`id` int NOT NULL AUTO_INCREMENT,`k` int NOT NULL DEFAULT '0',`c` char(120) NOT NULL DEFAULT '',`pad` char(60) NOT NULL DEFAULT '',PRIMARY KEY (`id`),KEY `k_1` (`k`),unique key `uk_c_pad`(`c`,`pad`)) ENGINE=InnoDB AUTO_INCREMENT=1000001 DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_0900_ai_ci;

3.在sbtest_uq中插入唯一索引列(c,pad)='1','1'的一条数据(id=1)

greatdb> truncate table sbtest_uq;Query OK, 0 rows affected (0.10 sec)greatdb> insert into sbtest_uq(id,k,c,pad) values(1,1,'1','1');Query OK, 1 row affected (0.01 sec)greatdb> insert into sbtest_uq select * from sbtest1 where id>1;Query OK, 999999 rows affected (55.99 sec)Records: 999999 Duplicates: 0 Warnings: 0

4.在master节点执行其他DML操作,将sbtest_uq从buffer pool中挤出

#'执行其他操作,将sbtest_uq从buffer pool中挤出'greatdb>truncate table sbtest12;Query OK, 0 rows affected (0.51 sec)greatdb>insert into sbtest12 select * from sbtest2;Query OK, 1000000 rows affected (28.36 sec)Records: 1000000 Duplicates: 0 Warnings: 0#'检查buffer pool中已没有sbtest_uq'greatdb>select * from sys.innodb_buffer_stats_by_table where object_schema='test' and object_name='sbtest_uq';Empty set (0.26 sec)

5.slave节点1:确认buffer pool中没有表sbtest_uq的数据

#'检查buffer pool中已经没有sbtest_uq'greatdb> select * from sys.innodb_buffer_stats_by_table where object_schema='test' and object_name='sbtest_uq';Empty set (0.26 sec)

6.slave节点2:将sbtest_uq的索引数据加载到buffer pool中

greatdb> select * from sbtest_uq where c='1' and pad='1';+----+---+---+-----+| id | k | c | pad |+----+---+---+-----+| 1 | 1 | 1 | 1 |+----+---+---+-----+1 row in set (0.19 sec)greatdb> select * from sys.innodb_buffer_stats_by_table where object_schema='test' and object_name='sbtest_uq';+---------------+-------------+------------+-----------+-------+--------------+-----------+-------------+| object_schema | object_name | allocated | data | pages | pages_hashed | pages_old | rows_cached |+---------------+-------------+------------+-----------+-------+--------------+-----------+-------------+| test | sbtest_uq | 112.00 KiB | 48.45 KiB | 7 | 0 | 7 | 413 |+---------------+-------------+------------+-----------+-------+--------------+-----------+-------------+1 row in set (0.28 sec)

7.在master节点插入唯一索引列(c,pad)='1','1'的另一条数据(id=9999999)

#'关闭唯一键检查,插入重复数据'greatdb> set unique_checks=OFF;Query OK, 0 rows affected (0.00 sec)#'插入重复数据'greatdb> insert into sbtest_uq(id,k,c,pad) values(9999999,1,'1','1');Query OK, 1 row affected (0.01 sec)

8.检查master和slave上的数据及复制状态

master节点:(c,pad)列上已存在重复数据

#'查询表sbtest_uq,确认c+pad列上已存在重复数据'greatdb> select * from sbtest_uq where id in(1,9999999);+---------+---+---+-----+| id | k | c | pad |+---------+---+---+-----+| 1 | 1 | 1 | 1 || 9999999 | 1 | 1 | 1 |+---------+---+---+-----+2 rows in set (0.00 sec)

slave节点1:(c,pad)列上已存在重复数据;复制线程Slave_SQL_Running=Yes

greatdb> select * from sbtest_uq where id in(1,9999999);+---------+---+---+-----+| id | k | c | pad |+---------+---+---+-----+| 1 | 1 | 1 | 1 || 9999999 | 1 | 1 | 1 |+---------+---+---+-----+2 rows in set (0.07 sec)greatdb> show slave status\G*************************** 1. row ***************************Slave_IO_State: Waiting for source to send event。。。。。。Slave_IO_Running: YesSlave_SQL_Running: Yes。。。。。。Retrieved_Gtid_Set: e6ea0eeb-591c-11ef-8984-00163e919d0a:209358-209371Executed_Gtid_Set: e6cbf195-591c-11ef-99d9-00163e33cf00:1-1572367,e6d361d5-591c-11ef-b1d5-00163e0ea4a5:1-138208,e6ea0eeb-591c-11ef-8984-00163e919d0a:1-209371......1 row in set, 1 warning (0.00 sec)

slave节点2:(c,pad)列上没有重复数据;复制线程Slave_SQL_Running=No,提示唯一键重复

greatdb> select * from sbtest_uq where id in(1,9999999);+----+---+---+-----+| id | k | c | pad |+----+---+---+-----+| 1 | 1 | 1 | 1 |+----+---+---+-----+1 row in set (0.00 sec)greatdb> show slave status\G*************************** 1. row ***************************Slave_IO_State: Waiting for source to send event......Slave_IO_Running: YesSlave_SQL_Running: No......Last_Errno: 1062Last_Error: Coordinator stopped because there were error(s) in the worker(s). The most recent failure being: Worker 1 failed executing transaction 'e6ea0eeb-591c-11ef-8984-00163e919d0a:209371' at master log greatdb-bin.000020, end_log_pos 606. See error log and/or performance_schema.replication_applier_status_by_worker table for more details about this failure or others, if any.......Last_SQL_Errno: 1062Last_SQL_Error: Coordinator stopped because there were error(s) in the worker(s). The most recent failure being: Worker 1 failed executing transaction 'e6ea0eeb-591c-11ef-8984-00163e919d0a:209371' at master log greatdb-bin.000020, end_log_pos 606. See error log and/or performance_schema.replication_applier_status_by_worker table for more details about this failure or others, if any.......Retrieved_Gtid_Set: e6ea0eeb-591c-11ef-8984-00163e919d0a:209366-209371Executed_Gtid_Set: e6cbf195-591c-11ef-99d9-00163e33cf00:1-1572367,e6d361d5-591c-11ef-b1d5-00163e0ea4a5:1-138208,e6ea0eeb-591c-11ef-8984-00163e919d0a:1-209370......1 row in set, 1 warning (0.00 sec)greatdb> select * fromperformance_schema.replication_applier_status_by_worker\G*************************** 1. row ***************************CHANNEL_NAME:WORKER_ID: 1THREAD_ID: NULLSERVICE_STATE: OFFLAST_ERROR_NUMBER: 1062LAST_ERROR_MESSAGE: Worker 1 failed executing transaction 'e6ea0eeb-591c-11ef-8984-00163e919d0a:209371' at master log greatdb-bin.000020, end_log_pos 606; Could not execute Write_rows event on table test.sbtest_uq; Duplicate entry '1-1' for key 'sbtest_uq.uk_c_pad', Error_code: 1062; handler error HA_ERR_FOUND_DUPP_KEY; the event`s master log greatdb-bin.000019, end_log_pos 606LAST_ERROR_TIMESTAMP: 2024-09-14 09:35:47.947584LAST_APPLIED_TRANSACTION: e6ea0eeb-591c-11ef-8984-00163e919d0a:209370LAST_APPLIED_TRANSACTION_ORIGINAL_COMMIT_TIMESTAMP: 2024-09-14 09:31:27.529353LAST_APPLIED_TRANSACTION_IMMEDIATE_COMMIT_TIMESTAMP: 2024-09-14 09:31:27.529353LAST_APPLIED_TRANSACTION_START_APPLY_TIMESTAMP: 2024-09-14 09:31:28.832359LAST_APPLIED_TRANSACTION_END_APPLY_TIMESTAMP: 2024-09-14 09:31:55.294233APPLYING_TRANSACTION: e6ea0eeb-591c-11ef-8984-00163e919d0a:209371APPLYING_TRANSACTION_ORIGINAL_COMMIT_TIMESTAMP: 2024-09-14 09:35:47.858275APPLYING_TRANSACTION_IMMEDIATE_COMMIT_TIMESTAMP: 2024-09-14 09:35:47.858275APPLYING_TRANSACTION_START_APPLY_TIMESTAMP: 2024-09-14 09:35:47.861972LAST_APPLIED_TRANSACTION_RETRIES_COUNT: 0LAST_APPLIED_TRANSACTION_LAST_TRANSIENT_ERROR_NUMBER: 0LAST_APPLIED_TRANSACTION_LAST_TRANSIENT_ERROR_MESSAGE:LAST_APPLIED_TRANSACTION_LAST_TRANSIENT_ERROR_TIMESTAMP: 0000-00-00 00:00:00.000000APPLYING_TRANSACTION_RETRIES_COUNT: 0APPLYING_TRANSACTION_LAST_TRANSIENT_ERROR_NUMBER: 0APPLYING_TRANSACTION_LAST_TRANSIENT_ERROR_MESSAGE:APPLYING_TRANSACTION_LAST_TRANSIENT_ERROR_TIMESTAMP: 0000-00-00 00:00:00.000000#'完成事务回放的方法:stop slave;查询其他大表,将sbtest_uq从buffer pool中挤出;start slave;'

小结

测试过程验证了可以在唯一索引列中插入重复数据:在主从复制的slave节点,如果buffer pool中没有唯一索引信息,该事务会正常回放,插入重复数据;如果buffer pool中存在唯一索引信息,该事务回放时,会由于主键重复而失败,从而导致sql线程中断。

回到客户的问题:由于buffer pool中没有索引UK0_fnd_Rixin_Intrt的信息,所以在master节点能插入重复数据;但slave端回放binlog时,索引UK0_fnd_Rixin_Intrt信息在buffer pool中,会由于主键重复而导致sql线程中断。

复盘总结

应用系统禁用唯一索引检查(设置unique_checks=OFF),一般是为了提高数据加载速度,但前提是确保唯一索引列的数据唯一。在不能确保唯一性时,请不要禁用唯一性检查,否则可能导致数据重复,对业务的正常运行造成威胁。