MySQL Group Replication(MGR)基于 shared-nothing架构,通过多节点完整数据副本实现数据强一致性,并支持故障自动切换与主节点选举。自MySQL 8.0.23版本后稳定性显著提升,本文结合生产实践总结关键优化经验。

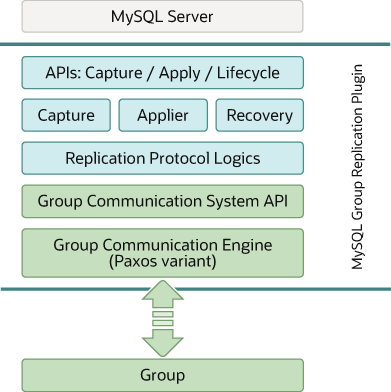

技术架构图

一、MGR参数建议与避坑总结

1、单主模式

建议使用单主模式。MGR依赖Paxos协议实现多节点共识,在多主模式下,每个事务需在多数节点认证(Certify)并排序,容易出现冲突、网络抖动引起的事务回滚,并且需跨节点分析日志,运维成本高。另外MGR不检测DDL冲突,多主模式下,若不同节点并发执行DDL和DML,可能破坏表结构或数据完整性。

loose-group_replication_single_primary_mode=ON

2、存储引擎

建议使用InnoDB引擎。 MGR基于Paxos协议实现多节点数据强一致性,要求事务具备原子性(提交或回滚)。InnoDB是MySQL唯一支持完整 ACID 事务的引擎。另外使用mysql clone插件时,非innodb引擎会引发报错。

default_storage_engine = "InnoDB" # 默认使用innodb引擎

disabled_storage_engines = "MyISAM" # 禁用MyISAM引擎

3、强制主键

所有表必须要有主键或者唯一非空健。 MGR要求 binlog_format=ROW,该格式依赖主键高效定位修改行。无主键时,ROW 格式可能退化为全表扫描,大幅降低复制效率。另外在使用mysql clone插件时,无主键也会引发报错。

sql_require_primary_key = ON # 所有表必须有主键

sql_generate_invisible_primary_key=ON # 自动为无主键表创建隐式主键,8.0.30引入

4、限制大事务

<1> MGR 心跳机制敏感,依赖节点间的周期性心跳(硬编码5秒)检测存活。若事务处理时间过长(如传输大事务阻塞通信线程),节点可能因未及时响应心跳被误判为故障,触发自动驱逐(group_replication_member_expel_timeout)。

<2> 减轻 XCom 引擎压力,MGR 的组通信引擎(XCom)为单线程设计,负责事务消息的分发与排序。大事务会长时间占用 XCom 线程,导致心跳消息堆积,触发节点驱逐。限制事务大小可以显著降低单事务处理时长,避免 XCom 成为性能瓶颈。

# 默认值150MB,但建议调低在20MB以内,不要使用大事务

loose-group_replication_transaction_size_limit = 20M

5、表名大小写设置

所有节点必须统一大小写规则。 表名作为元数据的一部分,若大小写不统一,MGR冲突检测机制可能无法正确关联同一张表的数据变更,误判冲突或漏判冲突。

lower_case_table_names = 1

6、隔离级别

Gap Lock 是 InnoDB 在 REPEATABLE READ 隔离级别下为防幻读引入的锁机制,其锁定的是索引记录之间的“间隙”。然而,Gap Lock 的信息仅在 InnoDB 引擎内部维护,无法通过 Write Set 暴露给 MGR 的分布式冲突检测层。因此MGR无法支持gap lock,官方推荐 READ COMMITTED 隔离级别。

transaction_isolation = 'READ-COMMITTED'

7、禁用压缩

虽然禁用压缩会导致带宽压力变大,但是可以消除压缩/解压的CPU 开销,提升事务处理吞吐量。另外最重要一点,禁用压缩后,DBA可以使用第三方工具(binlog2sql、my2sql等)解析binlog、生成sql本文,这对dml误操作后的数据恢复非常有利。

loose-group_replication_compression_threshold=0

8、关闭流控

流控触发时会阻塞本地事务提交,导致业务响应延迟增加,在高并发场景下可能引发吞吐量骤降,应用程序无响应等问题,甚至对业务有损,权衡利弊后,建议关闭。

loose-group_replication_flow_control_mode = "DISABLED"

9、禁用hash_scan算法

参数slave_rows_search_algorithms用于控制从库在应用binlog时如何定位数据行。当表缺少主键或者唯一索引,且指定算法为HASH_SCAN时存在哈希碰撞风险,这可能导致从库误更新/删除非目标行,造成数据不一致。

slave_rows_search_algorithms=INDEX_SCAN,TABLE_SCAN

二、MGR故障切换流程与相关参数优化

2.1 先看一个测试案例

测试案例中,使用下面两个参数值

group_replication_member_expel_timeout=30

group_replication_unreachable_majority_timeout=80 # 为了便于观察,暂设置为80秒

故障测试前的集群状态

root@localhost: 10:24: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| node03 | ONLINE | PRIMARY | 8.0.28 |

| node02 | ONLINE | SECONDARY | 8.0.28 |

| node01 | ONLINE | SECONDARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

模拟故障:此时primary节点是node3,断开node3与另外两个节点的网络,然后观察日志变化。

日志变化

<1> 5秒后,心跳检测网络中断,所有节点检测到网络不可达,并更新集群状态视图。

#少数派节点(node3)

2023-05-27T13:16:01.716803+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest01:3307 has become unreachable.'

2023-05-27T13:16:01.716934+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest02:3307 has become unreachable.'

2023-05-27T13:16:01.716951+08:00 0 [ERROR] [MY-011496] [Repl] Plugin group_replication reported: 'This server is not able to reach a majority of members in the group. This server will now block all updates. The server will remain blocked for the next 85 seconds. Unless contact with the majority is restored, after this time the member will error out and leave the group. It is possible to use group_replication_force_members to force a new group membership.'

2023-05-27T13:17:26.724015+08:00 71231 [ERROR] [MY-011711] [Repl] Plugin group_replication reported: 'This member could not reach a majority of the members for more than 85 seconds. The member will now leave the group as instructed by the group_replication_unreachable_majority_timeout option.'

#多数派节点(node1、node2)

2023-05-27T13:16:01.719345+08:00 0 [Warning] [MY-011493] [Repl] Plugin group_replication reported: 'Member with address mysqlwjhtest03:3307 has become unreachable.'

<2> 35秒后,多数派节点(node1、node2)将node3逐出集群,并新主node2,更新状态视图。少数派节点(故障节点)无日志输出。

2023-05-27T13:16:32.706137+08:00 0 [Warning] [MY-011499] [Repl] Plugin group_replication reported: 'Members removed from the group: mysqlwjhtest03:3307'

2023-05-27T13:16:32.706150+08:00 0 [System] [MY-011500] [Repl] Plugin group_replication reported: 'Primary server with address mysqlwjhtest03:3307 left the group. Electing new Primary.'

2023-05-27T13:16:32.706259+08:00 0 [Note] [MY-013519] [Repl] Plugin group_replication reported: 'Elected primary member gtid_executed: debbaad4-1fd7-4652-80a4-5c87cfde0db6:1-19'

2023-05-27T13:16:32.706272+08:00 0 [Note] [MY-013519] [Repl] Plugin group_replication reported: 'Elected primary member applier channel received_transaction_set: debbaad4-1fd7-4652-80a4-5c87cfde0db6:1-19'

2023-05-27T13:16:32.706284+08:00 0 [System] [MY-011507] [Repl] Plugin group_replication reported: 'A new primary with address mysqlwjhtest02:3307 was elected. The new primary will execute all previous group transactions before allowing writes.'

<3> 85秒后,少数派节点(node3)更改自己状态,由online改为error。多数派节点无日志输出。

2023-05-27T13:17:26.724015+08:00 71231 [ERROR] [MY-011711] [Repl] Plugin group_replication reported: 'This member could not reach a majority of the members for more than 85 seconds. The member will now leave the group as instructed by the group_replication_unreachable_majority_timeout option.'

2023-05-27T13:17:26.724483+08:00 0 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] xcom_client_remove_node: Try to push xcom_client_remove_node to XCom'

2023-05-27T13:17:26.724560+08:00 71231 [ERROR] [MY-011712] [Repl] Plugin group_replication reported: 'The server was automatically set into read only mode after an error was detected.'

2023-05-27T13:17:26.725179+08:00 71231 [System] [MY-011565] [Repl] Plugin group_replication reported: 'Setting super_read_only=ON.'

2023-05-27T13:17:26.725340+08:00 71231 [Note] [MY-011647] [Repl] Plugin group_replication reported: 'Going to wait for view modification'

<4> 115秒以后,少数派节点(node3)从自己的集群状态视图中踢出node1、node2节点信息,然后auto-rejoin尝试加入集群(如果在85s-115s间网络未恢复,则加入失败)。

2023-05-27T13:17:56.296998+08:00 0 [ERROR] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Timeout while waiting for the group communication engine to exit!'

2023-05-27T13:17:56.297075+08:00 0 [ERROR] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] The member has failed to gracefully leave the group.'

2023-05-27T13:17:56.396287+08:00 0 [System] [MY-011504] [Repl] Plugin group_replication reported: 'Group membership changed: This member has left the group.'

2023-05-27T13:17:56.396530+08:00 72720 [System] [MY-013373] [Repl] Plugin group_replication reported: 'Started auto-rejoin procedure attempt 1 of 3'

2023-05-27T13:17:56.397353+08:00 71913 [Note] [MY-010596] [Repl] Error reading relay log event for channel 'group_replication_applier': slave SQL thread was killed

2023-05-27T13:17:56.397914+08:00 71913 [Note] [MY-010587] [Repl] Slave SQL thread for channel 'group_replication_applier' exiting, replication stopped in log 'FIRST' at position 0

2023-05-27T13:17:56.398188+08:00 71911 [Note] [MY-011444] [Repl] Plugin group_replication reported: 'The group replication applier thread was killed.'

2023-05-27T13:17:56.398830+08:00 72720 [Note] [MY-011673] [Repl] Plugin group_replication reported: 'Group communication SSL configuration: group_replication_ssl_mode: "DISABLED"'

2023-05-27T13:17:56.400717+08:00 72720 [Note] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Debug messages will be sent to: asynchronous::/data/mysql/data/GCS_DEBUG_TRACE'

2023-05-27T13:17:56.400984+08:00 72720 [Warning] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Automatically adding IPv4 localhost address to the allowlist. It is mandatory that it is added.'

2023-05-27T13:17:56.401014+08:00 72720 [Warning] [MY-011735] [Repl] Plugin group_replication reported: '[GCS] Automatically adding IPv6 localhost address to the allowlist. It is mandatory that it is added.'

少数派节点(node3)集群视图变化

#5秒后

root@localhost: 14:05: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;system date;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest01 | UNREACHABLE | SECONDARY | 8.0.28 |

| mysqlwjhtest03 | ONLINE | PRIMARY | 8.0.28 |

| mysqlwjhtest02 | UNREACHABLE | SECONDARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

#85秒后

root@localhost: 14:07: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;system date;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest01 | UNREACHABLE | SECONDARY | 8.0.28 |

| mysqlwjhtest03 | ERROR | | 8.0.28 |

| mysqlwjhtest02 | UNREACHABLE | SECONDARY | 8.0.28 |

+----------------+--------------+-------------+----------------+

3 rows in set (0.00 sec)

#115秒后

root@localhost: 14:08: [(none)]> select MEMBER_HOST,MEMBER_STATE,MEMBER_ROLE,MEMBER_VERSION from performance_schema.replication_group_members;system date;

+----------------+--------------+-------------+----------------+

| MEMBER_HOST | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION |

+----------------+--------------+-------------+----------------+

| mysqlwjhtest03 | ERROR | | 8.0.28 |

+----------------+--------------+-------------+----------------+

1 row in set (0.01 sec)

2.2 案例中的集群状态变化分析

几个重要的时间点

- <1> 网络中断5秒时,心跳检测超时,少数派节点和多数派节点均将可疑节点设置为unreachable。

- <2> 网络中断35秒时,多数派节点将可疑节点逐出集群,同时会选举出新的主库。

- <3> 网络中断85秒时,少数派节点(故障节点)将自己的状态改为error。(如果在85秒前网络恢复,则集群自动恢复)

- <4> 网络中断115秒时,少数派节点(故障节点)将两个失联节点逐出集群。(如果在115秒前网络恢复,则在115秒时mgr先逐出可疑节点,然后auto-rejoin尝试将error节点重新加入集群,且可以成功加入。)

mgr参数值和以上时间点的关系

- 35秒:心跳检测5s + group_replication_member_expel_timeout=30s

- 85秒:心跳检测5s + group_replication_unreachable_majority_timeout=80s

- 115秒:心跳检测5s + group_replication_unreachable_majority_timeout=80s + (试图退出集群,硬编码超时时间30秒 WAITING_TIME = 30s)

mgr相关参数的作用

1、group_replication_member_expel_timeout 表示多数派中驱逐unreable节点的时间,也就是容忍多少秒,看看网络是否能够恢复。同时也表示多数派选举新主的时间,驱逐后会立马选择新主;

2、少数派节点首先读取参数group_replication_unreachable_majority_timeout,到达超时时间后进入error状态。然后节点做退出操作,试图通知其他节点,但是由于无法通信造成超时,超时时间同样为30秒,这里大概流程如下:

unreable参数打开,partition线程

Group_partition_handling::partition_thread_handler

->leave_group_on_failure::leave

->Gcs_operations::leave

->Gcs_xcom_control::leave

->Gcs_xcom_notification *notification = new Control_notification(do_function_leave, this);

bool scheduled = m_gcs_engine->push(notification);

发送操作给xcom engine 。

xcom engine 操作,

Gcs_xcom_control::do_leave

-> m_xcom_proxy->xcom_remove_node(*m_local_node_info, m_gid_hash)

->Gcs_xcom_proxy_base::xcom_remove_nodes

->xcom_client_remove_node

->xcom_send_cfg_wait

通知其他节点进行配置

-> int is_xcom_exit = m_xcom_proxy->xcom_wait_exit()

等待xcom 的新配置处理完成,这里30秒超时

/*

Time is defined in seconds.

*/

static const unsigned int WAITING_TIME = 30;

紧接着触发auto-rejoin重试(group_replication_autorejoin_tries)。

3、group_replication_unreachable_majority_timeout参数的值必须大于group_replication_member_expel_timeout,否则故障节点会先于大多数节点的容忍时间而将自身状态设置为ERROR。

2.3 故障检测流程

结合上面的案例,我们来看看 Group Repliction 的故障检测流程。

-

集群中每个节点都会定期(每秒 1 次)向其它节点发送心跳信息。如果在 5s 内(固定值,无参数调整)没有收到其它节点的心跳信息,则会将该节点标记为可疑节点,同时会将该节点的状态设置为 UNREACHABLE 。如果集群中有等于或超过 1/2 的节点显示为 UNREACHABLE ,则该集群不能对外提供写服务。

-

如果在group_replication_member_expel_timeout(从 MySQL 8.0.21 开始,该参数的默认值为 5,单位 s,最大可设置值为3600,即 1 小时)时间内,多数派中可疑节点恢复正常,则会直接应用 XCom Cache 中的消息。XCom Cache 的大小由group_replication_message_cache_size 决定,默认是 1G。

-

如果在group_replication_member_expel_timeout时间内,多数派中可疑节点没有恢复正常,则会被驱逐出集群。

-

在少数派中可疑节点不会自动离开集群,

- 如果参数group_replication_unreachable_majority_timeout没有设置(默认值为0),一直处于UNREACHABLE,直到网络恢复。

- 达到 group_replication_unreachable_majority_timeout 的限制,自动设置为error。

-

如果group_replication_auto_rejoin_tries不为 0,对于 ERROR 状态的节点,会自动重试,重新加入集群(auto-rejoin)。

-

如果group_replication_auto_rejoin_tries为 0 或重试失败,则会执行 group_replication_exit_state_action 指定的操作。可选的操作有:

-

READ_ONLY:只读模式。在这种模式下,会将 super_read_only 设置为 ON。默认值。

-

OFFLINE_MODE:离线模式。在这种模式下,会将 offline_mode 和 super_read_only 设置为 ON,此时,只有CONNECTION_ADMIN(SUPER)权限的用户才能登陆,普通用户不能登录。

-

ABORT_SERVER:关闭实例。

-

2.4 故障切换参数

#适用于8.0.21以上版本

loose-group_replication_member_expel_timeout=30 # 只对多数派中可疑节点有效,超过30秒后可疑节点将被驱逐集群,默认5秒。

loose-group_replication_unreachable_majority_timeout=30 # 只对少数派有效,当超过设置的网络不可达超时时间时,会进入error状态。默认值为0,表示一直处于UNREACHABLE状态。

loose-group_replication_message_cache_size=1G # XCom cache使用的消息缓存,默认1G,最高控制在2G。

loose-group_replication_autorejoin_tries=3 # error状态节点重新加入集群的次数

通过测试案例,当参数group_replication_member_expel_timeout和group_replication_unreachable_majority_timeout的值相同时,即少数派节点进入error状态时,多数派节点恰好选举了新主,可以避免出现“双主”的现象。

三、MGR参数优化总结

[mysqld]

###### 基本参数

binlog_format = row # binlog format格式必须设为row

binlog_row_image = full

gtid_mode = on # mgr必须开启GTID

log_slave_updates = 1

enforce_gtid_consistency = 1

binlog_gtid_simple_recovery = 1

default_storage_engine = "InnoDB" # 默认使用innodb引擎

disabled_storage_engines = "MyISAM" # 禁用MyISAM引擎

sql_require_primary_key = ON # 所有表必须有主键

lower_case_table_names = 1 # 所有节点统一大小写规则

transaction_isolation = 'READ-COMMITTED' # 隔离级别为读提交

slave_rows_search_algorithms=INDEX_SCAN,TABLE_SCAN # 禁用HASH_SCAN,减少复制错误

###### mgr参数

# 单主模式

loose-group_replication_single_primary_mode=ON

# 节点重启自动加入集群

loose-group_replication_start_on_boot = off

# 并行复制度,性能最佳

binlog_transaction_dependency_tracking = WRITESET

# 多数派30秒驱逐可疑节点,并选主

loose-group_replication_member_expel_timeout=30

# 少数派35秒后进入error状态

loose-group_replication_unreachable_majority_timeout=30

# XCom cache消息缓存,大事务频繁执行时,可适当调至2G,可避免缓存溢出。

loose-group_replication_message_cache_size=1G

# 少数派进入error状态30秒后尝试加入集群的次数

loose-group_replication_autorejoin_tries=3

# 默认值150MB,但建议调低在20MB以内,不要使用大事务

loose-group_replication_transaction_size_limit = 20M

# 禁止压缩

loose-group_replication_compression_threshold=0

# 关闭流控

loose-group_replication_flow_control_mode = "DISABLED"