问题背景

某客户反馈主节点磁盘达到了85%阈值,发生切主。客户根据之前经验怀疑是发布订阅导致,已经 drop 掉,需排查根因。

分析

根据订阅端 pg_log,判断根因为数据冲突,导致发布端复制槽的 restart_lsn 无法推进,进而导致xlog堆积(restart_lsn记录了每个复制槽需要保留的XLog位置,数据库必须保留restart_lsn之后的XLog)。据了解,业务侧会在订阅端进行写入。

解决

- 设置磁盘告警;

- restart_lsn 长时间不推进时,进行告警;

- 订阅的表,将业务用户设为只读权限。

接下来在本地测试环境,对该问题进行复现分析。

1. 创建发布

- 建表

CREATE TABLE t_1 (id int, name text unique);

CREATE TABLE t_2 (id int, name text unique);

insert into t_1 values (1,'a'),(2,'b');

insert into t_2 values (1,'a'),(2,'b');

- 创建发布

CREATE PUBLICATION zc_pub FOR TABLE t_1,t_2;

zc_test=> select * from pg_publication;

pubname | pubowner | puballtables | pubinsert | pubupdate | pubdelete | pubtruncate | pubddl | ignorerefreshmatview

---------+----------+--------------+-----------+-----------+-----------+-------------+--------+----------------------

zc_pub | 19760 | f | t | t | t | t | 0 | t

(1 row)

2. 创建订阅

gs_guc generate -S "xxx" -D $GAUSSHOME/bin -o subscription

CREATE SUBSCRIPTION zc_sub

CONNECTION 'hostxxx port=xxx user=zc dbname=zc_test password=xxx'

PUBLICATION zc_pub;

postgres=# select * from pg_subscription;

-[ RECORD 1 ]-----+------------------------------------------------------------------------------------------------------------------------------------------

subdbid | 20680

subname | zc_sub

subowner | 10

subenabled | t

subconninfo | host=xxx port=xxx user=zc dbname=zc_test password=xxx

subslotname | zc_sub

subsynccommit | off

subpublications | {zc_pub}

subbinary | f

submatchddlowner | t

subskiplsn | 0/0

ignoreconninfomsg | f

3. 检查状态

- 发布端复制槽正常:

postgres=# select * from pg_replication_slots;

slot_name | plugin | slot_type | datoid | database | active | xmin | catalog_xmin | restart_lsn | dummy_standby | confirmed_flush

-----------+----------+-----------+--------+----------+--------+------+--------------+-------------+---------------+-----------------

zc_sub | pgoutput | logical | 19763 | zc_test | t | | 55302 | 0/6E1DD30 | f | 0/6E1DE20

(1 row)

- 发布端新增一条数据,可正常同步:

insert into t_1 values (3,'c');

postgres=# select * from t_1;

id | name

----+------

1 | a

2 | b

3 | c

(3 rows)

postgres=# select * from t_2;

id | name

----+------

1 | a

2 | b

3 | c

(3 rows)

4. 复现数据冲突

- 当发布的表没有数据变更时,restartlsn也是在正常推进:

[omm_oracle@panweidb dn_6001]$ cat postgresql-2025-10-09_125741.log | grep 'slotname: zc_sub, dummy: 0, restartlsn'

2025-10-09 13:27:44.327 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/6E1DA70

2025-10-09 13:28:44.598 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/6E1DCB0

2025-10-09 13:29:44.865 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/6E1DD30

...

- 订阅段新增数据 (4,‘d’),随后发布端也插入该条数据,制造数据冲突:

insert into t_1 values (4,'d');

- 两边pg_log均出现异常,发布端 walsender 不断重启:

2025-10-09 14:22:59.087 68e754c3.10000 zc_test 47313529677568 dn_6001 0 dn_6001 57P03 0 [BACKEND] WARNING: the ha connection is not in the channel list

2025-10-09 14:22:59.089 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 0 [BACKEND] LOG: walsender thread started

2025-10-09 14:22:59.089 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 0 [BACKEND] LOG: received wal replication command: IDENTIFY_MODE

2025-10-09 14:22:59.089 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 0 [BACKEND] LOG: received wal replication command: select (select setting from pg_settings where name='enable_oralob_type') as guc_setting, (select t.typname from pg_type t , pg_proc p where p.prorettype=t.oid and p.proname='empty_blob' and p.pronargs=0) as init_lob_type

2025-10-09 14:22:59.092 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 2533274790397390 [BACKEND] LOG: received wal replication command: IDENTIFY_SYSTEM

2025-10-09 14:22:59.092 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 2533274790397390 [BACKEND] LOG: received wal replication command: START_REPLICATION SLOT "zc_sub" LOGICAL 0/6E1F470 (proto_version '2', publication_names '"zc_pub"')

2025-10-09 14:22:59.092 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 2533274790397390 [LOGICAL_DECODE] LOG: starting logical decoding for slot zc_sub

2025-10-09 14:22:59.092 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 2533274790397390 [LOGICAL_DECODE] DETAIL: streaming transactions committing after 0/55001468, reading WAL from 0/55001418

2025-10-09 14:22:59.092 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 2533274790397390 [LOGICAL_DECODE] LOG: logical decoding found consistent point at 0/55001418

2025-10-09 14:22:59.092 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 2533274790397390 [LOGICAL_DECODE] DETAIL: running xacts with xcnt == 0

2025-10-09 14:22:59.095 68e754c3.3059 zc_test 47313529677568 subscription 0 dn_6001 00000 2533274790397390 [BACKEND] LOG: walsender thread shut down

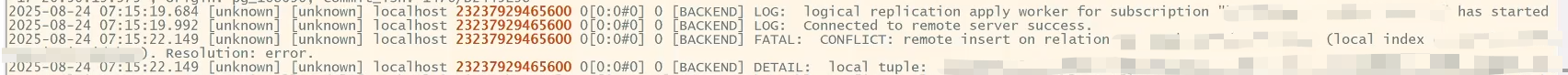

- 订阅端不断刷数据冲突的信息:

2025-10-09 14:23:14.188 68e754d2.3058 [unknown] 47882409416448 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: logical replication apply worker for subscription "zc_sub" has started

2025-10-09 14:23:14.216 68e754d2.3058 [unknown] 47882409416448 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: Connected to remote server success.

2025-10-09 14:23:14.221 68e754d2.3058 [unknown] 47882409416448 dn_6001 33137 dn_6001 42809 0 [BACKEND] FATAL: CONFLICT: remote insert on relation t_1 (local index t_1_name_key). Resolution: error.

2025-10-09 14:23:14.221 68e754d2.3058 [unknown] 47882409416448 dn_6001 33137 dn_6001 42809 0 [BACKEND] DETAIL: local tuple: id[integer]:4 name[text]:'d', remote tuple: id[integer]:4 name[text]:'d', origin: pg_20726, commit_lsn: 0/55001558

- 发布端 restart_lsn 停止推进,复制槽状态变为 f:

zc_test=# select * from pg_replication_slots;

slot_name | plugin | slot_type | datoid | database | active | xmin | catalog_xmin | restart_lsn | dummy_standby | confirmed_flush

-----------+----------+-----------+--------+----------+--------+------+--------------+-------------+---------------+-----------------

zc_sub | pgoutput | logical | 19763 | zc_test | f | | 55445 | 0/55001418 | f | 0/55001468

(1 row)

2025-10-09 14:19:36.510 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/55001018

2025-10-09 14:20:36.774 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/55001138

2025-10-09 14:21:37.040 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/55001418

2025-10-09 14:22:36.303 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/55001418

2025-10-09 14:23:36.553 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/55001418

2025-10-09 14:24:36.821 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/55001418

2025-10-09 14:25:37.089 68e740c6.3934 [unknown] 47312622798592 dn_6001 0 dn_6001 00000 0 [BACKEND] LOG: slotname: zc_sub, dummy: 0, restartlsn: 0/55001418

- 发布端在t_1, t_2插入数据,均无法同步,发布订阅被阻塞。

5. 结论

至此,客户的问题已在本地复现。问题过程为:订阅端数据冲突 -> 发布端复制槽的 restart_lsn 无法推进 -> 发布端 xlog堆积 -> 磁盘达到 85% 阈值 -> 切主

最后修改时间:2025-10-09 17:08:48

「喜欢这篇文章,您的关注和赞赏是给作者最好的鼓励」

关注作者

【版权声明】本文为墨天轮用户原创内容,转载时必须标注文章的来源(墨天轮),文章链接,文章作者等基本信息,否则作者和墨天轮有权追究责任。如果您发现墨天轮中有涉嫌抄袭或者侵权的内容,欢迎发送邮件至:contact@modb.pro进行举报,并提供相关证据,一经查实,墨天轮将立刻删除相关内容。