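Hi everyone, I'm JiekeXu, known in the community as "Qiang Ge" (强哥). I chair the 青学会 MOP technical community and hold the Oracle ACE Pro title. I am also a Kingbase Most Valuable Advocate (KVA), a YashanDB Most Valuable Professional (YVP), a member of the IvorySQL open-source community expert advisory committee, a Modb (墨天轮) MVP, and a Modb "墨力之星" of the year. I hold Oracle OCP/OCM and MySQL 5.7/8.0 OCP certifications, the Kingbase KCA, KCP, KCM, and KCSM certificates, and PCA, PCTA, OBCA, OGCA and other Chinese domestic database certifications. You are welcome to follow my WeChat official account "JiekeXu DBA之路"; tap the three dots at the top right and choose "Set as starred" to pin it, so that new articles reach you first. Thank you!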

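Preface

At the end of the previous article, "Oracle 19c RAC ADG on RHEL9.6 遇到的几个坑" (pitfalls hit with Oracle 19c RAC ADG on RHEL 9.6), I mentioned that migrating the database to RHEL 9.6 and updating it to 19.27 ran into "REDO Block Header ORA-00354: corrupt redo log block header", and that setting discard_max_bytes to 0 on the Oracle database disks resolves it. When I checked, cat /sys/block/sdc/queue/discard_max_bytes already returned 0 by default, so I left it alone. Later, Lei Zong (类总) pointed out that Red Hat has fixed the problem: updating the kernel to "kernel-5.14.0-570.24.1.el9_6" or later resolves it.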

Please update the system to kernel-5.14.0-570.24.1.el9_6 or later which contains the fix for this bug

Note: even rebuilding the redo logs does not fix the corruption. The issue has only been observed on VMs configured in Microsoft Azure; it has not been observed on VMs in other virtualization environments or on physical servers.

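The root cause is that the storvsc driver in the RHEL 9.6 kernel uses virt_boundary to define a segment without setting max_segment_size. As a result, the __blk_rq_map_sg() function falls back to the default 64K maximum segment size and splits one virtual segment into two, which breaks the virt_boundary limit. That is what produces the block corruption described above. The problem was fixed by this patch:

- scsi: storvsc: Explicitly set max_segment_size to UINT_MAX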
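A quick way to see whether a host already runs a kernel at or above the fixed release is to compare version strings. The sketch below is only an illustration: the function name kernel_has_fix is made up for this example, the comparison relies on GNU sort -V, and the result is only meaningful on RHEL 9.6 kernels of the 5.14.0-570 series.

```shell
#!/usr/bin/env bash
# Minimum kernel release named in the Red Hat note quoted above.
FIXED_KERNEL="5.14.0-570.24.1.el9_6"

# kernel_has_fix RELEASE   (hypothetical helper, not a Red Hat tool)
# Returns 0 when RELEASE sorts at or after FIXED_KERNEL in version order.
kernel_has_fix() {
    local running="$1" lowest
    # uname -r appends the architecture; drop it before comparing.
    running="${running%.x86_64}"
    running="${running%.aarch64}"
    lowest=$(printf '%s\n%s\n' "$FIXED_KERNEL" "$running" | sort -V | head -n 1)
    [ "$lowest" = "$FIXED_KERNEL" ]
}

if kernel_has_fix "$(uname -r)"; then
    echo "running kernel $(uname -r) is at or above $FIXED_KERNEL"
else
    echo "running kernel $(uname -r) is older than $FIXED_KERNEL"
fi
```

The check says nothing about whether the host is an Azure VM, which is the only environment where the corruption was reported.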

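Now for the new pitfalls I ran into recently. The first two are probably related to RHEL 9, but the last two were caused purely by human error and have nothing to do with RHEL 9.

Pitfall 6: CRS does not start automatically after a host reboot

In RHEL 9, the traditional /etc/init.d scripts that were part of the SysV init system have largely been replaced by systemd. Red Hat adopted systemd as the default init system starting with RHEL 7, and RHEL 9 continues that approach. Some backward compatibility with legacy SysV init scripts remains, but the emphasis is on managing services with systemd unit files.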

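Key changes in RHEL 9

- Systemd as the default init system: systemd provides a more modern and efficient way to manage services and replaces the old SysV init system. Services are now defined with .service files rather than scripts in /etc/init.d.

- Legacy support for SysV init scripts: RHEL 9 ships the initscripts package, which provides limited support for legacy SysV init scripts. That support is aimed mainly at scripts supplied by Red Hat and is not recommended for third-party or custom scripts. Using such scripts can lead to problems such as startup failures or improper service management.

- Converting to systemd: converting all remaining SysV init scripts to systemd unit files is strongly recommended to ensure compatibility and correct behavior. Red Hat provides a detailed guide for converting init scripts to systemd unit files.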

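How to handle legacy SysV init scripts in RHEL 9

• Install the initscripts package if it is not already installed: dnf install initscripts

• After changing an init script, reload systemd so that it picks up the change: systemctl daemon-reload

• Manage the init script as you would a systemd service: systemctl status <init_script_name>

Recommendations

• Migrate to systemd: convert all traditional SysV init scripts to systemd unit files to avoid potential problems with boot ordering and service dependencies.

• Avoid third-party init scripts: third-party or custom init scripts are not supported, because they can make the system unstable.

• Use the systemd tooling: take advantage of systemd features such as systemctl, journalctl, and timers for better service management and logging.

Moving fully to systemd gives smoother operation and keeps the system compatible with future RHEL releases.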

Fixing CRS autostart at boot

cd /etc/rc.d/init.d/

cp /etc/init.d/* /etc/rc.d/init.d/

# ll

total 76

-rwxr-xr-x 1 root root 25631 Aug 27 15:34 init.ohasd

-rwxr-xr-x 1 root root 33194 Aug 27 15:34 init.tfa

-rwxr-xr-x 1 root root 8054 Aug 27 15:34 ohasd

-rw-r--r--. 1 root root 1161 Jan 28 2025 README

-- Alternatively, use the following approach (the mv and ln commands are run from /etc)

cp /etc/init.d/* /etc/rc.d/init.d/

mv init.d init.d_bak20250827

ln -s /etc/rc.d/init.d init.d

ll init.d

lrwxrwxrwx 1 root root 16 Aug 27 15:29 init.d -> /etc/rc.d/init.d

# systemctl status ohasd

● ohasd.service - LSB: Start and Stop Oracle High Availability Service

Loaded: loaded (/etc/rc.d/init.d/ohasd; generated)

Active: active (exited) since Fri 2025-09-12 16:29:52 CST; 2 weeks 3 days ago

Docs: man:systemd-sysv-generator(8)

CPU: 346ms

Notice: journal has been rotated since unit was started, output may be incomplete.
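The status output above shows the unit being generated by systemd-sysv-generator from /etc/rc.d/init.d/ohasd, so the fix depends on /etc/init.d and /etc/rc.d/init.d ending up as the same directory with the Oracle scripts in it. A minimal sanity check could look like the sketch below. The function name initd_layout_ok and the optional ROOT prefix are assumptions made for this example (the prefix only exists so the check can be tried against a scratch directory), and the script names are the ones from the listing above.

```shell
#!/usr/bin/env bash
# initd_layout_ok [ROOT]   (hypothetical helper)
# ROOT is an optional prefix for dry runs; leave it empty on a real host.
# Succeeds when ROOT/etc/init.d resolves to ROOT/etc/rc.d/init.d and the
# ohasd and init.ohasd scripts are present and executable there.
initd_layout_ok() {
    local root="${1:-}"
    local legacy="$root/etc/init.d"
    local target="$root/etc/rc.d/init.d"
    local script

    if [ "$(readlink -f "$legacy")" != "$(readlink -f "$target")" ]; then
        echo "$legacy does not resolve to $target"
        return 1
    fi
    for script in ohasd init.ohasd; do
        if [ ! -x "$target/$script" ]; then
            echo "missing or not executable: $target/$script"
            return 1
        fi
    done
    echo "init.d layout looks consistent under ${root:-/}"
}

# Example on a real host (as root):
#   initd_layout_ok && systemctl daemon-reload && systemctl status ohasd
```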

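Pitfall 7: root.sh fails with ORA-59066 because of multipath aggregation

While installing the GI clusterware, root.sh failed after step 16, while creating the OCR disk group, with ORA-15020 and ORA-59066: multiple disk paths (/dev/sdk, /dev/sdw, /dev/sdau, /dev/sdai) were discovering a disk with identical disk header contents.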

[root@t-19crac-r1 ~]# /u01/app/19.0.0/grid/root.sh
Performing root user operation.
The following environment variables are set as:
ORACLE_OWNER= grid
ORACLE_HOME= /u01/app/19.0.0/grid
Enter the full pathname of the local bin directory: [/usr/local/bin]:
Copying dbhome to /usr/local/bin ...
Copying oraenv to /usr/local/bin ...
Copying coraenv to /usr/local/bin ...
2025/09/26 18:44:27 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.
2025/09/26 18:44:30 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.
[FATAL] [DBT-30002] Disk group OCR creation failed.
ORA-15018: diskgroup cannot be created
ORA-15020: discovered duplicate ASM disk "OCR_0000"
ORA-59066: Multiple disk paths (/dev/sdk, /dev/sdw, /dev/sdau, /dev/sdai) are discovering disk with identical disk header contents.
ORA-59066: Multiple disk paths (/dev/sdl, /dev/sdx, /dev/sdav, /dev/sdaj) are discovering disk with identical disk header contents.
ORA-59066: Multiple disk paths (/dev/sdm, /dev/sdy, /dev/sdaw, /dev/sdak) are discovering disk with identical disk header contents.
2025/09/26 18:45:23 CLSRSC-184: Configuration of ASM failed
2025/09/26 18:45:24 CLSRSC-258: Failed to configure and start ASM
Died at /u01/app/19.0.0/grid/crs/install/crsinstall.pm line 2624.
[root@t-19crac-r1 ~]# 2025/09/26 18:46:22 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

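The cause is simple: when the OCR disk group was created, the "asm_diskstring" parameter was set to the wrong value, "/dev/sd*". Strictly speaking this is not a pitfall at all; I did not change the value by hand and kept the default path "/dev/sd*". On other installations the default needed no change when creating OCR, so this time I accepted it out of habit. The wrong path can be confirmed with ASM commands.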

[root@t-19crac-r1 ~]# su - grid

Last login: Sun Sep 28 10:28:24 CST 2025 on pts/1

mld-19crac-136:/home/grid(+ASM1)$ sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Sun Sep 28 10:29:00 2025

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.23.0.0.0

SQL> show parameter asm_diskst

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

asm_diskstring string /dev/sd*

SQL> exit

t-19crac-r1:/home/grid(+ASM1)$ asmcmd

ASMCMD> lsdg

ASMCMD> dsget

parameter:/dev/sd*

profile:/dev/sd*

As shown below, choosing "/dev/sd*" also pulls in the local disks and every individual path of the multipath-aggregated disks.

[root@t-19crac-r1 backup]# ll /dev/mapper/ total 0 crw------- 1 root root 10, 236 Sep 29 17:08 control lrwxrwxrwx 1 root root 7 Sep 29 17:08 datavg01-lv_db_bak01 -> ../dm-2 lrwxrwxrwx 1 root root 7 Sep 29 17:08 JIEKEDB-ARCH01 -> ../dm-5 lrwxrwxrwx 1 root root 7 Sep 29 17:08 JIEKEDB-ARCH02 -> ../dm-6 lrwxrwxrwx 1 root root 7 Sep 29 17:08 JIEKEDB-DATA01 -> ../dm-9 lrwxrwxrwx 1 root root 8 Sep 29 17:08 JIEKEDB-DATA02 -> ../dm-10 lrwxrwxrwx 1 root root 8 Sep 29 17:08 JIEKEDB-DATA03 -> ../dm-11 lrwxrwxrwx 1 root root 8 Sep 29 17:08 JIEKEDB-DATA04 -> ../dm-12 lrwxrwxrwx 1 root root 8 Sep 29 17:08 JIEKEDB-DATA05 -> ../dm-13 lrwxrwxrwx 1 root root 8 Sep 29 17:08 JIEKEDB-DATA06 -> ../dm-14 lrwxrwxrwx 1 root root 8 Sep 29 17:08 JIEKEDB-OCR01 -> ../dm-15 lrwxrwxrwx 1 root root 8 Sep 29 17:08 JIEKEDB-OCR02 -> ../dm-16 lrwxrwxrwx 1 root root 7 Sep 29 17:08 JIEKEDB-OCR03 -> ../dm-7 lrwxrwxrwx 1 root root 7 Sep 29 17:08 JIEKEDB-REDO01 -> ../dm-8 lrwxrwxrwx 1 root root 7 Sep 29 17:08 rootvg-lvbackup -> ../dm-4 lrwxrwxrwx 1 root root 7 Sep 29 17:08 rootvg-lvroot -> ../dm-0 lrwxrwxrwx 1 root root 7 Sep 29 17:08 rootvg-lvswap -> ../dm-1 lrwxrwxrwx 1 root root 7 Sep 29 17:08 rootvg-lvu01 -> ../dm-3 [root@t-19crac-r1 backup]# ll /dev/mapper/JIEKE* lrwxrwxrwx 1 root root 7 Sep 29 17:08 /dev/mapper/JIEKEDB-ARCH01 -> ../dm-5 lrwxrwxrwx 1 root root 7 Sep 29 17:08 /dev/mapper/JIEKEDB-ARCH02 -> ../dm-6 lrwxrwxrwx 1 root root 7 Sep 29 17:08 /dev/mapper/JIEKEDB-DATA01 -> ../dm-9 lrwxrwxrwx 1 root root 8 Sep 29 17:08 /dev/mapper/JIEKEDB-DATA02 -> ../dm-10 lrwxrwxrwx 1 root root 8 Sep 29 17:08 /dev/mapper/JIEKEDB-DATA03 -> ../dm-11 lrwxrwxrwx 1 root root 8 Sep 29 17:08 /dev/mapper/JIEKEDB-DATA04 -> ../dm-12 lrwxrwxrwx 1 root root 8 Sep 29 17:08 /dev/mapper/JIEKEDB-DATA05 -> ../dm-13 lrwxrwxrwx 1 root root 8 Sep 29 17:08 /dev/mapper/JIEKEDB-DATA06 -> ../dm-14 lrwxrwxrwx 1 root root 8 Sep 29 17:08 /dev/mapper/JIEKEDB-OCR01 -> ../dm-15 lrwxrwxrwx 1 root root 8 Sep 29 17:08 /dev/mapper/JIEKEDB-OCR02 
-> ../dm-16 lrwxrwxrwx 1 root root 7 Sep 29 17:08 /dev/mapper/JIEKEDB-OCR03 -> ../dm-7 lrwxrwxrwx 1 root root 7 Sep 29 17:08 /dev/mapper/JIEKEDB-REDO01 -> ../dm-8 [root@t-19crac-r1 backup]# [root@t-19crac-r1 backup]# ll /dev/dm-* brw-rw---- 1 root disk 253, 0 Sep 29 17:08 /dev/dm-0 brw-rw---- 1 root disk 253, 1 Sep 29 17:08 /dev/dm-1 brw-rw---- 1 root disk 253, 10 Sep 29 17:08 /dev/dm-10 brw-rw---- 1 root disk 253, 11 Sep 29 17:08 /dev/dm-11 brw-rw---- 1 root disk 253, 12 Sep 29 17:08 /dev/dm-12 brw-rw---- 1 root disk 253, 13 Sep 29 17:08 /dev/dm-13 brw-rw---- 1 root disk 253, 14 Sep 29 17:08 /dev/dm-14 brw-rw---- 1 root disk 253, 15 Sep 29 17:08 /dev/dm-15 brw-rw---- 1 root disk 253, 16 Sep 29 17:08 /dev/dm-16 brw-rw---- 1 root disk 253, 2 Sep 29 17:08 /dev/dm-2 brw-rw---- 1 root disk 253, 3 Sep 29 17:08 /dev/dm-3 brw-rw---- 1 root disk 253, 4 Sep 29 17:08 /dev/dm-4 brw-rw---- 1 root disk 253, 5 Sep 29 17:08 /dev/dm-5 brw-rw---- 1 root disk 253, 6 Sep 29 17:08 /dev/dm-6 brw-rw---- 1 root disk 253, 7 Sep 29 17:08 /dev/dm-7 brw-rw---- 1 root disk 253, 8 Sep 29 17:08 /dev/dm-8 brw-rw---- 1 root disk 253, 9 Sep 29 17:08 /dev/dm-9 [root@t-19crac-r1 backup]# ll /dev/sd* brw-rw---- 1 root disk 8, 0 Sep 29 17:08 /dev/sda brw-rw---- 1 root disk 8, 1 Sep 29 17:08 /dev/sda1 brw-rw---- 1 root disk 8, 2 Sep 29 17:08 /dev/sda2 brw-rw---- 1 root disk 8, 3 Sep 29 17:08 /dev/sda3 brw-rw---- 1 grid asmadmin 65, 160 Sep 29 17:08 /dev/sdaa brw-rw---- 1 grid asmadmin 65, 176 Sep 30 15:27 /dev/sdab brw-rw---- 1 grid asmadmin 65, 192 Sep 29 17:08 /dev/sdac brw-rw---- 1 grid asmadmin 65, 208 Sep 29 17:08 /dev/sdad brw-rw---- 1 grid asmadmin 65, 224 Sep 29 17:08 /dev/sdae brw-rw---- 1 grid asmadmin 65, 240 Sep 29 17:08 /dev/sdaf brw-rw---- 1 grid asmadmin 66, 0 Sep 29 17:08 /dev/sdag brw-rw---- 1 grid asmadmin 66, 16 Sep 29 17:08 /dev/sdah brw-rw---- 1 grid asmadmin 66, 32 Sep 30 15:27 /dev/sdai brw-rw---- 1 grid asmadmin 66, 48 Sep 29 17:08 /dev/sdaj brw-rw---- 1 grid asmadmin 66, 64 Sep 
29 17:08 /dev/sdak brw-rw---- 1 grid asmadmin 66, 80 Sep 30 15:27 /dev/sdal brw-rw---- 1 grid asmadmin 66, 96 Sep 29 17:08 /dev/sdam brw-rw---- 1 grid asmadmin 66, 112 Sep 29 17:08 /dev/sdan brw-rw---- 1 grid asmadmin 66, 128 Sep 30 15:27 /dev/sdao brw-rw---- 1 grid asmadmin 66, 144 Sep 29 17:08 /dev/sdap brw-rw---- 1 grid asmadmin 66, 160 Sep 29 17:08 /dev/sdaq brw-rw---- 1 grid asmadmin 66, 176 Sep 29 17:08 /dev/sdar brw-rw---- 1 grid asmadmin 66, 192 Sep 29 17:08 /dev/sdas brw-rw---- 1 grid asmadmin 66, 208 Sep 30 15:27 /dev/sdat brw-rw---- 1 grid asmadmin 66, 224 Sep 29 17:08 /dev/sdau brw-rw---- 1 grid asmadmin 66, 240 Sep 29 17:08 /dev/sdav brw-rw---- 1 grid asmadmin 67, 0 Sep 29 17:08 /dev/sdaw brw-rw---- 1 grid asmadmin 67, 16 Sep 29 17:08 /dev/sdax brw-rw---- 1 root disk 8, 16 Sep 29 17:08 /dev/sdb brw-rw---- 1 grid asmadmin 8, 32 Sep 29 17:08 /dev/sdc srwxr--r-- 1 root root 0 Sep 29 17:08 /dev/sd_cloudhelper_update brw-rw---- 1 grid asmadmin 8, 48 Sep 29 17:08 /dev/sdd brw-rw---- 1 grid asmadmin 8, 64 Sep 29 17:08 /dev/sde brw-rw---- 1 grid asmadmin 8, 80 Sep 30 15:27 /dev/sdf brw-rw---- 1 grid asmadmin 8, 96 Sep 30 15:27 /dev/sdg brw-rw---- 1 grid asmadmin 8, 112 Sep 30 15:27 /dev/sdh brw-rw---- 1 grid asmadmin 8, 128 Sep 30 15:27 /dev/sdi brw-rw---- 1 grid asmadmin 8, 144 Sep 29 17:08 /dev/sdj brw-rw---- 1 grid asmadmin 8, 160 Sep 29 17:08 /dev/sdk brw-rw---- 1 grid asmadmin 8, 176 Sep 30 15:27 /dev/sdl brw-rw---- 1 grid asmadmin 8, 192 Sep 30 15:27 /dev/sdm brw-rw---- 1 grid asmadmin 8, 208 Sep 29 17:08 /dev/sdn brw-rw---- 1 grid asmadmin 8, 224 Sep 30 15:27 /dev/sdo brw-rw---- 1 grid asmadmin 8, 240 Sep 29 17:08 /dev/sdp brw-rw---- 1 grid asmadmin 65, 0 Sep 29 17:08 /dev/sdq brw-rw---- 1 grid asmadmin 65, 16 Sep 29 17:08 /dev/sdr brw-rw---- 1 grid asmadmin 65, 32 Sep 29 17:08 /dev/sds srwxr--r-- 1 root root 0 Sep 29 17:08 /dev/sd_sdcc_signin srwxr--r-- 1 root root 0 Sep 29 17:08 /dev/sd_sdec_signin srwxr--r-- 1 root root 0 Sep 29 17:08 
/dev/sd_sdexam_signin srwxr--r-- 1 root root 0 Sep 29 17:08 /dev/sd_sdmonitor_command srwxr--r-- 1 root root 0 Sep 29 17:08 /dev/sd_sdsvrd_signin srwxr--r-- 1 root root 0 Sep 29 17:08 /dev/sdsock brw-rw---- 1 grid asmadmin 65, 48 Sep 29 17:08 /dev/sdt brw-rw---- 1 grid asmadmin 65, 64 Sep 29 17:08 /dev/sdu srwxr--r-- 1 root root 0 Sep 29 17:08 /dev/sd_udcenter_signin srwxr--r-- 1 root root 0 Sep 29 17:08 /dev/sd_update_config brw-rw---- 1 grid asmadmin 65, 80 Sep 29 17:08 /dev/sdv brw-rw---- 1 grid asmadmin 65, 96 Sep 29 17:08 /dev/sdw brw-rw---- 1 grid asmadmin 65, 112 Sep 29 17:08 /dev/sdx brw-rw---- 1 grid asmadmin 65, 128 Sep 29 17:08 /dev/sdy brw-rw---- 1 grid asmadmin 65, 144 Sep 29 17:08 /dev/sdz [root@t-19crac-r1 backup]#

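From the example above we can see that the same multipath disk can appear in three different forms:

- /dev/mapper/JIEKEDB*: the device alias, created as a symbolic link early in the boot process and persistent across device additions, removals, and reboots. This is the recommended identifier for ASM disks.

- /dev/dm-*: the actual multipath device name (ID), which may change after a reboot and is used only internally by the multipath tools. Never use it directly for ASM disks.

- /dev/sd*: the device name of each non-multipath device, or of each channel (path) of a multipath device.

Next, we look at the disk paths with multipath -ll.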

[root@t-19crac-r1 backup]# multipath -ll
JIEKEDB-ARCH01 (36000144000000010e0177a93f3962e72) dm-5 EMC,Invista
size=2.0T features='1 queue_if_no_path' hwhandler='0' wp=rw
`-+- policy='service-time 0' prio=1 status=active
  |- 13:0:0:0 sdc 8:32 active ready running
  |- 13:0:1:0 sdo 8:224 active ready running
  |- 15:0:0:0 sdaa 65:160 active ready running
  `- 15:0:1:0 sdam 66:96 active ready running
JIEKEDB-ARCH02 (36000144000000010e0177a93f3962e75) dm-6 EMC,Invista
size=2.0T features='1 queue_if_no_path' hwhandler='0' wp=rw
`-+- policy='service-time 0' prio=1 status=active
  |- 13:0:0:1 sdd 8:48 active ready running
  |- 13:0:1:1 sdp 8:240 active ready running
  |- 15:0:0:1 sdab 65:176 active ready running
  `- 15:0:1:1 sdan 66:112 active ready running
JIEKEDB-DATA01 (36000144000000010e0177a93f3962e71) dm-9 EMC,Invista
size=2.0T features='1 queue_if_no_path' hwhandler='0' wp=rw
`-+- policy='service-time 0' prio=1 status=active
  |- 13:0:0:2 sde 8:64 active ready running
  |- 13:0:1:2 sdq 65:0 active ready running
  |- 15:0:0:2 sdac 65:192 active ready running
  `- 15:0:1:2 sdao 66:128 active ready running
[root@t-19crac-r1 backup]# multipath -ll | grep OCR
JIEKEDB-OCR01 (36000144000000010e0177a93f3962e76) dm-15 EMC,Invista
JIEKEDB-OCR02 (36000144000000010e0177a93f3962e79) dm-16 EMC,Invista
JIEKEDB-OCR03 (36000144000000010e0177a93f3962e7b) dm-7 EMC,Invista
[root@t-19crac-r1 backup]#
[root@t-19crac-r1 backup]# multipath -ll | grep -A 8 OCR
JIEKEDB-OCR01 (36000144000000010e0177a93f3962e76) dm-15 EMC,Invista
size=5.0G features='1 queue_if_no_path' hwhandler='0' wp=rw
`-+- policy='service-time 0' prio=1 status=active
  |- 13:0:0:8 sdk 8:160 active ready running
  |- 13:0:1:8 sdw 65:96 active ready running
  |- 15:0:0:8 sdai 66:32 active ready running
  `- 15:0:1:8 sdau 66:224 active ready running
JIEKEDB-OCR02 (36000144000000010e0177a93f3962e79) dm-16 EMC,Invista
size=5.0G features='1 queue_if_no_path' hwhandler='0' wp=rw
`-+- policy='service-time 0' prio=1 status=active
  |- 13:0:0:9 sdl 8:176 active ready running
  |- 13:0:1:9 sdx 65:112 active ready running
  |- 15:0:0:9 sdaj 66:48 active ready running
  `- 15:0:1:9 sdav 66:240 active ready running
JIEKEDB-OCR03 (36000144000000010e0177a93f3962e7b) dm-7 EMC,Invista
size=5.0G features='1 queue_if_no_path' hwhandler='0' wp=rw
`-+- policy='service-time 0' prio=1 status=active
  |- 13:0:0:10 sdm 8:192 active ready running
  |- 13:0:1:10 sdy 65:128 active ready running
  |- 15:0:0:10 sdak 66:64 active ready running
  `- 15:0:1:10 sdaw 67:0 active ready running
JIEKEDB-REDO01 (36000144000000010e0177a93f3962e7a) dm-8 EMC,Invista
size=100G features='1 queue_if_no_path' hwhandler='0' wp=rw
[root@t-19crac-r1 backup]#

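In this example, /dev/mapper/JIEKEDB-ARCH01 is an alias for the actual multipath device /dev/dm-5. The /dev/dm-5 device has four paths, and four devices (/dev/sdc, /dev/sdo, /dev/sdaa, /dev/sdam) are created to represent them. Any of these four /dev/sd* devices can be accessed, but they all reach the same disk. If you access the disk through one of the /dev/sd* names and that path goes down for any reason, you lose access to the device. If you access it through /dev/mapper/JIEKEDB* instead, the disk stays available until all four paths fail at the same time. Run 'udevadm info' to get the device identification details.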

udevadm info --query=all --name=/dev/mapper/JIEKEDB-ARCH01
[root@t-19crac-r1 backup]# udevadm info --query=all --name=/dev/mapper/JIEKEDB-ARCH01
P: /devices/virtual/block/dm-5
M: dm-5
R: 5
U: block
T: disk
D: b 253:5
N: dm-5
L: 50
S: disk/by-id/dm-name-JIEKEDB-ARCH01
S: disk/by-id/dm-uuid-mpath-36000144000000010e0177a93f3962e72
S: mapper/JIEKEDB-ARCH01
S: disk/by-id/wwn-0x6000144000000010e0177a93f3962e72
S: disk/by-id/scsi-36000144000000010e0177a93f3962e72
Q: 56
E: DEVPATH=/devices/virtual/block/dm-5
E: DEVNAME=/dev/dm-5
E: DEVTYPE=disk
E: DISKSEQ=56
E: MAJOR=253
E: MINOR=5
E: SUBSYSTEM=block
E: USEC_INITIALIZED=36071786
E: DM_UDEV_DISABLE_LIBRARY_FALLBACK_FLAG=1
E: DM_UDEV_PRIMARY_SOURCE_FLAG=1
E: DM_ACTIVATION=1
E: DM_NAME=JIEKEDB-ARCH01
E: DM_UUID=mpath-36000144000000010e0177a93f3962e72
E: DM_SUSPENDED=0
E: DM_UDEV_RULES_VSN=2
E: MPATH_SBIN_PATH=/sbin
E: MPATH_DEVICE_READY=1
E: DM_TYPE=scsi
E: DM_WWN=0x6000144000000010e0177a93f3962e72
E: DM_SERIAL=36000144000000010e0177a93f3962e72
E: ID_FS_TYPE=oracleasm
E: ID_FS_USAGE=filesystem
E: NVME_HOST_IFACE=none
E: SYSTEMD_READY=1
E: DEVLINKS=/dev/disk/by-id/dm-name-JIEKEDB-ARCH01 /dev/disk/by-id/dm-uuid-mpath-36000144000000010e0177a93f3962e72 /dev/mapper/JIEKEDB-ARCH01 /dev/disk/by-id/wwn-0x6000144000000010e0177a93f3962e72 /dev>
E: TAGS=:systemd:
E: CURRENT_TAGS=:systemd:

In the command output, either DM_NAME or DM_UUID can be used to uniquely identify the multipath device /dev/mapper/JIEKEDB-ARCH01.
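To see at a glance which /dev/sd* names are just different paths to the same LUN (and therefore trigger ORA-59066 when they are all discovered), the multipath -ll text can be flattened into one line per path. This is a rough sketch that assumes the output layout shown in this article; paths_by_map is a name invented for the example, and it only reads text on stdin, so it can be tried on saved output as well.

```shell
#!/usr/bin/env bash
# paths_by_map   (hypothetical helper)
# Reads `multipath -ll` text on stdin and prints one
# "alias wwid path-device" line per path.
paths_by_map() {
    awk '
        # Map header, e.g. "JIEKEDB-OCR01 (3600...) dm-15 EMC,Invista"
        $2 ~ /^\([0-9a-f]+\)$/ && $3 ~ /^dm-[0-9]+$/ {
            alias = $1
            wwid = substr($2, 2, length($2) - 2)
            next
        }
        # Path line: the field after the H:C:T:L tuple is the sd device name
        {
            for (i = 1; i < NF; i++) {
                if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/ && $(i + 1) ~ /^sd[a-z]+$/) {
                    print alias, wwid, $(i + 1)
                }
            }
        }
    '
}

# Example on a real host (as root):
#   multipath -ll | paths_by_map | grep OCR
```

Every alias that shows up with more than one sd device is a disk that a "/dev/sd*" discovery string would find several times.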

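Next, look at the udev rules file. It creates the symlinks /dev/asmdisks/jiekedb*, so the ASM parameter asm_diskstring can be written as /dev/asmdisks/jiekedb* or /dev/asm*. In short, any path that points into the /dev/asmdisks directory works, but /dev/sd* does not.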

[root@t-19crac-r1 backup]# cat /etc/udev/rules.d/99-oracle-asmdevices.rules
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e72",SYMLINK+="asmdisks/jiekedb-arch01",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e75",SYMLINK+="asmdisks/jiekedb-arch02",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e71",SYMLINK+="asmdisks/jiekedb-data01",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e70",SYMLINK+="asmdisks/jiekedb-data02",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e73",SYMLINK+="asmdisks/jiekedb-data03",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e74",SYMLINK+="asmdisks/jiekedb-data04",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e78",SYMLINK+="asmdisks/jiekedb-data05",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e77",SYMLINK+="asmdisks/jiekedb-data06",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e76",SYMLINK+="asmdisks/jiekedb-ocr01",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e79",SYMLINK+="asmdisks/jiekedb-ocr02",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e7b",SYMLINK+="asmdisks/jiekedb-ocr03",OWNER="grid", GROUP="asmadmin", MODE="0660"
KERNEL=="sd*",SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id -g -u -d $devnode",RESULT=="36000144000000010e0177a93f3962e7a",SYMLINK+="asmdisks/jiekedb-redo01",OWNER="grid", GROUP="asmadmin", MODE="0660"
t-19crac-r1:/home/grid(+ASM1)$ ll /dev/asm*
total 0
lrwxrwxrwx 1 root root 6 Sep 30 15:35 jiekedb-arch01 -> ../sdo
lrwxrwxrwx 1 root root 7 Sep 30 15:08 jiekedb-arch02 -> ../sdab
lrwxrwxrwx 1 root root 7 Sep 30 15:35 jiekedb-data01 -> ../sdao
lrwxrwxrwx 1 root root 6 Sep 30 15:35 jiekedb-data02 -> ../sdf
lrwxrwxrwx 1 root root 6 Sep 30 15:35 jiekedb-data03 -> ../sdg
lrwxrwxrwx 1 root root 6 Sep 30 15:35 jiekedb-data04 -> ../sdh
lrwxrwxrwx 1 root root 6 Sep 30 15:35 jiekedb-data05 -> ../sdi
lrwxrwxrwx 1 root root 7 Sep 30 15:35 jiekedb-data06 -> ../sdat
lrwxrwxrwx 1 root root 7 Sep 30 15:08 jiekedb-ocr01 -> ../sdai
lrwxrwxrwx 1 root root 6 Sep 30 15:08 jiekedb-ocr02 -> ../sdl
lrwxrwxrwx 1 root root 6 Sep 30 15:08 jiekedb-ocr03 -> ../sdm
lrwxrwxrwx 1 root root 7 Sep 30 15:08 jiekedb-redo01 -> ../sdal

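However, root.sh had already run by this point, and the mistake had been made earlier when choosing the path in the graphical installer, so the only option was to deinstall the GI clusterware and install it again. Changing the parameter inside the ASM instance, as tried below, did not help.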

t-19crac-r1:/home/grid(+ASM1)$ sqlplus / as sysasm

SQL*Plus: Release 19.0.0.0.0 - Production on Tue Sep 29 19:33:25 2025

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.23.0.0.0

SQL> show parameter asm_dis

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

asm_diskgroups string

asm_diskstring string /dev/sd*

SQL>

SQL> alter system set asm_diskstring='/dev/asm*';

System altered.

SQL> show parameter asm_dis

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

asm_diskgroups string

asm_diskstring string /dev/asm*

Deinstall and reinstall

===================== GI deinstall =====================

t-19crac-r1:/home/grid(+ASM1)$ $ORACLE_HOME/deinstall/deinstall

Checking for required files and bootstrapping ...

Please wait ...

Location of logs /tmp/deinstall2025-09-28_11-33-47AM/logs/

############ ORACLE DECONFIG TOOL START ############

Is this home configured on other nodes (y - yes, n - no)?[n]:y

Specify a comma-separated list of remote nodes to cleanup :t-19crac-r2

-- Answer y and enter the remote host name, that is, the host name of node 2

Oracle Home selected for deinstall is: /u01/app/19.0.0/grid

Inventory Location where the Oracle home registered is: /u01/app/oraInventory

ASM was not detected in the Oracle Home

Oracle Grid Management database was not found in this Grid Infrastructure home

Do you want to continue (y - yes, n - no)? [n]: y -- answer y to continue; after a short wait the deconfig command is generated

--

The deconfig command below can be executed in parallel on all the remote nodes. Execute the command on the local node after the execution completes on all the remote nodes.

Run the following command as the root user or the administrator on node "t-19crac-r1".

/u01/app/19.0.0/grid/crs/install/rootcrs.sh -force -deconfig -paramfile "/tmp/deinstall2025-09-28_11-33-47AM/response/deinstall_OraGI19Home1.rsp" -lastnode

Press Enter after you finish running the above commands

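Starting with Oracle Database 12c Release 1 (12.1.0.2), the roothas.sh script replaces the roothas.pl script in the Oracle Grid Infrastructure home for Oracle Restart, and the rootcrs.sh script replaces the rootcrs.pl script in the Grid home for Oracle Grid Infrastructure for a cluster.

The deinstall above removes everything cleanly, including the directory that held the grid home. Before unpacking the software again, the directories have to be recreated and their ownership set, and then the software is patched and the clusterware installed. This time the install went very smoothly, apart from one small pitfall that was again my own mistake. As shown below, the directories must be recreated and ownership set on both nodes after the deinstall, but through an oversight I did not run the last chown statement on node 2, which led to a new pitfall.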

mkdir -p /u01/app/19.0.0/grid
mkdir -p /u01/app/grid
chown -R grid:oinstall /u01
chmod -R 775 /u01
mkdir -p /u01/app/oracle/product/19.0.0/dbhome_1
chown -R oracle:oinstall /u01/app/oracle
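Because the slip here was a command that ran on one node but not the other, a small ownership check run on every node right after creating the directories would have caught it before the installer started. The following is a minimal sketch; owner_matches is a name made up for this example and it relies on GNU stat.

```shell
#!/usr/bin/env bash
# owner_matches PATH EXPECTED   (hypothetical helper)
# Succeeds when PATH is owned by EXPECTED, written as user:group.
owner_matches() {
    local path="$1" expected="$2" actual
    actual=$(stat -c '%U:%G' "$path") || return 2
    if [ "$actual" != "$expected" ]; then
        echo "MISMATCH $path: is $actual, expected $expected"
        return 1
    fi
    echo "ok $path: $actual"
}

# Run on every node after creating the directories, for example:
#   owner_matches /u01/app/19.0.0/grid grid:oinstall
#   owner_matches /u01/app/grid grid:oinstall
#   owner_matches /u01/app/oracle oracle:oinstall
#   owner_matches /u01/app/oracle/product/19.0.0/dbhome_1 oracle:oinstall
```

The check only reads metadata, so it is safe to repeat at any point during the installation.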

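Pitfall 8: sqlplus login fails with ORA-12547: TNS:lost contact

Because I forgot to set ownership of the Oracle home on node 2, the home directory stayed owned by grid:oinstall. The software installation showed no problem and the scripts ran through cleanly, but after the ADG standby instance was added, logging in with sqlplus failed with ORA-12547: TNS:lost contact.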

t-19crac-r2:/u01/app/oracle/product/19.0.0/dbhome_1/network/admin(Jieke2)$ sqlplus / as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Mon Sep 29 10:31:22 2025

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

ERROR:

ORA-12547: TNS:lost contact

Enter user-name: ^C

t-19crac-r1:/home/oracle(Jieke1)$ srvctl start database -d Jieke

PRCR-1079 : Failed to start resource ora.Jieke.db

CRS-5017: The resource action "ora.Jieke.db start" encountered the following error:

ORA-12547: TNS:lost contact

. For details refer to "(:CLSN00107:)" in "/u01/app/grid/diag/crs/t-19crac-r2/crs/trace/crsd_oraagent_oracle.trc".

CRS-2674: Start of 'ora.Jieke.db' on 't-19crac-r2' failed

CRS-2632: There are no more servers to try to place resource 'ora.Jieke.db' on that would satisfy its placement policy

t-19crac-r1:/home/oracle(Jieke1)$ srvctl status database -d Jieke

Instance Jieke1 is running on node t-19crac-r1

Instance Jieke2 is not running on node t-19crac-r2

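Checking the Oracle home on node 2 confirmed that it was owned by grid:oinstall, so its ownership had to be corrected. This must not be done bluntly: the file permissions under the home directory are intricate, and a wrong change leads to unexpected problems. What follows happens to be exactly such a bad example: the last shell command changes the owner of the home recursively with -R, which planted a new pitfall.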

[root@t-19crac-r2 ~]# cd /u01
[root@t-19crac-r2 u01]# ll
total 0
drwxr-xr-x 6 root oinstall 66 Sep 28 14:43 app
drwxrwxr-x 2 grid oinstall 6 Sep 26 11:27 backup
[root@t-19crac-r2 u01]# cd app
[root@t-19crac-r2 app]# ll
total 0
drwxr-xr-x 3 root oinstall 18 Sep 28 14:00 19.0.0
drwxrwxr-x 7 grid oinstall 83 Sep 28 14:54 grid
drwxrwx--- 5 grid oinstall 92 Sep 29 10:51 oraInventory
drwxrwxr-x 5 grid oinstall 46 Sep 28 18:03 oracle <<<---- the owner here should be oracle:oinstall
[root@t-19crac-r2 app]# cd oracle
[root@t-19crac-r2 oracle]# ll
total 4
drwxr-xr-x 3 oracle oinstall 18 Sep 28 18:03 admin
drwxrwxr-x 23 oracle oinstall 4096 Sep 28 15:44 diag
drwxrwxr-x 3 grid oinstall 20 Sep 26 11:27 product <<<---- the owner here should be oracle:oinstall
[root@t-19crac-r2 oracle]# cd product/
[root@t-19crac-r2 product]# ll
total 0
drwxrwxr-x 3 grid oinstall 22 Sep 26 11:27 19.0.0 <<<---- the owner here should be oracle:oinstall
[root@t-19crac-r2 product]# cd 19.0.0/
[root@t-19crac-r2 19.0.0]# ll
total 4
drwxrwxr-x 72 grid oinstall 4096 Sep 28 15:44 dbhome_1 <<<---- the owner here should be oracle:oinstall
[root@t-19crac-r2 19.0.0]# cd dbhome_1/
[root@t-19crac-r2 dbhome_1]# ll
total 100
drwxr-x--- 15 oracle oinstall 4096 Sep 28 15:44 OPatch
drwxr-x--- 14 oracle oinstall 4096 Sep 28 15:44 OPatch_bak0928
drwxr-xr-x 2 oracle oinstall 26 Sep 28 15:44 QOpatch
drwxr-xr-x 5 oracle oinstall 52 Sep 28 15:42 R
drwxr-xr-x 2 oracle oinstall 102 Sep 28 15:44 addnode
drwxr-xr-x 5 oracle oinstall 4096 Sep 28 15:44 apex
drwxr-xr-x 9 oracle oinstall 93 Sep 28 15:43 assistants
[root@t-19crac-r2 oracle]# chown -R oracle:oinstall /u01/app/oracle <<<--- a bad example; do not fix it this bluntly

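This blunt ownership change did resolve ORA-12547: TNS:lost contact: I could log in to the database locally and start the instance normally. Remote connections through TNS, however, no longer worked and failed with a listener-related error. So if the permissions of your database home are changed incorrectly, applications, monitoring, and other programs will be unable to connect. Fortunately, Oracle provides a permission repair script; otherwise the only fix would be to delete the node and add it back.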

[root@t-19crac-r2 oracle]# ll

total 4

drwxr-xr-x 3 oracle oinstall 18 Sep 28 18:03 admin

drwxrwxr-x 23 oracle oinstall 4096 Sep 28 15:44 diag

drwxrwxr-x 3 oracle oinstall 20 Sep 26 11:27 product

[root@t-19crac-r2 oracle]# su - oracle

Last login: Mon Sep 29 14:43:53 CST 2025 on pts/0

t-19crac-r2:/home/oracle(Jieke2)$ sys

SQL*Plus: Release 19.0.0.0.0 - Production on Mon Sep 29 14:50:04 2025

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to an idle instance.

SQL> startup

ORACLE instance started.

Total System Global Area 2.1475E+11 bytes

Fixed Size 37410568 bytes

Variable Size 5.4895E+10 bytes

Database Buffers 1.5905E+11 bytes

Redo Buffers 767893504 bytes

Database mounted.

Database opened.

SQL>

SQL> select status from v$instance;

STATUS

------------

OPEN

-- Remote connection test

$ sqlplus sys/Oracle19C123@192.168.21.71:61512/Jieke as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Mon Sep 29 18:06:10 2025

Version 19.23.0.0.0

Copyright (c) 1982, 2022, Oracle. All rights reserved.

ERROR:

ORA-12537: TNS:connection closed

Enter user-name:
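The article does not pin down which permission the recursive chown damaged. One commonly reported cause, offered here only as an assumption to verify on your own system, is that chown on Linux clears the setuid and setgid bits of regular files, and the oracle executable under $ORACLE_HOME/bin normally carries both. A hedged sketch of a check follows; setid_state is a name invented for this example and it uses GNU stat.

```shell
#!/usr/bin/env bash
# setid_state FILE   (hypothetical helper)
# Prints the octal mode of FILE and succeeds only when both the
# setuid and the setgid bit are set.
setid_state() {
    local file="$1" mode
    mode=$(stat -c '%a' "$file") || return 2
    if [ -u "$file" ] && [ -g "$file" ]; then
        echo "$file mode $mode: setuid and setgid present"
    else
        echo "$file mode $mode: setuid or setgid missing"
        return 1
    fi
}

# Example, run on both nodes and compared (assumes ORACLE_HOME is set):
#   setid_state "$ORACLE_HOME/bin/oracle"
```

If the healthy node reports both bits and the broken node does not, that difference is a strong hint, but the full repair still needs the complete permission set from the healthy node.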

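Pitfall 9: Repairing directory permissions on a RAC node

The recursive chown -R on the Oracle home above left applications unable to connect remotely. Oracle provides a permission.pl script that captures the permissions on a healthy node and generates a .cmd file for replaying them, which is then run on the node whose permissions are wrong.

The script generates two files:

a. permission-<timestamp>: contains the file permissions as octal values, plus the owner and group of each captured file

b. restore-perm-<timestamp>.cmd: contains the commands that change the permissions, owner, and group of the captured files

Steps to restore the captured permissions of a directory

- Copy "restore-perm-<timestamp>.cmd" to the required location or node

- Grant execute permission on the file generated during the capture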

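-- Stop the database instance on node 2 and back up the home directory as a precaution.

shutdown immediate

-- Back up the directory (-a keeps ownership and modes; plain -r would not)

# cp -a /u01/app/oracle/ /u01/app/oracle_bak0929

-- On the healthy node (node 1), capture the correct permissions as root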

[root@t-19crac-r1 tmp]# ./permission.pl /u01/app/oracle

Following log files are generated

logfile : permission-Mon-Sep-29-18-32-24-2025

Command file : restore-perm-Mon-Sep-29-18-32-24-2025.cmd

Linecount : 47305

[root@t-19crac-r1 tmp]# ls -lrth restore-perm-Mon-Sep-29-18-32-24-2025.cmd

-rw-r--r-- 1 root root 12M Sep 29 18:32 restore-perm-Mon-Sep-29-18-32-24-2025.cmd

[root@t-19crac-r1 tmp]# ls -lrth permission-Mon-Sep-29-18-32-24-2025

-rw-r--r-- 1 root root 5.7M Sep 29 18:32 permission-Mon-Sep-29-18-32-24-2025

-- Copy the file to the node with the wrong permissions (node 2) and grant execute permission

chmod 755 restore-perm-Mon-Sep-29-18-32-24-2025.cmd

-- Run it as root

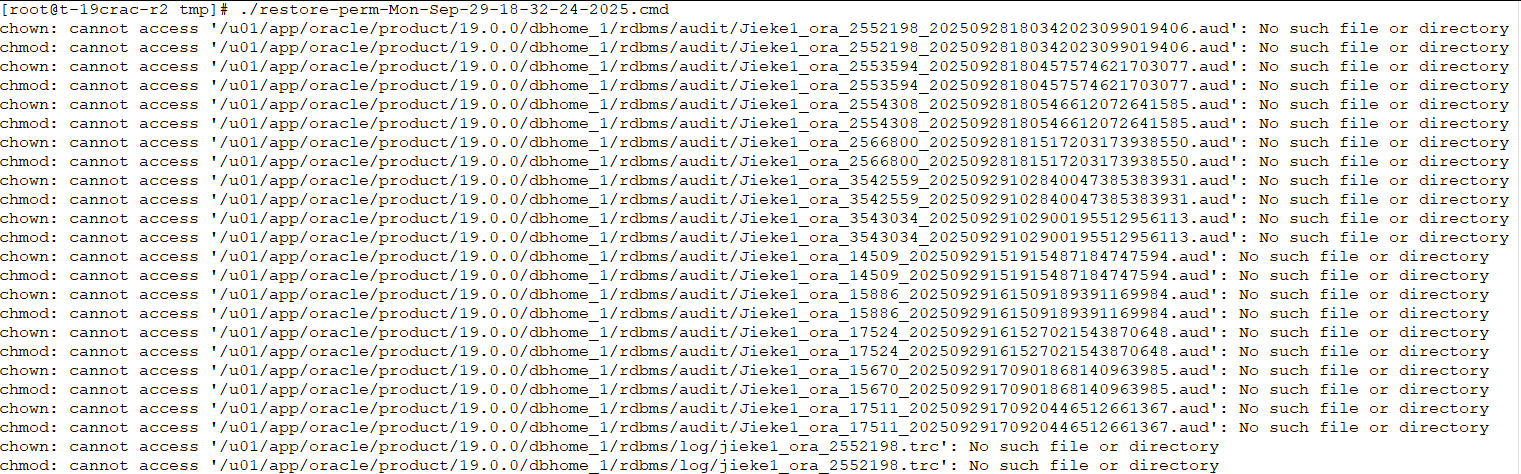

./restore-perm-Mon-Sep-29-18-32-24-2025.cmd

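While the restore script runs, it may report some files as not found. This is nothing to worry about: many log and audit files exist only on node 1, and their permissions do not need changing. Once the script finished, I tested a remote login again and it worked.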

$ sqlplus sys/Oracle19C123@192.168.21.71:61512/Jieke as sysdba

SQL*Plus: Release 19.0.0.0.0 - Production on Mon Sep 29 18:46:35 2025

Version 19.23.0.0.0

Copyright (c) 1982, 2023, Oracle. All rights reserved.

Connected to:

Oracle Database 19c Enterprise Edition Release 19.0.0.0.0 - Production

Version 19.23.0.0.0

SQL>

set line 240

col HOST_NAME for a30

select INSTANCE_NAME,HOST_NAME,VERSION,STARTUP_TIME,STATUS from gv$instance;

SQL>

INSTANCE_NAME HOST_NAME VERSION STARTUP_TIME STATUS

---------------- ------------------------------ ----------------- ------------------- ------------

Jieke2 t-19crac-r2 19.0.0.0.0 2025-09-29 18:01:27 OPEN

SQL>

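Note: this sample code is provided for educational purposes only and is not supported by Oracle Support Services. It has, however, been tested internally and works as documented. We do not guarantee that it will work in your environment, so test it there before relying on it.

Proofread this sample code before using it! Because text editors, e-mail packages, and operating systems handle text formatting (spaces, tabs, and carriage returns) differently, the sample code may not be in an executable state when you first receive it. Check the sample code and correct any such errors.

Note: the script restores permissions to their state at capture time; it is not meant for resetting permissions.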
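To make the capture-and-replay idea concrete, here is a small sketch of the same technique in shell. It is not Oracle's permission.pl and makes no claim to match its output format: capture_perms and restore_perms are names invented for this example, find -printf is a GNU extension, and file names containing newlines or trailing whitespace are not handled.

```shell
#!/usr/bin/env bash
# capture_perms DIR LISTFILE   (hypothetical helper)
# Records "octal-mode owner group path" for everything under DIR.
capture_perms() {
    find "$1" -printf '%m %u %g %p\n' > "$2"
}

# restore_perms LISTFILE   (hypothetical helper)
# Replays the recorded ownership and modes; paths that do not exist
# on this node are reported and skipped.
restore_perms() {
    local mode owner group path
    while read -r mode owner group path; do
        if [ ! -e "$path" ] && [ ! -L "$path" ]; then
            echo "skipping missing path: $path"
            continue
        fi
        # chown first: it can clear setuid/setgid bits, which chmod then restores.
        chown -h "$owner:$group" "$path"
        # chmod follows symlinks, so apply it to non-links only.
        [ -L "$path" ] || chmod "$mode" "$path"
    done < "$1"
}

# Example (as root): capture on the healthy node, copy the list, replay it
#   capture_perms /u01/app/oracle /tmp/oracle-perms.list
#   restore_perms /tmp/oracle-perms.list
```

Applying chown before chmod matters for setuid binaries, and skipping missing paths mirrors the "file not found" messages seen during the real restore.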

References

https://access.redhat.com/solutions/7125112

https://access.redhat.com/solutions/7030065

https://docs.oracle.com/en/database/oracle/oracle-database/19/cwlin/oracle-deinstallation-tool-deinstall.html

Srvctl Database Startup Failure: PRCR-1079 CRS-2674 GIM-00104 GIM-00093 (Doc ID 3080414.1)

"ORA-12547: TNS:lost Contact" When conncting to DB with OS Authentication (Doc ID 3084142.1)

Script to capture and restore file permission in a directory (for eg. ORACLE_HOME) (Doc ID 1515018.1)

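That's all. I hope this helps you. If you found it useful, share it with the friends and colleagues you care about, so we can learn and improve together.

You are welcome to follow my WeChat official account "JiekeXu DBA之路" and learn something new with me!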

——————————————————————————

WeChat official account: JiekeXu DBA之路

Modb (墨天轮): https://www.modb.pro/u/4347

CSDN: https://blog.csdn.net/JiekeXu

ITPUB: https://blog.itpub.net/69968215

Tencent Cloud: https://cloud.tencent.com/developer/user/5645107

——————————————————————————