大家好,我是JiekeXu,江湖人称“强哥”,青学会MOP技术社区主席,荣获Oracle ACE Pro称号,金仓最具价值倡导者KVA,崖山最具价值专家YVP,IvorySQL开源社区专家顾问委员会成员,墨天轮MVP,墨天轮年度“墨力之星”,拥有 Oracle OCP/OCM 认证,MySQL 5.7/8.0 OCP 认证以及金仓KCA、KCP、KCM、KCSM证书,PCA、PCTA、OBCA、OGCA等众多国产数据库认证证书,欢迎关注我的微信公众号“JiekeXu DBA之路”,然后点击右上方三个点“设为星标”置顶,更多干货文章才能第一时间推送,谢谢!

前 言

最近,遇到了 Oracle ASM 磁盘组故障的几个小问题,感觉比较典型,可能有很多朋友在工作中也会遇到,特此记录一下。Oracle ASM 官方有一本在线书籍《Automatic Storage Management Administrator’s Guide》,详细记录了 ASM 相关的管理员指南操作,使用 ASMCA、ASMCMD 命令行实用程序,可用于管理 Oracle ASM 实例、磁盘组、磁盘组的文件权限改造、磁盘组内的文件和目录、磁盘组模板以及卷。记得大概在六年前也写过一篇 《Oracle ASM 磁盘组基础知识整理收藏版》,内容较多,阅读量也比较高,看来很受欢迎。

问题一、OCR 磁盘为 DROPPED 状态处理

近期搭建了一套生产仿真环境的 RAC,由于系统工程师配置多路径时没有配置正确,仅配置了单路径,测试高可用的时候发现多路径链路并没有高可用,在测试的过程中导致 OCR 磁盘出现异常,被踢出 OCR 磁盘组出现了DROPPED 状态。

检查 OCR 状态

使用 query 命令查看仅有两块磁盘,缺少一块盘。因 OCR 是默认冗余,数据库还能正常提供服务,暂时没有影响,但不能再失去一块盘,否则就会挂掉。

$ crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 47bc00a3d8c34f2fbf56c11613ce71e7 (/dev/asmdisks/otwrac-ocr01) [OCR]

2. ONLINE 060ea9af3ca04f20bf3d01e5ef7e9f91 (/dev/asmdisks/otwrac-ocr02) [OCR]

Located 2 voting disk(s).

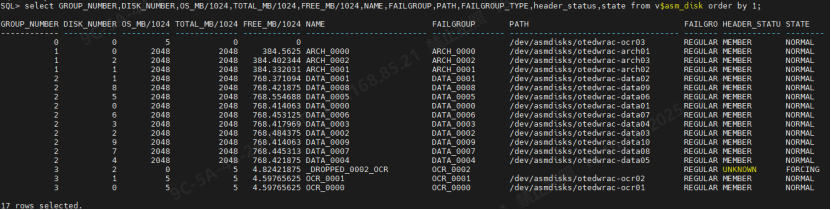

使用 SQL 在 ASM 实例或者 DB 实例查看,确实发现有一块 5GB 大小的磁盘出现 UNKNOWN 状态,磁盘名字为 _DROPPED_0002_OCR 则可以确定就是 OCR 磁盘。

SQL> set lin 1000 pagesize 999

SQL> col NAME for a25

SQL> col FAILGROUP for a15

SQL> col path for a35

SQL> select GROUP_NUMBER,DISK_NUMBER,OS_MB/1024,TOTAL_MB/1024,FREE_MB/1024,NAME,FAILGROUP,PATH,FAILGROUP_TYPE,header_status,state from v$asm_disk order by 1;

GROUP_NUMBER DISK_NUMBER OS_MB/1024 TOTAL_MB/1024 FREE_MB/1024 NAME FAILGROUP PATH FAILGRO HEADER_STATU STATE

------------ ----------- ---------- ------------- ------------ ------------------------- --------------- ----------------------------------- ------- ------------ --------

0 0 5 0 0 /dev/asmdisks/otedwrac-ocr03 REGULAR MEMBER NORMAL

1 0 2048 2048 384.5625 ARCH_0000 ARCH_0000 /dev/asmdisks/otedwrac-arch01 REGULAR MEMBER NORMAL

1 2 2048 2048 384.402344 ARCH_0002 ARCH_0002 /dev/asmdisks/otedwrac-arch03 REGULAR MEMBER NORMAL

1 1 2048 2048 384.332031 ARCH_0001 ARCH_0001 /dev/asmdisks/otedwrac-arch02 REGULAR MEMBER NORMAL

2 1 2048 2048 768.371094 DATA_0001 DATA_0001 /dev/asmdisks/otedwrac-data02 REGULAR MEMBER NORMAL

2 8 2048 2048 768.421875 DATA_0008 DATA_0008 /dev/asmdisks/otedwrac-data09 REGULAR MEMBER NORMAL

2 5 2048 2048 768.554688 DATA_0005 DATA_0005 /dev/asmdisks/otedwrac-data06 REGULAR MEMBER NORMAL

2 0 2048 2048 768.414063 DATA_0000 DATA_0000 /dev/asmdisks/otedwrac-data01 REGULAR MEMBER NORMAL

2 6 2048 2048 768.453125 DATA_0006 DATA_0006 /dev/asmdisks/otedwrac-data07 REGULAR MEMBER NORMAL

2 3 2048 2048 768.417969 DATA_0003 DATA_0003 /dev/asmdisks/otedwrac-data04 REGULAR MEMBER NORMAL

2 2 2048 2048 768.484375 DATA_0002 DATA_0002 /dev/asmdisks/otedwrac-data03 REGULAR MEMBER NORMAL

2 9 2048 2048 768.414063 DATA_0009 DATA_0009 /dev/asmdisks/otedwrac-data10 REGULAR MEMBER NORMAL

2 7 2048 2048 768.445313 DATA_0007 DATA_0007 /dev/asmdisks/otedwrac-data08 REGULAR MEMBER NORMAL

2 4 2048 2048 768.421875 DATA_0004 DATA_0004 /dev/asmdisks/otedwrac-data05 REGULAR MEMBER NORMAL

3 2 0 5 4.82421875 _DROPPED_0002_OCR OCR_0002 REGULAR UNKNOWN FORCING

3 1 5 5 4.59765625 OCR_0001 OCR_0001 /dev/asmdisks/otedwrac-ocr02 REGULAR MEMBER NORMAL

3 0 5 5 4.59765625 OCR_0000 OCR_0000 /dev/asmdisks/otedwrac-ocr01 REGULAR MEMBER NORMAL

--也可以使用 lsdsk -p 命令在 ASM 中查询。

--示例:正常输出

ASMCMD> lsdsk -p

Group_Num Disk_Num Incarn Mount_Stat Header_Stat Mode_Stat State Path

2 0 4081510609 CACHED MEMBER ONLINE NORMAL /dev/sdc

2 1 4081510610 CACHED MEMBER ONLINE NORMAL /dev/sdd

1 0 4081510605 CACHED MEMBER ONLINE NORMAL /dev/sde

3 0 4081510612 CACHED MEMBER ONLINE NORMAL /dev/sdf

3 1 4081510613 CACHED MEMBER ONLINE NORMAL /dev/sdg

3 2 4081510611 CACHED MEMBER ONLINE NORMAL /dev/sdh

2 2 4081510608 CACHED MEMBER ONLINE NORMAL /dev/sdi

1 1 4081510604 CACHED MEMBER ONLINE NORMAL /dev/sdj

2 4 4081510607 CACHED MEMBER ONLINE NORMAL /dev/sdk

2 3 4081510606 CACHED MEMBER ONLINE NORMAL /dev/sdl

1 2 4081510603 CACHED MEMBER ONLINE NORMAL /dev/sdm

ASMCMD>

我们去查看 ASM 日志,发现此 OCR 磁盘确实也是被 ASM 实例强制 drop 掉了,这里仅提供日志路径,如果有相似问题,请自行查看。

--19c /u01/app/grid/diag/asm/+asm/+ASM1/trace/alert_+ASM1.log grep OCR_00 alert_+ASM1.log

强制重新添加

这里如果不使用强制,可能无法添加磁盘,会报已经存在;但如果使用 Force 强制添加时一定要确认是正确的磁盘,如果把其他正常磁盘加进去问题就大了,赶紧跑路吧。。。

--alter diskgroup OCR drop disk OCR_02 FORCE;

alter diskgroup OCR add disk '/dev/asmdisks/otwrac-ocr03' force;

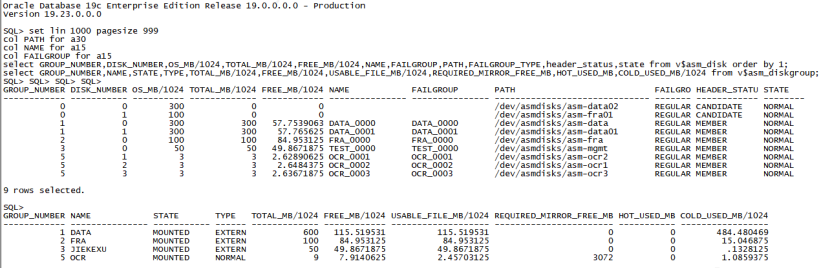

问题二、添加 ASM 磁盘遇到 ORA-15137 问题处理

还有一次在测试环境中,表空间不足,需要添加 ASM 磁盘,但在 ASM 实例中添加磁盘时报错 ORA-15137 问题处理。

su - grid

sqlplus / as sysasm

alter diskgroup DATA add disk '/dev/asmdisks/asm-data04'rebalance power 10;

ORA-15032: not all alterations performed

ORA-15137: The ASM cluster is in rolling patch state.

确认集群的当前状态是否为滚动补丁状态

(+ASM1)$ crsctl query crs activeversion -f Oracle Clusterware active version on the cluster is [19.0.0.0.0]. The cluster upgrade state is [ROLLING PATCH]. The cluster active patch level is [3509829075]. (+ASM1)$ asmcmd showclusterstate In Rolling Patch

检查各节点的补丁是否一致

$ /u01/app/19.0.0.0/grid/bin/kfod op=patches

(+ASM1)$ kfod op=patches

kgfnGetFacility: facility=0x15f4d90

kgfnInitDiag: diagctx=0x14c1fc0

---------------

List of Patches

===============

29517242

29517247

29585399

36233263

36233343

36240578

36383196

36460248

$ /u01/app/19.0.0.0/grid/bin/kfod op=PATCHLVL

kgfnGetFacility: facility=0x24a8d90

kgfnInitDiag: diagctx=0x2375fc0

-------------------

Current Patch level

===================

1545812015

(+ASM2)$ kfod op=patches

kgfnGetFacility: facility=0x2161d90

kgfnInitDiag: diagctx=0x202efc0

---------------

List of Patches

===============

29517242

29517247

29585399

36233263

36233343

36240578

36383196

36460248

(+ASM2)$ kfod op=PATCHLVL

kgfnGetFacility: facility=0x1badd90

kgfnInitDiag: diagctx=0x1a7afc0

-------------------

Current Patch level

===================

1545812015

opatch lspatches

36460248;TOMCAT RELEASE UPDATE 19.0.0.0.0 (36460248)

36383196;DBWLM RELEASE UPDATE 19.0.0.0.0 (36383196)

36240578;OCW RELEASE UPDATE 19.23.0.0.0 (36240578)

36233343;ACFS RELEASE UPDATE 19.23.0.0.0 (36233343)

36233263;Database Release Update : 19.23.0.0.240416 (36233263)

以上排查结果一致,如果不一致要么打补丁,要么回退小补丁;接下来排查这四项。

$ <GRID_HOME>/bin/crsctl query crs releasepatch

$ <GRID_HOME>/bin/crsctl query crs softwarepatch

$ <GRID_HOME>/bin/crsctl query crs releaseversion

$ <GRID_HOME>/bin/crsctl query crs softwareversion

(+ASM1)$ crsctl query crs releasepatch

Oracle Clusterware release patch level is [1545812015] and the complete list of patches [29517242 29517247 29585399 36233263 36233343 36240578 36383196 36460248 ] have been applied on the local node. The release patch string is [19.23.0.0.0].

Oracle Clusterware release patch level is [1545812015] and the complete list of patches [29517242 29517247 29585399 36233263 36233343 36240578 36383196 36460248 ] have been applied on the local node. The release patch string is [19.23.0.0.0].

(+ASM1)$ crsctl query crs softwarepatch

Oracle Clusterware patch level on node dwrac-86 is [3509829075].

Oracle Clusterware patch level on node dwrac-87 is [1545812015].

(+ASM1)$ crsctl query crs releaseversion

Oracle High Availability Services release version on the local node is [19.0.0.0.0]

Oracle High Availability Services release version on the local node is [19.0.0.0.0]

(+ASM1)$ crsctl query crs softwareversion

Oracle Clusterware version on node [dwrac-86] is [19.0.0.0.0]

Oracle Clusterware version on node [dwrac-87] is [19.0.0.0.0]

(+ASM1)$ cluutil -ckpt -oraclebase $ORACLE_BASE -chkckpt -name ROOTCRS_PREPATCH -status

FAIL

(+ASM2)$ cluutil -ckpt -oraclebase $ORACLE_BASE -chkckpt -name ROOTCRS_PREPATCH -status

START

如果发现补丁不一致,需要对不一致的节点进行修复。这里发现 softwarepatch 不一致。

解决办法

根据文档ORA-15137 After Rolling Back Failed Patch at GI_HOME (Doc ID 1968727.1)

Case 1. Update the patch level on all nodes, As root user

$ clscfg -patch

Example:

# <GRID_HOME>/bin/clscfg -patch

clscfg: -patch mode specified

clscfg: EXISTING configuration version 5 detected.

clscfg: version 5 is 12c Release 1.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

And validate as root user

[root@dwrac-86 ~]# /u01/app/19.0.0.0/grid/bin/clscfg -patch

clscfg: -patch mode specified

clscfg: EXISTING configuration version 19 detected.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

[root@dwrac-87 ~]# /u01/app/19.0.0.0/grid/bin/clscfg -patch

clscfg: -patch mode specified

clscfg: EXISTING configuration version 19 detected.

Successfully accumulated necessary OCR keys.

Creating OCR keys for user 'root', privgrp 'root'..

Operation successful.

$ crsctl query crs softwarepatch

Example:

# <GRID_HOME>/bin/crsctl query crs softwarepatch

Oracle Clusterware patch level on node host01 is [1964077727].

# <GRID_HOME>/bin/crsctl query crs softwarepatch

Oracle Clusterware patch level on node host02 is [1964077727].

dwrac-86:/home/grid(+ASM1)$ crsctl query crs softwarepatch

Oracle Clusterware patch level on node dwrac-86 is [1545812015].

dwrac-87:/home/grid(+ASM2)$ crsctl query crs softwarepatch

Oracle Clusterware patch level on node dwrac-87 is [1545812015].

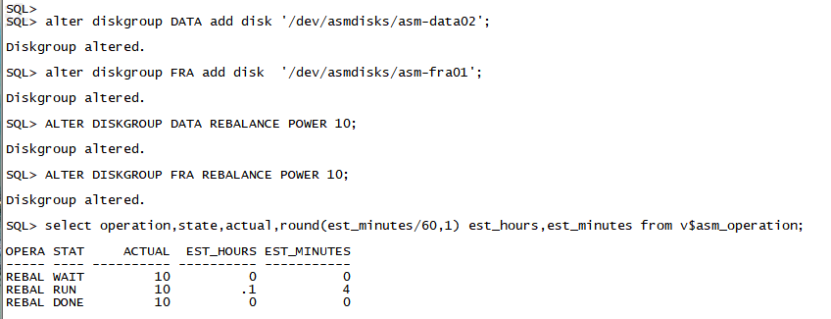

If both were at same level,stop rolling patch mode from one of the node as root user

如果两者处于同一级别,则以根用户身份从其中一个节点停止滚动补丁模式

# crsctl stop rollingpatch

Then startup the cluster and create asm diskgroup. 然后启动群集并创建 asm 磁盘组。

Example:

[root@dwrac-86 ~]# /u01/app/19.0.0.0/grid/bin/crsctl stop rollingpatch

CRS-1161: The cluster was successfully patched to patch level [1545812015].

另外一种情况就是补丁不一致的情况下,应用相同补丁或者回退小补丁,这里没有遇到,就不在说明了。

修复不一致的节点,在多余补丁的节点上执行以下操作:

# 停止数据库

$ shutdown immediate

# 准备补丁前操作

$ ./rootcrs.sh -prepatch

# 提交并回滚多余补丁(假设多余补丁ID为29757256)

$ ./patchgen commit -rb 29757256

# 补丁后操作

$ ./rootcrs.sh -postpatch

# 再次执行准备和补丁后操作以确保一致性

$ ./rootcrs.sh -prepatch

$ ./rootcrs.sh -postpatch

验证集群状态,确保集群状态从滚动补丁变为正常。

$ asmcmd showclusterstate

In Rolling Patch -> NORMAL

ASM 其他常用命令 SQL

--使用 ASMCMD showclustermode 命令来确定是否启用了 Oracle Flex ASM

$ asmcmd showclustermode

ASM cluster : Flex mode enabled - Direct Storage Access

--使用 SQL*Plus 和 ASMCMD 命令查看 Oracle Flex ASM 连接

col INSTANCE_NAME for a35

set line 345

SELECT instance_name, db_name, status FROM V$ASM_CLIENT;

INSTANCE_NAME DB_NAME STATUS

--------------- -------- ------------

+ASM1 +ASM CONNECTED

orcl1 orcl CONNECTED

orcl2 orcl CONNECTED

$ asmcmd lsct data

DB_Name Status Software_Version Compatible_version Instance_Name Disk_Group

+ASM CONNECTED 19.0.0.0.0 19.0.0.0.0 +ASM DATA

orcl CONNECTED 19.0.0.0.0 19.0.0.0.0 orcl1 DATA

orcl CONNECTED 19.0.0.0.0 19.0.0.0.0 orcl2 DATA

--示例 6-1 使用 V$ASM_ATTRIBUTE 视图查看磁盘组属性

--这是使用V$ASM_ATTRIBUTE和V$ASM_DISKGROUP视图的一个示例。要使磁盘组在V$ASM_ATTRIBUTE视图输出中显示,COMPATIBLE.ASM值必须设置为11.1或更高。标记为只读(Y)的属性只能在磁盘组创建期间进行设置。

SELECT SUBSTR(dg.name,1,12) AS diskgroup, SUBSTR(a.name,1,24) AS name,

SUBSTR(a.value,1,24) AS value, read_only FROM V$ASM_DISKGROUP dg,

V$ASM_ATTRIBUTE a WHERE dg.name = 'DATA' AND dg.group_number = a.group_number

AND a.name NOT LIKE '%template%';

DISKGROUP NAME VALUE READ_ON

------------ ------------------------ ------------------------ -------

DATA idp.type dynamic N

DATA idp.boundary auto N

DATA vam_migration_done false Y

DATA scrub_metadata.enabled TRUE N

DATA scrub_async_limit 1 N

DATA content_hardcheck.enable FALSE N

DATA access_control.umask 066 N

DATA access_control.enabled FALSE N

DATA cell.sparse_dg allnonsparse N

DATA cell.smart_scan_capable FALSE N

DATA compatible.advm 19.0.0.0.0 N

DATA compatible.rdbms 19.0.0.0.0 N

DATA compatible.asm 19.0.0.0.0 N

DATA appliance._partnering_ty GENERIC Y

DATA au_size 1048576 Y

DATA content.check FALSE N

DATA content.type data N

DATA logical_sector_size 512 N

DATA sector_size 512 N

DATA ate_conversion_done true Y

DATA preferred_read.enabled FALSE N

DATA thin_provisioned FALSE N

DATA failgroup_repair_time 24.0h N

DATA phys_meta_replicated true Y

DATA disk_repair_time 12.0h N

--示例6-3 使用V$ASM_DISK查看磁盘组中的磁盘

--这是在Oracle ASM实例上运行V$ASM_DISK和V$ASM_DISKGROUP视图的使用示例。该示例显示与磁盘组关联的磁盘,以及磁盘的挂载状态、状态和故障组。

SELECT SUBSTR(dg.name,1,16) AS diskgroup, SUBSTR(d.name,1,16) AS asmdisk,

d.mount_status, d.state, SUBSTR(d.failgroup,1,16) AS failgroup

FROM V$ASM_DISKGROUP dg, V$ASM_DISK d WHERE dg.group_number = d.group_number;

DISKGROUP ASMDISK MOUNT_S STATE FAILGROUP

---------------- ---------------- ------- -------- ----------------

DATA DATA_0008 CACHED NORMAL DATA_0008

--示例6-4 使用V$ASM_DISK_STAT查看磁盘组中的磁盘

--这是在Oracle ASM实例上使用V$ASM_DISK_STAT和V$ASM_DISKGROUP_STAT视图的一个示例。该示例显示与特定磁盘组关联的磁盘,以及挂载状态、状态和各种读写统计信息。

SELECT SUBSTR(dgs.name,1,10) AS diskgroup, SUBSTR(ds.name,1,10) AS asmdisk,

ds.mount_status, ds.state, ds.reads, ds.writes, ds.read_time, ds.write_time,

bytes_read, bytes_written

FROM V$ASM_DISKGROUP_STAT dgs, V$ASM_DISK_STAT ds

WHERE dgs.group_number = ds.group_number AND dgs.name = 'DATA';

DISKGROUP ASMDISK MOUNT_S STATE READS WRITES READ_TIME WRITE_TIME BYTES_READ BYTES_WRITTEN

---------- ---------- ------- ------- ------ ------ --------- ---------- ---------- -------------

DATA DATA_0000 CACHED NORMAL 841 10407 1.212218 3.511977 23818240 178369024

DATA DATA_0008 CACHED NORMAL 26065 1319 1.592524 .297728 436203520 38358528

DATA DATA_0010 CACHED NORMAL 561 868 .794849 .337575 18631680 22584320

--示例6-5 使用V$ASM_CLIENT查看磁盘组客户端

这是在Oracle ASM实例上使用V$ASM_CLIENT和V$ASM_DISKGROUP视图的一个示例。该示例显示磁盘组以及有关已连接数据库客户端实例的信息。

SELECT dg.name AS diskgroup, SUBSTR(c.instance_name,1,12) AS instance,

SUBSTR(c.db_name,1,12) AS dbname, SUBSTR(c.SOFTWARE_VERSION,1,12) AS software,

SUBSTR(c.COMPATIBLE_VERSION,1,12) AS compatible

FROM V$ASM_DISKGROUP dg, V$ASM_CLIENT c

WHERE dg.group_number = c.group_number;

DISKGROUP INSTANCE DBNAME SOFTWARE COMPATIBLE

------------------------------ ------------ -------- ------------ ------------

DATA +ASM +ASM 19.0.0.0.0 19.0.0.0.0

DATA orcl orcl 19.0.0.0.0 19.0.0.0.0

DATA +ASM asmvol 19.0.0.0.0 19.0.0.0.0

FRA orcl orcl 19.0.0.0.0 19.0.0.0.0

...

--示例6-9 使用V$ASM_FILE查看Oracle ASM文件访问控制信息

--此示例显示了在V$ASM_FILE视图中显示的Oracle ASM文件访问控制文件权限信息。

SELECT dg.name AS diskgroup, a.name, f.permissions, f.user_number, u.os_name,

f.usergroup_number, ug.name

FROM V$ASM_DISKGROUP dg, V$ASM_USER u, V$ASM_USERGROUP ug, V$ASM_FILE f, V$ASM_ALIAS a

WHERE dg.name = 'FRA' AND dg.group_number = u.group_number AND

u.group_number = ug.group_number AND ug.group_number = f.group_number AND

f.group_number = a.group_number AND

f.usergroup_number = ug.usergroup_number AND f.user_number = u.user_number AND

f.file_number = a.file_number;

DISKGROUP NAME PERMISSIONS USER_NUMBER OS_NAME USERGROUP_NUMBER NAME

--------- ---------------------- ----------- ----------- ------- ---------------- -------

DATA USERS.259.685366091 rw-r----- 3 grid 1 asm_fra

DATA TEMP.264.685366227 rw-r----- 3 grid 1 asm_fra

...

参考链接

https://docs.oracle.com/en/database/oracle/oracle-database/19/ostmg/asm-intro.html#GUID-D577D6A5-0A08-4070-B4FA-A39C5D548CD2 https://docs.oracle.com/en/database/oracle/oracle-database/26/ostmg/create-diskgroups.html#GUID-D8A4F1E1-D5F2-4372-8BF8-2A6CB9724930 ORA-15137 After Rolling Back Failed Patch at GI_HOME (Doc ID 1968727.1)

全文完,希望可以帮到正在阅读的你,如果觉得有帮助,可以分享给你身边的朋友,同事,你关心谁就分享给谁,一起学习共同进步~~~

欢迎关注我的公众号【JiekeXu DBA之路】,一起学习新知识!

——————————————————————————

公众号:JiekeXu DBA之路

墨天轮:https://www.modb.pro/u/4347

CSDN :https://blog.csdn.net/JiekeXu

ITPUB:https://blog.itpub.net/69968215

腾讯云:https://cloud.tencent.com/developer/user/5645107

——————————————————————————