伟大的梦想始于渺小的起点,渺小的起点可以是一个想法,也可以是一个选择…

而学习伟大的数据库的起点可以是一次认证,也可以是一份安装教程文档。

OceanBase的梦想是成为最优秀的世界级数据库。

那本文主要目的是按照官方教程的指引,一步步实践,总结部署过程中遇到的问题和一些思考,尽可能为学习安装OceanBase的伙伴做到一次看懂,一次部署完成 ^ v ^

一. 前提须知

1.1 本文原则

- 按照官方教程实践,把部署过程尽可能做到一次完成。

- 将实践与理论进行结合,理解OceanBase的架构。

1.2 部署方式

本文使用手动离线部署集群。虽然OBD工具很不错,但是笔者认为手动部署掌控性更强,有助于理解OceanBase的架构,并且比较适用于生产环境。

二. 部署条件

OceanBase社区版安装包加起来不过上百MB,但是集群的最低硬件配置还是挺高的。

2.1 服务器配置

笔者的电脑只有20个CPU,为了能够部署OB Cluster,使用超额分配的方式,在使用的时候,需要多留意电脑的资源。

笔者在使用过程中,由于没有注意内存的使用,已经重启好几次。

| 服务器 | OS版本 | IP 地址 | CPU 核数 | 内存 GB | 磁盘 | 用途 |

|---|---|---|---|---|---|---|

| RHOB-DB00 | RHEL 7.6 | 192.168.117.170 | 1 | 1 | SSD | OBD、OBproxy、OBclient |

| RHOB-DB01 | RHEL 7.6 | 192.168.117.171 | 16 | 8 | SSD | OBServer1 |

| RHOB-DB02 | RHEL 7.6 | 192.168.117.172 | 16 | 8 | SSD | OBServer2 |

| RHOB-DB03 | RHEL 7.6 | 192.168.117.173 | 16 | 8 | SSD | OBServer3 |

2.2 存储配置

添加3块硬盘(按需划分即可),分别为/dev/sdb、/dev/sdc、/dev/sdd,用于构建oblog和obdata文件系统。

[root@RHOB-DB01 ~]# fdisk -l |grep "Disk /dev/sd" Disk /dev/sda: 85.9 GB, 85899345920 bytes, 167772160 sectors Disk /dev/sdb: 10.7 GB, 10737418240 bytes, 20971520 sectors Disk /dev/sdc: 21.5 GB, 21474836480 bytes, 41943040 sectors Disk /dev/sdd: 53.7 GB, 53687091200 bytes, 104857600 sectors

2.2.1 创建pv

[root@RHOB-DB01 ~]# pvcreate /dev/sdb /dev/sdc /dev/sdd Physical volume "/dev/sdb" successfully created. Physical volume "/dev/sdc" successfully created. Physical volume "/dev/sdd" successfully created.`

2.2.2 创建vg

[root@RHOB-DB01 ~]# vgcreate obdatavg /dev/sdb /dev/sdc /dev/sdd Volume group "obdatavg" successfully created`

2.2.3 创建lv

[root@RHOB-DB01 ~]# lvcreate -n lvoblog -L 20G obdatavg Logical volume “lvoblog” created. [root@RHOB-DB01 ~]# lvcreate -n lvobdata -l 100%FREE obdatavg Logical volume “lvobdata” created.

2.2.4 格式化

[root@RHOB-DB01 ~]# mkfs.ext4 /dev/obdatavg/lvoblog [root@RHOB-DB01 ~]# mkfs.ext4 /dev/obdatavg/lvobdata

2.2.5 挂盘

[root@RHOB-DB01 ~]# mkdir /oblog [root@RHOB-DB01 ~]# mkdir /obdata [root@RHOB-DB01 ~]# mount /dev/obdatavg/lvobdata /obdata [root@RHOB-DB01 ~]# mount /dev/obdatavg/lvoblog /oblog [root@RHOB-DB01 ~]# df -h Filesystem Size Used Avail Use% Mounted on /dev/mapper/rhel-root 50G 5.6G 45G 12% / devtmpfs 894M 0 894M 0% /dev tmpfs 910M 0 910M 0% /dev/shm tmpfs 910M 9.9M 900M 2% /run tmpfs 910M 0 910M 0% /sys/fs/cgroup /dev/sda1 1014M 178M 837M 18% /boot /dev/mapper/rhel-home 27G 37M 27G 1% /home tmpfs 182M 0 182M 0% /run/user/0 /dev/mapper/obdatavg-lvobdata 59G 53M 56G 1% /obdata /dev/mapper/obdatavg-lvoblog 20G 45M 19G 1% /oblog

2.2.6 挂盘重启自动生效

添加以下内容

参照官方教程配置。

[root@RHOB-DB01 ~]# cat /etc/fstab |grep obdata /dev/mapper/obdatavg-lvoblog /oblog ext4 defaults,noatime,nodiratime,nodelalloc,barrier=0 0 0 /dev/mapper/obdatavg-lvobdata /obdata ext4 defaults,noatime,nodiratime,nodelalloc,barrier=0 0 0

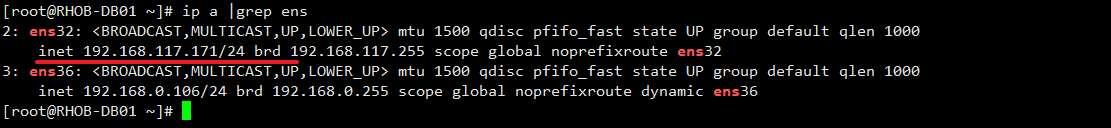

2.3 检查网卡

三. 环境配置

3.1 内核参数

[root@RHOB-DB01 ~]# cat /etc/sysctl.conf | grep -Ev '^#|^$' net.core.somaxconn = 2048 net.core.netdev_max_backlog = 10000 net.core.rmem_default = 16777216 net.core.wmem_default = 16777216 net.core.rmem_max = 16777216 net.core.wmem_max = 16777216 net.ipv4.ip_local_port_range = 3500 65535 net.ipv4.ip_forward = 0 net.ipv4.conf.default.rp_filter = 1 net.ipv4.conf.default.accept_source_route = 0 net.ipv4.tcp_syncookies = 0 net.ipv4.tcp_rmem = 4096 87380 16777216 net.ipv4.tcp_wmem = 4096 65536 16777216 net.ipv4.tcp_max_syn_backlog = 16384 net.ipv4.tcp_fin_timeout = 15 net.ipv4.tcp_max_syn_backlog = 16384 net.ipv4.tcp_tw_reuse = 1 net.ipv4.tcp_tw_recycle = 1 net.ipv4.tcp_slow_start_after_idle=0 vm.swappiness = 0 vm.min_free_kbytes = 2097152 vm.max_map_count=655360 fs.aio-max-nr=1048576

配置生效

[root@RHOB-DB01 ~]# sysctl -p

vm.min_free_kbytes = 2097152 ,如果虚拟机内存不够,可能会卡住。

解决方案:关掉虚拟机,增大虚拟机的内存,重启虚拟机。

3.2 修改会话变量设置

查看ulimit的默认值

[root@RHOB-DB01 ~]# ulimit -a core file size (blocks, -c) 0 data seg size (kbytes, -d) unlimited scheduling priority (-e) 0 file size (blocks, -f) unlimited pending signals (-i) 31078 max locked memory (kbytes, -l) 64 max memory size (kbytes, -m) unlimited open files (-n) 1024 pipe size (512 bytes, -p) 8 POSIX message queues (bytes, -q) 819200 real-time priority (-r) 0 stack size (kbytes, -s) 8192 cpu time (seconds, -t) unlimited max user processes (-u) 31078 virtual memory (kbytes, -v) unlimited file locks (-x) unlimited

添加参数

[root@RHOB-DB01 ~]# cat /etc/security/limits.conf |grep -Ev '^#|^$' * soft nofile 655360 * hard nofile 655360 * soft nproc 655360 * hard nproc 655360 * soft core unlimited * hard core unlimited * soft stack unlimited * hard stack unlimited

查看ulimit的最新值

[root@RHOB-DB01 ~]# ulimit -a core file size (blocks, -c) unlimited data seg size (kbytes, -d) unlimited scheduling priority (-e) 0 file size (blocks, -f) unlimited pending signals (-i) 31078 max locked memory (kbytes, -l) 64 max memory size (kbytes, -m) unlimited open files (-n) 655360 pipe size (512 bytes, -p) 8 POSIX message queues (bytes, -q) 819200 real-time priority (-r) 0 stack size (kbytes, -s) unlimited cpu time (seconds, -t) unlimited max user processes (-u) 655360 virtual memory (kbytes, -v) unlimited file locks (-x) unlimited

3.3 关闭防火墙和 selinux

3.3.1 关闭防火墙

systemctl disable firewalld systemctl stop firewalld systemctl status firewalld

3.3.2 selinux

[root@RHOB-DB01 ~]# cat /etc/selinux/config |grep disabled disabled - No SELinux policy is loaded. SELINUX=disabled

setenforce 0

3.4 新建用户

3.4.1 新建admin账号

如果不想用root用户,那就使用admin用户。

笔者尝试新建obadmin用户,会默认没有admin用户,自动使用root用户安装。

解决方案 : 新建admin用户

[root@RHOB-DB01 ~]# useradd admin [root@RHOB-DB01 ~]# passwd admin Changing password for user admin. New password: [root@RHOB-DB01 ~]# [root@RHOB-DB01 ~]# [root@RHOB-DB01 ~]# echo 'admin:ObAdMin&123' | chpasswd [root@RHOB-DB01 ~]# usermod admin -G wheel [root@RHOB-DB01 ~]# id admin uid=1002(admin) gid=1002(admin) groups=1002(admin),10(wheel)

3.4.2 配置sudo

[root@RHOB-DB01 ~]# cat /etc/sudoers |grep wheel

Allows people in group wheel to run all commands

%wheel ALL=(ALL) ALL

# %wheel ALL=(ALL) NOPASSWD: ALL

新增如下配置,每次使用sudo,均需要密码。

[root@RHOB-DB01 ~]# cat /etc/sudoers |grep admin admin ALL=(ALL) ALL

注意 : 以上的操作,笔者都是在一台虚拟机完成,然后按照前文规划,复制3台服务器后,逐个修改主机名和IP地址,再继续下面的操作。

3.5 SSH互信

总共有四台机服务器,笔者将四台服务器均配置互信。

操作方法:每台服务器使用admin账号均执行以下命令。

–- 密码 : ObAdMin&123 [admin@RHOB-DB01 ~]$ ssh-keygen -t rsa # 敲几次回车。 [admin@RHOB-DB01 ~]$ ssh-copy-id 192.168.117.171 # 输入密码 [admin@RHOB-DB01 ~]$ ssh-copy-id 192.168.117.172 [admin@RHOB-DB01 ~]$ ssh-copy-id 192.168.117.173 [admin@RHOB-DB01 ~]$ ssh-copy-id 192.168.117.170 [admin@RHOB-DB01 ~]$ ssh RHOB-DB01 [admin@RHOB-DB01 ~]$ ssh RHOB-DB02 [admin@RHOB-DB01 ~]$ ssh RHOB-DB03 [admin@RHOB-DB01 ~]$ ssh RHOB-DB00

3.6 配置时间同步服务

3.6.1 查看时区设置

时区为Asia/Shanghai,没有问题。

[root@RHOB-DB01 ~]# timedatectl Local time: Sun 2021-12-19 14:37:59 CST Universal time: Sun 2021-12-19 06:37:59 UTC RTC time: Sun 2021-12-19 06:37:59 Time zone: Asia/Shanghai (CST, +0800) NTP enabled: yes NTP synchronized: no RTC in local TZ: no DST active: n/a

3.6.2 配置 chrony

OB Cluster的三个节点时间同步误差如果超过 50ms,则后面初始化集群一定会失败。

部署成功后,如果某个节点的时间误差大于50ms ,该节点就会掉线。

3.6.2.1 服务端配置

以 192.168.117.171 [ RHOB-DB01 ] 作为服务端。

[root@RHOB-DB01 ~]# cat /etc/chrony.conf |grep -Ev '^$|^#' server 127.127.1.0 driftfile /var/lib/chrony/drift makestep 1.0 3 rtcsync allow 192.168.117.0/24 logdir /var/log/chrony

3.6.2.2 客户端配置

其他的服务器均以客户端的形式配置。

[root@RHOB-DB02 ~]# cat /etc/chrony.conf |grep -Ev '^$|^#' server 192.168.117.171 iburst driftfile /var/lib/chrony/drift makestep 1.0 3 rtcsync logdir /var/log/chrony

3.6.2.3 启动chrony & 开机自启动

[root@RHOB-DB01 ~]# systemctl start chronyd.service [root@RHOB-DB01 ~]# systemctl enable chronyd.service

3.6.2.4 chrony 常用命令

非重点内容,根据官方教程的指示,每台服务器各跑一条命令,主要感受一下。

# 查看时间同步活动

[root@RHOB-DB01 ~]# chronyc activity

200 OK

1 sources online

0 sources offline

0 sources doing burst (return to online)

0 sources doing burst (return to offline)

0 sources with unknown address

# 查看时间服务器

[admin@RHOB-DB02 ~]$ chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* RHOB-DB01 10 10 377 352 -7308ns[-9110ns] +/- 274us

# 查看同步状态

[admin@RHOB-DB03 ~]$ chronyc sources -v

210 Number of sources = 1

.-- Source mode '^' = server, '=' = peer, '#' = local clock.

/ .- Source state '*' = current synced, '+' = combined , '-' = not combined,

| / '?' = unreachable, 'x' = time may be in error, '~' = time too variable.

|| .- xxxx [ yyyy ] +/- zzzz

|| Reachability register (octal) -. | xxxx = adjusted offset,

|| Log2(Polling interval) --. | | yyyy = measured offset,

|| \ | | zzzz = estimated error.

|| | | \

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* RHOB-DB01 10 10 377 549 -38us[ -44us] +/- 265us

# 校准时间服务器:

[root@RHOB-DB00 ~]# chronyc tracking

Reference ID : C0A875AB (RHOB-DB01)

Stratum : 11

Ref time (UTC) : Fri Dec 24 15:05:59 2021

System time : 0.000022480 seconds fast of NTP time

Last offset : +0.000023738 seconds

RMS offset : 0.000058456 seconds

Frequency : 0.061 ppm slow

Residual freq : +0.001 ppm

Skew : 0.012 ppm

Root delay : 0.000333177 seconds

Root dispersion : 0.000083476 seconds

Update interval : 1034.6 seconds

Leap status : Normal

3.6.3 clockdiff & ping

有了chrony时间同步服务后,可以使用clockdiff检测一下时间延迟。

官方说明 :>50ms的延迟无法部署集群。

3.6.3.1 clockdiff 延迟测试

## RHOB-DB01 clockdiff

[root@RHOB-DB01 ~]# clockdiff 192.168.117.172

.

host=192.168.117.172 rtt=750(187)ms/0ms delta=0ms/0ms Sun Dec 19 14:35:57 2021

[root@RHOB-DB01 ~]# clockdiff 192.168.117.173

…

host=192.168.117.173 rtt=315(315)ms/0ms delta=0ms/0ms Sun Dec 19 14:36:02 2021

##RHOB-DB02 clockdiff

[root@RHOB-DB02 ~]# clockdiff 192.168.117.171

.

host=192.168.117.171 rtt=750(187)ms/0ms delta=0ms/0ms Sun Dec 19 14:36:47 2021

[root@RHOB-DB02 ~]# clockdiff 192.168.117.173

…

host=192.168.117.173 rtt=421(315)ms/0ms delta=0ms/0ms Sun Dec 19 14:36:51 2021

## RHOB-DB03 clockdiff

[root@RHOB-DB03 ~]# clockdiff 192.168.117.171

…

host=192.168.117.171 rtt=3(18)ms/0ms delta=0ms/0ms Sun Dec 19 14:37:34 2021

[root@RHOB-DB03 ~]# clockdiff 192.168.117.172

…

host=192.168.117.172 rtt=315(315)ms/0ms delta=0ms/0ms Sun Dec 19 14:37:37 2021

## RHOB-DB00 clockdiff

[root@RHOB-DB00 ~]# clockdiff 192.168.117.171

.

host=192.168.117.171 rtt=750(187)ms/0ms delta=0ms/0ms Sun Dec 19 21:42:21 2021

[root@RHOB-DB00 ~]# clockdiff 192.168.117.172

…

host=192.168.117.172 rtt=562(280)ms/0ms delta=0ms/0ms Sun Dec 19 21:42:40 2021

[root@RHOB-DB00 ~]# clockdiff 192.168.117.173

.

host=192.168.117.173 rtt=750(187)ms/0ms delta=0ms/0ms Sun Dec 19 21:42:41 2021

3.6.3.2 ping 延迟 测试

[root@RHOB-DB01 ~]# ping -T tsandaddr 192.168.117.172 -c 2 PING 192.168.117.172 (192.168.117.172) 56(124) bytes of data. 64 bytes from 192.168.117.172: icmp_seq=1 ttl=64 time=0.603 ms TS: 192.168.117.171 23780762 absolute 192.168.117.172 0 192.168.117.172 0 192.168.117.171 0 64 bytes from 192.168.117.172: icmp_seq=2 ttl=64 time=0.550 ms TS: 192.168.117.171 23781762 absolute 192.168.117.172 0 192.168.117.172 0 192.168.117.171 1 — 192.168.117.172 ping statistics — 2 packets transmitted, 2 received, 0% packet loss, time 1000ms rtt min/avg/max/mdev = 0.550/0.576/0.603/0.035 ms [root@RHOB-DB01 ~]# ping -T tsandaddr 192.168.117.173 -c 2 PING 192.168.117.173 (192.168.117.173) 56(124) bytes of data. 64 bytes from 192.168.117.173: icmp_seq=1 ttl=64 time=0.475 ms TS: 192.168.117.171 23790341 absolute 192.168.117.173 0 192.168.117.173 0 192.168.117.171 1 64 bytes from 192.168.117.173: icmp_seq=2 ttl=64 time=0.650 ms TS: 192.168.117.171 23791342 absolute 192.168.117.173 1 192.168.117.173 0 192.168.117.171 0 — 192.168.117.173 ping statistics — 2 packets transmitted, 2 received, 0% packet loss, time 1001ms rtt min/avg/max/mdev = 0.475/0.562/0.650/0.090 ms

四. 安装部署

4.1 安装包

| ID | 最新版本 | 用途 |

|---|---|---|

| 1 | obclient-2.0.0-2.el7.x86_64.rpm | obclient |

| 2 | libobclient-2.0.0-2.el7.x86_64.rpm | obclient |

| 3 | obproxy-3.2.0-1.el7.x86_64.rpm | obproxy |

| 4 | ob-deploy-1.1.2-1.el7.x86_64.rpm | obd部署工具[可选] |

| 5 | oceanbase-ce-3.1.1-4.el7.x86_64.rpm | oceanbase 数据库 |

| 6 | oceanbase-ce-libs-3.1.1-4.el7.x86_64.rpm | oceanbase lib包 |

4.2 安装oceanbase

[admin@RHOB-DB01 OceanBase]$ sudo rpm -ivh oceanbase-ce-libs-3.1.1-4.el7.x86_64.rpm [sudo] password for admin: warning: oceanbase-ce-libs-3.1.1-4.el7.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID e9b4a7aa: NOKEY Preparing… ################################# [100%] Updating / installing… 1:oceanbase-ce-libs-3.1.1-4.el7 ################################# [100%] [admin@RHOB-DB01 OceanBase]$ sudo rpm -ivh oceanbase-ce-3.1.1-4.el7.x86_64.rpm warning: oceanbase-ce-3.1.1-4.el7.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID e9b4a7aa: NOKEY Preparing… ################################# [100%] Updating / installing… 1:oceanbase-ce-3.1.1-4.el7 ################################# [100%] [admin@RHOB-DB01 OceanBase]$ rpm -qa|grep oceanbase oceanbase-ce-libs-3.1.1-4.el7.x86_64 oceanbase-ce-3.1.1-4.el7.x86_64 [admin@RHOB-DB01 ~]$ ll total 0 drwxr-xr-x 5 root root 39 Dec 19 17:14 oceanbase [admin@RHOB-DB01 ~]$ tree oceanbase oceanbase ├── bin │ ├── import_time_zone_info.py │ └── observer ├── etc │ └── timezone_V1.log └── lib ├── libaio.so -> libaio.so.1.0.1 ├── libaio.so.1 -> libaio.so.1.0.1 ├── libaio.so.1.0.1 ├── libmariadb.so -> libmariadb.so.3 └── libmariadb.so.3 3 directories, 8 files

4.3 创建OB目录及授权

[admin@RHOB-DB01 ~]$ ll drwxr-xr-x 3 admin admin 17 Dec 19 17:15 obproxy-3.2.0 drwxr-xr-x 5 root root 39 Dec 19 17:14 oceanbase [root@RHOB-DB01 bin]# chown -R admin:admin /obdata [root@RHOB-DB01 bin]# chown -R admin:admin /oblog [root@RHOB-DB01 bin]# chmod -R 755 /obdata [root@RHOB-DB01 bin]# chmod -R 755 /oblog [root@RHOB-DB01 ~]# chown -R admin:admin /home/admin/oceanbase/ [root@RHOB-DB01 ~]# su - admin [admin@RHOB-DB01 ~]$ mkdir -p ~/oceanbase/store/obdemo /obdata/obdemo/{sstable,etc3} /oblog/obdemo/{clog,ilog,slog,etc2} [admin@RHOB-DB01 ~]$ for f in {clog,ilog,slog,etc2}; do ln -s /oblog/obdemo/$f ~/oceanbase/store/obdemo/$f ; done [admin@RHOB-DB01 ~]$ for f in {sstable,etc3}; do ln -s /obdata/obdemo/$f ~/oceanbase/store/obdemo/$f; done

4.4 检查observer执行程序

检查执行程序是否有缺少lib包

[admin@RHOB-DB01 bin]$ ldd observer linux-vdso.so.1 => (0x00007fff319fe000) libmariadb.so.3 => not found libaio.so.1 => /lib64/libaio.so.1 (0x00002b56da968000) libm.so.6 => /lib64/libm.so.6 (0x00002b56dab6a000) libpthread.so.0 => /lib64/libpthread.so.0 (0x00002b56dae6c000) libdl.so.2 => /lib64/libdl.so.2 (0x00002b56db088000) librt.so.1 => /lib64/librt.so.1 (0x00002b56db28c000) libc.so.6 => /lib64/libc.so.6 (0x00002b56db494000) /lib64/ld-linux-x86-64.so.2 (0x00002b56da744000)

添加环境变量

[admin@RHOB-DB01 bin]$ cd [admin@RHOB-DB01 ~]$ echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:~/oceanbase/lib' >> ~/.bash_profile [admin@RHOB-DB01 ~]$ . ~/.bash_profile

再次检查,没有问题

[admin@RHOB-DB01 bin]$ ldd observer linux-vdso.so.1 => (0x00007ffc44f9d000) libmariadb.so.3 => /home/admin/oceanbase/lib/libmariadb.so.3 (0x00002ab8bf977000) libaio.so.1 => /home/admin/oceanbase/lib/libaio.so.1 (0x00002ab8bfbdd000) libm.so.6 => /lib64/libm.so.6 (0x00002ab8bfddf000) libpthread.so.0 => /lib64/libpthread.so.0 (0x00002ab8c00e1000) libdl.so.2 => /lib64/libdl.so.2 (0x00002ab8c02fd000) librt.so.1 => /lib64/librt.so.1 (0x00002ab8c0501000) libc.so.6 => /lib64/libc.so.6 (0x00002ab8c0709000) /lib64/ld-linux-x86-64.so.2 (0x00002ab8bf753000)

4.5 启动observer

4.5.1 查看observer参数选项

[admin@RHOB-DB01 bin]$ ./observer -h ./observer -h observer [OPTIONS] -h,–help print this help -V,–version print the information of version -z,–zone ZONE zone -p,–mysql_port PORT mysql port -P,–rpc_port PORT rpc port -N,–nodaemon dont run in daemon -n,–appname APPNAME application name -c,–cluster_id ID cluster id -d,–data_dir DIR OceanBase data directory -i,–devname DEV net dev interface -o,–optstr OPTSTR extra options string -r,–rs_list RS_LIST root service list -l,–log_level LOG_LEVEL server log level -6,–ipv6 USE_IPV6 server use ipv6 address -m,–mode MODE server mode -f,–scn flashback_scn

注意

- 以下的命令在哪台服务器执行。

- syslog_level 默认INFO ,建议修改成ERROR,避免INFO产生大量的信息。

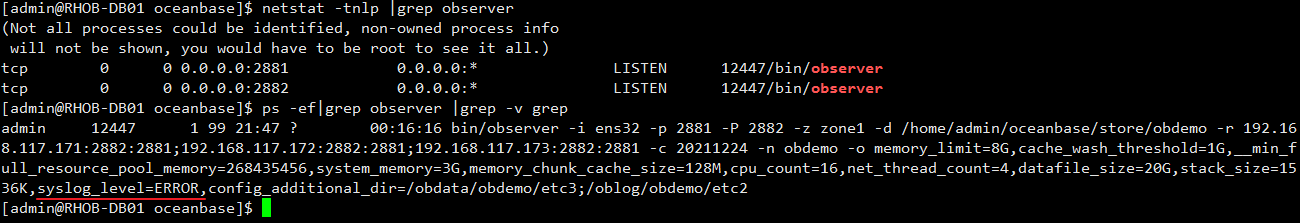

4.5.2 启动observer-zone1

[admin@RHOB-DB01 ~]$ cd ~/oceanbase && bin/observer -i ens32 -p 2881 -P 2882 -z zone1 -d ~/oceanbase/store/obdemo -r '192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881' -c 20211224 -n obdemo -o “memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2” bin/observer -i ens32 -p 2881 -P 2882 -z zone1 -d /home/admin/oceanbase/store/obdemo -r 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 -c 20211224 -n obdemo -o memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2 devname: ens32 mysql port: 2881 rpc port: 2882 zone: zone1 data_dir: /home/admin/oceanbase/store/obdemo rs list: 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 cluster id: 20211224 appname: obdemo optstr: memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2

检查

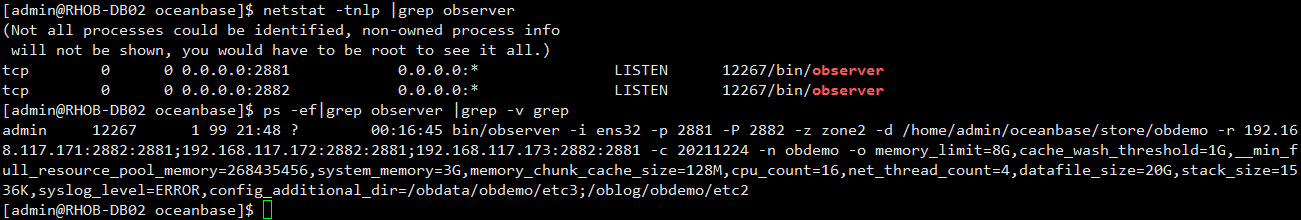

4.5.3 启动observer-zone2

[admin@RHOB-DB02 ~]$ cd ~/oceanbase && bin/observer -i ens32 -p 2881 -P 2882 -z zone2 -d ~/oceanbase/store/obdemo -r '192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881' -c 20211224 -n obdemo -o “memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2” bin/observer -i ens32 -p 2881 -P 2882 -z zone2 -d /home/admin/oceanbase/store/obdemo -r 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 -c 20211224 -n obdemo -o memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2 devname: ens32 mysql port: 2881 rpc port: 2882 zone: zone2 data_dir: /home/admin/oceanbase/store/obdemo rs list: 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 cluster id: 20211224 appname: obdemo optstr: memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2

检查

4.5.4 启动observer-zone3

[admin@RHOB-DB03 ~]$ cd ~/oceanbase && bin/observer -i ens32 -p 2881 -P 2882 -z zone3 -d ~/oceanbase/store/obdemo -r '192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881' -c 20211224 -n obdemo -o “memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2” bin/observer -i ens32 -p 2881 -P 2882 -z zone3 -d /home/admin/oceanbase/store/obdemo -r 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 -c 20211224 -n obdemo -o memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2 devname: ens32 mysql port: 2881 rpc port: 2882 zone: zone3 data_dir: /home/admin/oceanbase/store/obdemo rs list: 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 cluster id: 20211224 appname: obdemo optstr: memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2

检查

注意 : 如果使用OBD自动化部署,参数文件有密码,那么进程信息也会显示密码。

4.6 集群初始化

使用 mysql客户端 或者 obclient 进入OceanBase数据库。

本次使用 192.168.117.170 [RHOB-DB00]的mysql客户端工具登录192.168.117.171 [RHOB-DB01]的OceanBase。

[admin@RHOB-DB00 ~]$ mysql -h 192.168.117.171 -u root -P 2881 -p -c -A

# 直接敲回车键登录数据库。

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \\g.

Your MySQL connection id is 3221225472

Server version: 5.7.25 OceanBase 3.1.1 (r4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e) (Built Oct 21 2021 10:33:14)

Copyright ? 2000, 2020, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\\h' for help. Type '\\c' to clear the current input statement.

mysql> show databases;

ERROR 1146 (42S02): Table 'oceanbase.__all_database' doesnt exist

mysql> set session ob_query_timeout=1000000000;

Query OK, 0 rows affected (0.01 sec)

mysql> alter system bootstrap ZONE 'zone1' SERVER '192.168.117.171:2882', ZONE 'zone2' SERVER '192.168.117.172:2882', ZONE 'zone3' SERVER '192.168.117.173:2882' ;

Query OK, 0 rows affected (1 min 22.02 sec)

# 初始化集群后,可以进行操作。

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| oceanbase |

| information_schema |

| mysql |

| SYS |

| LBACSYS |

| ORAAUDITOR |

| test |

+--------------------+

7 rows in set (0.03 sec)

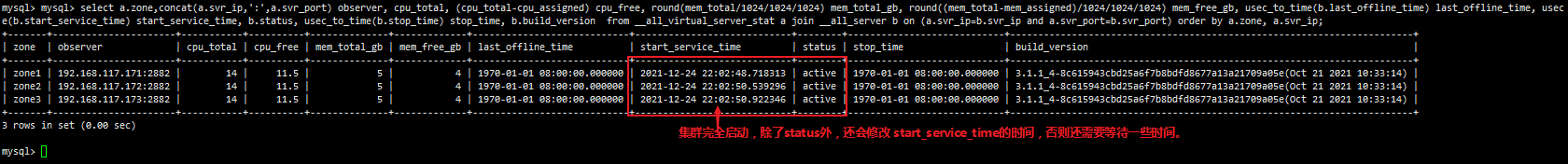

mysql> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free, round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb, usec_to_time(b.last_offline_time) last_offline_time, usec_to_time(b.start_service_time) start_service_time, b.status, usec_to_time(b.stop_time) stop_time, b.build_version from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port) order by a.zone, a.svr_ip;

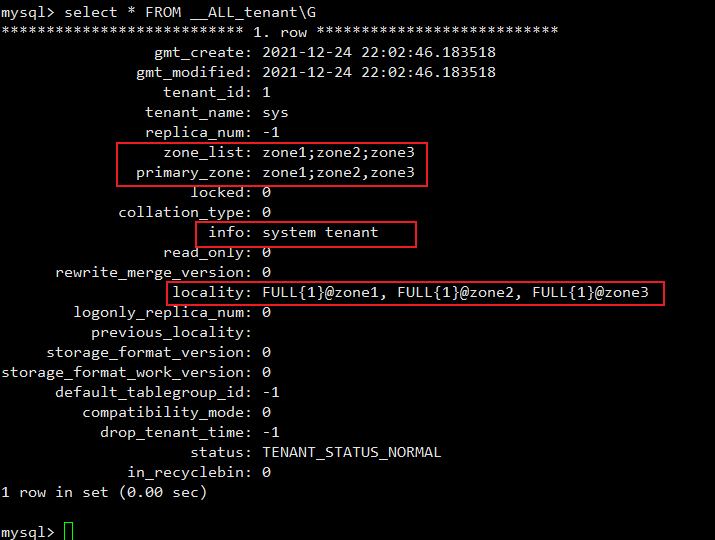

查看租户的zone_list、primary_zone、locality属性。

5. 安装obclient

如果没有mysql客户端,可以安装obclient,直接登陆数据库,比较方便。

[admin@RHOB-DB00 OceanBase]$ sudo rpm -ivh libobclient-2.0.0-2.el7.x86_64.rpm [sudo] password for admin: warning: libobclient-2.0.0-2.el7.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID e9b4a7aa: NOKEY Preparing… ################################# [100%] Updating / installing… 1:libobclient-2.0.0-2.el7 ################################# [100%] [admin@RHOB-DB00 OceanBase]$ sudo rpm -ivh obclient-2.0.0-2.el7.x86_64.rpm warning: obclient-2.0.0-2.el7.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID e9b4a7aa: NOKEY Preparing… ################################# [100%] Updating / installing… 1:obclient-2.0.0-2.el7 ################################# [100%] [admin@RHOB-DB00 OceanBase]$ which obclient /bin/obclient

6. obproxy

OBProxy实现接受来自应用的请求,并转发给OBServer,然后OBServer将数据返回给OBProxy,OBProxy将数据转发给应用客户端。

支持的路由策略的方式多样,详见官方文档 OBProxy 的路由策略。

6.1 安装obproxy

[admin@RHOB-DB00 OceanBase]$ sudo rpm -ivh obproxy-3.2.0-1.el7.x86_64.rpm warning: obproxy-3.2.0-1.el7.x86_64.rpm: Header V4 RSA/SHA1 Signature, key ID e9b4a7aa: NOKEY Preparing… ################################# [100%] Updating / installing… 1:obproxy-3.2.0-1.el7 ################################# [100%] [admin@RHOB-DB00 OceanBase]$ rpm -qa|grep obproxy obproxy-3.2.0-1.el7.x86_64

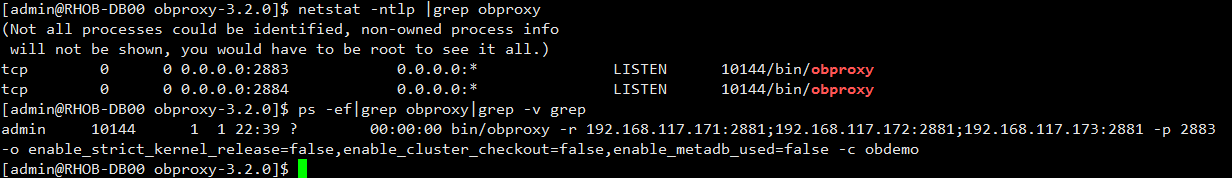

6.2 启动obproxy

[admin@RHOB-DB00 ~]$ cd ~/obproxy-3.2.0/ && bin/obproxy -r “192.168.117.171:2881;192.168.117.172:2881;192.168.117.173:2881” -p 2883 -o “enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false” -c obdemo bin/obproxy -r 192.168.117.171:2881;192.168.117.172:2881;192.168.117.173:2881 -p 2883 -o enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false -c obdemo rs list: 192.168.117.171:2881;192.168.117.172:2881;192.168.117.173:2881 listen port: 2883 optstr: enable_strict_kernel_release=false,enable_cluster_checkout=false,enable_metadb_used=false cluster_name: obdemo

7. 设置密码

本次把root、proxyro等等用户一起配置。

| 用户 | 端口 | 用途 |

|---|---|---|

| root | 2881 | 数据库 root 用户 |

| proxyro | 2881 | 数据库 proxyro 用户 |

| proxysys | 2883 | obproxy的sys用户,登录obproxy |

| observer_sys_password | 2883 | 数据库 proxyro 用户,obproxy通过proxyro 用户连接数据库 |

| obproxy_sys_password | 2883 | obproxy的sys用户密码 |

[admin@RHOB-DB00 ~]$ mysql -h 192.168.117.171 -u root -P 2881 -p -c -A

# 直接敲回车键登录数据库。

# 本次登录是sys租户。

Enter password:

# root用户修改密码后,以后都要用密码登录。

mysql> alter user 'root'@'%' identified by 'rO0t&123' ;

Query OK, 0 rows affected (0.12 sec)

# observer_sys_password 密码 要 和 proxyro 的密码一致。

mysql> create user proxyro identified by 'Serproxy@123' ;

Query OK, 0 rows affected (0.10 sec)

mysql> grant select on oceanbase.* to proxyro;

Query OK, 0 rows affected (0.07 sec)

[admin@RHOB-DB00 ~]$ obclient -h 192.168.117.170 -u root@proxysys -P 2883 -p

# 修改 OBPROXY 的密码 : obproxy_sys_password

mysql> alter proxyconfig set obproxy_sys_password = 'Sysproxy@123' ;

Query OK, 0 rows affected (0.01 sec)

# observer_sys_password

# 修改 OBPROXY 连接 OceanBase 集群用户 proxyro 的密码。这样 OBPROXY 才能跟 OceanBase 集群正常连接。

# observer_sys_password 密码 要 和 proxyro 的密码一致。

mysql> alter proxyconfig set observer_sys_password = 'Serproxy@123' ;

Query OK, 0 rows affected (0.00 sec)

mysql> show proxyconfig like '%sys_password%';

+------------------------+------------------------------------------+--------------------------------+-------------+---------------+

| name | value | info | need_reboot | visible_level |

+------------------------+------------------------------------------+--------------------------------+-------------+---------------+

| observer_sys_password1 | | password for observer sys user | false | SYS |

| observer_sys_password | e1e4ccbd352584c0e44a20a89debca6cfa64c5f7 | password for observer sys user | false | SYS |

| obproxy_sys_password | f8543646780b3c88b1a9b11be3e62881e437b95c | password for obproxy sys user | false | SYS |

+------------------------+------------------------------------------+--------------------------------+-------------+---------------+

3 rows in set (0.01 sec)

登录proxy 管理使用 obproxy_sys_password 的密码登录。

[admin@RHOB-DB00 ~]$ obclient -h 192.168.117.170 -u root@proxysys -P 2883 -p

Enter password:

Welcome to the OceanBase. Commands end with ; or \\g.

Your MySQL connection id is 9

Server version: 5.6.25

Copyright ? 2000, 2018, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\\h' for help. Type '\\c' to clear the current input statement.

MySQL [(none)]>

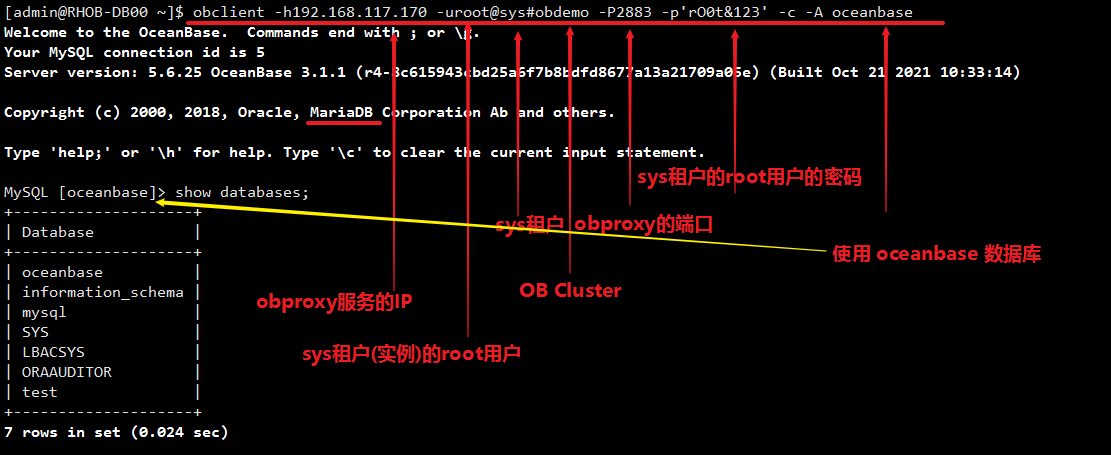

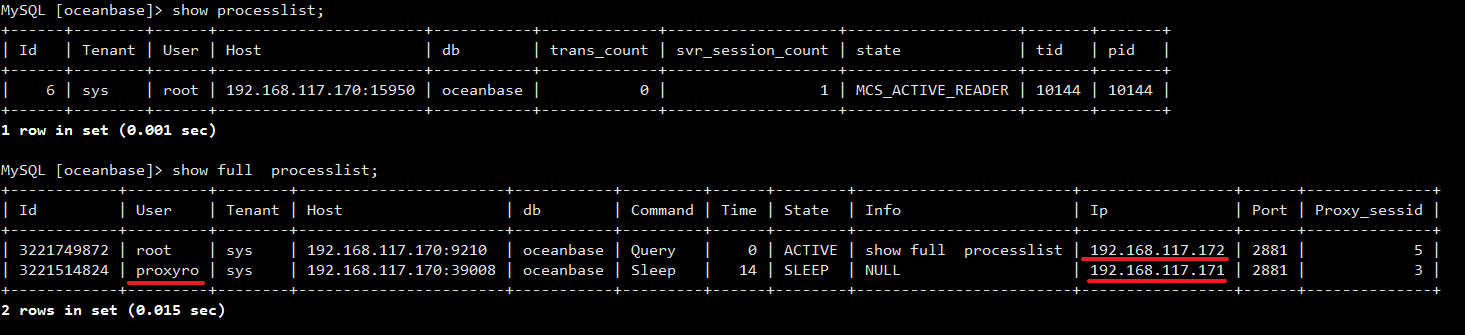

通过 OBPROXY 连接 OceanBase 集群看看, 如果能查看所有会话,则说明 OBPROXY 部署成功。

[admin@RHOB-DB00 ~]$ mysql -h192.168.117.170 -uroot@sys#obdemo -P2883 -p'rO0t&123' -c -A oceanbase 或者 [admin@RHOB-DB00 ~]$ obclient -h192.168.117.170 -uroot@sys#obdemo -P2883 -p'rO0t&123' -c -A oceanbase

8. 启动&关闭

看了比较多的文章,好像都讲了如何部署、启动,但其实正确地关闭数据库也是非常重要的。

小插曲 : 笔者的电脑由于内存不够,重启了好几回。每次重启试图开启集群,但都失败了。

如果逐个正常关闭<=2台服务器,然后启动observer,集群是正常的。

手动部署集群的灵活性要比OBD工具部署集群好很多,OBD工具应该没有办法对某个节点启停,只能对整个集群启停。

笔者尝试了对OBD部署集群的某个节点进行关闭,但发现启动无从下手。最后,尝试obd stop 再 start 集群,结果报错"[ERROR] Cluster NTP is out of sync"。

后续有机会再尝试解决。

8.1 检查集群的状态

检查集群关闭前的状态,确认集群正常。

MySQL [oceanbase]> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free, round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb, usec_to_time(b.last_offline_time) last_offline_time, usec_to_time(b.start_service_time) start_service_time, b.status, usec_to_time(b.stop_time) stop_time, b.build_version from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port) order by a.zone, a.svr_ip;

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | last_offline_time | start_service_time | status | stop_time | build_version |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

| zone1 | 192.168.117.171:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 10:00:16.958704 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone2 | 192.168.117.172:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 10:00:18.078459 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone3 | 192.168.117.173:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 10:00:20.061450 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

8.2 关闭单个Server

关闭单个OBServer [以observer3为例]

[admin@RHOB-DB03 ~]$ ps -ef|grep observer admin 11383 1 99 18:08 ? 07:34:59 bin/observer -i ens32 -p 2881 -P 2882 -z zone3 -d /home/admin/oceanbase/store/obdemo -r 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 -c 20211224 -n obdemo -o memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2 -d /home/admin/oceanbase/store/obdemo admin 14895 13253 0 21:13 pts/1 00:00:00 grep --color=auto observer

不要使用kill -9,等待1分钟左右。

参考官方教程。

[admin@RHOB-DB03 ~]$ kill `pidof observer` [admin@RHOB-DB03 ~]$ ps -ef | grep observer | grep -v grep admin 15805 1 99 09:59 ? 02:23:08 bin/observer -i ens32 -p 2881 -P 2882 -z zone3 -d /home/admin/oceanbase/store/obdemo -r 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 -c 20211224 -n OBCluster -o memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2

status已经变为inactive,本次只关闭一个observer,集群可以正常对外提供服务。

MySQL [oceanbase]> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free, round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb, usec_to_time(b.last_offline_time) last_offline_time, usec_to_time(b.start_service_time) start_service_time, b.status, usec_to_time(b.stop_time) stop_time, b.build_version from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port) order by a.zone, a.svr_ip;

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | last_offline_time | start_service_time | status | stop_time | build_version |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

| zone1 | 192.168.117.171:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 10:12:55.991612 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone2 | 192.168.117.172:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 10:00:18.078459 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone3 | 192.168.117.173:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 1970-01-01 08:00:00.000000 | inactive | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

3 rows in set (0.007 sec)

确保进程已经不在,否则重启会报错。

[admin@RHOB-DB03 ~]$ ps -ef | grep observer | grep -v grep

8.3 启动 observer

关闭两个observer后 [observer2、observer3],集群无法对外提供服务。

[admin@RHOB-DB02 ~]$ kill `pidof observer` [admin@RHOB-DB02 ~]$ [admin@RHOB-DB02 ~]$ ps -ef | grep observer | grep -v grep

# 未登录的场景

[admin@RHOB-DB00 ~]$ obclient -h192.168.117.171 -uroot -P2881 -p'rO0t&123' -c -A oceanbase

ERROR 4012 (25000): Statement is timeout

# 已登陆的场景

MySQL [oceanbase]> show databases;

ERROR 4012 (25000): Statement is timeout

启动单个OBServer [以observer3为例]

[admin@RHOB-DB03 ~]$ cd ~/oceanbase && bin/observer -i ens32 -p 2881 -P 2882 -z zone3 -d ~/oceanbase/store/obdemo -r '192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881' -c 20211224 -n obdemo -o "memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2" bin/observer -i ens32 -p 2881 -P 2882 -z zone2 -d /home/admin/oceanbase/store/obdemo -r 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 -c 20211224 -n obdemo -o memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2 devname: ens32 mysql port: 2881 rpc port: 2882 zone: zone3 data_dir: /home/admin/oceanbase/store/obdemo rs list: 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 cluster id: 20211224 appname: obdemo optstr: memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,syslog_level=ERROR,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2 [admin@RHOB-DB03 oceanbase]$ ps -ef | grep observer | grep -v grep admin 11257 1 99 21:17 ? 00:04:11 bin/observer -i ens32 -p 2881 -P 2882 -z zone3 -d /home/admin/oceanbase/store/obdemo -r 192.168.117.171:2882:2881;192.168.117.172:2882:2881;192.168.117.173:2882:2881 -c 20211224 -n obdemo -o memory_limit=8G,cache_wash_threshold=1G,__min_full_resource_pool_memory=268435456,system_memory=3G,memory_chunk_cache_size=128M,cpu_count=16,net_thread_count=4,datafile_size=20G,stack_size=1536K,config_additional_dir=/obdata/obdemo/etc3;/oblog/obdemo/etc2 -d /home/admin/oceanbase/store/obdemo

在启动observer后,跟踪集群资源的状态变化。

mysql> use oceanbase;

Database changed

# 检查集群资源,可以看到 192.168.117.173 observer3的status为inactive,且资源为NULL。

MySQL [oceanbase]> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free, round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb, usec_to_time(b.last_offline_time) last_offline_time, usec_to_time(b.start_service_time) start_service_time, b.status, usec_to_time(b.stop_time) stop_time, b.build_version from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port) order by a.zone, a.svr_ip;

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | last_offline_time | start_service_time | status | stop_time | build_version |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

| zone1 | 192.168.117.171:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 11:07:55.559403 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone2 | 192.168.117.172:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 10:59:23.040243 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone3 | 192.168.117.173:2882 | NULL | NULL | NULL | NULL | 1970-01-01 08:00:00.000000 | 1970-01-01 08:00:00.000000 | inactive | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

# 集群尚未修改 start_service_time 信息

mysql> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free, round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb, usec_to_time(b.last_offline_time) last_offline_time, usec_to_time(b.start_service_time) start_service_time, b.status, usec_to_time(b.stop_time) stop_time, b.build_version from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port) order by a.zone, a.svr_ip;

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | last_offline_time | start_service_time | status | stop_time | build_version |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

| zone1 | 192.168.117.171:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 11:07:55.559403 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone2 | 192.168.117.172:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 10:59:23.040243 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone3 | 192.168.117.173:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 1970-01-01 08:00:00.000000 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+----------+----------------------------+------------------------------------------------------------------------+

# 集群已经修改start_service_time 信息,集群启动完成

MySQL [oceanbase]> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, (cpu_total-cpu_assigned) cpu_free, round(mem_total/1024/1024/1024) mem_total_gb, round((mem_total-mem_assigned)/1024/1024/1024) mem_free_gb, usec_to_time(b.last_offline_time) last_offline_time, usec_to_time(b.start_service_time) start_service_time, b.status, usec_to_time(b.stop_time) stop_time, b.build_version from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port) order by a.zone, a.svr_ip;

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+--------+----------------------------+------------------------------------------------------------------------+

| zone | observer | cpu_total | cpu_free | mem_total_gb | mem_free_gb | last_offline_time | start_service_time | status | stop_time | build_version |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+--------+----------------------------+------------------------------------------------------------------------+

| zone1 | 192.168.117.171:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 11:07:55.559403 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone2 | 192.168.117.172:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 10:59:23.040243 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

| zone3 | 192.168.117.173:2882 | 14 | 11.5 | 5 | 4 | 1970-01-01 08:00:00.000000 | 2021-12-25 11:12:21.488835 | active | 1970-01-01 08:00:00.000000 | 3.1.1_4-8c615943cbd25a6f7b8bdfd8677a13a21709a05e(Oct 21 2021 10:33:14) |

+-------+----------------------+-----------+----------+--------------+-------------+----------------------------+----------------------------+--------+----------------------------+------------------------------------------------------------------------+

9. 了解 zone

本次部署的集群有三个节点,分别是zone1,zone2,zone3,每个zone各一台服务器。

如果OB cluster的集群不需要占用太多的资源,那么通过扩容实践将能更好地理解OceanBase架构。

同时,笔者也认为会有更多的学习者投入时间和精力研究。

OceanBase 集群由若干个 Zone 组成。

zone可以代表是一个机房的某些服务器,也可以是一个机房、一个数据中心。

OceanBase 可以跨机房部署,即可理解成不同地域(Region),一个 Region 可以包含一个或者多个 Zone。

YY一下,本集群的每个zone各来自不同地域(Region)的机房的1个服务器。

如果想要扩展,可以使用以下命令,由于笔者的电脑资源有限,只能等资源充足再进行演示。

把各机房再划分一台服务器到对应的zone上。

alter system add server '192.168.117.174:3882' zone 'zone1', '192.168.117.175:3882' zone 'zone2', '192.168.117.176:3882' zone 'zone3';

10. 了解observer

见第9点,可以知道每一个服务器都可以成为一个observer。

11. 了解资源池和资源单位

首先,创建资源单元。[它是资源分配的最小单位,同一个Unit不能跨OBServer]

其次,把资源单位按照一定的数量分配给资源池。

最后,把资源池绑定到租户/实例。

数据是有多份冗余的。把一个资源单位给了资源池,意味着每一个zone都要挖出一块资源给到资源池。

每一张表在每一个zone都会有备份,这和mycat中间件架构是完全不同的。

创建资源单元

MySQL [oceanbase]> create resource unit mysql_s1 max_cpu=1,min_cpu=1,max_memory='1G',min_memory='1G',max_iops=20000,min_iops=20000,max_session_num=10000,max_disk_size='4G';

Query OK, 0 rows affected (0.053 sec)

MySQL [oceanbase]> create resource unit mysql_s2 max_cpu=1,min_cpu=1,max_memory='1G',min_memory='1G',max_iops=40000,min_iops=40000,max_session_num=100000,max_disk_size='4G';

Query OK, 0 rows affected (0.070 sec)

创建资源池

MySQL [oceanbase]> create resource pool POO1_MYSQL_S1 unit='mysql_s1' ,unit_num=1;

Query OK, 0 rows affected (0.070 sec)

MySQL [oceanbase]> create resource pool POO1_MYSQL_S2 unit='mysql_s2' , unit_num=1, zone_list=('zone1' ,'zone2') ;

Query OK, 0 rows affected (0.045 sec)

12. 了解 primary zone 和 locality

12.1 primary zone

参考官方 OBCP学习资料 。

数据的leader副本在哪个节点的Unit里,业务读写请求就落在哪个节点上。

leader副本默认位置由表的primary_zone属性控制,可以继承自数据库以及租户(实例)的primary_zone设置。

笔者尝试查看某表的primary_zone,信息显示为zone1,zone2,zone3。

那究竟哪个才是是leader副本呢?见下面信息。

重点:即使primary_zone设置为RANDOM,在只有三个Unit的情况下(即Resource Pool的unit_num=1的情况下),每个leader副本会默认在第一个Zone里。

本集群的租户分配的资源池的资源单元unit_num=1,所以,leader副本在第一个zone。

那如果unit_num>=2呢?待笔者了解后,再来演示,敬请期待!

12.2 locality

参考官方文档 。

不同的租户在同一个集群内可以配置不同的 Locality 并且彼此之间相互独立不受影响。

Locality 的设置通常用于集群的副本数升级、降级或集群的搬迁:

集群副本数升级

以租户为粒度,对集群中的每一个租户,增加租户下 Partition 的副本数。例如,将 Locality 由 F@z1,F@z2,F@z3变更为 F@z1,F@z2,F@z3,F@z4,F@z5,租户从 3 副本变为 5 副本。

集群副本数降级

以租户为粒度,对集群内的每一个租户减少其中 Partition 的副本数。例如,将 Locality 由 F@z1,F@z2,F@z3,F@z4,F@z5变更为 F@z1,F@z2,F@z3,F@z4,租户从 5 副本变为 4 副本。

集群搬迁

以租户为粒度,对集群内的每一个租户通过若干次 Locality 变更。比如,将 Locality 从 F@hz1,F@hz2,F@hz3变更为 F@hz1,F@sh1,F@sh2即代表将原集群中属于杭州的两个 Zone 迁到上海。

简单理解,Zone的数量多或者少了,locality的可选择性也就多了。

副本可以增减、也可以变更地点。

13. 了解租户

13.1 租户概念

参考官方 OBCP学习资料 。

OceanBase集群是一个大资源池,不可能把所有资源全部给某一个业务用。

OceanBase集群实行的是按需分配给不同的业务,以租户/实例的形式实现。

实现资源统一管理,便于IT人员掌控。

13.2 创建租户

MySQL [oceanbase]> create tenant mysql_sales resource_pool_list=('POO1_MYSQL_S1'),primary_zone='zone1;zone2,zone3',charset='utf8mb4' set ob_tcp_invited_nodes='%',ob_compatibility_mode='mysql';

Query OK, 0 rows affected (3.827 sec)

MySQL [oceanbase]> create tenant mysql_conf resource_pool_list=('POO1_MYSQL_S2'), primary_zone='RANDOM',comment 'mysql tenant/instance', charset='utf8mb4' set ob_tcp_invited_nodes='%' ;

Query OK, 0 rows affected (5.152 sec)

# 检查

MySQL [oceanbase]> select * from __all_tenant;

+----------------------------+----------------------------+-----------+-------------+-------------+-------------------+-------------------+--------+----------------+-----------------------+-----------+-----------------------+---------------------------------------------+---------------------+-------------------+------------------------+-----------------------------+-----------------------+--------------------+------------------+----------------------+---------------+

| gmt_create | gmt_modified | tenant_id | tenant_name | replica_num | zone_list | primary_zone | locked | collation_type | info | read_only | rewrite_merge_version | locality | logonly_replica_num | previous_locality | storage_format_version | storage_format_work_version | default_tablegroup_id | compatibility_mode | drop_tenant_time | status | in_recyclebin |

+----------------------------+----------------------------+-----------+-------------+-------------+-------------------+-------------------+--------+----------------+-----------------------+-----------+-----------------------+---------------------------------------------+---------------------+-------------------+------------------------+-----------------------------+-----------------------+--------------------+------------------+----------------------+---------------+

| 2021-12-24 22:02:46.183518 | 2021-12-24 22:02:46.183518 | 1 | sys | -1 | zone1;zone2;zone3 | zone1;zone2,zone3 | 0 | 0 | system tenant | 0 | 0 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | 0 | | 0 | 0 | -1 | 0 | -1 | TENANT_STATUS_NORMAL | 0 |

| 2021-12-25 12:35:39.886851 | 2021-12-25 12:35:39.886851 | 1001 | mysql_sales | -1 | zone1;zone2;zone3 | zone1;zone2,zone3 | 0 | 0 | | 0 | 0 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 | 0 | | 0 | 0 | -1 | 0 | -1 | TENANT_STATUS_NORMAL | 0 |

| 2021-12-25 12:48:20.793068 | 2021-12-25 12:48:20.793068 | 1003 | mysql_conf | -1 | zone1;zone2 | RANDOM | 0 | 0 | mysql tenant/instance | 0 | 0 | FULL{1}@zone1, FULL{1}@zone2 | 0 | | 0 | 0 | -1 | 0 | -1 | TENANT_STATUS_NORMAL | 0 |

+----------------------------+----------------------------+-----------+-------------+-------------+-------------------+-------------------+--------+----------------+-----------------------+-----------+-----------------------+---------------------------------------------+---------------------+-------------------+------------------------+-----------------------------+-----------------------+--------------------+------------------+----------------------+---------------+

3 rows in set (0.005 sec)

# 查看现有的租户/实例。

# 一个视图可以看到一个集群的所有实例。

# 试想一下,对于一个公司来说,一个集群就可以满足管理N个实例,一目了然,是不是很酷。

MySQL [oceanbase]> select * from gv$tenant;

+-----------+-------------+-------------------+-------------------+----------------+-----------------------+-----------+---------------------------------------------+

| tenant_id | tenant_name | zone_list | primary_zone | collation_type | info | read_only | locality |

+-----------+-------------+-------------------+-------------------+----------------+-----------------------+-----------+---------------------------------------------+

| 1 | sys | zone1;zone2;zone3 | zone1;zone2,zone3 | 0 | system tenant | 0 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 |

| 1001 | mysql_sales | zone1;zone2;zone3 | zone1;zone2,zone3 | 0 | | 0 | FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3 |

| 1003 | mysql_conf | zone1;zone2 | RANDOM | 0 | mysql tenant/instance | 0 | FULL{1}@zone1, FULL{1}@zone2 |

+-----------+-------------+-------------------+-------------------+----------------+-----------------------+-----------+---------------------------------------------+

3 rows in set (0.026 sec)

# 查看剩余的资源

MySQL [oceanbase]> select a.zone,concat(a.svr_ip,':',a.svr_port) observer, cpu_total, cpu_assigned, (cpu_total-cpu_assigned) cpu_free, mem_total/1024/1024/1024 mem_total_gb, mem_assigned/1024/1024/1024 mem_assign_gb, (mem_total-mem_assigned)/1024/1024/1024 mem_free_gb

from __all_virtual_server_stat a join __all_server b on (a.svr_ip=b.svr_ip and a.svr_port=b.svr_port)

order by a.zone, a.svr_ip;

+-------+----------------------+-----------+--------------+----------+----------------+----------------+----------------+

| zone | observer | cpu_total | cpu_assigned | cpu_free | mem_total_gb | mem_assign_gb | mem_free_gb |

+-------+----------------------+-----------+--------------+----------+----------------+----------------+----------------+

| zone1 | 192.168.117.171:2882 | 14 | 4.5 | 9.5 | 5.000000000000 | 3.250000000000 | 1.750000000000 |

| zone2 | 192.168.117.172:2882 | 14 | 4.5 | 9.5 | 5.000000000000 | 3.250000000000 | 1.750000000000 |

| zone3 | 192.168.117.173:2882 | 14 | 3.5 | 10.5 | 5.000000000000 | 2.250000000000 | 2.750000000000 |

+-------+----------------------+-----------+--------------+----------+----------------+----------------+----------------+

3 rows in set (0.009 sec)

13.3 还原环境[可选]

删除租户

MySQL [oceanbase]> DROP TENANT mysql_conf FORCE;

删除资源池

MySQL [oceanbase]> DROP RESOURCE POOL POO1_MYSQL_S2;

删除资源单位

MySQL [oceanbase]> DROP RESOURCE UNIT mysql_s2;

13.4 登录租户/实例

13.4.1 修改新建实例的root密码

生成一个随机密码

[admin@RHOB-DB00 ~]$ strings /dev/urandom |tr -dc A-Za-z0-9 | head -c8; echo ZbkErGYS

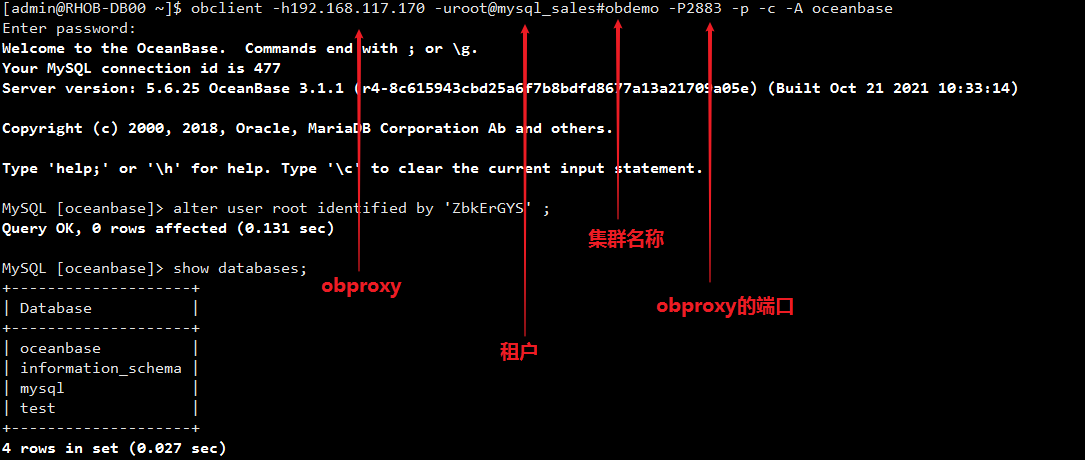

13.4.2 登录新建的mysql租户

登录租户,修改密码

14. 建表测试

14.1 分区表

use test;

CREATE TABLE test_range(

id INT,

gmt_create TIMESTAMP,

info VARCHAR(20),

PRIMARY KEY (gmt_create))

PARTITION BY RANGE(UNIX_TIMESTAMP(gmt_create))

(PARTITION p2014 VALUES LESS THAN (UNIX_TIMESTAMP('2015-01-01 00:00:00')),

PARTITION p2015 VALUES LESS THAN (UNIX_TIMESTAMP('2016-01-01 00:00:00')),

PARTITION p2016 VALUES LESS THAN (UNIX_TIMESTAMP('2017-01-01 00:00:00')));

MySQL [test]> insert into test_range values (1,'2014-06-02','hhha');

Query OK, 1 row affected (0.200 sec)

MySQL [test]> insert into test_range values (2,'2015-07-03','hxxhha');

Query OK, 1 row affected (0.015 sec)

MySQL [test]> insert into test_range values (3,'2016-09-09','xxx');

Query OK, 1 row affected (0.011 sec)

MySQL [test]> select * from test_range;

+------+---------------------+--------+

| id | gmt_create | info |

+------+---------------------+--------+

| 1 | 2014-06-02 00:00:00 | hhha |

| 2 | 2015-07-03 00:00:00 | hxxhha |

| 3 | 2016-09-09 00:00:00 | xxx |

+------+---------------------+--------+

3 rows in set (0.042 sec)

MySQL [test]> select * from test_range partition (p2016);

+------+---------------------+------+

| id | gmt_create | info |

+------+---------------------+------+

| 3 | 2016-09-09 00:00:00 | xxx |

+------+---------------------+------+

1 row in set (0.004 sec)

14.2 分区表的分布

笔者想通过视图了解各个分区表的分区的leader副本在哪里,但没有找出,后续有时间再试试看。

即使primary_zone设置为RANDOM,在只有三个Unit的情况下(即Resource Pool的unit_num=1的情况下),每个leader副本会默认在第一个Zone里。

MySQL [oceanbase]> select * from gv$table where table_name ='test_range'\G

*************************** 1. row ***************************

tenant_id: 1001

tenant_name: mysql_sales

table_id: 1100611139453777

table_name: test_range

database_id: 1100611139404776

database_name: test

tablegroup_id: -1

tablegroup_name: NULL

table_type: 3

zone_list: zone1;zone2;zone3

primary_zone: zone1;zone2,zone3

collation_type: 45

locality: FULL{1}@zone1, FULL{1}@zone2, FULL{1}@zone3

schema_version: 1640439545025432

read_only: 0

comment:

index_status: 1

index_type: 0

part_level: 1

part_func_type: 3

part_func_expr: UNIX_TIMESTAMP(gmt_create)

part_num: 3

sub_part_func_type: 0

sub_part_func_expr:

sub_part_num: 1

dop: 1

auto_part: 0

auto_part_size: -1

1 row in set (0.015 sec)

MySQL [oceanbase]> select * from v$partition where table_id =1100611139453777 order by zone;

+-----------+------------------+---------------+--------------+-----------------+----------+----------+---------+---------------+-------+------+-------------------------------------------------------------------------------------------------------------------+-----------+-----------+--------------+--------------------+---------------+--------------+-----------------+---------+--------------+---------------+-----------------------+------------+--------+

| tenant_id | table_id | tablegroup_id | partition_id | svr_ip | svr_port | sql_port | unit_id | partition_cnt | zone | role | member_list | row_count | data_size | data_version | partition_checksum | data_checksum | row_checksum | column_checksum | rebuild | replica_type | required_size | status | is_restore | quorum |

+-----------+------------------+---------------+--------------+-----------------+----------+----------+---------+---------------+-------+------+-------------------------------------------------------------------------------------------------------------------+-----------+-----------+--------------+--------------------+---------------+--------------+-----------------+---------+--------------+---------------+-----------------------+------------+--------+

| 1001 | 1100611139453777 | -1 | 0 | 192.168.117.171 | 2882 | 2881 | 1001 | 0 | zone1 | 1 | 192.168.117.171:2882:1640439545129458,192.168.117.172:2882:1640439545129458,192.168.117.173:2882:1640439545129458 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

| 1001 | 1100611139453777 | -1 | 1 | 192.168.117.171 | 2882 | 2881 | 1001 | 0 | zone1 | 1 | 192.168.117.171:2882:1640439545129583,192.168.117.172:2882:1640439545129583,192.168.117.173:2882:1640439545129583 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

| 1001 | 1100611139453777 | -1 | 2 | 192.168.117.171 | 2882 | 2881 | 1001 | 0 | zone1 | 1 | 192.168.117.171:2882:1640439545129686,192.168.117.172:2882:1640439545129686,192.168.117.173:2882:1640439545129686 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

| 1001 | 1100611139453777 | -1 | 0 | 192.168.117.172 | 2882 | 2881 | 1002 | 0 | zone2 | 2 | 192.168.117.171:2882:1640439545129458,192.168.117.172:2882:1640439545129458,192.168.117.173:2882:1640439545129458 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

| 1001 | 1100611139453777 | -1 | 1 | 192.168.117.172 | 2882 | 2881 | 1002 | 0 | zone2 | 2 | 192.168.117.171:2882:1640439545129583,192.168.117.172:2882:1640439545129583,192.168.117.173:2882:1640439545129583 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

| 1001 | 1100611139453777 | -1 | 2 | 192.168.117.172 | 2882 | 2881 | 1002 | 0 | zone2 | 2 | 192.168.117.171:2882:1640439545129686,192.168.117.172:2882:1640439545129686,192.168.117.173:2882:1640439545129686 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

| 1001 | 1100611139453777 | -1 | 0 | 192.168.117.173 | 2882 | 2881 | 1003 | 0 | zone3 | 2 | 192.168.117.171:2882:1640439545129458,192.168.117.172:2882:1640439545129458,192.168.117.173:2882:1640439545129458 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

| 1001 | 1100611139453777 | -1 | 1 | 192.168.117.173 | 2882 | 2881 | 1003 | 0 | zone3 | 2 | 192.168.117.171:2882:1640439545129583,192.168.117.172:2882:1640439545129583,192.168.117.173:2882:1640439545129583 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

| 1001 | 1100611139453777 | -1 | 2 | 192.168.117.173 | 2882 | 2881 | 1003 | 0 | zone3 | 2 | 192.168.117.171:2882:1640439545129686,192.168.117.172:2882:1640439545129686,192.168.117.173:2882:1640439545129686 | 0 | 0 | 1 | 0 | 0 | 0 | | 0 | 0 | 0 | REPLICA_STATUS_NORMAL | 0 | 3 |

+-----------+------------------+---------------+--------------+-----------------+----------+----------+---------+---------------+-------+------+-------------------------------------------------------------------------------------------------------------------+-----------+-----------+--------------+--------------------+---------------+--------------+-----------------+---------+--------------+---------------+-----------------------+------------+--------+

9 rows in set (0.048 sec)

15. 了解表组

分区的分布以及leader的分布是随机的,在分布式数据库里,跨节点的请求时性能会有下降。

OceanBase 对于同一个表分组中的表的同号分区会管理为一个分区组。

同一个分区组中的分区,OceanBase 会尽可能的分配到同一个节点内部,这样就可以规避跨节点的请求。

举例:前提条件: 表>=2 且为分区表。

ordr 和 ordl都为hash分区表,它们的同个分区号 如p0会在同一个observer里面,而不会跨observer分布。

目前官方举例hash分区,不知道range分区是否可行?后续有机会测试一波。

15.1 创建表组

MySQL [test]> show tablegroups;

+-----------------+------------+---------------+

| Tablegroup_name | Table_name | Database_name |

+-----------------+------------+---------------+

| oceanbase | NULL | NULL |

+-----------------+------------+---------------+

3 rows in set (0.017 sec)

MySQL [test]> create tablegroup tgorder partition by hash partitions 3;

Query OK, 0 rows affected (0.053 sec)

MySQL [test]> show tablegroups;

+-----------------+------------+---------------+

| Tablegroup_name | Table_name | Database_name |

+-----------------+------------+---------------+

| oceanbase | NULL | NULL |

| tgorder | NULL | NULL |

+-----------------+------------+---------------+

3 rows in set (0.017 sec)

15.2 新建表并加入表组

create table ordr (

o_w_id int

, o_d_id int

, o_id int

, o_c_id int

, o_carrier_id int

, o_ol_cnt int

, o_all_local int

, o_entry_d date

, index iordr(o_w_id, o_d_id, o_c_id, o_id) local

, primary key ( o_w_id, o_d_id, o_id )

)tablegroup tgorder partition by hash(o_w_id) partitions 3;

create table ordl (

ol_w_id int

, ol_d_id int

, ol_o_id int

, ol_number int

, ol_delivery_d date

, ol_amount decimal(6, 2)

, ol_i_id int

, ol_supply_w_id int

, ol_quantity int

, ol_dist_info char(24)

, primary key (ol_w_id, ol_d_id, ol_o_id, ol_number )

)tablegroup tgorder partition by hash(ol_w_id) partitions 3;

MySQL [test]> show tablegroups;

+-----------------+------------+---------------+

| Tablegroup_name | Table_name | Database_name |

+-----------------+------------+---------------+

| oceanbase | NULL | NULL |

| tgorder | ordl | test |

| tgorder | ordr | test |

+-----------------+------------+---------------+

4 rows in set (0.014 sec)

# 或者使用以下方法,加入表组。

MySQL [test]> alter tablegroup tgorder add ordl , ordr ;

Query OK, 0 rows affected (0.016 sec)

MySQL [test]> show tablegroups;

+-----------------+------------+---------------+

| Tablegroup_name | Table_name | Database_name |

+-----------------+------------+---------------+

| oceanbase | NULL | NULL |

| tgorder | ordl | test |

| tgorder | ordr | test |

+-----------------+------------+---------------+

3 rows in set (0.004 sec)

15.3 将表从表组移出

MySQL [test]> alter table ordl tablegroup = '';

Query OK, 0 rows affected (0.148 sec)

MySQL [test]> alter table ordr tablegroup = '';

Query OK, 0 rows affected (0.018 sec)

MySQL [test]> show tablegroups;

+-----------------+------------+---------------+

| Tablegroup_name | Table_name | Database_name |

+-----------------+------------+---------------+

| oceanbase | NULL | NULL |

| tgorder | NULL | NULL |

+-----------------+------------+---------------+

2 rows in set (0.004 sec)

16. 了解复制表

参考官方文档。

普通表

普通的表在生产环境,默认有三副本,其中一个主副本和两个备副本。备副本通过同步主副本的事务日志 clog 保持同步,同步协议是 Paxos 协议,主副本的事务日志只有在多数成员里确认落盘后,事务修改才会生效。

复制表

普通表可以变为复制表,然后主副本和所有备副本之间使用全同步协议,主副本的事务日志只有在所有副本成员里确认落盘后,事务修改才会生效。

注意 : 不要被 冗余副本影响了,通过区分协议理解普通表和复制表的概念。

复制表解决的问题

业务上存在一些表,这些表的更新的频率很低,但是访问的频率非常高,并且要求总是能够访问到最新的数据。

而普通表的风险就是备副本的读会有些许延迟,并且可能有远程SQL的问题。

create table test_dup

(

id bigint not null auto_increment ,

c1 varchar(50),

c2 timestamp not null default current_timestamp

)

duplicate_scope='cluster' ;

Query OK, 0 rows affected (0.12 sec)

MySQL [oceanbase]> select table_name,duplicate_scope from oceanbase.__all_table_v2 where table_name in ('test_range','test_dup');

+------------+-----------------+

| table_name | duplicate_scope |

+------------+-----------------+

| test_range | 0 |

| test_dup | 1 |

+------------+-----------------+

2 rows in set (0.003 sec)

17. 查看执行计划

OceanBase的执行计划,阅读起来还是可以的。后续有机会对比下Oracle和MySQL8的执行计划。

17.1 复制表/普通表

简单了解非分区表的执行计划。

MySQL [test]> desc test_dup;

+-------+-------------+------+-----+-------------------+----------------+

| Field | Type | Null | Key | Default | Extra |

+-------+-------------+------+-----+-------------------+----------------+

| id | bigint(20) | NO | | NULL | auto_increment |

| c1 | varchar(50) | YES | | NULL | |

| c2 | timestamp | NO | | CURRENT_TIMESTAMP | |

+-------+-------------+------+-----+-------------------+----------------+

# 估算行数 好像有点不对劲 ? 空表 100000 rows 还是挺多的。

MySQL [test]> explain select * from test_dup\G

*************************** 1. row ***************************

Query Plan: ========================================

|ID|OPERATOR |NAME |EST. ROWS|COST |

----------------------------------------

|0 |TABLE SCAN|test_dup|100000 |66272|

========================================

Outputs & filters:

-------------------------------------

0 - output([test_dup.id], [test_dup.c1], [test_dup.c2]), filter(nil),

access([test_dup.id], [test_dup.c1], [test_dup.c2]), partitions(p0)

1 row in set (1.085 sec)

MySQL [test]> insert into test_dup values (1,'haha','2021-12-25');

Query OK, 1 row affected (1.683 sec)

MySQL [test]> commit;

Query OK, 0 rows affected (0.003 sec)

MySQL [test]> select * from test_dup;

+----+------+---------------------+

| id | c1 | c2 |

+----+------+---------------------+

| 1 | haha | 2021-12-25 00:00:00 |

+----+------+---------------------+

1 row in set (0.070 sec)

# 插入数据后,应该是重新估算了,行数正常。

# partitions(p0) , 非分区表的partitions默认为p0。

MySQL [test]> explain select * from test_dup\G

*************************** 1. row ***************************

Query Plan: =======================================

|ID|OPERATOR |NAME |EST. ROWS|COST|

---------------------------------------

|0 |TABLE SCAN|test_dup|1 |36 |

=======================================

Outputs & filters:

-------------------------------------

0 - output([test_dup.id], [test_dup.c1], [test_dup.c2]), filter(nil),

access([test_dup.id], [test_dup.c1], [test_dup.c2]), partitions(p0)

17.2 分区表

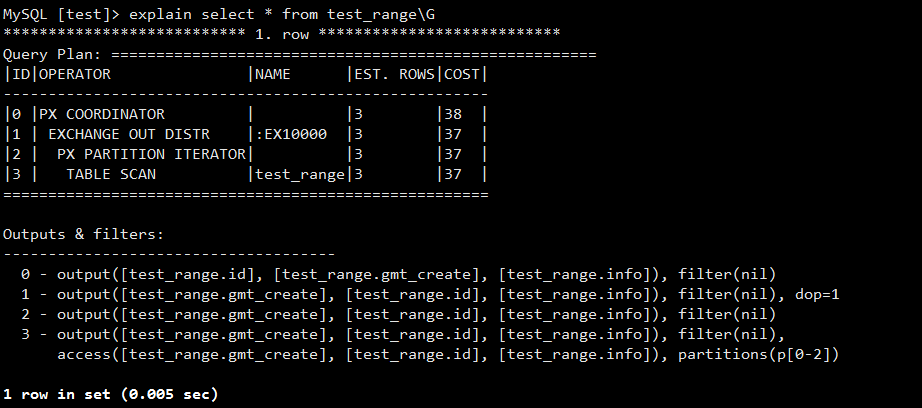

根据使用分区条件简单了解OceanBase的执行计划。

17.2.1 查询分区表全表

可以看到左下角的partitionsp[0-2],代表所有分区都会被扫描。

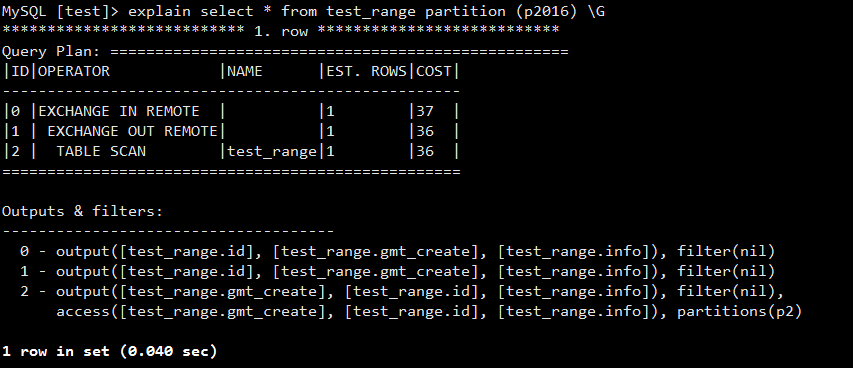

17.2.1 查询分区

可以看到执行计划中,显示的partitions是p2,即只扫描了P2016分区,不过不是笔者指定的分区名字。

18. 总结经验

- 内存要充足。因为笔者的电脑资源有限,所以关闭了一个节点,发现无法truncate和新建表。OceanBase 数据库中的系统变量 ob_create_table_strict_mode= TRUE,表示会严格按照 Locality 建立副本,任何副本建立失败,则创建表失败。将 ob_create_table_strict_mode 设置为 FALSE,保证在节点下线期间可以正常创建表。

所以,资源充足的情况下,要看看是不是有个节点下线了。

ERROR 4624 (HY000): machine resource is not enough to hold a new unit

-

因为OBD工具有维护功能,笔者尝试利用OBD工具加入手动部署的集群,但失败告终。其实问题不大,查询状态的信息基本上可以通过脚本实现,修改配置也可以通过修改启动observer的配置完成。另外,手动部署的集群在启停方面的灵活性比OBD工具部署的集群更好,请见本文第8点!

-

为了进一步了解zone的扩展,笔者尝试先部署zone1-observer1,然后初始化集群,最后加入新的zone,但发现新增的zone的zone_type信息为LOCAL,而不是ReadWrite。通过查询__all_tenant的zone_list、primary_zone、locality只有zone1,尝试ALTER TENANT 增加 zone2和zone3,但该版本不支持修改。所以,笔者建议社区版初始化集群时,就把rs_list写全。

-

了解分区表的leader主副本分布,由于笔者经验有限,没有找出,但笔者相信肯定是可以找到的,因为了解主副本的分布,对于架构优化,还是有挺大的帮助。

-

由于笔者电脑资源有限,无法演示 1-1-1 集群扩展至 3-3-3 集群,比较遗憾,否则将会更好地理解OceanBase的易扩展性。后续有机会再补上。

-

集群的某个节点正常关闭,过一段时间后,启动该节点,集群正常,且相应的数据变更会同步到刚启动的节点。

最后,感谢您的阅读,如有不妥之处,欢迎提出,谢谢!