Part 3: Building the Lab OpenStack Networking Service (neutron) (yuanjian)

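——>1<—— Install and configure the controller node

Configure prerequisites

Install the Networking service components

Configure the Networking service components

Configure the Modular Layer 2 (ML2) plug-in

Configure the Compute service to use neutron

Finalize the installation

Verify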

——>Environment verification and setup<——

1. Prepare the neutron (network) node

//1. On the neutron node, move the stock CentOS YUM repo files (restored by an update) out of the way
[root@network ~]# mv /etc/yum.repos.d/CentOS-* /etc/yum.repos.d/back/
[root@network ~]# ll /etc/yum.repos.d/ftp.repo
-rw-r--r-- 1 root root 434 Jan 9 01:19 /etc/yum.repos.d/ftp.repo
[root@network ~]# yum install -y lrzsz net-tools vim
//2. Set up the hosts file by copying it from the controller
[root@controller ~]# scp /etc/hosts root@192.168.222.6:/root/hosts
//3. Set the hostname
[root@network ~]# hostnamectl set-hostname network.nice.com
//4. Check the current firewall status
[root@network ~]# systemctl status firewalld.service
//5. Check the SELinux status
[root@network ~]# getenforce
Disabled
//6. Synchronize time with the time server
[root@network ~]# yum install -y ntpdate //install the time synchronization client
[root@network ~]# ntpdate -u 192.168.222.5 //synchronize the clock
//7. Configure crontab: look up the existing entry on the compute node and copy it
[root@compute1 ~]# crontab -l
*/1 * * * * /sbin/ntpdate -u controller.nice.com &> /dev/null
//Add the same entry on the neutron node
[root@network ~]# crontab -e
*/1 * * * * /sbin/ntpdate -u controller.nice.com &> /dev/null
[root@network ~]# systemctl restart crond.service
[root@network ~]# systemctl enable crond.service

2. Check that the controller node environment is healthy

//Check that every service is in the up state
[root@controller ~]# nova service-list
+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |
+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
| 1 | nova-consoleauth | controller.nice.com | internal | enabled | up | 2021-01-11T03:36:03.000000 | - |
| 2 | nova-cert | controller.nice.com | internal | enabled | up | 2021-01-11T03:36:03.000000 | - |
| 3 | nova-conductor | controller.nice.com | internal | enabled | up | 2021-01-11T03:36:02.000000 | - |
| 4 | nova-scheduler | controller.nice.com | internal | enabled | up | 2021-01-11T03:36:03.000000 | - |
| 5 | nova-compute | compute1.nice.com | nova | enabled | up | 2021-01-11T03:35:58.000000 | - |
+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
//Check that the image is in the ACTIVE state
[root@controller ~]# nova image-list
+--------------------------------------+---------------------+--------+--------+
| ID | Name | Status | Server |
+--------------------------------------+---------------------+--------+--------+
| 9254729e-15b4-4315-b396-3f3e2e5b339f | cirros-0.3.3-x86_64 | ACTIVE | |
+--------------------------------------+---------------------+--------+--------+

——>OpenStack neutron configuration<——

Configure on the OpenStack controller node

1. Configure prerequisites

//1. Create the database by completing the following steps
//1.1. Connect to the MySQL database as root
[root@controller ~]# mysql -uroot -p
Enter password: 123456
//1.2. Create the neutron database
MariaDB [(none)]> CREATE DATABASE neutron;
Query OK, 1 row affected (0.00 sec)
//1.3. Create the neutron database user and grant it full control of the neutron database
MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';
Query OK, 0 rows affected (0.01 sec)
MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';
Query OK, 0 rows affected (0.00 sec)
//1.4. Exit the database connection
MariaDB [(none)]> exit
//2. Source the admin environment script
[root@controller ~]# source admin-openrc.sh
//3. Create the Networking service credentials in the Identity service by completing the following steps:
//3.1. Create the neutron user
[root@controller ~]# keystone user-create --name neutron --pass NEUTRON_PASS
+----------+----------------------------------+
| Property | Value |
+----------+----------------------------------+
| email | |
| enabled | True |
| id | 0e2c1cbe865742a7be0ce02f3bb9227d |
| name | neutron |
| username | neutron |
+----------+----------------------------------+
//3.2. Link the neutron user to the service tenant with the admin role
[root@controller ~]# keystone user-role-add --user neutron --tenant service --role admin
//3.3. Create the neutron service
[root@controller ~]# keystone service-create --name neutron --type network --description "OpenStack Networking"
+-------------+----------------------------------+
| Property | Value |
+-------------+----------------------------------+
| description | OpenStack Networking |
| enabled | True |
| id | 2a780bab744f4af9bf53af14b125a444 |
| name | neutron |
| type | network |
+-------------+----------------------------------+
//3.4. Create the service API endpoints
[root@controller ~]# keystone endpoint-create \
> --service-id $(keystone service-list | awk '/ network / {print $2}') \
> --publicurl http://controller.nice.com:9696 \
> --adminurl http://controller.nice.com:9696 \
> --internalurl http://controller.nice.com:9696 \
> --region regionOne
+-------------+----------------------------------+
| Property | Value |
+-------------+----------------------------------+
| adminurl | http://controller.nice.com:9696 |
| id | a1a573e58fbf4635ac6be5e2a44af52c |
| internalurl | http://controller.nice.com:9696 |
| publicurl | http://controller.nice.com:9696 |
| region | regionOne |
| service_id | 2a780bab744f4af9bf53af14b125a444 |
+-------------+----------------------------------+
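The `--service-id $(keystone service-list | awk '/ network / {print $2}')` substitution in the endpoint-create command picks the service ID out of the table that `keystone service-list` prints. The following is a minimal sketch of what that filter does, run against a hand-written sample table. The helper name `service_id_for_type` and the identity row are illustrative assumptions, not output captured from this lab.

```shell
# service_id_for_type TYPE: read a keystone-style table on stdin and print
# the id column of the row whose type column equals TYPE.
# Hypothetical helper; it generalizes the awk one-liner used with endpoint-create.
service_id_for_type() {
    # With awk's default whitespace splitting, $1 is the leading "|" and $2 is the id.
    awk -v pat=" $1 " 'index($0, pat) { print $2 }'
}

# Illustrative sample table; only the neutron row comes from this walkthrough.
sample_table='+----------------------------------+----------+----------+----------------------+
| id | name | type | description |
+----------------------------------+----------+----------+----------------------+
| 11111111111111111111111111111111 | keystone | identity | OpenStack Identity |
| 2a780bab744f4af9bf53af14b125a444 | neutron | network | OpenStack Networking |
+----------------------------------+----------+----------+----------------------+'

printf '%s\n' "$sample_table" | service_id_for_type network
```

The match is on ` network ` with surrounding spaces, so the word "Networking" in the description column does not trigger it.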

2. Install the Networking service components

[root@controller ~]# yum install openstack-neutron openstack-neutron-ml2 python-neutronclient which
Installed:
openstack-neutron.noarch 0:2014.2-5.el7.centos openstack-neutron-ml2.noarch 0:2014.2-5.el7.centos
Dependency Installed:
conntrack-tools.x86_64 0:1.4.2-3.el7 dnsmasq-utils.x86_64 0:2.66-12.el7 ipset.x86_64 0:6.19-4.el7 ipset-libs.x86_64 0:6.19-4.el7
libnetfilter_cthelper.x86_64 0:1.0.0-4.el7 libnetfilter_cttimeout.x86_64 0:1.0.0-2.el7 libnetfilter_queue.x86_64 0:1.0.2-2.el7 python-jsonrpclib.noarch 0:0.1.3-1.el7
python-neutron.noarch 0:2014.2-5.el7.centos radvd.x86_64 0:2.7-1.el7.centos
Complete!

3. Configure the Networking service components

//1. Edit /etc/neutron/neutron.conf and complete the following steps:
[root@controller ~]# vim /etc/neutron/neutron.conf
//1.1. In the [database] section, configure database access
[database]
......
connection=mysql://neutron:NEUTRON_DBPASS@controller.nice.com/neutron
//1.2. In the [DEFAULT] section, configure RabbitMQ message queue access
[DEFAULT]
......
rpc_backend=rabbit
rabbit_host=controller.nice.com
rabbit_password=guest
//1.3. In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:
//In [DEFAULT], set the authentication strategy to keystone
[DEFAULT]
......
auth_strategy=keystone
[keystone_authtoken]
......
auth_uri=http://controller.nice.com:5000/v2.0
identity_uri=http://controller.nice.com:35357
admin_tenant_name=service
admin_user=neutron
admin_password=NEUTRON_PASS
//1.4. In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:
[DEFAULT]
......
core_plugin=ml2
service_plugins=router
allow_overlapping_ips=True //lets different tenant networks use overlapping IP ranges
//1.5. In the [DEFAULT] section, configure Networking to notify Compute when the network topology changes:
[DEFAULT]
......
notify_nova_on_port_status_changes=True //notify nova when a port's status changes (a port is roughly a NIC attachment)
notify_nova_on_port_data_changes=True //notify nova when a port's data changes
nova_url=http://controller.nice.com:8774/v2 //nova API address
nova_admin_auth_url=http://controller.nice.com:35357/v2.0 //Identity admin endpoint used for the nova credentials
nova_region_name=regionOne //nova region
nova_admin_username=nova //nova administrative user
nova_admin_tenant_id=48bee3be288e477889d404a41a0b6f33 //ID of the service tenant (SERVICE_TENANT_ID)
nova_admin_password=NOVA_PASS //password of the nova user
//Note: obtain SERVICE_TENANT_ID with the following commands
[root@controller ~]# source admin-openrc.sh
[root@controller ~]# keystone tenant-get service
+-------------+----------------------------------+
| Property | Value |
+-------------+----------------------------------+
| description | Service Tenant |
| enabled | True |
| id | 48bee3be288e477889d404a41a0b6f33 |
| name | service |
+-------------+----------------------------------+
//Copy the id value
//1.6. (Optional) Enable verbose logging in the [DEFAULT] section to help with troubleshooting.
[DEFAULT]
......
verbose=True
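Because the same option name means different things in different sections (for example, `auth_uri` belongs in `[keystone_authtoken]`, not `[DEFAULT]`), it can help to read a value back from a specific section after editing. The following is a minimal sketch under stated assumptions: `ini_get` is a hypothetical helper, not an OpenStack tool, and it runs here against a temporary sample file instead of the real /etc/neutron/neutron.conf.

```shell
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION], or nothing.
# Hypothetical helper for illustration; not part of OpenStack.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*\[/ {                      # section header such as [DEFAULT]
            line = $0
            gsub(/^[ \t]*\[|\][ \t]*$/, "", line)
            current = line
            next
        }
        /^[ \t]*[#;]/ { next }             # skip comment lines
        current == section {
            pos = index($0, "=")
            if (pos == 0) next
            k = substr($0, 1, pos - 1)
            v = substr($0, pos + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)
            gsub(/^[ \t]+|[ \t]+$/, "", v)
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Sample file standing in for neutron.conf (values taken from this walkthrough).
sample_conf=$(mktemp)
cat > "$sample_conf" <<'EOF'
[DEFAULT]
auth_strategy = keystone
core_plugin=ml2

[keystone_authtoken]
auth_uri=http://controller.nice.com:5000/v2.0
admin_user = neutron
EOF

ini_get "$sample_conf" DEFAULT core_plugin
ini_get "$sample_conf" keystone_authtoken auth_uri
```

An empty result for a section where the option is expected suggests the line was placed under the wrong header.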

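3. Configure the Modular Layer 2 (ML2) plug-in

//1. Edit /etc/neutron/plugins/ml2/ml2_conf.ini and complete the following steps:
[root@controller ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini
//1.1. In the [ml2] section, enable the flat and generic routing encapsulation (GRE) network type drivers,
//GRE tenant networks, and the OVS mechanism driver.
[ml2]
......
type_drivers=flat,gre //flat is a plain untagged network type; gre provides multi-tenant networking
tenant_network_types=gre //tenant networks are created as GRE networks by default
mechanism_drivers=openvswitch //Open vSwitch, a software switch, does the switching
//1.2. In the [ml2_type_gre] section, configure the tunnel ID range:
[ml2_type_gre]
......
tunnel_id_ranges=1:1000 //tenant networks use tunnel IDs 1 through 1000
//1.3. In the [securitygroup] section, enable security groups, enable ipset, and configure the OVS firewall driver:
[securitygroup]
......
enable_security_group=True //enable security groups
enable_ipset=True //use ipset to make the security group iptables rules more efficient
firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver //firewall driver; this value is fixed and needs no further configuration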

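4. Configure the Compute service to use neutron

//By default the Compute service uses legacy networking, so it must be reconfigured to tie nova and neutron together
//1. Edit /etc/nova/nova.conf and complete the following steps:
[root@controller ~]# vim /etc/nova/nova.conf
//1.1. In the [DEFAULT] section, configure the APIs and drivers:
[DEFAULT]
......
network_api_class=nova.network.neutronv2.api.API //class nova uses to talk to the network service
security_group_api=neutron //security groups are provided by neutron
linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver //interface driver used for instance NICs
firewall_driver=nova.virt.firewall.NoopFirewallDriver //disable nova's own firewall, since neutron handles security groups
//1.2. In the [neutron] section, configure the access parameters:
[neutron]
......
url=http://controller.nice.com:9696 //neutron endpoint
auth_strategy=keystone //authentication strategy
admin_auth_url=http://controller.nice.com:35357/v2.0 //watch for stray spaces: an extra space in front of auth_strategy=keystone once made the file fail to parse for the author
admin_tenant_name=service //tenant name
admin_username=neutron //user name
admin_password=NEUTRON_PASS //password of the neutron user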
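The walkthrough reports that a single leading space in front of an option broke parsing of nova.conf, and a doubled `=` is a similarly easy slip. The following is a minimal sketch of a pre-restart check for both. `lint_conf` is a hypothetical helper written for this illustration, and the sample file is a stand-in for the real /etc/nova/nova.conf.

```shell
# lint_conf FILE: report option lines that start with whitespace or use "==".
# Returns non-zero if anything was reported. Hypothetical helper for illustration.
lint_conf() {
    awk '
        /^[ \t]+[A-Za-z_]/ {
            printf "%s:%d: leading whitespace\n", FILENAME, FNR; bad = 1
        }
        /^[ \t]*[A-Za-z_][A-Za-z0-9_]*[ \t]*==/ {
            printf "%s:%d: doubled equals sign\n", FILENAME, FNR; bad = 1
        }
        END { exit bad + 0 }
    ' "$1"
}

# Sample file with one instance of each problem.
bad_conf=$(mktemp)
printf '%s\n' \
    '[DEFAULT]' \
    'security_group_api=neutron' \
    'firewall_driver==nova.virt.firewall.NoopFirewallDriver' \
    '[neutron]' \
    ' auth_strategy=keystone' > "$bad_conf"

lint_conf "$bad_conf" || echo "fix the lines above before restarting nova"
```

The check is deliberately narrow: it only looks at lines that begin with an option name, so comments and section headers are ignored.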

5. Finalize the configuration

//1. Create a symbolic link for the ML2 plug-in configuration file. It is not used by default, so the link is required
[root@controller ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
//2. Populate the database
[root@controller ~]# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade juno" neutron
INFO [alembic.migration] Running upgrade 544673ac99ab -> juno, juno
//Log in to the database and check the result
[root@controller ~]# mysql -uroot -p
MariaDB [(none)]> use neutron
MariaDB [neutron]> show tables;
+-------------------------------------+
| Tables_in_neutron |
+-------------------------------------+
| agents |
| alembic_version |
| allowedaddresspairs |
| arista_provisioned_nets |
| arista_provisioned_tenants |
| arista_provisioned_vms |
| brocadenetworks |
| brocadeports |
| cisco_credentials |
| cisco_csr_identifier_map |
| cisco_hosting_devices |
| cisco_ml2_apic_contracts |
| cisco_ml2_apic_host_links |
| cisco_ml2_apic_names |
| cisco_ml2_nexusport_bindings |
| cisco_n1kv_multi_segments |
| cisco_n1kv_network_bindings |
| cisco_n1kv_port_bindings |
| cisco_n1kv_profile_bindings |
| cisco_n1kv_trunk_segments |
| cisco_n1kv_vlan_allocations |
| cisco_n1kv_vmnetworks |
| cisco_n1kv_vxlan_allocations |
| cisco_network_profiles |
| cisco_policy_profiles |
| cisco_port_mappings |
| cisco_provider_networks |
| cisco_qos_policies |
| cisco_router_mappings |
| consistencyhashes |
| csnat_l3_agent_bindings |
| dnsnameservers |
| dvr_host_macs |
| embrane_pool_port |
| externalnetworks |
| extradhcpopts |
| firewall_policies |
| firewall_rules |
| firewalls |
| floatingips |
| ha_router_agent_port_bindings |
| ha_router_networks |
| ha_router_vrid_allocations |
| healthmonitors |
| hyperv_network_bindings |
| hyperv_vlan_allocations |
| ikepolicies |
| ipallocationpools |
| ipallocations |
| ipavailabilityranges |
| ipsec_site_connections |
| ipsecpeercidrs |
| ipsecpolicies |
| lsn |
| lsn_port |
| maclearningstates |
| members |
| meteringlabelrules |
| meteringlabels |
| ml2_brocadenetworks |
| ml2_brocadeports |
| ml2_dvr_port_bindings |
| ml2_flat_allocations |
| ml2_gre_allocations |
| ml2_gre_endpoints |
| ml2_network_segments |
| ml2_port_bindings |
| ml2_vlan_allocations |
| ml2_vxlan_allocations |
| ml2_vxlan_endpoints |
| mlnx_network_bindings |
| multi_provider_networks |
| network_bindings |
| network_states |
| networkconnections |
| networkdhcpagentbindings |
| networkflavors |
| networkgatewaydevicereferences |
| networkgatewaydevices |
| networkgateways |
| networkqueuemappings |
| networks |
| networksecuritybindings |
| neutron_nsx_network_mappings |
| neutron_nsx_port_mappings |
| neutron_nsx_router_mappings |
| neutron_nsx_security_group_mappings |
| nexthops |
| nuage_net_partition_router_mapping |
| nuage_net_partitions |
| nuage_provider_net_bindings |
| nuage_subnet_l2dom_mapping |
| ofcfiltermappings |
| ofcnetworkmappings |
| ofcportmappings |
| ofcroutermappings |
| ofctenantmappings |
| ovs_network_bindings |
| ovs_tunnel_allocations |
| ovs_tunnel_endpoints |
| ovs_vlan_allocations |
| packetfilters |
| poolloadbalanceragentbindings |
| poolmonitorassociations |
| pools |
| poolstatisticss |
| port_profile |
| portbindingports |
| portinfos |
| portqueuemappings |
| ports |
| portsecuritybindings |
| providerresourceassociations |
| qosqueues |
| quotas |
| router_extra_attributes |
| routerflavors |
| routerl3agentbindings |
| routerports |
| routerproviders |
| routerroutes |
| routerrules |
| routers |
| routerservicetypebindings |
| securitygroupportbindings |
| securitygrouprules |
| securitygroups |
| segmentation_id_allocation |
| servicerouterbindings |
| sessionpersistences |
| subnetroutes |
| subnets |
| tunnelkeylasts |
| tunnelkeys |
| tz_network_bindings |
| vcns_edge_monitor_bindings |
| vcns_edge_pool_bindings |
| vcns_edge_vip_bindings |
| vcns_firewall_rule_bindings |
| vcns_router_bindings |
| vips |
| vpnservices |
+-------------------------------------+
142 rows in set (0.00 sec)
//3. Restart the Compute services: the nova API, the scheduler, and the conductor (which handles database access)
[root@controller ~]# systemctl restart openstack-nova-api.service openstack-nova-scheduler.service openstack-nova-conductor.service
//Check that every service is back in the up state after the restart
[root@controller ~]# nova service-list
+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
| Id | Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |
+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
| 1 | nova-consoleauth | controller.nice.com | internal | enabled | up | 2021-01-11T06:30:50.000000 | - |
| 2 | nova-cert | controller.nice.com | internal | enabled | up | 2021-01-11T06:30:50.000000 | - |
| 3 | nova-conductor | controller.nice.com | internal | enabled | up | 2021-01-11T06:30:54.000000 | - |
| 4 | nova-scheduler | controller.nice.com | internal | enabled | up | 2021-01-11T06:30:54.000000 | - |
| 5 | nova-compute | compute1.nice.com | nova | enabled | up | 2021-01-11T06:30:51.000000 | - |
+----+------------------+---------------------+----------+---------+-------+----------------------------+-----------------+
//4. Start the Networking service and configure it to start at boot
[root@controller ~]# systemctl enable neutron-server.service
[root@controller ~]# systemctl start neutron-server.service

6. Verify

//1. Source the admin environment script
[root@controller ~]# source admin-openrc.sh
//2. List the loaded extensions to confirm that the neutron-server process started
[root@controller ~]# neutron ext-list
+-----------------------+-----------------------------------------------+
| alias | name |
+-----------------------+-----------------------------------------------+
| security-group | security-group |
| l3_agent_scheduler | L3 Agent Scheduler |
| ext-gw-mode | Neutron L3 Configurable external gateway mode |
| binding | Port Binding |
| provider | Provider Network |
| agent | agent |
| quotas | Quota management support |
| dhcp_agent_scheduler | DHCP Agent Scheduler |
| l3-ha | HA Router extension |
| multi-provider | Multi Provider Network |
| external-net | Neutron external network |
| router | Neutron L3 Router |
| allowed-address-pairs | Allowed Address Pairs |
| extraroute | Neutron Extra Route |
| extra_dhcp_opt | Neutron Extra DHCP opts |
| dvr | Distributed Virtual Router |
+-----------------------+-----------------------------------------------+

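——>2<—— Install and configure the network node

Configure prerequisites

Install the Networking components

Configure the common Networking components

Configure the Modular Layer 2 (ML2) plug-in

Configure the Layer-3 (L3) agent

Configure the DHCP agent

Configure the metadata agent

Configure the Open vSwitch (OVS) service

Finalize the installation

Verify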

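1. Configure prerequisites

Configure on the OpenStack neutron node

//1. Edit /etc/sysctl.conf so that it contains the following parameters
//These enable IP forwarding and disable reverse-path filtering
[root@network ~]# vim /etc/sysctl.conf
net.ipv4.ip_forward=1
net.ipv4.conf.all.rp_filter=0
net.ipv4.conf.default.rp_filter=0
//2. Apply the changes
[root@network ~]# sysctl -p //reload the kernel parameters
net.ipv4.ip_forward = 1
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.default.rp_filter = 0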
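Before running `sysctl -p`, it can be useful to confirm that the file really contains the three settings with the intended values. The following is a minimal sketch, assuming a hypothetical helper named `check_sysctl_conf` and a temporary sample file in place of /etc/sysctl.conf. It only parses the file and never touches the running kernel.

```shell
# check_sysctl_conf FILE KEY=VALUE...: succeed only if every KEY is set to VALUE in FILE.
# Hypothetical helper for illustration; the last assignment in the file wins.
check_sysctl_conf() {
    local file want key value actual status
    file=$1
    status=0
    shift
    for want in "$@"; do
        key=${want%%=*}
        value=${want#*=}
        actual=$(awk -F= -v key="$key" '
            { k = $1; v = $2; gsub(/[ \t]/, "", k); gsub(/[ \t]/, "", v) }
            k == key { found = v }
            END { print found }
        ' "$file")
        if [ "$actual" != "$value" ]; then
            echo "$key: expected $value, found '${actual:-unset}'"
            status=1
        fi
    done
    return "$status"
}

# Sample file standing in for /etc/sysctl.conf on the network node.
sysctl_sample=$(mktemp)
printf '%s\n' \
    'net.ipv4.ip_forward=1' \
    'net.ipv4.conf.all.rp_filter = 0' \
    'net.ipv4.conf.default.rp_filter=0' > "$sysctl_sample"

check_sysctl_conf "$sysctl_sample" \
    net.ipv4.ip_forward=1 \
    net.ipv4.conf.all.rp_filter=0 \
    net.ipv4.conf.default.rp_filter=0 && echo "sysctl.conf looks complete"
```

On a compute node the same call would simply omit `net.ipv4.ip_forward=1`, since only the two `rp_filter` settings are configured there.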

2. Install the Networking components

[root@network ~]# yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-openvswitch
Installed:
openstack-neutron.noarch 0:2014.2-5.el7.centos openstack-neutron-ml2.noarch 0:2014.2-5.el7.centos openstack-neutron-openvswitch.noarch 0:2014.2-5.el7.centos
Complete!

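3. Configure the common Networking components

//The common component configuration covers the authentication mechanism, the message queue, and the plug-in.
//1. Edit /etc/neutron/neutron.conf and complete the following steps
[root@network ~]# vim /etc/neutron/neutron.conf
//1.1. In the [database] section, comment out any connection option, because the network node does not access the database directly.
//1.2. In the [DEFAULT] section, configure RabbitMQ message queue access
[DEFAULT]
......
rpc_backend=rabbit
rabbit_host=controller.nice.com
rabbit_password=guest
//1.3. In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:
[DEFAULT]
......
auth_strategy=keystone
[keystone_authtoken]
......
auth_uri=http://controller.nice.com:5000/v2.0
identity_uri=http://controller.nice.com:35357
admin_tenant_name=service
admin_user=neutron
admin_password=NEUTRON_PASS
//1.4. In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:
[DEFAULT]
......
core_plugin=ml2
service_plugins=router
allow_overlapping_ips=True
//1.5. (Optional) Enable verbose logging in the [DEFAULT] section to help with troubleshooting.
[DEFAULT]
......
verbose=True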

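4. Configure the Modular Layer 2 (ML2) plug-in

//The ML2 plug-in uses the Open vSwitch (OVS) mechanism to build the networking framework for instances.
//1. Edit /etc/neutron/plugins/ml2/ml2_conf.ini and complete the following steps.
[root@network ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini
//1.1. In the [ml2] section, enable the flat and generic routing encapsulation (GRE) network type drivers, GRE tenant networks, and the OVS mechanism driver
[ml2]
......
type_drivers=flat,gre //network types are flat and gre
tenant_network_types=gre //tenant networks use gre
mechanism_drivers=openvswitch
//1.2. In the [ml2_type_flat] section, configure the external network:
[ml2_type_flat] //the flat network carries the external (floating IP) network
......
flat_networks=external //label of the physical network; it is mapped to a bridge below
//1.3. In the [ml2_type_gre] section, configure the tunnel ID range:
[ml2_type_gre]
......
tunnel_id_ranges=1:1000 //range of usable tunnel IDs
//1.4. In the [securitygroup] section, enable security groups, enable ipset, and configure the OVS firewall driver:
[securitygroup]
......
enable_security_group=True //enable security groups
enable_ipset=True //use ipset for the security group rules
firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver
//1.5. In the [OVS] section, configure the Open vSwitch (OVS) agent; this section does not exist in the default file and must be added
[OVS]
......
local_ip=172.16.0.6
tunnel_type=gre
enable_tunneling=True
bridge_mappings=external:br-ex //maps the physical network label "external" to the br-ex bridge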

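5. Configure the Layer-3 (L3) agent: routing-related settings

//1. Edit /etc/neutron/l3_agent.ini and complete the following steps:
[root@network ~]# vim /etc/neutron/l3_agent.ini
//1.1. In the [DEFAULT] section, configure the driver, enable network namespaces, and configure the external network bridge
[DEFAULT]
......
interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver //interface driver
use_namespaces=True //enable network namespaces
external_network_bridge=br-ex //name of the bridge for the external (floating IP) network
//1.2. (Optional) Enable detailed logging in the [DEFAULT] section to help with troubleshooting.
[DEFAULT]
......
debug=True //this file uses debug=True where the earlier files use verbose=True; debug logs at the more detailed DEBUG level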

——>3<——

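1. Configure the DHCP agent

//1. Edit /etc/neutron/dhcp_agent.ini and complete the following steps:
[root@network ~]# vim /etc/neutron/dhcp_agent.ini
//1.1. In the [DEFAULT] section, configure the drivers and enable namespaces
[DEFAULT]
......
interface_driver=neutron.agent.linux.interface.OVSInterfaceDriver //OVS interface driver
dhcp_driver=neutron.agent.linux.dhcp.Dnsmasq //DHCP driver
use_namespaces=True //enable network namespaces
//1.2. (Optional) Enable detailed logging in the [DEFAULT] section to help with troubleshooting.
[DEFAULT]
......
debug=True //used here in place of verbose=True
//2. (Optional, but possibly necessary inside VMware virtual machines) Configure a DHCP option that lowers the MTU to 1454 bytes to improve network performance
//2.1. Edit /etc/neutron/dhcp_agent.ini and complete the following step:
//In the [DEFAULT] section, enable the dnsmasq configuration file:
[DEFAULT]
......
dnsmasq_config_file=/etc/neutron/dnsmasq-neutron.conf //path to the dnsmasq configuration file
//2.2. Create and edit /etc/neutron/dnsmasq-neutron.conf and complete the following configuration:
[root@network ~]# vim /etc/neutron/dnsmasq-neutron.conf //this file does not exist by default, so create it
//Enable the DHCP MTU option (26) and set it to 1454 bytes
dhcp-option-force=26,1454 //maximum packet size handed to instances
user=neutron //user
group=neutron //group
//2.3. Kill any existing dnsmasq processes
[root@network ~]# pkill dnsmasq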

——>4<——

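1. Configure the metadata agent

//1. Edit /etc/neutron/metadata_agent.ini and complete the following steps
[root@network ~]# vim /etc/neutron/metadata_agent.ini
//1.1. In the [DEFAULT] section, configure the access parameters:
[DEFAULT]
......
auth_url=http://controller.nice.com:5000/v2.0 //Identity endpoint
auth_region=regionOne //region
admin_tenant_name=service //tenant name
admin_user=neutron //user name
admin_password=NEUTRON_PASS //password
//1.2. In the [DEFAULT] section, configure the metadata host:
[DEFAULT]
......
nova_metadata_ip=controller.nice.com //address of the node that serves nova metadata
//1.3. In the [DEFAULT] section, configure the metadata proxy shared secret:
[DEFAULT] //the secret is shared by the metadata proxy and nova so that metadata requests can be validated
......
metadata_proxy_shared_secret=METADATA_SECRET //replace METADATA_SECRET with any secret of your choice; the same value must be set in nova.conf
//1.4. (Optional) Enable detailed logging in the [DEFAULT] section to help with troubleshooting.
[DEFAULT]
......
debug=True //used here in place of verbose=True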

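2. On the controller node, edit /etc/nova/nova.conf and complete the following configuration

//1. In the [neutron] section, enable the metadata proxy and configure the shared secret:
[root@controller ~]# vim /etc/nova/nova.conf
[neutron]
......
service_metadata_proxy=True //defaults to False; set it to True to enable the proxy
metadata_proxy_shared_secret=METADATA_SECRET //must match the secret set in metadata_agent.ini

3. On the controller node, restart the Compute API service

[root@controller ~]# systemctl restart openstack-nova-api.service //the configuration file changed, so the API service must be restarted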

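——>5<—— Configure the Open vSwitch (OVS) service

Configure on the neutron node

//1. Start the OVS service and configure it to start at boot
[root@network ~]# systemctl enable openvswitch.service
[root@network ~]# systemctl start openvswitch.service
//2. Add the external bridge
[root@network ~]# ovs-vsctl add-br br-ex
//3. Add a port to the external bridge to connect it to the external physical network
[root@network ~]# ifconfig
eno50332184 //name of the external NIC, taken from the ifconfig output
[root@network ~]# ovs-vsctl add-port br-ex eno50332184 //attach the external NIC to br-ex, so that the bridge stands in for that NIC; this can be done with the command or by editing the interface configuration files
//Note: replace INTERFACE_NAME with the actual name of the interface connected to the external network, for example eth2 or eno50332208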

——>6<—— Finalize the installation

//1. Create the symbolic link that the Networking service initialization scripts expect
[root@network ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
[root@network ~]# ll /etc/neutron/plugin.ini
lrwxrwxrwx 1 root root 37 Jan 11 18:12 /etc/neutron/plugin.ini -> /etc/neutron/plugins/ml2/ml2_conf.ini
[root@network ~]# cp /usr/lib/systemd/system/neutron-openvswitch-agent.service /usr/lib/systemd/system/neutron-openvswitch-agent.service.orig
[root@network ~]# ll /usr/lib/systemd/system/neutron-openvswitch-agent.service
-rw-r--r-- 1 root root 437 Oct 30 2014 /usr/lib/systemd/system/neutron-openvswitch-agent.service
//2. Point the OVS agent unit file at plugin.ini (the ML2 configuration) in place of the default Open vSwitch plug-in configuration file
[root@network ~]# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /usr/lib/systemd/system/neutron-openvswitch-agent.service
//3. Start the Networking services and configure them to start at boot
[root@network ~]# systemctl enable neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-ovs-cleanup.service
ln -s '/usr/lib/systemd/system/neutron-openvswitch-agent.service' '/etc/systemd/system/multi-user.target.wants/neutron-openvswitch-agent.service'
ln -s '/usr/lib/systemd/system/neutron-l3-agent.service' '/etc/systemd/system/multi-user.target.wants/neutron-l3-agent.service'
ln -s '/usr/lib/systemd/system/neutron-dhcp-agent.service' '/etc/systemd/system/multi-user.target.wants/neutron-dhcp-agent.service'
ln -s '/usr/lib/systemd/system/neutron-metadata-agent.service' '/etc/systemd/system/multi-user.target.wants/neutron-metadata-agent.service'
ln -s '/usr/lib/systemd/system/neutron-ovs-cleanup.service' '/etc/systemd/system/multi-user.target.wants/neutron-ovs-cleanup.service'
[root@network ~]# systemctl start neutron-openvswitch-agent.service neutron-l3-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service neutron-ovs-cleanup.service
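The `sed` step rewrites one `--config-file` argument inside the agent's unit file. The following is a minimal sketch of that substitution on a throwaway copy, so the effect can be inspected before touching the real unit. The function name `point_agent_at_plugin_ini` and the sample `ExecStart` line are assumptions made for this illustration; the real unit file may look different. GNU `sed -i` is assumed, as on CentOS.

```shell
# point_agent_at_plugin_ini FILE: replace the default OVS plug-in config path
# with plugin.ini everywhere in FILE. Hypothetical wrapper around the sed step.
point_agent_at_plugin_ini() {
    sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' "$1"
}

# Assumed shape of the ExecStart line; the packaged unit file may differ.
unit_copy=$(mktemp)
echo 'ExecStart=/usr/bin/neutron-openvswitch-agent --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini' > "$unit_copy"

cp "$unit_copy" "$unit_copy.orig"          # keep a backup, as the walkthrough does
point_agent_at_plugin_ini "$unit_copy"
diff "$unit_copy.orig" "$unit_copy" || true  # show what changed
```

Commas are used as the `s` delimiter so the slashes in the path need no escaping. Note that an unescaped `.` in a sed pattern matches any character, so a pattern typed with a dot where the path has a slash would still match.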

——>7<—— Verify (run the following commands on the controller node):

//1. Source the admin environment script
[root@controller ~]# source admin-openrc.sh
//2. List the neutron agents to confirm that they started successfully:
//Four smiley faces mean that all four agents are running
[root@controller ~]# neutron agent-list
+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+
| id | agent_type | host | alive | admin_state_up | binary |
+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+
| 25122ee3-54d0-4013-9e32-c752bb444874 | Metadata agent | network.nice.com | :-) | True | neutron-metadata-agent |
| 29e66a01-d01a-4bdb-8231-9adcf062b29b | Open vSwitch agent | network.nice.com | :-) | True | neutron-openvswitch-agent |
| 36e27e39-8e6b-4673-914b-ca3dd6ebf5ec | L3 agent | network.nice.com | :-) | True | neutron-l3-agent |
| 82964596-8d90-4001-b52a-6aa1e183c92a | DHCP agent | network.nice.com | :-) | True | neutron-dhcp-agent |
+--------------------------------------+--------------------+------------------+-------+----------------+---------------------------+

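——>8<—— Install and configure the compute1 node (if you deployed several compute nodes, repeat the same steps on each one)

Configure prerequisites

Install the Networking components

Configure the common Networking components

Configure the Modular Layer 2 (ML2) plug-in

Configure the Open vSwitch (OVS) service

Configure the Compute service to use Networking

Finalize the installation

Verify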

1. Configure prerequisites

Configure on the compute1 node

//1. Edit /etc/sysctl.conf so that it contains the following kernel parameters:
[root@compute1 ~]# vim /etc/sysctl.conf
net.ipv4.conf.all.rp_filter=0
net.ipv4.conf.default.rp_filter=0
//2. Apply the changes made to /etc/sysctl.conf by reloading the kernel parameters
[root@compute1 ~]# sysctl -p
net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.default.rp_filter = 0

2. Install the Networking components

[root@compute1 ~]# yum install openstack-neutron-ml2 openstack-neutron-openvswitch
Installed:
openstack-neutron-ml2.noarch 0:2014.2-5.el7.centos openstack-neutron-openvswitch.noarch 0:2014.2-5.el7.centos
Dependency Installed:
conntrack-tools.x86_64 0:1.4.2-3.el7 crudini.noarch 0:0.4-1.el7 dnsmasq-utils.x86_64 0:2.66-12.el7 ipset.x86_64 0:6.19-4.el7
ipset-libs.x86_64 0:6.19-4.el7 libnetfilter_cthelper.x86_64 0:1.0.0-4.el7 libnetfilter_cttimeout.x86_64 0:1.0.0-2.el7 libnetfilter_queue.x86_64 0:1.0.2-2.el7
openstack-neutron.noarch 0:2014.2-5.el7.centos openstack-utils.noarch 0:2014.1-3.el7.centos.1 openvswitch.x86_64 0:2.1.2-2.el7.centos.1 python-jsonrpclib.noarch 0:0.1.3-1.el7
python-neutron.noarch 0:2014.2-5.el7.centos
Complete!

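3. Configure the common Networking components

//1. Edit /etc/neutron/neutron.conf and complete the following steps:
[root@compute1 ~]# vim /etc/neutron/neutron.conf
//1.1. In the [database] section, comment out all connection options, because compute nodes do not access the database directly
//1.2. In the [DEFAULT] section, configure RabbitMQ message broker access:
[DEFAULT]
......
rpc_backend=rabbit
rabbit_host=controller.nice.com
rabbit_password=guest
//1.3. In the [DEFAULT] and [keystone_authtoken] sections, configure Identity service access:
[DEFAULT]
......
auth_strategy=keystone
[keystone_authtoken]
......
auth_uri=http://controller.nice.com:5000/v2.0
identity_uri=http://controller.nice.com:35357
admin_tenant_name=service
admin_user=neutron
admin_password=NEUTRON_PASS
//1.4. In the [DEFAULT] section, enable the Modular Layer 2 (ML2) plug-in, the router service, and overlapping IP addresses:
[DEFAULT]
......
core_plugin=ml2
service_plugins=router
allow_overlapping_ips=True
//1.5. (Optional) Enable verbose logging in the [DEFAULT] section to help with troubleshooting.
[DEFAULT]
......
verbose=True //enable logging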

4. Configure the Modular Layer 2 (ML2) plug-in

//1. Edit /etc/neutron/plugins/ml2/ml2_conf.ini and complete the following steps:
[root@compute1 ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini
//1.1. In the [ml2] section, enable the flat and generic routing encapsulation (GRE) network type drivers, GRE tenant networks, and the OVS mechanism driver.
[ml2]
......
type_drivers=flat,gre
tenant_network_types=gre
mechanism_drivers=openvswitch
//1.2. In the [ml2_type_gre] section, configure the tunnel identifier (ID) range:
[ml2_type_gre]
......
tunnel_id_ranges=1:1000
//1.3. In the [securitygroup] section, enable security groups, enable ipset, and configure the OVS iptables firewall driver:
[securitygroup]
......
enable_security_group=True
enable_ipset=True
firewall_driver=neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver
//1.4. In the [OVS] section, configure the Open vSwitch (OVS) agent:
[OVS]
......
local_ip=172.16.0.10 //IP address on the instance tunnel network
tunnel_type=gre
enable_tunneling=True

5. Configure the Open vSwitch (OVS) service

//1. Start the OVS service and configure it to start at boot:
[root@compute1 ~]# systemctl enable openvswitch.service
ln -s '/usr/lib/systemd/system/openvswitch.service' '/etc/systemd/system/multi-user.target.wants/openvswitch.service'
[root@compute1 ~]# systemctl start openvswitch.service
[root@compute1 ~]# systemctl status openvswitch.service

6. Configure the Compute service to use Networking

//1. Edit /etc/nova/nova.conf and complete the following steps:
[root@compute1 ~]# vim /etc/nova/nova.conf
//1.1. In the [DEFAULT] section, configure the APIs and drivers:
[DEFAULT]
......
network_api_class=nova.network.neutronv2.api.API //API class provided for neutron
security_group_api=neutron //use neutron security groups
linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver //interface driver
firewall_driver=nova.virt.firewall.NoopFirewallDriver //firewall driver
//1.2. In the [neutron] section, configure the connection parameters for neutron:
[neutron]
......
url=http://controller.nice.com:9696 //neutron endpoint (internal access)
auth_strategy=keystone //authentication strategy
admin_auth_url=http://controller.nice.com:35357/v2.0 //Identity admin endpoint
admin_tenant_name=service //tenant name
admin_username=neutron //administrative user name
admin_password=NEUTRON_PASS //administrative user password

7. Finalize the installation:

//1. Create the symbolic link that the Networking service initialization scripts expect
[root@compute1 ~]# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
[root@compute1 ~]# cp /usr/lib/systemd/system/neutron-openvswitch-agent.service /usr/lib/systemd/system/neutron-openvswitch-agent.service.orig
[root@compute1 ~]# ll /usr/lib/systemd/system/neutron-openvswitch-agent.service
-rw-r--r-- 1 root root 437 Oct 30 2014 /usr/lib/systemd/system/neutron-openvswitch-agent.service
//2. Point the OVS agent unit file at plugin.ini
[root@compute1 ~]# sed -i 's,plugins/openvswitch/ovs_neutron_plugin.ini,plugin.ini,g' /usr/lib/systemd/system/neutron-openvswitch-agent.service
//3. Restart the Compute service:
[root@compute1 ~]# systemctl restart openstack-nova-compute.service
//4. Start the OVS agent and configure it to start at boot:
[root@compute1 ~]# systemctl enable neutron-openvswitch-agent.service
ln -s '/usr/lib/systemd/system/neutron-openvswitch-agent.service' '/etc/systemd/system/multi-user.target.wants/neutron-openvswitch-agent.service'
[root@compute1 ~]# systemctl start neutron-openvswitch-agent.service
[root@compute1 ~]# systemctl status neutron-openvswitch-agent.service

8. Verify (run the following commands on the controller node)

//1. Source the admin environment script
[root@controller ~]# source admin-openrc.sh
//2. List the neutron agents to confirm that they started successfully.
[root@controller ~]# neutron agent-list
+--------------------------------------+--------------------+-------------------+-------+----------------+---------------------------+
| id | agent_type | host | alive | admin_state_up | binary |
+--------------------------------------+--------------------+-------------------+-------+----------------+---------------------------+
| 25122ee3-54d0-4013-9e32-c752bb444874 | Metadata agent | network.nice.com | :-) | True | neutron-metadata-agent |
| 29e66a01-d01a-4bdb-8231-9adcf062b29b | Open vSwitch agent | network.nice.com | :-) | True | neutron-openvswitch-agent |
| 36e27e39-8e6b-4673-914b-ca3dd6ebf5ec | L3 agent | network.nice.com | :-) | True | neutron-l3-agent |
| 82964596-8d90-4001-b52a-6aa1e183c92a | DHCP agent | network.nice.com | :-) | True | neutron-dhcp-agent |
| a6cdf9f1-426a-4614-ab81-659168f846cf | Open vSwitch agent | compute1.nice.com | :-) | True | neutron-openvswitch-agent |
+--------------------------------------+--------------------+-------------------+-------+----------------+---------------------------+
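With more agents in the list, scanning the `alive` column by eye gets error-prone. The following is a minimal sketch of a filter that reads `neutron agent-list` table output on stdin and prints only the rows that are not alive. `dead_agents` is a hypothetical helper, and the `xxx` marker in the sample row is an assumption about how this client version renders a dead agent; the filter itself only relies on the value being something other than `:-)`.

```shell
# dead_agents: read an agent-list table on stdin and print "agent_type on host"
# for every row whose alive column is not ":-)". Returns non-zero if any is found.
# Hypothetical helper for illustration.
dead_agents() {
    awk -F'|' '
        NF >= 7 && $2 !~ /^ *id *$/ {      # data rows only; skip borders and the header
            type = $3; host = $4; alive = $5
            gsub(/^ +| +$/, "", type)
            gsub(/^ +| +$/, "", host)
            gsub(/^ +| +$/, "", alive)
            if (alive != ":-)") { print type " on " host; dead = 1 }
        }
        END { exit dead + 0 }
    '
}

# Illustrative sample: the compute1 row is altered to look dead.
agent_sample='+----+--------------------+-------------------+-------+----------------+---------------------------+
| id | agent_type | host | alive | admin_state_up | binary |
+----+--------------------+-------------------+-------+----------------+---------------------------+
| 1 | L3 agent | network.nice.com | :-) | True | neutron-l3-agent |
| 2 | Open vSwitch agent | compute1.nice.com | xxx | True | neutron-openvswitch-agent |
+----+--------------------+-------------------+-------+----------------+---------------------------+'

printf '%s\n' "$agent_sample" | dead_agents || echo "at least one agent is not alive"
```

On the controller this would be used as `neutron agent-list | dead_agents`, after sourcing admin-openrc.sh.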

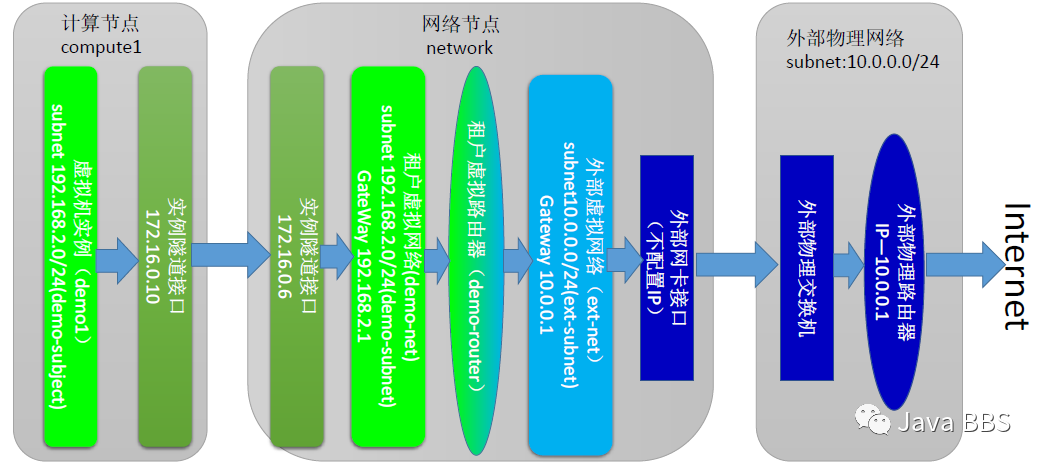

——>9<—— Create the first network

——>10<—— Configure the external network (run the following commands on the controller node)

1. Create an external network

//1. Source the admin environment script
[root@controller ~]# source admin-openrc.sh
//2. Create the network: an external network that will carry floating IPs
[root@controller ~]# neutron net-create ext-net --shared --router:external True --provider:physical_network external --provider:network_type flat
Created a new network:
+---------------------------+--------------------------------------+
| Field | Value |
+---------------------------+--------------------------------------+
| admin_state_up | True |
| id | 128ca157-22e0-4ef1-86af-c326e510ef89 |
| name | ext-net |
| provider:network_type | flat |
| provider:physical_network | external |
| provider:segmentation_id | |
| router:external | True |
| shared | True |
| status | ACTIVE |
| subnets | |
| tenant_id | 7bce6e7e6d724ad188e4f70ad9d51e17 |
+---------------------------+--------------------------------------+
//ext-net: name of the network
//--shared: make the network usable by all tenants
//--router:external True: mark the network as external
//--provider:physical_network external: the physical network label, matching flat_networks and bridge_mappings in the configuration files
//--provider:network_type flat: use the flat network type

2. Create a subnet on the external network: the pool of public addresses to hand out

//1. Create the subnet:
//The public addresses offered by this subnet would, in a production environment, be requested from your provider.
//This lab has no provider-assigned public addresses. The neutron node's external interface was configured as 100.100.100.11/24 brd 100.100.100.255, so that range is treated as the public network; reaching instances through addresses in this range works fine
[root@controller ~]# neutron subnet-create ext-net --name ext-subnet \
> --allocation-pool start=100.100.100.12,end=100.100.100.240 \
> --disable-dhcp --gateway 100.100.100.11 100.100.100.0/24
Created a new subnet:
+-------------------+-------------------------------------------------------+
| Field | Value |
+-------------------+-------------------------------------------------------+
| allocation_pools | {"start": "100.100.100.12", "end": "100.100.100.240"} |
| cidr | 100.100.100.0/24 |
| dns_nameservers | |
| enable_dhcp | False |
| gateway_ip | 100.100.100.11 |
| host_routes | |
| id | d81339e0-4025-454c-b858-0815d2730255 |
| ip_version | 4 |
| ipv6_address_mode | |
| ipv6_ra_mode | |
| name | ext-subnet |
| network_id | 128ca157-22e0-4ef1-86af-c326e510ef89 |
| tenant_id | 7bce6e7e6d724ad188e4f70ad9d51e17 |
+-------------------+-------------------------------------------------------+
//--name ext-subnet: the subnet is named ext-subnet
//--allocation-pool start=FLOATING_IP_START,end=FLOATING_IP_END: first and last address of the pool
//--disable-dhcp --gateway EXTERNAL_NETWORK_GATEWAY EXTERNAL_NETWORK_CIDR: the gateway and the network range; in this lab the gateway is the external NIC's address, because there is no real public range
//FLOATING_IP_START = first floating IP
//FLOATING_IP_END = last floating IP
//EXTERNAL_NETWORK_GATEWAY = gateway of the external network
//EXTERNAL_NETWORK_CIDR = CIDR of the external network
//For example, with the external network 10.0.0.0/24, the floating IP range 10.0.0.100 to 10.0.0.200, and the gateway 10.0.0.1:
neutron subnet-create ext-net --name ext-subnet \
--allocation-pool start=10.0.0.100,end=10.0.0.200 \
--disable-dhcp --gateway 10.0.0.1 10.0.0.0/24
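The pool start, pool end, and gateway all have to fall inside the CIDR passed to `subnet-create`, and a mistyped prefix length is easy to miss. The following is a minimal sketch of a pre-flight check done with shell integer arithmetic. `ip_to_int` and `in_cidr` are hypothetical helper names for this illustration; IPv4 only, and no input validation is attempted.

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
    local IFS=.
    set -- $1                      # split the dotted quad into $1..$4
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# in_cidr IP NETWORK/PREFIX: succeed if IP lies inside the given network.
in_cidr() {
    local ip net prefix mask
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# The lab's external subnet: pool end and gateway are both inside the /24.
in_cidr 100.100.100.240 100.100.100.0/24 && echo "100.100.100.240 is inside 100.100.100.0/24"
in_cidr 100.100.100.11 100.100.100.0/24 && echo "gateway 100.100.100.11 is inside 100.100.100.0/24"

# A /25 covers only .0 through .127, so a pool ending at .200 would not fit.
in_cidr 10.0.0.200 10.0.0.0/25 || echo "10.0.0.200 is outside 10.0.0.0/25"
```

The same check applies to tenant subnets, for example confirming that 192.168.2.1 lies inside 192.168.2.0/24.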

——>11<—— Configure the tenant network (run the following commands on the controller node)

Create the tenant network

Create a subnet on the tenant network

Create a router on the tenant network to connect the external network and the tenant network

1. Create a tenant network

//1. Source the demo environment script
[root@controller ~]# source demo-openrc.sh
//2. Create the tenant network
[root@controller ~]# neutron net-create demo-net
Created a new network:
+-----------------+--------------------------------------+
| Field | Value |
+-----------------+--------------------------------------+
| admin_state_up | True |
| id | 594c06f8-09a3-4d37-b5aa-a6f250356332 |
| name | demo-net |
| router:external | False |
| shared | False |
| status | ACTIVE |
| subnets | |
| tenant_id | 5f158b7cfb7448d18921158f9c92918f |
+-----------------+--------------------------------------+

2. Create a subnet on the tenant network

// 1. Create the subnet. The general form is:
neutron subnet-create demo-net --name demo-subnet \
--gateway TENANT_NETWORK_GATEWAY TENANT_NETWORK_CIDR
// TENANT_NETWORK_GATEWAY = gateway of the tenant network
// TENANT_NETWORK_CIDR = CIDR of the tenant network
// You can choose this range freely, as long as it does not conflict with the range created earlier.
// Example: the tenant range is 192.168.2.0/24 and the gateway is 192.168.2.1 (the gateway usually defaults to .1)
[root@controller ~]# neutron subnet-create demo-net --name demo-subnet \
> --gateway 192.168.2.1 192.168.2.0/24
Created a new subnet:
+-------------------+--------------------------------------------------+
| Field             | Value                                            |
+-------------------+--------------------------------------------------+
| allocation_pools  | {"start": "192.168.2.2", "end": "192.168.2.254"} |
| cidr              | 192.168.2.0/24                                   |
| dns_nameservers   |                                                  |
| enable_dhcp       | True                                             |
| gateway_ip        | 192.168.2.1                                      |
| host_routes       |                                                  |
| id                | 33b3861c-9dfc-4768-b226-ccd5e85577f9             |
| ip_version        | 4                                                |
| ipv6_address_mode |                                                  |
| ipv6_ra_mode      |                                                  |
| name              | demo-subnet                                      |
| network_id        | 594c06f8-09a3-4d37-b5aa-a6f250356332             |
| tenant_id         | 5f158b7cfb7448d18921158f9c92918f                 |
+-------------------+--------------------------------------------------+
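The only constraint stated for the tenant range is that it must not conflict with the external range. A minimal sketch of that check in plain `sh` follows; the helper names `ip2int`, `in_cidr` and `cidrs_overlap` are hypothetical, and the script assumes well-formed IPv4 CIDR strings without leading zeros. It relies on the fact that two CIDR blocks are either nested or disjoint.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer (subshell keeps IFS local).
ip2int() ( IFS=.; set -- $1; echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 )) )

# Succeed if address $1 lies inside CIDR $2.
in_cidr() (
    ip=$(ip2int "$1")
    net=$(ip2int "${2%/*}")
    prefix=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - $prefix)) & 0xFFFFFFFF ))
    [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
)

# CIDR blocks are either nested or disjoint, so two blocks overlap
# exactly when the base address of one falls inside the other.
cidrs_overlap() {
    in_cidr "${1%/*}" "$2" || in_cidr "${2%/*}" "$1"
}

EXT_CIDR=100.100.100.0/24     # external subnet used in this lab
TENANT_CIDR=192.168.2.0/24    # tenant subnet used in this lab

if cidrs_overlap "$TENANT_CIDR" "$EXT_CIDR"; then
    echo "conflict: $TENANT_CIDR overlaps $EXT_CIDR"
else
    echo "no overlap between $TENANT_CIDR and $EXT_CIDR"
fi
```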

3. Create a router on the tenant network to connect the external network and the tenant network

// 1. Create the router
[root@controller ~]# neutron router-create demo-router
Created a new router:
+-----------------------+--------------------------------------+
| Field                 | Value                                |
+-----------------------+--------------------------------------+
| admin_state_up        | True                                 |
| external_gateway_info |                                      |
| id                    | 211ed5ae-7c05-44ca-9fc4-be68348cb44b |
| name                  | demo-router                          |
| routes                |                                      |
| status                | ACTIVE                               |
| tenant_id             | 5f158b7cfb7448d18921158f9c92918f     |
+-----------------------+--------------------------------------+
// 2. Attach the router to the demo tenant subnet. This adds a router interface on demo-subnet.
[root@controller ~]# neutron router-interface-add demo-router demo-subnet
Added interface 4d8bd260-1795-46b8-b61f-e2fb889d601e to router demo-router.
// 3. Attach the router to the external network by setting its gateway. The gateway network is ext-net, which is our floating IP network.
[root@controller ~]# neutron router-gateway-set demo-router ext-net
Set gateway for router demo-router

4. Verify connectivity

// 1. Check the IP address the router obtained:
[root@controller ~]# neutron router-list
+--------------------------------------+-------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
| id                                   | name        | external_gateway_info                                                                                                                                                                      |
+--------------------------------------+-------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
| 211ed5ae-7c05-44ca-9fc4-be68348cb44b | demo-router | {"network_id": "128ca157-22e0-4ef1-86af-c326e510ef89", "enable_snat": true, "external_fixed_ips": [{"subnet_id": "d81339e0-4025-454c-b858-0815d2730255", "ip_address": "100.100.100.12"}]} |
+--------------------------------------+-------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+
// The router was assigned the address 100.100.100.12 on the external network.
// 2. Ping the router's external address from any external host.
// On the physical laptop, open the network settings for VMnet3 and change its IPv4 address to 100.100.100.240 so that it is in the same range.
// Then ping 100.100.100.12 from a cmd window. A reply means the router is reachable from the external network, and the network node configuration is complete.
C:\Users\Administrator>ping 100.100.100.12
Pinging 100.100.100.12 with 32 bytes of data:
Reply from 100.100.100.12: bytes=32 time=1ms TTL=64
Reply from 100.100.100.12: bytes=32 time<1ms TTL=64
Reply from 100.100.100.12: bytes=32 time<1ms TTL=64
Reply from 100.100.100.12: bytes=32 time<1ms TTL=64
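If the external host is a Linux machine rather than a Windows laptop, the router address can be pulled out of the `router-list` output instead of being copied by hand. This is a rough sketch: `extract_ips` is a hypothetical helper, it assumes the `external_gateway_info` column is printed as the JSON-like text shown above, and it uses `grep -o`, which is available in GNU and BSD grep. The sample string stands in for real command output so the script runs without an OpenStack environment.

```shell
#!/bin/sh
# Print every "ip_address" value found in the text on stdin.
extract_ips() {
    grep -o '"ip_address": *"[^"]*"' | sed 's/.*"\([^"]*\)"$/\1/'
}

# Sample external_gateway_info cell, as printed by neutron router-list in this lab.
sample='{"network_id": "128ca157-22e0-4ef1-86af-c326e510ef89", "enable_snat": true, "external_fixed_ips": [{"subnet_id": "d81339e0-4025-454c-b858-0815d2730255", "ip_address": "100.100.100.12"}]}'

router_ip=$(printf '%s\n' "$sample" | extract_ips)
echo "router external address: $router_ip"

# On a live system (assumption: the openrc credentials are already sourced):
#   router_ip=$(neutron router-list | extract_ips | head -n 1)
#   ping -c 4 "$router_ip"
```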

END
